#thermodynamics

Text

*upon someone mentioning the laws of thermodynamics or entropy to me* Ah yes, I have also seen Puella Magi Madoka Magica

#madoka invented the concept of entropy#don't bother looking it up I'm right#pmmm#madoka magica#puella magi madoka magica#entropy#thermodynamics#funny

428 notes

Text

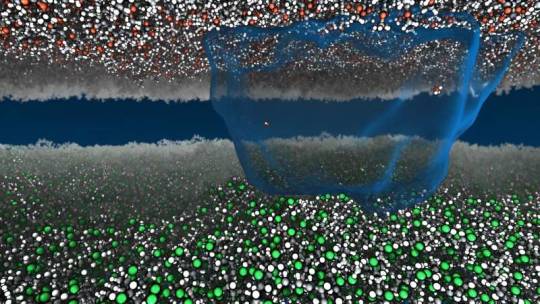

A team of researchers led by the University of Massachusetts Amherst has recently found an exception to the 200-year-old law, known as Fourier's Law, that governs how heat diffuses through solid materials.

Though scientists have shown previously that there are exceptions to the law at the nanoscale, the research, published in the Proceedings of the National Academy of Sciences, is the first to show that the law doesn't always hold true at the macro scale, and that pure electromagnetic radiation is also at work in some common materials like plastics and glasses.

197 notes

Text

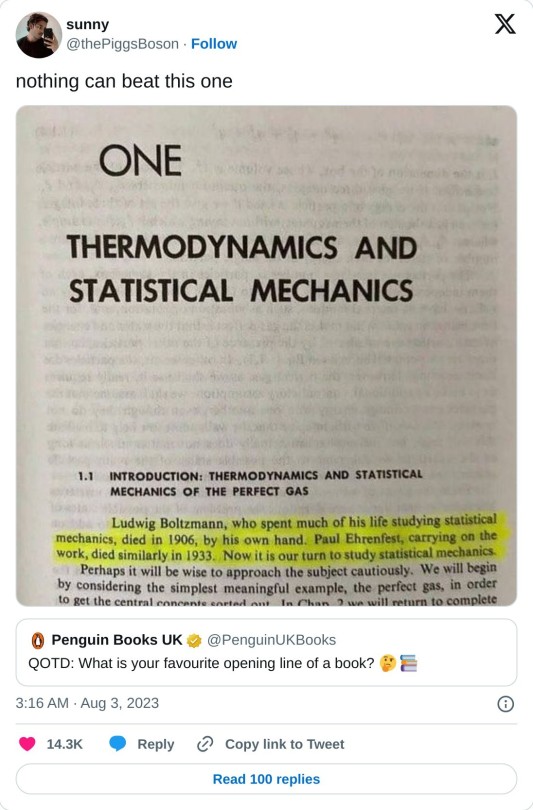

Now it is our turn to study statistical mechanics...

#physics#science#thermodynamics#statistical mechanics#mechanics#insidesjoke#memes#meme#funny#student memes#humour#humor#comedy#most popular

337 notes

Text

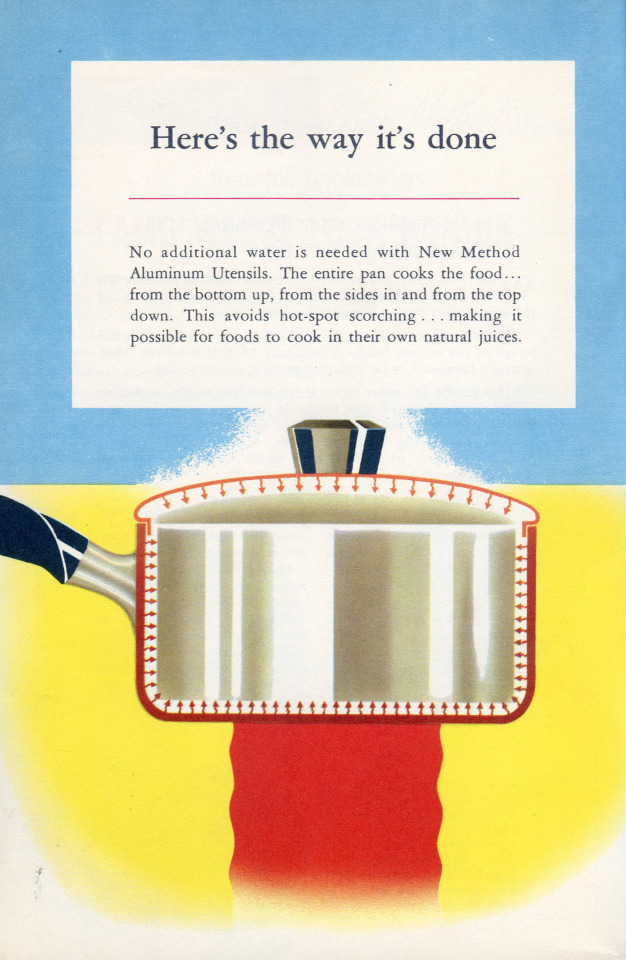

Thermodynamics

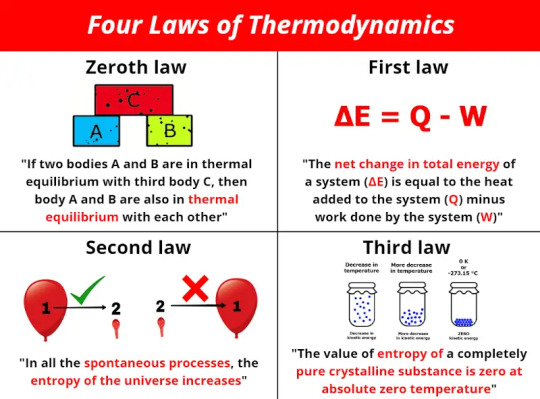

Thermodynamics is a branch of physics that deals with energy, primarily heat, temperature, and the work done by a system. More specifically, it deals with the relationships between these quantities and others, including entropy, and with the conversion of one form of energy into another. Thermodynamics only describes the large-scale (relatively speaking) behaviour of a system: the overall trend or response the system exhibits. There are four laws of thermodynamics, defined in the image above.

Sources/Further Reading: (Image source - LinkedIn) (Wikipedia) (NASA) (Harvard Lecture) (Livescience.com)

90 notes

Text

fun fact: if you're writing about a character that isn't actively generating heat, the only way they're getting warmer is from a heat source, and clothing and blankets are not heat sources. they're insulation.

the reason people are warmer sleeping under blankets is that their body is giving off heat, and the blanket slows down how fast that heat escapes.

if your character doesn't give off heat, they're getting net zero heat from that blanket unless it's been warmed by some other source. at most it'll block the wind, but they won't be getting warmer.

if your character is Actively Generating Cold, the blanket will Make Them Colder, by insulating their cold from the outside elements.
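To make the blanket point concrete, here's a toy model (not from the post; every number in it is made up for illustration): treat the character as a single lump with heat capacity C, internal heat output P, and a heat-loss conductance k to the surroundings, so dT/dt = (P - k(T - T_env))/C. A blanket only lowers k. The body settles at T_env + P/k, so with P = 0 it ends up at room temperature whatever the blanket does.

```python
# Toy lumped model (an assumption, not real physiology): Newton's law of cooling
# with an internal heat source, dT/dt = (P - k * (T - T_env)) / C.
#   P = internal heat generation (W), k = heat-loss conductance (W/K),
#   C = heat capacity (J/K). A blanket is modelled only as a smaller k.

def simulate(P, k, T_start, T_env=10.0, C=3.0e5, dt=60.0, hours=48):
    """Return body temperature (deg C) after `hours`, using explicit Euler steps of dt seconds."""
    T = T_start
    for _ in range(int(hours * 3600 / dt)):
        T += dt * (P - k * (T - T_env)) / C
    return T

# Heat-generating character (100 W), with and without a blanket.
no_blanket = simulate(P=100.0, k=10.0, T_start=37.0)   # heads towards 10 + 100/10 = 20 C
blanket = simulate(P=100.0, k=5.0, T_start=37.0)       # heads towards 10 + 100/5 = 30 C

# Character that generates no heat, starting at room temperature.
cold_no_blanket = simulate(P=0.0, k=10.0, T_start=10.0)
cold_blanket = simulate(P=0.0, k=5.0, T_start=10.0)

print(f"heat-generating, no blanket: {no_blanket:.1f} C")
print(f"heat-generating, blanket:    {blanket:.1f} C")
print(f"no heat, no blanket:         {cold_no_blanket:.1f} C")
print(f"no heat, blanket:            {cold_blanket:.1f} C")
```

The blanket raises the settling temperature of the heat-generating character (P/k gets bigger), but for P = 0 both runs stay pinned at T_env.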

40 notes

Text

entropy and life//entropy and death

This is a discussion that spun out of a post on web novel The Flower That Bloomed Nowhere. However, it's mostly a chance to lay out the entropy thing. So most of it is not Flower related at all...

the thermodynamics lesson

Entropy is one of those subjects that tends to be described quite vaguely. The rigorous definition, on the other hand, is packed full of jargon like 'macrostates', which I found pretty hard to wrap my head around as a university student back in the day. So let's begin this post with an attempt to lay it out a bit more intuitively.

In the early days of thermodynamics, as 19th-century scientists like Clausius attempted to get to grips with 'how do you build a better steam engine', entropy was a rather mysterious quantity that emerged from their networks of differential equations. It was defined in relation to the measurable quantities of temperature and heat: if you add heat to a system at a given temperature, its entropy goes up. In an idealised reversible process, like compressing a piston infinitely slowly, the total entropy stays constant.
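Clausius's definition can be written as dS = δQ_rev / T: the heat added reversibly, divided by the temperature at which it's added. A quick worked example in Python, using the textbook latent heat of fusion of ice (about 334 kJ/kg) as the input:

```python
# Clausius: heat Q added reversibly at a fixed temperature T changes entropy by Q / T.
def clausius_entropy_change(Q_joules, T_kelvin):
    """Entropy change (J/K) when heat Q is added reversibly at constant temperature T."""
    return Q_joules / T_kelvin

# Melting 1 kg of ice at 273.15 K takes about 334 kJ of heat.
dS_melt = clausius_entropy_change(334e3, 273.15)
print(f"{dS_melt:.0f} J/K")  # about 1223 J/K
```

The same 334 kJ added at a higher temperature would raise the entropy by less, which is why 'heat at a given temperature' matters in the definition.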

Strangely, for an isolated system, this convenient quantity always seemed to go up or stay the same, never ever down. This was so strictly true that it was declared to be a 'law of thermodynamics'. Why the hell should that be true? Turns out they'd accidentally stumbled on one of the most fundamental principles of the universe.

So. What actually is it? When we talk about entropy, we are talking about a system that can be described in two related ways: a 'nitty-gritty details' one that's exhaustively precise, and a 'broad strokes' one that brushes over most of those details. (The jargon calls the first one a 'microstate' and the second one a 'macrostate'.)

For example, say the thing you're trying to describe is a gas. The 'nitty gritty details' description would describe the position and velocity of every single molecule zipping around in that gas. The 'broad strokes' description would sum it all up with a few quantities such as temperature, volume and pressure, which describe how much energy and momentum the molecules have on average, and the places they might be.

In general there are many different possible ways you could arrange the molecules and their kinetic energy that match up with that broad-strokes description.

In statistical mechanics, entropy describes the relationship between the two. It measures (logarithmically) the number of possible 'nitty gritty details' descriptions that match up with the 'broad strokes' description.

In short, entropy could be thought of as a measure of what is not known or indeed knowable. It is sort of like a measure of 'disorder', but it's a very specific sense of 'disorder'.

For another example, let's say that you are running along with two folders. Each folder contains 100 pages, and one of them is important to you. You know for sure it's in the left folder. But then you suffer a comical anime collision that leads to your papers going all over the floor! You pick them up and stuff them randomly back in the folders.

In the first state, the macrostate is 'the important page is in the left folder'. There are 100 positions it could be in. After your accident, you don't know which folder has that page. The macrostate is 'it could be in either folder', so there are now 200 positions it could be in. This means your papers are now in a higher entropy state than they were before.
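In Boltzmann's terms (S = k log W, which comes up a bit further on), the accident doubles the number of matching states, so the entropy goes up by k ln 2, which is exactly one bit of missing information. A small Python check, assuming for simplicity that only the important page's position counts as a 'nitty gritty' state:

```python
import math

# Boltzmann entropy in units of k: S/k = ln(W), W = number of matching "nitty gritty" states.
W_before = 100  # the important page is somewhere in the left folder
W_after = 200   # the important page could be anywhere in either folder

delta_S_over_k = math.log(W_after) - math.log(W_before)  # = ln 2
delta_bits = math.log2(W_after / W_before)                # the same change, counted in bits

print(f"Delta S = {delta_S_over_k:.3f} k")              # 0.693 k
print(f"Missing information grew by {delta_bits:.0f} bit")  # 1 bit
```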

In general, if you start out a system in a given 'broad strokes' state, it will randomly explore all the different 'nitty gritty details' states available in its phase space (this is called the ergodic hypothesis). The more 'nitty gritty details' states that are associated with a given 'broad strokes' state, the more likely it is that the system will end up in that state. In practice, once you have realistic numbers of particles involved, the probabilities are so extreme that we can say the system will almost certainly end up in a 'broad strokes' state with equal or higher entropy. This is called the Second Law of Thermodynamics: it says the entropy of an isolated system will always stay the same or increase.
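To get a feel for how extreme 'so extreme' is, here's a sketch assuming an ideal gas whose N molecules are each independently equally likely to be in either half of a box. The chance that all of them happen to be in the left half is 2^-N, which is small for 10 molecules and absurd for a mole (about 6 × 10²³ molecules):

```python
import math

def log10_prob_all_in_left_half(N):
    """log10 of the probability that all N independent molecules sit in the left half of a box."""
    return -N * math.log10(2)

for N in (10, 100, 6.022e23):
    print(f"N = {N:.3g}: probability = 10^({log10_prob_all_in_left_half(N):.3g})")
```

We work with log10 of the probability because the probability itself underflows to zero long before N reaches realistic sizes.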

This is the modern, statistical view of entropy developed by Ludwig Boltzmann in the 1870s and really nailed down at the start of the 20th century, summed up by the famous formula S=k log W. This was such a big deal that they engraved it on his tombstone.

Since the Second Law of Thermodynamics is statistical in nature, it applies anywhere its assumptions hold, regardless of how the underlying physics works. This makes it astonishingly powerful. Before long, the idea of entropy in thermodynamics inspired other, related ideas. Claude Shannon used the word entropy for a measure of the average information content of a message source: roughly, how many bits per symbol you need, on average, to encode its messages.
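For comparison, Shannon's entropy of a source whose symbols occur with probabilities p_i is H = -Σ p_i log₂ p_i bits. A minimal Python version follows; note that for W equally likely outcomes it reduces to log₂ W, the same 'log of the number of possibilities' as Boltzmann's formula.

```python
import math

def shannon_entropy(probs):
    """Shannon entropy in bits: H = -sum(p * log2(p)), skipping zero-probability outcomes."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

print(shannon_entropy([0.5, 0.5]))   # fair coin: 1.0 bit
print(shannon_entropy([0.9, 0.1]))   # biased coin: about 0.469 bits
print(shannon_entropy([0.25] * 4))   # four equally likely outcomes: 2.0 bits
```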

the life of energy and entropy

So, everything is made of energy, and that energy is in a state with a certain amount of thermodynamic entropy. As we just discussed, no process can decrease the total entropy. If the entropy of one thing goes down, the entropy of something else must increase by an equal or greater amount.

(A little caveat: traditional thermodynamics was mainly concerned with systems in equilibrium. Life is almost by definition not in thermodynamic equilibrium, which makes things generally a lot more complicated. Luckily I'm going to talk about things at such a high level of abstraction that it won't matter.)

There are generally speaking two ways to increase entropy. You can add more energy to the system, or you can take the existing energy and distribute it more evenly.

For example, a fridge in a warm room is in a low entropy state. Left to its own devices, energy from outside would make its way into the fridge, lowering the temperature of the outside slightly and increasing the temperature of the inside. This would increase the entropy: there are more ways for the energy to be distributed when the inside of the fridge is warmer.

To cool the fridge we want to move some energy back to the outside. But on its own that would lower entropy, which is a no-no! To get around this, the heat pump on a fridge must always dump a bit of extra energy (the work done by its compressor) outside the fridge. In this way it's possible to link the cooling of the inside of the fridge to a larger increase in entropy outside, and the whole process becomes thermodynamically viable.

Likewise, for a coherent pattern such as life to exist, it must slot itself into the constant transition from low to high entropy in a way that can dump the excess entropy it produces somewhere else.
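The fridge bookkeeping can be made quantitative with the same Clausius rule (ΔS = Q/T for each side). Taking heat Q out of the inside at T_cold removes Q/T_cold of entropy; dumping Q + W into the room at T_hot adds (Q + W)/T_hot. Requiring the total not to decrease gives a minimum extra energy W ≥ Q(T_hot/T_cold - 1). This is the ideal limit; a real fridge needs more. The temperatures below are hypothetical:

```python
def min_work_to_remove_heat(Q_cold, T_cold, T_hot):
    """Smallest work W (J) that lets a fridge move Q_cold joules out of a box at
    T_cold (K) into a room at T_hot (K) without the total entropy decreasing.

    Inside loses Q_cold / T_cold; the room gains (Q_cold + W) / T_hot.
    Setting the sum >= 0 gives W >= Q_cold * (T_hot / T_cold - 1).
    """
    return Q_cold * (T_hot / T_cold - 1.0)

def total_entropy_change(Q_cold, W, T_cold, T_hot):
    """Net entropy change (J/K) of fridge interior plus room for a given work input W."""
    return -Q_cold / T_cold + (Q_cold + W) / T_hot

# Hypothetical: fridge interior at 4 C (277.15 K), kitchen at 20 C (293.15 K).
Q = 1000.0  # joules of heat pumped out of the fridge
W_min = min_work_to_remove_heat(Q, 277.15, 293.15)
print(f"minimum extra energy dumped outside: {W_min:.1f} J")  # about 57.7 J
print(total_entropy_change(Q, 0.0, 277.15, 293.15) < 0)        # True: with no work, entropy would fall
```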

Fortunately, we live on a planet that is orbiting a bright star, and also radiating heat into space. The sun provides energy in a relatively low-entropy state: highly directional, and emitted at a high temperature (roughly 5800 K), so it arrives as a comparatively small number of high-energy photons in a limited range of frequencies. The electromagnetic radiation leaving our planet carries away about the same amount of energy, but at a much lower temperature, as many more low-energy infrared photons radiated in all directions, which is a higher entropy state. The earth as a whole is pretty near a steady state (although it's presently warming, as you might have heard).

Using a multistep process and suitable enzymes, photosynthesis can convert a portion of the incoming sunlight energy into sugars, which are in a tasty low entropy state. On its own this is a thermodynamically unfavourable process, and it requires some shenanigans to get away with it. But basically, the compensating increase in entropy is achieved by heating up the surroundings, which radiate away lower-temperature infrared radiation.
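A rough back-of-envelope version of the sunlight argument, assuming both the incoming and outgoing radiation can be treated as blackbody radiation (whose entropy is S = 4E/3T) and ignoring the corrections for sunlight being diluted and directional by the time it reaches us. Since Earth emits about as much energy as it absorbs, the outgoing radiation carries roughly T_sun/T_earth, about 23 times, as much entropy per joule as the incoming sunlight:

```python
# Order-of-magnitude sketch only: both radiation fields treated as blackbody radiation.
T_SUN = 5800.0   # K, approximate temperature of the Sun's surface
T_EARTH = 255.0  # K, approximate effective radiating temperature of Earth

def radiation_entropy(E, T):
    """Entropy (J/K) carried by energy E (J) of blackbody radiation at temperature T (K)."""
    return 4.0 * E / (3.0 * T)

E = 1.0  # one joule in, one joule out (Earth is close to energy balance)
S_in = radiation_entropy(E, T_SUN)
S_out = radiation_entropy(E, T_EARTH)
print(f"outgoing / incoming entropy per joule: {S_out / S_in:.1f}")  # about 22.7
```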

the reason we don't live forever

Nearly all other lifeforms depend on these helpfully packaged low-entropy molecules. We take in molecules from outside by breathing in and eating and drinking, put them through a bunch of chemical reactions (respiration and so forth), and emit molecules at a higher entropy (breathing out, pissing, shitting, etc.). Since we constantly have to throw away molecules to get rid of the excess entropy produced by the processes of living, we constantly have to eat more food. This is what I was alluding to in the Dungeon Meshi post from the other day.

That's the short-timescale battle against entropy. On longer timescales, we can more vaguely say that life depends on the ability to preserve a low-entropy, non-equilibrium state. On the simplest level, a human body is in a very low entropy state compared to a cloud of carbon dioxide and water, but generally speaking we do not spontaneously combust, because there is a high enough energy barrier in the way. But on a more abstract level, our cells continue to function in specialised roles, the complex networks of reaction pathways continue to tick over, and the whole machine somehow keeps working.

However, the longer you try to maintain a pattern, the more low-probability problems start to become statistical inevitabilities.

For example, cells contain a whole mess of chemical reactions which can gradually accumulate errors, waste products etc. which can corrupt their functioning. To compensate for this, multicellular organisms are constantly rebuilding themselves. On the one hand, their cells divide to create new cells; on the other, stressed cells undergo apoptosis, i.e. die. However, sometimes cells become corrupt in a way that causes them to fail to die when instructed. Our body has an entire complicated apparatus designed to detect those cells and destroy them before they start replicating uncontrollably. Our various defensive mechanisms detect and destroy the vast majority of potentially cancerous cells... but over a long enough period, the odds are not in our favour. Every cell has a tiny potential to become cancerous.
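The 'odds are not in our favour' point is just the arithmetic of repeated small risks: if each of n independent events fails with probability p, the chance of at least one failure is 1 - (1 - p)^n, which creeps towards 1 as n grows. The numbers below are purely hypothetical and not a model of real cancer rates:

```python
import math

def prob_at_least_one(p_per_event, n_events):
    """Chance that at least one of n independent events, each with probability p, happens.
    Computed as 1 - (1 - p)^n via log1p/expm1 to stay accurate for tiny p."""
    return -math.expm1(n_events * math.log1p(-p_per_event))

# Hypothetical: a one-in-a-billion slip per cell division.
p = 1e-9
for n in (1e6, 1e9, 1e12):
    print(f"{n:.0e} divisions: {prob_at_least_one(p, n):.6f}")
```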

At this point we're really not in the realm of rigorous thermodynamic entropy calculations. However, we can think of 'dead body' as, generally speaking, a higher-entropy set of states than 'living creature': there are many more ways for the atoms that make us up to be arranged as a dead person, a cloud of gas, etc. than as a living person. Worse still, we might be in a metastable state, where only a small boost over the energy barrier is needed to set off a runaway reaction that drops us into a lower energy, higher entropy state.

In a sense, a viral infection could be thought of as a collapse of a metastable pattern. The replication machinery in our cells could produce human cells but it can equally produce viruses, and it turns out stamping out viruses is (in this loose sense) a higher entropy pattern; the main thing that stops us from turning into a pile of viruses is the absence of a virus to kick the process off.

So sooner or later, we inevitably(?) hit a level of disruption which causes a cascading failure in all these interlinked biological systems. The pattern collapses.

This is what we call 'death'.

an analogy

If you're familiar with cellular automata like Conway's Game of Life, you'll know it's possible to construct incredibly elaborate persistent patterns. You can even build the game of life in the game of life. But these systems can be quite brittle: if you scribble a little on the board, the coherent pattern will break and it will collapse back into a random mess of oscillators. 'Random mess of oscillators' is a high-entropy state for the Game of Life: there are many many different board states that correspond to it. 'Board that plays the Game of Life' is a low-entropy state: there are a scant few states that fit.

The ergodic hypothesis does not apply to the Game of Life. Without manual intervention, the 'game of life in game of life' board would keep simulating a giant version of the game of life indefinitely. However...
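A minimal sketch (not from the post) of Conway's rules, birth on exactly 3 neighbours and survival on 2 or 3, on an unbounded board stored as a set of live cells. It shows the flip side of the ergodic hypothesis not applying: the update is completely deterministic, so a glider just keeps reproducing itself every 4 generations, one cell further along the diagonal, with no random exploration of other board states.

```python
from collections import Counter

def step(live):
    """One Game of Life generation. `live` is a set of (x, y) live cells on an unbounded board."""
    # Count, for every cell, how many live neighbours it has.
    neighbour_counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # Birth on exactly 3 neighbours; survival on 2 or 3.
    return {cell for cell, n in neighbour_counts.items()
            if n == 3 or (n == 2 and cell in live)}

def run(live, generations):
    """Apply `step` the given number of times."""
    for _ in range(generations):
        live = step(live)
    return live

glider = {(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}
after4 = run(glider, 4)
shifted = {(x + 1, y + 1) for (x, y) in glider}
print(after4 == shifted)  # True: same shape, moved one cell diagonally
```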

For physical computer systems, a vaguely similar process of accumulating problems can occur. For example, a program with a memory leak will gradually request more and more memory from the operating system, leaving more and more memory in an inaccessible state. Other programs may end up running slowly, starved of resources.

In general, there are a great many ways a computer can go wrong, and few that represent it going right.

One of the ways our body avoids collapsing like this is by dedicating resources to cells whose job is to monitor the other cells and intervene if they show heuristic signs of screwing up. This is the evolutionary arms race between immune system and virus. The same can be true on computers, which also support 'viruses' in the form of programs that are able to hijack a computer and replicate themselves onto other computers - and one of our solutions is similar, writing programs which detect and terminate programs which have the appearance of viruses.

When a computer is running slowly, the first thing to do is to reboot it. This will reload all the programs from the unchanging version on disc.

The animal body's version of a reboot is to dump all the accumulated decay onto a corpse and produce a whole new organism from a single pair of cells. This is one function of reproduction, a chance to wipe the slate clean. (One thing I remain curious about is how the body keeps the gamete cells in good shape.)

but what if we did live forever?

I am not particularly up to date on senescence research, but in general the theories do appear to go along broad lines of 'accumulating damage', with disagreement over what represents the most fundamental cause.

Here's how Su discusses the problem of living indefinitely in The Flower That Bloomed Nowhere, chapter 2:

> The trouble, however, is that the longer you try to preserve a system well into a length of time it is utterly not designed (well, evolved, in this case) for, the more strange and complicated problems appear. Take cancer, humanity’s oldest companion. For a young person with a body that's still running according to program, it's an easy problem to solve. Stick a scepter in their business, cast the Life-Slaying Arcana with the 'cancerous' addendum script – which identifies and eliminates around the 10,000 most common types of defective cell – and that's all it takes. No problem! A monkey could do it.
>
> But the body isn’t a thing unto itself, a inherently stable entity that just gets worn down or sometimes infected with nasty things. And cancer cells aren’t just malevolent little sprites that hop out of the netherworld. They’re one of innumerable quasi-autonomous components that are themselves important to the survival of the body, but just happen to be doing their job slightly wrong. So even the act of killing them causes disruption. Maybe not major disruption, but disruption all the same. Which will cause little stressors on other components, which in turn might cause them to become cancerous, maybe in a more 'interesting' way that’s a little harder to detect. And if you stop that...
>
> Or hell, forget even cancer. Cells mutate all the time just by nature, the anima script becoming warped slightly in the process of division. Most of the time, it's harmless; so long as you stay up to date with your telomere extensions, most dysfunctional cells don't present serious problems and can be easily killed off by your immune system. But live long enough, and by sheer mathematics, you'll get a mutation that isn't. And if you live a really long time, you'll get a lot of them, and unless you can detect them perfectly, they'll build up, with, again, interesting results.
>
> At a deep enough level, the problem wasn't biology. It was physics. Entropy.

A few quirks of the setting emerge here. Rather than DNA we have 'the anima script'. It remains to be seen whether this is just another name for DNA or reflects some fundamental alt-biology that runs on magic or some shit. Other details reflect real biology: 'telomeres' are repetitive regions at the ends of the DNA strands in chromosomes. They serve as a kind of ablative shield, protecting the ends of the DNA during replication. Telomere loss has been touted as a major factor in the aging process.

A few chapters later we encounter a man who does not think of himself as really being the same person as he was a hundred years ago. Which, mood - I don't think I'm really the same person I was ten years ago. Or five. Or hell, even one.

The problem with really long-term scifi life extension ends up being a kind of signal-vs-noise problem. Humans change, a lot, as our lives advance. Hell, life is a process of constant change. We accumulate experiences and memories, learn new things, build new connections, change our opinions. Mostly this is desirable. Even if you had a perfect down-to-the-nucleon recording of the state of a person at a given point in time, overwriting a person with that state many years later would amount to killing them and replacing them with their old self. So the problem becomes distinguishing the good, wanted changes ('character development', even if contrary to what you wanted in the past) from the bad unwanted changes (cancer or whatever).

But then it gets squirrelly. Memories are physical too. If you experienced a deeply traumatic event, and learned a set of unwanted behaviours and associations that will shit up your quality of life, maybe you'd want to erase that trauma and forget or rewrite that memory. But if you're gonna do that... do you start rewriting all your memories? Does space become limited at some point? Can you back up your memories? What do you choose to preserve, and what do you choose to delete?

Living forever means forgetting infinitely many things, and Ship-of-Theseusing yourself into infinitely many people... perhaps infinitely many times each. Instead of death being sudden and taking place at a particular moment in time, it's a gradual transition into something that becomes unrecognisable from the point of view of your present self. I don't think there's any coherent self-narrative that can hold up in the face of infinity.

That's still probably better than dying I guess! But it is perhaps unsettling, in the same way that it's unsettling to realise that, whether or not Everett quantum mechanics is true, if there is a finite amount of information in the observable universe, an infinite universe must contain infinitely many exact copies of that observable universe, and infinitely many near variations, and basically you end up with many-worlds through the back door. Unless the universe is finite or something.

Anyway, living forever probably isn't on the cards for us. Honestly I think we'll be lucky if complex global societies make it through the next century. 'Making it' in the really long term is going to require an unprecedented megaproject of effort to effect a complete renewable transition and reorganise society to a steady state economy which, just like life, takes in only low-entropy energy and puts out high-entropy energy in the form of photons, with all the other materials - minerals etc. - circulating in a closed loop. That probably won't happen but idk, never say never.

Looking forward to how this book plays with all this stuff.

#fiction#sff#web serials#entropy#physics#biology#thermodynamics#the flower that bloomed nowhere#ok that's all i have to say about the first twelve chapters lmaooo

26 notes

Text

CALLING ALL ENGINEERING STUDENTS PAST AND PRESENT!

161 notes

Text

Physics Friday #7: It's getting hot in here! - An explanation of Temperature, Entropy, and Heat

THE PEOPLE HAVE SPOKEN. This one was decided by poll. The E = mc^2 post will happen at some point in the future.

Preamble: Thermodynamic Systems

Education level: High School (Y11/12)

Topic: Statistical Mechanics, Thermodynamics (Physics)

You'll hear the word system being used a lot ... what does that mean? Basically a thermodynamic system is a collection of things that we think affect each other.

A container of gas is a system as the particles of gas all interact with each other.

The planet is a system because we and all the other life and particles on Earth interact with one another.

Often, when dealing with thermodynamic systems we differentiate between open, closed, and isolated systems.

An open system can exchange both particles and energy with its surroundings. Closed systems only allow energy to enter and exit the system (usually via a "reservoir"), not particles.

We will focus mainly on isolated systems, where nothing enters or exits the system at all, unless we change what counts as the "system".

Now imagine we have a system, say, a container of gas. This container will have a temperature, pressure, volume, density, etc.

Let's make an identical copy of this container and then combine it with its duplicate.

What happens to the temperature? Well it stays the same. Whenever you combine two objects of the same temperature they stay the same. If you pour a pot of 20 C water into another pot of 20 C water, no temperature change occurs.

The same occurs with pressure and density: while there are physically more particles in the combined system, the volume has increased by the same factor.

This makes things like Temperature, Pressure, and Density intensive properties of a system - they don't change when you combine a system with copies of itself. They act more like averages.

However, duplicating a system and combining it with itself doubles the volume, and it also doubles the amount of 'stuff' inside the system.

Thus things like volume are called extensive: they scale with the size of the system and the amount of stuff in it, so they act more like proportional values.

This is important in understanding both heat and temperature. The energy of a system is an extensive property, whereas temperature is intensive.
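A small Python sketch of the duplicate-and-combine experiment, assuming a monatomic ideal gas (U = 3/2 NkT, PV = NkT) as the model system. Combining two identical containers adds N, V and U, while T and P come out unchanged:

```python
from dataclasses import dataclass

K_B = 1.380649e-23  # Boltzmann constant, J/K

@dataclass
class IdealGas:
    """Assumed model: monatomic ideal gas with U = (3/2) N k T and P V = N k T."""
    N: float  # number of particles (extensive)
    V: float  # volume in m^3 (extensive)
    U: float  # thermal energy in J (extensive)

    @property
    def T(self):
        """Temperature in K (intensive)."""
        return 2.0 * self.U / (3.0 * self.N * K_B)

    @property
    def P(self):
        """Pressure in Pa (intensive)."""
        return self.N * K_B * self.T / self.V

    def combine(self, other):
        """Join two containers: the extensive quantities simply add."""
        return IdealGas(self.N + other.N, self.V + other.V, self.U + other.U)

# About one mole of gas at 293.15 K in 24 litres.
N = 6.022e23
box = IdealGas(N=N, V=0.024, U=1.5 * N * K_B * 293.15)
both = box.combine(box)

print(both.N / box.N, both.V / box.V, both.U / box.U)  # 2.0 2.0 2.0 (extensive)
print(both.T / box.T, both.P / box.P)                  # 1.0 1.0 (intensive)
```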

Temperature behaves like a sort of average thermal energy per particle, which is one way we can analyse it, but this picture only really works for simple systems like ideal gases and isn't universal. It's useful to use a more abstract definition of temperature.

Heat, on the other hand, is much more concrete. It is the amount of energy transferred because of a difference in temperature. This transfer is driven by the second law of thermodynamics, which pushes the total entropy towards a maximum.

But instead of tackling this from a high-end perspective, let's jump into the nitty-gritty ...

Microstates and Macrostates

The best way we can demonstrate the concept of Entropy is via the analogy of a messy room:

We can create a macrostate of the room by describing the room:

There's a shirt on the floor

The bed is unmade

There is a heater in the centre

The heater is broken

Note how in this macrostate, we can have several possible arrangements that describe the same macrostate. For example, the shirt on the floor could be red, blue, black or green.

A microstate of the room is a specific arrangement of items, to the maximum specificity we require.

For example: the shirt is green, and the heater's right leg is broken. If we care about even more specificity, we could say a microstate of the system is:

Atom 1 is Hydrogen in position (0, 0, 0)

Atom 2 is Carbon in position (1, 0, 0)

etc.

Effectively, a macrostate is a general description of a system, while a microstate is one specific configuration of it.

A microstate is considered attached to a macrostate if it satisfies all the conditions of the macrostate. "Dave is wearing a shirt" and "Dave is wearing a red shirt" can both be true, but it's clear that if Dave is wearing a red shirt, he is also wearing a shirt.

What Entropy Actually is (by Definition)

The multiplicity of a macrostate is the total number of microstates attached to it. It is basically a "count" of the total permutations given the restrictions.

We denote the multiplicity by the symbol Ω.

We define entropy in terms of the natural logarithm of Ω:

S = k ln Ω

where k is Boltzmann's constant, which gives the entropy units (joules per kelvin). We take the logarithm for two reasons:

Combinatorics often involves factorials and exponents, a.k.a. big numbers, so we use the log function to cut them down to size

When you combine a system in a macrostate with Ω = X and a system in a macrostate with Ω = Y, the combined multiplicity is X × Y. Since ln(XY) = ln X + ln Y, the logarithm makes entropies add, i.e. it makes Entropy extensive

So that's what Entropy is: a measure of the number of possible arrangements of a system given a set of preconditions.
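The additivity argument can be checked numerically using sets of coins as a stand-in system, with 'this many heads' as the macrostate: the multiplicities multiply, so the entropies add. A short Python sketch:

```python
import math

def entropy_over_k(omega):
    """Boltzmann entropy in units of k: S/k = ln(Omega)."""
    return math.log(omega)

omega_a = math.comb(10, 5)    # 10 coins, 5 heads: 252 microstates
omega_b = math.comb(20, 10)   # 20 coins, 10 heads: 184756 microstates
omega_ab = omega_a * omega_b  # every microstate of A can pair with every microstate of B

print(entropy_over_k(omega_ab))
print(entropy_over_k(omega_a) + entropy_over_k(omega_b))  # the same, up to rounding
```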

Order and Chaos

So how do we end up with the popular notion that Entropy is a measure of chaos? Well, consider a sand castle:

Image Credit: Wall Street Journal

A sand castle, compared to the surrounding beach, is a very specific structure. It requires specific arrangements of sand particles in order to form a proper structure.

This is opposed to the beach, where any loose arrangement of sand particles can be considered a 'beach'.

In this scenario, the castle has a very low multiplicity, because the macrostate of 'sandcastle' allows only a very restrictive set of microstates, whereas a beach or sand dune has a very large set of possible microstates.

In this way, we can see how the 'order' and 'structure' of the sand castle results in a low-entropy system.

However, this doesn't explain how we can get such complex systems if entropy is always meant to increase. How can life exist if the universe tends to turn things into a mess of particles, AND the universe started as a mess of particles?

The consideration to make, as we'll see, is that chaos is not the full picture: a flow of energy can be used to lower entropy locally, as long as the entropy increases by at least as much somewhere else.

Energy Macrostates

There's still a problem with our definition. Consider two macrostates:

The room exists

Atom 1 is Hydrogen in position (0, 0, 0), Atom 2 is Carbon in position (1, 0, 0), etc.

Macrostate one has a multiplicity so large it might as well be infinite, and is so general it encapsulates all possible states of the system.

Macrostate two is so specific that it only has a multiplicity of one.

Clearly we need some standard to set macrostates to.

What we do is define a macrostate by a single parameter: the amount of thermal energy in the system. We can also include things like volume or the number of particles, etc. But for now, a macrostate corresponds to a specific energy of the system.

This means that the multiplicity becomes a function of thermal energy, U.

S(U) = k ln Ω(U)

The Second Law of Thermodynamics

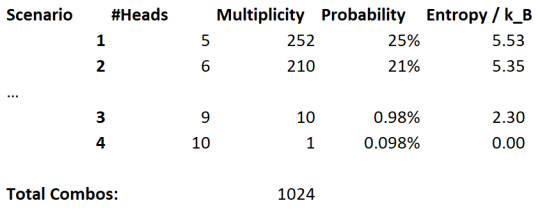

Let's consider a system which is determined by a bunch of flipped coins, say, 10 flipped coins.

H T H T H H H T T H

This may seem like a weird example, but it is genuinely useful. For example, it models atoms whose magnetic moments can only point with or against a magnetic field.

We can define the energy of the system as being a function of the number of heads we see. Thus an energy macrostate would be "X coins are heads".

Let's now say that every minute, we reset the system. i.e. we flip the coins again and use the new result.

Consider the probability of ending up in a certain macrostate every minute. We can use the binomial distribution to calculate this probability:

$$P(k) = \binom{n}{k} p^k (1-p)^{n-k}$$

Here, the column notation gives the choose function, which accounts for duplicates, as we are not concerned with the order in which we get any two tails or heads.

The p to the power of k is the probability of landing a head (50%) to the power of the number of heads we get (k). n-k becomes the number of tails obtained.

The choose function is symmetric, so we end up with equal probabilities with k heads as with k tails.

Let's come up with some scenarios:

There are equal numbers of heads and tails

There are exactly two more heads than tails (i.e. 6-4)

All coins are heads except for one

All coins are heads

And let's see these probabilities:

Clearly, it is more likely that we find equal numbers of heads and tails, but all coins being heads is not too unlikely. Also notice that the entropy correlates with probability here: a macrostate with larger entropy is more likely to occur.
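The four scenarios can be computed directly from the binomial formula with n = 10 and p = 0.5. For instance, equal heads and tails has Ω = 252 out of 2^10 = 1024 equally likely microstates (probability ≈ 0.246), while all heads has Ω = 1 (probability 1/1024 ≈ 0.001). A Python sketch that prints Ω, S/k = ln Ω and the probability for each:

```python
import math

def macrostate_probability(n, k, p=0.5):
    """Binomial probability of exactly k heads in n flips: C(n, k) * p^k * (1 - p)^(n - k)."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

n = 10
scenarios = {
    "equal heads and tails (5-5)": 5,
    "two more heads than tails (6-4)": 6,
    "all heads except one (9-1)": 9,
    "all heads (10-0)": 10,
}
for name, k in scenarios.items():
    omega = math.comb(n, k)
    print(f"{name:34s} Omega = {omega:3d}  S/k = {math.log(omega):.2f}  "
          f"P = {macrostate_probability(n, k):.4f}")
```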

Let's now increase the number of coin flips to 1000:

Well, now we can see this probability difference much more clearly. The "all coins are heads" macrostate is vanishingly unlikely, and macrostates close to maximum entropy are comparatively very likely.

If we keep increasing the number of flips, this effect becomes more and more extreme. And thus we get the tendency for this system to maximise the entropy, simply because it's most likely.
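One way to quantify this is to ask how likely it is that the fraction of heads lands within 5 percentage points of one half (45% to 55%). The sketch below sums exact binomial probabilities, relying on Python's arbitrary-precision integers so that 2^n doesn't overflow; the probability climbs towards 1 as n grows:

```python
import math

def prob_heads_fraction_within(n, lo=0.45, hi=0.55):
    """Probability that the fraction of heads in n fair flips lies in [lo, hi].

    Counts favourable microstates exactly with math.comb (big integers) and
    divides by 2**n; Python's int / int true division handles huge values.
    """
    favourable = sum(math.comb(n, k) for k in range(n + 1) if lo <= k / n <= hi)
    return favourable / 2**n

for n in (10, 100, 1000, 10000):
    print(n, round(prob_heads_fraction_within(n), 6))
```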

In real life, systems don't suddenly reset and restart. Instead we want to consider a system where every minute, one random coin is selected and then flipped.

Consider a system at maximum entropy undergoing this process. It's going to take an incredibly long time before it stumbles into something incredibly unlikely, like all heads.

But for a system at minimum entropy (all heads), any deviation from that state brings the entropy higher.

Thus the system has the tendency to end up being "trapped" in the higher entropy states.

This is the second law of thermodynamics. It doesn't really make a statement about very small systems: there, fluctuations are large and we have to treat the statistics more carefully. For large systems, we end up (effectively) ALWAYS seeing a global increase in entropy.
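The one-coin-at-a-time process is easy to simulate. This sketch (not from the post; n, the number of steps and the seed are arbitrary choices) starts from the all-heads minimum-entropy state and re-tosses one randomly chosen coin per step. The head count drifts down to about n/2 and then just jiggles around there, and in practice never finds its way back:

```python
import random

def relax_from_all_heads(n=1000, steps=20000, seed=0):
    """Start with every coin heads; each step, pick one coin at random and re-toss it.
    Returns the number of heads recorded after every step."""
    rng = random.Random(seed)
    coins = [1] * n          # 1 = heads, 0 = tails
    heads = n
    history = []
    for _ in range(steps):
        i = rng.randrange(n)
        new = rng.randint(0, 1)   # fair re-toss (randint is inclusive of both ends)
        heads += new - coins[i]
        coins[i] = new
        history.append(heads)
    return history

history = relax_from_all_heads()
for t in (0, 500, 1000, 2000, 5000, 19999):
    print(f"step {t:5d}: {history[t]} heads")
```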

How we get temperature

Temperature is often described informally as the capacity to transfer thermal energy. It sort of acts like a "heat potential" in the same way we have a "gravitational potential" or "electrical potential".

Temperature and Thermal Energy

What is the mathematical meaning of temperature?

Temperature is defined through a rate of change, specifically:

$$\frac{1}{T} = \frac{dS}{dU}$$

(Reminder this assumes Entropy is exclusively a function of energy)

The reason we define it as a derivative of entropy as entropy is an emergent property of a system. But thermal energy is something we can personally change.

What this rule means is that a system with a very low temperature will react greatly to minor inputs in energy. A low temperature system thus is really good at drawing energy from outside.

Conversely, a system at very high temperature has a small dS/dU: its entropy barely changes with small inputs of energy.
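As a sanity check on 1/T = dS/dU, here's a small Python sketch. It assumes the energy-dependent part of a monatomic ideal gas's entropy at fixed volume and particle number, S = 3/2 Nk ln U + constant, which is a standard result not derived in this post. The sketch takes the derivative numerically, checks that it gives back the temperature, and compares how much entropy 1 J of added energy buys at 10 K versus 1000 K. The function names are hypothetical.

```python
import math

K_B = 1.380649e-23    # Boltzmann constant, J/K
N_A = 6.02214076e23   # Avogadro constant, 1/mol


def entropy(u: float, n_particles: float) -> float:
    """Energy-dependent part of a monatomic ideal gas's entropy at fixed V and N.
    Assumed form S = 3/2 N k ln(U) + const; the constant cancels in dS/dU."""
    return 1.5 * n_particles * K_B * math.log(u)


def temperature(u: float, n_particles: float, rel_step: float = 1e-6) -> float:
    """T = 1 / (dS/dU), with dS/dU from a central finite difference."""
    du = u * rel_step
    ds_du = (entropy(u + du, n_particles) - entropy(u - du, n_particles)) / (2 * du)
    return 1.0 / ds_du


n = N_A  # one mole
results = {}
for t in (10.0, 1000.0):
    u = 1.5 * n * K_B * t                       # energy of the gas at temperature t
    gain = entropy(u + 1.0, n) - entropy(u, n)  # entropy gained from adding 1 J
    results[t] = (temperature(u, n), gain)
    print(f"T = {results[t][0]:7.2f} K: adding 1 J raises S by {gain:.5f} J/K")
```

The same 1 J raises the entropy roughly 100 times more at 10 K than at 1000 K. This is the "low temperature reacts greatly, high temperature reacts slightly" behaviour described above.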

At the extremes, absolute zero is where dS/dU becomes infinite, so any input of energy will lift the system out of absolute zero. According to the third law of thermodynamics, the entropy approaches a constant minimum value as the temperature approaches absolute zero, because the system settles into its lowest-energy state.

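Infinite temperature is where dS/dU = 0: adding energy no longer raises the entropy at all, so the system gains nothing by accepting new energy. In contact with any system at a finite positive temperature, it gives energy away rather than absorbing it, which is why it's effectively impossible to pump more heat into it.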

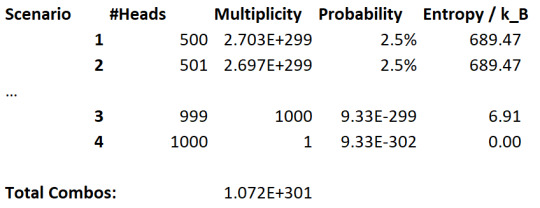

Negative Temperature???

Considering our coin-flipping example, let's try to put some numbers on this. Say we have n coins, and the thermal energy U of the system is equal to the number of heads.

Counting the ways to get U heads from n coins and using Stirling's approximation, this gives us an entropy (in units of Boltzmann's constant) of:

S ≈ U ln[n/U - 1] - n ln[1 - U/n]

The derivative of this is:

dS/dU = ln[n/U - 1]

Note how this value is zero at exactly U = n/2 (infinite temperature) and becomes negative once U > n/2, i.e. once more than half of the coins are heads. Implying a negative temperature!

But how is this possible? What does this mean?

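Negative temperatures are less exotic than they sound: they just mean that inputting energy decreases entropy and removing energy increases entropy. This can only happen in systems whose energy has an upper limit, like our coins (you can't have more than n heads).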

What this means is that a system at a negative temperature, when put in contact with a system at a positive temperature, will spontaneously dump energy into it, since losing energy increases its own entropy.
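Here's a hedged Python sketch of that energy flow, assuming two coin systems that can trade units of energy (heads). The most likely final split is the one that maximises the total entropy S_A + S_B, which the sketch finds by brute force. System A starts at a negative temperature (80 of 100 coins heads). The function names are hypothetical.

```python
import math


def ln_omega(n: int, u: int) -> float:
    """ln C(n, u): entropy (units of k) of n coins with u heads."""
    return math.lgamma(n + 1) - math.lgamma(u + 1) - math.lgamma(n - u + 1)


def equilibrium_energy_a(n_a: int, n_b: int, u_total: int) -> int:
    """Energy of system A that maximises S_A + S_B when coin systems A and B
    share u_total units of energy (one unit per head)."""
    allowed = range(max(0, u_total - n_b), min(n_a, u_total) + 1)
    return max(allowed, key=lambda u_a: ln_omega(n_a, u_a) + ln_omega(n_b, u_total - u_a))


# A starts with 80 heads out of 100 (U > n/2, so negative temperature).
# B starts with 20 heads out of 100 (positive temperature).
u_a_final = equilibrium_energy_a(100, 100, 80 + 20)
# Same A, but now touching a bigger, cold system: 100 heads out of 1000.
u_a_final_big = equilibrium_energy_a(100, 1000, 80 + 100)
print(f"A: 80 -> {u_a_final} (equal-size partner)")
print(f"A: 80 -> {u_a_final_big} (large cold partner)")
```

In both cases A ends up with far fewer heads than it started with. It gives energy away until it's back at a positive temperature.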

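This actually means that negative temperature is beyond infinite temperature. In the coin example, as U rises past n/2, the temperature climbs to +∞, flips to -∞, and then rises towards -0 as U approaches n. A fully expanded temperature scale looks like this: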

[The Planck temperature, about 1.4 × 10³² K, is often quoted as the highest temperature possible without creating a black hole]

[Note that 0 K = -273.15 °C = -459.67 °F and 273.15 K = 0 °C = 32 °F]

This implies that -0 K is a sort of 'absolute hot': a temperature so hot that the system desperately tries to bleed energy, much like a system at absolute zero tries to suck energy in.
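To see why negative temperatures sit "above" infinity, here's a Python sketch using the coin result 1/T = ln[n/U - 1] (units where k = 1). As U increases, 1/T decreases smoothly through zero, while T itself jumps from +∞ to -∞. Ordering by 1/T (sometimes called the "coldness") gives the scale +0 K, ..., +∞, -∞, ..., -0 K from coldest to hottest. The function names are my own.

```python
import math


def inverse_temperature(n: int, u: int) -> float:
    """1/T = dS/dU = ln[n/U - 1] for n coins with U heads (k = 1)."""
    return math.log(n / u - 1)


def temperature(n: int, u: int) -> float:
    """T = 1 / (dS/dU); infinite where dS/dU = 0 (exactly half heads)."""
    beta = inverse_temperature(n, u)
    return math.inf if beta == 0 else 1.0 / beta


n = 1000
energies = [10, 250, 490, 500, 510, 750, 990]
betas = [inverse_temperature(n, u) for u in energies]
for u, beta in zip(energies, betas):
    print(f"U = {u:4d}: 1/T = {beta:+.4f}, T = {temperature(n, u):+.3f}")
```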

Heat and the First Law of Thermodynamics

So, what do we do with this information then? How do we actually convert this into something meaningful?

Here, we start to pull in the first law of thermodynamics, which originates with the thermodynamic identity:

dU = T dS - P dV

Note that the 'd' in front of each variable just means a 'tiny change' in that variable, and P and V are the pressure and volume. Here, U is expanded to be the total internal energy of the system, which also changes when work is done to compress or expand it (the P dV term).

This identity gives us the first law:

dU = Q - W

Where Q, the heat added to the system, is identified with T dS, so that dS = Q/T (for a slow, reversible change).

And W, the mechanical work done by the system, is P dV, where the pressure is P = -dU/dV with the entropy held fixed.

Both heat and work describe the changes to the energy of the system. Heating a system means you are inputting an energy Q.

If no heat is entering or exiting the system (Q = 0), then dU = -W: any work done by or on the system must be matched by an equal change in its thermal energy.
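For the insulated (Q = 0) case, here's a hedged numerical check in Python. It assumes a monatomic ideal gas (U = 3/2 NkT, PV = NkT) expanding slowly and reversibly, so that PV^γ stays constant with γ = 5/3. That adiabatic relation is a standard textbook result and isn't derived in this post. The sketch integrates P dV to get the work done by the gas and compares it with the drop in U. The function name and the chosen volumes are illustrative.

```python
K_B = 1.380649e-23    # Boltzmann constant, J/K
N_A = 6.02214076e23   # Avogadro constant, 1/mol
GAMMA = 5.0 / 3.0     # assumed: monatomic ideal gas


def adiabatic_expansion(n_particles: float, t1: float, v1: float, v2: float,
                        steps: int = 10_000) -> tuple[float, float]:
    """Return (W, dU) for a slow adiabatic (Q = 0) expansion from v1 to v2."""
    nk = n_particles * K_B
    p1 = nk * t1 / v1                       # PV = NkT at the start

    def pressure(v: float) -> float:
        return p1 * (v1 / v) ** GAMMA       # PV^gamma stays constant

    # Work done BY the gas, W = integral of P dV (trapezoidal rule).
    h = (v2 - v1) / steps
    work = 0.5 * (pressure(v1) + pressure(v2))
    for i in range(1, steps):
        work += pressure(v1 + i * h)
    work *= h

    # Final temperature from T V^(gamma - 1) = const, then U = 3/2 NkT.
    t2 = t1 * (v1 / v2) ** (GAMMA - 1.0)
    delta_u = 1.5 * nk * (t2 - t1)
    return work, delta_u


work, delta_u = adiabatic_expansion(N_A, 300.0, 0.010, 0.020)
print(f"W = {work:.2f} J, dU = {delta_u:.2f} J, dU + W = {delta_u + work:.2e} J")
```

The gas does positive work, and its thermal energy drops by the same amount, so dU + W comes out at essentially zero.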

Since we managed to phrase temperature as a function of thermal energy, we can now develop what's known as an equation of state of the system.

For a monatomic ideal gas (a gas of single atoms, like helium), we have an energy:

U = 3/2 NkT

Where N is the number of particles and k is the Boltzmann constant.

We can also define another equation of state for an ideal gas:

PV = NkT

Which is the ideal gas law.
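Plugging numbers into both equations of state, here's a minimal Python sketch for one mole of a monatomic ideal gas at 0 °C in 22.414 litres. The function name is hypothetical.

```python
K_B = 1.380649e-23    # Boltzmann constant, J/K
N_A = 6.02214076e23   # Avogadro constant, 1/mol


def monatomic_ideal_gas(n_particles: float, temperature: float,
                        volume: float) -> tuple[float, float]:
    """Return (U, P) from U = 3/2 NkT and PV = NkT."""
    nkt = n_particles * K_B * temperature
    return 1.5 * nkt, nkt / volume


# one mole at 0 °C in 22.414 litres (0.022414 m^3)
u, p = monatomic_ideal_gas(N_A, 273.15, 0.022414)
print(f"U = {u:.1f} J, P = {p:.0f} Pa")
```

The pressure comes out at about 101 kPa, roughly one atmosphere. That's the familiar rule of thumb that one mole of gas occupies about 22.4 litres at 0 °C.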

So what is heat then?

Q is the energy added to the system as heat (a change), whereas U is the total thermal energy the system contains.

From the ideal gas equation of state, the thermal energy is proportional to temperature. In many cases, we can roughly think of thermal energy as temperature scaled by the size of the system (for the ideal gas, U = 3/2 NkT is just T scaled by the number of particles).

Thermal energy is strange. Unlike other classical forms of energy, it can be difficult to classify it as potential or kinetic.

It's a form of kinetic energy as U relies on the movement of particles and packets within the system.

It's a form of potential energy, as it has the potential to transfer its energy to other systems.

Generally, the idea that temperature is related to the 'speed' at which particles move is not too far off. In fact, for an ideal gas we often use the relation:

1/2 m ⟨v^2⟩ = 3/2 k T

when performing calculations, where m is the mass of one particle and ⟨v^2⟩ is the average of its squared speed. Summed over all N particles, this gives back U = 3/2 NkT.
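As a quick worked example, here's a Python sketch that solves 1/2 m ⟨v^2⟩ = 3/2 kT for the root-mean-square speed of helium atoms. It takes the mass of one helium-4 atom as approximately 4.0026 atomic mass units, and the function name is my own.

```python
import math

K_B = 1.380649e-23                    # Boltzmann constant, J/K
ATOMIC_MASS_UNIT = 1.66053906660e-27  # kg


def rms_speed(mass_kg: float, temperature: float) -> float:
    """Root-mean-square speed from 1/2 m <v^2> = 3/2 k T."""
    return math.sqrt(3.0 * K_B * temperature / mass_kg)


helium_mass = 4.0026 * ATOMIC_MASS_UNIT   # mass of one helium-4 atom (approx.)
v_300 = rms_speed(helium_mass, 300.0)
v_1200 = rms_speed(helium_mass, 1200.0)
print(f"He at 300 K:  v_rms = {v_300:.0f} m/s")
print(f"He at 1200 K: v_rms = {v_1200:.0f} m/s")
```

At room temperature this gives a bit under 1400 m/s. Quadrupling the temperature only doubles the speed, since v scales with the square root of T.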

Of course, temperature, as mentioned earlier, acts as a sort of potential: roughly, it describes how much thermal energy each particle has available to transfer.

Heat, then, is effectively the transfer of thermal energy caused by differences in temperature. We quantify how much heat a system absorbs or gives off per degree of temperature change using its heat capacity, C = Q/ΔT. Note that this is different from temperature.

Temperature is about how much the system wants to give off energy, whereas heat capacity is about how much energy it gives off (or absorbs) for each degree its temperature changes. The heat transferred, Q = C ΔT, accounts for both.
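For the monatomic ideal gas from earlier, the heat capacity at constant volume follows from U = 3/2 NkT as C_V = dU/dT = 3/2 Nk. This Python sketch assumes constant volume, so no work is done and Q equals the change in U. It shows that twice as much gas at the same temperature needs twice the heat for the same temperature rise. The function names are hypothetical.

```python
K_B = 1.380649e-23    # Boltzmann constant, J/K
N_A = 6.02214076e23   # Avogadro constant, 1/mol


def heat_capacity_v(n_particles: float) -> float:
    """C_V = dU/dT = 3/2 N k for a monatomic ideal gas (U = 3/2 NkT)."""
    return 1.5 * n_particles * K_B


def heat_needed(n_particles: float, delta_t: float) -> float:
    """Heat Q = C_V * delta_T to warm the gas at constant volume."""
    return heat_capacity_v(n_particles) * delta_t


q_one_mole = heat_needed(N_A, 10.0)
q_two_moles = heat_needed(2 * N_A, 10.0)
print(f"1 mol, +10 K: Q = {q_one_mole:.2f} J")
print(f"2 mol, +10 K: Q = {q_two_moles:.2f} J")
```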

Conclusion

This post was honestly a much nicer write-up, and I'd say the same about the E = mc^2 one. The main reason is that I already know this stuff: I was taught all of it 1-4 years ago in high school or university.

My computer is busted, so I'm using a different device to work on this. And because of that I do not have access to my notes. So I don't actually know what I have planned for next week. I think I might decide to override it anyways with something else. Maybe the E = mc^2 one.

As always, feedback is very welcome. Especially because this topic is one I should be knowledgeable about at this point.

Don't forget to SMASH that subscribe button so I can continue to LARP as a youtuber. It's actually strangely fun saying "smash the like button" - but I digress. It doesn't ultimately matter if you wanna follow idc, some people just don't like this stuff weekly.

#stem#academics#physics friday#physics#stemblr#science#thermodynamics#temperature#entropy#statistical mechanics

39 notes


Text

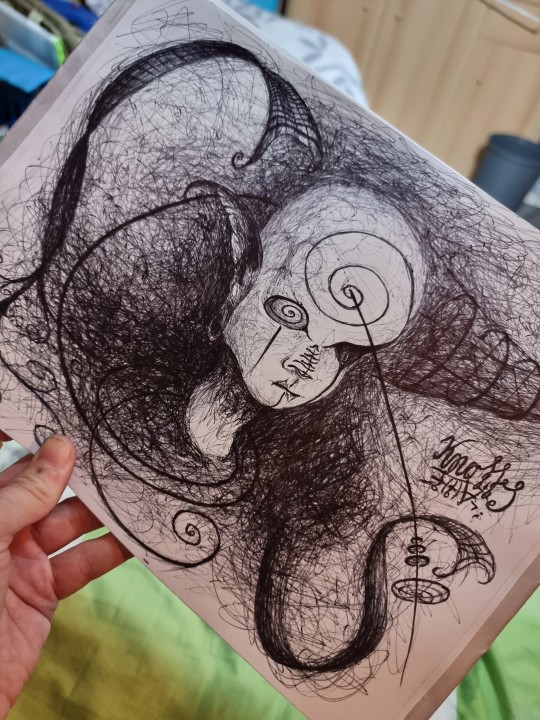

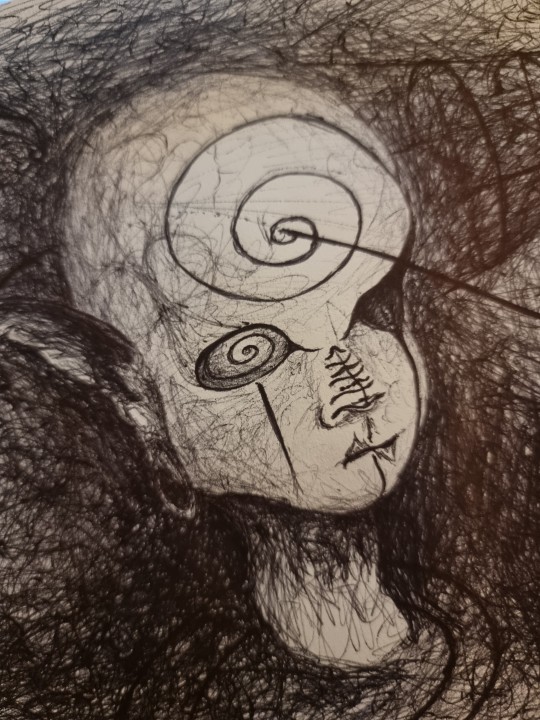

The Face of the Turbulenzentänzer

Today's drawing - Title: "Face of the Turbulenzentänzer* - Disintegration and Re-integration"

* "Turbulenzentänzer" is a German neologism and translates to "dancer of turbulences" or "turbulence dancer".

(Ballpoint pen on DIN A4 paper)

[2023/10/31]

- - -- --- -----

Details:

#surrealism#surreal#surreal art#spirals#curved space#alien#alienation#depersonalization#derealisation#disintegration and reintegration#dissipation#thermodynamics#flow#turbulenzentänzer#turbulence#turbulent flow#fluid dynamics#life#metaphor#knottys art#knotty et al#drawing#ballpoint pen#the red thread of time#red thread of time#knotty#art

30 notes


Video

I’m sick and feel like I’ve been run over BUT I will be pulling thermo hoes🥱🫡

#video#tiktok#tiktoks#story time#funny#lmao#wtf#school#class#classes#university#college#thermodynamics#art#artist#artists#khaibellamy

65 notes


Text

Magma rises from deep underground and enters the magma chamber, then rises up through the volcanic vent, and dinner is served.

30 notes


Text

Thermodynamics midterms are sucking the joy out of my existence

Laptop wallpaper by @emmastudies 🖤

77 notes


Text

At 5:29 am on the morning of 16 July 1945, in the state of New Mexico, a dreadful slice of history was made.

The dawn calm was torn asunder as the United States Army detonated a plutonium implosion device known as the Gadget – the world's very first test of a nuclear bomb, known as the Trinity test. This moment would change warfare forever.

The energy release, equivalent to 21 kilotons of TNT, vaporized the 30-meter (98 ft) test tower and miles of copper wires connecting it to recording equipment. The resulting fireball fused the tower and copper with the asphalt and desert sand below into green glass – a new mineral called trinitite.

Decades later, scientists discovered a secret hidden in a piece of that trinitite – a rare form of matter known as a quasicrystal, once thought to be impossible.

"Quasicrystals are formed in extreme environments that rarely exist on Earth," geophysicist Terry Wallace of Los Alamos National Laboratory explained in 2021.

Continue Reading.

166 notes


Text

42 notes


Text

The science of static shock jolted into the 21st century

Shuffling across the carpet to zap a friend may be the oldest trick in the book, but on a deep level that prank still mystifies scientists, even after thousands of years of study.

Now Princeton researchers have sparked new life into static. Using millions of hours of computational time to run detailed simulations, the researchers found a way to describe static charge atom-by-atom with the mathematics of heat and work. Their paper, "Thermodynamic driving forces in contact electrification between polymeric materials," appears in Nature Communications.

The study looked specifically at how charge moves between materials that do not allow the free flow of electrons, called insulating materials, such as vinyl and acrylic. The researchers said there is no established view on what mechanisms drive these jolts, despite the ubiquity of static: the crackle and pop of clothes pulled from a dryer, packing peanuts that cling to a box.

Read more.

32 notes


Text

2023 Feb 21

it’s been so long but finally understanding how the Rankine cycle works was so satisfying 🤧 hope everyone’s doing well <3

77 notes
