#red data

Text

2024.4.28 Mt. Narukami

Kakkosō (カッコソウ, also written 勝紅草)

Primula kisoana var. shikokiana

Supposedly, when Siebold sent specimens to the Netherlands, he mistakenly recorded the locality as "Kiso"?

The flower that exists nowhere but here in the world

endemic species

red data

#flower#pink#primula#nature#wild flower#endemic species#red data#flower that exists nowhere but here#Mt.Narukami#Gunma prefecture#Japan

17 notes


Text

Mord Fustang - Red Data

Mord Fustang / © 2023 Dawn of Light | Record Cover | Made in 2023

62 notes


Text

first meeting

#rdr2#red dead redemption#red dead redemption 2#arthur morgan#issac morgan#man sees baby for the first time ever and is surprised that theyre tiny little creatures#arthur being the man he is mustve had SO many emotions holding issac for the first time. and this is all he manages to say in the moment#im lowkey projecting bc when i met my niece i absolutely REFUSED to hold her until i saw her the second time and i was like.#oh my fucking god shes so fucking tiny#anyway. i hope nothing bad happens to them ever<3#btw arthurs clothes come from an edit of a young version of him that i saw#and i also saw a video of someone managing to find a repurposed model of eliza in rdr2s data and shes got dark hair!#so i gave issac dark hair (and arthurs blue eyes<3)#arthurs definitely into people with dark hair#pspspspsp arthur i have dark hair pspspspspspspsp#AHEM anyway-#sorry i cant draw babies even tho i see one all the time now LMFAO#my art

6K notes


Text

my suitcase for a week in Rome 🏛

#lolita fashion#classic lolita#oldschool lolita#egl fashion#baby the stars shine bright#mine#was going for a red-beige theme for this trip to match the architecture#I have more photos to post I just had no internet on the trip lol and tumblr does not load well on my data 😭#already back :( I missed my bunny and my apartment though#and going back to Europe soon ^^ so it’s not too sad

470 notes


Text

DP x DC AU: Danny desperately wants to find the explosion guy. Tim is really good at covering his tracks... he didn't account for ghosts.

The explosions make it onto TV as purported terror activity, and most people haven't heard of that part of the world, much less given it a second thought. The only real reason it gets reported on has something to do with the Justice League and... Danny knows too much.

He's been in training for Clockwork's court (which he's suspicious of- feels like kingly duty bullshit- but Danny is playing along out of curiosity for now) and he's learned a lot about how the living and non-living worlds collide. That means learning about CW's usual suspects- one of which just happened to have a ton of bases around the area Danny was seeing on the news.

It didn't take long for Danny to try to piece together that whoever blew up Nanda Parbat was trying to fuck with the League of Shadows, and was doing it successfully. The fewer green portals in the world the better, and the same goes for assassins. But it gets Danny thinking... Maybe he can employ similar tactics on the GIW bases that keep spawning on the edges of Amity Park. It would at least set them back while he and his friends navigated the help line desk to request Justice League intervention. None of them can leave Amity Park, so outreach is going to have to be creative.

So Danny figures he'll just find the guy. Call up some ghosts who were there, or er, came from there and get a profile and track him down. But the ghosts keep saying it was The Detective. Annoying!

Danny goes full conspiracy theory, gets Tucker and Sam involved, and begrudgingly asks Wes Weston his thoughts.

He hadn't expected Wes to garble out a thirty minute presentation (that had 100 more slides left to go before he cut it off) about how Batman totally trained with a cult and so did his kids. Danny kind of rolled his eyes but... hey, new avenue of searching in the Infinite Realms at least.

The ghosts confirm that bombs are for sure not Batman's MO- but maybe his second kid would know? The second kid was already brought back to life though, so there's no easy way to reach him... Danny starts to realize that this might be the work of a Robin now. Wasn't the red one known for solving cold cases? (Sam provides this information- it's a social faux pas not to know hero gossip at Gotham Galas- everything she's learned is against her will.)

It all comes to a head when Danny takes on the hard task of opening a portal for the guy to come through at just the right time, explaining the Infinite Realms so he doesn't panic, and then describing what the fuck is going on with the GIW. It takes months, just over a full year in fact, of random (well, educated-guess) portal generating- finally, Red Robin drops into the land of the dead.

"So, you're the guy I've got to talk to about explosions right?" Danny enthusiastically asks.

Tim thinks he's died and landed in the afterlife following 56 hours of being awake and then plummeting off the side of a building into a Lazarus pool. Nothing makes sense about the kid in front of him.

"Yeah, I got a guy for munitions," Tim answers coolly.

"How do you feel about secretly sanctioned government operations that violate protected rights?"

"Gotta get rid of 'em somehow. Need me to point you in the right direction?" This might as well be happening.

#dcxdp#dpxdc#dp x dc#dc x dp#danny phantom#long post#tim drake#red robin#tim and danny team up to blow up the GIW au#Tim being known as the explosion guy is my favorite and i will not let this part of his lore go ignored#Jason is the munitions guy obviously and the ghosts go crazy over the gossip it sparks when he helps in amity park#Danny one hundred percent is living for this working relationship- what a weirdo -danny hasn't met someone stranger than himself in a min#tim bartering his services for a few more years of life and danny just pikachu facing him#Tim wants to improve his relations in the afterlife but he still completely thinks hes dead#danny: dude ur still alive#Tim: yeah thats the goal but i'll help you meet your goals first and then we can negotiate#Danny decides to make the guy super ghost rich (thinking big Haunt real estate) and send him home#Tim blows up the GIW with no remorse and with all the data backup for proper justice to be served court side#tim returns from the dead and this is how the bats learn that he's the one who blew up nanda parbat all those years ago#it takes danny so long to find tim bc tim was spiralling and only after bruce got back did he get into a normal routine enough to get got

641 notes


Text

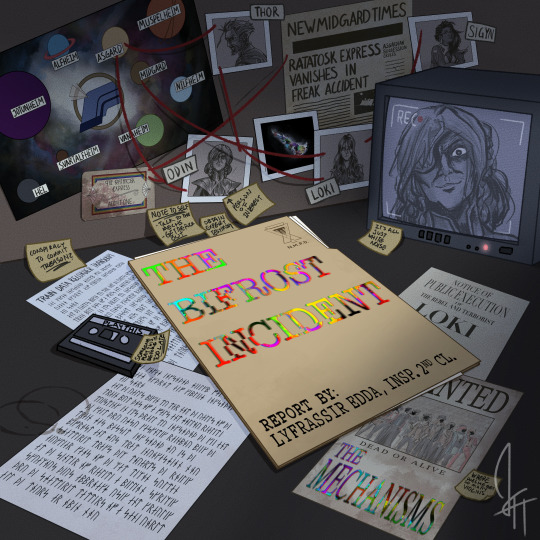

i just remembered that i can in fact post Old Art so here is an album cover project from last semester

Details below!

This was the second project of my Illustration Intensive I class last semester, and we had to either design or redesign an album cover, so I chose The Mechanisms

During the project I took a 4-hour detour trying to translate the Red Signal chant into Norse runes, aka I threw it through a translator and tried (emphasis on tried) via Wikipedia to fix any Weirdness despite knowing next to nothing about runes so it's probably. Incredibly wrong lol

#the mechanisms#the bifrost incident#tbi#the mechs#artists on tumblr#digital art#my art#this was what listening to various mechs albums while working on projects Does to me#as a person who is Not a fan of perspective#having to draw Okay Perspective while everything is at an angle is a special ring of hell on its own#yes the runes on the train data transcript is still also just the red signal chant bc it was seven am#and like two hours before it was due lol#had to pull an all nighter for this#but still i rly enjoyed this project! 10/10 would never do it again <3 :D#jiaxxart

486 notes


Text

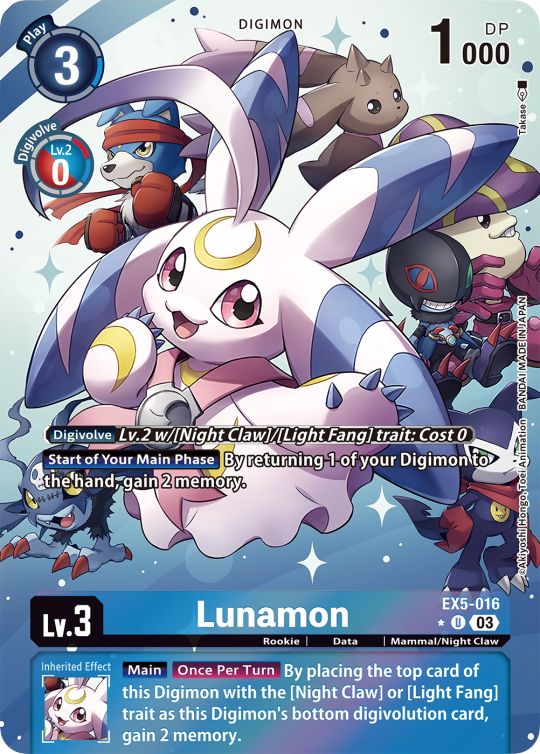

Lunamon EX5-016 and Coronamon EX5-007 Alternative Arts by Takase from EX-05 Theme Booster Animal Colosseum

These Alternative Arts show Lunamon and Coronamon alongside the other Digimon that were featured on their respective Japanese box arts of Digimon Story Moonlight and Sunburst!

#digimon#digimon tcg#digimon card game#digisafe#digica#デジカ#fan favorites#digimon references#Lunamon#Coronamon#Takase#EX5#digimon card#color: blue#color: red#Lv3#type: data#type: vaccine#trait: mammal#trait: beast#trait: night claw#trait: light fang#num: 03#AA

382 notes


Text

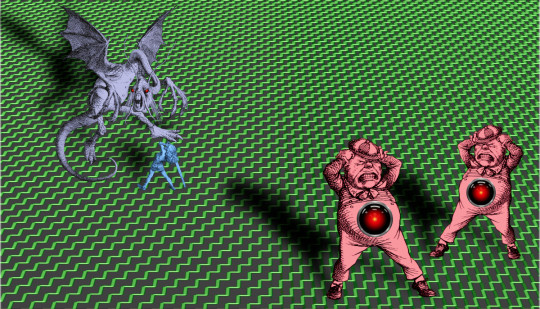

The real AI fight

Tonight (November 27), I'm appearing at the Toronto Metro Reference Library with Facebook whistleblower Frances Haugen.

On November 29, I'm at NYC's Strand Books with my novel The Lost Cause, a solarpunk tale of hope and danger that Rebecca Solnit called "completely delightful."

Last week's spectacular OpenAI soap-opera hijacked the attention of millions of normal, productive people and nonconsensually crammed them full of the fine details of the debate between "Effective Altruism" (doomers) and "Effective Accelerationism" (AKA e/acc), a genuinely absurd debate that was allegedly at the center of the drama.

Very broadly speaking: the Effective Altruists are doomers, who believe that Large Language Models (AKA "spicy autocomplete") will someday become so advanced that they could wake up and annihilate or enslave the human race. To prevent this, we need to employ "AI Safety" – measures that will turn superintelligence into a servant or a partner, not an adversary.

Contrast this with the Effective Accelerationists, who also believe that LLMs will someday become superintelligences with the potential to annihilate or enslave humanity – but they nevertheless advocate for faster AI development, with fewer "safety" measures, in order to produce an "upward spiral" in the "techno-capital machine."

Once-and-future OpenAI CEO Altman is said to be an accelerationist who was forced out of the company by the Altruists, who were subsequently bested, ousted, and replaced by Larry fucking Summers. This, we're told, is the ideological battle over AI: should we cautiously progress our LLMs into superintelligences with safety in mind, or go full speed ahead and trust to market forces to tame and harness the superintelligences to come?

This "AI debate" is pretty stupid, proceeding as it does from the foregone conclusion that adding compute power and data to the next-word-predictor program will eventually create a conscious being, which will then inevitably become a superbeing. This is a proposition akin to the idea that if we keep breeding faster and faster horses, we'll get a locomotive:

https://locusmag.com/2020/07/cory-doctorow-full-employment/

As Molly White writes, this isn't much of a debate. The "two sides" of this debate are as similar as Tweedledee and Tweedledum. Yes, they're arrayed against each other in battle, so furious with each other that they're tearing their hair out. But for people who don't take any of this mystical nonsense about spontaneous consciousness arising from applied statistics seriously, these two sides are nearly indistinguishable, sharing as they do this extremely weird belief. The fact that they've split into warring factions on its particulars is less important than their unified belief in the certain coming of the paperclip-maximizing apocalypse:

https://newsletter.mollywhite.net/p/effective-obfuscation

White points out that there's another, much more distinct side in this AI debate – as different and distant from Dee and Dum as a Beamish Boy and a Jabberwock. This is the side of AI Ethics – the side that worries about "today’s issues of ghost labor, algorithmic bias, and erosion of the rights of artists and others." As White says, shifting the debate to existential risk from a future, hypothetical superintelligence "is incredibly convenient for the powerful individuals and companies who stand to profit from AI."

After all, both sides plan to make money selling AI tools to corporations, whose track record in deploying algorithmic "decision support" systems and other AI-based automation is pretty poor – like the claims-evaluation engine that Cigna uses to deny insurance claims:

https://www.propublica.org/article/cigna-pxdx-medical-health-insurance-rejection-claims

On a graph that plots the various positions on AI, the two groups of weirdos who disagree about how to create the inevitable superintelligence are effectively standing on the same spot, and the people who worry about the actual way that AI harms actual people right now are about a million miles away from that spot.

There's that old programmer joke, "There are 10 kinds of people, those who understand binary and those who don't." But of course, that joke could just as well be, "There are 10 kinds of people, those who understand ternary, those who understand binary, and those who don't understand either":

https://pluralistic.net/2021/12/11/the-ten-types-of-people/

What's more, the joke could be, "there are 10 kinds of people, those who understand hexadecenary, those who understand pentadecenary, those who understand tetradecenary [and so on] those who understand ternary, those who understand binary, and those who don't." That is to say, a "polarized" debate often has people who hold positions so far from the ones everyone is talking about that those belligerents' concerns are basically indistinguishable from one another.
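The arithmetic behind the joke: the numeral "10" means one times the base plus zero, so in any base it names the base itself (two in binary, three in ternary, sixteen in base 16). A minimal Python illustration using the built-in `int()`, which parses a string in any base from 2 to 36; the helper name `kinds_of_people` is just for illustration:

```python
def kinds_of_people(base: int) -> int:
    """Value of the numeral "10" read in the given base (2 to 36)."""
    # int() parses the string in that base: 1 * base + 0 * 1 == base
    return int("10", base)


for base in (2, 3, 14, 15, 16):
    print(f'"10" in base {base} means {kinds_of_people(base)}')
```

Each line prints the base back, e.g. `"10" in base 16 means 16`.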

The act of identifying these distant positions is a radical opening up of possibilities. Take the indigenous philosopher chief Red Jacket's response to the Christian missionaries who sought permission to proselytize to Red Jacket's people:

https://historymatters.gmu.edu/d/5790/

Red Jacket's whole rebuttal is a superb dunk, but it gets especially interesting where he points to the sectarian differences among Christians as evidence against the missionary's claim to having a single true faith, and in favor of the idea that his own people's traditional faith could be co-equal among Christian doctrines.

The split that White identifies isn't a split about whether AI tools can be useful. Plenty of us AI skeptics are happy to stipulate that there are good uses for AI. For example, I'm 100% in favor of the Human Rights Data Analysis Group using an LLM to classify and extract information from the Innocence Project New Orleans' wrongful conviction case files:

https://hrdag.org/tech-notes/large-language-models-IPNO.html

Automating "extracting officer information from documents – specifically, the officer's name and the role the officer played in the wrongful conviction" was a key step to freeing innocent people from prison, and an LLM allowed HRDAG – a tiny, cash-strapped, excellent nonprofit – to make a giant leap forward in a vital project. I'm a donor to HRDAG and you should donate to them too:

https://hrdag.networkforgood.com/

Good data-analysis is key to addressing many of our thorniest, most pressing problems. As Ben Goldacre recounts in his inaugural Oxford lecture, it is both possible and desirable to build ethical, privacy-preserving systems for analyzing the most sensitive personal data (NHS patient records) that yield scores of solid, ground-breaking medical and scientific insights:

https://www.youtube.com/watch?v=_-eaV8SWdjQ

The difference between this kind of work – HRDAG's exoneration work and Goldacre's medical research – and the approach that OpenAI and its competitors take boils down to how they treat humans. The former treats all humans as worthy of respect and consideration. The latter treats humans as instruments – for profit in the short term, and for creating a hypothetical superintelligence in the (very) long term.

As Terry Pratchett's Granny Weatherwax reminds us, this is the root of all sin: "sin is when you treat people like things":

https://brer-powerofbabel.blogspot.com/2009/02/granny-weatherwax-on-sin-favorite.html

So much of the criticism of AI misses this distinction – instead, this criticism starts by accepting the self-serving marketing claim of the "AI safety" crowd – that their software is on the verge of becoming self-aware, and is thus valuable, a good investment, and a good product to purchase. This is Lee Vinsel's "Criti-Hype": "taking press releases from startups and covering them with hellscapes":

https://sts-news.medium.com/youre-doing-it-wrong-notes-on-criticism-and-technology-hype-18b08b4307e5

Criti-hype and AI were made for each other. Emily M Bender is a tireless cataloger of criti-hypeists, like the newspaper reporters who breathlessly repeat "completely unsubstantiated claims (marketing)…sourced to Altman":

https://dair-community.social/@emilymbender/111464030855880383

Bender, like White, is at pains to point out that the real debate isn't doomers vs accelerationists. That's just "billionaires throwing money at the hope of bringing about the speculative fiction stories they grew up reading – and philosophers and others feeling important by dressing these same silly ideas up in fancy words":

https://dair-community.social/@emilymbender/111464024432217299

All of this is just a distraction from real and important scientific questions about how (and whether) to make automation tools that steer clear of Granny Weatherwax's sin of "treating people like things." Bender – a computational linguist – isn't a reactionary who hates automation for its own sake. Case in point: Mystery AI Hype Theater 3000 – the excellent podcast she co-hosts with Alex Hanna – has a machine-generated transcript:

https://www.buzzsprout.com/2126417

There is a serious, meaty debate to be had about the costs and possibilities of different forms of automation. But the superintelligence true-believers and their criti-hyping critics keep dragging us away from these important questions and into fanciful and pointless discussions of whether and how to appease the godlike computers we will create when we disassemble the solar system and turn it into computronium.

The question of machine intelligence isn't intrinsically unserious. As a materialist, I believe that whatever makes me "me" is the result of the physics and chemistry of processes inside and around my body. My disbelief in the existence of a soul means that I'm prepared to think that it might be possible for something made by humans to replicate something like whatever process makes me "me."

Ironically, the AI doomers and accelerationists claim that they, too, are materialists – and that's why they're so consumed with the idea of machine superintelligence. But it's precisely because I'm a materialist that I understand these hypotheticals about self-aware software are less important and less urgent than the material lives of people today.

It's because I'm a materialist that my primary concerns about AI are things like the climate impact of AI data-centers and the human impact of biased, opaque, incompetent and unfit algorithmic systems – not science fiction-inspired, self-induced panics over the human race being enslaved by our robot overlords.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/11/27/10-types-of-people/#taking-up-a-lot-of-space

Image:

Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#criti-hype#ai doomers#doomers#eacc#effective acceleration#effective altruism#materialism#ai#10 types of people#data science#llms#large language models#patrick ball#ben goldacre#trusted research environments#science#hrdag#human rights data analysis group#red jacket#religion#emily bender#emily m bender#molly white

287 notes


Text

Bruh soriku got the floral arch, the dearly beloved wedding march, and the paopu fruit 💀

#they got the rings too if the halves of the keyblade count#an official jewelry maker seemed to think so#kingdom hearts#soriku#thinking about that door in recoded forever. like whatbthefuck#none of the other doors look like this just the one data riku makes for data sora#to leave his heart/system/whatever#the stained glass is obviously connected to heart stations#and the purple is similar to riku’s heart station#but data riku is not just riku. he has sora’s memories. which explains the red and pink#probably#but like why does this door look like this. what was the reason nomura#how much more marriage symbolism can you give them#also it’s funny of him to debate whether or not to have sora and kairi share paopu#bc he didn’t want to portray their feelings as too intense#after he gave sora and riku’s combined hearts a paopu fruit#like. okay

315 notes


Text

red bull: daniel’s bad habits from mclaren are fixed, he did well in the sim, and his tire test was so good that we immediately knew he was ready to be in a car.

alpha tauri & yuki: daniel’s feedback on the car has been instrumental, and he performed so well in difficult race conditions even though he didn’t have any upgrades and had some bad luck.

rando twitter user who doesn’t have the data, didn’t know liam lawson’s name three weeks ago, and constantly insults the way red bull quickly drops drivers who don’t perform: they’re only letting him drive for pr even though he’s a washed up failure.

#like is red bull too harsh on their drivers or are they hiring a pr merchant who can’t perform. pick a side.#it’s just hilarious that every single person who actually knows the sport & daniel’s data/contributions#won’t shut up about how helpful he’s been and how well he’s done and how mclaren doesn’t reflect his performance#but these random people on twitter think they know better#and their excuse is ‘well liam scored points’ as if he isn’t driving a different car entirely lmfao#like liam has done well! i like liam a lot!#but he’s benefited from a better car/things happening around him in the race/not being head to head against yuki#he’s done a great job but there’s literally been ZERO real head to head comparison between the 3 drivers#but alpha tauri/red bull rate daniel and they have his data and know what hes done for the car & setup#i don’t ever deny that daniel’s popularity certainly doesn’t hurt#but they dropped nyck like he was nothing#red bull axes anyone who can’t perform#if daniel couldn’t do it then he wouldn’t have a seat. not that hard to understand.#people on the internet make me feral. i cannot use the internet. i need to stick to my curated feeds bc everyone is so STUPID.

230 notes


Text

2023.5.21 Mt. Takao

The elusive flower, benibana-yamashakuyaku (red-flowered mountain peony), was in bloom!

157 notes


Text

the red mist

original image

143 notes


Text

#rvb#red vs blue#polls#I know there's so many polls but I need empirical evidence that Locus is the prettiest man through data

135 notes


Text

oops all omnimon but like. fighter mode sage y'know?

#sonic#sage#sage the ai#sage sonic frontiers#sonic frontiers#purp doot#she would mangle other titans in it too and the minibosses and shit but my hand is tired man#like the base form has 2 of the red arms. and she can morph them at will#as long as she has been exposed to the data before and integrated it into her system#yeag. wahoog. get digimonized idiot

97 notes


Photo

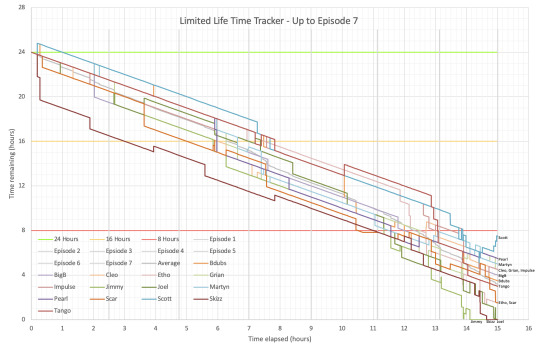

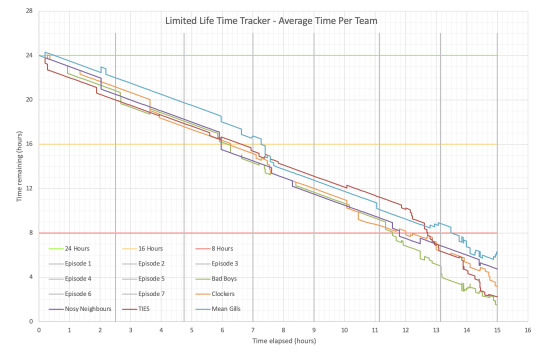

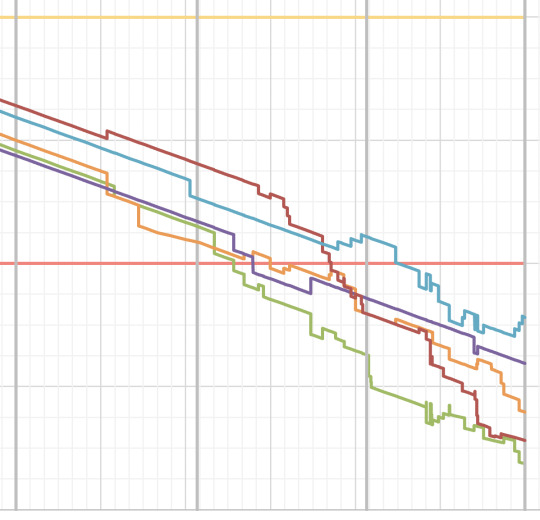

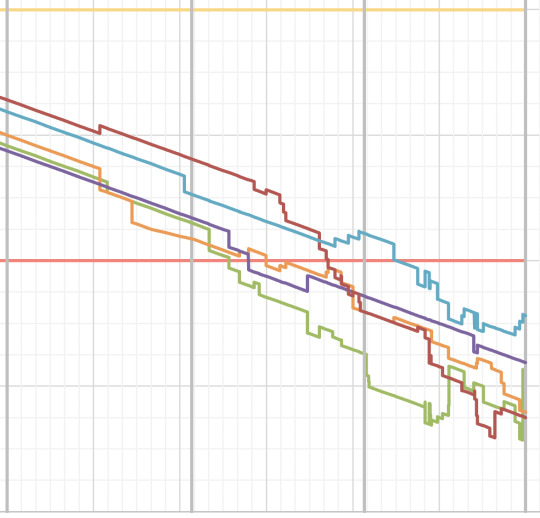

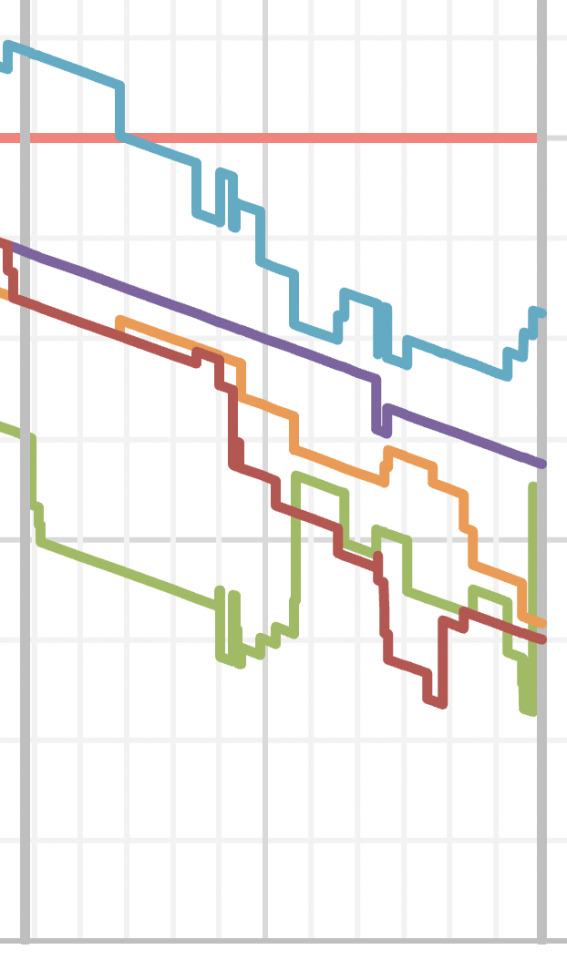

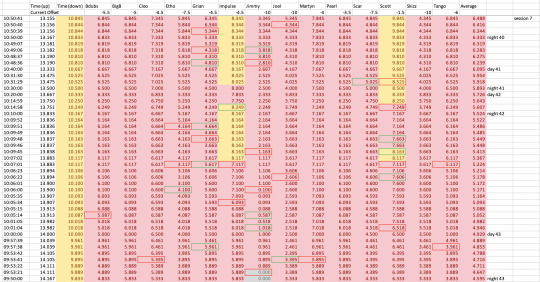

Life Tracker updated for Episode 7! This one is much quicker than Episode 6 on account of not being on holiday at the time, even though there were two thirds more deaths this time. Previous posts: Session 6, Session 5, Session 4. Also Session 8 (finale) post!

As usual, close ups and commentary below the cut. I’ve also added another graph for the average time of each team, which will also be below the cut.

There was so much carnage! 45 whole deaths in a single session! Not all deaths were awarded time during the session, but Scott’s video advised that it would be added by next session, so I have taken the liberty to add all the time as I see fit, hence why Scott is back to 7.5 hours. I haven’t seen every episode yet (in fact, other than Scott, I’ve only seen those that have perma-died), so I’m not sure if anyone else’s time is a mismatch, but if so I’m happy to explain where I’m getting my time additions and subtractions from!

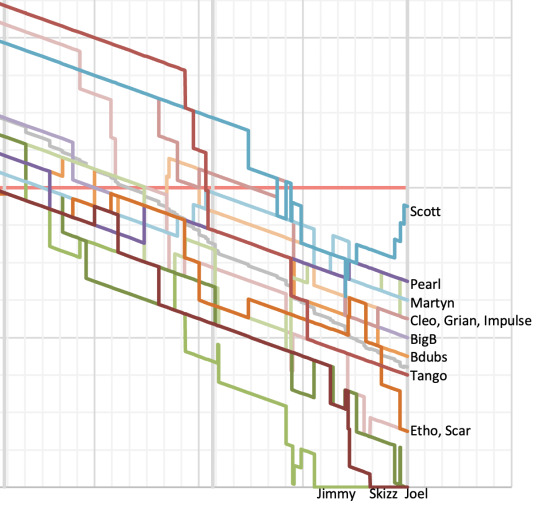

Now for some close ups.

First, there was enough chaos that I decided to take a close up of Session 6 and 7 together so we can properly appreciate it:

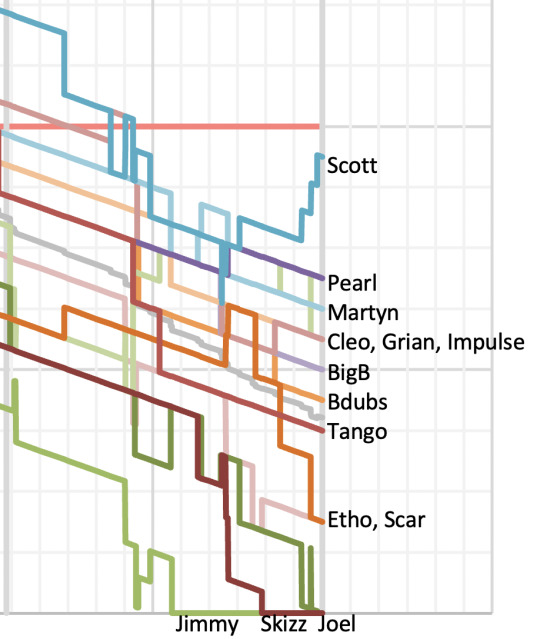

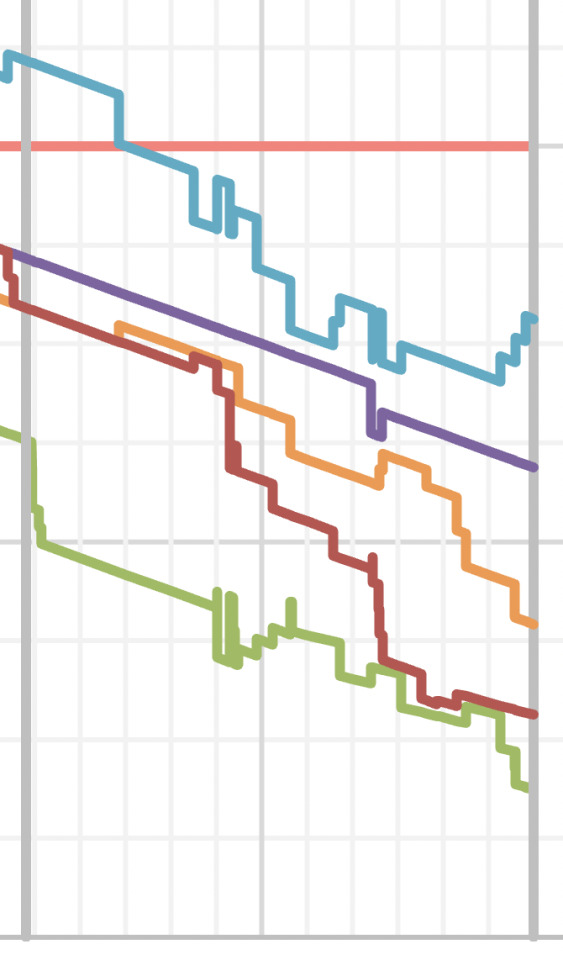

And a close up of Session 7 by itself:

So many people lost major time, so it’s interesting to see Scott’s uptick of time at the end - he ended on only 30 minutes less than he would have been if he hadn’t died at all this session. Pearl didn’t die at all, and got the kill credit for Martyn blowing himself up in a trap, so she actually ended the session 30 minutes better than she started it. Grian also did very well for himself - he killed and died so many times, but somehow ended on the exact time he would have been on if he had experienced a peaceful deathless session.

BigB, Cleo, and Martyn all ended the session 1 hour poorer than they started, and Bdubs and Scar ended 1.5 hours below where they would have been. Nosy Neighbours are thus doing super well, with Mean Gills and Clockers not too far behind, in terms of maintaining position from the start of the session.

TIES had an awful time this session, with Impulse and Tango both losing a net 2 hours, and Etho and Skizz losing a net 2.5 hours - and obviously Skizz entirely died.

Joel possibly had the worst time, losing a net 3.5 hours this session - though it didn’t help that 5 of his 7 deaths were all caused by the one person. Technically Jimmy didn’t do too badly, given he only lost a net 1.5 hours... but given that he was out of the series only an hour into the session, and also the first out entirely... it really didn’t go well for him either.

I also find the sheer number of vertical lines in this graph interesting: the ones representing a death immediately followed by a kill or vice versa. I would love to figure out a way to show only one line at a time on the graph, so we can more easily see someone’s journey, but I haven’t had time to look into it yet.
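The "net" figures for Session 7 compare each player's end-of-session time with where a deathless session would have left them. A minimal Python sketch of that bookkeeping, assuming a kill adds 30 minutes (consistent with Pearl's single kill credit leaving her 30 minutes up) and a death costs 1 hour; the one-hour death penalty and the `net_offset` name are assumptions for illustration, not taken from the tracker spreadsheet:

```python
# Assumed values: a kill credit adds 30 minutes (matches Pearl's +30 this session);
# a death costs 60 minutes (an assumption, not stated in the post).
KILL_BONUS_MIN = 30
DEATH_PENALTY_MIN = 60


def net_offset(kills: int, deaths: int) -> int:
    """Minutes gained (+) or lost (-) relative to a session with no kills or deaths."""
    return kills * KILL_BONUS_MIN - deaths * DEATH_PENALTY_MIN


print(net_offset(kills=1, deaths=0))  # one kill, no deaths: 30
print(net_offset(kills=0, deaths=1))  # one death, no kills: -60
print(net_offset(kills=4, deaths=2))  # kills and deaths cancel out: 0
```

Under these assumed values, finishing exactly level, as Grian did, takes two kills for every death.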

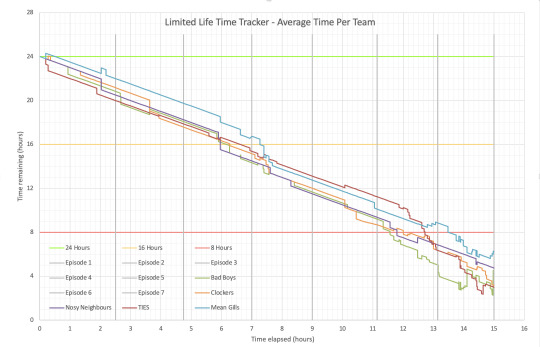

Now onto the graph of the average times per team.

This one is super interesting to me, especially TIES’s line - they had the lowest average life right from the start, but somehow by Session 4, through Session 5, and for most of Session 6, they were the team with the highest average time, and then it quite literally went downhill from there. The only thing saving them from being last now is the fact that the Bad Boys are down to only a single living player, and even then Grian is doing far better than most of TIES.

It’s also interesting to me how Mean Gills had a significant time uptick at the end of both Session 6 and Session 7 (the first due to Martyn and the second due to Scott). Scott’s time was so high that it kept Mean Gills’ average time as yellow for all of Session 6 despite Martyn being red for most of it... and Martyn then got enough kills to keep it there. Mean Gills is also the only team anywhere in the entire graph to gain such consistent, significant time.

These averages also coincide with the comments I made above about the time offset difference for each player from the start to end of the session. Mean Gills are doing well, but they’ve been doing well for so long that I’m sure most players are aware that they need to be a target. Nosy Neighbours are also doing well but I feel like they’ve flown under the radar, and are not a significant target right now.

Here is a close up of this graph with Sessions 1-4:

And the close up for Session 5-7:

And the Session 7 only close up:

I kept the dead players in the teams’ averages, since I think it is a better reflection of the teams’ strength as a whole, but I also created a version that excluded dead players. In those screenshots you can really see Bad Boys’ and TIES’ time jumping up at a death, instead of falling as it did here.

Here is the alternate averages graph:

And close ups:

This makes Bad Boys look a lot better, because Grian does have a lot of time... but he is also alone. And there is definitely strength in numbers. Two players at an hour and a half each can fend off an attacker more easily than a single player at three hours can... unless nerves and panic get to them, as we definitely saw this session.
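Why the two averages graphs move in opposite directions at a permadeath: a dead player sits at zero time, so keeping them in the team average drags it down, while leaving them out removes a player who was usually on the least time, so the average tends to jump up. A minimal Python sketch with made-up numbers (the team, the times, and the `team_average` helper are all hypothetical, not tracker data):

```python
from statistics import mean


def team_average(times_h: list[float], include_dead: bool) -> float:
    """Average remaining time (hours) for a team; dead players are stored as 0.0."""
    if include_dead:
        return mean(times_h)
    alive = [t for t in times_h if t > 0]
    # A fully eliminated team has no living players to average.
    return mean(alive) if alive else 0.0


before = [1.5, 0.5, 3.0]  # hypothetical team, hours remaining
after = [1.5, 0.0, 3.0]   # the player on 0.5 h permadies

for label, times in (("before", before), ("after", after)):
    print(label,
          f"with dead: {team_average(times, True):.2f}",
          f"alive only: {team_average(times, False):.2f}")
```

The death drops the with-dead average from 1.67 to 1.50 but lifts the alive-only average to 2.25, the same jump-at-a-death pattern described for Bad Boys and TIES.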

Wow and I almost forgot to include the raw data for this session!

The first hour of the session:

The second hour of the session:

There is just so much death! Look at all the box outlines!! I could barely fit this data on two screens on the zoom I was on, and I did not want to zoom out further.

I also obviously have data for the averages, but it was so far away from the column with the times on it that I wasn’t sure if it would still be useful on its own? Let me know if you want to see it!

This has once again been fascinating to see, and I cannot wait to see how Session 8 will go. Will it be the last session? Will they go until everyone is dead? Will they somehow have enough people with enough time to get to Session 9? Will Mean Gills be the final two and get to play fun relaxing games like Scott was suggesting?

Only time will tell.

#limited life smp#24lsmp#limlife#once again i hate trying to work out what tags for a post#i got to this point in the tags before i realised i forgot to add the number data#i still love that impulse accidentally killed scott and became yellow again#and that scott also became yellow again#so there was less than 14 minutes between there still being two yellows on the server... and jimmy permadying#thirteen minutes and 41 seconds exactly by my calculations#which is crazy given we had whole sessions of yellows only#which meant it was hours between last green and first red#so less than a quarter of an hour between last yellows (and two of them!) and first permadeath is crazy#i always ramble in the tags bc i dont know how to wrap the post up#why do i always post these as im going to bed#its after midnight and im yawning now#anyway enjoy!#very glad i got to post this before the weekend ended this time#well it was still sunday when i started writing the post#its 12:15am on monday now. technically#my spreadsheets

280 notes


Text

Agumon BT5-007 and Gabumon BT5-020 Alternative Arts by Kenji Watanabe from PB-13 Digimon Card Game Royal Knights Binder Set

#digimon#digimon tcg#digimon card game#digica#デジカ#Agumon#Gabumon#Omegamon#Omnimon#BT5#Kenji Watanabe#PB13#digimon card#color: red#color: blue#Lv3#type: vaccine#type: data#trait: reptile#num: 01

189 notes
