#ai act

The first legislation in the world dedicated to regulating AI could become a blueprint for others to follow. Here’s what to expect from the EU’s AI Act.

The word ‘risk’ is often seen in the same sentence as ‘artificial intelligence’ these days. While it is encouraging to see world leaders consider the potential problems of AI, along with its industrial and strategic benefits, we should remember that not all risks are equal.

On June 14, the European Parliament voted to approve its own draft proposal for the AI Act, a piece of legislation two years in the making, with the ambition of shaping global standards in the regulation of AI.

Read more from Nello Cristianini!

50 notes

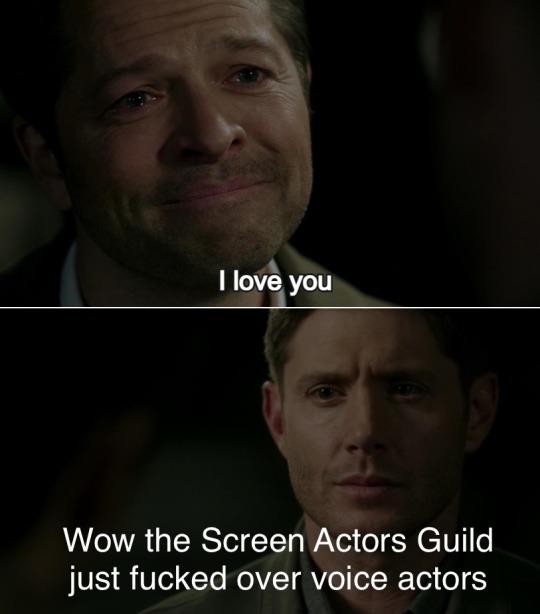

WE LIVE IN A HELL WORLD

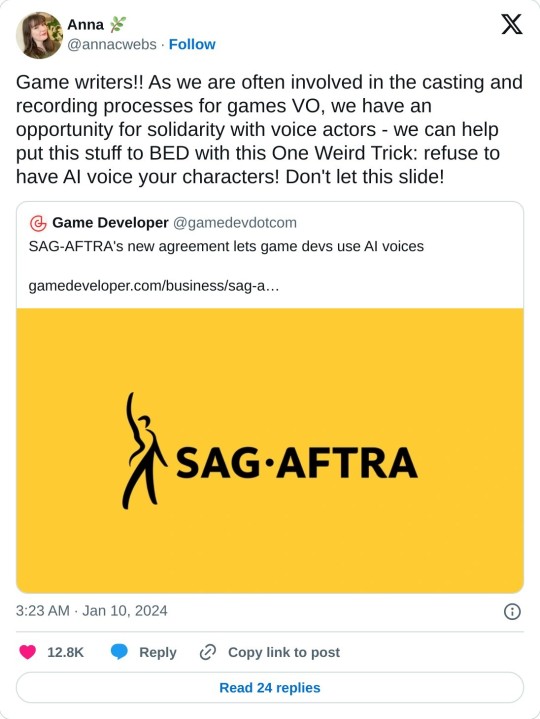

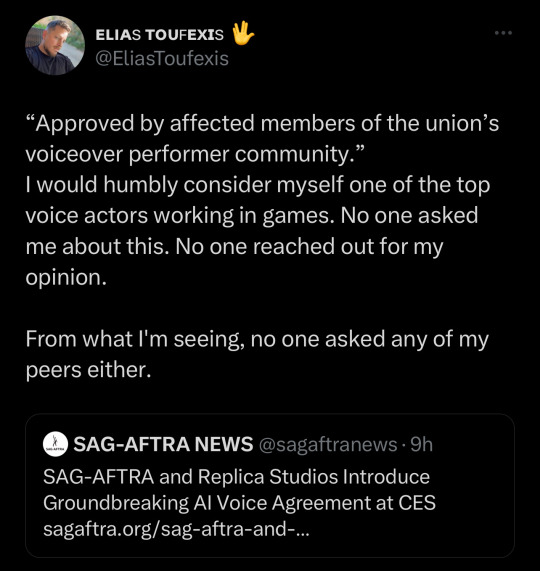

Snippets from the article by Karissa Bell:

SAG-AFTRA, the union representing thousands of performers, has struck a deal with an AI voice acting platform aimed at making it easier for actors to license their voice for use in video games. ...

the agreements cover the creation of so-called “digital voice replicas” and how they can be used by game studios and other companies. The deal has provisions for minimum rates, safe storage and transparency requirements, as well as “limitations on the amount of time that a performance replica can be employed without further payment and consent.”

Notably, the agreement does not cover whether actors’ replicas can be used to train large language models (LLMs), though Replica Studios CEO Shreyas Nivas said the company was interested in pursuing such an arrangement. “We have been talking to so many of the large AAA studios about this use case,” Nivas said. He added that LLMs are “out-of-scope of this agreement” but “they will hopefully [be] things that we will continue to work on and partner on.”

...Even so, some well-known voice actors were immediately skeptical of the news, as the BBC reports. In a press release, SAG-AFTRA said the agreement had been approved by "affected members of the union’s voiceover performer community." But on X, voice actors said they had not been given advance notice. "How has this agreement passed without notice or vote," wrote Veronica Taylor, who voiced Ash in Pokémon. "Encouraging/allowing AI replacement is a slippery slope downward." Roger Clark, who voiced Arthur Morgan in Red Dead Redemption 2, also suggested he was not notified about the deal. "If I can pay for permission to have an AI rendering of an ‘A-list’ voice actor’s performance for a fraction of their rate I have next to no incentive to employ 90% of the lesser known ‘working’ actors that make up the majority of the industry," Clark wrote.

SAG-AFTRA’s deal with Replica only covers a sliver of the game industry. Separately, the union is also negotiating with several of the major game studios after authorizing a strike last fall. “I certainly hope that the video game companies will take this as an inspiration to help us move forward in that negotiation,” Crabtree said.

And here are some of the reactions I've found about what people in or adjacent to this industry can do

And in OTHER AI games news, Valve is updating its TOS to allow AI-generated content on Steam as long as devs promise they have the rights to use it, which you can read more about on Aftermath in this article by Luke Plunkett

#video games#voice acting#voice actors#sag aftra#ai#ai news#ai voice acting#video game news#Destiel meme#industry bullshit

25K notes

AI is changing the shape of leadership – how can business leaders prepare? - CyberTalk

New Post has been published on https://thedigitalinsider.com/ai-is-changing-the-shape-of-leadership-how-can-business-leaders-prepare-cybertalk/

By Ana Paula Assis, Chairman, Europe, Middle East and Africa, IBM.

EXECUTIVE SUMMARY:

From the shop floor to the boardroom, artificial intelligence (AI) has emerged as a transformative force in the business landscape, granting organizations the power to revolutionize processes and ramp up productivity.

The scale and scope of this shift are fundamentally changing the parameters for business leaders in 2024, presenting new opportunities to increase competitiveness and growth.

Alongside these opportunities, the sophistication of AI, and generative AI in particular, has brought new threats and risks. Business leaders across all sectors are dealing with new concerns around data security, privacy, ethics and skills, which bring additional responsibilities to consider.

To explore this in more detail, IBM commissioned a study, Leadership in the Age of AI. This surveyed over 1,600 senior European executives on how the AI revolution is transforming the role of company leaders as they seek to maximize its opportunities while also navigating its potential threats in an evolving regulatory and ethical landscape.

Powering AI growth in Europe

With seven out of ten of the world’s most innovative countries located in Europe, the region is well-positioned to capitalize on the soon-to-be-adopted EU AI Act, which offers the world’s first comprehensive regulatory framework. This regulatory confidence and clarity is expected to attract additional investments and new participants, further benefiting Europe’s AI ecosystem.

Against this promising backdrop, it is no surprise to see generative AI deployment at the top of CEOs’ priorities for 2024, with 82% of the business leaders surveyed having already deployed generative AI or intending to this year. This growing sense of urgency is driven by a desire to improve efficiency by automating routine processes and freeing employees to take on higher-value work, enhancing the customer experience and improving outcomes.

Despite this enthusiasm, concerns around security and privacy are tempering the rate of adoption. While 88% of business leaders were excited about the potential of AI within their business, 44% did not yet feel ready to deploy the technology, with privacy and security of data (43%), impact on workforce (32%) and ethical implications (30%) identified as the top three challenges facing business leaders. Instead of focusing solely on the financial benefits of AI, business leaders are now compelled to actively address the societal costs and risks associated with it.

A new era for leadership

Leadership in the age of AI requires executives to strike a balance between addressing the ethical and security implications of technology and harnessing its competitive advantages. This delicate balance lies at the core of the EU AI Act, endorsed by the European Parliament in March. It aims to promote innovation and competitiveness in Europe while ensuring transparency, accountability and human oversight in the development and implementation of AI.

The Act takes a risk-based approach, setting the level of regulation according to the level of risk each use case poses. The tiers range from prohibited, which covers practices such as social scoring; to high-risk, which encompasses areas such as infrastructure and credit scoring; medium-risk, which includes chatbots and deepfakes; and low-risk, which covers AI-enabled games and spam filters.
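To make the tiered structure concrete, here is a minimal Python sketch that maps only the example use cases named above to the article's four tiers. The tier labels follow this article's wording, and the `RiskTier` enum, the lookup table and the `classify` function are hypothetical illustrations, not the Act's legal categories or any official tool; real classification depends on the Act's text and legal review.

```python
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    """Risk tiers as worded in the article (hypothetical labels)."""
    PROHIBITED = "prohibited"
    HIGH = "high-risk"
    MEDIUM = "medium-risk"
    LOW = "low-risk"


# Hypothetical lookup table built only from the examples in the article.
EXAMPLE_USE_CASES = {
    "social scoring": RiskTier.PROHIBITED,
    "infrastructure": RiskTier.HIGH,
    "credit scoring": RiskTier.HIGH,
    "chatbot": RiskTier.MEDIUM,
    "deepfake": RiskTier.MEDIUM,
    "ai-enabled game": RiskTier.LOW,
    "spam filter": RiskTier.LOW,
}


def classify(use_case: str) -> Optional[RiskTier]:
    """Return the tier for a listed example use case, or None if unlisted.

    Unlisted use cases return None instead of defaulting to LOW, so an
    unknown case is flagged for review rather than assumed to be low risk.
    """
    return EXAMPLE_USE_CASES.get(use_case.strip().lower())
```

For example, `classify("Credit scoring")` returns `RiskTier.HIGH`, while an unlisted case such as `classify("weather app")` returns `None`. Returning `None` for unknown cases is a deliberate design choice: under a risk-based regime, "not in my table" should prompt an assessment, not silently land in the lightest tier.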

With these parameters in place, business leaders must achieve regulatory compliance and prepare their operations and workforce for the upcoming shift. They must manage risk and reputation and future-proof their companies for the further innovation and regulation that will inevitably follow in the coming years.

There are two major priorities for business leaders in achieving this. The first is to create effective AI governance strategies built upon five pillars: explainability, fairness, robustness, transparency and privacy. These aim to promote transparency in data usage, equitable treatment, defence against attacks, system transparency and privacy protection.

Underpinned by human oversight, these pillars will serve to mitigate risks and ensure AI systems are trustworthy. This comprehensive governance approach fosters responsible AI adoption, building trust among users and stakeholders while ensuring the ethical use of AI technologies.

The second, and equally important, action is to establish an AI ethics board. While the Act itself necessitates a certain level of ethical compliance, businesses should use this opportunity to establish their own ethical frameworks.

This will guide implementation now while laying out clear guardrails for future innovation. At IBM, for example, our ethical framework dictates what use cases we pursue, what clients we work with and our trusted approach to copyright. Establishing these foundations early serves to help prevent reputational risks or Act breaches further down the line.

The skills responsibility

There is also a clear responsibility for equipping workforces with the necessary skills to successfully navigate AI transformation. Another recent IBM study around AI in the workplace found that 87% of business leaders expect at least a quarter of their workforce will need to reskill in response to generative AI and automation.

Those who equip themselves with AI skills will have a significant advantage in the digital economy and job market over those who do not. Organizations are responsible for helping their employees upskill or reskill to adapt to this changing ecosystem.

Businesses take this duty seriously, with 95% of executives stating they are already taking steps to ensure they have the right AI skills in their organizations, and 44% actively upskilling themselves in the technology. The incentive comes from a competitive and a societal perspective, ensuring that large portions of the workforce are not excluded from participating in and benefiting from the thriving digital economy.

With legislative frameworks now in place, European CEOs and senior business leaders must navigate the evolving AI landscape with trust and openness, integrating good governance principles into AI development and adoption, cementing ethical guardrails and building resilience across the workforce. This new era of leadership demands trust and transparency from the top down and will be a critical component for growth and return on investment.

This article was originally published via the World Economic Forum and has been reprinted with permission.

#2024#Africa#ai#ai act#AI adoption#AI Ethics#AI systems#approach#Article#artificial#Artificial Intelligence#automation#board#Building#Business#challenge#chatbots#Companies#compliance#comprehensive#copyright#credit scoring#customer experience#cyber security#cybersecurity#data#data security#data usage#deployment#development

0 notes

THE EUROPEAN PARLIAMENT FACES THE VOTE. A point in its favor: the AI ACT (Artificial Intelligence Regulation)

From 6 to 9 June 2024, EU citizens are called to elect a new European Parliament. The event is of enormous importance, especially at a time when it seems that the only winning international policy is to wage war or to put one's trust in right-wing populist parties.

“The upcoming European elections are among the most important events of recent times. The future…

#AI Act#ChatGPT#Elezioni Europee#Intelligenza Artificiale#La Casa delle Donne Padova#Newsletter Casa delle Donne di Padova#Parlamento Europeo

0 notes

Can AI show that it is unsure?

🇪🇺 NEW Europe AI Act

Practical examples of AI Act-safe prompts

#aiact

#machinelearning#artificialintelligence#art#digitalart#mlart#ai#datascience#algorithm#vr#ai act#europe#writing prompt

0 notes

Human Reason vs. Artificial Intelligence – 1:1

The game has only just kicked off and still has a few exciting twists in store, but for now the EU has managed to equalize. With the “AI Act” there is now a draft law that is set to become European law and that, for the first time, lays down rules for the development and use of AI. While the tech companies have so far been playing in the sandbox unsupervised, …

0 notes

Just gonna have to wait and see, right? Just wait and see! Just gotta wait and see! Who knows, we'll just have to wait and see! It's anybody's guess, we'll just have to wait and see! The future is exciting, we just gotta wait and see!

#personal#my art#Fuck your fake ass 'i am very smart!' intellectualizing “observations” and open your god damn ears.#do something for fucks sake. it's sickening seeing videos of ai crap and seeing rows and rows of repliers using their one brain synapse#to type “wow! very exciting!” “haha this is kind of scary! but in a really interesting way!”#and then they go about their day without a second thought while creative industries burn around them#i go to one of america's top tech schools too and it's enough to make you wanna tear out your hair#every day it's seminars and talks about “the potential consequences of ai!” when the consequences are happening NOW#NO MORE DISCUSSING NO MORE INTELLECTUALIZING NO MORE SOCRATIC SEMINARS NO MORE DEBATING. ACT YOU COWARDS#people are getting hurt RIGHT NOW. stop pretending to care when you clearly don't! just be honest and say you wanna make money#my time here has really made me hate academic spaces. you people are so god damn useless and cowardly.

3K notes

Gabriel, Jeremy, Susie and... Vanessa? Vanny???

oh, and Glitchtrap too (he's in the computer Vanessa is holding)

#Vanessa is a prodigy in this au#makes me think she'd be William's apprentice#WHAT IF UH. VANNY WANTED TO CREATE AN AI AND IT ACTUALLY WORK SO WELL THAT-#THE AI GAINED CONSCIOUSNESS TO THE POINT IT TALKED BACK TO HER IN A SCARY WAY AND ALMOST ACTED LIKE A VIRUS IDK#and then Max (Mxes) showed up like 'VANESSA! YOU SHOULDN'T BE TALKING TO STRANGERS ON THE INTERNET' and it is referring to Glitchtrap lmfao#and then Vanessa convinces it to talk to Glitchtrap-#ONLY FOR THEM TO GET ANNOYED AT EACH OTHER#springdad au#fnaf#five nights at freddy's#my art#freddy fazbear#bonnie the bunny#chica the chicken#fnaf vanessa#vanny

3K notes

For more information.

#so fuck voice actors i guess huh#what the fuck was your point in being against ai in writing and producing films yet here you are supporting ai??#voice ACTORS do you see that word? sick of the disrespect va get!#is it because its voice actors? is it because its video games? because its not 'rEaL CINemA'?? ffs#sag aftra#sag afta strike#sag aftra strong my ASS#sag-aftra#union#union strong#voice actors#voice acting#evolutionary step? yeah it is. going forward to fucking hell.#ai#anti ai#fuck ai

1K notes

Asking the voice actors on my video game project to consent to having AI models of their voices created specifically and exclusively for the purpose of making the dialogue say the player character's name out loud at appropriate junctures no matter what the player chooses.

1K notes

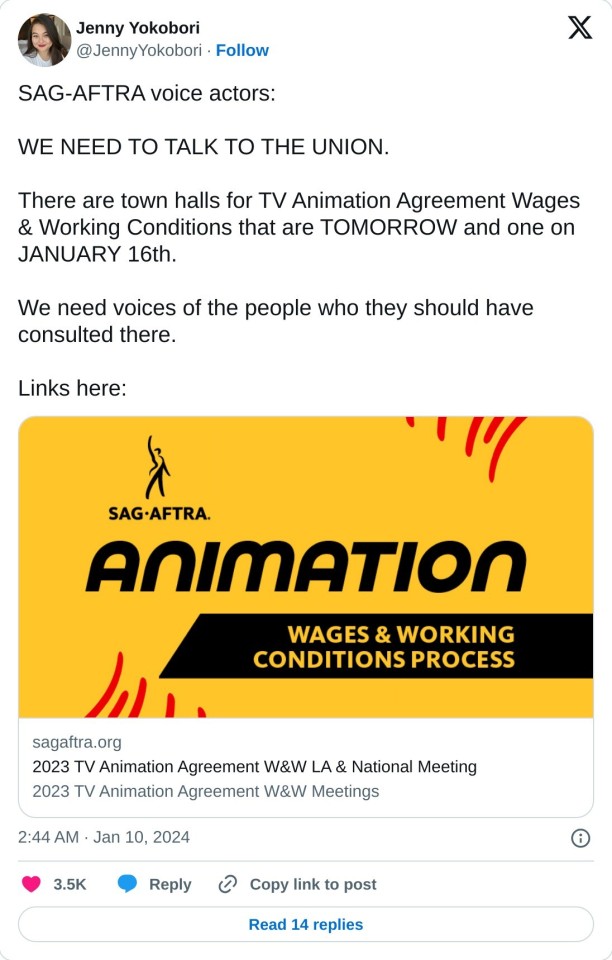

something kind of gross to me is the way that some artists who post about how AI is going to take their jobs speak in a way that makes it seem like they think they are the first and only profession who has ever faced this kind of existential threat. they just seem fundamentally uninterested in relating the fear they're currently experiencing to the larger context of labor movements and the history of technological advancement/automation's effects on other fields.

they only ever discuss the problem with automating creative labor. there's this sort of implicit stance that when labor they view as not requiring The Divine Spark Of Creation Only A Human Can Possess gets automated that is just the natural course of technological advancement. But when it comes for their labor it's suddenly a completely new thing and a threat to the fabric of our Culture. The idea that creative work should be venerated above other forms of labor and is uniquely deserving of protections is just kinda shitty and stupid.

#acting like ai generated images have cooties will not save creative jobs#pretending you can Un-Invent generative ai if you get mad enough on twitter will not save creative jobs#you know what has a decent chance of saving creative jobs. a push for unionization in those industries.

955 notes

You know, when this whole AI art, deepfakes and other shit began, I was scared that the responsibility of convincing people that it can and will be used unethically would fall on the shoulders of small artists who would not be taken seriously. I expected change in the art world to be a slow, creeping transition into inevitable demise.

What I did not expect was Hollywood studio execs doing that job for us by being so cartoonishly evil, impatient and releasing statements like "We're gonna starve you until you agree to work with us lol" and "We're gonna take your likeness and use it forever. You will be paid with jack and shit."

I also did not expect AI bros doing the same job for us by harassing a voice actor off of twitter.

#wga#wga strong#sag strong#sag strike#wga strike#wga solidarity#anti ai#writers guild of america#writer's strike#screen actors guild#actors strike#support unions#support the writers!#support the strikes#support human artists#fuck ai#anti ai art#anti ai writing#anti ai music#fuck ai voice acting#random thoughts

1K notes

How Bias Will Kill Your AI/ML Strategy and What to Do About It

New Post has been published on https://thedigitalinsider.com/how-bias-will-kill-your-ai-ml-strategy-and-what-to-do-about-it/

‘Bias’ in models of any type describes a situation in which the model responds inaccurately to prompts or input data because it hasn’t been trained on enough high-quality, diverse data to provide an accurate response. One example is Apple’s facial recognition phone unlock feature, which failed at a significantly higher rate for people with darker skin tones than for people with lighter ones, because the model hadn’t been trained on enough images of darker-skinned people. This is a relatively low-risk example of bias, but it is exactly why the EU AI Act requires proof of model efficacy (and controls) before a model goes to market. Models whose outputs affect business, financial, health, or personal situations must be trusted, or they won’t be used.
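Bias like this is usually surfaced by disaggregated evaluation: measuring the error rate separately for each group instead of reporting a single overall number. The Python sketch below assumes a hypothetical list of `(group, succeeded)` evaluation results; the group labels and values are made up for illustration and are not Apple's data.

```python
from collections import defaultdict


def failure_rates_by_group(attempts):
    """Compute the failure rate for each group.

    attempts: iterable of (group_label, succeeded) pairs, where succeeded
    is True if the model produced the correct result for that attempt.
    """
    totals = defaultdict(int)
    failures = defaultdict(int)
    for group, succeeded in attempts:
        totals[group] += 1
        if not succeeded:
            failures[group] += 1
    return {group: failures[group] / totals[group] for group in totals}


def max_rate_gap(rates):
    """Largest difference in failure rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical evaluation data: four attempts per group.
attempts = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", True),
]
rates = failure_rates_by_group(attempts)
print(rates)                # {'group_a': 0.25, 'group_b': 0.5}
print(max_rate_gap(rates))  # 0.25
```

An overall failure rate for this toy data would be 3 out of 8, which hides the fact that one group fails twice as often as the other; the per-group view is what exposes the gap.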

Tackling Bias with Data

Large Volumes of High-Quality Data

Among many important data management practices, a key component of overcoming and minimizing bias in AI/ML models is acquiring large volumes of high-quality, diverse data. This requires collaboration with multiple organizations that have such data. Traditionally, data acquisition and collaboration are challenged by privacy and/or IP protection concerns: sensitive data can’t be sent to the model owner, and the model owner can’t risk leaking their IP to a data owner. A common workaround is to work with mock or synthetic data, which can be useful but also has limitations compared to real, full-context data. This is where privacy-enhancing technologies (PETs) provide much-needed answers.

Synthetic Data: Close, but not Quite

Synthetic data is artificially generated to mimic real data. This is hard to do, but it is becoming slightly easier with AI tools. Good quality synthetic data should have the same feature distances as real data, or it won’t be useful. Quality synthetic data can effectively boost the diversity of training data by filling in gaps for smaller, marginalized populations, or for populations for which the AI provider simply doesn’t have enough data. Synthetic data can also be used to address edge cases that might be difficult to find in adequate volumes in the real world. Additionally, organizations can generate a synthetic dataset to satisfy data residency and privacy requirements that block access to the real data. This sounds great; however, synthetic data is just one piece of the puzzle, not the solution.
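One simple sanity check on whether synthetic data resembles the real data is to compare summary statistics feature by feature. The Python sketch below compares per-feature means and standard deviations using only the standard library; the `compare_features` function, the 10% tolerance, the feature names and the sample values are all hypothetical, and a real validation would use stronger tests (for example, comparing full distributions and the relationships between features).

```python
from statistics import mean, stdev


def compare_features(real, synthetic, tolerance=0.1):
    """Flag features whose mean or standard deviation drifts too far.

    real, synthetic: dicts mapping feature name -> list of numeric values
    (at least two values each); both dicts are assumed to share feature names.
    tolerance: maximum allowed relative difference (0.1 means 10%).
    Returns a dict of feature name -> True if the feature looks similar.
    """
    report = {}
    for name, real_values in real.items():
        synth_values = synthetic[name]
        checks = []
        for stat in (mean, stdev):
            r, s = stat(real_values), stat(synth_values)
            # Relative difference; fall back to absolute if the real stat is 0.
            scale = abs(r) if r != 0 else 1.0
            checks.append(abs(r - s) / scale <= tolerance)
        report[name] = all(checks)
    return report


real = {"age": [30, 40, 50, 60], "income": [20, 40, 60, 80]}
synthetic = {"age": [31, 41, 49, 59], "income": [50, 50, 50, 50]}
print(compare_features(real, synthetic))  # {'age': True, 'income': False}
```

In this toy example the synthetic `income` column has exactly the right mean but no spread at all, so it fails the check; matching averages alone is not enough for synthetic data to be useful.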

One of the obvious limitations of synthetic data is its disconnect from the real world. For example, autonomous vehicles trained solely on synthetic data will struggle with real, unforeseen road conditions. Additionally, synthetic data inherits bias from the real-world data used to generate it, which largely defeats the purpose of our discussion. In short, synthetic data is a useful option for fine-tuning and addressing edge cases, but significant improvements in model efficacy and reductions in bias still depend on access to real-world data.

A Better Way: Real Data via PETs-enabled Workflows

PETs protect data while it is in use. When it comes to AI/ML models, they can also protect the IP of the model being run: “two birds, one stone.” Solutions using PETs make it possible to train models on real, sensitive datasets that weren’t previously accessible due to data privacy and security concerns. Unlocking these data flows to real data is the best option for reducing bias. But how would it actually work?

For now, the leading options start with a confidential computing environment, which is then integrated with a PETs-based software solution that makes it ready to use out of the box while addressing the data governance and security requirements that aren’t included in a standard trusted execution environment (TEE). With this solution, the models and data are all encrypted before being sent to a secured computing environment. The environment can be hosted anywhere, which is important when addressing certain data localization requirements. This means that both the model IP and the security of input data are maintained during computation; not even the provider of the trusted execution environment has access to the models or data inside it. The encrypted results are then sent back for review, and logs are available for auditing.

This flow unlocks the best-quality data no matter where it is or who has it, creating a path to bias minimization and high-efficacy models we can trust. This flow is also what the EU AI Act describes in its requirements for an AI regulatory sandbox.

Facilitating Ethical and Legal Compliance

Acquiring good quality, real data is tough. Data privacy and localization requirements immediately limit the datasets that organizations can access. For innovation and growth to occur, data must flow to those who can extract the value from it.

Article 54 of the EU AI Act sets out requirements for “high-risk” model types in terms of what must be proven before they can be taken to market. In short, teams will need to use real-world data inside an AI Regulatory Sandbox to show sufficient model efficacy and compliance with all the controls detailed in Title III Chapter 2. The controls include monitoring, transparency, explainability, data security, data protection, data minimization, and model protection (think DevSecOps + DataOps).

The first challenge will be finding a real-world dataset to use, since the data for such model types is inherently sensitive. Without technical guarantees, many organizations may hesitate to trust the model provider with their data, or won’t be allowed to. In addition, the way the Act defines an “AI Regulatory Sandbox” is a challenge in and of itself. Some of the requirements include a guarantee that the data is removed from the system after the model has been run, as well as the governance controls, enforcement, and reporting to prove it.
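The requirement that data be removed after the model has run, with reporting to prove it, can be pictured as a session that always drops its working copy of the data and records each step. The Python sketch below illustrates only that pattern: `SandboxSession`, its log format and the in-memory "dataset" are hypothetical, invented for this example, and it provides none of the encryption, hardware isolation or verifiable deletion that a real TEE-based sandbox would need.

```python
import hashlib
from datetime import datetime, timezone


class SandboxSession:
    """Hypothetical sandbox that drops its data after use and logs each step."""

    def __init__(self):
        self.audit_log = []  # list of (timestamp, event, detail) tuples
        self._data = None

    def _log(self, event, detail=""):
        timestamp = datetime.now(timezone.utc).isoformat()
        self.audit_log.append((timestamp, event, detail))

    def __enter__(self):
        self._log("session_opened")
        return self

    def load(self, records):
        # Keep a private copy and log a fingerprint, never the data itself.
        self._data = list(records)
        digest = hashlib.sha256(repr(self._data).encode()).hexdigest()
        self._log("data_loaded", f"sha256={digest[:12]} rows={len(self._data)}")

    def run(self, model):
        if self._data is None:
            raise RuntimeError("no data loaded")
        results = [model(row) for row in self._data]
        self._log("model_run", f"outputs={len(results)}")
        return results

    def __exit__(self, exc_type, exc, tb):
        # Runs even if the model raised an exception. Note this only drops the
        # Python reference; it does not securely wipe memory.
        self._data = None
        self._log("data_deleted")
        self._log("session_closed")
        return False  # do not suppress exceptions


with SandboxSession() as session:
    session.load([1, 2, 3])
    outputs = session.run(lambda x: x * 2)

events = [event for _, event, _ in session.audit_log]
print(outputs)  # [2, 4, 6]
print(events)   # ['session_opened', 'data_loaded', 'model_run', 'data_deleted', 'session_closed']
```

Using a context manager means the deletion step and its log entry happen on every exit path, including failures, which is the property an auditor would want the log to demonstrate.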

Many organizations have tried using out-of-the-box data clean rooms (DCRs) and trusted execution environments (TEEs). But, on their own, these technologies require significant expertise and work to operationalize and meet data and AI regulatory requirements.

DCRs are simpler to use, but not yet useful for more robust AI/ML needs. TEEs are secured servers, but they still need an integrated collaboration platform to be useful quickly. This, however, points to an opportunity for privacy-enhancing technology platforms to integrate with TEEs and remove that work, trivializing the setup and use of an AI regulatory sandbox and, by extension, the acquisition and use of sensitive data.

By enabling the use of more diverse and comprehensive datasets in a privacy-preserving manner, these technologies help ensure that AI and ML practices comply with ethical standards and legal requirements related to data privacy (e.g., GDPR and the EU AI Act in Europe). In summary, while requirements are often met with audible grunts and sighs, they are simply guiding us toward building better models that we can trust and rely on for important data-driven decisions, while protecting the privacy of the data subjects used for model development and customization.

#ai#ai act#AI bias#ai tools#AI/ML#apple#Art#autonomous vehicles#Bias#birds#box#Building#Business#challenge#Collaboration#collaboration platform#compliance#comprehensive#computation#computing#confidential computing#data#Data Governance#Data Management#data owner#data privacy#data privacy and security#data protection#data security#data-driven

0 notes

SAG-AFTRA throwing voice actors under the bus after spending the last couple of months striking for protections against AI for on camera talent is some of the scummiest shit I have ever seen.

All these voice actors stood in solidarity with the on camera folks during the strike, but when push comes to shove their own union will gladly stab them in the fucking back because they don’t consider voice acting and performance capture “Real acting.”

Voice actors need their own union because this shit is ridiculous.

435 notes

Fandom hates ai until someone makes an audio post of their fav talking dirty. "Protect artists" "protect writers" But fuck voice actors I guess?

Like I'm just saying, some of y'all passing around posts made using AI voice acting are the same people railing against ai in writing and visual art. Where is the line? Only when your job/hobby is threatened? Come on now.

#solavellan#:|#dragon age#do you care? if you don't then whatever#but what makes ai voice acting okay where other uses of ai are not?#if I'm wrong and that post with gdl dirty talking isn't ai then I'll shut up and be happy#but... I'm pretty sure that's ai?

1K notes
