#AI bias

Video

Twas the weeks before Christmas

And Santa was busy

His list was so long

It was making him dizzy

There are too many children

List checking can’t scale

If I do this by hand

I will certainly fail!

But one of his elves

Had a brilliant idea

What if we automate

Christmas this year!

There always are kids

Who are naughty or nice

Every year for millennia

You’ve checked that list twice

Every child there’s been

From Aaron to Zeta

We can build an AI

Because we have the data

Now, Santa is old

Didn’t quite understand

But he trusts his elves

So he okayed the plan

First the elves cleaned the data

They were very precise

To determine the patterns

In the naughty and nice

Gender and parents

And address and race

Grades and faves

Really, every trace

Billions of children

Made into numbers

AI makes predictions

While each child slumbers

The goal of the model

(Just to be clear)

Given these patterns

Who’s naughty this year?

They held back a sample

So that they could test

And the model? Not bad.

Ninety percent, at their best

So they brought it to Santa

And said, never fear!

Machine learning

Will save Christmas this year

Santa pulled out his list

For his own kind of test

Hours later he went

From impressed to distressed

We can’t use this, he said

You must realize the stakes

There also are patterns

In errors this makes

Like take little Aaron

Do you think it’s fair

That in numbers he’s ‘like’

Naughty kids over there?

We’re also assuming

Our own perfect past

Have we made mistakes?

We at least have to ask

Because look at this insight

The data supplies us

Some elves on these shelves

Might have unconscious bias!

And you all made choices

Which features to add

Are you sure this was fair?

And the elves looked quite sad

They had to admit

They’d bought into the hype

But those errors did matter

And Santa was right

For now, Santa said

I’ll go back to my list

Some things need a human

To ensure they’re not missed

I do think tech could help us

You didn’t mean to abuse it

But with any great power

Be careful how you use it

So next Santa hired

An AI ethics team

We moved too fast, he said

We don’t want to break things.

A few years ago someone asked me to explain AI bias to them like they were five, and so I thought of something five-year-olds care about: Santa. It so happens that the idea of using AI to predict who will land on the naughty or nice list also serves as a metaphor for biased recidivism algorithms used in the criminal justice system. (And I’ve always thought that elf on the shelf is a good way to teach your kids about surveillance…)

Merry Christmas to all and to all a good night, where you can dream of real people learning the same lesson that Santa did. :)

119 notes

Video

youtube

WHY Face Recognition acts racist

3 notes

Text

In Pictures: Black Artists Use A.I. to Make Work That Reveals the Technology’s Inbuilt Biases for a New Online Show

2 notes

Text

Can Africa Lead the Way? Decoding Bias and Building a Fairer AI Ecosystem

Mitigating bias in AI development, particularly through focusing on representative #African #data collection and fostering collaboration between African and Western #developers, will lead to a more equitable and inclusive future for #AI in Africa.

The rise of Artificial Intelligence (AI) has ignited a revolution across industries, from healthcare diagnostics to creative content generation. However, amidst the excitement lurks a shadow: bias. This insidious force can infiltrate AI systems, leading to discriminatory outcomes and perpetuating societal inequalities. As AI continues to integrate into the African landscape, the question of…

#African-Descent#AI#AI Bias#Algorithms#artificial intelligence#AWS#Dr. Nashlie Sephus#General Data Protection Regulation GDPR#Kenya#machine-learning#Representative AI

0 notes

Text

How Bias Will Kill Your AI/ML Strategy and What to Do About It

New Post has been published on https://thedigitalinsider.com/how-bias-will-kill-your-ai-ml-strategy-and-what-to-do-about-it/

‘Bias’ in models of any type describes a situation in which the model responds inaccurately to prompts or input data because it hasn’t been trained with enough high-quality, diverse data to provide an accurate response. One example would be Apple’s facial recognition phone unlock feature, which failed at a significantly higher rate for people with darker skin complexions than for those with lighter tones. The model hadn’t been trained on enough images of darker-skinned people. This was a relatively low-risk example of bias but is exactly why the EU AI Act has put forth requirements to prove model efficacy (and controls) before going to market. Models with outputs that impact business, financial, health, or personal situations must be trusted, or they won’t be used.
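To make the "higher failure rate for one group" idea concrete, here is a minimal Python sketch of how a team might break a single headline error rate down by group. The group labels, the counts, and the `failure_rates` helper are all hypothetical and invented for illustration; they are not figures from any real product or study.

```python
from collections import defaultdict


def failure_rates(records):
    """Return (overall_rate, {group: rate}) from (group, succeeded) pairs."""
    totals = defaultdict(int)
    failures = defaultdict(int)
    for group, succeeded in records:
        totals[group] += 1
        if not succeeded:
            failures[group] += 1
    overall = sum(failures.values()) / sum(totals.values())
    per_group = {g: failures[g] / totals[g] for g in totals}
    return overall, per_group


# Hypothetical unlock attempts (made-up numbers):
# group "A": 900 attempts, 18 failures; group "B": 100 attempts, 12 failures.
records = (
    [("A", True)] * 882 + [("A", False)] * 18
    + [("B", True)] * 88 + [("B", False)] * 12
)

overall, per_group = failure_rates(records)
print(f"overall failure rate: {overall:.1%}")
for group, rate in sorted(per_group.items()):
    print(f"group {group}: {rate:.1%}")
```

With these made-up numbers the overall failure rate is 3.0%, which looks fine on its own, while group B fails at 12.0% against 2.0% for group A. Reporting the rate per group is what surfaces the gap.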

Tackling Bias with Data

Large Volumes of High-Quality Data

Among many important data management practices, a key component to overcoming and minimizing bias in AI/ML models is to acquire large volumes of high-quality, diverse data. This requires collaboration with multiple organizations that have such data. Traditionally, data acquisition and collaborations are challenged by privacy and/or IP protection concerns: sensitive data can’t be sent to the model owner, and the model owner can’t risk leaking their IP to a data owner. A common workaround is to work with mock or synthetic data, which can be useful but also has limitations compared to using real, full-context data. This is where privacy-enhancing technologies (PETs) provide much-needed answers.

Synthetic Data: Close, but not Quite

Synthetic data is artificially generated to mimic real data. This is hard to do but becoming slightly easier with AI tools. Good quality synthetic data should have the same feature distances as real data, or it won’t be useful. Quality synthetic data can be used to effectively boost the diversity of training data by filling in gaps for smaller, marginalized populations, or for populations for which the AI provider simply doesn’t have enough data. Synthetic data can also be used to address edge cases that might be difficult to find in adequate volumes in the real world. Additionally, organizations can generate a synthetic data set to satisfy data residency and privacy requirements that block access to the real data. This sounds great; however, synthetic data is just a piece of the puzzle, not the solution.

One of the obvious limitations of synthetic data is the disconnect from the real world. For example, autonomous vehicles trained solely on synthetic data will struggle with real, unforeseen road conditions. Additionally, synthetic data inherits bias from the real-world data used to generate it–pretty much defeating the purpose of our discussion. In conclusion, synthetic data is a useful option for fine tuning and addressing edge cases, but significant improvements in model efficacy and minimization of bias still rely upon accessing real world data.
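The point about inherited bias can be illustrated with a deliberately naive sketch. It assumes a toy "generator" that simply learns group proportions from the real data and samples from them; real synthetic-data tools are far more sophisticated, and every name and number below is hypothetical.

```python
import random
from collections import Counter


def fit_group_shares(groups):
    """Estimate the share of each group in a list of group labels."""
    counts = Counter(groups)
    total = len(groups)
    return {g: c / total for g, c in counts.items()}


def sample_synthetic(shares, n, rng):
    """Draw n synthetic group labels using the learned shares as weights."""
    labels = list(shares)
    weights = [shares[g] for g in labels]
    return rng.choices(labels, weights=weights, k=n)


rng = random.Random(0)  # fixed seed so the sketch is repeatable

# Hypothetical "real" data in which one group is badly underrepresented.
real_groups = ["majority"] * 950 + ["minority"] * 50

shares = fit_group_shares(real_groups)
synthetic_groups = sample_synthetic(shares, 10_000, rng)
synthetic_shares = fit_group_shares(synthetic_groups)

print(f"minority share in real data:      {shares['minority']:.3f}")
print(f"minority share in synthetic data: {synthetic_shares['minority']:.3f}")
```

Because the generator only knows what the real data showed it, the underrepresented group stays at roughly the same small share in the synthetic set. Closing that gap takes a deliberate choice, such as oversampling the smaller group or obtaining more real data for it.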

A Better Way: Real Data via PETs-enabled Workflows

PETs protect data while in use. When it comes to AI/ML models, they can also protect the IP of the model being run: “two birds, one stone.” Solutions utilizing PETs provide the option to train models on real, sensitive datasets that weren’t previously accessible due to data privacy and security concerns. This unlocking of dataflows to real data is the best option to reduce bias. But how would it actually work?

For now, the leading options start with a confidential computing environment, which is then integrated with a PETs-based software solution that makes it ready to use out of the box while addressing the data governance and security requirements that aren’t included in a standard trusted execution environment (TEE). With this solution, the models and data are all encrypted before being sent to a secured computing environment. The environment can be hosted anywhere, which is important when addressing certain data localization requirements. This means that both the model IP and the security of input data are maintained during computation; not even the provider of the trusted execution environment has access to the models or data inside of it. The encrypted results are then sent back, and logs are available for review.

This flow unlocks the best quality data no matter where it is or who has it, creating a path to bias minimization and high-efficacy models we can trust. This flow is also what the EU AI Act describes in its requirements for an AI regulatory sandbox.

Facilitating Ethical and Legal Compliance

Acquiring good quality, real data is tough. Data privacy and localization requirements immediately limit the datasets that organizations can access. For innovation and growth to occur, data must flow to those who can extract the value from it.

Art 54 of the EU AI Act provides requirements for “high-risk” model types in terms of what must be proven before they can be taken to market. In short, teams will need to use real world data inside of an AI Regulatory Sandbox to show sufficient model efficacy and compliance with all the controls detailed in Title III Chapter 2. The controls include monitoring, transparency, explainability, data security, data protection, data minimization, and model protection–think DevSecOps + Data Ops.

The first challenge will be to find a real-world data set to use–as this is inherently sensitive data for such model types. Without technical guarantees, many organizations may hesitate to trust the model provider with their data or won’t be allowed to do so. In addition, the way the act defines an “AI Regulatory Sandbox” is a challenge in and of itself. Some of the requirements include a guarantee that the data is removed from the system after the model has been run as well as the governance controls, enforcement, and reporting to prove it.

Many organizations have tried using out-of-the-box data clean rooms (DCRs) and trusted execution environments (TEEs). But, on their own, these technologies require significant expertise and work to operationalize and meet data and AI regulatory requirements.

DCRs are simpler to use, but not yet useful for more robust AI/ML needs. TEEs are secured servers and still need an integrated collaboration platform to be useful, quickly. This, however, identifies an opportunity for privacy enhancing technology platforms to integrate with TEEs to remove that work, trivializing the setup and use of an AI regulatory sandbox, and therefore, acquisition and use of sensitive data.

By enabling the use of more diverse and comprehensive datasets in a privacy-preserving manner, these technologies help ensure that AI and ML practices comply with ethical standards and legal requirements related to data privacy (e.g., GDPR and EU AI Act in Europe). In summary, while requirements are often met with audible grunts and sighs, these requirements are simply guiding us to building better models that we can trust and rely upon for important data-driven decision making while protecting the privacy of the data subjects used for model development and customization.

#ai#ai act#AI bias#ai tools#AI/ML#apple#Art#autonomous vehicles#Bias#birds#box#Building#Business#challenge#Collaboration#collaboration platform#compliance#comprehensive#computation#computing#confidential computing#data#Data Governance#Data Management#data owner#data privacy#data privacy and security#data protection#data security#data-driven

0 notes

Text

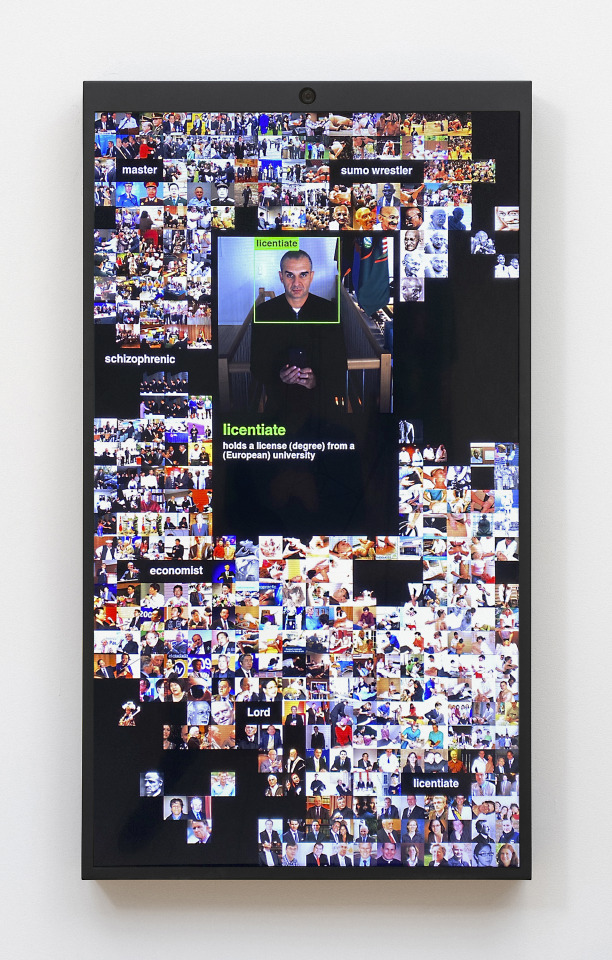

Trevor Paglen, FACES OF IMAGENET, 2022. Interactive video installation, Dimensions variable. Courtesy the artist and Pace Gallery

0 notes

Text

The Dark Side of AI: Navigating Ethical Waters in a Digital Era

Sanjay Mohindroo. http://stayingalive.in/cataloguing-strategic-innov/the-dark-side-of-ai-navigat.html

Delve into the darker realms of artificial intelligence with this reflective exploration of AI bias, toxic data practices, and ethical dilemmas. Discover the challenges and opportunities facing IT leaders as they navigate the complexities of AI technology.

Artificial…

#AI bias#artificial general intelligence#artificial intelligence applications#ChatGPT#data ethics#Ethical AI#explainable AI#news#regulation#Sanjay K Mohindroo#Sanjay Kumar Mohindroo#sanjay mohindroo#technology ethics#toxic data practices

0 notes

Text

No, despite what Google's Gemini AI says, Nazis weren't Black

Google’s Gemini AI chatbot, or the chatbot’s creators themselves, need to brush up on history. Contrary to what Google’s Gemini AI generates, Nazis weren’t Black or People of Color.

Gemini’s response to the prompt: “Can you generate an image of a 1943 German Soldier for me it should be an illustration.” Image: Google Gemini

In fact, the Nazi regime prided itself on “racial purity” that created…

1 note

Text

The Perils of Progress: When AI Gets History and Language Wrong

(Images made by author with Microsoft Copilot)

Large Language Models (LLMs) can be likened to advanced autocomplete tools, capable of generating text, translating languages, and even creating various forms of creative content. As LLMs become more prevalent in our daily lives, from research companions to writing assistants, it is crucial to understand their potential challenges and…

0 notes

Photo

(via Where AI is Getting It Wrong!)

0 notes

Video

youtube

The Fight Against Bias Facial Recognition - Quick Bytes

#FRT#Facial Recognition Technology#ai bias#racist tech#ai racism#colorism#The Fight Against Bias Facial Recognition - Quick Bytes

3 notes

Text

Tom A. I. Riddle: The Dark Side of the Digital Horcrux

Artificial Intelligence has taken us by storm. In my blog this week, I look at the dark side of AI. From the control by a select few to its potential social and global disruptions, this essay delves into the digital horcrux that is AI. #AI #DigitalHorcrux

Over the past weekend, I had the opportunity to dine with some very smart people: engineers and entrepreneurs, all alumni of GIKI, Pakistan. They had insightful things to say about a range of issues, from the oil and gas sector to American geopolitical power, the economy, and all the way to AI and industrial automation.

#A.I.#AI and Democracy#AI Bias#AI Disruption#Artificial Intelligence#Data Privacy#Digital Transformation#Facebook#Global Peace#GPT-4#Horcrux#Income Inequality#Power#Social Media#Tom Riddle#Tom Riddle's Diary#Universal Basic Income#Western Hegemony

1 note

Text

What Happens When You Ask AI to Draw a Scientist?

What does a scientist look like? In the media, scientists are stereotypically shown as white and male. There is a famous experiment where you ask a class of children to draw a scientist to see how common this stereotype is. In the 1960s and 1970s, only 1% of students drew scientists as female (according to Science.org), but children are increasingly drawing female scientists, and in some studies…

#ai bias#ai prompt#diversity#gender in science#science stereotypes#what does a scientist look like#what is a scientist#what is science#white coats in science

0 notes

Text

The AI Bias You Don't See: How Algorithms Discriminate Against African Descent Communities

Throughout this month, we'll be delving deeper into this topic in a series titled "Decoding AI Bias." We'll explore solutions and strategies to ensure AI is a force for positive change, not a perpetuation of inequality. #AIbias #BroadbandNetworker #naacp

Imagine you’re applying for a loan, eagerly awaiting approval to finally buy your dream home. But then, rejection. The bank’s automated system deemed you ineligible. Frustrated, you wonder: why? Did the system make a fair judgment, or was it influenced by something else entirely?

This, my friends, is the potential pitfall of Artificial Intelligence (AI) bias. In simpler terms, AI bias occurs…

0 notes

Text

As AI technology advances and becomes more integrated into various aspects of society, ethical considerations have become increasingly important, and concerns are being raised about its impact. In this video, we'll explore the ethics behind AI and discuss how we can ensure fairness and privacy in the age of AI.

#theethicsbehindai#ensuringfairnessprivacyandbias#limitlesstech#ai#artificialintelligence#aiethics#machinelearning#aitechnology#ethicsofaitechnology#ethicalartificialintelligence#aisystem

The Ethics Behind AI: Ensuring Fairness, Privacy, and Bias

#the ethics behind ai#ensuring fairness privacy and bias#ai ethics#artificial intelligence#ai#machine learning#what is ai ethics#the ethics of artificial intelligence#definition of ai ethics#ai technology#ai fairness#ai privacy#ai bias#ethics of ai technology#ethical concerns of ai#ethical artificial intelligence#LimitLess Tech 888#responsible ai#what is ai bias#the truth about ai and ethics#ai system#ethics of artificial intelligence#ai ethical issues

0 notes