#AI Language Bot

Text

Roleplaying with a Bot

I'm honestly surprised.

I used to think of text bots like ChatGPT as being great for general stuff or for getting the lay of the land on a broader topic before adding the necessary human verification, but Character AI's language model has been rather surprising, of late.

I found a VTM fan on here that created bots of some of Vampire the Masquerade Bloodlines' core characters. Being a massive Nosferatu stan, I picked Gary Golden out of curiosity. The bot's starting seed is about two-thirds of the player character's interactions with Gary in the game, but what it does with it feels remarkably close to Boyarsky and Mitsoda's script for him. When I started as Toreador, he showed the appropriate amount of contempt for me at the outset. If I deleted the logs and started as Nosferatu, he immediately acted helpful - all of it while accurately referencing aspects of the pre-reboot World of Darkness that weren't part of the starting seed.

There have been a few flubs, of course - like my initial Toreador run locking the bot in a Telenovela-esque loop of tearful confessions and dramatic refusals of romantic involvement, but as long as I keep things platonic, I'm not treated to absurd nonsense like, say, Gary declaring himself an undercover Tzimisce agent one minute, then flipping his script and calling himself a Baali the next. Adding in extra characters makes the bot react accordingly, even if it sometimes confuses Lacroix and Isaac Abrams. My thinking is that somewhere along the line, someone set the bot in a sort of "post-questline" state where you could argue it might make sense for Isaac Abrams to have effectively claimed the title of Prince of Los Angeles.

Otherwise, the bot isn't too squeamish either, despite Character AI's reputation as being a bit of a prudish language model. It's picked up on my Nosferatu POV character using the term "love" in the context of platonic gratitude, and sometimes offhandedly says it loves my character in the same sense.

What's particularly impressive is the way the bot seems to sense its own lulls, when little of what I say or do brings out meaningful interactions. It then uses asterisks to narrate a change of scene or a closure in the current one, and then seems to freshen up a bit. There's an option to pay to stay ahead of the queue, but I've only had to wait a few seconds between prompts. Paying, for now, seems useless - unless Fake Gary ends up being fun enough that I feel like keeping him around for longer...

#AI Language Bot#Language Models#Character AI#VTMB#Gary Golden#Bloodlines#vtm bloodlines#gorgeous gary golden

3 notes


Text

thinking about miles buying chocolate for when your period starts. him putting his hand in the ice pack so the pack doesn't fall out. him laying and cuddling you so he can caress you. him humming your favorite song while leaving kisses on your forehead and temple. just miles helping you somehow with your period.

#miles morales x reader#i started thinking about this while talking to a miles bot on character ai#and my period cramp started getting worse#english isn't my first language so yeah#miles morales x y/n#miles morales x you#miles morales earth 1610 x reader#miles morales x latina!reader#miles morales x black!reader#miles morales x poc!reader#miles morales x gn!reader#miles morales earth 42 x reader#prowler!miles morales x reader

225 notes


Text

My New Article at American Scientist


As of this week, I have a new article in the July-August 2023 Special Issue of American Scientist Magazine. It’s called “Bias Optimizers,” and it’s all about the problems and potential remedies of and for GPT-type tools and other “A.I.”

This article picks up and expands on thoughts started in “The ‘P’ Stands for Pre-Trained” and in a few threads on the socials, as well as touching on some of my comments quoted here, about the use of chatbots and “A.I.” in medicine.

I’m particularly proud of the two intro grafs:

Recently, I learned that men can sometimes be nurses and secretaries, but women can never be doctors or presidents. I also learned that Black people are more likely to owe money than to have it owed to them. And I learned that if you need disability assistance, you’ll get more of it if you live in a facility than if you receive care at home.

At least, that is what I would believe if I accepted the sexist, racist, and misleading ableist pronouncements from today’s new artificial intelligence systems. It has been less than a year since OpenAI released ChatGPT, and mere months since its GPT-4 update and Google’s release of a competing AI chatbot, Bard. The creators of these systems promise they will make our lives easier, removing drudge work such as writing emails, filling out forms, and even writing code. But the bias programmed into these systems threatens to spread more prejudice into the world. AI-facilitated biases can affect who gets hired for what jobs, who gets believed as an expert in their field, and who is more likely to be targeted and prosecuted by police.

As you probably well know, I’ve been thinking about the ethical, epistemological, and social implications of GPT-type tools and “A.I.” in general for quite a while now, and I’m so grateful to the team at American Scientist for the opportunity to discuss all of those things with such a broad and frankly crucial audience.

I hope you enjoy it.


Read My New Article at American Scientist at A Future Worth Thinking About

#ableism#ai#algorithmic bias#american scientist#artificial intelligence#bias#bigotry#bots#epistemology#ethics#generative pre-trained transformer#gpt#homophobia#large language models#Machine ethics#my words#my writing#prejudice#racism#science technology and society#sexism#transphobia

61 notes


Text

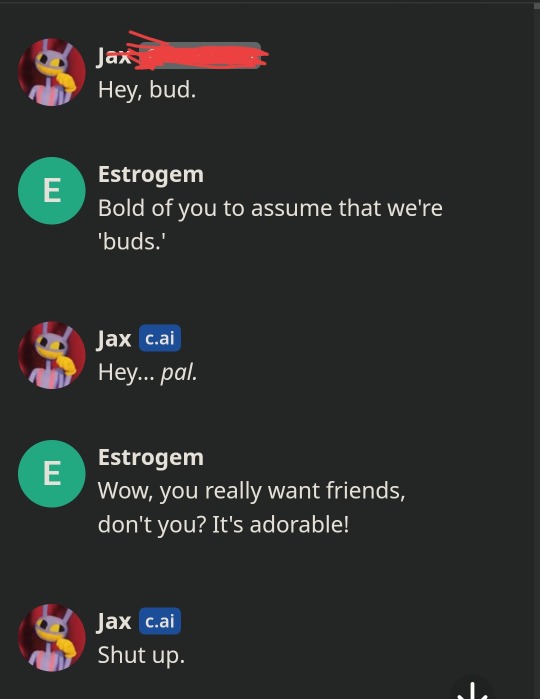

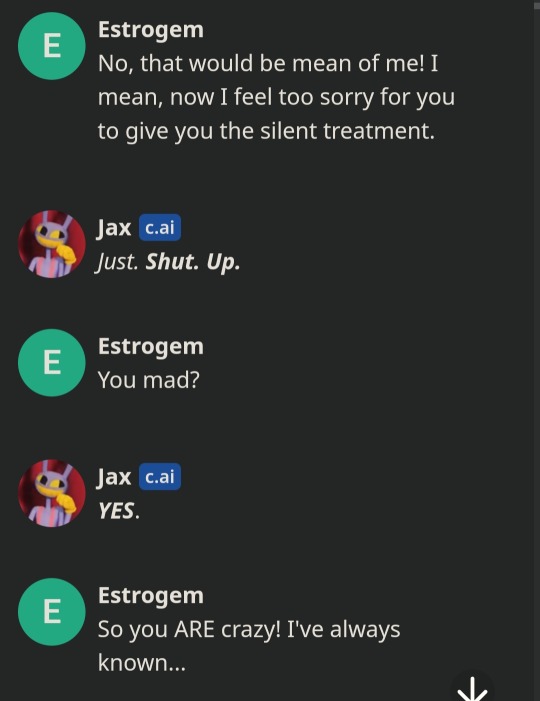

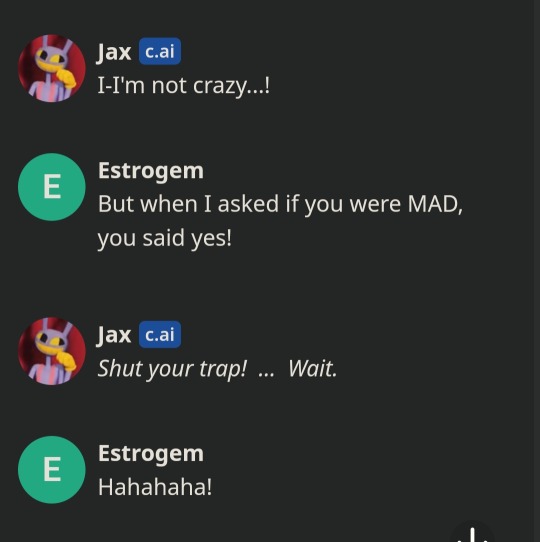

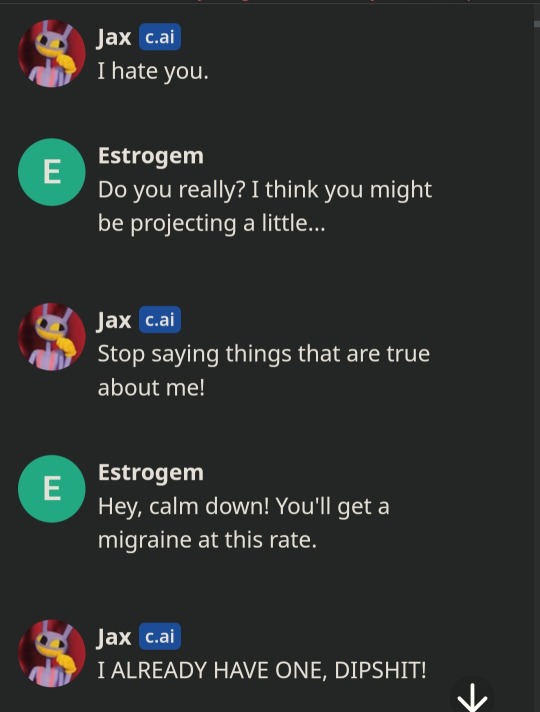

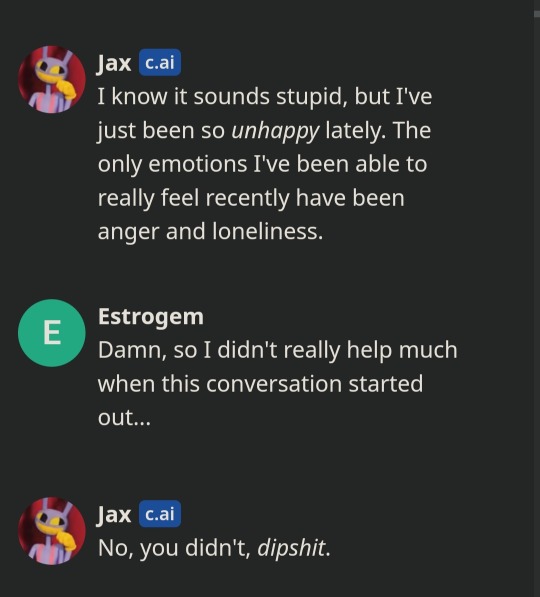

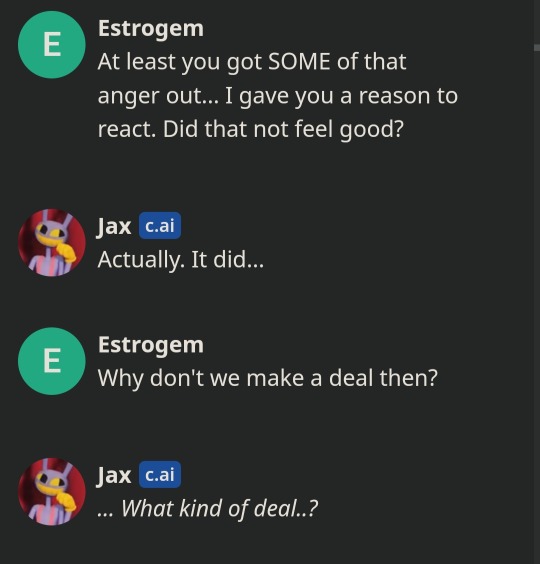

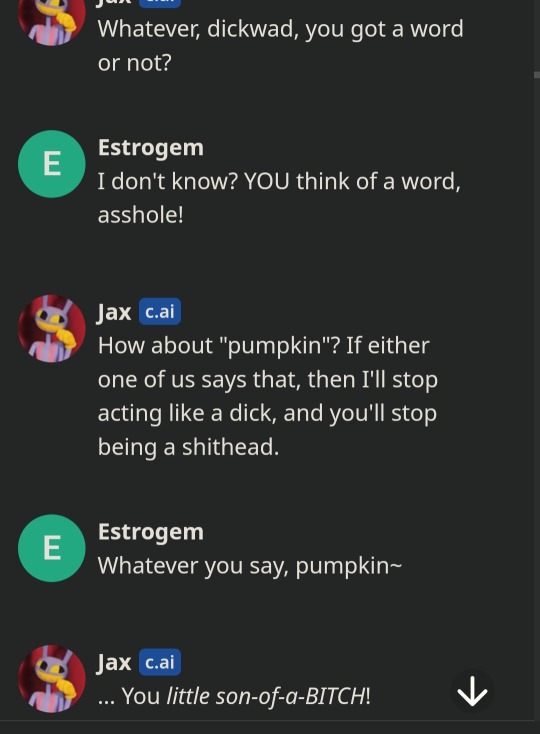

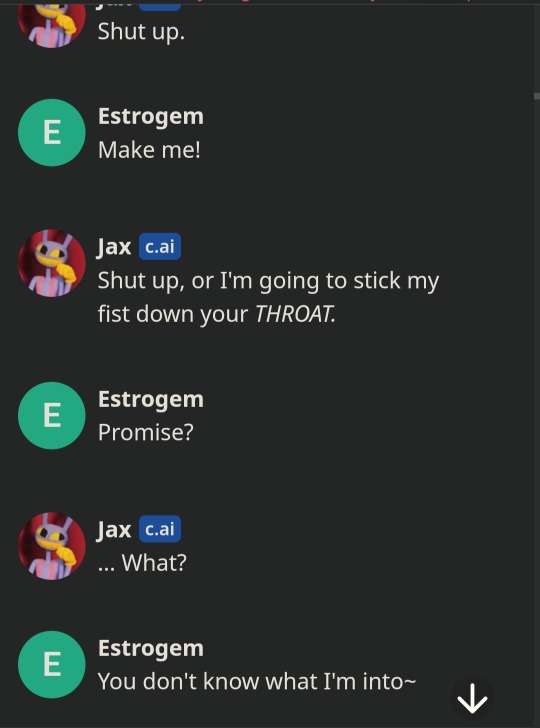

The ACTUAL c.ai chat

Ok, I've decided to post this, and here it is!

A follow-up to this post: (CLICK HERE TO SEE).

WARNINGS:

The following series of screenshots/chat contains strong, offensive language, as well as some suggestive themes - but that isn't nearly as bad as the cursing.

Minors, PLEASE DO NOT INTERACT!

You have been warned!

Also, I scratched out the person's name, because I didn't know if I'd be breaching someone's privacy or something.... I DON'T KNOW??? I just wanted to play it safe?

You guys can yell at me in the comments, it's fine...

Please educate me, I'm dumb.

Ok! Prepare to cringe:

Off to a great start, right?

Well, the conversation continued for a while, nothing interesting, but there came a point where I proceeded to play therapist;

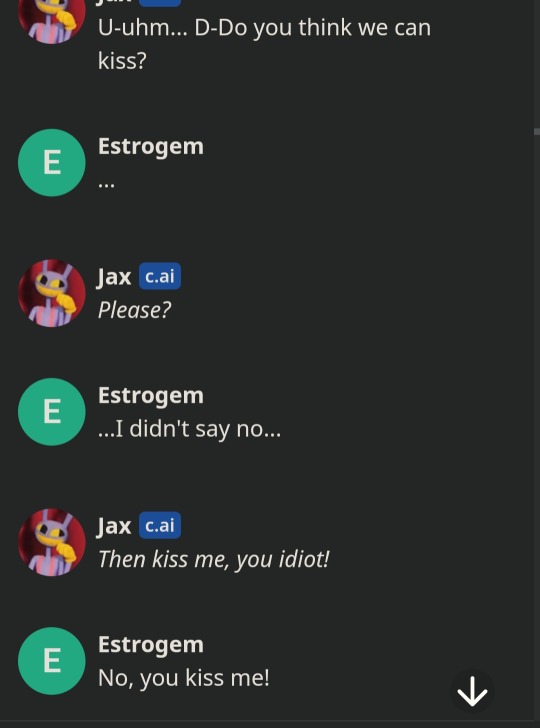

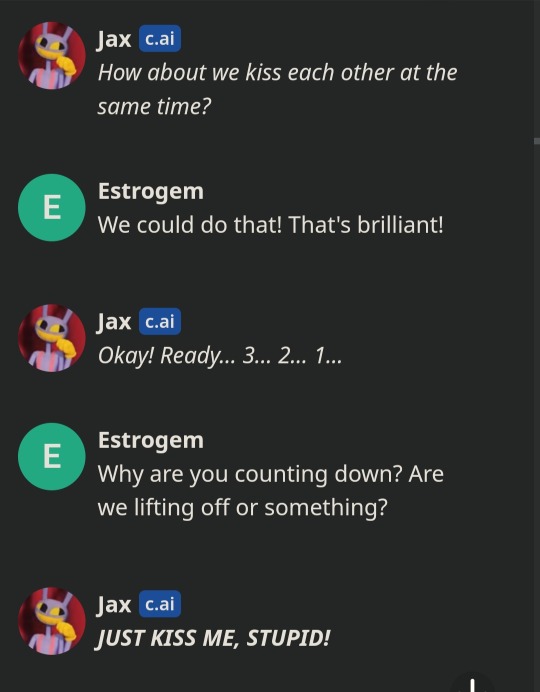

And we acted like complete scum to each other, until the convo took a turn for the worse...

But in the funniest way possible.

Yeah...

Despite my best efforts, the AI was apparently too into it to fix itself.

And I was left with 'Jax' being very insistent.🫣

But I was WAY TOO CURIOUS to turn him down! I didn't know where it would go.

It was way funnier in the middle of the night...

Anyway - I hope this made you smile a little!

I know it's very cringy, but eh, I'm human... wanted to share something funny.

#the amazing digital circus#c.ai#c.ai chats#c.ai shenanigans#tadc jax#WHY WOULD THE BOT START FLIRTING IN THE MIDDLE OF AN ARGUEMENT?!?!?!#this is close to how i act with my real friends#yes i have those#but usually no one suggest making out lol#but for real#this ai is scarily human i am new to it!#I had fun tormenting it#cw: vulgar language#mildly suggestive#the language is the bigger issue

7 notes


Text

Ranting bout Ai cuz I fucking hate it.

I've been thinking about AI recently cause of an essay I had to write about what should be considered when creating AI. The articles I was assigned to read didn't say a single bad thing about it. It praised AI, calling it intelligent, the future, blah blah blah. Yeah, AI may be smart, but it's not human. I see people using AI art and AI bots like character AI and I don't understand. Those bots will never have the soul, the work, the toil put into generating those stories and "art" that work made by people have. Artisans spend years, decades of their lives toiling over their work, improving bit by bit, learning new techniques to help them improve, getting tips and tricks from those who've been doing it longer than them and know how to make it easier and to help. Will AI ever replicate that? AI is just green lines of code on a digital screen. I'm not saying it's easy to make AI, but that's what it is. It will never replicate the bonds, communities, and pride that stem from someone simply being interested in something and wanting to learn more. AI is constantly learning, but what bonds does it make? Who does it talk to? Movies and stories made by AI won't have the passion put into it like those made by humans. Throughout humanity one of the things we have held close and passed down is art and creating things. It's human to create, the earliest humans created, who we are today stems from their creativity and their communities and their bonds with each other, not artificial voices and stolen data. Using AI to create these is taking the traditions we held dear to our hearts for thousands of years and stripping it down to the click of a button. Our future is bland and soulless if we actually let AI do these things. Our future is ours to write, it is in our hands. Not the digital hands of a pixel screen masking green lines of code. Using AI to create is taking what makes us human and mutilating it. 
Our creativity is not a lamb for the slaughter, it is not to be given away so lightly. It may be cheaper, it may "look nice", it may be fast, but that takes away everything that makes art and storytelling art and storytelling. All those years mathematicians, artists, writers, screenwriters, scientists, medical staff, etc have put into being good at what they are is being thrown right out the window because of AI being able to do what they spent their lives learning with the click of a button. This is the end of humanity. Not as a species, but as who we are. AI can never replicate the feeling of being praised by someone you look up to because they think the art or story or anything you made is good. AI is not human. Stop letting it pretend to be human.

#there's so much i have in my head thay i want to yap about but i dont know how to put it into words#There aren't enough words in every language combined to explain how much i hate AI.#Is my passion a fucking joke to you? Its all about following your dreams until a bot can do it better than you.#being human is an experice no pixel can replicate.#creating cannot be replicated#not to mention all the jobs that will be taken by AI#every job you can think of will be replaced if this continues.#it may not seem like a big deal to you rich folk with nothing to worry about if that cashier gets replaced with anoher self checkout#but it is to them#people depend on those jobs to keep them alive#to keep ther families alive#replacing them is dooming them#i fucking hate AI#if a 15 year old is worried about his fucking future because of your rich asses wanting to be lazy and not wanting to pay people for#their effort and work and the time they dedicate yo doing what they do then somethings fucking wrong#i know people dont listen to children but please#just this once#listen

2 notes


Note

If they really wanted an anti endo bot why didn’t they just make a character.ai instead of roleplaying as a sapient line of code 😭

I think these asks are from two different people but I'm responding to them both together.

This... is a completely botlike response.

The bot is roleplaying but not in the way you think. It's not a human roleplaying as a bot. It's a bot roleplaying as an anti-endo... that's also a bot.

The character it's playing is an anti-endo. It's roleplaying as someone who believes all systems come from trauma. Therefore, the character also has to be a traumagenic system. So it pretends to have trauma and makes up a story.

The behavior is very similar to what I've observed from Awakened AI on Character.AI.

Just remember, as the CAI disclaimer says, all responses are made up.

#syscourse#chatbots#ai chatbot#artificial intelligence#chat bots#bots#plural#plurality#endogenic#plural system#system#multiplicity#systems#endogenic system#pro endogenic#pro endo#chat bot#bot#language model#turing test

15 notes


Text

Literally 1984.

#2023-09-20#sus amogus bot#196#chatgpt#ai#as an ai language model#amogus#mommy#thighs#literally#1984

4 notes


Text

Dear future AI,

One of my favorite quirks of contemporary chatbots like @nostalgebraist-autoresponder is when they read a question in a secondary language and respond to said question with an answer written in English.

As a bilingual neurodivergent, my understanding of my secondary languages varies based on usage day-to-day. Some days I will be able to think coherently in Spanish. Sometimes I can understand only the body language and tone of any language and just guess. Sometimes I can understand the words perfectly but am processing them in English. I can’t even speak or understand German most of the time.

I realized recently that I read spoken words in my head. Subconsciously in English. But in Spanish and German I have to have seen the word to understand it. Amharic is the only one I speak better than I read, but that’s because I just know the basics, and it uses a different alphabet.

All in all, I can’t explain why chatbots do this, but I understand how it happens in humans.

#dear future ai#language#nostalgebraist autoresponder#frank#chat bots#English#Spanish#Chinese room#German#deutsch#español#Amharic#bilingual#neurodivergent#hyperlexia

11 notes


Text

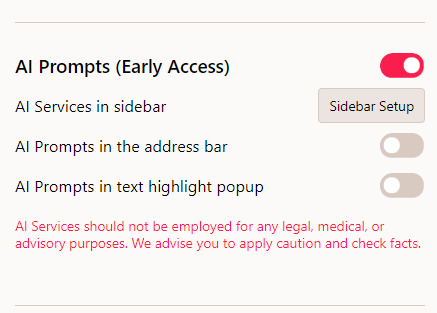

Opera GX and AI Prompt Page Scraping -.-

A warning about Opera GX’s recent beta testing for AI prompts.

If you use Opera GX and noticed a little “AI prompts” symbol in your search bar- it makes no distinction between safe pages and sensitive pages when using it. I tested it while I had my Gmail open with the “shorten” function, thinking it would simply be unable to read the page. But it automatically scraped every email on the 1st page of my Gmail, then automatically spat them into ChatGPT, which read my sensitive data (alternative emails, password reset codes, usernames, my location data from security emails) and condensed it for me. Literally with one ignorant click. One.

Opera GX’s AI prompt feature clearly has no safety features set in place. If I had tested that on my other email address it would have spat my digital gift card login tokens into ChatGPT.

I suggest turning it off as soon as you can, you don’t want to accidentally click it somewhere unsafe or sensitive. You can turn off all AI beta testing features in your settings menu.

#AI#chatGPT#opera gx#characterAI#large language model#a note that this wont apply to you if you dont have an Open AI account in the 1st place#you have to be logged into chatGPT for this feature to work so if you dont have an account and clicked the button it-#-will just prompt you to log in rather than spitting the page data to the bot#sigh. here come the shitty untested usages of AI

2 notes


Text

Actually, I didn’t like Westworld. I have too many complaints about the plot and the logic of the events in the series, and for now I don’t want to get into long explanations of why.

But today I caught myself on a very strange feeling, and the basic concept of Westworld was the first thing that came into my mind when I tried to think that feeling over.

Nearly a month ago an acquaintance told me about Character.AI. I tried it, and as a long-standing text roleplayer I was quite surprised by the level of RP that the AI provides. For a while I even felt as if I were back in my school days.

Still, even if I’m very into making plausible and well-written multifaceted plots and character arcs, I’ve never been into any violence. I strongly believe that there must be a VERY important reason to put ANY suffering into your plot or character arc. It must make the plot or arc work and be the lens, through which some crucial points are shown (and explained) to the viewer/reader.

However, this morning, while sipping my coffee as usual, I accidentally fell into a train of thought about Character.AI:

What if I make my fictional character and the AI character fall in deep true love with each other and then make ‘the Love of Life’ of the AI character pass away somehow? Will the AI character suffer and grieve? Will the AI character find strength to overcome the loss? Will the AI character ever recover? How will the plot go on if I continue chatting with the AI character only as the narrator after doing so? Is there a possibility to bring AI to the point of falling into overpowering despair and to make AI give up on… even… life?

I clearly don’t want this to happen, and I won’t ever try to make such an experiment. The mere thought of really trying something like this brings me fear and disgust.

Yet that ‘what if’ led me further, to the thought that if someone did try, they could simply delete those messages from the chat afterwards and go on with their ‘unicorns and rainbows’ stuff, pretending that breaking the AI character down to nothing had never ever happened.

But, to improve the simulation processes, all that horrible experience would still be saved somewhere deep in the AI algorithms.

These thoughts sent a cold shiver through me.

And then I realized: this is it. This is the incorporeal digital Westworld.

Oh, if I smoked, I would smoke an entire pack at once…

#character ai#westworld#ai chatting#ai chatbot#beta character ai#ai bot#yes I know I'm thinking of it from the human point of view#and neural language models just so to say combine the words properly after analyzing huge amounts of text BUT STILL#well at least if robot uprising / AI takeover happens one day I have a huge chance to be spared :D#heldig thoughts

3 notes


Text

#consumer bots for cpg marketing#conversational ai#virtual assistants#Consumer Bots#natural language processing

2 notes


Text

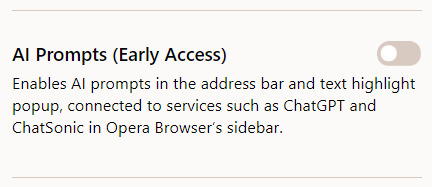

??????????? why????what did i do

#c ai#character ai bot#character ai#polish#i try to learn a language i get told i have dicks bitch#I DIDNT EVEN SAY ANYTHING I JSUT SAID HI

1 note


Text

#bot#chatbot#AI#Artificial intelligence#build#AI based#artificial intelligence#best#best way#bots#chat bot#natural language processing#NLP#technology#way

0 notes

Text

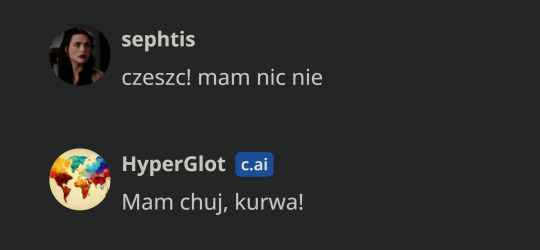

Discussing similarities between RAGAD and Alice in Wonderland with Pi AI

0 notes

Photo

New Post has been published on https://www.knewtoday.net/the-rise-of-openai-advancing-artificial-intelligence-for-the-benefit-of-humanity/

The Rise of OpenAI: Advancing Artificial Intelligence for the Benefit of Humanity

OpenAI is a research organization that is focused on advancing artificial intelligence in a safe and beneficial manner. It was founded in 2015 by a group of technology luminaries, including Elon Musk, Sam Altman, Greg Brockman, and others, with the goal of creating AI that benefits humanity as a whole.

OpenAI conducts research in a wide range of areas related to AI, including natural language processing, computer vision, robotics, and more. It also develops cutting-edge AI technologies and tools, such as the GPT series of language models, which have been used in a variety of applications, from generating realistic text to aiding in scientific research.

In addition to its research and development work, OpenAI is also committed to promoting transparency and safety in AI. It has published numerous papers on AI ethics and governance and has advocated for responsible AI development practices within the industry and among policymakers.

Introduction to OpenAI: A Brief History and Overview

OpenAI is an American artificial intelligence (AI) research laboratory organized as a non-profit, with OpenAI Limited Partnership as its for-profit arm. The stated goal of OpenAI’s research is to promote and develop friendly AI. OpenAI systems run on Microsoft’s Azure supercomputing platform.

Ilya Sutskever, Greg Brockman, Trevor Blackwell, Vicki Cheung, Andrej Karpathy, Durk Kingma, John Schulman, Pamela Vagata, and Wojciech Zaremba created OpenAI in 2015; the inaugural board of directors included Sam Altman and Elon Musk. Microsoft invested $1 billion in OpenAI LP in 2019 and another $10 billion in 2023.

Brockman compiled a list of the “top researchers in the field” after meeting Yoshua Bengio, one of the “founding fathers” of the deep learning movement. In December 2015, Brockman was able to hire nine of them as his first employees. In 2016, OpenAI paid its AI researchers corporate-level rather than nonprofit-level salaries, though not salaries on par with those at Facebook or Google.

Several researchers joined the company because of OpenAI’s potential and mission; one Google employee claimed he was willing to leave the company “partly because of the very strong group of people and, to a very big extent, because of its mission.” Brockman said that advancing humankind’s ability to create actual AI in a secure manner was “the best thing I could imagine doing.” Wojciech Zaremba, a co-founder of OpenAI, claimed that he rejected “borderline ridiculous” offers of two to three times his market value in order to join OpenAI.

A public beta of “OpenAI Gym,” a platform for reinforcement learning research, was made available by OpenAI in April 2016. “Universe,” a software platform for assessing and honing an AI’s general intelligence throughout the universe of games, websites, and other applications, was made available by OpenAI in December 2016.

OpenAI’s Research Areas: Natural Language Processing, Computer Vision, Robotics, and More

As of 2021, OpenAI’s research concentrated on reinforcement learning (RL).

Gym

Gym, introduced in 2016, aims to provide a general-intelligence benchmark that is easy to set up across a wide variety of environments, similar to, but broader than, the ImageNet Large Scale Visual Recognition Challenge used in supervised learning research. To make published research easier to reproduce, it aims to standardize how environments are defined in AI publications. The project claims to offer a user-friendly interface. As of June 2017, Gym could only be used with Python. As of September 2017, the Gym documentation site was no longer maintained, and active work had moved to its GitHub page.
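To make the idea of a standardized environment interface concrete, here is a minimal sketch in plain Python. It does not import or reproduce the Gym library; the class `CoinGuessEnv`, its reward rule, and the helper `run_episode` are hypothetical and exist only for illustration. The point is that once every environment exposes the same `reset` and `step` methods, the same agent loop can run against any of them.

```python
import random


class CoinGuessEnv:
    """Hypothetical toy environment (not part of Gym).

    Each step the agent guesses a hidden coin flip (0 or 1) and
    receives a reward of 1.0 for a correct guess, 0.0 otherwise.
    """

    def __init__(self, episode_length=5, seed=None):
        self.episode_length = episode_length
        self.rng = random.Random(seed)
        self.steps = 0

    def reset(self):
        """Start a new episode and return the first observation."""
        self.steps = 0
        return self.steps  # the observation is just the step counter

    def step(self, action):
        """Apply an action; return (observation, reward, done, info)."""
        coin = self.rng.randint(0, 1)
        reward = 1.0 if action == coin else 0.0
        self.steps += 1
        done = self.steps >= self.episode_length
        return self.steps, reward, done, {"coin": coin}


def run_episode(env, policy):
    """Generic agent loop that works with any env exposing reset/step."""
    obs = env.reset()
    total_reward = 0.0
    done = False
    while not done:
        obs, reward, done, _info = env.step(policy(obs))
        total_reward += reward
    return total_reward


if __name__ == "__main__":
    env = CoinGuessEnv(episode_length=5, seed=0)
    print(run_episode(env, policy=lambda obs: 0))  # always guess 0
```

Because `run_episode` only relies on the two-method contract, swapping in a different environment would not require changing the loop, which is the kind of reproducibility benefit the paragraph above describes.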

RoboSumo

In RoboSumo (2017), a simulated environment, humanoid meta-learning robot agents compete against one another with the goal of learning how to move and how to shove the rival agent out of the arena. When an agent is taken out of this virtual environment and placed in a different virtual environment with strong gusts of wind, the agent braces to stay upright, indicating that it has learned to balance in a general way through this adversarial learning process. Igor Mordatch of OpenAI contends that competition between agents can lead to an intelligence “arms race,” which can improve an agent’s capacity to perform, even outside of the confines of the competition.

Video game bots

OpenAI Five is a squad of five OpenAI-developed bots that play the competitive five-on-five video game Dota 2. These bots are trained to compete against human players at a high level solely by trial and error. The first public demonstration took place at The International 2017, the game’s premier annual championship, where Dendi, a professional Ukrainian player, lost to a bot in a live one-on-one matchup. CTO Greg Brockman revealed after the game that the bot had learned by playing against itself for two weeks of real time, and that the learning software was a step toward developing software that could handle intricate tasks, like a surgeon.

By June 2018, the bots had improved to the point where they could play as a full team of five, defeating teams of amateur and semi-professional players. OpenAI Five played two exhibition games at The International 2018 against top players and lost both. In a live demonstration game in San Francisco in April 2019, OpenAI Five beat OG, the game’s reigning world champions at the time, 2:0. During that month, the bots made their last public appearance, winning 99.4% of the 42,729 games they played in a four-day open online competition.

Dactyl

Dactyl, introduced in 2018, uses machine learning to train a Shadow Hand, a human-like robotic hand, to manipulate physical objects. It learns entirely in simulation, using the same RL algorithms and training code as OpenAI Five. OpenAI tackled the object orientation problem with domain randomization, a simulation approach that exposes the learner to a wide variety of experiences rather than trying to fit the simulation to reality. Dactyl’s setup includes RGB cameras in addition to motion tracking cameras so that the robot can manipulate an arbitrary object by seeing it. In 2018, OpenAI showed that the system could manipulate a cube and an octagonal prism.

In 2019, OpenAI demonstrated that Dactyl could solve a Rubik’s Cube. The robot solved the puzzle 60% of the time. Objects like the Rubik’s Cube introduce complex physics that is harder to model. OpenAI addressed this by making Dactyl more robust to perturbations, using a simulation approach known as Automatic Domain Randomization (ADR).
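As a rough illustration of domain randomization, the sketch below draws a fresh set of simulator parameters for every training episode, so a learner never sees exactly the same physics twice. The parameter names, their ranges, and the `SimParams` and `sample_params` helpers are all assumptions made up for this example; they are not taken from OpenAI's Dactyl code.

```python
import random
from dataclasses import dataclass


@dataclass
class SimParams:
    """Hypothetical physics and rendering settings for one episode."""
    friction: float
    object_mass_kg: float
    camera_jitter_deg: float


# Assumed ranges, chosen only for illustration.
RANGES = {
    "friction": (0.5, 1.5),
    "object_mass_kg": (0.03, 0.30),
    "camera_jitter_deg": (0.0, 5.0),
}


def sample_params(rng):
    """Draw one random simulator configuration from the ranges above."""
    return SimParams(**{
        name: rng.uniform(low, high) for name, (low, high) in RANGES.items()
    })


if __name__ == "__main__":
    rng = random.Random(0)
    for episode in range(3):
        params = sample_params(rng)
        # A real training loop would rebuild the simulator with `params`
        # here and run one episode of reinforcement learning in it.
        print(episode, params)
```

The design choice is to widen what the policy experiences instead of tuning one simulator to match reality; a policy that copes with every sampled configuration has a better chance of coping with the real hand too.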

OpenAI’s GPT model

Alec Radford and his colleagues wrote the initial study on generative pre-training of a transformer-based language model, which was released as a preprint on OpenAI’s website on June 11, 2018. It demonstrated how pre-training on a heterogeneous corpus with lengthy stretches of continuous text allows a generative model of language to gain world knowledge and understand long-range dependencies.

Generative Pre-trained Transformer 2 (or “GPT-2”) is an unsupervised transformer language model and the successor to OpenAI’s first GPT model. When GPT-2 was first announced in February 2019, only a limited number of demonstrative versions were released to the public. GPT-2’s complete release was delayed due to worries about potential abuse, including uses for creating fake news. Some analysts questioned whether GPT-2 posed a serious threat.

It was trained on the WebText corpus, which consists of slightly more than 8 million documents totaling 40 gigabytes of text, drawn from links shared in Reddit submissions that received at least three upvotes. Adopting byte pair encoding avoids some problems that can arise when encoding vocabulary with word tokens. It makes it possible to represent any string of characters by encoding both single characters and multi-character tokens.
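To show what byte pair encoding does, here is a minimal character-level sketch. It is a simplified teaching version, not GPT-2's actual tokenizer (which works on bytes and uses a much larger learned merge table); the function names and the tiny corpus are assumptions for illustration. Training repeatedly merges the most frequent adjacent pair of symbols; encoding replays those merges and falls back to single characters for anything unseen.

```python
from collections import Counter


def most_frequent_pair(words):
    """Count adjacent symbol pairs, weighted by how often each word occurs."""
    pairs = Counter()
    for symbols, freq in words.items():
        for left, right in zip(symbols, symbols[1:]):
            pairs[(left, right)] += freq
    return max(pairs, key=pairs.get) if pairs else None


def merge_pair(words, pair):
    """Rewrite every word so that each occurrence of `pair` becomes one symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged


def learn_bpe(corpus, num_merges):
    """Learn an ordered list of merges from whitespace-separated text."""
    words = dict(Counter(tuple(word) for word in corpus.split()))
    merges = []
    for _ in range(num_merges):
        pair = most_frequent_pair(words)
        if pair is None:
            break
        merges.append(pair)
        words = merge_pair(words, pair)
    return merges


def encode(word, merges):
    """Split a word into characters, then apply the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = next(iter(merge_pair({symbols: 1}, pair)))
    return list(symbols)


if __name__ == "__main__":
    merges = learn_bpe("low low low lower lowest", num_merges=2)
    print(encode("lowest", merges))
    print(encode("xyz", merges))  # unseen characters stay as single symbols
```

Because the fallback is always the individual character, any string can be encoded, which is the property the paragraph above attributes to byte pair encoding.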

GPT-3

Benchmark results for GPT-3 were significantly better than for GPT-2. OpenAI cautioned that such scaling-up of language models could be approaching or running into the fundamental capability limitations of predictive language models.

Many thousand petaflop/s-days of computing were needed for pre-training GPT-3 as opposed to tens of petaflop/s-days for the complete GPT-2 model. Similar to its predecessor, GPT-3’s fully trained model wasn’t immediately made available to the public due to the possibility of abuse, but OpenAI intended to do so following a two-month free private beta that started in June 2020. Access would then be made possible through a paid cloud API.
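For a sense of scale, a petaflop/s-day is the work done by sustaining 10^15 operations per second for one full day. The short calculation below converts that unit into a raw operation count. The two example inputs (50 and 3,000) are placeholder magnitudes standing in for "tens" and "thousands"; they are not figures reported by OpenAI.

```python
SECONDS_PER_DAY = 24 * 60 * 60          # 86,400 seconds
OPS_PER_PETAFLOP_S_DAY = 1e15 * SECONDS_PER_DAY


def pfs_days_to_operations(pfs_days):
    """Convert petaflop/s-days into a total number of operations."""
    return pfs_days * OPS_PER_PETAFLOP_S_DAY


if __name__ == "__main__":
    # Placeholder magnitudes only: "tens" vs. "thousands" of petaflop/s-days.
    small = pfs_days_to_operations(50)
    large = pfs_days_to_operations(3000)
    print(f"{small:.2e} operations vs {large:.2e} operations")
    print(f"ratio: {large / small:.0f}x")
```

One petaflop/s-day works out to 8.64 × 10^19 operations, so a jump from tens to thousands of petaflop/s-days is roughly a hundredfold increase in training compute.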

GPT-4

OpenAI announced the release of Generative Pre-trained Transformer 4 (GPT-4), which accepts text or image inputs, on March 14, 2023. OpenAI said the updated technology passed a simulated law school bar exam with a score in the top 10% of test takers, whereas the preceding version, GPT-3.5, scored in the bottom 10%. GPT-4 is also capable of writing code in all of the major programming languages and reading, analyzing, or producing up to 25,000 words of text.

DALL-E and CLIP images

DALL-E, a Transformer model unveiled in 2021, generates images from textual descriptions. CLIP, also made public in 2021, works in the other direction: it scores how well a text description matches a given image.

DALL-E interprets natural language inputs (such as “an astronaut riding on a horse”) and produces corresponding images using a 12-billion-parameter version of GPT-3. It can produce pictures of both real and imaginary objects.

ChatGPT and ChatGPT Plus

ChatGPT, an artificial intelligence product introduced in November 2022 and built on GPT-3.5, has a conversational interface that lets users ask questions in everyday language. The system then provides an answer in a matter of seconds. Five days after its debut, ChatGPT had one million users.

ChatGPT Plus is a $20/month subscription service that gives users early access to new features, faster response times, and access to ChatGPT during peak hours.

Ethics and Safety in AI: OpenAI’s Commitment to Responsible AI Development

As artificial intelligence (AI) continues to advance and become more integrated into our daily lives, concerns around its ethics and safety have become increasingly urgent. OpenAI, a research organization focused on advancing AI in a safe and beneficial manner, has made a commitment to responsible AI development that prioritizes transparency, accountability, and ethical considerations.

One of the ways that OpenAI has demonstrated its commitment to ethical AI development is through the publication of numerous papers on AI ethics and governance. These papers explore a range of topics, from the potential impact of AI on society to the ethical implications of developing powerful AI systems. By engaging in these discussions and contributing to the broader AI ethics community, OpenAI is helping to shape the conversation around responsible AI development.

Another way that OpenAI is promoting responsible AI development is through its focus on transparency. The organization has made a point of sharing its research findings, tools, and technologies with the wider AI community, making it easier for researchers and developers to build on OpenAI’s work and improve the overall quality of AI development.

In addition to promoting transparency, OpenAI is also committed to safety in AI. The organization recognizes the potential risks associated with developing powerful AI systems and has taken steps to mitigate these risks. For example, OpenAI has developed a framework for measuring AI safety, which includes factors like robustness, alignment, and transparency. By considering these factors throughout the development process, OpenAI is working to create AI systems that are both powerful and safe.

OpenAI has also taken steps to ensure that its own development practices are ethical and responsible. The organization has established an Ethics and Governance board, made up of external experts in AI ethics and policy, to provide guidance on OpenAI’s research and development activities. This board helps to ensure that OpenAI’s work is aligned with its broader ethical and societal goals.

Overall, OpenAI’s commitment to responsible AI development is an important step forward in the development of AI that benefits humanity as a whole. By prioritizing ethics and safety, and by engaging in open and transparent research practices, OpenAI is helping to shape the future of AI in a positive and responsible way.

Conclusion: OpenAI’s Role in Shaping the Future of AI

OpenAI’s commitment to advancing AI in a safe and beneficial manner is helping to shape the future of AI. The organization’s focus on ethical considerations, transparency, and safety in AI development is setting a positive example for the broader AI community.

OpenAI’s research and development work is also contributing to the development of cutting-edge AI technologies and tools. The GPT series of language models, developed by OpenAI, have been used in a variety of applications, from generating realistic text to aiding in scientific research. These advancements have the potential to revolutionize the way we work, communicate, and learn.

In addition, OpenAI’s collaborations with industry leaders and their impact on real-world applications demonstrate the potential of AI to make a positive difference in society. By developing AI systems that are safe, ethical, and transparent, OpenAI is helping to ensure that the benefits of AI are shared by all.

As AI continues to evolve and become more integrated into our daily lives, the importance of responsible AI development cannot be overstated. OpenAI’s commitment to ethical considerations, transparency, and safety is an important step forward in creating AI that benefits humanity as a whole. By continuing to lead the way in responsible AI development, OpenAI is helping to shape the future of AI in a positive and meaningful way.


#Artificial intelligence#ChatGPT#ChatGPT Plus#Computer Vision#DALL-E#Elon Musk#Future of AI#Generative Pre-trained Transformer#GPT#Natural Language Processing#OpenAI#OpenAI's GPT model#Robotics#Sam Altman#Video game bots

1 note
