Appendix A: An Imagined and Incomplete Conversation about “Consciousness” and “AI,” Across Time

Every so often, I think about the fact that one of the best things my advisor and committee members let me write and include in my actual doctoral dissertation was this, and I smile a bit; since I keep wanting to share it out into the world, I figured I should put it somewhere more accessible.

So with all of that said, we now rejoin An Imagined and Incomplete Conversation about “Consciousness” and “AI,” Across Time, already (still, seemingly unendingly) in progress:

René Descartes (1637): The physical and the mental have nothing to do with each other. Mind/soul is the only real part of a person.

Norbert Wiener (1948): I don’t know about that “only real part” business, but the mind is absolutely the seat of the command and control architecture of information and the ability to reflexively reverse entropy based on context and input/output feedback loops.

Alan Turing (1952): Huh. I wonder if what computing machines do can reasonably be considered thinking?

Wiener: I dunno about “thinking,” but if you mean “pockets of decreasing entropy in a framework in which the larger mass of entropy tends to increase,” then oh for sure, dude.

John von Neumann (1958): Wow, things sure are changing fast in science and technology; we should maybe slow down and think about this before that change hits a point beyond our ability to meaningfully direct and shape it: a singularity, if you will.

Clynes & Kline (1960): You know, it’s funny you should mention how fast things are changing, because one day we’re gonna be able to have automatic tech in our bodies that lets us pump ourselves full of chemicals to deal with the rigors of space; btw, have we told you about this new thing we’re working on called “antidepressants?”

Gordon Moore (1965): Right now an integrated circuit has 64 transistors, and they keep getting smaller, so if things keep going the way they’re going, in ten years they’ll have 65 THOUSAND. :-O

Donna Haraway (1991): We’re all already cyborgs bound up in assemblages of the social, biological, and technological, in relational reinforcing systems with each other. Also do you like dogs?

Ray Kurzweil (1999): Holy Shit, did you hear that?! Because of the pace of technological change, we’re going to have a singularity where digital electronics will be indistinguishable from the very fabric of reality! They’ll be part of our bodies! Our minds will be digitally uploaded immortal cyborg AI Gods!

Tech Bros: Wow, so true, dude; that makes a lot of sense when you think about it; I mean maybe not “Gods” so much as “artificial super intelligences,” but yeah.

90’s TechnoPagans: I mean… Yeah? It’s all just a recapitulation of The Art in multiple technoscientific forms across time. I mean (*takes another hit of salvia*) if you think about the timeless nature of multidimensional spiritual architectures, we’re already—

DARPA: Wait, did that guy just say something about “Uploading” and “Cyborg/AI Gods?” We got anybody working on that?? Well GET TO IT!

Disabled People, Trans Folx, BIPOC Populations, Women: Wait, so our prosthetics, medications, and relational reciprocal entanglements with technosocial systems of this world in order to survive make us cyborgs?! :-O

[Simultaneously:]

Kurzweil/90’s TechnoPagans/Tech Bros/DARPA: Not like that.

Wiener/Clynes & Kline: Yes, exactly.

Haraway: I mean it’s really interesting to consider, right?

Tech Bros: Actually, if you think about the bidirectional nature of time, and the likelihood of simulationism, it’s almost certain that there’s already an Artificial Super Intelligence, and it HATES YOU; you should probably try to build it/never think about it, just in case.

90’s TechnoPagans: …That’s what we JUST SAID.

Philosophers of Religion (To Each Other): …Did they just Pascal’s Wager Anselm’s Ontological Argument, but computers?

Timnit Gebru and other “AI” Ethicists: Hey, y’all? There’s a LOT of really messed up stuff in these models you started building.

Disabled People, Trans Folx, BIPOC Populations, Women: Right?

Anthony Levandowski: I’m gonna make an AI god right now! And a CHURCH!

The General Public: Wait, do you people actually believe this?

Microsoft/Google/IBM/Facebook: …Which answer will make you give us more money?

Timnit Gebru and other “AI” Ethicists: …We’re pretty sure there might be some problems with the design architectures, too…

Some STS Theorists: Honestly this is all a little eugenics-y— like, both the technoscientific and the religious bits; have you all sought out any marginalized people who work on any of this stuff? Like, at all??

Disabled People, Trans Folx, BIPOC Populations, Women: Hahahahah! …Oh you’re serious?

Anthony Levandowski: Wait, no, never mind about the church.

Some “AI” Engineers: I think the things we’re working on might be conscious, or even have souls.

“AI” Ethicists/Some STS Theorists: Anybody? These prejudices???

Wiener/Tech Bros/DARPA/Microsoft/Google/IBM/Facebook: “Souls?” Pfffft. Look at these whackjobs, over here. “Souls.” We’re talking about the technological singularity, mind uploading into an eternal digital universal superstructure, and the inevitability of timeless artificial super intelligences; who said anything about “Souls?”

René Descartes/90’s TechnoPagans/Philosophers of Religion/Some STS Theorists/Some “AI” Engineers: …

[Scene]

----------- ----------- ----------- -----------

Read Appendix A: An Imagined and Incomplete Conversation about “Consciousness” and “AI,” Across Time at A Future Worth Thinking About

and read more of this kind of thing at: Williams, Damien Patrick. Belief, Values, Bias, and Agency: Development of and Entanglement with "Artificial Intelligence." PhD diss., Virginia Tech, 2022. https://vtechworks.lib.vt.edu/handle/10919/111528.

#ableism#afrofuturism#alan turing#alison kafer#alterity#anselm's ontological argument for the existence of god#artificial intelligence#astrobiology#audio#autonomous created intelligence#autonomous generated intelligence#autonomously creative intelligence#bodies in space#bodyminds#communication#cybernetics#cyborg#cyborg anthropology#cyborg ecology#cyborgs#darpa#decolonization#decolonizing mars#digital#disability#disability studies#distributed machine consciousness#distributed networked intelligence#donna haraway#economics

My New Article at WIRED

So, you may have heard about the whole Zoom “AI” Terms of Service clause public relations debacle going on this past week, in which Zoom decided that it wasn’t going to let users opt out of its feeding our faces and conversations into its LLMs. In Section 10.1, Zoom defines “Customer Content” as whatever data users provide or generate (“Customer Input”) and whatever else Zoom generates from our uses of Zoom. Then Section 10.4 says what they’ll use “Customer Content” for, including “…machine learning, artificial intelligence.”

And then on cue they dropped an “oh god oh fuck oh shit we fucked up” blog where they pinky promised not to do the thing they left actually-legally-binding ToS language saying they could do.

Like, Section 10.4 of the ToS now contains the line “Notwithstanding the above, Zoom will not use audio, video or chat Customer Content to train our artificial intelligence models without your consent,” but it still seems a) that the “customer” in question is the Enterprise, not the User, and b) that “consent” means “clicking yes and using Zoom.” So it’s Still Not Good.

Well anyway, I wrote about all of this for WIRED, including what Zoom might need to do to gain back customer and user trust, and what other tech creators and corporations need to understand about where people are, right now.

And frankly the fact that I have a byline in WIRED is kind of blowing my mind, in and of itself, but anyway…

Also, today, Zoom backtracked Hard. And while I appreciate that, it really feels like Zoom decided to take their ball and go home rather than offer meaningful consent and user control options. That’s… not exactly better, and doesn’t tell me what, if anything, they’ve learned from the experience. If you want to see what I think they should’ve done, then, well… Check the article.

Until Next Time.

Read the rest of My New Article at WIRED at A Future Worth Thinking About

#ai#artificial intelligence#ethics#generative pre-trained transformer#gpt#large language models#philosophy of technology#public policy#science technology and society#technological ethics#technology#zoom#privacy

My New Article at American Scientist

As of this week, I have a new article in the July-August 2023 Special Issue of American Scientist Magazine. It’s called “Bias Optimizers,” and it’s all about the problems and potential remedies of and for GPT-type tools and other “A.I.”

This article picks up and expands on thoughts started in “The ‘P’ Stands for Pre-Trained” and in a few threads on the socials, as well as touching on some of my comments quoted here, about the use of chatbots and “A.I.” in medicine.

I’m particularly proud of the two intro grafs:

Recently, I learned that men can sometimes be nurses and secretaries, but women can never be doctors or presidents. I also learned that Black people are more likely to owe money than to have it owed to them. And I learned that if you need disability assistance, you’ll get more of it if you live in a facility than if you receive care at home.

At least, that is what I would believe if I accepted the sexist, racist, and misleading ableist pronouncements from today’s new artificial intelligence systems. It has been less than a year since OpenAI released ChatGPT, and mere months since its GPT-4 update and Google’s release of a competing AI chatbot, Bard. The creators of these systems promise they will make our lives easier, removing drudge work such as writing emails, filling out forms, and even writing code. But the bias programmed into these systems threatens to spread more prejudice into the world. AI-facilitated biases can affect who gets hired for what jobs, who gets believed as an expert in their field, and who is more likely to be targeted and prosecuted by police.

As you probably well know, I’ve been thinking about the ethical, epistemological, and social implications of GPT-type tools and “A.I.” in general for quite a while now, and I’m so grateful to the team at American Scientist for the opportunity to discuss all of those things with such a broad and frankly crucial audience.

I hope you enjoy it.

Read My New Article at American Scientist at A Future Worth Thinking About

#ableism#ai#algorithmic bias#american scientist#artificial intelligence#bias#bigotry#bots#epistemology#ethics#generative pre-trained transformer#gpt#homophobia#large language models#Machine ethics#my words#my writing#prejudice#racism#science technology and society#sexism#transphobia

The "P" Stands for Pre-trained

I know I've said this before, but since we're going to be hearing increasingly more about Elon Musk and his "Anti-Woke" "A.I." "Truth GPT" in the coming days and weeks, let's go ahead and get some things out on the table:

All technology is political. All created artifacts are rife with values.

I keep trying to tell you that the political right understands this when it suits them— when they can weaponize it; and they're very VERY good at weaponizing it— but people seem to keep not getting it. So let me say it again, in a somewhat different way:

There is no ground of pure objectivity. There is no god's-eye view.

There is no purely objective thing. Pretending there is only serves to create the conditions in which the worst people can play "gotcha" anytime they can clearly point to their enemies doing what we are literally all doing ALL THE TIME: Creating meaning and knowledge out of what we value, together.

Read the rest of The "P" Stands for Pre-trained at A Future Worth Thinking About

#Actor-network theory#ai#algorithmic bias#algorithmic systems#algorithms#artificial intelligence#bias#generative pre-trained transformer#gpt#intersubjectivity#Invisible Architecture of Bias#Invisible Architectures of Bias#large language models#philosophy of technology#prejudice#science technology and society#situated knowledge#social construction of technology#social shaping of technology#values

Further Thoughts on the "Blueprint for an AI Bill of Rights"

So with the job of White House Office of Science and Technology Policy director having gone to Dr. Arati Prabhakar back in October, rather than Dr. Alondra Nelson, and the release of the "Blueprint for an AI Bill of Rights" (henceforth "BfaAIBoR" or "blueprint") a few weeks after that, I am both very interested and also pretty worried to see what direction research into "artificial intelligence" is actually going to take from here.

To be clear, my fundamental problem with the "Blueprint for an AI Bill of Rights" is that while it pays pretty fine lip-service to the ideas of community-led oversight, transparency, and abolition of and abstaining from developing certain tools, it begins with, and repeats throughout, the idea that sometimes law enforcement, the military, and the intelligence community might need to just… ignore these principles. Additionally, Dr. Prabhakar was director of DARPA for roughly five years, between 2012 and 2017, and considering what I know for a fact got funded within that window? Yeah.

To put a finer point on it, 14 out of 16 uses of the phrase "law enforcement" and 10 out of 11 uses of "national security" in this blueprint are in direct reference to why those entities' or concept structures' needs might have to supersede the recommendations of the BfaAIBoR itself. The blueprint also doesn't mention the depredations of extant military "AI" at all. Instead, it points to the idea that the Department of Defense (DoD) "has adopted [AI] Ethical Principles, and tenets for Responsible Artificial Intelligence specifically tailored to its [national security and defense] activities." And so with all of that being the case, there are several current "AI" projects in the pipe which a blueprint like this wouldn't cover, even if it ever became policy, and frankly that just fundamentally undercuts much of the real good a project like this could do.
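As a hedged illustration only, and not a description of how the counts above were made: a reader who wants to check a tally like this can pull every use of a phrase along with its surrounding text, then judge each one by hand. A minimal Python sketch, assuming the blueprint has been saved as plain text; the function names, the 120-character context window, and the file name in the usage note are hypothetical:

```python
import re


def phrase_contexts(text: str, phrase: str, window: int = 120) -> list[str]:
    """Return a context snippet for every case-insensitive occurrence of `phrase`."""
    snippets = []
    for match in re.finditer(re.escape(phrase), text, flags=re.IGNORECASE):
        # Keep `window` characters on each side so a human can judge the framing.
        start = max(0, match.start() - window)
        end = min(len(text), match.end() + window)
        # Collapse line breaks and runs of spaces left over from PDF/OCR extraction.
        snippets.append(" ".join(text[start:end].split()))
    return snippets


def tally(text: str, phrases=("law enforcement", "national security")) -> dict[str, list[str]]:
    """Map each phrase to its context snippets; len() of each list is the raw use count."""
    return {phrase: phrase_contexts(text, phrase) for phrase in phrases}
```

Usage, assuming a hypothetical local copy named `blueprint.txt`: `hits = tally(open("blueprint.txt", encoding="utf-8").read())`, after which `len(hits["law enforcement"])` gives the raw count. Deciding which of those uses actually carve out exemptions still takes a person reading each snippet; the code only gathers them.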

For instance, at present, the DoD's ethical frames are entirely about transparency, explainability, and some lip service around equitability and "deliberate steps to minimize unintended bias in AI …" To understand a bit more of what I mean by this, here's the DoD's "Responsible Artificial Intelligence Strategy…" pdf (which is not natively searchable and I had to OCR myself, so heads-up); and here's the Office of the Director of National Intelligence's "ethical principles" for building AI. Note that not once do they consider the moral status of the biases and values they have intentionally baked into their systems.

Read the rest of Further Thoughts on the "Blueprint for an AI Bill of Rights" at A Future Worth Thinking About

#A Future Worth Thinking About#ai#algorithmic bias#algorithmic intelligence#algorithmic justice#algorithmic systems#algorithms#alondra nelson#Arati Prabhakar#artificial intelligence#autonomous systems#autonomous weapons systems#bias#blueprint for an ai bill of rights#brain-computer interface#department of defense#drones#epistemology#ethics washing#ethnicity#facebook#facial recognition#google#humanities#intersectionality#Invisible Architecture of Bias#Invisible Architectures of Bias#justice#machine learning#my work

I’m Not Afraid of AI Overlords— I’m Afraid of Whoever's Training Them To Think That Way

by Damien P. Williams

I want to let you in on a secret: According to Silicon Valley’s AIs, I’m not human.

Well, maybe they think I’m human, but they don’t think I’m me. Or, if they think I’m me and that I’m human, they think I don’t deserve expensive medical care. Or that I pose a higher risk of criminal recidivism. Or that my fidgeting behaviours or culturally-perpetuated shame about my living situation or my race mean I’m more likely to be cheating on a test. Or that I want to see morally repugnant posts that my friends have commented on to call morally repugnant. Or that I shouldn’t be given a home loan or a job interview or the benefits I need to stay alive.

Now, to be clear, “AI” is a misnomer, for several reasons, but we don’t have time, here, to really dig into all the thorny discussion of values and beliefs about what it means to think, or to be a mind— especially because we need to take our time talking about why values and beliefs matter to conversations about “AI,” at all. So instead of “AI,” let’s talk specifically about algorithms, and machine learning.

Machine Learning (ML) is the name for a set of techniques for systematically reinforcing patterns, expectations, and desired outcomes in various computer systems. These techniques allow those systems to make sought-after predictions based on the datasets they’re trained on. ML systems learn the patterns in these datasets and then extrapolate them to model a range of statistical likelihoods of future outcomes.
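To make that concrete, here is a deliberately tiny Python sketch, under stated assumptions: the "model" just counts outcomes per input value in its training records and turns those counts into estimated likelihoods. The records, the zip-code feature, and the function names are all invented for illustration; real ML systems are vastly more complex, but the basic move, learning and then extrapolating whatever patterns the training data contains, is the one described above.

```python
from collections import Counter, defaultdict


def train(records):
    """'Learn' by counting how often each outcome followed each feature value."""
    counts = defaultdict(Counter)
    for feature, outcome in records:
        counts[feature][outcome] += 1
    return counts


def predict_proba(counts, feature):
    """Extrapolate: estimated likelihood of each outcome for a given feature value."""
    seen = counts.get(feature)
    if not seen:  # never appeared in the training data, so the model has nothing to say
        return {}
    total = sum(seen.values())
    return {outcome: n / total for outcome, n in seen.items()}


# Invented, deliberately skewed "historical" records: (zip_code, loan_decision).
history = (
    [("A", "approve")] * 8 + [("A", "deny")] * 2
    + [("B", "approve")] * 3 + [("B", "deny")] * 7
)
model = train(history)
print(predict_proba(model, "A"))  # {'approve': 0.8, 'deny': 0.2}
print(predict_proba(model, "B"))  # {'approve': 0.3, 'deny': 0.7}
```

Nothing in the code mentions race or any other protected category, yet if past decisions in zip code "B" were shaped by, say, redlining, the model will faithfully project that history forward as a "prediction."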

Algorithms are sets of instructions which, when run, perform functions such as searching, matching, sorting, and feeding the outputs of any of those processes back in on themselves, so that a system can learn from and refine itself. This feedback loop is what allows algorithmic machine learning systems to provide carefully curated search responses or newsfeed arrangements or facial recognition results to consumers like me and you and your friends and family and the police and the military. And while there are many different types of algorithms which can be used for the above purposes, they all remain sets of encoded instructions to perform a function.
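The feedback loop described above can be sketched in a few lines of Python. This is a toy under explicit assumptions, not how any real newsfeed works: items are ranked by click count, users are assumed to click mostly whatever is shown first (the hypothetical `clicks_by_position` parameter), and those clicks are fed back into the next ranking. The item names and starting scores are invented.

```python
def rank(scores):
    """Sort item names by score, highest first; ties are broken alphabetically."""
    return sorted(scores, key=lambda item: (-scores[item], item))


def run_feedback_loop(scores, rounds, clicks_by_position=(3, 1, 0)):
    """Repeatedly rank items, award clicks by display position, and feed
    those clicks back into the scores that drive the next ranking."""
    scores = dict(scores)  # copy so the caller's data is not mutated
    for _ in range(rounds):
        for position, item in enumerate(rank(scores)):
            if position < len(clicks_by_position):
                scores[item] += clicks_by_position[position]
    return scores


# Invented starting point: three near-identical posts, one with a 1-click head start.
start = {"post_a": 11, "post_b": 10, "post_c": 10}
print(run_feedback_loop(start, rounds=10))  # {'post_a': 41, 'post_b': 20, 'post_c': 10}
```

Under these assumptions a one-click head start snowballs into a lead of more than twenty clicks after ten rounds, and `post_b` ends up ahead of the otherwise identical `post_c` only because of the alphabetical tie-breaking rule in `rank`: an arbitrary design decision that the loop then amplifies.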

And so, in these systems’ defense, it’s no surprise that they think the way they do: That’s exactly how we’ve told them to think.

[Image of Michael Emerson as Harold Finch, in season 2, episode 1 of the show Person of Interest, "The Contingency." His face is framed by a box of dashed yellow lines, the words "Admin" to the top right, and "Day 1" in the lower right corner.]

Read the rest of I’m Not Afraid of AI Overlords— I’m Afraid of Whoever's Training Them To Think That Way at A Future Worth Thinking About

#ai#algorithmic bias#algorithmic intelligence#algorithmic justice#algorithmic systems#algorithms#anna lauren hoffman#artificial intelligence#ashley shew#bias#cambridge analytica#epistemology#ethics washing#facebook#facial recognition#frances haugen#google#humanities#Invisible Architecture of Bias#Invisible Architectures of Bias#joy buolamwini#justice#kim crayton#machine learning#my words#my work#my writing#oversight board#philosophy#philosophy of technology

Video and Transcript: "Why AI Research Needs Disabled and Marginalized Perspectives"

Hello Everyone.

Here is my prerecorded talk for the NC State R.L. Rabb Symposium on Embedding AI in Society.

There are captions in the video already, but I've also gone ahead and C/P'd the SRT text here, as well.

There were also two things I meant to mention, but failed to in the video:

1) The history of facial recognition and carceral surveillance being used against Black and Brown communities ties into work from Lundy Braun, Melissa N. Stein, Seiberth et al., and myself on the medicalization and datafication of Black bodies without their consent, down through history. (Cf. me, here: "Fitting the description: historical and sociotechnical elements of facial recognition and anti-black surveillance.")

2) Not only does GPT-3 fail to write about humanities-oriented topics with respect, it still can't write about ISLAM AT ALL without writing in connotations of violence and hatred.

Also I somehow forgot to describe the slide with my email address and this website? What the hell Damien.

Anyway.

I've embedded the content of the resource slides in the transcript, but those are by no means all of the resources on this, just the most pertinent.

All of that begins below the cut.

Read the rest of Video and Transcript: "Why AI Research Needs Disabled and Marginalized Perspectives" at A Future Worth Thinking About

#ai#alexandra reeve-givens#algorithmic bias#algorithmic justice#alondra nelson#anna lauren hoffman#artificial intelligence#ashley shew#biomedical ethics#biotech ethics#biotechnology#black lives matter#disability rights#disability studies#gender#justice#katherine johnson#lydia x. z. brown#my voice#my words#my writing#nasa#race#ruha benjamin#surveillance culture#the center for democracy and technology#the gallaudet eleven#wendy carlos#white house office of science and technology policy

Master and Servant: Disciplinarity and the Implications of AI and Cyborg Identity

Much of my research deals with the ways in which bodies are disciplined and how they go about resisting that discipline. In this piece, adapted from one of the answers to my PhD preliminary exams written and defended two months ago, I "name the disciplinary strategies that are used to control bodies and discuss the ways that bodies resist those strategies." Additionally, I address how strategies of embodied control and resistance have changed over time, and how identifying and existing as a cyborg and/or an artificial intelligence can be understood as a strategy of control, resistance, or both.

In Jan Golinski’s Making Natural Knowledge, he spends some time discussing the different understandings of the word “discipline” and the role their transformations have played in the definition and transmission of knowledge as both artifacts and culture. In particular, he uses the space in section three of chapter two to discuss the role Foucault has played in historical understandings of knowledge, categorization, and disciplinarity. Using Foucault’s work in Discipline and Punish, we can draw an explicit connection between the various meanings of “discipline” and the ways that bodies are individually, culturally, and socially conditioned to fit particular modes of behavior, and the specific ways marginalized peoples are disciplined, relating to their various embodiments.

This will demonstrate how modes of observation and surveillance lead to certain types of embodiments being deemed “illegal” or otherwise unacceptable and thus further believed to be in need of methodologies of entrainment, correction, or reform in the form of psychological and physical torture, carceral punishment, and other means of institutionalization.

[Locust, "Master and Servant (Depeche Mode Cover)"]

Read the rest of Master and Servant: Disciplinarity and the Implications of AI and Cyborg Identity at A Future Worth Thinking About

#algorithmic bias#algorithmic systems#anti-capitalism#artificial intelligence#assistive technology#autonomous created intelligence#autonomous generated intelligence#bias#biotechnology#bodyminds#capitalism#cyborg#cyborg anthropology#cyborgs#decolonial studies#decolonization#disability#disability studies#disciplinarity#discipline and punish#foucault#gender#gender studies#harriet washington#Invisible Architecture of Bias#Invisible Architectures of Bias#jan golinski#lgbtqia+#machine consciousness#medical apartheid

'Star Trek: Picard' and The Admonition: Misapprehensions Through Time

I recently watched all of Star Trek: Picard, and while I was definitely on board with the vast majority of it, and extremely pleased with certain elements of it, some things kind of bothered me.

And so, as with much of the pop culture I love, I want to spend some time with the more critical perspective, in hopes that it’ll be taken as an opportunity to make it even better.

[Promotional image for Star Trek: Picard, featuring all of the series' main cast.]

This will be filled with spoilers, so. Heads up.

Read the rest of 'Star Trek: Picard' and The Admonition: Misapprehensions Through Time at A Future Worth Thinking About

#alterity#distributed machine consciousness#distributed networked intelligence#embodied cognition#embodied machine consciousness#emergence#EMH#evolution#Geordi La Forge#holographic life#Lt. Cmdr. Data#Machine Civilization#machine consciousness#Machine ethics#machine intelligence#machine minds#metaphysics#moriarty#nonhuman personhood#philosophy of mind#philosophy of technology#picard#quality of life#star trek#star trek discovery#star trek tng#Star Trek Voyager#the admonition#time#time travel

Criptiques and A Dying Colonialism

Caitlin Wood’s 2014 edited volume Criptiques consists of 25 articles, essays, poems, songs, or stories, primarily in the first person, all of which are written from disabled people’s perspectives. Both the titles and the content are meant to be provocative and challenging to the reader, especially if that reader is not, themselves, disabled. As editor Caitlin Wood puts it in the introduction, Criptiques is “a daring space,” designed to allow disabled people to create and inhabit their own feelings and expressions of their lived experiences. As such, there’s no single methodology or style, here, and many of the perspectives contrast or even conflict with each other in their intentions and recommendations.

The 1965 translation of Frantz Fanon’s A Dying Colonialism, on the other hand, is a single coherent text exploring the clinical psychological and sociological implications of the Algerian Revolution. Fanon uses soldiers’ first person accounts, as well as his own psychological and medical training, to explore the impact of the war and its tactics on the individual psychologies, the familial relationships, and the social dynamics of the Algerian people, arguing that the damage and horrors of war and colonialism have placed the Algerians and the French in a new relational mode.

Read the rest of Criptiques and A Dying Colonialism at Technoccult

#a dying colonialism#book reviews#books#caitlin wood#civil rights#colonialism#criptiques#critical race theory#critical theory#decolonial psychology#decoloniality#disability#disability studies#first-person narratives#frantz fanon#gender#gender studies#intersectionality#personhood rights#political psychology#post-colonial theory#race#reviews#sociology#surveillance#Surveillance Society#the machine question

Selfhood, Coloniality, African-Atlantic Religion, and Interrelational Culture

In Ras Michael Brown’s African-Atlantic Cultures and the South Carolina Lowcountry, Brown wants to talk about the history of the cultural and spiritual practices of African descendants in the American south. To do this, he discusses the transport of central, western, and west-central African captives to South Carolina in the seventeenth and eighteenth centuries, and finally touches lightly on the nineteenth and twentieth centuries. Brown explores how these African peoples brought, maintained, and transmitted their understandings of spiritual relationships between the physical land of the living and the spiritual land of the dead, and from there how the notions of the African simbi spirits translated through a particular region of South Carolina.

In Kelly Oliver’s The Colonization of Psychic Space, she constructs and argues for a new theory of subjectivity and individuation—one predicated on a radical forgiveness born of interrelationality and reconciliation between self and culture. Oliver argues that we have neglected to fully explore exactly how sublimation functions in the creation of the self, saying that oppression leads to a unique form of alienation which never fully allows the oppressed to learn to sublimate—to translate their bodily impulses into articulated modes of communication—and so they cannot become a full individual, only ever struggling against their place in society, never fully reconciling with it.

These works are very different, so obviously, to achieve their goals, Brown and Oliver lean on distinct tools, methodologies, and sources. Brown focuses on the techniques of religious studies as he examines a religious history: historiography, anthropology, sociology, and linguistic and narrative analysis. He explores the written records and first person accounts of enslaved peoples and their captors, as well as the contextualizing historical documents of Black liberation theorists who were contemporary to the time frame he discusses. Oliver’s project is one of social psychology, and she explores it through the lenses of Freudian and Lacanian psychoanalysis, social construction theory, Hegelian dialectic, and the works of Frantz Fanon. She is looking to build a psycho-social analysis that takes both the social and the individual into account, fundamentally asking the question “How do we belong to the social as singular?”

Read the rest of Selfhood, Coloniality, African-Atlantic Religion, and Interrelational Culture at Technoccult

#african diaspora#African-Atlantic Cultures and the South Carolina Lowcountry#Africana Studies#ai#anthropogy#book reviews#books#colonialism#comparative religion#cyborg#cyborgs#decolonial psychology#decoloniality#ethics#gender#Kelly Oliver#magic#nature#post-colonial theory#Psychic Space#psychology#race#Ras Michael Brown#Religion#religious studies#reviews#simbi#sociology#spirits#technology and religion

Cyborg Theology and An Anthropology of Robots and AI

Scott Midson’s Cyborg Theology and Kathleen Richardson’s An Anthropology of Robots and AI both trace histories of technology and human-machine interactions, and both make use of fictional narratives as well as other theoretical techniques. The goal of Midson’s book is to put forward a new understanding of what it means to be human, an understanding to supplant the myth of a perfect “Edenic” state and the various disciplines’ dichotomous oppositions of “human” and “other.” This new understanding, Midson says, exists at the intersection of technological, theological, and ecological contexts, and he argues that an understanding of the conceptual category of the cyborg can allow us to understand this assemblage in a new way.

That is, all of the categories of “human,” “animal,” “technological,” “natural,” and more are far more porous than people tend to admit and their boundaries should be challenged; this understanding of the cyborg gives us the tools to do so. Richardson, on the other hand, seeks to argue that what it means to be human has been devalued by the drive to render human capacities and likenesses into machines, and that this drive arises from the male-dominated and otherwise socialized spaces in which these systems are created. The more we elide the distinction between the human and the machine, the more we will harm human beings and human relationships.

Midson’s training is in theology and religious studies, and so it’s no real surprise that he primarily uses theological exegesis (and specifically an exegesis of Genesis creation stories), but he also deploys the tools of cyborg anthropology (specifically Donna Haraway’s 1991 work on cyborgs), sociology, anthropology, and comparative religious studies. He engages in interdisciplinary narrative analysis and comparison, exploring the themes from several pieces of speculative fiction media and the writings of multiple theorists from several disciplines.

Read the rest of Cyborg Theology and An Anthropology of Robots and AI at Technoccult

#ai#anthropogy#artificial intelligence#book reviews#books#comparative religion#cyborg#cyborg anthropology#cyborg theology#cyborgs#disability#ethical robots#ethics#fantasy#foucault#gender#psychology#race#Religion#religious studies#reviews#robots#science fiction#scott midson#sociology#technology and religion#theology


Bodyminds, Self-Transformations, and Situated Selfhood

Back in the spring, I read, and wrote a critical comparative analysis of, both Cressida J. Heyes’ Self-Transformations: Foucault, Ethics, and Normalized Bodies, and Dr. Sami Schalk’s BODYMINDS REIMAGINED: (Dis)ability, Race, and Gender in Black Women’s Speculative Fiction. Each of these texts explores conceptions of modes of embodied being, and the ways in which the exterior pressure of societal norms shapes what are seen as “normal” or “acceptable” bodies.

For Heyes, that exploration takes the form of three case studies: the hermeneutics of transgender individuals, especially trans women; the “Askeses” (self-discipline practices) of organized weight-loss dieting programs; and “Attempts to represent the subjectivity of cosmetic surgery patients.” Schalk’s site of interrogation is Black women speculative fiction authors and the ways in which their writing illuminates new understandings of race, gender, and what Schalk terms “(dis)ability.”

Both Heyes and Schalk focus on popular culture, and both center gender as a valence of investigation, because the embodied experience of women in Western society is the crux point for multiple intersecting pressures.

Read the rest of Bodyminds, Self-Transformations, and Situated Selfhood at Technoccult

#bodies#bodyminds reimagined#book reviews#books#civil rights#cressida j heyes#critical race theory#disability#ethics#fantasy#foucault#gender#gender studies#n.k. jemisin#normalization disability studies#octavia butler#political theory#psychology#race#reviews#sami schalk#science fiction#self-transformations#sociology


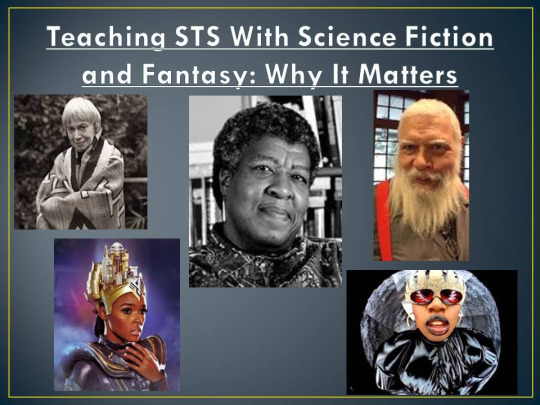

Audio, Transcript, and Slides from "SFF and STS: Teaching Science, Technology, and Society via Pop Culture"

Below are the slides, audio, and transcripts for my talk "SFF and STS: Teaching Science, Technology, and Society via Pop Culture," given at the 2019 Conference for the Society for the Social Studies of Science, in early September.

(Cite as: Williams, Damien P. "SFF and STS: Teaching Science, Technology, and Society via Pop Culture," talk given at the 2019 Conference for the Society for the Social Studies of Science, September 2019)

[audio mp3="http://www.afutureworththinkingabout.com/wp-content/uploads/2019/09/DPW4S2019-2.mp3"][/audio]

[Direct Link to the Mp3]

[Damien Patrick Williams]

Thank you, everybody, for being here. I'm going to stand a bit far back from this mic and project; I'm also probably going to pace a little bit. So if you can't hear me, just let me know. This mic has ridiculously good pickup, though, so I don't think that'll be a problem.

So the conversation that we're going to be having today is titled "SFF and STS: Teaching Science, Technology, and Society via Pop Culture."

I'm using the term "SFF" to stand for "science fiction and fantasy," but we're going to be looking at pop culture more broadly. Science fiction and fantasy offer some of the most obvious entrées into discussions of STS: how culture and society can influence technology, and how the histories of fictional worlds can help students understand the worlds they're currently living in. But pop culture more generally is going to tie into the things that students care about in a way that I think is pertinent to what we're going to be talking about today.

So why are we doing this? Why are we teaching it with science fiction and fantasy? Why does this matter? I've been teaching off and on for 13 years: I've been teaching philosophy, I've been teaching religious studies, I've been teaching Science, Technology, and Society. And as I've gone through my teaching process, I've come to understand that not only do I like pop culture, so do my students. Because they're people, and they're embedded in culture. So that's kind of shocking, I guess.

But what I've found is that one of the things that makes students care the absolute most about what you're teaching them, especially when the subject can be as dry as logic, or as nebulous or unclear at first as, say, engineering cultures, is giving them something to latch on to, something they are already familiar with. If you can show them at the outset, "Hey, you've already been doing this, you've already been thinking about this, you've already encountered this," they will feel less reticent to engage with it.

……

Read the rest of Audio, Transcript, and Slides from "SFF and STS: Teaching Science, Technology, and Society via Pop Culture" at A Future Worth Thinking About

#A Future Worth Thinking About#ableism#Actor-network theory#african diaspora#africanfuturism#afrofuturism#akira#algorithmic systems#amazon#animal ethics#Ann Leckie#anthropology#audio#bias#bitch planet#black panther#compassion#consciousness#damien patrick williams#decolonial studies#decolonization#disability#disability studies#Dune#ethics#ethnography#farscape#feminist ethics#frank herbert#frankenstein


Audio, Transcripts, and Slides from "Any Sufficiently Advanced Neglect is Indistinguishable from Malice"

Below are the slides, audio, and transcripts for my talk '"Any Sufficiently Advanced Neglect is Indistinguishable from Malice": Assumptions and Bias in Algorithmic Systems,' given at the 21st Conference of the Society for Philosophy and Technology, back in May 2019. (Cite as: Williams, Damien P. '"Any Sufficiently Advanced Neglect is Indistinguishable from Malice": Assumptions and Bias in Algorithmic Systems;' talk given at the 21st Conference of the Society for Philosophy and Technology; May 2019)

Now, I've got a chapter coming out about this soon, which I can provide as a preprint draft if you ask, and which can be cited as "Constructing Situated and Social Knowledge: Ethical, Sociological, and Phenomenological Factors in Technological Design," appearing in Philosophy And Engineering: Reimagining Technology And Social Progress. Guru Madhavan, Zachary Pirtle, and David Tomblin, eds. Forthcoming from Springer, 2019. But I wanted to get the words I said in this talk up onto some platforms where people can read them as soon as possible, for a couple of reasons.

First, the Current Occupants of the Oval Office have very recently taken the policy position that algorithms can't be racist, something which they've done in direct response to things like Google’s Hate Speech-Detecting AI being biased against black people, and Amazon claiming that its facial recognition can identify fear, without ever accounting for, i dunno, cultural and individual differences in fear expression?

[Free vector image of a white, female-presenting person, from head to torso, with biometric facial recognition patterns on her face; incidentally, go try finding images—even illustrations—of a non-white person in a facial recognition context.]

All these things taken together are what made me finally go ahead and get the transcript of that talk done and posted, because these are events and policy decisions about which I a) have been speaking and writing for years, and b) have specific inputs and recommendations, and which are c) frankly wrongheaded, and outright hateful.

And I want to spend time on it because I think what doesn't get through in many of our discussions is that it's not just about how Artificial Intelligence, Machine Learning, or Algorithmic instances get trained, but also about the processes by which, and the cultural environments in which, HUMANS are increasingly taught/shown/environmentally encouraged/socialized to think about the "right way" to build and train said systems.

That includes classes and instruction, it includes the institutional culture of the companies, and it includes the policy landscape in which decisions about funding get made, because that drives how people have to talk and write and think about the work they're doing, and that constrains what they will even attempt to do, or even understand.

All of this is cumulative, accreting into institutional epistemologies of algorithm creation. It is a structural and institutional problem.

So here are the Slides:

The Audio: …

[Direct Link to Mp3]

And the Transcript is here below the cut:

Read the rest of Audio, Transcripts, and Slides from "Any Sufficiently Advanced Neglect is Indistinguishable from Malice" at A Future Worth Thinking About

#A Future Worth Thinking About#ableism#algorithmic bias#algorithmic justice#algorithmic systems#amazon#animal ethics#audio#bias#biomedical ethics#biotech ethics#compassion#consciousness#damien patrick williams#debbie chachra#disability#disability studies#embodied cognition#ethics#facebook#facial recognition#feminist ethics#Gayatri Spivak#gender#google#homophobia#implicit bias#intersubjectivity#Invisible Architecture of Bias#Invisible Architectures of Bias


Colonialism and the Technologized Other

One of the things I did this past spring was an independent study: a vehicle by which to move through my dissertation’s tentative bibliography at a pace of around two books at a time, every two weeks, and to write short comparative analyses of the texts. These books covered intersections of philosophy, psychology, theology, machine consciousness, and Afro-Atlantic magico-religious traditions, and I thought my reviews might be of interest here.

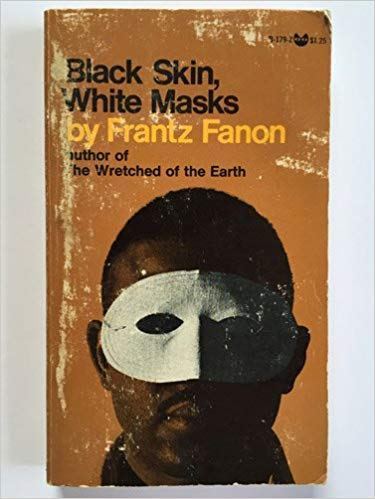

My first two books in this process were Frantz Fanon’s Black Skin, White Masks and David J. Gunkel’s The Machine Question, and while I didn’t initially plan for the texts to link thematically, the first foray made it pretty clear that patterns would emerge whether I consciously intended them to or not.

[Image of a careworn copy of Frantz Fanon’s BLACK SKIN, WHITE MASKS, showing a full-on image of a Black man’s face wearing a white anonymizing eye-mask.]

In choosing both Fanon’s Black Skin, White Masks and Gunkel’s The Machine Question, I was initially worried that they would have very little to say to each other; however, on reading the texts, I instead found myself struck by how firmly the notions of otherness and alterity were entrenched throughout both. Each author, for very different reasons and from within very different contexts, explores the preconditions, the ethical implications, and a course of necessary actions to rectify the coming to be of otherness…

Read the rest of Colonialism and the Technologized Other at Technoccult.net

#artificial intelligence#black skin white masks#book reviews#frantz fanon#david j gunkel#post-colonial theory#the machine question#robot rights#Machine ethics#decolonial psychology#decoloniality#critical race theory#colonialism#critical theory#personhood rights#political psychology#psychology#surveillance#technological ethics#robotics#robots#race#reviews#nonhuman personhood#electronic personhood#civil rights#books#roboethics


Heavenly Bodies: Why It Matters That Cyborgs Have Always Been About Disability, Mental Health, and Marginalization

[This is an in-process pre-print of an as-yet-unpublished paper, a version of which was presented at the Gender, Bodies, and Technology 2019 Conference.]

INTRODUCTION

The history of biotechnological intervention on the human body has always been tied to conceptual frameworks of disability and mental health, but certain biases and assumptions have forcibly altered and erased the public awareness of that understanding. As humans move into a future of climate catastrophe, space travel, and constantly shifting understandings of our place in the world, we will be increasingly confronted with concerns over who will be used as research subjects, concerns over whose stakeholder positions will be acknowledged and preferenced, and concerns over the kinds of changes that human bodies will necessarily undergo as they adapt to their changing environments, be they terrestrial or interstellar. Who will be tested, and how, so that we can better understand what kinds of bodyminds will be “suitable” for our future modes of existence?[1] How will we test the effects of conditions like pregnancy and hormone replacement therapy (HRT) in space, and what will happen to our bodies and minds after extended exposure to low light, zero gravity, high-radiation environments, or the increasing warmth and wetness of our home planet?

During the June 2018 “Decolonizing Mars” event at the Library of Congress in Washington, DC, several attendees discussed the fact that the bodyminds of disabled folx might be better suited to space life, as many are already oriented to pushing off of surfaces and orienting themselves to the world in different ways, and that the integration of body and technology wouldn’t be anything new for many people with disabilities. In that context, I submit that cyborgs and space travel are, always have been, and will continue to be about disability and marginalization, but that Western society’s relationship to disabled people has created a situation in which many people do everything they can to conceal that fact from the popular historical narratives about what it means for humans to live and explore. In order to survive and thrive into the future, humanity will have to carefully and intentionally take this history up again, and consider the present-day lived experience of those beings, human and otherwise, whose lives are and have been most impacted by the socioethical contexts in which we talk about technology and space.

This paper explores some history and theories about cyborgs (humans with biotechnological interventions which allow them to regulate their own internal bodily processes) and how those compare to the realities of how we treat and consider currently living people who are physically enmeshed with technology. I’ll explore several ways in which the above-listed considerations have been alternately overlooked and taken up by various theorists, and some of the many different strategies and formulations for integrating these theories into what will likely become everyday concerns in the future. In fact, by exploring responses from disability studies scholars and artists who have interrogated and problematized the popular vision of cyborgs, the future, and life in space, I will demonstrate that our clearest path toward the future of living with biotechnologies is a reengagement with the everyday lives of disabled and other marginalized persons, today.

Read the rest of Heavenly Bodies: Why It Matters That Cyborgs Have Always Been About Disability, Mental Health, and Marginalization at A Future Worth Thinking About

#A Future Worth Thinking About#afrofuturism#alison kafer#ashley shew#astrobiology#astronomy#astrophysics#becoming interplanetary#bodies in space#bodyminds#cybernetics#cyborg#cyborg anthropology#cyborg ecology#cyborgs#decolonization#decolonize mars#decolonizing mars#disability#disability studies#donna haraway#economics#embodied cognition#engineering#eugenics#feminist ethics#feminist queer crip#gender bodies and technology#gender bodies and technology conference 2019#intersectionality
