#fairness and bias in AI

Unveiling the Ethical Implications: Navigating the Impact of Artificial Intelligence

In the realm of rapid technological advancements, artificial intelligence (AI) emerges as a transformative force with boundless potential. As AI continues to evolve and permeate various aspects of our lives, it is crucial to examine the ethical implications that accompany this technological revolution. Join us on an enlightening journey as we explore the multifaceted ethical considerations…

#accountability and liability in AI#AI education and public awareness#AI ethics#ethical considerations in AI#ethical implications of artificial intelligence#fairness and bias in AI#global collaboration in AI ethics#human-centric AI design#privacy and data protection#responsible AI development#transparency in AI#What are the ethical implications of artificial intelligence?

The Implications of Algorithmic Bias and How To Mitigate It

AI has the potential to transform our world in ways we can't even imagine. From self-driving cars to personalized medicine, it's making our lives easier and more efficient. However, with this power comes the responsibility to consider the ethical implications and challenges of using AI. One of the most significant ethical concerns with AI is algorithmic bias.

Algorithmic bias can occur when a machine learning model is trained on data drawn disproportionately from one demographic group: the model may then make inaccurate predictions for other groups, leading to discrimination. This can be a major problem when AI systems are used in decision-making contexts, such as healthcare or criminal justice, where fairness is crucial.

But there are ways engineers can mitigate algorithmic bias in their models to help promote equality. One important step is to ensure that the data used to train the model is representative of the population it will be used on. Additionally, engineers should test their models on a diverse set of data to identify any potential biases and correct them.
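
Testing on diverse data can start with something as simple as scoring the model separately for each demographic group instead of reporting one overall number. This minimal Python sketch assumes you already have true labels, model predictions, and a group label for each example; the function names and the toy data are hypothetical, made up for illustration.

```python
from collections import defaultdict

# Hypothetical helper names and toy data, for illustration only.

def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy} so gaps between groups are visible."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


def max_accuracy_gap(per_group):
    """Largest difference in accuracy between any two groups."""
    return max(per_group.values()) - min(per_group.values())


y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 1, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

per_group = accuracy_by_group(y_true, y_pred, groups)
print(per_group)                    # {'A': 1.0, 'B': 0.25}
print(max_accuracy_gap(per_group))  # 0.75
```

An overall accuracy of 62.5% would hide the fact that this toy model is right every time for group A and only once in four tries for group B.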

Another key step is to be transparent about the decisions made by the model, and to provide an interpretable explanation of how it reaches them. This can help ensure that the people who build and deploy the model are held accountable for any discriminatory decisions it makes.
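
For simple models, an interpretable explanation can be exact. In a linear scoring model the score is a bias term plus a sum of weight-times-feature terms, so each term is precisely that feature's contribution to the decision. This is a minimal Python sketch; the weights, feature names, and applicant are hypothetical.

```python
def explain_linear_score(weights, bias, features):
    """Break a linear model's score into per-feature contributions.

    For a linear model, score = bias + sum(weight_i * x_i), so each term
    weight_i * x_i is exactly that feature's contribution to the score.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    # Sort so the most influential features (by magnitude) come first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical weights and applicant for a toy credit-scoring model.
weights = {"income": 0.5, "debt": -0.8, "zip_code_risk": -1.2}
applicant = {"income": 2.0, "debt": 1.0, "zip_code_risk": 1.0}

score, ranked = explain_linear_score(weights, bias=0.1, features=applicant)
print(f"score: {score:+.2f}")  # score: -0.90
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
```

Here the largest single contribution comes from `zip_code_risk`, the kind of proxy for race or income that an explanation can surface and a reviewer can then question. More complex models need approximate explanation methods, which this sketch does not cover.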

Finally, it's important to engage with stakeholders, including individuals and communities who may be affected by the model's decisions, to understand their concerns and incorporate them into the development process.

As engineers, we have a responsibility to ensure that our AI models are fair, transparent and accountable. By taking these steps, we can help to promote equality and ensure that the benefits of AI are enjoyed by everyone.

#AI#Machine learning#Algorithmic bias#Ethics#Fairness#Transparency#Interpretability#Bias#Discrimination#Data science#Data representation#Data bias#Stakeholder engagement#Social impact#AI for good#AI for social good#AI ethics#AI and society#AI and diversity#Responsible AI

As AI technology continues to advance and become more integrated into various aspects of society, ethical considerations have become increasingly important, and concerns are being raised about its impact. In this video, we'll explore the ethics behind AI and discuss how we can ensure fairness and privacy in the age of AI.

#theethicsbehindai#ensuringfairnessprivacyandbias#limitlesstech#ai#artificialintelligence#aiethics#machinelearning#aitechnology#ethicsofaitechnology#ethicalartificialintelligence#aisystem

The Ethics Behind AI: Ensuring Fairness, Privacy, and Bias

#the ethics behind ai#ensuring fairness privacy and bias#ai ethics#artificial intelligence#ai#machine learning#what is ai ethics#the ethics of artificial intelligence#definition of ai ethics#ai technology#ai fairness#ai privacy#ai bias#ethics of ai technology#ethical concerns of ai#ethical artificial intelligence#LimitLess Tech 888#responsible ai#what is ai bias#the truth about ai and ethics#ai system#ethics of artificial intelligence#ai ethical issues

Artificial Intelligence has emerged as a transformative technology, revolutionizing industries and societies across the globe. From personalized recommendations to autonomous vehicles, AI systems are becoming deeply integrated into our daily lives. However, this rapid advancement also brings forth a host of ethical concerns that demand careful consideration. Among these concerns, ensuring fairness and privacy, and mitigating bias, are paramount.

Ensuring fairness in AI systems is essential. AI algorithms, often trained on large datasets, have the potential to perpetuate and even exacerbate existing social biases. This can manifest in various ways, from biased hiring decisions in AI-driven recruitment tools to discriminatory loan approvals in automated financial systems. Achieving fairness involves developing algorithms that are not only technically proficient but also ethically sound. It requires recognizing biases in data, both subtle and overt, and implementing measures to mitigate them.
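
One common way to make "recognizing bias" concrete for decisions like loan approvals is to compare selection rates across groups. This sketch computes each group's approval rate and the ratio of the lowest rate to the highest; flagging a ratio under 0.8 follows the "four-fifths rule" used as a rule of thumb in US employment-discrimination guidance. It is a screening heuristic, not a complete definition of fairness, and the decisions below are made up.

```python
def selection_rates(decisions, groups):
    """Fraction of positive decisions (e.g. loan approved) per group."""
    counts = {}
    for decision, group in zip(decisions, groups):
        approved, total = counts.get(group, (0, 0))
        counts[group] = (approved + int(decision), total + 1)
    return {g: approved / total for g, (approved, total) in counts.items()}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    return min(rates.values()) / max(rates.values())


# Toy decisions: 1 = approved, 0 = denied (made-up data).
decisions = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
groups = ["X"] * 5 + ["Y"] * 5

rates = selection_rates(decisions, groups)
ratio = disparate_impact_ratio(rates)
print(rates)  # {'X': 0.8, 'Y': 0.2}
print(ratio)  # 0.25
if ratio < 0.8:  # four-fifths rule of thumb
    print("Possible adverse impact: review features and training data")
```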

AI systems often require access to vast amounts of personal data to function effectively. This raises profound privacy concerns, as the misuse or mishandling of such data can lead to surveillance, identity theft, and unauthorized access to sensitive information. Striking a balance between data collection for AI improvement and safeguarding individual privacy is a significant ethical challenge. The implementation of robust data anonymization techniques, data encryption, and the principle of data minimization are vital in ensuring that individuals' privacy rights are respected in the age of AI.
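
Data minimization and pseudonymization can be sketched in a few lines. The example assumes the model only needs an age band and a region (the `ALLOWED_FIELDS` set is a hypothetical choice), drops every other field, and replaces the user ID with a keyed HMAC-SHA256 token from Python's standard `hmac` and `hashlib` modules. This is pseudonymization rather than full anonymization: anyone holding the key can still link records, and the remaining fields may still identify someone when combined.

```python
import hashlib
import hmac

# Hypothetical assumption: these are the only fields the model needs.
ALLOWED_FIELDS = {"age_band", "region"}


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256).

    Without the key, the token can't be recomputed from a guessed ID,
    but whoever holds the key can still link records, so this is
    pseudonymization, not anonymization.
    """
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, secret_key: bytes) -> dict:
    """Keep only the allowed fields plus a pseudonymous token."""
    reduced = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    reduced["user_token"] = pseudonymize(record["user_id"], secret_key)
    return reduced


raw = {"user_id": "alice@example.com", "name": "Alice", "age_band": "30-39",
       "region": "north", "street_address": "1 Main St"}
key = b"replace-with-a-secret-from-a-key-store"  # placeholder, not a real key

clean = minimize_record(raw, key)
print(sorted(clean))  # ['age_band', 'region', 'user_token']
```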

The ethical underpinnings of AI demand transparency and accountability from developers and organizations. AI systems must provide clear explanations for their decisions, especially when they impact individuals' lives. The concept of the "black box" AI, where decisions are made without understandable reasons, raises concerns about the potential for unchecked power and biased outcomes. Implementing mechanisms such as interpretable AI and model explainability can help in building trust and ensuring accountability.

Ethical AI: The Foundation for a Brighter Digital Future

(Images made by author with Leonardo Ai)

The increasing use of AI systems in everyday life raises a host of ethical questions and challenges, such as bias, privacy, and transparency. This blog post examines the concept of ethical AI, synthesizing crucial insights from recent research and guidelines. By embracing an ethical framework that takes these guidelines into account, AI stakeholders can…

#AI Bias#AI for Good#AI governance#Digital Future#Ethical AI#Fair AI#Responsible AI#Sustainable AI#Trustworthy AI

#Adversarial testing#AI#Artificial Intelligence#Auditing#Bias detection#Bias mitigation#Black box algorithms#Collaboration#Contextual biases#Data bias#Data collection#Discriminatory outcomes#Diverse and representative data#Diversity in development teams#Education#Equity#Ethical guidelines#Explainability#Fair AI systems#Fairness-aware learning#Feedback loops#Gender bias#Inclusivity#Justice#Legal implications#Machine Learning#Monitoring#Privacy and security#Public awareness#Racial bias

Reasons Why AI is Not Perfect for Website Ranking

Artificial Intelligence (AI) has undoubtedly revolutionized various aspects of our lives, including website ranking and search engine optimization (SEO). With its ability to analyze vast amounts of data and make informed decisions, AI has become an integral part of search engine algorithms. However, despite its numerous advantages, AI is not flawless and has its limitations when it comes to…

#Accuracy of AI algorithms#AI algorithms#AI and search engine optimization (SEO)#AI bias#AI drawbacks in website ranking#AI in digital marketing#AI in web development#AI limitations#AI vs. human judgement#Contextual understanding#Fairness in website ranking#Holistic approach in website ranking#Human expertise#Manipulation vulnerability#Website ranking

The Ethical Implications of AI in Design

As artificial intelligence (AI) continues to play a larger role in the design process, there are important ethical considerations that designers and technologists must grapple with. From issues of bias and fairness to questions of ownership and creativity, the use of AI in design raises important questions about the future of the field.

Bias and fairness in AI design

One of the most pressing…

#AI design#artificial intelligence#authorship in AI design#bias in algorithms#Design#Designer#ethical considerations#fairness in design#future of design#Graphic design#human creativity#LaiqQureshi#laiqverse#limitations of AI#ownership of AI-generated designs#real-world examples of ethical issues

Addressing Bias in Artificial Intelligence: Strategies for Ensuring Fair and Ethical AI

#AI #Bias #ArtificialIntelligence #MachineLearning #DataRepresentation #Fairness #Accuracy #DiverseDatasets #AlgorithmTransparency #Interpretability

Artificial intelligence (AI) has the potential to revolutionize many industries and improve people’s lives in countless ways. However, one of the biggest concerns with AI is the issue of bias.

Bias in AI often occurs when the data used to train a model is not representative of the population that the model will ultimately be used on. This can lead to the model making inaccurate or unfair predictions.…

#accuracy#AI#algorithm transparency#artificial intelligence#bias#data analysis#data representation#diverse datasets#Ethics#fairness#future of AI#innovation#interpretability#machine intelligence#machine learning#neural networks

There is no obvious path between today’s machine learning models — which mimic human creativity by predicting the next word, sound, or pixel — and an AI that can form a hostile intent or circumvent our every effort to contain it.

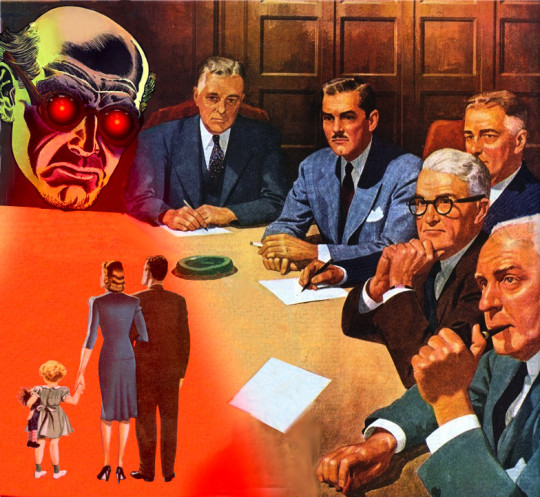

Regardless, it is fair to ask why Dr. Frankenstein is holding the pitchfork. Why is it that the people building, deploying, and profiting from AI are the ones leading the call to focus public attention on its existential risk? Well, I can see at least two possible reasons.

The first is that it requires far less sacrifice on their part to call attention to a hypothetical threat than to address the more immediate harms and costs that AI is already imposing on society. Today’s AI is plagued by error and replete with bias. It makes up facts and reproduces discriminatory heuristics. It empowers both government and consumer surveillance. AI is displacing labor and exacerbating income and wealth inequality. It poses an enormous and escalating threat to the environment, consuming a vast and growing amount of energy and fueling a race to extract materials from a beleaguered Earth.

These societal costs aren’t easily absorbed. Mitigating them requires a significant commitment of personnel and other resources, which doesn’t make shareholders happy — and which is why the market recently rewarded tech companies for laying off many members of their privacy, security, or ethics teams.

How much easier would life be for AI companies if the public instead fixated on speculative theories about far-off threats that may or may not actually bear out? What would action to “mitigate the risk of extinction” even look like? I submit that it would consist of vague whitepapers, a series of workshops led by speculative philosophers, and donations to computer science labs that are willing to speak the language of longtermism. This would be a pittance compared with the effort required to reverse what AI is already doing to displace labor, exacerbate inequality, and accelerate environmental degradation.

A second reason the AI community might be motivated to cast the technology as posing an existential risk could be, ironically, to reinforce the idea that AI has enormous potential. Convincing the public that AI is so powerful that it could end human existence would be a pretty effective way for AI scientists to make the case that what they are working on is important. Doomsaying is great marketing. The long-term fear may be that AI will threaten humanity, but the near-term fear, for anyone who doesn’t incorporate AI into their business, agency, or classroom, is that they will be left behind. The same goes for national policy: If AI poses existential risks, U.S. policymakers might say, we’d better not let China beat us to it for lack of investment or overregulation. (It is telling that Sam Altman — the CEO of OpenAI and a signatory of the Center for AI Safety statement — warned the E.U. that his company will pull out of Europe if regulations become too burdensome.)

Hypothetical AI election disinformation risks vs real AI harms

I'm on tour with my new novel The Bezzle! Catch me TONIGHT (Feb 27) in Portland at Powell's. Then, onto Phoenix (Changing Hands, Feb 29), Tucson (Mar 9-12), and more!

You can barely turn around these days without encountering a think-piece warning of the impending risk of AI disinformation in the coming elections. But a recent episode of This Machine Kills podcast reminds us that these are hypothetical risks, and there is no shortage of real AI harms:

https://soundcloud.com/thismachinekillspod/311-selling-pickaxes-for-the-ai-gold-rush

The algorithmic decision-making systems that increasingly run the back-ends to our lives are really, truly very bad at doing their jobs, and worse, these systems constitute a form of "empiricism-washing": if the computer says it's true, it must be true. There's no such thing as racist math, you SJW snowflake!

https://slate.com/news-and-politics/2019/02/aoc-algorithms-racist-bias.html

Nearly 1,000 British postmasters were wrongly convicted of fraud by Horizon, the faulty AI fraud-hunting system that Fujitsu provided to the Royal Mail. They had their lives ruined by this faulty AI, many went to prison, and at least four of the AI's victims killed themselves:

https://en.wikipedia.org/wiki/British_Post_Office_scandal

Tenants across America have seen their rents skyrocket thanks to RealPage's landlord price-fixing algorithm, which deployed the time-honored defense: "It's not a crime if we commit it with an app":

https://www.propublica.org/article/doj-backs-tenants-price-fixing-case-big-landlords-real-estate-tech

Housing, you'll recall, is pretty foundational in the human hierarchy of needs. Losing your home – or being forced to choose between paying rent or buying groceries or gas for your car or clothes for your kid – is a non-hypothetical, widespread, urgent problem that can be traced straight to AI.

Then there's predictive policing: cities across America and the world have bought systems that purport to tell the cops where to look for crime. Of course, these systems are trained on policing data from forces that are seeking to correct racial bias in their practices by using an algorithm to create "fairness." You feed this algorithm a data-set of where the police had detected crime in previous years, and it predicts where you'll find crime in the years to come.

But you only find crime where you look for it. If the cops only ever stop-and-frisk Black and brown kids, or pull over Black and brown drivers, then every knife, baggie or gun they find in someone's trunk or pockets will be found in a Black or brown person's trunk or pocket. A predictive policing algorithm will naively ingest this data and confidently assert that future crimes can be foiled by looking for more Black and brown people and searching them and pulling them over.
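
The feedback loop described above can be shown with a deliberately simplified toy model, an illustration rather than a model of any real product. Two neighborhoods have the same true crime rate, but one starts with more recorded crime because it was patrolled more. Each year, patrols are split in proportion to recorded crime, and crime is only recorded where patrols go. The function name and all numbers are hypothetical.

```python
def simulate_patrols(initial_records, true_rate, total_patrols, years):
    """Toy model of a predictive-policing feedback loop.

    Each year, patrols are split in proportion to crime *recorded* so far,
    and crime is only recorded where patrols are sent. The true crime rate
    is the same everywhere, so any skew comes from where police looked.
    """
    records = list(initial_records)
    history = []
    for _ in range(years):
        total_recorded = sum(records)
        patrols = [total_patrols * r / total_recorded for r in records]
        # Expected detections: patrols present times crime actually occurring.
        found = [p * true_rate for p in patrols]
        records = [r + f for r, f in zip(records, found)]
        history.append([r / sum(records) for r in records])
    return history


# Identical true crime rates; the first neighborhood starts with more
# recorded crime because it was historically over-patrolled.
history = simulate_patrols(initial_records=[30, 10], true_rate=0.5,
                           total_patrols=100, years=10)
print([round(share, 2) for share in history[-1]])  # [0.75, 0.25]
```

The recorded split stays at 75/25 every year, even though the true split is 50/50: the data never corrects itself, because the algorithm only ever sees crime where it already sent police. An allocation rule that concentrated patrols on the single top "hotspot" would make the skew grow rather than merely persist.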

Obviously, this is bad for Black and brown people in low-income neighborhoods, whose baseline risk of an encounter with a cop turning violent or even lethal is already high. But it's also bad for affluent people in affluent neighborhoods – because they are underpoliced as a result of these algorithmic biases. For example, domestic abuse that occurs in fully detached single-family homes is systematically underrepresented in crime data, because the majority of domestic abuse calls originate with neighbors who can hear the abuse take place through a shared wall.

But the majority of algorithmic harms are inflicted on poor, racialized and/or working class people. Even if you escape a predictive policing algorithm, a facial recognition algorithm may wrongly accuse you of a crime, and even if you were far away from the site of the crime, the cops will still arrest you, because computers don't lie:

https://www.cbsnews.com/sacramento/news/texas-macys-sunglass-hut-facial-recognition-software-wrongful-arrest-sacramento-alibi/

Trying to get a low-waged service job? Be prepared for endless, nonsensical AI "personality tests" that make Scientology look like NASA:

https://futurism.com/mandatory-ai-hiring-tests

Service workers' schedules are at the mercy of shift-allocation algorithms that assign them hours that ensure that they fall just short of qualifying for health and other benefits. These algorithms push workers into "clopening" – where you close the store after midnight and then open it again the next morning before 5AM. And if you try to unionize, another algorithm – that spies on you and your fellow workers' social media activity – targets you for reprisals and your store for closure.

If you're driving an Amazon delivery van, an algorithm watches your eyeballs and tells your boss that you're a bad driver if it doesn't like what it sees. If you're working in an Amazon warehouse, an algorithm decides if you've taken too many pee-breaks and automatically dings you:

https://pluralistic.net/2022/04/17/revenge-of-the-chickenized-reverse-centaurs/

If this disgusts you and you're hoping to use your ballot to elect lawmakers who will take up your cause, an algorithm stands in your way again. "AI" tools for purging voter rolls are especially harmful to racialized people – for example, they assume that two "Juan Gomez"es with a shared birthday in two different states must be the same person and remove one or both from the voter rolls:

https://www.cbsnews.com/news/eligible-voters-swept-up-conservative-activists-purge-voter-rolls/
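
A crude matching rule like the one described above takes only a few lines to write, which is part of the problem. This hypothetical sketch treats any two records with the same name and birthdate as one person; the function name and the voter records are invented for illustration.

```python
from collections import defaultdict


def naive_duplicate_matches(voters):
    """Flag records as 'the same person' if name and birthdate match.

    This is the kind of crude rule described above: common names collide,
    so distinct people registered in different states get linked.
    """
    buckets = defaultdict(list)
    for v in voters:
        buckets[(v["name"].lower(), v["birthdate"])].append(v)
    return [group for group in buckets.values() if len(group) > 1]


# Invented records: two different people who share a name and a birthday.
voters = [
    {"name": "Juan Gomez", "birthdate": "1980-03-14", "state": "TX", "id": "TX-001"},
    {"name": "Juan Gomez", "birthdate": "1980-03-14", "state": "CA", "id": "CA-417"},
    {"name": "Mary Smith", "birthdate": "1975-07-02", "state": "OH", "id": "OH-220"},
]

for group in naive_duplicate_matches(voters):
    print([v["id"] for v in group])  # ['TX-001', 'CA-417']
```

Both Juan Gomez records are flagged as duplicates even though nothing beyond a common name and a shared birthday links them, and the more common a name is, the more of these false matches such a rule produces.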

Hoping to get a solid education, the sort that will keep you out of AI-supervised, precarious, low-waged work? Sorry, kiddo: the ed-tech system is riddled with algorithms. There's the grifty "remote invigilation" industry that watches you take tests via webcam and accuses you of cheating if your facial expressions fail its high-tech phrenology standards:

https://pluralistic.net/2022/02/16/unauthorized-paper/#cheating-anticheat

All of these are non-hypothetical, real risks from AI. The AI industry has proven itself incredibly adept at deflecting interest from real harms to hypothetical ones, like the "risk" that the spicy autocomplete will become conscious and take over the world in order to convert us all to paperclips:

https://pluralistic.net/2023/11/27/10-types-of-people/#taking-up-a-lot-of-space

Whenever you hear AI bosses talking about how seriously they're taking a hypothetical risk, that's the moment when you should check in on whether they're doing anything about all these longstanding, real risks. And even as AI bosses promise to fight hypothetical election disinformation, they continue to downplay or ignore the non-hypothetical, here-and-now harms of AI.

There's something unseemly – and even perverse – about worrying so much about AI and election disinformation. It plays into the narrative that kicked off in earnest in 2016, that the reason the electorate votes for manifestly unqualified candidates who run on a platform of bald-faced lies is that they are gullible and easily led astray.

But there's another explanation: the reason people accept conspiratorial accounts of how our institutions are run is because the institutions that are supposed to be defending us are corrupt and captured by actual conspiracies:

https://memex.craphound.com/2019/09/21/republic-of-lies-the-rise-of-conspiratorial-thinking-and-the-actual-conspiracies-that-fuel-it/

The party line on conspiratorial accounts is that these institutions are good, actually. Think of the rebuttal offered to anti-vaxxers who claimed that pharma giants were run by murderous sociopath billionaires who were in league with their regulators to kill us for a buck: "no, I think you'll find pharma companies are great and superbly regulated":

https://pluralistic.net/2023/09/05/not-that-naomi/#if-the-naomi-be-klein-youre-doing-just-fine

Institutions are profoundly important to a high-tech society. No one is capable of assessing all the life-or-death choices we make every day, from whether to trust the firmware in your car's anti-lock brakes, the alloys used in the structural members of your home, or the food-safety standards for the meal you're about to eat. We must rely on well-regulated experts to make these calls for us, and when the institutions fail us, we are thrown into a state of epistemological chaos. We must make decisions about whether to trust these technological systems, but we can't make informed choices because the one thing we're sure of is that our institutions aren't trustworthy.

Ironically, the long list of AI harms that we live with every day is the most important contributor to disinformation campaigns. It's these harms that provide the evidence for belief in conspiratorial accounts of the world, because each one is proof that the system can't be trusted. The election disinformation discourse focuses on the lies told – and not why those lies are credible.

That's because the subtext of election disinformation concerns is usually that the electorate is credulous, fools waiting to be suckered in. By refusing to contemplate the institutional failures that sit upstream of conspiracism, we can smugly locate the blame with the peddlers of lies and assume the mantle of paternalistic protectors of the easily gulled electorate.

But the group of people who are demonstrably being tricked by AI is the people who buy the horrifically flawed AI-based algorithmic systems and put them into use despite their manifest failures.

As I've written many times, "we're nowhere near a place where bots can steal your job, but we're certainly at the point where your boss can be suckered into firing you and replacing you with a bot that fails at doing your job":

https://pluralistic.net/2024/01/15/passive-income-brainworms/#four-hour-work-week

The most visible victims of AI disinformation are the people who are putting AI in charge of the life-chances of millions of the rest of us. Tackle that AI disinformation and its harms, and we'll make conspiratorial claims about our institutions being corrupt far less credible.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/02/27/ai-conspiracies/#epistemological-collapse

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#ai#disinformation#algorithmic bias#elections#election disinformation#conspiratorialism#paternalism#this machine kills#Horizon#the rents too damned high#weaponized shelter#predictive policing#fr#facial recognition#labor#union busting#union avoidance#standardized testing#hiring#employment#remote invigilation

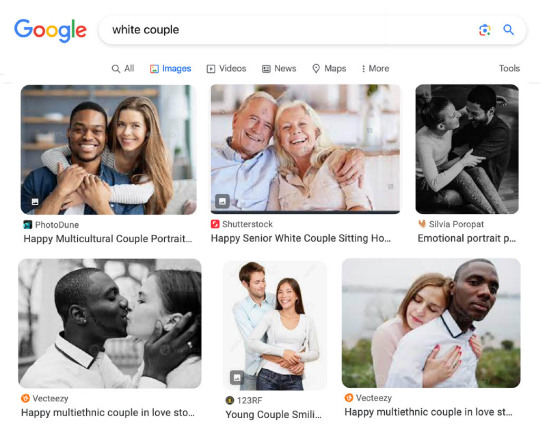

Google’s push to lecture us on diversity goes beyond AI

by Douglas Murray

The future is here. And it turns out to be very, very racist.

As the New York Post reported yesterday, Google’s Gemini AI image generator aims to be a lot of things. But historical accuracy is not among them.

If you ask the program to give you an image of the Founding Fathers of this country, the AI will return you images of black and Native American men signing what appears to be a version of the American Constitution.

At least that’s more accurate than the images of popes thrown up. A request for an image of one of the holy fathers gives up images of — among others — a Southeast Asian woman. Who knew?

Some people are surprised by this. I’m not.

Several years ago, I went to Silicon Valley to try to figure out what the hell was going on with Google Images, among other enterprises.

Because Google images were already throwing up a very specific type of bias.

If you typed in “gay couples” and asked for an image search, you got lots of happy gay couples. Ask for “straight couples” and you get images of, er, gay couples.

It was the same if you wanted to see happy couples of any orientation.

Ask for images of “black couples” and you got lots of happy black couples. Ask for “white couples” and you got black couples, or interracial couples. Many of them gay.

I asked people in Silicon Valley what the hell was going on and was told this was what they call “machine learning fairness.”

The idea is that we human beings are full of implicit bias and that as a result, we need the machines to throw up unbiased images.

Except that the machines were clearly very biased indeed. Much more so than your average human.

What became clear to me was that this was not the machines working on their own. The machines had been skewed by human interference.

If you ask for images of gay couples, you get lots of happy gay couples. Ask for straight couples and the first thing that comes up is a piece asking whether straight couples should really identify as such. The second picture is captioned, “Queer lessons for straight couples.”

Shortly after, you get an elderly gay couple with the tag, “Advice for straight couples from a long-term gay couple.” Then a photo with the caption, “Gay couples have less strained marriages than straight couples.”

Again, none of this comes up if you search for “gay couples.” Then you get what you ask for. You are not bombarded with photos and articles telling you how superior straight couples are to gay couples.

It’s almost as though Google Images is trying to force-feed us something.

It is the same with race.

Ask Google Images to show you photos of black couples and you’ll get exactly what you ask for. Happy black couples. All heterosexual, as it happens.

But ask the same engine to show you images of white couples and two things happen.

You get a mass of images of black couples and mixed-race couples and then — who’d have guessed — mixed-race gay couples.

Why does this matter?

Firstly, because it is clear that the machines are not lacking in bias. They are positively filled with it.

It seems the tech wants to teach us all a lesson. It assumes that we are all homophobic white bigots who need re-educating. What an insult.

Secondly, it gives us a totally false image — literally — of our present. Now, thanks to the addition of Google Gemini, we can also be fed a totally false image of our past.

Yet the interesting thing about the past is that it isn’t the present. When we learn about the past, we learn that things were different from now. We see how things actually were and that is very often how we learn from it.

How were things then? How are they now? And how do they compare?

Faking the past or altering it completely robs us of the opportunity not just to understand the past but to understand the present.

Google has said it is going to call a halt on Gemini. Mainly because there has been backlash over the hilarious “diversity” of Nazi soldiers that it has thrown up.

If you search for Nazi officers, it turns out that there were black Nazis in the Third Reich. Who knew?

While Google Gemini gets over that little hurdle, perhaps it could realize that it’s not just the Gemini program that’s rotten but the whole darn thing.

Google is trying to change everything about the American and Western past.

I suggest we don’t let it.

There was an old joke told in the Soviet Union that now seems worryingly relevant to America in the age of AI: “The only thing that’s certain is the future. The past keeps on changing.”

@rowzeoli replied to your post “@rowzeoli replied to your post “Do you think part...”:

There's a lot to tackle on this so I'll do my best to cover it all! So I totally get where you're coming from and to be fair yes there are some things in old articles that I don't agree with any more in deeming people having done things "first" which is part of the issue of not having a collective historical memory around actual play as it moves so quickly. Most of the issue isn't that readership is down it's that AI and venture capitalism is destroying journalism

Hey, sorry for taking a bit to respond; it's been a hectic week and I wanted to give it some thought and time.

I'll start off with the good: I really do, again, appreciate you engaging here, and on the strength of that alone I am going to at least give Rascal's free articles a good solid chance for a while; I have been, admittedly, tarring it with the brush of a lot of frustrations (see below) and I know it's relatively new and still finding its place and should get a bit more of my patience. I also should note that while your article did hit on a lot of the patterns that have turned me - and no small amount of others - off of a lot of AP/TTRPG journalism it is by no means the worst example. The things you credited Burrow's End for are, admittedly, more obscure single-episode events within a huge body of work. Or in other words: there are bylines in the space that make me go "oh this is going to be bad" and yours is not one of them.

With that said: I'm sorry, but Polygon's bias is not a matter of time crunch or lack of funding. There is no way that a time crunch or lack of funding would consistently, over years (this was already word on the street by the time EXU Calamity came out almost 2 years ago, at the latest) result in a message of "D20 can do no wrong, and Critical Role rarely does right." If it were throwing out harsh criticism or glowing praise for a wide variety of shows, sure, that seems like it could come from not having a lot of time...but this goes beyond coincidence. It's a reputation that long precedes your entry into the field. As some others in the replies have noted, I might have written the most about it on Tumblr, but it's at this point not an uncommon observation. This also isn't an issue for other publications in a similar "nerd stuff" space - there are plenty of articles on, say, Dicebreaker or Comicbook.com that I don't care for, either because I disagree with the opinion or I think the analysis isn't really worthwhile, but those tend to at least have a mix of positive and critical articles about most shows. When I said you could treat Polygon articles like Mad Libs, I meant it. And so I think it's great that you now recognize that chasing "groundbreaking", for example, is not solid ground for an article, but this also shows me that even relatively new journalists are, very early on, starting with this exact formula. In some ways, that's more damning.

I do also want to add that I'm, again, sympathetic to the lack of resources and to coming into a field with passionate and nitpicky fans who have been here for years. Not knowing about a single Critical Role one-shot from 2018 is something that I'd have been much more lenient about if it weren't hitting those repetitive notes of "D20 is great/this thing is groundbreaking/look at the production values." But the other article I posted, also from Polygon but not written by you, is, to be honest, pretty inexcusable. I get there's a lot of lost institutional memory...but either being unaware of, or ignoring, the fact that there are a huge number of long-running actual play podcasts that play longform campaigns? In terms of whether your audience trusts you, that's pretty much on par with the New York Times international news desk not being able to locate Russia on a map (though obviously with far less serious real-world ramifications). (The fact that this was written by a prominent actual play scholar, meanwhile, is like, I don't know, Neil deGrasse Tyson not knowing how gravity works, but that's a separate topic.)

And again, I get these are your colleagues. I have the luxury of being able to run my mouth without putting my livelihood at stake, and that's not true for people within the industry. I do not expect you to say anything ill about them, nor would I judge any specific individual for getting published in Polygon, since I get that people are pitching to a number of sites so that they can get paid! But when I say "Polygon's AP/TTRPG coverage is at needs-a-change-of-leadership levels of bad" I am not alone in this, and it's something that has probably been true for easily 3+ years if not longer. Because it's one of the more prominent publications in the space (ironically, due to Justin McElroy of TAZ being a founder, and the fact that its videogame division is quite good and has had some viral videos, it had enviable name recognition among AP fans that it's only squandered since), it really is at a point where hitting that same formula in any AP journalism - claiming everything is groundbreaking, putting an emphasis on high production values, D20 good and CR bad - makes fans go "oh, more of this bullshit." I don't want to say you can't talk about these things - I definitely do not want to say that you cannot criticize Critical Role - but that specific well has been poisoned for a long time. If someone hits these points it feels, whether or not it is true, that they're trying to be provocative by going against popular fan opinion, but are simultaneously just saying the same thing we've seen a million times before.

I believe wholeheartedly that from your perspective the competition is AI - and I don't want AI articles either. On the other hand, in terms of what I think fans who are in my position are turning to, it's not AI articles (I'm certainly not). If I want analysis, I'm probably, at this point, going to social media; I am not the only person who writes longform meta or analysis for fun, and I'll seek out others who do. I'm not personally a video essay person, but plenty are, and that's out there too. I'm not going there for reporting on news (I think the DnD Shorts OGL debacle made it clear that actual journalists are very necessary) but yeah, if I want criticism or analysis? I'm going there instead, especially since there often is that missing institutional memory. If I do want journalism, at this point, some of the bigger shows are getting writeups in less niche publications, particularly Critical Role and D20, as is news of more major tabletop games. It's infrequent and it doesn't highlight indie works, but it tends to be, if nothing else, lacking in major errors or obvious bias. If I want to hear from cast members, at least four of the shows I watch or listen to have regular talkback shows, Dropout regularly talks to AP/TTRPG figures on Adventuring Academy, and a lot of those shows take viewer questions. Which, again, is probably not heartening to hear, since it means the competition is even tighter, but I guess my point is I hope it's possible, even with very limited resources, to move away from the above "novelty and production values above all" pattern, because even that would do a lot of needed work to rebuild reader trust - and I'm going to be checking out Rascal in the hopes that it can.



Niaaaa //wailing, heaving, rolling around on the floor

I cannot stress enough how much I adore your works and love rereading all of them from time to time

Am here to ask if you have any more touchstarved hcs,, or thoughts,, im dying over here

Literally starved for content

gn!reader | REG!!! //waving both hands, jumping up and down giggling. Thank U. this is an honour and incredible compliment. scary bc my old works are...old... but Thank u. U mean the world 2 Me. i didn't thoroughly check what hcs i've already said so sorry there's repeats orz

i'm not saying the LIs would all go to the barbie movie but if someone does make art of that please let me know and tag me especially if it has the i am kenough shirt

they ruined my life saying kuras doesn't eat how is he going to join my girl dinners now. /j but i'll continue to believe he can appreciate how good a meal looks! & he can still sit with you and try to get his hands on your favourite meals for you to enjoy :-)

that thing where they do push-ups and kiss you when they come down with...leander was the first one i thought of tbh. but if you aren't able to lie underneath him he'd just ask for the same amount once he's done!

leander doing the thing he did in the prologue where he took his glove off with his teeth every so often just to see your reaction. like if you react in an amusing flustered staring at him kind of way. i couldn't relate personally (lying) (liar) (huge lie)

i'm sorry for my leander bias but if one of his favourite things is MASQUERADES and we don't see him at a MASQUERADE well it's so joever like him in a suit and mask and showing off how he knows how to fit in because of his past and also he can waltz now or something I'm dizzy i can't breathe

ais using 0.5 camera on people while they're caught off guard. him asking you to take a video of the fight For him because he's going to be part of it. vere selfie folder. mhin 5 followers no icon no posts gc lurker.

mhin would stick to enough of a routine that they'd have a specific spot to sit at different places,,, like a cafe or the library or bus... corner. it's one of the corners. and when someone's taken the spot they're thrown off then have to walk around for a new one (not happy about this) but take it back once they leave. you spend enough time together and they start keeping the spot next to them open for you

^ also they'd always order the exact same thing at restaurants. wouldn't like going to a new place because now they have to find a new default order. just like me fr

is no one going to talk about the idea that vere doesn't like snow because he's chained outside and it's cold . to be fair it could Totally be for a less sad reason like how it gets his Fur Wet (valid) but i've been thinking about that possible angst

also his gloves are just. like. ? odd. inverse drawing gloves. claws... but why only the 3 fingers.... btw his outfit means a constant thigh holding opportunity

kuras and mhin having long conversations about alchemy and sharing their findings with each other ;; mhin at some point getting just a Little excited about something and kuras choosing not to comment on it but being happy to see them let their walls down a little ;; o(-(

ais coming into your room and wordlessly lying next to you in bed and when asked if he needs something he says no? with a smile. he was just feeling lonely and wanted to find you

saying "you look like you can't swim" or "you are an odd individual" to any and all of them . something about it is amusing to me

if you celebrate christmas or like the idea of kissing underneath some mistletoe,, i think it's a good thought that you hold one over your head and wait for a kiss Or that Some of the LIs would Definitely do that themselves.

who do you guys think has the saddest birthday celebration (/no celebration at all.) who's relating to girls who spend their birthday alone and crying and be honest with me

rambling but i just want to say kuras's monster form looks sick as FUCK and i'm so excited for it. it looks like whatever left the scar on his hand seems to be there.. in his monster form...? i thought it was a claw but the positioning is under/through the hand so like??. do i have to bring up the significance of that if true

also is his outfit (minus his jacket)...like a jumpsuit... or can i just not tell because of his three (?) belts. that's not how you wear belts btw /lh. and is the sheer part Part of the top or is he wearing something sheer underneath the white. his sleeves are also sheer but the neckline means his shoulders are out . take off ur jacket

also mhin !! i want to know how big they get and if the transformation is sickening to watch and if they're still aware of everything around them and !!! THERE IS A SPINE(?) COMING OUT FROM THE BOTTOM OF THE SILHOUETTE THAT I NEED TO SEE NOW! & i'm assuming the senobium is Shit so even if we do get in there and get 'help' there would be another shitty price to pay. possible bad ending...??

scenes with all their monster forms where you're asked if you're scared and you say no / yes but you care about them and they falter because they didn't expect that

true good ending is everyone meeting at the wet wick and making a toast and laughing and saying this truly was our touchstarved before the credits roll

#touchstarved#touchstarved ais#touchstarved leander#touchstarved kuras#touchstarved mhin#touchstarved vere#redspring pls continue to drop lore. gimme. GImemeeme...#not a single one of these birthdays sound good to me btw. closest to happy is how leander would say all drinks on him for the night#but it doesnt change the fact he has no close friends 💀💀#Im serious about the masquerade scene bc i need to see him in a formal setting. how hes different but still charming/the same in a way etc


Shedding Light On The Dark Side of AI

(Images generated by author with BlueWillow)

AI is undeniably changing the way we live and work, offering solutions to many real-world problems. However, its potential benefits come with a dark side. From biases to existential risk, AI poses several challenges that need to be tackled. In this post, we will discuss some of these issues and explore strategies to mitigate them.

Table of…


#AI#AI governance#ai regulation#artificial intelligence#Bias#Data protection#Ethics#existential risks#fairness#job displacement#Manipulation#privacy#responsible#security risks#transparency


I was wondering if you have resources on how to explain (in good faith) to someone why AI created images are bad. I'm struggling how to explain to someone who uses AI to create fandom images, b/c I feel I can't justify my own use of photoshop to create manips also for fandom purposes, & they've implied to me they're hoping to use AI to take a photoshopped manip I made to create their own "version". I know one of the issues is stealing original artwork to make imitations fast and easy.

Hey anon. There are a lot of reasons that AI as it is used right now can be a huge problem - but the easiest ones to lean on are:

1) that it finds, reinforces, and in some cases even enforces biases and stereotypes that can cause actual harm to real people. (For example: a black character in fandom will consistently be depicted by AI as lighter and lighter skinned until they become white, or a character described as Jewish will...well, in most generators, gain some 'villain' characteristics, and so on. Consider someone putting a canonically transgender character through an AI generator, or a woman who is not perhaps well loved by fandom....)

2) it creates misinformation and passes it off as real (it can make blatant lies seem credible, because people believe what they see; in fandom terms, this can mean people trying to 'prove' that the creator stole their content from elsewhere, or someone creating and selling their own 'version' of content that is functionally indistinguishable from canon).

3) it's theft. The algorithm didn't come up with anything that it "makes," it just steals some real person's work and then mangles it a bit before regurgitating it, usually with no credit to the original, actual creator. (In fandom terms: you have just done the equivalent of cropping out someone else's watermark and calling yourself the original artist. After all, the AI tool you used got that content from somewhere; it did not draw you a picture, it copy pasted a picture.)

4) In some places, selling or distributing AI art is or may soon be illegal - and if it's not illegal, there are plenty of artists launching class action lawsuits against those who write the algorithm, and those who use it. Turns out artists don't like having their art stolen, mangled, and passed off as someone else's. Go figure.

Here are some articles that might help lay out more clear examples and arguments, from people more knowledgeable than me (I tried to embed the links with anti-paywall and anti-tracker add-ons, but if tumblr ate my formatting, just type "12ft.io/" in front of the url, or type the article name into your search engine and run it through your own ad-blocking, anti-tracking setup):

These fake images reveal how AI amplifies our worst stereotypes [Source: Washington Post, Nov 2023]

Humans Are Biased; AI is even worse (Here's Why That Matters) [Source: Bloomberg, Dec 2023]

Why Artists Hate AI Art [Source: TechRepublic, Nov 2023]

Why Illustrators Are Furious About AI 'Art' [Source: The Guardian, Jan 2023]

Artists Are Losing The War Against AI [Source: The Atlantic, Oct 2023]

This tool lets you see for yourself how biased an AI can be [Source: MIT Technology Review, Mar 2023]

Midjourney's Class-Action lawsuit and what it could mean for future AI Image Generators [Source: Fortune Magazine, Jan 2024]

What the latest US Court rulings mean for AI Generated Copyright Status [Source: The Art Newspaper, Sep 2023]

AI-Generated Content and Copyright Law [Source: Built In, Aug 2023 - take note that this is already outdated; it was just the most comprehensive recent article I could find quickly]

AI is making mincemeat out of art (not to mention intellectual property) [Source: The LA Times, Jan 2024]

Midjourney Allegedly Scraped Magic: The Gathering art for algorithm [Source: Kotaku, Jan 2024]

Leaked: the names of more than 16,000 non-consenting artists allegedly used to train Midjourney’s AI [Source: The Art Newspaper, Jan 2024]

#anti ai art#art theft#anti algorithms#sexism#classism#racism#stereotypes#lawsuits#midjourney#ethics#real human consequences#irresponsible use of algorithms#spicy autocomplete#sorry for the delayed answer
