#deep fakes

Text

Brooke Monk

89 notes

Text

Imagine being the loser ass tool, Yisha Raziel, who made a deepfake of Bella Hadid saying she supports Israel. 🤮

If you’re reading this, I am telling you right now, you better second and third guess what you see and hear on social media and the news. Stick to reliable news sources. Vet them. Require multiple, trusted sources. Validate links to sources. In the last several months, I’ve seen the deepfake of President Nixon talking about a failed NASA mission that never happened. I’ve seen a deepfake of Joe Biden hilariously using profanity to trash talk Trump. That Biden deepfake, however, was made to be obviously fake: those weren’t his words, or anything he would actually say.

But imagine a viral deepfake video of Biden announcing a nuclear strike on Russia within the next 20 minutes? Or a deepfake of Biden reintroducing the draft to support Israel? Or a deepfake of Biden withdrawing from the 2024 election and endorsing Trump…

These kinds of things are going to happen a lot more, especially with the proliferation of troll farms, and especially since YouTube, Twitter (I refuse to call it X), and Facebook have all eviscerated the verification and fact-checking teams that used to at least attempt to limit disinformation and misinformation.

Pay attention, peeps.

Don’t get bamboozled.

663 notes

Text

NOTE: NBC needs to greenlight, ASAP, a two-hour LAW & ORDER: SVU with special guest star Taylor Swift. Everyone would watch it. It would be topical and raise public awareness of this issue. Plus, the ratings would be HUGE!!!

#taylor swift#swifties#cyberpunk#deep fakes#ai#ai artwork#law and order svu#law & order: svu#svu#nbc#NFL#Super Bowl#olivia benson#giphy#television#law and order special victims unit#artificial intelligence#kansas city chiefs#49ers#taylor's version#bad blood#twitter#x#instagram#facebook#tumblr#social media#blockbuster#the eras tour#cats

11 notes

Text

Misinformation won't change until people with massive followings and channels gain integrity and post for beneficial reasons instead of engagement. And they never will.

Unless there is regulation around actively spreading misinformation, there's no incentive for influencers to do any research. They can spew vile, horrible things and then maybe a few years later they'll pay a hefty fine like Alex Jones. But that's still several years of spouting misinformation for engagement that has influenced millions of people.

Regulation doesn't act fast enough and influencers have no incentive to do a shred of research or have any accountability for what they post.

With the rise of AI and rapid advances in deep fakes it's only going to get harder to tell what's real and what's fake, even with a strong sense of media literacy and heavy scepticism.

Once something is on the internet and catches on, it's out there. People have proven they can't be bothered to check whether something is real, especially if it aligns with how they value themselves and they're caught up in the virality of it.

That only leaves the social media platforms to regulate, and they won't or can't scale it adequately. They also have weird rules that put specific celebrities and public figures above their regulation, which makes zero sense.

If there's ever a time to reduce internet usage it's probably now.

I'm genuinely terrified for this deregulated and chaotic time of technology and social media we are entering.

92 notes

Text

youtube

Hmmmmm 🤔🤔🤔😳🫣😬😓😱

Actors on the SAG-AFTRA picket line have good reasons to be concerned.

21 notes

Text

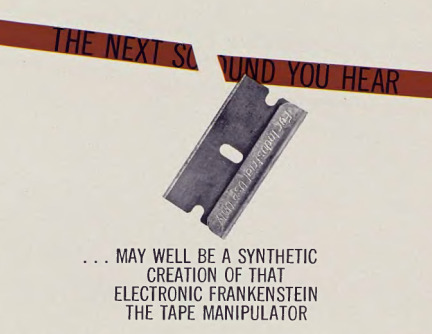

Deepfakes, 1962

From the Sept 1962 Playboy article on the 'new' phenomenon of editing news and interviews with razor blades and tape, and what that does to media trust.

5 notes

Text

By Olivia Rosane

Common Dreams

Dec. 26, 2023

"If people don't ultimately trust information related to an election, democracy just stops working," said a senior fellow at the Alliance for Securing Democracy.

As 2024 approaches and with it the next U.S. presidential election, experts and advocates are warning about the impact that the spread of artificial intelligence technology will have on the amount and sophistication of misinformation directed at voters.

While falsehoods and conspiracy theories have circulated ahead of previous elections, 2024 marks the first time that it will be easy for anyone to access AI technology that could create a believable deepfake video, photo, or audio clip in seconds, The Associated Press reported Tuesday.

"I expect a tsunami of misinformation," Oren Etzioni, an AI expert and University of Washington professor emeritus, told the AP. "I can't prove that. I hope to be proven wrong. But the ingredients are there, and I am completely terrified."

Subject matter experts told the AP that three factors made the 2024 election an especially perilous time for the rise of misinformation. The first is the availability of the technology itself. Deepfakes have already been used in elections. The Republican primary campaign of Florida Gov. Ron DeSantis circulated images of former president Donald Trump hugging former White House Coronavirus Task Force chief Anthony Fauci as part of an ad in June, for example.

"You could see a political candidate like President [Joe] Biden being rushed to a hospital," Etzioni told the AP. "You could see a candidate saying things that he or she never actually said."

The second factor is that social media companies have reduced the number of policies designed to control the spread of false posts and the number of employees devoted to monitoring them. When billionaire Elon Musk acquired Twitter in October of 2022, he fired nearly half of the platform's workforce, including employees who worked to control misinformation.

Yet while Musk has faced significant criticism and scrutiny for his leadership, Accountable Tech co-founder Jesse Lehrich told the AP that other platforms appear to have used his actions as an excuse to be less vigilant themselves. A report published by Free Press in December found that Twitter (now X), Meta, and YouTube rolled back 17 policies between November 2022 and November 2023 that targeted hate speech and disinformation. For example, X and YouTube retired policies around the spread of misinformation concerning the 2020 presidential election and the lie that Trump in fact won, and X and Meta relaxed policies aimed at stopping Covid-19-related falsehoods.

"We found that in 2023, the largest social media companies have deprioritized content moderation and other user trust and safety protections, including rolling back platform policies that had reduced the presence of hate, harassment, and lies on their networks," Free Press said, calling the rollbacks "a dangerous backslide."

Finally, Trump, who has been a big proponent of the lie that he won the 2020 presidential election against Biden, is running again in 2024. Since 57% of Republicans now believe his claim that Biden did not win the last election, experts are worried about what could happen if large numbers of people accept similar lies in 2024.

"If people don't ultimately trust information related to an election, democracy just stops working," Bret Schafer, a senior fellow at the nonpartisan Alliance for Securing Democracy, told the AP. "If a misinformation or disinformation campaign is effective enough that a large enough percentage of the American population does not believe that the results reflect what actually happened, then Jan. 6 will probably look like a warm-up act."

The warnings build on the alarm sounded by watchdog groups like Public Citizen, which has been advocating for a ban on the use of deepfakes in elections. The group has petitioned the Federal Election Commission to establish a new rule governing AI-generated content, and has called on the body to acknowledge that the use of deepfakes is already illegal under a rule banning "fraudulent misrepresentation."

"Specifically, by falsely putting words into another candidate's mouth, or showing the candidate taking action they did not, the deceptive deepfaker fraudulently speaks or act[s] 'for' that candidate in a way deliberately intended to damage him or her. This is precisely what the statute aims to proscribe," Public Citizen said.

The group has also asked the Republican and Democratic parties and their candidates to promise not to use deepfakes to mislead voters in 2024.

In November, Public Citizen announced a new tool tracking state-level legislation to control deepfakes. To date, laws have been enacted in California, Michigan, Minnesota, Texas, and Washington.

"Without new legislation and regulation, deepfakes are likely to further confuse voters and undermine confidence in elections," Ilana Beller, democracy campaign field manager for Public Citizen, said when the tracker was announced. "Deepfake video could be released days or hours before an election with no time to debunk it—misleading voters and altering the outcome of the election."

Our work is licensed under Creative Commons (CC BY-NC-ND 3.0). Feel free to republish and share widely.

#artificial intelligence#ai#deep fakes#2024 elections#public citizen#federal election commission#fec#democracy#social media

10 notes

Text

Can you spot a deepfake because I barely can

https://careerswithstem.com.au/deep-fake-quiz/#gsc.tab=0

3 notes

Text

I want to pose a question to any celebrity. It’s not really hypothetical, as this scenario already exists.

Let’s say a fan wrote fanfiction about you and another real life celebrity. They firmly believe you’re a real-life couple, and believe in their freedom of speech to write fictional scenes about you. Nothing you do or say can change the fans’ minds.

The fan then uses AI technology to generate your celeb voices, and possibly even makes deep fake videos of you and the other celebrity re-enacting their fanfiction. These scenes never actually took place, but the deep fakes and AI voices are meant to obfuscate and disseminate disinformation. It goes viral.

The fan shares this content publicly, believing all the while that their freedom of speech is greater than your right to privacy, the copyright protection of your name, and your freedom from public harassment. (Other issues, including the cumulative damage to your mental health from years of invasive speculation and impingement on your image, go unspoken. Your “biggest fans” online in fact have huge, published lists of fanfiction recommendations that keep the fandom mired in a stranglehold.) Some of this content is sexually explicit. The AI-generated content goes viral, and is shared widely.

The fans gain social media attention, which they may then monetize.

This use of AI is possibly a trademark violation, may be classifiable as libel, and is obviously deeply unethical.

How do you, the celebrity, feel about the deep fakes? What legal action can you take to protect yourself, your career, your brand?

12 notes

Text

#Science#science communication#scicomm#Stem#science education#science blog#AI#Deep fakes#Computer science

4 notes

Text

Alison Brie

18 notes

Text

You. have. been. warned. (source)

#politics#technology#deepfakes#synthetic reality#morgan freeman#tech#deep fakes#disinformation#diep nep

43 notes

Text

youtube

Nowadays you can't really escape AI art. It is everywhere on social media and in the news, and it's a super divisive topic. A lot of things, like the legal aspects and the technical limitations, are still unclear. So I tried to bring some clarity and did a crap-ton of research, looking at this situation from all sorts of angles, and made a long, informative video out of it.

#ai art#ai image generators#generative art#deep learning#art#discussion#legal issues#copyright#identity#privacy#how it works#deep fakes#limitations#Youtube

4 notes

Text

DAILY DOSE: Global Coral Bleaching Crisis Now Fourth Event; Brexit Exacerbates UK's Growing Drug Shortages.

NOAA REPORTS FOURTH GLOBAL CORAL BLEACHING CRISIS BEGINS.

The National Oceanic and Atmospheric Administration’s (NOAA) Coral Reef Watch has announced the onset of the fourth global coral bleaching event, signaling a dire phase for the world’s corals and the communities dependent on them. Triggered by a consecutive ten-month streak of record-breaking global air temperatures in 2024, ocean…

#Africa#artificial intelligence#Asia#Australia#climate change#coral reefs#deep fakes#environment#Europe#evolution#Featured#geology#meteorology#North America#pharmaceutical industry#South America#sustainability#technology

0 notes

Text

Enjoy the pseudo nationalist fascism while it lasts. It's about to get way way worse.

Of course the right-wing hate is using deep fakes to encourage left-wing voters not to vote. And the fucking legislation some states are working on might as well just be pro deep fake usage in elections.

People are obviously too innately gullible and generally ignorant to handle social media as it already is. We love to laugh at boomers and how bad they are at recognizing lies and bullshit, but my Gen Y friends can't tell bullshit from reality on conspiracy TikTok.

We're in trouble.

#deep fakes#deep fakes in 2024 elections#evil#international problems#right wing populism#we are in trouble#new hampshire#todays news#news#international news#2024 elections#us news

0 notes

Text

🚨 Deep Fakes Alert! Taylor Swift's recent ordeal exposes the rise of AI-generated fake content. Learn about the risks and join the discussion on solutions for online safety. Read more: https://t.ly/-9gGO

0 notes