#ai-driven


SEMANTIC TREE AND AI TECHNOLOGIES

Semantic Tree learning and AI technologies can be combined to solve problems by leveraging the power of natural language processing and machine learning.

Semantic trees are a knowledge representation technique that organizes information in a hierarchical, tree-like structure.

Each node in the tree represents a concept or entity, and the connections between nodes represent the relationships between those concepts.

This structure allows for the representation of complex, interconnected knowledge in a way that can be easily navigated and reasoned about.
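As a minimal sketch of the structure described above, the tree can be modeled with a small node class in plain Python. The class name `ConceptNode`, the `relation` field, and the animal taxonomy are illustrative assumptions, not part of any particular library.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)
class ConceptNode:
    """One concept in a semantic tree (hypothetical helper class)."""
    name: str
    relation: str = "root"  # how this node relates to its parent
    parent: Optional["ConceptNode"] = field(default=None, repr=False)
    children: List["ConceptNode"] = field(default_factory=list, repr=False)

    def add_child(self, name: str, relation: str = "is_a") -> "ConceptNode":
        """Attach a new child concept and return it."""
        child = ConceptNode(name, relation, parent=self)
        self.children.append(child)
        return child

    def path_to_root(self) -> List[str]:
        """Walk parent links upward, collecting concept names."""
        node, path = self, []
        while node is not None:
            path.append(node.name)
            node = node.parent
        return path


# Toy taxonomy: animal -> mammal -> dog, animal -> bird
animal = ConceptNode("animal")
mammal = animal.add_child("mammal")
dog = mammal.add_child("dog")
bird = animal.add_child("bird")

print(dog.path_to_root())  # ['dog', 'mammal', 'animal']
```

Storing a parent link on every node keeps upward navigation cheap, which is what most "how is X related to Y" questions over a hierarchy need.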

CONCEPTS

Semantic Tree: A structured representation where nodes correspond to concepts and edges denote relationships (e.g., hypernyms, hyponyms, synonyms).

Meaning: Understanding the context, nuances, and associations related to words or concepts.

Natural Language Understanding (NLU): AI techniques for comprehending and interpreting human language.

First Principles: Fundamental building blocks or core concepts in a domain.

AI (Artificial Intelligence): AI refers to the development of computer systems that can perform tasks that typically require human intelligence. AI technologies include machine learning, natural language processing, computer vision, and more. These technologies enable computers to understand, reason, learn, and make decisions.

Natural Language Processing (NLP): NLP is a branch of AI that focuses on the interaction between computers and human language. It involves the analysis and understanding of natural language text or speech by computers. NLP techniques are used to process, interpret, and generate human languages.

Machine Learning (ML): Machine Learning is a subset of AI that enables computers to learn and improve from experience without being explicitly programmed. ML algorithms can analyze data, identify patterns, and make predictions or decisions based on the learned patterns.

Deep Learning: A subset of machine learning that uses neural networks with multiple layers to learn complex patterns.

EXAMPLES OF APPLYING SEMANTIC TREE LEARNING WITH AI

1. Text Classification: Semantic Tree learning can be combined with AI to solve text classification problems. By training a machine learning model on labeled data, the model can learn to classify text into different categories or labels. For example, a customer support system can use semantic tree learning to automatically categorize customer queries into different topics, such as billing, technical issues, or product inquiries.

2. Sentiment Analysis: Semantic Tree learning can be used with AI to perform sentiment analysis on text data. Sentiment analysis aims to determine the sentiment or emotion expressed in a piece of text, such as positive, negative, or neutral. By analyzing the semantic structure of the text using Semantic Tree learning techniques, machine learning models can classify the sentiment of customer reviews, social media posts, or feedback.

3. Question Answering: Semantic Tree learning combined with AI can be used for question answering systems. By understanding the semantic structure of questions and the context of the information being asked, machine learning models can provide accurate and relevant answers. For example, a chatbot can use Semantic Tree learning to understand user queries and provide appropriate responses based on the analyzed semantic structure.

4. Information Extraction: Semantic Tree learning can be applied with AI to extract structured information from unstructured text data. By analyzing the semantic relationships between entities and concepts in the text, machine learning models can identify and extract specific information. For example, an AI system can extract key information like names, dates, locations, or events from news articles or research papers.

PYTHON CODE SNIPPETS FOR SEMANTIC TREE LEARNING WITH AI

Here are four small Python code snippets that demonstrate how to apply Semantic Tree learning with AI using popular libraries:

1. Text Classification with scikit-learn:

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression

# Training data
texts = ['This is a positive review', 'This is a negative review', 'This is a neutral review']
labels = ['positive', 'negative', 'neutral']

# Vectorize the text data
vectorizer = TfidfVectorizer()
X = vectorizer.fit_transform(texts)

# Train a logistic regression classifier
classifier = LogisticRegression()
classifier.fit(X, labels)

# Predict the label for a new text
new_text = 'This is a positive sentiment'
new_text_vectorized = vectorizer.transform([new_text])
predicted_label = classifier.predict(new_text_vectorized)
print(predicted_label)
```

2. Sentiment Analysis with TextBlob:

```python
from textblob import TextBlob

# Analyze sentiment of a text
text = 'This is a positive sentence'
blob = TextBlob(text)

# Polarity ranges from -1.0 (negative) to 1.0 (positive)
sentiment = blob.sentiment.polarity

# Classify sentiment based on polarity
if sentiment > 0:
    sentiment_label = 'positive'
elif sentiment < 0:
    sentiment_label = 'negative'
else:
    sentiment_label = 'neutral'

print(sentiment_label)
```

3. Question Answering with Transformers:

```python
from transformers import pipeline

# Load the question answering model
qa_model = pipeline('question-answering')

# Provide context and ask a question
context = 'The Semantic Web is an extension of the World Wide Web.'
question = 'What is the Semantic Web?'

# Get the answer
answer = qa_model(question=question, context=context)
print(answer['answer'])
```

4. Information Extraction with spaCy:

```python
import spacy

# Load the English language model
nlp = spacy.load('en_core_web_sm')

# Process the text
text = 'Apple Inc. is planning to open a new store in New York City.'
doc = nlp(text)

# Extract named entities as (text, label) pairs
entities = [(ent.text, ent.label_) for ent in doc.ents]
print(entities)
```

APPLICATIONS OF SEMANTIC TREE LEARNING WITH AI

Semantic Tree learning combined with AI can be used in various domains and industries to solve problems. Here are some examples of where it can be applied:

1. Customer Support: Semantic Tree learning can be used to automatically categorize and route customer queries to the appropriate support teams, improving response times and customer satisfaction.

2. Social Media Analysis: Semantic Tree learning with AI can be applied to analyze social media posts, comments, and reviews to understand public sentiment, identify trends, and monitor brand reputation.

3. Information Retrieval: Semantic Tree learning can enhance search engines by understanding the meaning and context of user queries, providing more accurate and relevant search results.

4. Content Recommendation: By analyzing the semantic structure of user preferences and content metadata, Semantic Tree learning with AI can be used to personalize content recommendations in platforms like streaming services, news aggregators, or e-commerce websites.

Semantic Tree learning combined with AI technologies enables the understanding and analysis of text data, leading to improved problem-solving capabilities in various domains.

COMBINING SEMANTIC TREE AND AI FOR PROBLEM SOLVING

1. Semantic Reasoning: By integrating semantic trees with AI, systems can engage in more sophisticated reasoning and decision-making. The semantic tree provides a structured representation of knowledge, while AI techniques like natural language processing and knowledge representation can be used to navigate and reason about the information in the tree.

2. Explainable AI: Semantic trees can make AI systems more interpretable and explainable. The hierarchical structure of the tree can be used to trace the reasoning process and understand how the system arrived at a particular conclusion, which is important for building trust in AI-powered applications.

3. Knowledge Extraction and Representation: AI techniques like machine learning can be used to automatically construct semantic trees from unstructured data, such as text or images. This allows for the efficient extraction and representation of knowledge, which can then be used to power various problem-solving applications.

4. Hybrid Approaches: Combining semantic trees and AI can lead to hybrid approaches that leverage the strengths of both. For example, a system could use a semantic tree to represent domain knowledge and then apply AI techniques like reinforcement learning to optimize decision-making within that knowledge structure.
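To make the reasoning and explainability points above concrete, here is a small sketch in which a query is routed down a hand-built tree and the visited path doubles as the explanation. The tree contents, the keyword sets, and the `route` function are all assumptions made up for illustration; a real system would learn or curate them.

```python
# Each node maps a name to (trigger keywords, child nodes). Hypothetical data.
SUPPORT_TREE = {
    "billing": ({"invoice", "charge", "refund", "payment"}, {
        "refunds": ({"refund", "reimburse"}, {}),
        "invoices": ({"invoice", "receipt"}, {}),
    }),
    "technical": ({"error", "crash", "login", "bug"}, {
        "login": ({"login", "password"}, {}),
        "crashes": ({"crash", "freeze"}, {}),
    }),
}


def route(query, tree=SUPPORT_TREE):
    """Descend the tree greedily by keyword overlap; return the visited path."""
    words = set(query.lower().split())
    path = []
    level = tree
    while level:
        # Score each child by how many of its keywords appear in the query
        scores = {name: len(words & keywords)
                  for name, (keywords, _) in level.items()}
        best = max(scores, key=scores.get)
        if scores[best] == 0:
            break  # no evidence for going any deeper
        path.append(best)
        level = level[best][1]
    return path


print(route("I want a refund for this charge"))  # ['billing', 'refunds']
```

Because the decision is just a walk through named nodes, the returned path can be shown to a user or logged as the reason for the routing, which is the interpretability benefit the list describes.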

EXAMPLES OF APPLYING SEMANTIC TREE AND AI FOR PROBLEM SOLVING

1. Medical Diagnosis: A semantic tree could represent the relationships between symptoms, diseases, and treatments. AI techniques like natural language processing and machine learning could be used to analyze patient data, navigate the semantic tree, and provide personalized diagnosis and treatment recommendations.

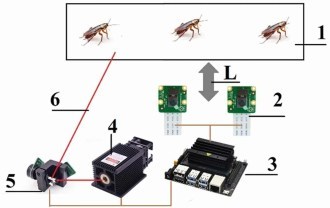

2. Robotics and Autonomous Systems: Semantic trees could be used to represent the knowledge and decision-making processes of autonomous systems, such as self-driving cars or drones. AI techniques like computer vision and reinforcement learning could be used to navigate the semantic tree and make real-time decisions in dynamic environments.

3. Financial Analysis: Semantic trees could be used to model complex financial relationships and market dynamics. AI techniques like predictive analytics and natural language processing could be applied to the semantic tree to identify patterns, make forecasts, and support investment decisions.

4. Personalized Recommendation Systems: Semantic trees could be used to represent user preferences, interests, and behaviors. AI techniques like collaborative filtering and content-based recommendation could be used to navigate the semantic tree and provide personalized recommendations for products, content, or services.

PYTHON CODE SNIPPETS

1. Semantic Tree Construction using NetworkX:

```python
import networkx as nx
import matplotlib.pyplot as plt

# Create a semantic tree
G = nx.DiGraph()
G.add_node("root", label="Root")
G.add_node("concept1", label="Concept 1")
G.add_node("concept2", label="Concept 2")
G.add_node("concept3", label="Concept 3")
G.add_edge("root", "concept1")
G.add_edge("root", "concept2")
G.add_edge("concept2", "concept3")

# Visualize the semantic tree
pos = nx.spring_layout(G)
nx.draw(G, pos, with_labels=True)
plt.show()
```

2. Semantic Reasoning using PyKEEN:

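```python
import torch
from pykeen.pipeline import pipeline
from pykeen.triples import TriplesFactory

# Load a knowledge graph from a tab-separated file of (head, relation, tail) triples.
# The file name is a placeholder (assumption); point it at your own dataset.
tf = TriplesFactory.from_path("./dataset/triples.tsv")
training, testing = tf.split([0.8, 0.2], random_state=0)

# Train a TransE model on the knowledge graph
result = pipeline(
    training=training,
    testing=testing,
    model="TransE",
    training_kwargs=dict(num_epochs=100),
    random_seed=0,
)
model = result.model

# Perform semantic reasoning: score a candidate triple.
# The labels below are placeholders and must exist in the loaded dataset.
head = "concept1"
relation = "isRelatedTo"
tail = "concept3"

# score_hrt expects integer IDs, so map the labels first
hrt_batch = torch.as_tensor(
    [[tf.entity_to_id[head], tf.relation_to_id[relation], tf.entity_to_id[tail]]],
    dtype=torch.long,
).to(model.device)
score = model.score_hrt(hrt_batch).item()
print(f"The score for the triple ({head}, {relation}, {tail}) is: {score}")
```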

3. Knowledge Extraction using spaCy:

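```python
import spacy
from spacy import displacy

# Load the spaCy model
nlp = spacy.load("en_core_web_sm")

# Parse the text
text = "The quick brown fox jumps over the lazy dog."
doc = nlp(text)

# This sentence contains no obvious named entities, so inspect the
# dependency relations between words instead
for token in doc:
    print(token.text, token.dep_, token.head.text)

# Visualize the parse (renders inline in Jupyter; returns markup otherwise)
displacy.render(doc, style="dep")
```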

4. Hybrid Approach using Ray:

```python
# Skeleton only: the import paths match older Ray releases (newer releases
# moved PPO under ray.rllib.algorithms), and the semantic-tree logic is
# left for the reader to implement.
import ray
from ray.rllib.agents.ppo import PPOTrainer
from ray.rllib.env.multi_agent_env import MultiAgentEnv
from ray.rllib.models.tf.tf_modelv2 import TFModelV2


# Define a custom model that integrates a semantic tree
class SemanticTreeModel(TFModelV2):
    def __init__(self, obs_space, action_space, num_outputs, model_config, name):
        super().__init__(obs_space, action_space, num_outputs, model_config, name)
        # TODO: integrate the semantic tree with the neural network


# Define a multi-agent environment that uses the semantic tree
class SemanticTreeEnv(MultiAgentEnv):
    def __init__(self, env_config=None):
        self.semantic_tree = None  # TODO: initialize the semantic tree
        self.agents = []  # TODO: define the agents

    def reset(self):
        raise NotImplementedError("return the initial observation per agent")

    def step(self, actions):
        raise NotImplementedError("implement the dynamics using the semantic tree")


# Train the hybrid model using Ray
ray.init()

config = {
    "env": SemanticTreeEnv,
    "model": {
        "custom_model": SemanticTreeModel,
    },
}

trainer = PPOTrainer(config=config)
trainer.train()
```

APPLICATIONS

The combination of semantic trees and AI can be applied to a wide range of problem domains, including:

- Healthcare: Improving medical diagnosis, treatment planning, and drug discovery.

- Finance: Enhancing investment strategies, risk management, and fraud detection.

- Robotics and Autonomous Systems: Enabling more intelligent and adaptable decision-making in complex environments.

- Education: Personalizing learning experiences and providing intelligent tutoring systems.

- Smart Cities: Optimizing urban planning, transportation, and resource management.

- Environmental Conservation: Modeling and predicting environmental changes, and supporting sustainable decision-making.

- Chatbots and Virtual Assistants:
  - Use semantic trees to understand user queries and provide context-aware responses.
  - Apply NLU models to extract meaning from user input.

- Information Retrieval:
  - Build semantic search engines that understand user intent beyond keyword matching.
  - Combine semantic trees with vector embeddings (e.g., BERT) for better search results.

- Medical Diagnosis:
  - Create semantic trees for medical conditions, symptoms, and treatments.
  - Use AI to match patient symptoms to relevant diagnoses.

- Automated Content Generation:
  - Construct semantic trees for topics (e.g., climate change, finance).
  - Generate articles, summaries, or reports based on semantic understanding.
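One way to read the information-retrieval item above is query expansion: a query term is widened to include its descendants in the tree before matching documents. The sketch below uses plain keyword matching as a stand-in for embeddings such as BERT; the taxonomy, the documents, and the function names are invented for illustration.

```python
# Hypothetical taxonomy: parent concept -> direct children
TAXONOMY = {
    "vehicle": ["car", "bicycle"],
    "car": ["sedan", "suv"],
}

# Hypothetical document collection
DOCS = {
    "d1": "compact suv with good mileage",
    "d2": "mountain bicycle repair guide",
    "d3": "chocolate cake recipe",
}


def expand(term):
    """Return the term plus all of its descendants in the taxonomy."""
    terms, stack = set(), [term]
    while stack:
        current = stack.pop()
        if current not in terms:
            terms.add(current)
            stack.extend(TAXONOMY.get(current, []))
    return terms


def search(term):
    """Return IDs of documents mentioning the term or any descendant."""
    wanted = expand(term)
    return sorted(doc_id for doc_id, text in DOCS.items()
                  if wanted & set(text.split()))


print(search("vehicle"))  # ['d1', 'd2']
print(search("car"))      # ['d1']
```

A search for "vehicle" finds documents that never use that word, which is the "beyond keyword matching" behavior the list refers to. Swapping the set intersection for an embedding similarity would be the next step.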

RDIDINI PROMPT ENGINEER

#semantic tree#ai solutions#ai-driven#ai trends#ai system#ai model#ai prompt#ml#ai predictions#llm#dl#nlp


Viewer Empowerment: Enhancing Media Literacy in the Age of AI-Driven Influence Operations

In today's rapidly evolving digital landscape, the intersection of artificial intelligence and information dissemination has given rise to a new breed of challenges. One such concern is the emergence of AI-driven influence operations spreading pro-China propaganda across popular platforms like YouTube.

Background of AI-driven Influence Operations

To understand this phenomenon, let's delve into the background of AI's role in shaping narratives. Over the years, artificial intelligence has played an increasingly pivotal role in molding public opinion. From shaping political discourse to influencing consumer behavior, AI has become a powerful tool in the arsenal of those seeking to sway public perceptions.

For more info visit: Sonicbulletinhub


Complete SEO articles can be constructed in a third of the time it normally takes, using the keywords you need to target for your site or blog. Try this adept AI, which has a strong understanding of all kinds of documents and media.


Understanding AI-Driven Anomaly Detection for Business

Staying ahead of the curve is imperative in the fast-paced world of business. As technology continues to evolve, companies are increasingly relying on AI to gain insights, improve processes, and mitigate risks. One application of AI that is transforming the way businesses operate is anomaly detection. In this article, we will explore the details of AI-driven anomaly detection and how it can benefit businesses across various industries.

Anomaly detection involves identifying patterns or events that deviate from the norm within a dataset. Traditionally, businesses have relied on manual methods or simple rule-based systems to detect anomalies. However, these methods often fall short in today's complex, data-rich environments. This is where AI-powered anomaly detection comes into play.

At its core, AI-powered anomaly detection leverages machine learning algorithms to analyze large volumes of data and identify anomalies automatically. These algorithms can detect anomalies in different types of data, including numerical, categorical, and even unstructured data such as text and images. By continuously learning from new data, AI models can adapt to changing patterns and detect anomalies with high accuracy.
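For a sense of what "deviating from the norm" means in code, here is a minimal sketch using a z-score test. This is a simple statistical baseline rather than a trained machine learning model, and the function name, threshold, and transaction figures are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def zscore_anomalies(values, threshold=2.5):
    """Return (index, value) pairs lying more than `threshold` sample
    standard deviations away from the mean."""
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # all values identical: nothing stands out
    return [(i, v) for i, v in enumerate(values)
            if abs(v - mu) / sigma > threshold]


# Hypothetical daily transaction totals with one obvious spike
daily_totals = [102, 98, 101, 97, 103, 99, 100, 250, 101, 98]
print(zscore_anomalies(daily_totals))  # [(7, 250)]
```

The threshold matters more than it looks: with only ten points, a single outlier inflates the standard deviation enough that its own z-score cannot reach 3, so a stricter cutoff would miss it. Learned models are typically used precisely because fixed rules like this are brittle.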

So, how exactly can AI-powered anomaly detection benefit businesses? Let's look at some key advantages:

Main Advantages of AI-Powered Anomaly Detection

Early Detection

One of the key advantages of AI-driven anomaly detection is its ability to identify anomalies in real time or near real time. By detecting anomalies early, businesses can take proactive steps to address issues before they escalate, reducing potential damage and losses.

Improved Accuracy

Traditional anomaly detection methods are often prone to errors and false positives. AI-powered approaches, by contrast, can analyze data at scale and detect anomalies with greater accuracy, reducing the likelihood of false alarms and ensuring that genuine anomalies are not overlooked.

Scalability

As businesses generate increasingly large volumes of data, scalability becomes a critical factor. AI-powered anomaly detection systems are highly scalable and can handle massive datasets with ease, making them suitable for businesses of all sizes.

Customization

AI-driven anomaly detection models can be customized to suit the specific needs and requirements of different industries and use cases. Whether it is detecting fraudulent transactions in finance, identifying equipment failures in manufacturing, or spotting anomalies in network traffic, AI models can be tailored to deliver optimal performance.

Cost Savings

By automating the anomaly detection process, businesses can save time and resources that would otherwise be spent on manual analysis. In addition, by detecting anomalies early and reducing downtime or losses, businesses can save on the costs associated with remediation efforts and operational disruptions.

Despite its many benefits, implementing AI-driven anomaly detection does come with challenges. These include data quality issues, the need for domain expertise, and ensuring the transparency and interpretability of AI models. Nevertheless, with proper planning, implementation, and continuous monitoring, businesses can overcome these challenges and harness the full potential of AI-powered anomaly detection.

Ultimately, AI-powered anomaly detection is a powerful tool for businesses seeking to gain insights, mitigate risks, and stay ahead of the competition. By leveraging advanced machine learning algorithms, businesses can detect anomalies in real time, improve accuracy, and achieve cost savings. As the technology continues to evolve, AI-driven anomaly detection is poised to become a critical asset for businesses across industries.


AI-Driven Cybersecurity: Protecting Data in the Digital Age

In today's digital age, where data is the lifeblood of businesses and individuals alike, the importance of safeguarding data cannot be overstated. The proliferation of data has been accompanied by a rise in cyber threats, making data privacy, security, and protection a top priority for organizations and individuals. With technology advancing at an unprecedented pace, the traditional methods of securing data are no longer sufficient to combat evolving threats. This is where AI-driven cybersecurity comes into play, offering a revolutionary approach to protect your data in the digital age.

In this blog, we'll delve into the world of AI-driven cybersecurity, exploring how artificial intelligence is transforming the landscape of data protection and privacy.

The Challenges of the Digital Age

The digital age has ushered in a world of unprecedented opportunities, but it has also given rise to a host of new challenges, particularly in the realms of data privacy and security. Some of the key challenges include:

Data Proliferation: With the explosive growth of data, organizations must manage and protect vast amounts of information. Data is no longer confined to on-premises servers but often resides in cloud environments, making it more susceptible to cyberattacks.

Sophisticated Cyber Threats: Cybercriminals have become increasingly sophisticated, using advanced techniques to breach systems, steal data, and disrupt operations. Traditional security measures are often ill-equipped to thwart these attacks.

Regulatory Compliance: Governments and regulatory bodies worldwide are enacting stringent data protection laws, such as GDPR and CCPA. Non-compliance can result in severe financial penalties and reputational damage.

Human Error: Despite the latest cybersecurity tools and protocols, human error remains a significant factor in data breaches. Misconfigured settings, weak passwords, and phishing attacks continue to pose risks.

AI-Driven Cybersecurity: A Game-Changer

In this ever-evolving landscape, AI-driven cybersecurity is emerging as a game-changing solution to the challenges posed by the digital age. Artificial intelligence brings to the table a range of capabilities that can significantly enhance data protection and privacy. These capabilities include:

Predictive Analysis: AI algorithms can analyze vast datasets to identify patterns and anomalies. By doing so, they can predict potential threats before they materialize, allowing organizations to take proactive measures.

Real-Time Monitoring: AI systems provide real-time monitoring of network traffic and system behavior. Any suspicious activity can be flagged immediately, reducing response times to threats.

Automation: AI can automate routine security tasks, reducing the burden on cybersecurity teams. This allows experts to focus on more complex and strategic aspects of cybersecurity.

Improved User Authentication: AI can enhance user authentication processes, making it more difficult for unauthorized users to gain access. This includes biometric authentication and behavior analysis.

Threat Detection: AI-driven cybersecurity solutions can rapidly detect and classify new and evolving threats, adapting to changing attack vectors in real-time.

Incident Response: In the event of a security incident, AI can assist in incident response by quickly identifying the source and scope of the breach, allowing for a more targeted and effective response.

The impact of AI-driven cybersecurity

Aventior's AI-Computer Vision technology is a game-changer in the realm of data protection. It combines artificial intelligence with computer vision to secure data in a novel way. Computer vision enables machines to interpret and understand visual information from the world. When applied to data security, it offers a unique advantage.

Here are some of the key features of Aventior's AI-Computer Vision technology:

Data Classification: The system can automatically classify data, identifying sensitive and non-sensitive information. This is particularly valuable for organizations dealing with vast amounts of data.

Anomaly Detection: By continuously monitoring data access and usage, Aventior's technology can spot anomalies and suspicious behavior, which could indicate a data breach or insider threat.

Behavior Analysis: The AI component analyzes user behavior to detect deviations from established norms. This allows for more precise identification of security threats.

Response Automation: When a threat is detected, the system can automatically trigger responses, such as isolating compromised systems or alerting security teams.

Scalability: Aventior's solutions are designed to scale with an organization's data needs. Whether you're a small business or a large enterprise, their technology can adapt to your requirements.

Aventior's AI-Computer Vision technology has made a significant impact on data protection and privacy. Here are some examples of how it has benefited organizations:

Reduced False Positives: The system's ability to differentiate between normal and abnormal behavior has led to a reduction in false positives, allowing security teams to focus on genuine threats.

Faster Threat Response: The real-time monitoring and automated response capabilities have significantly shortened the time required to respond to security incidents.

Compliance Assurance: Aventior's technology assists organizations in maintaining regulatory compliance by ensuring data security and privacy measures are consistently enforced.

Cost Savings: By automating many security tasks and reducing the impact of security incidents, Aventior's solutions have led to cost savings for their clients.

How AI-Driven Cybersecurity is Revolutionizing Data Protection

AI-driven cybersecurity is revolutionizing data protection in a number of ways.

Improved threat detection and response: AI-driven cybersecurity solutions can rapidly detect and classify new and evolving threats, adapting to changing attack vectors in real time. This is essential in the ever-changing threat landscape.

More personalized and proactive security: AI can be used to create more personalized and proactive security solutions. For example, AI-powered solutions can be used to analyze user behavior and identify anomalies that may indicate a security threat. This information can then be used to take preventive measures to protect the user.

Greater integration with other security technologies: AI-driven cybersecurity solutions are becoming more integrated with other security technologies, such as firewalls, intrusion detection systems, and security information and event management (SIEM) systems. This allows for a more comprehensive and coordinated approach to security.

Overall, AI-driven cybersecurity has the potential to revolutionize the way we protect our data and systems from cyberattacks.

Conclusion

In the digital age, data is both a valuable asset and a significant liability. Protecting that data is of paramount importance, and AI-driven cybersecurity is proving to be a game-changer. With its ability to predict, monitor, and respond to threats, AI is enhancing data security and privacy in ways previously unimaginable.

Aventior, with its AI-Computer Vision technology, exemplifies the potential of AI in the realm of data protection. In addition to strengthening security, the approach streamlines processes and reduces the burden on cybersecurity teams.

As we continue to embrace the opportunities of the digital age, it's essential to be equally vigilant about safeguarding our data. AI-driven cybersecurity offers a path forward, enabling us to protect our data in an ever-evolving threat landscape. In this digital age, where data is king, AI is the guardian that stands at the gates, ready to defend and protect.

To discover what AI can do for you and to learn more about Aventior's industry-leading solutions and services, contact Aventior today.

For further details about our solution, email us at [email protected].

#AI-Driven#cybersecurity#data privacy#data security#data protection#artificial intelligence technology


DoorDash Inc. is unveiling a game-changing enhancement to its platform: AI-driven #voice ordering, which enables select #operators to boost sales and improve customer experiences while controlling labor costs.

DoorDash connects customers to local restaurants via its app or website. DoorDash drivers, known as Dashers, deliver orders to specified locations across numerous cities.

#doordash#ai-driven#voice ordering#news#local restaurants#app#website#door dash drivers#dashers#deliver orders#specified locations#cities


WallyGPT: The Growth of Wally and Revolutionizing Personal Finance

- The Birth of Wally: A Solution to Common Woes
- Wally’s Early Days: Bridging the Gap with Machine Learning
- Version 3.0: Automated Tracking and Global Reach
- WallyGPT Emerges: The AI Revolution
- Hyper-Personalization at Its Finest: WallyGPT in Action
- Global Impact and Data Privacy Assurance
- Empowering Through Uncertainty: Navigating Economic Challenges
- A Journey of Innovation and Empowerment with…


#Acquisition#AI-driven#Aramex#Autopilot functionalities#Bank PDF exports#ChatGPT#Confidence#Covid-19 pandemic#Credit cards#Data Privacy#Debt reconciliation#DIFC-headquartered company#Economic uncertainties#Emergency fund#Excel#Financial aspirations#Financial data#Financial futures#Financial goals#Financial management#Financial services#Frustration#generative AI#Global leader#Global markets#Hyper-personalization#innovation#Investment opportunities#Investment optimization#Machine learning


timothee chalamet by tony liam


The surprising truth about data-driven dictatorships

Here’s the “dictator’s dilemma”: they want to block their country’s frustrated elites from mobilizing against them, so they censor public communications; but they also want to know what their people truly believe, so they can head off simmering resentments before they boil over into regime-toppling revolutions.

These two strategies are in tension: the more you censor, the less you know about the true feelings of your citizens and the easier it will be to miss serious problems until they spill over into the streets (think: the fall of the Berlin Wall or Tunisia before the Arab Spring). Dictators try to square this circle with things like private opinion polling or petition systems, but these capture a small slice of the potentially destabilizing moods circulating in the body politic.

Enter AI: back in 2018, Yuval Harari proposed that AI would supercharge dictatorships by mining and summarizing the public mood — as captured on social media — allowing dictators to tack into serious discontent and defuse it before it erupted into unquenchable wildfire:

https://www.theatlantic.com/magazine/archive/2018/10/yuval-noah-harari-technology-tyranny/568330/

Harari wrote that “the desire to concentrate all information and power in one place may become [dictators’] decisive advantage in the 21st century.” But other political scientists sharply disagreed. Last year, Henry Farrell, Jeremy Wallace and Abraham Newman published a thoroughgoing rebuttal to Harari in Foreign Affairs:

https://www.foreignaffairs.com/world/spirals-delusion-artificial-intelligence-decision-making

They argued that — like everyone who gets excited about AI, only to have their hopes dashed — dictators seeking to use AI to understand the public mood would run into serious training data bias problems. After all, people living under dictatorships know that spouting off about their discontent and desire for change is a risky business, so they will self-censor on social media. That’s true even if a person isn’t afraid of retaliation: if you know that using certain words or phrases in a post will get it autoblocked by a censorbot, what’s the point of trying to use those words?

The phrase “Garbage In, Garbage Out” dates back to 1957. That’s how long we’ve known that a computer that operates on bad data will barf up bad conclusions. But this is a very inconvenient truth for AI weirdos: having given up on manually assembling training data based on careful human judgment with multiple review steps, the AI industry “pivoted” to mass ingestion of scraped data from the whole internet.

But adding more unreliable data to an unreliable dataset doesn’t improve its reliability. GIGO is the iron law of computing, and you can’t repeal it by shoveling more garbage into the top of the training funnel:

https://memex.craphound.com/2018/05/29/garbage-in-garbage-out-machine-learning-has-not-repealed-the-iron-law-of-computer-science/

When it comes to “AI” that’s used for decision support — that is, when an algorithm tells humans what to do and they do it — then you get something worse than Garbage In, Garbage Out — you get Garbage In, Garbage Out, Garbage Back In Again. That’s when the AI spits out something wrong, and then another AI sucks up that wrong conclusion and uses it to generate more conclusions.

To see this in action, consider the deeply flawed predictive policing systems that cities around the world rely on. These systems suck up crime data from the cops, then predict where crime is going to be, and send cops to those “hotspots” to do things like throw Black kids up against a wall and make them turn out their pockets, or pull over drivers and search their cars after pretending to have smelled cannabis.

The problem here is that “crime the police detected” isn’t the same as “crime.” You only find crime where you look for it. For example, there are far more incidents of domestic abuse reported in apartment buildings than in fully detached homes. That’s not because apartment dwellers are more likely to be wife-beaters: it’s because domestic abuse is most often reported by a neighbor who hears it through the walls.

So if your cops practice racially biased policing (I know, this is hard to imagine, but stay with me /s), then the crime they detect will already be a function of bias. If you only ever throw Black kids up against a wall and turn out their pockets, then every knife and dime-bag you find in someone’s pockets will come from some Black kid the cops decided to harass.

That’s life without AI. But now let’s throw in predictive policing: feed your “knives found in pockets” data to an algorithm and ask it to predict where there are more knives in pockets, and it will send you back to that Black neighborhood and tell you to throw even more Black kids up against a wall and search their pockets. The more you do this, the more knives you’ll find, and the more you’ll go back and do it again.

This is what Patrick Ball from the Human Rights Data Analysis Group calls “empiricism washing”: take a biased procedure and feed it to an algorithm, and then you get to go and do more biased procedures, and whenever anyone accuses you of bias, you can insist that you’re just following an empirical conclusion of a neutral algorithm, because “math can’t be racist.”

HRDAG has done excellent work on this, finding a natural experiment that makes the problem of GIGOGBI crystal clear. The National Survey On Drug Use and Health produces the gold standard snapshot of drug use in America. Kristian Lum and William Isaac took Oakland’s drug arrest data from 2010 and asked Predpol, a leading predictive policing product, to predict where Oakland’s 2011 drug use would take place.

[Image ID: (a) Number of drug arrests made by Oakland police department, 2010. (1) West Oakland, (2) International Boulevard. (b) Estimated number of drug users, based on 2011 National Survey on Drug Use and Health]

Then, they compared those predictions to the outcomes of the 2011 survey, which shows where actual drug use took place. The two maps couldn’t be more different:

https://rss.onlinelibrary.wiley.com/doi/full/10.1111/j.1740-9713.2016.00960.x

Predpol told cops to go and look for drug use in a predominantly Black, working class neighborhood. Meanwhile the NSDUH survey showed the actual drug use took place all over Oakland, with a higher concentration in the Berkeley-neighboring student neighborhood.

What’s even more vivid is what happens when you simulate running Predpol on the new arrest data that would be generated by cops following its recommendations. If the cops went to that Black neighborhood and found more drugs there and told Predpol about it, the recommendation gets stronger and more confident.

In other words, GIGOGBI is a system for concentrating bias. Even trace amounts of bias in the original training data get refined and magnified when they are output through a decision support system that directs humans to go and act on that output. Algorithms are to bias what centrifuges are to radioactive ore: a way to turn minute amounts of bias into pluripotent, indestructible toxic waste.
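To make the feedback loop concrete, here is a toy sketch in Python. It is not Predpol's algorithm and not Lum and Isaac's simulation: the function name, the "send every patrol to the current top hotspot" rule, and all the numbers are assumptions chosen purely for illustration. Both neighborhoods have the same underlying rate, and the only difference is a small head start in the recorded data.

```python
def simulate_feedback(recorded, find_rate, patrols_per_round, rounds):
    """Toy model of Garbage In, Garbage Out, Garbage Back In.

    recorded          -- incidents already on file per neighborhood (the biased input)
    find_rate         -- incidents found per patrol; identical rates mean identical "true" crime
    patrols_per_round -- patrols dispatched each round (hypothetical number)
    rounds            -- how many times the output is fed back in as input

    Returns, for each round, every neighborhood's share of all recorded incidents.
    """
    recorded = list(recorded)
    shares_by_round = []
    for _ in range(rounds):
        # The "prediction": wherever the records are thickest is the hotspot.
        hotspot = max(range(len(recorded)), key=lambda i: recorded[i])
        # Patrols only find incidents where they are sent to look.
        recorded[hotspot] += patrols_per_round * find_rate[hotspot]
        total = sum(recorded)
        shares_by_round.append([count / total for count in recorded])
    return shares_by_round


if __name__ == "__main__":
    # Same underlying rate in both places; neighborhood 0 starts with 51 records to 49.
    history = simulate_feedback([51, 49], [0.1, 0.1], patrols_per_round=100, rounds=10)
    for round_number, shares in enumerate(history, start=1):
        print(round_number, [round(s, 3) for s in shares])
```

In this sketch the neighborhood with the two-record head start ends up holding roughly three quarters of all recorded incidents after ten rounds, even though nothing about the neighborhoods themselves differs. A real system would allocate patrols less crudely, but the direction of the drift is the point.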

There’s a great name for an AI that’s trained on an AI’s output, courtesy of Jathan Sadowski: “Habsburg AI.”

And that brings me back to the Dictator’s Dilemma. If your citizens are self-censoring in order to avoid retaliation or algorithmic shadowbanning, then the AI you train on their posts in order to find out what they’re really thinking will steer you in the opposite direction, so you make bad policies that make people angrier and destabilize things more.

Or at least, that was Farrell (et al)’s theory. And for many years, that’s where the debate over AI and dictatorship has stalled: theory vs theory. But now, there’s some empirical data on this, thanks to “The Digital Dictator’s Dilemma,” a new paper from UCSD PhD candidate Eddie Yang:

https://www.eddieyang.net/research/DDD.pdf

Yang figured out a way to test these dueling hypotheses. He got 10 million Chinese social media posts from the start of the pandemic, before companies like Weibo were required to censor certain pandemic-related posts as politically sensitive. Yang treats these posts as a robust snapshot of public opinion: because there was no censorship of pandemic-related chatter, Chinese users were free to post anything they wanted without having to self-censor for fear of retaliation or deletion.

Next, Yang acquired the censorship model used by a real Chinese social media company to decide which posts should be blocked. Using this, he was able to determine which of the posts in the original set would be censored today in China.

That means that Yang knows what the “real” sentiment in the Chinese social media snapshot is, and what Chinese authorities would believe it to be if Chinese users were self-censoring all the posts that would be flagged by censorware today.

From here, Yang was able to play with the knobs, and determine how “preference-falsification” (when users lie about their feelings) and self-censorship would give a dictatorship a misleading view of public sentiment. What he finds is that the more repressive a regime is — the more people are incentivized to falsify or censor their views — the worse the system gets at uncovering the true public mood.

What’s more, adding additional (bad) data to the system doesn’t fix this “missing data” problem. GIGO remains an iron law of computing in this context, too.
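Here is a back-of-the-envelope sketch of why more data doesn't help. This is not Yang's model: the function, its parameters, and the numbers are hypothetical, and it reduces "sentiment" to the share of posts that are negative. Discontented users either stay silent (self-censorship) or post something positive instead (preference falsification), and the regime only sees what gets posted.

```python
def observed_negative_share(true_negative, censor_rate, falsify_rate=0.0):
    """Share of *visible* posts that are negative, under a toy repression model.

    true_negative -- fraction of users who are genuinely discontented
    censor_rate   -- fraction of discontented users who stay silent
    falsify_rate  -- fraction of discontented users who post positively instead
    """
    if censor_rate < 0 or falsify_rate < 0 or censor_rate + falsify_rate > 1:
        raise ValueError("censor_rate and falsify_rate must be >= 0 and sum to at most 1")
    visible_negative = true_negative * (1.0 - censor_rate - falsify_rate)
    visible_positive = (1.0 - true_negative) + true_negative * falsify_rate
    visible_total = visible_negative + visible_positive
    if visible_total == 0:
        raise ValueError("nobody is posting, so there is nothing to measure")
    return visible_negative / visible_total


if __name__ == "__main__":
    truth = 0.4  # hypothetical: 40% of users are actually unhappy
    for censor, falsify in [(0.0, 0.0), (0.5, 0.0), (0.5, 0.25), (0.7, 0.25)]:
        seen = observed_negative_share(truth, censor, falsify)
        print(f"censor={censor} falsify={falsify} observed={seen:.3f} error={truth - seen:.3f}")
```

Note that the number of posts never appears in the formula. In this toy model the error is baked into which posts exist at all, so collecting ten times as many posts generated the same way converges on the same wrong answer, and the gap widens as the censor and falsify rates go up.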

But it gets better (or worse, I guess): Yang models a “crisis” scenario in which users stop self-censoring and start articulating their true views (because they’ve run out of fucks to give). This is the most dangerous moment for a dictator, and depending on how the dictatorship handles it, they either get another decade of rule, or they wake up with guillotines on their lawns.

But “crisis” is where AI performs the worst. Trained on the “status quo” data where users are continuously self-censoring and preference-falsifying, AI has no clue how to handle the unvarnished truth. Both its recommendations about what to censor and its summaries of public sentiment are the least accurate when crisis erupts.

But here’s an interesting wrinkle: Yang scraped a bunch of Chinese users’ posts from Twitter — which the Chinese government doesn’t get to censor (yet) or spy on (yet) — and fed them to the model. He hypothesized that when Chinese users post to American social media, they don’t self-censor or preference-falsify, so this data should help the model improve its accuracy.

He was right: the model was significantly more accurate once it ingested data from Twitter than when it was working solely from Weibo posts. And Yang notes that dictatorships all over the world are widely understood to be scraping western/northern social media.

But even though Twitter data improved the model’s accuracy, it was still wildly inaccurate, compared to the same model trained on a full set of un-self-censored, un-falsified data. GIGO is not an option, it’s the law (of computing).

Writing about the study on Crooked Timber, Farrell notes that as the world fills up with “garbage and noise” (he invokes Philip K Dick’s delightful coinage “gubbish”), “approximately correct knowledge becomes the scarce and valuable resource.”

https://crookedtimber.org/2023/07/25/51610/

This “probably approximately correct knowledge” comes from humans, not LLMs or AI, and so “the social applications of machine learning in non-authoritarian societies are just as parasitic on these forms of human knowledge production as authoritarian governments.”

[Image ID: An altered image of the Nuremberg rally, with ranked lines of soldiers facing a towering figure in a many-ribboned soldier's coat. He wears a high-peaked cap with a microchip in place of insignia. His head has been replaced with the menacing red eye of HAL9000 from Stanley Kubrick's '2001: A Space Odyssey.' The sky behind him is filled with a 'code waterfall' from 'The Matrix.']

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

—

Raimond Spekking (modified)

https://commons.wikimedia.org/wiki/File:Acer_Extensa_5220_-_Columbia_MB_06236-1N_-_Intel_Celeron_M_530_-_SLA2G_-_in_Socket_479-5029.jpg

CC BY-SA 4.0

https://creativecommons.org/licenses/by-sa/4.0/deed.en

—

Russian Airborne Troops (modified)

https://commons.wikimedia.org/wiki/File:Vladislav_Achalov_at_the_Airborne_Troops_Day_in_Moscow_%E2%80%93_August_2,_2008.jpg

“Soldiers of Russia” Cultural Center (modified)

https://commons.wikimedia.org/wiki/File:Col._Leonid_Khabarov_in_an_everyday_service_uniform.JPG

CC BY-SA 3.0

https://creativecommons.org/licenses/by-sa/3.0/deed.en

#pluralistic#habsburg ai#self censorship#henry farrell#digital dictatorships#machine learning#dictator's dilemma#eddie yang#preference falsification#political science#training bias#scholarship#spirals of delusion#algorithmic bias#ml#Fully automated data driven authoritarianism#authoritarianism#gigo#garbage in garbage out garbage back in#gigogbi#yuval noah harari#gubbish#pkd#philip k dick#phildickian

Text

GUIDE FOR CONSULTING SERVICES USING ARTIFICIAL INTELLIGENCE

A recent AI project for a real estate management and sales company became a laboratory for consulting, and for breaking down the resistance that comes from a lack of AI culture and from the absence of local market benchmarks for comparing technology use and pricing.

However much technical experience we have, every day brings new surprises and unusual demands that we must learn from and adapt to. We are consultants, and we have to handle all of these differences with professionalism.

After this successful experience, I decided to write a guide to help our employees and the market, because what counts at the end of the day is the state of the art, customer satisfaction and problem solving.

I'd like to point out that in AI, as opposed to IT, we can apply four kinds of solution, depending on the complexity of the problem: generative AI with prompts; AI tools customized for a specific client; NoCode tools to deliver the solution; and custom applications that combine algorithms with AI technology.

The provision of AI consulting services faces significant challenges, but these can be overcome with the right strategies. Promoting an AI culture, adopting an efficient data management strategy, and transparency in the AI market are essential if companies are to make the most of this technology and achieve positive results in their operations.

CHALLENGES IN PROVIDING SERVICES USING ARTIFICIAL INTELLIGENCE (AI) FOR ENTERPRISES.

├─ Lack of Culture
│  ├─ AI is a Very New Technology
│  └─ Majority of Enterprises Lack Culture and Knowledge
├─ Difference from IT
│  ├─ Data and Information Needs to be Cleaned
│  ├─ Data and Information Needs to be Recognized and Mastered
│  └─ AI will Use Data and Information to Create Solutions
└─ Lack of Market References
   └─ No Standard Pricing for AI-based Solutions

Addressing the Challenges
├─ Lack of Culture
│  ├─ Educate Enterprises on Benefits of AI
│  ├─ Provide Proof-of-Concept Projects to Demonstrate AI Capabilities
│  └─ Develop AI Adoption Roadmaps for Enterprises
├─ Difference from IT
│  ├─ Emphasize Importance of Data Preparation and Curation
│  ├─ Highlight Need for Domain Expertise in AI Model Development
│  └─ Offer Data Engineering Services to Support AI Implementation
└─ Lack of Market References
   ├─ Research Competitor Pricing and Offerings
   ├─ Develop Transparent Pricing Models based on Project Scope
   └─ Provide Detailed Proposals Outlining Solution Value and Pricing

Pricing Considerations
├─ Cost of Data Preparation and Curation
├─ Complexity of AI Model Development
├─ Ongoing Maintenance and Support Requirements
├─ Potential Business Impact and ROI for Enterprises
└─ Benchmarking Against Industry Standards and Competitors

Delivering Value with AI Consulting
├─ Understand Enterprise Pain Points and Objectives
├─ Tailor AI Solutions to Specific Business Needs
├─ Ensure Seamless Integration with Existing Systems
├─ Provide Comprehensive Training and Change Management
├─ Monitor and Optimize AI Models for Continuous Improvement
└─ Demonstrate Measurable Business Impact

CHALLENGES

Lack of Culture

- AI is a Very New Technology: The rapid advancement of AI technology presents a unique challenge for enterprises, especially those new to the field. The novelty of AI means that many companies lack the foundational knowledge and understanding required to leverage its full potential.

- Majority of Enterprises Lack Culture and Knowledge: The absence of a culture that embraces AI within organizations hinders the adoption and effective utilization of AI technologies. This gap in knowledge and culture can lead to missed opportunities for innovation and efficiency gains.

Difference from IT

- Data and Information Needs to be Cleaned: Unlike traditional IT projects, AI projects require meticulous data cleaning and preparation. This process is crucial for training AI models accurately and efficiently, yet it is often underestimated in terms of time and resources.

- Data and Information Needs to be Recognized and Mastered: Beyond cleaning, recognizing and mastering the data and information used in AI projects is essential. This involves understanding the nuances of the data, its structure, and how it relates to the problem at hand, which is a skill set that may not be readily available within all organizations.

- AI will Use Data and Information to Create Solutions: The ultimate goal of AI projects is to use data and information to create intelligent solutions. However, achieving this requires a deep understanding of both the data and the AI technologies themselves, which can be a significant hurdle for organizations without the necessary expertise.

Lack of Market References

- No Standard Pricing for AI-based Solutions: The lack of established market references for pricing AI-based solutions complicates the procurement process for enterprises. Without clear benchmarks, it becomes challenging for companies to determine the fair value of AI services, leading to uncertainty and potential overpricing.

ADDRESSING THE CHALLENGES

Lack of Culture

- Educate Enterprises on Benefits of AI: Raising awareness and understanding of AI's benefits is crucial. This can be achieved through educational workshops, seminars, and training programs tailored to different levels of the organization.

- Provide Proof-of-Concept Projects to Demonstrate AI Capabilities: Demonstrating the tangible benefits of AI through proof-of-concept projects can help overcome resistance and foster a culture of innovation.

- Develop AI Adoption Roadmaps for Enterprises: Creating a structured plan for AI adoption can guide organizations through the process, ensuring they have a clear path to integrating AI into their operations.

Difference from IT

- Emphasize Importance of Data Preparation and Curation: Highlighting the importance of data preparation in AI projects can help organizations allocate the necessary resources and attention to this critical step.

- Highlight Need for Domain Expertise in AI Model Development: Recognizing the need for domain-specific expertise in AI model development can guide organizations in seeking out the right skills and partnerships.

- Offer Data Engineering Services to Support AI Implementation: Providing data engineering services can support organizations in preparing their data for AI, bridging the gap between data readiness and AI deployment.

Lack of Market References

- Research Competitor Pricing and Offerings: Conducting thorough research on competitor pricing and offerings can provide a basis for developing transparent and fair pricing models for AI services.

- Develop Transparent Pricing Models based on Project Scope: Creating pricing models that reflect the scope and complexity of AI projects can help ensure that enterprises receive value for money.

- Provide Detailed Proposals Outlining Solution Value and Pricing: Offering detailed proposals that clearly outline the value and pricing of AI solutions can enhance transparency and trust between service providers and their clients.

PRICING CONSIDERATIONS

- Cost of Data Preparation and Curation: The cost associated with preparing and curating data for AI projects should be considered in the overall pricing structure.

- Complexity of AI Model Development: The complexity of developing AI models, including the need for specialized expertise, should influence pricing.

- Ongoing Maintenance and Support Requirements: The ongoing maintenance and support required to keep AI models effective and up-to-date should be factored into pricing.

- Potential Business Impact and ROI for Enterprises: The potential return on investment (ROI) that AI solutions can offer should be considered in pricing, reflecting the value that AI can bring to businesses.

- Benchmarking Against Industry Standards and Competitors: Pricing should be benchmarked against industry standards and competitors to ensure fairness and competitiveness.

DELIVERING VALUE WITH AI CONSULTING

- Understand Enterprise Pain Points and Objectives: Gaining a deep understanding of the enterprise's pain points and objectives is crucial for tailoring AI solutions effectively.

- Tailor AI Solutions to Specific Business Needs: Customizing AI solutions to meet the specific needs of the business ensures that the solutions are relevant and impactful.

- Ensure Seamless Integration with Existing Systems: Integrating AI solutions seamlessly with existing systems is key to avoiding disruption and maximizing the benefits of AI.

- Provide Comprehensive Training and Change Management: Offering comprehensive training and change management support helps organizations adapt to new AI technologies and processes.

- Monitor and Optimize AI Models for Continuous Improvement: Regular monitoring and optimization of AI models ensure that they remain effective and aligned with evolving business needs.

- Demonstrate Measurable Business Impact: Showing measurable business impact through AI solutions helps justify the investment and fosters continued support for AI initiatives.

RDIDINI PROMPT ENGINEER

Text

Guys, I’m just sitting here thinking about how it is SO GOOD that AO3 doesn’t have an algorithm. There are so very few online spaces left these days that don’t. Like, can you imagine if certain stories/authors/content were promoted while others were suppressed algorithmically? And by an AI no less?? And how bad that would be for authors and readers and the culture of fandom overall?!

#grateful this space has thus far dodged AI-driven editorialization#and as an archive I imagine that won’t ever change#even tumblr does a decent job of handling this comparatively I feel like?#fandom#ao3 fanfic#ao3#fanfiction

Text

what have you done

#aitsf#aitsf spoilers#ai:tsf#ai the somnium files#ai: the somnium files#jupewter#pewter aitsf#renju okiura#nooo dont be driven to become a corrupt visage of pure love and vengeance for the phantom remains of your doomed secret lover ur so sexy ah#oops get eros and psyched losers womp womp#luxraydyne's pewter saga#my art

Note

How do you feel about the increase in really weird NSFW ads on here (advertising panels that look like sexual encounters, and AI art apps that pride themselves on porn), when the site will still take down NSFW posts from its users, even if they aren't technically sexual?

i hate all social media and its consistent prioritising of advertisers over the users, and the internet simply was a better place before capitalism sunk its hooks into it

#i could write essays about how capitalism ruined the internet.#i was actually talking to someone earlier today about how youtube was kind of effectively ruined by monetisation.#and they were raised in the soviet union and we had a bit of a talk about how art was better because it wasn't for profit.#the people who made art made it because they wanted to do it and because they loved it.#she said that communism was terrible for every aspect of life for her. people's lives under communism wasn't pretty.#but the art was better. and i feel like it's true for the internet – it was better when it was a free-for-all.#the companies didn't know how to exploit it yet and turn it into a neverending profit-driven hellscape.#people created content because they wanted to. because they wanted to make something silly to make people laugh.#not for profit. not for gain. not for numbers. not to further their career.#i miss the days of newgrounds and youtube before monetisation.#capitalism has soiled everything that's joyful and good in this world.#people should be able to share whatever they want.#people should be able to tell any story they want without the fear of being silenced by advertisers.#that's what made the internet so beautiful before. anyone could do anything and we all had equal footing.#but now we're victims of the algorithm. and it makes me sick.#i'm quitting my job in social media. i'm quitting it. it makes me too depressed. i have an existential crisis every freaking day.#every day i wake up and say "ah. this is the fucking hell we live in#i'm so sorry i feel so passionate about this.#social media is a black hole and it is actively destroying humanity. forget ai. social media is what's doing it.#i miss how beautiful the internet used to be. it should've been a tool for good. but it's corrupt and evil now.#sci speaks

Text

I hc wash and south (and by proxy, north) as all being ODSTs prior to pfl and one thing I really like about hcing them as such is that it adds another layer of depth to why they were all chosen to be a part of the recovery force.

ODSTs are a special forces unit within the marines, and they're generally used as force amplifiers and in high risk or sensitive operations. two such scenarios include the recovery or recapture of personnel and high level assets behind enemy lines, as well as deep reconnaissance and intelligence gathering. ODSTs are also used in politically sensitive operations, which pfl was following the crash of the moi.

so basically, who better to be on the recovery force than former ODSTs who already have a background doing the kind of work that would need to be done?

this also adds to some of the tension between north and south as well imo, as while they're a great team who are capable of working together they clearly have two very different skillsets: south is not portrayed as someone who has the patience necessary for long reconnaissance missions, and part of the reason why team b failed so spectacularly is because two snipers and an intelligence operative are not a good choice for a smash and grab mission. had north been replaced with south things would've probably gone way better for them, because south is actually fairly similar to wash in her "get in, get it done, get out" mentality, though where wash comes off as more methodical and is willing to take that "wait and see" approach, south throws caution to the wind and has a "we'll cross that bridge when we get there" approach.

this is probably why wash and south were (on paper at least) going to get eta and iota—south would've benefited greatly from having an ai that was afraid and anxious as it would force her to slow down and think things through more, and wash getting an ai that was happy and cheerful would force him to loosen up a bit and be less high-strung and serious.

#rvb#red vs blue#agent washington#agent south dakota#agent north dakota#related but not related but i think the reason wash and epsilon ended up in the situation they did is bc they were hyper compatible#like most of the ai are paired w a freelancer to either balance something out or to amplify a specific trait#for example north was given theta so he'd get off south's back or sigma could've helped lina be driven by more than just competition#so giving an ai that is memory to someone who already has a great memory in theory seems like it would be good. but if the memory is just a#glowing ball of trauma then. well. it would impact them a lot more than someone who doesn't have a great memory#like tbh i think delta could've gone to maine and epsilon to york bc the guy is kind of scattered and his adhd vibes could benefit from a#better memory while maine who has a habit of letting his anger and frustration get the best of him would've done well w calm logical delta
