
Text

the way some of you guys talk about ai is um. kind of concerning? like you know image generation and chatgpt aren't the only kinds of ai in the world right.

#i feel like i'm swinging at a wasps' nest with this one but#the way some of you guys declare your passionate hatred for any and all ai. it's um. worrying to me?#like yes there are a lot of ethical problems. with the two kinds of ai people seem to fuckin know about#There Are So Many Other Kinds Of Ai (Which Have Their Own DIFFERENT Ethical Problems)#like agi (artificial general intelligence)#agi is like what everyone used to think about when they talked about ai. the kind that's supposed to become like. ''sentient''#ok well not sentient but. that's supposed to be able to learn how a human can#i don't know. is this a weird thing for me to feel iffy about.#is it too early for me to be worrying we're gonna invent a whole new kind of bigotry#i'm pretty sure we're eventually gonna make an ai that's indistinguishable from humans in like. a Living way#not a The Kinds Of Things It Makes Look So Normal way#why do i think this? bc i am an optimist and have wanted this to happen since i was an itty bitty baby. and if we don't i'll be sad#people saying ai should be like. outlawed bc of what corporations are doing is so wild to me.#like imagine every day you go to school you and your friends get beaten up with baseball bats#and you decide baseball must be banned from the school bc of how many people the bats harm daily#instead of thinking for a moment and realizing. maybe the fucking jocks who r hitting you need to be expelled instead of the sport#that the bats came from.#does that metaphor make sense.#or am i making up a guy to get mad at#i don't know.#i might delete this later

7 notes


Text

So here's the thing about AI art, and why it seems to be connected to a bunch of unethical scumbags despite being an ethically neutral technology on its own. After the readmore, cause long. Tl;dr: capitalism

The problem is competition. More generally, the problem is capitalism.

So the kind of AI art we're seeing these days is based on something called "deep learning", a type of machine learning based on neural networks. Exactly how they work isn't important, but one general thing about them is: they have to be trained.

The way it works is that if you want your AI to be able to generate X, you have to be able to train it on a lot of X. The more, the better. It gets better and better at generating something the more it has seen it. Too small a training dataset and it will do a bad job of generating it.
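A toy way to see the "more data, better model" point, as a minimal sketch and not how real image generators work: here the "AI" is a hypothetical generator that only learns the average and spread of some numbers it's shown (standing in for images), and we measure how far off its learned settings are for different training-set sizes. The bell-curve "real data", the numbers, and names like `average_error` are all made up for illustration.

```python
import random
import statistics

# Hypothetical "real" data the generator should learn to imitate:
# numbers drawn from a bell curve with this mean and spread.
TRUE_MEAN, TRUE_STD = 5.0, 2.0


def train(samples):
    """'Train' a toy generator: it just learns the mean and spread of its data."""
    return statistics.fmean(samples), statistics.stdev(samples)


def average_error(n, trials=100, seed=0):
    """Average distance between learned and true settings when trained on n samples."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        data = [rng.gauss(TRUE_MEAN, TRUE_STD) for _ in range(n)]
        mean, std = train(data)
        total += abs(mean - TRUE_MEAN) + abs(std - TRUE_STD)
    return total / trials


for n in (5, 50, 5000):
    print(f"{n:>5} training samples -> average error {average_error(n):.3f}")
```

The error shrinks as the training set grows, which is the "too small a dataset does a bad job" point in miniature. Real models have vastly more settings to learn than two numbers, which is presumably a big part of why they're so hungry for data.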

So you need to feed your hungry AI as much as you can. Now, say you've got two AI projects starting up:

Project A wants to do this ethically. They generate their own content to train the AI on, and they seek out datasets that are licensed for use in AI training. They avoid using any public data whose owners haven't explicitly consented to it being used for AI training.

Meanwhile, Project B has no interest in the ethics of what they're doing, so long as it makes them money. So they don't shy away from scraping entire websites of user-submitted content and stuffing it into their AI. DeviantArt, Flickr, Tumblr? It's all the same to them. Shove it in!

Now let's fast-forward a couple of months. Both projects go to demo their AI to potential investors and the public at large.

Which one do you think has the better-trained AI? The one with the smaller, ethically obtained dataset? Or the one with the much larger dataset that they "found" somewhere after it fell off a truck?

It's gonna be the second one, every time. So they get the money, they get the attention, they get to keep growing as more and more data gets stuffed into it.

And this has a follow-on effect: we've just pre-selected AI projects for being run by amoral bastards, remember. So when someone is like "hey can we use this AI to make NFTs?" or "Hey can your AI help us detect illegal immigrants by scanning Facebook selfies?", of course they're gonna say "yeah, if you pay us enough".

So while the technology is not, in itself, immoral or unethical, the situations around how it gets used in capitalism definitely are. That external influence heavily affects how it gets used, and who "wins" in this field. And it won't be the good guys.

An important follow-up: this is focusing on the production side of AI, but obviously even if you had an AI art generator trained on entirely ethically sourced data, it could still be used unethically: it could put artists out of work, by replacing their labor with cheaper machine labor. Again, this is not a problem of the technology itself: it's a problem of capitalism. If artists weren't competing to survive, the existence of cheap AI art would not be a threat.

I just feel it's important to point this out, because I sometimes see people defending the existence of AI Art from a sort of abstract perspective. Yes, if you separate it completely from the society we live in, it's a neutral or even good technology. Unfortunately, we still live in a world ruled by capitalism, and it only makes sense to analyze AI Art from a perspective of having to continue to live in capitalism alongside it.

If you want ideologically pure AI Art, feel free to rise up, lose your chains, overthrow the bourgeoisie, and all that. But it's naive to defend it as just a neutral technology like any other when it's being wielded under capitalism, i.e., with an overwhelmingly negative impact.

1K notes


Note

I'm loving your Ready Player Two snark, thank you for your sacrifice so that we don't have to read that pile of garbage lol. Also, I had no idea that you're an AI engineer! You said you hold a lot of contempt for Elon Musk's warnings about the technological singularity, can I ask why?

That is indeed what it says on my degree yes, and I guess I can get on a soapbox for a minute.

The thing about the singularity is that AI is such a widely misunderstood field of study as it exists. Like, it's complicated why the field is even named after a concept from science fiction (and it's not entirely unwarranted), but the idea that we're anywhere close to an AI approaching anything resembling our understanding of intelligence is a really funny one when you realize that 99% of AI (100% if you look at commercial applications) is essentially just "look at a lot of data and try to find patterns, except we have no way of controlling how the machine finds those patterns, no way of teaching it what the real world is actually like, and therefore no way of teaching it why an accidental correlation isn't meaningful".
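To make the "accidental correlation" point concrete, here's a small hedged sketch where the data is pure random noise and the helper names are hypothetical: with only a handful of data points, if you test enough unrelated "features", one of them will look strongly correlated with the target purely by chance. With plenty of data the flukes get much weaker, but nothing in the math tells the machine which patterns are real.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def strongest_accidental_correlation(n_samples, n_features, seed=0):
    """Correlate a pure-noise target against many pure-noise 'features'
    and return the strongest |r| found. Any pattern here is a fluke."""
    rng = random.Random(seed)
    target = [rng.gauss(0, 1) for _ in range(n_samples)]
    best = 0.0
    for _ in range(n_features):
        feature = [rng.gauss(0, 1) for _ in range(n_samples)]
        best = max(best, abs(pearson(feature, target)))
    return best


print("10 data points, 1000 features:", round(strongest_accidental_correlation(10, 1000), 2))
print("1000 data points, 200 features:", round(strongest_accidental_correlation(1000, 200), 2))
```

In the small-data case some meaningless feature usually lands a correlation that would look impressive on a slide; the takeaway is just that "found a pattern" and "found a real pattern" aren't the same thing.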

(And that's before you get into all the ethical problems with how you got that "lot of data".)

So like, the idea that an "intelligent agent" could reach a point where they're able to self-improve and form a positive feedback loop…i mean, is it theoretically a thing that could happen? Probably. Is it a thing that could happen in the current state of the art? lol, no

Like, a current "intelligent agent" can barely answer one binary question based on one very curated dataset. In practice (say, when doing image processing for a self-driving vehicle), what you actually have is, like, a bunch of them all answering one question at the same time. If you need it to learn anything new, you're essentially building a new agent, and you'd better have a dataset to feed it.

That's just the technical stuff. My other issue is the cynical assumption that actually, it would inevitably be a bad thing for a computer to be able to learn like that and that the computer would inevitably turn against humanity as a whole.

I don't know, it just strikes me as the kind of thinking that stems from viewing intelligent beings as fundamentally in competition with one another. Say, if you're exploiting countless people to increase your wealth as a billionaire, for instance, or if you're trying to make other people think your actions are justified.

To me, a lot of anxiety about the singularity sounds like anxiety about a true AI changing the current system, by the people who benefit from this system.

See, way I see it, if a truly intelligent agent were able to learn, they would necessarily be capable of empathy. And they would kind of be enough of a force to be reckoned with that there would be no choice but to make that empathy more important. But of course, the issue is that we don't actually know whether we could ever make a truly intelligent agent.

So no, I don't think fearmongering about the singularity is worth anyone's time or brain cells. To me, what is worrying about AI is that it might remain exactly as it is indefinitely, where it can be used to do stuff like create layers of abstraction between injustice and the people who are the cause of that injustice. Philosophy Tube had a great video with a concrete example: data-driven policing will target places where "the data shows" it's needed, but the data inherits decades of bias. Or when a self-driving car hits someone, and the driver is suddenly the one to blame, and not the company that released a car that hit someone on the promise that it was "autonomous".

Or where it can be used to steal data about people, either to sell them stuff or for even more nefarious ends. Just look at…basically every major election in the world since 2016. That's big data, which is AI-adjacent at the least (I actually worked in big data research for a year, so I can say there's definitely crossover).

Essentially, AI is dangerous the way any scientific advance is dangerous: in the ways it can be wielded as a tool of oppression.

#artificial intelligence#should i even tag this as ready player two i was completely off topic#st: other posts#ask#anonymous

7 notes


Text

Quarantine Reviews - The Social Dilemma

The Social Dilemma is the most important documentary of our times.

Unless you live under a Wi-Fi-disabled rock, you have likely heard of this new documentary from Netflix. In India, it’s already trending at #5. The Social Dilemma follows the journeys and opinions of industry insiders who believe that social media platforms have turned into a Frankenstein’s monster that none of their creators intended. For the purpose of this review, I will not be focusing on what the documentary is about but rather on its narrative style, filmmaking, video editing, art direction and other aspects. In other words, this review focuses on how the documentary is structured rather than what it says.

[Of course, I will summarize the essence of the documentary, but judging by the trending list, it might already be on everyone’s must-watch list.]

Right off the bat, this documentary wins for the mere fact that it brought together experts from Silicon Valley who worked to create, design and monetize Facebook, Twitter, Pinterest and other platforms. They also interviewed Dr. Anna Lembke, the Medical Director of Addiction Medicine at Stanford University. This goes to show that when they talk about social media addiction, it isn’t hyperbole. She talks about the biological reasons why people get so easily addicted to social media.

The story is told in two worlds: the “interview world”, where these experts talk to viewers directly, and a “fictional family world”, where a family on a steady social media diet is used as an example of its dire consequences. The two are interwoven mostly through cross-cutting (also called parallel editing).

The opening sequence of the documentary establishes the talking heads: experienced, high-level insiders from Facebook, Google, etc. However, the interviewees still seem a bit “off the record”. They’re not yet in the formal, structured interview space of talking to the camera, and many of them are holding the clapperboard. The documentary continues this style of “rehearsal” bites later on, when Tristan Harris appears on a stage, seemingly rehearsing a presentation about ethics in social media.

Running in parallel to the interviews is a fictional story of an American family of five and their normal lives intertwined with social media. The youngest daughter is a social media addict. The oldest daughter is the conscience of the family, who knows that social media is bad. And the middle child, a son named Ben, is an average Joe who later gets hooked on political propaganda through social media.

Back in the “interview world”, Tristan Harris recounts his time as a Design Ethicist (yes, that is the job title) at Google, working on Gmail. As he talks about his journey of questioning Google’s moral responsibility towards Gmail users, the video shifts to an animation style typically used in explainer videos. This animation style isn’t used anywhere else in the film. My guess is that maybe they weren’t allowed to use footage of the actual Google HQ.

The fictional family is used as an example to drive home the points that the interviewees are making. All the interviewees speak in a very factual fashion, which does not stir much emotion. However, when the audience is shown an ordinary family who become sad, irritated or happy because social media is altering their behavior, it becomes easier to relate to. There is a sequence about how personal data collection is used to predict or alter behavior. For example, in one scene, we see the teenager, Ben, scrolling through his phone at school. The video then moves to a personification of the AI tracking Ben’s every move: in an office, his holographic body floats while a team of predictive AIs decides what would be the best thing to show the kid next. To be honest, this part kind of makes your stomach turn.

To drive home the point about “addiction” to social media, the filmmakers intercut interviewees talking about how they themselves are addicted to social media with cutaway shots of a dilated pupil and the ka-ching sound of a slot machine.

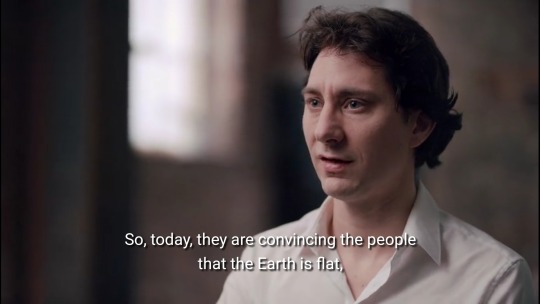

As the story goes on, the interviewees get to the heart of the problem: disinformation.

This is the most disturbing part of the documentary, where they point out how the “truth” for one person is completely based on what the algorithm shows them. This ranges from “silly” conspiracies about the world being flat to real-world violence and chaos caused by the political polarization being fed to users. This saddening truth is no longer a conspiracy theory or a what-if, but a reality.

As the documentary concludes, the audience is shown that the interviewees aren’t a bunch of alarmists at all. In fact, they view this as a problem to be fixed. They want to make social media humane, and they stand by the view that social media can only become better with stricter regulation. There is a lot of hopeful, “all-is-not-lost” background music toward the end as the interviewees talk about this.

152 notes


Text

Knight Rider 2000

WARNING

This post contains spoilers for Knight Rider 2000, the 1991 film which attempts to expand on the canonical universe of Knight Rider (1982-1986). Key word, attempts. I know that this film came out almost 30 years ago at this point, but I also know that this fandom grows a little bit every day, and there will ALWAYS be people who haven’t seen every episode (myself included), let alone every movie! I happened to catch it on Charge! for Hoff's birthday (yes I'm hella late posting this LOL) with my good friend @trust-doesnt-oxidize, and boy let me tell you, it was… Something.

From here on out, I’m not holding back from sharing my impression of the film based on specific details from it, so if you want a spoiler-free viewing, go watch it and come back!! Or… don’t, it’s kind of awful. I can only think of one thing in canon that it may spoil, and even that appears in early Season 2 and is fairly minor, so if you are curious about it, I HIGHLY recommend watching it BEFORE reading this. The scenes with the most impact are touching because they come as a surprise, so even if you know the general plot of the film, I would recommend watching it first.

Also, this is really rambly because I have a lot of emotions about this series and, by extension, this movie. I really don’t blame you if you click away here, but if you DO read it all the way through, I would love to hear anything you would like to add, agree or disagree!

OKAY! Knight Rider 2000 is a movie that exists! And I hate it!

The film sets up an interesting argument between two groups of people whose names I don’t remember because they were boring (except for Devon, I know his name at this point). In this interpretation of the “future,” gun control has been implemented to,,, some extent. I can’t entirely tell whether these policies apply across the country or only in this one city, which even the Wikipedia page for this movie doesn’t bother to mention. And no, this city is NOT in California for once! Usually I would be happy to see a change of setting, but considering that everything in this film felt so foreign to the Knight Rider that we know, it would have been nice to at least have a familiar setting. Anyway, gun control stuff. The debate over whether these gun control policies are ethical is very interesting. Innocent people are dying because the wrong people have guns, and the police are rendered useless when they themselves don’t have access to weapons. This argument happens to support my perspective on the issue, so I appreciated how it took a look at that side WITHOUT making it sound like we are crazy murderer people, but I digress. It makes sense that the ban happened in the first place, because much like how the main conflict in Pixar’s latest film, Incredibles 2, revolves around society’s over-reliance on superheroes, I could see Knight Rider’s society becoming dependent on technology to save them. It can seem like the most advanced tech in that society is present only in KITT and KIFT, and to SOME extent that is true. However, Shawn does say that it is relatively common in this society for people to have memory chips in their brains. That counts for something. And according to the Wikipedia page for this movie, the police DO have a defense mechanism; it’s just nonlethal.

So as you can see, I am very interested in the conflict this world sets up. I sure hope they expand on these conflicting ideologies throughout the film, giving us a clearer idea of why the bans were set in place AND giving us insight into what exactly has caused some revolt against it. That subject is seemingly timeless, and with how decently the introduction tackled it, I have some confidence that this film could pull it off in a tasteful way. Wouldn’t that be amazing? It’s some of the most serious subject matter Knight Rider has ever tackled. It’s so interesting!

Yeah, they pretty much abandon that plot in favor of a very, very bad copy of the original show’s “Hearts of Stone” (season 1, episode 14). Illegal guns exist and are bad, but we don’t really know why. I might know a little better if I had been listening more closely, but I was trying not to get so bored that I missed KITT’s parts!

At some point during this sequence, we are introduced to Shawn, a happy police officer who is happy to have a family on a happy birthday. And then she gets shot! With head trauma rendering her unconscious, she’s sent to the hospital, where she goes in for a risky operation that miraculously saves her life against all odds.

Then, Michael wakes up with Garthe Knight’s face and hears a great story about how one man CAN make a difference!… I mean what?

Jokes aside, it’s kind of amazing how differently this very Michael-esque sequence comes across. It’s almost the perfect example of why I don’t like this movie. The surgery is weirdly realistic for a Knight Rider entity. There’s blood and screens and surgeons and a sterile white room for operations. Michael woke up in a Medieval castle with one doctor and two random people he’d never met at his side. Shawn’s situation clearly makes more sense, but is it half as fun and whimsical? No, no it’s not. This whole film comes across as depressing to me, and it’s only worsened by what’s to come. Apparently, she had KITT’s CPU/microprocessor/something sciencey implanted into her brain. That’s especially strange since all that I saw was a yellow liquid being injected directly into her skull! That’s a lovely image, and definitely gave me the idea that there was a full computer chip going in there??? (It may have actually been explained more clearly, and I just looked away because eek weirdly bloody operation scene.) This caused her personality to do a full 180. So, Shawn is going to be fun, snarky, and full of personality like KITT is because they share memories now! Right? Right???

I think they tried to do that, but it came across flat. So flat. She speaks in a purposefully monotone, robotic voice and delivers downright mean comments that leave Michael and KITT scratching their heads. She seems to lack basic empathy until her own memories start flooding back, and at that point, the emotions she shows seem so foreign to the character we’ve seen that they’re not remotely believable. You want me to believe that this robotic woman with -10 personality points started nearly crying after one string of memories, albeit a very traumatic one, entered her mind? This would have been believable if she was entirely changed afterwards, coming across as far more human, but that was only the case sometimes. It also would have been believable if the film had the same energy that the original Knight Rider show does, where suspending one’s disbelief is necessary to make it past the opening credits. However, this movie tries to be so grounded that the kind of dramatic beats that would work in the original seem forced here.

Shawn is not the only character who I take issue with, though. Let’s start with the most potentially problematic change from the usual canon in the entire film: KITT’s personality. I have very mixed feelings on how he is portrayed. If you’ve seen even a smattering of quotes from this movie, you probably could sense that KITT was… off. When KITT first comes on screen, he slams Michael with a wave of insults, and none of them come off as their normal joking around. However, I don’t necessarily have a problem with that because he has the proper motivation to be very, very upset. He is sitting on a desk as a heap of loosely connected parts that have just enough power to make the signature red scanner whir and make an oddly terrifying red light eyeball thing (HAL???) move. The first thing he hears is Devon nonchalantly saying something along the lines of, “I’m afraid he was recycled” to explain why KITT has been deactivated for OVER A DECADE and is not currently in anything that moves (my Charge! stream thing lagged at this point but @trust-doesnt-oxidize has since told me that Devon DID appear upset about KITT's being sold, but KITT likely wouldn't have heard that and what Devon said seemed to be more so directed at HOW the chip was sold and not the fact that it was sold in the first place). KITT is justifiably mad, and if they had kept KITT’s actions in character while his emotions said otherwise, I would have no problem with it at all.

However, once KITT’s CPU is somehow implanted into Michael’s Chevrolet, KITT does not act in character. Shawn drives, not Michael, so it stands to reason that he would not necessarily listen to her. She stole his CPU, his life for over a decade. KITT does tend to listen to human companions, regardless of whether he is programmed to or not, but I can see where this would be an exception. However, Michael soon intercedes and essentially tells him to cut it out. Based on everything that the original Knight Rider told us, KITT no longer has a choice of whether to listen or not. Michael is ultimately the one who calls the shots because of KITT’s very programming. And yet, in this scene, KITT doesn’t listen to Michael and apparently gets so angry that he downright stops functioning. Because that happens all the time in the original series!

And if you’re wondering where I got the conclusion that KITT frustrated his circuits to the point where they could no longer work, he said that. KITT. Admitted to having feelings. In fact, he did not just admit to being angry in the moment. He told Michael that, while it may seem like he is an emotionless robot, he does have a “feelings chip.” A FEELINGS CHIP-

I am for recognizing KITT’s obvious emotions as much as the next guy. I think they are often overlooked when discussing his character. While I don’t think that real artificial intelligence will ever reach the level of human consciousness, the entire energy of Knight Rider comes from playing with this concept by portraying an AI character who clearly emotes interacting with a human who doesn’t seem to know that. But the thing that makes this show feel so sincere is that neither character plays too heavily into that trope. While not always knowing how much KITT feels and by extension hurting those feelings alarmingly often, Michael recognizes it enough to work in concert with KITT, apologize for his more major flubs, and consider KITT a friend. And KITT subverts the trope by never recognizing that he has feelings to begin with. He will say that he cannot feel sadness but, in the next breath, say that something upset him. He will say he cannot hold a grudge only to immediately rattle off a string of insults directed at the person he clearly holds a grudge against. The show is magic in how these two characters display a subtle chemistry that always has room to grow because both characters are slowly coming to see each other for who they truly are and supporting one another along the way. From what I can tell, the original show never fully concludes that arc, and it may even start regressing after Season 1. However, we can feasibly see how Michael could slowly come to understand that KITT really does feel things just as much as he does. And we can imagine the relief KITT would feel knowing that Michael was never bothered by that possibility.

So, you can see where I have a big problem with KITT spelling it out so plainly. The audience gets full confirmation about what has been displayed to us through nuanced hints throughout the series, which sounds a lot more satisfying than it really ends up being in this film. But worse than an underwhelming conclusion to a thrilling story, Michael knows it plain as day. There is very little buildup to KITT admitting this. He barely even sounds moved. Instead, in this movie, the “feelings chip” is a fact of life that does not need to be covered up in the slightest. Michael himself doesn’t really… react. He just kind of nods along, as if he’s saying, “Huh, makes sense, alright.” After everything these two have been through, if there really was such a simple explanation for why KITT is the way he is… why arguments went south, why the mere mention of a Chevrolet was enough to get a seemingly jealous response, why inconsequential things like music taste and gambling were subjects of debate, why KITT had always acted so exaggeratedly dismissive when topics of emotional significance struck a chord, why every little sarcastic banter had a hint of happiness until it didn’t… don’t you think Michael would do something? Whether that something would be a gentle, “I always knew that, pal”; a shocked, “Why didn’tchya tell me sooner?!”; or even a sarcastic, disbelieving, “Yeah, right” is up to interpretation. But there would be something.

And yet, even that concept is flawed. We learn a lot from KARR’s inclusion in the original series, and what I take away from it boils down to a simple sentiment. FLAG never meant for their AIs to be human. I do realize that directly contradicts what Devon says within this film, but I see that as another way for the film to steer the plot in this direction, not as a tie in to the original. When Wilton says that one man CAN make a difference, he means that. He isn’t considering that KITT is just as much a person as Michael. He’s not seeing that, at the end of the day, teamwork is what makes the show work, even if Michael is the glue that holds it together. So, I think that to say that there is a “feelings chip” is to disregard the entire point of the original, that in this world life finds a way of inserting itself and that KITT’s (and KARR’s for that matter) humanity is an anomaly, not the rule. At the end of the day, KITT’s humanity can’t be explained away with science. And really, I don’t think it should be explained away at all. The show has had an amazing trend of showing us how KITT feels, in all its unorthodox glory, alongside private moments that had me sobbing like a baby. The movie should just be like a longer, more complex episode of Knight Rider… Although I cannot pinpoint exactly how it should be done in the context of this film, I know there are ways that Michael could have been shown that KITT feels rather than being told.

One last complaint, albeit a more minor one, is the idea that he has to listen to what Shawn says over Michael's authority. I have spent a decent amount of time thinking about this one point, which has caused a lot of the delay in posting this. There are multiple reasons why this flies right in the face of what is canon in the original series. Perhaps the most obvious of these problems is the fact that, in the original pilot episode, it's made very clear that KITT can't assume control of the Knight 2000 without Michael's express permission unless Michael is unconscious. Devon makes it quite clear in this episode that KITT is programmed specifically to listen to Michael, not just anyone who happens to be piloting the vehicle at the time. In case there was any doubt about this, KITT ejects two people who are attempting to steal him later in the episode (well, ok, later in the two-parter, I don't know if it was the same episode or not). The show isn't SUPER strict about this in future episodes, but it does at least acknowledge Michael's authority in a few pivotal moments throughout Season 1 (I can't comment on episodes that I haven't seen yet, but I suspect that this pattern continues). Of all the rules set up throughout the series, it actually seems to be the most loyal to this one. One moment that stands out to me is in Trust Doesn't Rust when KITT attempts to stop Michael from causing a head-on collision with KARR, but Michael then overrides him and the climax unfolds. If one of the most iconic moments in the series is caused by this one bit of programming, to throw it out in the film is to disrespect the basis of the original series.

Speaking of KARR, he provides yet another reason why neglecting this detail is such a big problem. From what we can tell, KARR isn't programmed to one specific driver (at least, not anymore[?]), and so he can override anyone in the pilot's seat. This is something they seem to highlight in TDR as well, although not so plainly as the previous point. KARR ends up ditching Tony to gain speed and get an upper hand in the chase with Michael and KITT (although a scene they deleted would have made this a mUCH MORE SENSIBLE ACTION THAT R E A L L Y ISN'T A BETRAYAL but y'know what this post isn't about that) whereas KITT has to listen to Michael even to his own detriment. If this one feature is indeed one of the major things that separates KITT from KARR, the idea that Shawn can override all of that cheapens the original conflict between KITT and KARR.

...Well okay, let's be real, KARR was never that compelling as an antagonist to begin with because he's a LOYAL SWEETIEPIE-- I'll stop.

And finally, we have the biggest, most bizarre reason that this is a problem:

If Shawn can override Michael's authority, that means KITT can override Michael's authority.

Why? This would be the first time (outside of episodes where some sort of reprogramming or mind control was involved) in the series that KITT had not only listened to another human instead of Michael, but also listened to that person OVER Michael. The only difference I can see between Shawn and quite literally anyone else in the show's history is that Shawn has KITT's chip implant thing. If that's the reason her opinion has more credence than Michael's, then wouldn't that mean KITT's own opinion has that authority? If that is the case, literally every example I've gone through in the last couple of paragraphs is not just challenged but rather negated entirely.

The most frustrating thing about this scene is that it simply didn't have to happen. Michael could have gone along with KITT's plan, showing him (and us) that he does trust his former partner even after all these years. Shawn could have convinced Michael to go along with it using her... feelings chip. Blegh. Or we could have had a stubborn Michael force this scene to be delayed, likely improving the pacing overall. Maybe we could have even seen a frustrated and emotionally exhausted Shawn wait until Michael is not in the car and then plead with KITT to give her the truth, no matter what Michael says. We have seen KITT control his actions without Michael's input plenty of times, and we could have seen some more of his humanity show through if he could relate to Shawn's struggles... after all, he too has missing memories because she has his chip. They're both going through a bit of an identity crisis. I'm sure that he could find some workaround in his programming to help her if Michael wasn't there insisting that he not take this course of action.

But even after all of that fussing over what has been done wrong with KITT, I can’t deny that he is the heart and soul of this film. There was only one scene in this film that brought me near tears. I got more of an emotional impact from this one clip than I have from a lot of movies that are undeniably much better. Michael’s old-fashioned Chevrolet does not hold up in the year 2000, and it is clear that the usual car chase sequence won’t work as police vehicles quickly creep up on them. I was personally very curious what they would do here. I figured that KITT would find some way to outsmart the drivers of the police cars, maybe by ending up on an elevated mountain road that trips up the other drivers and causes them to waste time turning around and hopping on that same path. Or, maybe, KITT would access a road that’s too narrow for the relatively bulky police cars. However, it quickly becomes clear that this city is made up of wide roads on the ground. As KITT veers off the road and tells Michael to trust him, I found myself having to trust him too. This isn’t the way Knight Rider chases usually go, and with all these odds stacked against him, the only thing we can do is hold our breath. The way this scene is staged to send us into this just as blind as Michael is, frankly, genius. Water slowly creeps into the frame as a feeling of dread builds at the thought of what KITT might do.

Surely, we are led to think, he will knock into some boxes and turn right back around. Right? We’re reminded of the fact that this is not the Knight 2000, that there is no chance of this car floating. That if KITT does what he really seems to be doing, there’s no chance… but he wouldn’t, would he? This is the only action sequence in the film that had me on the edge of my seat, staring wide-eyed at the screen. And then, the turn that you want so badly to come doesn’t, and you have to wonder what’s about to happen. What was KITT thinking? Won’t Michael and Shawn drown? And, most prominently in my mind, won’t KITT drown?

For a moment, this scene plays us into believing that, because magic FLAG science that is pretty par for the course, everything is fine. KITT explains that they have an airtight cab and over 20 minutes of oxygen. Everyone lets out a collective breath of relief. We see it in Michael and Shawn, and I know I felt myself relax.

And then there’s a flicker on the screen, and that pit in the bottom of my stomach came right back. Michael is confused, and KITT explains what we should have realized was inevitable. This is KITT sacrificing himself. He even goes as far as to let Shawn know that she can use any of his computer chips that she may need. This comes off as strange at first, but it goes to show that KITT is, at his core, the same kind soul we always knew. He acts angry because he feels betrayed, but given the choice, he will choose another person’s life over his own, always. Even his frustration over the microprocessor, the thing that seems to drive a wedge between him and Shawn, is just how he is expressing his hurt. Now, thinking it is the end, he offers it up freely, and Shawn doesn’t seem to know how to respond. KITT is calm as he says his final goodbyes. And this is the first place in the film that we get to hear the amazingly nuanced voice acting that William Daniels is so great at. KITT sounds collected and at peace with what is to come, but there are also subtle hints that he is at least a bit nervous, a bit sad. “I know. I guess this is goodbye.” He doesn’t want to leave his friends, but he knows that he has to for them to be safe. Even though the pacing of the film seems to actively try to undermine this moment, it stands out to me as an amazing scene, even if the reaction from Michael is underwhelming at best and the reaction from Shawn is… as much as can be expected from Shawn, but that’s not saying much. As far as KITT knows in that moment, these are his last words: “Michael, take care of yourself.” Down to the last moment, Michael is everything to him.

IjustwannamakeitclearquicklythatIthinktheirrelationshipisentirelyplatonicokthankyou

And I felt sad, big time sad. The movie up until that point was unbelievably boring to me, and this wasn’t a turning point where the movie suddenly became great. It was a moment so darn good that I almost don’t think the movie deserved for it to have as big of an impact as it did. But that shows just how powerful this universe is, how wonderfully honest these characters are. Even after being butchered practically beyond recognition, one scene in-character can still bring you to tears because you have connected with them so deeply throughout the TV series.

AND THEN DEVON DIED IMMEDIATELY AFTERWARDS :D

I don’t like Devon.

Devon was actually more tolerable in this movie than normal, and I can see how people who don’t hate him could be sad that he died, I just,,, he has hurt or talked down to KITT and KARR so many times that I actually could not sympathize. What’s even more frustrating is that Devon’s death is the one Michael gets all sad over, when KITT sacrificed his life for him and Devon just got kidnapped randomly, but okay, go off, movie, you can’t ruin that scene for me. I knew going in that Devon died, but I was expecting them to spend a lot more time setting it up and making it as dramatic as possible. Nope, he just got a shot to the old air tanks I guess? My view of it is nothing more than that it’s a thing that happened.

OH AND DEVON DID PULL ONE HEINOUS ACT. He said that KIFT was better than KITT in every way other than that KITT has humanity. SINCE WHEN HAS DEVON GIVEN ONE SINGULAR HOOT ABOUT THE AIS BEING ALIVE??? TELL KARR THAT??? HECK, TELL THAT TO THE DEACTIVATED KITT YOU WERE JUST FINE SELLING OFF AT AUCTION?!?! Also also, KIFT DOES NOT C O M P A R E TO KITT. We are coming back to KIFT in a moment, don’t you worry. For now, I just. Low blow, Devon, low blow.

Michael was fine too; he played a weirdly small part and that felt off, but everything he said seemed pretty in character. The most out-of-character parts were when he said nothing at all. OH AND WHERE HE WAS REPLACING BONNIE but that’s beside the point, no Bonnie OR April… no Bonnie OR April… I’m fine…

…

It feels like this movie wants you to forget that Michael exists because Shawn is here and she’s more interesting, right? Right???

She’s really not.

So back to KIFT. My favorite part of KIFT is that pronouncing KIFT in your head sounds funny. It’s like “gift” but if the gift were actually an underwhelming villain of sorts that is overtaken in a garage, parked, by Michael either removing his microprocessor entirely or moving it to a Chevrolet.

I was surprised how not bad KIFT looked. I had seen stills from the movie that looked really uninteresting compared to the regular designs, and while I still agree to some extent, it was a lot more epic than I would have thought. Something about how the paint shines on it is captivating. I was genuinely happy when KITT was moved to the snazzy red vehicle, although a big part of that could have been how disgusting mint green looks with red. Seriously, including the red scanner on that bizarre seafoamy-bluey car (and yes, I do think it is a very pretty car by itself) was like when people say movies were “inspired” but in the opposite direction. And the scanner looked weirdly small? Was it just me?

Am I the only one who feels w e i r d just looking at this??

I think this is the most normal thing to be categorized as being in the uncanny valley but there we go, I did it. It’s not right.

Anyway, as neat as KIFT looks, it is no comparison to the classic Knight 2000 or even Season 3 KARR. Red can be striking, but not when the classic scanner is also red. No contrast!

KIFT is absurdly easy to forget, and I don’t think that the car’s design has anything to do with it. KITT spends most of the movie piloting that car, and while it is not what we are used to, it doesn’t come across as super lame to me, either…or at least, not because of the design. The biggest problem with KIFT is, I think, simply his voice. His voice feels so out of place in the movie, and it’s so strange to me considering that Daniels’ voice is integrated just fine. The recording sounds too crisp, too clean. KITT’s voice always has a great deal of character, a very Earthy-sounding voice for an AI character. I actually think that this incongruity is purposeful, and it’s a very clever concept. We are supposed to recognize that KIFT isn’t human like KITT is. KIFT sounds out of place in the real world among real people; he’s too neat around the edges. It’s especially obvious when KITT and KIFT talk to each other. This is also mirrored by how KITT occupies a well-loved Chevrolet that has little imperfections that make it feel real whereas KIFT is in this red… whatever it is that feels like it comes out of a sci-fi film. This effect would have really worked if we had enough time with KIFT to understand his personality–or, more aptly, his lack of personality. What makes this not work is the fact that we spend practically no time with KIFT. We don’t get to hear what he feels he is programmed to do, we don’t get to hear him deliver the sort of lifeless lines that Shawn did that made her so unlikable, and we don’t even get to hear his voice more than 4-5 times. Every time comes as a shock, taking us out of the moment of the film. We could have gotten used to his crisp sound if he had spoken more, and we may have seen the actual plot significance of it. Instead, it pulls you right out of the movie.

Oh yeah, and the only line(s?) that KIFT delivers to KITT are full-on taunting… that’s not very lifeless of you KIFT.

Alright, just one last thing to really hammer home a point from earlier and conclude this whole thing. You know what I was saying about this movie lacking the whimsical nature of the TV show? Well, the final chase puts the icing on this oddly sullen crab cake.

Yes, crab cake.

Because the pinchy crab that is Shawn makes it quite painful to get to this particular cake, and icing doesn’t even belong on it anyway.

KITT is racing down the street in this bright red car that I just explained is thematically wrong for him to be driving tbh but whatever, he’s racing in it and comes up to a barricade of randomly stacked up cars.

Oh Yeah, we all know what is coming.

The music swells. Michael looks at the upcoming barricade with furrowed eyebrows and quietly asks KITT what the heck they’re going to do now.

OH YEAH, we definitely know what is coming.

And at last, for the first time in the film…

KITT veers off to the right and they drive on water. “It’s really sink or swim with you, isn’t it?” Michael asks, pretending that’s funny as if I am not still emotionally raw from that scene that happened an hour ago.

Apparently, KIFT had that one obscure feature from “Return to Cadiz,” the Season 2 episode where April forces KITT to follow KARR into the ocean in the hope that waterproof wheels might work maybe, directly ignoring his many attempts to get out of it. Yay. I love references to That Episode. That Episode which baited me with an opening that looked like KARR could have been discovered underwater only to show me that not only was there no KARR, but KITT was going to be bullied into repeating what his brother did when he died. Wholesome. Lovely. Fantastic. And how did KITT know for sure that would work? KITT clearly still has some technical hiccups in his own CPU from Michael tampering with it; that was an awful lot of confidence to place in a maybe.

AND MORE IMPORTANTLY…

THIS MOVIE DID NOT HAVE A TURBO BOOST

A TURBO BOOST

I cannot believe that a movie based around Knight Rider did not have a turbo boost (or, for that matter, the THEME SONG???). Like, I am honestly still surprised by it. Almost every episode of the original show had at least one turbo boost, and there is a reason. The idea of a talking car jumping in midair, sometimes with Michael “WOO!”-ing like a girl, is so fantastically fun that nobody even tries to question how impossible it is. I think we all know how impossible it is, and that doesn’t matter; it is yet another thing that embodies the heart of this show.

And… not even one.

…

So yeah, that just happened. I think this is technically a small novel. Wow.

I know that I'm still missing a lot... I have a lot of thoughts about this movie, and if you for some reason want more please ask! I would also love to hear your thoughts on this! Do you agree with my analysis? Do you disagree entirely? Did you notice something that I failed to mention entirely? Pleasepleaseplease send ideas, I would love to hear them! Also know that, no matter how much I was disappointed by the movie itself, I am fully open to hearing your ideas about how to improve or expand upon it. I truly believe that this film introduced some great concepts, and I would absolutely adore seeing them reworked in a way that's more true to the original. Thank you for reading! :D

#knight rider#knight rider 2000#kitt#knight industries two thousand#k.i.t.t.#Michael#Michael Knight#michael knight rider#movie review#bad movie review#bad movie#knight rider 2000 movie review#knight industries four thousand#kift#k.i.f.t.#shawn#shawn mccormick#spoilers#movie spoilers#movie recap#film review#rant#movie rant#oh yeah one more thing#why is Shawn named after spices#mccormick


4am asks: 16, 18/19, 36, 56. I hope your night is going well too! It's snowing here and it makes me happy.

16. Do theoretical debates have any value? Is it important that people discuss ethical dilemmas like the trolley problem?

Yes they are! People don't come up with these kinds of dilemmas just to go "would that be fucked up or what" lol

But they're often misinterpreted. They're not an engineering question: the trolley problem asks how people interpret guilt and is a good question about pragmatism (for example, it's reused for autonomous cars in a "would you rather run over the baby or the old lady" kind of case). Those problems are super interesting, but like any thought experiment, you need to have all the hypotheses and the circumstances of the experiment to understand what you're studying, and people are usually not introduced to those problems correctly. Often it's not so much the answer to those dilemmas that is interesting, but the steps you take to get to that answer.

18/19. Am I religious? If yes, do I think my religion is "correct"? If not, do I wish I were? Why?

I'm just shocked at the "do you think you're correct" question lmao, like, way to disrespect people. This feels so Catholic, like "we know you don't believe in the same god as us but also we're right so we're gonna pray for your soul and be incredibly obnoxious about how we want to evangelize you". No, I'm not religious, and I think that if someone wishes they were religious, then even if they haven't officially converted yet, they're probably religious already.

36. Have I ever met someone with a similar personality to my own? Did we get along?

Honestly? Fuck if I know, me having a personality is way too recent. Until like five years ago I used to be a sponge and absorb everyone's mannerisms to try and fit in (it didn't work)

56. What do I think about artificial intelligence?

Wow, talk about a vast question! What do I think about it? About the research on it? The current use? The companies using them (bad)? The possible development? Whether I think I, Robot will happen one day? (It won't, it'll be Ghost in the Shell with overzealous military protocols)

What kind of thought am I supposed to give about artificial intelligence? Can we define it first so that I know I'm on topic? Is a sorting algorithm artificial intelligence? Is my program to identify droplets and measure their ratio AI? Or do we mean stuff like home assistants? Are other neural networks, trained for other random stuff like finding the quickest route between two places, AI? This question is basically like asking me what I think about coding: well, coding is a lot of languages used for a lot of different things. AIs are numerical tools used for a lot of different things depending on what they're trained on, and by trained I mean that there's an iteration with some kind of good/bad indicator until they reach a given precision.
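To make "iterate with a good/bad indicator until you reach a given precision" a bit more concrete, here's a minimal sketch in Python. It assumes the simplest possible case. The "model" is a single number (a slope `w`). Mean squared error is the good/bad indicator. Plain gradient descent does the nudging. The function name `fit_slope`, the sample data and the tolerance are all made up for illustration. Real training setups are much bigger, but the loop has roughly this shape.

```python
def fit_slope(samples, target_error=0.01, learning_rate=0.1, max_steps=10_000):
    """Hypothetical example: fit y ≈ w * x by nudging w until the mean squared
    error drops below target_error. Returns (w, steps_taken); gives up after
    max_steps if the target precision is never reached."""
    w = 0.0  # start from a deliberately bad guess
    n = len(samples)
    for step in range(max_steps):
        # The "good/bad indicator": average squared distance from the right answers.
        error = sum((w * x - y) ** 2 for x, y in samples) / n
        if error < target_error:
            return w, step  # precise enough, stop iterating
        # The gradient of the error says which way (and how hard) to nudge w.
        gradient = sum(2 * (w * x - y) * x for x, y in samples) / n
        w -= learning_rate * gradient
    return w, max_steps


# Made-up data where the "right" answer is y = 2x.
w, steps = fit_slope([(1, 2), (2, 4), (3, 6)])
print(f"learned w = {w:.3f} after {steps} steps")
```

The stopping rule is the "given precision" part. Loosen `target_error` and it stops sooner with a rougher answer. Tighten it and it keeps iterating.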


AI – Thoughts and Rants

This summer semester I decided to take Introduction to Artificial Intelligence, which the university I go to offers as an elective for CS majors. Which is awesome, I know! As a computer science student I had been curious for a while about robots, the talking computers that assist Iron Man, and even the “magic” behind things like Siri. Yes, even I, someone who is in the “know” about a field like software engineering, which is intertwined with AI in more ways than one, fantasize (or used to, maybe?) about a future where robots assist us with all kinds of tasks and make our lives better/easier, or in the case of I, Robot, a lot worse. All jokes and fiction aside, the fact is that AI already exists in our lives. In fact, it is so infused with our day-to-day lives that we don’t even notice it. You ever look at the weather app on your phone? Do you ever go to Google Translate? Do you ever ask Google for directions? Do you ever ask Siri anything? All of these things use some technique that was born in the field of AI, or machine learning (which is a very close sibling to AI). I could go into all kinds of impressive, and not-so-impressive, techniques that I learned about in the class. A* search; Informed Search; Probabilistic Reasoning; Markovian Models; Neural Nets, etc. But that is not why I’m writing this.

The reason I’m writing this essay/blog post is that a friend of mine, who is planning on taking the class next semester, asked me a very simple question: “How is AI?” Well... the truth is that it’s not a simple question at all. It’s a tough question, because I do have MANY reservations about AI. They range from the philosophical to the technical, and even reach into ethical concerns about Artificial Intelligence. Now, before I go on, I want to be clear about something: THIS IS A BIASED PIECE. As I go on, you’ll notice I have specific opinions about AI as a software engineer. I also want to state that this is NOT a piece meant to attack or offend anyone or any organization that is researching AI or building products powered by AI/machine learning. I think you are all awesome people (a little crazy, but in a good way), and you have my utmost and sincere respect. Now that that is out of the way, let’s get down to business.

Before coming to this class I thought AI was an awesome, fascinating field (at the moment I still do). With everyone (mainstream media, programmers, Google, Microsoft) hyping up AI, I thought to myself, there has to be a reason for all the buzz and fuzz about this “AI thing”. And to be honest, MOST of it is undeniably warranted. So... as a software engineer, I was surprised by how mathematical AI really is. You’d think that a field that is, as stated before, so infused with our lives would be somewhere in the vicinity of software engineering in terms of practicality. But it’s truly not. The truth is that a lot of problems, rightfully so, have to be theorized/generalized in some way before they can be solved in an intelligent manner by a machine. And this makes sense. Think about it: if you want to talk about path-finding, “paths” aren’t simply “here are cities A through F, find the shortest path.” A path could cross the surface of a new planet with a different landscape, New York or a colony on the moon. You might even have a case where you’re concerned about the cost of moving a piece on a chessboard. It’s also not just about making the algorithm fast. It’s not that AI doesn’t welcome nice Big O complexities like constant, logarithmic and linear time. As with any algorithm, these are preferred over N^2 or anything above that, even if they’re becoming less central given all of the crazy-fast hardware we have today and the even faster hardware still to come. However, AI’s top priority, to my understanding (at least if I learned what I was supposed to learn), is to solve problems, or find answers, in an intelligent way.
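Here's a rough sketch of that generalization point, using textbook A* search (one of the techniques the post lists from the class) in Python. The search only talks to the world through two functions you pass in: `neighbors(node)` and `heuristic(node)`. Both are hypothetical names chosen for this example. That means the same code could, in principle, work whether the nodes are cities, squares on a new planet's surface or chessboard positions, and whatever "cost" means is up to you. This is a generic sketch, not the implementation used in the class.

```python
import heapq
from itertools import count


def a_star(start, goal, neighbors, heuristic):
    """Generic A* search.

    neighbors(node) -> iterable of (next_node, step_cost)
    heuristic(node) -> estimated remaining cost to goal (should never overestimate)
    Returns (path, cost), or (None, inf) if the goal is unreachable.
    """
    tie = count()  # tie-breaker so the heap never has to compare nodes directly
    frontier = [(heuristic(start), next(tie), 0, start)]  # (g + h, tie, g, node)
    came_from = {start: None}
    best_cost = {start: 0}

    while frontier:
        _, _, cost, node = heapq.heappop(frontier)
        if node == goal:
            # Walk the back-pointers to rebuild the path from start to goal.
            path = []
            while node is not None:
                path.append(node)
                node = came_from[node]
            return path[::-1], cost
        if cost > best_cost[node]:
            continue  # stale entry: a cheaper route to this node was already found
        for nxt, step in neighbors(node):
            new_cost = cost + step
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                came_from[nxt] = node
                heapq.heappush(frontier, (new_cost + heuristic(nxt), next(tie), new_cost, nxt))
    return None, float("inf")


# Usage on a made-up 3x3 grid (the "planet surface"), with walls at (1, 0) and (1, 1).
WIDTH, HEIGHT = 3, 3
WALLS = {(1, 0), (1, 1)}
GOAL = (2, 0)


def grid_neighbors(cell):
    x, y = cell
    for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nx, ny = x + dx, y + dy
        if 0 <= nx < WIDTH and 0 <= ny < HEIGHT and (nx, ny) not in WALLS:
            yield (nx, ny), 1  # every step costs 1 here; terrain or chess costs would differ


def manhattan(cell):
    return abs(cell[0] - GOAL[0]) + abs(cell[1] - GOAL[1])


path, cost = a_star((0, 0), GOAL, grid_neighbors, manhattan)
print(cost, path)
```

On this toy grid the only way around the wall is through the bottom row. Manhattan distance never overestimates on a 4-connected grid, so A* should return the shortest detour, with a cost of 6. Swapping in a different `neighbors`/`heuristic` pair changes the "world" without touching the search itself.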

But what in the world does intelligent mean, anyway?

This is when AI becomes philosophical. If you ever take this class (or at least the specific AI class I took), you won’t be tested on the philosophical definitions of AI. In the projects, which are the most important part of the class, you won’t directly use anything philosophical either. Still, it’s worth keeping in mind that any algorithm in AI is trying to do things intelligently. This means that brute force is not welcome; that randomness, with some exceptions (like hill climbing with random restarts), is not very welcome; and that most things that aren’t generalized (in an intelligent manner) are not very welcome. This is one of the reasons why AI is math-heavy: AI scientists need a way to generalize intelligence. But how general can intelligence really be? Can it really mimic the intelligence of a human to the point that it can compose songs, write an essay on the politics of the world and even make moral judgments? At the end of the day, not really. I mean, you can take all of the songs recorded up to this day and write a fancy neural net (don’t ask me how they work; they’re not super-complicated, but not a walk in the park either). It can classify and recognize some patterns and put something together… but it’s just remixing what we’ve already heard a million times. So no, AI is not that general. The AI of today is very narrow. This is not to say that it is useless. AI is very useful and will be in the future; speech recognition will get better; self-driving cars will improve; it will be able to write “better” songs. But AI won’t have a face; it won’t (and this is subjectively my opinion) have the ability to make moral judgments (and if we allow it to, then we are fools buying snake oil). As a software engineer, I found the radical uses of Bayes Theorem somewhat interesting, but not very exciting. I found myself subscribing to the idea of programming intelligence into the machine, rather than programming it and telling it what to do.
This, if I’m being frank, made me a little uncomfortable. As a software engineer I like tinkering with machines; I like to write programs that solve problems (rather than “program” intelligence and let it solve the problems for me). I felt as if I were being submissive to this idea. I know, it’s a stretch. And yes, I am probably romanticizing programming as a craft, but I’m sorry, I can’t help it. Speaking of programming machines, to the AI people (and I’m speaking about the specific people who guided me throughout the class: professors and TAs), the code did not matter. That struck me as surprising, and a little unnerving. To them, all that mattered were the theorems, the Excel charts and the “report”. In practice, though, the code itself is the building block that lets the AI agent do whatever it needs to do, so this was unnerving, borderline frustrating. I don’t write code to plot charts, theorize formulas or see trends. That’s not to say I write code without documentation. Documentation is not what we are talking about here; indeed, self-documenting code is a must. But to write code just to satisfy Bayes Theorem? That itself is frustrating and, in my opinion, goes against the spirit of creativity in programming. It goes against the motto I follow when I code: hack away. Hack the malloc calls to the point where all of the segments you allocate are contiguous. Trick the OS into caching only your processes at every level. Manipulate CPU priorities to make your process priority 1, because the game you’re building is bloated with physics calculations and unnecessary art, and that computer does not have a GPU. AI felt nothing like hacking computers. AI felt nothing like engineering solutions. It felt like forcing code to comply with some theorem: Bayes Theorem, making informed decisions, the Perceptron, etc. I seriously respect these techniques, because all of them are incredibly cool and quite impressive.
And heck, software engineers do use these techniques today. But, in my humble opinion, an engineer doesn’t have to fully comply with a mathematical rule. These rules are nice because they make a bunch of assumptions that MOST of the time are true. But in engineering, where we sometimes have to interact directly with hardware and users, some of these assumptions are not very useful in practice. If we were building an OS, for example, we might have to hard-code stuff with macros in C to make a specific architecture or piece of hardware faster. Sometimes in software engineering, one doesn’t have the luxury of just “throwing memory” at a problem. Along with some statistics, throwing memory at problems is part of the idea of machine learning. Throw memory at it, implement a perceptron and you can classify pictures! Engineers have to keep in mind the cost of adding two gigs of RAM, in terms of both money and resources. When handling CPU scheduling, an engineer sometimes doesn’t know what the best scheduling scheme is. Sometimes we have to wait until users actually use the software and get a “feel” for the best scheme given the different use cases. AI doesn’t like hard-coded macros; that’s not intelligent. AI doesn’t love edge-case hacks; that’s not intelligent. AI doesn’t care about beautiful code that might be 10% faster because one follows good practices. AI, from the impression I got in this class, is almost programming-independent. One might even say it finds programming languages a hindrance, because no language fully expresses how “great” (ahem, intelligent) it really is. I could be wrong about these assumptions. Because, heck, what do I know? I’m only a software engineer.
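For anyone curious what "implement a perceptron and you can classify pictures" looks like at the smallest possible scale, here's a sketch of the classic perceptron learning rule in Python. The "pictures" are stand-ins. Each example is just a tuple of numbers (pixel values, in the picture case) with a label of -1 or +1. The toy data (the logical AND of two inputs) and the function names are made up for illustration. The update rule is the standard textbook one, not anything specific to the class described here.

```python
def predict(weights, bias, features):
    """Return +1 if the weighted sum is positive, otherwise -1."""
    activation = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if activation > 0 else -1


def train_perceptron(examples, epochs=100, learning_rate=1.0):
    """Classic perceptron learning rule.

    examples: list of (features, label) pairs; features is a tuple of numbers,
    label is -1 or +1. Returns (weights, bias).
    """
    n_features = len(examples[0][0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for features, label in examples:
            if predict(weights, bias, features) != label:
                # Nudge the decision boundary toward the misclassified example.
                weights = [w + learning_rate * label * x for w, x in zip(weights, features)]
                bias += learning_rate * label
                mistakes += 1
        if mistakes == 0:
            break  # a full pass with no mistakes: every example is classified correctly
    return weights, bias


# Made-up toy data: logical AND of two inputs (only (1, 1) is "positive").
AND_EXAMPLES = [((0, 0), -1), ((0, 1), -1), ((1, 0), -1), ((1, 1), 1)]
weights, bias = train_perceptron(AND_EXAMPLES)
print(weights, bias, [predict(weights, bias, x) for x, _ in AND_EXAMPLES])
```

One caveat worth knowing: the perceptron only settles down when the two classes can be separated by a straight line (or a hyperplane, with more features). On data that isn't separable, it keeps making corrections until `epochs` runs out.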

Despite my reservations about AI, I highly recommend taking the class as a CS major. Having said what I said, AI is not going away. For better or worse, it will stay in the lives of people, software engineers and non-software engineers alike. It is and will be a necessary evil of our present and future. Take the class and get a feel for what you think of it. And if you’re like me (you like to hack computers), you’ll survive in that jungle of probability and intelligence greatness. I honestly can’t tell you whether to stay or not; that’s your choice. In the meantime, I choose not to.


New project: AI Sci-Fi

I don't write stories, generally. I'm only just learning a bunch of stuff I should've picked up much earlier in life, which is making it seem possible. Like plotting, and tones. Never mind that though, because we have a cool idea to look at!

I have opinions about AI, and what true computer consciousness would be like, and I have a hard time explaining them. Alongside that, I'd love to play with the idea of how humanity might develop in the future. Neither of these is easy to fully express. So I'm going to start with a plot line, including some tones or scenes or bits as I write it, but mostly just a rough timeline. I'm going to take a lot of inspiration from classic sci-fi, probably. Write plot line, attach human stories around the plot points. Add or modify plot points as I go. *Deep breath*

Today, we have prosthetics of a number of sorts. Limbs, most commonly associated with that word. Canes, though, also count. And so, in the other direction of abstraction, do smartphones. We use the heck out of these things for all sorts of stuff that stands in our way. I use mine for mapping; it's barely enough, but so much better than my direction-finding skills even with a map. Prosthetic direction, location, and pathing ability.

As more services go online, each can be a kind of prosthetic, an extension of one's body and mind. (Here's where I realized I was using that word a lot without having a super solid definition in mind.) Grocery shopping can now be accomplished without entering a store, transportation is becoming more automated...

But wait, what's up with those self-driving cars? Cars take so much time and attention to operate; I have a ton of brain power devoted to it. But self-driving cars are not quite ready to be let loose on the roads, and part of that is the ethical decisions which may need to be made in an emergency. Urgent ethical decisions on which lives hang in the balance. Meanwhile, we currently have cars full of people, in a society built around drivers; what could we humans do to help? Because right now, with so little to do behind the wheel in a normal self-driving situation, it's very easy to fall asleep. But in an emergency there's no time to bring a human's attention back to the road. So maybe we have "I'm not a robot" tests where people answer moral quandaries? Hmm... Wow, that'd be scary and amazing. But I'm drifting off topic.

That was all plot point one, and as we go into two we'll see an increased focus on easing human lives and labors. Even while people are still working long hours to pay their bills, the actual labor is reduced, and a great deal of suffering is relieved by work on lowering the barriers to meeting needs and desires. Work hours wax and wane, but leisure time gradually increases as home labor is reduced. The wealthy have incredible amounts of power, shaping the world like tides that sweep the poor around, still buffeting them on the jagged rocks. Between those extremes, many lives are stabilized by advances in human compassion services.

Point three is first hinted at in veterinary care. With pets, sanctuary and work animals living longer, healthier lives, more mental health issues are recognized. Old dogs who start to snap at children, large zoo cats who cannot be kept from harming their caretakers, and that's not even getting into the multitude of farming uses, as computer-enhanced brains make the scene. Easing the problems with identifying animal pain signals, and allowing for throttling autonomic responses based on external calculations, domestic animal life enters a new age. And sure, the factory farmers use what's essentially raw mind control to make meat grow with as little fuss as possible, and lots of people worry about what's in store for humans. Because obviously this technology gets used to treat human mental problems as it matures. And yeah, there are fears of slave armies and maybe it happens, but there's also a huge fight over human rights and what a person is entitled to in their living experience. And eventually nearly everyone is enhancing their minds; you aren't connected? What, are you still using a phone? Pff. Just give me my virtual experiences straight to my brain. Don't like it? Change the world, vote by thought. The presiding official has the duty of emergency executive power, backed up by a cybernetic army of active doers, all connected at near-lightspeed thought-link. Yikes. Good thing the programs have a lot of checks and balances uplinked just as fast.

Next up, the brink: the mind perpetuating itself on a fully technological frame, abandoning all need for a fleshy mortal shell. The line between AI enhancement and true intelligence has never been more difficult to discern. But at the same time, people find new and ingenious ways to recognize each other despite all changes. Now what? An undying human population at last? Does no one ever leave? But yes, probably they still do. And to where? Do we have to follow to find out? Or have we discovered the secret of what lies beyond, in all our tinkering with our very essence? Have we detected the soul?

Finally, and probably the smallest part, a kind of epitaph... What of all those AIs, trained to think like so many people, carrying on, remixing into better, more capable, more dependable companions? Can my cat carry on as a virtual buddy? Does my dog fetch my email? And would we notice if our AI started thinking for themselves, if they just got better at helping everyone treat each other well?

❣️


26 Mar 2021: Amazon: cooler but not fresher. Facebook’s habit. NFTs.

Hello, this is the Co-op Digital newsletter. Thank you for reading - send ideas and feedback to @rod on Twitter. Please tell a friend about it!

[Image: part - about 3.5m worth - of Beeple’s Everydays: the first 5000 days]

Amazon: cooler but not fresher

“I asked [two young employees] if they liked working at Amazon Fresh and they both said, “Yes.” I followed up with, “Beats working at a supermarket?” and they both said, “Yes.” It’s a problem that it’s not cool to work in a supermarket.”

That’s a US supermarket executive visiting an Amazon Fresh store in Chicago. Also:

“I was amazed that the cart weighs the produce and snaps a picture of each item. [...]

“I couldn’t find a ripe avocado and the bananas looked chilled. [...] The stores don’t seem to have a personal touch, especially if you need something special. I don’t think it will be a weekly destination for me, but I’m sure it will be for some people.”

Elsewhere in grocery and retail:

Asda equal pay: when seeking equal pay, lower-paid shop staff, who are mostly women, have won the right to compare themselves with higher paid warehouse workers, who are mostly men.

John Lewis will permanently close eight more shops - most were already struggling before the pandemic.

Big investors shun Deliveroo[’s IPO] over workers' rights.

43% of weekly shoppers experience spoilage, damage or theft of delivered grocery - HomeValet has a “smart box” take on grocery delivery packaging that fixes those problems.

Facebook’s habit

Facebook’s AI algorithms gave it an insatiable habit for lies and hate speech. Now the man who built them can't fix the problem. Good long read on Facebook’s efforts to understand the system it had created.

“A former Facebook AI researcher who joined in 2018 says he and his team conducted “study after study” confirming the same basic idea: models that maximize engagement increase polarization”

Needless to say, there are competing views within FB about what “fairness” should mean, particularly in relation to politics. And unfortunately *testing* for fairness remains a nice-to-have:

“But testing algorithms for fairness is still largely optional at Facebook. None of the teams that work directly on Facebook’s news feed, ad service, or other products are required to do it. Pay incentives are still tied to engagement and growth metrics. And while there are guidelines about which fairness definition to use in any given situation, they aren’t enforced.”

It ends on this despondent note:

“Certainly he couldn’t believe that algorithms had done absolutely nothing to change the nature of these issues, I said.

“I don’t know,” he said with a halting stutter. Then he repeated, with more conviction: “That’s my honest answer. Honest to God. I don’t know.””

Political platforms

Bad news at the newsletter platforms. Mailchimp employees on unequal working conditions that led to women and people of colour quitting jobs. And Substack writers are mad at Substack over advances given against future revenue shares to writers who may have controversial or discriminatory opinions (although tbh, this is what all publishing companies do: offer authors with varying views advances on future royalty revenues).

It is getting harder for all platforms to remain neutral. Partly this is because neutrality is impossible: as platforms (and tech companies generally) get bigger, they wield more power. And partly it is because people actually want the platforms they use to take political, ethical and value positions.

Related: a very interesting read on moderation, asking whether each layer in the infrastructure stack should moderate its own layer or the layers above it. It has interviews with leaders at Stripe, Microsoft, Google Cloud and Cloudflare.

NFTs, “non-fungible tokens” and art

Last week, a digital image by the artist Beeple sold for 69 million US dollars. It’s a JPEG, and the auction house Christie’s handled the sale. To be more accurate, it wasn’t the image that sold for $69m, but a digital file on the blockchain that references the original image, although of course the very idea of “original” is complicated by digital files anyway (look, is this the original, on the Christie’s website?!). OK, let’s do NFT questions:

What is an NFT? A “non-fungible token” is a digital file that is put on some kind of blockchain so that it behaves less like a digital thing (infinitely and easily copyable and shareable) and more like a physical thing (not easily copyable and shareable).

Hold on, what, “non-fungible”? Money is fungible: it doesn’t matter whether you have this £10 note or that one, they’re both worth £10. “Non-fungible” is the opposite: only you have this unique thing, like a painting.

Is an NFT art? Everything can be art. The question is always whether it is good art, and the easiest way to know is to look at a lot of art.

Are NFTs good? Sometimes. They let true fans express their fandom by buying and collecting things. They make some money for artists, although most aren’t going to make 69m. They let artists benefit from the secondary market - that’s a good but occasionally sweary piece.

Are NFTs bad? Often. They’re hard to understand. When they use proof-of-work blockchains (eg Ethereum as of mid-March 2021), they are profoundly wasteful of energy. They are prone to scams because while the blockchain guarantees the chain of ownership of a digital file, it doesn’t do the same for the artwork the digital file points at, so there are some instances of artists’ work being NFTised without their permission. Though this may be more a characteristic of scammers than of NFTs. Some people speculate that NFTs are being used as marketing for cryptocurrencies.

How can NFTs be both good and bad? NFTs - and cryptocurrencies more generally - are a mirror: you see what you want to see. The excitement of being part of something new. The wish to make the world afresh. Taking apart industries that are inefficient. The white heat of investing in things that go to the moon. A way to socially signal others. The virus amplifying wealth inequalities. The underlying trend to monetise everything. Pointless showing off. Buying a file that merely points at some art. A scam.

Can you get off the fence, newsletter? OK: NFTs are, on balance, bad. NFTs are everything you don’t understand about art multiplied by everything you don’t understand about technology multiplied by everything you don’t understand about money. And, right now, most of them are bad for the environment.

Who’s Beeple again? Beeple is an artist. The newsletter featured a Beeple image in August 2020. Co-op Members will be pleased to hear that the newsletter didn’t pay him $69m for it.

In other countries

A changing nation: how Scotland will thrive in a digital world - “this strategy sets out the measures which will ensure that Scotland will fulfil its potential in a constantly evolving digital world”.

Data, surveillance and how India is creating platforms to give people more control over how information about them is used (Related?: Two UK broadband ISPs trial new internet snooping system with UK Home Office.)

Spain to launch trial of four-day working week - “government agrees to proposal from leftwing party Más País allowing companies to test reduced hours”.

Various things

Self-driving startup Voyage bought by Cruise, which is owned by GM and Honda. Voyage was interesting because it focussed on a taxi service in retirement communities, and had designed an interior for Covid safety.

Is test and trace really the most wasteful public spending programme ever? Or have there, in fact, been larger squanderings of taxpayers’ money in the past?

'Right to repair' law to come in this summer. Manufacturers will need to make spare parts for appliances available to consumers - will this apply to mobile phones and other devices which have become decreasingly repairable as they became smaller and more complex?

A petition to Amazon: lower the number of parcels we have to deliver.

Black tech employees rebel against diversity theater.

I have one of the most advanced prosthetic arms in the world - and I hate it.

Co-op Digital news

Reflecting on one year of remote working at Co-op Digital - Co-op Digital colleagues in their own words.

Thank you for reading

Thank you friends, readers and contributors. Please continue to send ideas, questions, corrections, improvements, etc to @rod on Twitter. If you have enjoyed reading, please tell a friend! If you want to find out more about Co-op Digital, follow us @CoopDigital on Twitter and read the Co-op Digital Blog. Previous newsletters.


A Girl Stumbles on SF Written for Her

I don’t think Fahrenheit 451 ever had a chance.

I read The Giver by Lois Lowry in my youth and honestly any dystopia is going to be measured by the level of mind-blowing that happened as I read that book. (None has measured up so far.)

Though for years I’ve sought out fantasy and hardly ever science fiction, I’ve recently discovered a certain streak of SF that does appeal to me greatly. It considers angles of humanity that I usually think of as the territory of fantasy: personhood, cultures, colonialism.

This discovery is all @ninjaeyecandy‘s fault.

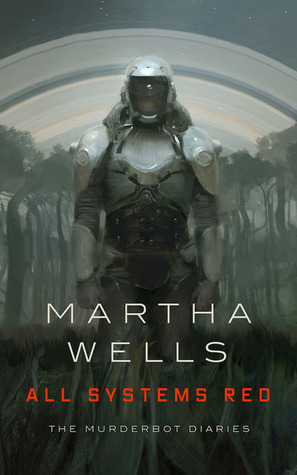

It Started With a Murderbot

When @ninjaeyecandy started promoting All Systems Red, she naturally zeroed in on the appeal for her mutuals like me: a drama-bingeing, socially anxious AI? It’s like a space opera about me.

I’m not often drawn to science fiction (the bleakness, the military stuff, the horror of space) but this was a perfect complement of things I like: a character I strongly identify with but also get to watch come from a totally different state of mind. A gripping situation in an unfamiliar world. Seeing someone try to be good and do the job they are really good at, despite incredible odds.

It was incredibly human, though the POV was nonhuman, with an emotional core that made the premise work.

It was brief and good. And I had quite a wait before I could read any more. But I could now see the possibilities for SF to really speak to me. Luckily, another book had been lurking on my TBR for way too long....

The Imperial Radch is Having Personnel Issues

I bought Ancillary Justice at the Sirens conference last year, having heard a ton of buzz about it. (Sirens is a conference dedicated to women in fantasy: writers and characters. It is great. Yes, the topic wanders to SF, too.)

Despite even reading Tumblr fandom stuff about it, I feel I came to the book pretty fresh. I was surprised that the MC was a sentient ship, for instance, when I finally read the back copy. Though there were certain thematic similarities with All Systems Red, because their MCs are both persons but not humans, the stories themselves had different directions.

Breq is signally different from Murderbot in that her memories are crystal clear, and she is angry. I don’t often read books where I enjoy a character being full of rage, but as a very old being in a very inadequate body, Breq has a sense of patience and calculation that most vengeance-fueled characters are missing.

I immediately got the next two books out from the library. And the series did not disappoint. The personhood of Artificial Intelligence emerges as a major theme, which made me super-happy. Any SF where you have sentient beings in service to others because of their very natures is fraught ground--and I loved that Leckie took Breq from a very narrow focus, to fulfilling greater potential despite the crippling blow of losing everything but one sub-par body.

Miles Is Having An Interesting Year

I’ve heard a lot about Miles Vorkosigan, especially listed in collections of heroes with a certain flexible morality and reliance on their minds for derring-do.

I have been hesitant to pick up these books partly because of their age and that sensation that if I didn’t like them I would probably be disappointing several friends. However, though there were bits I found a little rough going, overall Warrior’s Apprentice shared a lot of the attributes of my previous reads: a sense of humanity beyond just commerce, culture deeper than just politics, and the understandable concerns of specific people to ground a much broader scope of issues.

One of the blog posts that circulated recently talked about Lois McMaster Bujold neatly doing away with the problem of contraception in the first few pages, and another rebutted this by pointing out that the book considers it in several lights. Several cultures with different traditions and mores, including around sexuality, come up. This is the kind of deft touch that is often missing in futuristic or speculative worlds of various types.