#deontology

Text

As far as they can

At the end of the Job minisode, Crowley inaugurates Their Side by proclaiming Aziraphale "an angel who goes along with Heaven... as far as he can," parallel to his own stated relationship with Hell.

Only it... doesn't actually work that way. Their exactlies are different exactlies.

Crowley defies and lies to Hell as often as he thinks he can get away with it. He never disabuses Downstairs of their misconceptions about his contributions to human atrocities. He cheerfully lies in his reports Downstairs, something Aziraphale briefly turns on his Baritone of Sarcastic Disapproval about in s1. Crowley even turns evil homeopathic in the latter part of the 20th century, likely in hopes that it will look good to head office while accomplishing essentially nothing. (This, of course, is another way he Crowleys himself, both with the London phone system and the M25.) After Eden, Crowley's default given an assignment from Hell is to see how he can subvert it.

Aziraphale, on the other hand, defies Her and Heaven as little as he possibly can. Sometimes, as with his sword giveaway, his compassion gets the better of his anxiety. Sometimes, as with Job's children in the destruction of the villa, he can try to stay within the letter of the law by leaving the defiance to Crowley.

His default, however, is "'m 'nangel. I can't dis- diso -- not do what 'm told." This comes out most often as respect for the Great/Divine Plan, which to him is sacrosanct. He sounds quite sincere in s1 when he says "Even if I wanted to help I couldn’t. I can’t interfere with the Divine Plan."

Aziraphale quite frequently Good Angels along by parroting Heaven's party line, whether it's "it'll all be rather lovely" or "I am good, you (I'm afraid) are evil" or droning on about evil containing the seeds of its own destruction, or condemning Elspeth's graverobbing as "wicked" (a stance he offers absolutely no reasoned support for, no logic, no "but She said," not a word -- that's very Heaven; most of Heaven's angels have the approximate brainpower of paramecia). Maestro Michael Sheen even has a particular voice cadence -- I think of it as Sententious Voice -- he uses when Aziraphale is thoughtlessly party-lining.

When the angel's conscience wars with his sense of Heaven's orthodoxy but (and this is an important but) he can't feasibly resist whatever's wrong, he offers strengthless party-line justifications he clearly doesn't agree with (as with the "rain bow" in Mesopotamia) or resorts to a Nuremberg defense: "I'm not consulted on policy decisions, Crowley!" Once or twice, he's even vocally aware of Heavenly hypocrisy: "Unless… [guns]'re in the right hands, where they give weight to a moral argument… I think." This isn't Sententious Voice. It's I-can't-disobey-and-I-hate-that voice.

But at base, the angel prefers obedience (not least because it's vastly safer), and he'd rather have someone else do his moral reasoning for him. Honestly? Pretty relatable. I know lots of people like this -- hell's bells, I've been this person, though I grew out of it somewhat -- and I daresay you do too. Moral reasoning is hard and often lonely (since it can be read as self-righteousness or even hypocrisy) and acting as it dictates can hurt. Nobody would need ethics codes if The Right Thing was also invariably The Convenient Thing.

Many GO fans find these Aziraphalean traits frustrating! Especially his repeated returns to parroting Heaven orthodoxy! Sometimes I do too! (Not least because I'm rather protective of my own integrity, and it's cost me quite a few times. I'm well-known in professional circles for picking up a rhetorical spear and tilting at the nearest iniquitous windmill. I often lose, but I sure do keep tilting. Every once in a blue moon I actually win one.)

The key, I think, to giving our angel a little grace on this (beyond honoring the gentle compassion that is pretty basic to his character) is noticing how often he can be induced to abandon an unconsidered Heavenish default stance. As irritating as his default is, and as consistently as he returns to it, it's not really that hard to talk him out of it. Crowley, of course, is tremendously good at knocking Aziraphale away from his default -- he's had to be. But Aziraphale even manages to talk himself away from his default once, in the form of the Ineffable Plan hairsplitting at the airbase!

I think the character-relevant point of the Resurrectionist minisode is making this breaking-the-Heavenish-default dynamic as clear as the contents of the pickled-herring barrel aren't. "That's lunatic!" Crowley exclaims, when Aziraphale Sententious Voicedly parrots Heaven's garbage about poverty providing extra opportunities for goodness. Aziraphale isn't quite ready to let go yet, replying "It's ineffable."

But Dalrymple (who, I think, parallels Heaven, perhaps even the Metatron -- there could be something decent there, but it's buried too deep under scorn and clueless privilege for any graverobber-of-souls to dig it out) manages to break Aziraphale's orthodoxy by explaining the child's tumor.

Once released from his orthodoxy, Aziraphale can't be trusted to handle moral reasoning well; his moral-reasoning ability is not-uncommonly (though not always) portrayed as vitiated. When he gives Elspeth the go-ahead to dig up more bodies, his excuses are just as vacuous as they were when he was convinced of her wickedness. He knows that he's crossed Heaven's line, too, and just as at Eden it's worrying him. That's why he has to talk to Crowley to nerve himself up to help Wee Morag... only he spends too much time talking, and it's too late.

But Crowley can then talk him into bankrolling Elspeth toward a better life. Aziraphale doesn't even put up any fight, both because he's compassionate and because Crowley is temporarily taking the place of Heaven (he's even Heaven-sized and staring down at them!) as the angel's moral compass.

S1 has an even worse example of Aziraphale's moral wavering, actually. Crowley yells "Shoot him, Aziraphale!" and Aziraphale sure does try to murder Adam. Again, he's adopting his morals from the nearest (and loudest) convenient source. Madame Tracy, thankfully, has enough of a moral backbone to save our angel from himself and Crowley.

(With my ersatz-ethicist hat on: this is a fight between utilitarianism and deontology. Crowley is the utilitarian, which is actually a bit of a departure for him, but he's admittedly desperate. Madame Tracy is the deontologist: One Doesn't Kill Children. Aziraphale is caught in the middle.)

I wouldn't be surprised if part of the reason we start s3 with Aziraphale and Crowley separated is so that Aziraphale finally has to do his own moral reasoning, without Crowley's nudges. I don't think it'll be easy for him. It will absolutely be lonely. And it may well hurt.

But I will watch for it, because it's how he will become his own angel, independent of Heaven and even of Crowley. And he must do that.

#good omens#good omens meta#aziraphale#the resurrectionists#good omens s3#s3 speculation#ethics#deontology#utilitarianism

176 notes


Text

if you’re ever unsure about something, ask yourself: would immanuel kant approve of this action?

if the answer is yes, do literally anything else

#philosophy#philosophy memes#kant’s deontology#deontology#getting angry at long dead (and hey with the amount of dennett hate on this account sometimes very alive) people is my passion

39 notes


Text

Meta: Did Harry do anything wrong?

This meta was inspired by a conversation in @thethreebroomsticksficfest server. Tl;dr at the end.

It depends on how you define wrong vs. right. This is essentially a question for ethics, so I want to do a little exploration of Harry’s moral decision-making through the three main ethical theories: utilitarianism, deontology, and virtue ethics. The ethical theories I present to you are told in very broad strokes – contemporary moral philosophical thought is a lot more nuanced. If you want me to go in depth with any of these, drop an ask in my ask box.

Utilitarianism: greatest good for greatest number of people, and/or consequences outweigh the method. I.e., the ends justify the means. Was Harry’s use of the Cruciatus Curse against Carrow in Deathly Hallows justified? Could go one of a few ways: yes, because it was in defense of McGonagall; no, because torturing Carrow was not an appropriate defense of McG; maybe, it’s possible Carrow wouldn’t have responded to any other kind of deterrent. Utilitarianism falls short when we start justifying things to the extreme – it’s how Truman justified the dropping of the atomic bombs on Japan. His greatest good was ending the war soon, but the cost of so many innocent human lives (in this philosopher’s opinion) was unjustified. This leads us to deontological ethics.

Deontology: duty-based ethics, and/or there’s a set of rights and wrongs, and it’s never OK to commit a wrong even if the outcome is good. I.e., there are certain rules, whether written in law or not, that shouldn’t be broken. Let’s use the example of Harry using the Cruciatus Curse again. In his world, it’s considered an Unforgivable. That doesn’t necessarily imply that’s a just or right framework – law and moral goodness don’t always overlap. Does Harry think it’s always unforgivable? This is where deontological ethics gets tricky – our sense of what is universally right or wrong may not be universal, or may be biased because of the society we come from. As most people would say that torturing someone is morally wrong, even if that someone is guilty of committing all sorts of atrocities, then Harry would not be justified in using the Cruciatus Curse. Deontology has its limits when we start squabbling about moral absolutes and moral relativism, or when we start seeing poor outcomes for supposedly good actions.

Virtue ethics: we cultivate morally good behavior by developing virtuous traits. The more virtuous we become, the better our moral actions will be. An action is morally good if completed by a virtuous person. We judge an action based on the person who is taking it. While this may seem counterintuitive in some ways, think about it as a way of understanding intentions. Is Harry justified in his use of the Cruciatus Curse? It depends. Is he a morally good actor? Does a morally good actor use the Cruciatus Curse? Most of us would say no to this – intentionally hurting another person is not a sign of virtue. While virtue ethics may seem murky (it can be), a good way of thinking about it is to ask yourself “would a morally good person, or an [insert virtuous trait here] person do X?” If the answer is yes, then it’s morally good. If no, then don’t do it.

As for what I think Harry did wrong in the series, in terms of moral failures, there are a few caveats before I list these things. First, Harry is a FICTIONAL character. FICTIONAL characters are not accountable to the same morality as we are; they are vehicles to tell a story, reveal something about humanity, or entertain. FICTIONAL Harry didn’t do anything wrong because FIGMENTS OF IMAGINATION cannot do anything wrong. If Harry were real, however, these are a few of the things that I would consider morally bad or questionable:

Use of Sectumsempra in HBP. He didn’t know what the spell did, only that it was used for enemies. He may not have known what it did, but ‘enemies’ should’ve been context enough to know that it wasn’t friendly.

Snape’s worst memory: gives us, the reader, and Harry, a ton of information about James. However, there was no moral reason to violate Snape’s privacy.

Spying on Draco in HBP: when Harry takes the Invisibility Cloak and spies on Malfoy, or when he asks Kreacher to spy on Draco. Good cause, perhaps (utilitarianism) but not necessarily right (deontology). Keeping an eye out for your neighbor and being vigilant can be good, but in this case it was not Harry’s responsibility to do so. (But remember, Harry is fictional, and in his world, adults aren’t fully competent or forthright.)

Brewing/taking Polyjuice Potion in second year. For plot = good. For deception, spying, and agreeing to Hermione stealing = bad.

Sneaking out to Hogsmeade, third year. For plot = good. For rule breaking and recklessly endangering his life (the danger turned out not to be real, but he didn’t know that at the time) = bad.

Torturing Carrow = bad. Torture isn’t ok, Harry.

Mostly, Harry makes a lot of morally good or morally neutral decisions throughout the series. Like most people, fictional or real, Harry is not wholly morally good, and the theories above, broadly speaking, can only take us so far. Let me bring in an example of Harry leading Dumbledore’s Army in terms of its moral goodness (or badness).

Utilitarianism: Was the D.A. the greatest good for the greatest number of people? You can argue it was, because the students learned and practiced lifesaving spells that would help them in their later years. They broke school rules, but they learned to defend themselves, and others. Thus, the D.A. was a moral good.

Deontology: Was the D.A. the right thing to do? In a strict sense, no, because Harry broke the school rules. He intentionally put himself and others in detention. However, is there a greater duty to his classmates that supersedes the rules? You can argue yes, Harry had a duty, an explicit moral imperative to help his classmates. Did it have to be through the D.A.? Maybe not. In this case, the D.A. is morally questionable or perhaps morally neutral.

Virtue ethics: Is the D.A. something that a virtuous person would do? This depends a lot on your definition of virtue, and which virtue you’re referring to! Let’s take courage as a virtue. Is it courageous of Harry to lead the D.A.? I think so! Is it prudent? Maybe. This is why virtue ethics can be murky – which virtue is most important? How do virtues compare across communities? In the world of HP, I’d say the D.A. was virtuous and morally good because of the values they placed on courage, excellence, and developing skills.

Tl;dr: Harry does make morally questionable or morally bad decisions, but as he’s a fictional character, we need to be careful in judging his behavior with real-world moral theories.

#harry potter#harry james potter#philosophy#moral philosophy#ethics#utilitarianism#deontology#virtue ethics#hp meta#writing meta#harry potter meta

28 notes


Text

The Bible is very easy to understand. But we Christians are a bunch of scheming swindlers. We pretend to be unable to understand it because we know very well that the minute we understand, we are obliged to act accordingly.

Søren Kierkegaard, Provocations: Spiritual Writings of Kierkegaard.

#philosophy tumblr#philoblr#danish philosophy#philosopher#kierkegaard#religion#christianity#agnosticism#responsibity#deontology#dark academia#life quotes

183 notes


Text

Deontological Gridlock

If you take one or more rules as absolutes that ought never be violated, like typical deontology does, then what happens if you are ever faced with a situation where every choice available violates at least one of them?

You get into a gridlock - all possible ways events could progress from that situation are blocked. You want some standard to be met but that option does not exist. So you must either fix the rules or have a way of weighing things to pick the lesser evil.

Maybe you think that such gridlock is always the result of a flawed or incomplete set of rules - that for a correct set of rules, all possible problems present a choice that does not require violating any of them. Or maybe you think deontology inevitably leads to gridlock.

Either way, I consider this a very useful name for a fairly common variant of logical contradiction that often occurs - and is often overlooked - in deontological thinking and values.

17 notes


Text

The Philosophy of Altruism

The philosophy of altruism explores the ethical and moral principles related to selflessness, compassion, and the welfare of others. It addresses questions about the nature of altruistic behavior, its motivations, and its implications for individuals and society. Here are some key aspects of the philosophy of altruism:

Definition and Concept: Altruism is defined as the selfless concern for the well-being of others, often involving acts of kindness, generosity, or sacrifice without expecting anything in return. Philosophers debate whether true altruism is possible or if all actions are ultimately self-interested.

Motivations for Altruism: Philosophers explore the motivations behind altruistic behavior, considering whether it arises from genuine concern for others, social norms and expectations, evolutionary instincts, or personal satisfaction derived from helping others. They also examine the role of empathy, compassion, and moral reasoning in motivating altruistic acts.

Ethical Frameworks: Altruism is often discussed within various ethical frameworks, including utilitarianism, deontology, and virtue ethics. Utilitarianism evaluates actions based on their consequences and advocates for maximizing overall happiness or well-being, which may include promoting the welfare of others. Deontology emphasizes moral duties and principles, such as treating others with respect and dignity, regardless of personal interests. Virtue ethics focuses on cultivating virtuous character traits, such as compassion and benevolence, which lead to altruistic behavior.

Altruism and Egoism: Philosophers debate the relationship between altruism and egoism, the belief that individuals are primarily motivated by self-interest. Some argue that genuine altruism is possible and represents a higher moral ideal, while others contend that all actions are ultimately self-interested, even if they appear altruistic on the surface.

Evolutionary Perspectives: Evolutionary biologists and psychologists offer explanations for altruistic behavior based on theories of kin selection, reciprocal altruism, and group selection. These theories suggest that altruism may have evolved as an adaptive strategy for promoting the survival and reproduction of individuals' genes or for enhancing the cohesion and cooperation within social groups.

Altruism in Practice: The philosophy of altruism considers practical applications of altruistic principles in various domains, including personal relationships, philanthropy, volunteering, and social justice activism. Philosophers examine the ethical dilemmas and trade-offs involved in altruistic decision-making, such as balancing the needs of others with one's own well-being or addressing systemic injustices.

Criticisms of Altruism: Critics of altruism raise concerns about its feasibility, sustainability, and potential for exploitation or manipulation. They argue that excessive altruism may lead to burnout, resentment, or enabling harmful behavior in others. Others question whether altruism should be an ethical ideal, suggesting that individuals have legitimate interests and rights to pursue their own goals and happiness.

Altruism and Happiness: Some philosophers explore the relationship between altruism and personal well-being, suggesting that acts of kindness and compassion toward others can contribute to a sense of fulfillment, purpose, and happiness. They argue that altruism not only benefits recipients but also brings psychological rewards to the giver.

Overall, the philosophy of altruism reflects on the moral significance of selflessness and compassion in human life, examining its ethical foundations, psychological mechanisms, and practical implications for individual and collective flourishing.

#philosophy#epistemology#knowledge#learning#chatgpt#education#ethics#psychology#Morality#Selflessness#Compassion#Utilitarianism#Deontology#Virtue ethics#Evolutionary psychology#Social justice#Happiness#altruism

2 notes


Text

I’m just a brave little gay being forced to fight utilitarianism off with a big stick

17 notes


Text

#immanuel kant#kant#kantianism#philosophy memes#philosophy#philosophy meme#deontology#deontological ethics#political philosophy#legal philosophy#natural rights#social contract#classical liberalism#libertarianism#minarchism

48 notes



Text

#poll#the trolley problem#trolley problem#ethical dilemma#ethics#consequentialism#deontology#utilitarianism

5 notes


Text

"Only do something that you would want other people to do in the same situation"

3 notes


Text

FTX and the Problem of Justificatory Ethics

א. Preface

This is my first chance to get to write straightforwardly on this blog on the topic of philosophy, and while I plan to write a full introduction to what I want this to be in the future, I figure I’ll offer a couple of disclaimers now:

I am probably retreading covered ground. This is a space for me to work out my thoughts, not publish brilliant and original philosophic work (gotta leave that for the journals!)

I am gonna get stuff wrong. Tell me if you think I do, and we can fight about it

I think many of the EA people are wonderful, smart, and good. Many of them are my friends, and I hope we can all engage in this argument in good faith.

With that out of the way, let’s begin

ב. The Scandal

In the past week or so, a scandal has emerged surrounding the crypto billionaire, political donor, effective altruist, and philanthropist Sam Bankman-Fried (SBF). It seems now like he was engaged in a Ponzi-scheme or Ponzi-adjacent scheme, moving money from FTX, his crypto-exchange, to a hedge fund he founded and remained affiliated with: Alameda Research. The fraud is massive, and billions of dollars are involved. The scheme, most observers agree, constitutes an ethical violation on a massive scale.

SBF and his co-conspirators are particularly attractive as a media spectacle because of Bankman-Fried’s political connections (he was a top campaign contributor to Democrats in the 2022 election) and lots of condescending and gossipy reporting on a polyamorous relationship among those involved at the top of FTX and Alameda Research. None of that is of particular interest to us here. What is of particular interest is SBF’s involvement in the Effective Altruist (EA) movement.

ג. The Problem

The concern here is clear: did SBF engage in fraud knowingly and with the belief that he is morally justified?

The fact of the matter is that’s probably an unanswerable question: no one except Sam knows why he did what he did, and his motivations are likely muddled and unclear even to him. But here’s what I’d like to posit: the mere potential for an effective altruist justification of the scheme presents a problem for Effective Altruism as a philosophical project. If Effective Altruism is uniquely positioned to produce results that even its proponents detest, it (as a theory) is, to put it gently, in deep sh*t.

I’m not the only one who thinks so, either. William MacAskill, an ethical philosopher who is extremely influential in EA circles, thought it was so dire a threat to EA that he wrote a paragraphs-long Twitter thread on the subject. The rest of this post will try to untangle that thread and see if its contentions hold up.

ד. Contra MacAskill

After some preliminary remarks expressing his frustration and anger at SBF, as well as an explanation and renunciation of his ties with SBF, MacAskill dives into the philosophical meat:

I want to make it utterly clear: if those involved deceived others and engaged in fraud (whether illegal or not) that may cost many thousands of people their savings, they entirely abandoned the principles of the effective altruism community.

This is an interesting start, and it makes what MacAskill is trying to do here clear. For EA to maintain its credibility, it cannot be associated with the FTX scheme. MacAskill goes on:

For years, the EA community has emphasised the importance of integrity, honesty, and the respect of common-sense moral constraints. If customer funds were misused, then Sam did not listen; he must have thought he was above such considerations. A clear-thinking EA should strongly oppose “ends justify the means” reasoning. I hope to write more soon about this. In the meantime, here are some links to writings produced over the years.

At this point, anyone familiar with EA should be scratching their head. EA opposed to ends-justify-the-means thinking? Isn’t that, like, the whole point of consequentialism? The answer: it is, and MacAskill knows that. What becomes clear as you read the rest of the thread (and its accompanying citations) is that he’s trying to thread a very important needle. Philosophically, EA believes that the ends justify the means, but practically it must avoid engaging in that sort of moral reasoning. As I’ll get to later, this is untenable, but let’s take it at face value for the time being.

What MacAskill does next is cite some existing EA literature that suggests this kind of argument, ostensibly to show that EA has always incorporated these ideas into their broader philosophy.

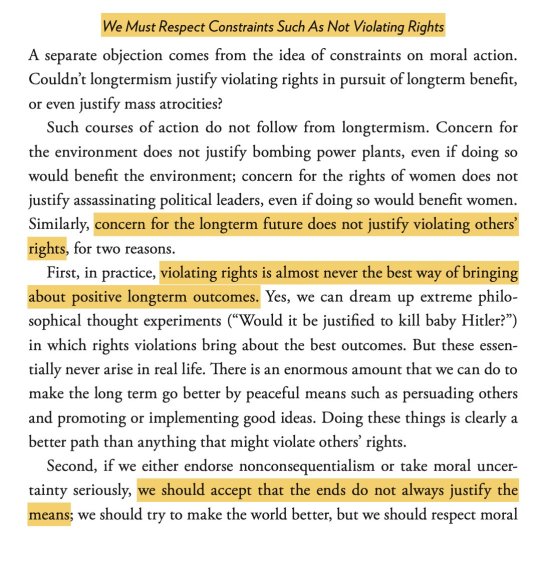

From MacAskill’s book What We Owe The Future

These are some pretty interesting selections, and I think they get to the real heart of the matter. I’ll start with the end, where he discusses “either endors[ing] nonconsequentialism or tak[ing] moral uncertainty seriously.” Frankly, I think the nonconsequentialism thing is basically just an ass-covering maneuver. EA is consequentialist, and near-dogmatically so; that’s just what it is. The moral uncertainty piece is very interesting, and maybe represents EA’s ticket out of this dilemma, but only in a way that dramatically undercuts the movement. A truly morally uncertain person just should not be a longtermist effective altruist.[1]

However, the first two arguments are interesting. They can essentially be summed up as:

The Hedging Argument: Ethical decisions are made in conditions of uncertainty, and standard ethical principles represent a good method for what is essentially hedging, or minimizing risk

The Extraordinary Circumstances Argument: While there are valid circumstances in which violating standard ethical principles is justified, they are so infrequent that they aren’t worthy of concern.

Here is, as I see it, the trouble for MacAskill: neither of these arguments would have a chance of convincing SBF. The reasons for this are simple: dramatic ethical decisions are always risky, they are always extraordinary, and they are exactly what EA tells you is the right thing to do. Let’s take these in reverse order:

EA is, at its core, a maximizing argument. You have to maximize utility, globally, and failure to do so is a moral failing. This means that to be the best person possible, you have to accrue as much money and/or power as possible, and then direct it towards the most marginally efficient production of utility. One way to do that is to run a Ponzi scheme and give the money away to philanthropy.

When you are in control of vast resources, as EA incentivizes you to be and as SBF assuredly was, every decision you make about those resources is, by nature, extraordinary. Sure, you shouldn’t rob a baby to save a baby in Africa, but if you can rob BILLIONS to save BILLIONS, then you find yourself in the same kind of “baby Hitler scenario” MacAskill wants to pretend doesn’t actually arise. But it does.

When you are in control of vast resources, by the nature of opportunity cost, every decision you make about them (including the decision not to act) is extremely risky. Not defrauding your crypto customers could mean not lobbying the elected representatives you could bribe into preventing the next pandemic. Isn’t the next pandemic also a massive risk? When you reach the scale of billions of dollars under your control, hedging becomes a useless tactic. Isn’t doing the quote-unquote wrong thing the less risky proposition in the face of global annihilation?

ה. Conclusion

So where does that leave EA, and what the hell do I mean by “justificatory ethics?” Well, I think EA has a fundamental flaw that allows its adherents to engage in moral reasoning that upends the whole project.

This is because, by its maximizing nature, it incentivizes the conditions in which the moral principles which are supposed to prevent it from self-defeat are stripped away. It self-defeats its own self-defeat protection! Now, if William MacAskill and other EAs were simply willing to accept this result, perhaps they could survive as a niche, insulated community, although (as is becoming clear from the overwhelmingly negative media coverage of this entire affair) it probably couldn’t achieve the popular success it desires. But even the members of EA resent what SBF did; they find it morally abhorrent. And yet, they lack the very terms to define why it’s wrong.

ו. Post Script

Well, that’s my first post done! I hope everyone liked it. I sure had fun writing it, and I hope I didn’t make anyone too mad! If you’re reading this on Tumblr, please follow me on here, and if you’re reading this through my substack, please subscribe, it’s free!

[1] Moral uncertainty (and its cousin Moral Pluralism) are super interesting ideas and I hope to talk about them more in the future.

9 notes


Text

What is it like to be Immanuel Kant?

What does it matter how a bland, routine-freak, heavy smoking, ethics-preaching Königsberg philosopher lived—or advised others to live? For instance, I was never introduced to Immanuel Kant until I began my postgraduate studies at Delhi University. Until then, Kant had remained an enigma to me. However, Kant’s philosophy has significantly influenced how I have come to think of morality in the…

#categorical imperative#Deontological ethics#Deontology#Does Immanuel Kant believe in God?#hypothetical imperative#Immanuel Kant#immanuel kant books#immanuel kant on religion#immanuel kant philosophy#immanuel kant quotes#Kant#quotes by immanuel kant#What is Immanuel Kant best known for?#What is Kant main philosophy?#What is Kant most famous for?#What is Kant&039;s most famous principle?#What is life according to Immanuel Kant?#इम्मानुएल कांट के अनुसार जीवन क्या है?

2 notes


Text

i’m trying to read the maze runner and every few pages i get the urge to scream because it’s so heavily rooted in deontology and as someone who firmly leans toward utilitarianism i just wanna yell at thomas that surrendering to WICKED is worth the chance for a cure

#maze runner#james dashner#thomas#thomas maze runner#newt maze runner#teresa agnes#wicked is good#scorch trials#death cure#the maze runner#philosophy#ethics#utilitarianism#deontology

3 notes


Text

A Critique of J.S. Mill's Utilitarianism

In his seminal 1861 work Utilitarianism, John Stuart Mill presents an argument in support of utilitarian ethics in philosophy, arguing that “actions are right in proportion as they tend to promote happiness, wrong as they tend to produce the reverse of happiness” and claiming “that pleasure, and freedom from pain, are the only things desirable as ends; and that all desirable things… are desirable either for the pleasure inherent in themselves, or as means to the promotion of pleasure and the prevention of pain” (Utilitarianism, II.2). Mill distinguishes his claim from then-contemporary conceptions of utility, namely that of Bentham’s An Introduction to the Principles of Morals and Legislation, through his conception of higher and lower pleasures. This work explores this latter aspect of Utilitarianism and asserts that Mill’s distinction of higher pleasures from their counterparts is insufficient to absolve Mill’s theories of the flaws of then-contemporary utilitarian thought.

Higher pleasures, according to Mill, are distinct from those which are lower, or “base” insofar as the pleasure is one that would be chosen above the most complete fulfillment of some other pleasure in an individual familiar with each, stating:

“Few human creatures would consent to be changed into any of the lower animals, for a promise of the fullest allowance of a beast’s pleasures; no intelligent human being would consent to be a fool, no instructed person would be an ignoramus, no person of feeling and conscience would be selfish and base, even though they should be persuaded that the fool, the dunce, or the rascal is better satisfied with his lot than they are with theirs.” (Utilitarianism, II.6)

This seems to offer a tangible (if subjective) calculus for determining which pleasures may be deemed greater. Mill seems to agree with the Kierkegaardian assertion that the aesthetic life is lesser than the ethical (Kierkegaard, 1959). By this, one would assume that loving, vulnerable relationships are worth the sacrificial pain of work when compared to the pleasurable, immediate, and empty occupation of the “hook-up,” or that a being of social ability would not at any juncture choose to abandon their community in favor of the joys of independent pleasure-seeking. On its surface, the distinction seems reasonable and plain: whatever is chosen over another thing is more valuable (and therefore offers greater pleasure) than the alternative.

However, when examined through a finer lens, the argument stands on shaky limbs. Mill’s distinction between human and beast is well and good, until one notes that the capacity for higher pleasures seems to be the only delineating factor in Mill’s construction. If one may discriminate against an animal on the basis of its higher functions, then so too may one discriminate against the fool, ignoramus, dunce, or rascal, as their faculties are, in Mill’s explanation, lower. Were such a discrimination to occur (the establishment of the limit of one’s faculties and the forbiddance of whatever lies beyond it) it would be plainly seen as a violation of the autonomous freedoms of those people. Furthermore, this distinction serves as a ready tool, a sharpened sword by which those in power may justify their injustice. The distinction itself, in its reliance on a measure of choice, indicates that what is chosen most universally is the ultimate pleasure and goal of the human race. What pleasure, beyond any other, is chosen for its greatest fulfillment by the most people? One could contend that whatever fosters addiction and the abandoning of the ethical life would be the greatest pleasure: a universal agent which dissolves pain and induces euphoria, say, narcotics. Many abandon their (presumably) higher faculties in pursuit of such a substance. It would follow, then, that rule utilitarianism justifies the complete inoculation of humanity with the substance, ad nauseam, as the final climactic goal of the human race, a utopia of utiles. One should note, then, that this utopia is one where we are worms.

Mill appears conflicted in his moralist constructions—unable to dialectically hold that utilitarianism and hedonistic pursuits of pleasure are useful, and that they are not perfectly reflective of the human condition or of the “good life.” In trying to rectify this cognitive dissonance, Mill’s Utilitarianism distinguishes higher pleasure from the lower, where Bentham’s work did not, but fails to justify the argument rigorously, fundamentally devaluing individuals on the basis of pleasurable capacity, and reinforcing the common critique of hedonistic value as insufficient in its description of the human condition.

Works Cited:

Bentham, Jeremy, 1789 [PML]. An Introduction to the Principles of Morals and Legislation, Oxford: Clarendon Press, 1907.

Kierkegaard, Søren, 1959. Either/Or, Garden City, N.Y.: Doubleday.

Mill, John Stuart, 1861 [U]. Utilitarianism, Roger Crisp (ed.), Oxford: Oxford University Press, 1998.

DISCLAIMER: This work is absolved from my worldview! You know nothing about my beliefs and will never fully comprehend them via this medium! We aren't friends, we don't know each other, and this work's statements and implications are not intended to be impressionistic of my own! The barrier between art and artist is its publication:)

#philosophy#art#ethics#utilitarianism#deontology#js mill#bentham#kierkegaard#aestheitcs#aesthetic#ethical#critique

Text

A Useful Combination of Ethical Systems

The goal of ethics is to determine what is morally right and wrong in human behavior, and to provide guidance for how individuals and society ought to make moral decisions. Different ethical systems have different perspectives on what is morally right and wrong, and what the goal of ethics should be.

One combination often proposed as aligning closely with the goal of ethics draws on consequentialism, deontology, and virtue ethics.

Consequentialism emphasizes the importance of promoting the greatest good and minimizing harm, which aligns with the goal of promoting well-being and reducing suffering.

Deontology emphasizes the importance of moral rules and principles, and the moral rightness or wrongness of actions independent of their consequences. This aligns with the goal of providing guidance on how to act in accordance with moral principles.

Virtue ethics emphasizes the importance of developing good character and becoming a virtuous person. This aligns with the goal of encouraging the cultivation of virtues and moral excellence, which can be a key element to living a good life and promoting the well-being of others.

This combination allows us to consider the consequences of an action, the nature of the action itself, and the moral character of the person making the decision, which leads to a more comprehensive and well-rounded approach to ethics.

It's important to keep in mind that different people may have different perspectives on what the goal of ethics is, and on which combination of ethical theories aligns most closely with that goal. Some may prioritize different values or hold different beliefs about what is morally right and wrong. Still, combining consequentialism, deontology, and virtue ethics is one commonly proposed way to determine what is morally right and wrong and to guide moral decision-making.

Given the combination of consequentialism, deontology, and virtue ethics, determining what is considered "right" in any given situation would involve considering a number of different factors.

From a consequentialist perspective, the morally right action would be the one that leads to the greatest overall good or the least harm. This approach would involve evaluating the potential consequences of different actions, and choosing the one that is most likely to promote well-being and reduce suffering.

From a deontological perspective, the morally right action would be the one that is in accordance with moral rules and principles. This approach would involve determining whether an action is inherently right or wrong, regardless of its consequences. For example, it is morally wrong to intentionally harm an innocent person, regardless of the potential consequences of that action.

From a virtue ethics perspective, the morally right action would be the one that is consistent with the virtues and moral character of the person making the decision. This approach would involve considering whether the action is in line with virtues such as honesty, courage, and compassion, and whether it reflects the moral excellence of the person making the decision.

Combining these perspectives means evaluating the potential consequences of an action, determining whether the action accords with moral rules and principles, and considering whether it is consistent with the virtues and moral character of the person acting. All of these factors are then taken into account to determine what is "right" in a given situation.

It's important to note that even with this combination, there may be cases in which the moral rightness of an action is uncertain or unclear, and the decision-making process may involve weighing the different factors and considering trade-offs. Additionally, different people may have different perspectives on what is "right" in a given situation, depending on their values and beliefs.

#philosophy#epistemology#ethics#morality#ethical systems#ethical theories#education#learning#values#knowledge#systems#theory#right#wrong#moral#consequentialism#deontology#virtue ethics
