#Peter Mullin

Text

The Lord of the Rings: The Rings of Power Review

The Lord of the Rings is a novel by J.R.R. Tolkien that Peter Jackson adapted into three films in the early 2000s: The Fellowship of the Ring, The Two Towers, and The Return of the King. The three films garnered critical acclaim, winning many Academy Awards, and were a hit with fans, making quite a bit of money in theaters. It makes sense that somebody would want to buy the rights to this successful IP…


#Amazon Prime#Benjamin Walker#Charles Edwards#Gennifer Hutchinson#Ismael Cruz Cordova#JA Bayona#Jeff Bezos#JRR Tolkien#Markella Kavanagh#Morfydd Clark#Nazanin Boniadi#Owain Arthur#Patrick McKay#Peter Mullin#Prime Video#Robert Aramayo#The Lord of the Rings#The Rings of Power


Text

Edit:

Here's the link

https://discord.com/invite/xbUyStxSwm

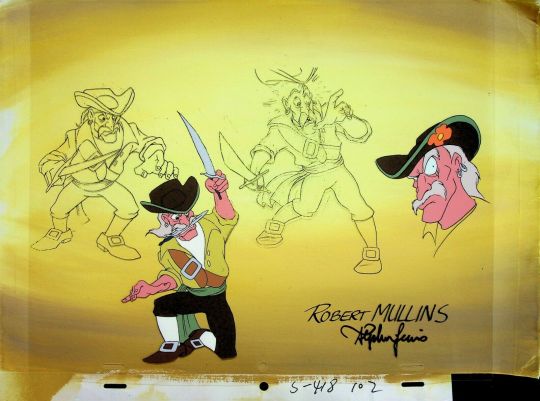

#fox kids peter pan#foxs peter pan and the pirates#fox's peter pan and the pirates#fox's captain hook#fox kids#fox kids captain hook#90's cartoons#peter pan#captain hook#wendy darling#poll#tumblr polls#Tinkerbell#mr. smee#robert mullins#billy jukes#cookson#alf mason#gentleman Starkey#fox#20th century#20th centery fox#20th century fox


Text

#pp&p#peter pan and the pirates#fanart#fox's peter pan & the pirates#screencap#panoramic#ages of pan#pirates#captain hook#smee#cookson#mullins#starkey#jukes#mason



Text

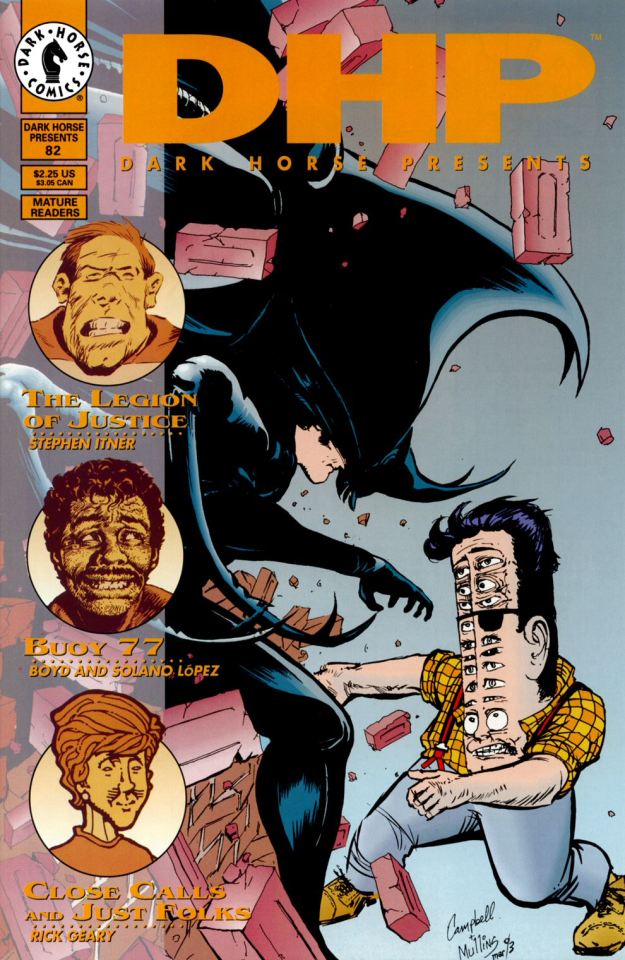

Dark Horse Presents #98 (June 1995) cover by Eddie Campbell, Peter Mullins, Hayley Campbell and Perry McNamee.

#dark horse presents#comic covers#90s#dark horse#eddie campbell#peter mullins#hayley campbell#perry mcnamee


Text


Final LPs that were not intended as such rarely give us a glimpse of a departure that is just around the corner; they are basically understood as business as usual. For instance, R.E.M. shocked me with their decision to stop. While they apparently did plan to end with Collapse Into Now (they said so themselves), one could foresee a future where they continued to release records as the elder statesmen everyone's familiar with, yet few pay attention to. To be honest, R.E.M. had been like this for the last couple of albums, yet they still had the cachet of their past. However, they always seemed like a band constantly on the move, so the last phase probably suffocated them. Were the times actually telling them to quit?

#Youtube#r.e.m.#collapse into now#walk it back#michael stipe#mike mills#peter buck#bill rieflin#greg hicks#craig klein#mark mullins#jacknife lee#10's music#rock


Text

Bugatti 'Aerolithe'

Only four Type 57 Atlantic Coupes were ever produced by Bugatti. One of them went to Parisian entrepreneur Jacques Holzschuh, before meeting its end on a railway crossing. Another was delivered new to Baron Victor Rothschild and is owned today by American collector Peter Mullin. A third is currently in the hands of Ralph Lauren, while the fourth? Nobody knows. One of the great mysteries of the car world is the whereabouts of ‘La Voiture Noire’: Jean Bugatti’s very own Type 57 Atlantic.

But the car that previewed the lot of them is the one above. Sort of. It’s a rebodied, restored Bugatti ‘Aerolithe’; a prototype first shown off at the 1935 Paris Motor Show.

A man named David Grainger from The Guild of Automotive Restoration was commissioned to recreate the car that paved the way for the most famous pre-war car ever built. He had the original chassis of the Aerolithe – number 57104 – as well as its original 3.3-litre eight-cylinder engine, and rear axle. But no body.

Indeed, David’s team at The Guild had but 11 photos to work from… and had to adhere to coachbuilding standards of the day. Which means they had to fashion the body from magnesium, which is – by all accounts – incredibly difficult to work with. They spent years riveting and shaping the panels into that simply gorgeous Aerolithe form.

#art#design#luxury cars#supercars#sport cars#luxurycars#luxurylifestyle#supercar#vintage cars#luxurycar#bugatti#recreation#aerolithe#type 57#bugatti type 57#david grainger#vintagecars#vintagecar#collectors#unique car#history#style


Text

i finished the KOSA

On the Subject of Safety

Blaine/Alex Smith

A paper on the harmful effects of the Kids Online Safety Act (informally known as KOSA) on the internet as a whole, as written by a minor.

Censorship. Control. Tyranny. All of these will come to pass if we allow the Kids Online Safety Act (KOSA) to become law and cause the internet as we know it to cease to exist, completely and utterly. KOSA is a new bill that has been introduced by Senators Blumenthal and Blackburn, which has the stated goal “to protect the safety of children on the internet” (found on the official website of the United States Congress, here). This is either a lie, or an incredibly misguided attempt to do what it says it sets out to do, though it is more likely a lie, or at the very least not the entire truth. First, I must note, if for no other reason than posterity and comprehensiveness, that the bill was introduced by both a Republican senator (Blackburn) and a Democratic senator (Blumenthal). It was also backed by twenty-one Democrats (Lujan, Baldwin, Klobuchar, Peters, Hickenlooper, Warner, Coons, Schatz, Murphy, Welch, Hassan, Durbin, Casey, Whitehouse, Kelly, Carper, Cardin, Menendez, Warren, Kaine, and Shaheen) and nineteen Republicans (Capito, Cassidy, Ernst, Daines, Rubio, Sullivan, Young, Grassley, Graham, Marshall, Hyde-Smith, Mullin, Risch, Britt, Lummis, Murkowski, Lankford, Crapo, and Hawley). Counting the two senators who introduced it, that totals forty-two supporters of this heinous piece of legislation. Considering that there are one hundred Senators, if you do some simple math, you will realize that fifty-eight Senators did not support KOSA. In the context of this monumental decision, that is a frighteningly close vote. If this bill were to pass, nearly all online fandom presence would be completely eradicated, and online privacy, as well as personal autonomy, would become nonexistent. And so, I say it again, KOSA supporters are just barely not the majority in the Senate, by a margin of sixteen individuals. Even though the rewrite of the bill is a slight improvement, at its core, KOSA is still a harmful internet censorship bill that will damage the very communities it claims to try to protect.
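The tally in the paragraph above can be double-checked with a few lines of Python. This is only a sketch: the variable names are made up for illustration, and the counts are the ones stated in this paper, not pulled from an official record.

```python
# Counts as stated in the paragraph above (not from an official roll).
SENATE_SEATS = 100
sponsors = 2             # Blumenthal and Blackburn, who introduced the bill
democratic_backers = 21
republican_backers = 19

supporters = sponsors + democratic_backers + republican_backers
non_supporters = SENATE_SEATS - supporters
gap = non_supporters - supporters  # how far supporters trail non-supporters

print(supporters, non_supporters, gap)
```

The forty-two only works out if the two introducing senators are counted on top of the forty backers.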

In this bill, a “child” is defined as any person under the age of thirteen, and a “minor” is defined as any person under the age of seventeen. A “parent” is defined as any legal adult who is the biological or adopted parent of a minor, or holds legal custody over a minor. “Compulsive usage” is defined as “any response stimulated by external factors that causes an individual to engage in repetitive behavior reasonably likely to cause psychological distress, loss of control, anxiety or depression”. “Covered platforms” are defined as “an online platform (e.g. Snapchat, Tumblr, Reddit, Twitter/X, Facebook, Instagram, etc.), online video game (e.g. Helldivers 2, Halo, Mario Kart, Civilization 6, etc.), messaging platform (e.g. Snapchat, 4chan, etc.), or video streaming service (e.g. Twitch, YouTube, etc.) that connects to the internet that is used, or is reasonably likely to be used, by a minor” with the exception of “an entity acting in its capacity of: a common carrier service subject to the Communications Act of 1934 (47 U.S.C. 151 et seq.) and all Acts amendatory thereof and supplementary thereto; a broadband internet access service (as such term is defined for purposes of section 8.1(b) of title 47, Code of Federal Regulations, or any successor regulation); an email service; a teleconferencing or video conferencing service that allows reception and transmission of audio and video signals for real-time communication, provided that it is not an online platform, including a social media service or social network, and the real-time communication is initiated using a unique link or identifier to facilitate access; or a wireless messaging service, including such a service provided through short messaging service or multimedia messaging service protocols, that is not a component of or linked to an online platform and where the predominant or exclusive function is direct messaging consisting of the transmission of text, photos or videos that are sent by electronic means, where messages are transmitted from the sender to a recipient, and are not posted within an online platform or publicly; an organization not organized to carry on business for its own profit or that of its members; any public or private institution of education; a library; a news platform where the inclusion of video content on the platform is related to the platform’s own gathering, reporting or publishing of news content or the website or app is not otherwise an online platform; a product or service that primarily functions as business-to-business software; or a VPN or similar service that exists solely to route internet traffic between locations.”

Geolocation is defined as “information sufficient to identify a street name and name of a city or town.” Individual-specific advertising to minors is defined as any form of targeted advertising towards a person who is or reasonably could be a minor, with the exception of advertising in the context of the website or app the advertising is present on (e.g. an ad for Grammarly on a dictionary website), or for research on the effectiveness of the advertising. The rule of construction is stated to be that “no part of KOSA should be misconstrued to prohibit a lawfully operating entity from delivering content to any person that they know to be or reasonably believe is over the age of seventeen, provided that content is appropriate for the age of the person involved”. To “know” something in the context of this bill is to have actual information, or to have information that is fairly implied or assumed under the basis of objective circumstances. A “mental health disorder” is defined as “a condition which causes a clinically significant disturbance or impairment on one’s cognition, emotional regulation, or behavior.” “Online platform” is defined as any public platform that mainly provides a means of communication through the form of a public forum or private chatroom (e.g., Discord, Reddit, Tumblr, Twitter, TikTok, etc.). An “online video game” is defined as any video game or game-adjacent service, including those that provide an educational element, that allows users to create or upload content, engage in microtransactions, communicate with other users, or includes minor-specific advertising (e.g., Happy Wheels, Adventure Academy, Meet Your Maker, etc.). “Personal data” is defined as any data or information that is linked or could reasonably be linked to a minor. A “personalized recommendation system (PRS)” is defined as any system that presents content to a user based on data and/or analytics from the user or similar users (e.g., a like-based system, a view-based system, a “for you” system, or a “people that watched also liked” system). Finally, “sexual exploitation or abuse” is defined as: coercion and enticement, as described in section 2422 of title 18, United States Code; child sexual abuse material, as described in sections 2251, 2252, 2252A, and 2260 of title 18, United States Code; trafficking for the production of images (child pornography), as described in section 2251A of title 18, United States Code; or sex trafficking of children, as described in section 1591 of title 18, United States Code.
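To make the two age cutoffs from the start of these definitions concrete, here is a minimal sketch. The function names are hypothetical (they are not terms from the bill), and it assumes age is given in whole years.

```python
CHILD_CUTOFF = 13   # a "child" is any person under thirteen
MINOR_CUTOFF = 17   # a "minor" is any person under seventeen


def is_child(age: int) -> bool:
    """True if the age falls under the paper's stated 'child' cutoff."""
    return 0 <= age < CHILD_CUTOFF


def is_minor(age: int) -> bool:
    """True if the age falls under the paper's stated 'minor' cutoff."""
    return 0 <= age < MINOR_CUTOFF


for age in (12, 13, 16, 17):
    print(age, is_child(age), is_minor(age))
```

Under these cutoffs every child is also a minor, so rules written for minors cover children too, while the child-only rules stop applying at thirteen.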

Before I continue with this paper, I would like to draw attention to the fact that nowhere in the defined terms does it define any form of physical, mental, or emotional violence towards minors, only sexual abuse or assault. This will become important later.

Continuing with the bill, we arrive at the actual regulations, policies and laws proposed by the bill. Here begins Section 3 of the bill, or “Duty of care”. This section includes regulations on the online platform used by a minor, and the duties of it and its owner(s), creator(s), moderator(s), or manager(s). In this section, it is stated that a covered platform must, to the best of its reasonable ability, limit the following harms or dangers to any person who is known to be or reasonably believed to be a minor:

Anxiety, depression, substance use disorders, suicidal disorders, and other similar conditions.

Patterns of use that promote or encourage addiction-like behavior.

Physical violence, online bullying, and harassment of the minor.

Sexual exploitation or abuse.

Promotion and marketing of narcotic drugs (as defined in section 102 of the Controlled Substances Act (21 U.S.C. 802)), tobacco products, gambling, or alcohol.

Predatory, unfair, or deceptive marketing, or other financial harms.

With the exception of:

Any content that is deliberately, independently, and willingly requested or sought out by the minor.

Any platform that provides resources or information to help mitigate the aforementioned harms to the minor.

Again, I call attention to the fact that although physical violence is now mentioned and prohibited, it is done so by burying it in the middle of a wall of text. With a subject as touchy as this, one would think that more attention would be drawn to it.

And now we get into the meat and potatoes of this rotten bill, with Section 4, or “Safeguards for minors”. In this section, it is stated that any covered platform shall provide any user who is known to be a minor or is reasonably believed to be a minor, with easy-to-use and readily accessible “safeguards” such as:

Limiting the ability of other individuals to communicate with the minor.

Restricting public access to the personal data of the minor.

Limiting features that increase, sustain, or extend use of the covered platform by the minor, such as automatic playing of media, rewards for time spent on the platform, notifications, and other features that result in compulsive usage of the covered platform by the minor.

Controlling personalized recommendation systems for the minor, and giving them the option to either opt out of the system entirely or to limit the types of content shown through the PRS.

Restricting the sharing of GPS data of the minor sufficient for geolocation.

As well as options for the minor to:

Delete the account of the minor, as well as any data or content associated with the account, effectively removing any trace of the account’s existence from the platform, and the internet at large.

Limit the amount of time spent on the platform.

A covered platform shall also provide parents with the following tools:

Requirements:

The ability to manage a minor’s privacy and account settings, including the safeguards and options established under subsection (a), in a manner that allows parents to:

View the privacy and account settings of the minor.

In the case of a user that the platform knows is a child, change and control the privacy and account settings of the account of the minor.

Restrict purchases and financial transactions by the minor, where applicable.

View metrics of total time spent on the platform and restrict time spent on the covered platform by the minor.

The bill also states that any minor(s) affected by the aforementioned systems should be given clear, prompt, easily understood, and accessible notice of whether the systems have been applied, in what capacity, and how.

Default Tools:

A covered platform shall provide the following tools and systems to both minor and parent(s) of that minor, and have them be enabled by default:

A reporting mechanism, which is readily available and easily accessible and useable, to report any incidents or crimes involving the minor(s), as well as confirmation that such a report is received, and the means to track it.

A timely and appropriate response to the report, within a reasonable timeframe.

For this purpose, a reasonable timeframe is:

(A) 10 days (about 1 and a half weeks) after the receipt of a report, if, for the most recent calendar year, the platform averaged more than 10,000,000 active users monthly in the United States.

(B) 21 days (about 3 weeks) after the receipt of a report, if, for the most recent calendar year, the platform averaged less than 10,000,000 active users monthly in the United States.

(C) notwithstanding subparagraphs (A) and (B), if the report involves an imminent threat to the safety of a minor, as promptly as needed to address the reported threat to safety.
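The three cases above boil down to a small decision rule, sketched here in Python. The function name and parameters are hypothetical. Two assumptions are baked in: a platform averaging exactly 10,000,000 users falls under neither "more than" nor "less than" as summarized here, so the sketch arbitrarily gives it the 21-day limit, and an imminent threat is represented as `None` because (C) sets no fixed number of days.

```python
from datetime import date, timedelta
from typing import Optional

LARGE_PLATFORM_THRESHOLD = 10_000_000  # average monthly active US users


def response_deadline(
    received: date,
    avg_monthly_us_users: int,
    imminent_threat: bool = False,
) -> Optional[date]:
    """Latest response date for a report, or None if no fixed limit applies."""
    if imminent_threat:
        # Case (C): "as promptly as needed", so there is no day count to add.
        return None
    if avg_monthly_us_users > LARGE_PLATFORM_THRESHOLD:
        days = 10  # case (A)
    else:
        days = 21  # case (B); exactly 10,000,000 lands here by assumption
    return received + timedelta(days=days)


print(response_deadline(date(2024, 3, 1), 25_000_000))
print(response_deadline(date(2024, 3, 1), 500_000))
```

A caller would treat `None` as "respond immediately" instead of as "no deadline".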

Accessibility:

With respect to the aforementioned safeguards, a covered platform shall provide:

Information and control options in a clear and conspicuous manner that takes into consideration the differing ages, capacities, and developmental needs of the minors most likely to access the covered platform and does not encourage minors or parents to weaken or disable safeguards or parental controls.

Readily accessible and easy-to-use controls to enable or disable safeguards or parental controls, as appropriate.

Information and control options in the same language, form, and manner as the covered platform provides the product or service used by minors and their parents.

The bill also states that no part of the previous statements should be construed to prohibit or prevent a covered platform from blocking, banning, filtering, flagging, or deleting content inappropriate for minors from their platform, or to prevent general management of spam, as well as security risks. It also states that regarding PRSs, it shall be illegal for a covered platform to alter or distort any analytics or data gathered from their platform with the purpose of limiting user autonomy. Also included in this section is that no part of KOSA shall be construed to require the disclosure of a minor’s personal data, including search history, contacts, messages, and location. Also stated is that a PRS for a minor is allowed under the condition that it only uses data publicly available, such as the language the minor speaks, or the minor’s age, and that covered platforms are allowed to integrate third-party systems into their platform, if they meet the requirements laid out in the bill.

And now our train arrives at Section 5, “Disclosure”. This section includes rules regarding the disclosure of policies, rules, and terms to a user that is a minor or is reasonably believed to be a minor prior to their registration with a covered platform.

Registration or Purchase:

Prior to registration with or purchase of a covered platform, the platform must provide clear and easily understood information on its policies, rules, regulations, etc., as well as on any PRS it uses and on how to turn the PRS and the parental controls on and off.

Notification:

Notice and Acknowledgement, Reasonable Effort, and Consolidated Notices:

In the case of an individual that a covered platform knows is a child, the platform shall additionally provide information about the parental tools and safeguards required under section 4 to a parent of the child and obtain verifiable parental consent (as defined in section 1302(9) of the Children's Online Privacy Protection Act (15 U.S.C. 6501(9))) from the parent prior to the initial use of the covered platform by the child.

A covered platform shall be deemed to have satisfied the requirement described in subparagraph (A) if the covered platform is in compliance with the requirements of the Children's Online Privacy Protection Act (15 U.S.C. 6501 et seq.) to use reasonable efforts (taking into consideration available technology) to provide a parent with the information and to obtain verifiable parental consent as required.

A covered platform may consolidate the process for providing information under this subsection and obtaining verifiable parental consent or the consent of the minor involved (as applicable) as required under this subsection with its obligations to provide relevant notice and obtain verifiable parental consent under the Children's Online Privacy Protection Act (15 U.S.C. 6501 et seq.).

Guidance: the FTC may assist covered platforms with compliance with the rules and regulations in this section.

A covered platform that operates a personalized recommendation system shall set out in its terms and conditions, in a clear, conspicuous, and easy-to-understand manner:

An overview of how such personalized recommendation system is used by the covered platform to provide information to users of the platform who are minors, including how such systems use the personal data of minors; and

Information about options for minors or their parents to opt out of or control the personalized recommendation system (as applicable).

Advertising and Marketing Information and Labels:

A covered platform that facilitates advertising aimed at users that the platform knows are minors shall provide clear, conspicuous, and easy-to-understand information and labels to minors on advertisements regarding:

The name of the product, service, or brand and the subject matter of an advertisement;

If the covered platform engages in individual-specific advertising to minors, why a particular advertisement is directed to a specific minor, including material information about how the minor's personal data is used to direct the advertisement to the minor; and

Whether media displayed to the minor is an advertisement or marketing material, including disclosure of endorsements of products, services, or brands made for commercial consideration by other users of the platform.

Resources for Parents and Minors:

A covered platform shall provide to minors and parents clear, conspicuous, easy-to-understand, and comprehensive information in a prominent location regarding:

Its policies and practices with respect to personal data and safeguards for minors.

How to access the safeguards and tools required under section 4.

Resources in Additional Languages:

A covered platform shall ensure, to the extent practicable, that the disclosures required by this section are made available in the same language, form, and manner as the covered platform provides any product or service used by minors and their parents.

That concludes Section 5, and now our next stop is Section 6: “Transparency”. This section includes protocols for public transparency for covered platforms, including public reports on the status of potential harm to minors on their platforms.

Scope of Application: the requirements of this section shall apply to a covered platform if:

For the most recent calendar year, the platform averaged more than 10,000,000 active users monthly in the United States.

The platform predominantly provides a community forum for user-generated content and discussion, including sharing videos, images, games, audio files, discussion in a virtual setting, or other content, such as acting as a social media platform, virtual reality environment, or a social network service.

Transparency: The public reports required of a covered platform under this section shall include:

An assessment of the extent to which the platform is likely to be accessed by minors;

A description of the commercial interests of the covered platform in use by minors;

An accounting, based on the data held by the covered platform, of:

The number of individuals using the covered platform reasonably believed to be minors in the United States;

The median and mean amounts of time spent on the platform by minors in the United States who have accessed the platform during the reporting year on a daily, weekly, and monthly basis; and

The amount of content being accessed by individuals that the platform knows to be minors that is in English, and the top 5 non-English languages used by individuals accessing the platform in the United States;

An accounting of total reports received regarding, and the prevalence (which can be based on scientifically valid sampling methods using the content available to the covered platform in the normal course of business) of content related to, the harms described in section 3, disaggregated by category of harm and language, including English and the top five non-English languages used by individuals accessing the platform from the United States.

A description of any material breaches of parental tools or assurances regarding minors, representations regarding the use of the personal data of minors, and other matters regarding non-compliance.

Reasonably Foreseeable Risk of Harm to Minors: the public reports required of covered platforms under this section shall include:

An assessment of the reasonably foreseeable risk of harm to minors posed by the covered platform, including identifying any other physical, mental, developmental, or financial harms.

An assessment of how personalized recommendation systems and individual-specific advertising to minors can contribute to harm to minors.

A description of whether and how the covered platform uses system design features that increase, sustain, or extend use of a product or service by a minor, such as automatic playing of media, rewards for time spent, and notifications.

A description of whether, how, and for what purpose the platform collects or processes categories of personal data that may cause reasonably foreseeable risk of harm to minors.

An evaluation of the efficacy of safeguards for minors under section 4, and any issues in delivering such safeguards and the associated parental tools.

An evaluation of any other relevant matters of public concern over risk of harm to minors.

An assessment of differences in risk of harm to minors across different English and non-English languages and efficacy of safeguards in those languages.

Mitigation: The public reports required of a covered platform under this section shall include, for English and the top 5 non-English languages used by individuals accessing the platform from the United States:

A description of the safeguards and parental tools available to minors and parents on the covered platform.

A description of interventions by the covered platform when it had or has reason to believe that harms to minors could occur.

A description of the prevention and mitigation measures intended to be taken in response to the known and emerging risks identified in its assessment of system risks, including steps taken to:

Prevent harm to minors, including adapting or removing system design features or addressing through parental controls.

Provide the most protective level of control over privacy and safety by default.

Adapt recommendation systems to mitigate reasonably foreseeable risk of harms to minors.

A description of internal processes for handling reports and automated detection mechanisms for harms to minors, including the rate, timeliness, and effectiveness of responses.

The status of implementing prevention and mitigation measures identified in prior assessments.

A description of the additional measures to be taken by the covered platform to address the circumvention of safeguards for minors and parental tools.

Reasonable Inspection: when conducting an inspection on the systemic risk of harm to minors on their platforms under this section, a covered platform shall:

Consider the function of any PRS on their platform.

Consult relevant parties with authority on topics relevant to the inspection.

Conduct their research based on experiences of minors using the platform that the study is being conducted on, including reports and information provided by law enforcement.

Take account of outside research, but not outside data for the study itself.

Consider any implied, indicated, inferred, or known information on the age of users.

Consider the presence of both English and non-English speaking users on their platform.

Cooperation With Independent, Third-Party Audit: To facilitate the report required by this section, a covered platform shall:

Provide directly or otherwise make accessible to the third party conducting the audit all:

Information and data that the platform has the access and authority to disclose that are relevant to the audit.

Platforms, systems and assets that the platform has the access and authority to disclose that are relevant to the audit.

Facts relevant to the audit, unaltered and correctly and fully represented.

Privacy Safeguards: In this subsection, the term “de-identified” means data that does not identify and is not linked or reasonably linkable to a device that is linked or reasonably linkable to an individual, regardless of whether the information is aggregated. In issuing the public reports required under this section, a covered platform shall take steps to safeguard the privacy of its users, including ensuring that data is presented in a de-identified, aggregated format such that it is reasonably impossible for the data to be linked back to any individual user. A covered platform must also present or publish the information on a publicly available and accessible webpage.

And now the next stop on our tour is Section 7: Independent Research on Social Media and Minors. This section details the interactions between the FTC and the National Academy of Sciences in the months following the passing of this bill, if and when it passes. It states that the FTC is to seek to enter a contract with the Academy, in which the Academy is to conduct no fewer than 5 separate independent scientific studies on the risk of harms to minors on social media, addressing:

Anxiety, depression, eating and suicidal disorders.

Substance use (drugs, narcotics, alcohol, etc.) and gambling.

Sexual exploitation and abuse.

Addiction-like behaviors leading to compulsive usage.

Additional Study: at least 4 years after the passing of the bill, the FTC and the Academy shall enter another contract, in which the Academy shall conduct another study on the above topics, to provide more up-to-date information.

Content of Reports: The comprehensive studies and reports conducted pursuant to this section shall seek to evaluate impacts and advance understanding, knowledge, and remedies regarding the harms to minors posed by social media and other online platforms and may include recommendations related to public policy.

Active Studies: If the Academy is already engaged in any existing studies regarding the topics described above, it may base the studies required by this section on them, if they are otherwise compliant.

Collaboration: In conducting the studies required under this section, the FTC, National Academy of Sciences, and the Secretary of Health and Human Services shall consult with the Surgeon General and the Kids Online Safety Council (KOSC).

Access to Data: The FTC may issue orders to covered platforms to gather and compile data and information needed for the studies required under this section, as well as issue orders under section 6(b) of the Federal Trade Commission Act (15 U.S.C. 46(b)) to no more than 5 covered platforms per study under this section. Pursuant to subsections (b) and (f) of section 6 of the Federal Trade Commission Act (15 U.S.C. 46), the FTC shall also enter into agreements with the National Academy to share appropriate information received from a covered platform pursuant to an order under such subsection (b) for a comprehensive study under this section in a confidential and secure manner, and to prohibit the disclosure or sharing of such information by the National Academy.

Next is a short Section 8: Market Research.

Market Research by Covered Platforms: The FTC, in consultation with the Secretary of Commerce, shall issue guidance for covered platforms seeking to conduct market- and product-focused research on minors. Such guidance shall include:

A standard consent form that provides minors and their parents a clear, conspicuous, and easy-to-understand explanation of the scope and purpose of the research to be conducted, and provides an opportunity for informed consent in the language in which the parent uses the covered platform; and

Recommendations for research practices for studies that may include minors, disaggregated by the age ranges of 0-5, 6-9, 10-12, and 13-16.

The FTC shall issue such guidance no later than 18 months (about 1 and a half years) after the date of enactment of this Act. In doing so, it shall seek input from members of the public and the representatives of the KOSC established under section 12.

As we pull off Interstate 8 we come into Section 9: Age Verification Study and Report. This is where the writers evidently started pounding shots of tequila at their desks, as some of the regulations in this section are completely asinine. Here we are told about a mandatory age verification system. Gone will be the days of being born in 1427 as a kid; now our age will be known to any covered platform. Is that what you want?

Study: The Director of the National Institute of Standards and Technology, in coordination with the FCC, FTC, and the SoC, shall conduct a study evaluating the most technologically feasible methods and options for developing systems to verify age at the device or operating system level.

Such study shall consider:

The benefits of creating a device or operating system level age verification system.

What information may need to be collected to create this type of age verification system.

The accuracy of such systems and their impact or steps to improve accessibility, including for individuals with disabilities.

How such a system or systems could verify age while mitigating risks to user privacy and data security and safeguarding minors' personal data, emphasizing minimizing the amount of data collected and processed by covered platforms and age verification providers for such a system.

The technical feasibility, including the need for potential hardware and software changes, including for devices currently in commerce and owned by consumers.

The impact of different age verification systems on competition, particularly the risk of different age verification systems creating barriers to entry for small companies.

Report: No later than 1 year after the passing of KOSA, the agencies described in subsection (a) shall submit a report containing the results of the study conducted under such subsection to the Committee on Commerce, Science, and Transportation of the Senate and the Committee on Energy and Commerce of the House of Representatives.

Section 10: Guidance.

No later than 18 months (about 1 and a half years) after the date of enactment of this Act, the FTC, in consultation with the KOSC established under section 12, shall issue guidance to:

Provide information and examples for covered platforms and auditors regarding the following, with consideration given to differences across English and non-English languages:

Identifying features used to increase, sustain, or extend use of the covered platform by a minor (compulsive usage).

Safeguarding minors against the possible misuse of parental tools.

Best practices in providing minors and parents the most protective level of control over privacy and safety.

Using indicia or inferences of age of users for assessing use of the covered platform by minors.

Methods for evaluating the efficacy of safeguards; and

Providing additional control options that allow parents to address the harms described in section 3.

Outline conduct that does not have the purpose or substantial effect of subverting or impairing user autonomy, decision-making, or choice, or of causing, increasing, or encouraging compulsive usage for a minor, such as:

Minute user interface changes derived from testing consumer preferences, including different styles, layouts, or text, where such changes are not done with the purpose of weakening or disabling safeguards or parental controls.

Algorithms or data outputs outside the control of a covered platform.

The establishing of default settings that provide enhanced privacy protection to users or otherwise enhance their autonomy and decision-making ability.

Guidance to Schools: No later than 18 months (about 1 and a half years) after the date of enactment of this act, the SoE, in consultation with the FTC and the KOSC established under section 12, shall issue guidance to assist elementary and secondary schools in using the notice, safeguards, and tools provided under this act and providing information on online safety for students and teachers.

Guidance on Knowledge Standards: No later than 18 months (about 1 and a half years) after the date of enactment of this act, the FTC shall issue guidance to provide information, including best practices and examples, for covered platforms to understand the Commission’s determination of whether a covered platform “had knowledge fairly implied on the basis of objective circumstances” for purposes of this act.

Limitation on FTC Guidance: No guidance issued by the FTC with respect to this act shall:

Confer any rights on any person, State, or locality; or

Operate to bind the FTC or any person to the approach recommended in such guidance.

Enforcement Actions: In any enforcement action brought against a covered platform for violating this act, the FTC:

Shall allege a violation of this act.

May not base such enforcement action on, or execute a consent order based on, practices that are alleged to be inconsistent with guidance issued by the FTC with respect to this act, unless the practices are alleged to violate a provision of this act.

And now we’re almost on the home stretch, with Section 11, otherwise known as Enforcement. This section outlines, you guessed it, the enforcement of this act.

Enforcement by the FTC:

Unfair and Deceptive Acts or Practices: A violation of this act shall be treated as a violation of a rule defining an unfair or deceptive act or practice prescribed under section 18(a)(1)(B) of the Federal Trade Commission Act.

Powers of the Commission: The FTC shall enforce this act in the same manner, by the same means, and with the same jurisdiction, powers, and duties as though all applicable terms and provisions of the Federal Trade Commission Act (15 U.S.C. 41 et seq.) were incorporated into and made a part of this act.

Privileges and Immunities: Any person that violates this act shall be subject to the penalties, and entitled to the privileges and immunities, provided in the Federal Trade Commission Act (15 U.S.C. 41 et seq.).

Authority Preserved: Nothing in this act shall be construed to limit the authority of the FTC under any other provision of law.

Enforcement by State Attorney General:

Civil Actions: In any case in which the attorney general of a State has reason to believe that an interest of the residents of that State has been or is threatened or adversely affected by the engagement of any person in a practice that violates this act, the State, as parens patriae (de facto parent of any citizens unable to defend themselves), may bring a civil action on behalf of the residents of the State in a district court of the United States or a State court of appropriate jurisdiction to:

Enjoin that practice.

Enforce compliance with this act.

On behalf of residents of the State, obtain damages, restitution, or other compensation, each of which shall be distributed in accordance with State law.

Obtain such other relief as the court may consider to be appropriate.

Notice: Before filing an action, the attorney general of the State involved shall provide to the Commission:

Written notice of that action.

A copy of the complaint for that action.

Exemption: Clause (i) shall not apply with respect to the filing of an action by an attorney general of a State under this paragraph if the attorney general of the State determines that it is not feasible to provide the notice described in that clause before the filing of the action.

Notification: In an action described in subclause (I), the attorney general of a State shall provide notice and a copy of the complaint to the Commission at the same time as the attorney general files the action.

Intervention: On receiving notice, the Commission shall have the right to intervene in the action that is the notice's subject.

Effect of Intervention: If the Commission intervenes in an action under paragraph (1), it shall have the right:

To be heard with respect to any matter that arises in that action.

To file a petition for appeal.

Construction: For purposes of bringing any civil action under paragraph (1), nothing in this act shall be construed to prevent an attorney general of a State from exercising the powers conferred on the attorney general by the laws of that State to:

Conduct investigations.

Administer oaths or affirmations.

Compel the attendance of witnesses or the production of documentary and other evidence.

Actions by the Commission: In any case in which an action is instituted by or on behalf of the Commission for violation of this act, no State may, during the pendency of that action, institute a separate action under paragraph (1) against any defendant named in the complaint in the action instituted by or on behalf of the Commission for that violation.

Venue; Service of Process:

Venue: Any action brought under paragraph (1) may be brought in either:

The district court of the United States that meets applicable requirements relating to venue under section 1391 of title 28, United States Code.

A State court of competent authority.

Service of Process: In an action brought under paragraph (1) in a district court of the United States, process may be served wherever the defendant:

Is an inhabitant.

May be found.

And now we are going to hear about the Kids Online Safety Council we’ve been hearing so much about in the last few sections in Section 12: Kids Online Safety Council.

Establishment: No later than 180 days (about 6 months) after the enactment date of this act, the Secretary of Commerce shall establish and convene the Kids Online Safety Council to provide advice on matters related to this act.

The Kids Online Safety Council shall include diverse participation from:

Academic experts, health professionals, and members of civil society with expertise in mental health, substance use disorders, and the prevention of harm to minors.

Representatives in academia and civil society with specific expertise in privacy and civil liberties.

Parents and youth representation.

Representatives of covered platforms.

Representatives of the NTIA, the NIST, the FTC, the DoJ, and the DHHS.

State attorneys general or their designees acting in State or local government.

Educators.

Representatives of communities of socially disadvantaged individuals (as defined in section 8 of the Small Business Act (15 U.S.C. 637)).

Activities: The matters to be addressed by the Kids Online Safety Council shall include:

Identifying emerging or current risks of harm to minors associated with online platforms.

Recommending measures and methods for assessing, preventing, and mitigating harms to minors online.

Recommending methods and themes for conducting research regarding online harms to minors, including in English and non-English languages.

Recommending best practices and clear, consensus-based technical standards for transparency reports and audits, as required under this act, including methods, criteria, and scope to promote overall accountability.

And now for Section 13, Filter Bubble Transparency Requirements. Almost to the end!

Definitions: In this section:

The term “algorithmic ranking system” means a computational process, including one derived from algorithmic decision-making, machine learning, statistical analysis, or other data processing or artificial intelligence techniques, used to determine the selection, order, relative prioritization, or relative prominence of content from a set of information that is provided to a user on a covered internet platform, including the ranking of search results, the provision of content recommendations, the display of social media posts, or any other method of automated content selection.

The term “approximate geolocation information” means information that identifies the location of an individual, but with a precision of less than 5 miles.

The term “Commission” means the Federal Trade Commission.

The term “connected device” means an electronic device that:

Can connect to the internet, either directly or indirectly through a network, to communicate information in the direction of an individual.

Has computer processing capabilities for collecting, sending, receiving, or analyzing data.

Is primarily designed for or marketed to consumers.

Covered Internet Platform: The term “covered internet platform” means any public-facing website, internet application, or mobile application, including a social network site, video sharing service, search engine, or content aggregation service. Such term shall not include a platform that:

Is wholly owned, controlled, and operated by a person that:

For the most recent 6-month period, did not employ more than 500 employees.

For the most recent 3-year period, averaged less than $50,000,000 in annual gross revenue.

Collects or processes on an annual basis the user-specific data of less than 1,000,000 users (about the population of Delaware) or is operated for the sole purpose of conducting research that is not made for profit either directly or indirectly.

Input Transparent Algorithm: The term “input-transparent algorithm” means an algorithmic ranking system that does not use the user-specific data of a user to determine the selection, order, relative prioritization, or relative prominence of information that is furnished to such user on a covered internet platform, unless the user-specific data is expressly provided to the platform by the user for such purpose.

Data Provided for Express Purpose of Interaction with Platform: For purposes of subparagraph (A), user-specific data that is provided by a user for the express purpose of determining the selection, order, relative prioritization, or relative prominence of information that is furnished to such user on a covered internet platform:

Shall include user-supplied search terms, filters, speech patterns (if provided for the purpose of enabling the platform to accept spoken input or selecting the language in which the user interacts with the platform), saved preferences, and the current precise geolocation information that is supplied by the user.

Shall include the user's current approximate geolocation information.

Shall include data affirmatively supplied to the platform by the user that expresses the user's desire to receive particular information, such as the social media profiles the user follows, the video channels the user subscribes to, or other content or sources of content on the platform the user has selected.

Shall NOT include the history of the user's connected device, including the user's history of web searches and browsing, previous geographical locations, physical activity, device interaction, and financial transactions.

Shall NOT include inferences about the user or the user's connected device, without regard to whether such inferences are based on data described in clause (i) or (iii).

Opaque Algorithm: The term “opaque algorithm” means an algorithmic ranking system that determines the selection, order, relative prioritization, or relative prominence of information that is furnished to such user on a covered internet platform based, in whole or part, on user-specific data that was not expressly provided by the user to the platform for such purpose.

Exception for Age-Appropriate Content Filtering: Such term shall not include an algorithmic ranking system used by a covered internet platform if:

The only user-specific data (including inferences about the user) that the system uses is information relating to the age of the user.

Such information is only used to restrict a user's access to content on the basis that the individual is not old enough to access such content (i.e., not allowing a 13-year-old to access pornography).

Precise Geolocation Information: The term “precise geolocation information” means geolocation information that identifies an individual’s location to within a range of 5 miles or less.

Search Syndication Contract, Upstream Provider, Downstream Provider:

The term “search syndication contract” means a contract or subcontract for the sale of, license of, or other right to access an index of web pages or search results on the internet for the purpose of operating an internet search engine.

The term “upstream provider” means, with respect to a search syndication contract, the person that grants access to an index of web pages or search results on the internet to a downstream provider pursuant to the contract.

The term “downstream provider” means, with respect to a search syndication contract, the person that receives access to an index of web pages on the internet from an upstream provider under such contract.

User Specific Data: The term “user-specific data” means information relating to an individual or a specific connected device that would not necessarily be true of every individual or device.

Requirement to Allow Users to View Unaltered Content on Internet Platforms: Beginning on the date that is 1 year after the date of enactment of this Act, it shall be illegal:

For any person to operate a covered internet platform that uses an opaque algorithm unless the person complies with the opaque algorithm requirements.

For any upstream provider to grant access to an index of web pages on the internet under a search syndication contract that does not comply with the requirements of paragraph (3).

Opaque Algorithm Requirements: The requirements of this paragraph with respect to a person that operates a covered internet platform that uses an opaque algorithm are the following:

The person provides notice to users of the platform:

That the platform uses an opaque algorithm that uses user-specific data to select the content the user sees. Such notice shall be presented in a clear, conspicuous manner on the platform whenever the user interacts with an opaque algorithm for the first time and may be a one-time notice that can be dismissed by the user.

In the terms and conditions of the covered internet platform, in a clear, accessible, and easily comprehensible manner to be updated no less frequently than once every 6 months:

The most salient features, inputs, and parameters used by the algorithm.

How any user-specific data used by the algorithm is collected or inferred about a user of the platform, and the categories of such data.

Any options that the covered internet platform makes available for a user of the platform to opt out or exercise options under clause (ii), modify the profile of the user or to influence the features, inputs, or parameters used by the algorithm.

Any quantities, such as time spent using a product or specific measures of engagement or social interaction, that the algorithm is designed to optimize, as well as a general description of the relative importance of each quantity for such ranking.

The person makes available a version of the platform that uses an input-transparent algorithm and enables users to easily switch between the version of the platform that uses an opaque algorithm and the version of the platform that uses the input-transparent algorithm.

Exceptions for Certain Downstream Providers: Subparagraph (A) shall not apply with respect to an internet search engine if:

The search engine is operated by a downstream provider with fewer than 1,000 employees.

The search engine uses an index of web pages on the internet to which such provider received access under a search syndication contract.

Search Syndication Contract Requirements: The requirements of this paragraph with respect to a search syndication contract are that:

As part of the contract, the upstream provider makes available to the downstream provider the same input-transparent algorithm used by the upstream provider for purposes of complying with paragraph (2)(A)(ii).

The upstream provider does not impose any additional costs, degraded quality, reduced speed, or other constraint on the functioning of such algorithm when used by the downstream provider to operate an internet search engine relative to the performance of such algorithm when used by the upstream provider to operate an internet search engine.

Prohibition on Differential Pricing: A covered internet platform shall not deny, charge different prices or rates for, or condition the provision of a service or product to an individual based on the individual’s election to use a version of the platform that uses an input-transparent algorithm as provided under paragraph (2)(A)(ii).

Enforcement by FTC:

Unfair or Deceptive Acts or Practices: A violation of this section by an operator of a covered internet platform shall be treated as a violation of a rule defining an unfair or deceptive act or practice prescribed under section 18(a)(1)(B) of the Federal Trade Commission Act (15 U.S.C. 57a(a)(1)(B)).

Powers of Commission: Except as provided in subparagraph (C), the FTC shall enforce this section in the same manner, by the same means, and with the same jurisdiction, powers, and duties as though all applicable terms and provisions of the Federal Trade Commission Act (15 U.S.C. 41 et seq.) were incorporated into and made a part of this section.

Privileges and Immunities: Except as provided in subparagraph (C), any person who violates this Act shall be subject to the penalties and entitled to the privileges and immunities provided in the Federal Trade Commission Act (15 U.S.C. 41 et seq.).

Common Carriers and Nonprofit Institutions: Notwithstanding section 4, 5(a)(2), or 6 of the Federal Trade Commission Act (15 U.S.C. 44, 45(a)(2), 46) or any jurisdictional limitation of the Commission, the Commission shall also enforce this act, in the same manner provided in subparagraphs (A) and (B) of this paragraph, with respect to:

Common carriers subject to the Communications Act of 1934 (47 U.S.C. 151 et seq.) and acts amendatory thereof and supplementary thereto.

Organizations not organized to carry on business for their own profit or that of their members.

Authority Preserved: Nothing in this section shall be construed to limit the authority of the Commission under any other provision of law.

Rule of Application: Section 11 shall not apply to this section.

Rule of Construction to Preserve Personal Blocks: Nothing in this section shall be construed to limit or prohibit a covered internet platform’s ability to, at the direction of an individual user or group of users, restrict another user from searching for, finding, accessing, or interacting with such user’s or group’s account, content, data, or online community.

Skipping over Section 14, as it is incredibly short (don’t worry, it’s one line, and I’ll say it at the end), we arrive at Section 15, or “Rules of Construction and Other Matters”.

Relationships to Other Laws: Nothing in this Act shall be construed to:

Preempt section 444 of the General Education Provisions Act (20 U.S.C. 1232g, commonly known as the “Family Educational Rights and Privacy Act of 1974”) or other Federal or State laws governing student privacy.

Preempt the Children's Online Privacy Protection Act of 1998 (15 U.S.C. 6501 et seq.) or any rule or regulation promulgated under such Act.

Authorize any action that would conflict with section 18(h) of the Federal Trade Commission Act (15 U.S.C. 57a(h)).

Fairly Implied on the Basis of Objective Circumstances: For purposes of enforcing this act, in making a determination as to whether a covered platform has knowledge fairly implied on the basis of objective circumstances that a user is a minor, the FTC shall rely on competent and reliable empirical evidence, taking into account the totality of the circumstances, including consideration of whether the operator, using available technology, exercised reasonable care.

Protections for Privacy: Nothing in this act shall be construed to require:

The affirmative collection of any personal data with respect to the age of users that a covered platform is not already collecting in the normal course of business.

A covered platform to implement an age gating or age verification functionality.

Compliance: Nothing in this act shall be construed to restrict a covered platform's ability to:

Cooperate with law enforcement agencies regarding activity that the covered platform reasonably and in good faith believes may violate Federal, State, or local laws, rules, or regulations.

Comply with a civil, criminal, or regulatory inquiry or any investigation, subpoena, or summons by Federal, State, local, or other government authorities.

Investigate, establish, exercise, respond to, or defend against legal claims.

Application to Video Streaming Services: A video streaming service shall be deemed to be in compliance with this act if it predominantly consists of news, sports, entertainment, or other video programming content that is preselected by the provider and not user-generated, and:

Any chat, comment, or interactive functionality is provided incidental to, directly related to, or dependent on provision of such content.

If such video streaming service requires account owner registration and is not predominantly news or sports, the service includes the capability:

To limit a minor’s access to the service, which may utilize a system of age-rating.

To limit the automatic playing of on-demand content selected by a personalized recommendation system for an individual that the service knows is a minor.

To provide an individual that the service knows is a minor with readily-accessible and easy-to-use options to delete an account held by the minor and delete any personal data collected from the minor on the service, or, in the case of a service that allows a parent to create a profile for a minor, to allow a parent to delete the minor’s profile, and to delete any personal data collected from the minor on the service.

For a parent to manage a minor’s privacy and account settings, and restrict purchases and financial transactions by a minor, where applicable.

To provide an electronic point of contact specific to matters described in this paragraph.

To offer clear, conspicuous, and easy-to-understand notice of its policies and practices with respect to personal data and the capabilities described in this paragraph.

When providing on-demand content, to employ measures that safeguard against serving advertising for narcotic drugs (as defined in section 102 of the Controlled Substances Act (21 U.S.C. 802)), tobacco products, gambling, or alcohol directly to the account or profile of an individual that the service knows is a minor.

And finally, we arrive at Section 16, the last Section, combined with Section 14, which I glossed over earlier. If any part of this act is deemed obsolete, ineffective, or unnecessary at any point in time, it may be removed from the act without any effect to the rest of it. Unless otherwise specified, all regulations in this act shall come into effect 18 months (about 1 and a half years) after the passing of this bill.

As you can see, this bill makes an impressive amount of sense, and if it were simply used as intended, it would be wonderful. However, because we can never have nice things, legislators, politicians, and the common public have twisted this bill, warping it to serve their selfish desires. Firstly, Senator Blackburn, one of the people behind this whole thing, blatantly said to a camera that she was going to “use KOSA to protect kids from the transgender in this culture.” With the queer community already under fire from all sides, this is the last thing they need, as all it does is further demonize them, and paint them as evil, pedophilic freaks to be feared and hated. A lot of regulations outlined in this bill also provide a concerning amount of control over the children it claims to protect, and it isn’t giving it to the child. It’s giving it to their parents. The adults who, as much as we hate to admit it, don’t always have the best interests of their children at heart. And what’s more, now the conservative Republicans care about people with disabilities, but it’s only when they’re trying to push a different marginalized group off the net. However, as soon as we try to do something as simple as mandating wheelchair ramps for all government buildings, they say “we don’t have the money for that!”. Okay, since obviously you don’t understand basic common sense Mr. Conservative, let me explain this for you: let’s say that KOSA will be fully enforced on every single website out of the over 1 BILLION that currently exist. Let’s say that about half of those comply fully willingly with KOSA, just for the benefit of the doubt. That still leaves 500 million noncompliant websites. Let’s say that with legal proceedings and other costs, it would cost 500 dollars in USD per website to bring those websites into compliance. It would cost 250 BILLION dollars in USD to bring 500 million websites into compliance.
Now let’s do some math on the wheelchair ramp problem. I’ll use small businesses as an example, since that’s the main subject of complaints on this topic. There are 33,185,550 small businesses registered in the United States. With the average cost per linear foot of concrete ramp being 225 dollars in USD, and saying that every small business has 2 doors that need ramps that are 1 linear foot, that means that every small business would have to pay 450 dollars in USD to make their businesses accessible. Every 3-5 years, you’ll want to reseal that concrete, costing about 3-5 dollars in USD per square foot. That means a one-time expense of 450 dollars to build the original ramps (on average, grossly oversimplified, but you get it), with a recurring cost every 3-5 years of a mere 6-10 dollars in USD. Across all 33,185,550 small businesses, that comes to about 14.9 billion dollars in USD. Making every registered small business in the US accessible would cost roughly 6% of what it would cost to enforce KOSA.
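For anyone who wants to check the comparison, here is a minimal sketch of the arithmetic in Python. Every figure in it is one of the illustrative assumptions from the paragraphs above (500 dollars per website, 2 one-foot ramps per business, and so on), not measured data, and the variable names are just labels picked for this sketch.

```python
# All inputs are the post's own back-of-the-envelope assumptions, not measured data.
NONCOMPLIANT_SITES = 500_000_000        # half of the ~1 billion websites
COST_PER_SITE_USD = 500                 # assumed compliance cost per website

SMALL_BUSINESSES = 33_185_550           # registered US small businesses
RAMP_COST_PER_LINEAR_FOOT_USD = 225     # average concrete ramp cost
RAMPS_PER_BUSINESS = 2                  # two doors per business
RAMP_LENGTH_FT = 1                      # one linear foot per ramp

# Total cost of bringing every noncompliant website into compliance.
kosa_cost = NONCOMPLIANT_SITES * COST_PER_SITE_USD

# One-time ramp cost for a single business, then for all of them.
ramp_cost_per_business = (
    RAMP_COST_PER_LINEAR_FOOT_USD * RAMPS_PER_BUSINESS * RAMP_LENGTH_FT
)
ramp_cost_total = SMALL_BUSINESSES * ramp_cost_per_business

# Ramp cost expressed as a percentage of the KOSA enforcement cost.
ratio_percent = 100 * ramp_cost_total / kosa_cost

print(f"KOSA enforcement: ${kosa_cost:,}")
print(f"Ramps, per business: ${ramp_cost_per_business:,}")
print(f"Ramps, all businesses: ${ramp_cost_total:,}")
print(f"Ramps as a share of KOSA cost: {ratio_percent:.1f}%")
```

Under these assumptions the ramps come to about 14.9 billion dollars against 250 billion for KOSA, or roughly 6 percent. Change any of the inputs and the ratio moves with it, which is the point of calling this grossly oversimplified.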

This bill would also enforce a strict “no horny” policy on damn near every website that you can think of. And as much as we like to demonize and vilify them, sex workers, porn actors, and hentai artists are people too, and some of them make a living off what they do. By enacting KOSA, you are driving the lives of more than 2 million people (about the population of Nebraska) into the dirt. KOSA places the value of a child’s innocence over those lives. Now, don’t get me wrong, I am absolutely against child pornography and exposing small children to porn, but we shouldn’t lock down everyone's access to explicit content to preserve 22.1% of the US population’s childlike innocence. KOSA also can lock those children into abusive and dangerous conditions and make the lives of orphans a living hell. By giving the parents of minors total control over their child’s account, you are allowing them to lock down their lives, and expose these children to, at best, harmful misinformation, extremism, and general naivety, and at worst, physical abuse and/or death. That’s another 600,000 lives ground into the dirt under the boot of “safety”. And that little bit about “parens patriae”? That basically means that if you can’t defend yourself (i.e. are homeless, an orphan, etc.), the government is your parent now. Growing up with a faceless, nameless entity in place of any parental figure is NOT a healthy way for a child to live. Again, that’s another 2,823,104 human beings crushed by KOSA. In total, out of just the three categories of people I listed here, over 4,883,104 human beings, with lives, emotions, thoughts, joys and pains, are being royally screwed over by this absolutely horrid bill.

We as a society are better than this. Come on people. What memo did I miss that decided that we only need equality for some people? Instead of bickering amongst ourselves like children, we should work together and solve our problems! Isn’t that what we all want!? But no, instead we fight over our inviolable rights as human beings like toddlers over a shovel in the sandbox, instead of behaving like civilized people. And what’s worse, it paints anyone who doesn’t fit the perfect ideal of an American citizen in shades of evil, demonizing and alienating them even more than they already are. What the hell are we doing here? Instead of waving our bureaucracy around, we should get off the chair and do something about it. How many times have we done something without taking a risk? That’s right, NEVER.

If we claim to be champions of equality, yet only desire it for ourselves, we are just as bad as those whose actions we decry. So please, dear reader, I beg of you. Stop KOSA, stop censorship, stop tyranny if you value this world at all.


Where California politicians stand on Palestine

The majority of Democrats in DC, despite their voter base being overwhelmingly in support of a ceasefire, refuse to disavow the genocide in Gaza or call for ceasefire.

They are able to do this, to disregard the outrage of their constituency, because they feel certain that no matter how many letters we send, we will show up and vote for them when the time comes. They are certain their actions have no consequences.

Senator Alex Padilla received about $31k from Israeli lobby groups. He refuses to call for a ceasefire. This was his going rate to enable genocide.

Senator Laphonza Butler was appointed in Oct 2023 after the death of Dianne Feinstein, who was pro-Israel. No campaign finance info on file. She refuses to call for a ceasefire. Perhaps she's waiting for her payout.

12/52 California congressional representatives are Republicans. None have called for ceasefire. Palestinian genocide is core to the Republican platform.

Of the 40 Democrat representatives, only 11 have called for ceasefire. The following CA Democrat representatives have called for ceasefire. They were not swayed by money received from Israeli lobby groups.

Jared Huffman, 2 - not swayed by $1k from Israeli lobby groups this election cycle, down from 13.5k and 9.5k in the last two

John Garamendi, 8 - not swayed by a whole $500 from Israeli lobby groups, down from 5k and 15k

Mark DeSaulnier, 10 - not swayed by $1k, down from 4k and 4.5k

Barbara Lee, 12 - not swayed by $13.5k, down from 27k and just 4k in 2020

Ro Khanna, 17 - not swayed by $12k, down from 45.5k and 23k

Judy Chu, 28 - not swayed by $1k, down from 7k and 10.5k

Tony Cardenas, 29 - not swayed by $35k, down from 70k and 38.5k

Jimmy Gomez, 34 - received nothing this cycle or 2020, not swayed by the $13.5k Israeli lobby groups gave in 2022

Robert Garcia, 42 - has received nothing from Israeli lobby groups since taking office in 2023

Maxine Waters, 43 - has received nothing from Israeli lobby groups in the past 3 election cycles

Sara Jacobs, 51 - not swayed by $9k, down from $26.5k in her first election cycle

The following CA Democrat representatives have refused to call for ceasefire. The approximate amount they've received from Israeli lobby groups since 2019 (the price it cost to buy their endorsement of genocide) is after each name and district number.

Mike Thompson, 4 (24k); Ami Bera, 6 (46.5k); Doris Matsui, 7 (9k); Josh Harder, 9 (159k); Nancy Pelosi, 11 (92.5k); Eric Swalwell, 14 (54.5k); Kevin Mullin, 15 (0 - 19-22 data missing); Anna Eshoo, 16 (34.5k); Zoe Lofgren, 18 (22k); Jimmy Panetta, 19 (14k); Jim Costa, 21 (127k); Salud Carbajal, 24 (27k); Raul Ruiz, 25 (24.5k); Julia Brownley, 26 (47.5k); Adam Schiff, 30 (205.5k); Grace Napolitano, 31 (8k); Brad Sherman, 32 (132k); Pete Aguilar, 33 (315.5k); Norma Torres, 35 (14.5k); Ted Lieu, 36 (122k); Sydney Kamlager, 37 (15.5k - 22 data missing); Linda Sanchez, 38 (36.5k); Mark Takano, 39 (17k); Nanette Barragan, 44 (69.5k); Lou Correa, 46 (12.5k); Katie Porter, 47 (152.5k); Mike Levin, 49 (143k); Scott Peters, 50 (34k); Juan Vargas, 52 (208.5k)

The Republicans will be trash regardless, but we cannot let our Democrats skate by thinking there are no consequences for supporting a genocide. They are slaughtering people with your tax dollars, Americans. It's time to get serious. It's time to tell these people that they cannot have our votes for free. It's time to start talking about primary opposition and third party voting. It's time to start exercising our power as voting citizens.


Source

[ID: a quote tweet by Stephen Semler (@stephensemler) dated March 23, 2024. It is responding to a tweet by the Associated Press (@AP) that says, “BREAKING: Senate passes $1.2 trillion funding package in early morning vote, ending threat of partial shutdown.”

It says, “All but two Democratic senators just voted to:

-give Israel $3.8B in weapons, violating US law

-defund a UN inquiry into Israel's violations of international law

-defund UNRWA, worsening famine in Gaza

-sanction the UN Human Rights Council if it highlights Israeli abuses.”

It contains a text-only image showing who voted yea, who voted nay, and who did not vote. 74 senators voted yea, including 47 Democrats, 25 Republicans, and 2 independents. 24 senators voted nay: all Republicans except Senator Bennet (Democrat, Colorado) and Senator Sanders (Independent, Vermont). 2 Republican senators did not vote. Full transcription of this image under the cut.]

Transcript of the image contained in the tweet:

“YEAS --- 74

Baldwin (D-WI)

Blumenthal (D-CT)

Booker (D-NJ)

Boozman (R-AR)

Britt (R-AL)

Brown (D-OH)

Butler (D-CA)

Cantwell (D-WA)

Capito (R-WV)

Cardin (D-MD)

Carper (D-DE)

Casey (D-PA)

Cassidy (R-LA)

Collins (R-ME)

Coons (D-DE)

Cornyn (R-TX)

Cortez Masto (D-NV)

Cotton (R-AR)

Cramer (R-ND)

Duckworth (D-IL)

Durbin (D-IL)

Ernst (R-IA)

Fetterman (D-PA)

Fischer (R-NE)

Gillibrand (D-NY)

Graham (R-SC)

Grassley (R-IA)

Hassan (D-NH)

Heinrich (D-NM)

Hickenlooper (D-CO)

Hirono (D-HI)

Hoeven (R-ND)

Hyde-Smith (R-MS)

Kaine (D-VA)

Kelly (D-AZ)

King (I-ME)

Klobuchar (D-MN)

Lujan (D-NM)

Manchin (D-WV)

Markey (D-MA)

McConnell (R-KY)

Menendez (D-NJ)

Merkley (D-OR)

Moran (R-KS)

Mullin (R-OK)

Murkowski (R-AK)

Murphy (D-CT)

Murray (D-WA)

Ossoff (D-GA)

Padilla (D-CA)

Peters (D-MI)

Reed (D-RI)

Romney (R-UT)

Rosen (D-NV)

Rounds (R-SD)

Schatz (D-HI)

Schumer (D-NY)

Shaheen (D-NH)

Sinema (I-AZ)

Smith (D-MN)

Stabenow (D-MI)

Sullivan (R-AK)

Tester (D-MT)

Thune (R-SD)

Tillis (R-NC)

Van Hollen (D-MD)

Warner (D-VA)

Warnock (D-GA)

Warren (D-MA)

Welch (D-VT)

Whitehouse (D-RI)

Wicker (R-MS)

Wyden (D-OR)

Young (R-IN)

(This is the end of the yeas.)

NAYS --- 24

Barrasso (R-WY)

Bennet (D-CO)

Blackburn (R-TN)

Budd (R-NC)

Crapo (R-ID)

Cruz (R-TX)

Daines (R-MT)

Hagerty (R-TN)

Hawley (R-MO)

Johnson (R-WI)

Kennedy (R-LA)

Lankford (R-OK)

Lee (R-UT)

Lummis (R-WY)

Marshall (R-KS)

Paul (R-KY)

Ricketts (R-NE)

Risch (R-ID)

Rubio (R-FL)

Sanders (I-VT)

Schmitt (R-MO)

Scott (R-SC)

Tuberville (R-AL)

Vance (R-OH)

(This is the end of the nays.)

Not Voting - 2

Braun (R-IN)

Scott (R-FL)”

(This is the end of the transcribed image.)

#palestine#free palestine#gaza#gaza strip#free gaza#israel#palestinian genocide#unrwa#us politics#genocide joe#genocidin biden#democrats#blue maga#vote blue no matter who#human rights violations#international law#cross posted from twitter#twitter#my post#original post#(but not really)#this post was queued


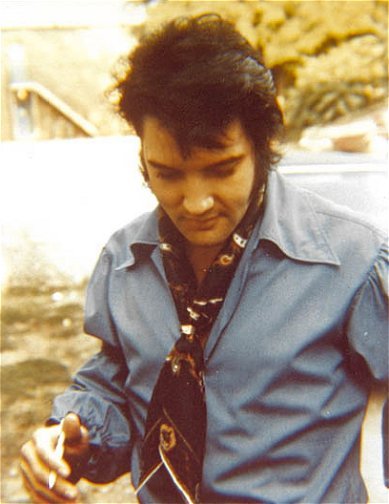

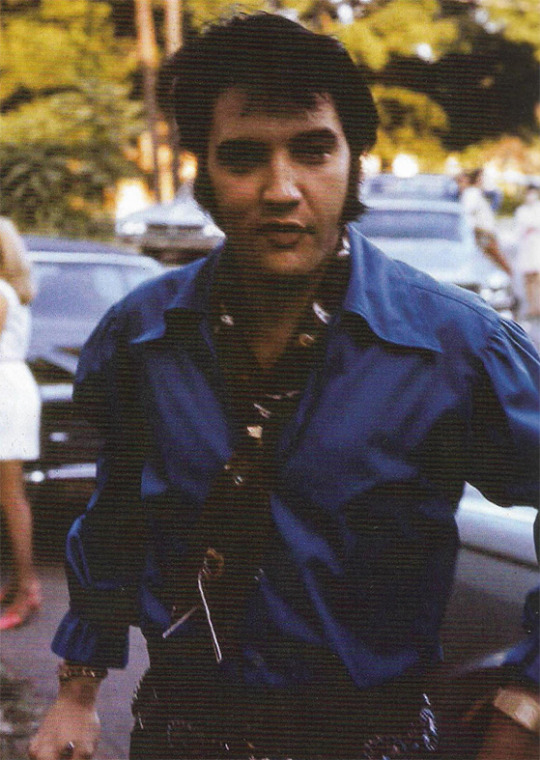

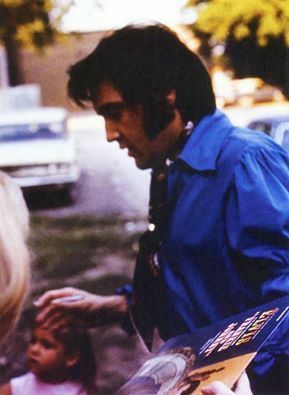

"Heart of Rome" (1970/1971)


Written by Geoff Stephens, Alan Blaikley and Ken Howard and recorded by Elvis Presley on June 6, 1970 at RCA’s Studio B in Nashville, Tennessee, "Heart of Rome" was released on the album "Love Letters from Elvis" on June 16, 1971.

MUSICIANS FOR THE TRACK

Guitar: James Burton, Chip Young, Elvis Presley. Bass: Norbert Putnam. Drums: Jerry Carrigan. Piano: David Briggs. Organ & Harmonica: Charlie McCoy.

OVERDUBS

Guitar: James Burton. Organ: David Briggs. Percussion: Jerry Carrigan. Percussion & Vibes: Farrell Morris. Steel Guitar: Weldon Myrick. Trumpet: Charlie McCoy, George Tidwell, Don Sheffield, Glenn Baxter. Saxophone: Wayne Butler, Norman Ray. Flute, Saxophone & Clarinet: Skip Lane. Trombone: Gene Mullins. Flute & Trombone: William Puett. Vocals: Elvis Presley, Mary Holladay, Mary (Jeannie) Green, Dolores Edgin, Ginger Holladay, Millie Kirkham, June Page, Temple Riser, Sonja Montgomery, Joe Babcock, The Jordanaires, The Imperials.

THE RECORDING SESSION

Studio Sessions for RCA on June 6, 1970: RCA’s Studio B, Nashville

The last entry of the evening, “Heart Of Rome,” was an up-tempo dramatic ballad in the operatic vein of “It’s Now Or Never” or “Surrender”; it may have had a little more irony going for it than the earlier cuts, but by the end it had Elvis straining for the high notes — and the band struggling to keep awake.

Excerpt: "Elvis Presley: A Life in Music" by Ernst Jorgensen. Foreword by Peter Guralnick (1998)

The song was recorded on the same day as the hits "You Don't Have To Say You Love Me" and "Just Pretend", as well as "I Didn't Make It On Playing Guitar", "It Ain't No Big Thing (But It's Growing)", "This Is Our Dance" and "Life".

It was past midnight when they were working on "Heart of Rome", the last song recorded that night. Elvis, the recording team and the musicians worked about ten hours a night at RCA's studio during the Nashville sessions of June 4 to 8, 1970, reporting each evening at 6 pm and working until the wee hours, wrapping up around 4:30 am. Elvis and his band recorded 35 masters over those five days.

June 1970, at RCA's Studio B in Nashville, Tennessee.

Top (left to right) David Briggs (piano), Norbert Putnam (bass), Elvis (vocals and guitar), Al Pachucki (engineer), Jerry Carrigan (drums/percussion); bottom Felton Jarvis (producer), Chip Young (guitar), Charlie McCoy (organ & harmonica/trumpet), James Burton (guitar).

ADDITIONAL INFO

Elvis on June 4, 1970 in Nashville, stepping out of a car in the parking lot of RCA's Studio B on his way to the studio's back entrance. Photography source: elvis-collectors.com.

During that first recording session in Nashville in June 1970, Elvis would record the songs "Twenty Days And Twenty Nights", "I've Lost You", "I Was Born About Ten Thousand Years Ago", "Little Cabin On The Hill", "The Fool", "The Sound Of Your Cry", "A Hundred Years From Now" and "Cindy, Cindy".

#“Heart of Rome” is currently my N.1 most played song on my Spotify#I guess that can tell which song of Elvis is my favorite of all#there's something about this song that is so dreamy#i know its kinda a sad song but its also very touching... missing the one person you want to spend more time with like its never enough 🥹#its so beautiful#absolutely one underrated elvis song#i get goosebumps every damn time i listen to this track#cant and won't ever get over it#elvis presley#elvis music#1970-1971#love letters from elvis#Youtube


the usa senate passed the budget that banned all aid to UNRWA and Biden signed it.

the senators who voted for this budget (preventing usa from funding UNRWA) are under the readmore. if your senator is on this list, call (202) 224-3121 and demand they find another way of funding relief to palestine.

Tammy Baldwin Wis.

Richard Blumenthal Conn.

Cory Booker N.J.

John Boozman Ark.

Katie Britt Ala.

Sherrod Brown Ohio

Laphonza Butler Calif.

Maria Cantwell Wash.

S. Capito W.Va.

Benjamin L. Cardin Md.

Tom Carper Del.

Bob Casey Pa.

Bill Cassidy La.

Susan Collins Maine

Chris Coons Del.

John Cornyn Tex.

C. Cortez Masto Nev.

Tom Cotton Ark.

Kevin Cramer N.D.

Tammy Duckworth Ill.

Dick Durbin Ill.

Joni Ernst Iowa

John Fetterman Pa.

Deb Fischer Neb.

Kirsten Gillibrand N.Y.

Lindsey Graham S.C.

Chuck Grassley Iowa

M. Hassan N.H.

Martin Heinrich N.M.

John Hickenlooper Colo.

Mazie Hirono Hawaii

John Hoeven N.D.

Cindy Hyde-Smith Miss.

Tim Kaine Va.

Mark Kelly Ariz.

Angus King Maine

Amy Klobuchar Minn.

Ben Ray Luján N.M.

Joe Manchin III W.Va.

Edward J. Markey Mass.

Mitch McConnell Ky.

Robert Menendez N.J.

Jeff Merkley Ore.

Jerry Moran Kan.

Markwayne Mullin Okla.

Lisa Murkowski Alaska

Chris Murphy Conn.

Patty Murray Wash.

Jon Ossoff Ga.

Alex Padilla Calif.

Gary Peters Mich.

Jack Reed R.I.

Mitt Romney Utah

Jacky Rosen Nev.

Mike Rounds S.D.

Brian Schatz Hawaii

Charles E. Schumer N.Y.

Jeanne Shaheen N.H.

Kyrsten Sinema Ariz.

Tina Smith Minn.

Debbie Stabenow Mich.

Dan Sullivan Alaska

Jon Tester Mont.

John Thune S.D.

Thom Tillis N.C.

Chris Van Hollen Md.

Mark R. Warner Va.