#fullyconnected

Photo

#HarvestMoon #FullMoon #CapturedonHuawei #HuaweiP30PH #HuaweiP30Pro #RewriteTheNight #RewriteTheRules #EMUIMoments #HelloHuawei #FullyConnected #NaturallyConnected (at Potrero, Malabon City) https://www.instagram.com/p/CUFebSThwOBBm_JDjAKOrCtR4DDKAq-AfK-S0g0/?utm_medium=tumblr

Video

OBASANJO, FORMER NIGERIAN PRESIDENT, TAKES BISOLA, CEO OF BISOLA HAIR, FOR A CRUISE AROUND THE BEAUTIFUL PRESIDENTIAL LIBRARY IN OGUN STATE, NIGERIA. #bisolahair @bisolahair #proudlynigerian #politics #presidentobasanjo #presidentiallibary #ogunstate #nigeria #dreambig #getbig #power #godey #worksmarter #selfmade #fullyconnected #bossdoll💋💋 (at London, United Kingdom)

Text

Procrastinate at a Fully-Connected Writer’s Retreat

Have you always fantasized about leaving it all behind for a few days or weeks so you can finally fully devote yourself to the novel, screenplay, or other creative project you’ve been meaning to make? Our secluded cabin in the woods feels far from the hustle and bustle of your regular life—but is also equipped with fast, reliable Wi-Fi to enable you to constantly distract yourself from…

Photo

#ATL I know y’all miss me 😏. Soon I promise! #Repost from @daheavyweight I do a lot of #DopeShit #ROTB #QuisLife #QuisKing #FullyConnected #A2O #NoDebates #QuisTalk #QuisApproved #GreatOnes #YoungKing #FutureLegend #IconLiving

Text

Ideas Lab: Practical Fully-Connected Quantum Computer Challenge (PFCQC)

Preliminary Proposal Deadline Date: June 19, 2017

Program Guidelines: NSF 17-548

Quantum computing is a revolutionary approach to information processing based on the quantum physics of coherent superposition and entanglement. Advantages of quantum computing include efficient algorithms for computationally difficult tasks, efficient use of resources such as memory and energy needed for…

Photo

Welcome to day 5 of the #OolaforWomen Challenge! Yesterday we celebrated all the amazing stay-at-home moms. Today we are going to dive into the fifth F of Oola: Faith. Oola does not shy away from faith. What you believe in, and who you believe in, is up to you, but we do know it is rooted in gratitude and humility and having an understanding of your greater purpose in the world. It's easy to get caught up in our thoughts. We overanalyze, rethink, overgeneralize, and jump to conclusions. The patterns of our lives and the world we live in make it easy to do. We wake up thinking, and we have a hard time falling asleep because our minds are running. Our thoughts turn into worry. But guess what? It takes the same amount of energy to pray as it does to think. So... Today's #OolaforWomen Challenge is to pray about something as often as you think about it. This will require us to pray bold prayers. The things that take up so much space in our minds and in our thoughts usually make for the boldest prayers. Pray about your worries, your stresses, your fears, your questions, your blessings, your loved ones. Nothing is too big. Start simply by beginning and ending your day with a prayer. And if you really want to challenge yourself, whenever you find yourself thinking about something, let that be your cue to pray about it. Use today to pray about things as much as you think about them. It'll help you remember that you are grateful, humble, and fully connected. And if you have the oil blend Oola Faith, you can diffuse or inhale the aroma of the oil at the beginning of the day and as needed for prayer or meditation. You can also massage it on the bottoms of the feet. This blend is designed to help you feel grateful, humble, and fully secure in your place in this world. This blend can boost confidence, enhance spiritual influences, and promote deeper meditation.
#Oolaforwomen #grateful #humble #fullyconnected #spiritual #meditate #meditation #prayers #blessings #fears #stresses #questions #lovedones

Text

Why diversity in artificial intelligence development matters

There is a flawed but common notion when it comes to artificial intelligence: Machines are neutral — they have no bias.

In reality, machines are a lot more like people: If a machine is taught implicit bias by being fed non-inclusive data, it will behave with bias.

This was the topic of the panel “Artificial Intelligence: Calculating Our Culture” at the HUE Tech Summit on day one of Philly Tech Week presented by Comcast. (Here’s why it matters that Philly has a conference for women of color in tech.)

“In Silicon Valley, Black people make up only about 2% [of technologists],” said Asia Rawls, director of software and education for the Chicago-based intelligent software company Reveal. “When Google [Photos] labeled Black people as apes, that’s not the algorithm. It’s you. The root is people. Tech is ‘neutral,’ but we define it.”

“Machine learning learns not by itself, but by our data,” said moderator Annalisa Nash Fernandez, intercultural strategist for Because Culture. “We feed it data. We’re feeding it flawed data.”

Often, the flaw is that the data isn’t inclusive. For example, when developers assume that the tech will react to dark skin the same way it reacts to light skin, they’re assuming a neutrality that doesn’t actually exist, and the result is an automated soap dispenser that won’t sense dark skin.

“Implicit bias in computer vision technology means that cameras that don’t see dark skin are in Teslas, telling them whether to stop or not,” said Ayodele Odubela, founder of fullyConnected, an education platform for underrepresented people.

If there’s a positive note, panelists said, it’s that companies are learning to expand their data sets when a lack of diversity in their product development becomes apparent.

AI can expose bias, too. Odubela works with Astral AR, a Texas-based company that’s part of FEMA’s Emerging Technology Initiative. The company builds drones that can intervene when someone, including a police officer, pulls a gun on an unarmed person, and that can even stop the bullet if it is fired.

“It can identify a weapon versus a non-weapon and will de-escalate a situation regardless of who is escalating,” Odubela said.

What can be done now to make AI and machine learning less biased? More people from underrepresented groups are needed in tech, but even if you’re not working in AI (and even if you’re not working in tech at all), there’s one ridiculously simple thing you can do to help increase the datasets: Take those surveys when they pop up on your screen, asking for feedback about a company or digital product.

“Take a survey, hit the chatbot,” said Amanda McIntyre Chavis, New York ambassador of Women of Wearables. “They need the data analytics.”

“People don’t respond to those surveys, then they complain,” said Rawls. “I always respond, and I’ll go off in the comments.”

Ultimately, if our machines are going to be truly unbiased anytime soon, there needs to be an understanding that humans are biased, even when they don’t mean to be.

“We need to get to a place where we can talk about racism,” said Rawls.

If we don’t, eventually the machines will probably be the ones to bring it up.

-30-

Source: https://technical.ly/philly/2019/05/07/importance-of-diversity-in-artificial-intelligence-development-hue-tech-summit/

Photo

"[D] I'm writing a full C++ wrapper for Tensorflow, is anyone at all interested?"- Detail: I've asked this question around long before I began working on this project, and here's a (non-exhaustive) list of answers I got:

1. Building ML models in Python is faster and easier
2. There's absolutely no use case where you might need to train in C++
3. If performance is what you're after, why not train in Python and then export your model for inference in C++?
4. If you insist on C++, why not use Caffe, MXNet or PyTorch?

And my answers are the following:

1. It's easier if you're comfortable with Python. Personally, I hate Python and I am never comfortable working with dynamically typed languages. I may be old school, but I have 15+ years of C++ experience and that makes it easier and faster for me.
2. Here are a few use cases off the top of my head:
   - Using the library to perform tensor calculations on the GPU for non-machine-learning uses (such as audio DSP, ray tracing, etc.) while still benefitting from TF's optimizations and distributed graph computation capabilities
   - Training unsupervised ML models with data read from physical sensors in real time
   - Training models that require lengthy data preprocessing or postprocessing that needs to be done on the CPU
   - Online training of models on devices that are memory- or battery-constrained, where having a Python interpreter and a web server to serve the inference model would be wasteful
3. See #2: there are many cases where this assumption doesn't hold.
4. I tried MXNet for a year before eventually giving up. The library is so unstable and buggy it's barely usable. Also, TensorFlow is truly remarkable when it comes to its distributed graph computation capabilities and seems to be the most evolved in terms of portability. It works across OSs, GPUs, TPUs, different CPU architectures, etc. Not to mention the large community and active Google support.

With all that being said, I'm interested to know what everyone thinks.

I plan on open-sourcing the wrapper eventually, but that would require some extra work on my part: proper documentation, working examples, etc. I'd push that further back if I knew there was absolutely no interest in the project. What are your thoughts?

EDIT: I'm actually wrapping the already existing C++ interface, which basically offers very raw access to the computation graph and lacks all the useful additions that Keras or even the raw Python interface offers, such as symbolic wrappers for common NN layers like FullyConnected, Dropout, BatchNorm, ..., commonly used loss functions, evaluation metrics, optimizer schedulers, variable initializers, ...

Caption by memento87. Posted By: www.eurekaking.com
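To make the EDIT's point concrete: a "symbolic wrapper" such as FullyConnected is essentially a few raw graph operations (a matrix multiply, a bias add and an activation) packaged as one reusable layer. The pure-Python sketch below illustrates only that idea. The op names `matmul`, `add_bias`, `relu` and `fully_connected` are hypothetical stand-ins, not TensorFlow's C++ API, and the ReLU default is an illustrative assumption.

```python
from typing import Callable, List

Matrix = List[List[float]]


# Hypothetical "raw" ops, standing in for the low-level graph primitives
# a C++ interface exposes. These are NOT TensorFlow function names.
def matmul(a: Matrix, b: Matrix) -> Matrix:
    """(n x k) @ (k x m) -> (n x m)."""
    return [[sum(a[i][p] * b[p][j] for p in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def add_bias(x: Matrix, bias: List[float]) -> Matrix:
    """Add the same bias vector to every row (one row per record)."""
    return [[v + bias[j] for j, v in enumerate(row)] for row in x]


def relu(x: Matrix) -> Matrix:
    return [[max(0.0, v) for v in row] for row in x]


def fully_connected(weights: Matrix, bias: List[float],
                    activation: Callable[[Matrix], Matrix] = relu
                    ) -> Callable[[Matrix], Matrix]:
    """The 'symbolic wrapper': one reusable layer built from three raw ops."""
    def layer(x: Matrix) -> Matrix:
        return activation(add_bias(matmul(x, weights), bias))
    return layer


# A 2-input, 2-unit layer applied to a minibatch holding one record.
fc = fully_connected(weights=[[1.0, -1.0], [2.0, 0.5]], bias=[0.0, -3.0])
print(fc([[1.0, 1.0]]))  # [[3.0, 0.0]]
```

The user of the wrapper never touches the individual ops, which is the convenience the EDIT says the raw C++ interface lacks.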

Photo

Another one! *****FEED THE STREETS***** Save the date: Friday night 11/23 @trueraent "FEED THE STREETS SHOWCASE" (Black Friday edition) Hosted by: @prettypsworld & @sogravvy Sounds by: @djflexpalo @djproverbz *Note: For more info and details about slots, DM @godplayssims or @daheavyweight, or submit music links for review to [email protected] or [email protected] #networking #music #artist #dj #talent #artists #djs #network #party #hiphop #hiphopculture #newenglandartist #newenglandmusic #truera #sogravvy #massmovement #crownsquad #collaboration #unity #heav #theplug #theconnect #trulife #trumusic #doitfortheculture #fullyconnected #heavy #feedthestreets #work #Splish #SoGravvy #LenniLinguini (at 41 Bow St) https://www.instagram.com/p/BpRnIMBn5eH/?utm_source=ig_tumblr_share&igshid=14vng2k7zm6hp

Photo

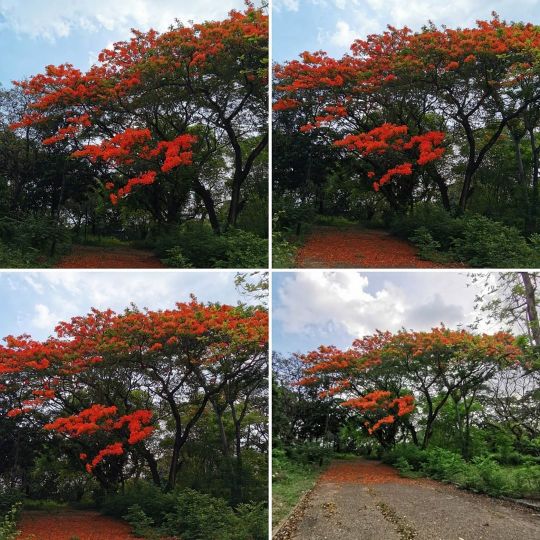

#HelloFrom the hidden, forbidden place.🔥🌳🍂😍 . . . . . . . . . . #FireTree #ECQSeason4 #TGIF #HappyWeekend #CapturedonHuawei #HuaweiP30PH #HuaweiP30Pro #RewriteTheDay #RewriteTheRules #EMUIMoments #HelloHuawei #FullyConnected #NaturallyConnected (at Potrero, Malabon City) https://www.instagram.com/p/CAeNl-OhwgIeruSAf49FlTjBNfC6vMyoCZbsH80/?igshid=kx8t5vykuq1r

Text

Tweeted

RT @CBraddick: No #hashtag for @meylercampbell CPD event w/ @juliahobsbawm on #FullyConnected #oversharing #overload #intimacy #networks #s…

— john tipper (@Time57Tipper) September 28, 2017

Photo

#IAM #humble, and #fullyconnected #connected #faith #frequency #empowered #encourage #life #love #family #power #happiness #God #connected #spirit #love #life #spiritualawakening #meditation #spirituality #spiritual #lawofattraction #awakening #lightworker #enlightenment #consciousness #universe #energy #spiritualgrowth #inspiration #soul #healing #higherconsciousness #spirit #spiritualjourney #wisdom #vibration #innerpeace #truth https://www.instagram.com/p/BoKdHTCAyME/?utm_source=ig_tumblr_share&igshid=1ftczv4hdd9ji

Photo

Meeting done #stratplan #airespring_ph #fullyconnected (at Caloocan City, Philippines) https://www.instagram.com/p/BnuwTxFFUWomK0i8J18cOo7O-KYiS5QG8p2lZU0/?utm_source=ig_tumblr_share&igshid=1bap1rftttmvi

Photo

"[P] Sigma – Creating a machine learning framework from scratch (Update on high school thesis advice thread)"- Detail: TLDR: Asked this subreddit for advice in deciding on an ML topic for our high school thesis 2 years ago (see original thread), ended up writing a machine learning framework from (almost) scratch in C#/F#. It can’t do as much as all the others and isn’t as fast or as pretty, but we still think it’s kind of cool. Here it is: our GitHub repo and a short UI demo.

Results

Up front, the current feature set of our framework Sigma, to give you an idea of what the next few paragraphs are about:

- Input, Output, Dense, Dropout, Recurrent, SoftmaxCE / SquaredDiff cost layers
- Gradient descent, Momentum, Adadelta, Adagrad optimisers
- Hooks for storing / restoring checkpoints, timekeeping, stopping (or doing other things) on certain criteria, computing and reporting runtime metrics
- Easy addition of new layers with functional automatic differentiation
- Linear and non-linear networks with arbitrarily connected constructs
- Distributed multi- and single- CPU and GPU (CUDA) backends
- Native graphical interface where parameters can be interacted with and monitored in real time

1. Introduction

This is the story of us writing a machine learning framework for our high school thesis, of what we learned and how we went about writing one from scratch. The story starts about 3 years ago: we saw a video of MarI/O, a Super Mario AI that could learn to play Super Mario levels. We thought that was about the coolest thing of all time and wanted to do something at least kind of similar for our high school thesis.

1.1 The Original Plan

Fast forward: over 2 years ago we asked for help in deciding what kind of machine learning project we could feasibly do for our senior year high school thesis (see original thread). Quite ambitiously, we proposed a time investment of about 1000 hours total (as in 500 hours each over the course of 8 months) – for what project, we didn’t know yet.
In that thread, we were generously met with a lot of help: advice ranged from reproducing existing papers to implementing specific things to getting to understand the material and then seeing what piqued our interest. After some consideration, we figured we would implement something along the lines of DeepMind’s arcade game AI and then make it more general, figuring that would be easy for some reason. When planning our project in more detail, however, we quickly realised that we had no idea what we were doing, and that it would be a shame to do all that work from scratch and have it be so arbitrarily specific.

1.2 Pivoting

Before doing anything very productive, we had to properly study machine learning. We figured this might take a while and allocated that part of our time to writing the theoretical part of our thesis, which conveniently overlapped. But because around that time we had to hand in an official target definition for our thesis, we set the most generic “goals” we could get away with. For reference, for a concerning number of months our project was officially named “Software framework for diverse machine learning tasks”, with an even longer and even less specific subtitle. During further study and first attempts to draft the actual target definition for our project, our plans gradually shifted from a machine learning framework for playing specific types of games to an “any kind of visual input” learning framework and then finally to an “anything” machine learning framework – because why not, it seemed like an interesting challenge and we were curious to see how far we would get.

2. Research and Planning

Alright, so we've decided to write a machine learning framework. How does one create a machine learning framework? It takes many weeks to get reasonably proficient in just using a given framework, and that's with proper guides, video tutorials and forums to ask for help.
Creating a machine learning framework is a whole other story, with no 12-step guide to follow. For a considerable amount of time, we were at a loss as to what we actually needed to implement – constantly encountering new and conflicting terms, definitions and not-so-obvious-“but the actual conclusion is obvious”-articles. After a little over a month we slowly got a very basic grasp of how this whole machine learning thing worked – something with functions that are approximated at certain points in steps, typically using differentiation to make some metric go down – still magic, but a bit less so (until we read about CNNs, LSTMs and then GANs, each of which confused the heck out of us for some time).

2.1 Sketching our Framework

As soon as we knew a bit about the art of machine learning, we got more serious about the writing-a-new-framework part. Because there are no guides for that, we resorted to reading the source code of established frameworks – all the parts relevant to us, many times, until it made some sense. In the meantime, we had decided to use C# as our primary language – mostly because we were already very familiar with it and didn’t want to also have to learn a new language, but officially also because there were no other proper neural network frameworks for .NET. Alongside reading the source code of machine learning libraries (mainly Deeplearning4j, Brainstorm and TensorFlow) we sketched out how we wanted our own framework to be used. After some time, we felt like there was some unnecessary confusion in getting to know machine learning frameworks as an outsider, and we set out to design our API to avoid that.
Note that just because our design makes sense to us doesn’t mean that it makes more sense to other people than the existing ones, nor do we recommend that everyone wishing to use machine learning write their own framework, if only to spare their own sanity. Our naïve ideas on how a machine learning framework should look were clearly inspired by our C#/Java-based programming experience, as is evident from the code example we drafted a few weeks in:

    Sigma sigma = Sigma.Create("minsttest");
    GUIMonitor gui = (GUIMonitor) sigma.AddMonitor(new GUIMonitor("Sigma GUI Demo"));
    gui.AddTabs({"Overview", "Data", "Tests"});

    sigma.Prepare();

    DataSetSource inputSource = new MultiDataSetSource(new FileSource("mnist.inputs"), new CompressedFileSource(new FileSource("mnist.inputs.tar.gz"), new URLSource("http://....url...../mnist.inputs.targ.gz")));
    DataSetSource targetSource = new MultiDataSetSource(new FileSource("mnist.targets"), new CompressedFileSource(new FileSource("mnist.targets.tar.gz"), new URLSource("http://....url...../mnist.targets.targ.gz" [, output: "otherthandefault"]) [, compression: new TarGZUnpacker(), output: "mnist.inputs" , forceUpdate: false]));

    DataSet data = new DataSet(new ImageRecordReader(inputSource, {28, 28}).Extractor({ALL} => {inputs: {Extractor.BatchSize, 1, 28, 28}}).Preprocess(Normalisor()), new StringRecordReader(targetSource).Extractor({0} => {targets: {Extractor.BatchSize, 1}} [, blockSize: auto/all/1024^3]);

    Network network = new Network("mynetwork");
    network.Architecture = Input(inputShape: {28, 28}) + 2 * FullyConnected(size: 1024) + SoftmaxCE() + Loss();

    Trainer trainer = sigma.CreateTrainer("mytrainer");
    trainer.SetNetwork(network);
    trainer.SetInitialiser(new GaussianInitialiser(mean: 0.0, standardDeviation: 0.05));
    trainer.SetTrainingDataIterator(MinibatchIterator(batchSize: 50, data["inputs"], data["targets"]);
    trainer.SetOptimiser(new SGDOptimiser(learningRate: 0.01);
    trainer.AddActiveHook(EarlyStopper(patience: 3));
    trainer.AddActiveHook(StopAfterEpoch(epoch: 2000));

    gui.AccentColor["trainer1"] = Colors.DeepOrange;
    gui.tabs["overview"].AddSubWindow(new LineChartWindow(name: "Error", sources: {"*.Training.Error"}) [, x: 1, y: 0, width: 2, height: 1]);
    gui.tabs["overview"].AddSubWindow(new LineChartWindow(name: "Accuracy", sources: {"*.Training.Accuracy"}));

    sigma.Run();

Skipping ahead a bit, it should be noted that the final framework is extremely similar to what we envisioned here: merely changing around a few syntax details and names, the above example from about a year ago can be used 1:1 in our current framework. The jury is still out on whether that’s a sign of really good or really bad design. Also note the Python-style kwargs notation for layer constructor arguments, which was soon discarded in favour of something that actually compiles in C#. But back to the timeline.

2.2 The Sigma Architecture

After defining the code examples and sketching out the rough parts we felt a machine learning framework needed, we arrived at this general architecture for “Sigma.Core”, divided into core components (which translate almost 1:1 to namespaces in our project):

- Util: Mostly boring, well, utility stuff, but also registries, a key part of our architecture. Because we wanted to be able to inspect and visualise everything, we needed a global way to access things by identifier – a registry. Our registry is essentially a dictionary with a string key which may contain more registries. Nested registries can be resolved using registry resolvers in dot notation with some fancy wildcards and tags in angle brackets (e.g. “network.layers.*.weights”).
- Data: Datasets, the records that make them up in various formats, and the pipeline to load, extract, prepare and cache them from disk, web, or wherever they come from and make them available as “blocks”.
  These blocks are parts of an extracted dataset, consist of many individual records, and are used to avoid loading all of a potentially very large dataset into memory at once. This component also holds the data iterators, which slice the larger blocks into pieces that are then fed to the model.
- Architecture: Abstract definitions for machine learning models, consisting of layer “constructs”, which are lightweight placeholder layers defining what a layer will look like before it’s fully instantiated. These layers may be in any order and connected to however many other layers they like.
- Layers: Unfortunately named since we started out with just neural networks, but these are the individual layers of our machine learning networks – they store meta-parameters (e.g. size) and actual trainable parameters (e.g. the actual weights).
- Math: Everything that has directly to do with math and low-level computations. All the automatic differentiation logic (which is very much required for doing proper machine learning) and everything that modifies our data is processed here in various backends (e.g. distributed CPU / GPU). To support calculating derivatives with respect to anything, we opted for an approach with symbolic objects – essentially an object for a number or an array where the actual data is hidden (it can be fetched, but only via copies). These symbolic objects are passed around through a handler which does the actual data modification. This abstraction proved useful when implementing CUDA support where, due to the asynchronous execution of the CUDA stream, the raw data could not be exposed to the user anyway, at least not without major performance hits (host-device synchronisation is very slow).
- Training: The largest component with many subcomponents, all revolving around the actual training process.
  A training process is defined in a “trainer”, which specifies the following:
  - Initialisers, which define how a model’s parameters are initialised and can be configured with registry identifiers. For example, `trainer.addInitialiser("layers.*.biases", new GaussianInitialiser(0.1, 0.0));` would initialise all parameters named “biases” with a Gaussian distribution (standard deviation 0.1, mean 0).
  - Modifiers, which modify parameters at runtime, for example to clip weights to a certain range.
  - Optimisers, which define how a model learns (e.g. gradient descent). Because we mainly considered neural networks we only implemented gradient-based optimisers, but the interface theoretically supports any kind of optimisation.
  - Hooks, which “hook” into the training process at certain time steps and can do whatever you want (e.g. update visualisations, store / restore checkpoints, compute and log metrics, or do something (e.g. stop) when some criteria are satisfied).
  - Operators, which delegate work to workers that execute it with a certain backend computation handler according to some parameters. Notable is our distinction between “global” and “local” processing, where global is the most recent global state. This global state is fetched by local workers, which then do the actual work and publish their results to the operator, which merges them back into the global scope. A global timestep event is only emitted when all local workers have submitted their work for that timestep, enabling finer control in distributed learning, at least in theory.
- Sigma: The root namespace that can create Sigma environments and trainers. An environment may contain multiple trainers, which are all run and, if specified, visualised simultaneously (which was supposed to be helpful in hyperparameter search).
- Monitors: Technically outside of the core project, but still a component.
  These monitors can be attached to a Sigma environment and can then, well, monitor almost everything about the trainers of that environment using the aforementioned registry entries. Behaviour can be injected using commands, a special form of hooks that are only invoked once. This way monitors can be used almost independently of the core Sigma project and can be pretty much anything, like a graphical interface or a live, locally hosted website.

3. Implementation

And that’s what we implemented, step by step. We started out with me mainly working on Sigma.Core and my partner on our visualisation interface, working to a common interface for months until we could finally combine our individual parts and have it miraculously work in a live graphical interface. The specifics of implementation were very interesting and quite challenging to us, but most of the particulars are probably rather dull to read – after all, most of the time things didn’t work, and when we fixed something, we moved on to the next something that didn’t.

3.1 Low-level Data and Mathematical Processing

The very first thing we did was getting the data “ETL” (extract, transform, load) pipeline up and running, mainly fetching data from a variety of sources, loading it into a dataset and extracting it as blocks. I then focused on the mathematical processing part – everything that had to do with using math and calculating derivatives in our framework. I based our functional automatic differentiation, aptly named “SigmaDiff”, on an F# library for autodiff named “DiffSharp”, which I modified heavily to support n-dimensional arrays, improve performance significantly, fix a few bugs, and support multiple simultaneous non-global backends, variable data types, and some more stuff that I’m forgetting.
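SigmaDiff is only described at a high level here, so the following is a minimal scalar sketch of the general technique the post relies on for backpropagation, reverse-mode automatic differentiation. `Var` and `backward` are hypothetical names, not SigmaDiff's or DiffSharp's API, and real implementations work on n-dimensional arrays rather than scalars. The sketch shows two points that come up again later: the backward pass needs the forward (intermediate) values, and a value used by several operations accumulates its gradient contributions in place.

```python
from typing import List, Set, Tuple


class Var:
    """A scalar node in the computation graph (hypothetical, illustrative only)."""

    def __init__(self, value: float,
                 parents: Tuple[Tuple["Var", float], ...] = ()) -> None:
        self.value = value      # forward value, kept around for the backward pass
        self.parents = parents  # pairs of (input node, local derivative)
        self.grad = 0.0

    def __add__(self, other: "Var") -> "Var":
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other: "Var") -> "Var":
        # The local derivatives are the *forward* values of the operands,
        # which is why reverse mode must keep intermediate values alive.
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))


def backward(output: Var) -> None:
    """Propagate gradients from `output` back to every node it depends on."""
    order: List[Var] = []
    seen: Set[int] = set()

    def visit(node: Var) -> None:  # depth-first topological sort
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent, _ in node.parents:
            visit(parent)
        order.append(node)

    visit(output)
    output.grad = 1.0
    for node in reversed(order):
        for parent, local in node.parents:
            # Accumulate in place: a node consumed by several operations
            # receives the sum of all incoming gradient contributions.
            parent.grad += node.grad * local


x, y = Var(3.0), Var(4.0)
z = x * y + x            # x is used twice
backward(z)
print(z.value, x.grad, y.grad)  # 15.0 5.0 3.0
```

Here dz/dx = y + 1 = 5 and dz/dy = x = 3, matching the printed gradients.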
The specific details of getting SigmaDiff to work aren’t very interesting – a lot of glue code, refactoring and late-night bug-chasing because the backpropagation didn’t work as it should with some specific combination of operations. One memorable bug was that when remapping backend operations to my own OpenBLAS-based backend, I forgot that transposing a matrix does more than just change its shape – a mistake that cost me weeks of debugging down the line, because things just didn’t work properly with large layers or more than 1 record per minibatch (duh).

3.2 Performance Optimisations

Compared to all the backend work, the “middleware” of layers and optimisers was rather trivial to implement, as there are hundreds of tutorials and papers on how to create certain layers and optimisers, and I only had to map them to our own solution. Really, that part should have taken a few weeks at most, but took much longer because only then did we discover dozens of bugs and stability issues. Skipping over a lot of uninteresting details here, it should be noted that at this point the performance of the framework was quite bad. I’m talking 300ms per iteration of 100 MNIST records with just a few dense layers on a high-end computer bad. This bad performance slowed down not only training but also actual development by quite a lot, hiding a few critical bugs and never letting us test the entire framework in a real-world use case within a reasonable time. You might wonder why we didn’t just fix the performance from the get-go, but we wanted to make the actual training work first so we would have something to show for our thesis. In hindsight not the ideal choice, but it still worked out quite well, and otherwise we wouldn’t have been able to demonstrate our project adequately in time for the final presentation.
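The transposition mistake from section 3.1, and why it stayed hidden with single-record minibatches, can be illustrated with a small sketch. It assumes matrices are stored as flat row-major buffers (an assumption; the post does not say which layout its backend uses), and `swap_shape_only` and `transpose` are hypothetical helper names. A 1 x n matrix, i.e. a minibatch holding one record, has exactly the same memory layout as its transpose, so a "transpose" that only swaps the shape happens to be correct there and breaks as soon as there are two or more rows.

```python
from typing import List, Tuple

Matrix = Tuple[List[float], Tuple[int, int]]  # (flat row-major data, (rows, cols))


def swap_shape_only(data: List[float], shape: Tuple[int, int]) -> Matrix:
    """The buggy version: relabel the dimensions, leave the data untouched."""
    rows, cols = shape
    return list(data), (cols, rows)


def transpose(data: List[float], shape: Tuple[int, int]) -> Matrix:
    """A real transpose: element (i, j) moves to position (j, i)."""
    rows, cols = shape
    out = [data[i * cols + j] for j in range(cols) for i in range(rows)]
    return out, (cols, rows)


# One record per minibatch: shape (1, 3). Both versions agree, so the bug hides.
batch_of_one = [1.0, 2.0, 3.0]
print(swap_shape_only(batch_of_one, (1, 3)) == transpose(batch_of_one, (1, 3)))  # True

# Two records per minibatch: shape (2, 3). Now the data layouts differ.
batch_of_two = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
print(swap_shape_only(batch_of_two, (2, 3))[0])  # [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
print(transpose(batch_of_two, (2, 3))[0])        # [1.0, 4.0, 2.0, 5.0, 3.0, 6.0]
```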
3.2.1 A Self-Adjusting Buffer

It took many months before we finally got around to addressing the performance issues, but there was no single fix in sight, rather a collection of hundreds of small to medium-sized improvements. A major issue was the way our SigmaDiff math processor handled operations: for every operation, a copy was created for the resulting data. That added up. The copying was necessary because reverse-mode (backward) differentiation requires all intermediate values, so we couldn’t just skip the copies. We couldn’t even create all required buffers statically ahead of time, because there was (and still is) no way to traverse the operations that will be executed – the computation graph is constructed anew every time, and we can’t completely rely on it to remain constant. To introduce reliable buffering anyway, we introduced the concept of sessions: a session was meant to be a set of operations that would be repeated many times. Iterations, essentially. When a session was started, we would start storing all created arrays in our own store, and when an array of the same dimensions was requested in the next session, we could return the one from the last session, all without allocating any new memory. If more memory was required than last time, we could still allocate it; if less was used, we could discard the surplus for the next session, making this neatly self-adjusting. To avoid overwriting data that was created within a session but was needed for the next one (e.g. parameters), we added an explicit “limbo” buffer, which was basically just a flag that could be set at runtime on a certain array to mark it as “do not reuse”.
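The session mechanism lends itself to a short sketch. The one below is written in Python rather than C# and assumes buffers are matched by their exact shape and that "limbo" is a per-buffer flag; `SessionBufferPool`, `begin_session`, `get` and `mark_limbo` are hypothetical names, not Sigma's API.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, List, Tuple

Shape = Tuple[int, ...]


class SessionBufferPool:
    """Recycles buffers between sessions (iterations); hypothetical names."""

    def __init__(self) -> None:
        self._reusable: DefaultDict[Shape, List[list]] = defaultdict(list)
        self._current: List[Tuple[Shape, list]] = []  # handed out this session
        self._limbo: Dict[int, list] = {}  # "do not reuse" buffers, kept by id
        self.allocations = 0  # number of fresh allocations, for illustration

    def begin_session(self) -> None:
        # Only buffers used in the previous session become reusable, so the
        # pool grows when more is needed and drops what went unused.
        self._reusable = defaultdict(list)
        for shape, buf in self._current:
            if id(buf) not in self._limbo:
                self._reusable[shape].append(buf)
        self._current = []

    def get(self, shape: Shape) -> list:
        if self._reusable[shape]:
            buf = self._reusable[shape].pop()  # reuse: no new memory, stale contents
        else:
            size = 1
            for dim in shape:
                size *= dim
            buf = [0.0] * size  # nothing suitable from last session: allocate
            self.allocations += 1
        self._current.append((shape, buf))
        return buf

    def mark_limbo(self, buf: list) -> None:
        # Flag a buffer (e.g. parameters) that must survive into later sessions.
        self._limbo[id(buf)] = buf


pool = SessionBufferPool()
params = None
for iteration in range(3):
    pool.begin_session()
    if params is None:
        params = pool.get((4, 4))
        pool.mark_limbo(params)
    activations = pool.get((100, 4))  # same shapes requested every iteration
    gradients = pool.get((100, 4))
print(pool.allocations)  # 3: all allocated in the first iteration, then reused
```

Keying on exact shape keeps the sketch simple; a real pool might also match on data type or backend, which the post does not specify.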
3.2.2 SIMD and Avoiding Intermediate Allocation

Other significant performance improvements came from adding SIMD instructions wherever possible (which let CPU-bound arithmetic operations process typically 8 values at the same time) and from reducing other memory allocation to a minimum by adding in-place operations wherever intermediate values weren’t strictly needed. For example, copying results when accumulating gradients during backpropagation on nodes with multiple operands is unnecessary because the intermediate values aren’t used. By analysing profiler output to death, I eventually got the iteration time for my MNIST sample down to an acceptable 18ms in release configuration (a speedup of about 17x). Incidentally, the core was now so fast that our visualiser sometimes crashed because it couldn’t keep up with all the incoming data.

3.3 Monitoring with Sigma

3.3.1 The Monitoring System

When developing Sigma, we not only focused on the “mathematical” backend but also implemented a feature-rich monitoring system that allows any application to be built on top of Sigma (or, more precisely, Sigma.Core). Every parameter can be observed, every change hooked, every parameter managed. With this monitoring system, we built a monitor (i.e. an application) that can be used to learn Sigma and machine learning in general.

3.3.2 The WPF Monitor

Users should not only be able to use Sigma, but also to learn with Sigma. To that end, we built a feature-rich application (with WPF) that allows users to interact with Sigma: plot learning graphs, manage parameters and control the AI as you would a music player. This monitor, like every other component of Sigma, is fully customisable and extensible. All components were designed with reusability in mind, which allows users to build their own complex applications on top of the default monitor. But why describe a graphical user interface? See it for yourself; here is the UI (and Sigma) in action.
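The “every change hooked” idea behind the monitoring system is essentially the observer pattern. A minimal Python sketch could look like the following; `ObservableParameters`, `subscribe`, `get` and `set` are hypothetical names, not Sigma’s API, and a monitor would subscribe to keys such as a loss value in order to plot them.

```python
from typing import Any, Callable, Dict, List

Hook = Callable[[str, Any, Any], None]  # called as hook(key, old_value, new_value)


class ObservableParameters:
    """Hypothetical parameter registry in which every change can be hooked."""

    def __init__(self) -> None:
        self._values: Dict[str, Any] = {}
        self._hooks: Dict[str, List[Hook]] = {}

    def subscribe(self, key: str, hook: Hook) -> None:
        # Register a callback that fires whenever the value stored under `key` changes.
        self._hooks.setdefault(key, []).append(hook)

    def get(self, key: str, default: Any = None) -> Any:
        return self._values.get(key, default)

    def set(self, key: str, value: Any) -> None:
        old = self._values.get(key)
        self._values[key] = value
        # Only actual changes are reported to subscribers.
        if old != value:
            for hook in self._hooks.get(key, []):
                hook(key, old, value)


if __name__ == "__main__":
    params = ObservableParameters()
    history = []  # what a plotting monitor might collect
    params.subscribe("loss", lambda key, old, new: history.append(new))

    for loss in [0.9, 0.5, 0.5, 0.3]:
        params.set("loss", loss)

    print(history)  # [0.9, 0.5, 0.3]
```

Because the training code only talks to the registry, a GUI, a logger or a test harness can all attach to the same values without the core knowing about them, which is the kind of decoupling that makes building other applications on top possible.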
(Example builds of Sigma can be downloaded from GitHub.)

Learn to learn at the press of a button
Direct interaction during the learning process
Save, restore and share checkpoints

3.4 CUDA Support and Finishing Touches

Only 2 months ago, we started finalising and polishing our framework: adding CUDA support, fixing many stability issues and rounding off a few rough spots that annoyed us. The CUDA support was particularly tricky, as I could only use cuBLAS, not cuDNN, because our backend, by design, doesn’t understand individual layers, just raw computation graphs. A problematic side effect of the session logic described above was that there was no guarantee of when buffers would be freed, as that was the job of the non-deterministic garbage collector (GC). To avoid leaking CUDA device memory, I added my own bare-bones reference counter to the device memory allocator, updated whenever buffers were created or finalised, which worked surprisingly well. With cuBLAS, many custom optimised kernels and many nights of my time, we achieved around 5ms/iteration for the same sample on a single GTX 1080, which we deemed acceptable for our envisioned use cases.

4. Conclusion

Approximately 3000 combined hours, tens of thousands of lines of code and many long nights later, we are proud to finally present something we deem reasonably usable for what it is: Sigma, a machine learning framework that might help you understand a little bit more about machine learning. We probably won’t be adding many new features to Sigma for now, mainly because we’re working on a new, related project that is taking up most of our available time. Even though it lacks a lot of default features (most importantly the host of default layer types other frameworks offer), we’re quite happy with how far we got with our project and hope that it’s an adequate follow-up to the question we originally asked 2 years ago. We would be happy if some of you could check it out and give us some feedback.
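The reference-counting workaround from section 3.4 can be sketched as follows. This is an illustration under assumptions, not the actual allocator: integer handles stand in for device pointers (a real implementation would call the CUDA runtime’s `cudaMalloc` and `cudaFree`), and `RefCountedDeviceAllocator`, `retain`, `release` and `live_blocks` are hypothetical names. In this sketch, a device block shared by several host-side buffers is freed exactly once, when the last of them is released, regardless of the order in which they are finalised.

```python
from typing import Dict


class RefCountedDeviceAllocator:
    """Hypothetical bare-bones allocator that frees device blocks by reference count.

    Integer handles stand in for device pointers; a real version would wrap
    cudaMalloc/cudaFree instead of the bookkeeping below.
    """

    def __init__(self) -> None:
        self._next_handle = 1
        self._counts: Dict[int, int] = {}  # handle -> number of live host buffers using it
        self._sizes: Dict[int, int] = {}   # handle -> size in bytes
        self.bytes_in_use = 0

    def allocate(self, nbytes: int) -> int:
        # The buffer that creates the block holds the first reference.
        handle = self._next_handle
        self._next_handle += 1
        self._counts[handle] = 1
        self._sizes[handle] = nbytes
        self.bytes_in_use += nbytes
        return handle

    def retain(self, handle: int) -> None:
        # Another host-side buffer starts sharing this device block.
        self._counts[handle] += 1

    def release(self, handle: int) -> None:
        # Called when a host-side buffer is finalised (e.g. from a GC finaliser).
        self._counts[handle] -= 1
        if self._counts[handle] == 0:
            # Last user gone: this is where cudaFree would be called.
            del self._counts[handle]
            self.bytes_in_use -= self._sizes.pop(handle)

    def live_blocks(self) -> int:
        return len(self._counts)


if __name__ == "__main__":
    alloc = RefCountedDeviceAllocator()
    block = alloc.allocate(1024)  # created by buffer A
    alloc.retain(block)           # buffer B shares the same block
    alloc.release(block)          # A is finalised first
    print(alloc.bytes_in_use)     # 1024: B still needs the block
    alloc.release(block)          # B is finalised
    print(alloc.bytes_in_use)     # 0
```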
4.1 The Cost of Creating a Machine Learning Framework

Excluding time, it’s quite cheap. Honestly, with some solid prior programming experience (so that the low-level programming part doesn’t become an issue), the whole thing isn’t terribly difficult and is probably something most people could do, given enough time. A lot of time. Overall, it took us approximately:

Some 600 hours of research
Some 2400 hours of development
2 tortured souls, preferably sold to the devil in exchange for fewer bugs

We have long since stopped counting properly, so take these numbers with a grain of salt, but they should be in the right ballpark.

4.2 Final Remarks

All in all, an undertaking like this is extremely time-intensive. It was very much overkill for a high school thesis from the get-go, and we knew that, but it just kept getting more and more elaborate, essentially taking up all of our available time and then some. It was definitely worth it, though: we now have a solid understanding of how things work on a lower level and, most importantly, we can say we’ve actually written a machine learning framework, which grants us additional bragging rights :).

Caption by flotothemoon. Posted By: www.eurekaking.com


Photo

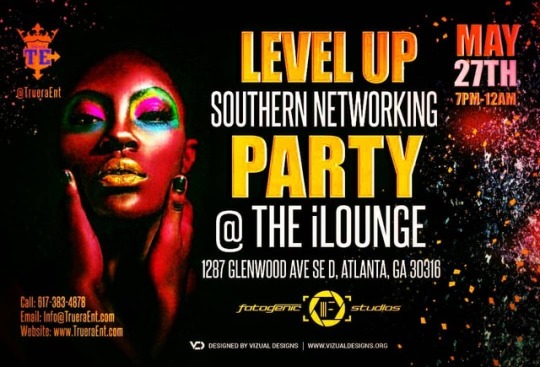

#Splish check it out #Networking #Party in #Atlanta #Georgia #May27 Come splish in another state with yours gravvy #SoGravvy gonna be a professional spill that night @theiloungeofficial #Artist #BodyPaint #entrepreneur #Music #Fashion #Makeup #Networking #Boston #Massachusetts #South #east #truera #Entertainment #Levelup #FullyConnected #BlackBusiness #Business #Promoters #Management (at Atlanta, Georgia)

#atlanta#boston#promoters#makeup#levelup#south#party#business#entrepreneur#artist#splish#fashion#music#entertainment#bodypaint#sogravvy#truera#networking#massachusetts#management#east#fullyconnected#may27#blackbusiness#georgia
