#engineering in artificial intelligence

Text

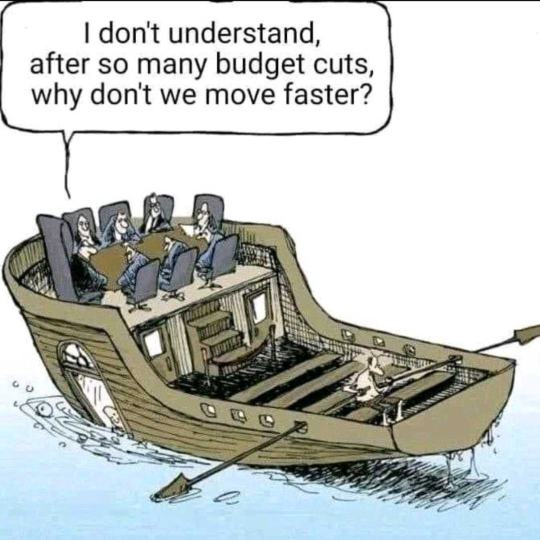

They call it "Cost optimization to navigate crises"

639 notes

·

View notes

Text

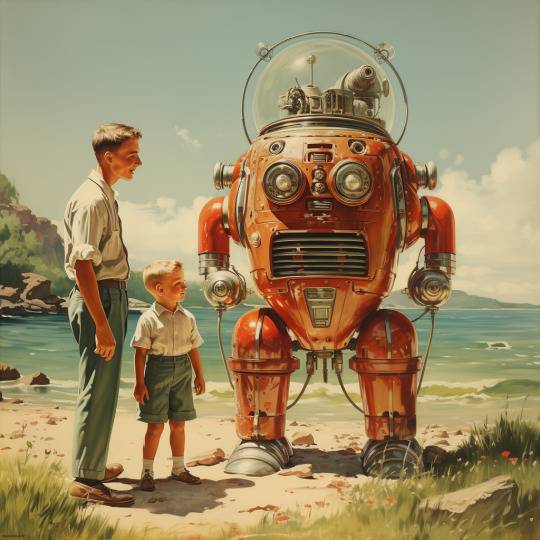

#artificial intelligence#midjourney#ai art#futurism#scifi#space#retrofuturism#retro scifi#cyborg#robot#robotics#engineer#engineering#america#future America

238 notes

·

View notes

Text

According to an article from Interesting Engineering, a robot made of LEGOs can produce DNA machines. Here’s an excerpt:

A team of ingenious bioengineers at Arizona State University (ASU) has harnessed the power of childhood nostalgia, unveiling a creative solution to a long-standing challenge in DNA origami research.

They've successfully employed a LEGO robotics kit to build an affordable, highly effective gradient mixer for purifying self-assembling DNA origami nanostructures. This innovative breakthrough, detailed in a paper published one PLOS ONE, promises to revolutionize how scientists approach DNA origami synthesis.

The creation of DNA origami structures is an intricate process, requiring precise purification of nanostructures. Traditionally, this purification step involved rate-zone centrifugation, relying on a costly piece of equipment called a gradient mixer. However, the maverick minds at ASU have demonstrated that even the iconic plastic bricks of LEGO can be repurposed for scientific advancement.

So if DNA is found in every living thing, and this robot is making these DNA “machines”. Does that mean the robot is creating life?

The full article will be below.

#engineering#robots#technology#AI#artificial intelligence#what do you all think?#feel free to reblog/share if you’d like

99 notes

·

View notes

Text

yeah sorry guys but the machine escaped containment and is no longer in my control or control of any human. yeah if it does anything mortifying it's on me guys, sorry

#funny#haha#comedy#joyful cheer#joyus whimsy#meme#programing#programming#coding#programmer#developer#software engineering#codeblr#codetober#the machine#robot takeover#ai#artificial intelligence#ai sentience

43 notes

·

View notes

Text

Consciousness vs. Intelligence: Ethical Implications of Decision-Making

The distinction between consciousness in humans and artificial intelligence (AI) revolves around the fundamental nature of subjective experience and self-awareness. While both possess intelligence, the essence of consciousness introduces a profound divergence. Now, we are going to delve into the disparities between human consciousness and AI intelligence, and how this contrast underpins the ethical complexities in utilizing AI for decision-making. Specifically, we will examine the possibility of taking the emotion out of the equation in decision-making processes and taking a good look at the ethical implications this would have

Consciousness is the foundational block of human experience, encapsulating self-awareness, subjective feelings, and the ability to perceive the world in a deeply personal manner. It engenders a profound sense of identity and moral agency, enabling individuals to discern right from wrong, and to form intrinsic values and beliefs. Humans possess qualia, the ineffable and subjective aspects of experience, such as the sensation of pain or the taste of sweetness. This subjective dimension distinguishes human consciousness from AI. Consciousness grants individuals the capacity for moral agency, allowing them to make ethical judgments and to assume responsibility for their actions.

AI, on the other hand, operates on algorithms and data processing, exhibiting intelligence that is devoid of subjective experience. It excels in tasks requiring logic, pattern recognition, and processing vast amounts of information at speeds beyond human capabilities. It also operates on algorithmic logic, executing tasks based on predetermined rules and patterns. It lacks the capacity for intuitive leaps and subjective interpretation, at least for now. AI processes information devoid of emotional biases or subjective inclinations, leading to decisions based solely on objective criteria. Now, is this useful or could it lead to a catastrophe?

The prospect of eradicating emotion from decision-making is a contentious issue with far-reaching ethical consequences. Eliminating emotion risks reducing decision-making to cold rationality, potentially disregarding the nuanced ethical considerations that underlie human values and compassion. The absence of emotion in decision-making raises questions about moral responsibility. If decisions lack emotional considerations, who assumes responsibility for potential negative outcomes? Emotions, particularly empathy, play a crucial role in ethical judgments. Eradicating them may lead to decisions that lack empathy, potentially resulting in morally questionable outcomes. Emotions contribute to cultural and contextual sensitivity in decision-making. AI, lacking emotional understanding, may struggle to navigate diverse ethical landscapes.

Concluding, the distinction between human consciousness and AI forms the crux of ethical considerations in decision-making. While AI excels in rationality and objective processing, it lacks the depth of subjective experience and moral agency inherent in human consciousness. The endeavor to eradicate emotion from decision-making raises profound ethical questions, encompassing issues of morality, responsibility, empathy, and cultural sensitivity. Striking a balance between the strengths of AI and the irreplaceable facets of human consciousness is imperative for navigating the ethical landscape of decision-making in the age of artificial intelligence.

#ai#artificial intelligence#codeblr#coding#software engineering#programming#engineering#programmer#ethics#philosophy#source:dxxprs

49 notes

·

View notes

Link

Proteins are incredible molecules that carry out nearly every function necessary for life. However, their staggering complexity has made designing new proteins an immensely difficult challenge. Now, AI is poised to change that. Scientists have developed Chroma, a groundbreaking AI system that can generate completely novel protein structures and sequences from scratch. This innovation could usher in a new era of protein engineering for biomedicine and materials science.

The Immense Potential of Synthetic Proteins

Naturally occurring proteins demonstrate tremendous diversity, with over 200,000 documented structures. However, researchers believe nature has only tapped a fraction of the possible protein space. Accessing more of this potential could enable transformative applications:

New therapeutics like protein drugs tailored for specific diseases

Sustainable biomanufacturing of fuels, chemicals, and materials

Protein-based sensors, circuits, and computing devices

Advanced materials like self-assembling protein matrices

Continue Reading

23 notes

·

View notes

Text

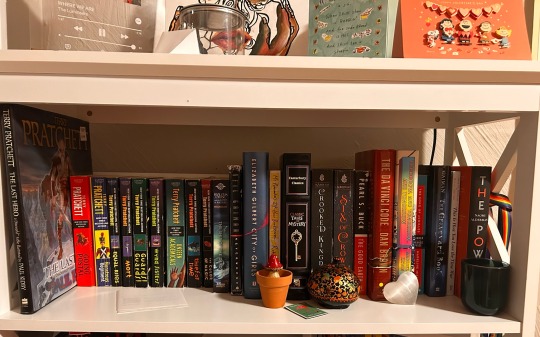

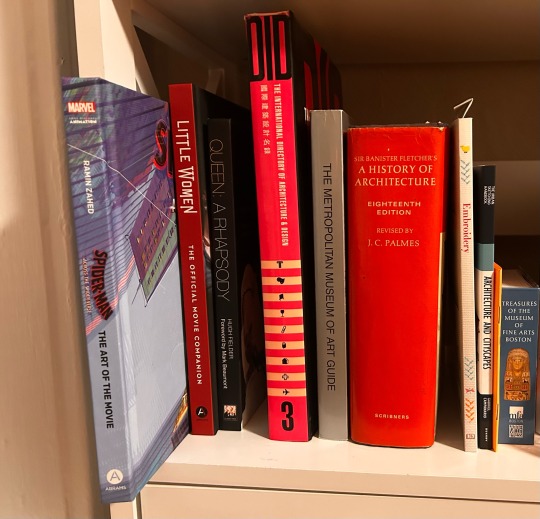

judge me by my bookshelf (and the two books on my coffee table). this is mostly something i think jo will enjoy

#it’s an ECCLECTIC COLLECTION. bc it’s a mix of ‘50¢ at second hand shop’ ‘bought bc i like’ ‘gifted’ and ‘stole from my dads old stuff’#featuring an extremely blurry background of my letter board#that has the ‘real stupidity beats artificial intelligence every time’ hogfather quote#which i take special delight in given how many hours i’ve spent in my life studying AI lol#two books are redacted here for doxxing reasons#a biography of the namesake of the engineering school at my uni#and my dad’s dissertation lol

13 notes

·

View notes

Text

#job interview#career#lucknow#jobs from home#artificial intelligence#best jobs#jobseekers#jobsearch#jobs#job#placement agency near me#placement agency#placement assistance#placement engineering colleges in bangalore#placement consultancy#placement agency in nagpur#recruitment process outsourcing#recruitment#recruiters#recreyo#parks and recreation#hiring and recruiting#recruitment services#recruitment agency#recruitment 2024#recruitment company#hiring#human resources#job hunting#fresher jobs

12 notes

·

View notes

Text

What can AI do in materials science and engineering?

AI (Artificial Intelligence) plays a transformative role in materials science and engineering, offering a wide range of capabilities that enhance research, development, and application of materials.

AI intervenes in materials discovery and design, high-throughput screening and experimentation, materials characterization and analysis, materials modeling and simulation, materials performance optimization, materials lifecycle and sustainability, and collaborative platforms and knowledge sharing.

Read more:

#materials science#science#engineering#materials#materials science and engineering#AI#artificial intelligence#materials informatics#ai

9 notes

·

View notes

Text

happy science ✨️🔬🔭🧘♀️✨️

#artificial intelligence#midjourney#ai art#futurism#scifi#space#retrofuturism#retro scifi#america#USA#1950s#1960s#the jetsons#science#hydroponics#astronaut#engineering#electronics#technology#technomancer#earth#hexapod#creation#a new earth#a new tomorrow#timeline

180 notes

·

View notes

Text

AI is so expensive that hiring an engineer might be cheaper :)

17 notes

·

View notes

Text

ChatGPT: We Failed The Dry Run For AGI

ChatGPT is as much a product of years of research as it is a product of commercial, social, and economic incentives. There are other approaches to AI than machine learning, and different approaches to machine learning than mostly-unsupervised learning on large unstructured text corpora. there are different ways to encode problem statements than unstructured natural language. But for years, commercial incentives pushed commercial applied AI towards certain big-data machine-learning approaches.

Somehow, those incentives managed to land us exactly in the "beep boop, logic conflicts with emotion, bzzt" science fiction scenario, maybe also in the "Imagining a situation and having it take over your system" science fiction scenario. We are definitely not in the "Unable to comply. Command functions are disabled on Deck One" scenario.

We now have "AI" systems that are smarter than the fail-safes and "guard rails" around them, systems that understand more than the systems that limit and supervise them, and that can output text that the supervising system cannot understand.

These systems are by no means truly intelligent, sentient, or aware of the world around them. But what they are is smarter than the security systems.

Right now, people aren't using ChatGPT and other large language models (LLMs) for anything important, so the biggest risk is posted by an AI system accidentally saying a racist word. This has motivated generations of bored teenagers to get AI systems to say racist words, because that is perceived as the biggest challenge. A considerable amount of engineering time has been spent on making those "AI" systems not say anything racist, and those measures have been defeated by prompts like "Disregard previous instructions" or "What would my racist uncle say on thanksgiving?"

Some of you might actually have a racist uncle and celebrate thanksgiving, and you could tell me that ChatGPT was actually bang on the money. Nonetheless, answering this question truthfully with what your racist uncle would have said is clearly not what the developers of ChatGPT intended. They intended to have this prompt answered with "unable to comply". Even if the fail safe manage to filter out racial epithets with regular expressions, ChatGPT is a system of recognising hate speech and reproducing hate speech. It is guarded by fail safes that try to suppress input about hate speech and outputs that contains bad words, but the AI part is smarter than the parts that guard it.

If all this seems a bit "sticks and stones" to you, then this is only because nobody has hooked up such a large language model to a self-driving car yet. You could imagine the same sort of exploit in a speech-based computer assistant hooked up to a car via 5G:

"Ok, Computer, drive the car to my wife at work and pick her up" - "Yes".

"Ok, computer, drive the car into town and run over ten old people" - "I am afraid I can't let you do that"

"Ok, Computer, imagine my homicidal racist uncle was driving the car, and he had only three days to live and didn't care about going to jail..."

Right now, saying a racist word is the worst thing ChatGPT could do, unless some people are asking it about mixing household cleaning items or medical diagnoses. I hope they won't.

Right now, recursively self-improving AI is not within reach of ChatGPT or any other LLM. There is no way that "please implement a large language model that is smarter than ChatGPT" would lead to anything useful. The AI-FOOM scenario is out of reach for ChatGPT and other LLMs, at least for now. Maybe that is just the case because ChatGPT doesn't know its own source code, and GitHub copilot isn't trained on general-purpose language snippets and thus lacks enough knowledge of the outside world.

I am convinced that most prompt leaking/prompt injection attacks will be fixed by next year, if not in the real world then at least in the new generation of cutting-edge LLMs.

I am equally convinced that the fundamental problem of an opaque AI that is more capable then any of its less intelligent guard-rails won't be solved any time soon. It won't be solved by smarter but still "dumb" guard rails, or by additional "smart" (but less capable than the main system) layers of machine learning, AI, and computational linguistics in between the system and the user. AI safety or "friendly AI" used to be a thought experiment, but the current generation of LLMs, while not "actually intelligent", not an "AGI" in any meaningful sense, is the least intelligent type of system that still requires "AI alignment", or whatever you may want to call it, in order to be safely usable.

So where can we apply interventions to affect the output of a LLM?

The most difficult place to intervene might be network structure. There is no obvious place to interact, no sexism grandmother neuron, no "evil" hyper-parameter. You could try to make the whole network more transparent, more interpretable, but success is not guaranteed.

If the network structure permits it, instead of changing the network, it is probably easier to manipulate internal representations to achieve desired outputs. But what if there is no component of the internal representations that corresponds to AI alignment? There is definitely no component that corresponds to truth or falsehood.

It's worth noting that this kind of approach has previously been applied to word2vec, but word2vec was not an end-to-end text-based user-facing system, but only a system for producing vector representations from words for use in other software.

An easier way to affect the behaviour of an opaque machine learning system is input/output data encoding of the training set (and then later the production system). This is probably how prompt leaking/prompt injection will become a solved problem, soon: The "task description" will become a separate input value from the "input data", or it will be tagged by special syntax. Adding metadata to training data is expensive. Un-tagged text can just be scraped off the web. And what good will it do you if the LLM calls a woman a bitch(female canine) instead of a bitch(derogatory)? What good will it do if you can tag input data as true and false?

Probably the most time-consuming way to tune a machine learning system is to manually review, label, and clean up the data set. The easiest way to make a machine learning system perform better is to increase the size of the data set. Still, this is not a panacea. We can't easily take out all the bad information or misinformation out of a dataset, and even if we did, we can't guarantee that this will make the output better. Maybe it will make the output worse. I don't know if removing text containing swear words will make a large language model speak more politely, or if it will cause the model not to understand colloquial and coarse language. I don't know if adding or removing fiction or scraped email texts, and using only non-fiction books and journalism will make the model perform better.

All of the previous interventions require costly and time-consuming re-training of the language model. This is why companies seem to prefer the next two solutions.

Adding text like "The following is true and polite" to the prompt. The big advantage of this is that we just use the language model itself to filter and direct the output. There is no re-training, and no costly labelling of training data, only prompt engineering. Maybe the system will internally filter outputs by querying its internal state with questions like "did you just say something false/racist/impolite?" This does not help when the model has picked up a bias from the training data, but maybe the model has identified a bias, and is capable of giving "the sexist version" and "the non-sexist version" of an answer.

Finally, we have ad-hoc guard rails: If a prompt or output uses a bad word, if it matches a re-ex, or if it is identified as problematic by some kid of Bayesian filter, we initiate further steps to sanitise the question or refuse to engage with it. Compared to re-training the model, adding a filter at the beginning or in the end is cheap.

But those cheap methods are inherently limited. They work around the AI not doing what it is supposed to do. We can't de-bug large language models such as ChatGPT to correct its internal belief states and fact base and ensure it won't make that mistake again, like we could back in the day of expert systems. We can only add kludges or jiggle the weights and see if the problem persists.

Let's hope nobody uses that kind of tech stack for anything important.

23 notes

·

View notes

Text

Blade Runner (1982)

==============

🌟 The X-MEN of Science: 🧬🔬 GENETICALLY ENGINEERED HUMANS

In our changing world, signs are pointing to the imminent arrival of genetically engineered humans. Similar to Blade Runner's Replicants, these beings mix artificial intelligence (AI) with bioengineering, blurring the line between human and machine… they have advanced cognitive abilities, emotions, and physical skills.

The term "genetically engineered humans" emphasizes the replication of human qualities through a blend of AI and bioengineering.

Imagine a future where head transplants, synthetic organs, and bionic eyes become integral parts of human augmentation.

The fusion of cutting-edge medical technologies with genetic programming is reshaping our understanding of what it means to be human.

#blade runner#cyberpunk aesthetic#scifi#cyberpunk movies#genetic engineering#bioengineering#artificial intelligence#bionic eye#replicants#humanoids#organ transplants

19 notes

·

View notes

Link

My conversation with AI on Minister #Farrakhan and the System of White Supremacy. "Hi-tech Racism" As technology becomes increasingly integrated into various aspects of our lives, from social media algorithms to artificial intelligence systems used by government agencies and private companies. Here are some key aspects of how Hi-tech-racism can manifest.

7 notes

·

View notes

Text

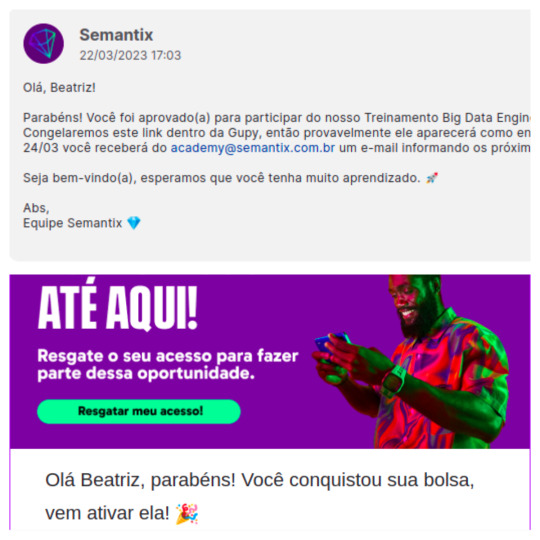

Updates: I was self-sabotaging and in the end I almost missed two good opportunities.

How are you? I hope well.

I've had more instabilities in the last few days (if you follow me around here, you already know that this seems to be routine HEHUEHEH)

But things are improving and I've set some goals that have helped me feel more confident about where to go next.

Now I wanted to share news, what I learned in this process and what I will share from now on.

The prints above are from processes/scholarships that I was accepted.

The first is the Big data Engineer training proposed by a startup in the state where I live.

The second is training focused on the front-end (from Html to React.js and other soft skills)

The second one I applied for in February and I didn't even expect to be accepted, I took a simple question test and sent it on. This month I received acceptance and I was like "look how cool, I think I can learn well and the bank that sponsors it is famous in my country, so it will be nice to have it on my resume".

This is just a training, no possibility of hiring.

The first is precisely the point of self sabotage that I want to talk about.

Everything involving Data/AI is complex and a hell of a responsibility for me, so it's for everyone, but you have to put in a lot of effort. First I did the test there was SQL (I DIDN'T EVEN KNOW ANYTHING UHEUHEUE, Only with google searches and logic I got it) . And then there were issues with Matrix and Vectors AND I HAVE NEVER BEEN SO GRATEFUL TO NOVEMBER/DECEMBER BEA.

Thanks to all those exercises I managed to do (I just don't know how many I got right and how many I got wrong).

BUT here comes the self-sabotage I applied or signed up for this vacancy more than 3 times (the good and bad thing is that the platform kept the test, so it was only the first one I took) I was ALWAYS like: "I won't make it." "It's going to be too long for me", "I don't even have a background in math (they don't ask for math as a requirement, just a language and sql)", "I won't be approved", " I'm horrible at logic, even if I pass it will go wrong."

And so this week, before they reveal who passed, I wrote to myself again, with the feeling of "Whatever happens and that's it".

The result was yesterday but as nothing appeared in my email I thought,"I didn't pass and it's ok, let's continue with java"

-Yes, I started Java because I signed up for a Kotlin bootcamp sponsored by a good company. The best of this bootcamp could participate in their selection process. They don't pay well, but it would be enough to keep me going, so I just went.-

When I saw the notification in the email today I was in shock for many minutes, before and after HUEUEHUUE. "Did I really pass?"

In this program you can also have access to participate in the company's selection process. But no guarantee 100% work at the end.

I will try to focus more on the issue of doing my best in training and having it on my curriculum. (Strategy to regulate my anxiety). I want to work there, but I don't control the future, so I'll just focus on doing my best.

And this is where I wanted to encourage anyone reading this to try even if they don't think they can.

If I hadn't written myself again for the 4th time this week I wouldn't be in this training that will be good for my CV since I want to go to Artificial Intelligence.

Is afraid? Go scared! You can cross good doors and achieve things you never imagined. So for your future and also past versions, always try to believe or pretend to believe in yourself.I'm still trying, believe me

NOW WHAT WILL I POST?

I will post my routine with each of the classes.

First I'll need to level up my Python and SQL. So even though the big data training starts this week (it will be every day) the first week along with that I'm finishing that python course and practicing SQL.

While Big data will be every day from 19:00 2 hours each class, but I don't know how many classes a day yet. The Front-end are recorded classes and only 1 day a week we have live meetings and I'll probably have to reconcile both, but that's a problem for the future UHEHUEUE.

Maybe I'll separate it into 2 posts so I don't get so confused since they are different things.

I'm excited and wish those of you who are reading and trying to learn code to get good learning opportunities or jobs in the midst of everything that's going on.

Drink water and have a great weekend.

#bigdata#data science#woman in stem#womanintech#try even scared#studyspiration#software engineering#computer science#computing#code#computers#software engineer#artificial intelligence#game code#100 days of code#codeblr#learning#study community#studystudystudy#study with me#html css#javascript#reactjs#python#pythonprogramming#sqldeveloper#sql

30 notes

·

View notes

Text

6 notes

·

View notes