#can't copyright AI!

Text

Are we all just very tired yeah?

279 notes

Text

The day of the rescheduled tournament that they finally made it to and won before having a barbecue to celebrate.

And the last day the sky was blue.

#mostly just to get my kid designs out there#i got depressed drawing this tho#terry won't ever get to see the blue sky again after everything they fought for#FUCK dude#young nick looks exactly like my taylor design tho 😭😭 i can't HELP it#the doodler design shamelessly inspired by an ai generated one posted on reddit#ai shit free from copyright claims yo 😤😤#my art#dndads#dndads art#dungeons and daddies#dungeons and daddies art#nick close#nicky foster#?? 😭😭 what do i do with that kid??#lark oak garcia#lark oak#sparrow oak garcia#sparrow oak#grant wilson#terry jr#terry stampler#dungeons and daddies s1#dndads s1#eventually I'll do art for that one line willy gives the dads when they first get the kids from ravenloft#it's like#“if you raise them they will be unhappy or dangerous and give in to their worst temptations”#and holy fuck does that line hit different now#I'll get there. probably.

147 notes

Text

it would be SO funny to me if Ao3 really goes down because of the AI debacle. the cognitive dissonance is unreal, imagine going against a website dedicated to protecting copyright infringement and protecting the speech of the most vile, racist, pedophilic fans of media... for not being against copyright infringement. girl u can't have it both ways!!

#rambles#ur fanfic is already copyright infringement sorry to tell you#that's why AO3 disallows any moneymaking on their site#because the second they claim you make money off your fanfic they WILL take you to court#like AO3 exists because anne rice was batshit insane about copyright#you can't just claim that AI is somehow infringing your copyright of your fanfic when your fanfic IS the infringement

11 notes

Text

Théâtre d'Opéra Spatial, a work of Artificial Intelligence, is free to use by all, at least in the US, because it can't be copyrighted.

Some sort of pressure must exist; the artist exists because the world is not perfect. Art would be useless if the world were perfect, as man wouldn’t look for harmony but would simply live in it. Art is born out of an ill-designed world.

― Andrei Tarkovsky

4 notes

Text

the ai art discourse is so fucking stupid man (remembers like half my friends go to art school) (clamps a hand over my mouth)

#i rlly cant see it as anything but a moral panic. ive read panicked editorials panning the use of computers in art#if it causes mass layoffs while simultaneously making every movie look like shit (have you SEEN THAT FUCKING AI GENERATED MARVEL MOVIE OUTR#then thats capitalism treating artists like it always has#im also not disabled but seeing people go “why can't you just draw using a pencil in your mouth instead of STEALING!!!!!!!”#is arguably more ableist than saying “hey lol lets see what disabled people can make with an image scraper”#ai is going to be regulated through god knows how much legal discourse. But if the act of “scraping other peoples art with a computer” itself becomes illegal? Say goodbye to pop art and superflat. Adobe is already lobbying for stricter copyright#im mainly concerned abt the layoffs soon to arise from this but why be morally opposed to ai itself as a tool? It's not going away#we need stronger emphasis on unions and ending harsh layoffs of artists and crunch#idk whats going to happen#i just dont want stricter copyright thats the death of art#not a vague abt any of my followers#fish talks

4 notes

Text

If you want to make an anime character sing a thing just get a fucking vocalsynth. UTAU and SynthV Basic are free and have a ton of good voicebanks

#ik those ppl that make ai covers wouldn't use them but cmon. hatsune miku is literally right there#also how are the ai voice things getting around copyright laws anyway. like w the chatbots i get it#bc they're just trained to act like a character but like. you can't distribute jinriki utaus but you can distribute ai voices???#i don't get it#roseflower.txt

3 notes

Text

.

#you know#since ai art can't be copyrighted maybe people should start reposting ai stuff everywhere#not crediting the person who made the ai generate that piece#to show those people how much it sucks when you take something from others without asking and claim it as 'your work'#yes i'm somehow in a very petty mood rn

2 notes

Text

I feel like you guys forget that the current use of AI is pretty much another form of worker exploitation. Yeah yeah, copyright laws are awful and idiotic, but until the system becomes fair I don't think any of this will matter if they blatantly steal from us while keeping us in poverty and unable to fight back

#copyright laws are terrible for creators and only good for companies yes. but this is not the issue we have with AI#the issue we have is that they steal everything from us and we can't even claim the efforts from our labor.#we're straight up working for free?#that wouldn't be a problem if we didn't live under a system where everything is paid but y'know. not how we work rn#jorjposting#idk man i just think y'all are unaware of how much you're siding with the oppressor in this.#no tool is worth the fetishization of indigenous art that we're currently dealing with in AIs.#would you say a whip is just a tool when you know its only use is for oppression?

0 notes

Text

People who latch onto ai as a buzzword thing to hate are both a) buying into the marketing of everything called 'ai' being the same magic artificial intelligence technology and b) entirely limiting their idea of what it is to how it personally affects/might affect them. You will not stick it to the exploitation of artists by loudly condemning like... Sci-fi that explores the concept of artificial nonhuman sentience just cause they refer to it as artificial intelligence.

#yes I have seen many people with this take#so strictly confined to how something affects you and your community specifically!!#as if artists are the only people to have their jobs taken by machines.#as if it was fine when it happened to farmworkers to calculators to typists to weavers to swordsmiths to... you get the idea#as if dependence on your training being the most efficient way for a profit seeking entity to make what they want to sell is sustainable#or even fucking DESIRED for the state of the artform or whatever#this economic system and art are inherently incompatible#programs marketed as ai are not the cause and blindly rallying against whatever ai means to you isn't the answer#in fact it'd probably hurt you if you succeeded in either banning the tech (ppl would lie abt using it cause u can't make ppl unknow things#(and it'd be so hard to legally define without being meaningless or also catching tech that could like. save lives.)#or if you got perfectly enforced more stringent copyright (just. look at what happened to the music world. it's a hellscape)#(non-huge music artists only avoid getting sued for every musical idea by not making enough money to be worth going after)#(and huge ones stick with what has been done enough times that no one could even claim to own it or give nonsense songwriting creds)#anyway. just an understandable but short-sighted and self-centred reactionary worldview exemplified by getting mad at 'robots good?' scifi#I have seen so many instances (irl) of people on principle refusing to learn anything new abt the scary thing#when it's my friend talking about like. building certain navigation systems. cause it's called ai.#ghost.personal#<= cause this is pure frustrated rant not My Thinkpiece

1 note

Text

Looking for a good article to source about illustrators and the best way to get hired, but all I'm finding is how AI is replacing artists and how illustrators are "throwing a fit" over it.

#well#yeah!#You stole a bunch of artists' work, melted it down into a monstrosity, and proceeded to brag about it as if it's doing the job better#and artists are upset about pouring blood, sweat and tears into their work just to be replaced by something soulless#Also reminder but ig you can't copyright ai so have fun ig

0 notes

Text

Yeah, I'm gonna make some enemies over AI art

#AI art#rants#this might be why kurenaiwataru blocked me#this is fucking culture war now#you gotta stop#this isn't healthy#you can't treat every issue as good vs evil#you gotta have nuance#in this case you can't be calling for banning AI#first off you can't#second it's easier to go after copyright and that's gonna hurt you

0 notes

Text

Something I really don't understand about AI companies buying the rights to steal content from social media to feed their data training sets is, well. It's illegal as hell.

That content does not belong to the social media companies to start with. Personal data is a bit nebulous as it is, and some countries have better protection for it than others, but posting a picture on tumblr doesn't mean that Automattic automatically has the right to use, distribute and sell it as it sees fit.

I mean. Official accounts for big companies like Disney use social media for advertising. But posting a picture of Elsa on Twitter doesn't mean they're giving Twitter the right to use and sell that picture. But suddenly Twitter sells the nebulous ability to "scrape content from Twitter for AI training", so now Midjourney owns that picture of Elsa? What's the fucking ruling there?

People own the rights to what they have created even without officially registering anything: copyright applies automatically, and trademarks are a separate thing (registering those is expensive as hell, by the way). Social media selling content means that they are selling copyrighted material created by their users: some of it coming from big companies that have trademarked the shit out of everything, some of it coming from small creators who STILL have the rights to what they've created without ever registering anything.

Currently, what you produce through AI generators can't actually be copyrighted (at least in the US), since it was not made by a human, but what gets fed into the training datasets is often copyrighted material from unconsenting people. It's basically a copyright laundering scheme.

I wholeheartedly hope that some regulations will be put in place, and that big companies will at least do their best to help this case, even if it's just to protect their own IPs and property. Given how overprotective Disney, Nintendo and other big names have been about their content, I can't imagine they'll be happy having it sold to Midjourney, OpenAI and other crap for free, without their consent.

2K notes

Text

I just can't fathom how any artist could possibly support proposals to expand the scope of copyright so that stylistically similar works can be held to infringe as a defence against AI art. The content ID algorithms of major media platforms are implemented in a way which already establishes a de facto presumption that the sum total of humanity's creative output is owned by approximately six major media corporations, placing the burden of proof on the individual artist to demonstrate otherwise, and they've managed to do this in a legislative environment in which only directly derivative works may infringe. Can you imagine what copyright strikes on YouTube would look like if the RIAA and its cronies were obliged merely to assert that your work exhibits stylistic similarity to literally any piece of content that they own? Do you imagine that Google wouldn't cheerfully help them do it?

4K notes

Text

ok, i've gotta branch off the current ai disc horse a little bit because i saw this trash-fire of a comment in the reblogs of that one post that's going around

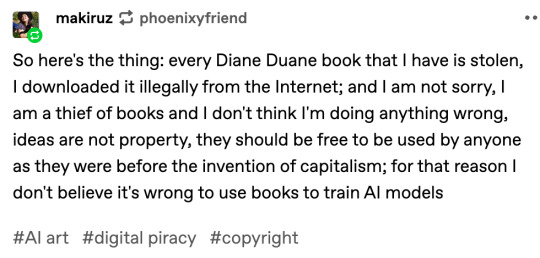

[reblog by user makiruz (i don't feel bad for putting this asshole on blast) that reads "So here's the thing: every Diane Duane book that I have is stolen, I downloaded it illegally from the Internet; and I am not sorry, I am a thief of books and I don't think I'm doing anything wrong, ideas are not property, they should be free to be used by anyone as they were before the invention of capitalism; for that reason I don't believe it's wrong to use books to train AI models"]

this is asshole behavior. if you do this and if you believe this, you are a Bad Person full stop.

"Capitalism" as an idea is more recent than commerce, and i am So Goddamn Tired of chuds using the language of leftism to justify their shitty behavior. and that's what this is.

like, we live in a society tm

if you like books but you don't have the means to pay for them, the library exists! libraries support authors! you know what doesn't support authors? stealing their books! because if those books don't sell, then you won't get more books from that author and/or the existing books will go out of print! because we live under capitalism.

and like, even leaving aside the capitalism thing, how much of a fucking piece of literal shit do you have to be to believe that you deserve art, that you deserve someone else's labor, but that they don't deserve to be able to live? to feed and clothe themselves? sure, ok, ideas aren't property, and you can't copyright an idea, but you absolutely can copyright the Specific Execution of an idea.

so makiruz, if you're reading this, or if you think like this user does, i hope you shit yourself during a job interview. like explosively. i hope you step on a lego when you get up to pee in the middle of the night. i hope you never get to read another book in your whole miserable goddamn life until you disabuse yourself of the idea that artists are "idea landlords" or whatever the fuck other cancerous ideas you've convinced yourself are true to justify your abhorrent behavior.

4K notes

Text

I can’t interact with this person without my blood pressure soaring to dangerous levels but I also can’t leave my astonishment at this unvoiced.

I can’t believe I have to say this but an AI algorithm isn’t a human being or a human brain and an AI algorithm mindlessly tracing over someone’s work isn’t the same as someone learning how to draw by being inspired by another person’s work.

the AI doesn’t have a brain and neither does this person.

#and even then human artists get in trouble for tracing and stealing all the time???????#companies even get in trouble for using each other's designs and assets and shit????#why are we acting like plagiarism and copyright just don't exist because hurdur automation?#the whole point is that AI needs to be held to the same standards as everyone else#and right now they can't be because artist consent isn't being asked for#idk how this person is so dumb fr

1 note

Text

Often when I post an AI-neutral or AI-positive take on an anti-AI post I get blocked, so I wanted to make my own post to share my thoughts on "Nightshade", the new adversarial data poisoning attack that the Glaze people have come out with.

I've read the paper and here are my takeaways:

Firstly, this is not necessarily or primarily a tool for artists to "coat" their images like Glaze; in fact, Nightshade works best when applied to carefully selected "archetypal" images, ideally ones that were themselves generated by a generative model prompted with the generic concept to be attacked (which is what the authors did in their paper). Also, each image has to be explicitly paired with a specific text caption optimized to have the most impact, which would make it pretty annoying for individual artists to deploy.

While the intent of Nightshade is to have maximum impact with minimal data poisoning, in order to attack a large model there would have to be many thousands of samples in the training data. Obviously, if you have a webpage that you created specifically to host a massive gallery of poisoned images, that can be fairly easily blacklisted, so you'd need a lot of patience and resources to hide these well enough that they proliferate into the training datasets of major models.

The main use case for this as suggested by the authors is to protect specific copyrights. The example they use is that of Disney specifically releasing a lot of poisoned images of Mickey Mouse to prevent people generating art of him. As a large company like Disney would be more likely to have the resources to seed Nightshade images at scale, this sounds like the most plausible large-scale use case to me, even if web artists could crowdsource some sort of similar generic campaign.

Either way, the optimal use case of "large organization repeatedly using generative AI models to create images, then running them through another resource-heavy AI model to corrupt them, then hiding them on the open web, to protect specific concepts and copyrights" doesn't sound like the big win for freedom of expression that people are going to pretend it is. This is the case for a lot of discussion around AI and I wish people would stop flagwaving for corporate copyright protections, but whatever.

The panic about AI resource use in terms of power/water is mostly bunk (AI training is done once per large model, and in terms of industrial production processes, using a single airliner flight's worth of carbon output for an industrial model that can then be used indefinitely to do useful work seems like small fry compared to all the other nonsense that humanity wastes power on). However, given that deploying this at scale would be a huge compute sink, it's ironic to see anti-AI activists for whom that is a talking point hyping this up so much.

In terms of actual attack effectiveness; like Glaze, this once again relies on analysis of the feature space of current public models such as Stable Diffusion. This means that effectiveness is reduced on other models with differing architectures and training sets. However, also like Glaze, it looks like the overall "world feature space" that generative models fit to is generalisable enough that this attack will work across models.

That means that if this does get deployed at scale, it could definitely fuck with a lot of current systems. That said, once again, it'd likely have a bigger effect on indie and open source generation projects than on the massive corporate monoliths who are probably working to secure proprietary datasets, like I believe Adobe Firefly did. I don't like how these attacks concentrate power upward.

The generalisation of the attack doesn't mean that this can't be defended against, but it does mean that you'd likely need to invest in bespoke measures; e.g. specifically training a detector on a large dataset of Nightshade poison in order to filter them out, spending more time and labour curating your input dataset, or designing radically different architectures that don't produce a comparably similar virtual feature space. I.e. the effect of this being used at scale wouldn't eliminate "AI art", but it could potentially cause a headache for people all around and limit accessibility for hobbyists (although presumably curated datasets would trickle down eventually).

All in all, a bit of a dick move that will make things harder for people in general, but I suppose that's the point, and what people who want to deploy this at scale are aiming for. With public data scraping, I suppose that sort of thing is fair game.

Additionally, since making my first reply I've had a look at their website:

> Used responsibly, Nightshade can help deter model trainers who disregard copyrights, opt-out lists, and do-not-scrape/robots.txt directives. It does not rely on the kindness of model trainers, but instead associates a small incremental price on each piece of data scraped and trained without authorization. Nightshade's goal is not to break models, but to increase the cost of training on unlicensed data, such that licensing images from their creators becomes a viable alternative.

Once again we see that the intended impact of Nightshade is not to eliminate generative AI but to make it infeasible for models to be created and trained without a corporate money-bag to pay licensing fees for guaranteed clean data. I generally feel that this focuses power upwards and is overall a bad move. If anything, this sort of model, where only large corporations can create and control AI tools, will do nothing to counter the economic displacement without worker protection that is the real issue with AI systems deployment; it will only further concentrate the benefits of those systems in said large corporations.

Kinda sucks how that gets pushed through by lying to small artists about the importance of copyright law for their own small-scale works (ignoring the fact that processing derived metadata from web images is pretty damn clearly a fair use application).
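The Nightshade site quote mentions do-not-scrape/robots.txt directives. For anyone who hasn't seen one, robots.txt is a plain-text file where a site lists which crawlers may fetch which paths, and honoring it is entirely voluntary on the crawler's side, which is the "kindness of model trainers" that quote is talking about. Here's a minimal sketch of the check a well-behaved scraper would do, using Python's standard-library `urllib.robotparser`; the crawler name `ExampleAIBot` and the example.com URLs are made up for illustration and don't refer to any real bot or site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: blocks one made-up AI crawler from the whole site,
# and blocks every other crawler only from /private/.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def allowed(user_agent: str, url: str) -> bool:
    """Return True if the robots.txt rules above permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    art = "https://example.com/gallery/painting.png"
    print(allowed("ExampleAIBot", art))   # False
    print(allowed("SomeSearchBot", art))  # True
```

Nothing forces a scraper to run this check at all; skipping it is trivial, which is exactly the gap the Nightshade people say they're trying to put a price on.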

1K notes
