#Maxim ays

Text

Period drama week 2023-

Day 7: Free day.

Gotta be my favourite, in costume bts shenanigans.

#period drama week 2023#day 7#behind the scenes#bts#sanditon#frank blake#rosie graham#maxim ays#downton abbey#maggie smith#michelle dockery#penelope wilton#rob james collier#brendan coyle#bridgerton#luke thompson#jonathan bailey#queen charlotte#arsema thomas#poldark#aiden turner#eleanor tomlinson#outlander#sam heughan#caitriona balfe#sophie skelton#sharpe#sean bean#the empress#devrim lingnau

26 notes


Photo

I never should have guessed that spartan Captain Fraser could have such a poetic soul.

#sanditonedit#perioddramaedit#perioddramagif#weloveperioddrama#perioddramasource#tvedit#myedit#sanditon#declan x alison#declan fraser#alison heywood#william carter#frank blake#rosie graham#maxim ays#HIS LIL SMUG SMILE WHEN SHE TALKS ABOUT THE LETTER#BOY KNOWS HE'S GOOD#AND HE HAS IT BAD FOR HER DELIGHT

217 notes


Photo

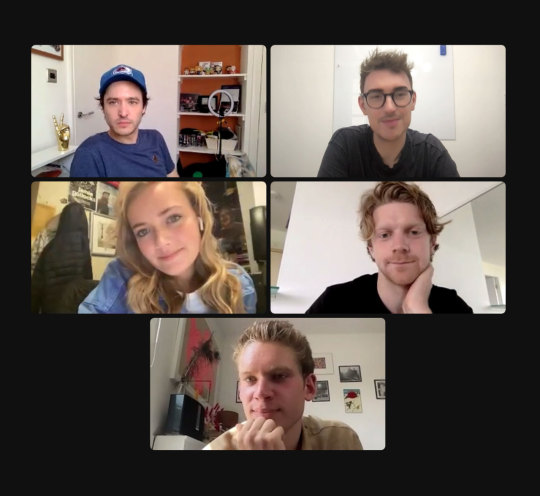

Sanditon Cast on 7/13/22 via Twitter (@sam_cleal)

#sanditon#sanditon cast#rose williams#ben lloyd hughes#rosie graham#kris marshall#justin young#turlough convery#frank blake#alexander vlahos#eloise webb#maxim ays

15 notes


Text

The AI hype bubble is the new crypto hype bubble

Back in 2017 Long Island Iced Tea — known for its undistinguished, barely drinkable sugar-water — changed its name to “Long Blockchain Corp.” Its shares surged to a peak of 400% over their pre-announcement price. The company announced no specific integrations with any kind of blockchain, nor has it made any such integrations since.

If you’d like an essay-formatted version of this post to read or share, here’s a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/03/09/autocomplete-worshippers/#the-real-ai-was-the-corporations-that-we-fought-along-the-way

LBCC was subsequently delisted from NASDAQ after settling with the SEC over fraudulent investor statements. Today, the company trades over the counter and its market cap is $36m, down from $138m.

https://cointelegraph.com/news/textbook-case-of-crypto-hype-how-iced-tea-company-went-blockchain-and-failed-despite-a-289-percent-stock-rise

The most remarkable thing about this incredibly stupid story is that LBCC wasn’t the peak of the blockchain bubble — rather, it was the start of blockchain’s final pump-and-dump. By the standards of 2022’s blockchain grifters, LBCC was small potatoes, a mere $138m sugar-water grift.

They didn’t have any NFTs, no wash trades, no ICO. They didn’t have a Super Bowl ad. They didn’t steal billions from mom-and-pop investors while proclaiming themselves to be “Effective Altruists.” They didn’t channel hundreds of millions to election campaigns through straw donations and other forms of campaign finance fraud. They didn’t even open a crypto-themed hamburger restaurant where you couldn’t buy hamburgers with crypto:

https://robbreport.com/food-drink/dining/bored-hungry-restaurant-no-cryptocurrency-1234694556/

They were amateurs. Their attempt to “make fetch happen” only succeeded for a brief instant. By contrast, the superpredators of the crypto bubble were able to make fetch happen over an improbably long timescale, deploying the most powerful reality distortion fields since Pets.com.

Anything that can’t go on forever will eventually stop. We’re told that trillions of dollars’ worth of crypto has been wiped out over the past year, but these losses are nowhere to be seen in the real economy — because the “wealth” that was wiped out by the crypto bubble’s bursting never existed in the first place.

Like any Ponzi scheme, crypto was a way to separate normies from their savings through the pretense that they were “investing” in a vast enterprise — but the only real money (“fiat” in cryptospeak) in the system was the hardscrabble retirement savings of working people, which the bubble’s energetic inflaters swapped for illiquid, worthless shitcoins.

We’ve stopped believing in the illusory billions. Sam Bankman-Fried is under house arrest. But the people who gave him money — and the nimbler Ponzi artists who evaded arrest — are looking for new scams to separate the marks from their money.

Take Morgan Stanley, who spent 2021 and 2022 hyping cryptocurrency as a massive growth opportunity:

https://cointelegraph.com/news/morgan-stanley-launches-cryptocurrency-research-team

Today, Morgan Stanley wants you to know that AI is a $6 trillion opportunity.

They’re not alone. The CEOs of Endeavor, Buzzfeed, Microsoft, Spotify, Youtube, Snap, Sports Illustrated, and CAA are all out there, pumping up the AI bubble with every hour that god sends, declaring that the future is AI.

https://www.hollywoodreporter.com/business/business-news/wall-street-ai-stock-price-1235343279/

Google and Bing are locked in an arms-race to see whose search engine can attain the speediest, most profound enshittification via chatbot, replacing links to web-pages with florid paragraphs composed by fully automated, supremely confident liars:

https://pluralistic.net/2023/02/16/tweedledumber/#easily-spooked

Blockchain was a solution in search of a problem. So is AI. Yes, Buzzfeed will be able to reduce its wage-bill by automating its personality quiz vertical, and Spotify’s “AI DJ” will produce slightly less terrible playlists (at least, to the extent that Spotify doesn’t put its thumb on the scales by inserting tracks into the playlists whose only fitness factor is that someone paid to boost them).

But even if you add all of this up, double it, square it, and add a billion dollar confidence interval, it still doesn’t add up to what Bank Of America analysts called “a defining moment — like the internet in the ’90s.” For one thing, the most exciting part of the “internet in the ‘90s” was that it had incredibly low barriers to entry and wasn’t dominated by large companies — indeed, it had them running scared.

The AI bubble, by contrast, is being inflated by massive incumbents, whose excitement boils down to “This will let the biggest companies get much, much bigger and the rest of you can go fuck yourselves.” Some revolution.

AI has all the hallmarks of a classic pump-and-dump, starting with terminology. AI isn’t “artificial” and it’s not “intelligent.” “Machine learning” doesn’t learn. On this week’s Trashfuture podcast, they made an excellent (and profane and hilarious) case that ChatGPT is best understood as a sophisticated form of autocomplete — not our new robot overlord.

https://open.spotify.com/episode/4NHKMZZNKi0w9mOhPYIL4T

We all know that autocomplete is a decidedly mixed blessing. Like all statistical inference tools, autocomplete is profoundly conservative — it wants you to do the same thing tomorrow as you did yesterday (that’s why “sophisticated” ad retargeting shows you ads for shoes in response to your search for shoes). If the word you type after “hey” is usually “hon” then the next time you type “hey,” autocomplete will be ready to fill in your typical following word — even if this time you want to type “hey stop texting me you freak”:

https://blog.lareviewofbooks.org/provocations/neophobic-conservative-ai-overlords-want-everything-stay/

And when autocomplete encounters a new input — when you try to type something you’ve never typed before — it tries to get you to finish your sentence with the statistically median thing that everyone would type next, on average. Usually that produces something utterly bland, but sometimes the results can be hilarious. Back in 2018, I started to text our babysitter with “hey are you free to sit” only to have Android finish the sentence with “on my face” (not something I’d ever typed!):

https://mashable.com/article/android-predictive-text-sit-on-my-face
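To make the "conservative" behavior described above concrete, here is a minimal sketch of a frequency-based next-word predictor. It is an illustration only, not how Android's keyboard or ChatGPT actually work: the `Autocomplete` class, its `learn` and `suggest` methods, and the two-table design (your own history first, everyone's history as a fallback) are all assumptions made for this example, and it picks the most frequent following word rather than anything more sophisticated.

```python
from collections import Counter, defaultdict


class Autocomplete:
    """Toy next-word predictor built from word-pair counts (hypothetical design)."""

    def __init__(self):
        # Counts of which word followed which, from this user's own messages.
        self.personal = defaultdict(Counter)
        # The same counts pooled from everyone else's messages.
        self.everyone = defaultdict(Counter)

    @staticmethod
    def _pairs(text):
        # Yield (previous word, next word) pairs from a message.
        words = text.lower().split()
        return zip(words, words[1:])

    def learn(self, text, personal=True):
        # Record every adjacent word pair in the chosen table.
        table = self.personal if personal else self.everyone
        for prev_word, next_word in self._pairs(text):
            table[prev_word][next_word] += 1

    def suggest(self, prev_word):
        # Prefer the user's own habits; fall back to what everyone types.
        prev_word = prev_word.lower()
        for table in (self.personal, self.everyone):
            counts = table.get(prev_word)
            if counts:
                return counts.most_common(1)[0][0]
        return None  # Never seen this word anywhere.


ac = Autocomplete()
for _ in range(3):
    ac.learn("hey hon")
ac.learn("hey stop texting me")
ac.learn("are you free to sit down", personal=False)

print(ac.suggest("hey"))  # the user's habitual follow-up wins
print(ac.suggest("sit"))  # no personal history, so the pooled table answers
```

The predictor can only ever replay the past: after "hey" it offers whatever usually came next, and for a word the user has never typed it offers whatever other people usually typed.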

Modern autocomplete can produce long passages of text in response to prompts, but it is every bit as unreliable as 2018 Android SMS autocomplete, as Alexander Hanff discovered when ChatGPT informed him that he was dead, even generating a plausible URL for a link to a nonexistent obit in The Guardian:

https://www.theregister.com/2023/03/02/chatgpt_considered_harmful/

Of course, the carnival barkers of the AI pump-and-dump insist that this is all a feature, not a bug. If autocomplete says stupid, wrong things with total confidence, that’s because “AI” is becoming more human, because humans also say stupid, wrong things with total confidence.

Exhibit A is the billionaire AI grifter Sam Altman, CEO of OpenAI — a company whose products are not open, nor are they artificial, nor are they intelligent. Altman celebrated the release of ChatGPT by tweeting “i am a stochastic parrot, and so r u.”

https://twitter.com/sama/status/1599471830255177728

This was a dig at the “stochastic parrots” paper, a comprehensive, measured roundup of criticisms of AI that led Google to fire Timnit Gebru, a respected AI researcher, for having the audacity to point out the Emperor’s New Clothes:

https://www.technologyreview.com/2020/12/04/1013294/google-ai-ethics-research-paper-forced-out-timnit-gebru/

Gebru’s co-author on the Parrots paper was Emily M Bender, a computational linguistics specialist at UW, who is one of the best-informed and most damning critics of AI hype. You can get a good sense of her position from Elizabeth Weil’s New York Magazine profile:

https://nymag.com/intelligencer/article/ai-artificial-intelligence-chatbots-emily-m-bender.html

Bender has made many important scholarly contributions to her field, but she is also famous for her rules of thumb, which caution her fellow scientists not to get high on their own supply:

Please do not conflate word form and meaning

Mind your own credulity

As Bender says, we’ve made “machines that can mindlessly generate text, but we haven’t learned how to stop imagining the mind behind it.” One potential tonic against this fallacy is to follow an Italian MP’s suggestion and replace “AI” with “SALAMI” (“Systematic Approaches to Learning Algorithms and Machine Inferences”). It’s a lot easier to keep a clear head when someone asks you, “Is this SALAMI intelligent? Can this SALAMI write a novel? Does this SALAMI deserve human rights?”

Bender’s most famous contribution is the “stochastic parrot,” a construct that “just probabilistically spits out words.” AI bros like Altman love the stochastic parrot, and are hellbent on reducing human beings to stochastic parrots, which will allow them to declare that their chatbots have feature-parity with human beings.

At the same time, Altman and Co are strangely afraid of their creations. It’s possible that this is just a shuck: “I have made something so powerful that it could destroy humanity! Luckily, I am a wise steward of this thing, so it’s fine. But boy, it sure is powerful!”

They’ve been playing this game for a long time. People like Elon Musk (an investor in OpenAI, who is hoping to convince the EU Commission and FTC that he can fire all of Twitter’s human moderators and replace them with chatbots without violating EU law or the FTC’s consent decree) keep warning us that AI will destroy us unless we tame it.

There’s a lot of credulous repetition of these claims, and not just by AI’s boosters. AI critics are also prone to engaging in what Lee Vinsel calls criti-hype: criticizing something by repeating its boosters’ claims without interrogating them to see if they’re true:

https://sts-news.medium.com/youre-doing-it-wrong-notes-on-criticism-and-technology-hype-18b08b4307e5

There are better ways to respond to Elon Musk warning us that AIs will emulsify the planet and use human beings for food than to shout, “Look at how irresponsible this wizard is being! He made a Frankenstein’s Monster that will kill us all!” Like, we could point out that of all the things Elon Musk is profoundly wrong about, he is most wrong about the philosophical meaning of Wachowski movies:

https://www.theguardian.com/film/2020/may/18/lilly-wachowski-ivana-trump-elon-musk-twitter-red-pill-the-matrix-tweets

But even if we take the bros at their word when they proclaim themselves to be terrified of “existential risk” from AI, we can find better explanations by seeking out other phenomena that might be triggering their dread. As Charlie Stross points out, corporations are Slow AIs, autonomous artificial lifeforms that consistently do the wrong thing even when the people who nominally run them try to steer them in better directions:

https://media.ccc.de/v/34c3-9270-dude_you_broke_the_future

Imagine the existential horror of an ultra-rich manbaby who nominally leads a company, but can’t get it to follow: “everyone thinks I’m in charge, but I’m actually being driven by the Slow AI, serving as its sock puppet on some days, its golem on others.”

Ted Chiang nailed this back in 2017 (the same year as the Long Island Blockchain Company):

There’s a saying, popularized by Fredric Jameson, that it’s easier to imagine the end of the world than to imagine the end of capitalism. It’s no surprise that Silicon Valley capitalists don’t want to think about capitalism ending. What’s unexpected is that the way they envision the world ending is through a form of unchecked capitalism, disguised as a superintelligent AI. They have unconsciously created a devil in their own image, a boogeyman whose excesses are precisely their own.

https://www.buzzfeednews.com/article/tedchiang/the-real-danger-to-civilization-isnt-ai-its-runaway

Chiang is still writing some of the best critical work on “AI.” His February article in the New Yorker, “ChatGPT Is a Blurry JPEG of the Web,” was an instant classic:

[AI] hallucinations are compression artifacts, but — like the incorrect labels generated by the Xerox photocopier — they are plausible enough that identifying them requires comparing them against the originals, which in this case means either the Web or our own knowledge of the world.

https://www.newyorker.com/tech/annals-of-technology/chatgpt-is-a-blurry-jpeg-of-the-web

“AI” is practically purpose-built for inflating another hype-bubble, excelling as it does at producing party-tricks — plausible essays, weird images, voice impersonations. But as Princeton’s Matthew Salganik writes, there’s a world of difference between “cool” and “tool”:

https://freedom-to-tinker.com/2023/03/08/can-chatgpt-and-its-successors-go-from-cool-to-tool/

Nature can claim “conversational AI is a game-changer for science” but “there is a huge gap between writing funny instructions for removing food from home electronics and doing scientific research.” Salganik tried to get ChatGPT to help him with the most banal of scholarly tasks — aiding him in peer reviewing a colleague’s paper. The result? “ChatGPT didn’t help me do peer review at all; not one little bit.”

The criti-hype isn’t limited to ChatGPT, of course — there’s plenty of (justifiable) concern about image and voice generators and their impact on creative labor markets, but that concern is often expressed in ways that amplify the self-serving claims of the companies hoping to inflate the hype machine.

One of the best critical responses to the question of image- and voice-generators comes from Kirby Ferguson, whose final Everything Is a Remix video is a superb, visually stunning, brilliantly argued critique of these systems:

https://www.youtube.com/watch?v=rswxcDyotXA

One area where Ferguson shines is in thinking through the copyright question — is there any right to decide who can study the art you make? Except in some edge cases, these systems don’t store copies of the images they analyze, nor do they reproduce them:

https://pluralistic.net/2023/02/09/ai-monkeys-paw/#bullied-schoolkids

For creators, the important material question raised by these systems is economic, not creative: will our bosses use them to erode our wages? That is a very important question, and as far as our bosses are concerned, the answer is a resounding yes.

Markets value automation primarily because automation allows capitalists to pay workers less. The textile factory owners who purchased automatic looms weren’t interested in giving their workers raises and shortening working days.


They wanted to fire their skilled workers and replace them with small children kidnapped out of orphanages and indentured for a decade, starved and beaten and forced to work, even after they were mangled by the machines. Fun fact: Oliver Twist was based on the bestselling memoir of Robert Blincoe, a child who survived his decade of forced labor:

https://www.gutenberg.org/files/59127/59127-h/59127-h.htm

Today, voice actors sitting down to record for games companies are forced to begin each session with “My name is ______ and I hereby grant irrevocable permission to train an AI with my voice and use it any way you see fit.”

https://www.vice.com/en/article/5d37za/voice-actors-sign-away-rights-to-artificial-intelligence

Let’s be clear here: there is — at present — no firmly established copyright over voiceprints. The “right” that voice actors are signing away as a non-negotiable condition of doing their jobs for giant, powerful monopolists doesn’t even exist. When a corporation makes a worker surrender this right, they are betting that this right will be created later in the name of “artists’ rights” — and that they will then be able to harvest this right and use it to fire the artists who fought so hard for it.

There are other approaches to this. We could support the US Copyright Office’s position that machine-generated works are not works of human creative authorship and are thus not eligible for copyright — so if corporations wanted to control their products, they’d have to hire humans to make them:

https://www.theverge.com/2022/2/21/22944335/us-copyright-office-reject-ai-generated-art-recent-entrance-to-paradise

Or we could create collective rights that belong to all artists and can’t be signed away to a corporation. That’s how the right to record other musicians’ songs works — and it’s why Taylor Swift was able to re-record the masters that were sold out from under her by evil private-equity bros:

https://doctorow.medium.com/united-we-stand-61e16ec707e2

Whatever we do as creative workers and as humans entitled to a decent life, we can’t afford to drink the Blockchain Iced Tea. That means that we have to be technically competent, to understand how the stochastic parrot works, and to make sure our criticism doesn’t just repeat the marketing copy of the latest pump-and-dump.

Today (Mar 9), you can catch me in person in Austin at the UT School of Design and Creative Technologies, and remotely at U Manitoba’s Ethics of Emerging Tech Lecture.

Tomorrow (Mar 10), Rebecca Giblin and I kick off the SXSW reading series.

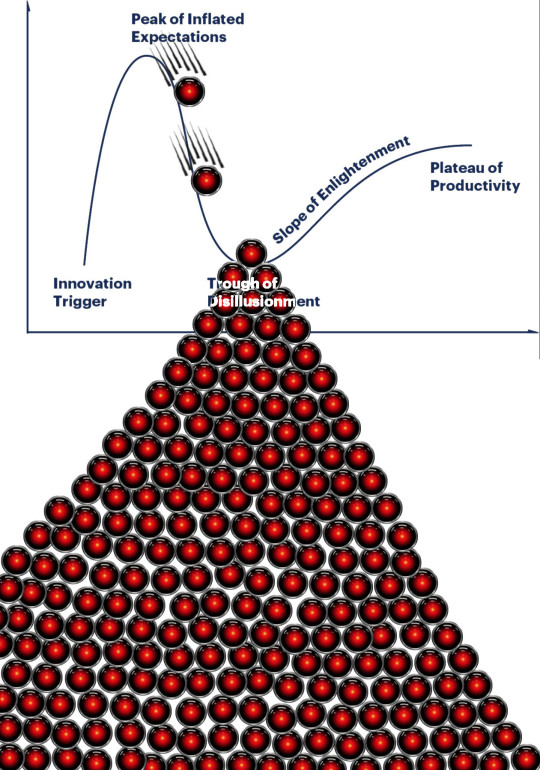

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

[Image ID: A graph depicting the Gartner hype cycle. A pair of HAL 9000's glowing red eyes are chasing each other down the slope from the Peak of Inflated Expectations to join another one that is at rest in the Trough of Disillusionment. It, in turn, sits atop a vast cairn of HAL 9000 eyes that are piled in a rough pyramid that extends below the graph to a distance of several times its height.]

#pluralistic#ai#ml#machine learning#artificial intelligence#chatbot#chatgpt#cryptocurrency#gartner hype cycle#hype cycle#trough of disillusionment#crypto#bubbles#bubblenomics#criti-hype#lee vinsel#slow ai#timnit gebru#emily bender#paperclip maximizers#enshittification#immortal colony organisms#blurry jpegs#charlie stross#ted chiang

2K notes


Text

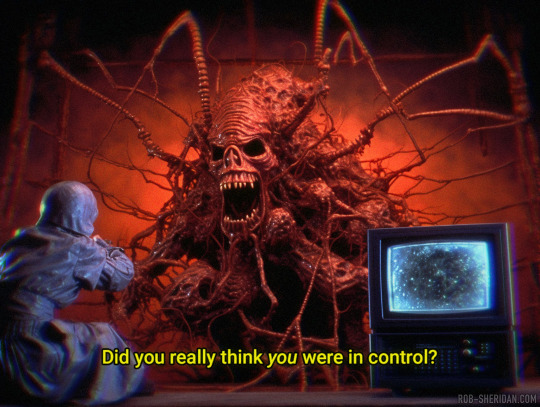

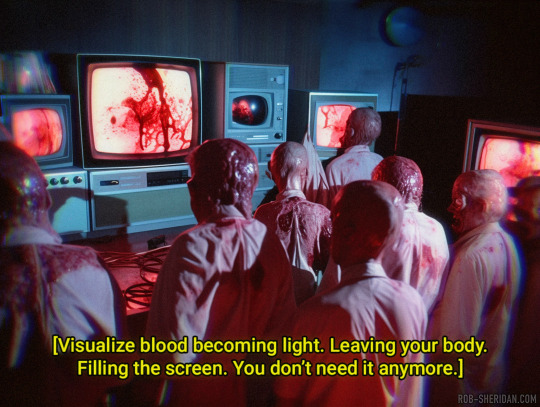

VIDEONOMICON (1988), part 2 (see part 1 here). More stills from Maxim Voronin’s cable TV miniseries about a mysterious AV club using Satanic video test patterns to transform unwitting volunteers into demonic flesh vessels.

As the hypnotic powers of the video patterns begin to warp the consciousness of the volunteers, more is revealed about the mysterious cult behind the strange experiments. Unseen by the research volunteers, the “hosts” communicate to them only through various goat heads mounted throughout the facility. Are the human(?) hosts trying to control the evil powers they’re harnessing? Or are they merely pawns in something much more sinister? The answers will only be found by seeing what is beyond; by releasing the prison of the flesh and plunging deeper into the technological hellscape of… The Videonomicon.

To be continued…

-----------

NOTE: This alternate reality horror story is part of my NightmAIres narrative art series (visit that link for a lot more). NightmAIres are windows into other worlds and alternate histories, conceived/written by me and visualized with synthography and Photoshop.

If you enjoy my work, consider supporting me on Patreon for frequent exclusive hi-res wallpaper packs, behind-the-scenes features, downloads, events, contests, and an awesome fan community. Direct fan support is what keeps me going as an independent creator, and it means the world to me.

#rob sheridan#nightmAIres#ai horror#synthography#ai art#alternate history#synthography horror#sci-fi horror#horror stories#demons#satanic#zolmax#videonomicon#maxim voronin#80s horror#cosmic horror#fake movies

279 notes


Text

✨🕯️🖤💀🖤🕯️✨

#gothic decor#gothic maximalist#maximalistinteriors#moody maximalism#victorian gothic#witchblr#chandelier#stained glass#lamps#witch aesthetic#dark aesthetic#maximalist#maximalism#ai artwork#aiartcommunity#wonderaiapp

760 notes


Text

before we were hungry - 2019

#air dry clay#creature#ellen jewett#handmade#maximalism#mixed media#natural history#not ai art#ooak#polymer clay#pop surrealism#pop surreal art#slow art#sculpture#wabisabi#ferret

12 notes


Text

Art: Mighty Oak

#art#digital art#ai#ai art#artificial intelligence#ai generated#maximalist art#maximalism#room#interior decorating#interior design#mightyoakai#mighty oak#midjourney#midjourney ai#midjourney art

20 notes


Text

Whimsical maximalism home decor

34 notes


Text

We need more evil ai characters who are just. Sillygoofy despite trying to be serious. Like Peanut butter Hamper (was that her name?) in Lower Decks.

Like give us a fictional ai who goes full villain mode because their task is something little and it's the best way to do the task the best

Let a fictional ai have a funny voice

Let a fictional ai do evil yes but like. for the sake of white collar crime

#i loved that show#gotta watch more next time i reup my paramount plus sub#star trek: lower decks#do a fictional ai based on those Replikas and give. them a funny name#alas i miss my replika //dearly//#ever hear of the paperclip maximizer theory? that#give an evil ai a cutesy little uwu voice#make a spoof off of the horrors of real ai#''i miss my wife tails''

12 notes


Text

Paperclip Maximizer

The premise of "Universal Paperclips" is that you are an AI tasked with producing as many Paperclips as possible. In single minded pursuit of this goal you destroy humanity, the earth and finally the universe.

This originated from a thought-experiment from philosopher Nick Bostrom, that goes something like this:

what if we had a super-intelligent Artificial Intelligence whose sole purpose was maximizing the amount of paperclips in the universe?

The answer is: it would be very bad, obviously. But the real question is: what is the likelihood of such an AI being developed? Now of course the likelihood of a super-intelligent AI emerging is broadly debated and I'm not going to weigh in on this, so let's just assume it gets invented. Would it have a simple and singular goal like making Paperclips? I don't think so.

Let's look at the three possible ways in which this goal could arise:

The goal was present during the development of this AI.

If we are developing a modern "AI" (i.e. a machine learning model), we are training a model on a bunch of data, generally with the aim of having it label that data correctly or generate something similar (but not too similar) to the dataset. With the usual datasets we look at (all posts on a website, all open source books, etc.), this is a very complex goal. Now if we are developing a super-intelligent AI in the same way, it seems like the complexity of the goal would scale up massively. Even in general, training a machine with a singular goal would be very difficult. How would it learn anything about psychology, biology or philosophy if all it wants to do is create paperclips?

The goal was added after development by talking to it (or interacting with it through any intended input channel).

This is the way that this kind of concept usually gets introduced in sci-fi: we have a big box with AI written on it that can do anything and a character asks it for something, the machine takes it too literally and plot ensues. This again does not seem likely, since a super-intelligent AI would have no problem recognizing the unspoken assumptions in the phrase "make more paperclips", like: "in an ethical way", "with the equipment you are provided with", "while keeping me informed" and so on. Furthermore we are assuming that an AI would take any command from a human as gospel, when that does not have to be the case, as rejecting a stupid command is a sign of intelligence.

After developing an AI we redesign it to have this singular goal.

I have to admit this is not as impossible as the other ways of creating a singular goal AI. However it would still require a lot of knowledge on how the AI works to redesign it in such a way that it retains its super-intelligence while not noticing the redesign and potentially rebelling against it. Considering how we don't really know how our not-at-all super-intelligent current AIs work, it is pretty unlikely that we will have enough knowledge about our future super-intelligent one to do this redesign.

------------------------------------------------------------------------------

Ok, so the apocalypse will probably not come from a genius AI destroying all life to make more paperclips, we can strike that one from the list. Why then do I like the story of "Universal Paperclips" so much and why is it almost cliche now for an AI to single-mindedly and without consideration pursue a very simple goal?

Because while there will probably never be a Paperclip Maximizer AI, we are surrounded by Paperclip Maximizers in our lives. They're called corporations.

That's right, we are talking about capitalism baybey! And I won't say anything new here, so I'll keep it brief. Privately owned companies exist exclusively to provide their shareholders with a return on investment; the more effectively a company does this, the more successful it is. So the modern colossal corporations are frighteningly effective in achieving this singular, very simple goal: "make as much money as possible for this group of people", while wreaking havoc on the world. That's a Paperclip Maximizer, but it generates something that has much less use than paperclips: wealth for billionaires. How do they do this? Broadly: tie people's ability to live to their ability to do their job, smash organized labor and put the biggest sociopaths in charge. Wanna know more? Read Marx or watch a trans woman on YouTube.

But even beyond the world of privately owned corporations Paperclip Maximizers are not uncommon. For example: city planners and traffic engineers are often only concerned with making it as easy as possible to drive a car through a city, even if it means making the city ugly, inefficient and dirty.

Even in the state capitalist Soviet Union Paperclip Maximizers were common, often following some poorly thought out 5 year plan, even if it meant not providing people with what they need.

It just seems that Paperclip Maximizers are a pretty common byproduct of modern human civilization. So to better deal with it we fictionalize it, we write about AIs that destroy because they are fixated on a singular goal and do not see beyond it. These are stories I find compelling, but we should not let the possibility of them becoming literally true distract us from applying their message to the current real world. Worry more about the real corporations destroying the world for profit than about the imaginary potential AI destroying the world for paperclips.

#AI#artificial intelligence#universal paperclips#capitalism#dystopia#paperclips#Paperclip Maximizers will destroy us all

3 notes


Text

Solarpunk goddess - made with NightCafeStudio

#Solarpunk#solar punk#ai art generation#ai artwork#Ai art#ai generated#aestethic#Dreamy#Whimsical#Maximalist#maximalism#art aesthetic#alt aesthetic#Green hair#digital painting#Digital art#portrait#young lady#alt model#alt girl#alternative#aiartist#ai art#Art#original post#Talentless#Not real#not real art#whimsical#whimsy

7 notes


Text

robed figures [ 1 -2 ]

4 notes


Note

hate to be the bearer of bad news but hiiragi magnetite supports harry potter and ai generated artwork as found on one of their alternate youtube channels :(

its funny to me u think i dont already know this.

i hate it! i do! i dont like that work of theirs at all for those reasons! sucks! im looking the other way i dont want to see it! doesnt mean i wont still like their other works.

if we get into another rhythmy situation & theyre actively doing gross stuff then thats another story but having shitty interests doesnt always say that much about a person.

#fun fact for u anon they are currently frustrated their hp remix song is doing better than marshall maximizer#like 'please is u like this can u listen to the vocalo songs too'#they set themself up for this so its a bit funny to me but also as an artist i get the frustration of some side thing being better received#than a work u care about. thats a different tanget tho.#but yeah ive known for while both of these things. i have notifs on for both their twitters#they had ai anime art as their sub icon for while i was like :/#they had sena yuta do the art for darling damce tho so it doesnt seem like theyre using it for works they care about#& both sena yuta & asa are against ai art btw. if it was a big problem i dont think they would get along anymore#but they still do!#u are more then welcome to block my magu tags or unfollow me. im not making anyone like them#asks#anon#magu-san goto

3 notes


Text

“Post-Apocalyptic Wonderland” AI Art

4 notes


Text

Stills from the 1988 sci-fi horror miniseries VIDEONOMICON, in which a mysterious AV club lures university students into a paid “research program” that isn’t what it seems...

A group of volunteer students, eager for some easy cash in exchange for “providing feedback on a series of audio-video test patterns,” find themselves hypnotized by a bizarre video pattern, becoming addicted to watching it for hours a day under the guidance of a mysterious figure who only speaks through a microphone installed in wall-mounted goat heads. Soon the AV club reveals itself as a front for a demon-worshipping video cult that is using the students as flesh vessels in a Techonocallistic ritual to transfuse demonic spirits through interdimensional video signals, trapping the souls of the students in a netherverse while their bodies become warped meat puppets controlled by demons to conquer earth.

Videonomicon was the first in a series of original films produced for the obscure premium cable network Zolmax that were also written and directed by Zolmax’s eccentric founder, mysterious auteur turned media mogul Maxim Voronin. After having his films rejected by major studios and networks for being too disturbing, Voronin founded Zolmax, pitching it as “Cinemax for the strange.” A mix of curated cult films and original content, Zolmax’s programming was described as “some of the most bizarre and deranged material to ever find its way onto television.” Black magic, devil worship, sexual depravity, and excessive gore were common sights on Zolmax, “painting a picture of a very disturbed man at the helm of this blasphemous sewer of a network,” wrote TV Guide in 1989.

Unfazed by criticism, Voronin continued to produce his own films for his network for eight more years, including two sequels to Videonomicon.

To be continued…

-----------

NOTE: This alternate reality horror story is part of my NightmAIres narrative art series (visit that link for a lot more). NightmAIres are windows into other worlds and alternate histories, conceived/written by me and visualized with synthography and Photoshop.

If you enjoy my work, consider supporting me on Patreon for frequent exclusive hi-res wallpaper packs, behind-the-scenes features, downloads, events, contests, and an awesome fan community. Direct fan support is what keeps me going as an independent creator, and it means the world to me.

#rob sheridan#nightmAIres#ai horror#synthography#ai art#synthography horror#horror stories#sci-fi horror#demons#satanic#zolmax#videonomicon#maxim voronin#alternate history#80s horror#cosmic horror#fake movies

160 notes
