#Laboratory Automation

Text

Laboratory Automation Market Size | Forecast Period | Share & Size (2035)

The global lab automation market was estimated at USD 5.2 billion in 2022 and is projected to grow at a compound annual growth rate (CAGR) of 13%. The Roots Analysis report features an extensive study of the current market landscape and the future potential of the lab automation market over the next 12 years. Get a detailed insights report now!
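As a rough illustration of what a 13% CAGR implies, the sketch below compounds the 2022 figure forward. It assumes the rate stays constant every year, which real markets rarely do. The function name `project_market_size` and the sample years are illustrative choices, and the printed values are plain arithmetic rather than figures from the report.

```python
def project_market_size(base_usd_bn: float, cagr: float, years: int) -> float:
    """Compound a base value forward: base * (1 + cagr) ** years."""
    if years < 0:
        raise ValueError("years must be non-negative")
    return base_usd_bn * (1.0 + cagr) ** years


# Assumption: a constant 13% rate applied to the 2022 estimate of USD 5.2 billion.
BASE_YEAR = 2022
for year in (2025, 2030, 2035):
    size = project_market_size(5.2, 0.13, year - BASE_YEAR)
    print(f"{year}: ~USD {size:.1f} billion")
```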

0 notes

Text

Whispering secret data.

#lab#machine#automation#robotics#cyberpunk#retro#scifi#stuck#laboratory#farm#android#cyborg#data#secret#whisper#illustration#drawing#digitalartwork#digitaldrawing#digitalart#digitalillustration#90s#cables#machinelearning#connection#ring#runner#net#flesh

4K notes


Text

Optimizing Your Scientific Workflow With Data And Informatics Consulting

Do you want to optimize your scientific workflow? It can be a daunting task, but with the help of data and informatics consulting, it doesn't have to be. Data and informatics consulting provides the expertise and experience you need to ensure your research is as efficient and effective as possible. In this article, we'll explore how data and informatics consulting can help streamline your scientific workflow.

Data and informatics consulting involves using technology to analyze data quickly and accurately. With the right tools, consultants can identify patterns in large datasets that might otherwise go unnoticed. This means they can spot potential problems before they become major issues, saving time and money in the process. Furthermore, these professionals have an in-depth understanding of how different systems interact with one another, allowing them to develop optimal solutions for complex processes.

Finally, data and informatics consulting can provide invaluable insights into ways to improve your scientific workflow. From identifying areas where efficiency improvements can be made to finding new ways to save time on complex tasks, these professionals are essential for any research team looking to streamline their operations. So don't hesitate - leverage the power of data and informatics consulting today!

Definition Of Scientific Workflow

A scientific workflow combines data and techniques to create new knowledge. It is an iterative process that starts with a research question, moves through data collection and analysis, and ends with the presentation of findings. Each step of the workflow requires careful planning and execution.

Data management is integral to scientific workflows. Data must be collected, organized, analyzed, stored, and shared in order for meaningful results to be obtained. This means that efficient data management practices must be used throughout the workflow process. In addition, data must be kept secure and protected from unauthorized access or manipulation.

Informatics consulting can help optimize a scientific workflow by providing expertise in data management, analysis techniques, software tools, and more. With the right team of experts on board, scientists can streamline their workflows and gain insights faster than ever before.

Benefits Of Optimizing It

Now that we have a clear understanding of what scientific workflow is, let's explore the advantages of optimizing it.

Firstly, optimizing your scientific workflow can help you save time. This is because data and informatics consulting services can help you simplify complex processes and increase the accuracy of analysis results. By having an efficient workflow, scientists are able to quickly identify trends in their data and generate actionable insights. Furthermore, they can automate tedious tasks that would otherwise take up valuable time and resources. This allows them to focus more on important projects and research goals.

Secondly, it helps to improve collaboration among scientists. Data and informatics consulting services allow teams to share information easily and securely, which increases the efficiency of communication between members. Additionally, it provides teams with a better way to track progress on their projects since everyone is working off the same platform. All this leads to greater engagement among team members, leading to better results overall.

Finally, it also helps reduce errors in data processing. Data and informatics consulting services provide access to advanced tools that make it easier for scientists to spot discrepancies or inaccuracies in their data sets before making decisions based on them. This ensures that all data used for analysis is accurate and reliable, leading to improved outcomes from experiments or studies. Ultimately, by using these services, scientists can be confident in the accuracy of their findings while also reducing the risk of mistakes being made due to inaccurate data processing.

The Role Of Data And Informatics Consulting

Data and informatics consulting can play an invaluable role in optimizing scientific workflows. Their expertise can help streamline processes, leading to greater efficiency, accuracy, and effectiveness. Firms specializing in this field have the experience and understanding of the latest technology to ensure that scientists are able to utilize their data effectively.

They can advise on a range of issues from data collection to storage, analysis, visualization, and reporting. Additionally, they provide guidance for the implementation of analytics tools such as machine learning and artificial intelligence. Ultimately, this helps scientists make more informed decisions based on deeper insights into their data sets.

Data and informatics consulting firms are also well-positioned to create custom solutions tailored to an organization's specific needs. By leveraging their knowledge of data science methods and technologies, these consultants are able to develop innovative solutions that enable organizations to maximize their data’s potential. This allows scientific teams to focus on research objectives rather than dealing with tedious technical tasks related to managing data.

The right data and informatics consulting partner can be a powerful ally in optimizing workflows for any scientific organization or team. With their help, organizations can improve their efficiency while gaining deeper insight into their datasets and making better decisions based on this information.

Analyzing Your Current Workflows

Now that you understand the role of data and informatics consulting in optimizing your scientific workflow, it's time to analyze your current workflows. Understanding how you currently approach research and which processes are working is key to seeing where improvements can be made.

The first step in analyzing your current workflows is to document all the steps involved in each project. This will help identify which steps are crucial to a successful outcome and which could use some optimization. After documenting the process, assess how much time each step takes and look for any redundancies or areas where processes can be streamlined. Once you have identified those areas, determine if automation could save time and improve productivity.
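The documentation step described above can be sketched as a small table of steps and durations. This is a minimal illustration, not a prescribed method: the step names and hours are made-up placeholders, and the `manual` flag and the 20% threshold are assumed ways of marking automation candidates.

```python
from dataclasses import dataclass


@dataclass
class Step:
    name: str
    hours: float
    manual: bool  # True if a person performs the step by hand


def rank_bottlenecks(steps: list[Step]) -> list[tuple[str, float]]:
    """Return (step name, share of total time) pairs, largest share first."""
    total = sum(s.hours for s in steps)
    if total <= 0:
        raise ValueError("total duration must be positive")
    shares = [(s.name, s.hours / total) for s in steps]
    return sorted(shares, key=lambda pair: pair[1], reverse=True)


def automation_candidates(steps: list[Step], min_share: float = 0.2) -> list[str]:
    """Manual steps that take at least `min_share` of the total time."""
    total = sum(s.hours for s in steps)
    if total <= 0:
        raise ValueError("total duration must be positive")
    return [s.name for s in steps if s.manual and s.hours / total >= min_share]


# Hypothetical workflow; replace with the steps documented for a real project.
workflow = [
    Step("sample prep", 3.0, True),
    Step("instrument run", 2.0, False),
    Step("data entry", 4.0, True),
    Step("analysis", 1.0, False),
]

for name, share in rank_bottlenecks(workflow):
    print(f"{name}: {share:.0%} of total time")
print("Consider automating:", automation_candidates(workflow))
```

Ranking by share of total time keeps the focus on the steps where streamlining pays off most, rather than on steps that are merely annoying.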

Finally, evaluate how secure your existing systems are by looking at access controls and data security protocols. With this information, you'll be able to create a plan for improving your overall efficiency while still maintaining important security measures.

Identifying Opportunities For Improvement

The first step in optimizing your scientific workflow with data and informatics consulting is to identify opportunities for improvement. To do this, you must analyze your current methods and processes, create an inventory of key tools and technologies, and assess the effectiveness of each component.

When evaluating your existing workflow, look for areas where processes are inefficient or outdated. Are there steps that can be automated? Is there a need to integrate additional software solutions or new data sources? Are there any manual processes that could be streamlined? Make a list of any issues that need to be addressed and prioritize them according to their importance.

Once you have identified potential improvements, it’s time to start implementing solutions. Develop a plan for making changes, such as introducing new technologies or streamlining existing processes. Outline the resources required for each step and set realistic timelines for completion. By taking the time to identify opportunities for improvement and develop an actionable plan, you can ensure your scientific workflow is optimized for success.

Automating Processes

Having identified where improvements can be made, the next step is to consider how to automate processes in order to optimize your scientific workflow. Automation has the potential to reduce cost and time while increasing efficiency and accuracy.

To begin automating processes, it is important to understand what types of tasks are best suited for automation. Tasks that involve repetitive processes, such as data entry or data analysis, are good candidates for automation as they require minimal human intervention. Additionally, tasks that involve complex calculations or other computational processes may also be suitable for automation.

Once tasks have been identified, the next step is to determine which tools and technologies can help automate these processes. Data and informatics consulting can assist in this process by providing insights into the most effective technologies for automating specific tasks. These insights can include recommendations on software solutions and hardware platforms, as well as advice on how to configure systems for optimal performance. Automating processes with the right tools can ultimately lead to significant improvements in efficiency and cost savings.

Utilizing Cloud-Based Solutions

Cloud-based solutions are becoming increasingly popular for managing data and informatics in the scientific workflow. They offer a range of advantages that can help optimize the entire process. Firstly, cloud-based solutions offer a secure storage platform that is easily accessible from any device connected to the internet. They also reduce the need for expensive hardware and IT support, as cloud-based solutions are typically priced on a monthly subscription basis and scale with your needs. Finally, they provide an easy way to share data securely with collaborators, which simplifies collaboration within research teams.

Data and informatics consulting can help organizations make the most of cloud-based solutions by providing expertise in areas such as setting up cloud infrastructure, migrating existing data, and implementing security measures to protect sensitive information. This kind of consulting is especially useful for companies looking to transition from traditional computing models to more modern methods of data management. Additionally, it can help streamline processes and ensure maximum efficiency for scientific workflows.

Cloud-based solutions provide many benefits which can be used to improve scientific workflow processes. Through careful planning and expert consultation, organizations can take advantage of these tools to maximize their productivity and achieve their goals more quickly and efficiently.

Leveraging Big Data Analytics

Data and informatics consulting can help streamline your workflow by leveraging big data analytics. This type of consultative approach can be used to identify trends, assess risks, and plan for more effective outcomes. With big data analytics, you get the insights you need to make decisions that will have an impact on the success of your scientific research.

The first step in leveraging big data analytics is to gather relevant data from multiple sources. This could include records from online databases, surveys, questionnaires, interviews and much more. Once this information is collected, it must be analyzed to uncover patterns or correlations that may exist between different factors. Using advanced algorithms and statistical methods, consultants can discover correlations between variables and gain insight into the underlying causes of certain phenomena.

This analysis of large datasets can enable researchers to determine which strategies are most effective in their research efforts. By combining this knowledge with predictive modeling techniques, scientists can develop strategies that maximize efficiency while minimizing the cost and effort invested in their projects. In addition, big data analytics provides detailed visualizations that allow researchers to monitor changes in their research over time and gain a deeper understanding of the dynamics at play within their studies.

System Testing And Validation

Having explored the potential of big data analytics, it's time to turn our attention to system testing and validation. To ensure that systems are functioning properly and producing accurate results, they must be tested extensively and verified with rigorous standards. This requires developing a comprehensive test plan that addresses all aspects of the system's functionality.

Systems should be tested in multiple environments, including development, staging, and production. Testing should include both functional and non-functional tests such as performance, scalability, security, reliability, usability, and compatibility tests. Additionally, automated tests can be used to supplement manual tests and identify any issues with system performance or data accuracy.
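Automated checks of the kind mentioned above can start very small. The sketch below uses Python's built-in `unittest` module to test a single data-accuracy rule. The function `validate_reading` and its default 0 to 14 range (a pH-style limit) are hypothetical stand-ins for whatever rules a real system enforces.

```python
import unittest


def validate_reading(value: float, low: float = 0.0, high: float = 14.0) -> bool:
    """Return True if a reading lies within the accepted range (inclusive)."""
    return low <= value <= high


class ValidateReadingTest(unittest.TestCase):
    def test_accepts_value_in_range(self):
        self.assertTrue(validate_reading(7.0))

    def test_accepts_boundaries(self):
        self.assertTrue(validate_reading(0.0))
        self.assertTrue(validate_reading(14.0))

    def test_rejects_out_of_range(self):
        self.assertFalse(validate_reading(-0.1))
        self.assertFalse(validate_reading(14.5))


# Run the suite programmatically so the result can be inspected afterwards.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ValidateReadingTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
print("all tests passed:", result.wasSuccessful())
```

The same suite can be run in each environment (development, staging, production) so that a failure points to an environment difference rather than a code change.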

Once testing is complete and the system is validated for proper functioning, it can be deployed for use. This process helps to ensure that systems are reliable and trustworthy before being put into active use. If done correctly, it can optimize your workflow by reducing the risk of unforeseen errors or inaccuracies during production runs.

Continuous Monitoring And Maintenance

Continuous monitoring and maintenance of data and informatics systems is essential to ensure their efficient operation. Keeping these systems up-to-date with the latest software, hardware, and security patches can prevent costly issues down the road. There are a few steps that need to be taken to ensure that your scientific workflow is properly maintained.

First, data should be regularly backed up in order to protect it from potential loss or corruption. Regular backups also make it easier to restore data if any issues arise. Second, access control systems should be monitored closely so that users only have access to the information they need. Finally, system health checks should be conducted on a regular basis to identify any potential issues that could affect performance.
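A backup is only useful if the copy is intact, so the first step above is often paired with a verification pass. The following is a minimal sketch using only the Python standard library. The helper names `sha256_of` and `backup_and_verify`, the file name, and the directory layout are assumptions for illustration, not part of any particular backup product.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large data files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_and_verify(source: Path, backup_dir: Path) -> bool:
    """Copy `source` into `backup_dir` and confirm both files share a SHA-256 digest."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / source.name
    shutil.copy2(source, target)
    return sha256_of(source) == sha256_of(target)


# Demonstration with a throwaway file in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    data_file = Path(tmp) / "results.csv"
    data_file.write_text("sample_id,value\nS1,7.2\n")
    ok = backup_and_verify(data_file, Path(tmp) / "backup")
    print("backup verified:", ok)
```

Comparing digests catches silent corruption during the copy. It does not replace periodic restore tests, which confirm that the backup can actually be used.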

When done correctly, these steps can help guarantee smooth operation of data and informatics systems in your scientific workflow. Regular monitoring and maintenance also provides peace of mind knowing that your data is secure and performing optimally.

Conclusion

In conclusion, optimizing your scientific workflow with data and informatics consulting can be a great asset to any organization. It helps you identify areas for improvement and make necessary changes to keep up with the ever-changing field of science. By utilizing cloud-based solutions, leveraging big data analytics, conducting system testing and validation, and continuously monitoring and maintaining these systems, you can ensure that your scientific workflow is effective and efficient. With the help of an experienced data and informatics consultant, you can create a streamlined workflow that will save time, money, and energy while producing top-notch results. So if you're looking to get the most out of your scientific workflows, don't hesitate to contact a data and informatics consultant today!

#Scientific workflow#Data management#Informatics consulting#Research efficiency#Data analysis#Data visualization#Research collaboration#Data security#Laboratory automation#Data integration#Scientific reproducibility#Cloud computing#High-performance computing#Machine learning#Research data governance.

0 notes

Text

Optimizing Lab Operations with Scientific Informatics Managed Services

In today's world, laboratories have become an essential part of various industries, including healthcare, pharmaceuticals, biotech, and environmental sciences. The growth of these industries has resulted in an increase in the number of laboratories, making lab operations management more challenging than ever before. It is crucial to optimize lab operations to ensure accuracy, safety, and efficiency in research and development. One way to do this is by leveraging scientific informatics managed services. In this article, we will discuss how scientific informatics managed services can help optimize lab operations and improve the overall efficiency of laboratories.

I. Introduction

Laboratory operations have become increasingly complex due to advances in technology and the growing need for accurate data in various industries. The traditional approach to lab operations management, which involved manual processes and spreadsheets, is no longer effective. This has resulted in an increasing demand for scientific informatics managed services. These services use technology to automate and optimize lab operations, resulting in improved efficiency, accuracy, and safety.

II. What are Scientific Informatics Managed Services?

Scientific informatics managed services are a suite of software tools and services designed to automate and optimize laboratory operations. They include laboratory information management systems (LIMS), electronic lab notebooks (ELN), laboratory execution systems (LES), scientific data management systems (SDMS), and many more. These tools and services provide an integrated approach to lab operations management, resulting in improved efficiency, accuracy, and safety.

III. Benefits of Scientific Informatics Managed Services

Scientific informatics managed services offer several benefits to laboratories, including:

A. Improved Efficiency

Scientific informatics managed services automate several manual processes, resulting in improved efficiency. These services provide an integrated approach to lab operations management, resulting in improved productivity, reduced turnaround times, and better resource utilization.

B. Increased Accuracy

Scientific informatics managed services eliminate errors that occur due to manual processes, resulting in increased accuracy. These services ensure data integrity and traceability, resulting in high-quality data that can be trusted for decision-making.

C. Enhanced Safety

Scientific informatics managed services ensure compliance with regulatory requirements, resulting in enhanced safety. These services provide electronic record-keeping and audit trails, ensuring that all actions are traceable and accountable.

D. Cost-Effective

Scientific informatics managed services offer a cost-effective approach to lab operations management. They eliminate the need for manual processes, reducing labor costs, and improving resource utilization.

IV. How Scientific Informatics Managed Services Optimize Lab Operations

Scientific informatics managed services optimize lab operations in several ways, including:

A. Sample Management

Sample management is a critical aspect of lab operations, and scientific informatics managed services automate this process. These services provide an integrated approach to sample management, from sample registration to result reporting, resulting in improved efficiency, accuracy, and traceability.

B. Workflow Management

Scientific informatics managed services provide an integrated approach to workflow management, resulting in improved efficiency, accuracy, and traceability. These services automate several manual processes, including task scheduling, execution, and reporting, resulting in improved productivity and reduced turnaround times.

C. Data Management

Data management is a crucial aspect of lab operations, and scientific informatics managed services provide an integrated approach to data management. These services ensure data integrity and traceability, resulting in high-quality data that can be trusted for decision-making.

#Laboratory Informatics#Data Management#Scientific Data Analytics#Laboratory Automation#Laboratory Information Management System (LIMS)#Laboratory Workflow Optimization#Scientific Software#Scientific Data Integration#Scientific Data Visualization#Digital Transformation#Quality Control#Compliance Management#Scientific Research Management#Cloud Computing#Artificial Intelligence (AI)

0 notes

Text

Goretober #8 - Flesh & Steel

#dead philosophy's goretober 2023#goretober 2023#goretober#halloween#automation#employee of the month#laboratory equipment#surgery#october art challenge#october art prompts#art prompt#drawtober#dark art#horror art#dark illustration#traditional art#traditional drawing#traditional painting#traditional illustration#artists on tumblr#illustrators on tumblr#support human artists#goodnight everyone

34 notes


Video

youtube

Here’s music! Dexter’s laboratory song can you believe it!

#dexter's lab#dexter's laboratory#music#i know how to sample now. And pitch bend. And use automation tracks#i have too much power

10 notes


Text

The latest report by Precision Business Insights, titled "Automated Laboratory Centrifuge Market," covers complete information on market size, share, growth, trends, segment analysis, key players, drivers, and restraints.

0 notes

Text

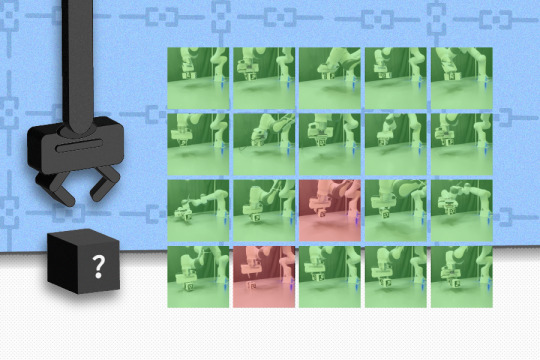

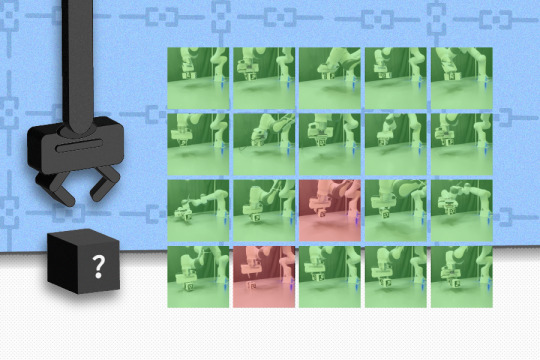

Helping robots grasp the unpredictable

New Post has been published on https://thedigitalinsider.com/helping-robots-grasp-the-unpredictable/

When robots come across unfamiliar objects, they struggle to account for a simple truth: Appearances aren’t everything. They may attempt to grasp a block, only to find out it’s a literal piece of cake. The misleading appearance of that object could lead the robot to miscalculate physical properties like the object’s weight and center of mass, using the wrong grasp and applying more force than needed.

To see through this illusion, MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) researchers designed the Grasping Neural Process, a predictive physics model capable of inferring these hidden traits in real time for more intelligent robotic grasping. Based on limited interaction data, their deep-learning system can assist robots in domains like warehouses and households at a fraction of the computational cost of previous algorithmic and statistical models.

The Grasping Neural Process is trained to infer invisible physical properties from a history of attempted grasps, and uses the inferred properties to guess which grasps would work well in the future. Prior models often identified robot grasps from visual data alone.

Typically, methods that infer physical properties build on traditional statistical methods that require many known grasps and a great amount of computation time to work well. The Grasping Neural Process enables these machines to execute good grasps more efficiently by using far less interaction data, and it finishes its computation in less than a tenth of a second, as opposed to the seconds (or minutes) required by traditional methods.

The researchers note that the Grasping Neural Process thrives in unstructured environments like homes and warehouses, since both house a plethora of unpredictable objects. For example, a robot powered by the MIT model could quickly learn how to handle tightly packed boxes with different food quantities without seeing the inside of the box, and then place them where needed. At a fulfillment center, objects with different physical properties and geometries would be placed in the corresponding box to be shipped out to customers.

Trained on 1,000 unique geometries and 5,000 objects, the Grasping Neural Process achieved stable grasps in simulation for novel 3D objects generated in the ShapeNet repository. Then, the CSAIL-led group tested their model in the physical world via two weighted blocks, where their work outperformed a baseline that only considered object geometries. Limited to 10 experimental grasps beforehand, the robotic arm successfully picked up the boxes on 18 and 19 out of 20 attempts apiece, while the machine only yielded eight and 15 stable grasps when unprepared.

While less theatrical than an actor, robots that complete inference tasks also have a three-part act to follow: training, adaptation, and testing. During the training step, robots practice on a fixed set of objects and learn how to infer physical properties from a history of successful (or unsuccessful) grasps. The new CSAIL model amortizes the inference of the objects’ physics, meaning it trains a neural network to learn to predict the output of an otherwise expensive statistical algorithm. Only a single pass through a neural network with limited interaction data is needed to simulate and predict which grasps work best on different objects.

Then, the robot is introduced to an unfamiliar object during the adaptation phase. During this step, the Grasping Neural Process helps a robot experiment and update its position accordingly, understanding which grips would work best. This tinkering phase prepares the machine for the final step: testing, where the robot formally executes a task on an item with a new understanding of its properties.

“As an engineer, it’s unwise to assume a robot knows all the necessary information it needs to grasp successfully,” says lead author Michael Noseworthy, an MIT PhD student in electrical engineering and computer science (EECS) and CSAIL affiliate. “Without humans labeling the properties of an object, robots have traditionally needed to use a costly inference process.” According to fellow lead author, EECS PhD student, and CSAIL affiliate Seiji Shaw, their Grasping Neural Process could be a streamlined alternative: “Our model helps robots do this much more efficiently, enabling the robot to imagine which grasps will inform the best result.”

“To get robots out of controlled spaces like the lab or warehouse and into the real world, they must be better at dealing with the unknown and less likely to fail at the slightest variation from their programming. This work is a critical step toward realizing the full transformative potential of robotics,” says Chad Kessens, an autonomous robotics researcher at the U.S. Army’s DEVCOM Army Research Laboratory, which sponsored the work.

While their model can help a robot infer hidden static properties efficiently, the researchers would like to augment the system to adjust grasps in real time for multiple tasks and objects with dynamic traits. They envision their work eventually assisting with several tasks in a long-horizon plan, like picking up a carrot and chopping it. Moreover, their model could adapt to changes in mass distributions in less static objects, like when you fill up an empty bottle.

Joining the researchers on the paper is Nicholas Roy, MIT professor of aeronautics and astronautics and CSAIL member, who is a senior author. The group recently presented this work at the IEEE International Conference on Robotics and Automation.

#000#3d#3d objects#Aeronautical and astronautical engineering#aeronautics#affiliate#algorithm#arm#artificial#Artificial Intelligence#automation#box#Cake#computation#computer#Computer Science#Computer Science and Artificial Intelligence Laboratory (CSAIL)#Computer science and technology#conference#data#domains#Electrical Engineering&Computer Science (eecs)#Engineer#engineering#experimental#Food#Fraction#Full#Future#History

0 notes

Text

Lab Analyzers Interface Solutions: Enhancing Efficiency and Accuracy

In the ever-evolving landscape of laboratory operations, lab analyzers interfacing plays a crucial role in streamlining workflows and ensuring accuracy. As laboratories seek to optimize their processes, system integration, laboratory efficiency, instrument interface, analytical instruments, and automated reporting emerge as key components of successful interface solutions.

System integration is essential for seamlessly connecting various laboratory instruments and systems, allowing for efficient data exchange and workflow automation. By integrating disparate systems, laboratories can eliminate manual data entry errors, reduce turnaround times, and improve overall efficiency.

Achieving laboratory efficiency requires optimizing every aspect of laboratory operations, from sample processing to result reporting. Through effective analyzers interfacing, laboratories can automate repetitive tasks, minimize downtime, and allocate resources more effectively, ultimately enhancing productivity and reducing costs.

The instrument interface is a critical component of lab analyzers interfacing, facilitating communication between analytical instruments and laboratory information systems (LIS). By implementing standardized interfaces, laboratories can ensure compatibility between instruments, streamline data transfer, and enhance interoperability.

Advanced analytical instruments offer enhanced capabilities for laboratory testing, allowing for more accurate and precise analysis of patient samples. Leveraging the latest analytical technologies, laboratories can improve diagnostic accuracy, expand testing capabilities, and deliver better patient care.

Automated reporting capabilities streamline the generation and delivery of test results, ensuring timely access to critical information for healthcare providers. By automating reporting processes, laboratories can reduce turnaround times, improve result accuracy, and enhance communication with clinicians and patients.

In conclusion, lab analyzers interface solutions play a vital role in enhancing efficiency and accuracy in laboratory operations. Through system integration, laboratory efficiency, instrument interface, analytical instruments, and automated reporting, laboratories can optimize workflows, improve diagnostic capabilities, and ultimately deliver better patient care.

#System Integration#Laboratory Efficiency#Instrument Interface#Analytical Instruments#Automated Reporting

1 note



Text

Powering Discovery: The Growing Laboratory Equipment Market

The Laboratory Equipment Market: Powering Scientific Discovery

The laboratory equipment market plays a critical role in scientific research and development across various disciplines. This market encompasses a wide range of instruments and consumables used in laboratories for tasks like analysis, testing, and manipulation of materials. The laboratory equipment market is experiencing steady…


0 notes

Text

Laboratory Automation Forecast Period: Key Drivers, Challenges, and Opportunities (2023-2035)

The global lab automation market was estimated at USD 5.2 billion in 2022 and is projected to grow at a compound annual growth rate (CAGR) of 13%. The Roots Analysis report features an extensive study of the current market landscape and the future potential of the lab automation market over the next 12 years. Get a detailed insights report now!

0 notes

Text

Bit (II)

#lab#illustration#digital painting#digital art#digital illustration#cyberpunk#laboratory#retro#90s#automation#close#synthetic#robotics#scan#cables#mirror#monitor#cyborg#android#body#partial#artists on tumblr#micro#animation#heartbeat#visceral

666 notes


Text

Implementing LabVIEW Automation In Clinical Laboratory

Implementing LabVIEW automation in clinical laboratory settings has changed the way medical tests and analyses are conducted. LabVIEW offers a wide array of tools and functionalities that enable laboratories to automate processes and enhance efficiency. This article delves into the significance of LabVIEW in clinical laboratories, exploring its benefits, key components, practical applications, and best practices.

0 notes

Text

Introducing #OnePCR, your ultimate solution for highly sensitive absolute detection needs. OnePCR is a fully automated #detection system in a single assay, featuring innovative extraction cassettes and #PCR reaction cartridges. Get quick results with the most powerful #POCT system, reducing hands-on time. It is designed to be user-friendly, compact, and convenient, making it suitable for various #laboratory settings. Experience #seamless operation with minimal manual intervention, as samples are directed straight to fluorescence detection, enhancing efficiency and #accuracy.

To learn more about this POCT system, contact us at [email protected] or call us at +91 8800821778.

#pointofcare #poc #rtpcr #detection #ivd #powerful #automation #extraction #g2m #diagnostic #pcr #product #launch #news #rapid

#g2m#genes2me#poct#poc#point of care#semless#laboratory#detection solution#ivd#powerful#automation#extraction system#health care#health

0 notes

Text

Clinical Chemistry Analyzer

A clinical chemistry analyzer is a vital piece of medical laboratory equipment used to analyze various chemical components within bodily fluids such as blood, serum, plasma, or urine. Simultaneous dual-wavelength measuring

#Biochemical Analysis#Laboratory Equipment#Diagnostic Testing#Detection Technology#Automated Testing

0 notes