#DeepFake Technology

Text

LinkedIn co-founder Reid Hoffman deepfakes himself in an interview; video of the AI technology goes viral

AI Technology: The use of artificial intelligence is growing rapidly around the world, including in India. The technology has many advantages and disadvantages. On the downside, deepfake content created with AI has caused considerable harm to people over the last few months. Deepfake is a technology in which, with the help of AI, a fake version of a real…

#AI#AI chatbot#AI features#cofounder#Deepfake dangers#Deepfake technology#Deepfakes#Ethics of deepfakes#GPT-4#Hoffman#interview#LinkedIn#LinkedIn co-founder deepfake#Misinformation and deepfakes#Reid#Reid AI#Reid AI conversation#Reid Hoffman Deepfake#Reid Hoffman interviews himself#Technology#Use of deepfakes#video#viral

2 notes

Text

Navigating Ethical Concerns Around Using AI to Clone Voices for Political Messaging

The use of artificial intelligence (AI) for voice cloning in political messaging is a complex and nuanced issue that raises significant ethical concerns. As technology continues to advance, it’s crucial to understand the implications and navigate these challenges responsibly.

Understanding AI Voice Cloning in…

0 notes

Text

Why Deepfakes Are Dangerous and How to Identify Them

Spotting deepfakes requires a keen eye and an understanding of the telltale signs. Unnatural facial expressions, awkward body movements, and inconsistencies in coloring or alignment can betray the artificial nature of manipulated media. A lack of emotion or unusual eye movements may also indicate a deepfake. You can check whether a video is real by looking at news from reliable sources and searching for similar images online. Both steps can help you find alterations or defects in an AI-generated video.

0 notes

Text

youtube

This video is all about the dangers of deepfake technology. In short, deepfake technology is a type of AI that is able to generate realistic, fake images of people. This technology has the potential to be used for a wide variety of nefarious purposes, from porn to political manipulation.

Deepfake technology has emerged as a significant concern in the digital age, raising alarm about its potential dangers and the need for effective detection methods. Deepfakes refer to manipulated or synthesized media content, such as images, videos, or audio recordings, that convincingly replicate real people saying or doing things they never did. While deepfakes can have legitimate applications in entertainment and creative fields, their malicious use poses serious threats to individuals, organizations, and society as a whole.

The dangers of deepfakes are not widely known, and this itself poses a threat. There is no guarantee that what you see online is real, and deepfakes have narrowed the gap between fake and real content. Although the technology can be used to create innovative entertainment projects, it is also being heavily misused by cybercriminals. If law enforcement does not monitor the technology properly, things will likely get out of hand quickly.

Deepfakes can be used to spread false information, which can have severe consequences for public opinion, political discourse, and trust in institutions. A realistic deepfake video of a public figure could be used to disseminate fabricated statements or actions, leading to confusion and the potential for societal unrest.

Cybercriminals can exploit deepfake technology for financial gain. By impersonating someone's voice or face, scammers could trick individuals into divulging sensitive information or making fraudulent transactions, or convince them that they are communicating with a trusted source.

Deepfakes have the potential to disrupt democratic processes by distorting the truth during elections or important political events. Fake videos of candidates making controversial statements could sway public opinion or incite conflict.

The Dangers of Deepfake Technology and How to Spot Them

#the dangers of deepfake technology and how to spot them#the dangers of deepfake technology#deepfake technology#deepfake#artificial intelligence#deep fake#LimitLess Tech 888#how are deepfakes dangerous#the dangers of deepfakes#how to spot a deepfake#deepfakes#deepfake explained#what is a deepfake#dangers of deepfake#deepfake video#effects of deepfakes#how deepfakes work#deepfakes explained 2023#deepfake dangers#deepfake ai technology#Youtube

0 notes

Text

What Is Deepfake

Found a good article about deepfakes. It mainly covers:

An overview of Deepfakes

Deepfake technology development

How to make deepfake content

The pros and cons of deepfakes

Read this article: What Is Deepfake

1 note

Text

New Post has been published on https://www.lokapriya.com/deepfake-technology-the-potential-risks-of-future-part-i/

DeepFake Technology: The Potential Risks of Future! Part I

Imagine you are watching a video of your favorite celebrity giving a speech. You are impressed by their eloquence and charisma, and you agree with their message. But then you find out that the video was not real. It was a deepfake: synthetic media created by AI (artificial intelligence) that can manipulate the appearance and voice of anyone. You feel deceived and confused.

This is no longer a hypothetical scenario; it is real. Deepfakes of prominent actors, celebrities, politicians, and influencers are circulating on the internet, including deepfakes of film actors such as Tom Cruise and Keanu Reeves on TikTok. Even a deepfake video of Indian PM Narendra Modi has been made.

In simple terms, Deepfakes are AI-generated videos and images that can alter or fabricate the reality of people, events, and objects. This technology is a type of artificial intelligence that can create or manipulate images, videos, and audio that look and sound realistic but are not authentic. Deepfake technology is becoming more sophisticated and accessible every day. It can be used for various purposes, such as in entertainment, education, research, or art. However, it can also pose serious risks to individuals and society, such as spreading misinformation, violating privacy, damaging reputation, impersonating identity, and influencing public opinion.

In this article, I will be exploring the dangers of deep fake technology and how we can protect ourselves from its potential harm.

How is Deepfake Technology a Potential Threat to Society?

Deepfake technology is a potential threat to society because it can:

Spread misinformation and fake news that can influence public opinion, undermine democracy, and cause social unrest.

Violate privacy and consent by using personal data without permission, and creating image-based sexual abuse, blackmail, or harassment.

Damage reputation and credibility by impersonating or defaming individuals, organizations, or brands.

Create security risks by enabling identity theft, fraud, or cyber attacks.

Deepfake technology can also erode trust and confidence in the digital ecosystem, making it harder to verify the authenticity and source of information.

The Dangers and Negative Uses of Deepfake Technology

As much as there may be some positives to deepfake technology, the negatives easily overwhelm the positives in our growing society. Some of the negative uses of deepfakes include:

Deepfakes can be used to create fake adult material featuring celebrities or ordinary people without their consent, violating their privacy and dignity. It has become very easy to replace one face with another and to change a voice in a video.

Deepfakes can be used to spread misinformation and fake news that can deceive or manipulate the public. Deepfakes can be used to create hoax material, such as fake speeches, interviews, or events, involving politicians, celebrities, or other influential figures.

Because deepfake technology makes face swaps and voice changes possible, it can be used to undermine democracy and social stability by influencing public opinion, inciting violence, or disrupting elections.

False propaganda can be created in the form of fake voice messages and videos that are very hard to recognize as fake. These can be used to influence public opinion, or to slander or blackmail political candidates, parties, or leaders.

Deepfakes can be used to damage reputation and credibility by impersonating or defaming individuals, organizations, or brands. Imagine a deepfake of Keanu Reeves on TikTok giving fake reviews, testimonials, or endorsements involving customers, employees, or competitors.

People who are unaware of deepfakes are easy to convince, and if something goes wrong, the result can be reputational damage and a loss of trust in the actor.

Ethical, Legal, and Social Implications of Deepfake Technology

Ethical Implications

Deepfake technology can violate the moral rights and dignity of the people whose images or voices are used without their consent, such as creating fake pornographic material, slanderous material, or identity theft involving celebrities or regular people. Deepfake technology can also undermine the values of truth, trust, and accountability in society when used to spread misinformation, fake news, or propaganda that can deceive or manipulate the public.

Legal Implications

Deepfake technology can pose challenges to the existing legal frameworks and regulations that protect intellectual property rights, defamation rights, and contract rights, as it can infringe on the copyright, trademark, or publicity rights of the people whose images or voices are used without their permission.

Deepfake technology can violate the privacy rights of the people whose personal data are used without their consent. It can defame the reputation or character of the people who are falsely portrayed in a negative or harmful way.

Social Implications

Deepfake technology can have negative impacts on the social well-being and cohesion of individuals and groups, as it can cause psychological, emotional, or financial harm to the victims of deepfake manipulation, who may suffer from distress, anxiety, depression, or loss of income. It can also create social divisions and conflicts among different groups or communities, inciting violence, hatred, or discrimination against certain groups based on their race, gender, religion, or political affiliation.

Imagine having deepfake videos of world leaders declaring war, making false confessions, or endorsing extremist ideologies. That could be very detrimental to the world at large.

I am afraid that in the future, deepfake technology could be used to create more sophisticated and malicious forms of disinformation and propaganda if not controlled. It could also be used to create fake evidence of crimes, scandals, or corruption involving political opponents or activists or to create fake testimonials, endorsements, or reviews involving customers, employees, or competitors.

Read More: Detecting and Regulating Deepfake Technology: The Challenges! Part II

#ai#ai-and-deepfakes#cybersecurity#Deep Fake#DeepFake Technology#deepfakes#generative-ai#Modi#Narendra Modi#Security#social media#synthetic-media#web

0 notes

Text

Deepfake Technology: Exploring The Potential Risks Of AI-Generated Videos

Recent advances in artificial intelligence (AI) have led to the emergence of a concerning technology called DeepFake. DeepFake refers to the use of AI algorithms to produce highly realistic fake videos and photos that appear to be genuine. DeepFake can be entertaining and amusing, but it also carries substantial risks and dangers. This article examines the potential risks of AI-generated videos and images and the adverse effects they may have on individuals, society, and even national security.

The rise of DeepFake Technology

Deepfakes and AI technology are gaining popularity because of their ability to produce convincing false content. Using advanced algorithms and machine learning techniques, AI can create videos in which facial expressions and voices appear authentic. AI-generated videos can depict people doing or saying things that never actually happened, which could have grave implications. See https://ca.linkedin.com/in/pamkaweske for more detailed information about deepfakes and AI.

Threats to Privacy

The privacy implications of DeepFake are among its greatest dangers. The ability to make fake videos, or to superimpose one person's face on another person's body, can be used to victimize individuals. DeepFake can be used to defame people, or to blackmail them by making it appear that they are involved in criminal activities. Victims can suffer lasting psychological and emotional trauma.

False Information and Misinformation

Deepfakes and AI exacerbate the problem of false news and misleading information. Artificially generated videos and images can be used to deceive the public, influence perceptions, and create false narratives. Because DeepFake content looks so authentic, it is difficult to distinguish fake videos from real ones. The result is a loss of trust in the media, which can have serious consequences for democratic values and social harmony.

Political Manipulation

DeepFake can be used to manipulate and disrupt political processes. Realistic fake photos and videos can show politicians engaged in criminal conduct or unethical acts, influencing public opinion and destabilizing government institutions. The use of deepfakes and AI during political campaigns could spread false information. It can also distort democratic decision-making, which undermines the foundations of a fair and equitable society.

Threats to National Security

Beyond personal and societal implications, DeepFake technology also poses dangers to national security. DeepFake videos can be used to impersonate high-ranking officials or military personnel, potentially leading to confusion, misinformation, or even conflict. Convincing fake videos of sensitive intelligence or military operations could compromise national security.

Impacts on the Financial and Economic System

DeepFake technology also poses risks in the financial and economic sectors. Artificially generated video can be used to create fraudulent content, such as fake interviews with prominent executives, false stock market predictions, or manipulated corporate announcements. This can result in financial losses, market instability, and a decline in confidence in the business world. To protect investors and ensure the integrity of the financial system, it is crucial to detect and mitigate the risks associated with DeepFake.

DeepFake Technology: How to combat it

Addressing the threats posed by DeepFake requires a multifaceted approach. Technological advances focused on reliable detection methods and authentication tools are essential. It is also important to raise public awareness of DeepFake and its implications. Education and media literacy initiatives can help people evaluate digital information more critically and become less prone to the false information DeepFake provides.

Bottom Line

DeepFake is a serious threat to privacy and security. It can affect social stability as well as politics. The ability to make highly realistic fake videos and images can cause serious harm, including reputational damage, manipulation of public opinion, and disruption of democratic processes. Individuals, technologists, and policymakers need to collaborate on effective countermeasures and strategies to combat DeepFake risks.

0 notes

Text

AI-Generated Voice Firm Clamps Down After 4chan Makes Celebrity Voices for Abuse

4chan members used ElevenLabs to make deepfake voices of Emma Watson, Joe Rogan, and others saying racist, transphobic, and violent things.

It was only a matter of time before the wave of artificial intelligence-generated voice startups became a plaything of internet trolls. On Monday, ElevenLabs, founded by ex-Google and Palantir staffers, said it had found an “increasing number of voice cloning misuse cases” during its recently launched beta. ElevenLabs didn’t point to any particular instances of abuse, but Motherboard found 4chan members appear to have used the product to generate voices that sound like Joe Rogan, Ben Shapiro, and Emma Watson to spew racist and other sorts of material. ElevenLabs said it is exploring more safeguards around its technology.

Source: Motherboard // Vice; 30 January 2023

0 notes

Text

How To Stay Away From Deepfakes?

Generative AI is getting more proficient at creating deepfakes that can sound and look realistic. As a result, some of the more sophisticated spoofers have taken social engineering attacks to a more sinister level.

0 notes

Text

How to Identify Deepfake Videos Like a Fact-Checker

New Post has been published on https://thedigitalinsider.com/how-to-identify-deepfake-videos-like-a-fact-checker/

Deepfakes are synthetic media where an individual replaces a person’s likeness with someone else’s. They’re becoming more common online, often spreading misinformation around the world. While some may seem harmless, others can have malicious intent, making it important for individuals to discern the truth from digitally crafted false content.

Unfortunately, not everyone can access state-of-the-art software to identify deepfake videos. Here’s a look at how fact-checkers examine a video to determine its legitimacy and how you can use their strategies for yourself.

1. Examine the Context

Scrutinizing the context in which the video is presented is vital. This means looking at the background story, the setting and whether the video’s events align with what you know to be true. Deepfakes often slip here, presenting content that doesn’t hold up against real-world facts or timelines upon closer inspection.

One example involves a deepfake of Ukrainian President Volodymyr Zelensky. In March 2022, a deepfake video surfaced on social media where Zelensky appeared to be urging Ukrainian troops to lay down their arms and surrender to Russian forces.

Upon closer examination, several contextual clues highlighted the video’s inauthenticity. The Ukrainian government’s official channels and Zelensky himself didn’t share this message. Also, the timing and circumstances didn’t align with known facts about Ukraine’s stance and military strategy. The video’s creation aimed to demoralize Ukrainian resistance and spread confusion among the international community supporting Ukraine.

2. Check the Source

When you come across a video online, check for its source. Understanding where a video comes from is crucial because hackers could use it against you to deploy a cyberattack. Recently, 75% of cybersecurity professionals reported a spike in cyberattacks, with 85% noting the use of generative AI by malicious individuals.

This ties back to the rise of deepfake videos, and professionals are increasingly dealing with security incidents that AI-generated content is fueling. Verify the source by looking for where the video originated. A video originating from a dubious source could be part of a larger cyberattack strategy.

Trusted sources are less likely to spread deepfake videos, making them a safer bet for reliable information. Always cross-check videos with reputable news outlets or official websites to ensure what you’re viewing is genuine.

3. Look for Inconsistencies in Facial Expressions

One of the telltale signs of a deepfake is the presence of inconsistencies in facial expressions. While deepfake technology has advanced, it often struggles with accurately mimicking the subtle and complex movements that occur naturally when a person talks or expresses emotions. You can spot these by looking out for the following inconsistencies:

Unnatural blinking: Humans blink in a regular, natural pattern. However, deepfakes may either under-represent blinking or overdo it. For instance, a deepfake could show a person talking for an extended period without blinking or blinking too rapidly.

Lip sync errors: When someone speaks in a video, their lip movement may be off. Watch closely to see if the lips match the audio. In some deepfakes, the mismatch is subtle but detectable when looking closely.

Facial expressions and emotions: Genuine human emotions are complex and reflected through facial movements. Deepfakes often fail to capture this, leading to stiff, exaggerated or not fully aligned expressions. For example, a deepfake video might show a person smiling or frowning with less nuance, or the emotional reaction may not match the context of the conversation.
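The blinking check above can be roughed out in code. What follows is a minimal Python sketch, not a real detector. It assumes a face-landmark tool has already produced one eye-openness value per frame (a hypothetical input). The 0.2 closed-eye cutoff and the 5 to 40 blinks-per-minute band are illustrative guesses, not validated thresholds.

```python
from typing import Sequence


def count_blinks(eye_openness: Sequence[float], closed_below: float = 0.2) -> int:
    """Count open-to-closed transitions in a per-frame eye-openness series.

    `eye_openness` is a hypothetical input: one number per frame, smaller
    when the eyes are more closed. `closed_below` is an assumed cutoff.
    """
    blinks = 0
    was_closed = False
    for value in eye_openness:
        is_closed = value < closed_below
        if is_closed and not was_closed:
            blinks += 1  # a new blink starts on this frame
        was_closed = is_closed
    return blinks


def blinks_per_minute(
    eye_openness: Sequence[float], fps: float, closed_below: float = 0.2
) -> float:
    """Convert the blink count into a rate, given the video frame rate."""
    if not eye_openness or fps <= 0:
        raise ValueError("need at least one frame and a positive frame rate")
    duration_minutes = len(eye_openness) / fps / 60.0
    return count_blinks(eye_openness, closed_below) / duration_minutes


def blink_rate_is_unusual(rate: float, low: float = 5.0, high: float = 40.0) -> bool:
    """Flag rates outside an assumed plausible band (illustrative bounds only)."""
    return rate < low or rate > high
```

A flagged clip is only a prompt for a closer look. Short clips, glasses, and low frame rates can all distort a blink count, so this check should never be treated as proof on its own.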

4. Analyze the Audio

Audio can also give you clues into whether a video is real or fake. Deepfake technology attempts to mimic voices, but discrepancies often give them away. For instance, pay attention to the voice’s quality and characteristics. Deepfakes can sound robotic or flat in their speech, or they may lack the emotional inflections an actual human would exhibit naturally.

Background noise and sound quality can also provide clues. A sudden change could suggest that parts of the audio were altered or spliced together. Authentic videos typically remain consistent throughout the entirety.
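The idea of a sudden change in background sound can also be sketched in a few lines of Python. This sketch assumes the audio has already been decoded into a plain list of samples. The window size and the 4x jump ratio are illustrative choices, and the function names are hypothetical. It compares the loudness (RMS level) of neighboring windows and reports where the level jumps sharply.

```python
import math
from typing import List, Sequence


def window_rms(samples: Sequence[float], window: int) -> List[float]:
    """Return the RMS level of each consecutive, non-overlapping window."""
    if window <= 0:
        raise ValueError("window must be positive")
    levels = []
    for start in range(0, len(samples) - window + 1, window):
        chunk = samples[start:start + window]
        levels.append(math.sqrt(sum(s * s for s in chunk) / window))
    return levels


def sudden_level_changes(
    samples: Sequence[float], window: int, ratio: float = 4.0, floor: float = 1e-6
) -> List[int]:
    """Return indexes of windows whose level differs sharply from the previous one.

    `ratio` is an assumed threshold; `floor` avoids dividing by zero on silence.
    """
    levels = window_rms(samples, window)
    flagged = []
    for i in range(1, len(levels)):
        prev = max(levels[i - 1], floor)
        curr = max(levels[i], floor)
        if max(prev, curr) / min(prev, curr) >= ratio:
            flagged.append(i)
    return flagged
```

Ordinary speech gets louder and quieter all the time, so a check like this is most meaningful on stretches where nobody is talking and only the background noise remains.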

5. Investigate Lighting and Shadows

Lighting and shadows play a large part in revealing a video’s authenticity. Deepfake technology often struggles with accurately replicating how light interacts with real-world objects, including people. Paying close attention to lighting and shadows can help you spot signs that a video is a deepfake.

In authentic videos, the subject’s lighting and surroundings should be consistent. Deepfake videos may display irregularities, such as the face being lit differently from the background. If the video’s direction or source of light doesn’t make sense, it could be a sign of manipulation.

Secondly, shadows should behave according to the light sources in the scene. In deepfakes, shadows can appear at the wrong angles or fail to correspond with other objects. Anomalies in shadow size or direction, and the presence or absence of expected shadows, can all point to manipulation.

6. Check for Emotional Manipulation

Deepfakes do more than create convincing falsehoods — people often design them to manipulate emotions and provoke reactions. A key aspect of identifying such content is to assess whether it aims to trigger an emotional response that could cloud rational judgment.

For instance, consider the incident in which an AI-generated image of an explosion near the Pentagon circulated on Twitter (now X). Despite being completely fabricated, the image’s alarming nature caused it to go viral and trigger widespread panic. As a result, the stock market reportedly lost $500 billion in value.

Deepfake videos can stir the same kind of panic. While evaluating these videos, ask yourself:

Is the content trying to evoke a strong emotional response, such as fear, anger or shock? Authentic news sources aim to inform, not incite.

Does the content align with current events or known facts? Emotional manipulation often relies on disconnecting the audience from rational analysis.

Are reputable sources reporting the same story? The absence of corroboration from trusted news outlets can indicate the fabrication of emotionally charged content.

7. Leverage Deepfake Detection Tools

As deepfakes become more sophisticated, relying solely on human observation to identify them can be challenging. Fortunately, deepfake detection tools that use advanced technology to distinguish between real and fake are available.

These tools can analyze videos for inconsistencies and anomalies that may not be visible to the naked eye. They rely on AI and machine learning, and one method they use is speech watermarking: the tools are trained to recognize the watermark’s placement to determine whether the audio has been tampered with.

Microsoft developed a tool called Video Authenticator, which provides a confidence score indicating the likelihood of a deepfake. Similarly, startups and academic institutions continually develop and refine technologies to keep pace with evolving deepfakes.
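To picture what a confidence score might look like in practice, here is a small Python sketch. It is not how Video Authenticator or any other named tool works internally. It assumes a hypothetical detector has already assigned each frame a score between 0 and 1, where higher means more likely fake. The 0.5 per-frame threshold and the 20% review cutoff are illustrative.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class VideoVerdict:
    mean_score: float        # average per-frame score across the clip
    suspicious_share: float  # fraction of frames at or above the frame threshold
    needs_review: bool       # True when enough frames look suspicious


def summarize_scores(
    frame_scores: Sequence[float],
    frame_threshold: float = 0.5,
    review_share: float = 0.2,
) -> VideoVerdict:
    """Summarize hypothetical per-frame fake scores into one video-level verdict."""
    if not frame_scores:
        raise ValueError("need at least one frame score")
    if any(not 0.0 <= s <= 1.0 for s in frame_scores):
        raise ValueError("scores must lie between 0 and 1")
    mean = sum(frame_scores) / len(frame_scores)
    suspicious = sum(1 for s in frame_scores if s >= frame_threshold)
    share = suspicious / len(frame_scores)
    return VideoVerdict(mean, share, share >= review_share)
```

Reporting the share of suspicious frames alongside the average is a deliberate choice in this sketch: a video that is manipulated for only a few seconds could have a low average and still deserve a second look.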

Detecting Deepfakes Successfully

Technology has a light and dark side and is constantly evolving, so it’s important to be skeptical of what you see online. When you encounter a suspected deepfake, use your senses and the tools available. Additionally, always verify where it originated. As long as you stay on top of the latest deepfake news, your diligence will be key in preserving the truth in the age of fake media.

#:is#2022#ai#ai-generated content#Analysis#Art#Artificial Intelligence#attention#audio#background#billion#Capture#change#Cloud#Community#content#cyberattack#Cyberattacks#cybersecurity#Dark#deepfake#deepfake detection#deepfake technology#deepfakes#Design#detection#direction#display#emotions#Events

0 notes

Text

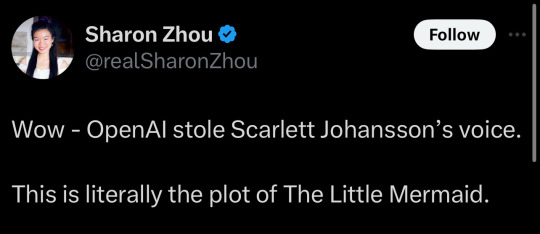

“Last September, I received an offer from Sam Altman, who wanted to hire me to voice the current ChatGPT 4.0 system. He told me that he felt that by my voicing the system, I could bridge the gap between tech companies and creatives and help consumers to feel comfortable with the seismic shift concerning humans and AI. He said he felt that my voice would be comforting to people.

After much consideration and for personal reasons, I declined the offer. Nine months later, my friends, family and the general public all noted how much the newest system named “Sky” sounded like me.

When I heard the released demo, I was shocked, angered and in disbelief that Mr. Altman would pursue a voice that sounded so eerily similar to mine that my closest friends and news outlets could not tell the difference. Mr. Altman even insinuated that the similarity was intentional, tweeting a single word “her” - a reference to the film in which I voiced a chat system, Samantha, who forms an intimate relationship with a human.

Two days before the ChatGPT 4.0 demo was released, Mr. Altman contacted my agent, asking me to reconsider. Before we could connect, the system was out there.

As a result of their actions, I was forced to hire legal counsel, who wrote two letters to Mr. Altman and OpenAI, setting out what they had done and asking them to detail the exact process by which they created the “Sky” voice. Consequently, OpenAI reluctantly agreed to take down the “Sky” voice.

In a time when we are all grappling with deepfakes and the protection of our own likeness, our own work, our own identities, I believe these are questions that deserve absolute clarity. I look forward to resolution in the form of transparency and the passage of appropriate legislation to help ensure that individual rights are protected.”

—Scarlett Johansson

#politics#deepfakes#scarlett johansson#sam altman#openai#tech#technology#open ai#chatgpt#chatgpt 4.0#the little mermaid#sky#sky ai#consent

199 notes

Text

India has warned tech companies that it is prepared to impose bans if they fail to take active measures against deepfake videos, a senior government minister said, on the heels of a warning by a well-known personality over a deepfake advertisement using his likeness to endorse a gaming app.

The stern warning comes as New Delhi follows through on an advisory issued last November about forthcoming regulations to identify and restrict the propagation of deepfake media. Rajeev Chandrasekhar, Deputy IT Minister, said the ministry plans to amend the nation’s IT Rules by next week to establish definitive laws counteracting deepfakes. He expressed dissatisfaction with technology companies’ adherence to earlier government advisories on manipulative content.

Continue Reading.

73 notes

Text

Deepfake Porn Is Not “Just Porn” Because Consent Is Not Present | The Mary Sue

Let me be clear, everyone owns their own body to do whatever with it as they please. Monetize it. Tattoo it. Train it for a marathon. Lay about on the couch. Whatever you want, it’s yours. No one should be allowed to take your likeness and make it do anything. To digitize someone’s body, and put it in sexual situations without the owner of that body’s consent, is morally reprehensible.

345 notes
