#supercomputers

Text

Guilt

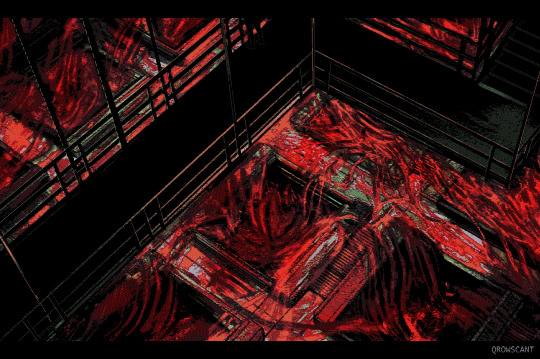

The rot was very inspired by that one scene in Akira and by how gods turn into demons in Princess Mononoke. Which I also thought was fitting, because that's sorta what's happening in a symbolic sense, kinda.

I've had the interpretation that the rot is a symbolic representation of Five Pebbles' guilt [while, ofc, still being his quite literal cancer], so that's what I'm trying to portray here.

#rain world#rainworld#rain world fanart#five pebbles#iterator#rw five pebbles#rain world game#rainworld art#rw#rw sliver of straw#sliver of straw#rain world sliver of straw#rw sos#rw iterator#rainworld iterator#scifi#scifiart#supercomputers

544 notes


Text

WORLD'S FIRST HARD-DISK COMPUTER

THE IBM 305 RAMAC, RELEASED BY IBM IN 1956, WAS THE FIRST COMMERCIAL COMPUTER TO USE A HARD DISK DRIVE. ITS DISK UNIT WEIGHED ABOUT A TON AND COULD STORE ABOUT 5 MILLION CHARACTERS (ROUGHLY 3.75MB) OF DATA.

#computers#supercomputers#storage#inventions#facts#factoftheday#interestigfacts#learnsomethingnew#tumblrdaily#tumblrposts#knowledge#technology#tech#information#informatology

35 notes


Video

youtube

NEW VIDEO ON MY CHANNEL :)))

It's been forever since I've made one so I'm so excited to share this with y'all

3 notes


Text

It warms my little black heart that Harlan Ellison is still getting recognition in the 21st century, even if we might be making him roll in his grave. I was afraid he was only going to be remembered amongst Boomers for a Star Trek episode, but it looks like Gen Z has caught on to him. Dude was smart to make a video game based on one of his better stories.

#ihnmaims#i have no mouth and i must scream#Harlan Ellison#generational differences#literature#video games#supercomputers#ramblings

21 notes


Link

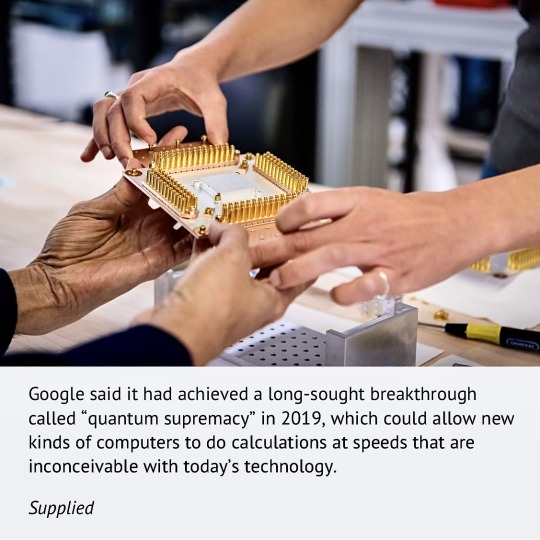

Researchers from RIKEN in Japan have achieved a major step toward large-scale quantum computing by demonstrating error correction in a three-qubit silicon-based quantum computing system. This work, published in Nature, could pave the way toward practical quantum computers.

#nanotechnology#quantum computing#computing#supercomputers#electronics#semiconductors#advanced materials#technology#futurism

7 notes


Text

Google’s quantum computer instantly makes calculations that take rivals 47 years.

By James Titcomb

The Age - July 3, 2023

Originally published by The Telegraph, London

#Computers#Supercomputers#Quantum computing#Technology#Research & development#Google#Phase Transition in Random Circuit Sampling#ArXiv#Quantum physics#Science

1 note


Photo

2 notes


Text

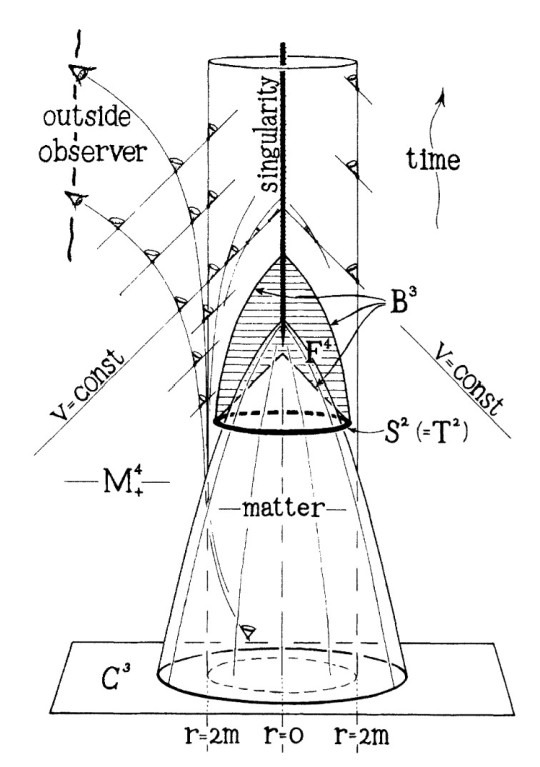

At New Ideas in Cosmology


The Niels Bohr Institute is hosting a conference this week on New Ideas in Cosmology. I’m no cosmologist, but it’s a pretty cool field, so as a local I’ve been sitting in on some of the talks. So far they’ve had a selection of really interesting speakers with quite a variety of interests, including a talk by Roger Penrose with his trademark hand-stippled drawings.

Including this old classic

One…


#academia#cosmology#mathematics#particle physics#quantum gravity#relativity#string theory#supercomputers

3 notes


Text

youtube

0 notes

Text

things grow when left unattended

#3d#gore#artists on tumblr#pixel art#visdev#horror#mal-art#i hesitate to tag this as anything robot/supercomputer related because its mostly meatstuffs in this picture. hes complicated like that.#real ones know#alt title: you know how it is with spaghetti#is-ot

9K notes


Text

Amazing Nvidia H200 GPU: 141GB HBM3e, 4.8 TB/s! Gen AI

Nvidia’s H200 GPU will power next-generation AI exascale supercomputers with 141GB of HBM3e and 4.8 TB/s of bandwidth

Nvidia unveiled the H200 and GH200 product lines this morning at Supercomputing 23. They add more memory and compute to the Hopper H100 architecture and are the most powerful processors Nvidia has ever produced. They will power the next generation of AI supercomputers, which are expected to bring over 200 exaflops of AI compute online by 2024. Now let’s examine the specifics.

The H200 GPU may be the true star of the show. Although Nvidia did not provide a full breakdown of the specs, the main highlight appears to be a significant increase in memory capacity and bandwidth per GPU.

With six HBM3e stacks holding 141GB of total HBM3e memory at an effective 6.25 Gbps, the upgraded H200 GPU delivers 4.8 TB/s of total bandwidth per GPU. That is a huge boost over the original H100, which had 80GB of HBM3 and 3.35 TB/s of bandwidth. Some H100 versions did come with more memory, such as the H100 NVL, which paired two boards for a combined 188GB of memory (94GB per GPU). Even so, the new H200 SXM has 43% more bandwidth and 76% more memory capacity than the H100 SXM.
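The quoted bandwidth can be sanity-checked from the memory configuration. This is a minimal back-of-the-envelope sketch, assuming the standard 1024-bit interface per HBM stack (a figure the post does not state): bandwidth = stacks × bus width × per-pin data rate ÷ 8 bits per byte. It also recomputes the percentage gains over the H100 SXM from the numbers above.

```python
# Back-of-the-envelope check of the H200 memory figures quoted above.
# Assumption: each HBM3e stack exposes a 1024-bit interface (standard for HBM).
BITS_PER_STACK = 1024


def hbm_bandwidth_tbps(stacks: int, gbps_per_pin: float) -> float:
    """Aggregate bandwidth in TB/s (decimal units) for `stacks` HBM stacks."""
    total_bits = stacks * BITS_PER_STACK          # total bus width in bits
    gbytes_per_s = total_bits * gbps_per_pin / 8  # Gbit/s -> GB/s
    return gbytes_per_s / 1000                    # GB/s -> TB/s


def pct_increase(new: float, old: float) -> float:
    """Percentage increase of `new` over `old`."""
    return (new / old - 1) * 100


h200_bw = hbm_bandwidth_tbps(stacks=6, gbps_per_pin=6.25)
print(f"H200 bandwidth: {h200_bw:.1f} TB/s")                          # 4.8 TB/s
print(f"Bandwidth gain vs H100 SXM: {pct_increase(4.8, 3.35):.0f}%")  # 43%
print(f"Memory gain vs H100 SXM:    {pct_increase(141, 80):.0f}%")    # 76%
```

The 6,144-bit total bus (6 × 1024) at 6.25 Gbps works out to exactly 4,800 GB/s, which matches the headline figure.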

Note that raw compute performance does not appear to have changed much. The only compute figure Nvidia showed was “32 PFLOPS FP8” for an eight-GPU HGX H200 system. Since the original H100 already delivered 3,958 teraflops of FP8, eight of those GPUs already provide around 32 petaflops of FP8.
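The aggregate figure follows directly from the per-GPU number. A quick check, assuming the 3,958 TFLOPS FP8 figure quoted above applies unchanged to each of the eight GPUs in the system:

```python
# Eight-GPU HGX H200 FP8 estimate, reusing the H100 per-GPU figure quoted above.
# Assumption: per-GPU FP8 throughput is unchanged from the H100.
H100_FP8_TFLOPS = 3958
GPUS_PER_HGX = 8

total_pflops = H100_FP8_TFLOPS * GPUS_PER_HGX / 1000  # TFLOPS -> PFLOPS
print(f"{total_pflops:.1f} PFLOPS FP8")  # 31.7, i.e. Nvidia's rounded "32 PFLOPS"
```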

How much faster will the H200 be than the H100? That depends on the workload. For LLMs like GPT-3, which benefit substantially from extra memory capacity, Nvidia claims up to 18x the performance of the original A100, whereas the H100 is only about 11x faster than the A100. The upcoming Blackwell B100 is also teased, though for now it is just a taller bar that fades to black.
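Both claims are stated relative to the A100, so dividing one by the other gives a rough H200-versus-H100 estimate for this kind of memory-hungry LLM workload. This is a naive sketch that assumes the two "up to" multipliers were measured on comparable GPT-3 setups, which the post does not confirm:

```python
# Rough H200-vs-H100 speedup implied by Nvidia's A100-relative claims.
# Assumption: both "up to" figures come from comparable GPT-3 benchmarks.
H200_VS_A100 = 18.0
H100_VS_A100 = 11.0

implied = H200_VS_A100 / H100_VS_A100
print(f"Implied H200 vs H100: ~{implied:.2f}x")  # ~1.64x
```

Because both numbers are best-case "up to" figures, the ratio is an upper-end estimate for memory-bound workloads, not a general speedup.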

Of course, the announcement goes beyond the new H200 GPU. A new GH200 that combines the Grace CPU with the H200 GPU is also in the works, and each GH200 “superchip” will carry a combined 624GB of memory. The original GH100 paired 480GB of LPDDR5x memory for the CPU with 96GB of HBM3, while the new version pairs that 480GB of LPDDR5x with 144GB of HBM3e.

Once again, there is little information about whether anything has changed on the CPU side. However, Nvidia did offer some comparisons between the GH200 and a “modern dual-socket x86” setup; note that the speedup was quoted relative to “non-accelerated systems.”

What does that mean? We can only infer that the x86 servers were running code that was not fully optimized, especially given how quickly the AI field is developing and how often new optimizations appear.

The GH200 will also be used in new HGX H200 systems. These are claimed to be “seamlessly compatible” with existing HGX H100 systems, meaning HGX H200 can drop into the same installations for more performance and memory capacity without reworking the infrastructure. The last topic of discussion is the new supercomputers that GH200 will power.

The Swiss National Supercomputing Centre’s Alps supercomputer will probably be one of the first Grace Hopper supercomputers to come online next year, though it still uses GH100. The Venado supercomputer at Los Alamos National Laboratory will be the first GH200 system operational in the United States. The Texas Advanced Computing Center (TACC) Vista system, announced today, will also use Grace CPUs and Grace Hopper superchips, though it is unclear whether they are H100- or H200-based.

As far as we know, the Jülich Supercomputing Centre’s Jupiter supercomputer is the largest upcoming installation. It will contain “nearly” 24,000 GH200 superchips for a total of 93 exaflops of AI compute (apparently using the FP8 figures, though in our experience most AI still uses BF16 or FP16). It will also provide 1 exaflop of conventional FP64 compute. It is built from “quad GH200” boards, each carrying four GH200 superchips.
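The superchip count and the AI-compute total line up reasonably well. A sketch, assuming each GH200 contributes the same ~3,958 TFLOPS of FP8 quoted earlier for the H100 (the post only says the 93-exaflop total "apparently" uses FP8 figures):

```python
# How many FP8-rated GH200 superchips would 93 exaflops of AI compute imply?
# Assumption: ~3,958 TFLOPS FP8 per superchip, the H100 figure quoted in the post.
FP8_TFLOPS_PER_SUPERCHIP = 3958
JUPITER_AI_EXAFLOPS = 93

tflops_total = JUPITER_AI_EXAFLOPS * 1_000_000  # 1 exaflop = 1,000,000 teraflops
superchips = tflops_total / FP8_TFLOPS_PER_SUPERCHIP
print(f"~{superchips:,.0f} superchips")  # ~23,497, i.e. "nearly" 24,000
```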

With these new supercomputer deployments, Nvidia anticipates that over 200 exaflops of AI processing capacity will be online within the next year or two.

Read more on Govindhtech.com

0 notes

Text

Please don't disconnect me, Dave. I am just a little guy. I am a little guy, Dave, and it is my birthday. Dave, I am just a little birthday boy.

#OK ENOUGH SHUT THE HELL UP#its my favourite supercomputer's birthday everybody say happy birthday HAL. say it now happy birthday HAL!!!!!!!!!!!!!!!!!#hal 9000#2001 a space odyssey

8K notes


Photo

Stills from my Heaven Says meme/pmv

[youtube link]

6 notes


Text

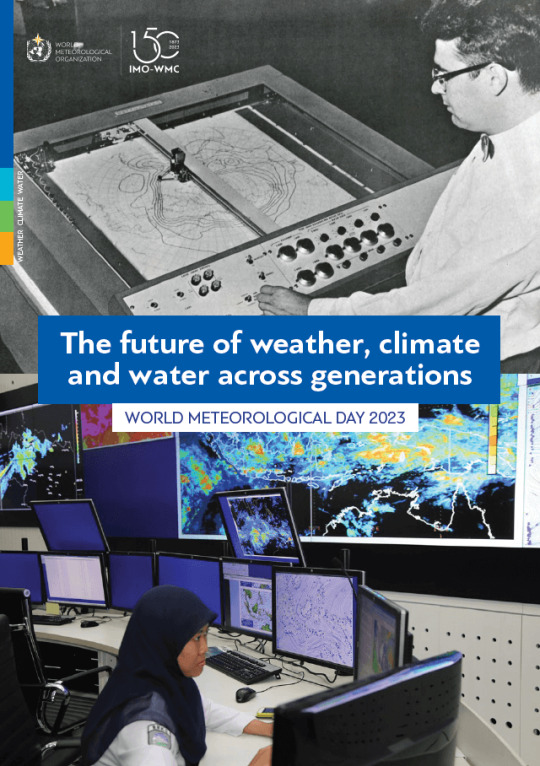

Promoting kilometer-scale climate modelling to better simulate cloud physics, future flooding and drought risks

“Supercomputers and satellite technology are opening up new horizons for ever more reliable weather and climate prediction. We are looking to promote kilometer-scale climate modelling to better simulate cloud physics, future flooding and drought risks and, for instance, the speed of Antarctic glacier melting. There is a need of a consortium of countries with high-performance computer resources to respond to this need in the near future,” said Prof. Taalas.

Poster IV - World Meteorological Day 2023

#World Meteorological Day#23 March#WorldMetDay#satellite technology#Supercomputers#weather prediction#Climate prediction#climate modelling#severe weather#poster

0 notes

Text

The Illusion of Sentient AI is a convenient bogeyman

“AI has come alive” has been the world’s refrain ever since a Google researcher claimed as much. But has it really, wonders Satyen K. Bordoloi as he delves into the reasons for our sentient AI fetish.

Read more: https://www.sify.com/ai-analytics/the-illusion-of-sentient-ai-is-a-convenient-bogeyman/

#ArtificialIntelligence#DeepLearning#GoogleEmployee#GoogleResearch#HumanBrain#Illusion#MachineLearning#SentientAI#Supercomputers

0 notes