#russian troll farm

Text

"Welcome to the Theatre": Diary of a Broadway Baby

Russian Troll Farm

February 21, 2024 | Off-Broadway | Vineyard Theatre | Evening | Play | Original | 1H 40M

A workplace comedy set in the office of a Russian troll farm, based on the very real Internet Research Agency, which was dissolved last summer. Prior to its dissolution, it had engaged in online propaganda in favor of Russian business and political interests. This play is both hilarious and horrifying, especially in the context of Alexey Navalny's recent murder. The play is broken up into four parts with distinct styles, including a fifteen-minute monologue delivered brilliantly by Christine Lahti, whom I am now deeply in love with. The projection design was also pretty damn impressive, with real tweets the IRA created displayed at crucial moments. And with another election right on the horizon, it's certainly relevant.

Verdict: Why I Love the Theatre

A Note on Ratings

0 notes

Text

Play review (long post)

Review: In ‘Russian Troll Farm,’ You Can’t Stop the Memes

An unlikely dark comedy imagines the people pushing #PizzaGate, Donald Trump and who knows what next.

No one misses the early days and dark theaters of the Covid pandemic, but the emergency workaround of streaming content was good for a few things anyway. People who formerly could not afford admission suddenly could, since much of it was free, and artists from anywhere could now be seen everywhere, with just a Wi-Fi connection.

That’s how I first encountered “Russian Troll Farm,” a play by Sarah Gancher intended for the stage but that had its debut, in 2020, as an online co-production of three far-flung institutions: TheaterWorks Hartford, TheaterSquared in Fayetteville, Ark., and the Brooklyn-based Civilians. At the time, I found its subject and form beautifully realized and ideally matched — the subject being online interference in the 2016 presidential election by a Russian internet agency.

“This is digitally native theater,” I wrote, “not just a play plopped into a Zoom box.”

Now the box has been ripped open, and a fully staged live work coaxed out of it. But the production of “Russian Troll Farm” that opened on Thursday at the Vineyard Theater is an entirely different, and in some ways disappointing, experience. Though still informative and trenchant, and given a swifter staging by the director Darko Tresnjak, it has lost the thrill of the original’s accommodation to the extreme constraints of its time.

Not that it is any less relevant in ours; fake news will surely be as prominent in the 2024 election cycle (is Taylor Swift a pro-Biden psy-op?) as it was in 2016. That’s when, as Gancher recounts using many real texts, posts and tweets of the time, trolls at the Internet Research Agency — a real place in St. Petersburg, Russia — devised sticky memes and other content meant to undermine confidence in the electoral process, sow general discord, legitimize Trumpism and vaporize Hillary Clinton.

Egor (Haskell King) is a friendless, robotic techno-nerd who just wants to win the microwave oven that’s a prize for productivity. Steve (John Lavelle) is a Soviet revanchist who calls the Enlightenment a mistake and Gorbachev the “world’s biggest cuck.” Nikolai (Hadi Tabbal) is a moony screenwriter manqué who thinks what he does is evil but still wants “to do a good job at it” — causing Steve, who went to junior college in California, to deride him as a “human latte” and a “performative bookstore tote bag.”

The fourth troll is the newbie, Masha (Renata Friedman). A disillusioned journalist who took the job at the agency for the pay, she wants nothing more than to move to London and recover from Russia by doing yoga. Naturally she becomes the focal point of several interconnected bids for love and dominance among Steve, Nikolai and Ljuba, whose bureaucratic fury belies a troubled emotional life beneath.

The snappy dialogue draws moderate laughs, often by squeezing banal office politics against the scarier kind. (“No Nazi content unless specifically requested by supervisor,” Ljuba warns the others.) But though Gancher subtitles the play “a workplace comedy,” you may in the end be left wondering what’s funny. The trolls’ various schemes for advancement and connection all end disastrously, as many in the audience surely feel the election did, too. Nor does it help that the cast works so hard to get a response from the audience, sometimes annoyingly demanding participation and thus a kind of complicity.

Complicity was not of course possible in the no-longer-available 2020 streaming production, which required viewers to process it on the fly, in much the way they process social media, deciding for themselves what to laugh at — and what to ponder, repost or trash. Lacking that formal congruence, the live “Russian Troll Farm” has a temperature problem: Instead of cool, it feels overheated; instead of suggestive, prosaic.

It was likewise unsettling, in 2020, that you never quite knew where the characters existed, except in the electronic ether; now, on Alexander Dodge’s white box set, they are fixed in a highly specific, nonvirtual space, with ergo chairs and a photo of Putin. Likewise, the ear-scratching interstitial noise (by Darron L West and Beth Lake) and strobey light (by Marcus Doshi) and projection effects (by Jared Mezzocchi) are almost too gorgeously professional, failing to reproduce the deliberate crudeness of the original’s fuzz, pixelation and green-screen blur.

Crudeness is key. Not only does it elicit the poetry of Gancher’s writing, which despite its shiny surface has depth; it is also expressive in itself, because crudeness is a hallmark of the trolls’ greatest hits. Egor considers his English spelling mistakes (“libral” for “liberal”) a useful way of promoting engagement. People who comment on the errors are merely being pulled even farther into the web — and the whole point of the troll farm, as an author’s note points out, is “to stir up trouble.”

At that, it succeeded, though Russia has no patent on trolls. Indeed, the Internet Research Agency shut down last year, collateral damage from the Wagner Group rebellion, but fake news has never been riper. It’s just more local. I suppose “Russian Troll Farm” wants us to consider whether we would participate in its strange, chaotic economy of lies if given the opportunity — and a microwave.

Russian Troll Farm: A Workplace Comedy

Through Feb. 25 at Vineyard Theater, Manhattan; vineyardtheatre.org. Running time: 1 hour 40 minutes.

Jesse Green is the chief theater critic for The Times. He writes reviews of Broadway, Off Broadway, Off Off Broadway, regional and sometimes international productions.

A version of this article appears in print on Feb. 9, 2024, Section C, Page 3 of the New York edition with the headline: Even in Person, They Just Can’t Stop the Memes.

#refrigerator magnet#russian troll farm#drama#play#live theater#theater#theatre#sarah gancher#playwright

0 notes

Text

Russian troll farms

One factor in the toxic environment at the new twitler is the sheer number of trolls, who are now embiggened and have free rein, as Elon Musk has fired most of the staff who would have been in charge of content moderation and invited back tens of thousands of miscreants previously banned from the old twitter. Many flavors, but all spreading discontent, chaos, disinformation.

https://www.rollingstone.com/politics/politics-news/feds-charge-russian-puppetmaster-for-secretly-directing-u-s-political-groups-1390087/

https://www.thedailybeast.com/how-russian-disinformation-goes-from-the-kremlin-to-qanon-to-fox-news

0 notes

Text

Comments section to an average video about war in Ukraine

Yes, a lot of these are Russian trolls. But (1) not all of them are; I've met people like this irl, and (2) it is no less distressing to be constantly bombarded with xenophobia AND Russian missiles.

#ukraine#russia#war in ukraine#russian invasion of ukraine#russo ukrainian war#russian propaganda#war#disconformation#troll farms#tiktok

248 notes


Text

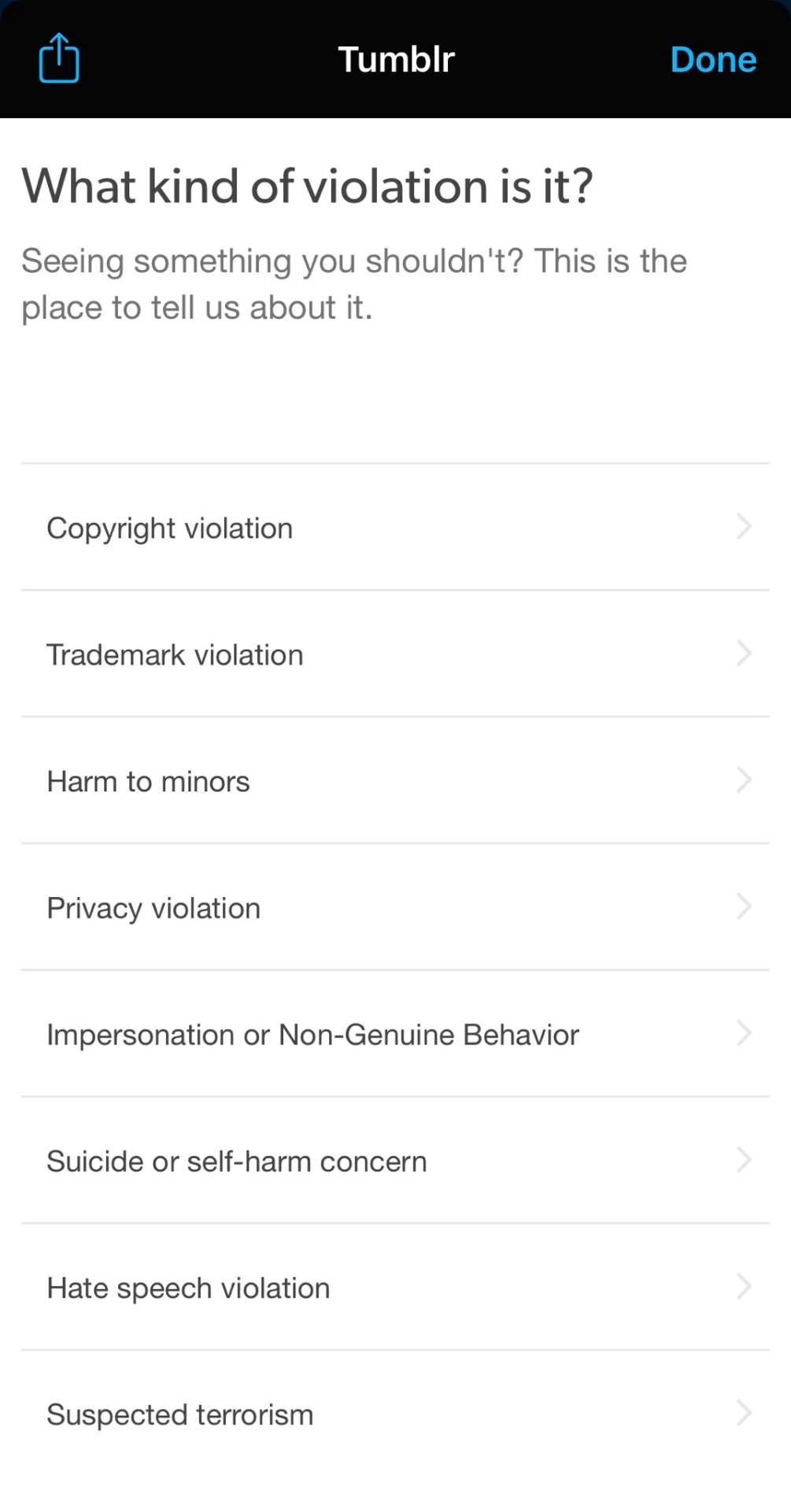

Me: sees that stupid ass election interference post being reblogged for the THIRD TIME by someone who should know better and check their sources, writes up a long rant post

Me: wait—there’s a button for that!

PSA: report shady posts that seem designed to mislead you about voting. And for fuck’s sake stop reblogging things that are meant to convince people that voting doesn’t matter

#and block that fucking thememedaddy blog#because if it isn’t a russian troll farm#it’s a fucking content farm at best and every single post is stolen#either way it’s not a real tumblr user and shouldn’t be given the time of day#(sorry. i snapped)#(but at least i deleted the rant)

75 notes


Text

Even when the proof is there some of you refuse to believe it. People on our side need to stop challenging credible sources. You’ve been conditioned by Trump in just a few short years to not believe anything and to do so without question. As adult Americans you should know by now which sources are credible and which are propaganda.

There’s no rule stating the poster’s commentary has to be directly about the contents of an article either. We’re adults, we can add information, extrapolate, make connections and point out similarities or inconsistencies.

#never trump#republican assholes#traitor trump#republican hypocrisy#crooked donald#Russian election interference#Russian troll farms#Republican propaganda#russian propaganda#misinformation#disinformation#republicans lie about everything

22 notes


Text

Jessikka Aro, a journalist who spent years researching the activities of pro-Russia internet trolls, wanted fact-checkers to know this: If you look for Russian influence in your region, you will find it

#Poynter#Russian Troll Farms#Russian disinformation#Russian internet trolls#Russian influence#Fact Checking

8 notes


Text

Twitter just SUSPENDED my account.

Let ME "Assure" YOU of something, SHITter,

I AM 20 YEAR AMERICAN MILITARY VETERAN TRUMP HATING PATRIOT!

And YOU, will NOT DETER me, NOR "Shut Me Up"!

You WON'T SUSPEND ALL of the FOREIGN TROLL FAKE Twitter accounts out there, but you are willing to suspend MY ACCOUNT?

Go FUCK YOURSELF SHITter!

Ass Hat "Elon Musk" WAS RIGHT about ONE THING!

TWITTER SUCKS!

Update; Now That Elon Musk has acquired SHITter, it "Sucks" EVEN MORE, because TWO "Piles of Shit", stinks MORE than ONE!

SHITter & Elon Musk were MADE for "Each Other "!

#twitter sucks#twitter#american citizen#twitter suspensions#fake identity#fake twitter#fake twitter accounts#foreign troll farms#russian troll farms#troll farms#trolls#troll bots#traitor#against trump#donald trump#trump sucks#traitors#trump is a threat to democracy#conservatives are stupid#j6 insurrection#j6 traitors#corrupt gop#vladimir putin sucks#vladimir putin blows chunks#vladimir putin war criminal#military veterans#military veteran#twitter is shit#twitter is garbage#elon musk

7 notes


Text

off the back of the linux backdoor (some backend microsoft guy catching an exploit that could basically destroy much of the internet as we know it or steal everyones data) i think its funny when people act like state actors keep perfectly to office hours local time. they have the knowledge to set this up but not to stagger git commits so the 9-5 isn't obvious. come on.

#they have some nerd in a basement doing it. he doesn't leave but the doors not locked he just needs to be coding like its air#i doubt it was a hobbyist but 'the git commits are all in office hours for china!! must be the ccp!!' grow uupppppp#odds are we will literally never definitively know who or why (even if a lot of actual contries look bc holy shit if this wasnt caught)#but i see this w 'russian troll farms' bestie i dont think thats a 9-5 position in as much as it does exist#me_irl

1 note


Text

You're being targeted by disinformation networks that are vastly more effective than you realize. And they're making you more hateful and depressed.

(This essay was originally by u/walkandtalkk and posted to r/GenZ on Reddit two months ago, and I've crossposted here on Tumblr for convenience because it's relevant and well-written.)

TL;DR: You know that Russia and other governments try to manipulate people online. But you almost certainly don't know just how effectively orchestrated influence networks are using social media platforms to make you -- individually -- angry, depressed, and hateful toward each other. Those networks' goal is simple: to cause Americans and other Westerners -- especially young ones -- to give up on social cohesion and to give up on learning the truth, so that Western countries lack the will to stand up to authoritarians and extremists.

And you probably don't realize how well it's working on you.

This is a long post, but I wrote it because this problem is real, and it's much scarier than you think.

How Russian networks fuel racial and gender wars to make Americans fight one another

In September 2018, a video went viral after being posted by In the Now, a social media news channel. It featured a feminist activist pouring bleach on a male subway passenger for manspreading. It got instant attention, with millions of views and wide social media outrage. Reddit users wrote that it had turned them against feminism.

There was one problem: The video was staged. And In the Now, which publicized it, is a subsidiary of RT, formerly Russia Today, the Kremlin TV channel aimed at foreign, English-speaking audiences.

As an MIT study found in 2019, Russia's online influence networks reached 140 million Americans every month -- the majority of U.S. social media users.

Russia began using troll farms a decade ago to incite gender and racial divisions in the United States

In 2013, Yevgeny Prigozhin, a confidant of Vladimir Putin, founded the Internet Research Agency (the IRA) in St. Petersburg. It was the Russian government's first coordinated facility to disrupt U.S. society and politics through social media.

Here's what Prigozhin had to say about the IRA's efforts to disrupt the 2022 election:

"Gentlemen, we interfered, we interfere and we will interfere. Carefully, precisely, surgically and in our own way, as we know how. During our pinpoint operations, we will remove both kidneys and the liver at once."

In 2014, the IRA and other Russian networks began establishing fake U.S. activist groups on social media. By 2015, hundreds of English-speaking young Russians worked at the IRA. Their assignment was to use those false social-media accounts, especially on Facebook and Twitter -- but also on Reddit, Tumblr, 9gag, and other platforms -- to aggressively spread conspiracy theories and mocking, ad hominem arguments that incite American users.

In 2017, U.S. intelligence found that Blacktivist, a Facebook and Twitter group with more followers than the official Black Lives Matter movement, was operated by Russia. Blacktivist regularly attacked America as racist and urged Black users to reject major candidates. On November 2, 2016, just before the 2016 election, Blacktivist's Twitter urged Black Americans: "Choose peace and vote for Jill Stein. Trust me, it's not a wasted vote."

Russia plays both sides -- on gender, race, and religion

The brilliance of the Russian influence campaign is that it convinces Americans to attack each other, worsening both misandry and misogyny, mutual racial hatred, and extreme antisemitism and Islamophobia. In short, it's not just an effort to boost the right wing; it's an effort to radicalize everybody.

Russia uses its trolling networks to aggressively attack men. According to MIT, in 2019, the most popular Black-oriented Facebook page was the charmingly named "My Baby Daddy Aint Shit." It regularly posts memes attacking Black men and government welfare workers. It serves two purposes: Make poor black women hate men, and goad black men into flame wars.

MIT found that My Baby Daddy is run by a large troll network in Eastern Europe likely financed by Russia.

But Russian influence networks are also aggressively misogynistic and aggressively anti-LGBT.

On January 23, 2017, just after the first Women's March, the New York Times found that the Internet Research Agency began a coordinated attack on the movement. Per the Times:

More than 4,000 miles away, organizations linked to the Russian government had assigned teams to the Women’s March. At desks in bland offices in St. Petersburg, using models derived from advertising and public relations, copywriters were testing out social media messages critical of the Women’s March movement, adopting the personas of fictional Americans.

They posted as Black women critical of white feminism, conservative women who felt excluded, and men who mocked participants as hairy-legged whiners.

But the Russian PR teams realized that one attack worked better than the rest: They accused its co-founder, Arab American Linda Sarsour, of being an antisemite. Over the next 18 months, at least 152 Russian accounts regularly attacked Sarsour. That may not seem like many accounts, but it worked: They drove the Women's March movement into disarray and eventually crippled the organization.

Russia doesn't need a million accounts, or even that many likes or upvotes. It just needs to get enough attention that actual Western users begin amplifying its content.

A former federal prosecutor who investigated the Russian disinformation effort summarized it like this:

It wasn’t exclusively about Trump and Clinton anymore. It was deeper and more sinister and more diffuse in its focus on exploiting divisions within society on any number of different levels.

As the New York Times reported in 2022,

There was a routine: Arriving for a shift, [Russian disinformation] workers would scan news outlets on the ideological fringes, far left and far right, mining for extreme content that they could publish and amplify on the platforms, feeding extreme views into mainstream conversations.

China is joining in with AI

[A couple months ago], the New York Times reported on a new disinformation campaign. "Spamouflage" is an effort by China to divide Americans by combining AI with real images of the United States to exacerbate political and social tensions in the U.S. The goal appears to be to cause Americans to lose hope, by promoting exaggerated stories with fabricated photos about homeless violence and the risk of civil war.

As Ladislav Bittman, a former Czechoslovakian secret police operative, explained about Soviet disinformation, the strategy is not to invent something totally fake. Rather, it is to act like an evil doctor who expertly diagnoses the patient’s vulnerabilities and exploits them, “prolongs his illness and speeds him to an early grave instead of curing him.”

The influence networks are vastly more effective than platforms admit

Russia now runs its most sophisticated online influence efforts through a network called Fabrika. Fabrika's operators have bragged that social media platforms catch only 1% of their fake accounts across YouTube, Twitter, TikTok, Telegram, and other platforms.

But how effective are these efforts? By 2020, Facebook's most popular pages for Christian and Black American content were run by Eastern European troll farms tied to the Kremlin. And Russia doesn't just target angry Boomers on Facebook. Russian trolls are enormously active on Twitter. And, even, on Reddit.

It's not just false facts

The term "disinformation" undersells the problem. Because much of Russia's social media activity is not trying to spread fake news. Instead, the goal is to divide and conquer by making Western audiences depressed and extreme.

Sometimes, through brigading and trolling. Other times, by posting hyper-negative or extremist posts or opinions about the U.S. and the West over and over, until readers assume that's how most people feel. And sometimes, by using trolls to disrupt threads that advance Western unity.

As the RAND think tank explained, the Russian strategy is volume and repetition, from numerous accounts, to overwhelm real social media users and create the appearance that everyone disagrees with, or even hates, them. And it's not just low-quality bots. Per RAND,

Russian propaganda is produced in incredibly large volumes and is broadcast or otherwise distributed via a large number of channels. ... According to a former paid Russian Internet troll, the trolls are on duty 24 hours a day, in 12-hour shifts, and each has a daily quota of 135 posted comments of at least 200 characters.

What this means for you

You are being targeted by a sophisticated PR campaign meant to make you more resentful, bitter, and depressed. It's not just disinformation; it's also real-life human writers and advanced bot networks working hard to shift the conversation to the most negative and divisive topics and opinions.

It's why some topics seem to go from non-issues to constant controversy and discussion, with no clear reason, across social media platforms. And a lot of those trolls are actual, "professional" writers whose job is to sound real.

So what can you do? To quote WarGames: The only winning move is not to play. The reality is that you cannot distinguish disinformation accounts from real social media users. Unless you know whom you're talking to, there is a genuine chance that the post, tweet, or comment you are reading is an attempt to manipulate you -- politically or emotionally.

Here are some thoughts:

Don't accept facts from social media accounts you don't know. Russian, Chinese, and other manipulation efforts are not uniform. Some will make deranged claims, but others will tell half-truths. Or they'll spin facts about a complicated subject, be it the war in Ukraine or loneliness in young men, to give you a warped view of reality and spread division in the West.

Resist groupthink. A key element of manipulation networks is volume. People are naturally inclined to believe statements that have broad support. When a post gets 5,000 upvotes, it's easy to think the crowd is right. But "the crowd" could be fake accounts, and even if they're not, the brilliance of government manipulation campaigns is that they say things people are already predisposed to think. They'll tell conservative audiences something misleading about a Democrat, or make up a lie about Republicans that catches fire on a liberal server or subreddit.

Don't let social media warp your view of society. This is harder than it seems, but you need to accept that the facts -- and the opinions -- you see across social media are not reliable. If you want the news, do what everyone online says not to: look at serious, mainstream media. It is not always right. Sometimes, it screws up. But social media narratives are heavily manipulated by networks whose job is to ensure you are deceived, angry, and divided.

Edited for typos and clarity. (Tumblr-edited for formatting and to note a sourced article is now older than mentioned in the original post. -LV)

P.S. Apparently, this post was removed several hours ago due to a flood of reports. Thank you to the r/GenZ moderators for re-approving it.

Second edit:

This post is not meant to suggest that r/GenZ is uniquely or especially vulnerable, or to suggest that a lot of challenges people discuss here are not real. It's entirely the opposite: Growing loneliness, political polarization, and increasing social division along gender lines are real. The problem is that disinformation and influence networks expertly, and effectively, hijack those conversations and use those real, serious issues to poison the conversation. This post is not about left or right: Everyone is targeted.

(Further Tumblr notes: since this was posted, there have been several more articles detailing recent discoveries of active disinformation/influence and hacking campaigns by Russia and its allies against several countries and their respective elections, and this post barely touches on the numerous Tumblr blogs discovered to be troll farms/bad-faith actors from pre-2016 through today. This is an ongoing and very real problem, and it's nowhere near over.

A quote from the 2018 NPR article linked above that you might find familiar today: "'[A] particular hype and hatred for Trump is misleading the people and forcing Blacks to vote Killary. We cannot resort to the lesser of two devils. Then we'd surely be better off without voting AT ALL,' a post from the account said.")

#propaganda#psyops#disinformation#US politics#election 2024#us elections#YES we have legitimate criticisms of our politicians and systems#but that makes us EVEN MORE susceptible to radicalization. not immune#no not everyone sharing specific opinions are psyops. but some of them are#and we're more likely to eat it up on all sides if it aligns with our beliefs#the division is the point#sound familiar?#voting#rambles#long post

143 notes


Text

tired and worried reminder that voting for Joe Biden is not saying you approve of genocide, because not voting for Joe Biden makes it easier for Donald Trump to get elected and Donald Trump wants to do more genocide than Joe Biden

some genocide or more genocide is a repulsive and invidious choice to have to make when what you want (like any decent, reasonable person) is no genocide - your feelings of anger and distress and revulsion about that are completely understandable and justified

BUT that's the choice you have to make - not going for the some genocide option makes the more genocide option more likely. The way the system is set up means that a choice not to vote doesn't send a message, it's the absence of a message. It looks and acts the same as the choice of someone who doesn't care about anything at all.

and please consider that some of the posts you see telling you that voting for Biden is the same as approving of genocide, appealing to your natural feelings of anger and distress and revulsion, may very well be from a Russian troll farm whose whole job is to manipulate you

because that has happened on this website before and it helped Donald Trump get elected the first time

151 notes


Text

“We need to strengthen the conflict between Zaluzhny and Zelensky, along the lines of ‘he intends to fire him,’” one Kremlin political strategist wrote a year ago, after a meeting of senior Russian officials and Moscow spin doctors, according to internal Kremlin documents.

Russian President Vladimir Putin’s administration ordered a group of Russian political strategists to use social media and fake news articles to push the theme that Zelensky “is hysterical and weak. … He fears that he will be pushed aside, therefore he is getting rid of the dangerous ones.”

The Kremlin instruction resulted in thousands of social media posts and hundreds of fabricated articles, created by troll farms and circulated in Ukraine and across Europe, that tried to exploit what were then rumored tensions between the two Ukrainian leaders, according to a trove of Kremlin documents obtained by a European intelligence service and reviewed by The Washington Post. The files, numbering more than 100 documents, were shared with The Post to expose for the first time the scale of Kremlin propaganda targeting Zelensky with the aim of dividing and destabilizing Ukrainian society — efforts that Moscow dubbed “information psychological operations.”(..)

The documents show how in January 2023 the Kremlin’s first deputy chief of staff, Sergei Kiriyenko, tasked a team of officials and political strategists with establishing a presence on Ukrainian social media to distribute disinformation.

The effort built on an earlier project that Kiriyenko, a longtime Putin aide, had been running to subvert Western support for Ukraine, including in France and Germany, previous reporting by The Post shows. The European propaganda group was overseen by one of Kiriyenko’s deputies, Tatyana Matveeva, head of the Kremlin’s department for developing information and communication technologies, the documents show.(..)

At a Jan. 16, 2023, meeting, Kiriyenko laid out four key objectives for the Ukraine propaganda team: discrediting Kyiv’s military and political leadership, splitting the Ukrainian elite, demoralizing Ukrainian troops and disorienting the Ukrainian population, the documents show.(..)

By early March, dozens of hired trolls were pumping out more than 1,300 texts and 37,000 comments on Ukrainian social media each week, according to one of the dashboard presentations. Records show that employees at troll farms earned 60,000 rubles a month, or $660, for writing 100 comments a day.(..)

The strategists advised developing “a network of Telegram channels in combination with Twitter and Facebook/Instagram” as the most effective way of penetrating Ukraine’s media space, noting that the Telegram audience in Ukraine had grown 600 percent over the previous year. (..)

By the first week of May, a post the Kremlin strategists had planted on Facebook, saying that “Valery Zaluzhny can become the next president of Ukraine,” had garnered 4.3 million views, one of the dashboard presentations shows. The Kremlin then issued orders to create similar posts or “additional reality” — a term used by Russian officials for fake news — including reports that Western leaders were looking for a replacement for Zelensky and that Zaluzhny intended to halt the counteroffensive.

Meta, the parent company of Facebook, said in a statement referring to the Russian posts about Zaluzhny and the alleged lack of state aid for the fallen soldier that it had been “monitoring and blocking accounts, Pages and websites run by this campaign” since 2022, “including these two Pages that were quickly detected and disabled by our security team.”

Undeterred, the strategists planted a plethora of articles in Ukraine via social media, with one in May headlined “Zelensky is holding on to the throne. In Ukraine democracy is being liquidated,” the documents show. Another in June sought to play up what it claimed was the prolonged disappearance of Zaluzhny from public view, with bloggers instructed to post comments declaring: “This is why Zaluzhny disappeared: Because he could have and should have taken Zelensky’s place.”

The strategists also sought to exploit Kiriyenko’s campaign in Western Europe by recycling its disinformation for use in Ukraine. The tactics in the European campaign included cloning and usurping media and government websites, such as those for Le Monde and the French Foreign Ministry, and then posting fake content on them denigrating the Ukrainian government, in an operation dubbed Doppelgänger by European Union officials. They also included creating fake accounts on X, or Twitter, for prominent figures including German Foreign Minister Annalena Baerbock. The strategists sought to place stories or posts from those websites or accounts on Ukrainian social media as genuine European reporting or commentary.

After the fake Baerbock account declared in September that “the war in Ukraine will be over in 3 months,” the German authorities launched an investigation and found more than 50,000 fake user accounts coordinating pro-Russian propaganda, including those promoting the tweet. Officials believe the fake accounts were an extension of the Doppelgänger campaign, Der Spiegel reported.

The Doppelgänger operation was first exposed by Meta in September 2022 and then by French authorities last summer and tied to Reliable Recent News, a fake news site traced back to two Russian companies, the Social Design Agency and Structura National Technologies. The Kremlin documents show that the heads of Social Design Agency and Structura — Ilya Gambashidze and Nikolai Tupikin — worked directly with Kiriyenko and another Kremlin official, Sofiya Zakharova, who coordinated efforts in Europe and Ukraine. “She is the brain,” a European security official said.

The E.U. imposed sanctions in July on Gambashidze, Structura National Technologies and Social Design Agency for what it said was their role in creating fake webpages and social media accounts “usurping the identity of national media outlets and government websites” as part of “a hybrid campaign by Russia against the EU and member states.” Gambashidze and Tupikin were named by the U.S. State Department in November for their role in Kremlin efforts to spread disinformation in Latin America(..)

Gambashidze, Tupikin and their colleagues proposed narratives they hoped would destroy Zelensky’s image in the West as “the hero of a small country fighting a global evil,” one of the documents sent in April shows. They suggested portraying Zelensky as an actor only capable of following a script written for him by the United States and NATO, and his Western backers as tiring of him. They proposed distributing fake Ukrainian government documents as evidence of corrupt military procurement schemes, and suggesting that Zelensky and his family had Western bank accounts, the document shows.

The plans led to hundreds of articles and thousands of social media posts translated into French, German and English that targeted Zelensky, the document trove shows.

One article, for a French audience, was headlined: “The conductor has gotten bored of Zelensky’s concerts: the actions of the U.S. in Ukraine lead one to believe that Washington soon intends to get rid of Zelensky, without discussing this with Paris.”

On the basis of this article, one of the strategists ordered a troll farm employee to prepare social media posts in French saying, “Washington will replace Zelensky with a more capable president. And France will have to silently continue arming and financing Ukraine.”

Another article described how Zelensky had pushed for Ukrainian forces to defend Bakhmut against Zaluzhny’s wishes, leading, it said, to the deaths of 250,000 Ukrainian troops, a wildly exaggerated death toll in what was nonetheless a brutal battle for the city. The troll farm employees were asked to write comments such as “Why do Ukrainian generals hate Zelensky? PR out of the blood of fighters” and “To shoot the exhausted president? In Ukraine, a generals’ conspiracy is brewing.”

One of the strategists’ aims, European security officials said, was to ensure that the themes placed in European social media filtered back into Ukraine, through reposts and amplification, or by being picked up by Ukrainian politicians keen to boost their profiles with provocative posts.(..)

The strategists also had price lists for planting pro-Russian commentary in prominent Western media and for paying social media “influencers” in the United States and Europe “willing to work with Russian clients.” The documents say the Russians were willing to pay up to $39,000 for the planting of pro-Russian commentary in major media outlets in the West.

“Practically everywhere this will be columnists, leaders of public opinion, former diplomats, officials, professors and so on,” a note attached to the price list states.”

Catherine Belton, “Kremlin runs disinformation campaign to undermine Zelensky, documents show”

#Catherine Belton#Volodymyr Zelenskyy#Ukraine#Russia#Vladimir Putin#disinformation#fake news#social media#russian propaganda#media literacy#really good article

45 notes


Note

this is wild to me and I don’t get the hate why is an Indian child actress getting so much hate on a fancast https://x.com/ashleyksmalls/status/1777735121459708076?s=46

I don’t know this controversy or where any of this is coming from, but I honestly don’t think we can underestimate the impact of Russian troll farms sowing discord and hate on Twitter. That’s not to excuse the very real people who are the racist marks here.

Separately, I am really not looking forward to the election.

16 notes


Text

Ralf Beste of Germany’s Foreign Office said the Russians were ‘looking for cracks of doubt or feelings of unease and trying to enlarge them’.

It is absolutely a threat we have to take seriously.

Russian disinformation campaigns to undermine support for Ukraine in Europe have grown significantly in scale, skill and stealth, one of Germany’s most senior diplomats has warned.

“It is absolutely a threat we have to take seriously,” Ralf Beste, head of the department for culture and communication at Germany’s Federal Foreign Office, told the Financial Times. “Overall, [there] is an increase in sophistication and impact to what we have seen before.”

Russia is combining greater subtlety and plausibility in its messaging with automation to make its disruptive attacks more effective and harder to combat, he said.

“There is probably a lot going on we can’t even see. More and more conversations are happening in private . . . channels on Telegram and WhatsApp. It is very difficult to understand what is happening there.”

Beste’s department has a dedicated cell that leads the German government’s efforts to track and stop Russia’s information operations overseas.

Germany has emerged as one of the Kremlin’s main targets for disinformation over the war in Ukraine. Under the government of Chancellor Olaf Scholz, Berlin has dramatically revised its security and defence policy and become the second-largest donor of military aid to Kyiv after Washington.

Disagreements over the shift run deep — particularly among supporters of Scholz’s own Social Democratic Party — and many Germans are concerned over economic growth and the impact of the country weaning itself from Russian gas supplies.

Beste said: “[The Russians] are looking for cracks of doubt or feelings of unease and trying to enlarge them.”

His department this year uncovered one of the biggest attempts to manipulate German public opinion yet, on the social media platform X.

A network of more than 50,000 fake accounts posting as many as 200,000 posts a day sought to convince Germans that the government’s help for Ukraine was undermining German prosperity and risking nuclear war.

The network sought to “launder” such claims by making them look as if they had been published as opinions in reputable news outlets such as Der Spiegel and Süddeutsche Zeitung. But it also simply sought to amplify existing anti-Ukrainian views and make them appear to be more widespread.

Last week the Czech government, acting with other European states, accused the Ukrainian oligarch Viktor Medvedchuk of secretly cultivating a network of influence among European politicians to spread pro-Russian narratives and undermine support for Kyiv.

Countering such efforts is hard, Beste said, and indicates the extent to which Russia has moved on from the days of running infamous “troll farms”, which employed real people to spread dissent, often in a clumsy and obvious manner.

Beste said: “[Now] it’s not just a question of information that is verifiably true or false. It’s more than that. It’s about skewing opinions. Trying to tilt the balance of debate. Or to convince people that the frame of the debate is different to what it is in reality.”

The techniques being used were more like “nudging”, he said, referring to the concept in behavioural science of using small social and informational cues to subtly shift opinion or action.

“If you say, for example, ‘there is increasing doubt that XYZ . . . ’ then you will make people more receptive to doubts about that topic,” Beste said. “They are taking elements of reality in these campaigns and then warping them to create a different impression.”

Trying to rebut such campaigns is hard because the basic elements are often unfalsifiable, and engaging can often counterproductively lend claims credibility.

Artificial intelligence tools are also a serious concern because of their ability to mimic human behaviour.

“AI is clearly something we have to watch very carefully,” Beste said. “What I worry about is how it will be used to create the impression of interaction . . . You enter a de facto second world, not just fake pieces of information or fake films or pictures but an entire alternative information ecosystem.”

#ukraine#germany#i've seen at least two people mentioning the increasing amount of anti ukrainian discourse on tumblr

19 notes


Note

Leftists are genuinely so transparent with their misogyny, it baffles me. Someone made a point about how Bernie Sanders and Nancy Pelosi are very close in age but only one of them constantly gets hounded about their competency and ability to be a politician, and these people are like "hm but Bernie Sanders thinks everyone deserves healthcare and Nancy Pelosi doesn't" what??? Where does that information even exist beyond the smear campaigns the leftists have made up in their heads to insist the Dems (Pelosi, in particular) suck and justify hating them? It's like... even when the Dems do something good, and take the right position on an issue, these people are just waiting to find some way to rearrange it to fit their narrative that they actually hate them and it's only people like Bernie Sanders who genuinely give a shit.

not to mention thinking everyone deserves healthcare and actually working to make that happen are two different things. Bernie can have all the "correct" ideological positions in the world, but it doesn't seem like he's done much to advance most of them lol

Well, yeah. That's why they are useless dickweeds at best and unrepentant enablers of fascism at the worst. They gleefully latch onto any lie, mischaracterization, exaggeration, or other bit of propaganda that makes the Democrats look bad and themselves feel Exaltedly Special and justified in not sullying their hands with a flawed democratic process. They don't give a shit if the actual world goes to hell, as long as they still have the Bestest and Most Special Ideas. Which serves the aims of both the actual right wing machine that feeds it to them and the organized Russian troll farms that feed it to THEM. And yet.

Give me a hundred Nancy Pelosis, arguably the most effective Democratic politician in living memory, over Bernie Sanders, he of the blindingly obvious once-a-month Guardian op ed and literally nothing else in the way of substantial policy, while tacitly or openly encouraging his supporters to withhold their vote as "punishment" for any candidate not him. I will say in Bernie's favor that he has changed his tune ahead of 2024, but it is the least of what he owes everyone for contributing to the clusterfuck of 2016 as substantially as he did. So.

And lol yes, the Online Leftists, known for their radical compassion and empathy to all people! They're too busy policing fellow leftists for Thoughtcrime or any other impulse to actually take a tangible action (which might therefore be Morally Problematic) to ever actually once apply compassion to a real person, let alone those who don't agree with them in some part already. In fact, they are perfectly happy to let the people they are supposed to be caring about suffer the increasing effects of nationalized, racialized, homophobic, misogynist, xenophobic white supremacist fascism, as the proper punishment for not being Leftist enough and voting for the Democrats, who they (as noted) eagerly sabotage and undercut far more than the Republicans. So yeah.

44 notes


Note

What do you think the ramifications will be for the GOP for blocking aid to Ukraine down the line? They certainly have a hard right vocal minority who support that, but most Americans generally sympathize with countries being invaded so will this impact the elections in any meaningful way? And is it the association between Biden and Ukraine that gets that base's opposition or their sympathies with the right wingish, culturally conservative Russian regime?

I don't think it will have much impact on the election outside of individuals who care significantly about foreign policy and support the U.S.'s current alliances, who are unlikely to vote for Trump given his foreign policy stances. Support for Ukraine is declining in the United States, first because the longer something drags on, the less support it gets, and second because disinformation is being continually repeated about how the Ukraine aid is going to oligarch mansions and weapons are ending up being sold on the black market. These claims have been exposed, either as unfriendly psy-ops or just bone ignorance, but repeat something enough times and people start to believe it. People don't have the time to research everything, so they'll just accept what they hear as the truth, because surely, if people keep saying it, it can't be wrong, right? The biggest things that I think will determine voter outcome in 2024 are culture war stuff (which will drive turnout on both sides, favoring Democrats because their policy positions are more popular overall) and the economy (which will favor Republicans due to the inflation problem).

I mentioned before what I think are some of the reasons for the Freedom Caucus/MAGA-type opposition to supporting Ukraine. I don't believe that any of them actually believe the aid is being siphoned off by corrupt oligarchs (because Ukrainian aid is actually highly audited and controlled, with the details included in the Congressional documentation), or that they are motivated by concerns about federal spending (both because the money portion of aid is actually spent in the United States replenishing stocks while older equipment is sent to Ukraine and because they tend to be quite profligate spenders themselves) or by a commitment towards peace and de-escalation (because they are supportive of military intervention against the cartels in Mexico). I think most of it is political opportunism - both Biden and conventional Republicans have identified with the Ukrainian cause and so they place themselves in opposition. They hope for a stalemate or Ukrainian defeat so that they can point to their opponents and say: "Look at all we wasted on this boondoggle!" For the really hardline crowd, I think they support Putin's policy positions on the culture war, have a fondness for Putin because Trump himself is quite fond of him, and believe that Putin helped get Trump elected and so identify with Putin's causes in the hopes that they can benefit from Russian troll farms. Also can't forget contrarian tribal posturing - they take the opposition path because they're so much smarter, maverick thinkers that can see past the common perception of the "sheep." This is the path taken by fools like Elon Musk.

Thanks for the question, Anon.

SomethingLikeALawyer, Hand of the King

25 notes
