#oc: newlin

Text

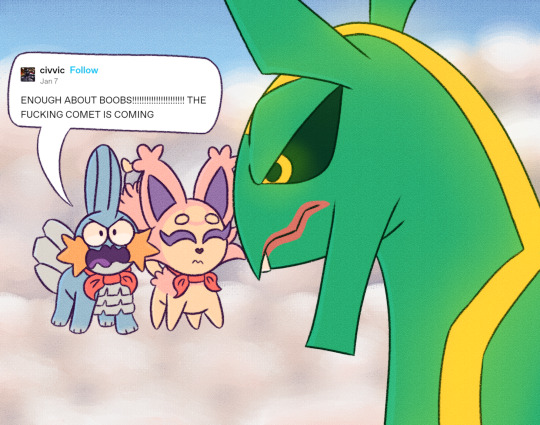

redrew my pmd1 rayquaza boobs post because i wanted to post it to toyhouse with my new hero and partner designs

#pokemon#pkmnart#pkmn#pmd#pokemon mystery dungeon#rescue team#skitty#mudkip#rayquaza#oc: mayar#oc: newlin#also the angle of rayquaza in the original bugged me a lot

577 notes

·

View notes

Text

i love newlin so much. he has severe issues with anger and has lashed out violently, to the point of being exiled from his birthplace. he's also my most adorable oc in terms of design imo.

#bwark#also preview at his newest art#nothing has really changed aside from adding grooves to his top fin and making the proportions better#when i'm done with all of my heroes and partners and their evo designs i'll be posting them then#also i have the rt characters i wanted to make unique designs for done. i'll post them afterwards#newlin is so cute (he's nearly killed someone)#granted killing someone isn't that big of a deal in this series. that's basically just tuesday morning

9 notes

·

View notes

Text

Introducing: London Greer

Fandom: True Blood

Face Claim: Ashley Greene

Full Name: London Shelby Greer

Myers Briggs Type: INFP

Hogwarts House: Hufflepuff

Love Interest: Sarah Newlin

Occupation: Nurse

Collections: Scarves

Style/Clothing: London has a softly feminine style, favoring gentle colors that are easy on the eye. She almost always dresses like it’s fall, as she loves being cozy.

Signature Quote: "Just because I became a vampire doesn’t mean I lost my empathy. I’ve always loved caring for people. That hasn’t changed."

Plot Summary: London Greer always wanted to be a nurse, and now she is one. Forever. She keeps her status as a vampire under wraps in order to continue caring for her patients, knowing that not everyone would accept a vampire as their nurse. Her whole goal in life is to spread love and healing, but sometimes people just can’t get past seeing her as a monster. Her most difficult challenge to date is getting Sarah Newlin to see the light in the darkness.

Forever Tag: @arrthurpendragon, @baubeautyandthegeek, @foxesandmagic, @carmens-garden, @bossyladies, @getawaycardotmp3, @misshiraethsworld, @kmc1989, @curious-kittens-ocs, @fanficanatic-tw

London Greer: @spice-honey

7 notes

·

View notes

Text

frank's image generation model, explained

[See also: github repo, Colab demo]

[EDIT 9/6/22: I wrote this post in January 2022. I've made a number of improvements to this model since then. See the links above for details on what the latest version looks like.]

Last week, I released a new feature for @nostalgebraist-autoresponder that generates images. Earlier I promised a post explaining how the model works, so here it is.

I'll try to make this post as accessible as I can, but it will be relatively technical.

Why so technical? The interesting thing (to me) about the new model is not that it makes cool pictures -- lots of existing models/techniques can do that -- it's that it makes a new kind of picture which no other model can make, as far as I know. As I put it earlier:

As far as I know, the image generator I made for Frank is the first neural image generator anyone has made that can write arbitrary text into the image!! Let me know if you’ve seen another one somewhere.

The model is solving a hard machine learning problem, which I didn't really believe could be solved until I saw it work. I had to "pull out all the stops" to do this one, building on a lot of prior work. Explaining all that context for readers with no ML background would take a very long post.

tl;dr for those who speak technobabble: the new image generator is OpenAI-style denoising diffusion, with a 128x128 base model and a 128->256 superresolution model, both with the same set of extra features added. The extra features are: a transformer text encoder with character-level tokenization and T5 relative position embeddings; a layer of image-to-text and then text-to-image cross-attention between each resnet layer in the lower-resolution parts of the U-Net's upsampling stack, using absolute axial position embeddings in image space; a positional "line embedding" in the text encoder that does a cumsum of newlines; and information about the diffusion timestep injected in two places, as another embedding fed to the text encoder, and injected with AdaGN into the queries of the text-to-image cross-attention. I used the weights of the trained base model to initialize the parts of the superresolution model's U-Net that deal with resolutions below 256.

This post is extremely long, so the rest is under a readmore

The task

The core of my bot is a text generator. It can only see text.

People post a lot of images on tumblr, though, and the bot would miss out on a lot of key context if these images were totally invisible to it.

So, long ago, I let my bot "see" pictures by sending them to AWS Rekognition's DetectText endpoint. This service uses a scene text recognition (STR) model to read text in the image, if it exists. ("STR" is the term for OCR when when the pictures aren't necessarily printed text on paper.)

If Rekognition saw any text in the image, I let the bot see the text, between special delimiters so it knows it's an image.

For example, when Frank read the OP of this post, this is what generator model saw:

#1 fipindustries posted:

i was perusing my old deviant art page and i came across a thing of beauty.

the ultimate "i was a nerdy teen in the mid 2000′s starter pack". there was a challenge in old deviant art where you had to show all the different characters that had inspired an OC of yours. and so i came up with this list

=======

"Inspirations Meme" by Phantos

peter

=======

(This is actually less information than I get back from AWS. It also gives me bounding boxes, telling me where each line of text is in the image. I figured GPT wouldn't be able to do much with this info, so I exclude it.)

Images are presented this way, also, in the tumblr dataset I use to finetune the generator.

As a result, the generator knows that people post images, and it knows a thing or two about what types of images people post in what contexts -- but only through the prism of what their STR transcripts would look like.

This has the inevitable -- but weird and delightful -- result that the generator starts to invent its own "images," putting them in its posts. These invented images are transcripts without originals (!). Invented tweets, represented the way STR would view a screenshot of them, if they existed; enigmatically funny strings of words that feel like transcripts of nonexistent memes; etc.

So, for a long time, I've had a vision of "completing the circuit": generating images from the transcripts, images which contain the text specified in the transcripts. The novel pictures the generator is imagining itself seeing, through the limited prism of STR.

It turns out this is very difficult.

Image generators: surveying the field

We want to make a text-conditioned image generation model, which writes the text into the generated image.

There are plenty of text-conditioned image generators out there: DALL-E, VQGAN+CLIP, (now) GLIDE, etc. But they don't write the text, they just make an image the text describes. (Or, they may write text on occasion, but only in a very limited way.)

When you design a text-conditioned image generation method, you make two nearly independent choices:

How do you generate images at all?

How do you make the images depend on the text?

That is, all these methods (including mine) start with some well-proven approach for generating images without the involvement of text, and then add in the text aspect somehow.

Let's focus on the first part first.

There are roughly 4 distinct flavors of image generator out there. They differ largely in how they provide signal about which image are plausible to the model during training. A survey:

1. VAEs (variational autoencoders).

These have an "encoder" part that converts raw pixels to a compressed representation -- e.g. 512 floating-point numbers -- and a "decoder" part that converts the compressed representation back into pixels.

The compressed representation is usually referred to as "the latent," a term I'll use below.

During training, you tell the model to make its input match its output; this forces it to learn a good compression scheme. To generate a novel image, you ignore the encoder part, pick a random value for the latent, and turn it into pixels with the decoder.

That's the "autoencoder" part. The "variational" part is an extra term in the loss that tries to make the latents fill up their N-dimensional space in a smooth, uniform way, rather than squashing all the training images into small scrunched-up pockets of space here and there. This increases the probability that a randomly chosen latent will decode to a natural-looking image, rather than garbage.

VAEs on their own are not as good at the other methods, but provide a foundation for VQ-autoregressive methods, which are now popular. (Though see this paper)

2. GANs (generative adversarial networks).

Structurally, these are like VAEs without the encoder part. They just have a latent, and a have a decoder that turns the latent into pixels.

How do you teach the decoder what images ought to look like? In a GAN, you train a whole separate model called the "discriminator," which looks at pixels and tries to decide whether they're a real picture or a generated one.

During training, the "G" (generator) and the "D" (discriminator) play a game of cat-and-mouse, where the G tries to fool the D into thinking its pictures are real, and the D tries not to get fooled.

To generate a novel image, you do the same thing as with a VAE: pick a random latent and feed it through the G (here, ignoring the D).

GANs are generally high-performing, but famously finicky/difficult to train.

3. VQVAEs (vector quantized VAEs) + autoregressive models.

These have two parts (you may be noticing a theme).

First, you have a "VQVAE," which is like a VAE, with two changes to the nature of the latent: it's localized, and it's discrete.

Localized: instead of one big floating-point vector, you break the image up into little patches (typically 8x8), and the latent takes on a separate value for each patch.

Discrete: the latent for each patch is not a vector of floating-point numbers. It's an element of a finite set: a "letter" or "word" from a discrete vocabulary.

Why do this? Because, once you have an ordered sequence of discrete elements, you can "do GPT to it!" It's just like text!

Start with (say) the upper-leftmost patch, and generate (say) the one to its immediate right, and then the one to its immediate right, etc.

Train the model to do this in exactly the same way you train GPT on text, except it's seeing representations that your VQVAE came up with.

These models are quite powerful and popular, see (the confusingly named) "VQ-VAE" and "VQ-VAE-2."

They get even more powerful in the form of "VQGAN," an unholy hybrid where the VQ encoder part is trained like a GAN rather than like a VAE, plus various other forbidding bells and whistles.

Somehow this actually works, and in fact works extremely well -- at the current cutting edge.

(Note: you can also just "do GPT" to raw pixels, quantized in a simple way with a palette. This hilarious, "so dumb it can't possibly work" approach is called "Image GPT," and actually does work OK, but can't scale above small resolutions.)

4. Denoising diffusion models.

If you're living in 2021, and you want to be one of the really hip kids on the block -- one of the kids who thinks VQGAN is like, sooooo last year -- then these are the models for you. (They were first introduced in 2020, but came into their own with two OpenAI papers in 2021.)

Diffusion models are totally different from the above. They don't have two separate parts, and they use a radically different latent space that is not really a "compressed representation."

How do they work? First, let's talk about (forward) diffusion. This just means taking a real picture, and steadily adding more random pixel noise to it, until it eventually becomes purely random static.

Here's what this looks like (in its "linear" and "cosine" variants), from OA's "Improved denoising diffusion probabilistic models":

OK, that's . . . a weird thing to do. I mean, if turning dogs into static entertains you, more power to you, your hobby is #valid. But why are we doing it in machine learning?

Because we can train a model to reverse the process! Starting with static, it gradually removes the noise step by step, revealing a dog (or anything).

There are a few different ways you can parameterize this, but in all of them, the model learns to translate frame n+1 into a probability distribution (or just a point prediction) for frame n. Applying this recursively, you recover the first frame from the last.

This is another bizarre idea that sounds like it can't possibly work. All it has at the start is random noise -- this is its equivalent of the "latent," here.

(Although -- since the sampling process is stochastic, unless you use a specific deterministic variant called DDIM -- arguably the random draws at every sampling step are an additional latent. A different random seed will give you a different image, even from the same starting noise.)

Through the butterfly effect, one arrangement of random static gradually "decodes to" a dog, and another one gradually "decodes to" a bicycle, or whatever. It's not that the one patch of RGB static is "more doglike" than the other; it just so happens to send the model on a particular self-reinforcing trajectory of imagined structure that spirals inexorably towards dog.

But it does work, and quite well. How well? Well enough that the 2nd 2021 OA paper on diffusion was titled simply, "Diffusion Models Beat GANs on Image Synthesis."

Conditioning on text

To make an image generator that bases the image on text, you pick one of the approaches above, and then find some way to feed text into it.

There are essentially 2 ways to do this:

The hard way: the image model can actually see the text

This is sort of the obvious way to do it.

You make a "text encoder" similar to GPT or BERT or w/e, that turns text into an encoded representation. You add a piece to the image generator that can look at the encoded representation of the text, and train the whole system end-to-end on text/image pairs.

If you do this by using a VQVAE, and simply feed in the text as extra tokens "before" all the image tokens -- using the same transformer for both the "text tokens" and the VQ "image tokens" -- you get DALL-E.

If you do this by adding a text encoder to a diffusion model, you get . . . my new model!! (Well, that's the key part of it, but there's more)

My new model, or GLIDE. Coincidentally, OpenAI was working on the same idea around the same time as me, and released a slightly different version of it called GLIDE.

(EDIT 9/6/22:

There are a bunch of new models in this category that came out after this post was written. A quick run-through:

OpenAI's DALL-E 2 is very similar to GLIDE (and thus, confusingly, very different from the original DALL-E). See my post here for more detail.

Google's Imagen is also very similar to GLIDE. See my post here.

Stability's Stable Diffusion is similar to GLIDE and Imagen, except it uses latent diffusion. Latent diffusion means you do the diffusion in the latent space of an autoencoder, rather than on raw image pixels.

Google's Parti is very similar to the original DALL-E.

)

-----

This text-encoder approach is fundamentally more powerful than the other one I'll describe next. But also much harder to get working, and it's hard in a different way for each image generator you try it with.

Whereas the other approach lets you take any image generator, and give it instant wizard powers. Albeit with limits.

Instant wizard powers: CLIP guidance

CLIP is an OpenAI text-image association model trained with contrastive learning, which is a mindblowingly cool technique that I won't derail this post by explaining. Read the blog post, it's very good.

The relevant tl;dr is that CLIP looks at texts and images together, and matches up images with texts that would be reasonable captions for them on the internet. It is very good at this. But, this is the only thing it does. It can't generate anything; it can only look at pictures and text and decide whether they match.

So here's what you do with CLIP (usually).

You take an existing image generator, from the previous section. You take a piece of text (your "prompt"). You pick a random compressed/latent representation, and use the generator to make an image from it. Then ask CLIP, "does this match the prompt?"

At this point, you just have some randomly chosen image. So, CLIP, of course, says "hell no, this doesn't match the prompt at all."

But CLIP also tells you, implicitly, how to change the latent representation so the answer is a bit closer to "yes."

How? You take CLIP's judgment, which is a complicated nested function of the latent representation: schematically,

judgment = clip(text, image_generator(latent))

All the functions are known in closed form, though, so you can just . . . analytically take the derivative with respect to "latent," chain rule-ing all the way through "clip" and then through "image_generator."

That's a lot of calculus, but thankfully we have powerful chain rule calculating machines called "pytorch" and "GPUs" that just do it for you.

You move latent a small step in the direction of this derivative, then recompute the derivative again, take another small step, etc., and eventually CLIP says "hell yes" because the picture looks like the prompt.

This doesn't quite work as stated, though, roughly because the raw CLIP gradients can't break various symmetries like translation/reflection that you need to break to get a natural image with coherent pieces of different-stuff-in-different-places.

(This is especially a problem with VQ models, where you assign a random latent to each image patch independently, which will produce a very unstructured and homogeneous image.)

To fix this, you add "augmentations" like randomly cropping/translating the image before feeding it to CLIP. You then use the averaged CLIP derivatives over a sample of (say) 32 randomly distorted images to take each step.

A crucial and highly effective augmentation -- for making different-stuff-in-different-places -- is called "cutouts," and involves blacking out everything in the image but a random rectangle. Cutouts is greatly helpful but also causes some glitches, and is (I believe) the cause of the phenomenon where "AI-generated" images often put a bunch of distinct unrelated versions of a scene onto the same canvas.

This CLIP-derivative-plus-augmentations thing is called CLIP guidance. You can use it with whichever image generator you please.

The great thing is you don't need to train your own model to do the text-to-image aspect -- CLIP is already a greater text-to-image genius than anything you could train, and its weights are free to download. (Except for the forbidden CLIPs, the best and biggest CLIPs, which are OA's alone. But you don't need them.)

(EDIT 9/6/22: since this post was written, the "forbidden CLIPs" have been made available for public use, and have been seeing use for a while in projects like my bot and Stable Diffusion.)

For the image generator, a natural choice is the very powerful VQGAN -- which gets you VQGAN+CLIP, the source of most of the "AI-generated images" you've seen papered all over the internet in 2021.

You know, the NeuralBreeders, or the ArtBlenders, or whatever you're calling the latest meme one. They're all just VQGAN+CLIP.

Except, sometimes they're a different thing, pioneered by RiversHaveWings: CLIP-guided diffusion. Which is just like VQGAN+CLIP, except instead of VQGAN, the image generator is a diffusion model.

(You can also do something different called CLIP-conditioned diffusion, which is cool but orthogonal to this post)

Writing text . . . ?

OK but how do you get it to write words into the image, though.

None of the above was really designed with this in mind, and most of it just feels awkward for this application.

For instance...

Things that don't work: CLIP guidance

CLIP guidance is wonderful if you don't want to write the text. But for writing text, it has many downsides:

CLIP can sort of do some basic OCR, which is neat, but it's not nearly good enough to recognize arbitrary text. So, you'd have to finetune CLIP on your own text/image data.

CLIP views images at a small resolution, usually 224x224. This is fine for its purposes, but may render some text illegible.

Writing text properly means creating a coherent structure of parts in the image, where their relation in space matters. But the augmentations, especially cutouts, try to prevent CLIP from seeing the image globally. The pictures CLIP actually sees will generally be crops/cutouts that don't contain the full text you're trying to write, so it's not clear you even want CLIP to say "yes." (You can remove these augmentations, but then CLIP guidance loses its magic and starts to suck.)

I did in fact try this whole approach, with my own trained VQVAE, and my own finetuned CLIP.

This didn't really work, in exactly the ways you'd expect, although the results were often very amusing. Here's my favorite one -- you might even be able to guess what the prompt was:

OK, forget CLIP guidance then. Let's do it the hard way and use a text encoder.

I tried this too, several times.

Things that don't work: DALL-E

I tried training my own DALL-E on top of the same VQVAE used above. This was actually the first approach I tried, and where I first made the VQVAE.

(Note: that VQVAE itself can auto-encode pictures from tumblr splendidly, so it's not the problem here.)

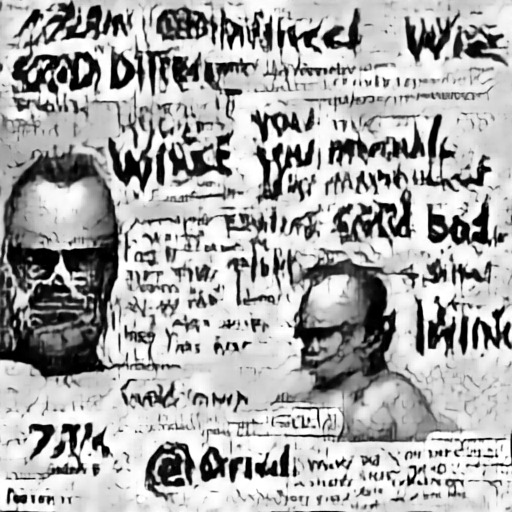

This failed more drastically. The best I could ever get was these sort of "hieroglyphics":

This makes sense, given that the DALL-E approach has steep downsides of its own for this task. Consider:

The VQVAE imposes an artificial "grain" onto the image, breaking it up into little patches of (typically) 8x8 pixels. When text is written in an image, the letters could be aligned anywhere with respect to this "grain."

The same letters will look very different if they're sitting in the middle of a VQ patch, vs. if they're sitting right on the edge between two, or mostly in one patch and partly in another. The generator has to learn the mapping from every letter (or group of letters) to each of these representations. And then it has to do that again for every font size! And again for every font!

Learning to "do GPT" on VQ patches is generally just harder than learning to do stuff on raw pixels, since the relation to the image is more abstract. I don't think I had nearly enough data/compute for a VQ-autoregressive model to work.

Things that don't work: GANs with text encoders

OK, forget DALL-E . . . uh . . . what if we did a GAN, I guess?? where both the G and the D can see the encoded text?

This was the last thing I tried before diffusion. (StyleGAN2 + DiffAug, with text encoder.) It failed, in boring ways, though I tried hard.

GANs are hard to train and I could never get the thing to "use the text" properly.

One issue was: there is a lot of much simpler stuff for the G and D to obsess over, and make the topic of their game, before they have to think about anything as abstract as text. So you have to get pretty far in GAN training for the point where the text would matter, and only at that point does the text encoder start being relevant.

But I think a deeper issue was that VAE/GAN-style latent states don't really make sense for text. I gave the G both the usual latent vector and a text encoding, but this effectively implies that every possible text should be compatible with every possible image.

For that to make sense, the latent should have a contextual meaning conditional on the text, expressing a parameterization of the space of "images consistent with this text." But that intuitively seems like a relatively hard thing for an NN to learn.

Diffusion

Then I was on the EleutherAI discord, and RiversHaveWings happened to say this:

And I though, "oh, maybe it's time for me to learn this new diffusion stuff. It won't work, but it will be educational."

So I added a text encoder to a diffusion model, using cross-attention. Indeed, it didn't work.

Things that don't work: 256x256 diffusion

For a long time, I did all my diffusion experiments at 256x256 resolution. This seemed natural: it was the biggest size that didn't strain the GPU too much, and it was the smallest size I'd feel OK using in the bot. Plus I was worried about text being illegible at small resolutions.

For some reason, I could never get 256x256 text writing to work. The models would learn to imitate fonts, but they'd always write random gibberish in them.

I tried a bunch of things during this period that didn't fix the problem, but which I still suspect were very helpful later:

Timestep embeddings: at some point, RiversHaveWings pointed out that my text encoder didn't know the value of the diffusion timestep. This was bad b/c presumably you need different stuff from the text at different noise levels. I added that. Also added some other pieces like a "line embedding," and timestep info injected into the cross-attn queries.

Line embeddings: I was worried my encoder might have trouble learning to determine which tokens were on which line of text. So I added an extra positional embedding that expresses how many newlines have happened so far.

Synthetic data: I made a new, larger synthetic, grayscale dataset of text in random fonts/sizes on flat backgrounds of random lightness. This presented the problem in a much crisper, easier to learn form. (This might have helped if I'd had it for the other approaches, although I went back and tried DALL-E on it and still got hieroglyphics, so IDK.)

Baby's first words: 64x64 diffusion

A common approach with diffusion models is to make 2 of them, one at low resolution, and one that upsamples low-res images to a higher resolution.

At wit's end, I decided to try this, with train a 64x64 low-res model. I trained it with my usual setup, and . . .

. . . it can write!!!

It can write, in a sense . . . but with misspellings. Lots of misspellings. Epic misspellings.

One of my test prompts, which I ran on all my experimental models for ease of comparison, was the following (don't ask):

the

what string

commit

String evolved

LEGGED

Here are two samples from the model, both with this prompt. (I've scaled them up in GIMP just so they're easier to see, which is why they're blurry.)

Interestingly, the misspellings vary with the conditioning noise (and the random draws during sampling since I'm not using DDIM). The model has "overly noisy/uncertain knowledge" as opposed to just being ignorant.

Spelling improves: relative positional embeddings

At this point, I was providing two kinds of position info to the model:

- Which line of text is this? (line embedding)

- Which character in the string is this, counting from the first one onward? (Absolute pos embedding)

I noticed that the model often got spelling right near the beginning of lines, but degraded later in them. I hypothesized that it was having trouble reconstructing relative position within a line from the absolute positions I was giving it.

cfoster0 on discord suggested I try relative positional embeddings, which together with the line embedding should convey the right info in an easy-to-use form.

I tried this, using the T5 version of relative positional embeddings.

This dramatically improved spelling. Given that test prompt, this model spelled it exactly right in 2 of 4 samples I generated:

I showed off the power of this new model by thanking discord user cfoster0 for their suggestion:

(At some point slightly before this, I also switched from a custom BPE tokenizer to character-level tokenization, which might have helped.)

Doing it for real: modeling text in natural images

OK, we can write text . . . at least, in the easiest possible setting: tiny 64x64 images that contain only text on a flat background, nothing else.

The goal, though, is to make "natural" images that just so happen to contain text.

Put in a transcript of a tweet, get a screenshot of a tweet. Put in a brief line of text, get a movie still with the text as the subtitle, or a picture of a book whose the text is the title, or something. This is much harder:

I have fewer real tumblr images (around 169k) than synthetic text images (around 407k, and could make more if needed)

The real data is much more diverse and complex

The real data introduces new ways the image can depend on the text

Much of the real data is illegible at 64x64 resolution

Let's tackle the resolution issue first. On the synthetic data, we know 64x64 works, and 256x256 doesn't work (even with relative embeds.)

What about 128x128, though? For some reason, that works just as well as 64x64! It's still small, and ideally I'd want to make images bigger than that, but it makes legibility less of a concern.

OK, so I can generate text that looks like the synthetic dataset, at 128x128 resolution. If I just . . . finetune that model on my real dataset, what happens?

It works!

The model doesn't make recognizable objects/faces/etc most of the time, which is not surprising given the small size and diverse nature of the data set.

But it does learn the right relationships between text and image, without losing its ability to write text itself. It does misspell things sometimes, about as often as it did on the synthetic data, but that seems acceptable.

Here's a generated tweet from this era:

The prompt for this was a real STR transcript of this tweet. (Sorry about the specific choice of image here, it's just a tweet that ended up in my test split and was thus a useful testing prompt)

At this point, I was still doing everything in monochrome (with monochrome noise), afraid that adding color might screw things up. Does it, though?

Nope! So I re-do everything in color, although the synthetic font data is still monochrome (but now diffused with RGB noise). Works just as well.

(Sometime around this point, I added extra layers of image-to-text cross-attn before each text-to-image one, with an FF layer in the middle. This was inspired by another cfoster0 suggestion, and I thought it might help the model use image context to guide how it uses the text.

This is called "weave attn" in my code. I don't know if it's actually helpful, but I use it from here on.)

One last hurdle: embiggening

128x128 is still kinda small, though.

Recall that, when I originally did 64x64, the plan was to make a second "superresolution" model later to convert small images into bigger ones.

(You do this, in diffusion, by simply giving the model the [noiseless] low-res image as an extra input, alongside the high-res but noised image that is an input to any diffusion model. In my case, I also fed it the text, using the same architecture as elsewhere.)

How was that going? Not actually that well, even though it felt like the easy part.

My 128 -> 256 superresolution models would get what looked like great metrics, but when I looked at the upsampled images, they looked ugly and fuzzy, a lot like low-quality JPEGs.

I had warm-started the text encoder part of the super-res model with the encoder weights from the base model, so it should know a lot about text. But it wasn't very good at scaling up small, closely printed text, which is the most important part of its job.

I had to come up with one additional trick, to make this last part work.

My diffusion models use the standard architecture for such models, the "U-net," so called for its U shape.

It takes the image, processes it a bit, and then downsamples it to half the resolution. It processed it there, then downsamples it again, etc -- all the way down to 8x8. Then it goes back up again, to 16x16, etc. When it reaches the original resolution, it spits out its prediction for the noise.

Therefore, most of the structure of my 256-res model looks identical to the structure of my 128-res model. Only it's "sandwiched" between a first part that downsamples from 256, and a final part that upsamples to 256.

The trained 128 model knows a lot about how these images tend to look, and about writing text. What if I warm-start the entire middle of the U-Net with weights of the 128 model?

That is, at initialization, my 256 super-res model would just be my 128 model sandwiched inside two extra parts, with random weights for the "bread" only.

I can imagine lots of reasons this might not work, but it was easy to try, and in fact it did work!

Super-res models initialized in this way rapidly learned to do high-quality upsampling, both of text and non-text image elements.

At this point, I had the model (or rather, the two models) I would deploy in the bot.

Using it in practice: rejection sampling

To use this model in practice, the simplest workflow would be:

Generate a single 128x128 image from the prompt

Using the prompt and the 128x128 image, upsample to 256x256

We're done

However, recall that we have access to STR model, which we can ask to read images.

In some sense, the point of all this work is to "invert" the STR model, making images from STR transcripts. If this worked perfectly, feeding the image we make through STR would always return the original prompt.

The model isn't that good, but we can get it closer by using this workflow instead:

Generate multiple 128x128 images from the prompt

Read all the 128x128 images with STR

Using some metric like n-gram similarity, measure how close the transcripts are to the original prompt, and remove the "worst" images from the batch

Using the prompt and the 128x128 images that were kept in step 3, upsample 256x256

Feed all the 256x256 images through STR

Pick the 256x256 image that most closely matches the prompt

We're done

For step 3, I use character trigram similarity and a slightly complicated pruning heuristic with several thresholds. The code for this is here.

Why did diffusion work?

A few thoughts on why diffusion worked for this problem, unlike anything else:

- Diffusion doesn't have the problem that VQ models have, where the latent exists on an arbitrary grid, and the text could have any alignment w/r/t the grid.

- Unlike VQ models, and GAN-type models with a single vector latent, the "latent" in diffusion isn't trying to parameterize the manifold of plausible images in any "nice" way. It's just noise.

Since noise works fine without adding some sort of extra "niceness" constraint, we don't have to worry about the constraint being poorly suited to text.

- During training, diffusion models take partially noised real images as inputs, rather than getting a latent and having to invent the entire image de novo. And it only gets credit for making this input less noised, not for any of the structure that's already there.

I think this helps it pick up nuances like "what does the text say?" more quickly than other models. At some diffusion timesteps, all the obvious structure (that other models would obsess over) has already been revealed, and the only way to score more points is to use nuanced knowledge.

In a sense, diffusion learns the hard stuff and the easy stuff in parallel, rather than in stages like other models. So it doesn't get stuck in a trap where it over-trains itself to one stage, and then can't learn the later stages, because the loss landscape has a barrier in between (?). I don't know how to make this precise, but it feels true.

Postscript: GLIDE

Three days before I deployed this work in the bot, OpenAI released its own text-conditioned diffusion model, called GLIDE. I guess it's an idea whose time has come!

Their model is slightly different in how it joins the text encoder to the U-net. Instead of adding cross-attn, it simply appends the output of the text encoder as extra positions in the standard attention layers, which all diffusion U-Nets have in their lower-resolution middle layer(s).

I'm not sure if this would have worked for my problem. (I don't know because they didn't try to make their model write text -- it models the text-image relation more like CLIP and DALL-E.)

In any event, it makes bigger attn matrices than my approach, of size (text_len + res^2)^2 rather than my (text_len * res^2). The extra memory needed might be prohibitive for me in practice, not sure.

I haven't tried their approach, and it's possible it would beat mine in a head-to-head comparison on this problem. If so, I would want to use theirs instead.

The end

Thanks for reading this giant post!

Thanks again to people in EleutherAI discord for help and discussion.

You can see some of the results in this tag.

106 notes

·

View notes

Photo

1950′s O.C. Newlin Guitar

from: https://www.soundspace.la/1950s-oc-newlin-custom-guitar

17 notes

·

View notes

Note

3, 18, 21, 34, 47 for the OCs ask :D

Thank youuuuu :)

Some OC Questions

3.Have you ever adopted a character or gotten a character from someone else?

Not properly! I played around in other writers’ sandbox (hi @cirianne!!) but it’s more borrowing that adopting. (I’d 100% be down to adopt @unfocused-overwriter‘s Danae and Diego, @weaver-of-fantasies-and-fables‘s girls, and @maxseidel‘s Eridein! not for writing, for protection!!)

18. Any OC crackships?

Too many! Most “popular” ones are CatxGiuliano and AldaxDrinian.

21.Your most artistic OC

Funny enough, none of my OCs have a very artistic vibe! The palm would go to Newlin, since they’re the only one who makes use of their vivid imagination.

34.Do you have any twin characters?

I do!! Damiel and Drinian are twins, as are their younger siblings, Dwight and Deana (Daria is the only single child in that family).

47.Has anyone ever (friendly) claimed any of your OCs as their child?

Yep! I can’t remember who has custody of who, but yes^^

#ask#my characters#unfocused-overwriter#primum sanguis#ellerin's inheritance#c: caterina sforza#c: giuliano della rovere#c: alda westfall#c: drinian highorn#c: damile highorn#c: dwight highorn#c: deana highorn#c: daria highorn#c: newlin#AHSPOA

10 notes

·

View notes

Photo

🔥SNAZZY OC' Tee🔥 📦FREE shipping to US📦 💯 ORIGINAL DESIGN💯 🔗LINK TO STORE IN BIO🔗 . . . . You won't find this anywhere else!!😀 . . shirts BOGO 10% off! Free shipping! . Only on ClassicCassy.com . . Pre-Sale!!! . #trendy #new #exclusive #originaldesign #cartoons #patterns #trendsetter #unique #popculture #shoponline #instashop #classiccassy #absolute #litty #obsessed #oneofakind #shoppingonline #teeshirts #nostalgia #Original #newline #newdesigner #illtellyouwhat #mensfashion #presale #womensfashion #menstyle #oldies #lity #tshirts https://www.instagram.com/p/BxTZe5uALR8/?utm_source=ig_tumblr_share&igshid=1osnpggieahlh

#trendy#new#exclusive#originaldesign#cartoons#patterns#trendsetter#unique#popculture#shoponline#instashop#classiccassy#absolute#litty#obsessed#oneofakind#shoppingonline#teeshirts#nostalgia#original#newline#newdesigner#illtellyouwhat#mensfashion#presale#womensfashion#menstyle#oldies#lity#tshirts

0 notes

Text

A while ago, I posted the list of odonates I *should* find in my county. It’s here, if you want to go look at it, but I’m thinking it’s an unambitious list because it had like six things missing from it when I first looked at it. If a comprehensive list is missing ten percent of the things (was missing 6 but had 53), then it is not a very good list. The list is now 59 because I am over here improving the scientific record with proof. (Pix or it didn’t happen!)

Thing is, the odonatacentral.org county level checklists, generated from Actual County Observation Records, suck. They're better if you live in a county with a major land-grant university (Centre County, PA, home of Penn State, comes to mind), a flashy and substantial water feature (Raystown Lake, Huntington County, PA, I am looking directly at you), or a couple of state parks. But if you live in plain old Greater Rednecklandia, the county records for your county probably suck and the more Greater Rednecklandia you are, the worse the records are likely to be. As a consequence of the paucity of the scholarly record, the procedurally generated county level checklists from over at OC... suck. (Science needs more boots on the ground, is the real problem.)

The OC county checklists are better than nothing, but they are not as good as they COULD be. So wah wah, my county list from OC is not particularly great because whiny amateur me half-assedly proved it wrong in a month or so. Could there be a better county list? Certainly!

I want a better county list because observers see, a lot of the time, only the things that we are looking for. (That video with the gorilla suit person amongst the basketball players comes to mind) Basically, an insufficient checklist might lead someone to not look for or recognize stuff that could totally be out there. Totally. Like in McElligot’s Pool.

So, the current science-y records over at OC for my county (Fulton County, PA) are shittastic and I whine a lot. Fulton County is a rural county with very few people (less than 20K residents). It lacks universities, fancy water features, or any other reasons for the presence of a gung-ho odonate observation force. The records for my abutting counties (Bedford, Huntington, Franklin in PA, Washington in MD) are fuller. I feel like the records for “possibly available in Fulton County” would be more informed if I included in the “possibles” list all the stuff found in neighboring counties. Odonates do fly, after all, and if my own county is poorly surveyed, maybe... maybe I should be looking for more things than are on the official list.

So, I’ve gone and done that -- pulled the species checklists for the above listed counties from odonatacentral, thrown them into a text file, and pushed them through assorted linux command-line script filters to generate some information that might be useful to me in terms of What To Look For and How Likely Finding Them Might Be.

Methodology: Pull the county checklists off of odonatacentral, paste into a flat text file on the dumbest text editor you can find, using “paste as plain text”. You’ll get entries that look like this:

Aeshna canadensis

Canada Darner

Aeshna constricta

Lance-tipped Darner

(etc)

These need to be cleaned up and made all one line, which I did with shell scripting. I am THE WORLD’S SHITTIEST SHELL SCRIPTER, just putting that out there right now. I do not shell script for a living any more than I chase the odonates around for a living.

Here’s what I did:

cat fivecounties.txt | xargs -n3 -d'\n' > test1.txt

(In english: read file fivecounties.txt, which is the plaintext paste file of all the county checklists, go through and take out the newline character three times (one for after scientific name, one for after common name, one for blank line), throw the result in new file called test1.txt)

Your output should look like this:

Aeshna canadensis Canada Darner

Aeshna constricta Lance-tipped Darner

(One line per species, no blank lines)

Next, I sorted the file:

sort test1.txt > test2.txt

Output should look like this:

Aeshna canadensis Canada Darner

Aeshna canadensis Canada Darner

Aeshna constricta Lance-tipped Darner

Aeshna constricta Lance-tipped Darner

Aeshna tuberculifera Black-tipped Darner

Aeshna tuberculifera Black-tipped Darner...

(All like kinds grouped together and this is not a big enough text file for it to matter what mechanism linux uses for sorting. Does Not Matter.)

Then I did

cat test2.txt | uniq -c > test3.txt

for an output like this...

2 Aeshna canadensis Canada Darner

2 Aeshna constricta Lance-tipped Darner

3 Aeshna tuberculifera Black-tipped Darner

Finally, I picked out the ones I wanted (by number) and formatted them for easy pasting in tumblr, an effort which took about eight tries and a lot of google because I freaking SUCK at sed. sed hates me. I did each iteration (1 through 5) seperately because I am no coder.

cat test3.txt | grep '5' | sed -e s/[[:space:]]*5[[:space:]]*//g > 5counts.txt

for an output of:

Anax junius Common Green Darner

Argia fumipennis Variable Dancer

Arigomphus villosipes Unicorn Clubtail...

So what did we get?

STUFF IN ALL FIVE COUNTIES (my county of Fulton plus the ones around it: Bedford, Huntington, Franklin in PA and Washington in MD), ones I do not have a verified photo record of are bolded:

Anax junius Common Green Darner (I can’t catch them. We have ‘em.)

Argia fumipennis Variable Dancer

Arigomphus villosipes Unicorn Clubtail

Calopteryx maculata Ebony Jewelwing

Celithemis elisa Calico Pennant

Dromogomphus spinosus Black-shouldered Spinyleg

Enallagma aspersum Azure Bluet

Enallagma civile Familiar Bluet

Enallagma signatum Orange Bluet

Epitheca cynosura Common Baskettail

Epitheca princeps Prince Baskettail

Erythemis simplicicollis Eastern Pondhawk

Hagenius brevistylus Dragonhunter

Ischnura posita Fragile Forktail

Ischnura verticalis Eastern Forktail

Libellula cyanea Spangled Skimmer

Libellula incesta Slaty Skimmer

Libellula luctuosa Widow Skimmer

Libellula pulchella Twelve-spotted Skimmer

Macromia illinoiensis Swift River Cruiser

Pachydiplax longipennis Blue Dasher

Perithemis tenera Eastern Amberwing

Phanogomphus lividus Ashy Clubtail

Plathemis lydia Common Whitetail

Sympetrum rubicundulum Ruby Meadowhawk

Sympetrum semicinctum Band-winged Meadowhawk

Sympetrum vicinum Autumn Meadowhawk

These All-Five-Counties odonates are the guys I should be able to find in my county. The official list says they’re here and they are also in all the bordering counties. I should make more of an effort on these because they’re probably here.

Next, I have four-out-of-five. These are likely but not 100%. Bolded the ones I do not have and probably I should read up for better stalking of these jobbies. Lurking in the usual hangouts, etc.

Aeshna umbrosa Shadow Darner

Argia apicalis Blue-fronted Dancer

Argia sedula Blue-ringed Dancer

Argia translata Dusky Dancer

Basiaeschna janata Springtime Darner

Boyeria vinosa Fawn Darner

Calopteryx angustipennis Appalachian Jewelwing

Chromagrion conditum Aurora Damsel

Didymops transversa Stream Cruiser

Enallagma basidens Double-striped Bluet

Enallagma divagans Turquoise Bluet

Enallagma exsulans Stream Bluet

Enallagma geminatum Skimming Bluet

Enallagma hageni Hagen's Bluet

Epiaeschna heros Swamp Darner

Hetaerina americana American Rubyspot

Lestes rectangularis Slender Spreadwing

Leucorrhinia intacta Dot-tailed Whiteface

Libellula semifasciata Painted Skimmer

Phanogomphus exilis Lancet Clubtail

And three out of five. Maybes but worth looking at if I have the appropriate habitat. Need to up my darner game. I’ve bolded what I don’t have.

Aeshna tuberculifera Black-tipped Darner

Aeshna verticalis Green-striped Darner

Argia moesta Powdered Dancer

Boyeria grafiana Ocellated Darner

Cordulegaster maculata Twin-spotted Spiketail

Cordulegaster obliqua Arrowhead Spiketail

Enallagma traviatum Slender Bluet

Enallagma vesperum Vesper Bluet

Helocordulia uhleri Uhler's Sundragon

Ladona julia Chalk-fronted Corporal

Lestes vigilax Swamp Spreadwing

Pantala flavescens Wandering Glider

Somatochlora tenebrosa Clamp-tipped Emerald

Stylogomphus albistylus Eastern Least Clubtail

Tramea carolina Carolina Saddlebags

Tramea lacerata Black Saddlebags

Two out of five. Here we’re going to bold and italicize what I do have. I don’t have most of these.

Aeshna canadensis Canada Darner

Aeshna constricta Lance-tipped Darner

Amphiagrion saucium Eastern Red Damsel

Celithemis eponina Halloween Pennant

Cordulegaster bilineata Brown Spiketail

Cordulia shurtleffii American Emerald

Enallagma antennatum Rainbow Bluet

Epitheca canis Beaverpond Baskettail

Gomphaeschna furcillata Harlequin Darner

Gomphurus fraternus Midland Clubtail

Gomphurus vastus Cobra Clubtail

Hylogomphus abbreviatus Spine-crowned Clubtail

Ischnura hastata Citrine Forktail

Ladona deplanata Blue Corporal

Lanthus vernalis Southern Pygmy Clubtail

Lestes congener Spotted Spreadwing

Lestes dryas Emerald Spreadwing

Lestes forcipatus Sweetflag Spreadwing

Lestes inaequalis Elegant Spreadwing

Libellula axilena Bar-winged Skimmer

Libellula vibrans Great Blue Skimmer

Macromia alleghaniensis Allegheny River Cruiser

Nehalennia irene Sedge Sprite

Neurocordulia obsoleta Umber Shadowdragon

Ophiogomphus rupinsulensis Rusty Snaketail

Pantala hymenaea Spot-winged Glider

Phanogomphus spicatus Dusky Clubtail

Rhionaeschna mutata Spatterdock Darner

Somatochlora linearis Mocha Emerald

Stenogomphurus rogersi Sable Clubtail

Sympetrum obtrusum White-faced Meadowhawk

Tachopteryx thoreyi Gray Petaltail

1 of 5 -- I figure this stuff is longshots but do note that the lilypad forktail, bolded and italicized below, the ONE record in the five county area -- that’s my record. I found that. That was me. So possibly...

Anax longipes Comet Darner

Archilestes grandis Great Spreadwing

Argia tibialis Blue-tipped Dancer

Calopteryx amata Superb Jewelwing

Cordulegaster diastatops Delta-spotted Spiketail

Cordulegaster erronea Tiger Spiketail

Dorocordulia libera Racket-tailed Emerald

Enallagma anna River Bluet

Enallagma annexum Northern Bluet

Enallagma carunculatum Tule Bluet

Enallagma ebrium Marsh Bluet

Gomphurus lineatifrons Splendid Clubtail

Hetaerina titia Smoky Rubyspot

Hylogomphus viridifrons Green-faced Clubtail

Ischnura kellicotti Lilypad Forktail

Lanthus parvulus Northern Pygmy Clubtail

Lestes australis Southern Spreadwing

Lestes disjunctus Northern Spreadwing

Lestes eurinus Amber-winged Spreadwing

Lestes unguiculatus Lyre-tipped Spreadwing

Leucorrhinia frigida Frosted Whiteface

Leucorrhinia hudsonica Hudsonian Whiteface

Leucorrhinia proxima Belted Whiteface

Libellula auripennis Golden-winged Skimmer

Libellula flavida Yellow-sided Skimmer

Libellula quadrimaculata Four-spotted Skimmer

Nasiaeschna pentacantha Cyrano Darner

Nehalennia gracilis Sphagnum Sprite

Neurocordulia yamaskanensis Stygian Shadowdragon

Ophiogomphus carolus Riffle Snaketail

Ophiogomphus mainensis Maine Snaketail

Phanogomphus borealis Beaverpond Clubtail

Phanogomphus descriptus Harpoon Clubtail

Phanogomphus quadricolor Rapids Clubtail

Somatochlora elongata Ski-tipped Emerald

Somatochlora walshii Brush-tipped Emerald

Stylurus laurae Laura's Clubtail

Stylurus spiniceps Arrow Clubtail

Sympetrum internum Cherry-faced Meadowhawk

Anyway. That’s how I buffed up my “things to look for in my area and how reasonable they are for me to be looking for them” list for dragonflies. Seems legit to me, anyway.

0 notes

Text

get your shit together rayquaza

2K notes

·

View notes

Text

oh yeah i finished the designs for my heroes and partners this morning. was originally gonna upload each of them with my npc redesigns from their respective games but ehhh might as well post them now.

rescue team (team fable): mayar (skitty, she/they) and newlin (mudkip/seadra hybrid, he/him)

explorers (team saviour): asta (eevee/sylveon half evolution, she/it) and dustin (shinx, he/him)

gates to infinity (the eden): nadine (snivy, she/he) and kazuki (oshawott/palafin hybrid, he/him)

super (the vagabonds): zaya (fennekin, she/her) and dalia (treecko, she/her)

#pokemon#pkmnart#pkmn#pmd#pokemon mystery dungeon#rescue team#explorers of sky#gates to infinity#pokemon super mystery dungeon#psmd#skitty#mudkip#eevee#shinx#snivy#oshawott#fennekin#treecko#oc: mayar#oc: newlin#oc: asta#oc: dustin#oc: nadine#oc: kazuki#oc: zaya#oc: fennekin#i'll make another post with their evolutions when i'm done. nadine and kazuki don't evolve (at least rn. may change my mind about that in#the future). also all of these will be up on toyhouse......eventually#i do have the bios for teams fable and saviour written out but they're very outdated rn

185 notes

·

View notes

Text

here are the evos for my pmd hero and partner designs!

#pokemon#pkmnart#pkmn#pmd#pokemon mystery dungeon#delcatty#oc: mayar#swampert#oc: newlin#sylveon#oc: asta#luxray#oc: dustin#braixen#oc: zaya#grovyle#oc: dalia#like i said in that post my gates team is remaining unevolved. for now at least. not opposed to changing my mind#definitely not fully evolving nadine given the. arm situation. kazuki will probably fully evolve because idc abour dewott that much sorry#same reason why i haven't given newlin a marshtomp design#i mayyyy give dustin a luxio design in the future. just because in my lore you don't need to go to a specific place to evolve. kinda like#how gates handled evolution. so dustin would be able to evolve without that and i like the idea of him being a luxio during the post game#story. also the ingame reason as to why you can't evolve at the spring is stupid and makes no sense so fuck it#also might give zaya and dalia accessories in the future they look too naked rn. not the harmony scarves though bc i like the way they#disappeared at the end of psmd

113 notes

·

View notes

Text

hero and partner icons are updated too!

#pokemon#pkmn#pmd#pokemon mystery dungeon#pmd ocs#ties that bind#skitty#oc: mayar#mudkip#oc: newlin#eevee#oc: asta#shinx#oc: dustin#snivy#oc: nadine#oshawott#oc: kazuki#fennekin#oc: zaya#treecko#oc: dalia#technically zaya and dalia's first icons but whatever

75 notes

·

View notes

Text

tonight i finally finished 100% completing rtdx. current mood ⬆️

#pokemon#pkmnart#pkmn#pmd#pokemon mystery dungeon#delcatty#swampert#was contemplating going for a shiny living dex on top of the living dex i was doing for 100% but i don't hate myself that much#oc: mayar#oc: newlin

211 notes

·

View notes

Text

hitting the road

#pokemon#pkmnart#pkmn#pmd#pokemon mystery dungeon#skitty#mudkip#gengar#god i hope this perspective makes sense xmx#oc: mayar#oc: newlin

154 notes

·

View notes

Text

this new meme reminds me of pmd1

72 notes

·

View notes

Text

The Valuable Sun | Chapter 18

Summary: Sookie comes to learn her little sister’s got her life together while she has to rebuild everything.

Pairing: Eric x OC

Warnings: 18+

A/N: Please, note that I am French so there might be some mistakes here and there.

Words: 2254

Masterlist

Chapter 1 | Chapter 2 | Chapter 3 | Chapter 4 | Chapter 5 | Chapter 6 | Chapter 7 | Chapter 8 | Chapter 9 | Chapter 10 | Chapter 11 | Chapter 12 | Chapter 13 | Chapter 14 | Chapter 15 | Chapter 16 | Chapter 17

Spending the entire night with Jason and Sookie, cuddled in blankets, drinking hot coco with marshmallows… all of it seemed like a distant dream. A dream Brooklynne had had multiple times in the past year, once she thought would never happen again.

It was as hard for Sookie to get back to real life as it was for Brooklynne. Everyone in Bon Temps was so happy to not only hear about her return but also to see her again. The excuse Bill gave the police was very useful to shut anyone who was a little too curious. Of course, not everything was easy, and now that Sookie was back, it was a hard adjustment for Eric and Brooke to have a new roommate. So hard, in fact, that after only one day, Eric suggested they get a place of their own. A suggestion which, to her own surprise, she didn’t find uninteresting.

“Anywhere but Bon Temps,” Eric whispered so close to her hear she managed to hear him despite the terrible and terribly loud music playing in the club.

“You mean Shreveport,” she chuckled as she slid a hand in his hair, gently bringing his face to hers.

As per usual, she was sitting on his lap, on his ‘throne’. She had learnt to ignore the dozen pair of eyes, human or vampire, that were fixed on them for the entirety of the night. They were different, depending on their species. Mortals envied her. The undead judged him. Silently, of course. They knew what would happen if their sheriff caught them talking ill of his human.

The humans outside, however, weren’t so quiet or discreet about their judgmental ideas and hate. Steve Newlin’s following only grew bigger every day, ever since Russell went crazy on live TV, and a portion of it from Louisiana reunited every night in front of Fangtasia to spew their hatred all over its customers, be they vampire, or human. It did scare away most of the mortal clientele, though Fangtasia had been around for a while, and had, fortunately for business, loyal customers.

“What’s wrong with Shreveport?”

“What’s wrong with Bon Temps?”

He smirked. He liked it when she was challenging him. Always reminding him how perfect she was for him.

“We’d be close to Fangtasia. We wouldn’t have to fly here every night.”

“I like to fly,” she shrugged.

“Oh, I know you do,” he said with a grin.

“So, what do you want? To build a home under the club?”

“I would hate that as much as you would. I had an apartment in mind.”

“An apartment?”

He nodded. “UV protected windows, with a view…”

“Mmh… that doesn’t sound so bad.”

“Wait until you see the view.”

“I don’t care about the view. As long as we’re together.”

She pulled him to her, his lips crashing onto hers. That was how the night almost always ended, except when a dumbass decided to get into a fight with the other dumbasses outside.

“Eric.”

The Viking chose to ignore his progeny, hoping she’d get the hint and leave them alone.

“Eric.”

“Not now, Pam,” he said between two intense kisses.

“We have a problem.”

Brooklynne moved away, both annoyed and frustrated, making the vampire growl.

“What is it?” he snarled.

“Some idiot outside got into a fight with the other idiots outside.”

“Can’t you handle it?”

“It’s Hoyt, Eric. Jessica’s human? The King’s progeny? Rings any bell?”

Eric sighed as he tried hard not to roll his eyes.

“Go, it’s fine,” Brooke told him, not even trying to hide her disappointment.

“I’ll make it up to you,” he said before he stole another kiss from her.

“Oh, I know,” she smirked, not wanting him to leave in such a bad mood.

He chuckled as he got up, letting her slide gently onto the chair. Oh, how it made him feel to see her sitting there. She was never sexier than in his chair. She watched him leave, wiggling her fingers as he turned to her one last time. Left alone, she looked around the room, still feeling the eyes of the people there, humans still wishing they were in her position, vampires unhappy about their mortal queens. But as unhappy as they were, there was no doubt in anyone’s mind, that she was, indeed, their queen.

***

Wrapped in Eric’s large grey shirt, Brooke was sound asleep in their bed, in the cool of his arms. She couldn’t remember the last time she got up before noon, but she was always awake before Eric, who only opened his eyes at sundown. She awoke particularly early that afternoon and she would usually stay in bed and go back to sleep but since Sookie was back she had been getting up sooner to spend as much time as possible with her before she had to leave for Fangtasia. She rubbed her eyes as she yawned, another hour of sleep wouldn’t have been so bad.

She didn’t bother dressing up and climbed the ladder carefully. She knew she could make all the noise in the world and it wouldn’t wake the vampire up, but she always tried to be considerate. Human habit.

She shielded her eyes from the sun of the early afternoon as she opened the doors of the fake wooden wardrobe. She heard voices coming from the kitchen and wondered who Sookie could’ve been with as she knew Jason was working. Besides, it sounded like a woman’s voice. It sounded like…

“Tara,” Brooklynne smiled as she stepped into the kitchen. “What are you doing here?” a laugh escaped her as she went to hug her old friend.

“Hey Brooke, you’re up late,” Tara chuckled as she hugged her back.

“Or early, considering she went to bed at 6,” Sookie said with a grimace.

“Don’t tell me you spend your nights with that vampire,” Tara scolded the young telepath as she saw the logo of Fangtasia on her shirt.

“Her nights and her days,” Sookie informed her friend, “he lives here now.”

“He what?”

“Eric moved in months ago,” Brooke said as she made her way to the fridge.

“Are you telling me that psychopath who kidnapped and tortured Lafayette for weeks lives in this house?”

Brooklynne paused.

“First of all, he apologized for that, he even gave him a car. Second of all, yes, Tara, my boyfriend lives with me… in my house.”

“Lafayette’s terrified of that motherfucker, he can give him all the cars in the world it won’t change a thing!”

“And… this is still my house,” Sookie said.

“Actually… it isn’t,” Brooke wrinkled her nose. “The deed’s in my name now… I signed the papers and everything…” she continued as she closed the fridge, a bottle of orange juice in one hand.

“What?” Sookie breathed out as if she had just been punched in the heart.

“Legally I had to… since you were gone for so long… I’m sorry,” she shrugged. “But it’s still your house. It’s always gonna be your house. And Jason’s.”

“Great,” Sookie threw her hands in the air, “good thing I came back or you’d have sold my car next.”

“That’s not fair,” Brooklynne replied, offended. “I took care of your car, I took care of all your stuff.”

“I’m not staying one more second here, I don’t want your boyfriend to think I’m his dinner,” she said as she practically jumped off her chair and made her way to the back door.

“Tara, wait!”

“He’s not gonna hurt you,” Brooke called after her, but Tara wasn’t listening.

Sookie gave a disappointed and disapproving look to her sister before she went after her friend. Brooke shrugged, walked up to the shelf where they kept the glasses and poured herself a full glass of orange juice. She mumbled something as she brought it to her lips.

“And a good day to you to.”

***

Sookie was obviously mad when she came back. Tara had just come back from New-Orleans to see her and they had already been in a fight. It all made Brooklynne seriously reconsider Eric’s offer to move in that apartment in Shreveport, the one with the great view. Fortunately, the two sisters didn’t get the opportunity to argue about Eric again, as the phone rang as soon as she came back in the house, having failed to convince Tara to stay a little more.

“Who was it?” Brooklynne asked before taking another sip from her glass.

“Andy,” she sighed. “Jason didn’t come to work this morning and he’s not answering his phone.”

“Did he check his house?”

“He asked me to do it,” she answered as she took her car keys from the counter.

“Want me to come with…”

“No.”

Brooklynne silently watched her sister exit through the back door and quickly get into her car. After Sookie was gone, she washed the dishes, then took a long and cold shower, wondering what she should do while waiting for the night to come.

***

Brooklynne’s denial of Jason’s situation unnerved Sookie more than anything. Though Eric acted as usual, detached from any feeling and showing no particular worry, he did find it strange too. Maybe it was too hard for Brooklynne to even imagine that another one of her siblings was missing again out of nowhere with no clue as to where to find them. Or maybe it was just as she said, optimism and trust. Jason would come back. Like Sookie did. Eric didn’t dare say aloud what both he and Sookie thought: hopefully, it wouldn’t take a year.

“He’s probably with a girl,” she said to her sister. “He’ll be back in a week.”

Jason’s disappearance had one positive effect, at least, Sookie and Brooklynne weren’t arguing about Eric anymore. Especially since Eric had said he’d order some vampires to look for their brother. Sookie appreciated it, even if she wouldn’t say it aloud. But there was something else that bothered the eldest telepath, something that had been bothering her ever since she had come back from Alcide’s.

“Wasn’t he happy to see you?” Brooklynne asked as she put a plate down on the table.

“He was very happy to see me,” Sookie answered as she handed her the cutlery. She was cooking Adele’s famous sausage recipe, Jason’s favorite.

“Then what?” she insisted as she finished setting up the table.

“Debbie was there.”

“Yeah. They got back together a few months ago. She’s clean now.”

“Wait… you knew?”

Brooke shrugged. “Alcide helped us a lot when we were looking for you. We spent a lot of time together.”

“He kept whining about that V addicted werewolf who left him,” Eric said as he appeared from the living room.

“She needed help getting clean. Needed support,” Brooke continued as she gave a reproving look to the vampire. Eric ignored it and kissed her temple, a gesture she took as an apology. That was the best she’d get from him anyway.

“We’re out of Tru Blood,” Sookie informed the vampire.

“I know,” he told her as he slid his hands on Brooke’s waist, gently bringing her back to his chest before placing a kiss on her neck, where two puncture wounds could be seen.

Sookie grimaced as she turned around and got back to the stove where the sausages were about to burn. Brooke smiled before she put a kiss on Eric’s lips. She meant to get the glasses from the shelf, but Eric had something else in mind. He didn’t let her go from his arms and captured her lips, tightening his hold on her.

“Could you not?” Sookie sighed. “We’re about to eat.”

“I’m about to eat too,” he smirked, making her roll her eyes.

“Stop it,” Brooke giggled, wiggling out of Eric’s arms. “I’m not your dinner,” she playfully punched him in the arm.

“No?” he asked with a grin and a raised eyebrow.

She smiled back, bit her lower lip, then tiptoed and wrapped her arms around his neck before whispering in his ear.

“No, I’m your dessert.”

“Mmh, I like that.”

“Ew, stop it,” Sookie begged.

“You didn’t even hear what I said,” Brooke told her.

“I can read your mind, remember?”

“Then stay out of my head.”

As Sookie put the pan in the middle of the table, dinner being ready to be served, Eric’s phone started to ring.

“I thought Fangtasia was closed tonight,” Sookie said as she sat down.

“Fangtasia is never closed,” he told her before putting another kiss on Brooke’s forehead. “I’ll be right back.”

Brooklynne sat across from Sookie and started eating, every bite reminded her of her grandmother, even the smell, as if Adele was right there with them.

“You’re the only one who can cook it perfectly.”

“It’s just sausages,” Sookie chuckled.

“Nothing new from Jason?”

“Afraid not… Andy said he’d file a missing person report tomorrow… I said he should have already done it but…”

“You know Jason… he’s just fooling around with a girl.”

“He hasn’t answered his phone in two days, Brooke.”

“So, it died,” she shrugged. “He’s too busy to notice.”

“I hope you’re right,” Sookie whispered, more to herself.

Eric stepped back in the kitchen with an annoyed look on his face. Though Sookie noticed, she didn’t bother asking him about it, she didn’t really care about his problems.

“What’s wrong?” Brooke asked.

“We have to go.”

“You can deal with your problems on your own, Eric,” Sookie told him. “Or ask Pam.”

“It’s Bill,” he said. “He has a job for us.”

**********

Tags: @thepoet1975 @nerdysandwichqueen @catchmeupimgettingoutofhere @raegan-hale @colie87 @heavenly1927 @abbey7103

#true blood#eric northman#eric x oc#sookie stackhouse#jason stackhouse#fanfic#fanfiction#oc#imagine#reader#eric x reader

77 notes

·

View notes