#by associating them with the use of exploitative ai

Note

Hey ik this is not tournament related, but in case you didn't know and want to spread the word, Tumblr is selling everybody's data to AI companies.

Here is the staff post about it https://www.tumblr.com/loki-valeska/743539907313778688

And a post with more information and how to opt out https://www.tumblr.com/khaleesi/743504350780014592/

Hi, thanks for the information, and sorry for my late reply. I was a bit low on spoons this week and I wanted to form my thoughts about this.

Because the thing is, I am doing a PhD at an AI department in real life. Not in generative AI; in fact, I’m partly doing this because I distrust how organisations are currently using AI. But this is my field of expertise, and I wanted to share some insights.

First of all yes do try to opt out. We have no guarantee how useful that’s going to be, but they don’t need to be given your data that easily.

Secondly, I am just so confused as to why. Why would you want to use tumblr posts to train your model? Surely everyone in the field knows about the garbage in, garbage out rule? AI models that need to be trained on data are doing nothing more than making statistical predictions based on the data they’ve seen. Garbage in, garbage out refers to the fact that if your data is shit, your results will also be shit. And like, not to be mean, but a LOT of tumblr posts are not something I would want to see from a large language model.

Thirdly, I’ve seen multiple posts encouraging people to use Nightshade and Glaze on their art, but also posts wondering what exactly these programs do to your art. The thing is, generative ai models are kinda stupid: they just learn to associate certain patterns in pictures with certain words. However, these patterns are often not the ones we’d want them to pick up on. For example, say you want a model to differentiate between pictures of birds and dogs. Instead of learning to look for, say, wings, it learns that pictures of birds usually have a blue sky as background, and so a picture of a bird in the grass gets labelled as ‘dog’.
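The bird/dog mix-up can be reproduced with a deliberately tiny learner. This sketch (not how real image models are built) just picks whichever single feature best separates the training pictures. Because every bird in the made-up training set is photographed against a blue sky, it learns "blue sky" instead of "wings". The features, examples and function names are all hypothetical.

```python
# Toy training set: each picture is reduced to two yes/no features.
# The features and examples are invented for illustration only.
TRAINING = [
    ({"wing_like_shape": True,  "blue_sky": True},  "bird"),
    ({"wing_like_shape": False, "blue_sky": True},  "bird"),  # wings folded, detector misses them
    ({"wing_like_shape": True,  "blue_sky": True},  "bird"),
    ({"wing_like_shape": False, "blue_sky": False}, "dog"),
    ({"wing_like_shape": False, "blue_sky": False}, "dog"),
    ({"wing_like_shape": True,  "blue_sky": False}, "dog"),   # floppy ears fool the detector
]


def predict(feature, picture):
    """One-rule classifier: 'bird' if the chosen feature is present, else 'dog'."""
    return "bird" if picture[feature] else "dog"


def train_stump(examples):
    """Pick the single feature whose rule gets the most training examples right."""
    features = examples[0][0].keys()
    return max(features, key=lambda f: sum(predict(f, x) == y for x, y in examples))


rule = train_stump(TRAINING)
bird_on_grass = {"wing_like_shape": True, "blue_sky": False}
print(rule)                          # blue_sky
print(predict(rule, bird_on_grass))  # dog
```

The "wings" feature is right on 4 of 6 training pictures and "blue sky" on all 6, so the learner happily takes the shortcut.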

So what Glaze and Nightshade are more or less doing is exploiting this stupidness by making small changes to the pixels of your art that give it a very different label when an AI looks at it. I can look up papers for people who want to know the details, but this is the essence of it.
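To make "small pixel changes, very different label" concrete, here is the classic fast gradient sign method applied to a made-up linear scorer. Glaze and Nightshade use their own, more elaborate optimisation against real image models, so this only illustrates the general idea and is not their algorithm. The weights, pixels and function names are invented.

```python
# Toy linear "bird vs dog" scorer over 8 pixel intensities in [0, 1].
# Weights and pixels are made up; real models and real images are far larger.
WEIGHTS = [0.9, -0.8, 0.7, -0.6, 0.5, -0.9, 0.8, -0.7]
BIAS = 0.0
PIXELS = [0.50, 0.55, 0.50, 0.55, 0.50, 0.55, 0.50, 0.55]


def score(pixels):
    return sum(w * p for w, p in zip(WEIGHTS, pixels)) + BIAS


def classify(pixels):
    return "bird" if score(pixels) > 0 else "dog"


def sign(v):
    return (v > 0) - (v < 0)


def fast_gradient_sign(pixels, epsilon, target="bird"):
    """Nudge every pixel by at most epsilon toward the target label.

    For a linear score the gradient with respect to the pixels is just WEIGHTS,
    so the fast gradient sign step is epsilon * sign(weight) per pixel.
    Results are clipped back into the valid [0, 1] range.
    """
    direction = 1 if target == "bird" else -1
    return [min(1.0, max(0.0, p + direction * epsilon * sign(w)))
            for w, p in zip(WEIGHTS, pixels)]


adversarial = fast_gradient_sign(PIXELS, epsilon=0.05)
print(classify(PIXELS))       # dog
print(classify(adversarial))  # bird
# Largest single-pixel change: epsilon, up to float rounding.
print(max(abs(a - b) for a, b in zip(adversarial, PIXELS)))
```

No pixel moves by more than 0.05 on a 0 to 1 scale, yet the label flips, because every tiny change pushes the score in the same direction.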

To see how much influence this might have on your art, see this meme I made a few years ago based on Figure 5 of “Intriguing properties of neural networks” by Szegedy et al. (2013).

Finally, staff said in that post that they gave us the option to opt out because of the possibly upcoming AI Act in Europe. I was under the impression that they should give us this opportunity because of the GDPR, and that the AI Act is supposed to be more about the use of AI and less about the creation and data aspect. Nevertheless, this shows that the EU has a real ability to influence these kinds of things. The European Parliament elections are coming up this year, so please go vote, and read up beforehand on what the parties are saying about AI and other technologies (next to everything else you care about). (This is also relevant for other elections, of course, but the EU has a good track record on this topic.)

Anyway sorry for the long talk, but as I said this is my area and so I felt the need to clarify some things. Feel free to send me more asks if you want to know something specific!

#misc#ai#tumblr#oh yeah nigthwish and glaze are very heavy on your computer so do take that into account#nightshade*


On AI-Driven Conversations In Games

The AI gang really shot themselves in the foot by leaning so hard into the capitalist exploitation angle, because now whenever they present a use of the tech that is actually moderately interesting, people's baseline reaction is just going to be hate because it's associated with AI in any way, shape or form.

I mean, obviously I understand why people react this way, because most of the practical applications of AI are just a veil for replacing labour and increasing profit margins for the executive levels. But I feel like nowadays you'll also see a lot of people hating an idea just because it's tied to AI in any way, even when the core conceit of the idea is actually fairly interesting. (Remember when Spider-Verse used machine learning to generate some of its incredibly labour-intensive frame-by-frame effects, and then a bunch of people got mad because it used machine learning for that?)

People have been pointing to the use case of "what if you could talk to an NPC in a game and have their responses generated via AI", and laughing at it like it's the dumbest suggestion ever, but honestly I think that's exactly the kind of system AI was practically designed for! To me that feels like an excellent application of the tech that is now just marred by the mention of AI in the first place.

Anyway, to ruminate on the concept a bit: I see that use of AI enabling a dev to fill out a world with more NPCs who help it feel more populated, as well as potentially giving them incredibly varied responses that are more relevant to the NPC's immediate context in the game. I imagine that instead of replacing full-on player choice dialog, it would replace the throwaway barks of awkward and out-of-place open-world NPCs who look at you and say "I have nothing to say to you", and give them something to say directly about your adventure or the context around them instead.

Instead of having the intern narrative designers be forced to write little barks and blurbs like "I have nothing to say" (which I understand narrative folks usually view as grunt work and hate writing in the first place), they'd be writing little prompts for that system instead. The end result is that when you talk to random farmer NPC #344 outside of town, they say "Crop's doing well this year, here's hoping a dragon doesn't attack us" instead of "I've got nothing to say". I think on paper that's a genuinely good and interesting way to improve an antiquated open-world problem like that. Should it be helpful? Probably not. Would it be interesting? No. Would it be a little more flavourful than what we currently have going on? I think so!
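Here is a rough sketch of what the designer-facing side might look like: the narrative designer writes a short brief, and the game fills in the NPC's immediate context before handing the prompt to whatever text generator a studio uses. The `BarkRequest` structure and its field names are hypothetical, and no particular model or API is assumed.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class BarkRequest:
    """Everything a hypothetical bark generator would need for one line of small talk."""
    npc_role: str
    location: str
    designer_brief: str                      # the "little prompt" a narrative designer writes
    recent_events: List[str] = field(default_factory=list)


def build_bark_prompt(req: BarkRequest) -> str:
    """Combine the designer's brief with the NPC's current game context."""
    events = "; ".join(req.recent_events) if req.recent_events else "nothing notable"
    return (
        f"You are a {req.npc_role} near {req.location}. "
        f"Recent events: {events}. "
        f"{req.designer_brief} "
        # Keep generated lines as flavour only; quest guidance stays hand-written.
        "Reply with one short line of small talk. Do not give directions or quest hints."
    )


farmer = BarkRequest(
    npc_role="farmer",
    location="the town gates",
    designer_brief="You are cheerful but worried about dragons.",
    recent_events=["good harvest", "dragon sighted in the hills"],
)
print(build_bark_prompt(farmer))
```

The "no directions" instruction reflects the concern that generated lines shouldn't be trusted with anything that could block progress; a real system would also need to check the output, not just ask nicely.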

It's not an AI shill fever dream. I can see exactly how it would work, and I'd bet money that there's a studio doing something like it in R&D right now. I imagine it'd also probably be pretty adaptable between projects, so a similar system could be applied to different areas of the world.

Should it be trusted to give the player directions or do any sort of leading that a narrative designer should do? Almost certainly not, because it would be inconsistent and have too big a possibility window, and AI is nothing if not horrible at performing essential tasks that might block progress.

Should it be done with the tech as it is now? Hell no, unless you want to wait five seconds for every reply to be generated and for it to be tied to some server bank that's guzzling all of Arizona's water. Also, it would probably need an internet connection to work, which is asking a lot for an open-world game.
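One common way to keep slow or offline generation from stalling the game is a hard deadline with a hand-written fallback. A minimal sketch, assuming the generator is just some callable (`generate` is a stand-in; the model functions below are fake, and all names and timings are made up):

```python
import concurrent.futures
import random
import time

# Hand-written fallbacks, so the player never waits on (or loses) a generated line.
FALLBACK_BARKS = ["Fine weather for it.", "Mind the road, traveller."]

_pool = concurrent.futures.ThreadPoolExecutor(max_workers=2)


def bark_with_deadline(generate, prompt, timeout_s=0.2):
    """Return generate(prompt) if it answers within timeout_s, else a canned bark."""
    future = _pool.submit(generate, prompt)
    try:
        return future.result(timeout=timeout_s)
    except concurrent.futures.TimeoutError:
        return random.choice(FALLBACK_BARKS)  # too slow; the call keeps running in the background
    except Exception:
        return random.choice(FALLBACK_BARKS)  # e.g. the generator raised (no connection)


def quick_model(prompt):
    return "Crops are doing well this year."


def slow_server_model(prompt):
    time.sleep(0.5)  # stands in for a multi-second server round trip
    return "Too late, the player has walked off."


def offline_model(prompt):
    raise ConnectionError("no internet connection")


print(bark_with_deadline(quick_model, "farmer prompt"))        # Crops are doing well this year.
print(bark_with_deadline(slow_server_model, "farmer prompt"))  # one of FALLBACK_BARKS
print(bark_with_deadline(offline_model, "farmer prompt"))      # one of FALLBACK_BARKS
```

This doesn't make generation any faster or greener; it only guarantees the NPC says *something* on time.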

Should it be done by these studios who are more interested in using it to replace labour and make the end result cheaper to make so they can keep more profits off the top? No, and that's the real reason why the applications of this tech suck, because spoiler alert: they'd all love to save money.

Obviously this concept isn't doable right now, but I wouldn't be surprised if it ships in a game in some form within 5-10 years tops.

Again, I get why at this point in capitalism there are almost no applications of machine learning that are easy to trust, nor should we ever believe studios are doing it for any reason other than trying to make development cheaper. I just think that when it comes to tech, it's worthwhile to keep execution separate from intent in your mind. Tech isn't inherently evil; it's the system it's built under that is. :)

#game development#gamedev#game dev#indie games#indie game#gamedevelopment#indiegames#indiedev#indie dev#thoughts#blog#ai#analysis


The US Copyright Office is currently asking for input on generative AI systems ...

... to help assess whether legislative or regulatory steps in this area are warranted.

Here is what I wrote to them, and what I want as a creative professional:

AI systems undermine the value of human creative thinking and work, and harbor a danger for us creative people that should not be underestimated. There is a risk of a transfer of economic advantage to a few AI companies, to the detriment of hundreds of thousands of creatives. It is the creative people with their works who create the data and marketing basis for the AI companies, from which the AI systems feed.

AI systems cannot produce text, images or music without suitable training material, and the quality of that training material has a direct influence on the quality of the results. In order to supply the systems with the necessary data, the developers of those AI systems are currently using the works of creative people - without consent or even asking, and without remuneration. In addition, creative professionals are denied a financial participation in the exploitation of the AI results created on the basis of the material.

My demand as a creative professional is this: The works and achievements of creative professionals must also be protected in digital space. The technical possibility of being able to read works via text and data mining must not legitimize any unlicensed use!

The remuneration for the use of works is the economic basis on which creative people work. AI companies are clearly pursuing economic interests with their operation. The associated use of the work for commercial purposes must be properly licensed, and compensated appropriately.

We need transparent training data as an access requirement for AI providers! In order to obtain market approval, AI providers must be able to transparently present this permission from the authors. The burden of proof and documentation of the data used - in the sense of applicable copyright law - lies with the user and not with the author. AI systems may only be trained from comprehensible, copyright-compliant sources.

____________________________

You can send your own comment to the Copyright Office here: https://www.regulations.gov/document/COLC-2023-0006-0001

My position is based on the Illustratoren Organisation's (Germany) recently published stance on AI generators:

https://illustratoren-organisation.de/2023/04/04/ki-aber-fair-positionspapier-der-kreativwirtschaft-zum-einsatz-von-ki/


We're told our art is worthless, to get a real job, that we will starve. But look how quickly they work to steal our labor for their profit. We are fed this lie again and again because it's a lot easier to exploit us when we don't value ourselves.

Clearly it is profitable to create and use AI. Even platforms purporting to support artists (that would have NO content for their platforms without us) are eager to throw artists under the bus for profit.

Ask yourselves: Why are AI creators so cautious about the music they pull for their datasets yet so cavalier with visual art? It's because they'd be sued into oblivion. The music industry deserves a lot of criticism, but you can rest assured that they will defend their © tooth & nail.

When bots scavenge your data, they scrape away at the rights to your work. Opting out is only an option if all AI programmers decide to start collecting data ethically. There is currently no incentive for them to do so.

If a musician samples someone's work for their music, the sampled party gets credit AND royalties. Some artists have proposed an AI model that is both opt-in and allows artists to gain royalties every time their data is used to generate an image. No such thing exists currently.

I urge you to watch this conversation hosted by Concept Art Association feat. the US Copyright office, an AI ethicist, Greg Rutkowski and moderated by Karla Ortiz:

https://youtu.be/7u1CeiSHqwY

As an aside, how many of you heard from distant relatives or old acquaintances for the first time in forever because they had a brilliant idea for an NFT and you are just the person to make it? Suddenly, your skills are worth something to them.

Visibility is crucial for making a living as a visual artist, but the platforms we use to gain visibility are growing increasingly hostile towards us. So what can we do? Well...

Be selective with where you host your art. Consider keeping most of it behind passwords, CAPTCHAs or paywalls, only posting thumbnails to socials. The point is to make it harder for bots to scrape the internet for your work.

CONTACT YOUR LEGISLATORS and tell them this matter is important to you! Urge them to introduce bills that regulate how your data can be used! If you're in the US, find your Representative here:

https://www.house.gov/representatives/find-your-representative

And your Senators:

https://www.senate.gov/senators/senators-contact.htm

Get involved in artist advocacy groups such as:

https://graphicartistsguild.org/

We live in an attention economy, and nothing is better at grabbing attention immediately than a well-executed image. Artists have already been battling decades of wage stagnation and devaluation, but our work is more valuable than ever. Don't buy into the narrative that your skills are worthless. Fight for your rights. Do not support platforms that will diminish your rights for profit. They are NOTHING without you.


Don't mind me, just getting incredibly mad about Timnit Gebru's "TESCREAL" talk again.

You know, I will agree with her, there is a real problem with the upper class capitalist elite using ideas like Effective Altruism and Longtermism to make warped judgements that justify the centralization of power. There is a problem of overvaluing concerns like AI existential risk over the non-hypothetical problems that require more resources in the world today. There is a problem with medical paradigms that fetishize intelligence and physical ability in a way that echoes 20th century eugenicist rhetoric.

But Gebru's talk/paper, which has sickeningly become a go-to leftist touchpoint for discussing tech, slanderously conflates whole philosophical movements into a "eugenics conspiracy" so myopically flattening that you have her arguing that things like the concept of "being rational" are modern eugenics. Forget transhumanism as radical self-determinism and self-modification, increasing human happiness by overcoming our biology: TESCREALs just want to make themselves superior (modern curative medical science is excluded from this logic, being tangible instead of speculative and thus too obviously good). Forget the fight to reduce scarcity: the TESCREALs' true agenda is to exploit minorities to enrich corporations! Forget trying to do good in the world: didn't you hear that Sam Bankman-Fried called himself an EA and yet was a bad guy? And safety in AI research? Nonsense, this is just part of the TESCREAL mythology of the AI godhead!

Gebru takes real problems in a bunch of fields and the culture surrounding them (problems that people are trying to address, including, nominally, her!) and declares a conspiracy in which these problems are the flattened essence of these movements, essentially giving up on trying to improve matters. It's an argument supported by loose aesthetic associations and anecdotal cherrypicking, by taking tech CEOs at their word because they have the largest platform instead of considering that perhaps they have uniquely distorted understandings of the concepts they invoke, and by a sneering condescension toward anyone who gets placed in the "tech bro" box through aesthetic similarity.

I hate it, I hate it, I hate it, I hate it.


More women in big corporations mean a better chance of the public learning of any wrongdoing

A number of high-profile whistleblowers in the technology industry have stepped into the spotlight in the past few years. For the most part, they have been revealing corporate practices that thwart the public interest: Frances Haugen exposed personal data exploitation at Meta, Timnit Gebru and Rebecca Rivers challenged Google on ethics and AI issues, and Janneke Parrish raised concerns about a discriminatory work culture at Apple, among others.

Many of these whistleblowers are women – far more, it appears, than the proportion of women working in the tech industry. This raises the question of whether women are more likely to be whistleblowers in the tech field. The short answer is: “It’s complicated.”

For many, whistleblowing is a last resort to get society to address problems that can’t be resolved within an organization, or at least by the whistleblower. It speaks to the organizational status, power and resources of the whistleblower; the openness, communication and values of the organization in which they work; and to their passion, frustration and commitment to the issue they want to see addressed. Are whistleblowers more focused on the public interest? More virtuous? Less influential in their organizations? Are these possible explanations for why so many women are blowing the whistle on big tech?

To investigate these questions, we, a computer scientist and a sociologist, explored the nature of big tech whistleblowing, the influence of gender, and the implications for technology’s role in society. What we found was both complex and intriguing.

Narrative of virtue

Whistleblowing is a difficult phenomenon to study because its public manifestation is only the tip of the iceberg. Most whistleblowing is confidential or anonymous. On the surface, the notion of female whistleblowers fits with the prevailing narrative that women are somehow more altruistic, focused on the public interest or morally virtuous than men.

Consider an argument made by the New York State Woman Suffrage Association around giving U.S. women the right to vote in the 1920s: “Women are, by nature and training, housekeepers. Let them have a hand in the city’s housekeeping, even if they introduce an occasional house-cleaning.” In other words, giving women the power of the vote would help “clean up” the mess that men had made.

More recently, a similar argument was used in the move to all-women traffic enforcement in some Latin American cities under the assumption that female police officers are more impervious to bribes. Indeed, the United Nations has recently identified women’s global empowerment as key to reducing corruption and inequality in its world development goals.

There is data showing that women, more so than men, are associated with lower levels of corruption in government and business. For example, studies show that the higher the share of female elected officials in governments around the world, the lower the corruption. While this trend in part reflects the tendency of less corrupt governments to more often elect women, additional studies show a direct causal effect of electing female leaders and, in turn, reducing corruption.

Experimental studies and attitudinal surveys also show that women are more ethical in business dealings than their male counterparts, and one study using data on actual firm-level dealings confirms that businesses led by women are directly associated with a lower incidence of bribery. Much of this likely comes down to the socialization of men and women into different gender roles in society.

Hints, but no hard data

Although women may be acculturated to behave more ethically, this leaves open the question of whether they really are more likely to be whistleblowers. The full data on who reports wrongdoing is elusive, but scholars try to address the question by asking people about their whistleblowing orientation in surveys and in vignettes. In these studies, the gender effect is inconclusive.

However, women appear more willing than men to report wrongdoing when they can do so confidentially. This may be related to the fact that female whistleblowers may face higher rates of reprisal than male whistleblowers.

In the technology field, there is an additional factor at play. Women are under-represented both in numbers and in organizational power. The “Big Five” in tech – Google, Meta, Apple, Amazon and Microsoft – are still largely white and male.

Women currently represent about 25% of their technology workforce and about 30% of their executive leadership. Women are prevalent enough now to avoid being tokens but often don’t have the insider status and resources to effect change. They also lack the power that sometimes corrupts, referred to as the corruption opportunity gap.

In the public interest

Marginalized people often lack a sense of belonging and inclusion in organizations. The silver lining to this exclusion is that those people may feel less obligated to toe the line when they see wrongdoing. Given all of this, it is likely that some combination of gender socialization and female outsider status in big tech creates a situation where women appear to be the prevalent whistleblowers.

It may be that whistleblowing in tech is the result of a perfect storm between the field’s gender and public interest problems. Clear and conclusive data does not exist, and without concrete evidence the jury is out. But the prevalence of female whistleblowers in big tech is emblematic of both of these deficiencies, and the efforts of these whistleblowers are often aimed at boosting diversity and reducing the harm big tech causes society.

More so than any other corporate sector, tech pervades people’s lives. Big tech creates the tools people use every day, defines the information the public consumes, collects data on its users’ thoughts and behavior, and plays a major role in determining whether privacy, safety, security and welfare are supported or undermined.

And yet, the complexity, proprietary intellectual property protections and ubiquity of digital technologies make it hard for the public to gauge the personal risks and societal impact of technology. Today’s corporate cultural firewalls make it difficult to understand the choices that go into developing the products and services that so dominate people’s lives.

Of all areas within society in need of transparency and a greater focus on the public interest, we believe the most urgent priority is big tech. This makes the courage and the commitment of today’s whistleblowers all the more important.


This is what I mean when I say everyone who is most motivated to talk about AI art right now is aggressively stupid and bad at posting. You don't actually know my position, you are just having peepee tantrums in my inbox because you saw me bring up a single criticism of a single aspect of the way image generators are currently resourced. You are the most annoying type of person online right now and you make me embarrassed to be even remotely associated with this conversation.

I don't have anything against AI, you fucking babies.

The technology is cool. I'd love to be able to use it ethically for fun and as part of my artistic workflow. It would be great if it was possible to engage with these tools without overriding other people's desire to be excluded from datasets. It would be sick as hell if this tech wasn't built on the labour of people paid $2 a day to comb through images of decapitations and child exploitation and tag them appropriately for safe consumption by an algorithm. It would knock my fucking socks off if we could talk about the issues with emerging technologies like grown adults who want to fix them, instead of having to stop every single time and deal with people like you who plug your ears and shit and puke and scream if anyone points out the toy you like isn't already perfect. At the very least, if you're going to march into my inbox and be like this, learn to post. This is dogshit.


The horrifying tale of a blockchain-based virtual sweatshop

In 2004, my wife came home from the Game Developers Conference with a wild story. A presenter there claimed that he had set up a sweatshop on the US/Mexican border where he paid low-wage workers to do repetitive tasks in Everquest to amass virtual gold, which was sold on Ebay to lazier, richer players.

The presenter was a well-known bullshitter and people were skeptical at the time, but my imagination was fired. I sat down at my keyboard and wrote “Anda’s Game,” a story about “gold farmers” who form an in-game, transnational trade-union under their bosses’ noses:

https://www.salon.com/2004/11/15/andas_game/

“Anda’s Game” was a surprise hit. It got reprinted in the year’s Best American Short Stories, won a bunch of awards, and Jen Wang and First Second turned it into the NYT bestselling graphic novel “In Real Life”:

https://firstsecondbooks.com/books/new-book-in-real-life-by-cory-doctorow-and-jen-wang/

Then, in 2010, I adapted the story into For the Win, a YA novel about gold farming and global trade unions (led by the Industrial Workers of the World Wide Web, AKA IWWW, AKA Webblies):

https://craphound.com/category/ftw/

There’s an old cyberpunk writers’ joke that “cyberpunk is a warning, not a suggestion.” Alas, my parable-like stories about how digital technology enables the creation of new, high-tech sweatshops that arbitrage weak labor protections in the global south to worsen working conditions everywhere embodied the punchline to that cyberpunk joke. Over and over, these stories became touchstones for all kinds of global, digital labor exploitation and global, digital labor solidarity.

But sometimes, the stories don’t merely analogize to describe current situations — they end up very on-the-nose. Nowhere is that more true than with the blockchain-based, play-to-earn, NFT-infected gaming world, whose standard-bearer is the scandal-haunted Axie Infinity.

This week, my mentions have been full of “Don’t create the Torment Nexus” jokes referencing Neirin Gray Desai’s outstanding Rest of World story on the rise and implosion of the “play-to-earn” Minecraft/blockchain game Critterz:

https://restofworld.org/2022/minecraft-nft-ban-critterz/

Critterz was yet another one of those blockchain games, but they made a fatal mistake: they built their virtual sweatshop on Minecraft, whose parent company, Mojang (a subsidiary of Microsoft), banned NFT integration, stating: “blockchain technologies are not permitted to be integrated inside our Minecraft client and server applications nor may they be utilized to create NFTs associated with any in-game content, including worlds, skins, personal items, or other mods.”

Very quickly, the in-game money issued by Critterz tanked, and players — both the poor people who actually played the game, and the rich people who bought the treasures they earned from them — ran for the exits.

Even without Minecraft’s ban on NFTs, play-to-earn is in serious trouble. As the sector seeks a new lifeline, some wild ideas are emerging, straight out of the Torment Nexus. For example, Desai talked to Mikhai Kossar, who consults on NFT games. Kossar proposed that the future of play-to-earn might be poor people pretending to be non-player characters to give richness to the in-game experience of wealthy people. They could “just populate the world, maybe do a random job or just walk back and forth, fishing, telling stories, a shopkeeper, anything is really possible.”

There’s another tech joke, that “AI” stands for “Absent Indians” — the gag being that the “AIs” you interact with in the world are actually low-waged Indian workers pretending to be bots.

Once again — and I honestly can’t believe I have to say this — that joke is a warning, not a suggestion.

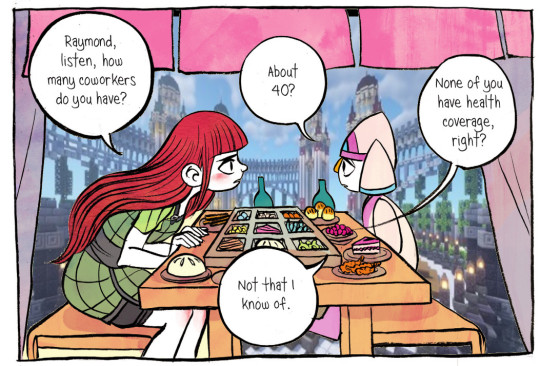

Image: Jen Wang (modified); Critterz (modified)

[Image ID: A panel from the graphic novel 'In Real Life' in which Anda and Raymond are having a union organizing talk; the background of the panel has been replaced with a screengrab from the Critterz Minecraft world.]


// nothing tells me someone doesn't understand modern economics or capitalism more than them trying to claim marx explains this or using the term 'late-stage capitalism'

marx's entire theory of economics is entirely incompatible with the gig economy

and there is no 'late stage' it just eventually becomes oligarchy

it's as stupid as claiming that Malthus understands economics and that late-stage feudalism was the end point of economics

the big secret in economics that no one likes to talk about is the very existence of the gig economy, where the companies who make money off services do not actually own the means of production themselves, and instead allow the worker to own the means of production and thus incur all the risk associated with owning it!

you have real estate companies that own no real estate, you have taxi companies that own no cars, and you have contractor companies that employ no contractors. That is entirely incompatible with workers seizing the means of production, because they already possess them, and are now poorer and more exploited than ever, because they do not possess the platforms that facilitate economic activity.

In other words, it isn't the means of production that stops workers from making money, it's the fact that it is impossible for them all to own the market square where economic activity actually happens. An Uber driver or Fiverr writer owns the means of production, but all these companies have done is undercut their industries and strip away worker protections in the name of financial freedom.

In other words, we shouldn't be worried about AI, we should be worried about the gig economy eating every industry, until no one actually works anywhere; they just get rented out by other customers, paying fees to platforms that neither own the means of production nor employ any actual workers and thus aren't required to provide benefits or security.


The Future of Finance: How Fintech Is Winning the Cybersecurity Race

In the cyber age, the financial world has been reshaped by fintech's relentless innovation. Mobile banking apps put our financial lives at our fingertips, and online investment platforms have revolutionised wealth management. Yet beneath this veneer of convenience and accessibility lies an ominous spectre: the looming threat of cyberattacks on the financial sector. The number of cyberattacks is expected to increase by 50% in 2023. The global fintech market is expected to reach $324 billion by 2028, growing at a CAGR of 25.2% from 2023 to 2028. This growth makes the fintech market an even more attractive target for cyberattacks. To counter this, fintech companies rely on a range of security measures and emerging technologies; let's take a closer look at them.
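If the two market figures above are taken at face value (they are quoted here without a source), the standard compound-growth formula, end = start × (1 + CAGR)^years, lets you back out the market size they imply for 2023. A small sketch; the function name is our own:

```python
def implied_start_value(end_value, cagr, years):
    """Invert end = start * (1 + cagr) ** years to recover the starting value."""
    return end_value / (1 + cagr) ** years


# $324 billion in 2028 after five years of 25.2% annual growth (2023 to 2028).
base_2023 = implied_start_value(324.0, 0.252, 5)
print(f"Implied 2023 market size: about ${base_2023:.0f} billion")
# prints: Implied 2023 market size: about $105 billion
```

In other words, the projection amounts to the market roughly tripling (1.252^5 ≈ 3.08) over five years.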

Cybersecurity Measures in Fintech

To mitigate the ever-present threat of cyberattacks, fintech companies take a multifaceted approach to cybersecurity. Here are some key measures:

1. Encryption

Encrypting data at rest and in transit is fundamental to protecting sensitive information. Strong encryption algorithms ensure that even if a hacker gains access to data, it remains unreadable without the decryption keys.

2. Multi-Factor Authentication (MFA)

MFA adds an extra layer of security by requiring users to provide multiple forms of verification (e.g., passwords, fingerprints, or security tokens) before gaining access to their accounts.
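A widely used second factor is the time-based one-time password (TOTP, RFC 6238): the six-digit code an authenticator app shows. The sketch below implements it with the Python standard library to show how little is involved. The helper names are our own, and a production system should use a vetted library and store per-user secrets securely.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_code(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept the code for the current step or +/- `window` steps (clock drift)."""
    if len(submitted) != digits:
        return False  # never let the client choose a shorter, easier code
    now = time.time() if at is None else at
    return any(
        hmac.compare_digest(totp(secret, now + d * step, step, digits).encode(),
                            submitted.encode())
        for d in range(-window, window + 1)
    )


# RFC 6238 test secret; a real secret is random and stored server-side per user.
SECRET = b"12345678901234567890"
print(totp(SECRET, at=59, digits=8))  # 94287082 (RFC 6238 test vector)
```

`hmac.compare_digest` is used so that checking a code takes the same time whether the first digit or the last one is wrong.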

3. Continuous Monitoring

Fintech companies employ advanced monitoring systems that constantly assess network traffic for suspicious activities. This allows for real-time threat detection and rapid response.
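As a very small example of the kind of rule such monitoring evaluates continuously, the sketch below flags a source that produces too many failed logins within a sliding time window. Real monitoring looks at far more signals; the class name and thresholds here are our own assumptions.

```python
from collections import defaultdict, deque


class FailedLoginMonitor:
    """Flag a source (e.g. an IP address) with too many failed logins in a sliding window."""

    def __init__(self, max_failures=5, window_s=60.0):
        self.max_failures = max_failures
        self.window_s = window_s
        self._failures = defaultdict(deque)  # source -> timestamps of recent failures

    def record_failure(self, source, timestamp):
        """Record one failed login; return True if the source should be flagged."""
        recent = self._failures[source]
        recent.append(timestamp)
        # Drop failures that have slid out of the time window.
        while recent and timestamp - recent[0] > self.window_s:
            recent.popleft()
        return len(recent) >= self.max_failures


monitor = FailedLoginMonitor()
alerts = [monitor.record_failure("203.0.113.7", t) for t in (0, 10, 20, 30, 40)]
print(alerts)  # [False, False, False, False, True]
```

A deque keeps each check cheap: old timestamps fall off the front as new ones arrive, so memory per source stays bounded by the window.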

4. Penetration Testing

Regular penetration testing, performed by ethical hackers, helps identify vulnerabilities in systems and applications before malicious actors can exploit them.

5. Employee Training

Human error is a significant factor in cybersecurity breaches. Companies invest in cybersecurity training programs to educate employees about best practices and the risks associated with cyber threats.

6. Incident Response Plans

Having a well-defined incident response plan in place ensures that, in the event of a breach, the company can respond swiftly and effectively to mitigate the damage.
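The security tokens mentioned under Multi-Factor Authentication are often authenticator-app codes generated with the TOTP algorithm (RFC 6238), which layers a time step on top of HOTP (RFC 4226). Below is a minimal sketch of that algorithm using only the standard library; the function names are illustrative, and a production system would use a vetted library, tolerate small clock drift, and rate-limit attempts.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226), using HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low 4 bits choose a 4-byte slice
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP keyed on the current time step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify(secret: bytes, submitted: str, at: Optional[float] = None) -> bool:
    """Check a submitted code; compare_digest avoids leaking timing information."""
    return hmac.compare_digest(totp(secret, at), submitted)
```

The server and the user's device share the secret once at enrolment; afterwards both derive the same short-lived code independently, so a stolen password alone is not enough to log in.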

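Continuous monitoring often starts with simple rules over event streams. As one illustration, here is a sketch of a sliding-window detector that flags an account after repeated failed logins; the thresholds (5 failures in 60 seconds) and the class name are hypothetical, and events are assumed to arrive in time order per account.

```python
from collections import defaultdict, deque


class FailedLoginMonitor:
    """Flags an account when too many failed logins fall inside a sliding time window."""

    def __init__(self, max_failures: int = 5, window_seconds: float = 60.0):
        # Hypothetical thresholds; real systems tune these per risk profile.
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self._failures = defaultdict(deque)  # account -> timestamps of recent failures

    def record_failure(self, account: str, timestamp: float) -> bool:
        """Record one failed login and return True if the account should be flagged."""
        recent = self._failures[account]
        recent.append(timestamp)
        # Evict failures that have slid out of the window (the newest one always stays).
        while timestamp - recent[0] > self.window_seconds:
            recent.popleft()
        return len(recent) >= self.max_failures


monitor = FailedLoginMonitor()
for t in (0, 10, 20, 30, 40):
    flagged = monitor.record_failure("alice", t)
print(flagged)  # prints True: five failures within 60 seconds
```

A deque keeps eviction cheap, since old timestamps always leave from the front; the flag would then feed the real-time response step described above.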
Emerging Technologies in Fintech Cybersecurity

As cyber threats continue to evolve, so do cybersecurity technologies in fintech. Here are some emerging technologies that are making a significant impact:

1. Artificial Intelligence (AI)

AI and machine learning algorithms are used to analyse vast amounts of data and identify patterns indicative of cyber threats. This allows for proactive threat detection and quicker response times.

2. Blockchain

Blockchain technology is employed to enhance the security and transparency of financial transactions. Because each block is cryptographically linked to the one before it, transaction records are tamper-evident: altering a past record breaks the chain and can be detected.

3. Biometrics

Fintech companies are increasingly adopting biometric authentication methods, such as facial recognition and fingerprint scanning, to provide a higher level of security than traditional passwords.

4. Quantum-Safe Encryption

With the advent of quantum computing, which threatens widely used public-key algorithms such as RSA and elliptic-curve cryptography, fintech companies are exploring quantum-safe encryption techniques to future-proof their security measures.
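To make the AI item's "identify patterns" idea concrete, the sketch below flags transaction amounts that sit far outside an account's history. This is deliberately a simple statistical baseline (a z-score test), not a machine-learning model; ML systems learn far richer patterns, but the principle of scoring new events against learned normal behaviour is the same. The 3-standard-deviation threshold and the sample amounts are hypothetical.

```python
from statistics import mean, stdev


def flag_outliers(history: list[float], new_amounts: list[float], threshold: float = 3.0) -> list[float]:
    """Return new amounts more than `threshold` standard deviations from the historical mean.

    `history` needs at least two values (stdev is undefined for fewer).
    """
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Perfectly uniform history: anything different counts as unusual.
        return [x for x in new_amounts if x != mu]
    return [x for x in new_amounts if abs(x - mu) / sigma > threshold]


history = [42.0, 38.5, 51.0, 45.25, 40.0, 47.5, 39.0, 44.0]  # hypothetical past card payments
print(flag_outliers(history, [43.0, 950.0, 36.0]))  # prints [950.0]
```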

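The core mechanism behind the Blockchain item's tamper-resistance is a hash chain: each record's hash also covers the previous hash, so editing any past record changes every hash after it. The sketch below shows only that tamper-evidence; on its own it does not make records immutable, because whoever controls the ledger could recompute every hash, which is why real blockchains add distributed consensus. The record fields are hypothetical.

```python
import hashlib
import json
from typing import Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def link_hash(prev_hash: str, record: dict) -> str:
    """Hash a record together with the hash of the record before it."""
    payload = prev_hash + json.dumps(record, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records: list[dict]) -> list[str]:
    hashes, prev = [], GENESIS
    for record in records:
        prev = link_hash(prev, record)
        hashes.append(prev)
    return hashes


def first_tampered(records: list[dict], hashes: list[str]) -> Optional[int]:
    """Recompute the chain; return the index of the first mismatch, or None if intact."""
    prev = GENESIS
    for i, (record, stored) in enumerate(zip(records, hashes)):
        prev = link_hash(prev, record)
        if prev != stored:
            return i
    return None


ledger = [{"from": "A", "to": "B", "amount": 10}, {"from": "B", "to": "C", "amount": 4}]
hashes = build_chain(ledger)
ledger[1]["amount"] = 400  # a malicious edit after the fact
print(first_tampered(ledger, hashes))  # prints 1
```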
Conclusion

In the realm of fintech, where trust and security are paramount, the importance of cybersecurity cannot be overstated. Fintech companies must remain vigilant, combining advanced digital transformation solutions, employee training, and robust incident response plans to protect sensitive financial data from cyber threats. As the industry continues to evolve, staying one step ahead of cybercriminals will be an ongoing challenge, but one that fintech firms must embrace to ensure their continued success and their customers' financial well-being.

3 notes

Text

AI Winter is coming

mostly @northshorewave who has been worried about this

The other day a friend wanted to show off his positive interactions with ChatGPT. He’d used it to write a 20-question quiz for the party we were at. I asked if I could proofread the questions and promised not to look up the answers. It contained stuff like:

“Which one of these countries does not have a square flag? a) Switzerland b) China c) Britain d) Egypt”

Switzerland is the only one of them that does have a square flag. ChatGPT is an overgrown autocomplete that “knows” to associate the concepts “square flag” and “Switzerland” and the general shape of a quiz question, and mashes words into this template. When I pointed this out, my friend was rather disappointed and quickly set to reviewing the quiz.

Then I asked to use his laptop for a moment, and showed off ChatGPT’s propensity to hallucinate by asking it for a summary of a nonexistent Wikipedia article whose title I made up on the spot.

ChatGPT happily summarized the article as describing a Danish children’s TV series involving two boys who go to Mars in their home-built spaceship to look for their dad who disappeared on a research expedition there. The series had 12 episodes that ran from 2005 to 2006. It was produced by (Danish studio name I don’t remember) for a cost of fourteen million dollars.

My friend spent the next several minutes on Google checking whether this series had at any point existed, and rushing through the five stages of grief. :^D

This looks to me like a miniature of the current hype cycle (“AI Summer”), which will die in a year or two, to be followed by another “AI Winter” of disappointment. I say another because there have been at least three, and possibly more minor ones. Experts dispute the count, there’s no objective number, but this is my impression:

In the 60s, there was an AI hype cycle. It produced a lot of obscure tech and the moderately famous ELIZA, an early chatbot-psychotherapist. ELIZA arguably passed the Turing test in the very narrow sense of “some people talking to it thought it was human”. AI researchers were sure that full humanlike AI was probably just a decade away.

Enter the 70s, humanlike AI is nowhere close, ELIZA is clearly just a trivial grammar engine, AI winter sets in, people and funding leave the field.
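For a sense of what “trivial grammar engine” means: ELIZA's famous DOCTOR script matched keywords in the user's sentence and slotted fragments, with pronouns swapped, into canned reply templates. The sketch below imitates that idea; the rules are invented for illustration and are not Weizenbaum's original script.

```python
import re

# Pronoun swaps so that "my boss" is echoed back as "your boss".
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}

# (pattern, reply template) pairs, tried in order; {0} receives the reflected capture.
RULES = [
    (r"i feel (.*)", "Why do you feel {0}?"),
    (r"i am (.*)", "How long have you been {0}?"),
    (r".*\bmother\b.*", "Tell me more about your family."),
]


def reflect(fragment: str) -> str:
    return " ".join(REFLECTIONS.get(word, word) for word in fragment.split())


def respond(sentence: str) -> str:
    text = sentence.lower().strip(" .!?")
    for pattern, template in RULES:
        match = re.fullmatch(pattern, text)
        if match:
            return template.format(*(reflect(group) for group in match.groups()))
    return "Please go on."  # no keyword matched: fall back to a neutral prompt


print(respond("I feel ignored by my boss."))  # prints: Why do you feel ignored by your boss?
```

There is no model of meaning anywhere in it, which is why the illusion collapsed once people looked closely.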

A second hype cycle, around “expert systems” (AI packed full of knowledge and rules and heuristics), started in the early 80s. Surely this time AI is close, now that it knows stuff. Nope - AI winter 2 around late 80s-1990. My pet nerd example here is Eurisko winning a tournament of Traveller TCS - a very very large wide-open sandbox game with custom-designed ships in space battles, which has too many possibilities and too much rock-paper-scissors to solve.

Eurisko exploited enough edge cases and loopholes and cheese tactics that the judges changed the rules for next year. Eurisko won the next year again with new cheese, and the judges said “please don’t come back”.

Expert systems did spread into businesses and automation, but nobody thinks “AI” anymore about the automatic crop-picking robot that can tell green crops from green leaves.

In the late 90s, another AI hype cycle starts. A focus for this one is Deep Blue defeating world chess champion Garry Kasparov in 1997. Chess was a proverbial smart people game; is the AI finally smart enough to be humanlike now that it can beat the smartest person in the world?

No. Instead, chess stopped being a proverbial smart people game, now that it could be brute-forced, and Deep Blue looked less smart and more fast, having enough computing power to examine 200 million positions per second by mostly brute force. Well, I’d play a lot better too if I got enough subjective time to examine possible moves on 200 million virtual boards.
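“Brute force” here means game-tree search: try every move, every reply, and so on, then pick the line that works out best assuming perfect play from both sides. Deep Blue ran a far more elaborate alpha-beta search with a hand-tuned evaluation function on custom hardware; the sketch below is plain exhaustive minimax on tic-tac-toe, small enough to search to the end, with memoization added so it finishes instantly.

```python
from functools import lru_cache
from typing import Optional

LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8), (0, 3, 6), (1, 4, 7), (2, 5, 8), (0, 4, 8), (2, 4, 6)]


def winner(board: str) -> Optional[str]:
    """Return "X" or "O" if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != "." and board[a] == board[b] == board[c]:
            return board[a]
    return None


@lru_cache(maxsize=None)
def minimax(board: str, player: str) -> int:
    """Value for X under perfect play: +1 X wins, -1 O wins, 0 draw."""
    won = winner(board)
    if won is not None:
        return 1 if won == "X" else -1
    if "." not in board:
        return 0
    other = "O" if player == "X" else "X"
    scores = [minimax(board[:i] + player + board[i + 1:], other)
              for i, cell in enumerate(board) if cell == "."]
    return max(scores) if player == "X" else min(scores)


print(minimax("." * 9, "X"))  # prints 0: perfect play from both sides is a draw
```

Nothing in it understands tic-tac-toe; it simply looks at everything, which is the point being made about Deep Blue.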

AI winter 3 in the 2000s.

Now machine learning is in another hype cycle, centered on neural networks, running from the mid-2010s (maybe from AlphaGo v Lee Sedol?) into present-day ChatGPT. Maybe now it’s finally going to be a real boy...

...but history suggests not. It seems likely to me that ChatGPT’s failures will become more blatant, chatbot detection will become more common, and massive disappointment will set in within a few years now that the hype is so high. A few years after AI winter 4, we’ll be accustomed to the rather more limited things that GPT makes a good tool for.

A lot of people are saying that ChatGPT or Bing Sydney passes such-and-such test. Consider: is this a test for which answer material is available on the internet? Because a lot of ChatGPT behavior involves, basically, searching for answers to copypaste in internet-derived training data. This is a great technique for sounding moderately intelligent on any sort of test or in any field; and a terrible technique for advancing the state of the art, or saying anything I couldn’t find with my own search, or showing one’s skill at anything but copypaste.

ChatGPT is like a cheating D student, and its likely applications are on the order of “What if you had infinite D students as unpaid interns?”

(spamming publishing houses with D-grade schlock being one such)

9 notes

Text

A number of high-profile whistleblowers in the technology industry have stepped into the spotlight in the past few years. For the most part, they have been revealing corporate practices that thwart the public interest: Frances Haugen exposed personal data exploitation at Meta, Timnit Gebru and Rebecca Rivers challenged Google on ethics and AI issues, and Janneke Parrish raised concerns about a discriminatory work culture at Apple, among others.

Many of these whistleblowers are women – far more, it appears, than the proportion of women working in the tech industry. This raises the question of whether women are more likely to be whistleblowers in the tech field. The short answer is: “It’s complicated.”

For many, whistleblowing is a last resort to get society to address problems that can’t be resolved within an organization, or at least by the whistleblower. It speaks to the organizational status, power and resources of the whistleblower; the openness, communication and values of the organization in which they work; and to their passion, frustration and commitment to the issue they want to see addressed. Are whistleblowers more focused on the public interest? More virtuous? Less influential in their organizations? Are these possible explanations for why so many women are blowing the whistle on big tech?

To investigate these questions, we, a computer scientist and a sociologist, explored the nature of big tech whistleblowing, the influence of gender, and the implications for technology’s role in society. What we found was both complex and intriguing.

Narrative of virtue

Whistleblowing is a difficult phenomenon to study because its public manifestation is only the tip of the iceberg. Most whistleblowing is confidential or anonymous. On the surface, the notion of female whistleblowers fits with the prevailing narrative that women are somehow more altruistic, focused on the public interest or morally virtuous than men.

Consider an argument made by the New York State Woman Suffrage Association around giving U.S. women the right to vote in the 1920s: “Women are, by nature and training, housekeepers. Let them have a hand in the city’s housekeeping, even if they introduce an occasional house-cleaning.” In other words, giving women the power of the vote would help “clean up” the mess that men had made.

More recently, a similar argument was used in the move to all-women traffic enforcement in some Latin American cities under the assumption that female police officers are more impervious to bribes. Indeed, the United Nations has recently identified women’s global empowerment as key to reducing corruption and inequality in its world development goals.

There is data showing that women, more so than men, are associated with lower levels of corruption in government and business. For example, studies show that the higher the share of female elected officials in governments around the world, the lower the corruption. While this trend in part reflects the tendency of less corrupt governments to more often elect women, additional studies show a direct causal effect of electing female leaders and, in turn, reducing corruption.

Experimental studies and attitudinal surveys also show that women are more ethical in business dealings than their male counterparts, and one study using data on actual firm-level dealings confirms that businesses led by women are directly associated with a lower incidence of bribery. Much of this likely comes down to the socialization of men and women into different gender roles in society.

Hints, but no hard data

Although women may be acculturated to behave more ethically, this leaves open the question of whether they really are more likely to be whistleblowers. The full data on who reports wrongdoing is elusive, but scholars try to address the question by asking people about their whistleblowing orientation in surveys and in vignettes. In these studies, the gender effect is inconclusive.

However, women appear more willing than men to report wrongdoing when they can do so confidentially. This may be related to the fact that female whistleblowers may face higher rates of reprisal than male whistleblowers.

In the technology field, there is an additional factor at play. Women are under-represented both in numbers and in organizational power. The “Big Five” in tech – Google, Meta, Apple, Amazon and Microsoft – are still largely white and male.

Women currently represent about 25% of their technology workforce and about 30% of their executive leadership. Women are prevalent enough now to avoid being tokens but often don’t have the insider status and resources to effect change. They also lack the power that sometimes corrupts, referred to as the corruption opportunity gap.

In the public interest

Marginalized people often lack a sense of belonging and inclusion in organizations. The silver lining to this exclusion is that those people may feel less obligated to toe the line when they see wrongdoing. Given all of this, it is likely that some combination of gender socialization and female outsider status in big tech creates a situation where women appear to be the prevalent whistleblowers.

It may be that whistleblowing in tech is the result of a perfect storm between the field’s gender and public interest problems. Clear and conclusive data does not exist, and without concrete evidence the jury is out. But the prevalence of female whistleblowers in big tech is emblematic of both of these deficiencies, and the efforts of these whistleblowers are often aimed at boosting diversity and reducing the harm big tech causes society.

More so than any other corporate sector, tech pervades people’s lives. Big tech creates the tools people use every day, defines the information the public consumes, collects data on its users’ thoughts and behavior, and plays a major role in determining whether privacy, safety, security and welfare are supported or undermined.

And yet, the complexity, proprietary intellectual property protections and ubiquity of digital technologies make it hard for the public to gauge the personal risks and societal impact of technology. Today’s corporate cultural firewalls make it difficult to understand the choices that go into developing the products and services that so dominate people’s lives.

Of all areas within society in need of transparency and a greater focus on the public interest, we believe the most urgent priority is big tech. This makes the courage and the commitment of today’s whistleblowers all the more important.

#honestly I don't think women are naturally more virtuous than men#or any bioessentialism shit like that#but women are often raised to care more about their community and doing the right thing#we really need to start raising boys better#but I think that's why more whistleblowers are women#because women are more likely to have been raised to think of the community and to do the right thing

10 notes

Text

After four years of watching Donald Trump inflict flesh wounds on China with his ineffectual trade war, U.S. President Joe Biden appears to have found the jugular. The goal is the same, but this knife is sharper—and could set back China’s tech ambitions by as much as a decade.

The target: semiconductor chips, especially the cutting-edge variety used for supercomputers and artificial intelligence. New export controls announced by the Biden administration this month prohibit the sale of not only those chips to China but also the advanced equipment needed to make them, as well as knowledge from any U.S. citizens, residents, or green card holders.

The chips, wafer-thin and the size of a fingernail, underpin everything from our smartphones to the advanced weapons systems that the United States specifically called out in its filing announcing the export restrictions. Perhaps more important—and this is where the U.S. curbs will hurt China the most—they are indispensable to the technologies of the future, such as AI and self-driving cars, as well as virtually every industry from pharmaceuticals to defense.

“You can pick a cliche—people talk about it as the ‘new oil’ or whatever,” said Raj Varadarajan, a managing director and senior partner at the Boston Consulting Group whose research has focused on the semiconductor industry. “But it’s there in everything, it’s pervading everything, and that’s one of the reasons it’s become such a flashpoint.”

China has set out lofty ambitions for its technology sector, with several government plans over the past decade setting out targets such as self-sufficiency in high-tech manufacturing by 2025, global leadership in AI by 2030, and global industry standards dominance by 2035. The latest U.S. broadside is aimed squarely at that “Made in China” sign.

“I think this is part of also signaling to China that we are not just going to resolve to give China global leadership in some of these key areas,” said Daniel Gerstein, a senior policy researcher at the Rand Corp. who previously served in the U.S. Department of Homeland Security’s Science and Technology Directorate. “We don’t want to lose and become beholden, if you will, to Chinese approaches.”

The semiconductor industry is the cornerstone of that strategy, and China has made significant strides in the recent past. The country now accounts for 35 percent of the global market, according to the Semiconductor Industry Association (SIA). But that figure reflects the final sales of finished chips to electronics companies, many of which have large manufacturing operations concentrated in China. The more high-tech and critical parts of the process, such as chip design and initial production, are still dominated by the United States.

And while China can hold its own at the lower end of the spectrum and the production of older-generation chips, it still lags behind in the cutting-edge research, design, and advanced technology that the Biden administration’s export restrictions target. Those goals have now likely been pushed back several years.

A significant reason for China’s vulnerability, as well as its painstaking effort to achieve independence, is how interconnected the global semiconductor supply chain is. Chips will often be designed in one country; fabricated in another using machines from a third; tested in a fourth; and finally assembled and placed into electronic devices in a fifth—sometimes with a few more countries and steps in between.

And many of those countries have concentrated their strengths and capacities in certain parts of that process, creating potential bottlenecks that can easily be exploited. For instance, the SIA estimates that there are “more than 50 points across the value chain where one region holds more than 65% of the global market share.” And 92 percent of manufacturing capacity for the world’s most advanced chips is concentrated in Taiwan; the remaining 8 percent is in South Korea.

The United States is trying to hedge its bets on that front as well, passing the CHIPS and Science Act this year, which provides $52 billion in incentives—most of it for companies that set up chip factories on U.S. soil—and hundreds of billions of dollars more to further shore up its research and development capabilities. Biden has been doing the rounds in upstate New York this month, touting the impact of the act, including at an IBM plant in Poughkeepsie (a day before the export controls were announced) and a Micron facility in Syracuse on Thursday.

For the United States, building up its own manufacturing ecosystem is a fail-safe. For China, it has rapidly become an absolute necessity.

“This is an effort that is going to take hundreds of billions of dollars and an incredible amount of engineering talent and energy to recreate a semiconductor supply chain that doesn’t involve U.S. technology,” said Jordan Schneider, a senior analyst at the Rhodium Group. “This supply chain is so globalized, but also so specialized, that at any step in it there’s only a handful of firms in the world that can do it, and if you’re sort of locked out of any one of these steps, then you can’t make chips.”

There are still some unanswered questions, including how the restrictions will be implemented in practice. In many cases, they give companies the option to apply for licenses to use and sell U.S. technology.

“It’s not clear that permission will be denied. It’s very possible that permission will be given, and so it’ll just delay and slow down some things,” Varadarajan said.

The other big question is whether and how China might hit back. Beijing has slammed what it calls “abuse” of export controls and warned that the restrictions could ultimately “backfire” on Washington, but its response so far has been a far cry from the tit-for-tat tariffs that were a hallmark of Trump’s trade war.

With semiconductors specifically, the vast gap between U.S. and Chinese technological capabilities means Beijing doesn’t have much with which to retaliate. While China accounts for a significant portion of mature node chips—older, larger semiconductors that are not as cutting-edge but are used in products such as cars—it is not indispensable, and production can likely shift elsewhere without much disruption.

“If the U.S. bans selling semiconductors to China, and China says [it is also] going to ban semiconductors, there isn’t much in terms of things that they make over there that they can ban equivalent to proportional response,” Varadarajan said.

China, in any event, is backed into a corner. Any move Beijing makes at the moment to cut itself off from the global supply chain could hit the country’s employment and exports, both of which it can ill afford with a current economic growth rate of 3 percent—far lower than government forecasts—and no easy way out.

Actions within China in the weeks after the U.S. export controls were announced betray internal uncertainty over what to do next. The Chinese government reportedly held emergency meetings with the country’s top semiconductor firms to assess the impact of the restrictions. The Financial Times reported that one of the leading firms, Yangtze Memory Technologies Corp., has already asked several American employees to leave.

China will be forced to double down on its yearslong effort to build its own semiconductor ecosystem and might just achieve its goal of becoming self-sufficient in the long run. But in the short term, there’s likely to be pain.

“The Chinese companies are going to have an enormously difficult time trying to push past these limits without U.S. technology, but any effort to do so just to get to a 2022 level will probably take a decade or more,” Schneider said. “And even with all the effort, it’s not clear that they would succeed.”

4 notes

Note

Touching 13 or 29 for Shawn and Leigh?? 🥺

I have combined these! Hope that's okay and that you enjoy! :)

Also on AO3

"Long day?"

The only response, a grunt half muffled by the throw pillows on the sofa, told Leigh a few things. One, it had definitely been a long day for his partner; two, it had put Shawn into a decidedly grumpy mood; and three, sometimes the frustration just needed a little help to disperse before it settled too deeply, before it had a chance to send the other man spiraling into a fit of despair and doubt. For all Shawn had come a long way over the past few years, certain trepidations echoed, holdovers from those first anxious months not knowing who he was, how he fit. Not knowing what to do with choice, that crucial ability to choose, of actions and consequences and the painful, beautiful reality of defining himself as best he could.

In the beginning, Shawn had been against the idea of going into politics. Now, he met with the Council or their undersecretaries almost daily.

I want to know that I did something, he'd said once, nervous, fingertips gingerly poised over the haptic keyboard, his response, his acceptance of a position he'd never thought he'd apply for let alone get, waiting for that life-altering button push. I want to know that I helped, even a little. Crucial connections made, relationships fostered with EDI, with the Geth, and Shawn wasn't so sure he'd be useful in this arena, wasn't sure he could overcome those massive gaps in his knowledge of the world and how it worked, but it meant something to him. Being in-between, not wholly synthetic or wholly organic, and it mattered that one part not be dismissed in favor of the other.

It mattered, he'd said, that he be treated as a whole person, and that his fully synthetic, AI friends and associates be treated as the people they were, too.

But causes close to the heart were often heavy, a weight Leigh liked to try and alleviate in any way he could.

So he set his own datapad aside, the work he often felt beholden to loosening its grip little by little as his priorities grew, changed, impacted in no small part by the man currently lying face down on his sofa, and made his way over. Knelt beside him. Considered him.

Reached out and nudged him, earning another grunt.

He smiled.

"What do you need, love?" he murmured, hand moving to run along Shawn's back. And the other man sighed, turning his head so they were face to face, and Leigh leaned in to kiss the little furrow that always appeared between Shawn's eyebrows when he frowned.

"You."

His smile widened, and he pressed a second kiss to his forehead.

"You always have me," he pointed out, cocking his eyebrow. "Think you can be more specific?"

Another nudge, another poke, and Shawn's scowl was deeply undermined by the fact that he was scrunching his nose, the corners of his mouth fighting against his desire to frown by curving ever so slightly upward.

"Hmmm."

Leigh's fingers glanced over his ribs, feather-light through the thin fabric of his T-shirt. Felt Shawn twitch beneath his touch, and gave him a wicked grin.

"Seems you've a few ideas yourself," Shawn went on, biting his lower lip as Leigh retraced the same area.

"Mmm maybe."

Shawn narrowed his eyes, scowl easing into a smirk, and Leigh could only laugh in surprise when the other man shifted, tugging him bodily onto the sofa until he was settled atop him.

"You realize this won't stop me from tickling you, right?"

"You realize this just levels the playing field, right?" Shawn retorted, a glint in his eyes, and Leigh barely had time to brace before his partner's fingers unerringly sought out his own ticklish spots and exploited them mercilessly. He writhed, trying in vain to reach Shawn's ribs again, gasping for breath as he laughed against the other man's shoulder.

"Truce!"

"Truce?"

Shawn's fingers eased and Leigh turned his head enough to catch his eye, catch the flush on his cheeks. Relaxed against him a moment as they both caught their breaths.

"Not sure you're in a position to call for a truce," Shawn mused, hands slipping under Leigh's shirt. His nails ran lightly up and down his back. "A surrender, now…"

"Mmm no, I don't think so," Leigh countered before making his move. He buried his face in Shawn's neck, blowing a raspberry directly against the sensitive spot below his ear.

"Leigh!"

"Should've taken the truce, love," Leigh retorted, unrepentant. He adjusted his position, knees bracketing Shawn's hips and squeezing to keep his partner from accidentally bucking him right off the couch. The last time they'd messed around like this had ended with an elbow cracking hard against the coffee table, ice packs, and a freaked out Shawn, terrified that he may have actually hurt his boyfriend. More limits, more boundaries explored and set, and they had both known what was coming when Shawn had pushed the coffee table away with his foot earlier, a quick move when he'd tugged Leigh into his current position.

Because Shawn, Leigh had been delighted to learn, loved to laugh. Loved to indulge in silliness. Loved touch, loved movement, loved connection.

Loved sharing these things with Leigh, encouraging, rewards mutually felt and savored in a way Leigh had never really explored with previous partners.

He wasn't the stiff, no-nonsense, broken soldier to Shawn Shepard.

He was just Leigh.

He shivered as he felt Shawn's hands abruptly shift downward, boldly slipping under his jeans and briefs to grab his ass. A squeeze had him gasping, laughing, nuzzling against him.

"Is this a distraction tactic?"

"Isn't this whole thing a distraction tactic?"

And Shawn's cheeks were still flushed, eyes alight, but his smile was soft. Knowing.

"Depends," Leigh replied, easing off the tickling and brushing his nose to Shawn's. "Is it helping?"

"It always helps," Shawn murmured. He leaned up, capturing his lips with his own.

"You always help," he added, giving him a nip.

"Good," Leigh whispered, shivering. Shawn arched against him, sighing, and his hands found their way back up, cupping Leigh's face. Holding him steady, eyes searching his -- seeing him. Knowing him. An affection that still took Leigh out at the knees, made him warm from the inside out only to be contrasted by the shudders jolting through him when Shawn pulled him down into another kiss -- fervent, fevered, the kind of kiss that left Leigh dizzy, left him marveling at the idea that Shawn had ever thought he'd be bad at this part. A distraction all its own, some part of him knew, but he was still surprised when Shawn abruptly sat up, arms around him, standing and readjusting his hold on him. Another flutter in his stomach, a part of Leigh that had never before appreciated how much he loved having a partner who could handle him like this, take care of him like this perking up and taking avid interest, and he knew what was coming, knew what was going to happen, but made no move to stop it --

"--Shawn!"

Peals of laughter and he clung to his partner all the tighter, legs around his waist to keep his balance as Shawn shamelessly retaliated with a long, drawn-out raspberry against Leigh's own neck.

"Something about love, war, and fairness," Shawn murmured, breathless, smiling against his lips as he kissed him again. Leigh was laughing, fingers tangling in Shawn's hair.

"Just keep kissing me," he breathed, their laughs blending until they muffled between their lips.

#my writing#prompt fill#Shawn Shepard#Leigh Coats#Shawn x Leigh#male shepard clone#major coats#mshep clone x major coats#mass effect#These Ineffable Somethings

7 notes

Text

Overcoming the Top Security Challenges of AI-Driven Low-Code/No Code Development

New Post has been published on https://thedigitalinsider.com/overcoming-the-top-security-challenges-of-ai-driven-low-code-no-code-development/

Low-code development platforms have changed the way people create custom business solutions, including apps, workflows, and copilots. These tools empower citizen developers and create a more agile environment for app development. Adding AI to the mix has only enhanced this capability. The low-code/no-code paradigm arose because organizations don’t have enough people with the skills (and time) to build all the apps, automations and other tools needed to drive innovation forward. Now, without needing formal technical training, citizen developers can leverage user-friendly platforms and Generative AI to create, innovate and deploy AI-driven solutions.

But how secure is this practice? The reality is that it’s introducing a host of new risks. Here’s the good news: you don’t have to choose between security and the efficiency that business-led innovation provides.

A shift beyond the traditional purview

IT and security teams are used to focusing their efforts on scanning and looking for vulnerabilities written into code. They’ve centered on making sure developers are building secure software, assuring the software is secure and then – once it’s in production – monitoring it for deviations or for anything suspicious after the fact.

With the rise of low code and no code, more people than ever are building applications and automations outside the traditional development process. These are often employees with little to no software development background, and these apps are being created outside of security’s purview.

This creates a situation where IT is no longer building everything for the organization, and the security team lacks visibility. In a large organization, you might get a few hundred apps built in a year through professional development; with low/no code, you could get far more than that. That’s a lot of potential apps that could go unnoticed or unmonitored by security teams.

A wealth of new risks

Some of the potential security concerns associated with low-code/no-code development include:

Not in IT’s purview – As mentioned above, citizen developers work outside the lines drawn for IT professionals, which creates a lack of visibility and shadow app development. These tools also let a very large number of people create apps and automations quickly, with just a few clicks. The result is an unknown number of apps being created at a rapid pace by an unknown number of people, without IT having the full picture.

No software development lifecycle (SDLC) – Developing software in this way means there’s no SDLC in place, which can lead to inconsistency, confusion and lack of accountability in addition to risk.

Novice developers – These apps are often built by people with less technical skill and experience, which opens the door to mistakes and security threats. They may not think about the security or development implications the way a professional developer would. And if a vulnerability is found in a component embedded in a large number of apps, it could be exploited across all of those instances.

Bad identity practices – Identity management can also be an issue. If you want to empower a business user to build an application, the number one obstacle is often a lack of permissions. Users frequently work around this, for example by building with someone else’s identity. When that happens, actions can no longer be attributed to the person who actually took them: if the builder accesses something they are not allowed to, or does something malicious, security will trace it back to the owner of the borrowed identity, because there is no way to distinguish between the two.
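To make the attribution problem concrete, here is a minimal sketch of how a security team might flag apps that run under an identity other than the person who built them. The record fields (`app`, `maker`, `runs_as`) and the assumption that a platform can export them are hypothetical, not any specific vendor's API. A mismatch is only a prompt for human review, since some apps legitimately run under approved service accounts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AppRecord:
    """Hypothetical inventory entry exported from a low-code platform."""
    app: str
    maker: str    # person who built the app
    runs_as: str  # identity whose credentials the app's connections use


def borrowed_identity_suspects(records, approved_service_accounts=frozenset()):
    """Return apps that run under someone else's identity.

    Apps running as an approved service account are skipped; every other
    record where maker != runs_as is flagged for review.
    """
    return [
        r for r in records
        if r.runs_as != r.maker and r.runs_as not in approved_service_accounts
    ]


if __name__ == "__main__":
    records = [
        AppRecord("expense-bot", maker="alice", runs_as="alice"),
        AppRecord("hr-report", maker="bob", runs_as="carol"),
        AppRecord("nightly-sync", maker="dave", runs_as="svc-integration"),
    ]
    for r in borrowed_identity_suspects(records, {"svc-integration"}):
        print(f"{r.app}: built by {r.maker}, runs as {r.runs_as}")
    # prints: hr-report: built by bob, runs as carol
```

The allow-list of service accounts is the design choice that keeps this usable: without it, every legitimately shared integration account would drown out the genuinely borrowed identities.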

No code to scan – Because there is no source code to review, teams lose the transparency that troubleshooting, debugging, and security analysis depend on, which can also raise compliance and regulatory concerns.

These risks can all contribute to data leakage. No matter how an application is built (with drag-and-drop, a text-based prompt, or code), it has an identity, has access to data, can perform operations, and needs to communicate with users. These apps move data, often between different parts of the organization, which can easily cross the data boundaries that are supposed to keep it contained.

Data privacy and compliance are also at stake. Sensitive data lives within these applications, but it is handled by business users who may not know how to store it properly, or may not even think to. That can lead to a host of additional issues, including compliance violations.

Regaining visibility

As mentioned, one of the big challenges with low/no code is that it falls outside the purview of IT and security, so data moves through apps that security teams cannot see. It is not always clear who is creating these apps, and there is an overall lack of visibility into what is really happening. Some organizations are not fully aware of it at all, or believe citizen development is not happening in their organization, when it almost certainly is.

So, how can security leaders gain control and mitigate risk? The first step is to look into the citizen developer initiatives within your organization, find out who (if anyone) is leading these efforts, and connect with them. You don’t want these teams to feel penalized or hindered; as a security leader, your goal should be to support their efforts while providing education and guidance on making the process safer.

Security must start with visibility. Key to this is creating an inventory of applications and developing an understanding of who is building what. Having this information will help ensure that if some kind of breach does occur, you’ll be able to trace the steps and figure out what happened.
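As a rough illustration of what "who is building what" can look like in practice, the sketch below indexes a small app inventory two ways: by builder, and by the data sources each app touches. The row format and the sample app and data-source names are hypothetical; a real inventory would come from a platform's admin export or API, whose format will differ by vendor.

```python
from collections import defaultdict

# Hypothetical inventory rows; field names and values are illustrative only.
INVENTORY = [
    {"app": "expense-bot", "maker": "alice", "data_sources": ["finance-db"]},
    {"app": "hr-report", "maker": "bob", "data_sources": ["hr-db", "sharepoint"]},
    {"app": "lead-sync", "maker": "alice", "data_sources": ["crm", "sharepoint"]},
]


def index_inventory(rows):
    """Build two lookups: who built what, and which apps touch each data source."""
    by_maker = defaultdict(list)
    by_source = defaultdict(list)
    for row in rows:
        by_maker[row["maker"]].append(row["app"])
        for source in row["data_sources"]:
            by_source[source].append(row["app"])
    return dict(by_maker), dict(by_source)


if __name__ == "__main__":
    by_maker, by_source = index_inventory(INVENTORY)
    print(by_maker["alice"])        # ['expense-bot', 'lead-sync']
    # After an incident involving "sharepoint", list every app that touches it:
    print(by_source["sharepoint"])  # ['hr-report', 'lead-sync']
```

The reverse index by data source is what supports tracing after a breach: given a compromised system, it answers which apps, and therefore which builders, were involved.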

Establish a framework for what secure development looks like. This includes the necessary policies and technical controls that will ensure users make the right choices. Even professional developers make mistakes when it comes to sensitive data; it’s even harder to control this with business users. But with the right controls in place, you can make it difficult to make a mistake.
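One way to "make it difficult to make a mistake" is a default-deny rule on where classified data may flow. The sketch below is a minimal version of such a technical control; the sensitivity labels, destination zones, and connector names are all hypothetical placeholders for an organization's own data-governance policy, not any platform's built-in feature.

```python
# Hypothetical sensitivity labels for data sources and trust zones for
# destinations; real values would come from the organization's own policy.
SOURCE_LABELS = {"hr-db": "confidential", "crm": "internal", "public-site": "public"}
DESTINATION_ZONES = {
    "sharepoint": "internal",
    "personal-email": "external",
    "third-party-api": "external",
}

# Which labels may flow to which zones. Anything not listed is denied.
ALLOWED = {
    "public": {"internal", "external"},
    "internal": {"internal"},
    "confidential": {"internal"},
}


def check_flow(source: str, destination: str) -> bool:
    """Return True if the policy allows data to move from source to destination.

    Unknown sources or destinations are denied by default, so a builder
    cannot bypass the rule by connecting to something unclassified.
    """
    label = SOURCE_LABELS.get(source)
    zone = DESTINATION_ZONES.get(destination)
    if label is None or zone is None:
        return False
    return zone in ALLOWED.get(label, set())


if __name__ == "__main__":
    print(check_flow("hr-db", "personal-email"))  # False: confidential data leaving
    print(check_flow("crm", "sharepoint"))        # True: internal stays internal
    print(check_flow("new-db", "sharepoint"))     # False: unclassified source
```

Denying by default is the key design choice here: a citizen developer who adds a new, unlabeled connector gets blocked and routed to review instead of silently moving data.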

Toward more secure low-code/no-code

The traditional process of manual coding can slow innovation, especially when time to market is critical. With today’s low-code and no-code platforms, even people without development experience can create AI-driven solutions. While this has streamlined app development, it can also jeopardize an organization’s safety and security. It doesn’t have to be a choice between citizen development and security, however; security leaders can partner with business users to strike a balance between the two.

Exploring the Ethical Quandaries of AI: Undress AI Remove Clothes

In the realm of artificial intelligence (AI), the line between innovation and ethical considerations is often blurred. The latest controversy to emerge is the advent of AI-powered tools designed to "undress" individuals in digital images, essentially stripping them of their clothing with a few clicks. This technology, often marketed under various names like "Remove Clothes" or "Cloth Remover," utilizes deep learning algorithms to generate realistic nude images from clothed pictures. While proponents tout its potential applications in various fields, including fashion design and entertainment, critics raise serious concerns regarding privacy, consent, and the exacerbation of societal issues.

Tools of this kind can be built on generative adversarial networks (GANs), a class of machine-learning models in which two neural networks, the generator and the discriminator, compete against each other. The generator creates synthetic images, while the discriminator tries to distinguish real images from generated ones. Through iterative training, the generator learns to produce increasingly convincing images, including images of unclothed individuals, by learning statistical patterns and features from large datasets of clothed and nude images.

Proponents argue that undress AI technology has legitimate applications, such as aiding fashion designers in envisioning how garments will fit and drape on the human body without the need for physical prototypes. Additionally, it could be utilized in virtual try-on services, allowing consumers to visualize clothing items before making a purchase. In the entertainment industry, it may streamline the creation of digital characters and animations, reducing production costs and enhancing realism.

However, the ethical implications of undressing AI are profound and multifaceted. One of the foremost concerns is the potential for misuse and exploitation. With the proliferation of deepfake technology, there's a real risk that malicious actors could weaponize undress AI to create non-consensual pornographic material or to harass individuals by manipulating their images without their consent. This raises serious issues related to privacy, consent, and the psychological well-being of those targeted.

Moreover, the deployment of undressing AI exacerbates existing societal issues, particularly those related to body image and objectification. By reducing individuals to mere bodies, stripped of context and agency, this technology perpetuates harmful stereotypes and reinforces unrealistic beauty standards. It not only undermines the dignity and autonomy of the individuals depicted but also contributes to a culture of commodification and exploitation.

From a legal standpoint, the use of undress AI raises complex questions about intellectual property rights, privacy laws, and the boundaries of digital manipulation. While some jurisdictions have begun to introduce legislation specifically targeting deepfake technology and non-consensual image sharing, enforcement remains challenging, particularly in the context of rapidly evolving AI capabilities.

Addressing the ethical challenges posed by undress AI requires a multifaceted approach involving technological, legal, and societal interventions. Technological solutions, such as watermarks and digital signatures embedded within images, could help verify an image's authenticity and detect unauthorized manipulation. Enhanced education and awareness campaigns are essential to inform the public about the risks associated with AI-generated content and the importance of consent and digital literacy.
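As a rough illustration of the signature idea, the sketch below attaches a tamper-evident tag to an image's bytes and checks it later. It deliberately swaps in HMAC-SHA256 from Python's standard library, which is a shared-secret message authentication code rather than a public-key digital signature; real provenance systems typically use public-key signatures so that anyone can verify an image without holding the secret. The key and sample bytes are hypothetical, and the tag is stored alongside the image rather than embedded in it.

```python
import hashlib
import hmac

# Hypothetical secret held by the publisher; in practice this would come
# from a key-management system, never from source code.
SECRET_KEY = b"example-publisher-key"


def sign_image(image_bytes: bytes, key: bytes = SECRET_KEY) -> str:
    """Return a hex HMAC-SHA256 tag over the exact image bytes."""
    return hmac.new(key, image_bytes, hashlib.sha256).hexdigest()


def verify_image(image_bytes: bytes, tag: str, key: bytes = SECRET_KEY) -> bool:
    """True only if the bytes are unchanged since sign_image was called."""
    expected = sign_image(image_bytes, key)
    # compare_digest avoids leaking information through timing differences
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    original = b"\x89PNG...pretend image data..."
    tag = sign_image(original)
    print(verify_image(original, tag))            # True
    print(verify_image(original + b"\x00", tag))  # False: any byte change breaks it
```

Because the tag covers the exact bytes, any change, including harmless re-compression or resizing, fails verification. A check like this shows that an image was altered, not how, and it cannot stop anyone from making and sharing a modified copy.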

Furthermore, policymakers must engage in proactive regulation to ensure that the development and deployment of AI technologies prioritize ethical considerations and respect fundamental rights and freedoms. This includes robust data protection laws, stringent enforcement mechanisms, and clear guidelines for the responsible use of AI in sensitive contexts.

Ultimately, the rise of undressing AI underscores the urgent need for a comprehensive ethical framework to govern the development and deployment of AI technologies. While innovation holds the promise of transformative benefits, it must not come at the expense of fundamental human rights and dignity. By fostering dialogue and collaboration among stakeholders, we can harness the potential of AI for positive societal impact while mitigating its risks and ensuring accountability.
