#bruce schneier

Text

The true post-cyberpunk hero is a noir forensic accountant

I'm touring my new, nationally bestselling novel The Bezzle! Catch me TOMORROW (Apr 17) in CHICAGO, then Torino (Apr 21), Marin County (Apr 27), Winnipeg (May 2), Calgary (May 3), Vancouver (May 4), and beyond!

I was reared on cyberpunk fiction, and I ended up spending 25 years at my EFF day-job working at the weird edge of tech and human rights, even as I wrote sf that tried to fuse my love of cyberpunk with my urgent, lifelong struggle over who computers do things for and who they do them to.

That makes me an official "post-cyberpunk" writer (TM). Don't take my word for it: I'm in the canon:

https://tachyonpublications.com/product/rewired-the-post-cyberpunk-anthology-2/

One of the editors of that "post-cyberpunk" anthology was John Kessel, who is, not coincidentally, the first writer to expose me to the power of literary criticism to change the way I felt about a novel, both as a writer and a reader:

https://locusmag.com/2012/05/cory-doctorow-a-prose-by-any-other-name/

It was Kessel's 2004 Foundation essay, "Creating the Innocent Killer: Ender's Game, Intention, and Morality," that helped me understand litcrit. Kessel expertly surfaces the subtext of Card's Ender's Game and connects it to Card's politics. In so doing, he completely reframed how I felt about a book I'd read several times and had considered a favorite:

https://johnjosephkessel.wixsite.com/kessel-website/creating-the-innocent-killer

This is a head-spinning experience for a reader, but it's even wilder to experience it as a writer. Thankfully, the majority of literary criticism about my work has been positive, but even then, discovering something that's clearly present in one of my novels, but which I didn't consciously include, is a (very pleasant!) mind-fuck.

A recent example: Blair Fix's review of my 2023 novel Red Team Blues which he calls "an anti-finance finance thriller":

https://economicsfromthetopdown.com/2023/05/13/red-team-blues-cory-doctorows-anti-finance-thriller/

Fix – a radical economist – perfectly captures the correspondence between my hero, the forensic accountant Martin Hench, and the heroes of noir detective novels. Namely, that a noir detective is a kind of unlicensed policeman, going to the places the cops can't go, asking the questions the cops can't ask, and thus solving the crimes the cops can't solve. What makes this noir is what happens next: the private dick realizes that these were places the cops didn't want to go, questions the cops didn't want to ask and crimes the cops didn't want to solve ("It's Chinatown, Jake").

Marty Hench – a forensic accountant who finds the money that has been disappeared through the cells in cleverly constructed spreadsheets – is an unlicensed tax inspector. He's finding the money the IRS can't find – only to be reminded, time and again, that this is money the IRS chooses not to find.

This is how the tax authorities work, after all. Anyone who followed the coverage of the big finance leaks knows that the most shocking revelation they contain is how stupid the ruses of the ultra-wealthy are. The IRS could prevent that tax-fraud, they just choose not to. Not for nothing, I call the Martin Hench books "Panama Papers fanfic."

I've read plenty of noir fiction and I'm a long-term finance-leaks obsessive, but until I read Fix's article, it never occurred to me that a forensic accountant was actually squarely within the noir tradition. Hench's perfect noir fit is either a happy accident or the result of a subconscious intuition that I didn't know I had until Fix put his finger on it.

The second Hench novel is The Bezzle. It's been out since February, and I'm still touring with it (Chicago tonight! Then Turin, Marin County, Winnipeg, Calgary, Vancouver, etc). It's paying off – the book's a national bestseller.

Writing in his newsletter, Henry Farrell connects Fix's observation to one of his own, about the nature of "hackers" and their role in cyberpunk (and post-cyberpunk) fiction:

https://www.programmablemutter.com/p/the-accountant-as-cyberpunk-hero

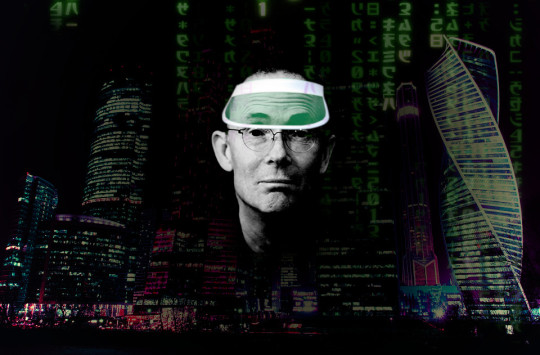

Farrell cites Bruce Schneier's 2023 book, A Hacker’s Mind: How the Powerful Bend Society’s Rules and How to Bend Them Back:

https://pluralistic.net/2023/02/06/trickster-makes-the-world/

Schneier, a security expert, broadens the category of "hacker" to include anyone who studies systems with an eye to finding and exploiting their defects. Under this definition, the more fearsome hackers are "working for a hedge fund, finding a loophole in financial regulations that lets her siphon extra profits out of the system." Hackers work in corporate offices, or as government lobbyists.

As Henry says, hacking isn't intrinsically countercultural ("Most of the hacking you might care about is done by boring seeming people in boring seeming clothes"). Hacking reinforces – rather than undermining – power asymmetries ("The rich have far more resources to figure out how to gimmick the rules"). We are mostly not the hackers – we are the hacked.

For Henry, Marty Hench is a hacker (the rare hacker that works for the good guys), even though "he doesn’t wear mirrorshades or get wasted chatting to bartenders with Soviet military-surplus mechanical arms." He's a gun for hire, that most traditional of cyberpunk heroes, and while he doesn't stand against the system, he's not for it, either.

Henry's pinning down something I've been circling around for nearly 30 years: the idea that though "the street finds its own use for things," Wall Street and Madison Avenue are among the streets that might find those uses:

https://craphound.com/nonfic/street.html

Henry also connects Martin Hench to Marcus Yallow, the hero of my YA Little Brother series. I have tried to make this connection myself, opining that while Marcus is a character who is fighting to save an internet that he loves, Marty is living in the ashes of the internet he lost:

https://pluralistic.net/2023/05/07/dont-curb-your-enthusiasm/

But Henry's Marty-as-hacker notion surfaces a far more interesting connection between the two characters. Marcus is a vehicle for conveying the excitement and power of hacking to young readers, while Marty is a vessel for older readers who know the stark terror of being hacked, by the sadistic wolves who're coming for all of us:

https://www.youtube.com/watch?v=I44L1pzi4gk

Both Marcus and Marty are explainers, as am I. Some people say that exposition makes for bad narrative. Those people are wrong:

https://maryrobinettekowal.com/journal/my-favorite-bit/my-favorite-bit-cory-doctorow-talks-about-the-bezzle/

"Explaining" makes for great fiction. As Maria Farrell writes in her Crooked Timber review of The Bezzle, the secret sauce of some of the best novels is "information about how things work. Things like locks, rifles, security systems":

https://crookedtimber.org/2024/03/06/the-bezzle/

Where these things are integrated into the story's "reason and urgency," they become "specialist knowledge [that] cuts new paths to move through the world." Hacking, in other words.

This is a theme Paul Di Filippo picked up on in his review of The Bezzle for Locus:

https://locusmag.com/2024/04/paul-di-filippo-reviews-the-bezzle-by-cory-doctorow/

Heinlein was always known—and always came across in his writings—as The Man Who Knew How the World Worked. Doctorow delivers the same sense of putting yourself in the hands of a fellow who has peered behind Oz’s curtain. When he fills you in lucidly about some arcane bit of economics or computer tech or social media scam, you feel, first, that you understand it completely and, second, that you can trust Doctorow’s analysis and insights.

Knowledge is power, and so expository fiction that delivers news you can use is fiction that makes you more powerful – powerful enough to resist the hackers who want to hack you.

Henry and I were both friends of Aaron Swartz, and the Little Brother books are closely connected to Aaron, who helped me with Homeland, the second volume, and wrote a great afterword for it (Schneier wrote an afterword for the first book). That book – and Aaron's afterword – has radicalized a gratifying number of principled technologists. I know, because I meet them when I tour, and because they send me emails. I like to think that these hackers are part of Aaron's legacy.

Henry argues that the Hench books are "purpose-designed to inspire a thousand Max Schrems – people who are probably past their teenage years, have some grounding in the relevant professions, and really want to see things change."

(Schrems is the Austrian privacy activist who, as a law student, set in motion the events that led to the passage of the EU's General Data Protection Regulation:)

https://pluralistic.net/2020/05/15/out-here-everything-hurts/#noyb

Henry points out that William Gibson's Neuromancer doesn't mention the word "internet" – rather, Gibson coined the term cyberspace, which, as Henry says, is "more ‘capitalism’ than ‘computerized information'… If you really want to penetrate the system, you need to really grasp what money is and what it does."

Maria also wrote one of my all-time favorite reviews of Red Team Blues, also for Crooked Timber:

https://crookedtimber.org/2023/05/11/when-crypto-meant-cryptography/

In it, she compares Red Team Blues to Dickens' Bleak House, but for the modern tech world:

You put the book down feeling it’s not just a fascinating, enjoyable novel, but a document of how Silicon Valley’s very own 1% live and a teeming, energy-emitting snapshot of a critical moment on Earth.

All my life, I've written to find out what's going on in my own head. It's a remarkably effective technique. But it's only recently that I've come to appreciate that reading what other people write about my writing can reveal things that I can't see.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/04/17/panama-papers-fanfic/#the-1337est-h4x0rs

Image:

Frédéric Poirot (modified)

https://www.flickr.com/photos/fredarmitage/1057613629 CC BY-SA 2.0

https://creativecommons.org/licenses/by-sa/2.0/

#pluralistic#science fiction#cyberpunk#literary criticism#maria farrell#henry farrell#noir#martin hench#marty hench#red team blues#the bezzle#forensic accountants#hackers#bruce schneier#post-cyberpunk#blair fix


Text

If we spent just one-tenth of the effort we spend prosecuting the poor on prosecuting the rich, it would be a very different world.

Bruce Schneier


Quote

If you think technology can solve your security problems, then you don’t understand the problems and you don’t understand the technology

Bruce Schneier


Text

The End of Trust

New Post has been published on https://www.aneddoticamagazine.com/the-end-of-trust/

EFF and McSweeney’s have teamed up to bring you The End of Trust (McSweeney’s 54). The first all-nonfiction McSweeney’s issue is a collection of essays and interviews focusing on issues related to technology, privacy, and surveillance.

The collection features writing by EFF’s team, including Executive Director Cindy Cohn, Education and Design Lead Soraya Okuda, Senior Investigative Researcher Dave Maass, Special Advisor Cory Doctorow, and board member Bruce Schneier.

Anthropologist Gabriella Coleman contemplates anonymity; Edward Snowden explains blockchain; journalist Julia Angwin and Pioneer Award-winning artist Trevor Paglen discuss the intersections of their work; Pioneer Award winner Malkia Cyril discusses the historical surveillance of black bodies; and Ken Montenegro and Hamid Khan of Stop LAPD Spying debate author and intelligence contractor Myke Cole on the question of whether there’s a way law enforcement can use surveillance responsibly.

The End of Trust is available to download and read right now under a Creative Commons BY-NC-ND license.

#bruce schneier#Cindy Cohn#collection of essays and interviews#Cory Doctorow#Dave Maass#Edward Snowden#EFF#Gabriella Coleman#privacy#Soraya Okuda#surveillance#technology#The End of Trust


Text

Schneier's Law: Anyone, from the most clueless amateur to the best cryptographer, can create an algorithm that he himself can’t break.

— Schneier's Law, written by cryptographer Bruce Schneier


Text

Limits of AI and LLM for attorneys

Note: Creepio is a featured player among Auralnauts.

The current infatuation with Artificial Intelligence (AI), especially at the state bar which is pushing CLEs about how lawyers need to get on the AI bandwagon, is generally an un-serious infatuation with a marketing concept.

AI and LLMs – large language models, on which much of recent AI is based – have nothing to do with accuracy. So, for a…


Text

Artificial intelligence will change so many aspects of society, largely in ways that we cannot conceive of yet. Democracy, and the systems of governance that surround it, will be no exception. In this short essay, I want to move beyond the “AI generated disinformation” trope and speculate on some of the ways AI will change how democracy functions – in both large and small ways.

When I survey how artificial intelligence might upend different aspects of modern society, democracy included, I look at four different dimensions of change: speed, scale, scope, and sophistication. Look for places where changes in degree result in changes of kind. Those are where the societal upheavals will happen.

Some items on my list are still speculative, but none require science-fictional levels of technological advance. And we can see the first stages of many of them today.

—Bruce Schneier [a major dude in cybersecurity btw]


Text

Do AI detectors work?

In short, no. While some (including OpenAI) have released tools that purport to detect AI-generated content, none of these have proven to reliably distinguish between AI-generated and human-generated content.

Additionally, ChatGPT has no “knowledge” of what content could be AI-generated. It will sometimes make up responses to questions like “did you write this [essay]?” or “could this have been written by AI?” These responses are random and have no basis in fact.


Text

''When most people look at a system, they focus on how it works. When security technologists look at the same system, they can’t help but focus on how it can be made to fail: how that failure can be used to force the system to behave in a way it shouldn’t, in order to do something it shouldn’t be able to do—and then how to use that behavior to gain an advantage of some kind.

That’s what a hack is: an activity allowed by the system that subverts the goal or intent of the system.

...

Hacking is how the rich and powerful subvert the rules to increase both their wealth and power. They work to find novel hacks, and also to make sure their hacks remain so they can continue to profit from them.

...It’s not that the wealthy and powerful are better at hacking, it’s that they’re less likely to be punished for doing so. Indeed, their hacks often become just a normal part of how society works. Fixing this is going to require institutional change. Which is hard, because institutional leaders are the very people stacking the deck against us.''

-Bruce Schneier, A Hacker's Mind


Text

Shamir Secret Sharing

It’s 3am. Paul, the head of PayPal database administration, carefully enters his elaborate passphrase at a keyboard in a darkened cubicle of 1840 Embarcadero Road in East Palo Alto, for the fifth time. He hits Return. The green-on-black console window instantly displays one line of text: “Sorry, one or more wrong passphrases. Can’t reconstruct the key. Goodbye.”

There is nerd pandemonium all around us. James, our recently promoted VP of Engineering, just climbed the desk at a nearby cubicle, screaming: “Guys, if we can’t get this key the right way, we gotta start brute-forcing it ASAP!” It’s gallows humor – he knows very well that brute-forcing such a key will take millions of years, and it’s already 6am on the East Coast – the first of many “Why is PayPal down today?” articles is undoubtedly going to hit CNET shortly. Our single-story cubicle-maze office is buzzing with nervous activity of PayPalians who know they can’t help but want to do something anyway. I poke my head up above the cubicle wall to catch a glimpse of someone trying to stay inside a giant otherwise empty recycling bin on wheels while a couple of Senior Software Engineers are attempting to accelerate the bin up to dangerous speeds in the front lobby. I lower my head and try to stay focused. “Let’s try it again, this time with three different people” is the best idea I can come up with, even though I am quite sure it will not work.

It doesn’t.

The key in question decrypts PayPal’s master payment credential table – also known as the giant store of credit card and bank account numbers. Without access to payment credentials, PayPal doesn’t really have a business per se, seeing how we are supposed to facilitate payments, and that’s really hard to do if we no longer have access to the 100+ million credit card numbers our users added over the last year of insane growth.

This is the story of a catastrophic software bug I briefly introduced into the PayPal codebase that almost cost us the company (or so it seemed, in the moment.) I’ve told this story a handful of times, always swearing the listeners to secrecy, and surprisingly it does not appear to have ever been written down before. 20+ years since the incident, it now appears instructive and a little funny, rather than merely extremely embarrassing.

Before we get back to that fateful night, we have to go back another decade. In the summer of 1991, my family and I moved to Chicago from Kyiv, Ukraine. While we had just a few hundred dollars between the five of us, we did have one secret advantage: science fiction fans.

My dad was a highly active member of Zoryaniy Shlyah – Kyiv’s possibly first (and possibly only, at the time) sci-fi fan club – the name means “Star Trek” in Ukrainian, unsurprisingly. He translated some Stanisław Lem (of Solaris and Futurological Congress fame) from Polish to Russian in the early 80s and was generally considered a coryphaeus at ZSh.

While the USSR was more or less informationally isolated behind the digital Iron Curtain until the late ‘80s, by 1990 or so, things like FidoNet wriggled their way into the Soviet computing world, and some members of ZSh were now exchanging electronic mail with sci-fi fans of the free world.

The vaguely exotic news of two Soviet refugee sci-fi fans arriving in Chicago was transmitted to the local fandom before we had even boarded the PanAm flight that took us across the Atlantic [1]. My dad (and I, by extension) was soon adopted by some kind Chicago science fiction geeks, a few of whom became close friends over the years, though that’s a story for another time.

A year or so after the move to Chicago, our new sci-fi friends invited my dad to a birthday party for a rising star of the local fandom, one Bruce Schneier. We certainly did not know Bruce or really anyone at the party, but it promised good food, friendly people, and probably filk. My role was to translate, as my dad spoke limited English at the time.

I had fallen desperately in love with secret codes and cryptography about a year before we left Ukraine. Walking into Bruce’s library during the house tour (this was a couple years before Applied Cryptography was published and he must have been deep in research) felt like walking into Narnia.

I promptly abandoned my dad to fend for himself as far as small talk and canapés were concerned, and proceeded to make a complete ass out of myself by brazenly asking the host for a few sheets of paper and a pencil. Having been obliged, I pulled a half dozen cryptography books from the shelves and went to work trying to copy down some answers to a few long-held questions on the library floor. After about two hours of scribbling alone like a man possessed, I ran out of paper and decided to temporarily rejoin the party.

On the living room table, Bruce had stacks of copies of his fanzine Ramblings. Thinking I could use the blank sides of the pages to take more notes, I grabbed a printout and was about to quietly return to copying the original S-box values for DES when my dad spotted me from across the room and demanded I help him socialize. The party wrapped soon, and our friends drove us home.

The printout I grabbed was not a Ramblings issue. It was a short essay by Bruce titled Sharing Secrets Among Friends, essentially a humorous explanation of Shamir Secret Sharing.

Say you want to make sure that something really really important and secret (a nuclear weapon launch code, a database encryption key, etc) cannot be known or used by a single (friendly) actor, but becomes available, if at least n people from a group of m choose to do it. Think two on-duty officers (from a cadre of say 5) turning keys together to get ready for a nuke launch.

The idea (proposed by Adi Shamir – the S of RSA! – in 1979) is as simple as it is beautiful.

Let’s call the secret we are trying to split among m people K.

First, create a totally random polynomial that looks like: y(x) = C0 * x^(n-1) + C1 * x^(n-2) + C2 * x^(n-3) + … + K. “Create” here just means generate random coefficients C. Now, for every person in your trusted group of m, evaluate the polynomial for some randomly chosen Xm (each one distinct and nonzero, since x=0 would reveal K directly) and hand them their corresponding (Xm, Ym) each.

If we have n of these points together, we can use the Lagrange interpolating polynomial to reconstruct the coefficients – and evaluate the original polynomial at x=0, which conveniently gives us y(0) = K, the secret. Beautiful. I still had the printout with me, years later, in Palo Alto.
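For readers who want to see the scheme run, here is a minimal Python sketch of the split and the Lagrange reconstruction. It is not the PayPal code (that was POSIX C and is not shown here). It makes a few assumptions of its own: arithmetic is done modulo a fixed prime (2**521 - 1), the x values are simply 1 through m rather than random, and the names `split_secret` and `reconstruct_secret` are made up for illustration.

```python
import secrets

# Assumption: all arithmetic is modulo this Mersenne prime, which is
# comfortably larger than any 128- or 256-bit key.
PRIME = 2**521 - 1


def split_secret(secret, n, m, prime=PRIME):
    """Split `secret` into m shares; any n of them can rebuild it."""
    if not 0 <= secret < prime:
        raise ValueError("secret must be in [0, prime)")
    if not 1 <= n <= m:
        raise ValueError("need 1 <= n <= m")
    # coeffs[0] is the secret K; the remaining n-1 coefficients are random.
    coeffs = [secret] + [secrets.randbelow(prime) for _ in range(n - 1)]
    shares = []
    for x in range(1, m + 1):  # distinct, nonzero x values
        y = 0
        for c in reversed(coeffs):  # Horner's rule, highest degree first
            y = (y * x + c) % prime
        shares.append((x, y))
    return shares


def reconstruct_secret(shares, prime=PRIME):
    """Lagrange-interpolate the polynomial at x = 0 to recover K."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % prime
                den = (den * (xi - xj)) % prime
        # pow(den, -1, prime) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, prime)) % prime
    return secret


if __name__ == "__main__":
    key = secrets.randbits(128)
    shares = split_secret(key, n=3, m=8)
    print(reconstruct_secret(shares[:3]) == key)
    print(reconstruct_secret([shares[7], shares[2], shares[5]]) == key)
```

Working in a finite field rather than with ordinary integers or floats is what gives the scheme its secrecy property: with fewer than n shares, every possible value of K remains equally consistent with what you hold.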

It should come as no surprise that during my time as CTO, PayPal engineering had an absolute obsession with security. No firewall was one too many, no multi-factor authentication scheme too onerous, etc. Anything that was worth anything at all was encrypted at rest.

To decrypt, a service would get the needed data from its database table, transmit it to a special service named cryptoserv (an original SUN hardware running Solaris sitting on its own, especially tightly locked-down network) and a special service running only there would perform the decryption and send back the result.

Decryption request rate was monitored externally and on cryptoserv, and if there were too many requests, the whole thing was to shut down and purge any sensitive data and keys from its memory until manually restarted.
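A tripwire like that can be sketched in a few lines of Python. This is only an illustration of the idea, not how cryptoserv worked internally: the class name `DecryptionGuard`, the sliding-window counting, and the thresholds are all assumptions.

```python
import time
from collections import deque
from typing import Optional


class DecryptionGuard:
    """Hypothetical tripwire: purge keys when requests come in too fast."""

    def __init__(self, keys: dict, max_requests: int, window_seconds: float):
        self._keys = keys
        self._max = max_requests
        self._window = window_seconds
        self._stamps = deque()
        self.locked = False

    def allow(self, now: Optional[float] = None) -> bool:
        """Record one request; return False once the guard has tripped."""
        if self.locked:
            return False
        now = time.monotonic() if now is None else now
        self._stamps.append(now)
        # Drop timestamps that have fallen out of the sliding window.
        while self._stamps and now - self._stamps[0] > self._window:
            self._stamps.popleft()
        if len(self._stamps) > self._max:
            # Best-effort purge: Python cannot guarantee the key bytes
            # are actually zeroed in memory.
            self._keys.clear()
            # Stays locked until an operator restarts the service.
            self.locked = True
            return False
        return True
```

The important design property is that the guard fails closed: once tripped, nothing short of a manual restart (and re-entering the passphrase) brings the keys back.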

It was this manual restart that gnawed at me. At launch, a bunch of configuration files containing various critical decryption keys were read (decrypted by another key derived from one manually-entered passphrase) and loaded into the memory to perform future cryptographic services.

Four or five of us on the engineering team knew the passphrase and could restart cryptoserv if it crashed or simply had to have an upgrade. What if someone performed a little old-fashioned rubber-hose cryptanalysis and literally beat the passphrase out of one of us? The attacker could theoretically get access to these all-important master keys. Then stealing the encrypted-at-rest database of all our users’ secrets could prove useful – they could decrypt them in the comfort of their underground supervillain lair.

I needed to eliminate this threat.

Shamir Secret Sharing was the obvious choice – beautiful, simple, perfect (you can in fact prove that if done right, it offers perfect secrecy.) I decided on a 3-of-8 scheme and implemented it in pure POSIX C for portability over a few days, and tested it for several weeks on my Linux desktop with other engineers.

Step 1: generate the polynomial coefficients for 8 shard-holders.

Step 2: compute the key shards (x0, y0) through (x7, y7)

Step 3: get each shard-holder to enter a long, secure passphrase to encrypt the shard

Step 4: write out the 8 shard files, encrypted with their respective passphrases.

And to reconstruct:

Step 1: pick any 3 shard files.

Step 2: ask each of the respective owners to enter their passphrases.

Step 3: decrypt the shard files.

Step 4: reconstruct the polynomial, evaluate it for x=0 to get the key.

Step 5: launch cryptoserv with the key.

One design detail here is that each shard file also stored a message authentication code (a keyed hash) of its passphrase to make sure we could identify when someone mistyped their passphrase. These tests ran hundreds and hundreds of times, on both Linux and Solaris, to make sure I did not screw up some big/little-endianness issue, etc. It all worked perfectly.
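The passphrase check can be sketched roughly as follows. The post does not say which keyed hash was used, so PBKDF2-HMAC-SHA256 with a random salt is an assumption here, as are the function names and the iteration count.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # assumed work factor, not from the original system


def make_verifier(passphrase: str):
    """Return (salt, tag) to store alongside an encrypted shard."""
    salt = os.urandom(16)
    tag = hashlib.pbkdf2_hmac(
        "sha256", passphrase.encode("utf-8"), salt, ITERATIONS
    )
    return salt, tag


def check_passphrase(passphrase: str, salt: bytes, tag: bytes) -> bool:
    """True if the typed passphrase matches the stored tag."""
    candidate = hashlib.pbkdf2_hmac(
        "sha256", passphrase.encode("utf-8"), salt, ITERATIONS
    )
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(candidate, tag)


def report(entries: dict, stored: dict) -> dict:
    """Per-holder status, rather than a single summary error."""
    return {
        name: check_passphrase(entries[name], *stored[name])
        for name in entries
    }
```

Reporting per holder, rather than one "one or more wrong passphrases" line, is what makes a mistyped entry distinguishable from a deeper failure.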

A month or so later, the night of the key splitting party was upon us. We were finally going to close out the last vulnerability and be secure. Feeling as if I was about to turn my fellow shard-holders into cymeks, I gathered them around my desktop as PayPal’s front page began sporting the “We are down for maintenance and will be back soon” message around midnight.

The night before, I solemnly generated the new master key and securely copied it to cryptoserv. Now, while “Push It” by Salt-n-Pepa blared from someone’s desktop speakers, the automated deployment script copied shard files to their destination.

While each of us took turns carefully entering our elaborate passphrases at a specially selected keyboard, Paul shut down the main database and decrypted the payment credentials table, then ran the script to re-encrypt with the new key. Some minutes later, the database was running smoothly again, with the newly encrypted table, without incident.

All that was left was to restore the master key from its shards and launch the new, even more secure cryptographic service.

The three of us entered our passphrases… to be met with the error message I hadn’t seen in weeks: “Sorry, one or more wrong passphrases. Can’t reconstruct the key. Goodbye.” Surely one of us screwed up typing, no big deal, we’ll do it again. No dice. No dice – again and again, even after we tried numerous combinations of the three people necessary to decrypt.

Minutes passed, confusion grew, tension rose rapidly.

There was nothing to do, except to hit rewind – to grab the master key from the file still sitting on cryptoserv, split it again, generate new shards, choose passphrases, and get it done. Not a great feeling to have your first launch go wrong, but not a huge deal either. It will all be OK in a minute or two.

A cursory look at the master key file date told me that no, it wouldn’t be OK at all. The file sitting on cryptoserv wasn’t from last night, it was created just a few minutes ago. During the Salt-n-Pepa-themed push from stage, we overwrote the master key file with the stage version. Whatever key that was, it wasn’t the one I generated the day before: only one copy existed, the one I copied to cryptoserv from my computer the night before. Zero copies existed now. Not only that, the push script appears to have also wiped out the backup of the old key, so the database backups we have encrypted with the old key are likely useless.

Sitrep: we have 8 shard files that we apparently cannot use to restore the master key and zero master key backups. The database is running but its secret data cannot be accessed.

I will leave it to your imagination to conjure up what was going through my head that night as I stared into the black screen willing the shards to work. After half a decade of trying to make something of myself (instead of just going to work for Microsoft or IBM after graduation) I had just destroyed my first successful startup in the most spectacular fashion.

Still, the idea of “what if we all just continuously screwed up our passphrases” swirled around my brain. It was an easy check to perform, thanks to the included MACs. I added a single printf() debug statement into the shard reconstruction code and instead of printing out a summary error of “one or more…” the code now showed if the passphrase entered matched the authentication code stored in the shard file.

I compiled the new code directly on cryptoserv in direct contravention of all reasonable security practices – what did I have to lose? Entering my own passphrase, I promptly got the “bad passphrase” error I had just added to the code. Well, that’s just great – I knew my passphrase was correct, I had it written down on a post-it note I had planned to rip up hours ago.

Another person, same error. Finally, the last person, JK, entered his passphrase. No error. The key still did not reconstruct correctly, I got the “Goodbye”, but something worked. I turned to the engineer and said, “what did you just type in that worked?”

After a second of embarrassed mumbling, he admitted to choosing “a$$word” as his passphrase. The gall! I asked everyone entrusted with the grave task of relaunching cryptoserv to pick really hard to guess passphrases, and this guy…?! Still, this was something – it worked. But why?!

I sprinted around the half-lit office grabbing the rest of the shard-holders demanding they tell me their passphrases. Everyone else had picked much lengthier passages of text and numbers. I manually tested each and none decrypted correctly. Except for the a$$word. What was it…

A lightning bolt hit me and I sprinted back to my own cubicle in the far corner, unlocked the screen and typed in “man getpass” on the command line, while logging into cryptoserv in another window and doing exactly the same thing there. I saw exactly what I needed to see.

Today, should you try to read up the programmer’s manual (AKA the man page) on getpass, you will find it has been long declared obsolete and replaced with a more intelligent alternative in nearly all flavors of modern Unix.

But back then, if you wanted to collect some information from the keyboard without printing what is being typed in onto the screen and remain POSIX-compliant, getpass did the trick. Other than a few standard file manipulation system calls, getpass was the only operating system service call I used, to ensure clean portability between Linux and Solaris.

Except it wasn’t completely clean.

Plain as day, there it was: the manual pages were identical, except Solaris had a “special feature”: any passphrase entered that was longer than 8 characters was automatically reduced to that length anyway. (Who needs long passwords, amiright?!)

I screamed like a wounded animal. We generated the key on my Linux desktop and entered our novel-length passphrases right here. Attempting to restore them on a Solaris machine where they were being clipped down to 8 characters long would never work. Except, of course, for a$$word. That one was fine.
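The failure mode is easy to reproduce in miniature. The Python sketch below does not call the real getpass from either system; it simulates the two behaviours with hypothetical helper functions and uses a stand-in MAC with a fixed demo key, purely to show why only a short passphrase survived the move from Linux to Solaris.

```python
import hashlib
import hmac

SOLARIS_LIMIT = 8  # the truncation length described in the man page above


def read_passphrase_linux(typed: str) -> str:
    """Simulated Linux behaviour: the full passphrase comes back."""
    return typed


def read_passphrase_solaris(typed: str) -> str:
    """Simulated Solaris behaviour: silently clipped to 8 characters."""
    return typed[:SOLARIS_LIMIT]


def tag(passphrase: str) -> bytes:
    """Stand-in MAC; the fixed key is for demonstration only."""
    return hmac.new(b"demo-key", passphrase.encode("utf-8"), hashlib.sha256).digest()


def survives_port(typed: str) -> bool:
    """Was a passphrase enrolled on Linux still accepted on Solaris?"""
    stored = tag(read_passphrase_linux(typed))     # enrolled on Linux
    entered = tag(read_passphrase_solaris(typed))  # re-entered on Solaris
    return hmac.compare_digest(stored, entered)


if __name__ == "__main__":
    for phrase in ["a$$word", "a much longer passphrase, with numbers 12345"]:
        print(repr(phrase), survives_port(phrase))
```

Anything of 8 characters or fewer passes through both paths unchanged, which is why the weakest passphrase in the room was the only one that worked.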

The rest was an exercise in high-speed coding and some entirely off-protocol file moving. We reconstructed the master key on my machine (all of our passphrases worked fine), copied the file to the Solaris-running cryptoserv, re-split it there (with very short passphrases), reconstructed it successfully, and PayPal was up and running again like nothing ever happened.

By the time our unsuspecting colleagues rolled back into the office I was starting to doze on the floor of my cubicle and that was that. When someone asked me later that day why we took so long to bring the site back up, I’d simply respond with “eh, shoulda RTFM.”

RTFM indeed.

P.S. A few hours later, John, our General Counsel, stopped by my cubicle to ask me something. The day before, I had apparently given him a sealed envelope and asked him to store it in his safe for 24 hours without explaining myself. He wanted to know what to do with it now that 24 hours had passed.

Ha. I forgot all about it, but in a bout of “what if it doesn’t work” paranoia, I printed out the base64-encoded master key when we had generated it the night before, stuffed it into an envelope, and gave it to John for safekeeping. We shredded it together without opening and laughed about what would have never actually been a company-ending event.

P.P.S. If you are thinking of all the ways this whole SSS design is horribly insecure (it had some real flaws for sure) and plan to poke around PayPal to see if it might still be there, don’t. While it served us well for a few years, this was the very first thing eBay required us to turn off after the acquisition. Pretty sure it’s back to a single passphrase now.

Notes:

1: A member of the Chicagoland sci-fi fan community let me know that the original news of our move to the US was delivered to them via a posted letter, snail mail, not FidoNet email!


Text

Bruce Schneier's "A Hacker's Mind"

A Hacker’s Mind is security expert Bruce Schneier’s latest book, released today. For long-time readers of Schneier, the subject matter will be familiar, but this iteration of Schneier’s core security literacy curriculum has an important new gloss: power.

https://wwnorton.com/books/9780393866667

If you’d like an essay-formatted version of this post to read or share, here’s a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/02/07/trickster-makes-the-world/#power-play

Schneier started out as a cryptographer, author of 1994’s Applied Cryptography, one of the standard texts on the subject. He created and co-created several important ciphers, and started two successful security startups that were sold on to larger firms. Many readers outside of cryptography circles became familiar with Schneier through his contribution to Neal Stephenson’s Cryptonomicon, and he is well-known in science fiction circles (he even received a Hugo nomination for editing the restaurant guide for MiniCon 34 in 1999).

https://www.schneier.com/wp-content/uploads/2016/02/restaurants-san-jose.pdf

But Schneier’s biggest claim to fame is as a science communicator, specifically in the domain of security. In the wake of the 9/11 attacks and the creation of a suite of hasty, ill-considered “security” measures, Schneier coined the term “security theater” to describe a certain kind of wasteful, harmful, pointless exercise, like forcing travelers to take off their shoes to board an airplane.

Schneier led the charge for a kind of sensible, reasonable thinking about security, using a mix of tactics to shift the discourse on the subject: debating TSA boss Kip Hawley, traveling with reporters through airport checkpoints while narrating countermeasures to defeat every single post-9/11 measure, and holding annual “movie-plot threat” competitions:

https://www.schneier.com/tag/movie-plot-threat-contests/

Most importantly, though, Schneier wrote long-form books that set out the case for sound security reasoning, railing against security theater and calling for policies that would actually make our physical and digital world more secure — abolishing DRM, clearing legal barriers to vulnerability research and disclosure, and debunking security snake-oil, from “unbreakable proprietary ciphers” to “behavioral detection training” for TSA officers.

Schneier inspired much of my own interest in cryptography, and he went on to design my wedding rings, which are cipher wheels:

https://www.schneier.com/blog/archives/2008/09/contest_cory_do.html

And then he judged a public cipher-design contest, which Chris Smith won with “The Fidget Protocol”:

http://craphound.com/FidgetProtocol.zip

Schneier’s books, starting with 2000’s Secrets and Lies, follow a familiar, winning formula. Each one advances a long-form argument for better security reasoning, leavened with a series of utterly delightful examples of successful hacks and counterhacks, in which clever people engage in duels of wits over the best way to protect some precious resource, or bypass that protection. There is an endless supply of these, and they are addictive, impossible to read without laughing and sharing them on. There’s something innately satisfying about reading about hacks and counterhacks, as authors have understood since Poe wrote “The Purloined Letter” in 1844.

A Hacker’s Mind picks up on this familiar formula, with a fresh set of winning security anecdotes, both new and historical, and restates Schneier’s hypothesis about how we should think about security. But, as noted, Hacker’s Mind brings a new twist to the subject: power.

In this book, Schneier broadens his frame to consider all of society’s rules — its norms, laws and regulations — as a security system, and then considers all the efforts to change those rules through a security lens, framing everything from street protests to tax-cheating as “hacks.”

This is a great analytical tool, one that evolved out of Schneier’s work on security policy at the Harvard Kennedy School. By thinking of (say) tax law as a security system, we can analyze its vulnerabilities just as we would analyze the risks to, say, your Gmail account. The tax system can be hacked by lobbying for tax-code loopholes, or by discovering and exploiting accidental loopholes. It can be hacked by suborning IRS inspectors, or by suborning Congress to cut the budget for IRS inspectors. It can be hacked by winning court cases defending exotic interpretations of the tax code, or by lobbying Congress to retroactively legalize those interpretations before a judge can toss them out.

This analysis has a problem, though: the hacker in popular imagination is a trickster figure, an analog for Coyote or Anansi, outsmarting the powerful with wits and stealth and bravado. The delight we take in these stories comes from the way that hacking can upend power differentials, hoisting elites on their own petard. An Anansi story in which a billionaire hires a trickster god to evade consequences for maiming workers in his factory is a hell of a lot less satisfying than the traditional canon.

Schneier resolves this conundrum by parsing hacking through another dimension: power. A hack by the powerful against society — tax evasion, regulatory arbitrage, fraud, political corruption — is a hack, sure, but it’s a different kind of hack from the hacks we’ve delighted in since “The Purloined Letter.”

This leaves us with two categories: hacks by the powerful to increase their power; and hacks by everyone else to take power away from the powerful. These two categories have become modern motifs in other domains — think of comedians’ talk of “punching up vs punching down” or the critique of the idea of “anti-white racism.”

But while this tool is familiar, it takes on a new utility when used to understand the security dimensions of policy, law and norms. Schneier uses it to offer several concrete proposals for making our policy “more secure”: that is, less vulnerable to corruption that further entrenches the powerful.

That said, the book does more to explain the source of problems than to lay out a program for addressing them — a common problem with analytical books. That’s okay, of course — we can’t begin to improve our society until we agree on what’s wrong with it — but there is definitely more work to be done in converting these systemic analyses into systemic policies.

Next week (Feb 8-17), I'll be in Australia, touring my book Chokepoint Capitalism with my co-author, Rebecca Giblin. We'll be in Brisbane on Feb 8, and then we're doing a remote event for NZ on Feb 9. Next are Melbourne, Sydney and Canberra. I hope to see you!

https://chokepointcapitalism.com/

[Image ID: The WW Norton cover for Bruce Schneier's 'A Hacker's Mind.']

#pluralistic#schneier#bruce schneier#books#reviews#hacking#power#punching up#punching down#gift guide#tax evasion#regulatory capture


Text

Why Not Write Cryptography

I learned Python in high school in 2003. This was unusual at the time. We were part of a pilot project, testing new teaching materials. The official syllabus still expected us to use PASCAL. In order to satisfy the requirements, we had to learn PASCAL too, after Python. I don't know if PASCAL is still standard.

Some of the early Python programming lessons focused on cryptography. We didn't really learn anything about cryptography itself then, it was all just toy problems to demonstrate basic programming concepts like loops and recursion. Beginners can easily implement some old, outdated ciphers like Caesar, Vigenère, arbitrary 26-letter substitutions, transpositions, and so on.

The Vigenère cipher will be important. It goes like this: First, in order to work with letters, we assign numbers from 0 to 25 to the 26 letters of the alphabet, so A is 0, B is 1, C is 2 and so on. In the programs we wrote, we had to strip out all punctuation and spaces, write everything in uppercase and use the standard transliteration rules for Ä, Ö, Ü, and ß. That's just the encoding part. Now comes the encryption part. For every letter in the plain text, we add the next letter from the key, modulo 26, round robin style. The key is repeated after we get to the end. Encrypting "HELLOWORLD" with the key "ABC" yields ["H"+"A", "E"+"B", "L"+"C", "L"+"A", "O"+"B", "W"+"C", "O"+"A", "R"+"B", "L"+"C", "D"+"A"], or "HFNLPYOSND". If this short example didn't click for you, you can look it up on Wikipedia and blame me for explaining it badly.
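For readers who prefer code, here is a minimal Python sketch of the scheme as described above. The names `normalize` and `vigenere` are illustrative, not taken from the original class materials, and the AE/OE/UE/SS transliteration is assumed to be the "standard" rule meant here.

```python
import string

ALPHABET = string.ascii_uppercase  # A is 0, B is 1, ..., Z is 25


def normalize(text: str) -> str:
    """Uppercase, transliterate German letters, and drop everything that is not A-Z."""
    upper = text.upper()  # str.upper() already turns ß into SS
    for umlaut, replacement in (("Ä", "AE"), ("Ö", "OE"), ("Ü", "UE")):
        upper = upper.replace(umlaut, replacement)
    return "".join(ch for ch in upper if ch in ALPHABET)


def vigenere(text: str, key: str, decrypt: bool = False) -> str:
    """Add (or, when decrypting, subtract) the repeating key letter by letter, modulo 26."""
    sign = -1 if decrypt else 1
    key_nums = [ALPHABET.index(k) for k in normalize(key)]
    out = []
    for i, ch in enumerate(normalize(text)):
        shift = sign * key_nums[i % len(key_nums)]  # round robin over the key
        out.append(ALPHABET[(ALPHABET.index(ch) + shift) % 26])
    return "".join(out)


# The example from the text: message HELLOWORLD, key ABC.
ciphertext = vigenere("Hello, World!", "ABC")
print(ciphertext)
print(vigenere(ciphertext, "ABC", decrypt=True))
```

Decryption is the same loop with the key subtracted instead of added, which is why a single function with a `decrypt` flag is enough for a toy like this.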

Then our teacher left in the middle of the school year, and a different one took over. He was unfamiliar with encryption algorithms. He took us through some of the exercises about breaking the Caesar cipher with statistics. Then he proclaimed, based on some back-of-the-envelope calculations, that a Vigenère cipher with a long enough key, with the length unknown to the attacker, is "basically uncrackable". You can't brute-force a 20-letter key, and there are no significant statistical patterns.

I told him this wasn't true. If you re-use a Vigenère key, it's like re-using a one-time pad key. At the time I had just read the first chapters of Bruce Schneier's "Applied Cryptography", and some pop history books about cold war spy stuff. I knew about the problem with re-using a one-time pad. A one-time pad is the same as if your Vigenère key is as long as the message, so there is no way to make any inferences from one letter of the encrypted message to another letter of the plain text. This is mathematically proven to be completely uncrackable, as long as you use the key only one time, hence the name. Re-use of one-time pads actually happened during the cold war. Spy agencies communicated through numbers stations and one-time pads, but at some point, the Soviets either killed some of their cryptographers in a purge, or they messed up their book-keeping, and they re-used some of their keys. The Americans could decrypt the messages.

Here is how: If you have message $A$ and message $B$, and you re-use the key $K$, then an attacker can take the encrypted messages $A+K$ and $B+K$, and subtract them. That creates $(A+K) - (B+K) = A - B + K - K = A - B$. If you re-use a one-time pad, the attacker can just filter the key out and calculate the difference between two plaintexts.
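The cancellation is easy to check in a few lines of Python. This is only a sketch: the messages and keys are made-up examples, and `add` and `sub` are hypothetical helpers doing letterwise arithmetic modulo 26 on equal-length uppercase strings.

```python
A_ORD = ord("A")


def add(x: str, y: str) -> str:
    """Letterwise x + y modulo 26."""
    return "".join(chr((ord(a) + ord(b) - 2 * A_ORD) % 26 + A_ORD) for a, b in zip(x, y))


def sub(x: str, y: str) -> str:
    """Letterwise x - y modulo 26."""
    return "".join(chr((ord(a) - ord(b)) % 26 + A_ORD) for a, b in zip(x, y))


plain_a = "ATTACKATDAWN"
plain_b = "RETREATATTEN"
key_1 = "XMCKLQWERTYU"  # one key, used for both messages
key_2 = "ZZTOPABCDEFG"  # a completely different key, also used twice

# (A+K) - (B+K) for each of the two keys
diff_1 = sub(add(plain_a, key_1), add(plain_b, key_1))
diff_2 = sub(add(plain_a, key_2), add(plain_b, key_2))

# Both differences equal A - B: the key has dropped out entirely.
print(diff_1 == diff_2 == sub(plain_a, plain_b))
```

Whatever key is used, the attacker ends up with the same string $A - B$, so the strength of the key no longer matters once it has been used twice.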

My teacher didn't know that. He had done a quick back-of-the-envelope calculation about the time it would take to brute-force a 20-letter key, and the likelihood of accidentally arriving at something that would resemble the distribution of letters in the German language. In his mind, a key of 20 letters or more was impossible to crack. At the time, I wouldn't have known how to calculate that probability.

When I challenged his assertion that it would be "uncrackable", he created two messages that were written in German, and pasted them into the program we had been using in class, with a randomly generated key of undisclosed length. He gave me the encrypted output.

Instead of brute-forcing keys, I decided to apply what I knew about re-using one-time pads. I wrote a program that took some of the most common German words and added them to sections of $(A-B)$. If a word was equal to a section of $B$, then this would generate a section of $A$. Then I used a large spellchecking dictionary to see if the section of $A$ generated by guessing a section of $B$ contained any valid German words. If yes, it would print the guessed word in $B$, the section of $A$, and the corresponding section of the key. There was only a little bit of key material that was common to multiple results, but that was enough to establish how long the key was. From there, I modified my program so that I could interactively try to guess words and it would decrypt the rest of the text based on my guess. The messages were two articles from the local newspaper.
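The guessing step can be sketched roughly like this in Python. It is a reconstruction of the idea, not the original program: the plaintexts, the key "GEHEIM", the crib list and the tiny dictionary are all made up for the demonstration, and `combine` and `crib_drag` are hypothetical names.

```python
A_ORD = ord("A")


def combine(text: str, other: str, sign: int) -> str:
    """Letterwise text + sign * other, modulo 26; `other` repeats if it is shorter."""
    return "".join(
        chr((ord(t) - A_ORD + sign * (ord(other[i % len(other)]) - A_ORD)) % 26 + A_ORD)
        for i, t in enumerate(text)
    )


def crib_drag(diff, cipher_b, cribs, dictionary):
    """Try every guessed word at every offset of B; keep guesses that make A look like German."""
    hits = []
    for crib in cribs:
        for pos in range(len(diff) - len(crib) + 1):
            # (A - B) + guessed B section = candidate A section
            section_a = combine(diff[pos:pos + len(crib)], crib, +1)
            if any(word in section_a for word in dictionary):
                # (B + K) - guessed B section = candidate key section
                key_part = combine(cipher_b[pos:pos + len(crib)], crib, -1)
                hits.append((pos, crib, section_a, key_part))
    return hits


# Made-up demo data: two messages encrypted with the same repeating key.
plain_a = "DASWETTERISTHEUTESCHOEN"
plain_b = "WIRFAHRENMORGENNACHBONN"
key = "GEHEIM"
cipher_a = combine(plain_a, key, +1)
cipher_b = combine(plain_b, key, +1)

diff = combine(cipher_a, cipher_b, -1)  # equals A - B, the key cancels
cribs = ["MORGEN", "NACH", "WIR"]  # common words guessed for B
dictionary = {"DAS", "IST", "WETTER", "HEUTE", "SCHOEN"}  # stand-in for a spellchecker list

hits = crib_drag(diff, cipher_b, cribs, dictionary)
for hit in hits:
    print(hit)
```

In this toy run, guessing "MORGEN" at offset 9 of $B$ recovers "ISTHEU" in $A$ and the key section "EIMGEH". A real word list would also produce false positives, which is why overlapping key material across several hits matters.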

When I showed the decrypted messages to my teacher the next week, he got annoyed and accused me of cheating. Had I installed a keylogger on his machine? Had I rigged his encryption program to leak key material? Had I exploited the old Python random number generator that isn't really random enough for cryptography (but good enough for games and simulations)?

Then I explained my approach. My teacher insisted that this solution didn't count, because it relied on guessing words. It would never have worked on random numeric data. I was just lucky that the messages were written in a language I speak. I could have cheated by using a search engine to find the newspaper articles on the web.

Now the lesson you should take away from this is not that I am smart and teachers are sore losers.

Lesson one: Everybody can build an encryption scheme or security system that he himself can't defeat. That doesn't mean others can't defeat it. You can also create a secret alphabet to protect your teenage diary from your kid sister. Something that works for your diary will in all likelihood be inappropriate for online banking, never mind state secrets. And you never know if a teenage diary won't be stolen by a determined thief who thinks it holds the secret to a Bitcoin wallet passphrase, or if someone is re-using his banking password in your online game.

Lesson two: When you build a security system, you often accidentally design around an "intended attack". If you build a lock to be especially pick-proof, a burglar can still kick in the door, or break a window. Or maybe a new variation of the old "slide a piece of paper under the door and push the key through" trick works. Non-security experts are especially susceptible to this. Experts in one domain are often blind to attacks/exploits that make use of a different domain. It's like the physicist who saw a magic show and thought it must be powerful magnets at work, when it was actually invisible ropes.

Lesson three: Sometimes a real world problem is a great toy problem, but the easy and didactic toy solution is a really bad real world solution. Encryption was a fun way to teach programming, not a good way to teach encryption. There are many problems like that, like 3D rendering, Chess AI, and neural networks, where the real-world solution is not just more sophisticated than the toy solution, but a completely different architecture with completely different data structures. My own interactive codebreaking program did not work like modern approaches work either.

Lesson four: Don't roll your own cryptography. Don't even implement a known encryption algorithm. Use a cryptography library. Chances are you are not Bruce Schneier or Daniel J. Bernstein. It's harder than you thought. Unless you are doing a toy programming project to teach programming, it's not a good idea. If you don't take this advice to heart, a teenager with something to prove, somebody much less knowledgeable but with more time on his hands, might cause you trouble.


Note

hey random ask, but I was wondering if there are any tech information sites/blogs you would recommend?

How-To Geek, The Register, and BoingBoing are all good for general tech news (as are Ars Technica and TechCrunch, I just find myself at the other sites more often). How-To Geek is really great for tutorials and how-tos (I link them a lot in a lot of my how to computer posts). Cory Doctorow is a fantastic person to follow if you're interested in the more philosophical side of technology; you can find him at Pluralistic.net or right here on tumblr as @mostlysignssomeportents.

Schneier on Security is a *wonderful* blog run by Bruce Schneier, who is a security expert with a lot of focus on the human side of security and who has opinions on and approaches to security, privacy, and internet freedoms that align very closely with mine.

Privacyguides.org is also a very, very, very good resource for the security conscious folks out there, and EFF.Org is the place to go if you want to know about what's going on in tech politically. ItsFOSS.com is a good resource for Linux news and education. IFIXIT.com is where I point people for very specific repair tutorials, and for overall "how to computers go together" stuff I recommend Paul's Hardware. You can keep up with Right to Repair stuff by following Louis Rossmann (who is correct about right to repair and incorrect about many things so heads up).


Text

Bruce Springsteen, Clarence Clemons, Ernest "Boom" Carter, Garry Tallent, Danny Federici, and David Sancious photographed by Barry Schneier, 1974.


Text

The End of Trust

New Post has been published on https://www.aneddoticamagazine.com/the-end-of-trust/

EFF and McSweeney’s have teamed up to bring you The End of Trust (McSweeney’s 54). The first all-nonfiction McSweeney’s issue is a collection of essays and interviews focusing on issues related to technology, privacy, and surveillance.

The collection features writing by EFF’s team, including Executive Director Cindy Cohn, Education and Design Lead Soraya Okuda, Senior Investigative Researcher Dave Maass, Special Advisor Cory Doctorow, and board member Bruce Schneier.

Anthropologist Gabriella Coleman contemplates anonymity; Edward Snowden explains blockchain; journalist Julia Angwin and Pioneer Award-winning artist Trevor Paglen discuss the intersections of their work; Pioneer Award winner Malkia Cyril discusses the historical surveillance of black bodies; and Ken Montenegro and Hamid Khan of Stop LAPD Spying debate author and intelligence contractor Myke Cole on the question of whether there’s a way law enforcement can use surveillance responsibly.

The End of Trust is available to download and read right now under a Creative Commons BY-NC-ND license.

#bruce schneier#Cindy Cohn#collection of essays and interviews#Cory Doctorow#Dave Maass#Edward Snowden#EFF#Gabriella Coleman#privacy#Soraya Okuda#surveillance#technology#The End of Trust
