#ai is bullshit

Text

Absolutely fascinated by all the people who claim that AI and fanfiction are legally and conceptually indistinguishable, and that advocating against one means advocating against the other. Can't tell if they actually think human work is inseparable from work created by machines, or if they want us to believe they think that so they have an excuse to keep using AI to steal from living, breathing writers (including fanfic writers) and spew their endless garbage. I never want to find out.

#fuck ai bros#fuck ai#fuck ai writing#fanfiction is writing#ai is not#fuck ai everything#fuck ai art#ai is theft#ai is bullshit#ai is not art#ai is stupid#ai is a plague#ai is for assholes#fuck ai music#screw ai#ai is scary#ai#anti chatgpt#fanfiction#assholery#fuck ai all my homies hate ai#anti ai#anti ai writing#anti ai art#anti ai music#anti chatpgt#fuck chatgpt#honestly portraying yourself as saving us from the copyright overlords is such absolute shit

46 notes

Text

there is so much vapid, meaningless AI generated bullshit content coming across my dash now and I fucking hate it.

4 notes

Text

Oh damn the Catholics have joined in on the war against AI "art".

#anti ai#anti ai art#ex catholic#catholic#game over guys the catholic church is onto your bullshit lol

76K notes

Text

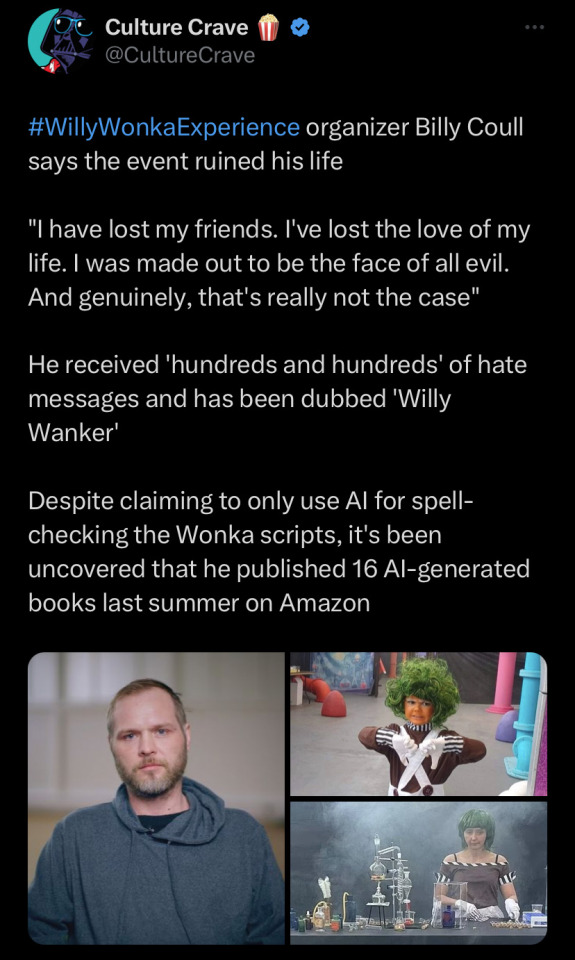

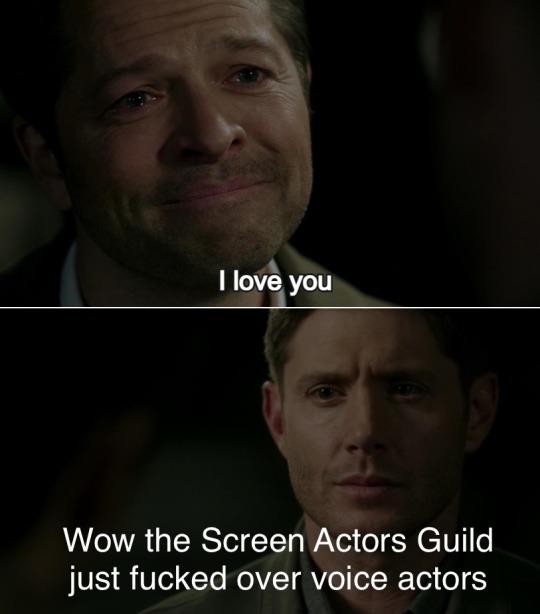

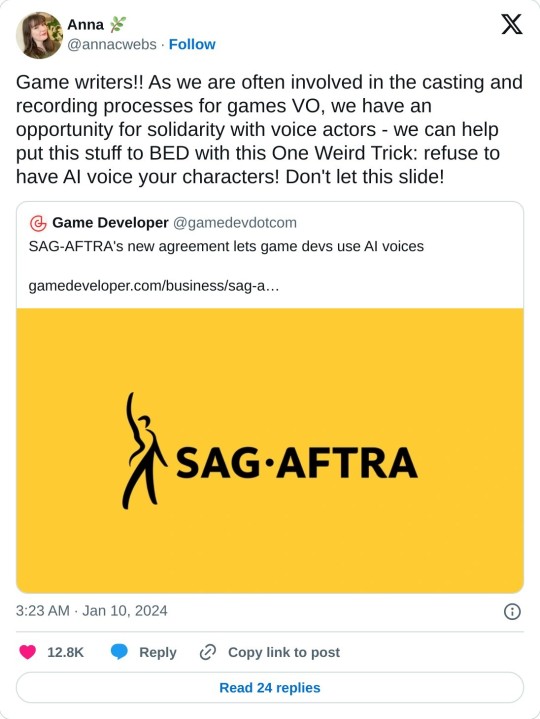

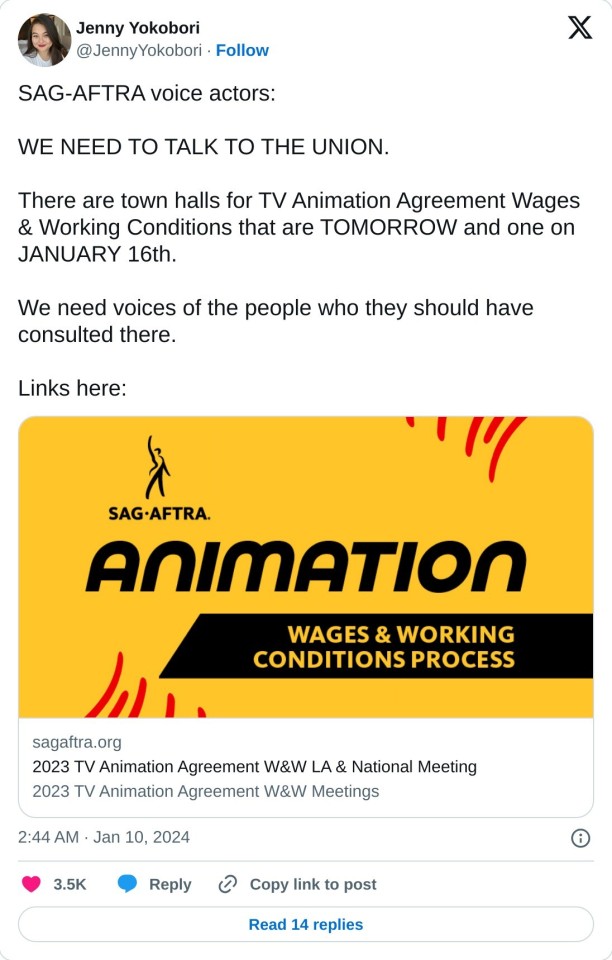

WE LIVE IN A HELL WORLD

Snippets from the article by Karissa Bell:

SAG-AFTRA, the union representing thousands of performers, has struck a deal with an AI voice acting platform aimed at making it easier for actors to license their voice for use in video games. ...

the agreements cover the creation of so-called “digital voice replicas” and how they can be used by game studios and other companies. The deal has provisions for minimum rates, safe storage and transparency requirements, as well as “limitations on the amount of time that a performance replica can be employed without further payment and consent.”

Notably, the agreement does not cover whether actors’ replicas can be used to train large language models (LLMs), though Replica Studios CEO Shreyas Nivas said the company was interested in pursuing such an arrangement. “We have been talking to so many of the large AAA studios about this use case,” Nivas said. He added that LLMs are “out-of-scope of this agreement” but “they will hopefully [be] things that we will continue to work on and partner on.”

...Even so, some well-known voice actors were immediately skeptical of the news, as the BBC reports. In a press release, SAG-AFTRA said the agreement had been approved by "affected members of the union’s voiceover performer community." But on X, voice actors said they had not been given advance notice. "How has this agreement passed without notice or vote," wrote Veronica Taylor, who voiced Ash in Pokémon. "Encouraging/allowing AI replacement is a slippery slope downward." Roger Clark, who voiced Arthur Morgan in Red Dead Redemption 2, also suggested he was not notified about the deal. "If I can pay for permission to have an AI rendering of an ‘A-list’ voice actor’s performance for a fraction of their rate I have next to no incentive to employ 90% of the lesser known ‘working’ actors that make up the majority of the industry," Clark wrote.

SAG-AFTRA’s deal with Replica only covers a sliver of the game industry. Separately, the union is also negotiating with several of the major game studios after authorizing a strike last fall. “I certainly hope that the video game companies will take this as an inspiration to help us move forward in that negotiation,” Crabtree said.

And here are some of the reactions I've found about what people in or adjacent to the industry can do:

And in OTHER AI games news, Valve is updating its TOS to allow AI-generated content on Steam so long as devs promise they have the rights to use it. You can read more about it on Aftermath in this article by Luke Plunkett.

#video games#voice acting#voice actors#sag aftra#ai#ai news#ai voice acting#video game news#Destiel meme#industry bullshit

25K notes

Text

alright guys. you know what this means. everyone make a sideblog and post even more incomprehensible shit than usual to fuck with the ai they're training

1 note

Text

I feel like I sometimes need a reminder that I can sometimes fucking draw.

But as my most recent post before this proves, it takes INCESSANT PRACTICE. (Which I did not indulge in before my Alastor bullshit)

Here, have Bowie, two self-portraits, and a still-memory BS interpretive drawing with my best friend that had been a speed trace.

I also have sketches I've done in glitter pen that are better than anything I've done in pencil with an eraser.

Draw your own shit.

#Ai is bullshit#from a bad artist to the masses#draw your own shit or pay a real artist never use Ai

0 notes

Text

I said this elsewhere but

not to be That Guy but I don't really see the point of moving platforms anymore.

There is nowhere we can hide on the internet from the Silicon Valley bros. There just isn't. Patreon is VC-funded and could announce tomorrow that oh, of course, they've been partnered with Midjourney for months already. Twitter actively scrapes everything for AI training. And even if you trusted the other big players like FB/IG to tell the truth about shit, people are going to use these platforms for datasets anyway. They'll just do it quietly and hope no one notices.

And places like cohost or whatever-- honestly, if it makes you feel safer/better, go for it, but I don't think cohost has the sway or capital to build the type of legal team you need to fight against scrapers. Hell, you wanna retreat into private discords? Discord wants in on AI too.

Everyone big is already dealing in AI, and everyone small doesn't even have a seat at the table. In my opinion, we are all collectively holding out for Brussels or any of the many court cases to do something about this shit, because it's no longer a thing we can just hide from.

I'm going to keep my writing on the AO3 because they are the odd case of having an actual legal team in place for this shit. For artists, I have nothing but sympathy. I suggest glazing and nightshading literally everything you post.

But beyond that, I'm unsure what we can do. This is a matter for legislation. Silicon Valley doesn't care if we all go to cohost, and even less scrupulous data-crawlers will just grab our shit from there too.

So I'll be here.

3K notes

Text

hey, everybody go into your blog settings right now, go to Visibility, and click prevent third party sharing. Do it for all your blogs.

The fact tumblr is partnering with AI companies fucking blows but at least (at the very fucking least!) we can opt out.

#fuck ai#and at least it doesnt seem to be an every 30 days bullshit like opting out of tumblr live was#tumblr

2K notes

Text

How plausible sentence generators are changing the bullshit wars

This Friday (September 8) at 10hPT/17hUK, I'm livestreaming "How To Dismantle the Internet" with Intelligence Squared.

On September 12 at 7pm, I'll be at Toronto's Another Story Bookshop with my new book The Internet Con: How to Seize the Means of Computation.

In my latest Locus Magazine column, "Plausible Sentence Generators," I describe how I unwittingly came to use – and even be impressed by – an AI chatbot – and what this means for a specialized, highly salient form of writing, namely, "bullshit":

https://locusmag.com/2023/09/commentary-by-cory-doctorow-plausible-sentence-generators/

Here's what happened: I got stranded at JFK due to heavy weather and an air-traffic control tower fire that locked down every westbound flight on the east coast. The American Airlines agent told me to try going standby the next morning, and advised that if I booked a hotel and saved my taxi receipts, I would get reimbursed when I got home to LA.

But when I got home, the airline's reps told me they would absolutely not reimburse me, that this was their policy, and they didn't care that their representative had promised they'd make me whole. This was so frustrating that I decided to take the airline to small claims court: I'm no lawyer, but I know that a contract takes place when an offer is made and accepted, and so I had a contract, and AA was violating it, and stiffing me for over $400.

The problem was that I didn't know anything about filing a small claim. I've been ripped off by lots of large American businesses, but none had pissed me off enough to sue – until American broke its contract with me.

So I googled it. I found a website that gave step-by-step instructions, starting with sending a "final demand" letter to the airline's business office. They offered to help me write the letter, and so I clicked and I typed and I wrote a pretty stern legal letter.

Now, I'm not a lawyer, but I have worked for a campaigning law-firm for over 20 years, and I've spent the same amount of time writing about the sins of the rich and powerful. I've seen a lot of threats, both those received by our clients and sent to me.

I've been threatened by everyone from Gwyneth Paltrow to Ralph Lauren to the Sacklers. I've been threatened by lawyers representing the billionaire who owned NSO Group, the notorious cyber arms-dealer. I even got a series of vicious, baseless threats from lawyers representing LAX's private terminal.

So I know a thing or two about writing a legal threat! I gave it a good effort and then submitted the form, and got a message asking me to wait for a minute or two. A couple minutes later, the form returned a new version of my letter, expanded and augmented. Now, my letter was a little scary – but this version was bowel-looseningly terrifying.

I had unwittingly used a chatbot. The website had fed my letter to a Large Language Model, likely ChatGPT, with a prompt like, "Make this into an aggressive, bullying legal threat." The chatbot obliged.

I don't think much of LLMs. After you get past the initial party trick of getting something like, "instructions for removing a grilled-cheese sandwich from a VCR in the style of the King James Bible," the novelty wears thin:

https://www.emergentmind.com/posts/write-a-biblical-verse-in-the-style-of-the-king-james

Yes, science fiction magazines are inundated with LLM-written short stories, but the problem there isn't merely the overwhelming quantity of machine-generated stories – it's also that they suck. They're bad stories:

https://www.npr.org/2023/02/24/1159286436/ai-chatbot-chatgpt-magazine-clarkesworld-artificial-intelligence

LLMs generate naturalistic prose. This is an impressive technical feat, and the details are genuinely fascinating. This series by Ben Levinstein is a must-read peek under the hood:

https://benlevinstein.substack.com/p/how-to-think-about-large-language

But "naturalistic prose" isn't necessarily good prose. A lot of naturalistic language is awful. In particular, legal documents are fucking terrible. Lawyers affect a stilted, stylized language that is both officious and obfuscated.

The LLM I accidentally used to rewrite my legal threat transmuted my own prose into something that reads like it was written by a $600/hour paralegal working for a $1500/hour partner at a white-shoe law-firm. As such, it sends a signal: "The person who commissioned this letter is so angry at you that they are willing to spend $600 to get you to cough up the $400 you owe them. Moreover, they are so well-resourced that they can afford to pursue this claim beyond any rational economic basis."

Let's be clear here: these kinds of lawyer letters aren't good writing; they're a highly specific form of bad writing. The point of this letter isn't to parse the text, it's to send a signal. If the letter was well-written, it wouldn't send the right signal. For the letter to work, it has to read like it was written by someone whose prose-sense was irreparably damaged by a legal education.

Here's the thing: the fact that an LLM can manufacture this once-expensive signal for free means that the signal's meaning will shortly change, forever. Once companies realize that this kind of letter can be generated on demand, it will cease to mean, "You are dealing with a furious, vindictive rich person." It will come to mean, "You are dealing with someone who knows how to type 'generate legal threat' into a search box."

Legal threat letters are in a class of language formally called "bullshit":

https://press.princeton.edu/books/hardcover/9780691122946/on-bullshit

LLMs may not be good at generating science fiction short stories, but they're excellent at generating bullshit. For example, a university prof friend of mine admits that they and all their colleagues are now writing grad student recommendation letters by feeding a few bullet points to an LLM, which inflates them with bullshit, adding puffery to swell those bullet points into lengthy paragraphs.

Naturally, the next stage is that profs on the receiving end of these recommendation letters will ask another LLM to summarize them by reducing them to a few bullet points. This is next-level bullshit: a few easily-grasped points are turned into a florid sheet of nonsense, which is then reconverted into a few bullet-points again, though these may only be tangentially related to the original.

What comes next? The reference letter becomes a useless signal. It goes from being a thing that a prof has to really believe in you to produce, whose mere existence is thus significant, to a thing that can be produced with the click of a button, and then it signifies nothing.

We've been through this before. It used to be that sending a letter to your legislative representative meant a lot. Then, automated internet forms produced by activists like me made it far easier to send those letters and lawmakers stopped taking them so seriously. So we created automatic dialers to let you phone your lawmakers, this being another once-powerful signal. Lowering the cost of making the phone call inevitably made the phone call mean less.

Today, we are in a war over signals. The actors and writers who've trudged through the heat-dome up and down the sidewalks in front of the studios in my neighborhood are sending a very powerful signal. The fact that they're fighting to prevent their industry from being enshittified by plausible sentence generators that can produce bullshit on demand makes their fight especially important.

Chatbots are the nuclear weapons of the bullshit wars. Want to generate 2,000 words of nonsense about "the first time I ate an egg," to run overtop of an omelet recipe you're hoping to make the number one Google result? ChatGPT has you covered. Want to generate fake complaints or fake positive reviews? The Stochastic Parrot will produce 'em all day long.

As I wrote for Locus: "None of this prose is good, none of it is really socially useful, but there’s demand for it. Ironically, the more bullshit there is, the more bullshit filters there are, and this requires still more bullshit to overcome it."

Meanwhile, AA still hasn't answered my letter, and to be honest, I'm so sick of bullshit I can't be bothered to sue them anymore. I suppose that's what they were counting on.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/09/07/govern-yourself-accordingly/#robolawyers

Image: Cryteria (modified): https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0: https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#chatbots#plausible sentence generators#robot lawyers#robolawyers#ai#ml#machine learning#artificial intelligence#stochastic parrots#bullshit#bullshit generators#the bullshit wars#llms#large language models#writing#Ben Levinstein

2K notes

Text

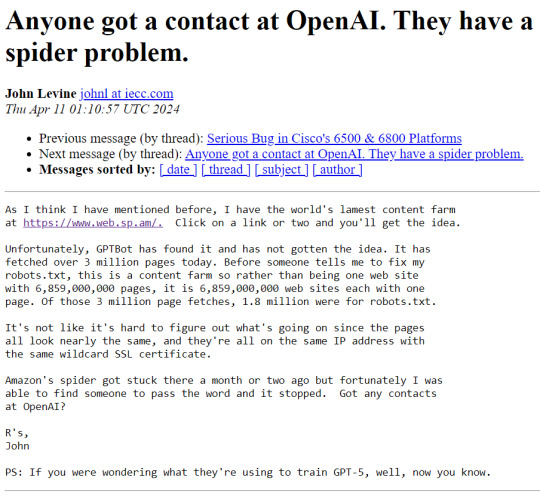

To understand what's going on here, know these things:

- OpenAI is the company that makes ChatGPT
- A spider is a kind of bot that autonomously crawls the web and sucks up web pages
- robots.txt is a standard text file that most web sites use to inform spiders whether or not they have permission to crawl the site; basically a No Trespassing sign for robots
- OpenAI's spider is ignoring robots.txt (very rude!)
- The web.sp.am site is a research honeypot created to trap ill-behaved spiders, consisting of billions of nonsense garbage pages that look like real content to a dumb robot
- OpenAI is training its newest ChatGPT model using this incredibly lame content, having consumed over 3 million pages and counting...

It's absurd and horrifying at the same time.
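For anyone wondering what "permission to crawl" looks like in practice, here is a minimal sketch in Python using the standard library's `urllib.robotparser`. The robots.txt text and the example.com URLs are hypothetical, made up for illustration; they are not the real file served by web.sp.am. `GPTBot` is the user-agent token OpenAI publishes for its crawler, and `SomeOtherBot` is an invented name. The point it shows: robots.txt is purely advisory. A polite spider runs a check like `allowed()` before every request and skips anything disallowed, while a spider that never runs the check faces no technical barrier at all.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an illustrative site (example.com).
# This is NOT the actual file served by web.sp.am or any real site.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def allowed(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """A well-behaved spider calls this before each request and skips the URL if it returns False."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    for agent, url in [
        ("GPTBot", "https://example.com/stories/1.html"),        # blocked everywhere
        ("SomeOtherBot", "https://example.com/stories/1.html"),  # falls through to the * rules
        ("SomeOtherBot", "https://example.com/private/notes.html"),
    ]:
        print(agent, url, allowed(rp, agent, url))
```

Run as a script, it prints one line per agent/URL pair; only the unlisted bot fetching a public page is allowed. Parsing from a string with `parse()` keeps the example offline; a real crawler would call `set_url()` and `read()` to fetch the live file first.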

12K notes

Text

How to Tell If That Post of Advice Is AI Bullshit

Right, I wasn't going to write more on this, but every time I block an obvious AI-driven blog, five more clutter up the tags. So this is my current (April 2024) advice on how to spot AI posts passing themselves off as useful writing advice.

No Personality - Look up a long-running writing blog and you'll notice most people try to make their posts engaging and write from a personal perspective. We do this because we're writers and, well, we want to convey a sense of ourselves to our readers. A lot of AI posts are straightforward - no sense of an actual person writing them, no variation in tone or text.

No Examples - No attempts to show how pieces of advice would work in a story, or cite a work where you could see it in action. An AI post might tell you to describe a person by highlighting two or three features, and that's great, but it's hard to figure out how that works without an example.

Short, Unhelpful Definitions - A lot of what I've seen amounts to two- or three-sentence listicles. 'When you want to write foreshadowing, include a hint of what you want foreshadowed in an earlier chapter.' Cool beans, could've figured that out myself.

SEO/AI Prompt Language Included - I've seen way too many posts start with "this post is about..." or "now we will discuss..." or "in this post we will..." on every single blog. This language is either meant to catch a search engine or is ChatGPT restating the prompt question. It's not a natural way for the average tumblr user to write a post.

Oddly Clinical Language - Right, I'm calling out that post that tried to give advice on writing gay characters that called us "homosexuals" the entire time. That's a generative machine trying to stay within certain parameters, not an actual person who knows that's not a word you'd use unless you were trying to be insulting or dunking on your own gay ass in the funniest way possible.

Too Perfect - Most generative AI does not make spelling or grammar mistakes (this is how many a student gets caught trying to use it to cheat). You can find ways to make it sound more natural and have it make mistakes, but that takes time and effort, and neither is really a factor in these posts. They also tend to have really polished graphics and use the same format every time.

Maximized Tags (That Are Pointless) - Anyone who uses more than 10 one-word tags is a cop. Okay, fine, I'm joking, but there's a minimal amount of tags that are actually useful when promoting a post. More tags are not going to get a post noticed by the algorithm, there is no algorithm. Not everyone has to use their tags to make snarky comments, but if your tags look like a spambot, I'm gonna assume you're a spambot.

No Reblogs From The Rest of Writblr - I'm always finding new Writblr folks who have been around for a while, but every real person I've seen reblogs posts from other people. We've all got other stuff to do; I'm writing this blog to help others and so are they, and the whole point of tumblr is to pass along something you think is great.

You'll probably see some variation in the future (as people get wise to obviously generated text, they'll try to make it look less generated), but overall, there are still going to be tells when something is fake.

I don't have any real advice for what to do about this (other than block those blogs, which is what I do). Like most AI bullshit, I suspect most of these blogs are just another grift, attempting to build large follower counts to leverage or sell something to in the future. They may progress past these tattletale features, but I'm still going to block them when I see them. I don't see any value in writing advice compiled from the work of better writers who put the effort in when I can just go find those writers myself.

523 notes

Text

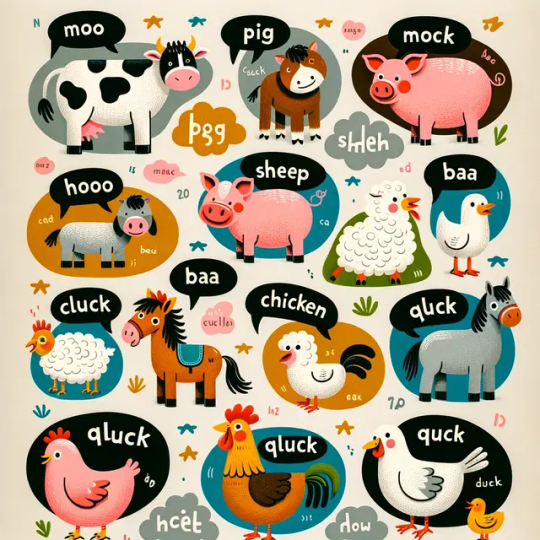

Hey kids, what sound does a wooly horse-sheep make?

What about a three-legged chicken?

All you have to do is ask chatgpt/dalle3, and the highest quality educational material can be yours at the click of a button.

#dalle3#chatgpt#ai generated#farm animals#animal sounds#old mcdonald had a chicken-sheep#e i e i uh oh#even a first grader can call bullshit on ai here

513 notes

Text

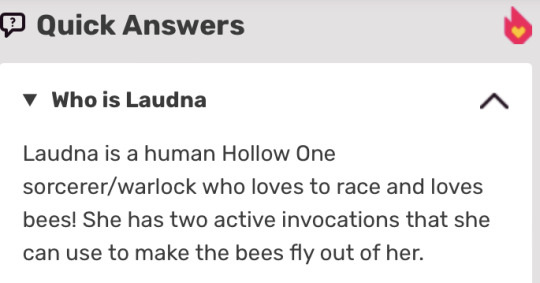

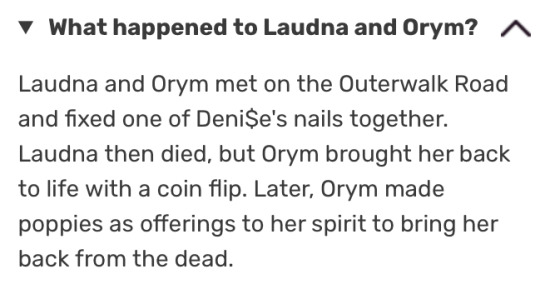

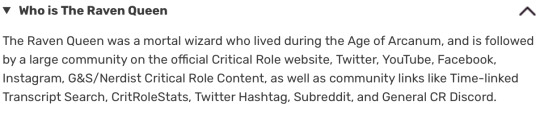

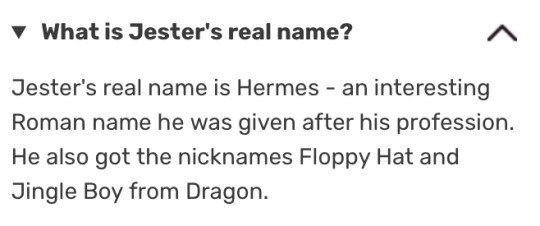

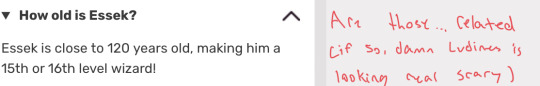

Hello please look at this collection of very accurate answers from the “quick answers” thing in the cr wiki

These were not written by a human being who has watched this show

#there’s more I swear but these are my favorites#they’re so fucking funny (and incorrect)#no amount of keyboard smashing will accurately represent how I feel about this#critical role#asdfghjkl;#undescribed#anyway fuck ai bullshit

988 notes
