#Data Mesh Architecture


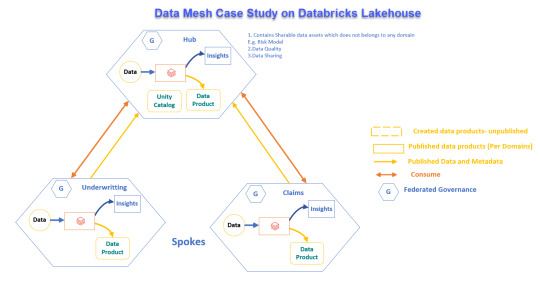

Implementing Data Mesh on Databricks: Harmonized and Hub & Spoke Approaches

Explore the Harmonized and Hub & Spoke Data Mesh models on Databricks. Enhance data management with autonomous yet integrated domains and central governance. Perfect for diverse organizational needs and scalable solutions. #DataMesh #Databricks


#Autonomous Data Domains#Data Governance#Data Interoperability#Data Lakes and Warehouses#Data Management Strategies#Data Mesh Architecture#Data Privacy and Security#Data Product Development#Databricks Lakehouse#Decentralized Data Management#Delta Sharing#Enterprise Data Solutions#Harmonized Data Mesh#Hub and Spoke Data Mesh#Modern Data Ecosystems#Organizational Data Strategy#Real-time Data Sharing#Scalable Data Infrastructures#Unity Catalog


The Future Of Data Warehousing: Getting Ready For The Next Big Thing

Data warehousing is a process of collecting data and organizing it into a central repository. The data can then be accessed by business users to improve decision making. Data warehouses are typically built using Extract, Transform, and Load (ETL) tools. The data is cleaned and organized in a way that makes it easy to use.
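As a rough illustration of the extract, transform, and load steps described above, here is a minimal sketch using Python's built-in `sqlite3` module as a stand-in warehouse. The sample records, the `orders` table, and all function names are hypothetical and chosen only for this example; real ETL tools handle far more than this.

```python
import sqlite3

# Hypothetical raw records, as they might arrive from a source system.
RAW_ORDERS = [
    {"order_id": "1", "customer": " alice ", "amount": "19.99"},
    {"order_id": "2", "customer": "BOB", "amount": "5.00"},
    {"order_id": "2", "customer": "BOB", "amount": "5.00"},   # duplicate row
    {"order_id": "3", "customer": "carol", "amount": "n/a"},  # unparseable amount
]


def extract():
    """Extract: return the raw rows from the (hypothetical) source."""
    return list(RAW_ORDERS)


def transform(rows):
    """Transform: normalize names, parse amounts, drop bad and duplicate rows."""
    seen = set()
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows whose amount cannot be parsed
        order_id = int(row["order_id"])
        if order_id in seen:
            continue  # skip duplicates
        seen.add(order_id)
        clean.append((order_id, row["customer"].strip().title(), amount))
    return clean


def load(conn, rows):
    """Load: write the cleaned rows into the central table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_etl(conn):
    """Run the three steps in order against the given connection."""
    load(conn, transform(extract()))
```

Calling `run_etl(sqlite3.connect(":memory:"))` leaves two cleaned rows in `orders`, which business users could then query with ordinary SQL.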

The benefits of data warehousing are many, including improved decision-making, increased efficiency, and more accurate information.

Improved Decision Making

Business users can access the data in the data warehouse to make better decisions. They can identify trends, patterns, and correlations that would not be visible in smaller amounts of data. The data can also be used to create reports and dashboards.

The use of data warehouses has led to improved decision making across many industries. For example, retailers can use the data to identify popular items and trends, and adjust their inventory accordingly. Banks can use the data to identify fraudulent activity, and health care providers can use the data to improve patient care.

Faster Analysis Of Business Trends

The world of business is always changing. To stay ahead of the competition, businesses need to be able to quickly analyze trends in their industry. Traditionally, this has been done by looking at data that is spread out across different departments and systems. This process can be slow and cumbersome, and it can be difficult to get a clear picture of what is happening in the business.

A data warehouse can help overcome these issues. By consolidating all of the data into a single system, businesses can quickly and easily analyze trends in their industry. This can help them make better decisions about how to move forward with their business.

Additionally, a data warehouse can help businesses identify patterns and correlations that they might not otherwise have been able to see.

Improved Business Processes

The role of data warehousing in business process improvement is twofold: first, data warehouses provide a single source of truth for all data in the organization, which can then be used to make better decisions; second, data warehouses can help automate business processes.

A well-designed data warehouse can provide a single source of truth for all the data in an organization. This means that all stakeholders – from senior management down to front-line employees – can trust the data that they are working with. This leads to better decision-making, as everyone has access to the same accurate information.

Data warehouses can also help automate business processes. By integrating with enterprise resource planning (ERP) systems, for example, data warehouses can automatically generate reports or send notifications when certain conditions are met. This helps businesses run more efficiently and reduces the chance of human error.

More Accurate Customer Segmentation

In order to provide a better customer experience and more accurate product recommendations, businesses need to move beyond simple customer segmentation. Data mesh architecture can help with this by allowing businesses to group customers based on their purchase history, demographics, and other factors. This allows businesses to create more specific customer segments and target them with relevant offers and content. Data warehousing can also help businesses understand how different customers interact with their products and identify opportunities for improvement.

More Effective Product Promotion

Data warehousing is an important process for businesses of all sizes. By organizing and analyzing data, businesses can more effectively promote their products. Data warehousing makes it possible to track customer behavior and preferences, which can help businesses create more effective marketing campaigns. In addition, data warehousing can help businesses identify trends and opportunities. By understanding the needs and wants of their customers, businesses can develop products that are more likely to be successful.


Exploring the Synergy of Data Mesh and Data Fabric

As the digital landscape evolves and data becomes increasingly complex, organizations are seeking innovative approaches to manage and derive value from their data assets. Data mesh and data fabric have emerged as promising frameworks that address the challenges associated with data democratization, scalability, and agility.

In this blog, our expert authors delve into the fundamentals of data mesh, an architectural paradigm that emphasizes decentralized ownership and domain-driven data products. Learn how data mesh enables organizations to establish self-serve data ecosystems, fostering a culture of data collaboration and empowering teams to own and govern their data.

Furthermore, explore the concept of data fabric, a unified and scalable data infrastructure layer that seamlessly connects disparate data sources and systems. Uncover the benefits of implementing a data fabric architecture, including improved data accessibility, enhanced data integration capabilities, and accelerated data-driven decision-making.

Through real-world examples and practical insights, this blog post showcases the synergistic relationship between data mesh and data fabric. Discover how organizations can leverage these two frameworks in harmony to establish a robust data architecture that optimizes data discovery, quality, and usability.

Stay ahead of the data management curve and unlock the potential of your organization's data assets. Read this thought-provoking blog post on the synergy of data mesh and data fabric today!

For more info visit here: https://www.incedoinc.com/exploring-the-synergy-of-data-mesh-and-data-fabric/


Introducing Data Mesh

Check out my latest video on #DataMesh - an emerging approach to data architecture that is revolutionizing data ownership and management. #DataArchitecture #DecentralizedData #DataOwnership

Please do not forget to subscribe to our posts at www.AToZOfSoftwareeEgineering.blog.

Listen & follow our podcasts available on Spotify and other popular platforms.

Have a great reading and listening experience!


#collaboration#data architecture#data governance#data integration#data management#data mesh#data ownership#data product#data quality#decentralized data#domain-driven design#self-serve infrastructure#youtube#security#it leadership#best practices#software development#automation#it leaders#innovation#business intelligence#agile development


Pre-alpha Lancer Tactics changelog

(cross-posting the full gif changelog here because folks seemed to like it last time I did)

We're aiming to get the first public alpha out to backers by the end of this month! Carpenter and I scoped out mechanics that can wait until after the alpha (e.g. grappling, hiding) in favor of tying up the hundred loose threads that are needed for something that approaches a playable game. So this is mostly a big ol' changelog of an update from doing that.

But I also gave a talent talk at a local Portland Indie Game Squad event about engine architecture! It'll sound familiar if you've been reading these updates; I laid out the basic idea for this talk almost a year ago, back in the June 2023 update.


We've also signed contracts & had a kickoff meeting with our writers to start on the campaigns. While I've enjoyed like a year of engine-work, it'll be so so nice to start getting to tell stories. Data structures don't mean anything beyond how they affect humans & other life.

New Content

Implemented flying as a status; unit counts as +3 spaces above the current ground level and ignores terrain and elevation extra movement costs. Added hover + takeoff/land animations.
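The flying rule above can be sketched roughly as follows. The +3 height bonus and the "ignores terrain and elevation extra movement costs" behavior come from the changelog; the ground movement rules (base cost 1, +1 for difficult terrain, +1 per level climbed) and every name here are assumptions made up for illustration, not the game's actual code.

```python
FLYING_HEIGHT_BONUS = 3  # from the changelog: fliers count as +3 spaces above ground


def effective_height(ground_height, flying):
    """Height a unit counts as occupying, given the ground level under it."""
    return ground_height + (FLYING_HEIGHT_BONUS if flying else 0)


def step_cost(from_height, to_height, difficult_terrain, flying):
    """Movement cost to enter one adjacent tile.

    Assumed ground rules (hypothetical): base cost 1, +1 for difficult
    terrain, +1 per level climbed. Fliers skip both extras.
    """
    if flying:
        return 1
    cost = 1
    if difficult_terrain:
        cost += 1
    cost += max(0, to_height - from_height)
    return cost
```

Under these assumed rules, a walker climbing two levels into difficult terrain pays 4 movement, while a flier pays 1 for the same step.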

Gave deployables the ability to have 3D meshes instead of 2D sprites; we'll probably use this mostly when the deployable in question is climbable.

Related, I fixed a bug where after terrain destruction, all units recheck the ground height under them so they'll move down if the ground is shot out from under them. When the Jerichos do that, they say "oh heck, the ground is taller! I better move up to stand on it!" — not realizing that the taller ground they're seeing came from themselves.

Fixed by locking some units' rendering to the ground level; this means no stacking climbable things, which is a call I'm comfortable making. We ain't making minecraft here (I whisper to myself, gazing at the bottom of my tea mug).

Block sizes are currently 1x1x0.5 — half as tall as they are wide. Since that was a size I pulled out of nowhere for convenience, we did some art tests for different block heights and camera angles. TLDR that size works great and we're leaving it.

Added Cone AOE pattern, courtesy of an algorithm NMcCoy sent me that guarantees the correct number of tiles are picked at the correct distance from the origin.

pick your aim angle

for each distance step N of your cone, make a list ("ring") of all the cells at that distance from your origin

sort those cells by angular distance from your aim angle, and include the N closest cells in that ring in the cone's area
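The three steps above can be sketched like this. This is one reading of the description, not NMcCoy's or the game's actual code: it assumes a square grid and measures "distance" as Chebyshev distance (so ring N is the outline of a square), and the function names are invented for the example.

```python
import math


def ring(origin, n):
    """All cells at Chebyshev distance n from origin (assumed distance metric)."""
    ox, oy = origin
    return [
        (ox + dx, oy + dy)
        for dx in range(-n, n + 1)
        for dy in range(-n, n + 1)
        if max(abs(dx), abs(dy)) == n
    ]


def angular_distance(a, b):
    """Smallest absolute difference between two angles, in radians."""
    d = (a - b) % (2 * math.pi)
    return min(d, 2 * math.pi - d)


def cone_cells(origin, aim_angle, length):
    """Pick the N cells closest to the aim angle from each ring N = 1..length."""
    ox, oy = origin
    cells = []
    for n in range(1, length + 1):
        candidates = sorted(
            ring(origin, n),
            key=lambda c: angular_distance(
                math.atan2(c[1] - oy, c[0] - ox), aim_angle
            ),
        )
        cells.extend(candidates[:n])  # exactly n cells at distance n
    return cells
```

Because each ring contributes exactly N cells, a cone of length 3 always covers 1 + 2 + 3 = 6 cells whatever the aim angle. When two cells are equally far from the aim angle, this sketch breaks the tie by sort order, which a real implementation would probably want to control.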

Here's a gif they made of it in Bitsy:

Units face where you're planning on moving/targeting them.

Got Walking Armory's Shock option working. Added subtle (too subtle, now that I look at it) electricity effect.

Other things we've added but I don't have gifs for or failed to upload. You'll have to trust me. :)

disengage action

overcharge action

Improved Armament core bonus

basic mine explosion fx

explosion fx on character dying

Increased the map elevation cap to 10. It's nice, but definitely risky since it increases the voxel space; gonna have to keep an eye on performance.

Added Structured + Stress event and the associated popups. Also added meltdown status (and hidden countdown), but there's no animation for this yet so your guy just abruptly disappears and leaves a huge crater.

UI Improvements

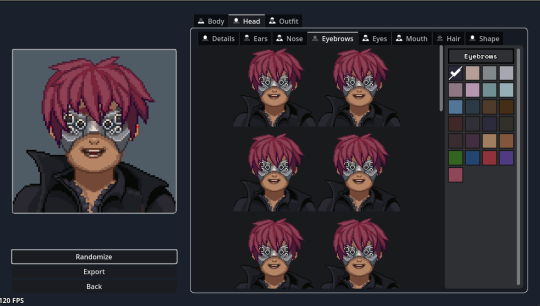

Rearranged the portrait maker. Auto-expand the color picker so you don't have to keep clicking into a submenu.

Added a top-down camera mode (press R) to help with getting mechs out of tight spaces.

The action tooltips have been bothering me for a while; they extend up and cover prime play-area real estate in the center of the screen. So I redesigned them to be shorter and have a max height by putting long descriptions in a scrollable box. This sounds simple, but the redesign, pulling in all the correct data for the tags, and wiring up the tooltips took like seven hours. Game dev is hard, yo.

Put the unit inspect popups in lockable tooltips + added a bunch of tooltips to them.

Implemented the rest of Carpenter's cool hex-y action and end turn readout. I'm a big fan of whenever we can make the game look more like a game and less like a website (though he balances out my impulse for that for the sake of legibility).

Added a JANKY talent/frame picker. I swear we have designs for a better one, but sometimes you gotta just get it working. Also seen briefly here are basic level up/down and HASE buttons.

Other no-picture things:

Negated the map-scaling effect that happens when the window resizes to prevent bad pixel scaling of mechs at different resolutions; making the window bigger now just lets you see more play area instead of making things bigger.

WIP Objectives Bullets panel to give the current sitrep info

Wired up a buncha tooltips throughout the character sheet.

Under the Hood

Serialization: can save/load games! This is the payoff for sticking with that engine architecture I've been going on about. I had to add a serialization function to everything in the center layer which took a while, but it was fairly straightforward work with few curveballs.
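For readers who haven't seen this pattern, here is a minimal sketch of what "a serialization function on everything" can look like. The game is built in Godot, so this Python version is only an analogy; the `Unit` and `GameState` classes, their fields, and the JSON format are all hypothetical.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Unit:
    name: str
    hp: int
    position: tuple  # (x, y, elevation)

    def to_dict(self):
        """Serialize to plain JSON-friendly types."""
        return {"name": self.name, "hp": self.hp, "position": list(self.position)}

    @classmethod
    def from_dict(cls, data):
        """Rebuild a Unit from the output of to_dict."""
        return cls(data["name"], data["hp"], tuple(data["position"]))


@dataclass
class GameState:
    turn: int
    units: list = field(default_factory=list)

    def to_dict(self):
        """Serialize the state by delegating to each unit."""
        return {"turn": self.turn, "units": [u.to_dict() for u in self.units]}

    @classmethod
    def from_dict(cls, data):
        """Rebuild a GameState from the output of to_dict."""
        return cls(data["turn"], [Unit.from_dict(u) for u in data["units"]])


def save_game(state):
    """Turn the whole state into a JSON string."""
    return json.dumps(state.to_dict())


def load_game(text):
    """Rebuild the state from a JSON string produced by save_game."""
    return GameState.from_dict(json.loads(text))
```

The useful property to check is the round trip: `load_game(save_game(state)) == state` should hold for any state, which is much easier to guarantee when game state lives in one layer with no references into rendering or UI objects.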

Finished replacement of the kit/unit/reinforcement group/sitrep pickers with a new standardized system that can pull from stock data and user-saved data.

Updated to Godot 4.2.2; the game (and editor) has been crashing on exit for a LONG time and for the life of me I couldn't track down why, but this minor update in Godot completely fixed the bug. I still have no idea what was happening, but it's so cool to be working in an engine that's this active bugfixing-wise!

Other Bugfixes

Pulled straight from the internal changelog, no edits for public parseability:

calculate cover for fliers correctly

no overwatch when outside of vertical threat

fixed skirmisher triggering for each attack in an AOE

fixed jumpjets boost-available detection

fixed mines not triggering when you step right on top of them // at a different elevation but still adjacent

weapon mods not a valid target for destruction

made camera pan less jumpy and adjust to the terrain height

better Buff name/desc localization

Fixed compcon planner letting you both boost and attack with one quick action.

Fix displayed movement points not updating

Prevent wrecks from going prone

fix berserkers not moving if they were exactly one tile away

hex mine uses deployer's save target instead of 0

restrict weapon mod selection if you don't have the SP to pay

fix deployable previews not going away

fix impaired not showing up in the unit inspector (its status code is 0 so there was a check that was like "looks like there's no status here")

fix skirmisher letting you move to a tile that should cost two movement if it's only one space away

fix hit percent calculation

fix rangefinder grid shader corner issues (this was like a full day to rewrite the shader to be better)

Teleporting costs the max(spaces traveled, elevation change) instead of always 1
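Two of the fixes in the list above are easy to illustrate. The sketch below shows how a status code of 0 can be mistaken for "no status" by a truthiness check, and the teleport cost rule as stated. Everything except the "impaired is 0" detail and the `max(...)` rule is hypothetical, including the second status and the use of `abs()` on the elevation change.

```python
from enum import IntEnum


class Status(IntEnum):
    IMPAIRED = 0  # per the changelog, impaired's status code is 0
    JAMMED = 1    # hypothetical second status, for contrast


def has_status_buggy(status):
    """Truthiness check: wrongly treats status code 0 as 'no status'."""
    return bool(status)


def has_status_fixed(status):
    """Explicit None check: status code 0 is a real status."""
    return status is not None


def teleport_cost(spaces_traveled, elevation_change):
    """Cost is the larger of distance and elevation change, not a flat 1.

    Taking abs() of the elevation change is an assumption of this sketch.
    """
    return max(spaces_traveled, abs(elevation_change))
```

With this setup, `has_status_buggy(Status.IMPAIRED)` is false while `has_status_fixed(Status.IMPAIRED)` is true, which is the general shape of the bug described in the impaired entry.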

So um, yeah, that's my talk, any questions? (I had a professor once tell us to never end a talk like this, so now of course it's the phrase that first comes to mind whenever I end a talk)


Jaegers of Pacific Rim: What do we know about them?

There's actually a fair amount of lore about Pacific Rim's jaegers, though most of it isn't actually in the movie itself. A lot of it has been scattered in places like Pacific Rim: Man, Machines, & Monsters, Tales From Year Zero, Travis Beacham's blog, and the Pacific Rim novelization.

Note that I will not be including information from either Pacific Rim: Uprising or Pacific Rim: The Black. Uprising didn't really add anything, and The Black's take on jaegers can easily be summed up as "simplified the concept to make a cartoon for children."

So what is there to know about jaegers, besides the fact that they're piloted by two people with their brains connected via computer?

Here's a fun fact: underneath the hull (which may or may not be pure iron), jaegers have "muscle strands" and liquid data transfer technology. Tendo Choi refers to them in the film when describing Lady Danger's repairs and upgrades:

Solid iron hull, no alloys. Forty engine blocks per muscle strand. Hyper-torque driver for every limb and a new fluid synapse system.

The novelization by Alex Irvine makes frequent references to this liquid data transfer tech. For example:

The Jaeger’s joints squealed and began to freeze up from loss of lubricant through the holes Knifehead had torn in it. Its liquid-circuit neural architecture was misfiring like crazy. (Page 29.)

He had enough fiber-optic and fluid-core cabling to get the bandwidth he needed. (Page 94.)

Newt soldered together a series of leads using the copper contact pins and short fluid-core cables. (Page 96.)

Unfortunately I haven't found anything more about the "muscle strands" and what they might be made of, but I do find it interesting that jaegers apparently have some sort of artificial muscle system going on, especially considering Newt's personnel dossier in the novel mentioned him pioneering research in artificial tissue replication at MIT.

The novelization also mentions that the pilots' drivesuits have a kind of recording device for their experiences while drifting:

This armored outer layer included a Drift recorder that automatically preserved sensory impressions. (Page 16.)

It was connected through a silver half-torus that looked like a travel pillow but was in fact a four-dimensional quantum recorder that would provide a full record of the Drift. (Page 96.)

This is certainly... quite the concept. Perhaps the PPDC has legitimate reasons for looking through the memories and feelings of their pilots, but let's not pretend this doesn't enable horrific levels of privacy invasion.

I must note, though, I haven't seen mention of a recording system anywhere outside of the novel. Travis Beacham doesn't mention it on his blog, and it never comes up in either Tales From Year Zero or Tales From The Drift, both written by him. Whether there just wasn't any occasion to mention it or whether this piece of worldbuilding fell by the wayside in Beacham's mind is currently impossible to determine.

Speaking of the drivesuits, let's talk about those more. The novelization includes a few paragraphs outlining how the pilots' drivesuits work. It's a two-layer deal:

The first layer, the circuity suit, was like a wetsuit threaded with a mesh of synaptic processors. The pattern of processor relays looked like circuitry on the outside of the suit, gleaming gold against its smooth black polymer material. These artificial synapses transmitted commands to the Jaeger’s motor systems as fast as the pilot’s brain could generate them, with lag times close to zero. The synaptic processor array also transmitted pain signals to the pilots when their Jaeger was damaged.

...

The second layer was a sealed polycarbonate shell with full life support and magnetic interfaces at spine, feet, and all major limb joints. It relayed neural signals both incoming and outgoing. This armored outer layer included a Drift recorder that automatically preserved sensory impressions.

...

The outer armored layer of the drivesuit also kept pilots locked into the Conn-Pod’s Pilot Motion Rig, a command platform with geared locks for the Rangers’ boots, cabled extensors that attached to each suit gauntlet, and a full-spectrum neural transference plate, called the feedback cradle, that locked from the Motion Rig to the spine of each Ranger’s suit. At the front of the motion rig stood a command console, but most of a Ranger’s commands were issued either by voice or through interaction with the holographic heads-up display projected into the space in front of the pilots’ faces. (Page 16.)

Now let's talk about the pons system. According to the novelization:

The basics of the Pons were simple. You needed an interface on each end, so neuro signals from the two brains could reach the central bridge. You needed a processor capable of organizing and merging the two sets of signals. You needed an output so the data generated by the Drift could be recorded, monitored, and analyzed. That was it. (Page 96.)

This is pretty consistent with other depictions of the drift, recording device aside. (Again, the 4D quantum recorder never comes up anywhere outside of the novel.)

The development of the pons system as we know it is depicted in Tales From Year Zero, which goes into further detail on what happened after Trespasser's attack on San Francisco. In this comic, a jaeger can be difficult to move if improperly calibrated. Stacker Pentecost, testing out a single arm, describes the experience as feeling like his hand is stuck in wet concrete; Doctor Caitlin Lightcap explains that it's resistance from the datastream because the interface isn't calibrated to Pentecost's neural profile. (I'm guessing that this is the kind of calibration the film refers to when Tendo Choi calls out Lady Danger's left and right hemispheres being calibrated.)

According to Travis Beacham's blog, solo piloting a jaeger for a short time is possible, though highly risky. While it won't cause lasting damage if the pilot survives the encounter, the neural overload that accumulates the longer a pilot goes on can be deadly. In this post he says:

It won't kill you right away. May take five minutes. May take twenty. No telling. But it gets more difficult the longer you try. And at some point it catches up with you. You won't last a whole fight start-to-finish. Stacker and Raleigh managed to get it done and unplug before hitting that wall.

In this post he says:

It starts off fine, but it's a steep curve from fine to dead. Most people can last five minutes. Far fewer can last thirty. Nobody can last a whole fight.

Next, let's talk about the size and weight of jaegers. Pacific Rim: Man, Machines, & Monsters lists off the sizes and weights of various jaegers. The heights of the jaegers it lists (which, to be clear, are not all of them) range from 224 feet to 280 feet. Their weights range from 1850 tons to 7890 tons. Worth noting, the heaviest jaegers (Romeo Blue and Horizon Brave) were among the Mark-1s, and it seems that these heavy builds didn't last long given that another Mark-1, Coyote Tango, weighed 2312 tons.

And on the topic of jaeger specs, each jaeger in Pacific Rim: Man, Machines, & Monsters is listed with a (fictional) power core and operating system. For example, Crimson Typhoon is powered by the Midnight Orb 9 power core, and runs on the Tri-Sun Plasma Gate OS.

Where the novelization's combat asset dossiers cover the same jaegers, this information lines up - with the exception of Lady Danger. PR:MMM says that Lady Danger's OS is Blue Spark 4.1; the novelization's dossier says it's BLPK 4.1.

PR:MMM also seems to have an incomplete list of the jaegers' armaments; for example, it lists the I-22 Plasmacaster under Weaponry, and "jet kick" under Power Moves. Meanwhile, the novelization presents its armaments thus:

I-22 Plasmacaster

Twin Fist gripping claws, left arm only

Enhanced balance systems and leg-integral Thrust Kickers

Enhanced combat-strike armature on all limbs

The novel's dossiers list between two and four features in the jaegers' armaments sections.

Now let's move on to jaeger power cores. As many of you probably already know, Mark-1 through Mark-3 jaegers were outfitted with nuclear power cores. However, this posed a risk of cancer for pilots, especially during the early days. To combat this, pilots were given the (fictional) anti-radiation drug, Metharocin. (We see Stacker Pentecost take Metharocin in the film.)

The Mark-4s and beyond were fitted with alternative fuel sources, although their exact nature isn't always clear. Striker Eureka's XIG supercell chamber implies some sort of giant cell batteries, but it's a little harder to guess what Crimson Typhoon's Midnight Orb 9 might be, aside from round.

Back on the topic of nuclear cores, though, the novelization contains a little paragraph about the inventor of Lady Danger's power core, which I found entertaining:

The old nuclear vortex turbine lifted away from the reactor housing. The reactor itself was a proprietary design, brainchild of an engineer who left Westinghouse when they wouldn’t let him use his lab to explore portable nuclear miniaturization tech. He’d landed with one of the contractors the PPDC brought in at its founding, and his small reactors powered many of the first three generations of Jaegers. (Page 182.)

Like... I have literally just met this character, and I love him. I want him to meet Newt Geiszler, you know? >:3

Apparently, escape pods were a new feature to Mark-3 jaegers. Text in the novelization says, "New to the Mark III is an automated escape-pod system capable of ejecting each Ranger individually." (Page 240.)

Finally, jaegers were always meant to be more than just machines. Their designs and movements were meant to convey personality and character. Pacific Rim: Man, Machines, & Monsters says:

Del Toro insisted the Jaegers be characters in and of themselves, not simply giant versions of their pilots. Del Toro told his designers, "It should be as painful for you to see a Jaeger get injured as it is for you to see the pilot [get hurt.]" (Page 56.)

Their weathered skins are inspired by combat-worn vehicles from the Iraq War and World War II battleships and bombers. They look believable and their design echoes human anatomy, but only to a point. "At the end of the day, what you want is for them to look cool," says Francisco Ruiz Velasco. "It's a summer movie, so you want to see some eye candy." Del Toro replies, "I, however, believe in 'eye protein,' which is high-end design with a high narrative content." (Page 57.)

THE JAEGER FROM DOWN UNDER is the only Mark 5, the most modern and best all-around athlete of the Jaegers. He's also the most brutal of the Jaeger force. Del Toro calls him "sort of brawler, like a bar fighter." (Page 64.)

And that is about all the info I could scrounge up and summarize in a post. I think there's a lot of interesting stuff here - like, I feel that the liquid circuit and muscle tissue stuff gives jaegers an eerily organic quality that could be played for some pretty interesting angles. And I also find it interesting that jaegers were meant to embody their own sort of character and personality, rather than just being simple combat machines or extensions of their pilots - it's a great example of a piece of media choosing thematic correctness over technical correctness, which when you get right down to it, is sort of what Pacific Rim is really all about.


Contorted Compositions V2.0 webinar: Grasshopper + 3ds Max with @amir.h.fakhrghasemi. If you can’t attend at the live date and time, you will still get access to the recording by registering! Register now: https://designmorphine.com/education/contorted-compositions-v2-0

Contorted Compositions V2.0 introduces a set of form-finding methods based on the Minimal Surface concept and its combination with Polygon Modeling methods in Rhinoceros, Grasshopper, and 3ds Max. Simulation of Forces and Data Management tools in both 3ds Max and Grasshopper are another part of this teaching webinar. By combining the modeling tools in these two programs, we attain the necessary freedom to customize mesh typology. (at 𝓣𝓱𝒆 𝓤𝒏𝒊𝓿𝒆𝒓𝒔𝒆) https://www.instagram.com/p/CjighREJMGM/?igshid=NGJjMDIxMWI=

#rhino3d#grasshopper3d#parametricart#3dmodeling#architecture#parametric#3dsmax#autodesk#math#building


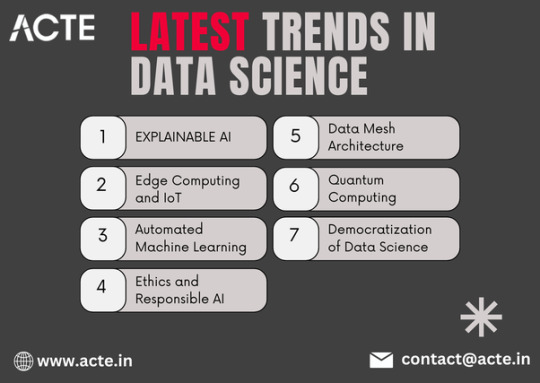

The Transformative Frontiers of Data Science in 2024

The world of data science is undergoing a remarkable transformation, with new advancements and emerging trends reshaping the way we extract insights and make data-driven decisions. As we venture into the mid-2020s, let's explore some of the key frontiers that are defining the future of this dynamic field.

With a Data Science Course in Coimbatore, professionals can gain the skills and knowledge needed to harness the capabilities of Data Science for diverse applications and industries.

Some of the Key Trends Shaping the Evolving Landscape of Data Science:

Unraveling the Black Box: Explainable AI

The increasing complexity of machine learning models has sparked a growing demand for transparency and interpretability. Techniques like SHAP, LIME, and Grad-CAM are enabling data scientists to delve deeper into the inner workings of their algorithms, fostering greater trust and informed decision-making.

Responsible Data Stewardship: The Rise of Ethical AI

Alongside the push for powerful AI solutions, there is a heightened emphasis on developing systems that align with principles of privacy, security, and non-discrimination. Organizations are investing in robust frameworks to ensure their data-driven initiatives are ethical and accountable.

Democratizing Data Insights: Empowering Domain Experts

The democratization of data science is underway, as user-friendly tools and no-code/low-code platforms lower the technical barrier to entry. This empowers domain experts and business analysts to leverage data-driven insights without requiring deep technical expertise.

Edge Computing and the IoT Frontier

As the Internet of Things (IoT) expands, data science is playing a pivotal role in processing and analyzing the vast amounts of data generated at the network edge. This is enabling real-time decision-making and actionable insights closer to the source of data.

To master the intricacies of Data Science and unlock its full potential, individuals can benefit from enrolling in the Data Science Certification Online Training.

Decentralized Data Governance: The Data Mesh Architecture

Traditional centralized data warehousing approaches are giving way to a decentralized, domain-driven data architecture known as the "data mesh." This model empowers individual business domains to own and manage their data, fostering greater agility and flexibility.

Conversational Breakthroughs: Advances in Natural Language Processing

Recent advancements in transformer-based models, such as GPT-3 and BERT, have significantly improved the capabilities of natural language processing (NLP) for tasks like text generation, sentiment analysis, and language translation.

Quantum Leap: The Emergence of Quantum Computing

While still in its early stages, quantum computing is expected to have a profound impact on data science, particularly in areas like cryptography, optimization, and simulation. Pioneering research is underway to harness the power of quantum mechanics for data-intensive applications.

As the data science landscape continues to evolve, professionals in this field will need to stay agile, continuously learn, and collaborate across disciplines to unlock the full potential of data-driven insights and transform the way we approach problem-solving.


Maximizing Urban Efficiency: The Power of AMR/AMI Solutions and Network Installation Consulting

Introduction

In an era where urbanization poses unprecedented challenges to city management, the integration of innovative solutions becomes imperative. Advanced Metering Infrastructure (AMI) and Automated Meter Reading (AMR) projects offer promising avenues for cities to enhance resource management and operational efficiency. However, the successful implementation of these systems often relies on the expertise provided by professional network installation consulting services. This article explores how the synergy between AMR/AMI solutions and network installation consulting can catalyze transformative changes in cities.

Understanding AMR/AMI Solutions

AMR and AMI technologies revolutionize the way cities monitor and manage utilities such as water, electricity, and gas consumption. AMR systems automate the process of collecting meter readings, eliminating the need for manual intervention and reducing errors. On the other hand, AMI goes beyond mere meter reading by enabling two-way communication between meters and utility providers. This bidirectional communication empowers utilities with real-time data insights and enables proactive management of resources.

The Benefits of AMR/AMI Implementation

Enhanced Operational Efficiency:

Streamlined Data Collection: AMR/AMI systems automate meter reading processes, reducing reliance on manual readings and minimizing errors.

Real-Time Monitoring: With AMI technology, utilities gain access to real-time consumption data, allowing for immediate detection of leaks, outages, or abnormalities.

Improved Resource Allocation: Accurate data provided by AMR/AMI systems enables utilities to optimize resource distribution, leading to cost savings and improved service delivery.

Customer Empowerment:

Consumption Awareness: AMR/AMI systems empower consumers with insights into their utility usage patterns, fostering awareness and encouraging conservation.

Timely Issue Resolution: With real-time monitoring capabilities, utilities can promptly address customer concerns such as billing discrepancies or service interruptions, enhancing customer satisfaction.
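The real-time leak detection mentioned above can be pictured with a small example. This is a minimal sketch, assuming hourly interval readings from an AMI water meter and a common rule of thumb: a property without a leak shows at least one hour of near-zero use per day. The function name, the threshold, and the sample data are illustrative assumptions, not part of any vendor's system.

```python
from typing import Sequence


def suspect_leak(hourly_litres: Sequence[float],
                 window_hours: int = 24,
                 min_flow: float = 1.0) -> bool:
    """Return True if usage never drops below min_flow for a full window.

    Assumption: continuous flow for `window_hours` consecutive readings
    is treated as a possible leak. Threshold values are illustrative.
    """
    run = 0  # length of the current run of readings at or above min_flow
    for litres in hourly_litres:
        run = run + 1 if litres >= min_flow else 0
        if run >= window_hours:
            return True
    return False


# Hypothetical sample data: a normal day has quiet overnight hours,
# a leaking day never falls below a few litres per hour.
normal_day = [0.0] * 6 + [12.0, 30.0, 8.0] + [4.0] * 9 + [25.0, 15.0] + [0.0] * 4
leaky_day = [5.0] * 6 + [17.0, 35.0, 13.0] + [9.0] * 9 + [30.0, 20.0] + [5.0] * 4

print(suspect_leak(normal_day))  # False
print(suspect_leak(leaky_day))   # True
```

A check like this only becomes practical with interval data; a meter read once a month gives a single total and cannot show whether flow ever stopped.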

Leveraging Network Installation Consulting

While the benefits of AMR/AMI solutions are evident, their successful deployment hinges on robust network infrastructure. Professional network installation consulting services play a pivotal role in ensuring the seamless integration and optimization of these systems within city environments. Key contributions of network installation consulting include:

Infrastructure Assessment:

Conducting comprehensive assessments to evaluate the existing infrastructure and identify potential bottlenecks or compatibility issues.

Designing customized network architectures tailored to the specific needs and scale of the city.

Deployment Support:

Providing expertise in deploying communication networks necessary for AMR/AMI functionality, including wireless mesh networks or cellular connectivity.

Ensuring proper installation and configuration of hardware components such as meters, gateways, and data concentrators.

Scalability and Future-Proofing:

Designing network solutions that accommodate future expansion and technological advancements, ensuring scalability and long-term viability.

Offering ongoing support and maintenance services to address evolving needs and optimize system performance.

Conclusion

In an increasingly urbanized world, the effective management of resources is paramount for sustainable development. AMR/AMI solutions offer cities the tools to address these challenges by enabling efficient utility management and empowering both utilities and consumers with real-time data insights. However, the successful implementation of AMR/AMI systems relies on robust network infrastructure, underscoring the importance of professional network installation consulting services. By leveraging the synergy between AMR/AMI solutions and network installation consulting, cities can unlock transformative potential and pave the way towards a smarter, more sustainable future.

To know more please visit: https://xususa.com/

https://www.linkedin.com/company/101381413/admin/feed/posts

or call: 469-506-1712

Address: Dallas, TX 75001, United States

#xtreme utility solutions#smart utility solutions#city utility services#bestutilityservicesindallas#innovative utility solutions

0 notes

Text

Real-World Application of Data Mesh with Databricks Lakehouse

Explore how a global reinsurance leader transformed its data systems with Data Mesh and Databricks Lakehouse for better operations and decision-making.

View On WordPress

#Advanced Analytics#Business Transformation#Cloud Solutions#Data Governance#Data management#Data Mesh#Data Scalability#Databricks Lakehouse#Delta Sharing#Enterprise Architecture#Reinsurance Industry

0 notes

Text

From Chaos to Clarity - How Data Mesh is Taming Data Silos for Modern Businesses

In today's data-driven world, businesses are generating more data than ever before. From customer transactions and marketing campaigns to website analytics and social media interactions, the volume of information is truly staggering. However, this abundance can be a double-edged sword. Often, valuable data gets trapped within departmental "silos," making it difficult to access, analyze, and leverage for strategic decision-making. This fragmented data landscape leads to frustration, hinders collaboration, and ultimately, restricts a company's ability to unlock the full potential of its information assets.

The Silo Effect: How Data Fragmentation Hinders Progress

The culprit behind this data chaos? Data silos. These arise when different departments within an organization collect and manage their own data independently. Departmental ownership of specific data sets, lack of standardized formats across departments, or simply a culture of information control can all contribute to silo formation. Regardless of the cause, the consequences are far-reaching.

Limited Visibility: Without a unified view of all relevant data, gaining a holistic understanding of the business becomes challenging. This can lead to missed opportunities, inefficient resource allocation, and flawed strategic planning.

Data Inconsistency: Fragmented data management can lead to inconsistencies in quality and format. This makes it difficult to trust the accuracy of insights derived from the data, hindering data-driven decision-making.

Reduced Agility: When valuable data is locked away in silos, it takes longer to access and analyze, hindering an organization's ability to respond quickly to market changes or customer needs.

Traditional approaches to data integration, such as building centralized data warehouses, often prove cumbersome and expensive. They require significant upfront investment and ongoing maintenance, making them both time-consuming and resource-intensive.

Breaking Down the Walls: Introducing Data Mesh

The Data Mesh architecture offers a revolutionary solution to the challenge of data silos. It promotes a decentralized approach to data management, where ownership and responsibility for data reside with the business domains that originate it. This approach empowers data domains, such as marketing, sales, or finance, to own and manage their generated data. This fosters accountability, ensures data quality, and instills a sense of stewardship within the organization.

One of the key features of Data Mesh is self-service data availability. Domains are responsible for preparing their data as easily consumable products, allowing other departments to access and utilize it without relying on centralized IT teams. This self-service approach democratizes data access, facilitating collaboration, and enabling faster decision-making.

Data governance remains a vital component of Data Mesh, but it is implemented at the domain level. Each domain is responsible for ensuring the quality and consistency of its own data set. This decentralized approach to data governance aligns with the principles of Data Mesh, promoting agility and empowering data domains to take ownership of their data management practices.

Lastly, Data Mesh fosters a culture of data collaboration and innovation, enabling data sharing across domains and unlocking new opportunities for innovation.

Building a Data-Driven Future: Implementing Data Mesh

While Data Mesh offers a promising solution, its implementation requires meticulous planning and execution. The initial step involves identifying data domains within an organization and assigning clear data ownership responsibilities. Establishing data governance frameworks and standards is crucial, encompassing standardized data models, access controls, and quality assurance measures across all data domains.

Moreover, investing in data interoperability tools, such as APIs and data catalogs, facilitates seamless data exchange and integration between domains. Encouraging a data-driven culture is pivotal, entailing training employees on data literacy and highlighting the benefits of Data Mesh.
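To make the role of a data catalog concrete, here is a minimal sketch of how domains might register data products and how other teams might discover them. It is an in-memory stand-in, not a real catalog tool; the class names, fields, tags, and contact addresses are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DataProduct:
    """A dataset published by a domain for others to consume."""
    domain: str          # owning business domain, e.g. "sales"
    name: str            # product name, unique within the domain
    owner: str           # accountable contact for quality questions
    schema: tuple        # column names, kept simple for the sketch
    tags: frozenset = frozenset()


@dataclass
class Catalog:
    """In-memory stand-in for a shared data catalog (hypothetical)."""
    _products: dict = field(default_factory=dict)

    def register(self, product: DataProduct) -> None:
        key = (product.domain, product.name)
        if key in self._products:
            raise ValueError(f"{product.domain}.{product.name} already registered")
        self._products[key] = product

    def search(self, tag: str) -> list:
        """Return products carrying the tag, sorted for stable output."""
        hits = [p for p in self._products.values() if tag in p.tags]
        return sorted(hits, key=lambda p: (p.domain, p.name))


catalog = Catalog()
catalog.register(DataProduct("sales", "orders", "sales-data@example.com",
                             ("order_id", "customer_id", "amount"),
                             frozenset({"customer", "revenue"})))
catalog.register(DataProduct("marketing", "campaign_clicks", "mkt-data@example.com",
                             ("campaign_id", "customer_id", "clicked_at"),
                             frozenset({"customer"})))

for product in catalog.search("customer"):
    print(f"{product.domain}.{product.name} (owner: {product.owner})")
```

Each product names its owning domain and a contact, so ownership stays with the domain while discovery happens in one shared place.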

Conclusion

Data silos present a significant challenge to businesses, hindering their ability to leverage the full value of their data assets. Traditional approaches to data integration are time-consuming and resource-intensive, but the Data Mesh architecture offers a revolutionary solution. The Data Mesh promotes a decentralized approach, empowering business domains to own and manage their data, fostering accountability, data quality, and stewardship.

If you're seeking effective data solutions, consider exploring services from Cipherslab. With a team of skilled and experienced data analysts, they offer a wide range of solutions to address your data management needs. Visit CipherSlab to unlock the power of your data assets today.

0 notes

Text

European supercomputer Jupiter's CPU, Rhea1, gets a spec bump and a delay

Tick tick tick: SiPearl is the French company selected by the European supercomputer consortium (EuroHPC JU) to develop a chip for the first exascale-class supercomputer in the region. The organization recently released updated specifications for the Rhea1 microchip, indicating that the first samples will be available later than initially expected.

While attending the ISC trade show in Hamburg, SiPearl shared the latest specifications and main features of the supercomputer chip Rhea1. This “first-generation” processor will utilize the Arm Neoverse V1 platform, empowering the high-performance computing tasks of Europe’s next-generation supercomputers while consuming less energy than competing products.

Previously, Rhea1’s specifications included 72 Neoverse V1 cores. However, SiPearl now indicates that the chip will feature a total of 80 Arm cores. The Rhea1 project always intended to incorporate 80 computing cores, the company clarified, with each core containing two 256-bit scalable vector extensions for “fast vector computations” in HPC scenarios.

The updated design includes built-in, high-bandwidth memory chips with four different HBM stacks per chip. Many HPC tasks, especially AI inferencing, will greatly benefit from integrated RAM. SiPearl is collaborating with Samsung to utilize HBM2e memory chips, although the Korean manufacturer will soon introduce the new HBM4 standard.

In addition to HBM, Rhea1 chips will also feature four DDR5 interfaces supporting two additional DIMM modules per channel. The design accommodates a total of 104 PCIe Gen5 lanes, with configurations of up to six x16 lanes plus two x4 lanes. The Neoverse technology also incorporates a specific Network-on-Chip component, the CMN-700 Coherent Mesh NoC, to facilitate rapid data sharing between compute and I/O elements.
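The figures quoted above can be cross-checked with simple arithmetic. The sketch below uses only numbers from the article, plus one assumption: that the vector units operate on 64-bit (double-precision) values, as is typical for HPC workloads. It says nothing about clock speed or peak FLOPS, which the article does not give.

```python
# Figures taken from the article; everything derived from them is plain arithmetic.
CORES = 80
SVE_UNITS_PER_CORE = 2
SVE_WIDTH_BITS = 256
FP64_BITS = 64  # assumption: double-precision operands

# How many 64-bit values the vector units can hold side by side.
lanes_per_core = SVE_UNITS_PER_CORE * SVE_WIDTH_BITS // FP64_BITS
lanes_per_chip = lanes_per_core * CORES

# Six x16 links plus two x4 links should add up to the stated lane total.
pcie_lanes = 6 * 16 + 2 * 4

print(lanes_per_core)  # 8
print(lanes_per_chip)  # 640
print(pcie_lanes)      # 104
```

The PCIe total matches the 104 lanes stated in the specification, so the "six x16 plus two x4" configuration uses the full lane budget.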

Rhea1 is already supported by a wide range of compilers, libraries, and tools. It is capable of powering both “traditional” HPC workloads and newer AI inference tasks. According to the French company, the chip’s “generous” memory capacity will be the key element in delivering increased performance levels while retaining the energy-efficiency features of the Arm architecture, resulting in an unprecedented byte-per-FLOP ratio.

What SiPearl failed to emphasize, however, is that the Rhea project was initially intended to produce the first actual chips by 2022. The silicon company previously announced that the chips would be manufactured by TSMC in 2023 but is postponing Rhea1’s debut to 2025. Installation of Jupiter, Europe’s first exascale supercomputer designed around Rhea’s chip architecture, is expected to start this year.

Source link

via

The Novum Times

0 notes

Text

Data Mesh: Principles, Benefits, Drawbacks, and Best Practices

Check out my latest blog on #DataMesh - an emerging approach to data architecture that is revolutionizing data ownership and management. Learn about the principles, benefits, drawbacks, and best practices for implementing this innovative approach. #DataAr

In recent years, the concept of data mesh has gained a lot of attention in the data engineering community. The term was coined by Zhamak Dehghani, a principal consultant at ThoughtWorks, in a blog post published in May 2019. Since then, data mesh has become a popular topic in the data engineering world, with many organizations exploring its potential benefits.

Data mesh is a new approach to data…

View On WordPress

#collaboration#data architecture#data governance#data integration#data management#data mesh#data ownership#data product#data quality#decentralized data#domain-driven design#self-serve infrastructure

1 note

Text

Elevate Your Data Strategy with Dataplex Solutions

Scalability, agility, and governance issues are limiting the use of traditional centralised data architectures in the rapidly changing field of data engineering and analytics. A new paradigm known as “data mesh” has arisen to address these issues and enables organisations to adopt a decentralised approach to data architecture. This blog post describes the idea of data mesh and explains how Dataplex, a BigQuery suite data fabric feature, may be utilised to achieve the advantages of this decentralised data architecture.

What is Data mesh

An architectural framework called “data mesh” encourages treating data like a product and decentralises infrastructure and ownership of data. More autonomy, scalability, and data democratisation are made possible by empowering teams throughout an organisation to take ownership of their respective data domains. Individual teams or data products assume control of their data, including its quality, schema, and governance, as opposed to depending on a centralised data team. Faster insights, simpler data integration, and enhanced data discovery are all facilitated by this dispersed responsibility paradigm.

An overview of the essential components of data mesh is provided in Figure 1.

Image credit to Google Cloud

Data mesh architecture

Let’s talk about the fundamentals of data mesh architecture and see how they affect how we use and manage data.

Domain-oriented ownership:

Data mesh places a strong emphasis on assigning accountability to specific domains or business units inside an organisation as well as decentralising data ownership. Every domain is in charge of overseeing its own data, including governance, access controls, and data quality. Domain specialists gain authority in this way, which promotes a sense of accountability and ownership. Better data quality and decision-making are ensured by this approach, which links data management with the particular requirements and domain expertise of each domain.

Self-serve data infrastructure:

Within a data mesh architecture, domain teams can access data infrastructure as a product that offers self-serve features. Domain teams can select and oversee their own data processing, storage, and analysis tools without depending on a centralised data team or platform. With this method, teams may customise their data architecture to meet their unique needs, which speeds up operations and lessens reliance on centralised resources.

Federated computational governance:

In a data mesh, governance follows a federated model rather than being imposed by a central authority. Each domain team helps define and implement governance procedures in accordance with the demands of its particular domain. This ensures that the people closest to the data make governance decisions, and it allows flexible adaptation to domain-specific requirements. Federated computational governance encourages responsibility, trust, and adaptability in the administration of digital assets.

Data as a product:

Data platforms are developed and maintained with a product mentality, and data within a data mesh is handled as such. This entails concentrating on adding value for the domain teams, or end users, and iteratively and continuously enhancing the data infrastructure in response to input. Teams who employ a product thinking methodology make data platforms scalable, dependable, and easy to use. They provide observable value to the company and adapt to changing requirements.
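The "computational" part of federated governance can be pictured as rules that are written as code and checked automatically. The sketch below is one way to express that idea, assuming each data product is described by a plain metadata dictionary. The policy names, metadata keys, and sample products are hypothetical and not taken from any specific platform.

```python
from typing import Callable, Dict, List, Optional

Product = dict                      # product metadata; keys are assumptions
Policy = Callable[[Product], bool]  # returns True when the product complies

# Global policies agreed jointly by the domains.
GLOBAL_POLICIES: Dict[str, Policy] = {
    "has_owner": lambda p: bool(p.get("owner")),
    "has_schema": lambda p: len(p.get("schema", [])) > 0,
    "pii_is_classified": lambda p: not p.get("contains_pii") or "classification" in p,
}


def violations(product: Product,
               domain_policies: Optional[Dict[str, Policy]] = None) -> List[str]:
    """Return the names of all policies the product fails, sorted."""
    policies = {**GLOBAL_POLICIES, **(domain_policies or {})}
    return sorted(name for name, check in policies.items() if not check(product))


orders = {"name": "orders", "owner": "sales-data@example.com",
          "schema": ["order_id", "amount"], "contains_pii": False}
clicks = {"name": "campaign_clicks", "owner": "",
          "schema": ["campaign_id", "email"], "contains_pii": True}

# A domain can layer its own rule on top of the shared ones.
finance_rules = {"has_retention": lambda p: "retention_days" in p}

print(violations(orders))                 # []
print(violations(clicks))                 # ['has_owner', 'pii_is_classified']
print(violations(orders, finance_rules))  # ['has_retention']
```

The shared rules apply everywhere, while each domain can add rules of its own, which mirrors the split between federation-wide standards and domain-level decisions.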

Google Dataplex

Dataplex is a cloud-native intelligent data fabric platform that simplifies, integrates, and analyses large, complex data sets. It standardises data lineage, governance, and discovery to help enterprises maximise data value.

Dataplex’s multi-cloud support allows you to leverage data from different cloud providers. Its scalability and flexibility allow you to handle large volumes of data in real-time. Its robust data governance capabilities help ensure security and compliance. Finally, its efficient metadata management improves data organisation and accessibility. Dataplex integrates data from various sources into a unified data fabric.

How to apply Dataplex on a data mesh

Step 1: Establish the data domain and create a data lake.

We specify the data domain, or data boundaries, when building a Google Cloud data lake. Data lakes are adaptable and scalable big data storage and analytics systems that store structured, semi-structured, and unstructured data in its original format.

Domains are represented in the following diagram as Dataplex lakes, each controlled by a different data provider. Data producers keep creation, curation, and access under control within their respective domains. In contrast, data consumers are able to make requests for access to these subdomains or lakes in order to perform analysis.

Image credit to Google Cloud

Step 2: Define the data zones and create zones in your data lake.

We create zones within the data lake in this stage. Every zone has distinct qualities and fulfils a certain function. Zones facilitate the organisation of data according to criteria such as processing demands, data type, and access needs. In the context of a data lake, creating data zones improves data governance, security, and efficiency.

Typical data zones consist of the following:

The raw zone is intended for the ingestion and storage of unfiltered, raw data. It serves as the landing point for new data entering the data lake. Because the data in this zone is usually preserved in its original format, it is well suited to data lineage and archiving.

Data preparation and cleaning occurs in the curated zone prior to data transfer to other zones. To guarantee data quality, this zone might include data transformation, normalisation, or deduplication.

Zone of transformation: This area contains high-quality, structured, and converted data that is prepared for use by data analysts and other users. This zone's data is arranged and enhanced for analytical uses.

Image credit to Google Cloud

Step 3: Fill the data lake zones with assets

We concentrate on adding assets to the various data lake zones in this step. The resources, data files, and data sets that are ingested into the data lake and kept in their designated zones are referred to as assets. You can fill the data lake with useful information for analysis, reporting, and other data-driven procedures by adding assets to the zones.
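Steps 1 to 3 describe a simple hierarchy: a lake per domain, zones inside the lake, and assets attached to zones. The sketch below models that hierarchy with plain Python data classes to show how the pieces relate. It is not the Dataplex client API; the class names, zone names, and resource strings are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Asset:
    name: str
    resource: str  # e.g. a storage bucket or BigQuery dataset reference


@dataclass
class Zone:
    name: str
    purpose: str  # "raw", "curated" or "transformation" in this sketch
    assets: List[Asset] = field(default_factory=list)


@dataclass
class Lake:
    domain: str
    zones: Dict[str, Zone] = field(default_factory=dict)

    def add_zone(self, name: str, purpose: str) -> Zone:
        zone = Zone(name, purpose)
        self.zones[name] = zone
        return zone

    def attach(self, zone_name: str, asset: Asset) -> None:
        # Raises KeyError if the zone was never created (step 2 precedes step 3).
        self.zones[zone_name].assets.append(asset)


sales = Lake(domain="sales")                       # step 1: one lake per domain
sales.add_zone("landing", "raw")                   # step 2: zones inside the lake
sales.add_zone("clean", "curated")
sales.attach("landing", Asset("order_files", "gs://example-sales-landing"))  # step 3
sales.attach("clean", Asset("orders", "bigquery:example_project.sales_clean"))

for zone in sales.zones.values():
    print(zone.name, zone.purpose, [a.name for a in zone.assets])
```

Keeping the asset as a reference to an existing bucket or dataset, rather than a copy of it, reflects the idea that the lake organises data where it already lives.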

Step 4: Protect your data lake

We put strong security measures in place in this stage to protect your data lake and the sensitive information it contains. Protecting sensitive data, assisting in ensuring compliance with data regulations, and upholding the confidence of your users and stakeholders all depend on having a safe data lake.

With Dataplex’s security approach, you can manage access to carry out the following actions:

Managing a data lake: establishing zones, building up more data lakes, and developing and linking assets.

Obtaining data connected to a data lake through the mapped asset (storage buckets and BigQuery data sets, for example).

Obtaining metadata related to the information connected to a data lake.

By assigning the appropriate basic and predefined roles, the administrator of a data lake controls access to Dataplex resources (such as the lake, zones, and assets).

Table schemas are among the metadata that metadata roles can access and inspect.

The ability to read and write data in the underlying resources that the assets in the data lake reference is granted to those who are assigned data roles.
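The three kinds of access described above (managing the lake, reading metadata, and reading or writing data) can be illustrated with a small permission check. This is a minimal sketch: the role names and the mapping from roles to actions are hypothetical and do not reproduce Dataplex's actual role identifiers.

```python
from enum import Enum, auto
from typing import Dict, Iterable, Set


class Action(Enum):
    MANAGE_LAKE = auto()     # create lakes, zones and assets
    READ_METADATA = auto()   # inspect table schemas and similar metadata
    READ_DATA = auto()       # read from the underlying buckets or datasets
    WRITE_DATA = auto()      # write to the underlying buckets or datasets


# Hypothetical role names; real platforms define their own role identifiers.
ROLE_ACTIONS: Dict[str, Set[Action]] = {
    "lake_admin": {Action.MANAGE_LAKE},
    "metadata_reader": {Action.READ_METADATA},
    "data_reader": {Action.READ_DATA},
    "data_writer": {Action.READ_DATA, Action.WRITE_DATA},
}


def is_allowed(roles: Iterable[str], action: Action) -> bool:
    """True if any of the principal's roles grants the action."""
    return any(action in ROLE_ACTIONS.get(role, set()) for role in roles)


analyst_roles = ["metadata_reader", "data_reader"]
print(is_allowed(analyst_roles, Action.READ_DATA))     # True
print(is_allowed(analyst_roles, Action.WRITE_DATA))    # False
print(is_allowed(["lake_admin"], Action.MANAGE_LAKE))  # True
```

Separating metadata access from data access lets a consumer browse what a domain offers before anyone grants them the right to read the data itself.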

Benefits of creating a data mesh

Enhanced accountability and ownership of data:

The transfer of data ownership and accountability to individual domain teams is one of the fundamental benefits of a data mesh. Every team now has accountability for the security, integrity, and quality of their data products thanks to the decentralisation of data governance.

Flexibility and agility:

Data meshes provide domain teams the freedom to make decisions on their own, enabling them to react quickly to changing business requirements. Iterative upgrades to existing data products and faster time to market for new ones are made possible by this agility.

Scalability and decreased bottlenecks:

By dividing up data processing and analysis among domain teams, a data mesh removes bottlenecks related to scalability. To effectively handle growing data volumes, each team can extend its data infrastructure on its own terms according to its own requirements.

Improved data discoverability and accessibility:

By placing a strong emphasis on metadata management, data meshes improve both discoverability and accessibility. Teams can find and comprehend available data assets with ease when they have access to thorough metadata.

Collaboration and empowerment:

Domain experts are enabled to make data-driven decisions that are in line with their business goals by sharing decision-making authority and data knowledge.

Cloud technologies enable scalable cloud-native infrastructure for data meshes. Serverless computing and elastic storage let companies scale their data infrastructure on demand for maximum performance and cost-efficiency.

Strong and comprehensive data governance: Dataplex provides a wide range of data governance solutions to assure data security, compliance, and transparency. Dataplex secures data and simplifies regulatory compliance via policy-driven data management, encryption, and fine-grained access restrictions. Through lineage tracing, the platform offers visibility into the complete data lifecycle, encouraging accountability and transparency. By enforcing uniform governance principles, organisations may guarantee consistency and dependability throughout their data landscape.

Effective data governance procedures are further enhanced by Dataplex's centralised data catalogue governance and data quality monitoring capabilities. Businesses can gain a number of advantages by adopting the concepts of decentralisation, data ownership, and autonomy. The benefits include better data quality, accountability, agility, scalability, and decision-making. This innovative strategy may put firms at the forefront of the data revolution, boosting growth, creativity, and competitiveness.

Read more on govindhtech.com

#Dataplex#BigQuery#DataIntegration#datamanagement#architecturalframework#DataGovernance#dataplatforms#GoogleCloud#strongsecurity#news#technews#technology#technologynews#technologytrends#govindhtech

0 notes

Text

3D Scanner Market Outlook Report 2024-2030: Trends, Strategic Insights, and Growth Opportunities | GQ Research

The 3D Scanner Market is set to witness remarkable growth, as indicated by recent market analysis conducted by GQ Research. In 2023, the global 3D Scanner Market showcased a significant presence, boasting a valuation of US$ 1.03 billion. This underscores the substantial demand for 3D scanner technology and its widespread adoption across various industries.

Get Sample of this Report at: https://gqresearch.com/request-sample/global-3d-scanner-market/

Projected Growth: Projections suggest that the 3D Scanner Market will continue its upward trajectory, with a projected value of US$ 1.75 billion by 2030. This growth is expected to be driven by technological advancements, increasing consumer demand, and expanding application areas.

Compound Annual Growth Rate (CAGR): The forecast period anticipates a Compound Annual Growth Rate (CAGR) of 6.9%, reflecting a steady and robust growth rate for the 3D Scanner Market over the coming years.
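For readers who want to check the growth figures, CAGR is the constant yearly rate that takes a starting value to an ending value over a number of compounding periods. The sketch below applies the standard formula to the two valuations quoted above. The report does not say how many periods it counts between 2023 and 2030, so the period counts used here are assumptions.

```python
def cagr(start: float, end: float, periods: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / periods) - 1."""
    if start <= 0 or periods <= 0:
        raise ValueError("start and periods must be positive")
    return (end / start) ** (1 / periods) - 1


START_2023 = 1.03  # US$ billion, from the report
END_2030 = 1.75    # US$ billion, from the report

# Assumption: the number of compounding periods is not stated in the report,
# so both seven and eight are shown.
for periods in (7, 8):
    rate = cagr(START_2023, END_2030, periods)
    print(f"{periods} periods: {rate * 100:.1f}%")
```

With these inputs, seven periods give roughly 7.9% and eight periods give roughly 6.9%, so the quoted 6.9% lines up with the eight-period reading.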

Technology Adoption:

In the 3D Scanner market, technology adoption revolves around the development and integration of advanced sensors, optics, and software algorithms to capture and process three-dimensional objects with high precision and accuracy. Various technologies such as laser scanning, structured light scanning, and photogrammetry are utilized in 3D scanners to capture surface geometry, texture, and color information. Additionally, advancements in sensor miniaturization, calibration techniques, and data processing enable portable and handheld 3D scanners to achieve high-resolution scanning in diverse environments and applications.

Application Diversity:

The 3D Scanner market serves diverse applications across various industries, including manufacturing, healthcare, architecture, arts, and entertainment. 3D scanners are used in manufacturing and engineering for quality control, reverse engineering, and dimensional inspection of parts and components. Moreover, 3D scanning technology is employed in healthcare for patient diagnosis, treatment planning, and customized medical device design. Additionally, 3D scanners find applications in architecture and construction for building documentation, heritage preservation, and virtual reality simulation. Furthermore, 3D scanners are utilized in the entertainment industry for character modeling, animation, and virtual set creation.

Consumer Preferences:

Consumer preferences in the 3D Scanner market are driven by factors such as scanning accuracy, speed, versatility, ease of use, and affordability. End-users prioritize 3D scanners that offer high-resolution scanning, precise geometric detail, and color accuracy for capturing objects of varying sizes and complexities. Additionally, consumers value user-friendly interfaces, intuitive software workflows, and compatibility with existing CAD/CAM software for seamless integration into their design and manufacturing processes. Moreover, affordability and cost-effectiveness are important considerations for consumers, especially small and medium-sized businesses, when selecting 3D scanning solutions.

Technological Advancements:

Technological advancements in the 3D Scanner market focus on improving scanning performance, resolution, and functionality through hardware and software innovations. Research efforts aim to develop next-generation sensors, such as time-of-flight (ToF) and multi-view stereo (MVS) cameras, with higher resolution, wider field of view, and enhanced depth sensing capabilities for improved 3D reconstruction. Additionally, advancements in software algorithms for point cloud processing, mesh generation, and texture mapping enable faster and more accurate reconstruction of scanned objects. Moreover, integration with artificial intelligence (AI) and machine learning techniques enhances feature recognition, noise reduction, and automatic alignment in 3D scanning workflows.

Market Competition:

The 3D Scanner market is characterized by intense competition among hardware manufacturers, software developers, and service providers, driven by factors such as scanning performance, product reliability, pricing, and customer support. Major players leverage their research and development capabilities, extensive product portfolios, and global distribution networks to maintain market leadership and gain competitive advantage. Meanwhile, smaller players and startups differentiate themselves through specialized scanning solutions, niche applications, and targeted marketing strategies. Additionally, strategic partnerships, acquisitions, and collaborations are common strategies for companies to expand market reach and enhance product offerings in the competitive 3D Scanner market.

Environmental Considerations:

Environmental considerations are increasingly important in the 3D Scanner market, with stakeholders focusing on energy efficiency, resource conservation, and sustainable manufacturing practices. Manufacturers strive to develop energy-efficient scanning devices with low power consumption and eco-friendly materials to minimize environmental impact during production and operation. Additionally, efforts are made to optimize packaging materials, reduce waste generation, and implement recycling programs to promote sustainable consumption and disposal practices in the 3D Scanner industry. Furthermore, initiatives such as product life cycle assessment (LCA) and eco-design principles guide product development processes to minimize the carbon and wider environmental footprint throughout the product lifecycle.

Regional Dynamics: Different regions may exhibit varying growth rates and adoption patterns influenced by factors such as consumer preferences, technological infrastructure and regulatory frameworks.

Key players in the industry include:

FARO Technologies, Inc.

Hexagon AB (Leica Geosystems)

Creaform (Ametek Inc.)

Trimble Inc.

Nikon Metrology NV

Artec 3D

GOM GmbH

Zeiss Group

Perceptron, Inc.

Keyence Corporation

Konica Minolta, Inc.

Shining 3D

Topcon Corporation

ShapeGrabber Inc.

SMARTTECH 3D

The research report provides a comprehensive analysis of the 3D Scanner Market, offering insights into current trends, market dynamics and future prospects. It explores key factors driving growth, challenges faced by the industry, and potential opportunities for market players.

For more information and to access a complimentary sample report, visit Link to Sample Report: https://gqresearch.com/request-sample/global-3d-scanner-market/

About GQ Research:

GQ Research is a company that is creating cutting edge, futuristic and informative reports in many different areas. Some of the most common areas where we generate reports are industry reports, country reports, company reports and everything in between.

Contact:

Jessica Joyal

+1 (614) 602 2897 | +919284395731

Website - https://gqresearch.com/

0 notes

Photo

Contorted Compositions V2.0 webinar: Grasshopper + 3ds Max with @amir.h.fakhrghasemi. If you can't attend at the live date and time, you will still get access to the recording by registering. Register now at https://designmorphine.com/education/contorted-compositions-v2-0 or via the link in bio. Contorted Compositions V2.0 introduces a set of form-finding methods based on the minimal surface concept and its combination with polygon modeling methods in Rhinoceros, Grasshopper, and 3ds Max. Simulation of forces and data management tools in both 3ds Max and Grasshopper form another part of this teaching webinar. By combining the modeling tools of these two programs, we attain the necessary freedom to customize mesh typology. (at 𝓣𝓱𝒆 𝓤𝒏𝒊𝓿𝒆𝒓𝒔𝒆) https://www.instagram.com/p/CkBbx9WpF9B/?igshid=NGJjMDIxMWI=

#rhino3d#grasshopper3d#parametricart#3dmodeling#architecture#parametric#3dsmax#autodesk#math#building

7 notes
