#rokoko studio

Text

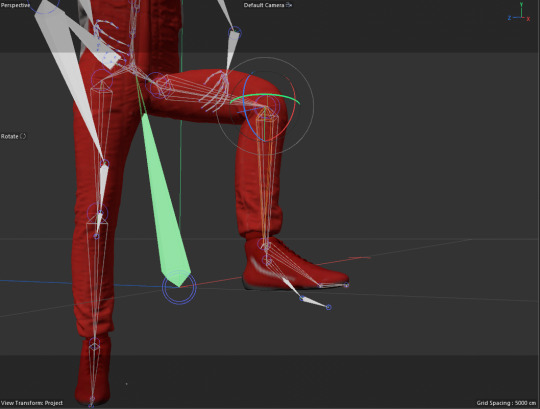

Another WIP animation before I get started on a major project. There's something rather therapeutic about animating in Blender in 3D compared with drawing everything in a 2D app. Well, I have 40 years of content related to 2D animation, so it's finally time I switched things up after four decades. I have also been storyboarding and writing a lot for the Neo-Fureza Series behind the scenes, so something is in the works for the 20th Anniversary of the series.

#artists on tumblr#original concept#original content#original character#furry art#original story#furry oc#3d modeling#3d art#3d render#blender#lowpoly#animation#rokoko studio#motion capture#furry animation#blender3d#blender 2.8

0 notes

Text

Motion Capture Services at AAS Apple Arts Studios! ( www.appleartsstudios.com ) From precise Body Tracking and Retargeting to seamless Facial Retargeting, we've got it all covered. Whether it's from Vicon, OptiTrack, Qualisys, or markerless systems like Movella Entertainment | Xsens, Rokoko and #PerceptionNeuron, we specialize in turning motion data into captivating experiences. Reach out for top-notch services in gaming, entertainment, and beyond! #MotionCapture #mocap #Gaming #Entertainment #AASStudio

#3D Animation#animation#MotionCapture#mocap#Gaming#Entertainment#AASStudio#outsourcing#gamedev#optitrack

3 notes


Text

Problems and Looking for an Alternative Software

The Kinect skeleton was a good start: it uses data from an old game system, and the tracking worked amazingly well. However, there were issues with the player model/mesh skin. Additionally, there are very limited resources to help with problem solving.

This has led me to pursue a different form of motion capture.

Research:

Rokoko Studio

"The software allows you to record, edit and stream character animations captured with Rokoko mocap. Rokoko is completely free for up to 3 creators and offers unlimited cloud storage and real-time preview of your captured data.

However, you cannot use Rokoko’s mocap tools independently of Rokoko Studio. This tool captures raw mocap data from Rokoko Face Capture, Smartgloves, and the Smartsuit Pro. This raw data is then processed by the Studio using advanced inverse kinetic models and filters to create a high-quality animation."

OptiTrack Motive

"OptiTrack Motive enhances skeleton tracking in difficult-to-track situations. Motive runs more than 10 skeletons or 300+ rigid bodies at real-time speed.

The motion capture software comes with positional accuracy of +/- 0.2 mm, latency of < 9 ms, and rotational accuracy of +/- 0.1 deg. Motive processes OptiTrack camera data and delivers global 3D positions, marker IDs, rotational data, and hyper-accurate skeletal tracking."

Autodesk MotionBuilder

"Autodesk’s MotionBuilder is a 3D character animation software that lets you use a wide range of tools to adjust the nuances of the model’s movement with maximum speed, precision, and consistency.

This live motion capture software offers an interactive and customizable display to help you capture live motion, edit, and replay complex character animations. This software is widely used by professionals who want to work with traditional keyframe animation or motion capture."

I chose Rokoko Studio because it is free and also streamlined: the animation and the skeletal rig are both built within Rokoko, so I don't need any extra add-ons or software for it to work.

0 notes

Text

studioblack77.etsy.com

Rococo MARIE Portrait of a Woman, Painting, PRINTABLE Art | Studio Black 77 MARIE

0 notes

Text

The history of interior design

Interior design is a field with a long and rich history. From antiquity to the present day, people have always sought to create beautiful and functional spaces in which to live, work and rest. In this article we look at how interior design has evolved over the centuries.

Antiquity

The beginnings of interior design can be found in ancient civilizations such as Egypt, Greece and Rome. In those times interiors were designed mainly for elites such as pharaohs, emperors and wealthy aristocrats. Interiors were richly decorated with marble, gold, colourful mosaics and frescoes. Furniture was carved and ornamented, and fabrics were richly embroidered.

Middle Ages

In the Middle Ages, interior design was closely tied to the architecture of churches and castles. Interiors were dark and austere, with heavy wooden furniture and dark fabrics. Decoration was mainly religious, with stained glass, sculptures and paintings depicting biblical scenes.

Renaissance

During the Renaissance, interior design began to develop towards more harmonious and proportional spaces. Italian architects and designers, such as Leonardo da Vinci and Michelangelo, introduced new ideas and techniques that influenced interior design. Interiors were now more open, with larger windows, bright colours and symmetrical layouts.

Baroque and Rococo

In the Baroque and Rococo periods, interior design became even more elaborate and extravagant. Interiors were full of rich fabrics, gilded ornaments, crystal chandeliers and carved furniture. Designers sought to create a "wow" effect to impress their clients.

19th and 20th centuries

In the 19th century, interior design began to focus more on functionality and comfort. The Industrial Revolution brought new materials and technologies that influenced interior design. Furniture became more practical and available to a wider public. The 20th century saw the emergence of various styles, such as modernism, art deco, minimalism and postmodernism, that influenced interior design.

The present day

Today interior design is highly varied. Designers aim to create spaces that reflect the individuality and lifestyle of their clients. Modern interiors are often minimalist, using simple lines, natural materials and neutral colours. Interiors are now designed with sustainability and energy use in mind.

In summary, interior design has a long and fascinating history. From antiquity to the present day, people have always sought to create beautiful and functional spaces. The evolution of interior design reflects changing trends, technologies and social needs. Through interior design we can create spaces that are both aesthetic and practical, meeting our needs and preferences.

#architektura wnętrz#aranżacja wnętrz#architekt wnętrz#projektanci wnętrz#home & lifestyle#architecture#białystok

0 notes

Text

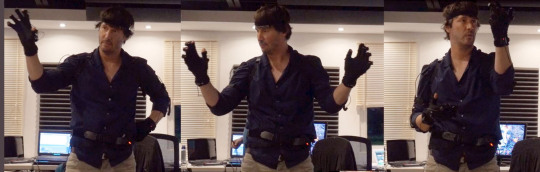

(6/7) Days of Making

As a man without the patience to be an animator, I've been fawning over MoCap constantly over the last year. After having no luck finding the motion capture studio that "allegedly" exists on my school's campus, I decided to take matters into my own hands with the help of AI.

I set up a simple stage for myself in my bedroom, and recorded from my own webcam directly into Rokoko's smart mocap data generator. This process took somewhere in the realm of 5 minutes, and the data was a bit less than satisfactory, but for the sake of a demo it would do.

I borrowed a rigged model from Mixamo to pair with my new animation, but unfortunately I couldn't get the two to match up exactly which ultimately hindered the process. Going forward, I'll study up on how to properly match models to rigged figures, and I'll begin testing with MoveOne, whose closed beta I was recently approved for.
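One possible cause of a mismatch like this is that the capture data and the rigged model name their bones differently, so rotations never reach the right joints. A minimal sketch of a bone-name remapping step follows. The source bone names, the `mixamorig:` target names, and the `retarget_frame` helper are all assumptions for illustration, not taken from either tool; the real names should be read from the actual files.

```python
# Hypothetical mapping from capture bone names to target rig bone names.
# Both sides are assumed for illustration; check the real skeletons.
SOURCE_TO_TARGET = {
    "Hips": "mixamorig:Hips",
    "Spine": "mixamorig:Spine",
    "LeftUpLeg": "mixamorig:LeftUpLeg",
    "RightUpLeg": "mixamorig:RightUpLeg",
}


def retarget_frame(frame, bone_map):
    """Rename the bones of one frame.

    frame maps a source bone name to an (x, y, z) rotation in degrees.
    Returns the renamed frame and a sorted list of source bones that
    have no entry in bone_map, so gaps are visible instead of silent.
    """
    remapped = {}
    unmapped = []
    for bone, rotation in frame.items():
        target = bone_map.get(bone)
        if target is None:
            unmapped.append(bone)
        else:
            remapped[target] = rotation
    return remapped, sorted(unmapped)


frame = {
    "Hips": (0.0, 90.0, 0.0),
    "Spine": (5.0, 0.0, 0.0),
    "Tail": (0.0, 0.0, 10.0),  # no counterpart on the target rig
}
remapped, unmapped = retarget_frame(frame, SOURCE_TO_TARGET)
print(remapped)
print(unmapped)
```

Reporting the unmapped bones separately makes it easier to see which joints still need a manual match before the animation is applied.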

0 notes

Text

Motion capture suit

#Motion capture suit pro#

Virtual events consist either of a 3D world entirely or include live-action footage. Hybrid motion capture systems are rare but are gaining popularity as more and more studios turn to virtual production and virtual events.

Previs for production (live-action or 3D).

Costs start at around $2k for a basic setup and rise to around $25k depending on the software and mocap tool provider. The setup is quick and can be learned by a mid-level 3D artist in a few hours. The motion capture can be acted by the animator themselves or by a dedicated actor. Inertial motion capture uses internal gyroscopes placed on the body to translate movement into animation data.
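As a rough illustration of how a gyroscope reading could become animation data, the sketch below integrates angular velocity samples about a single axis into a joint angle over time. The function name, the sample values, and the 100 Hz sample rate are assumptions for illustration; real suits typically combine several sensors to limit drift, which this sketch does not attempt.

```python
def integrate_gyro(angular_velocities, dt, initial_angle=0.0):
    """Integrate angular velocity samples (deg/s) about one axis.

    dt is the time between samples in seconds. Returns the joint angle
    (deg) after each sample, using simple rectangular integration.
    """
    angles = []
    angle = initial_angle
    for omega in angular_velocities:
        angle += omega * dt
        angles.append(angle)
    return angles


# Assumed input: a joint turning at a constant 90 deg/s, sampled at 100 Hz.
samples = [90.0] * 10
angles = integrate_gyro(samples, dt=0.01)
print(angles[-1])  # angle after 0.1 s of motion
```

Because each step adds the previous error to the next, small sensor biases accumulate over time, which is one reason pure integration is only a starting point.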


Motion capture was traditionally only available to studios with big budgets. But in the last ten years, all that's changed. With the cost of technology decreasing (while the range of options increases), there are plenty of setups and combinations that you can use to record human motion. We'll cover it all in this article, from Hollywood solutions like Optitrack and Vicon to popular, affordable setups! But first, let's jump into what types of mocap systems best suit your 3D animation needs.

What type of motion capture should you choose? There are two main types of motion capture technology: inertial and optical. An optical setup is usually used on high-budget productions (like Lord of the Rings) that require highly detailed animation data. Inertial systems, in comparison, are a newer technology and are far cheaper and more convenient to use. It's important to note that all motion capture systems need their proprietary software to operate. This can have a surprising impact on usability and price.

Shutter Authority VFX indie Artist Raghav Anil Kumar using the Rokoko Smartsuit Pro and Smartgloves to wirelessly stream animation data on his custom characters - Read his story here.

1 note


Video

instagram

“51 by MBGCORE ⚔👽 🔊Sound design by @bruits.studio 👾

Track : Jeff Heim - Drum run

Endorphin Dynamics

Special thanks to Léa & @chrys_d_974 for vocals 🔊

Custom mocap made with @hellorokoko

Smartsuit 💎”

From the Instagram account of Marc-Aurélien

Very cool job...

#Marc-Aurélien#51#area 51#MBGCORE#Bruits. Studio#Jeff Heim#Drum run#Rokoko#Christophe Crescence#science fiction#sci fi#Naruto#naruto run#animation#animated short film#unilad#military base#digital art#helicopter#explosion#attack#invasion#Instagram

72 notes


Photo

Virtual Reality to counter Covid, and more. The Scuola Futuro Lavoro course for working in the virtual world

Milan, 11 January 2021 - Virtual reality has enormous potential in healthcare settings, already burdened by the pandemic, as well as in education, the arts, sports and many kinds of training, and it is also proving useful against pandemic-related stress. Aware that immersive technologies are gaining an ever firmer foothold in the market, Scuola Futuro Lavoro (based in Milan, at via Ondina Valla 2, corner of Viale Cassala 48/1) is launching its Virtual Reality course, which will run from 22 February to 30 June, from 6:30 pm to 9:30 pm. Made up of 200 hours divided into 6 distinct teaching units, the course takes into account the variety of possible applications and the opportunity to integrate VR (Virtual Reality) technologies into the most diverse professional settings.

Virtual reality is one of the technologies best able to meet new demands in how information is consumed and how content and entertainment are enjoyed: it stems from the idea of replicating reality as accurately as possible in visual, auditory, tactile and even olfactory terms, so that actions can be carried out in virtual space beyond physical, economic and safety limits.

Beyond the entertainment sector, more and more companies use these technologies to optimize time and costs in various areas: design, simulation, training, testing and technical support.

In the medical field, virtual reality makes it possible to offer remote healthcare to Covid patients, monitoring their return home from hospital. As part of special support programmes, supervised remotely by medical staff, VR applications help patients manage stress and anxiety better, minimizing the negative effects of isolation through the chance to relax and virtually visit extraordinary places.

Virtual reality is also an increasingly important tool for architects, designers, builders and salespeople. Sensory immersion lets users perceive proportions and dimensions realistically, achieving what is impossible with a 2D drawing.

The aim of the course is to teach participants the main techniques for building Virtual Reality applications. By studying the capabilities of the Unity 3D real-time engine, students will learn to configure a project, set up a scene, assemble assets from the Unity 3D store, design an interactive scene and export it, complete, for viewing in a headset. Part of the course is devoted to the basic concepts and techniques of Sound Design.

The course will also require a thorough knowledge of the technical specifications of VR devices and the ability to configure them for individual use. Alongside the Character Design module, a central part of the course is the unit devoted to exploring the capabilities of 3D modelling software, during which students will create a 3D model of a character and of a prop using the main sculpting and modelling tools in Blender.

To complete the first group of modules, students will try their hand at 3D animation using Motion Capture techniques and software, learning to apply to virtual characters the movements of people or objects captured in real time and immediately reproduced on screen using Rokoko MoCap equipment. Equipped with classrooms with highly professional tools and technology, Scuola Futuro Lavoro provides a space dedicated to Virtual Reality, as well as a Motion Capture suit and gloves and Oculus headsets.

2 notes


Link

By Mike Seymour

January 29, 2019

In the film, after a car accident kills the family of William "Will" Foster, played by Keanu Reeves, he will stop at nothing to bring them back, even if it means pitting himself against a government-controlled laboratory, a police task force and the physical laws of science.

On the verge of successfully transferring human consciousness into a computer, synthetic biologist and neuroscientist Will believes he can essentially resurrect his family. Will recruits fellow scientist Ed Whittle to help him secretly clone the bodies of his family and create replicas. Will eventually faces a "Sophie's choice" when it turns out that he can only bring three of his four deceased family members back to life. The film features what has been described by one critic as "cinema's weirdest not-to-be-missed robots, as it scarily, giddily threatens revenge, with a mere turn of its head."

The story and screenplay for Replicas was developed at Keanu Reeves and Stephen Hamel's production company Company Films.


Executive producer James Dodson got involved at the very beginning of the Replicas project two and a half years ago, around the time they decided to shoot the film in Puerto Rico.

Copyright: Entertainment Studios

The film was shot on the Alexa and ended up with over 400 visual effects shots. Keanu Reeves was heavily involved as both producer and actor. "He was interested in the whole movie. It was his movie. He developed the script. He was on it for two years before we shot it...and he was particularly interested in the AV stuff," comments Dodson, referring to the Minority Report-style sequences, which were complex to act and animate. "Basically we were trying to tell the story that Keanu's character, William Foster, was going into the human brain and analyzing the data in the brain and then transferring the data elegantly into a machine facsimile of a human - into a synthetic human brain, if you will," he adds. "And it's not like you can just go and buy that on TurboSquid."

The team worked with multiple vendors, spending months to try and find the right design solution. "Every piece of user interface was crafted, there was nothing off the shelf that you could use. Everything had to be designed specifically for this because it was such a specific story. Keanu was keenly interested in every step."

The user interface designs of the brain in AR were started by Chris Keifer, who does much of the screen graphics for Westworld. "He finally cracked how to represent it. We had 9 or 10 weeks with him, before Pacific Rim 2 stole him away from us!" jokes Dodson. "Then Josh Zacharias took over, I talked to him almost every day for a year on this project. He really elevated the work and nailed it. It had to be believable and it had to tell the story but not be too on the nose...it was just so important that the audience would buy it."

The animation was choreographed to the motion data gained from Reeves' hand movements, animated in Maya and rendered in RenderMan.

The team used iClone software in the production of Replicas in three different ways:

James Martin and John Martin led the previz team in visualising some of the action sequences such as the car crash in iClone.

There was also conceptual work around the AR infographics that Keanu's character manipulates, that needed the software for the interaction to work onscreen.

Finally in post-production, iClone was invaluable in animating the robot when it gains consciousness.

Reallusion makes the iClone software the team used. They are headquartered in Silicon Valley, with R&D centres in Taiwan, and offices in Germany and Japan. Reallusion is focused on the development of real-time cinematic virtual production and motion capture tools.

Reallusion provides users with character animation, facial and body mocap, and voice lipsync solutions for real-time filmmaking production. The company first launched iClone v1.0 at the end of 2005 and it found popularity within the Machinima community. The current version 7 was released in 2017. In addition to having its own real time engine, an easy-to-use avatar and facial morphing system with a voice lip-sync solution, the software interfaces with most mocap systems.

More recently the company has developed Motion LIVE, which is a body motion capture platform that connects motion data streams from multiple industry mocap devices, such as Xsens and Rokoko, to animate 3D character faces, hands and bodies.

Motion LIVE did not exist when Replicas was in production however. "That program didn't exist 18 months ago when we were doing Replicas, but we used a similar thing. It was just Perception Neurons, motion capture software (by Noitom), which then fed directly into iClone. Replicas did not need facial capture of the actors' expressions, only their movements."

In the robot sequence, the robot was 100% CGI. On set there was a mocap artist in a Perception Neuron suit. This live data was fed into iClone and this allowed for a 'slap comp' version of the robot to be seen on set for framing and blocking. The edit used this footage as post-viz. "The great thing was that once that sequence was cut, we could send those exact MoCap files down to Argentina where the artists could take the data and attach it to the final full resolution robot," explains Dodson. The export from iClone to Maya was done as an FBX file.

For the crash sequence, the team used iClone to work out how to film with the stunt team and physical effects crew. The stunt car was on "a crane cable with a gimbal and we worked out which shots we could chuck it in the air and pull it back like a pendulum to drop it, and which ones would need visual effects," Dodson recalls. One of the features the team used in the previz is the ability to use a single JPEG to create a representation of the actual actors on the 3D models used for the layout and previz. Each of the family members was modelled this way for the previz.

Dodson fully acknowledges that major big budget films use expensive and often proprietary technology for simulation and previz. However for him, the benefit is in how inexpensive and accessible tools, such as iClone, are able to, "democratize the entire process of motion capture and real time rendering - I am just addicted to previsualisation, and the level of quality and detail we can do for a few hundred dollars and still have studio quality results is incredible."

source

13 notes



Text

List of 10 Best Interior Designers in India (2021)

In the old days, people used to be casual about the interiors of their homes and offices. But times have changed. People want an excellent atmosphere, whether it's a restaurant, hotel, office, or their home. Interior designers are the ones who solve this problem. But people still get confused about whom to pick among so many interior designers. So we have put together a list of the 10 Best Interior Designers in India (2021).

10 Best Interior Designers in India

1. KK Technocrats

This is an interior design company headquartered in Delhi. It provides services such as general contracting, design and construction, and MEP services. The company's main customers include Ibibo, TATA, Quikr and many other big brands. The company is registered in 8 states: Delhi, Haryana, UP, Karnataka, Chandigarh, Gujarat, Maharashtra and Uttarakhand.

2. Carafina

Carafina is a Bangalore-based company that specializes in home interior design. It has designed the interiors of many villas, apartments and bungalows. The company is run by 3 women.

3. Sobha Limited

Sobha Limited is one of the best interior designers in India. The company has done extensive work in the design of corporate and residential spaces. Sobha Limited is also one of the most expensive interior design companies in India.

3. Ocean

Ocean is one of the Best Interior Designers in India. Ocean is based in Chennai. This company was founded in 1996. It works in corporate, home interiors, MEP and Façade works. Ocean has done work for Google, ALF, Table Space, Olympia, etc.

4. Allied Studios

Allied Studios is one of the best choices for cafe interior design, although the company also provides other services, such as turnkey furniture and home furnishings. It has worked on many bars and cafes, such as ROADIES CAFE, Pumpkin N PIE, RAMADA GURGAON and SHAKE SQUARE.

5. Rokoko

Rokoko is one of the most affordable interior designers in India, but this does not mean that it is a low-quality company. It is an excellent and affordable company that even middle-class customers can choose. What makes this company unique is that you can choose and customize a design online on its website.

6. Gauri Khan Designs

Almost everyone knows about this company because of its owner. Gauri Khan, the wife of Shah Rukh Khan, is the founder of this company. Gauri Khan Designs has done interior design for many celebrities, such as Karan Johar, Ranbir Kapoor and Alia Bhatt. One unique strength of this company is that it offers 3D designs that help clients visualize the result better.

7. De Panache

One of the Luxurious Interior Designers in Bangalore. De Panache is a famous company in Bangalore. It has worked for Prestige, Sobha Indraprastha and many Villas.

8. Designqube

One of the best interior design companies in India. Designqube is headquartered in Bangalore and operates in southern India. It has worked with some famous brands such as ITC, Murugappa, Lord of the Drinks and so on.

9. Synergy

Synergy is one of the Best Interior Designers in India. Synergy was founded in 1998 by Vipin Chutani and Swati Garg. It is also awarded the Best Corporate Interiors Company Delhi-NCR by NDTV twice. Synergy has a long chain of clients like:

10. K2India

This is one of the Best Interior Designers in India. Sunita Kohli is a Padma Shri holder. She received this award in 1992. She has also refurbished many historical buildings, such as Rashtrapati Bhawan, the Prime Minister's Office and Official Residence, Hyderabad House, the bungalows of the Indira Gandhi Memorial Museum and the British Council Building and Library.

0 notes

Link

While looking up mocap data, I found this site, 'Rokoko Studio', which includes a library of it.

It also mentions that Maya 2020 has a native integration plugin for motion capture, but I'll look into that more, as I'm not able to get Maya 2020 on my home computer for some reason.

0 notes

Text

New Post has been published on SONGWRITER NEWS

New Post has been published on https://songwriternews.co.uk/2020/02/lil-nas-x-panini-official-video/

Lil Nas X - Panini (Official Video)

youtube

Official video for “Panini” by Lil Nas X.

Listen & Download ‘7’ the EP by Lil Nas X out now: https://smarturl.it/lilnasx7ep

Amazon – https://smarturl.it/lilnasx7ep/az

Apple Music – https://smarturl.it/lilnasx7ep/applemusic

iTunes – https://smarturl.it/lilnasx7ep/itunes

Spotify – https://smarturl.it/lilnasx7ep/spotify

YouTube Music – https://smarturl.it/lilnasx7ep/youtubemusic

Director: Mike Diva

Story By: Lil Nas X

Starring: Skai Jackson

Executive Producer: Josh Shadid

Associate Producer: Marissa Alanis

Producers: Johnny Hernandez & Joseph Barbalaco

Commissioner/Producer: Saul Levitz

Production Company: Lord Danger

Director of Photography: Aaron Grasso

1st AC: Keith Jones

Steadicam: Ari Robbins

Gaffer: Michael Schilling

BBE: Ardy Fatehi

Key Grip: Fritz Weber

BBG: Nico Ortiz

Production Designer: Johnny Love

Art Director: Michelle Hall

Wardrobe Stylist: Justin Lynn

Costume Design/Fabrication: Lucid Studios

Skai Jackson’s Hair & Make-Up: Alexander Armand & Brandy Allen

Lil Nas X Hair & Make-Up: Diana Escamilla & Jennifer Urquizo

Choreographer: Phil Wright

Motion Capture Specialist: Don Allen Stevenson III

VFX/Post Production Company: Chimney Group

Executive Producer: Ron Moon

Post Producers: Alex Ramos

Creative Director: Frederick Ross

VFX/Post Production Company: Post Lord (Lord Danger)

Executive Producer: Josh Shadid

Post Producer: Shyam Sengupta & Marissa Alanis

Creative Director: Mike Diva

Post Supervisor: Andrew Henry

Editors: Mike Diva & Andrew Henry

3D Artist: Cornel Swodoba

3D Artist: Calvin Serrano

VFX Artist: Octane Jesus (David Ariew)

VFX Artist: Ryan Talbot

GFX Artist: Cody Vondell

GFX Artist: Silica (Eric Castro)

GFX Artist: Olney Atwell

GFX Artist: Tom Arena

Compositor: Ethan Chancer

Compositor: Josh Johnson

Special Thanks: Rokoko Electronics for providing Motion Capture Suit

Follow Lil Nas X

Facebook – https://www.facebook.com/LilNasX/

Instagram – https://www.instagram.com/lilnasx/

Twitter – https://twitter.com/LilNasX

https://www.lilnasx.com

#LilNasX #7EP #Panini

source

0 notes

Photo

Rokoko: affordable facial motion capture

Rokoko has announced a new facial motion capture solution that requires no markers and promises to run on iOS, Android and desktop.

It works through a camera-agnostic add-on inside Rokoko Studio; according to the developers, all you need to get started is a simple calibration.

Besides recording and exporting data, you will also be able to watch a real-time preview in Rokoko Studio and stream the data live to Unreal, Unity and MotionBuilder.

To hold the phone in place, a head-mounted rig will also be available for purchase, and for studios using Rokoko's Smartsuit Pro body capture solution, face capture can be synchronized so that body and face are captured together.

The launch is planned for June 2019, but you can sign up for early access and get a month free. After the free period, the service is offered at $39 per month. The initial release will support iOS (specifically iPhone X), with Android and PC versions planned to follow soon.

Learn more on the Rokoko website.


https://is.gd/vC7qZ9

0 notes

Text

Digital influencers and the dollars that follow them

Sunny Dhillon Contributor

Sunny Dhillon is a partner at Signia Venture Partners.


Animated characters are as old as human storytelling itself, dating back thousands of years to cave drawings that depict animals in motion. It was really in the last century, however — a period bookended by the first animated short film in 1908 and Pixar’s success with computer animation with Toy Story from 1995 onward — that animation leapt forward. Fundamentally, this period of great innovation sought to make it easier to create an animated story for an audience to passively consume in a curated medium, such as a feature-length film.

Our current century could be set for even greater advances in the art and science of bringing characters to life. Digital influencers — virtual or animated humans that live natively on social media — will be central to that undertaking. Digital influencers don’t merely represent the penetration of cartoon characters into yet another medium, much as they sprang from newspaper strips to TV and the multiplex. Rather, digital humans on social media represent the first instance in which fictional entities act in the same plane of communication as you and I — regular people — do. Imagine if stories about Mickey Mouse were told over a telephone or in personalized letters to fans. That’s the kind of jump we’re talking about.

Social media is a new storytelling medium, much as film was a century ago. As with film then, we have yet to transmit virtual characters to this new medium in a sticky way.

Which isn’t to say that there aren’t digital characters living their lives on social channels right now. The pioneers have arrived: Lil’ Miquela, Astro, Bermuda and Shudu are prominent examples. But they are still only notable for their novelty, not yet their ubiquity. They represent the output of old animation techniques applied to a new medium. This TechCrunch article did a great job describing the current digital influencer landscape.

More investors are betting on virtual influencers like Lil Miquela

So why haven’t animated characters taken off on social media platforms? It’s largely an issue of scale — it’s expensive and time-consuming to create animated characters and to depict their adventures. One 2017 estimate stated that a 60 to 90-second animation took about 6 weeks to create. An episode of animated TV takes between 1–3 months to produce, typically with large teams in South Korea doing much of the animation legwork. That pace simply doesn’t work in a medium that calls for new original content multiple times a day.
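To make the scale gap concrete, here is a back-of-the-envelope calculation using the 60 to 90 seconds per 6 weeks estimate quoted above. The posting schedule (three 15-second clips per day) and the 7-day production week are assumptions chosen only for illustration.

```python
def seconds_per_day(clip_seconds, production_weeks, days_per_week=7):
    """Average seconds of finished animation produced per calendar day."""
    return clip_seconds / (production_weeks * days_per_week)


# From the estimate in the text: a 60 to 90-second clip takes about 6 weeks.
low_output = seconds_per_day(60, 6)
high_output = seconds_per_day(90, 6)

# Assumed social media schedule (hypothetical): three 15-second clips a day.
required_per_day = 3 * 15

# How many times faster production would need to be to keep up.
best_case_gap = required_per_day / high_output
worst_case_gap = required_per_day / low_output
print(best_case_gap, worst_case_gap)
```

Under these assumptions, a single pipeline would need to run roughly 21 to 31.5 times faster to keep up with the schedule.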

Yet the technical piece of the puzzle is falling into place, which is primarily what I want to talk about today. Traditionally, virtual characters were created by a team of experts — not scalable — in the following way:

Create a 3D model

Texture the model and add additional materials

Rig the 3D model skeleton

Animate the 3D model

Introduce character into desired scene

Today, there are generally three types of virtual avatar: realistic high-resolution CGI avatars, stylized CGI avatars and manipulated video avatars. Each has its strengths and pitfalls, and the fast-approaching world of scaled digital influencers will likely incorporate aspects of all three.

The digital influencers mentioned above are all high-resolution CGI avatars. It’s unsurprising that this tech has breathed life into the most prominent digital influencers so far — this type of avatar offers the most creative latitude and photorealism. You can create an original character and have her carry out varied activities.

The process for their creation borrows most from the old-school CGI pipeline described above, though accelerated through the use of tools like Daz3D for animation, Moka Studio for rigging, and Rokoko for motion capture. It’s old wine in new bottles. Naturally, it shares the same bottlenecks as the old-school CGI pipeline: creating characters in this way consumes a lot of time and expertise.

Though researchers, like Ari Shapiro at the University of Southern California Institute for Creative Technologies, are currently working on ways to automate the creation of high-resolution CGI avatars, that bottleneck remains the obstacle for digital influencers entering the mainstream.

Stylized CGI avatars, on the other hand, have entered the mainstream. If you have an iPhone or use Snapchat, chances are you have one. Apple, Samsung, Pinscreen, Loom.ai, Embody Digital, Genies and Expressive.ai are just some of the companies playing in this space. These avatars, while likely to spread ubiquitously à la Bitmoji before them, are limited in scope.

While they extend the ability to create an animated character to anyone who uses an associated app, that creation and personalization are circumscribed: the avatar’s range is too limited for the purposes of this article. It’s not so much a technology for creating new digital humans as it is a tool for injecting a visual shorthand for someone into the digital world. You’ll use it to embellish your Snapchat game, but storytellers will be unlikely to use these avatars to create a spiritual successor to Mickey Mouse and Buzz Lightyear (though they will be a big advertising and brand partnership opportunity nonetheless).

Video manipulation — you probably know it as deepfakes — is another piece of tech that is speeding virtual or fictional characters into the mainstream. As the name implies, however, it’s more about warping reality to create something new. Anyone who has seen Nicolas Cage’s striking features dropped onto Amy Adams’ body in a Superman film will understand what I’m talking about.

Open-source packages like this one allow almost anyone to create a deepfake (with some technical know-how; your grandma probably hasn’t replaced her time-honored Bingo sessions with some casual deepfaking). It’s principally used by hobbyists, though recently we’ve seen startups like Synthesia crop up with business use cases. You can use deepfake tech for mimicry, but we haven’t yet seen it used for creating original characters. It shares some of the democratizing aspects of stylized CGI avatars, and there are likely many creative applications for the tech that simply haven’t been realized yet.

While none of these technology stacks on their own currently enable digital humans at scale, when combined they may make up the wardrobe that takes us into Narnia. Video manipulation, for example, could be used to scale realistic high-res characters like Lil’ Miquela through accelerating the creation of new stories and tableaux for her to inhabit. Nearly all of the most famous animated characters have been stylized, and I wouldn’t bet against social media’s Snow White being stylized too. What is clear is that the technology to create digital influencers at scale is nearing a tipping point. When we hit that tipping point, these creations will transform entertainment and storytelling.
