#agent 8b

Text

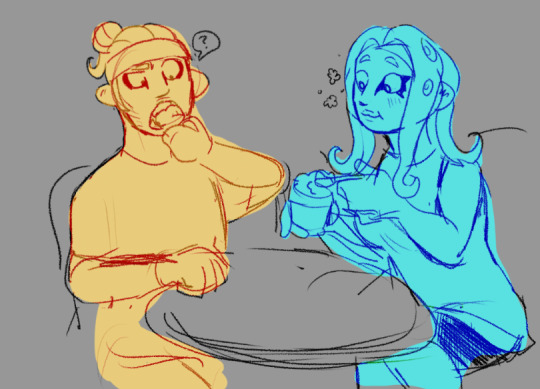

COMIC: Meeting the Pearl drone 💗

#art#splatoon#splatoon 3#splatoon side order#side order splatoon#pearl splatoon#side order#pearl bot#agent 8#agent 8b#cinnamoroll#nate#Agents#pls let us have a pearl bot plushie someday. i'm obsessed with her

226 notes

Text

Old doodle: Ami leaving for the mission

74 notes

Note

Some agents have separate side businesses where they offer editorial critiques on query letters, opening pages, and the like. I know it's against code of conduct rules for a writer to hire an agent for editing and then submit that SAME work to that agent for possible representation, but does that same rule apply for future works? Like, if I hire Agent Agatha to critique my manuscript, then Agent Agatha would never be able to represent me with any book -- or just not with the one she critiqued?

To be clear, that rule in the AALA Canon of Ethics is not meant to thwart you -- it's meant to protect you. We can't accept money from our clients for "reading fees" or editorial services -- reading our clients' work and providing whatever editorial services it needs is part of our job, and we don't get paid for that unless the book sells. And, while we CAN offer freelance editorial services to non-clients for a fee, it has to be clear that paying the fee doesn't make us your agent, that representation in no possible way hinges on paying a fee, etc. So IF an agent takes on freelance editorial clients / "book coach" clients, etc., they have to have a very clear line in the sand keeping those separate from agency clients.

Let's take a closer look at the relevant parts of the Canon of Ethics; I've bolded them. You'll see that there is in fact a proviso about representing something we've worked on editorially:

"8. A) The Association believes that the practice of literary agents charging clients or potential clients for reading and evaluating literary works (including query letters, outlines, proposals, and partial or complete manuscripts) is subject to serious abuse that reflects adversely on our profession. Members should be primarily engaged in selling or supporting the selling of rights and services on behalf of their clients, i.e. members should not be primarily pursing freelance editorial work and misrepresenting themselves as literary agents or support staff of a literary agency. Members may not charge any reading fees for evaluating work for possible representation. However, members may provide editorial services in exchange for a fee to authors who are not clients, provided members adhere to the following provisions:

I) Members who render such services must make clear to the author in writing in advance that the rendering of such services does not indicate or imply that the member will represent the author as a literary agent and must provide to the author at the outset a copy of this Paragraph (8A-8B) of the AALA Canon of Ethics; and

II) if during or after the rendering of such services the member agrees to represent the author, the member must then return in full all payments received for such services prior to submitting the work and waive any further payments for such services for that author; and

III) to help prevent confusion, abuse, and to further separate paid editorial services from literary representation, at no time may members respond to an author who approaches them only for literary representation by instead suggesting or directing the author to pay for editorial services by the member or by anyone else financially associated with the member or member’s agency. Members must provide paid editorial services only to authors who have approached them directly for such services.

9 notes

Text

Unicorn Warriors Ep 6...

Hmm not entirely a lot.

Interesting twist I guess. Sooo I’m guessing Melinda is in full control? And Emma’s just a passenger. That being said... was she in control on the boat ep? Or just partially...

Also.. when she asked if Edred knew about her mother. Granted that’s not so much a twist. I’m more curious as to why Melinda doesn’t remember whether he did or didn’t. Personally I don’t think she did. She’s fighting Emma this much to avoid talking about her trauma. But anyways part of me is like “If you’re in full control now don’t you remember?”.

Or is this just to show that they’re all not “fully aware” or whatever. Do actually like that depending on how much Melinda was in “charge” or whatever, she’s also pissed at Edred about the incident on the boat.

Edred being a bit of a dummy. I do admit that scene where he felt bad for her, the ears drooping was adorable. Also curious about what this big secret is, that Alfie’s referring to. Hmmm guess he went to the past rather than the future (also Fang cameo XD).

Just gonna guess and assume like Melinda, Edred has family issues 8B.

And then we have the evil/I guess Fox lady?(kitsune)... I’m gonna be blunt I don’t think that was the main evil or for that matter that their battle is over. Feels literally too early for that. Also gonna assume that robot tycoon is the one who betrayed her.

Again though, why? What’s he to gain from this? Either the evil or the fox lady will return. Or it’ll show the fox was just an agent. I dunno. Either way curious for next ep.

11 notes

Text

Agent 8

Deep sea metro (Restricted Sublevel -8b)

[EXPUNGED]

8: This should be the place the signal is coming from…

DJ Hyperfresh: Correct 8, with your new suit you should be able to interface with the ancient controls of this large unidentified object.

M.C Princess: Yeah 8! Find that signal and figure out what’s going on with the square!

8: Copy that!

*DOOR? OPEN*

*TERMINAL BOOTS*

DJ Hyperfresh: 8 I’ve lost your tracking signal, can you report what you see?

8: It’s a small room with cylinders lining the walls, they remind me of test tubes.

M.C Princess: Even though the signal is strong, Marina was able to find that the broadcaster is a small podium looking thing. Smash it and get out of there!

DJ Hyperfresh: Pearlie! We need to try and preserve this object so we can research it for later use. 8 don’t smash anything!

8: Podium located. What are my next steps?

DJ Hyperfresh: There should be a small data chip. Remove that and, according to these schematics, that should stop the signal.

*SOUND OF EJECTED STORAGE CHIP*

8: Done! Now let’s-

*RUMBLING*

???:This place will become your home.

*EXPLOSION*

???:This place will become your tomb.

2 notes

Text

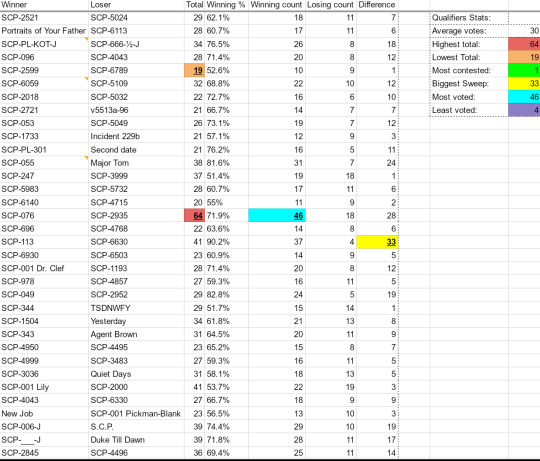

Round 1 is upon us! Here's a bracket and overview of the qualifiers.

Left half will be going up today (in just a minute!) and right half tomorrow.

Everyone that won has been filtered into the bracket based on (approximately) how many votes they got, so it's still somewhat seeded. The tiebreakers were slotted into slots 62-64. I immediately spotted a mistake with not properly resizing Dr. Clef's Proposal on the left side, but I'm not going to bother to correct that! Get ready for these matchups.

Qualifiers Data Analysis / Losers Acknowledgement

34 contestants moved on to round 1 proper, and it already didn't go the way I expected! I expected the tales to be so much more popular than they were. I also expected more Propaganda, but I guess people are keeping those for the proper rounds.

It was a small Qualifiers round, as I expected, but there was still a surprising turnout for SCP-076, who got a massive 46 votes.

The biggest sweep was SCP-113, unsurprisingly, with 90.2% of the votes.

And an acknowledgement to the qualifiers that didn't move on, including: SCP-5024, SCP-6113, SCP-666-½-J, SCP-4043, SCP-6789, SCP-5109, SCP-5032, Volume 55.13.A-96: Of the Retooling of Sector 92, Production Line 8b, And Other Matters, SCP-5049, Incident 239-B and Supplemental Report 239-B-192, Second Date, Major Tom, SCP-3999, SCP-5732, SCP-4715, SCP-2935, SCP-4768, SCP-6630, SCP-6503, SCP-1193, SCP-4857, SCP-2952, The Stars Do Not Wait For You, Yesterday, Agent Brown and the Case of the Missing Amulet, SCP-4495, SCP-3483, Quiet Days, SCP-2000, SCP-6330, SCP-001 Pickman-Blank, Stupendous Containment Procedures, Duke Till Dawn, SCP-4496.

Special shout outs to the tales that lost, because a few of them got double votes and should have been safe, if it wasn't for the weird number of match ups causing me to have to draw from the pool of safety. OTL my mistakes will be forgotten.

4 notes

Text

Some Cool Details About Llama 3

New Post has been published on https://thedigitalinsider.com/some-cool-details-about-llama-3/

Solid performance, new tokenizer, fairly optimal training and other details about Meta AI’s new model.

Created Using Ideogram

Next Week in The Sequence:

Edge 389: In our series about autonomous agents, we discuss the concept of large action models (LAMs). We review the LAM research pioneered by the team from Rabbit and we dive into the MetaGPT framework for multi-agent systems.

Edge 390: We dive into Databricks’ new impressive model: DBRX.

📝 Editorial: Some Cool Details About Llama 3

I had an editorial prepared for this week’s newsletter, but then Meta AI released Llama 3! Such are the times we live in. Generative AI is evolving on a weekly basis, and Llama 3 is one of the most anticipated releases of the past few months.

Since the debut of the original version, Llama has become one of the foundational blocks of the open source generative AI space. I prefer to use the term “open models,” given that these releases are not completely open source, but that’s just my preference.

The release of Llama 3 builds on incredible momentum within the open model ecosystem and brings its own innovations. The 8B and 70B versions of Llama 3 are available, with a 400B version currently being trained.

The Llama 3 architecture is based on a decoder-only model and includes a new, highly optimized tokenizer with a 128K-token vocabulary. This is quite notable, given that, with few exceptions, most large language models simply reuse the same tokenizers. The new tokenizer leads to major performance gains. Another area of improvement in the architecture is grouped query attention, which Llama 2 used only in its larger models and which Llama 3 adopts across both the 8B and 70B sizes. Grouped query attention helps improve inference performance by letting groups of query heads share the same key and value heads, which shrinks the key-value cache that has to be kept in memory during generation. Additionally, the context window has increased.
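To make the inference benefit of grouped query attention concrete, here is a rough back-of-the-envelope sketch of key-value cache size. The configuration numbers (32 layers, 32 query heads, 8 key-value heads, head dimension 128, 8,192 cached tokens, 16-bit values) are illustrative assumptions for an 8B-class model and do not come from this editorial; the function names are hypothetical.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Approximate KV-cache size for one sequence.

    The factor of 2 counts one tensor for keys and one for values per layer.
    """
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


def kv_head_for(query_head, n_query_heads, n_kv_heads):
    """Index of the shared key/value head that a given query head reads from."""
    group_size = n_query_heads // n_kv_heads
    return query_head // group_size


# Assumed, illustrative configuration (not taken from the editorial).
N_LAYERS, N_QUERY_HEADS, N_KV_HEADS, HEAD_DIM, SEQ_LEN = 32, 32, 8, 128, 8192

# Standard multi-head attention: every query head has its own K/V head.
mha_bytes = kv_cache_bytes(N_LAYERS, N_QUERY_HEADS, HEAD_DIM, SEQ_LEN)
# Grouped query attention: 4 query heads share each K/V head.
gqa_bytes = kv_cache_bytes(N_LAYERS, N_KV_HEADS, HEAD_DIM, SEQ_LEN)

print(f"multi-head cache:    {mha_bytes / 2**30:.1f} GiB")
print(f"grouped-query cache: {gqa_bytes / 2**30:.1f} GiB")
print("query heads 0-7 map to KV heads:",
      [kv_head_for(h, N_QUERY_HEADS, N_KV_HEADS) for h in range(8)])
```

Under these assumptions the cache shrinks by the ratio of query heads to key-value heads, a factor of four here, which is where the memory and bandwidth savings at inference time would come from.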

Training is one area in which Llama 3 drastically improves over its predecessors. The model was trained on 15 trillion tokens, a very large corpus for an 8B-parameter model, which speaks to the level of optimization Meta achieved in this release. It’s interesting to note that only about 5% of the training corpus consisted of non-English tokens. The training infrastructure utilized 16,000 GPUs, achieving a throughput of over 400 TFLOPS per GPU, which is nothing short of monumental.
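A quick arithmetic sketch puts the training figures above in perspective. It uses only the numbers quoted in this editorial, with two assumptions: that the 400 TFLOPS figure is per GPU, and that the non-English share is a flat 5%.

```python
TRAINING_TOKENS = 15 * 10**12   # 15 trillion tokens
PARAMETERS_8B = 8 * 10**9       # the 8B model
NON_ENGLISH_PERCENT = 5         # assumption: a flat 5% share
GPUS = 16_000
TFLOPS_PER_GPU = 400            # assumption: the quoted throughput is per GPU

# Training tokens seen per model parameter for the 8B model.
tokens_per_parameter = TRAINING_TOKENS / PARAMETERS_8B

# Size of the non-English slice of the corpus, in tokens.
non_english_tokens = TRAINING_TOKENS * NON_ENGLISH_PERCENT // 100

# Aggregate cluster throughput: 1 exaFLOPS = 10**6 TFLOPS.
aggregate_exaflops = GPUS * TFLOPS_PER_GPU / 10**6

print(f"tokens per parameter (8B): {tokens_per_parameter:,.0f}")
print(f"non-English tokens: {non_english_tokens / 10**12:.2f} trillion")
print(f"aggregate throughput: {aggregate_exaflops:.1f} exaFLOPS")
```

Even a 5% slice of a 15-trillion-token corpus is three quarters of a trillion tokens, so the non-English portion is small only in relative terms.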

Llama 3 is a very welcome addition to the open model generative AI stack. The initial benchmark results are quite impressive, and the 400B version could rival GPT-4. Distribution is one area where Meta excelled in this release, making Llama 3 available on all major machine learning platforms. It’s been just a few hours, and we are already seeing open source innovations using Llama 3. The momentum in the generative AI open models space definitely continues, even if it forced me to rewrite the entire editorial. 😊

🔎 ML Research

VASA-1

Microsoft Research published a paper detailing VASA-1, a framework for generating talking faces from static images and audio clips. The model is able to generate facial gestures such as head or lip movements in a very expressive way —> Read more.

Zamba

Zyphra published a paper introducing Zamba, a 7B SSM model. Zamba introduces a new architecture that combines Mamba blocks with attention layers which leads to high performance in training and inference with lower computational resources —> Read more.

MEGALODON

AI researchers from Meta and Carnegie Mellon University published a paper introducing MEGALODON, a new architecture that can scale to virtually unlimited context windows. As its name indicates, MEGALODON is based on the MEGA architecture with an improved gated attention mechanism —> Read more.

SAMMO

Microsoft Research published a paper detailing Structure-Aware Multi-objective Metaprompt Optimization (SAMMO), a framework for prompt optimization. The framework is able to optimize prompts for scenarios such as RAG or instruction tuning —> Read more.

Infini-Attention

Google Research published a paper introducing Infini-Attention, a method to scale the context window in transformer architectures to virtually unlimited levels. The method adds a compressive memory to the attention layer, which allows long-term and masked local attention to be built into a single transformer block —> Read more.

AI Agents Ethics

Google DeepMind published a paper discussing ethical considerations in AI assistants. The paper covers aspects such as alignment, safety, and misuse —> Read more.

🤖 Cool AI Tech Releases

Llama 3

Meta AI introduced the highly anticipated Llama 3 model —> Read more.

Stable Diffusion 3

Stability AI launched the APIs for Stable Diffusion 3 as part of its developer platform —> Read more.

Reka Core

Reka, an AI startup built by former DeepMind engineers, announced its Reka Core multimodal models —> Read more.

OpenEQA

Meta AI released OpenEQA, a benchmark for visual language models in physical environments —> Read more.

Gemini Cookbook

Google open sourced the Gemini Cookbook, a series of examples for interacting with the Gemini API —> Read more.

🛠 Real World ML

AI Privacy at Slack

Slack discusses the architecture enabling privacy capabilities in its AI platform —> Read more.

📡AI Radar

Andreessen Horowitz raised $7.2 billion in new funds with a strong focus on AI.

Meta AI announced its new AI assistant based on Llama 3.

The Linux Foundation announced the Open Platform for Enterprise AI to foment enterprise AI collaboration.

Amazon Bedrock now supports Claude 3 models.

Boston Dynamics unveiled a new and impressive version of its Atlas robot.

Limitless, formerly Rewind, launched its AI-powered Pendant device.

Brave unveiled a new AI answer engine optimized for privacy.

Paraform, an AI platform that connects startups to recruiters, raised $3.6 million.

Poe unveiled multi-bot chat capabilities.

Thomson Reuters will expand its AI Co Counsel platform to other industries.

BigPanda unveiled expanded context analysis capabilities to its AIOps platform.

Evolution Equity Partners closed a $1.1 billion fund focused on AI and cybersecurity.

Salesforce rolled out Slack AI to its paying customers.

Stability AI announced that it is laying off 10% of its staff.

Dataminr announced ReGenAI focused on analyzing multidimensional data events.

#000#agent#agents#ai#AI AGENTS#ai assistant#ai platform#AI-powered#Amazon#Analysis#API#APIs#architecture#attention#attention mechanism#audio#benchmark#billion#bot#Carnegie Mellon University#claude#claude 3#Collaboration#cybersecurity#data#databricks#dbrx#DeepMind#details#Developer

0 notes

Text

Tax Return Filing Agents in Mohali | Amrit Accounting & Taxation Services . Looking for reliable GST services in Mohali? Look no further! Amrit Accounting & Taxation Services offers expert assistance for all your GST needs. From registration to filing returns, our team ensures compliance and maximizes your benefits. Contact us today at +919915311984 or visit us at E331, Phase 8B, Industrial Area, Sector 74, Sahibzada Ajit Singh Nagar, Punjab 160057.

0 notes

Text

"DAN DAWN" Episode 17 - DODON DODON DODON DODON! ~ (in Japanese)

reference:Amazon

URL:https://www.amazon.co.jp/%E3%81%A0%E3%82%93%E3%83%89%E3%83%BC%E3%83%B3-1-%E3%83%A2%E3%83%BC%E3%83%8B%E3%83%B3%E3%82%B0-KC-%E4%B8%89%E5%AD%90/dp/4065332974

I read episode 17 of "DAN DAWN".

*Heavy spoilers ahead.

It seems that the Bogo secret imperial edict was safely delivered to the Mito clan.

However, the Mito Clan was too scared of Ii Naosuke to keep it.

The Satsuma clan camp, troubled, planned to overthrow Ii Naosuke in cooperation with the Mito clan.

Meanwhile, a captured anti-Ii faction member is being tortured in Edo.

He is said to be a scholar named Umeda Unpin.

I immediately looked him up on Wikipedia. It is true that he was captured, tortured, and died of illness in prison. Apparently he never talked, even after being whipped. He seems to have had a strong spirit.

However, Taka-sama easily discovered the hiding place of the list of activists and documents that Umeda Unpin had in his possession.

Now that the Shogunate has all the materials it needs to arrest an important person in the Satsuma clan, Gessho and Saigodon are in a desperate situation!

…… is the main story.

In a side story, the son of the double agent Inumaru is taken in by Kawaji.

Inumaru dares to hand him over to the enemy side, judging that he will likely be killed if he keeps him with him.

However, I am amazed that Taka-sama does not find out that he has been a double agent for such a long time. I wonder if he has already found out. I think there is a theory that he is being kept in the dark. Taka-sama's smile in the middle of the story was also very meaningful. He talked about his bond with Inumaru, but they were that close.

And this Inumaru's son, Taro, seems to have some great potential.

His father will probably be found out sooner or later, and I'm sure Taro will show his ability when that happens. I wonder if he will become Kawaji's confidant. But Kawaji is the one who pushed his dad into a corner, so I'm sure he's going to have a lot of resentment towards him.

And so, in the next issue, a great big man is going to make an appearance.

Who is the great big man of this era? I'm curious.

I'm looking forward to it.

0 notes

Text

Embark on unforgettable journeys with Samaira Travels, your best travel agency in Delhi, and the ultimate Tour and Travel Agency in Rohini. Our dedicated team ensures a seamless travel experience, making us the best in the industry. As your trusted Air Ticketing Agent, we prioritize convenience and competitive prices for your flights. Samaira Travels excels in providing efficient Passport Services and Visa Services, ensuring hassle-free travel documentation. Additionally, we stand out as a top Hotel Booking Agent, offering a wide range of accommodations tailored to your preferences. Whether you're planning a leisurely vacation or a business trip, Samaira Travels is your go-to for the best tour and travel services. Trust us for excellence in passport services, air ticketing, visa assistance, and hotel bookings, ensuring your journeys are truly remarkable.

1 note

Text

my half-sanitized agent Nate finally finds someone like him 🩷

(also I'm OBSESSED with the Pearl drone)

#art#splatoon#splatoon 3#splatoon oc#side order#splatoon side order#side order splatoon#dedf1sh#agent 8#acht splatoon#acht#nate#agent 8b#i have a feeling this will be Nate-centric and OE is Vanessa-centric#Agents

186 notes

Text

I wanted to make lil doodles from the splatfest during it, but like I've been playing too much Splatoon and doing lil side stuff rather than drawing 😔😭

But these were some I did around the time!

Then here's one I did today 💀

OH FUCK I FORGOT TO DRAW THE REST OF RILEY'S TANK TOP UM IMAGINE THERE'S ONE LMAO

#splatoon#doodles#im gonna draw some more today#inkling#octoling#(3) riley#(8) ami#(4) sol#agent 4#agent 8#splat3#agent 3#(8b) suke#i put a b there to signify ami's brother#splatfest

64 notes

Text

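Describing AI's potential, with predictions for the short-to-medium-term future

The article grew out of one user's lament that, after OpenAI upgraded ChatGPT to support PDF uploads, quite a few startups that made their living off that feature were suddenly in crisis. Another user, Ate a pie, replied, and that short reply is well worth reading too:

“I don't know why anyone is surprised.

Here is OpenAI's product strategy for the next two years:

+ You will be able to upload anything to ChatGPT

+ You will be able to link any external service, such as Gmail or Slack

+ ChatGPT will have persistent memory, so you no longer need multiple chats unless you want them (*all conversation happens in one window, so ChatGPT can build a complete picture of your preferences)

+ ChatGPT will have a consistent, user-customizable personality, including political bias

+ ChatGPT will support text, voice, and images (charts and video still in development?)

+ ChatGPT will keep getting faster until it feels like a real person (response time under 50 ms)

+ Hallucinations and non-factual errors will decline rapidly

+ As self-moderation improves, refusals to answer will decrease”

The author of this piece, Rob Phillips, then replied in detail.

As an engineer who worked with the Siri team, the author describes the future potential of AI assistants with real expertise: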

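“OpenAI is building an entirely new kind of computer, not just a large language model (LLM) for middleware/front ends. The key pieces they need to get there include:

1. A persistent grasp of user preferences:

1a. The biggest breakthrough for AI assistants has always been deeply understanding the user's most specific needs.

1b. This is the computer's "whoa" moment.

1c. We did this in 2016 on the Viv project, where our AI learned your preferences from every service you used through Viv and combined them with context, such as what kind of flowers you told us your mom likes.

1d. This also needs to include access to your personal information in order to infer preferences.

2. External real-time data:

2a. 50% of an LLM's usefulness comes from base training and RLHF (Reinforcement Learning from Human Feedback) fine-tuning, but its usefulness will grow greatly as the data available to it is extended with external sources.

2b. Zapier, Airbyte, and the like will help, but expect deep integration with third-party apps/data.

2c. "Chat with PDF" is just the tip of the iceberg. Far more is possible.

3. Applications running on virtual machines:

3a. Context windows are limited, so AI providers will continue to benefit from running tasks directly in Python or Node/Deno virtual environments, letting them consume large amounts of data the way today's computers do.

3b. Today these are temporary working environments for data analysts, but over time they will become a new kind of Dropbox, where your data persists long term for further processing or cross-file inference/insight.

4. Agent task/flow planning:

4a. Without intent, planning cannot happen. Understanding intent has always been the holy grail (of application development), and LLMs have finally helped us unlock capabilities that we spent years trying to solve with NLP techniques at Viv.

4b. Once intent is accurate, planning can begin. Building an agent planner takes great care and requires extensive integration with user preferences, third-party datasets, awareness of compute capabilities, and more.

4c. Most of Viv's real magic was the dynamic planner/mixer, which pulled all of this data and these APIs together and generated workflows and dynamic UI so that ordinary consumers could execute them.

5. An expert-level (composable) app store:

5a. Apple initially made the mistake of building a closed app store; later they realized that by opening it up they could profit from compounding creativity.

5b. Although OpenAI says it is focused only on ChatGPT, it will eventually redefine the boundaries of that focus and end up helping to create a wave of specialized assistants (agents).

5c. Builders will be able to combine multiple tools into specialized workflows.

5d. Over time, AI will also be able to compose these apps (agents) automatically, learning from earlier builders.

6. Persistent, context-aware memory:

6a. Embeddings help, but they lack fundamental pieces such as context switching, conversation pivots, summarization, enrichment, and so on.

6b. Today most LLM cost comes from prompts, but with embeddings of history and persistence, plus caching of inference, this will unlock long-term memory that points to key themes, topics, emotions, tone, and so on.

6c. Core memory is only the beginning. We still need all the rich information our brains call up when we think of a past sunset, a breakup, a scientific insight, or the sensitive context of the people we interact with.

7. Long-polling tasks:

7a. "Agent" is a contested word, but part of the intent is to have tasks that can plan and complete themselves over whatever time horizon is required.

For example, "Notify me when flights from Montreal to Hawaii drop below $500."

This will require coordinating compute across API providers and virtual environments in the cloud.

8. Dynamic user interfaces:

8a. Chat is not the final, be-all interface. Apps have conveniences like buttons, date pickers, and images because they simplify and clarify actions.

8b. AI will be a copilot, but to be one it needs to adapt to the interface that works best for a specific user. Future user interfaces will be personalized, and because optimization demands it, they will be dynamic.

9. API and tool composition:

9a. Expect AI to generate custom "apps" in the future, where we can build our own workflows and compose APIs without waiting for some big startup to take on the project.

9b. Fewer apps and startups will be needed to generate front ends; AI will get better at combining a set of tools and APIs and, for a fee, generating the front end that best meets the user's needs.

10. Assistant-to-assistant interaction:

10a. There will be countless assistants in the future, each helping humans and other assistants work toward some higher(-capability) intent.

10b. At the same time, assistants will need to learn to interface with one another through text, APIs, file systems, and other modalities used by agents, startups, and humans alike, as they become more deeply embedded in our world.

11. A plugin/tool store:

11a. Specialized assistants can only be achieved by composing tools, APIs, prompts, data, preferences, and so on.

11b. The current plugin store is still at an early stage, so expect much more work to come; many plugins will be absorbed in-house as they become more mission-critical.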

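This is just 10 minutes of brainstorming (on my part); there is undoubtedly more, including internet search and scraping, community (for intent, building, RLHF, etc.), dynamic API generators and connectors, cost optimization, tool building, and ingesting information through different input devices such as glasses or headsets.

If you think it is too late to get into AI, know that the above is only about 25% of what is actually needed, and more ideas are on the way as we iterate and get more creative.

We are building these pieces at @FastlaneAI, based on a somewhat different understanding: OpenAI will never be the best at everything. So we want to let you use the best AI in the world, no matter who built it (it could even be you!).”

Original post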
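The long-polling task in item 7 of the reply (the flight-price alert) can be sketched as a simple polling loop. This is only a minimal illustration under stated assumptions: `notify_when_below`, `fetch_price`, and `notify` are hypothetical names, the price source is stubbed out rather than calling any real flight API, and a production agent would also need the cross-provider coordination the reply mentions.

```python
import time
from typing import Callable, Optional


def notify_when_below(
    fetch_price: Callable[[], float],
    threshold: float,
    notify: Callable[[float], None],
    interval_seconds: float = 3600.0,
    max_checks: Optional[int] = None,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[float]:
    """Poll a price source until it drops below `threshold`, then notify once.

    Returns the price that triggered the notification, or None if
    `max_checks` polls pass without the price dropping low enough.
    """
    checks = 0
    while max_checks is None or checks < max_checks:
        price = fetch_price()
        checks += 1
        if price < threshold:
            notify(price)
            return price
        sleep(interval_seconds)  # wait before asking the provider again
    return None


# Usage with a stubbed price feed (hypothetical prices, no real API calls).
fake_prices = iter([720.0, 610.0, 480.0])
alerts = []
result = notify_when_below(
    fetch_price=lambda: next(fake_prices),
    threshold=500.0,
    notify=alerts.append,
    sleep=lambda seconds: None,  # skip real waiting in this demo
)
print(result, alerts)
```

Passing `sleep` and `fetch_price` in as parameters keeps the loop testable and leaves open where the task actually runs, for instance in a hosted virtual environment as the reply suggests.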

0 notes

Link

In artificial intelligence, the seamless fusion of textual and visual data has long been a complex challenge, particularly in crafting highly efficient digital agents. Adept AI’s recent launch of Fuyu-8B signifies a groundbreaking leap forward in si #AI #ML #Automation

0 notes

Text

Fuyu-8B: A multimodal architecture for AI agents

https://www.adept.ai/blog/fuyu-8b

0 notes