Text

Sound deadening walls

This past week we attempted to sound deaden a wall in our condo against neighbors with a noisy TV. We installed Rockwool Safe’n’Sound insulation, Green Glue Whisper Clips, hat track, and two layers of 5/8″ drywall separated by Green Glue isolation compound, and sealed all gaps with Green Glue noiseproofing sealant. It was a 4-day project, and we’re just about ready to paint at this point.

The sound reduction seems to have worked. It should improve over the next month as the Green Glue sets. We no longer hear the neighbors’ footsteps and haven’t heard any music or conversation since finishing the wall.

Link

Neat little project I worked on over the last two days. We needed to control power for lots of IoT devices, so I picked the Sonoff Basic IoT smart switch, modified and wrote custom firmware, and flashed it onto the switches.

We use our test track Wi-Fi network to hand out sticky addresses to the devices via DHCP and then tie these into our control API.

The result is the ability to turn on/off devices like cameras, LIDAR, facility lights, and holiday tree lights from our test facility software API.

Devices are cheap (~$5.50), and by New Year’s we should have around 100 in use throughout the facility. Latency is very low, since only the last, very short hop runs over Wi-Fi, and that connection is stable and strong.
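As a rough sketch of how sticky addresses can be tied into a control API: everything below (the device names, the IP addresses, the `/relay` path, and its `state` parameter) is hypothetical, since the custom firmware’s real interface isn’t described in this post. The point is only that a stable address per device lets the API look a switch up by name and build a request for it.

```python
from urllib.parse import urlencode

# Hypothetical registry: device name -> sticky DHCP address.
DEVICES = {
    "north_camera": "10.20.0.41",
    "tree_lights": "10.20.0.42",
}


def relay_url(device: str, on: bool) -> str:
    """Build the URL a control API might call to switch a device.

    The /relay path and the state parameter are assumptions made for
    illustration, not the firmware's actual interface.
    """
    address = DEVICES[device]  # raises KeyError for an unknown device
    query = urlencode({"state": "on" if on else "off"})
    return f"http://{address}/relay?{query}"


if __name__ == "__main__":
    print(relay_url("tree_lights", True))
```

Because the addresses never change, the registry can live in the facility software as plain configuration rather than being discovered at runtime.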

Photo

Refurbished a 4-way traffic signal for the Mcity test bench/office. Had to have a cube mount fabbed since Steelcase doesn’t sell one (no idea why not!). Waiting on 4 odd mil-spec connectors to get it hooked up to our Econolite traffic controller. Soon you’ll be able to use the Mcity test API to control the office light!

Text

TEAC PD-H303 disassembly/access to lens and cleaning.

Equipped with the service manual (link here), I attempted to fix a TEAC PD-H303 3-disc CD changer I purchased in supposedly working condition from eBay.

Spoiler: It wasn’t in working condition.

The unit was skipping on all discs around tracks 3-5. It would get stuck in a loop and be unable to progress. It happened at the same time into each disc, and it didn’t matter if the disc was new or not.

Fix below the fold.

Attempt 1:

Quick try with air from a compressor (with an air dryer) in the slot with the tray open. No luck, still skips. This usually takes care of things if it’s dust on a lens.

Attempt 2:

TEAC support suggests using a disc/lens cleaner. I pop mine in (note that it’s specifically labeled as fine for front-loading/carousel changers!) and give it a whirl, but no luck. Still skips in the same place on all discs.

Attempt 3:

Posts online indicate this is commonly caused by the rails/gears in this CD player needing lube, so the mechanism gets hung up when moving. I download the service manual for the PD-H303 (mirrored here) and notice it looks like I’d need to disassemble most of the unit to replace the drive mechanism or even lubricate it. There are no good guides online for this unit, so I decide I’m not up for a project on something sold as working and attempt to return it (see my prior post).

Attempt 4 (working!):

After eBay decided it liked the seller more and made a second final decision (they swear this time!) not to refund, I take it apart and find all the plastic gears pretty dry and caked with dust. So it was definitely not working when it was sent, and someone lied.

The lens is clean though, so +1 for the CD cleaning disc!

Sometimes the gears in units like this are made of a slippery plastic that you don’t need to lubricate, just clean. That is not the case in this unit; you’ll need to clean and lubricate them while you’re in here. The focus is on the gears that move the lens, but why not clean the rest?

Power the unit down. Next, take the case off.

4 x No. 2 Phillips, 2 on each side. These are the largest screws you will remove.

Then 4 from the back of the case, No. 1 Phillips. The top 2 are slightly longer than the side 2.

Lift off the lid (fairly straight up).

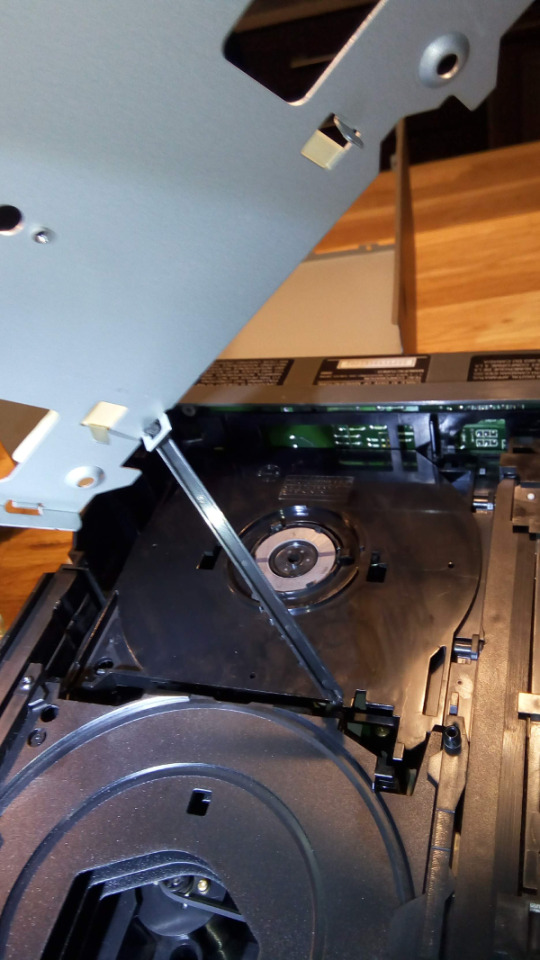

In the front you’ll see a metal plate covering the 3 trays, and a black plastic piece in the back covering the lens and drive mechanism for the optics. We need to get under this black piece. The easiest way is through the tray area.

Remove the LED used for checking disc presence on top of the metal plate. It’s held by 1 small No. 1 Phillips screw and moves to the side. Full size photo here.

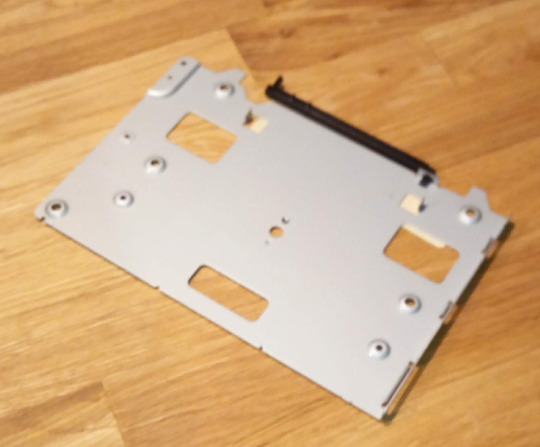

With that piece removed, take out the 5 black No. 1 Phillips screws from the metal tray/lid and slide it back slightly to remove it. It’s attached by a black arm that lifts the rear plastic part up when changing discs. Be careful on removal; it will pop out of the plastic tray side when you are at the right angle. Again, full size photo here.

Tray removed. Full Photo here.

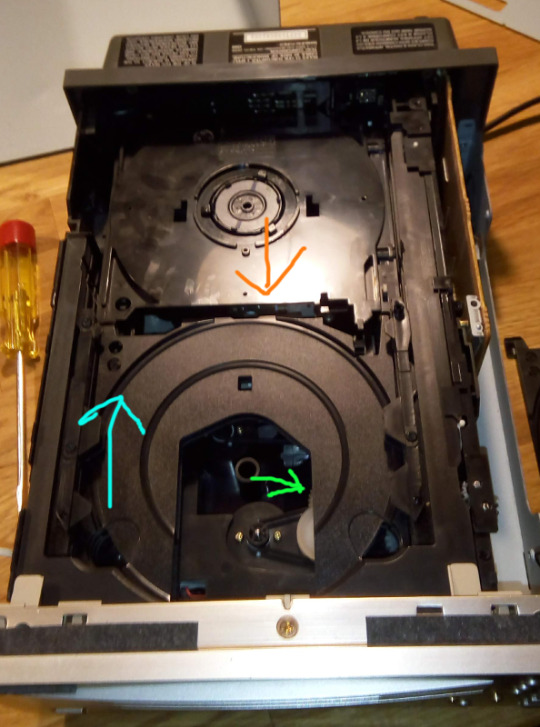

Now to get access to the CD head. I don’t see an easy way to remove the black plastic part from the top, but we just need to get under it a bit. So follow the image below and these steps.

Remove the top two trays. The top one should slide out pretty easily; just push it back (teal arrow). The second tray gets hung up on a peg to the right of the orange arrow, but with a bit of gentle lifting of the tray you can get it to clear and come out. It’s possible you need not remove this one, but it makes things easier. Keep track of the trays. In the top left corner of each are two circles that are punched differently on each tray. The CD player uses these to know which tray goes in which location. If you get it wrong, it will likely try to unload into the wrong slot.

With the top two trays out, find the gear at the green arrow. You’ll see 2 here; you want the one further back. Notice the position of the rear tray, since we want to put it back in the same spot (all the way down) when done. Turn this gear a few times and watch the rear tray lift up. Keep going until it is all the way up and you have access to the rear tray.

On the right side of the image, notice a metal clip that falls away from the housing when you remove the top board. Make sure this is in place during re-assembly.

With everything exposed, start cleaning.

You can move the lens forward by turning the gear shown in the picture below (teal arrow).

Spray down the unit with compressed air (oil free!), then use a Q-tip and rubbing alcohol to clean up any spots where dust is caked on. If your lens is dirty, you can now clean it pretty easily. Mine was already clean from the disc I used.

The gears on the right side of my unit looked pretty gross. Additionally, the rail and gears shown below (this is what they look like clean) had dirt stuck in the teeth and along the slide points on the rail bearing. The rail was my major problem, as the lens assembly would hang up slightly when moved by rotation of the drive gear. I suspect that when this happened, it would misread and start mis-tracking. Clean this rail well!

Next apply white lithium grease (a very tiny amount!) to the gears and rail (red arrows). Run them back and forth to make sure they move freely. If you see more dirt, clean and repeat.

When done, reassemble in reverse order. Put the lens at the back, lower the rear tray using the green arrow gear (photo above), and put the disc trays back in. Be sure they go back in the right order or you’ll be pulling it apart again. When putting on the metal top plate, ensure the gears on the right side of the unit are seated and that the metal clip that acts as the slide for tray changing is held under the right rear screw. Finally, install the outer case and test.

With it reassembled, try it out and enjoy. If you need to actually replace your drive unit for some reason, you’ll likely need to remove the top plastic plate. I couldn’t see a way to do this without removing the entire changer mechanism from the housing (looks like 60+ screws from service manual).

Text

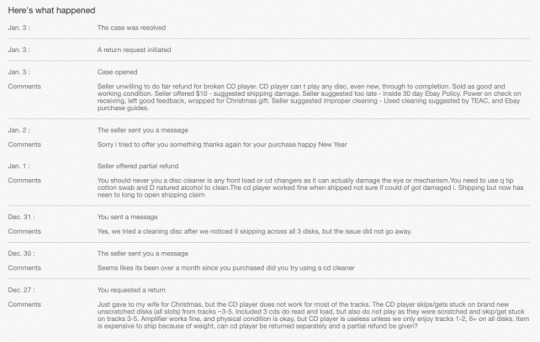

TEAC PD-H303 and a crappy eBay experience

The guide in the next post for reviving a TEAC PD-H303 3-disc CD changer started out of the crappy experience I had with an eBay seller, documented below.

I purchased an older TEAC amp and CD changer for my wife to replace the massive one we had in the family room. It was listed as in good working shape. It arrived, I unpacked it, did a quick “power on” test before my wife got home from work, then stuffed it away for the holidays. That night I left good feedback for the seller (my mistake!).

When we unpacked it for Christmas, we noticed it came loaded with 3 heavily scratched Christian CDs. Seems like a sub-optimal way to spread the word of God, but I guess whatever they think works? On its first run the CD player skips on all discs between tracks ~3-5, even with new discs. Not the ideal gift.

Attempt 1:

Quick try with air from a compressor (with an air dryer) in the slot with the tray open. No luck, still skips. This usually takes care of things.

Attempt 2:

TEAC support suggests using a disc/lens cleaner. I pop mine in (note that it’s specifically labeled as fine for front-loading/carousel changers!) and give it a whirl, but no luck. Still skips in the same place on all discs. Posts online indicate this is commonly caused by the rails/gears in this CD player needing lube, so the mechanism gets hung up when moving.

Attempt 3:

I download the service manual for the PD-H303 (mirrored here) and notice it looks like I’d need to disassemble most of the unit to replace the drive mechanism or even lubricate it. There are no good guides online for this unit, so I decide I’m not up for a project on something sold as working.

eBay saga:

I’m within 30 days of shipping, so I open a return with the seller on eBay. I’ve never had to return an item before, but I’ve heard it usually goes in favor of the sellers.

The conversation with the seller is below. You can witness them clearly not wanting to make the situation right (especially amusing after the Christian CDs). First they call out my use of a CD cleaner (remember how it was recommended by the manufacturer?). Then they suggest the damage must have happened in shipping, but since it had been so long they offer a $10 refund. I decline and ask eBay to step in. It was pretty clear to me that this wasn’t my fault and that what I received was not in good working condition.

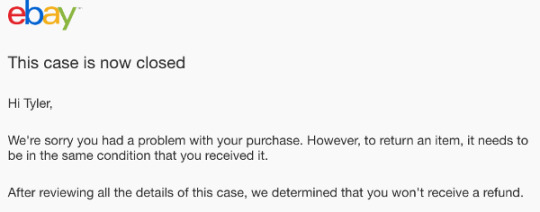

eBay’s first (not so) final decision:

In my favor, yay! Just return the item, eat the shipping cost of the amp/CD player up front, and they will refund it later. Not terrible. At least I can buy another. I review and print the shipping label and the packing slip, and figure I’ll box it up when I get home.

eBay’s second final decision (I guess for real this time) - 2 hours later:

I cleaned it, so I guess that means I can’t send it back? eBay, if you only saw the cruddy stuff your sellers have sent over the years. I usually open the crap outside the house and give it a good wipe before bringing it in.

I’d guess the seller called, complained to eBay support, fed them BS about my using the cleaning disc, and eBay changed its mind to keep the seller who brings them more profit happy.

Whatever, not ideal, but I can probably fix the changer if I have to. At worst it is already broken and now I can keep the amp.

Text from seller - 2 hours later:

“Please do not ship check case details thanks for your positive feedback and merry new years”

Don’t worry, I’m not going to waste more time dealing with you. Enjoy the positive feedback.

Result:

eBay - Not helpful.

Seller - Likely a less than great human.

Tyler - Owner of a nice amp, and a CD changer to fix or eat the cost on.

My next post will detail how you can fix the unit.

Spoiler: I was right, my cleaning disc didn’t damage it, seller was wrong and definitely sent a non-working item.

If my wife hadn’t wanted another cd changer, I would have just picked up a new TEAC AX-501 and a PD-301. Won’t someone please make a nice newer multi-disc transport?

Text

Git Variable Expansion with Filters/Hooks

I read tons of articles saying this can’t and shouldn’t be done. I understand why Git doesn’t support it natively, and I also understand that I could work around it in lots of other ways, but all the workaround solutions seemed just as much of a hack.

Code here: https://github.com/tsworman/GitHooksKeywordExpansion

Explanation below the break.

On checkout/pull/commit I want the last commit ID, user, and commit message for a file added to that file in my local repository at a specific location. I deploy these scripts to a server, and they do not get a version number, nor can I easily compile one in. I want to know which version of a script our deployment packages placed on the server simply by browsing the scripts on the production server.

PVCS offered this for years with its $LOG: tag. In PVCS’s case it actually puts a running history of the file in place of the $LOG tag just before check-in, so the file in the repository contains the messages for the changes checked into it. This makes the commit messages appear in diffs, which is not terribly useful, and it is one of the many reasons I get why Git does not support this function. SVN had similar functionality.

I was excited when I originally found Git’s ident attribute, hoping that its functionality could be used to achieve something similar, but the $Id$ in Git (a checksum of the file) is not helpful in this case. I want an easy way to reference the commit in the repository and see which version of the file is deployed.

I first experimented with Git filters using clean/smudge and got most of the replacement functionality working. It didn’t work when I pulled in someone else’s changes. It wasn’t working on cloning a repository. Worst of all the log data I wanted wasn’t actually available on disk until after the commit completed which is also after the smudge filter runs. The smudge/clean turned out to work perfectly when doing diffs, but not so great to handle the insertion of the expanded data.

Git hooks filled the gap where smudge/clean couldn’t work. The post-commit hook fires after a commit, and the post-merge hook fires after a pull/merge. From both I call the same scripts I made for the clean/smudge filters and apply the changes only to my local working tree. The clean filter takes care of removing this data before committing, so the files in the remote repository stay clean!
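To make the clean/smudge idea concrete, here is a minimal sketch in Python. It is not the code from the repository linked above: the `$Log$` marker format and the function names are assumptions chosen for illustration. The clean step collapses an expanded keyword back to the bare marker, and the smudge step expands the marker with details of the last commit.

```python
import re

# Assumed marker format: "$Log$" when clean, "$Log: <details> $" when expanded.
EXPANDED = re.compile(r"\$Log:[^$]*\$")
COLLAPSED = "$Log$"


def clean(text: str) -> str:
    """Collapse any expanded keyword back to the bare marker (pre-commit state)."""
    return EXPANDED.sub(COLLAPSED, text)


def smudge(text: str, commit_id: str, author: str, subject: str) -> str:
    """Expand the bare marker with details of the last commit for this file."""
    # A literal '$' inside the details would end the keyword early, so drop it.
    details = f"{commit_id} {author}: {subject}".replace("$", "")
    # Clean first so that re-running smudge replaces stale details.
    return clean(text).replace(COLLAPSED, f"$Log: {details} $")


if __name__ == "__main__":
    source = "# $Log$\necho hi\n"
    print(smudge(source, "abc1234", "tyler", "Fix path"), end="")
```

In a hook, the three details could come from something like `git log -1 --format="%h %an: %s" -- <file>`, run after the commit or merge has completed so the log data actually exists.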

Text

PVCS -> SVN -> Git

I didn’t find a concise guide on how to export a PVCS repository to Git. This is what I came up with. It uses Polarion’s SVNIMPORTER program available here:

https://polarion.plm.automation.siemens.com/products/svn/svn_importer

On Windows, after installing, edit the config.properties file in the main directory of the importer. This assumes that PVCS is installed and working already. My sample config file is listed at the end of this post.

Important things to know:

If the svnimporter process can’t connect to PVCS it will fail immediately but not state that it can’t connect to the authentication server. I believe the error it gives is -1.

If your path to the PVCS project is wrong you receive error -3 with no description. Your project path must end in a /. Your subproject must not have a / at the end or the start. It’s advised to not have spaces in your project paths as this causes additional errors. I’d suggest renaming those paths before you start. It’s only an issue with the configuration tool and how it handles escaping the paths (spoiler: it doesn’t nicely).

If you are logged into the domain already, you do not need to provide a password in config.properties. Providing one anyway causes the login to fail, again with -3 and no description as to why.
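Since the importer reports path mistakes only as a bare -3, it can help to check the two path settings before running it. The helper below is a hypothetical sketch, not part of svnimporter; it only encodes the rules listed above.

```python
from typing import List


def check_pvcs_paths(projectpath: str, subproject: str) -> List[str]:
    """Return a list of problems with the pvcs.projectpath / pvcs.subproject values."""
    problems = []
    if not projectpath.endswith("/"):
        problems.append("pvcs.projectpath must end in /")
    if subproject.startswith("/") or subproject.endswith("/"):
        problems.append("pvcs.subproject must not start or end with /")
    if " " in projectpath or " " in subproject:
        problems.append("avoid spaces in project paths")
    return problems


if __name__ == "__main__":
    # Values taken from the sample config at the end of this post.
    print(check_pvcs_paths("d:/PVCSREPO/PVCS_NAME/", "PROJECT/PATH/SUBPATHNAMEHERE"))
```

An empty list means the values pass these three checks; it says nothing about whether the project actually exists in PVCS.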

With the config file in place and the SVN server, Git, and svnimporter installed on a machine that has PVCS configured, you can run the following command to start the export.

c:\svnimporter\run.bat full config.properties

While this runs, you can run tail -f on the svnimporter.log file in another terminal to see its progress.

When it finishes you should see an export in c:\svn.

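Start svnserve on this directory so that we can query the repository. Note that -X is listen-once mode: svnserve handles one connection and then exits, so restart it before each of the commands below (or run it with -d instead).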

c:\Subversion\bin\svnserve.exe -r c:\SVN -X

Next get a listing of authors so that we can create a mapping file for the Git import

svn log svn://localhost/ --xml | grep author

You’ll want to create a mapping file with the following format:

schacon = Scott Chacon <schacon@example.com>

selse = Someo Nelse <selse@example.com>
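If grep isn’t handy, the author list can also be pulled out of the XML directly. This is a small sketch, assuming the output of `svn log --xml` has been captured as a string; the function name and the placeholder `example.com` domain are made up, and the real names and addresses still need to be filled in by hand.

```python
import xml.etree.ElementTree as ET


def authors_skeleton(log_xml: str, domain: str = "example.com") -> str:
    """Build a starter authors file from `svn log --xml` output.

    Each unique SVN login becomes one line in the mapping format shown above,
    with the login reused as a placeholder name and address.
    """
    root = ET.fromstring(log_xml)
    logins = sorted({a.text for a in root.iter("author") if a.text})
    return "\n".join(f"{login} = {login} <{login}@{domain}>" for login in logins)


if __name__ == "__main__":
    sample = """<log>
      <logentry revision="1"><author>schacon</author><msg>first</msg></logentry>
      <logentry revision="2"><author>selse</author><msg>second</msg></logentry>
      <logentry revision="3"><author>schacon</author><msg>third</msg></logentry>
    </log>"""
    print(authors_skeleton(sample))
```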

With that done create a directory for the git repository and change to that folder. In this example mine is named c:\temp. My authors names file is located at c:\svn\names.txt

cd c:\temp

git svn clone svn://localhost/ --no-metadata --stdlayout temp

git svn clone svn://localhost/ --no-metadata --authors-file=c:\SVN\names.txt --stdlayout temp2

Delete the trunk branch if SVN created it.

git branch -d trunk

And finally add an origin to be able to push this repository to a remote server.

git remote add origin git@somegitserver:Project/Projectfile.git

git push -u origin master

This is my config.properties file. Note that the parameter only_trunk is set to YES in this example as I didn’t want it to bring over branches in PVCS as branches in SVN/Git. My repository for PVCS is located at D:/PVCSREPO

# The source repository provider,

# either cvs,cvsrcs,pvcs,mks,cc,vss or st

srcprovider=pvcs

###########################################################################

# Import dump settings

#

# import_dump_into_svn - If enabled then after dump creation,

# it will be imported into svn repository via svnadmin

# (you must write proper svn autoimport options)

# If svn repository is not exist it will be created.

#

# existing-svnrepos - if enables and if import enabled by previous

# option then dump will be imported into svn repository ONLY IF REPOSITORY EXISTS

#

# clear_svn_parent_dir - if enabled and import enabled then application will delete all

# existing records in svn's parent directory before import dump to it.

# (affect for full dump only)

#

import_dump_into_svn=yes

#existing_svnrepos=yes

clear_svn_parent_dir=yes

# Option enables that feature:

# "Now the importer always import whole history(with possibilities like trunk-only etc.).

# It should be possible to import only current state as one revision. It is useful for

# the incremental import. In current situation we will never be able to import whole because

# of the size of the dump file."

# But see the new parameter "dump.file.sizelimit.mb" below.

#

# AFFECT FOR FULL DUMP CREATION ONLY!

use_only_last_revision_content=no

# VM systems often allow to set a description on a versioned file (one for all revisions).

# svnimporter is able to migrate it to a svn property. Since there is no predefined

# property key for this purpose in subversion, you can configure it here. If you do

# not give a property key name, the file description will not be migrated.

# NOTE: migration of properties is presently implemented only for PVCS

#

file_description_property_key=description

# If use_file_copy is set to yes, svnimporter uses SVN file copy operations for tags and

# branches. This raises the quality of the import dramatically. On the other hand,

# it works reliably for one-shot imports only. DO NOT SET THIS TO YES if you

# want to make incremental imports to synchronize repositories; otherwise the resulting

# dump files may not be importable to SVN.

#

# If set to no, every branch and tag operation is implemented as a simple file add operation.

# The origin of the tag or branch from the trunk is not recorded.

#

# Presently, this flag is evaluated only by the import from PVCS, CVS, MKS, ClearCase.

#

use_file_copy=yes

#####################################################################################

# FILE SETTINGS

#

# full.dump.file - file name pattern for saving full dump

# incr.dump.file - file name pattern for saving incremental dump

# incr.history.file - file for saving history for incremental dump

# list.files.to - destination file for saving scm's files list

# dump.file.sizelimit.mb - rough maximum dump file size in MegaBytes. See below.

#####################################################################################

full.dump.file=full_dump_%date%.txt

incr.dump.file=incr_dump_%date%.txt

incr.history.file=incr_history.txt

list.files.to=files_%date%.txt

# svnimporter checks the size of the current dump file before dump of each revision. If

# the size (in Megabytes) exceeds this limit, a new dump file is created. For large

# source repositories and/or small size limits, a run of svnimporter will generate a

# sequence of dump files. Their actual sizes will be slightly larger than the limit

# specified here.

#

# Take care not to set the limit too small. The dump file names are distinguished

# by their date part only which has a resolution of one second. Producing one dump file

# should therefore take longer than one second.

#

# Set the value to 0 to switch off this feature.

dump.file.sizelimit.mb=400

#######################################################################################

# SVN DUMP OPTIONS

#

# trunk_path - location of "trunk" folder. Can be "." if "only_trunk" option is enabled

# branches_path - location of "branches" folder

# tags_path - location of "tags" path

# svnimporter_user_name - name of service user, which create first revision etc.

# only_trunk - if enabled then convert only trunk of repository (skip tags and branches)

#######################################################################################

trunk_path=trunk

branches_path=branches

tags_path=tags

svnimporter_user_name=NOVA1313

only_trunk=yes

#######################################################################################

# SVN AUTOIMPORT OPTIONS

#

# svnadmin.executable - path to svnadmin executable

# svnadmin.repository_path - path to svn repository

# svnadmin.parent_dir - parent dir in svn repository for importing dump (must be created manually)

# svnadmin.tempdir - temp directory for svnadmin

# svnclient.executable - path to svn executable

# svnadmin.import_timeout - The value is length of time to wait in milliseconds,

# if this parameter is set and "svnadmin load" did not finished after specified length of time

# then it's process will be killed and svnimporter execution will be aborted.

# svnadmin.path_not_exist_signature - when importer checks repository path for existing

# it compares output of "svn ls" command with given string. If given string not found

# in command output and command return code is not null then importer cannot determine

# path exist or not, then exception will be thrown.

# If you runs importer not in English locale and log file contains similar as following error:

# EXCEPTION CAUGHT: org.polarion.svnimporter.svnprovider.SvnAdminException:

# error during execution 'svn ls' command: svn: URL 'file:///c:/tmp/ImportFromCvs/zzzzz' existiert nicht in dieser Revision

# then you should change signature to "existiert nicht in dieser Revision"

#######################################################################################

svnadmin.executable=C:/Subversion/bin/svnadmin.exe

svnadmin.repository_path=c:/SVN

svnadmin.parent_dir=.

svnadmin.tempdir=c:/tmp1

svnclient.executable=C:/Subversion/bin/svn.exe

svnadmin.verbose_exec=yes

#svnadmin.import_timeout=1800000

svnadmin.path_not_exist_signature=non-existent in that revision

#svnadmin.path_not_exist_signature=existiert nicht in dieser Revision

#################################################################################

########################## PVCS PROVIDER CONFIGURATION ##########################

#################################################################################

pvcs.class=org.polarion.svnimporter.pvcsprovider.PvcsProvider

pvcs.executable=C:/Program Files/Serena/vm/win32/bin/pcli.exe

pvcs.projectpath=d:/PVCSREPO/PVCS_NAME/

pvcs.subproject=PROJECT/PATH/SUBPATHNAMEHERE

pvcs.tempdir=c:/tmp2

pvcs.log.dateformat=MMM dd yyyy HH:mm:ss

pvcs.checkouttempdir=c:/tmp3

pvcs.log.datelocale=en

pvcs.log.encoding=Cp1251

#pvcs.log.datetimezone=Europe/Berlin

pvcs.verbose_exec=yes

pvcs.username=PVCSDOMAINUSER

#pvcs.password=NOTNEEDED

# If you set keep_vlogfile to "yes", and there already exist vlog.tmp and files.tmp

# files in the tempdir from a previous run of svnimporter, these files will not

# be regenerated. This is useful in some special situations, for example when you

# want to make sure you import the same state of the PVCS archive as before.

#

# pvcs.keep_vlogfile=yes

# If import_attributes=yes, svnimporter will try to map the PVCS archive attributes

# to SVN properties. However, it is usually better to use the auto-props feature of Subversion

# to set properties during the import.

#

# pvcs.import_attributes=yes

# The bug was occasionally observed during import of big projects

# (many files/revisions) and heavy PVCS load (probably).

# This bug is actually caused by invalid behaviour of pcli.exe get command,

# which sometimes returns invalid file content (wich less than 0.1% probability)

# if pvcs.validate_checkouts set to "yes" then importer will detect this bug

# and try to fix it.

# It may slow down import process because each checkout will be performed twice (at least)

pvcs.validate_checkouts=yes

#################################################################################

########################## LOG4J CONFIGURATION ##################################

#################################################################################

log4j.rootLogger=DEBUG, file

#log4j.logger.cz=DEBUG, file

#log4j.logger.cz=DEBUG, stdout

log4j.logger.historyLogger=DEBUG, historyFile

log4j.appender.file=org.apache.log4j.FileAppender

log4j.appender.file.File=svnimporter.log

log4j.appender.file.layout=org.apache.log4j.PatternLayout

log4j.appender.file.layout.ConversionPattern=%d{HH:mm:ss,SSS} [%t] %5p %c{1}:%L - %m%n

log4j.appender.historyFile=org.apache.log4j.FileAppender

log4j.appender.historyFile.File=history.log

log4j.appender.historyFile.layout=org.apache.log4j.PatternLayout

log4j.appender.historyFile.layout.ConversionPattern=%m%n

log4j.appender.stdout=org.apache.log4j.ConsoleAppender

log4j.appender.stdout.layout=org.apache.log4j.PatternLayout

log4j.appender.stdout.layout.ConversionPattern=%c{1}:%L %5p - %m%n

#################################################################################

############################### DEBUG OPTIONS ###################################

#################################################################################

#

# do not delete provider's temporary files

#

disable_cleanup=yes

# Create or playback a playback log file

# This playback log file, plus your config.properties file can be emailed

# to the developers who can play it back to identify specific problems with

# your migration attempts.

# record.mode = normal - normal operation, nothing will be recorded or played

# record - record all interactions with RCS system

# record-stubs - same as record, except actual contents of

# member files will be replaced with a single

# line containing the file name and revision number

# Makes a much smaller file that reveals much

# less sensitive information.

# playback - Play back previously recorded playback log file

# record.file = name of playback log file to record or playback

record.mode=normal

record.file=playback-log.txt

Text

Opening Excel, CSV and Tabbed files from C# .Net

I set out to find a way to extract data from tab/csv/xls/xlsx files from C#.Net. If possible I’d use SQL to query them.

The OLEDB providers make this possible. You’ll find lots of documentation online regarding the JET database engine; you can safely ignore it. Microsoft stopped producing JET, and no 64-bit version is available. Instead you want to find and install a Microsoft ACE database provider.

ACE comes in 2 flavors, a 32-bit and a 64-bit version. The processor architecture of your ACE install needs to match the processor architecture of your .Net project. If you are going to use this from within IIS on a 64-bit machine but want to run a 32-bit project, you need to make sure 32-bit workers are enabled in your application’s application pool, or it will not pick up the 32-bit version of the ACE driver. When it can’t find the right provider you’ll get the error: “The Microsoft.ACE.OLEDB.12.0 provider is not registered on the local machine”

Can I list the registered providers from .Net? I found no way to easily do that but it feels like something that should be easy to do. If you find a way, please let me know.

Note that Microsoft doesn’t seem to officially support using these for anything outside of desktop applications.

Here’s how I did it:

In my web.config add the following

<connectionStrings>

<add name="Excel03ConString" connectionString="Provider=Microsoft.ACE.OLEDB.12.0;Data Source={0};Extended Properties='Excel 8.0;HDR={1}'" />

<add name="Excel07ConString" connectionString="Provider=Microsoft.ACE.OLEDB.12.0;Data Source={0};Extended Properties='Excel 12.0 Xml;HDR={1}'" />

<add name="CSVConString" connectionString="Provider=Microsoft.ACE.OLEDB.12.0;Data Source={0};Extended Properties='Text;HDR={1};FMT=Delimited'" />

<add name="TABConString" connectionString="Provider=Microsoft.ACE.OLEDB.12.0;Data Source={0};Extended Properties='Text;HDR={1};FMT=TabDelimited'" />

</connectionStrings>

This is a centralized location to list your connection strings. The numbers enclosed in braces are placeholders that String.Format fills in at runtime.

Here is the .Net Code to use them:

    // Needs: using System; using System.Configuration; using System.Data;
    //        using System.Data.OleDb; using System.IO;
    // in_filePath is the full path to the file. in_isHDR is assumed to be "Yes" or "No"
    // (whether the first row holds column names).
    string conStr = "";
    string filePath = "";
    string fileExtension = Path.GetExtension(in_filePath).ToLower();
    switch (fileExtension)
    {
        case ".csv": // CSV. To support tab, either change the tabs to commas or use a schema.ini file and list each file in it.
            filePath = Path.GetDirectoryName(in_filePath); // for text files the data source is the folder
            conStr = ConfigurationManager.ConnectionStrings["CSVConString"].ConnectionString;
            break;
        case ".xls": // Excel 97-03
            filePath = in_filePath;
            conStr = ConfigurationManager.ConnectionStrings["Excel03ConString"].ConnectionString;
            break;
        case ".xlsx": // Excel 07
            filePath = in_filePath;
            conStr = ConfigurationManager.ConnectionStrings["Excel07ConString"].ConnectionString;
            break;
        default: // fail early instead of opening an empty connection string
            throw new NotSupportedException("Unsupported file type: " + fileExtension);
    }
    conStr = String.Format(conStr, filePath, in_isHDR);

    DataTable dt = new DataTable();
    using (OleDbConnection connExcel = new OleDbConnection(conStr))
    using (OleDbCommand cmdExcel = new OleDbCommand())
    using (OleDbDataAdapter oda = new OleDbDataAdapter())
    {
        cmdExcel.Connection = connExcel;
        connExcel.Open();

        // Text files are addressed by file name; workbooks by the name of the first sheet.
        string SheetName;
        if (fileExtension == ".csv")
        {
            SheetName = Path.GetFileName(in_filePath);
        }
        else
        {
            DataTable dtExcelSchema = connExcel.GetOleDbSchemaTable(OleDbSchemaGuid.Tables, null);
            SheetName = dtExcelSchema.Rows[0]["TABLE_NAME"].ToString();
        }

        // Read data from the first sheet
        cmdExcel.CommandText = "SELECT * From [" + SheetName + "]";
        oda.SelectCommand = cmdExcel;
        oda.Fill(dt);
    } // disposing the connection also closes it

The end result is a data table filled with data from a tab/csv or first sheet of an excel file.
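The code comment above mentions schema.ini as one route to tab-delimited support. As a rough sketch of what such a file contains (written in Python purely for illustration; the helper name and the file name are made up), each data file gets its own section in a schema.ini stored in the same folder as the data:

```python
def schema_ini_entry(file_name: str, has_header: bool = True) -> str:
    """Return the schema.ini section describing one tab-delimited text file."""
    return (
        f"[{file_name}]\n"
        "Format=TabDelimited\n"
        f"ColNameHeader={'True' if has_header else 'False'}\n"
    )


if __name__ == "__main__":
    # Append one section per file to <data folder>/schema.ini.
    print(schema_ini_entry("export.tab"), end="")
```

With that file in place, a tab-delimited file could in principle be routed through the same text-driver branch as .csv. Treat that as an assumption to test against your ACE install rather than a guarantee.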

Text

Oracle 12 Concat/Aggregate of Long strings to Clob

Lots of strings and want to make a clob? Don’t reach for listagg; it won’t help you north of 4000 characters.

Sure you can write a function that uses the standard concat operator in a loop:

    function get_comment_clob (v_thread_id in number) return clob parallel_enable is
      catted clob;
      -- COMMENT is a reserved word in Oracle, so the column keeps its own name as the alias
      cursor c_existing_rows is
        select to_clob(thread_comment) thread_comment
        from thread_comments a
        where thread_id = v_thread_id and
              thread_comment is not null and
              thread_comment <> ' '
        order by line asc;
    begin
      catted := null;
      for v_existing_rows in c_existing_rows loop
        catted := catted || v_existing_rows.thread_comment || to_clob(' ');
      end loop;
      catted := rtrim(catted);
      return catted;
    exception
      when others then
        raise;
    end;

But that is a bit slow. I hate cursors and usually see the parallel slaves sitting there just chewing CPU for each row. The explain plan will look sane, but the execution plan here is a bit poor.

You could write an aggregate function and the related member functions (I’ve covered them before). You can also abuse the XML functions.

I give you String list to CLOB via XML Aggregate...

function get_comment_clob (v_thread_id in number) return clob parallel_enable is

catted clob;

begin

catted := null;

select dbms_xmlgen.convert(rtrim(xmlagg(xmlelement(e,thread_comment,' ').extract('//text()') order by line).GetClobVal(),' '), 1)

into catted

from (select to_clob(replace(thread_comment, chr(00), '')) thread_comment,

line

from thread_comments a

where thread_id = v_thread_id and

thread_comment is not null and

thread_comment <> ' ');

return catted;

exception

when others then

raise;

end;

So what is this doing? The sub-query is getting our data. It doesn’t need to be a sub-query, but for explaining it is a bit easier to break it up. In the sub-query I’m fetching the list of comments I want to concatenate into a clob. The replace in the sub-query is there because our data set had null characters (0x00) inserted mid-string, and most of the Oracle functions just stopped processing the text at that point. XMLElement, though, will throw XML parsing failed (ORA-31011) and Invalid Character 0 (LPX-00216) errors if the nulls are present. I still want the data after the nulls, so I’m simply removing them.

Once we have the list, we convert each comment to an XML element (tagged with <e>) using the xmlelement function, which also appends a space after each comment. We only want the text portion, so we extract that with extract('//text()') (I don’t want the tags). xmlagg then aggregates the pieces in line order and getclobval() hands the result back as a clob. Because the sub-query feeds it clobs, the aggregate comes back as a clob too. Next we trim the result to remove all spaces at the end. Finally, we call dbms_xmlgen.convert with the decode flag (1) so that values that xmlelement encoded get switched back to what they were originally. Without this, you’ll see characters such as ‘>’, ‘<’, and ‘&’ all appear encoded in the final result.

It’s fast when compared to the concat.

It works on our data with crappy characters in it (0x00).

It handles returning a clob.

Text

Java in Oracle as PL/SQL function

CREATE or REPLACE JAVA SOURCE NAMED "Welcome" AS

public class Welcome {

public static String welcome() {

return "Welcome World";

}

};

CREATE OR REPLACE FUNCTION

say_hello return varchar2 AS language java name 'Welcome.welcome() return java.lang.String';

select say_hello() from dual;

A co-worker was asking about Java in Oracle. We do a fair amount of work with this, but the basic examples often don’t entirely tie it together. You’ll see examples that load classes or use an external JVM, and you may see the create java statement, but often not soup to nuts. This is a simple example showing how to create a Java class inside Oracle and return a value from it in a SQL statement. The class is exposed via a PL/SQL function and then called from SQL; it runs on the built-in JVM in the Oracle database.

Link

Based off the launcher script for XLSX diffing found here: https://www.cnblogs.com/micele/p/5014037.html

I’ve updated it to support Spreadsheet Compare 2016. This allows you to produce a meaningful diff between two XLSX files. I’m using this to aid in resolving merge conflicts between Excel files.

Text

Oracle Views and ORA-04027

Achievement unlocked! A new Oracle Error message appears:

ORA-04027: self-deadlock during automatic validation for object PB

Wait what? Let me google that quick... Not helpful and amusingly none of the results when I did it were from Oracle, despite it being their error number.

How did we get here? Two developers were running different code with shared dependencies, individually, and it worked fine. One day (after 15 years) they ran it at the same time by chance and noticed it just sat there doing nothing. If one developer killed their job, the other’s finished. If they waited, eventually one timed out with the error message above: ORA-04027.

Let’s show how this was triggered

Table tA - A dimension table.

Table tB1 - A second dimension table.

Table tB2 - A second table related to the second dimension.

View vB - A View that is a union of tB1 and tB2.

Table tF - A fact table.

Package pA - Inserts data into tables; each insert calls a function (fA) from pB.

Package pB - A package of get functions that do simple selects from dimension tables. One function is fA and selects from table tA. One function is fB and selects from view vB.

Package pC - Saves and restores indexes on table tB1 and inserts new rows to it.

Package pI - Procedures to save indexes (pS) and restore indexes (pR) on tables. pS - Gets the DDL for an index, writes it to a table, and issues a DDL statement to drop the index from the table. pR - Gets the saved index DDL from the table and recreates the indexes on the table.

OKAY! So with the stage set, let’s get started!

Developer 1 calls his procedure from pA to load table tF. It takes about 10 minutes to truncate the table and perform an insert. This insert is calling fA from pB for every row.

Developer 2 starts his package pC. It saves the indexes off of table tB1 in prep to load it. The process hangs right there and Developer 1 and Developer 2 are left stranded.

Developer 2 wasn’t doing anything to the tables that were used by Developer 1. They were separate tables. Developer 2’s code didn’t alter the underlying tables, which would have caused the package to go invalid. All Developer 2 did was drop an index, try to add data and then restore the index.

As I said before, Developer 1 killing his job causes Developer 2’s process to finish. Developer 2 killing his job causes Developer 1’s process to finish.

Going invalid - One of the developers noticed that when he ran his code at the same time as the other developer, that vB would go invalid and so would pB. This didn’t occur when they ran it one at a time.

Odd. We went through the code and everything looked okay at first glance. No DDL’s changing the tables. Nothing that would make me think any object would be invalidated. We wondered if unlocking stats, gathering them and re-locking would cause the invalidation? No it does not.

We stepped through it section by section, then line by line, and narrowed it down to the drop index statement from package pI. When the index was dropped it was invalidating the view and the package that depended on the view. That is odd to me. The view isn’t really dependent on the index unless it’s an index-organized table (IOT) or maybe a clob. If a view is invalidated, it would re-compile on the next run.

To the Oracle docs on Drop Index.. LINK

“Oracle Database invalidates all objects that depend on the underlying table, including views, packages, package bodies, functions, and procedures.”

Wait, what? It still doesn’t make sense to me that a view is invalidated on dropping an index. That might explain our problem more.

The dead-lock - At Last! The Oracle Speaks!

After some time leaving both developers’ code hanging in a wait state, we’d produce the error: ORA-04027: self-deadlock during automatic validation for object PB

When Developer 2 dropped the index on tB1 it invalidated vB which was used by package pB. Package pB was also invalidated.

Developer 1, who was running his insert using a different function from pB (one that depends on a still-valid table), paused. Developer 1’s package tried to automatically recompile pB.

Developer 2’s code, which was also dependent on pB (just in a totally different procedure than the one running), would also try to recompile the package. No big deal normally: one of them would win and the other would resume. So for 15 years we never encountered this problem.

It was only when this one function, which happened to be dependent on the view vB instead of directly on a table like tB, was involved that we got a self-deadlock.

Remember that Developer 2’s package would see that pB was invalid and would try to recompile. Our assumption (based on observation of wait state/pin lock timing) is that when it fails, it then tries to recompile objects it depends on and moves to working on vB.

Developer 1’s package also noticed that pB was invalidated during its run and would try to recompile. Usually this would result in a busy resource/pin lock timeout sort of deal. In this case, since Developer 2 had switched to validating vB, Developer 1’s application would then try to validate pB and they both got stuck. So it’s not really a true self-deadlock. The deadlock is caused during automatic re-validation of the view vB, which is being done to try and validate package pB. It’s really the other developer trying to validate pB at the same time that stops the first process from getting a lock on the package again.

The timing is important since whoever’s process tried to revalidate first is going to be the one to time out first and get the ORA-04027 error. The other user’s process will just eventually complete when it becomes the sole user trying to compile package pB.

Ugh.

If you eliminate the view here you don’t see this problem as tables do not go invalid on dropping an index. I still don’t understand why every view (even simple ones) would invalidate on dropping an index, except for lazy programmers. It’s good that Oracle have at least explained it away in the documentation. It’s no longer a bug if we want it fixed, it’s a feature request!

Text

C# web services interacting with JQuery and Oracle.

I dislike over-engineering things, and I've felt that many of the frameworks I've worked with for building web services tread into territory where the services become hard to extend or maintain long term. I set out to create a simple example of web services that could easily be deployed on an internet .Net/Coldfusion server.

Requirements:

Must be .Net.

Must produce JSON result sets.

Must connect to Oracle database.

https://github.com/tsworman/CSharp-Oracle-WebService-JQuery-Example

This was set up as a sample to prove that I could replace an existing Coldfusion application with .Net web services that are called by a single page application to manage a database account.

Many of the examples I found returned XML from .Net and I didn't want to do that. Many examples used service accounts for connecting to the database, but we really wanted the users to authenticate as themselves. This rules out connection pools and unless we want to store the login info somewhere or constantly pass it back and forth, it locks us to a single server per session architecture.

I've published the sample that I developed our final solution from. This is a basic set of code where I exercised all of my use cases to be able to verify it all was sound. I eventually added more security and input handling, but it should get folks the basics of what is needed. I provide a sample page that calls the webservice and returns JSON. We have functions that support JSONP if someone isn't doing session logins like our example. The best part is that it's simple, it's one bundle of code and it's decently documented.

I use JQuery ajax calls to hit the web services. I realize this isn't the most fashionable thing to do. It was chosen because most of the folks that get stuck maintaining my app know how to use it.

Please contact me and let me know if I can help, fix issues, or make the code easier to understand!

0 notes

Text

tyD-Torrent

My first Rust software is complete. For years I’ve been wanting to clean up a large torrent directory I had. It got bigger with each release as the maintainers of the torrent changed file names and folder structures. Loading the new torrent into my client downloaded the new files but it left the old ones around. I wasn’t quite sure how to resolve this, and I believe some existing software did it but was poorly documented.

This year when I went to update I decided it was time to do something about it. I had been wanting to learn at least a little bit of Rust so that I could keep up with the news stories on Hacker News. Even after coding this I don’t see what all the fuss is about but the build system is fancy and the libraries available are fairly nice.

This application reads a torrent file and finds all filenames and paths of files that are part of it. It then scans a provided directory and lists all the files you should delete to make your structure match that of the torrent (purge extra files). If you pass the -d or --delete option it will actually remove those files. In my case this cleared up 36GB on one torrent directory alone. YMMV as it depends how sloppy you were with maintaining your folders.
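Here is a minimal sketch of the comparison step described above. It is not the code from the repository. It assumes the torrent's file list has already been parsed into a set of relative paths (parsing the .torrent file itself is left out), and the names collect_files, extra_files, and purge are made up for illustration.

```rust
use std::collections::HashSet;
use std::fs;
use std::io;
use std::path::{Path, PathBuf};

/// Recursively collect every non-directory entry under `dir`,
/// stored as a path relative to `root`.
fn collect_files(root: &Path, dir: &Path, found: &mut Vec<PathBuf>) -> io::Result<()> {
    for entry in fs::read_dir(dir)? {
        let path = entry?.path();
        if path.is_dir() {
            collect_files(root, &path, found)?;
        } else if let Ok(relative) = path.strip_prefix(root) {
            found.push(relative.to_path_buf());
        }
    }
    Ok(())
}

/// Return the files on disk that the (assumed, pre-parsed) torrent file
/// list does not contain, sorted so the output is stable.
fn extra_files(root: &Path, expected: &HashSet<PathBuf>) -> io::Result<Vec<PathBuf>> {
    let mut on_disk = Vec::new();
    collect_files(root, root, &mut on_disk)?;
    let mut extras: Vec<PathBuf> = on_disk
        .into_iter()
        .filter(|p| !expected.contains(p))
        .collect();
    extras.sort();
    Ok(extras)
}

/// List the extras, and remove them only when the caller opted in
/// (the -d / --delete case).
fn purge(root: &Path, extras: &[PathBuf], delete: bool) -> io::Result<()> {
    for relative in extras {
        println!("{}", relative.display());
        if delete {
            fs::remove_file(root.join(relative))?;
        }
    }
    Ok(())
}
```

Keeping the listing and the deletion behind one flag means a dry run and a real run walk exactly the same list, which is handy before letting anything delete 36GB.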

Code here:

https://github.com/tsworman/tyD-torrent

Build with:

cargo build

Run with:

tyD_torrent somefile.torrent /home/username/somefilev181/

and an optional -d or --delete flag.

What do I think about Rust? Cargo is super nice and the packages available are great. The built in collections left me a bit perplexed as they seemed like super odd choices. References vs pointers was a bit confusing coming from C/C++ but I appreciate their simplicity.

I have an issue with understanding lifetimes. I get why a variable needs a longer lifetime and I understand the reference to it will be freed. I don’t get the syntax or understand Cow (clone on write). I mean, I know I need them when a collection or type doesn’t implement Copy and it throws me a lifetime error. I can’t figure out the syntax. It doesn’t seem intuitive, and despite reading multiple blogs and posts about it, I think all that I’ve found are written from the perspective of someone using it for a while (duh...) and they don’t really address the questions a n00b Rust person would have. I spent about 3 hours reading, writing examples, and trying to think up where I’d use each use case. It’s still a bit fuzzy and I think I’d approach it in this manner at the moment:

1) Lifetime Error, crap this will be annoying.

2) Try making it a reference &, and dereference when needed. This is great as long as the type or function you are calling implements Clone or doesn’t care if it’s a pointer or reference.

3) Try using clone or to_string and then just convert it back later for simplicity’s sake.

4) Try one of the crazy syntax examples I have and specify a lifetime.

5) Read more about Box and Enums since it seems if you get really lost you just wrap things and problems will go away.
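Steps 2 through 4 can be sketched roughly like this, along with where Cow fits in. This is only an illustration under my own assumptions: the function names are hypothetical and none of this comes from the tyD-torrent source.

```rust
use std::borrow::Cow;

/// Step 4: an explicit lifetime. With two borrowed inputs the compiler
/// cannot guess which one the result borrows from, so `'a` ties the
/// returned reference to both.
fn longer<'a>(a: &'a str, b: &'a str) -> &'a str {
    if a.len() >= b.len() { a } else { b }
}

/// Step 2: plain borrowing. One input, one output, so the lifetime is
/// inferred and no annotation is needed.
fn file_name(path: &str) -> &str {
    path.rsplit('/').next().unwrap_or(path)
}

/// Step 3: side-step the borrow entirely by returning an owned String.
fn file_name_owned(path: &str) -> String {
    file_name(path).to_string()
}

/// Cow: hand back the borrowed input when nothing changes, and only
/// allocate a new String when a rewrite is actually needed.
fn normalize_separators(path: &str) -> Cow<'_, str> {
    if path.contains('\\') {
        Cow::Owned(path.replace('\\', "/"))
    } else {
        Cow::Borrowed(path)
    }
}
```

The owned version costs an allocation but removes the lifetime question altogether, which is why step 3 tends to work as an escape hatch.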

That sounds like a horrible plan, but I need to read more and maybe come up with better use cases for this stuff. The examples I found were bare bones and didn’t have as much detail as I would have liked. The worst is when the syntax in the examples or blogs is outdated because Rust has evolved already during its short lifetime.

Can I return a LinkedList of str? No, str is unsized: its size is undetermined at compile time. LinkedList<&str>? Sure. LinkedList<Path>, no. LinkedList<&Path>, no. LinkedList<&PathBuf>, sure.
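A small sketch of the sized vs unsized point, using hypothetical helper names. The list of &str borrows from the input string, while the list of PathBuf owns its elements, so it can be returned without tying it to anything else.

```rust
use std::collections::LinkedList;
use std::path::PathBuf;

/// `str` has no size known at compile time, so the list stores `&str`
/// references that borrow from `input`.
fn split_names(input: &str) -> LinkedList<&str> {
    input
        .split(',')
        .map(str::trim)
        .filter(|s| !s.is_empty())
        .collect()
}

/// An owned alternative: every element is a sized `PathBuf`, so the
/// returned list does not borrow from `names`.
fn to_paths(names: &LinkedList<&str>) -> LinkedList<PathBuf> {
    names.iter().map(|name| PathBuf::from(*name)).collect()
}
```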

I’m not sure I’d grab for Rust again over C/C++, though I understand I’ve written safer code for having done so this time. At the moment it’s not more readable or expressive than C/C++ for me. It’s also not nearly as well documented as C/C++ libraries are because of the time they have been around. This will all probably change over time. 15 years of C coding with pointers, function pointers, and reference handling really has made a lasting mark on me.

TL;DR; Learning Rust by writing your first piece of software may not be the best idea. It’s hard to change how I think about memory management from C/C++. I wrote a torrent directory cleaner and you can download and try it out here: https://github.com/tsworman/tyD-torrent

Text

LoveSac Sactional

TL;DR; Stop your sectional search and just buy a LoveSac Sactional. It’s worth the price (when on sale @30% off!) and it’s super comfy and amazing. Go sit in one at a store and you’ll be sold.

Let’s talk about LoveSac! We’ve been searching for the perfect couch for our loft since last September. We tried to purchase a sectional in October from ThreeChairs Co. in downtown Ann Arbor, but the salesperson on the day we went to purchase didn’t seem interested in our business. It was frustrating after a 3 week dance to find the right one and having the store owner at our house to give her suggestions on how to utilize the space. I really wanted to give them more of my business. Unfortunately I was so turned off by the experience that I decided I’d purchase somewhere else even if it meant paying more and looking for yet another couch.

In November we were in Colorado for a week and my wife was checking out all the furniture stores there and stumbled across LoveSac in the Park Meadows mall. We spent our rainy day checking out the store knowing nothing about the company or their furniture (except maybe a brief reddit reference?) and were super excited by it all. The sales reps were awesome and basically tore the store apart for us to show us all the configurations and let us try all the cushion types.

LoveSac started by making foam filled sacs that resemble bean bags. They are super comfy, but pricey. The sacs come in different sizes, the covers are removable, washable, and easy to change when you need a new style. On top of that they have an awesome warranty. LoveSac later introduced their Sactional. It’s a LoveSac take on a sectional couch. It’s very configurable, has washable covers, awesome warranty, and super comfy. It’s also pricey.

When I say pricey I mean that my previous most expensive purchase was a La-Z-Boy couch and loveseat set that cost roughly half of what purportedly well built, good looking furniture can cost. Sectionals are pricey, even La-Z-Boy sectionals. This is not your $500 Ikea couch. It doesn’t feel like that, it isn’t built like that, and I guarantee you that you can tell the difference the moment you sit in one.

Back to the LoveSac store. Two very helpful clerks showed us around, explained how the Sactional is like Lego for couches and proceeded to help us configure a couch that fit our area using these cool magnetic blocks. Then we had to pick a fabric from 300+. We wanted something that would wear well, clean up well (we have many nieces and nephews), and take our daily abuse, as this is going in the area we hang out in all the time. We decided on the standard cushion fill, seats in a deep configuration, and square arms, and we wanted enough to make a 3 seat deep L with a 4 section long main portion. This is not a small piece of furniture. We got a quote for the current sale price and flew back to Michigan. Over the next week we debated if it was really worth the price (I was still stuck on the $500 Ikea couch idea) and finally decided on Black Friday to pull the trigger. I actually called the Colorado store because I wanted to make sure they got credit for the sale, and the company offers free shipping so it shouldn’t matter where I purchase it. The sale was slightly better on Black Friday and they threw in a free LoveSac GamerSac (it’s not really free, but it made me feel better about the price!). The in-store sales are apparently usually better than online sales also.

Awesome! Now the wait began.... Every other day my wife was asking if I had heard when it would ship. No! First the GamerSac arrived and man was it comfy. My sister-in-law declared it as being what was missing from her life! It’s slightly too small for larger adults but it’s still super relaxing to lie in. I feel like I’m floating and all my muscles are relaxed. It’s got the Elk Phur cover which really makes it soft and fuzzy. I believe we’ve sold 4-5 of these just by letting people sit in ours and saying, you know they make one the size of a couch right?

4 weeks later, just before Christmas we got the notice that our couch had shipped (and that it actually mostly arrived earlier in the day). The FedEx guy didn’t appear too thrilled that the 20 or so boxes took up most of his truck. We baked him cookies for the next time they made a delivery!

Kim spent about 6 hours unboxing and installing covers. They looked excellent! We hauled them to the loft, set them in place, put the pucks on the floor, and installed the U-shaped clamps to build our couch. We enjoyed it and it was amazing. Super comfy even! We figured we weren’t really going to use the Lego functionality unless we add on or move later. But then... a family member had the idea of removing one part of the couch and making the corner into what is essentially a queen sized bed. It looks like a lower case b or p with the round part being 4 rectangular cushions. This may be the best thing ever invented. Everyone lunges into it and is just overtaken by how soft and comfy it is. Movie time? Curl up in the corner with a blanket and fall asleep instead of watching the entire movie.

Cup holder add on - Awesome and useful!

Ability to walk on the sides and back - Indestructible when kids use it as a jungle gym.

Black padded velvet fabric - Cleans easily when kids spill stuff with just a wet paper towel. Washable covers if it got really bad!

Style - Looks great and fits in our modern home well.

Why you might hesitate: The reviews online are super mixed. It seems people are torn about the cost and feel they should get more for the price. I wonder if they have really looked at how other furniture in that price range is built. Sometimes it has nicer fabrics, but they still use MDF to construct the frame. That is not the case here. People also complain about how their items didn’t work together as they thought. We were with you there and it’s why we called the store multiple times to make sure our ideas worked. We needed 2 deep sides to make the couch fit in the space we have. Lots of complaints about slow shipping. Good things come to those who wait. 4 weeks for us! We could have had it faster if we bought a different fabric.

What could fix most of this? More reviews like this one telling you to just give in and go buy the couch, you won’t regret it. Additionally an online configuration tool so you can build a couch in any design and see how it works. If I did it again I might not even get backs for our couch since it sits against a half wall overlooking the downstairs. The one thing you can’t get online is really how the different seats feel with the 3 padding options and when they are in deep vs standard form.

Summary: We love the Sactional and no, you cannot have our GamerSac.

Photo

Christmas time is here! It’s been a busy year and I’ve been short on posting. Short list of awesome things: Dog-sledding, moving a hackerspace, selling a business, eloping in New Zealand, 2 receptions, buying a house, moving, celebrating second fall, attempting to furnish aforementioned house, and finally... launching another product for sale in early January!

December has been the first month this year that I’ve considered slow. I’ve been using the time to focus on projects around the house. I’m slowly installing ethernet and wifi access points. We’ve been removing phone lines, jacks and old communication cabling and trying to tidy up the wiring while we are at it. The place has such a unique layout and construction. The downside is that high speed wireless doesn’t easily reach everywhere. I’ve switched my entire network stack to Ubiquiti gear and have been pleased so far. We’ve got 2 more access points to install, additional surveillance cameras, and a few VOIP phones yet. I’ve been investigating what it would take to start up an old school dial-in BBS but haven’t pulled the trigger on the gear yet. I know of a few that still operate but it seems a bit different with everything just being available on the internet now.

I’m hoping to spend the start of the new year building lots of furniture for the house. We’ve got the wood drying in the garage and I’m going to start milling down a few pieces after the holidays are over. I’ll hopefully have more progress photos soon!

Happy Holidays to all!

Text

Moving a Game Room

In the past 5 years I’ve built up quite an awesome man cave/game room in my basement. I never thought about what I’d have to do when it came time to move it. Everything fits so nicely. I’ve actually got less things than when I moved in but much of the furniture was custom built or purchased specifically for this space.

We just got confirmation/clear to close on a home a little bit away from here. It’s a bigger home, with many super fancy outdoor spots, but it has a smaller area for Sonic themed merchandise. As a result not only do I need to find some new furniture for my game room, some of the Sonic The Hedgehog items probably need to go. If you are interested, please reach out to me. I’d rather see them sold to another collector/fan than end up at our yard sale where a small child might destroy them.

I’m told I still won’t have room for a Skee-Ball machine. Maybe we picked the wrong home?

Interested in hearing what others do when it comes to moving this type of thing. Do you sell all the furniture and start over? Pare down the game consoles you haven’t touched in years (I’m looking at you 3DO, SNES, and Turbo)? Ditch the boxes of cords you may one day need (Surely I can’t live without a SCSI cable)?
