
Crafting Clean and Maintainable Code with Laravel's Design Patterns

In today's fast-paced world, building robust and scalable web applications is crucial for businesses of all sizes. Laravel, a popular PHP framework, empowers developers to achieve this goal by providing a well-structured foundation and a rich ecosystem of tools. However, crafting clean and maintainable code remains paramount for long-term success. One powerful approach to achieve this is by leveraging Laravel's built-in design patterns.

What are Design Patterns?

Design patterns are well-defined, reusable solutions to recurring software development problems. They provide a proven approach to structuring code, enhancing its readability, maintainability, and flexibility. By adopting these patterns, developers can avoid reinventing the wheel and focus on the unique aspects of their application.

Laravel's Design Patterns:

Laravel incorporates several design patterns that simplify common development tasks. Here are some notable examples:

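Repository Pattern: This pattern separates data access logic from the business logic, promoting loose coupling and easier testing. Laravel's Eloquent ORM itself follows the Active Record pattern, so a repository in a Laravel application is typically a class you write that wraps Eloquent models behind an interface.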

Facade Pattern: This pattern provides a simplified interface to complex functionalities. Laravel facades, like Auth and Cache, offer an easy-to-use entry point for various application functionalities.

Service Pattern: This pattern encapsulates business logic within distinct classes, promoting modularity and reusability. Services can be easily replaced with alternative implementations, enhancing flexibility.

Observer Pattern: This pattern enables loosely coupled communication between objects. Laravel's events and listeners implement this pattern, allowing components to react to specific events without tight dependencies.
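To make the Repository and Service patterns above concrete, here is a minimal, framework-neutral sketch. It is written in Python rather than PHP and does not use any Laravel API; every name in it (User, UserRepository, InMemoryUserRepository, RegistrationService) is hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Protocol


@dataclass
class User:
    id: int
    email: str


class UserRepository(Protocol):
    """Data-access contract that the business logic depends on."""

    def find(self, user_id: int) -> Optional[User]: ...

    def save(self, user: User) -> None: ...


class InMemoryUserRepository:
    """Hypothetical storage backend; a database-backed class could replace it."""

    def __init__(self) -> None:
        self._rows: Dict[int, User] = {}

    def find(self, user_id: int) -> Optional[User]:
        return self._rows.get(user_id)

    def save(self, user: User) -> None:
        self._rows[user.id] = user


class RegistrationService:
    """Business rules live here and never touch storage details."""

    def __init__(self, users: UserRepository) -> None:
        self._users = users

    def register(self, user_id: int, email: str) -> User:
        if self._users.find(user_id) is not None:
            raise ValueError(f"user {user_id} already exists")
        user = User(id=user_id, email=email.strip().lower())
        self._users.save(user)
        return user
```

Because the service only knows the repository contract, a test can pass in the in-memory class while production code passes a database-backed one, which is the loose coupling the list above describes.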

Benefits of Using Design Patterns:

Improved Code Readability: Consistent use of design patterns leads to cleaner and more predictable code structure, making it easier for any developer to understand and modify the codebase.

Enhanced Maintainability: By separating concerns and promoting modularity, design patterns make code easier to maintain and update over time. New features can be added or bugs fixed without impacting other parts of the application.

Increased Reusability: Design patterns offer pre-defined solutions that can be reused across different components, saving development time and effort.

Reduced Complexity: By providing structured approaches to common problems, design patterns help developers manage complexity and write more efficient code.

Implementing Design Patterns with Laravel:

Laravel doesn't enforce the use of specific design patterns, but it empowers developers to leverage them effectively. The framework's built-in libraries and functionalities often serve as implementations of these patterns, making adoption seamless. Additionally, the vast Laravel community provides numerous resources and examples to guide developers in using design patterns effectively within their projects.

Conclusion:

By understanding and applying Laravel's design patterns, developers can significantly improve the quality, maintainability, and scalability of their web applications. Clean and well-structured code not only benefits the development team but also creates a valuable asset for future maintenance and potential growth. If you're looking for expert guidance in leveraging Laravel's capabilities to build best-in-class applications, consider hiring a Laravel developer.

These professionals possess the necessary expertise and experience to implement design patterns effectively, ensuring your application is built with long-term success in mind.

FAQs

1. What are the different types of design patterns available in Laravel?

Laravel doesn't explicitly enforce specific design patterns, but it provides functionalities that serve as implementations of common patterns like Repository, Facade, Service, and Observer. Additionally, the framework's structure and libraries encourage the use of various other patterns like Strategy, Singleton, and Factory.

2. When should I use design patterns in my Laravel project?

Design patterns are particularly beneficial when your application is complex, involves multiple developers, or is expected to grow significantly in the future. By adopting patterns early on, you can establish a well-structured and maintainable codebase from the outset.

3. Are design patterns difficult to learn and implement?

Understanding the core concepts of design patterns is essential, but Laravel simplifies their implementation. The framework's built-in libraries and functionalities often serve as practical examples of these patterns, making it easier to integrate them into your project.

4. Where can I find more resources to learn about design patterns in Laravel?

The official Laravel documentation provides a good starting point, as does this overview of design patterns used in Laravel: https://codesource.io/brief-overview-of-design-pattern-used-in-laravel/. Additionally, the vast Laravel community offers numerous online resources, tutorials, and code examples that delve deeper into specific design patterns and their implementation within the framework.

5. Should I hire a Laravel developer to leverage design patterns effectively?

Hiring a Laravel developer or collaborating with a Laravel development company can be advantageous if you lack the in-house expertise or require assistance in architecting a complex application. Experienced developers can guide you in selecting appropriate design patterns, ensure their proper implementation, and contribute to building a robust and maintainable codebase.


Unleashing the Power of Custom eLearning Solutions for Business Growth

Introduction:

In the dynamic landscape of contemporary business, staying ahead requires continuous learning and development. One of the most effective tools for fostering employee growth and enhancing organizational efficiency is custom eLearning. Tailored to meet the unique needs of a business, custom eLearning solutions have emerged as a game-changer, offering a host of benefits that go beyond traditional training methods. This article explores the advantages of incorporating Custom eLearning for your business strategy and how it can contribute to sustained growth.

1. Personalized Learning Experience: Custom eLearning allows businesses to design learning modules that align with their specific industry, goals, and corporate culture. This personalization ensures that employees receive training that is directly relevant to their roles, making the learning experience more engaging and impactful. Tailored content enhances comprehension and retention, ultimately leading to a more skilled and knowledgeable workforce.

2. Flexibility and Accessibility: Traditional training methods often struggle to accommodate the diverse schedules and locations of modern workforces. Custom eLearning solutions break down these barriers by providing flexibility and accessibility. Employees can access training materials at their own pace and from anywhere, fostering a culture of continuous learning without disrupting daily operations.

3. Cost-Effectiveness: Developing and delivering traditional training programs can be resource-intensive. Custom eLearning, on the other hand, reduces costs associated with venue rentals, printed materials, and travel expenses. Additionally, the ability to reuse and update digital content reduces long-term expenses, making it a cost-effective solution for businesses of all sizes.

4. Real-time Progress Tracking: Custom eLearning platforms often come equipped with robust analytics tools that enable real-time tracking of employee progress. This feature allows businesses to monitor the effectiveness of their training programs, identify areas that may need improvement, and track individual or team performance. Data-driven insights contribute to informed decision-making and the continuous improvement of training strategies.

5. Adaptability to Change: In a rapidly evolving business landscape, the ability to adapt quickly is crucial. eLearning Solutions for your business can be easily updated and modified to reflect changes in industry regulations, company policies, or emerging trends. This adaptability ensures that employees are always equipped with the latest knowledge and skills needed to navigate evolving challenges.

6. Employee Engagement and Retention: Engaged employees are more likely to be productive and committed to their roles. Custom eLearning, with its interactive features, quizzes, and multimedia content, enhances engagement by making the learning process enjoyable. Engaged employees are not only more likely to retain the information presented but are also more likely to stay with a company that invests in their professional development.

7. Alignment with Business Goals: Custom eLearning is designed with a business's specific goals and objectives in mind. Whether it's improving customer service, enhancing product knowledge, or fostering leadership skills, these solutions can be crafted to align directly with the strategic objectives of the organization. This alignment ensures that the training investment directly contributes to the overall success of the business.

Conclusion:

In the digital era, where adaptability and continuous learning are essential for business survival, custom eLearning stands out as a powerful tool for fostering growth. Its ability to provide a personalized, flexible, and cost-effective learning experience, coupled with real-time progress tracking and adaptability, makes it an indispensable asset for businesses aiming to stay competitive. By embracing custom eLearning solutions, organizations can empower their workforce, drive innovation, and create a culture of continuous improvement, ultimately positioning themselves for sustained success in the ever-evolving business landscape.

Source URL: https://sites.google.com/view/knotopiancom6/home


Nailing Creative Development to Make Your Advertising More Effective

The elements that go into a great ad, such as text, images, and buttons, shouldn’t be a mystery to any marketer. But the value of an ad creative testing framework is often underappreciated.

Testing has a long history in advertising. As far back as 1923, Claude C. Hopkins wrote in his famous book, Scientific Advertising, “Almost any question can be answered, cheaply, quickly and finally, by a test campaign.” In 2021, there are many more tools at a marketer’s disposal.

The newest development, automation, enables marketers to test every single element of a campaign, thousands of times over. This state of play can overwhelm marketers. Too many options make it difficult for marketers to use the data at their disposal. Messy testing processes can even promote poorly-performing ads and increase the chances of leaving the best on the cutting-room floor.

To scale your user acquisition effectively, you need a bulletproof testing process that selects the best ad at every new iteration. At Liftoff, we developed a framework in-house, used by the Ad Creative team to develop campaigns for many clients in different categories. By using the framework, you can begin creating an efficient cycle that will help you build better ads.

5 Steps to Building Better Ads

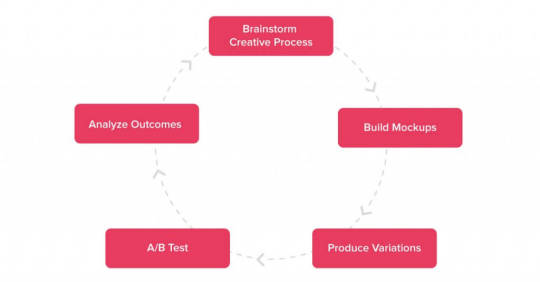

There are several steps in our ad building process. A/B testing is a critical part of this process, but it’s not the full picture. In fact, testing creative elements is only step four in a cycle of ad creation:

Here’s each step in more detail:

1. Brainstorm creative concepts: First, we develop narratives for an ad: what will happen, which product to show, and what outcome we plan to deliver (such as a reward for clicking through). These ideas inform all the creative variations that follow.

2. Build mockups of the best ideas: From all of the ideas we have, we then make the ads. For bigger campaigns (particularly video), we usually storyboard the creative to guide production.

3. Produce creative variations: Once we have a general creative concept and a single ad created, we begin to make variations of the ad, changing text, button colors, and so on.

4. A/B test the creative: Next, it’s time to set up experiments (in the form of campaigns) that compare our creative variations’ performance.

5. Analyze the outcome: The final step in the cycle is to monitor our experiments’ performance to see how well those creatives do.

Follow this simple structure to frame your ad creative production process. It’s easy to find new components to flesh out an ad creation process that makes sense for your team. Let’s now talk about what Liftoff’s Ad Creative Producers do when we’re onboarding a new app to show you how we think when creating new ads.

Improving Your Approach To The Creative Ad Production Process

It takes new ways of thinking to improve creatives. With every new campaign, we home in on what users find more interesting. Here are some things we think about when trying to discover improvements:

1. Find the key benefit of the app: Every app has a few features that make users excited. We want to find out what that benefit is early in our A/B testing plan to develop from there.

2. How can we make the concept better? This question usually comes before building an animation or interactive that will provide the user with more information in a simple way.

3. Small changes can make a big difference: Tiny optimizations frequently apply across creatives.

4. Optimize! Continue to validate the hypothesis and strategy through A/B testing.

Ad Creative Frequently Asked Questions

Now that we’ve outlined a process and improved our thinking, let’s tackle critical questions you may have regarding the process.

What’s the Difference Between Incremental Tests and Concept Tests?

There are two kinds of tests you can conduct: incremental and concept testing. Incremental tests experiment with a single aspect of an ad, such as background or a single foreground image. Concept tests pitch two completely different creatives against each other.

Incremental testing allows us to find the small wins that build best practices for a customer. Once we’ve optimized significantly in one direction, more extensive concept tests enable us to find a completely new path to opportunities. If marketers create a new offering, concept tests allow them to home in on what works before optimization can occur.

How Do I Come Up With so Many New Concepts?

When adopting an ad creation process, you’ll quickly find that it’s often more challenging to generate entirely new concepts versus delivering variations on those concepts. Coming up with new ideas is difficult, especially without support or a process you fully own.

Keep this rule in mind: because it’s hard, take your time. Your goal should be to produce a steady stream of new concepts that outperform your competitors, not just your older ads. So, work to create new ideas in a way that fits your and your team’s preferences and capabilities. Brainstorms are only one of many techniques that help the Ad Creative team generate ideas, but we also like to share inspiration from creatives in different regions, ads in the wild, and from the world around us.

What Are Some Tips on Conducting A/B Tests for Creative Ads?

Testing can sometimes become a matter of quantity over quality. As Eric Seufert of Mobile Dev Memo writes, “the only way to optimally pair creative with segments that ad platforms algorithmically define is to test a massive number of ad creatives.” While that’s true, testing must have a goal in mind, or your campaign performance can drop massively.

Anything you add or change in a campaign creates fluctuation, whether positive, negative, or neutral. And what feels like a few tests can become an aggregate of thousands of different variations that don’t perform as well as if you were only running your best ad. You must balance testing and performance to even out potential dips or spikes in spend.

If that doesn’t change your mind on mass testing, bear in mind that we’ve seen a typical win rate (where new creative A beats high-performing creative B) reach 20 to 25% over the years. It’s remarkable that one in four ads has a chance to beat the status quo, but it still means for every four tests you could be running three poorly-performing ads. Knowing what doesn’t work is a valuable exercise, but marketing outcomes should not suffer from over-eager testing.

How Long Should I Be Testing an Ad For?

Time spent on a test isn’t as important as the number of installs and impressions an ad receives. Depending on your app’s popularity and budget size, tests will run quicker or slower. This makes setting an overall benchmark challenging. Here are the main metrics Liftoff’s creative team uses to ensure a test is going to plan:

Ad tests must be statistically significant, meaning it is very unlikely that the higher performance was due to chance rather than the creative. At Liftoff, we look for a significance level (p-value) of 0.01 or below, which corresponds to a 99% (or higher) confidence level in the results shown. If you want a primer on statistical significance, Harvard Business Review has a full write-up to learn from.

Each ad variant must receive a minimum number of impressions. Your testing figure will vary depending on traffic (some ads will need hundreds of impressions, others hundreds of thousands).

We also set a target for installs. This benchmark will vary depending on your app. Usually we need around 80 installs to analyze the best-performing ad.
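The three checks above can be sketched in a few lines. This is an illustrative sketch only, not Liftoff's actual method: it assumes a standard two-proportion z-test on install rates, a hypothetical default of 1,000 impressions per variant (the article says this figure varies), and it reads the 80-install target as applying to the better-performing variant. The function names are made up for the example.

```python
import math


def install_rate_p_value(installs_a, impressions_a, installs_b, impressions_b):
    """Two-sided p-value of a two-proportion z-test on install rates."""
    rate_a = installs_a / impressions_a
    rate_b = installs_b / impressions_b
    pooled = (installs_a + installs_b) / (impressions_a + impressions_b)
    std_err = math.sqrt(
        pooled * (1 - pooled) * (1 / impressions_a + 1 / impressions_b)
    )
    if std_err == 0:
        return 1.0  # both rates are 0% or 100%: nothing to compare
    z = (rate_a - rate_b) / std_err
    # Two-sided tail probability of the standard normal distribution.
    return math.erfc(abs(z) / math.sqrt(2))


def is_conclusive(installs_a, impressions_a, installs_b, impressions_b,
                  alpha=0.01, min_impressions=1000, min_installs=80):
    """Apply all three checks: impressions, installs, and significance."""
    if min(impressions_a, impressions_b) < min_impressions:
        return False  # a variant has not been shown often enough (assumed floor)
    if max(installs_a, installs_b) < min_installs:
        return False  # the leading variant has too few installs to analyze
    p_value = install_rate_p_value(
        installs_a, impressions_a, installs_b, impressions_b
    )
    return p_value <= alpha
```

For example, `is_conclusive(120, 10000, 80, 10000)` compares a 1.2% install rate against 0.8% at 10,000 impressions each; tune `min_impressions` and `min_installs` to your own traffic before relying on the result.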

Why Do I Need to Produce New Ads Constantly?

You might have heard of the mere-exposure effect, which describes the phenomenon where “people tend to develop a preference for things merely because they are familiar with them.” Advertising operates on exposing the brand to people as often as possible to build familiarity. However, ads themselves have a half-life on a viewer’s attention. This is known as “ad fatigue”: users quickly get bored if they see the same few ads over and over again.

An advertiser should seek to find that point of deterioration where new ads begin to perform less well. You must replace a creative before performance collapses entirely. To do that, you need to start looking for a replacement as soon as the creative launches.

4 Small but Powerful Best Practices Behind Great Mobile Ads

There are a handful of best practices that help good ads become great. Here’s what we always consider when creating new ads:

Define objectives for your creative concepts, and develop a straightforward story around them

For ads to be effective, they need to be understood by the user. Simplicity always wins.

Don’t stuff your ads with features—it will dilute your creative and confuse your audience.

Be contextually creative

Ensure your creative is relevant to the user’s state of mind.

Make your ads cross-screen ready and available across all formats.

Mobile ads are different from social ads. What does well on Facebook may not perform well elsewhere.

Localize! It’s almost impossible to succeed in different markets with one language.

Use and reuse great assets

Ads must load quickly on mobile. File sizes must be small and adhere to technical restrictions.

Use high-resolution assets. Most phones have retina displays, which require double the pixel dimensions (640×100 versus the usual 320×50).

Reuse your collateral to create consistent App and/or Play Store pages.

Guide users with clear CTAs

Create a consistent, clear and direct call-to-action.

Work on your wording: “Tap to expand” or “Play the games” could be more effective than “Tap to learn more.”

Any button should have a clear CTA with a tappable ad element. Don’t include buttons that users cannot use.

Go Get ‘Em, Tiger

Surprising users with delightful copy is one thing, but making sure your ad sparkles throughout is much harder. By using Liftoff’s framework, you can begin to iterate on your best-performing ads faster and more effectively to get the most out of your user acquisition efforts.

For more on ad creatives, we’re soon releasing a new Ad Creatives Index, which you can use to discover the latest creative format trends. For now, you can learn how to optimize video campaigns with lead Ad Creative Producer Thomas Zukowski or discover the benefits of banner ads with an in-depth study on the blog.

The post Nailing Creative Development to Make Your Advertising More Effective appeared first on Liftoff.

Nailing Creative Development to Make Your Advertising More Effective published first on https://leolarsonblog.tumblr.com/


Why Architects Need Tools for Apache Kafka Service Discovery, Auditing, and Topology Visualization

You’re out of control. I hate to be the bearer of bad news, but sometimes we need to hear the truth. You know Apache Kafka, you love Apache Kafka, but as your projects and architecture have evolved, it has left you in an uncomfortable situation. Despite its real-time streaming benefits, the lack of tooling for Kafka service discovery, a reliable audit tool, or a topology visualizer has led you to a place I call “Kafka Hell”. Let me explain how you got here in 4 simple, detrimental, and unfortunately unavoidable steps.

You learned of the benefits of EDA and/or Apache Kafka.

Whether you came at it from a pure technology perspective, or because your users/customers demanded real-time access to data/insights, you recognized the benefits of being more real-time.

You had some small project implementation and success.

You identified a use case you thought events would work well for, figured out the sources of information, and the new modern applications to go with it. Happy days!

You reused your existing event streams.

Within your team, you made use of the one-to-many distribution pattern (publish/subscribe) and built more applications reusing existing streams. Sweetness!

You lost control.

Then other developers started building event-driven applications and mayhem ensued. You had so many topics, partitions, consumer groups, connectors – all good things, but then the questions started: What streams are available? Which group or application should be able to consume which streams? Who owns each stream? How do you visualize this complexity? It’s a mess, am I right?

History Repeats Itself

As we moved away from SOAP-based web services and REST became the predominant methodology for application interactions, there was a moment when many organizations faced the same challenges we face today with EDA and Apache Kafka.

Back then, SOA’s maturity brought about tooling which supported the ability to author, manage, and govern your SOAP/WSDL-based APIs. The tooling was generally categorized as “Service Registry and Repository.” The user experience sucked, but I bet you know that already!

Enter REST. Organizations which were/are technical pioneers quickly adopted the RESTful methodology; but since the tooling ecosystem was immature, they faced challenges as they moved from a handful of RESTful services to a multitude of them.

Sound like what we face with Kafka today?

The answer to the original problem was the emergence of the “API management” ecosystem. Led by Mulesoft, Apigee, and Axway, API management tools provided the following key capabilities:

Runtime Gateway: A server that acts as an API front end. It receives API requests, enforces throttling and security policies, passes requests to the back-end service, and then passes the response back to the requester. The gateway can provide functionality to support authentication, authorization, security, audit, and regulatory compliance.

API Authoring and Publishing tools: A collection of tools that API providers use to document and define APIs (for instance, using the OpenAPI or RAML specifications); generate API documentation; govern API usage through access and usage policies for APIs; test and debug the execution of APIs, including security testing and automated generation of tests and test suites; deploy APIs into production, staging, and quality assurance environments; and coordinate the overall API lifecycle.

External/Developer Portal: A community site, typically branded by an API provider. It encapsulates information and functionality in a single convenient source for API users. This includes documentation, tutorials, sample code, software development kits, and an interactive API console and sandboxes for trials. A portal lets users register for APIs, manage subscription keys (such as OAuth2, Client ID, and Client Secret), and obtain support from the API provider and user community. In addition, it provides the linkage into productivity tooling that enables developers to easily generate consistent clients and service implementations.

Reporting and Analytics: Performing analysis of API usage and load, such as: overall hits, completed transactions, number of data objects returned, amount of compute time, other resources consumed, volume of data transferred, etc. The information gathered by the reporting and analytics functionality can be used by the API provider to optimize the API offering within an organization’s overall continuous improvement process and for defining software service-level agreements for APIs.

Without these functions, we would have had chaos. I truly believe the momentum behind RESTful APIs would have died a slow, agonizing death without a way to manage and govern the overwhelming quantity of APIs. This reality would have led to constantly breaking API clients, security leaks, loss of sensitive information, and interested parties generally flying blind with respect to existing services. It would have been a dark and gloomy time.

We Need to Manage and Govern Event Streams the Way We Do APIs

I bet if we all had a dollar for every time our parents said, “You need to grow up,” when we were younger, we would all be millionaires. But that is exactly what we need to do as it relates to event streams, whether you are using Apache Kafka, Confluent, MSK, or any other streaming technology. If we take our queues (no pun intended) from the success of API management – and the API-led movement in general – we have a long way to go in the asynchronous, event streaming space.

Over the last few years, I have poured a lot of my professional energy into working with organizations who have deployed Apache Kafka into production, and who I would consider to be technical leaders within their space. What I have heard time and time again is that the use of Apache Kafka has spread like wildfire to the point where they no longer know what they have, and the stream consumption patterns are nearly 1 to 1. This means that while data is being processed in real time (which is great), they are not getting a good return on their investment. A stream only being consumed once is literally a 1 to 1 exchange, but the real value of EDA lies in being able to easily reuse existing real-time data assets, and that can only be done if they are managed and governed appropriately.
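One way to put a number on the "nearly 1 to 1" consumption problem is to count how many distinct consumer groups read each topic. The sketch below is an illustration under assumptions: the input mapping (topic name to consumer group names) is a hypothetical structure you would have to populate from your own cluster, and the function name is made up.

```python
def stream_reuse(consumers_by_topic):
    """Return (average consumer groups per topic, topics read by at most one group)."""
    if not consumers_by_topic:
        return 0.0, []
    # Deduplicate group names so a group listed twice counts once.
    counts = {
        topic: len(set(groups)) for topic, groups in consumers_by_topic.items()
    }
    average = sum(counts.values()) / len(counts)
    underused = sorted(topic for topic, n in counts.items() if n <= 1)
    return average, underused


# Hypothetical inventory: three topics and their consumer groups.
inventory = {
    "orders": ["billing", "shipping", "analytics"],
    "payments": ["billing"],
    "audit-log": [],
}
average_fan_out, underused_topics = stream_reuse(inventory)
```

An average close to 1, or a long list of underused topics, suggests that existing streams are not being reused.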

Another common complaint about Apache Kafka is the inability to understand and visualize the way in which event streams are flowing. Choreographing the business processes and functions with Apache Kafka has become difficult without a topology visualizer. One architect described it as the “fog of war” – events are being fired everywhere, but nobody knows where they are going or what they are doing.

Events in large enterprises rarely originate from a Kafka-native application; they usually come from a variety of legacy applications (systems of record, old JEE apps, etc.) or from new, modern, IoT sensors and web apps. Thus, we need end-to-end visibility in order to properly understand the event-driven enterprise.

We need to adopt the methodology as described by the key capabilities of an API management platform, but for the Kafka event streaming paradigm. We already have the equivalent of the API gateway, namely your Kafka broker, but are sorely lacking stream authoring and publishing tools, external/developer portals, and the reporting and analytics capabilities found in API management solutions today. Ironically, I would claim that the decoupling you find in a large organization’s EDA/Kafka ecosystem makes it more complex and harder to manage than synchronous APIs, which is why we need an “event management” capability now more than ever!

Technical Debt and the Need for Kafka Service Discovery

I hope by now you’ve bought into the idea that you need to govern and manage your Kafka event streams like you do your RESTful APIs. Your next question is most likely, “Sounds great Jonathan, but I don’t know what I even have, and I surely don’t want to have to figure it out myself!” And to that, I say, “Preach!” I have walked in your shoes and recognize that technical documentation always gets out of date and is too often forgotten as an application continues to evolve. This is the technical debt problem that can spiral out of control as your use of EDA and Kafka grows over time.

That is exactly why automated Kafka service discovery is a requirement: it lets you introspect which topics, partitions, consumer groups, and connectors are configured, so that you can begin managing them the way you manage your other APIs. Without the ability to determine the reality (what’s going on at runtime is reality, whether you like it or not), you can document what you think you have, but it will never be a source of truth you can depend on.

A reliable Kafka service discovery tool with the requirements I listed above will be that source of truth you need.

Once you have discovered what you have with a Kafka service discovery tool, you’ll need to find a way to keep it consistent as things inevitably change. There needs to be continuous probing to ensure that as the applications and architecture change, the documentation is kept up to date and continues to reflect the runtime reality. This means that on a periodic basis, the Kafka service discovery tool needs to be run in order to audit and find changes, enabling you to decide if the change was intended or not. This will ensure the Kafka event streams documentation (which applications are producing and consuming each event stream) and the schemas are always consistent.
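The periodic audit described above boils down to comparing what is documented with what a discovery run finds. The sketch below shows only that comparison step. It assumes, hypothetically, that both sides are plain dictionaries mapping a topic name to its partition count and consumer groups; how the runtime snapshot is collected (for example, through a Kafka admin client) is not shown, and none of these names come from a real tool.

```python
def audit_streams(documented, discovered):
    """Compare documented stream metadata with a runtime snapshot.

    Both arguments map topic name -> {"partitions": int, "consumer_groups": set}.
    """
    report = {
        "undocumented": sorted(set(discovered) - set(documented)),
        "missing_at_runtime": sorted(set(documented) - set(discovered)),
        "changed": {},
    }
    for topic in sorted(set(documented) & set(discovered)):
        # Record (documented value, runtime value) for every field that differs.
        diffs = {
            field: (documented[topic].get(field), discovered[topic].get(field))
            for field in ("partitions", "consumer_groups")
            if documented[topic].get(field) != discovered[topic].get(field)
        }
        if diffs:
            report["changed"][topic] = diffs
    return report


# Hypothetical documentation versus a hypothetical runtime snapshot.
documented = {
    "orders": {"partitions": 6, "consumer_groups": {"billing"}},
    "refunds": {"partitions": 3, "consumer_groups": {"billing"}},
}
discovered = {
    "orders": {"partitions": 12, "consumer_groups": {"billing"}},
    "clickstream": {"partitions": 24, "consumer_groups": set()},
}
report = audit_streams(documented, discovered)
```

Each entry in the report is a prompt for a human decision: either the change was intended and the documentation should be updated, or it was not and the runtime should be corrected.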

Thus, the path to solving the technical debt dilemma and design consistency problem with Apache Kafka is a Kafka service discovery tool.

The Future of Kafka Service Discovery

I hope I’ve given you a little insight into why you are struggling to manage and understand your Kafka streams and what kind of tools the industry will need to solve these particular pain points. Recognizing the problem is the first step in solving it!

Solace has been taking a proactive role in developing the capabilities I outlined above, specifically for Kafka users: authoring, developer portal, metrics, service discovery, audit tool, etc. I encourage you to stay tuned and let us know if you agree that this type of capability is sorely needed! I am confident that soon you will be enabled to manage and govern your Apache Kafka event streams like you do your APIs. And won’t that be exciting!

The post Why Architects Need Tools for Apache Kafka Service Discovery, Auditing, and Topology Visualization appeared first on Solace.

Why Architects Need Tools for Apache Kafka Service Discovery, Auditing, and Topology Visualization published first on https://jiohow.tumblr.com/


15 Practical Tips to Improve Project Communication

I may earn a small commission if you buy a product through one of the links in this article, at no cost to you.

Do you want to improve communication on your projects? And yet make it easier to do at the same time? In this article I’ll share 15 tried-and-tested tips for effective project communications.

1. Use a milestone tracker

The milestone tracker I use is a simple Excel spreadsheet. It lists the milestones in one column, organised by project phase. It also includes the name of the person responsible, the target date and any notes. That’s four columns.

I colour-code the date column too, with red, amber and green to show whether the delivery is on track, at risk or needing serious management attention. When the date has been reached and the milestone achieved, I change it to say Complete.

This document is a very simple, yet very effective way of communicating the high-level project dates to stakeholders. And they love it! Get the project workbook I use here (including the milestone tracker).

2. Use template emails

How many times do you send the same messages to people? The covering emails for the ‘here is the monthly report’ and the ‘please find attached my expenses’ type emails can all be templated. Either set up templates in your email system or keep a file of template messages. Then copy and paste to save you typing them out.

Communication tip for Outlook users

In Outlook, create your message and save it as a draft. Don’t add any recipients. When you want to use it, press Ctrl+F to ‘forward’ the message. In Office 2013 and later, you’ll need to open the draft message first. Press Ctrl+F with the cursor inside one of the header fields, e.g. where you would put the address. It will open a new version of the message, and your draft remains in the draft folder until you need to use it again.

Good for:

Sending out responses to frequently asked questions

The bare bones of your weekly project status report email, so you only have to fill in the progress

Messages you send often, e.g. invites to project steering group meetings

3. Use checklists

Create checklists for tasks that you do frequently, like hosting Project Board meetings or project kick-off. Checklists are in use in hospitals and airlines because they help people remember everything they need to do and ensure nothing gets forgotten.

Pick a few tasks that you struggle with (for me it’s creating POs and then paying the invoices) and create a checklist so you don’t have to think about it anymore. Read next: How to create a checklist in 3 easy steps.

I also have checklists as a reminder for the daily, weekly, monthly and annual project management tasks I should be doing. That way, at least I always have the basic communication points covered. Get the checklists I use here.

4. Stand up during phone calls

Have you tried this? It really works! Stand up during your phone calls and you’ll get through the conversation faster.

5. Book meetings now

Get all your face-to-face meetings in the diary now for the year. Stakeholders are busy people, so block out their time for your project today.

Read next: Overcoming poor communication in project management by dealing with interference

6. Create a communications asset register

A communications asset register is a list of all the communications assets that you have created for the project. That includes:

Newsletters

Press releases

Internal magazine articles

Posters

Flyers and leaflets

Photos

Use the register to note the type of asset, when it was created, where it is stored and how it could be reused. Then you’ll always be able to find that elusive picture when you need it!

The register could be as simple as a shared folder on a network or Google Drive where you drop all the comms items created by the team. Or you could create a list in a spreadsheet or Airtable to help you locate them next time.

7. Write thank yous

Even if it’s just the tag on a gift, a handwritten personal thank you is a powerful message of appreciation. I got some lovely notecards from Etsy that I use to thank key stakeholders. I also got personalised holiday cards made from Moo, and postcards too, which I use if I just want to write a quick note to someone.

8. Use a meeting planner

I use the TimeAndDate.com meeting planner. This is one of my top project team communication tips. If you work with international teams then this is invaluable: you absolutely need to know what time it is where they are.

Think about your international colleagues and how you are going to work effectively with them as you put together your project communication plan. Try to switch your meetings around from time to time so one team isn’t always the group coming in really early or staying really late.

9. Use email mailing lists

Set up distribution lists for your project. Create one for project team members, one for Project Board members and one for any other contacts. My mailing lists save me a lot of time trying to remember to include everyone. As project management communication tips go, this one is golden!

Communication tip for email mailing lists

Make sure there is more than one person on the team who has access to edit the email list. I’ve had issues on projects before when I needed to get the list changed and the owner was out of the business.

It’s also a good idea to get a BAU owner to take responsibility for the email list. On one project, I was still approving people to join the list six months after the project had closed because there was no one else to take over the management of the list! Of course, there was, really, but they weren’t aware they had to. It was a project handover task that had been overlooked.

10. Create a communications calendar

When you are thinking about how to improve communication in the project, one of the key things to do is make a plan for it. Plot out your project communications for the year. When are you doing a newsletter? When are you having a briefing conference call? What are the deadlines for your staff magazine?

If I don’t do this I end up not having enough time to do the communications to a suitable standard (and therefore risk being late or working late). A calendar means I can add the dates to my project plan or diary and never miss a deadline. There’s a communications calendar inside my project workbook spreadsheet.

11. Book meetings with yourself

This isn’t so much a project communication tip as a way to ensure you do project communications by making time for them. Diary your repeating events like reporting deadlines. Block time out in your calendar to do these tasks or you’ll find yourself sucked into the daily To Do list and squeezing your reporting into half an hour on Friday afternoon.

12. Automate reports

What reporting can you automate? I don’t use any systems that enable automated project reporting, but if you do have project management software that automatically creates dashboards or can produce bespoke reports at a single click, then make sure you know how to use it.

13. Piggyback on others

Time your project communications around what other people are doing. This is one of the project management communication strategies I recommend often, especially to programme managers!

Talk to your colleagues about what they are sending out to department heads, key stakeholders and workstream leads. No one works in a vacuum. I bet that the subject matter experts doing stuff for you are also working on other projects. Plus, there are normal BAU standard communications that go out regularly.

Tag on to another department’s newsletter and use other teams to get your message out where it makes sense to do so. Find the person who coordinates a broadcast message and work with them to slot your project request, update or whatever into the comms they are already sending.

It’s win-win! They get to have extra content in their communication (and in my experience, the people who write internal newsletters love not having to put it all together themselves) and you get your message out without having to create a standalone comms.

14. Update your voicemail

I don’t do this enough. It’s something I need to work on this year. I used to update my voicemail message daily in one of my previous jobs, but today I only change it when I’m on leave for more than a day.

It is a good idea to update your voicemail message. It is so easy to do and it gives a very professional impression. Change it as often as sensible, for example to let callers know that you are out all day at a workshop or that you are on vacation. Just remember to change it back!

15. Get some apps

Install some apps to help you be more productive while you are out and about. Make sure your team can get in contact with you, so use tools that they use as well.

There are plenty of collaboration tools to try. Download a few and see if they work for you and your team. If they don’t make you more productive, uninstall them and try something else. Unfortunately, it’s all about trial and error. What works for one project manager won’t work for another.

Bonus Tip: Use video

It works! Use video to communicate short messages. It’s much more personal and you’ll stand out, because I bet other project managers aren’t doing it. Read my case study here.

How to improve communication in project management

These 15 tips will help you improve the way you communicate with your team. As a project manager, you should constantly be looking for different ways to track what you do, and make changes to how you communicate.

Keep trying different things until you find tricks, hacks and techniques that work for your team. Then keep them under review! What works today might not be so good in three months.

Keep track of your project communication with my project workbook, the simplest and easiest way to keep control of all the things that are moving on your project. Get your copy here.

from WordPress https://ift.tt/2ulWfO4

0 notes

Text

How We Converted a Static Site to WordPress

Many of us who started out on the Web early are likely to have a hardcoded custom website.

That’s so 1999! On EasyWP we started quick and clean with a plain HTML landing page as well. Once you decide to create content more often this becomes a problem. Updating or adding pages to a static site is a nuisance at best.

In the worst case, it prevents you from publishing at all. Also, as a brand that specializes in WordPress, we wanted to have a proof of concept WP site that shows a better way of doing things.

Key Benefits of Having a WordPress Site

Do you already use WordPress? Then you know how simple self-controlled online publishing becomes with it. Sure, there may be third-party services that are even easier to use, but in exchange they own your content.

For those who do not yet use WordPress as a content management system for their site… You’re probably thinking: “Why bother? The static site works fine. Why fix something that’s not broken?”

Here are some of the advantages in comparison to static sites:

Ease of setup – no endless coding with ready-made themes. Changing the actual design of a static site is often quite tedious and requires lots of coding. On WordPress it’s straightforward.

Very simple to add new content – adding a new piece of content also requires HTML skills when you use a static site. A CMS like WordPress only offers code view as an advanced option. You don’t really need it.

Quick design changes – for both the overall website and individual elements, you can move widgets (like a list of the latest comments, for example) on WordPress with ease. A static website requires steps similar to a redesign.

One-click advanced features – you can add many features by merely searching for them in a huge library of plugins. A static website requires custom programming to enable that.

Automatic upload to the server – no FTP tools needed. In the past, you had to upload images and HTML pages manually using the file transfer protocol. WordPress does that in the background while you stay in the browser (Chrome, Firefox, Opera).

User and contributor management – it’s a breeze when using the most popular CMS. It’s almost impossible on a static site. You need to give FTP access rights etc.

Use case changes are no problem – you want to turn your site into an online store? No problem with WordPress. A static site has to be completely remade from the ground up.

Great overview of your content assets – you can see how many articles you have on your site, how many comments and how many images. You can also quickly search for them.

In essence, a static site is very limited in scope and hard to update or change. Even as a web developer you need a significant amount of time to implement basic changes or to add new content.

WordPress simplifies all the common content and website creation tasks, as well as optimization tasks. You can solve problems quickly and for free, and you don’t need the budget to cover a whole team of experts.

Upcycling Existing Design and Code

Long story short, it was about time to create a proper website with a WordPress backend, and a custom theme on the way too. John, our lead front-end developer, approached the task enthusiastically. Here’s how it went. (Spoiler Alert: it worked!)

We wanted to keep our existing design and the look and feel instead of creating a new one. We also didn’t want to code the site again from scratch.

Ideally, we would be able to copy and paste the site into WordPress. Of course, in reality that’s a much more complex process. So, let’s take a look at the nitty-gritty.

First of all, the goal was to reuse the current HTML/LESS code written for Laravel, one of the most popular PHP frameworks. The current frontend module used to display the homepage was to stay intact. In other words, rewriting things was not an option.

Creating a Custom WordPress Theme – Where to Start?

Building an HTML page is pretty easy once you know how the basic markup language works. Creating a site in WordPress requires more than just plain HTML and some styles, though. You need to make a template or theme in WordPress lingo.

The first step was to create the skeleton for a blank theme. In order not to start from scratch, the web dev decided to use the Underscores (_s) theme boilerplate. It’s a blank starter theme, pretty similar in structure to Twenty Nineteen – the standard WordPress theme shipped by default with WordPress 5+.
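To make "skeleton for a blank theme" concrete, here is a minimal sketch in Python of the files a classic WordPress theme starts from. It is not what Underscores generates (that boilerplate ships many more templates); the function names, the slug and the file contents are assumptions for illustration. The only facts relied on are that a classic theme needs a style.css with a "Theme Name" header and an index.php, and that functions.php is the conventional place for setup code.

```python
from pathlib import Path


def theme_files(theme_name: str) -> dict[str, str]:
    """Return the smallest set of files for a classic theme, keyed by filename."""
    return {
        # WordPress reads the theme's name from this header comment.
        "style.css": f"/*\nTheme Name: {theme_name}\n*/\n",
        # Fallback template used when no more specific template exists.
        "index.php": "<?php\n// Fallback template: output the page markup here.\n",
        # Optional, but the usual home for scripts, styles and theme features.
        "functions.php": "<?php\n// Register scripts, styles and theme features here.\n",
    }


def write_theme(themes_dir: Path, slug: str, theme_name: str) -> Path:
    """Write the skeleton into themes_dir/slug and return that directory."""
    theme_dir = themes_dir / slug
    theme_dir.mkdir(parents=True, exist_ok=True)
    for filename, content in theme_files(theme_name).items():
        (theme_dir / filename).write_text(content, encoding="utf-8")
    return theme_dir
```

Calling write_theme(Path("wp-content/themes"), "easywp-demo", "EasyWP Demo") would create the three files under a hypothetical easywp-demo folder. A real project would start from the boilerplate instead and delete what it does not need.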

In order to reuse our styles and scripts, it seemed that we’d need some extra components to be able to compile the LESS and the ReactJS files. After several tests, we were able to take the same packages used for the current homepage version. Hooray!

The next question was how to make the current content of the Homepage editable. Understandably, the developer wanted to reuse the same template that was already working in Laravel.

Does Gutenberg Slow Down or Speed up the Process?

The answer is… it depends. For this project, we wanted to use the new Gutenberg block feature to make it compatible with the new WordPress editor. Thus, the goals were:

make the content editable by using the new WordPress editor

reuse the so-called blade/html template written in Laravel

After investigating the new Gutenberg editor: how it works when it comes to editing a page, the block concepts etc., it became apparent that writing a custom Gutenberg block is pretty tedious.

Instead, you can use a plugin called Block Lab, which provides a way to create one or more custom blocks connected to a PHP template.

That was pretty cool because we were able to make the page editable (ok, partially editable actually) and John managed to reuse his previous HTML template.
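The idea of keeping the existing markup and exposing only a few fields to the editor can be sketched as follows. This is a language-neutral illustration in Python, not Block Lab’s API and not the PHP template John reused. The markup, the field names `headline` and `intro`, and the function name are all hypothetical.

```python
from html import escape
from string import Template

# Stand-in for the existing homepage markup; only the $placeholders are editable.
HERO_TEMPLATE = Template(
    '<section class="hero">\n'
    "  <h1>$headline</h1>\n"
    "  <p>$intro</p>\n"
    "</section>"
)


def render_block(fields: dict[str, str]) -> str:
    """Fill the fixed template with the values an editor typed into the block."""
    # Escape editor input so it cannot inject markup into the page.
    safe = {name: escape(value) for name, value in fields.items()}
    # safe_substitute leaves a placeholder untouched if its field is missing.
    return HERO_TEMPLATE.safe_substitute(safe)
```

The design point is the split of responsibilities: layout, classes and alignment stay in the template under the developer’s control, while the editor only supplies text. That matches the "partially editable" result described above.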

As you may imagine, we’re not simply looking for a static homepage. We also had to consider:

background images

custom alignments

“float” text

dynamic content

user interaction

Would we be able to translate all that into WordPress? It’s not a simple task, but even if we weren’t able to modify everything from the backend, we could still edit the content. Crucial page elements such as headlines and paragraphs, among others, are already editable. John was optimistic that we would be able to improve the editing in a second step.

The next challenge was how to get the dynamic data related to the product list. Fortunately, we were able to get text from the JSON list directly from the old version by using a Request API.

In effect, this enabled us to get the product list from the EasyWP Dashboard. Thus John successfully recreated the whole Homepage inside WordPress.
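As a rough sketch of that last step, the snippet below fetches a JSON product list over HTTP and turns it into an HTML list. The article does not give the endpoint or the shape of the data, so the URL, the `name` and `price` keys, and the function names are assumptions. The real theme would do this in PHP or JavaScript rather than Python.

```python
import json
from html import escape
from urllib.request import urlopen

# Hypothetical endpoint; the article does not name the real URL.
PRODUCTS_URL = "https://example.com/api/products.json"


def parse_products(payload: str) -> list[dict]:
    """Decode a JSON array of products, keeping only entries that have a name."""
    data = json.loads(payload)
    return [item for item in data if isinstance(item, dict) and item.get("name")]


def fetch_products(url: str = PRODUCTS_URL, timeout: float = 5.0) -> list[dict]:
    """Download the product list and decode it."""
    with urlopen(url, timeout=timeout) as response:
        return parse_products(response.read().decode("utf-8"))


def render_product_list(products: list[dict]) -> str:
    """Render products as an HTML list, escaping text from the remote source."""
    items = "".join(
        f"<li>{escape(str(p['name']))}: {escape(str(p.get('price', '')))}</li>"
        for p in products
    )
    return f"<ul>{items}</ul>"
```

Keeping parsing and rendering separate from the network call means the page logic can be checked against a saved JSON sample without hitting the live dashboard.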

So What Did We Learn?

Moving from a static one-page site (or rather a landing page) to a fully-fledged custom WordPress theme—one that was based on our own branding guidelines—definitely presented some challenges.

Overall, however, it showed that you can change the WordPress behavior and the theme structure with ease. Once you know how it’s done, the actual work is less complicated than you might first expect.

We always try to get new experiences when we develop something. More specifically, we always seek to create something reusable. Ideally, other people will be able to use our tools for their websites too. This way we can give back to the WordPress community and the open source community in general.

Giving Back to the WordPress Community

In this case, John has created an open source project—a framework which can be used to create a WordPress theme from scratch. It’s still a rough sketch but it’s the same one we actually used successfully in the current EasyWP theme.

The theme framework is called WP Spock and is licensed under the GPL 3. You can use it and modify or contribute in other ways. So join in the fun! You can also simply download WP Scotty. It’s a ready-made boilerplate theme.

Thus this project has been a twofold success. Not only for us—achieving our objective of moving a site from static HTML to WordPress. But we were also able to make the process and tools available for the broader public. It seems we can be satisfied with the end result!

Check out the result of our—mainly John’s—work: the actual WordPress-based EasyWP site. Do you notice the difference? Probably yes: it’s faster obviously!

from https://www.iwrahost.com/how-we-converted-a-static-site-to-wordpress/

from https://iwrahost1.tumblr.com/post/183562569698

0 notes

Text

How We Converted a Static Site to WordPress

Many of us who started out on the Web early are likely to have a hardcoded custom website.

That’s so 1999! On EasyWP we started quick and clean with a plain HTML landing page as well. Once you decide to create content more often this becomes a problem. Updating or adding pages to a static site is a nuisance at best.

In the worst case, it prevents you from publishing at all. Also, as a brand that specializes in WordPress, we wanted to have a proof of concept WP site that shows a better way of doing things.

Key Benefits of Having a WordPress Site

Do you already use WordPress? Then you know how simple self-controlled online publishing becomes with it. Sure, there may be third-party services that are even easier to use, but in exchange they own your content.

For those who do not yet use WordPress as a content management system for their site… You’re probably thinking: “Why bother? The static site works fine. Why fix something that’s not broken?”

Here are some of the advantages in comparison to static sites:

Ease of setup – no endless coding with ready-made themes. Changing the actual design of a static site is often quite tedious and requires lots of coding. On WordPress it’s straightforward.

Very simple to add new content – adding a new piece of content also requires HTML skills when you use a static site. A CMS like WordPress only offers code view as an advanced option. You don’t really need it.

Quick design changes – for both the overall site website and individual elements, you can move widgets (like a list of the latest comments for example) on WordPress with ease. A static website requires steps similar to a redesign.

One-click advanced features – you can add many features by merely searching for them in a huge library of plugins. A static website requires custom programming to enable that.

Automatic upload to the server – no FTP tools needed. In the past, you had to upload images and HTML pages manually using the file transfer protocol. WordPress does that in the background while you stay in the browser (Chrome, Firefox, Opera).

User and contributor management – it’s a breeze when using the most popular CMS. It’s almost impossible on a static site. You need to give FTP access rights etc.

Use case changes are no problem – you want to turn your site into an online store? No problem with WordPress. A static site has to be completely remade from the ground up.

Great overview of your content assets – you can see how many articles you have on your site, how many comments and how many images. You can also quickly search for them.

In essence, a static site is very limited in scope and hard to update or change. Even as a web developer you need a significant amount of time to implement basic changes or to add new content.

WordPress simplifies all the common content and website creation, as well as optimization tasks. You can solve problems quickly and for free, and not need the budget to cover a whole team of experts.

Upcycling Existing Design and Code from web hosting

Long story short—it was about time to create a proper website with a WordPress backend, and a custom theme on the way too. John, our lead front-end developer approached the task enthusiastically. Here’s how it went. (Spoiler Alert: it worked!)

We wanted to keep our existing design and the look and feel instead of creating a new one. We also didn’t want to code the site again from scratch.

Ideally, we would be able to copy and paste the site into WordPress. Of course, in reality that’s a much more complex process. So, let’s take a look at the nitty-gritty.

First of all, the goal was to reuse the current HTML/LESS code written for Laravel—one of the most popular PHP frameworks. The current frontend module used to display the homepage was to stay intact. In other words, rewriting things was an option.

Creating a Custom WordPress Theme – Where to Start? do you own reseller hosting ?

Building an HTML page is pretty easy once you know how the basic markup language works. Creating a site in WordPress requires more than just plain HTML and some styles, though. You need to make a template or theme in WordPress lingo.

The first step was to create the skeleton for a blank theme. In order not to start from scratch, the web dev decided to use the underscore theme boilerplate. It’s a blank theme and pretty similar to Twenty Nineteen – the standard WordPress theme shipped by default with WordPress 5+.

In order to reuse our styles and scripts, it seemed that we’d need some extra components to be able to compile the LESS and the ReactJS files. After several tests, we were able to take the same packages used for the current homepage version. Hooray!

The next question was how to make the current content of the Homepage editable. Understandably, the developer wanted to reuse the same template that was already working in Laravel.

Does Gutenberg Slow Down or Speed up the Process?

This answer is… it depends. For this project, we wanted to use the new Gutenberg block feature to make it compatible with the new WordPress editor. Thus, the goals were:

make the content editable by using the new WordPress editor

reuse the so-called blade/html template written in Laravel

After investigating the new Gutenberg editor: how it works when it comes to editing a page, the block concepts etc., it became apparent that writing a custom Gutenberg block is pretty tedious.

Instead, you can use a plugin called Block Lab, which provides a way to create one or more custom blocks connected to a PHP template.

That was pretty cool because we were able to make the page editable (ok, partially editable actually) and John managed to reuse his previous HTML template.

As you may imagine, we’re not simply looking for a static homepage. We also had to consider:

background images

custom alignments

“float” text

dynamic content

user interaction

Would we be able to translate all that into WordPress? It’s not a simple task, but even if we weren’t able to modify everything from the backend, we could still edit the content. Crucial page elements such as headlines and paragraphs among others are already editable. John was optimistic that we would able to improve the editing in a second step.

The next challenge was how to get the dynamic data related to the product list. Fortunately, we were able to get text from the JSON list directly from the old version by using a Request API.

In effect, this enabled us to get the product list from the EasyWP Dashboard. Thus John successfully recreated the whole Homepage inside WordPress.

So What Did We Learn?

Moving from a static one-page site (or rather a landing page) to a fully-fledged custom WordPress theme—one that was based on our own branding guidelines—definitely presented some challenges.

Overall, however, it showed that you can change the WordPress behavior and the theme structure with ease. Once you know how it’s done, the actual work is less complicated than you might first expect.

We always try to learn something new when we develop something. More specifically, we always seek to create something reusable. Ideally, other people will be able to use our tools for their websites too. This way we can give back to the WordPress community and the open source community in general.

Giving Back to the WordPress Community

In this case, John has created an open source project—a framework which can be used to create a WordPress theme from scratch. It’s still a rough sketch but it’s the same one we actually used successfully in the current EasyWP theme.

The theme framework is called WP Spock and is licensed under the GPL 3. You can use it and modify or contribute in other ways. So join in the fun! You can also simply download WP Scotty. It’s a ready-made boilerplate theme.

Thus this project has been a twofold success: we achieved our objective of moving a site from static HTML to WordPress, and we were also able to make the process and tools available to the broader public. It seems we can be satisfied with the end result!

Check out the result of our—mainly John’s—work: the actual WordPress-based EasyWP site. Do you notice the difference? Probably yes: it’s faster obviously!

from https://www.iwrahost.com/how-we-converted-a-static-site-to-wordpress/

Text

Your Prescription for Content: Healthcare and Digital Asset Management

You wake up one morning with a mind-numbing headache. Or maybe it’s sudden ear pain or a mole you never noticed.

Before you grab a pain reliever, you might head to your laptop and do a quick online search of your symptoms and pinpoint one of a dozen conditions it could possibly be.

It turns out you’re not the only medical Googler in America. More people are searching for health information online, with some even using the Internet to self-diagnose. Searching for health information is the third most common online activity and four out of 10 Americans do it, according to the Pew Research Center.

As more people turn to the Internet to learn about their health, it’s more important than ever for trusted sources to provide this information.

Today’s healthcare organizations need to create more and better content, and this content needs to be designed for reuse across channels — sometimes with localization and customization for specific audiences and platforms. The right system — such as digital asset management tools — can help healthcare organizations quickly and efficiently create and manage large volumes of content, so they can get relevant information to the right people, at the right time, in the right way, or via the right device. The key is having your content where your customers are, when they are there. A digital asset management system not only can help these organizations streamline content creation, it’s a valuable tool that allows healthcare organizations to achieve something even more important — loyalty and trust with their target audience and improved consumer health education.

Healthcare’s big content play.

Digital is forcing more industries to focus on customer experience. Healthcare is no different. Not only are consumers searching online for health information, they’re using their smartphones and computers to manage every part of their lives, from everyday tasks like grocery shopping and vacation planning to booking doctor’s appointments. Consumers now expect convenience and value at every touchpoint, and as they increasingly turn online to make their healthcare decisions, producing informative and useful content is a key way healthcare brands can engage them.

Some healthcare organizations already realize this. According to research conducted by Adobe in 2017, 29 percent of healthcare organizations plan to prioritize content marketing in the next few years.

“Content is gradually earning its place in healthcare campaigns, and the next few years will see content creation and storytelling emerging as prominent, very in-demand skills,” according to Econsultancy.

However, the healthcare sector faces unique challenges in integrating content into its marketing efforts. The industry is highly regulated, so gathering the data necessary to tailor content to specific audiences requires these consumers to opt in or to authenticate their identity. Consumers also have a wide range of medical concerns and medical histories, so it isn’t always easy to segment audiences in healthcare in the same way a retail brand can.

But an even greater challenge may be how healthcare companies structure their content organizations and content management process.

“With some companies, it’s a manual or a completely disjointed digital method,” says Tom Swanson, head of Healthcare Industry Strategy at Adobe.

Tom says different business units may have their own siloed collection of assets and their own digital asset management systems, none of which speak to each other. This hinders efficiency, but it also creates an even bigger problem with revision control.

“If you have the same piece of content existing in multiple locations, if for some reason that content needs to be pulled or changed, it’s a very manual process of identifying where all of those different versions are housed and making sure that the revised version replaced the old version. In healthcare, that can literally be a life or death situation,” Tom says.

The benefits of digital asset management.

Having a centralized digital asset management system within a healthcare organization can address this problem and make the content production process much more efficient.

Healthcare organizations create a lot of content and often have distributed workforces across multiple offices and time zones, so they need a robust tool to help them execute their content strategy and deliver effective communications.

A digital asset management system offers multiple capabilities to streamline content production. It safely and securely stores all the content assets (including text articles, photos, audio files, video clips, animations, banner ads, and brochures) and supporting materials an organization needs to deliver health information to its audience. It also lets content creators design their workflows and easily take a piece of content from concept to review and approval. Everything is tagged, properly categorized, and organized into a hierarchy with consistent taxonomies, so companies can easily find and reuse content in different ways, tailor it to various channels and audiences, and review performance data to optimize their campaigns.

Tom says that even with all the benefits a digital asset management system offers, it’s also important for healthcare organizations to ensure the system they’re using is HIPAA compliant (if required) and features tools that provide a clear audit trail for regulatory agencies like the FDA.

“If you have centralized management, you are mitigating the risk of version control. You are also mitigating the resources required to monitor this content,” he says. “You’re also reducing the amount of content that needs to be approved by your legal and regulatory bodies and you’re increasing reuse. All of this drives down costs in multiple areas.”

Some healthcare organizations are already seeing these benefits. Alere, which manufactures point-of-care diagnostic tests, used a digital asset management system to centralize its global content creation. The company delivers content to 29 countries in 15 languages, so it used the DAM to ensure brand consistency and design, while allowing content authors to customize each asset for a local audience. Using a DAM cut down the time Alere’s content authors spent creating new content, reducing asset duplication by 53 percent across the company’s 2,000 web pages. The tool also helped Alere create a self-service asset library that reduced asset requests by 80 percent, which is remarkable considering Alere’s creative services team handled 70 such requests each week.

Covidien, a leading manufacturer of medical devices and supplies, also relied on a DAM system to improve the content production process. The company had to deliver multiple messages to different audiences, including employees, sellers, patients, and providers across different platforms and in different languages. Covidien faced risks with waste and inefficiencies, version control, legal and regulatory compliance, and ensuring that the wrong audience didn’t receive the wrong message. A DAM helped the company mitigate these challenges by enabling dynamic content authoring and regional approval workflows; by simplifying the creation of seller collateral, presentations, and e-learning content; and by creating a robust content stream to the company’s CRM and automated marketing tools — all within a secure, analytics-driven, cloud-based system.

The approach led to significant cost savings and reduced risk for Covidien — it created a single FDA-validated system for content creation, approval, distribution, and revision control; improved speed to market by 124 percent and content reuse by 200 percent; and led to $1 million in printing and warehousing savings, $1.2 million in translation savings, and $1.8 million in savings from creative redundancies.

“Centralizing content management provides the benefits of having a single source of truth for content,” Tom says.
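The "single source of truth" idea can be made concrete with a small sketch. It is purely illustrative and does not describe how any particular DAM product works: the `AssetLibrary` class, its method names, and the sample asset are all hypothetical. The point is that channels hold a reference to an asset rather than their own copy, so one revision reaches every placement and the earlier versions stay on record.

```python
from dataclasses import dataclass, field


@dataclass
class Asset:
    asset_id: str
    body: str
    version: int = 1
    # Earlier revisions as (version, body) pairs, kept as a simple audit trail.
    history: list = field(default_factory=list)


class AssetLibrary:
    """Hypothetical central store: channels reference assets by ID, never by copy."""

    def __init__(self) -> None:
        self._assets: dict[str, Asset] = {}
        self._placements: dict[str, list[str]] = {}  # channel -> asset IDs

    def add(self, asset_id: str, body: str) -> None:
        self._assets[asset_id] = Asset(asset_id, body)

    def place(self, channel: str, asset_id: str) -> None:
        if asset_id not in self._assets:
            raise KeyError(f"unknown asset: {asset_id}")
        self._placements.setdefault(channel, []).append(asset_id)

    def revise(self, asset_id: str, new_body: str) -> None:
        asset = self._assets[asset_id]
        asset.history.append((asset.version, asset.body))
        asset.body = new_body
        asset.version += 1

    def render(self, channel: str) -> list[str]:
        # Always resolves to the current revision of each placed asset.
        return [self._assets[a].body for a in self._placements.get(channel, [])]

    def history_of(self, asset_id: str) -> list:
        return list(self._assets[asset_id].history)


library = AssetLibrary()
library.add("usage-note", "Original wording")
library.place("web", "usage-note")
library.place("email", "usage-note")
library.revise("usage-note", "Corrected wording")
```

After the single `revise` call, both the `web` and `email` channels render the corrected wording, which is the opposite of the manual hunt for stray copies described earlier.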

As more consumers head online for health information, providing truthful and accurate information will be the most effective way for healthcare companies to differentiate themselves in the marketplace and build trust with their audience. But trying to manage the content process without a centralized system opens the door to all kinds of risks, waste, and inefficiencies that healthcare organizations can’t afford. A basic CMS doesn’t give these organizations the capabilities they need to produce, publish, and distribute large volumes of content, and it certainly doesn’t provide the version control and audit trail necessary for legal and regulatory compliance. But a digital asset management system does. It helps healthcare organizations deliver the right message to the right audience, whether that’s a new patient, a healthcare provider, or your average medical Googler.

Read more about digital asset management and healthcare by exploring Adobe’s healthcare content.

The post Your Prescription for Content: Healthcare and Digital Asset Management appeared first on Digital Marketing Blog by Adobe.

from Digital Marketing Blog by Adobe https://blogs.adobe.com/digitalmarketing/customer-experience/prescription-content-healthcare-digital-asset-management/
