#flight prices scraping services


Flight Price Monitoring Services | Scrape Airline Data

We provide flight price monitoring services in the USA, UK, Singapore, Italy, Canada, Spain, and Australia, and we extract or scrape airline data from online airline and flight websites and mobile apps such as Booking, Kayak, agoda.com, MakeMyTrip, Tripadvisor, and others.

#flight Price Monitoring#Scrape Airline Data#Airfare Data Extraction Service#flight prices scraping services#Flight Price Monitoring API#web scraping services


Gig apps trap reverse centaurs in Skinner boxes

Enshittification is the process by which digital platforms devour themselves: first they dangle goodies in front of end users. Once users are locked in, the goodies are taken away and dangled before business customers who supply goods to the users. Once those business customers are stuck on the platform, the goodies are clawed away and showered on the platform’s shareholders:

https://pluralistic.net/2023/01/21/potemkin-ai/#hey-guys

If you’d like an essay-formatted version of this post to read or share, here’s a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/04/12/algorithmic-wage-discrimination/#fishers-of-men

Enshittification isn’t just another way of saying “fraud” or “price gouging” or “wage theft.” Enshittification is intrinsically digital, because moving all those goodies around requires the flexibility that only comes with a digital business. Jeff Bezos, grocer, can’t rapidly change the price of eggs at Whole Foods without an army of kids with pricing guns on roller-skates. Jeff Bezos, grocer, can change the price of eggs on Amazon Fresh just by twiddling a knob on the service’s back-end.

Twiddling is the key to enshittification: rapidly adjusting prices, conditions and offers. As with any shell game, the quickness of the hand deceives the eye. Tech monopolists aren’t smarter than the Gilded Age sociopaths who monopolized rail or coal — they use the same tricks as those monsters of history, but they do them faster and with computers:

https://doctorow.medium.com/twiddler-1b5c9690cce6

If Rockefeller wanted to crush a freight company, he couldn’t just click a mouse and lay down a pipeline that ran on the same route, and then click another mouse to make it go away when he was done. When Bezos wanted to bankrupt Diapers.com, a company that refused to sell itself to Amazon, he just moved a slider so that diapers on Amazon were being sold below cost. Amazon lost $100m over three months, Diapers.com went bankrupt, and every investor learned that competing with Amazon was a losing bet:

https://slate.com/technology/2013/10/amazon-book-how-jeff-bezos-went-thermonuclear-on-diapers-com.html

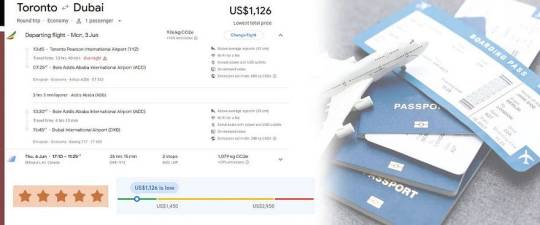

That’s the power of twiddling — but twiddling cuts both ways. The same flexibility that digital businesses enjoy is hypothetically available to workers and users. The airlines pioneered twiddling ticket prices, and that naturally gave rise to countertwiddling, in the form of comparison shopping sites that scraped the airlines’ sites to predict when tickets would be cheapest:

https://pluralistic.net/2023/02/27/knob-jockeys/#bros-be-twiddlin

The airlines — like all abusive businesses — refused to tolerate this. They were allowed to touch their knobs as much as they wanted — indeed, they couldn’t stop touching those knobs — but when we tried to twiddle back, that was “felony contempt of business model,” and the airlines sued:

https://www.cnbc.com/2014/12/30/airline-sues-man-for-founding-a-cheap-flights-website.html

And sued:

https://www.nytimes.com/2018/01/06/business/southwest-airlines-lawsuit-prices.html

Platforms don’t just hate it when end-users twiddle back — if anything they are even more aggressive when their business-users dare to twiddle. Take Para, an app that Doordash drivers used to get a peek at the wages offered for jobs before they accepted them — something that Doordash hid from its workers. Doordash ruthlessly attacked Para, saying that by letting drivers know how much they’d earn before they did the work, Para was violating the law:

https://www.eff.org/deeplinks/2021/08/tech-rights-are-workers-rights-doordash-edition

Which law? Well, take your pick. The modern meaning of “IP” is “any law that lets me use the law to control my competitors, competition or customers.” Platforms use a mix of anticircumvention law, patent, copyright, contract, cybersecurity and other legal systems to weave together a thicket of rules that allow them to shut down rivals for their Felony Contempt of Business Model:

https://locusmag.com/2020/09/cory-doctorow-ip/

Enshittification relies on unlimited twiddling (by platforms), and a general prohibition on countertwiddling (by platform users). Enshittification is a form of fishing, in which bait is dangled before different groups of users and then nimbly withdrawn when they lunge for it. Twiddling puts the suppleness into the enshittifier’s fishing-rod, and a ban on countertwiddling weighs down platform users so they’re always a bit too slow to catch the bait.

Nowhere do we see twiddling’s impact more than in the “gig economy,” where workers are misclassified as independent contractors and put to work for an app that scripts their every move to the finest degree. When an app is your boss, you work for an employer who docks your pay for violating rules that you aren’t allowed to know — and where your attempts to learn those rules are constantly frustrated by the endless back-end twiddling that changes the rules faster than you can learn them.

As with every question of technology, the issue isn’t twiddling per se — it’s who does the twiddling and who gets twiddled. A worker armed with digital tools can play gig work employers off each other and force them to bid up the price of their labor; they can form co-ops with other workers that auto-refuse jobs that don’t pay enough, and use digital tools to organize to shift power from bosses to workers:

https://pluralistic.net/2022/12/02/not-what-it-does/#who-it-does-it-to

Take “reverse centaurs.” In AI research, a “centaur” is a human assisted by a machine that does more than either could do on their own. For example, a chess master and a chess program can play a better game together than either could play separately. A reverse centaur is a machine assisted by a human, where the machine is in charge and the human is a meat-puppet.

Think of Amazon warehouse workers wearing haptic location-aware wristbands that buzz at them continuously dictating where their hands must be; or Amazon drivers whose eye-movements are continuously tracked in order to penalize drivers who look in the “wrong” direction:

https://pluralistic.net/2021/02/17/reverse-centaur/#reverse-centaur

The difference between a centaur and a reverse centaur is the difference between a machine that makes your life better and a machine that makes your life worse so that your boss gets richer. Reverse centaurism is the 21st Century’s answer to Taylorism, the pseudoscience that saw white-coated “experts” subject workers to humiliating choreography down to the smallest movement of your fingertip:

https://pluralistic.net/2022/08/21/great-taylors-ghost/#solidarity-or-bust

While reverse centaurism was born in warehouses and other company-owned facilities, gig work let it make the leap into workers’ homes and cars. The 21st century has seen a return to the cottage industry — a form of production that once saw workers labor far from their bosses and thus beyond their control — but shriven of the autonomy and dignity that working from home once afforded:

https://doctorow.medium.com/gig-work-is-the-opposite-of-steampunk-463e2730ef0d

The rise and rise of bossware — which allows for remote surveillance of workers in their homes and cars — has turned “work from home” into “live at work.” Reverse centaurs can now be chickenized — a term from labor economics that describes how poultry farmers, who sell their birds to one of three vast poultry processors who have divided up the country like the Pope dividing up the “New World,” are uniquely exploited:

https://onezero.medium.com/revenge-of-the-chickenized-reverse-centaurs-b2e8d5cda826

A chickenized reverse centaur has it rough: they must pay for the machines they use to make money for their bosses, they must obey the orders of the app that controls their work, and they are denied any of the protections that a traditional worker might enjoy, even as they are prohibited from deploying digital self-help measures that let them twiddle back to bargain for a better wage.

All of this sets the stage for a phenomenon called algorithmic wage discrimination, in which two workers doing the same job under the same conditions will see radically different payouts for that work. These payouts are continuously tweaked in the background by an algorithm that tries to predict the minimum sum a worker will accept to remain available without payment, to ensure sufficient workers to pick up jobs as they arise.

This phenomenon, along with proposed policy and labor solutions to it, is expertly analyzed in “On Algorithmic Wage Discrimination,” a superb paper by UC Law San Francisco’s Veena Dubal:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4331080

Dubal uses empirical data and ethnographic accounts from Uber drivers and other gig workers to explain how endless, self-directed twiddling allows gig companies to pay workers less and pay themselves more. As Brian Merchant explains in his LA Times article on Dubal’s research, the goal of the payment algorithm is to guess how often a given driver needs to receive fair compensation in order to keep them driving when the payments are unfair:

https://www.latimes.com/business/technology/story/2023-04-11/algorithmic-wage-discrimination

The algorithm combines nonconsensual dossiers compiled on individual drivers with population-scale data to seek an equilibrium between keeping drivers waiting, unpaid, for a job; and how much a driver needs to be paid for an individual job, in order to keep that driver from clocking out and doing something else.


Here’s how that works. Sergio Avedian, a writer for The Rideshare Guy, ran an experiment with two brothers who both drove for Uber; one drove a Tesla and drove intermittently, the other brother rented a hybrid sedan and drove frequently. Sitting side-by-side with the brothers, Avedian showed how the brother with the Tesla was offered more for every trip:

https://www.youtube.com/watch?v=UADTiL3S67I

Uber wants to lure intermittent drivers into becoming frequent drivers. Uber doesn’t pay for an oversupply of drivers, because it only pays drivers when they have a passenger in the car. Having drivers on call — but idle — is a way for Uber to shift the cost of maintaining a capacity cushion to its workers.

What’s more, what Uber charges customers is not based on how much it pays its workers. As Uber’s head of product explained: Uber uses “machine-learning techniques to estimate how much groups of customers are willing to shell out for a ride. Uber calculates riders’ propensity for paying a higher price for a particular route at a certain time of day. For instance, someone traveling from a wealthy neighborhood to another tony spot might be asked to pay more than another person heading to a poorer part of town, even if demand, traffic and distance are the same.”

https://qz.com/990131/uber-is-practicing-price-discrimination-economists-say-that-might-not-be-a-bad-thing/

Uber has historically described its business as a pure supply-and-demand matching system, where a rush of demand for rides triggers surge pricing, which lures out drivers, which takes care of the demand. That’s not how it works today, and it’s unclear if it ever worked that way. Today, a driver who consults the rider version of the Uber app before accepting a job (to compare how much the rider is paying to how much they stand to earn) is booted off the app and denied further journeys.

Surging, instead, has become just another way to twiddle drivers. One of Dubal’s subjects, Derrick, describes how Uber uses fake surges to lure drivers to airports: “You go to the airport, once the lot get kind of full, then the surge go away.” Other drivers describe how they use groupchats to call out fake surges: “I’m in the Marina. It’s dead. Fake surge.”

That’s pure twiddling. Twiddling turns gamification into gamblification, where your labor buys you a spin on a roulette wheel in a rigged casino. As a driver called Melissa, who had doubled down on her availability to earn a $100 bonus awarded for clocking a certain number of rides, told Dubal, “When you get close to the bonus, the rides start trickling in more slowly…. And it makes sense. It’s really the type of shit that they can do when it’s okay to have a surplus labor force that is just sitting there that they don’t have to pay for.”

Wherever you find reverse-centaurs, you get this kind of gamblification, where the rules are twiddled continuously to make sure that the house always wins. As one Amazon contract driver, a reverse centaur, told Lauren Gurley for Motherboard, “Amazon uses these cameras allegedly to make sure they have a safer driving workforce, but they’re actually using them not to pay delivery companies”:

https://www.vice.com/en/article/88npjv/amazons-ai-cameras-are-punishing-drivers-for-mistakes-they-didnt-make

Algorithmic wage discrimination is the robot overlord of our nightmares: its job is to relentlessly quest for vulnerabilities and exploit them. Drivers divide themselves into “ants” (drivers who take every job) and “pickers” (drivers who cherry-pick high-paying jobs). The algorithm’s job is ensuring that pickers get the plum assignments, not the ants, in the hopes of converting those pickers to app-dependent ants.

In my work on enshittification, I call this the “giant teddy bear” gambit. At every county fair, you’ll always spot some poor jerk carrying around a giant teddy-bear they “won” on the midway. But they didn’t win it, at least not by getting three balls in the peach-basket. Rather, the carny running the rigged game chose not to operate the “scissor” that kicks balls out of the basket. Or, if the game is “honest” (that is, merely impossible to win, rather than gimmicked), the operator will make a too-good-to-refuse offer: “Get one ball in and I’ll give you this keychain. Win two keychains and I’ll let you trade them for this giant teddy bear.”

Carnies aren’t in the business of giving away giant teddy bears — rather, the gambit is an investment. Giving a mark a giant teddy bear to carry around the midway all day acts as a convincer, luring other marks to try to land three balls in the basket and win their own teddy bear.

In the same way, platforms like Uber distribute giant teddy bears to pickers, as a way of keeping the ants scurrying from job to job, and as a way of convincing the pickers to give up whatever work allows them to discriminate among Uber’s offers and hold out for the plum deals, whereupon they can be transmogrified into ants themselves.

Dubal describes the experience of Adil, a Syrian refugee who drives for Uber in the Bay Area. His colleagues are pickers, and showed him screenshots of how much they earned. Determined to get a share of that money, Adil became a model ant, driving two hours to San Francisco, driving three days straight, napping in his car, spending only one day per week with his family. The algorithm noticed that Adil needed the work, so it paid him less.

Adil responded the way the system predicted he would, by driving even more: “My friends they make it, so I keep going, maybe I can figure it out. It’s unsecure, and I don’t know how people they do it. I don’t know how I am doing it, but I have to. I mean, I don’t find another option. In a minute, if I find something else, oh man, I will be out immediately. I am a very patient person, that’s why I can continue.”

Another driver, Diego, told Dubal about how the winners of the giant teddy bears fell into the trap of thinking that they were “good at the app”: “Any time there’s some big shot getting high pay outs, they always shame everyone else and say you don’t know how to use the app. I think there’s secret PR campaigns going on that gives targeted payouts to select workers, and they just think it’s all them.”

That’s the power of twiddling: by hoarding all the flexibility offered by digital tools, the management at platforms can become centaurs, able to string along thousands of workers, while the workers are reverse-centaurs, puppeteered by the apps.

As the example of Adil shows, the algorithm doesn’t need to be very sophisticated in order to figure out which workers it can underpay. The system automates the kind of racial and gender discrimination that is formally illegal, but which is masked by the smokescreen of digitization. An employer who systematically paid women less than men, or Black people less than white people, would be liable to criminal and civil sanctions. But if an algorithm simply notices that people who have fewer job prospects drive more and will thus accept lower wages, that’s just “optimization,” not racism or sexism.

This is the key to understanding the AI hype bubble: when ghouls from multinational banks predict 13 trillion dollar markets for “AI,” what they mean is that digital tools will speed up the twiddling and other wage-suppression techniques to transfer $13T in value from workers and consumers to shareholders.

The American business lobby is relentlessly focused on the goal of reducing wages. That’s the force behind “free trade,” “right to work,” and other codewords for “paying workers less,” including “gig work.” Tech workers long saw themselves as above this fray, immune to labor exploitation because they worked for a noble profession that took care of its own.

But the epidemic of mass tech-worker layoffs, following on the heels of massive stock buybacks, has demonstrated that tech bosses are just like any other boss: willing to pay as little as they can get away with, and no more. Tech bosses are so comfortable with their market dominance and the lock-in of their customers that they are happy to turn out hundreds of thousands of skilled workers, convinced that the twiddling systems they’ve built are the kinds of self-licking ice-cream cones that are so simple even a manager can use them — no morlocks required.

The tech worker layoffs are best understood as an all-out war on tech worker morale, because that morale is the source of tech workers’ confidence and thus their demands for a larger share of the value generated by their labor. The current tech layoff template is very different from previous tech layoffs: today’s layoffs are taking place over a period of months, long after they are announced, and a laid-off tech worker is likely to be offered months of paid post-layoff work, rather than severance. This means that tech workplaces are now haunted by the walking dead, workers who have been laid off but need to come into the office for months, even as the threat of layoffs looms over the heads of the workers who remain. As an old friend, recently laid off from Microsoft after decades of service, wrote to me, this is “a new arrow in the quiver of bringing tech workers to heel and ensuring that we’re properly thankful for the jobs we have (had?).”

Dubal is interested in more than analysis; she’s interested in action. She looks at the tactics already deployed by gig workers, who have not taken all this abuse lying down. Workers in the UK and EU organized through Worker Info Exchange and the App Drivers and Couriers Union have used the GDPR (the EU’s privacy law) to demand “algorithmic transparency,” as well as access to their data. In California, drivers hope to use similar provisions in the CCPA (a state privacy law) to do the same.

These efforts have borne fruit. When Cornell economists, led by Louis Hyman, published research (paid for by Uber) claiming that Uber drivers earned an average of $23/hour, it was data from these efforts that revealed the true average Uber driver’s wage was $9.74. Subsequent research in California found that Uber drivers’ wage fell to $6.22/hour after the passage of Prop 22, a worker misclassification law that gig companies spent $225m to pass, only to have the law struck down because of a careless drafting error:

https://www.latimes.com/california/newsletter/2021-08-23/proposition-22-lyft-uber-decision-essential-california

But Dubal is skeptical that data-coops and transparency will achieve transformative change and build real worker power. Knowing how the algorithm works is useful, but it doesn’t mean you can do anything about it, not least because the platform owners can keep touching their knobs, twiddling the payout schedule on their rigged slot-machines.

Data co-ops start from the proposition that “data extraction is an inevitable form of labor for which workers should be remunerated.” It makes on-the-job surveillance acceptable, provided that workers are compensated for the spying. But co-ops aren’t unions, and they don’t have the power to bargain for a fair price for that data, and co-ops themselves lack the vast resources needed “to store, clean, and understand” data.

Co-ops are also badly situated to understand the true value of the data that is extracted from their members: “Workers cannot know whether the data collected will, at the population level, violate the civil rights of others or amplifies their own social oppression.”

Instead, Dubal wants an outright, nonwaivable prohibition on algorithmic wage discrimination. Just make it illegal. “If firms cannot use gambling mechanisms to control worker behavior through variable pay systems, they will have to find ways to maintain flexible workforces while paying their workforce predictable wages under an employment model. If a firm cannot manage wages through digitally-determined variable pay systems, then the firm is less likely to employ algorithmic management.”

In other words, rather than using market mechanisms to constrain platform twiddling, Dubal just wants to make certain kinds of twiddling illegal. This is a growing trend in legal scholarship. For example, the economist Ramsi Woodcock has proposed a ban on surge pricing as a per se violation of Section 1 of the Sherman Act:

https://ilr.law.uiowa.edu/print/volume-105-issue-4/the-efficient-queue-and-the-case-against-dynamic-pricing

Similarly, Dubal proposes that algorithmic wage discrimination violates another antitrust law: the Robinson-Patman Act, which “bans sellers from charging competing buyers different prices for the same commodity.” Robinson-Patman enforcement was effectively halted under Reagan, kicking off a host of pathologies, like the rise of Walmart:

https://pluralistic.net/2023/03/27/walmarts-jackals/#cheater-sizes

I really liked Dubal’s legal reasoning and argument, and to it I would add a call to reinvigorate countertwiddling: reforming laws that get in the way of workers who want to reverse-engineer, spoof, and control the apps that currently control them. Adversarial interoperability (AKA competitive compatibility or comcom) is a key tool for building worker power in an era of digital Taylorism:

https://www.eff.org/deeplinks/2019/10/adversarial-interoperability

To see how that works, look to other jurisdictions where workers have leapfrogged their European and American cousins, such as Indonesia, where gig workers and toolsmiths collaborate to make a whole suite of “tuyul apps,” which let them override the apps that gig companies expect them to use.

https://pluralistic.net/2021/07/08/tuyul-apps/#gojek

For example, ride-hailing companies won’t assign a train-station pickup to a driver unless they’re circling the station — which is incredibly dangerous during the congested moments after a train arrives. A tuyul app lets a driver park nearby and then spoof their phone’s GPS fix to the ridehailing company so that they appear to be right out front of the station.

In an ideal world, those workers would have a union, and be able to dictate the app’s functionality to their bosses. But workers shouldn’t have to wait for an ideal world: they don’t just need jam tomorrow — they need jam today. Tuyul apps, and apps like Para, which allow workers to extract more money under better working conditions, are a prelude to unionization and employer regulation, not a substitute for it.

Employers will not give workers one iota more power than they have to. Just look at the asymmetry between the regulation of union employees versus union busters. Under US law, employees of a union need to account for every single hour they work, every mile they drive, every location they visit, in public filings. Meanwhile, the union-busting industry, far larger and richer than unions, operates under a cloak of total secrecy. Workers aren’t even told which union busters their employers have hired, let alone given an accounting of how those union busters spend money, or how many of them are working undercover, pretending to be workers in order to sabotage the union.

Twiddling will only get an employer so far. Twiddling — like all “AI” — is based on analyzing the past to predict the future. The heuristics an algorithm creates to lure workers into their cars can’t account for rapid changes in the wider world, which is why companies who relied on “AI” scheduling apps (for example, to prevent their employees from logging enough hours to be entitled to benefits) were caught flatfooted by the Great Resignation.

Workers suddenly found themselves with bargaining power thanks to the departure of millions of workers — a mix of early retirees and workers who were killed or permanently disabled by covid — and they used that shortage to demand a larger share of the fruits of their labor. The outraged howls of the capital class at this development were telling: these companies are operated by the kinds of “capitalists” that MLK once identified, who want “socialism for the rich and rugged individualism for the poor.”

https://twitter.com/KaseyKlimes/status/821836823022354432/

There's only 5 days left in the Kickstarter campaign for the audiobook of my next novel, a post-cyberpunk anti-finance finance thriller about Silicon Valley scams called Red Team Blues. Amazon's Audible refuses to carry my audiobooks because they're DRM free, but crowdfunding makes them possible.

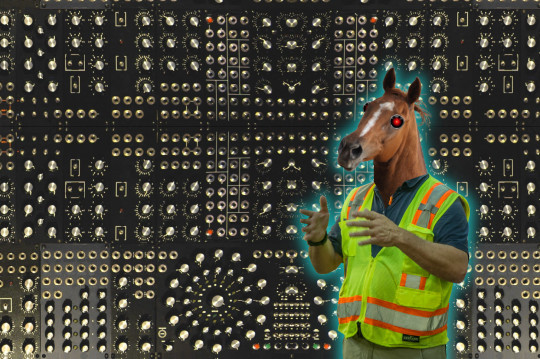

Image:

Stephen Drake (modified)

https://commons.wikimedia.org/wiki/File:Analog_Test_Array_modular_synth_by_sduck409.jpg

CC BY 2.0

https://creativecommons.org/licenses/by/2.0/deed.en

—

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

—

Louis (modified)

https://commons.wikimedia.org/wiki/File:Chestnut_horse_head,_all_excited.jpg

CC BY-SA 2.0

https://creativecommons.org/licenses/by-sa/2.0/deed.en

[Image ID: A complex mandala of knobs from a modular synth. In the foreground, limned in a blue electric halo, is a man in a hi-viz vest with the head of a horse. The horse's eyes have been replaced with the sinister red eyes of HAL9000 from Kubrick's '2001: A Space Odyssey.']

#pluralistic#great resignation#twiddler#countertwiddling#wage discrimination#algorithmic#scholarship#doordash#para#Veena Dubal#labor#brian merchant#app boss#reverse centaurs#skinner boxes#enshittification#ants vs pickers#tuyul#steampunk#cottage industry#ccpa#gdpr#App Drivers and Couriers Union#shitty technology adoption curve#moral economy#gamblification#casinoization#taylorization#taylorism#giant teddy bears


Website Scraping Services USA | UK | UAE

In the digital age, data is the new gold. The vast expanse of the internet holds an unimaginable volume of information, valuable to businesses, researchers, and entrepreneurs alike. However, accessing and utilizing this data isn't always straightforward. This is where website scraping services come into play. They offer a robust solution for extracting useful data from the web, turning unstructured content into structured, actionable insights. Let's dive into what website scraping services are, their benefits, and how they are transforming industries.

What Are Website Scraping Services?

Website scraping, also known as web scraping or data scraping, involves using automated tools to collect data from web pages. This process mimics the manual browsing and data collection activities of a human but does so at a scale and speed that is unattainable through manual efforts. Website scraping services leverage sophisticated algorithms and tools to extract large volumes of data efficiently and accurately from various websites, transforming it into a structured format such as spreadsheets or databases.

The Mechanics of Website Scraping

The process of web scraping typically involves the following steps:

Identifying the Target Data: Determine the specific web pages and data elements to be scraped. This could be anything from product details on e-commerce sites to financial data on stock market websites.

Sending HTTP Requests: The scraping tool sends HTTP requests to the server hosting the target web pages, similar to how a web browser requests a page.

Parsing the HTML: Once the page is loaded, the scraper parses the HTML or XML structure to locate and extract the relevant data. This often involves using techniques like XPath, CSS selectors, or regular expressions.

Data Extraction and Storage: Extracted data is cleaned and formatted before being stored in a structured format such as CSV files, Excel spreadsheets, or databases.

Maintaining Scraping Tools: Regular maintenance is crucial as websites frequently update their structures, which can break scraping scripts.
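The parsing, cleaning, and storage steps above can be sketched with nothing but Python's standard library. This is a minimal illustration, not a production scraper: the sample HTML, the `name` and `price` class names, and the helper names (`ProductParser`, `clean_price`, `scrape_to_csv`) are all assumptions made up for the example, and the HTTP request step is left out so the sketch runs without network access.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical sample page; a real scraper would get this text from an HTTP response.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Widget</span><span class="price">$9.99</span></li>
  <li class="product"><span class="name">Gadget</span><span class="price">$24.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects the text of <span class="name"> and <span class="price"> elements."""

    def __init__(self):
        super().__init__()
        self.rows = []       # finished records, one dict per <li>
        self._field = None   # field currently being read, if any
        self._current = {}   # record under construction

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        if self._field:
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and self._current:
            self.rows.append(self._current)
            self._current = {}


def clean_price(text):
    """Turn a display string such as '$9.99' into a float."""
    return float(text.replace("$", "").replace(",", ""))


def scrape_to_csv(html):
    """Parse the page, clean the extracted values, and return them as CSV text."""
    parser = ProductParser()
    parser.feed(html)
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["name", "price"])
    for row in parser.rows:
        writer.writerow([row["name"], clean_price(row["price"])])
    return out.getvalue()


print(scrape_to_csv(SAMPLE_HTML))
```

The parser is tied to one page layout, which is exactly why the maintenance step matters: if the site renames a class or restructures its markup, a script like this silently returns no rows until it is updated.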

Benefits of Website Scraping Services

Website scraping services provide a myriad of benefits, making them indispensable across various sectors:

1. Competitive Intelligence

Businesses can gain insights into competitors’ strategies, pricing, and product offerings. For instance, e-commerce companies can monitor rivals’ pricing to stay competitive, while travel companies can compare flight and hotel prices to offer better deals.
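As a rough illustration of price monitoring, the sketch below compares two snapshots of scraped prices and reports the items whose price moved by more than a chosen fraction. The route names, fares, the 5% threshold, and the function name `price_changes` are hypothetical values picked for the example, not figures from any real service.

```python
def price_changes(previous, current, threshold=0.05):
    """Return items whose price moved by more than `threshold` (as a fraction)."""
    changes = {}
    for item, new_price in current.items():
        old_price = previous.get(item)
        if old_price is None or old_price == 0:
            continue  # new or unpriced item: nothing to compare against
        delta = (new_price - old_price) / old_price
        if abs(delta) > threshold:
            changes[item] = round(delta, 4)
    return changes


# Hypothetical fares from two consecutive scraping runs.
yesterday = {"JFK-LHR": 640.0, "SFO-NRT": 910.0, "ORD-CDG": 720.0}
today = {"JFK-LHR": 598.0, "SFO-NRT": 915.0, "ORD-CDG": 792.0, "LAX-SYD": 1300.0}

print(price_changes(yesterday, today))
```

Items that appear only in the newer snapshot are skipped rather than flagged, on the assumption that a first sighting is not a price change.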

2. Market Research

Web scraping enables companies to gather extensive market data, such as customer reviews, trends, and product feedback. This data can be analyzed to understand market demands, customer preferences, and potential areas for new product development.

3. Lead Generation

B2B companies often use web scraping to extract contact information from business directories or social media platforms. This helps in building a database of potential clients and partners.

4. Content Aggregation

Content aggregators and news portals use scraping to pull together articles, news updates, and other content from various sources into a single platform, providing a comprehensive view for their audience.

5. Financial Analysis

Investors and financial analysts use web scraping to collect real-time data on stock prices, financial reports, and economic indicators. This information is crucial for making informed investment decisions.

6. Academic and Scientific Research

Researchers across disciplines use web scraping to gather data for studies, surveys, and analysis. This can include social media trends, public opinion, and other relevant data points.

Legal and Ethical Considerations

While web scraping is a powerful tool, it must be used responsibly. Many websites have terms of service that explicitly forbid scraping. Ignoring these rules can lead to legal consequences. Additionally, scraping can put a significant load on the server hosting the website, potentially leading to service disruptions. Ethical web scraping practices include:

Respecting the website's robots.txt file, which outlines permissible and forbidden scraping activities.

Limiting the frequency of requests to avoid overloading the server.

Using APIs provided by the website, if available, instead of scraping.
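As a rough illustration of the first practice, Python's standard library can read a robots.txt file and report whether a given URL may be fetched. This is only a sketch: the robots.txt content, the `example-bot` user agent, and the example.com URLs are all made up for the example, and a real scraper would download the file from the target site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real scraper would fetch this from
# the target site's /robots.txt instead of hard-coding it.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""

AGENT = "example-bot"  # hypothetical user-agent name


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Ask before fetching: is this path allowed for our user agent?
print(parser.can_fetch(AGENT, "https://example.com/flights/search"))
print(parser.can_fetch(AGENT, "https://example.com/checkout/pay"))

# The requested delay between requests, if the site declares one.
print(parser.crawl_delay(AGENT))
```

Checking `can_fetch` before every request, and honoring any declared crawl delay, keeps the scraper inside the limits the site has published.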

Choosing the Right Website Scraping Service

Selecting the right web scraping service depends on several factors:

Scalability: Ensure the service can handle the volume of data you need.

Customization: Look for services that offer tailored solutions to meet specific data requirements.

Compliance: Choose services that adhere to legal and ethical guidelines.

Support and Maintenance: Opt for providers that offer ongoing support and updates to scraping tools.

Future of Website Scraping

As technology advances, the capabilities and applications of web scraping continue to expand. With the rise of artificial intelligence and machine learning, we can expect web scraping tools to become even more sophisticated, capable of extracting data with higher accuracy and from more complex sources. Moreover, the growing emphasis on data privacy and security will likely drive the development of more compliant and ethical scraping solutions.

In conclusion, website scraping services are a gateway to the digital treasure trove, offering immense value to businesses and individuals. By automating the data extraction process, they unlock insights that can drive strategic decision-making and foster innovation. However, leveraging these services responsibly and ethically is crucial to ensuring sustainable and beneficial outcomes.

Final Thoughts

Embracing website scraping services is akin to equipping yourself with a powerful lens to explore the internet’s vast data landscape. Whether you're looking to boost your competitive edge, gain deep market insights, or streamline research processes, web scraping can be a game-changer. As you embark on your data journey, remember to navigate this terrain with both ambition and caution, balancing your quest for data with respect for the digital ecosystem.


How Does Web Scraping for Travel and Hospitality Data Help Travel Industry?

Introduction

In the competitive world of travel and hospitality, staying ahead of market trends, understanding customer preferences, and optimizing pricing strategies are crucial for success. One of the most effective ways to achieve these goals is through web scraping for travel and hospitality data. This method gives the travel and hospitality industry a powerful data collection solution, enabling businesses to gather valuable insights and make informed decisions. In this blog, we’ll explore how web scraping can help the travel industry, covering its key applications, the steps to implement it, and the benefits of hotel, travel, and airline data scraping services.

What is Web Scraping?

Web scraping is the process of using automated tools or scripts to collect data from websites. This method allows businesses to gather large volumes of information quickly and efficiently, which can then be analyzed for various purposes. Web scraping for travel and hospitality data involves extracting information from travel websites, hotel booking platforms, airline ticketing sites, and review platforms. This data can include pricing information, customer reviews, availability, and much more.

Importance of Web Scraping in the Travel Industry

The travel industry is highly dynamic, with prices and availability changing frequently. Having access to real-time data is essential for making informed decisions. Here are some key reasons why web scraping is important in the travel and hospitality industry:

Competitive Pricing: Web scraping allows travel companies to monitor competitors’ prices in real-time. This information is crucial for dynamic pricing strategies, ensuring that businesses remain competitive while maximizing revenue.

Market Trends: By scraping data from various sources, businesses can identify emerging trends in travel preferences, popular destinations, and seasonal demand patterns. This helps in tailoring marketing strategies and offerings to meet current market demands.

Customer Insights: Extracting customer reviews and ratings from multiple platforms provides valuable insights into customer satisfaction and preferences. This information can be used to improve services and enhance the overall customer experience.

Availability and Inventory Management: Web scraping can help travel companies keep track of inventory levels, such as hotel room availability or flight seats. This ensures accurate and up-to-date information for customers, reducing the risk of overbooking.

Key Applications of Web Scraping for Travel and Hospitality Data

Competitive Pricing Analysis

One of the most significant benefits of web scraping for the travel industry is competitive pricing analysis. By continuously monitoring competitors’ prices, travel companies can adjust their pricing strategies to stay competitive. For instance, a hotel can use web scraping to track room rates of nearby hotels and adjust its prices accordingly to attract more guests while maximizing revenue.
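To make the hotel example concrete, here is a small sketch of one possible pricing rule: price slightly below the median of nearby competitors, but never below a minimum rate. The rule itself, the 2% undercut, and the sample rates are assumptions chosen for illustration, not a recommended pricing strategy.

```python
from statistics import median


def suggest_rate(own_rate, competitor_rates, floor, undercut=0.02):
    """Suggest a nightly rate just under the competitor median.

    own_rate is returned unchanged when no competitor data is available.
    floor is the lowest rate the hotel is willing to charge.
    """
    if not competitor_rates:
        return own_rate
    target = median(competitor_rates) * (1 - undercut)
    return round(max(target, floor), 2)


# Hypothetical scraped rates for nearby hotels on the same night.
competitors = [120.0, 135.0, 110.0, 150.0]
print(suggest_rate(140.0, competitors, floor=95.0))
```

Using the median rather than the mean keeps a single unusually cheap or expensive competitor from dragging the suggestion around.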

Market Trend Analysis

Understanding market trends is essential for developing effective marketing strategies. Web scraping allows travel companies to gather data on popular destinations, travel packages, and customer preferences. By analyzing this data, businesses can identify trends such as increasing demand for eco-friendly travel options or a rise in popularity of certain destinations. This information helps in crafting targeted marketing campaigns and developing new products that cater to current market demands.

Customer Sentiment Analysis

Customer reviews and ratings provide valuable insights into customer satisfaction and areas needing improvement. Web scraping can collect reviews from various platforms, such as TripAdvisor, Yelp, and Google Reviews. By analyzing this data, travel companies can identify common issues, understand customer preferences, and enhance their services. For example, if multiple reviews mention poor Wi-Fi connectivity at a hotel, the management can address this issue to improve guest satisfaction.
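A very simple way to spot recurring complaints such as the Wi-Fi example is to count how many reviews mention each issue. The sketch below uses plain keyword matching; the issue categories, keyword lists, and sample reviews are invented for illustration, and a production system would more likely use a proper sentiment or topic model.

```python
from collections import Counter

# Hypothetical issue categories and the keywords that signal them.
ISSUE_KEYWORDS = {
    "wifi": ["wi-fi", "wifi", "internet"],
    "cleanliness": ["dirty", "unclean", "stain"],
    "noise": ["noisy", "noise", "loud"],
}


def count_issues(reviews):
    """Count how many reviews mention each issue at least once."""
    counts = Counter()
    for review in reviews:
        text = review.lower()
        for issue, keywords in ISSUE_KEYWORDS.items():
            if any(keyword in text for keyword in keywords):
                counts[issue] += 1
    return counts


# Hypothetical scraped reviews.
reviews = [
    "Great location but the Wi-Fi kept dropping.",
    "Room was noisy and the wifi was slow.",
    "Lovely staff, spotless room.",
]
issue_counts = count_issues(reviews)
print(issue_counts.most_common())
```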

Inventory and Availability Tracking

Web scraping helps travel companies keep track of inventory levels and availability in real-time. For example, airlines can monitor seat availability on different flights, and hotels can track room occupancy rates. This information is crucial for managing bookings and ensuring that customers have accurate and up-to-date information. Additionally, it helps in optimizing inventory management and reducing the risk of overbooking.

How to Implement Web Scraping for Travel and Hospitality Data?

Implementing web scraping for travel and hospitality data involves several steps. Here’s a detailed guide on how to get started:

Identify Target Websites

The first step in web scraping is to identify the websites from which you want to collect data. These could include:

Travel booking websites (e.g., Expedia, Booking.com)

Airline ticketing sites (e.g., Delta, American Airlines)

Hotel booking platforms (e.g., Marriott, Hilton)

Review platforms (e.g., TripAdvisor, Yelp)

Inspect the Website Structure

Use browser developer tools to inspect the structure of the web pages you want to scrape. Identify the HTML elements that contain the data you need, such as pricing information, customer reviews, and availability details.

Choose the Right Tools

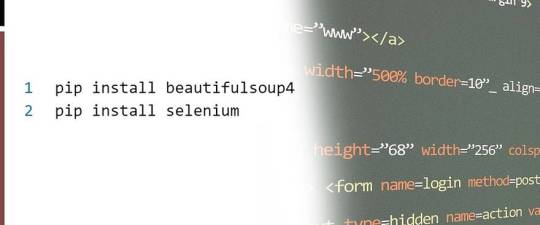

Several tools and libraries can be used for web scraping. Some popular ones include:

BeautifulSoup: A Python library for parsing HTML and XML documents.

Scrapy: An open-source web crawling framework for Python.

Selenium: A tool for automating web browsers, often used for scraping dynamic content.

Puppeteer: A Node.js library that provides a high-level API to control Chrome or Chromium browsers.

Write the Scraping Script

Using your chosen tool, write a script to extract the desired data. Ensure that your script can handle pagination if the data spans multiple pages. Here’s an example using BeautifulSoup:
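What follows is a minimal sketch rather than a production script. It assumes hypothetical markup (the `listing`, `name`, `price`, and `next` class names are invented), and it uses inline HTML strings in place of pages fetched over HTTP so that it runs offline. The loop follows the "next" link until there are no more pages, which is one common way to handle pagination.

```python
from bs4 import BeautifulSoup

# Hypothetical listing pages standing in for HTTP responses. In practice
# each page would be downloaded with an HTTP client before parsing.
PAGES = {
    "/hotels?page=1": """
        <div class="listing"><h2 class="name">Hotel Alpha</h2><span class="price">$120</span></div>
        <div class="listing"><h2 class="name">Hotel Beta</h2><span class="price">$95</span></div>
        <a class="next" href="/hotels?page=2">Next</a>
    """,
    "/hotels?page=2": """
        <div class="listing"><h2 class="name">Hotel Gamma</h2><span class="price">$150</span></div>
    """,
}


def parse_page(html):
    """Return the listings on one page and the URL of the next page, if any."""
    soup = BeautifulSoup(html, "html.parser")
    rows = []
    for card in soup.find_all("div", class_="listing"):
        name = card.find("h2", class_="name").get_text(strip=True)
        price = card.find("span", class_="price").get_text(strip=True)
        rows.append({"name": name, "price": float(price.lstrip("$"))})
    next_link = soup.find("a", class_="next")
    next_url = next_link["href"] if next_link else None
    return rows, next_url


def scrape_all(start_url):
    """Follow 'next' links from start_url and collect every listing."""
    results, url = [], start_url
    while url:
        rows, url = parse_page(PAGES[url])
        results.extend(rows)
    return results


hotels = scrape_all("/hotels?page=1")
for hotel in hotels:
    print(hotel["name"], hotel["price"])
```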

Handle Anti-Scraping Measures

Many websites implement anti-scraping measures, such as CAPTCHAs and IP blocking. Here are some tips to handle these:

Use Proxies: Rotate proxies to avoid IP blocking.

Implement Rate Limiting: Avoid sending too many requests in a short period.

Solve CAPTCHAs: Use services like 2Captcha to solve CAPTCHAs automatically.
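Of these tips, rate limiting is the easiest to build in from the start. The sketch below enforces a minimum gap between consecutive requests. The `RateLimiter` class and the example URLs are hypothetical, and the short interval is only there to keep the demo quick; a real scraper would use a much longer one.

```python
import time


class RateLimiter:
    """Enforce a minimum interval between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock    # injectable so the limiter can be tested
        self._sleep = sleep
        self._last = None      # time of the previous request, if any

    def wait(self):
        """Block until at least min_interval has passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


# Hypothetical usage: pause before each request in a crawl loop.
limiter = RateLimiter(min_interval=0.1)
for url in ["/flights?page=1", "/flights?page=2", "/flights?page=3"]:
    limiter.wait()
    print("fetching", url)
```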

Store and Analyze the Data

After scraping, store the data in a structured format, such as a CSV file or a database. Use data analysis tools to derive insights from the collected data. This can involve sentiment analysis, trend analysis, and more.
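As a small sketch of this step, the snippet below writes scraped rows to CSV text with Python's standard `csv` module and then reads them back to compute an average price. The column names and sample rows are made up for the example; writing to an in-memory buffer keeps it self-contained, and a real script would write to a file or database instead.

```python
import csv
import io


def rows_to_csv(rows, fieldnames):
    """Serialize a list of dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def average_price(csv_text):
    """Read CSV text back and return the mean of the 'price' column."""
    reader = csv.DictReader(io.StringIO(csv_text))
    prices = [float(row["price"]) for row in reader]
    return sum(prices) / len(prices)


# Hypothetical scraped rows.
rows = [
    {"hotel": "Hotel Alpha", "price": 120.0, "currency": "USD"},
    {"hotel": "Hotel Beta", "price": 95.0, "currency": "USD"},
]
csv_text = rows_to_csv(rows, ["hotel", "price", "currency"])
print(csv_text)
print(average_price(csv_text))
```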

Benefits of Using Hotel, Travel, and Airline Data Scraping Services

For businesses that prefer not to build their own scraping solutions, using hotel, travel and airline data scraping services offers several benefits:

Ease of Use: These services provide ready-to-use solutions, reducing the need for in-house development.

Scalability: They can handle large volumes of data, making them suitable for businesses of all sizes.

Real-Time Data: Many services offer real-time data, ensuring that businesses always have the latest information.

Reduced Maintenance: Using a third-party service means you don’t have to worry about maintaining and updating your scraping scripts.

Legal and Ethical Considerations

When using web scraping, it’s crucial to adhere to legal and ethical standards. Here are some best practices:

Respect Website Terms of Service: Always check and respect the terms of service of the websites you’re scraping.

Avoid Overloading Servers: Implement rate limiting to avoid overloading servers with too many requests.

Comply with Data Privacy Regulations: Ensure that your data scraping activities comply with regulations such as GDPR.

Future Trends in Web Scraping for the Travel Industry

As technology evolves, the methods and applications of web scraping in the travel industry are likely to expand. Here are some future trends to watch for:

AI and Machine Learning: Integrating AI and machine learning with web scraping can enhance data analysis, making it possible to uncover deeper insights and predictive analytics.

Blockchain Technology: Blockchain may offer more secure and transparent ways to record and share scraped travel and hospitality data.

Advanced Automation: With advancements in automation, web scraping processes will become more efficient, reducing the need for manual intervention.

Enhanced Data Privacy: As data privacy regulations become more stringent, web scraping techniques will need to adapt to ensure compliance while still providing valuable data.

Conclusion

Web scraping is an indispensable tool for the travel and hospitality industry, providing a wealth of data that drives strategic decision-making. By extracting customer review data, monitoring market trends, and leveraging review scraping APIs, travel firms can remain competitive and responsive to ever-changing market dynamics. It’s essential to follow best practices and comply with legal standards to ensure ethical and effective data collection for travel and hospitality. As the industry evolves, so will web scraping techniques and applications, offering greater opportunities for innovation and insights. Embrace the power of Travel Scrape for comprehensive data solutions that drive success in the travel and hospitality industry. Explore our services today and transform your data strategy!

Know more>> https://www.travelscrape.com/web-scraping-for-travel-and-hospitality-data-help-travel-industry.php

#DataCollectionSolutionforTravelandHospitality#WebScrapingForTravelAndHospitalityData#ScrapeTravelandHospitalityData#CollectDataFromTravelandHospitality


How to Maximize Travel Business Success with Data Scraping in Travel Industry?

Introduction

In the highly competitive landscape of the travel industry, the significance of data scraping cannot be overstated. Data scraping in the travel industry involves using specialized tools, such as a general travel data scraper or a dedicated TripAdvisor scraper, to extract valuable information from diverse sources. This process, often called travel data collection, empowers businesses with critical insights that can propel them toward success.

One of the primary benefits of leveraging data scraping in the travel sector is the ability to extract comprehensive and real-time data. Whether pricing information, customer reviews, or competitor data, a well-implemented travel data scraper enables businesses to stay updated with the latest trends and market dynamics. This, in turn, facilitates effective price comparison and market research, allowing companies to make informed decisions and stay ahead of the curve.

Businesses can gain a competitive edge by strategically employing data scraping techniques to extract travel industry data. The insights derived from these efforts can inform pricing strategies, identify emerging trends, and enhance overall market positioning. Data scraping becomes a powerful tool for navigating the intricacies of the travel industry, enabling businesses to optimize their operations, improve customer experiences, and ultimately achieve lasting success.

Understanding Data Scraping in Travel

Data scraping in the travel industry is a pivotal technique involving the automated extraction of information from various online sources, enhancing the industry's ability to gather valuable insights. Data scraping utilizes specialized tools like a travel data scraper or dedicated platforms such as a TripAdvisor scraper to extract relevant data systematically. This process, also known as travel data collection, gives businesses a competitive advantage.

In the travel sector, the relevance of data scraping is evident in its ability to provide real-time and comprehensive information critical for decision-making. For instance, businesses can utilize data scraping to extract pricing details, customer reviews, and competitor information. This extracted data supports effective price comparison and aids in conducting market research, enabling companies to make informed strategic decisions.

Real-life examples of successful implementations highlight how data scraping has revolutionized the travel industry. From optimizing pricing strategies based on competitor insights to fine-tuning marketing campaigns through analysis of customer reviews, data scraping has become a cornerstone for businesses seeking to stay agile in a dynamic market.

These examples underscore the transformative impact of data scraping, positioning it as an invaluable tool for companies striving to enhance their operational efficiency and gain a competitive edge in the travel industry.

Identifying Key Data Sources

In the travel industry, identifying critical data sources is essential for harnessing the full potential of data scraping. A travel data scraper, including specialized tools like the TripAdvisor scraper, can be employed to explore diverse data outlets, offering valuable insights for business optimization.

One primary data source is customer reviews on platforms like TripAdvisor, where sentiments and feedback provide a rich resource for businesses to gauge customer satisfaction and improve services. Pricing information on various travel websites is another crucial data source, enabling companies to conduct thorough price comparison analyses. Businesses can strategically position themselves in the market by extracting data related to competitors' pricing strategies.

Furthermore, data scraping can target flight and accommodation availability, helping companies stay informed about real-time inventory. Social media platforms also contribute to data sources, providing information on trending destinations and customer preferences and aiding market research efforts. Additionally, weather data and local events can be scraped to enhance the personalization of travel offerings.

Exploring these diverse data sources through techniques like data scraping in the travel industry is paramount. The insights obtained not only facilitate business optimization but also empower companies to make informed decisions, adapt to market changes, and maintain a competitive edge in the dynamic landscape of the travel sector.

Implementing Effective Data Scraping Strategies

Implementing effective data scraping strategies is crucial for success in the travel industry. Leveraging a travel data scraper, such as the renowned TripAdvisor scraper, requires adherence to best practices to ensure efficient data extraction and business optimization.

Firstly, businesses should clearly define their objectives and the specific data they intend to scrape. This targeted approach helps streamline the process and ensures the extracted data aligns with the company's strategic goals. Additionally, it is essential to stay compliant with legal and ethical considerations, respecting the terms of service of the targeted websites.

From a technical standpoint, selecting the right tools and methodologies is paramount. Businesses can choose between web scraping libraries, APIs, or custom scripts based on their needs. Employing advanced scraping techniques, such as dynamic content handling and pagination, enhances the accuracy and completeness of data extraction.

Regularly monitoring and maintaining scraping processes is essential to adapt to changes in the targeted websites' structure or policies. Implementing rate limiting and respecting robots.txt guidelines helps prevent disruptions and maintain a positive relationship with the data sources.

An effective data scraping strategy in the travel industry involves meticulous planning, technical proficiency, and ongoing maintenance. By incorporating best practices, businesses can harness the power of data scraping to conduct thorough market research, enable price comparison, and gain a competitive advantage in the dynamic travel sector.

Leveraging Data Insights for Business Growth

Leveraging data insights is a pivotal aspect of driving business growth in the travel industry through data scraping. With tools like a travel data scraper or specialized solutions like the TripAdvisor scraper, businesses can extract valuable data and transform it into actionable insights for strategic decision-making.

Extracted data can be analyzed for various purposes, including market research and price comparison. By scrutinizing competitor pricing strategies, businesses can optimize their pricing models to stay competitive. Understanding market trends and customer preferences through data scraping enables businesses to tailor their offerings, enhancing overall customer satisfaction.

One exemplary application is personalized marketing. Insights gained from scraped data allow businesses to craft targeted marketing campaigns, reaching specific demographics with tailored messages. This personalized approach can improve customer engagement and conversion rates.

Moreover, data-driven decision-making extends to inventory management and service optimization. Real-time data on travel patterns and accommodation preferences aid in forecasting demand, ensuring businesses can adjust their offerings promptly.

Showcasing examples of how insights from data scraping drive business success emphasizes its transformative impact on the travel industry. By harnessing these insights, businesses can make informed decisions, refine strategies, and achieve sustainable growth in this dynamic sector.

Overcoming Challenges and Ensuring Compliance

While data scraping offers immense benefits in the travel industry, navigating potential challenges and ensuring compliance with data protection regulations is essential. Utilizing tools like a travel data scraper or platforms like the TripAdvisor scraper introduces specific risks that businesses must address.

One challenge involves the dynamic nature of websites, which may undergo changes in structure or terms of service. Regular monitoring and adaptation of scraping processes are crucial to maintaining data extraction accuracy. Additionally, legal requirements must be taken into account, as scraping data without proper authorization can lead to legal repercussions.

Ensuring compliance with data protection regulations, such as GDPR, is paramount. Travel businesses must have a lawful basis, such as user consent or legitimate interests, before collecting personal information, and must adhere to data minimization and purpose limitation principles. Implementing measures like anonymizing or pseudonymizing data can mitigate privacy risks.
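One way to apply data minimization and pseudonymization to scraped reviews is sketched below: keep only the fields needed for analysis and replace the reviewer's username with a keyed hash. The field names, the choice of which fields to keep, and the placeholder key are assumptions for illustration. Note that pseudonymized data generally still counts as personal data under GDPR, so this reduces risk but does not remove the compliance obligation.

```python
import hashlib
import hmac

# Hypothetical placeholder; a real key would be generated randomly and
# stored separately from the dataset.
SECRET_KEY = b"replace-with-a-secret-key"

# Fields assumed to be needed for analysis; everything else is dropped.
KEPT_FIELDS = {"rating", "text"}


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Replace an identifier with a keyed SHA-256 hash (HMAC)."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()


def scrub_review(review: dict) -> dict:
    """Keep only the needed fields and swap the username for a pseudonym."""
    cleaned = {k: v for k, v in review.items() if k in KEPT_FIELDS}
    cleaned["reviewer_id"] = pseudonymize(review["username"])
    return cleaned


# Hypothetical scraped review.
raw = {
    "username": "Jane_Doe42",
    "email": "jane@example.com",
    "rating": 4,
    "text": "Comfortable room, slow check-in.",
}
clean = scrub_review(raw)
print(sorted(clean))
```

Because the hash is keyed and deterministic, the same reviewer maps to the same `reviewer_id` across scrapes, which keeps per-reviewer analysis possible without storing the username.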

To overcome technical challenges, businesses should employ ethical scraping practices, respecting the guidelines set by websites through robots.txt files. Developing a transparent and ethical approach to data scraping safeguards against legal issues and fosters positive relationships with data sources.

Addressing challenges associated with data scraping in the travel industry requires a balanced approach that combines technical vigilance with legal compliance. By navigating these challenges effectively, businesses can harness the power of data scraping while maintaining ethical standards and regulatory adherence.

Case Studies

Real Data API has driven significant growth for travel businesses through effective data scraping strategies. One notable success story involves a leading travel agency that utilized a travel data scraper, including the renowned TripAdvisor scraper, to extract and analyze vast amounts of travel industry data. The agency conducted precise market research and optimized pricing strategies by extracting pricing information and customer reviews.

Another case highlights a hotel chain that employed data scraping to enhance its price comparison capabilities. Leveraging a travel data scraper allowed the hotel chain to gather real-time pricing data from competitors, enabling them to adjust their rates dynamically. This adaptive pricing strategy not only improved competitiveness but also resulted in increased bookings and revenue.

Furthermore, a tour operator utilized data scraping for travel data collection from various sources, including social media platforms. This data was then analyzed to identify emerging travel trends and popular destinations, allowing the operator to tailor their offerings to current market demands. The insights derived from data scraping optimized their product offerings and facilitated targeted marketing, resulting in a notable boost in customer engagement and bookings.

These case studies underscore the transformative impact of data scraping, showcasing how businesses in the travel industry can achieve substantial growth by harnessing the power of Real Data API and other advanced tools.

Conclusion

Data scraping emerges as a pivotal tool for achieving success in the travel industry, offering invaluable insights for strategic decision-making. By leveraging tools like Real Data API, businesses can extract and analyze vast amounts of data, optimize pricing, conduct market research, and tailor their offerings to customer preferences. As the travel landscape evolves, embracing data scraping strategies becomes essential for staying competitive. We encourage readers to explore the possibilities and implement data scraping techniques to unlock the full potential of their businesses. Take the first step towards success today!

Know More: https://www.realdataapi.com/web-data-scraping-travel-industry.php

#TravelDataScraper#TravelIndustryDataScraping#ScrapeTravelData#TripadvisorScraper#TravelDataCollection#ExtractTravelData


Scrape Flight & Rail App Listing Data – A Comprehensive Guide

Dec 07, 2023

Introduction

Creating a dedicated search engine for verifying global flight and rail schedules and pricing information presents a strategic concept with immense potential. Fueled by comprehensive data collection, such a platform could revolutionize how individuals access and validate travel details swiftly without needing to visit each airline or rail service mobile app separately.

The key to the success of a travel-related mobile app lies in the meticulous separation of mobile app development, design components, and the critical element – accurate and up-to-date data. By considering Mobile App Scraping's Data-as-a-Service offering, the foundation for a robust and reliable travel information hub can be laid.

Mobile App Scraping provides flight and rail app data scraping services, specializing in extracting data from apps listed on the Google Play Store. This service keeps your platform supplied with the latest flight schedules, rail services, and pricing details from leading apps.

Harnessing the power of Mobile App Scraping's expertise, your travel-oriented search engine can deliver users real-time, accurate, and comprehensive insights. This innovative approach streamlines the user experience and consolidates fragmented data, offering a one-stop solution for individuals seeking verified and current travel information.

In essence, the collaboration with Mobile App Scraping empowers your venture to provide a seamless, data-driven travel experience, setting the stage for a dynamic and invaluable service in the ever-evolving landscape of global travel.

Why Flight & Rail App Listing Data?

Flight & Rail App Listing Data is crucial for various reasons in the travel industry. Here are some key points highlighting the significance of extracting and utilizing such data:

Comprehensive Travel Information

Flight and rail apps provide extensive details about schedules, routes, pricing, and other relevant information. Extracting data from these apps ensures access to a comprehensive set of travel-related data.

User-Friendly Travel Platforms

Integrating data from top flight and rail apps enables the creation of user-friendly travel platforms. Users can conveniently access all necessary information in one place, streamlining their travel planning experience.

Real-Time Updates

Flight and rail information is dynamic, with frequent updates to schedules, availability, and pricing. Scraping data from these apps ensures that the travel platform offers real-time and accurate information to users.

Market Analysis

Analyzing data from various flight and rail apps provides valuable insights into market trends, popular routes, and pricing strategies. This information is crucial for businesses and providers of travel app scraping services to make informed decisions.

Competitor Analysis

Monitoring the offerings and features of different flight and rail apps helps understand the competitive landscape. This data allows businesses to position themselves strategically in the market.

Enhanced User Experience

By offering a consolidated platform with data from top travel apps, businesses can enhance the overall user experience. Users can compare options, find the best deals, and make informed decisions seamlessly.

Customized Services

Access to flight and rail app data allows businesses to tailor their services based on user preferences. This customization enhances customer satisfaction and loyalty.

Innovation in Travel Technology

Utilizing data from these apps encourages innovation in travel technology. It opens avenues for developing new features, tools, and services to meet the evolving needs of travelers.

Flight & Rail App Listing Data is indispensable for creating efficient, user-centric travel platforms, staying competitive in the market, and advancing travel technology.

List Of Data Fields

At Mobile App Scraping, we excel in extracting a comprehensive set of data fields from top flight and rail apps. Our scraping services cover the following key data points, providing clients with detailed and valuable information:

Availability

Carrier

Currency

Departure Date

Destination Airport

Discount

First Price

IB Aircraft

Inbound Airline Code

Inbound Airlines

Inbound Arrival Time

Inbound Class Type

Inbound Departure Time

Inbound Duration

Inbound Fare

Inbound Flight Details

Inbound Route

Inbound Times

Is Inclusive Rate

OB Aircraft

Outbound Airline Code

Outbound Airlines

Outbound Arrival Time

Outbound Class Type

Outbound Departure Time

Outbound Duration

Outbound Fare

Outbound Flight Details

Outbound Route

Outbound Times

Return Date

Service Charge

Source Airport

Stop Overs City Inbound

Stop Overs City Outbound

Stop Overs Inbound

Stop Overs Outbound

Tax Amount

To From Fare

Our meticulous scraping process ensures accurate and up-to-date information extraction, empowering businesses with the insights they need for competitive analysis, market research, and enhanced service offerings.
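To show how fields like these might be carried through a pipeline, here is a sketch of a typed record covering a subset of the list above. The field types, the `FareRecord` name, the sample values, and especially the way `total` adds up fares, tax, and service charge are assumptions for illustration; the source does not define how the individual fare fields relate to one another.

```python
from dataclasses import dataclass
from datetime import date
from decimal import Decimal
from typing import Optional


@dataclass(frozen=True)
class FareRecord:
    """One scraped round-trip fare, using a subset of the listed fields."""

    carrier: str
    source_airport: str
    destination_airport: str
    departure_date: date
    return_date: Optional[date]
    currency: str
    outbound_fare: Decimal
    inbound_fare: Decimal
    tax_amount: Decimal
    service_charge: Decimal
    stop_overs_outbound: int = 0
    stop_overs_inbound: int = 0

    @property
    def total(self) -> Decimal:
        # Assumed formula: fares are treated as exclusive of tax and charges.
        return (
            self.outbound_fare
            + self.inbound_fare
            + self.tax_amount
            + self.service_charge
        )


# Hypothetical record with made-up values.
record = FareRecord(
    carrier="Example Air",
    source_airport="DEL",
    destination_airport="BOM",
    departure_date=date(2024, 3, 1),
    return_date=date(2024, 3, 8),
    currency="INR",
    outbound_fare=Decimal("4200.00"),
    inbound_fare=Decimal("3900.00"),
    tax_amount=Decimal("650.50"),
    service_charge=Decimal("120.00"),
)
print(record.total, record.currency)
```

Using `Decimal` rather than `float` for money avoids rounding surprises when fares are summed or compared.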

Streamlining Business Data Extraction: Simplifying Flight & Rail App Scraping For Online Insights

Flight and rail app scraping begins with careful planning and selection. The crucial initial steps are choosing the right target apps and specifying the desired data fields. The accuracy and reliability of the extracted data depend heavily on selecting dependable sources.

Once the targeted apps are identified, the next critical phase is to define the necessary data points. These data points act as the guiding coordinates for the scraping process, determining the specific information to be extracted from the chosen mobile apps. For flight and rail apps, essential data points may include flight IDs, departure and arrival airports, aircraft details, pricing information, number of stops, check-in/check-out details, and more.

The Flight & Rail App Scraping process sets the stage for obtaining accurate and valuable insights from online sources by meticulously specifying the data and targeting the right apps. It's a strategic journey that ensures the extraction of high-quality data essential for informed decision-making in the dynamic travel industry.

List Of Top Flight & Rail Apps In India

MakeMyTrip:

Ratings: ⭐⭐⭐⭐⭐

Reviews: 1,000,000+

Installs: 100,000,000+

Cleartrip: Flights, Hotels, Train Booking App:

Ratings: ⭐⭐⭐⭐⭐

Reviews: 500,000+

Installs: 10,000,000+

Yatra - Flights, Hotels, Bus, Trains & Cabs:

Ratings: ⭐⭐⭐⭐

Reviews: 300,000+

Installs: 5,000,000+

Goibibo: Hotel, Flight, IRCTC Train & Bus Bookings:

Ratings: ⭐⭐⭐⭐⭐

Reviews: 700,000+

Installs: 50,000,000+

IRCTC Rail Connect:

Ratings: ⭐⭐⭐⭐

Reviews: 600,000+

Installs: 10,000,000+

ixigo: IRCTC Rail, Bus Booking, Flight Ticket App:

Ratings: ⭐⭐⭐⭐⭐

Reviews: 800,000+

Installs: 50,000,000+

Expedia:

Ratings: ⭐⭐⭐⭐⭐

Reviews: 200,000+

Installs: 10,000,000+

Trivago: Compare Hotels & Prices:

Ratings: ⭐⭐⭐⭐

Reviews: 400,000+

Installs: 50,000,000+

Skyscanner: Cheap Flights, Hotels, and Car Hire:

Ratings: ⭐⭐⭐⭐⭐

Reviews: 600,000+

Installs: 10,000,000+

Railyatri - Indian Railway Train Status & PNR Status:

Ratings: ⭐⭐⭐⭐

Reviews: 100,000+

Installs: 5,000,000+

Why Choose Mobile App Scraping To Scrape Flight & Rail App Listing Data?

Mobile App Scraping is a leading provider of flight and rail app listing data scraping services. Here are several reasons to choose them:

Expertise in Mobile App Scraping: Mobile App Scraping specializes in extracting data from mobile applications, ensuring accurate and comprehensive results tailored to the unique structures of mobile platforms.

Targeted Data Extraction: The team at Mobile App Scraping can precisely target and extract specific data fields relevant to flight and rail apps, including details such as source and destination airports, departure and arrival times, pricing information, and more.

Data Quality Assurance: The company strongly emphasizes data quality, employing rigorous quality assurance measures to deliver reliable and error-free datasets.

Compliance with Legal Standards: Mobile App Scraping operates within legal and ethical boundaries, ensuring that the data extraction process complies with the terms of service of the target apps and relevant regulations.

Scalability and Efficiency: With the capability to handle large-scale scraping projects efficiently, Mobile App Scraping is well-equipped to meet the diverse data needs of clients, whether for small-scale extractions or extensive data sets.

Custom Solutions: The company offers custom scraping solutions, allowing clients to tailor the extraction process based on their specific requirements and objectives.

Timely Delivery: Mobile App Scraping is committed to delivering results within agreed-upon timelines, ensuring clients receive the extracted data promptly.
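As a rough illustration of the targeted fields and quality checks described above, the following sketch turns one raw record into a typed listing. The record layout and key names ("from", "to", "depart", "arrive", "price", "currency") are hypothetical and are not taken from any particular app or from Mobile App Scraping's own tooling.

```python
from dataclasses import dataclass
from datetime import datetime
from decimal import Decimal


@dataclass(frozen=True)
class FlightListing:
    """One extracted listing with the fields named in the text above."""
    source_airport: str
    destination_airport: str
    departure: datetime
    arrival: datetime
    price: Decimal
    currency: str


def parse_listing(raw: dict) -> FlightListing:
    """Convert one raw record (hypothetical key names) into a typed listing."""
    listing = FlightListing(
        source_airport=raw["from"].strip().upper(),
        destination_airport=raw["to"].strip().upper(),
        departure=datetime.fromisoformat(raw["depart"]),
        arrival=datetime.fromisoformat(raw["arrive"]),
        price=Decimal(str(raw["price"])),
        currency=raw.get("currency", "USD"),  # assumed default, for illustration only
    )
    # Basic quality checks: reject records that cannot be valid.
    if listing.arrival <= listing.departure:
        raise ValueError("arrival must be after departure")
    if listing.price <= 0:
        raise ValueError("price must be positive")
    return listing


# Made-up sample record, used only to show the call.
sample = {
    "from": " del ",
    "to": "BOM",
    "depart": "2024-05-01T09:30:00",
    "arrive": "2024-05-01T11:45:00",
    "price": "4599.00",
    "currency": "INR",
}
listing = parse_listing(sample)
```

Keeping the validation next to the parsing step means a malformed record fails early instead of ending up in a delivered dataset.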

Conclusion

For businesses in need of Flight and Rail Apps Listing Data, Mobile App Scraping emerges as a trusted and seasoned partner. The company offers a host of advantages, including precise data extraction, robust data quality assurance, and scalable solutions to meet diverse needs. Whether you have specific requirements or need extensive datasets, Mobile App Scraping is equipped to deliver tailored solutions. Contact Mobile App Scraping today!

Know more:

https://www.mobileappscraping.com/scrape-flight-and-rail-app-listing-data.php

#Flightdatascraping#RailDataScraper#ScrapeTravelappsData#ExtractTravelAppsData#ExtractFlightsData#RailappsDataCollection#ExtractRailappsData#travelappscraping#travelappsdatacollection

Text

Proxies and Travel Fare Aggregation: Finding the Best Deals

Introduction

Planning a trip can be an exciting but daunting task, especially when it comes to finding the best travel fares. However, with the advent of proxies and travel fare aggregation, the process has become much more efficient and cost-effective. In this article, we will explore how proxies can enhance travel fare aggregation, enabling travelers to find the best deals and save money on their journeys.

Understanding Travel Fare Aggregation

Travel fare aggregation involves gathering and comparing prices from multiple travel websites and platforms to find the most affordable options for flights, hotels, car rentals, and other travel services. Instead of manually searching each platform separately, fare aggregation websites consolidate the information into one place, simplifying the process and saving time for travelers.

The Role of Proxies in Fare Aggregation

Proxies play a crucial role in travel fare aggregation by providing users with the ability to access and gather data from various travel websites without restrictions. Proxies act as intermediaries, allowing users to browse the internet with different IP addresses and locations. This enables travelers to overcome geographical limitations, access region-specific deals, and gather comprehensive data for fare comparison.

Bypassing Geographical Restrictions

Travel websites often implement geographical restrictions that limit access to certain deals or fares based on the user’s location. With the help of proxies, travelers can bypass these restrictions by connecting to servers in different countries or regions. By appearing as if they are browsing from a specific location, travelers can access localized deals and gain a competitive advantage in finding the best fares.

Scraping and Aggregating Data

Proxies enable travel fare aggregation platforms to scrape and aggregate data from multiple sources simultaneously. By rotating IP addresses and distributing requests across different proxies, fare aggregation websites can gather comprehensive and up-to-date fare information from various travel websites without raising suspicion or triggering anti-bot measures.
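A minimal sketch of the rotation idea, assuming a small list of proxy endpoints: requests are spread across the pool in round-robin order. The proxy addresses and search URLs are placeholders, and the sketch only plans the assignments and builds a standard-library opener; it sends no traffic. Any real use should respect each site's terms of service and rate limits.

```python
from itertools import cycle
from urllib.request import OpenerDirector, ProxyHandler, build_opener

# Hypothetical proxy endpoints; a real pool would come from a proxy provider.
PROXIES = [
    "http://proxy-a.example:8080",
    "http://proxy-b.example:8080",
    "http://proxy-c.example:8080",
]


def assign_proxies(urls, proxies):
    """Pair each URL with the next proxy in round-robin order."""
    if not proxies:
        raise ValueError("at least one proxy is required")
    pool = cycle(proxies)
    return [(url, next(pool)) for url in urls]


def opener_for(proxy: str) -> OpenerDirector:
    """Build a urllib opener that routes HTTP and HTTPS traffic via one proxy."""
    return build_opener(ProxyHandler({"http": proxy, "https": proxy}))


# Placeholder search URLs, one per route to be checked.
urls = [f"https://fares.example/search?route={r}" for r in ("DEL-BOM", "JFK-LHR", "SIN-SYD", "LHR-FCO")]
plan = assign_proxies(urls, PROXIES)
```

Each (url, proxy) pair in plan could then be fetched with opener_for(proxy).open(url), ideally with a delay between requests.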

Enhancing Price Comparison

Price comparison is at the core of travel fare aggregation. Proxies allow fare aggregation websites to collect real-time prices from different sources, ensuring accurate and reliable comparisons. By accessing websites from different locations, proxies help eliminate biases in pricing and provide travelers with a comprehensive view of available fares.
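The comparison step itself can be sketched in a few lines, assuming the quotes have already been collected and converted to a single currency. The field names and the sample quotes below are invented for illustration.

```python
def cheapest_by_route(quotes):
    """Return the lowest-priced quote for each (origin, destination) pair.

    Assumes every quote is a dict with 'source', 'origin', 'destination'
    and 'price' keys, and that all prices share one currency.
    """
    best = {}
    for quote in quotes:
        route = (quote["origin"], quote["destination"])
        if route not in best or quote["price"] < best[route]["price"]:
            best[route] = quote
    return best


# Made-up quotes from three hypothetical sources.
quotes = [
    {"source": "site-a", "origin": "DEL", "destination": "BOM", "price": 4800},
    {"source": "site-b", "origin": "DEL", "destination": "BOM", "price": 4599},
    {"source": "site-c", "origin": "DEL", "destination": "BOM", "price": 4710},
    {"source": "site-a", "origin": "JFK", "destination": "LHR", "price": 612},
    {"source": "site-b", "origin": "JFK", "destination": "LHR", "price": 640},
]
best = cheapest_by_route(quotes)
```

When two sources tie on price, this sketch keeps whichever quote was seen first; a production aggregator would likely also compare baggage rules, fees, and fare class.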

Ensuring Privacy and Security

Privacy and security are paramount when conducting online travel searches. Proxies add an extra layer of privacy by masking the user’s true IP address and location, which makes tracking or profiling by travel websites harder. A proxy does not add encryption by itself, however: personal information and credit card details are protected in transit only when the connection to the travel site uses HTTPS, so always book over an encrypted connection and use a proxy provider you trust.

Choosing the Right Proxy for Travel Fare Aggregation

When selecting a proxy for travel fare aggregation, consider the following factors:

Proxy Type: Residential proxies are often preferred for fare aggregation as they provide IP addresses associated with real residential connections, minimizing the risk of detection.

Proxy Pool Size: Ensure that the proxy provider has a large and diverse proxy pool to access a wide range of locations and websites.

Speed and Reliability: Opt for proxies with high speed and uptime to ensure efficient data collection and fare aggregation.

Pricing and Scalability: Compare pricing plans and scalability options provided by different proxy providers to find a solution that aligns with your budget and anticipated usage.

Best Practices for Travel Fare Aggregation

To make the most of proxies and travel fare aggregation, follow these best practices:

Rotate IP Addresses: Utilize proxy rotation to simulate browsing from different locations and access localized deals.

Monitor Fare Changes: Set up fare tracking alerts to stay updated on price fluctuations and take advantage of the best deals as they become available.

Clear Cookies and Cache: Regularly clear cookies and cache to avoid biased pricing based on previous search history.

Verify Fare Details: Double-check fare details on the travel provider’s website before making a booking. Occasionally, fare aggregation websites may not display the most up-to-date information.
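The fare-monitoring practice above can be sketched as a comparison between two price snapshots. The route keys, prices, and the 5% threshold are illustrative assumptions, not values from any real service.

```python
def fare_alerts(previous, current, min_drop_pct=5.0):
    """List routes whose price fell by at least min_drop_pct percent.

    previous and current map a route label to a price in the same currency.
    Each alert is a tuple: (route, old_price, new_price, drop_percent).
    """
    alerts = []
    for route, new_price in current.items():
        old_price = previous.get(route)
        if old_price is None or old_price <= 0:
            continue  # no usable baseline for this route yet
        drop_pct = (old_price - new_price) / old_price * 100
        if drop_pct >= min_drop_pct:
            alerts.append((route, old_price, new_price, round(drop_pct, 1)))
    return alerts


# Made-up snapshots taken on two consecutive checks.
yesterday = {"DEL-BOM": 5000, "JFK-LHR": 600}
today = {"DEL-BOM": 4500, "JFK-LHR": 590, "SIN-SYD": 410}
alerts = fare_alerts(yesterday, today)
```

Routes that appear for the first time are skipped because there is no earlier price to compare against. As the last tip says, any alerted fare should still be verified on the provider's own site before booking.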

Conclusion

Proxies have revolutionized the way travelers search for the best travel fares. By utilizing proxies in travel fare aggregation, travelers can bypass geographical restrictions, scrape and aggregate data, enhance price comparison, and ensure privacy and security. When choosing a proxy for travel fare aggregation, consider factors such as proxy type, pool size, speed, reliability, pricing, and scalability. By following best practices and leveraging the power of proxies, travelers can find the best deals, save money, and make their travel dreams a reality.

Text

Unleashing Web Scraping: A Multifaceted Tool Across Industries

Web scraping, once a niche technology, has evolved into a dynamic force shaping industries far and wide. Beyond its technical intricacies, this practice has become a cornerstone in driving innovation, insights, and informed decisions across various domains. Here's a look at how web scraping has transformed industries, without delving into its technical aspects.

Pioneering Business Intelligence

In the realm of business, the strategic significance of data is undeniable. Web scraping turns that data into actionable insights for companies of all sizes. By extracting and analyzing data from diverse online sources, businesses can track market trends, monitor competitors, and gauge customer sentiment. Armed with this knowledge, enterprises can make informed decisions that optimize their products, services, and marketing strategies.

Revolutionizing Marketing Campaigns

Web scraping has transformed marketing strategies by giving marketers broad access to consumer insights. Extracting data from diverse online sources helps marketers understand customer behaviors and trends. This enables highly targeted campaigns, with products and ads tailored to specific audiences. Data-driven insights improve ROI and customer engagement and support adaptive strategies that foster lasting relationships. For marketers, web scraping has become a pivotal tool for refining campaigns and driving business success.

Empowering Academic Inquiry

Academic research has been elevated by the capabilities of web scraping. Scholars can amass extensive data sets from online platforms, accelerating their studies across disciplines. This data-driven approach offers researchers the tools to uncover patterns, trace historical shifts, and glean insights that contribute to advancements in knowledge and understanding.

Redefining Real Estate Dynamics

In the real estate sector, web scraping is a game-changer. Property listings, historical pricing data, and demographic insights are now readily accessible. This empowers buyers, sellers, and investors with comprehensive information for making informed decisions about properties and investments.

Navigating Travel and Hospitality

For travelers and the hospitality industry alike, web scraping is a compass guiding choices. By aggregating data from various sources, travelers can compare flight prices, hotel accommodations, and destination reviews. Simultaneously, the industry benefits by gauging customer preferences, enhancing services, and adapting to evolving trends.

Catalyzing Healthcare Insights

In healthcare, web scraping accelerates the collection of data from medical journals, clinical trials, and patient forums. Researchers can then analyze trends, inform evidence-based practices, and contribute to drug discovery. This practice transforms the healthcare landscape by facilitating rapid access to vital information.

Informing Governance and Policy

Governments and policymakers harness web scraping to gauge public sentiment, monitor social trends, and evaluate policy effectiveness. By aggregating online data, authorities can make informed decisions that resonate with citizens and address emerging challenges.

Capturing Cultural and Social Shifts

Web scraping captures the essence of cultural and social changes occurring online. By analyzing conversations on social media, forums, and content-sharing platforms, researchers gain insights into evolving preferences, trends, and behaviors, influencing various industries.

In an era defined by information, web scraping has emerged as a catalyst for progress across industries. Its applications span from driving business strategies to illuminating societal issues, all while adhering to ethical considerations. As we navigate this data-rich landscape, web scraping stands as a beacon guiding us toward a future defined by informed decisions and transformative insights.

#googlescraping#WebScraping#DataDrivenMarketing#ConsumerInsights#TargetedCampaigns#MarketingRevolution#DigitalStrategy#CustomerEngagement#DataAnalytics#MarketingTrends

Text

Plot Your Course Using Flight Cost Intelligence!

Our Flight Pricing Intelligence changes the way you travel. It gives you up-to-the-minute knowledge of airfares, offers, and trends, so you can plan trips more efficiently and take advantage of the best airline deals. Flight Pricing Intelligence ups the travel game for everyone from budget travelers to jet-setters, so you fly to your destination with confidence. Uncover the secret of inexpensive flying and turn your holiday aspirations into high-flying realities!

Text

Hotel, travel, and airline data scraping involves extracting information from various online sources to gather details on hotel prices, availability, travel itineraries, flight schedules, and fares. This data helps businesses in competitive analysis, dynamic pricing, market research, and enhancing travel planning services for consumers.

#AirlineDataScraping#HotelDataScraping#TravelDataScraping#airlinedata#vacationrentaldata#hotelpricing#flightpricemonitoring

Text

Founded in 1996, Expedia is a portal to the wonders of the world. It is a digital gateway that lets you explore the planet effortlessly and book your dream trip with just a few clicks. Whether you are jet-setting across the globe or embarking on a local adventure, you can call it a one-stop shop for your travel requirements.

Imagine you're staring at your computer screen, daydreaming about a faraway land. You're wondering how you'll get there, where you'll stay, and what you'll do once you arrive. That's where Expedia comes in. You can search for flights, hotels, rental cars, and activities in your desired destination, all in one place.

Expedia's reach is vast, with partnerships with thousands of airlines, hotels, and tour operators around the world. From budget-friendly to luxurious, from family-friendly to adults-only, and from urban to rural, there's something for everyone. And with their Best Price Guarantee, you can rest easy knowing you're getting the best deal possible.

But Expedia is more than just a booking platform. It is also a resource for travelers, offering travel tips, destination guides, and insider information. Its mobile app lets you manage your bookings on the go, and its customer service team is available 24/7 to assist you with any questions or issues.

In short, Expedia is a virtual travel agent, a digital concierge, and a global community of adventurers. It's a tool to turn your travel dreams into reality, to help you explore the world and make memories that will last a lifetime.

Text

How To Succeed In Travel Industry With Web Scraping?