#SQL Server optimization

Optimizing SQL Server: Strategies to Minimize Logical Reads

In today’s data-driven environment, optimizing database performance is crucial for maintaining efficient and responsive applications. One significant aspect of SQL Server optimization is reducing the number of logical reads. Logical reads are reads of 8 KB data pages from the buffer pool (SQL Server’s in-memory data cache), and minimizing them can…
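SQL Server reports logical reads per table when you run `SET STATISTICS IO ON` before a query, which makes the effect of an index easy to measure. As a self-contained illustration of the indexing strategy named in this post's tags, the sketch below uses Python's built-in `sqlite3` module as a stand-in. This is an assumption: SQLite's planner is not SQL Server's, and `EXPLAIN QUERY PLAN` describes plan steps rather than counting pages. The table, column, and index names are hypothetical. Without an index on the filter column the engine scans every row; with one, it seeks straight to the matching rows and touches far fewer pages.

```python
import sqlite3


def plan_details(conn, sql, params=()):
    """Return the human-readable 'detail' text of SQLite's EXPLAIN QUERY PLAN."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[-1] for row in rows]  # 'detail' is the last column of each plan row


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 1000, i * 1.5) for i in range(10_000)],
)

lookup = "SELECT total FROM orders WHERE customer_id = ?"

# No supporting index: the planner has to scan the whole table.
before = plan_details(conn, lookup, (42,))

# Index the filter column so the planner can seek directly to customer 42's rows.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan_details(conn, lookup, (42,))

print(before)  # a SCAN step over orders
print(after)   # a SEARCH step using idx_orders_customer
```

In SQL Server itself, the equivalent check is to compare the logical reads reported for the table before and after creating the index.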

#batch processing SQL#indexing strategies#reduce logical reads#SQL Server optimization#temporal tables

Optimize Your SQL Server Management: dbForge vs. Navicat

When it comes to managing and administering Microsoft SQL Server databases, having the right tools is crucial for efficiency and effectiveness.

Best Practices for MySQL Database Optimization

New Post has been published on https://www.codesolutionstuff.com/best-practices-for-mysql-database-optimization/

MySQL is one of the most popular open-source relational databases used by businesses and individuals around the world. As your database grows, it can become slower and less responsive, which can negatively impact your application's performance. In this blog post, we'll explore techniques and

#Buffering#Data Management#Database Administration#Database Design#Database Optimization#Indexing#Normalization#Open-Source Databases#Query Analysis#Query Optimization#Query Performance#Relational Databases#Server Configuration#SQL Commands#SQL Joins

Request for Programming/CS/Math blog recommendations:

I've been looking for more programming / computer science / software dev (and also math/probability/statistics) blogs to add to my RSS reader

(I’m not very picky about adding blogs, since I can always remove them later, and it’s tough to accurately estimate “how much will I really like having this in my RSS reader?” in advance. also, most blogs aren't updated that often anyway, so it's not like I'm going to be inundated with posts regardless)

I may as well list the ones I've liked so far, and a few of the languages/topics they post about. They all post about Software Engineering In General as well as whatever I mention explicitly

Ones I’ve been following for a little while (and/or I’ve read a bunch of their older posts):

https://buttondown.email/hillelwayne – language design, formal methods, model checking (TLA+)

https://blog.plover.com – Haskell, functional programming, math, git, CLI stuff (see "subtopics" in the sidebar for tags)

https://www.johndcook.com/blog – math, probability/statistics, numerical methods

https://blog.yossarian.net – Rust, Python, cryptography (tags listed here)

https://fasterthanli.me – Rust, profiling, Go (derogatory), networking, async

https://blog.ploeh.dk – Haskell, F#, functional programming, category theory, design patterns, testing, .NET (tags here)

https://jvns.ca – networking, servers, CLI stuff, SQL

https://wunkolo.github.io – graphics, fun with intel ISA extensions / SIMD

https://randomascii.wordpress.com – profiling, performance, windows-ecosystem things, fun posts about floating point

https://esoteric.codes – esolangs

https://www.parsonsmatt.org – Haskell

Others I’ve thrown onto my RSS reader but haven’t been following for as long, or haven’t read so much from their archives yet (not an exhaustive list):

https://thephd.dev/ – C++, C, Rust

https://fa.bianp.net – machine learning, math, probability/statistics, algorithms, optimization

https://danluu.com – added on the strength of this post, basically

https://nedbatchelder.com/blog – Python

https://martinheinz.dev – Python

https://hynek.me/articles – Python

https://www.b-list.org/weblog – Python

https://blog.demofox.org – graphics, gamedev, math, algorithms

https://utcc.utoronto.ca/~cks/space/blog – linux, sysadmin-ing, zfs, Python

For all of these blogs, I scroll past and try to ignore anything that’s not technical content (or about labor conditions / industry things)

I’m also interested in learning about things outside of the scopes / focuses of the above (e.g. I don’t really have any js blogs in there yet, but would definitely be interested!)

I would also definitely appreciate any recommendations for essays, article series, books, interesting repos, archives of blogs that are no longer active, anything along those lines

(Please no LessWronger recommendations. I’m not following any of these people for their politics, but LessWrongers will use anything and everything to plug their insufferable neo-reactionary cult shit, it’s too much to just scroll past. Unfortunately, most of the people on tumblr who post about math and/or programming are also LessWrong/SSC adherents.....Which unfortunately also means I’m unlikely to actually get any recommendations out of posting this. But you never know, worth a shot)

#programming#math#computer science#cs#(may as well Tag Post. though the number of inline links mean this post probably won't show up anyway)#my posts

Redgate SQL Search can help you optimize your SQL workflow.

If you work with SQL databases, you know how important it is to have the right tools. One of the best and easiest-to-use tools for searching SQL Server databases is Redgate SQL Search, which makes it simple to locate what you need and streamlines your workflow. Redgate SQL Search is a free add-in for SQL Server Management Studio (SSMS) that lets you search for keywords and SQL fragments across the names and definitions of objects in your SQL Server databases. It makes finding tables, views, functions, stored procedures, and other database objects quick and simple.
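The core idea behind searching a database's objects is a text search over the catalog that stores each object's definition. The sketch below illustrates that idea and is not Redgate's implementation. It uses Python's `sqlite3`, where every object's `CREATE` statement is stored in `sqlite_master.sql`; in SQL Server, the comparable source for views, procedures, functions, and triggers would be the `definition` column of `sys.sql_modules` joined to `sys.objects`. The `search_definitions` helper and the sample schema are hypothetical.

```python
import sqlite3


def search_definitions(conn, keyword):
    """Return (type, name) for each schema object whose name or definition contains keyword."""
    # Escape LIKE wildcards so '_' and '%' in the keyword are matched literally.
    escaped = keyword.replace("\\", "\\\\").replace("%", "\\%").replace("_", "\\_")
    pattern = f"%{escaped}%"
    return conn.execute(
        "SELECT type, name FROM sqlite_master "
        "WHERE name LIKE ? ESCAPE '\\' OR sql LIKE ? ESCAPE '\\' "
        "ORDER BY type, name",
        (pattern, pattern),
    ).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER);
    CREATE VIEW customer_emails AS SELECT id, email FROM customers;
    CREATE INDEX idx_orders_customer ON orders (customer_id);
    """
)

print(search_definitions(conn, "email"))        # [('table', 'customers'), ('view', 'customer_emails')]
print(search_definitions(conn, "customer_id"))  # [('index', 'idx_orders_customer'), ('table', 'orders')]
```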

With SQL Server, Oracle, MySQL, MongoDB, PostgreSQL, and more, we are your dedicated partner in managing, optimizing, securing, and supporting your data infrastructure.

For more, visit: https://briskwinit.com/database-services/

Navigating the Cloud Landscape: Unleashing Amazon Web Services (AWS) Potential

In the ever-evolving tech landscape, businesses are in a constant quest for innovation, scalability, and operational optimization. Enter Amazon Web Services (AWS), a robust cloud computing juggernaut offering a versatile suite of services tailored to diverse business requirements. This blog explores the myriad applications of AWS across various sectors, providing a transformative journey through the cloud.

Harnessing Computational Agility with Amazon EC2

Central to the AWS ecosystem is Amazon EC2 (Elastic Compute Cloud), a pivotal player reshaping the cloud computing paradigm. Offering scalable virtual servers, EC2 empowers users to seamlessly run applications and manage computing resources. This adaptability enables businesses to dynamically adjust computational capacity, ensuring optimal performance and cost-effectiveness.

Redefining Storage Solutions

AWS addresses the critical need for scalable and secure storage through services such as Amazon S3 (Simple Storage Service) and Amazon EBS (Elastic Block Store). S3 acts as a dependable object storage solution for data backup, archiving, and content distribution. Meanwhile, EBS provides persistent block-level storage designed for EC2 instances, guaranteeing data integrity and accessibility.

Streamlined Database Management: Amazon RDS and DynamoDB

Database management undergoes a transformation with Amazon RDS, simplifying the setup, operation, and scaling of relational databases. Be it MySQL, PostgreSQL, or SQL Server, RDS provides a frictionless environment for managing diverse database workloads. For enthusiasts of NoSQL, Amazon DynamoDB steps in as a swift and flexible solution for document and key-value data storage.

Networking Mastery: Amazon VPC and Route 53

AWS lets users build a logically isolated virtual network for their resources with Amazon VPC (Virtual Private Cloud). Launching AWS resources inside this user-defined network gives users control over IP address ranges, subnets, and traffic rules, which enhances security and control. Simultaneously, Amazon Route 53, a scalable DNS web service, routes end-user requests to endpoints distributed around the globe.

Global Content Delivery Excellence with Amazon CloudFront

Amazon CloudFront is a content delivery network (CDN) service that securely delivers data, videos, applications, and APIs on a global scale. By caching content at edge locations close to users, it keeps latency low and transfer speeds high, improving user experiences across diverse geographical locations.

AI and ML Prowess Unleashed

AWS propels businesses into the future with advanced machine learning and artificial intelligence services. Amazon SageMaker, a fully managed service, enables developers to rapidly build, train, and deploy machine learning models. Additionally, Amazon Rekognition provides sophisticated image and video analysis, supporting applications in facial recognition, object detection, and content moderation.

Big Data Mastery: Amazon Redshift and Athena

For organizations grappling with massive datasets, AWS offers Amazon Redshift, a fully managed data warehouse service. It facilitates the execution of complex queries on large datasets, empowering informed decision-making. Simultaneously, Amazon Athena allows users to analyze data in Amazon S3 using standard SQL queries, unlocking invaluable insights.

In conclusion, Amazon Web Services (AWS) stands as an all-encompassing cloud computing platform, empowering businesses to innovate, scale, and optimize operations. From adaptable compute power and secure storage solutions to cutting-edge AI and ML capabilities, AWS serves as a robust foundation for organizations navigating the digital frontier. Embrace the limitless potential of cloud computing with AWS – where innovation knows no bounds.

Why Power BI Takes the Lead Against SSRS

In an era where data steers the course of businesses and fuels informed decisions, the choice of a data visualization and reporting tool becomes paramount. Amidst the myriad of options, two stalwarts stand out: Power BI and SSRS (SQL Server Reporting Services). As organizations, including those seeking Power BI training in Gurgaon, strive to extract meaningful insights from their data, the debate about which tool to embrace gains prominence. In this digital age, where data is often referred to as the "new oil," selecting the right tool can make or break a business's competitive edge.

Understanding the Landscape

What is Power BI?

Microsoft Power BI is a powerful business analytics application that enables organizations to visualize data and communicate insights across the organization. With its intuitive interface and user-friendly features, Power BI transforms raw data into interactive visuals, making it easier to interpret and draw actionable conclusions.

What is SSRS?

On the other hand, SSRS, also developed by Microsoft, focuses on traditional reporting: it enables the creation, management, and delivery of paginated reports. SSRS has been a reliable choice for years, but the advent of Power BI has brought new dimensions to data analysis.

The Advantages of Power BI Over SSRS

In the realm of data analysis and reporting tools, Power BI shines as a modern marvel, surpassing SSRS in various crucial aspects. Let's explore the advantages that set Power BI apart:

1. Interactive Visualizations

Power BI's forte lies in its ability to transform raw data into interactive and captivating visual representations. Unlike SSRS, which predominantly deals with static reports, Power BI empowers users to explore data dynamically, enabling them to drill down into specifics and gain deeper insights. This interactive approach enhances data comprehension and decision-making processes.

2. Real-time Insights

While SSRS offers a snapshot of data at a particular moment, Power BI steps ahead with real-time data analysis capabilities. Modern businesses, including those enrolling in a Power BI training institute in Bangalore, require up-to-the-minute insights to stay competitive, and Power BI caters precisely to this need. It connects seamlessly to various data sources, ensuring that decisions are based on the latest information.

3. User-Friendly Interface

Power BI's intuitive interface stands in stark contrast to SSRS's somewhat technical setup. With its drag-and-drop functionality, Power BI eliminates the need for extensive coding knowledge. This accessibility allows a wider range of users, from business analysts to executives, to create and customize reports without depending heavily on IT departments.

4. Scalability

As a company grows, so does the amount of data it handles. Power BI's cloud-based architecture ensures scalability without compromising performance. Whether you're dealing with a small dataset or handling enterprise-level data, Power BI can handle the load, guaranteeing smooth operations and robust analysis.

5. Natural Language Queries

One of Power BI's standout features is its ability to understand natural language queries. Users can interact with the tool using everyday language and receive relevant visualizations in response. This bridge between human language and data analytics simplifies the process for non-technical users, making insights accessible to all.
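The drill-down behavior described under Interactive Visualizations amounts to re-aggregating the same rows one level lower in a hierarchy. Here is a minimal Python sketch of that idea, assuming a hypothetical region > city hierarchy and made-up sales figures. Power BI performs this inside its visuals, so the code illustrates the concept rather than how Power BI works internally.

```python
from collections import defaultdict

# Hypothetical sales rows: (region, city, amount).
SALES = [
    ("North", "Leeds", 120.0),
    ("North", "York", 80.0),
    ("South", "Bristol", 200.0),
    ("South", "Bath", 50.0),
    ("South", "Bristol", 30.0),
]


def drill(rows, path=()):
    """Total the amounts one level below `path` in the region > city hierarchy.

    path=() gives totals per region; path=("South",) gives totals per city in the South.
    """
    depth = len(path)
    totals = defaultdict(float)
    for *keys, amount in rows:
        if depth >= len(keys):
            raise ValueError("already at the lowest level of the hierarchy")
        if tuple(keys[:depth]) == tuple(path):
            totals[keys[depth]] += amount
    return dict(totals)


print(drill(SALES))              # {'North': 200.0, 'South': 280.0}
print(drill(SALES, ("South",)))  # {'Bristol': 230.0, 'Bath': 50.0}
```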

The SEO Advantage

In the digital age, search engine optimization (SEO) plays a vital role in ensuring your content, including information about Power BI training in Mumbai, reaches the right audience. When it comes to comparing Power BI and SSRS in terms of SEO, Power BI once again takes the lead.

With their interactive visual content, Power BI-enhanced articles attract more engagement. This higher engagement leads to longer on-page time, lower bounce rates, and improved SEO rankings. Search engines recognize user behavior as a marker of content quality and relevance, boosting the visibility of Power BI-related articles.

For more information, contact us at:

Call: 8750676576, 871076576

Website: www.advancedexcel.net

#power bi training in gurgaon#power bi coaching in gurgaon#power bi classes in mumbai#power bi course in mumbai#power bi training institute in bangalore#power bi coaching in bangalore

Unveiling the Ultimate Handbook for Aspiring Full Stack Developers

In the ever-evolving realm of technology, the role of a full-stack developer has undeniably gained prominence. Full-stack developers epitomize versatility and are an indispensable asset to any enterprise or endeavor. They wield a comprehensive array of competencies that empower them to navigate the intricate landscape of both front-end and back-end web development. In this exhaustive compendium, we shall delve into the intricacies of transforming into a proficient full-stack developer, dissecting the requisite skills, indispensable tools, and strategies for excellence in this domain.

Deciphering the Full Stack Developer Persona

A full-stack developer stands as a connoisseur of both front-end and back-end web development. Their mastery extends across the entire spectrum of web development, making them highly coveted professionals within the tech sector. The front end of a website is the part users see and interact with, while the back end operates behind the scenes, handling databases and server management. You can learn these skills at Uncodemy, the best full-stack developer institute in Delhi.

The Requisite Competencies

To embark on a successful journey as a full-stack developer, one must amass a diverse skill set. These proficiencies can be broadly categorized into front-end and back-end development, coupled with other quintessential talents:

Front-End Development

Markup and Style Sheet Languages: An in-depth grasp of HTML (the markup language) and CSS (the style sheet language) is fundamental to crafting visually captivating and responsive user interfaces.

JavaScript Mastery: JavaScript is the linchpin of front-end development, and proficiency in it is essential for crafting dynamic web applications.

Frameworks and Libraries: Familiarization with popular front-end frameworks and libraries such as React, Angular, and Vue.js is indispensable as they streamline the development process and elevate the user experience.

Back-End Development

Server-Side Languages: Proficiency in server-side languages and runtimes such as JavaScript on Node.js, Python, Ruby, or Java is imperative, as they power the back-end functionality of websites.

Database Dexterity: Acquiring proficiency in the manipulation of databases, including SQL and NoSQL variants like MySQL, PostgreSQL, and MongoDB, is paramount.

API Expertise: Comprehending the creation and consumption of APIs is essential, serving as the conduit for data interchange between the front-end and back-end facets.
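To make the idea of an API as the conduit between front end and back end concrete, here is a minimal sketch of a back-end JSON endpoint using only Python's standard library (`wsgiref` and `sqlite3`). The `/api/products` route, the `products` table, and the `call` helper are hypothetical; in practice this role is usually filled by a web framework. A front end would request the same URL with an HTTP GET and render the JSON it receives.

```python
import json
import sqlite3
from wsgiref.util import setup_testing_defaults

# Hypothetical data store backing the API.
DB = sqlite3.connect(":memory:")
DB.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, price REAL)")
DB.executemany(
    "INSERT INTO products (name, price) VALUES (?, ?)",
    [("Keyboard", 49.0), ("Mouse", 19.5)],
)


def app(environ, start_response):
    """Minimal WSGI application: GET /api/products returns the product list as JSON."""
    if environ["REQUEST_METHOD"] == "GET" and environ["PATH_INFO"] == "/api/products":
        rows = DB.execute("SELECT id, name, price FROM products ORDER BY id").fetchall()
        status = "200 OK"
        payload = [{"id": i, "name": n, "price": p} for i, n, p in rows]
    else:
        status = "404 Not Found"
        payload = {"error": "not found"}
    body = json.dumps(payload).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(body)))])
    return [body]


def call(path, method="GET"):
    """Invoke the app in-process, the way a WSGI server would, and decode the reply."""
    environ = {}
    setup_testing_defaults(environ)  # fills in the required CGI/WSGI keys
    environ.update(PATH_INFO=path, REQUEST_METHOD=method)
    seen = {}

    def start_response(status, headers):
        seen["status"] = status

    body = b"".join(app(environ, start_response))
    return seen["status"], json.loads(body)


print(call("/api/products"))
print(call("/api/unknown"))
```

For a quick local test over HTTP, `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` would expose the same endpoint at `http://localhost:8000/api/products`.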

Supplementary Competencies

Version Control Proficiency: Mastery in version control systems such as Git assumes monumental significance for collaborative code management.

Embracing DevOps: Familiarity with DevOps practices is instrumental in automating and streamlining the development and deployment processes.

Problem-Solving Prowess: Full-stack developers need robust problem-solving acumen to diagnose issues and optimize code for efficiency.

The Instruments of the Craft

Full-stack developers wield an arsenal of tools and technologies to build, test, and deploy web applications. The following are indispensable tools worth mastering:

Integrated Development Environments (IDEs)

Visual Studio Code: This open-source code editor, hailed for its customizability, enjoys widespread adoption within the development fraternity.

Sublime Text: A lightweight and efficient code editor replete with an extensive repository of extensions.

Version Control

Git: As the preeminent version control system, Git is indispensable for tracking code modifications and facilitating collaborative efforts.

GitHub: A web-based platform dedicated to hosting Git repositories and fostering collaboration among developers.

Front-End Frameworks

React: A potent JavaScript library for crafting user interfaces with finesse.

Angular: A comprehensive front-end framework catering to the construction of dynamic web applications.

Back-End Technologies

Node.js: A favored server-side runtime that facilitates the development of scalable, high-performance applications.

Express.js: A web application framework tailor-made for Node.js, simplifying back-end development endeavors.

Databases

MongoDB: A NoSQL document database well suited to managing large volumes of semi-structured data.

PostgreSQL: A potent open-source relational database management system.

Elevating Your Proficiency as a Full-Stack Developer

True excellence as a full-stack developer transcends mere technical acumen. Here are some strategies to help you distinguish yourself in this competitive sphere:

Continual Learning: Given the rapid evolution of technology, it's imperative to remain abreast of the latest trends and tools.

Embark on Personal Projects: Forge your path by creating bespoke web applications to showcase your skills and amass a portfolio.

Collaboration and Networking: Participation in developer communities, attendance at conferences, and collaborative ventures with fellow professionals are key to growth.

A Problem-Solving Mindset: Cultivate a robust ability to navigate complex challenges and optimize code for enhanced efficiency.

Embracing Soft Skills: Effective communication, collaborative teamwork, and adaptability are indispensable in a professional milieu.

In Closing

Becoming a full-stack developer is a gratifying odyssey that demands unwavering dedication and a resolute commitment to perpetual learning. Armed with the right skill set, tools, and mindset, one can truly shine in this dynamic domain. Full-stack developers are in high demand, and as you embark on this voyage, you'll discover a plethora of opportunities beckoning you.

So, if you aspire to join the echelons of full-stack developers and etch your name in the annals of the tech world, commence your journey by honing your skills and laying a robust foundation in both front-end and back-end development. Your odyssey to becoming an adept full-stack developer commences now.

Azure Data Engineering Tools For Data Engineers

Azure is Microsoft's cloud computing platform, and it offers an extensive array of data engineering tools. These tools help data engineers build and maintain data systems that are scalable, reliable, and secure, and that cater to the unique requirements of an organization.

In this article, we will explore nine key Azure data engineering tools that should be in every data engineer’s toolkit. Whether you’re a beginner in data engineering or aiming to enhance your skills, these Azure tools are crucial for your career development.

Microsoft Azure Databricks

Azure Databricks is a managed version of Databricks, a popular data analytics and machine learning platform. It offers one-click installation, faster workflows, and collaborative workspaces for data scientists and engineers. Azure Databricks seamlessly integrates with Azure’s computation and storage resources, making it an excellent choice for collaborative data projects.

Microsoft Azure Data Factory

Microsoft Azure Data Factory (ADF) is a fully managed, serverless data integration service designed to handle data at scale. It enables data engineers to ingest, transform, and move large volumes of data efficiently, orchestrating these steps as pipelines. ADF supports various use cases, including data engineering, operational data integration, analytics, and data warehousing.

Microsoft Azure Stream Analytics

Azure Stream Analytics is a real-time, complex event-processing engine designed to analyze and process large volumes of fast-streaming data from various sources. It is a critical tool for data engineers dealing with real-time data analysis and processing.
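Stream Analytics jobs group a stream into time windows using functions such as `TumblingWindow` in their SQL-like query language. The Python sketch below only illustrates the semantics of a tumbling window (fixed-size, non-overlapping, each event in exactly one window); it is not the Stream Analytics API. It assumes events arrive in timestamp order, and the device IDs and timestamps are made up.

```python
from typing import Dict, Iterable, Iterator, Tuple


def tumbling_windows(
    events: Iterable[Tuple[float, str]], window_seconds: int
) -> Iterator[Tuple[int, Dict[str, int]]]:
    """Yield (window_start, {key: count}) each time a tumbling window closes.

    Assumes events arrive in timestamp order; real engines also handle late arrivals.
    """
    current_start, counts = None, {}
    for ts, key in events:
        start = int(ts // window_seconds) * window_seconds
        if current_start is not None and start != current_start:
            yield current_start, counts  # the previous window can no longer change
            counts = {}
        current_start = start
        counts[key] = counts.get(key, 0) + 1
    if current_start is not None:
        yield current_start, counts  # flush the last window when the stream ends


# Hypothetical readings: (seconds since start, device id).
readings = [(0.5, "dev-a"), (3.2, "dev-a"), (4.9, "dev-b"),
            (5.0, "dev-a"), (9.99, "dev-b"), (10.0, "dev-b")]

for window_start, counts in tumbling_windows(readings, window_seconds=5):
    print(window_start, counts)
```

Emitting each window as soon as the next one begins is what lets a streaming engine report results continuously instead of waiting for the whole data set.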

Microsoft Azure Data Lake Storage

Azure Data Lake Storage provides a scalable and secure data lake solution for data scientists, developers, and analysts. It allows organizations to store data of any type and size while supporting low-latency workloads. Data engineers can take advantage of this infrastructure to build and maintain data pipelines. Azure Data Lake Storage also offers enterprise-grade security features for data collaboration.

Microsoft Azure Synapse Analytics

Azure Synapse Analytics is an integrated platform solution that combines data warehousing, data connectors, ETL pipelines, analytics tools, big data scalability, and visualization capabilities. Data engineers can efficiently process data for warehousing and analytics using Synapse Pipelines’ ETL and data integration capabilities.

Microsoft Azure Cosmos DB

Azure Cosmos DB is a fully managed, distributed database service (with a serverless capacity option) that exposes several APIs, including NoSQL, MongoDB, Apache Cassandra, and PostgreSQL. It offers automatic and immediate scalability, single-digit millisecond reads and writes, and high availability. Azure Cosmos DB is a versatile tool for data engineers looking to develop high-performance applications.

Microsoft Azure SQL Database

Azure SQL Database is a fully managed and continually updated relational database service in the cloud. It offers native support for services like Azure Functions and Azure App Service, simplifying application development. Data engineers can use Azure SQL Database to handle real-time data ingestion tasks efficiently.

Microsoft Azure MariaDB

Azure Database for MariaDB provides seamless integration with Azure Web Apps and works with popular open-source applications such as WordPress and Drupal. It offers built-in monitoring, security, automatic backups, and patching at no additional cost.

Microsoft Azure PostgreSQL Database

Azure Database for PostgreSQL is a fully managed open-source database service designed to let teams focus on application innovation rather than database management. It supports various open-source frameworks and languages and offers strong security, AI-assisted performance optimization, and high uptime guarantees.

Whether you’re a novice data engineer or an experienced professional, mastering these Azure data engineering tools is essential for advancing your career in the data-driven world. As technology evolves and data continues to grow, data engineers with expertise in Azure tools are in high demand. Start your journey to becoming a proficient data engineer with these powerful Azure tools and resources.

Unlock the full potential of your data engineering career with Datavalley. As you start your journey to becoming a skilled data engineer, it’s essential to equip yourself with the right tools and knowledge. The Azure data engineering tools we’ve explored in this article are your gateway to effectively managing and using data for impactful insights and decision-making.

To take your data engineering skills to the next level and gain practical, hands-on experience with these tools, we invite you to join the courses at Datavalley. Our comprehensive data engineering courses are designed to provide you with the expertise you need to excel in the dynamic field of data engineering. Whether you’re just starting or looking to advance your career, Datavalley’s courses offer a structured learning path and real-world projects that will set you on the path to success.

Course format:

Subject: Data Engineering

Classes: 200 hours of live classes

Lectures: 199 lectures

Projects: Collaborative projects and mini projects for each module

Level: All levels

Scholarship: Up to 70% scholarship on this course

Interactive activities: labs, quizzes, scenario walk-throughs

Placement Assistance: Resume preparation, soft skills training, interview preparation

Subject: DevOps

Classes: 180+ hours of live classes

Lectures: 300 lectures

Projects: Collaborative projects and mini projects for each module

Level: All levels

Scholarship: Up to 67% scholarship on this course

Interactive activities: labs, quizzes, scenario walk-throughs

Placement Assistance: Resume preparation, soft skills training, interview preparation

For more details on the Data Engineering courses, visit Datavalley’s official website.

#datavalley#dataexperts#data engineering#data analytics#dataexcellence#data science#power bi#business intelligence#data analytics course#data science course#data engineering course#data engineering training

Becoming a Full-Stack Developer: Unveiling the Skill Set

In the ever-evolving realm of web development, there's a professional who stands out for their versatility and expertise across the board – the full-stack developer. Often considered the Swiss Army knife of the web development world, full-stack developers possess the unique ability to handle both the front-end and back-end aspects of a software application or website. In this comprehensive guide, we'll explore what it means to be a full-stack developer, delve into the intricacies of front-end and back-end development, and highlight the importance of mastering this versatile skill set.

Front-End Development: Crafting the User Experience

When you visit a website or use a web application, the first thing that captures your attention is its visual presentation and user interface. This is where front-end development comes in. Front-end developers are the creative minds behind the aesthetic aspects of websites, ensuring that the user experience is visually appealing and seamless.

Here's a closer look at the key components of front-end development:

User Interface Creation: Front-end developers are responsible for crafting the user interface (UI), which includes designing layouts, buttons, menus, and all the interactive elements that users see and interact with.

HTML & CSS Mastery: Mastery of HyperText Markup Language (HTML) and Cascading Style Sheets (CSS) is crucial. HTML provides the structure for web content, while CSS adds style and formatting to make it visually appealing.

JavaScript Wizardry: JavaScript, the dynamic scripting language, is the backbone of front-end development. It enables developers to create interactive features, animations, and real-time updates, enhancing the user experience.

Frameworks Galore: Front-end development often involves frameworks and libraries like Angular and React. These provide pre-built components and tooling that streamline development and ensure consistency.

Back-End Development: Powering the Engine

While front-end development focuses on the visible aspects, back-end development deals with what happens behind the scenes. Back-end developers are responsible for building the server, managing databases, and handling server-side logic. This is where the data and functionality of a website or application come together.

Here's a closer look at the world of back-end development:

Server-Side Management: Back-end developers create and maintain the server, ensuring it can handle requests from users, process data, and serve up the required content.

Database Wizardry: Databases are the storehouses of information. Back-end developers work with databases to organize and manage data efficiently. They use languages like SQL to query and manipulate data.

Server Frameworks: Server-side frameworks and runtimes, such as Django (Python) and Express (running on Node.js), are used to streamline the development process. They provide tools and structure for building robust back-end systems.

Security and Performance: Back-end developers are tasked with implementing security measures and optimizing server performance to ensure data integrity and a smooth user experience.
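Two of the back-end duties above, querying databases with SQL and implementing security measures, meet in one everyday habit: passing user input as bound parameters instead of pasting it into SQL text, which prevents SQL injection. Here is a minimal sketch with Python's built-in `sqlite3` module; the `users` table and both helper functions are hypothetical, and other database drivers use different placeholder styles (for example `%s` instead of `?`).

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT UNIQUE, role TEXT)")
conn.executemany(
    "INSERT INTO users (username, role) VALUES (?, ?)",
    [("alice", "admin"), ("bob", "viewer")],
)


def find_user_unsafe(username):
    # Vulnerable: the input becomes part of the SQL text and can rewrite the query.
    sql = f"SELECT username, role FROM users WHERE username = '{username}'"
    return conn.execute(sql).fetchall()


def find_user(username):
    # Safe: the input is bound as a parameter value and is never parsed as SQL.
    return conn.execute(
        "SELECT username, role FROM users WHERE username = ?", (username,)
    ).fetchall()


attack = "nobody' OR '1'='1"
print(len(find_user_unsafe(attack)))  # 2: the injected OR clause matched every row
print(find_user(attack))              # []: no user is literally named that
print(find_user("alice"))             # [('alice', 'admin')]
```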

Full-Stack Proficiency: The Complete Package

Full-stack developers are the ultimate all-rounders of web development. They possess an in-depth understanding of both front-end and back-end development, making them highly versatile and capable of handling every aspect of a project. Their unique skill set allows them to work on end-to-end solutions and tackle complex projects with ease.

Here's what sets full-stack developers apart:

Holistic Expertise: Full-stack developers have a holistic understanding of the entire web development process, from conceptualization and UI/UX design to database management and server-side logic.

Versatility: Their ability to work on both the client-side (front-end) and server-side (back-end) gives them the flexibility to contribute to various phases of a project.

Problem Solving: Full-stack developers excel at problem-solving. They can troubleshoot issues, debug code, and ensure the seamless functioning of web applications.

High Demand: In today's tech-driven world, full-stack developers are in high demand. Their ability to handle diverse tasks and contribute to multiple areas of a project makes them invaluable assets to companies.

If you aspire to become a proficient full-stack developer, there's no better place to start your journey than ACTE Technologies. Renowned for its comprehensive full-stack development courses, ACTE Technologies equips aspiring developers with the knowledge and hands-on experience needed to master both front-end and back-end development.

In conclusion, full-stack development is a dynamic and highly rewarding field that demands expertise in both front-end and back-end development. Full-stack developers are the architects of complete web solutions, and their skills are in constant demand. So, if you're ready to embark on a thrilling journey of mastering this versatile skill set, ACTE Technologies is your trusted partner on the path to success.

Leveraging Index-on-Index Strategies for Enhanced Performance in SQL Server

In the realm of database management, particularly with SQL Server, optimizing query performance for large read-only tables is paramount. This article delves into the nuanced approach of creating indexes on indexes, a technique that, when applied judiciously, can significantly boost read operations.

The Rationale Behind Index-on-Index

At first glance, the concept of creating an index on an index…


#index creation strategies#index-on-index benefits#performance tuning in SQL#read-only table management#SQL Server optimization


Demystifying Microsoft Azure Cloud Hosting and PaaS Services: A Comprehensive Guide

In the rapidly evolving landscape of cloud computing, Microsoft Azure has emerged as a powerful player, offering a wide range of services to help businesses build, deploy, and manage applications and infrastructure. One of the standout features of Azure is its Cloud Hosting and Platform-as-a-Service (PaaS) offerings, which enable organizations to harness the benefits of the cloud while minimizing the complexities of infrastructure management. In this comprehensive guide, we'll dive deep into Microsoft Azure Cloud Hosting and PaaS Services, demystifying their features, benefits, and use cases.

Understanding Microsoft Azure Cloud Hosting

Cloud hosting, as the name suggests, involves hosting applications and services on virtual servers that are accessed over the internet. Microsoft Azure provides a robust cloud hosting environment, allowing businesses to scale up or down as needed, pay for only the resources they consume, and reduce the burden of maintaining physical hardware. Here are some key components of Azure Cloud Hosting:

Virtual Machines (VMs): Azure offers a variety of pre-configured virtual machine sizes that cater to different workloads. These VMs can run Windows or Linux operating systems and can be easily scaled to meet changing demands.

Azure App Service: This PaaS offering allows developers to build, deploy, and manage web applications without dealing with the underlying infrastructure. It supports various programming languages and frameworks, making it suitable for a wide range of applications.

Azure Kubernetes Service (AKS): For containerized applications, AKS provides a managed Kubernetes service. Kubernetes simplifies the deployment and management of containerized applications, and AKS further streamlines this process.

Exploring Azure Platform-as-a-Service (PaaS) Services

Platform-as-a-Service (PaaS) takes cloud hosting a step further by abstracting away even more of the infrastructure management, allowing developers to focus primarily on building and deploying applications. Azure offers an array of PaaS services that cater to different needs:

Azure SQL Database: This fully managed relational database service handles routine database administration tasks, such as patching and backups, for you. It offers high availability, security, and scalability for your data.

Azure Cosmos DB: For globally distributed, highly responsive applications, Azure Cosmos DB is a NoSQL database service that offers SLA-backed low-latency access and can scale throughput automatically.

Azure Functions: A serverless compute service, Azure Functions allows you to run code in response to events without provisioning or managing servers. It's ideal for event-driven architectures.

Azure Logic Apps: This service enables you to automate workflows and integrate various applications and services without writing extensive code. It's great for orchestrating complex business processes.

Benefits of Azure Cloud Hosting and PaaS Services

Scalability: Azure's elasticity allows you to scale resources up or down based on demand. This ensures optimal performance and cost efficiency.

Cost Management: With pay-as-you-go pricing, you only pay for the resources you use. Azure also provides cost management tools to monitor and optimize spending.

High Availability: Azure's data centers are distributed globally, providing redundancy and ensuring high availability for your applications.

Security and Compliance: Azure offers robust security features and compliance certifications, helping you meet industry standards and regulations.

Developer Productivity: PaaS services like Azure App Service and Azure Functions streamline development by handling infrastructure tasks, allowing developers to focus on writing code.

Use Cases for Azure Cloud Hosting and PaaS

Web Applications: Azure App Service is ideal for hosting web applications, enabling easy deployment and scaling without managing the underlying servers.

Microservices: Azure Kubernetes Service supports the deployment and orchestration of microservices, making it suitable for complex applications with multiple components.

Data-Driven Applications: Azure's PaaS offerings like Azure SQL Database and Azure Cosmos DB are well-suited for applications that rely heavily on data storage and processing.

Serverless Architecture: Azure Functions and Logic Apps are perfect for building serverless applications that respond to events in real-time.

In conclusion, Microsoft Azure's Cloud Hosting and PaaS Services provide businesses with the tools they need to harness the power of the cloud while minimizing the complexities of infrastructure management. With scalability, cost-efficiency, and a wide array of services, Azure empowers developers and organizations to innovate and deliver impactful applications. Whether you're hosting a web application, managing data, or adopting a serverless approach, Azure has the tools to support your journey into the cloud.

#Microsoft Azure#Internet of Things#Azure AI#Azure Analytics#Azure IoT Services#Azure Applications#Microsoft Azure PaaS


Accelerating transformation with SAP on Azure

Microsoft continues to expand its cloud presence by building more data centers globally, with over 61 Azure regions available in 140 countries, extending its reach and capabilities to meet customer needs. The transition from a non-cloud environment such as DRDC to a fully cloud-based platform can be made quickly, and a serverless future awaits. Microsoft provides a platform for building and innovating at speed, and it keeps adding capabilities to meet the demand for cloud services, from IaaS and PaaS to data, AI, ML, and IoT. More than 600 services are available on Azure, along with the Cloud Adoption Framework and enterprise-scale landing zones. Many companies see Azure's security and compliance as a significant driver for migration, and Azure holds an extensive list of compliance certifications across the globe. Microsoft services have several beneficial characteristics: broad, deep capabilities suited to any industry; a global network of skilled professionals and partners; expertise across the Microsoft portfolio, covering both technology integration and digital transformation; long-term accountability for addressing complex challenges while mitigating risk; and the flexibility to engage in the way that works for you, with the global reach to serve your target business audience.

SAP and Microsoft Azure

SAP and Microsoft combine industry-specific best practices, reference architectures, and professional services and support to simplify and safeguard your migration to SAP in the cloud and to help manage ongoing business operations now and in the future. The two companies have collaborated to design and deliver a seamless, optimized experience for managing migration and business operations as you move from on-premises editions of SAP solutions to SAP S/4HANA on Microsoft Azure. This approach reduces complexity, minimizes costs, and supports an end-to-end SAP migration and operations strategy, platform, and services. As a result, you can safeguard the cloud migration with out-of-the-box functionality and industry-specific best practices while managing risk and optimizing the IT environment. The migration also brings together best-in-class technologies from SAP and Microsoft on a unified business cloud platform.

SAP Deployment Options on Azure

SAP systems can be deployed on-premises or in Azure, and different systems can be placed in different landscapes in either location. With SAP HANA on Azure (Large Instances), the SAP application layer runs in virtual machines, while the related SAP HANA instance runs on a unit in an 'SAP HANA Azure Large Instance Stamp.' A 'Large Instance Stamp' is a hardware infrastructure stack that is SAP HANA TDI certified and dedicated to running SAP HANA instances within Azure. 'SAP HANA Large Instances' is the official name of the Azure solution for running HANA instances on SAP HANA TDI certified hardware deployed in 'Large Instance Stamps' in different Azure regions. SAP HANA Large Instances (HLI) are physical, bare-metal servers. HLI does not reside in the same data centers as Azure services, but it is in close proximity and connected through high-throughput links to satisfy SAP HANA network latency requirements. HLI comes in two flavors: Type 1 and Type 2.

Alternatively, with IaaS you can install SAP HANA on an Azure virtual machine. Running SAP HANA on IaaS supports more Linux versions than HLI. SAP NetWeaver, by contrast, can be installed on both Windows and Linux IaaS virtual machines on Azure: SAP HANA runs only on SUSE and Red Hat, while NetWeaver can run on Windows (for example, with SQL Server) and Linux.

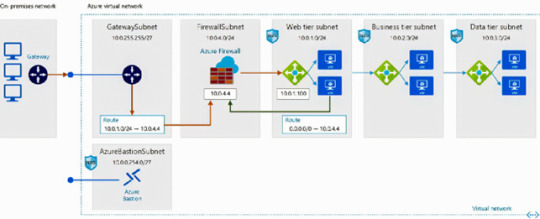

Azure Virtual Network

Azure Virtual Network (VNET) is the core foundation of an infrastructure implementation on Azure. A VNET acts as a communication boundary for the resources that need to communicate with each other. You can have multiple VNETs in a subscription. Unless they are connected, VNETs are isolated from one another and no traffic flows between them; isolated VNETs can even share the same IP range. Understanding the requirements and setting them up properly is essential, because changing them later, especially with production workloads running, could cause downtime.

When you provision a VNET, you must allocate its address space, typically from the private IP address blocks. If you plan to connect multiple VNETs, their address spaces cannot overlap. Likewise, when connecting on-premises networks to Azure via ExpressRoute or a site-to-site VPN, the on-premises IP ranges must not clash or overlap with the IP addressing in Azure. Within the configured address space, Azure assigns IP addresses to resources much like a DHCP service, and you can configure a VNET with custom DNS server IP addresses to resolve on-premises services. A VNET can be split into subnets, which can communicate freely with each other. Network security groups (NSGs) are the mechanism used to filter this traffic: they hold simple but stateful firewall rules based on source and destination IP addresses and ports.
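Checking an address plan for overlaps before provisioning is easy to automate. The following is a minimal sketch using Python's standard `ipaddress` module; the VNET names and CIDR ranges are hypothetical, and a real check would also cover every subnet and any peered or on-premises range you intend to connect.

```python
import ipaddress
from itertools import combinations


def find_overlaps(address_spaces: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of named CIDR ranges that overlap."""
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in address_spaces.items()}
    return [
        (a, b)
        for a, b in combinations(networks, 2)
        if networks[a].overlaps(networks[b])
    ]


# Hypothetical address plan: two VNETs plus the on-premises range reached via ExpressRoute.
plan = {
    "vnet-sap-prod": "10.10.0.0/16",
    "vnet-sap-nonprod": "10.20.0.0/16",
    "on-premises": "10.10.128.0/20",
}

for a, b in find_overlaps(plan):
    print(f"Conflict: {a} overlaps {b}")  # prints: Conflict: vnet-sap-prod overlaps on-premises
```

Here the on-premises block falls inside the production VNET's range, so the two networks could not be connected without re-addressing one of them.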

Azure Virtual Gateway

For extended connectivity (for example, to on-premises networks), you must create a gateway subnet and a virtual network gateway. When you create a virtual network gateway, you are prompted to choose between two types: VPN or ExpressRoute. A VPN gateway cannot connect to an ExpressRoute circuit; if you choose the ExpressRoute gateway, you can combine both.

There are two types of VPN:

1) A point-to-site VPN is used for testing and gives the lowest throughput.

2) A site-to-site VPN connection offers more, because it bridges entire networks.

A VPN connection offers no SLA-backed guarantees for latency or throughput, so use it as a backup for the recommended connection to Azure, ExpressRoute. ExpressRoute is a dedicated circuit, using hardware installed in your data center, with a constant link to Microsoft Azure edge devices. ExpressRoute is essential for communication between application VNETs running in Azure, on-premises systems, and HLI servers. It is more secure and more resilient than a VPN: it provides a private connection through a dedicated circuit with a redundant secondary connection, which helps keep latency low for traffic between the SAP application servers inside Azure and HLI. Furthermore, the FastPath feature routes traffic between SAP application servers inside an Azure VNET and HLI through an optimized path that bypasses the virtual network gateway and hops directly through the edge routers to the HLI servers. FastPath requires an Ultra Performance ExpressRoute gateway.

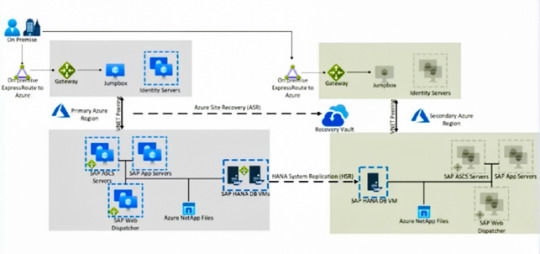

SAP HANA Architecture (VM)

This design is centered on an SAP HANA backend running on SUSE or Red Hat Linux distributions. Even though the Linux OS implementation is the same, the vendor licensing differs. The design uses continuous replication, combining synchronous and asynchronous HANA System Replication (HSR), to meet the HANA database requirements. It also introduces NetApp file shares for the NFS volumes used by each SAP component, and it uses Azure Site Recovery to build a DR plan for the application servers, ASCS, and the Web Dispatcher servers. Azure Active Directory is synchronized with the on-premises Active Directory, so SAP application users authenticate from on-premises to the SAP landscape on Azure with single sign-on (SSO) credentials.

An Azure high-speed ExpressRoute gateway securely connects on-premises networks to Azure virtual machines and other resources. Requests flow into the highly available SAP Central Services (ABAP SAP Central Services, ASCS) and through SAP application servers running on Azure virtual machines. On-demand requests then move from the SAP application server to the SAP HANA server running on a high-performance Azure VM. The primary (active) and secondary (standby) servers run on SAP-certified virtual machines with a cluster availability of 99.95 percent at the OS level. Data replication is handled through HSR in synchronous mode from primary to secondary, enabling a recovery point objective (RPO) of zero. SAP HANA data is also replicated to a disaster recovery VM in another Azure region over the Azure high-speed backbone network, using HSR in asynchronous mode. The disaster recovery VM can be smaller than the production VM to save costs.
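To put an availability figure such as 99.95 percent in concrete terms, the downtime budget is the unavailable fraction multiplied by the length of the period. The small helper below is hypothetical (not part of any Azure tool) and only makes the arithmetic explicit; since Azure SLAs are measured per month, the period you choose matters.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def max_downtime_minutes(availability_percent: float, period_hours: float = HOURS_PER_YEAR) -> float:
    """Maximum downtime over a period implied by an availability percentage."""
    unavailable_fraction = 1 - availability_percent / 100
    return unavailable_fraction * period_hours * 60


print(round(max_downtime_minutes(99.95), 1))           # 262.8 minutes per year
print(round(max_downtime_minutes(99.95, 30 * 24), 1))  # 21.6 minutes per 30-day month
```

At 99.95 percent, that is roughly 4.4 hours per year, or about 22 minutes in a 30-day month.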

SAP systems are network sensitive, so network design decisions, such as how VNETs and NSGs are segmented, must take this into account. To ensure network reliability, use low-latency cross-connections with sufficient bandwidth and no packet loss. SAP is very sensitive to these metrics, and you could experience significant issues if traffic between the application and the SAP system suffers latency or packet loss. Proximity placement groups (PPGs) can be used to force different VM types into a single Azure data center, minimizing the network latency between them.

Security Considerations

Security is another core pillar of any design. Azure role-based access control (RBAC) governs access through the Azure management plane. RBAC is backed by Azure AD, using either cloud-only or synchronized identities, and it ties those identities to the Azure tenant, where you can grant individuals access to Azure for operational purposes.

Network security groups are vital for securing network traffic both within and outside the network environment. NSGs are stateful firewalls that preserve session information. You can associate a single NSG with each subnet, and multiple subnets can share the same NSG. Application security groups (ASGs) group servers by function, such as web servers, application servers, or backend database servers that combine to perform a meaningful service, so that rules can target those groups.

Resource encryption complements these network controls. SAP recommends encryption at rest: for Azure storage accounts, Storage Service Encryption can use either Microsoft-managed or customer-managed keys. Azure Storage also supports encryption in transit, using SSL/TLS for HTTPS traffic. You can use Azure Disk Encryption (ADE) for the OS disks and DBMS-level encryption for SQL Server.
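NSG rules are evaluated in priority order, lowest number first, and processing stops at the first rule that matches. The sketch below models that first-match behavior for inbound traffic only. It is a simplification: it ignores protocols, destination addresses, Azure's built-in default rules (approximated here by a catch-all deny), and the stateful handling of return traffic. The rule names and subnets are hypothetical; 30015 is the SQL port of a HANA instance numbered 00.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    name: str
    priority: int             # lower number = evaluated first
    source: str               # source CIDR block
    dest_port: Optional[int]  # None means any port
    allow: bool


def evaluate(rules: list[Rule], source_ip: str, dest_port: int) -> str:
    """Return the first matching rule and its action, checking rules by ascending priority."""
    ip = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.priority):
        if ip in ipaddress.ip_network(rule.source) and rule.dest_port in (None, dest_port):
            return f"{rule.name}: {'Allow' if rule.allow else 'Deny'}"
    return "catch-all: Deny"  # stands in for Azure's default deny rule


# Hypothetical inbound rules for a HANA database subnet, deliberately listed out of order.
rules = [
    Rule("deny-rest-of-vnet", 200, "10.10.0.0/16", None, False),
    Rule("allow-app-to-hana", 100, "10.10.1.0/24", 30015, True),
]

print(evaluate(rules, "10.10.1.25", 30015))  # allow-app-to-hana: Allow
print(evaluate(rules, "10.10.2.7", 30015))   # deny-rest-of-vnet: Deny
```

Because the list is sorted by priority before matching, the order in which rules are written does not matter; only their priority numbers do.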

Migration of SAP Workloads to Azure

The most critical part of the migration is understanding what you plan to migrate and accounting for dependencies, limitations, or even blockers that might stop your migration. Following an appropriate inventory process will help your migration complete successfully. You can use the tools you already have to understand the current SAP landscape in the migration scope; for example, your ServiceNow or CMDB catalog might reveal some of the data that describes your SAP systems. Then use that information to start drafting your sizing in Azure. It is essential to have a record of the current environment configuration, such as the number of servers, their names and roles, and their CPU and memory. It is also essential to capture disk sizes, configuration, and throughput so that your design delivers a better experience in Azure, and to understand database replication and the throughput requirements around replicas.

When performing a migration, sizing for HANA Large Instances is no different from sizing for HANA in general. For existing deployments that you want to move from another RDBMS to HANA, SAP provides several reports that run on your existing SAP systems. If you are migrating the database to HANA, these reports check the data and calculate the memory requirements for the HANA instances.
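The inventory described above can start as a simple structured record per server. Here is a minimal sketch, assuming a hypothetical `Server` record with hand-collected CPU, memory, and disk figures, that totals them per SAP system as a first input to Azure sizing. It does not replace SAP's own sizing reports for HANA.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    sap_system: str  # business function, e.g. "ERP" or "BW"
    role: str        # e.g. "app", "ascs", "db"
    vcpus: int
    memory_gb: int
    disk_gb: int


def totals_by_system(servers: list[Server]) -> dict[str, dict[str, int]]:
    """Sum server count, vCPUs, memory, and disk for each SAP system."""
    totals: dict[str, dict[str, int]] = {}
    for s in servers:
        t = totals.setdefault(
            s.sap_system, {"servers": 0, "vcpus": 0, "memory_gb": 0, "disk_gb": 0}
        )
        t["servers"] += 1
        t["vcpus"] += s.vcpus
        t["memory_gb"] += s.memory_gb
        t["disk_gb"] += s.disk_gb
    return totals


# Hypothetical inventory, e.g. exported from a CMDB.
inventory = [
    Server("erpapp01", "ERP", "app", 16, 128, 256),
    Server("erpapp02", "ERP", "app", 16, 128, 256),
    Server("erpdb01", "ERP", "db", 64, 1024, 4096),
    Server("bwdb01", "BW", "db", 32, 512, 2048),
]

for system, t in totals_by_system(inventory).items():
    print(system, t)
```

Keeping the role on each record also makes it easy to split the totals by tier later, for example to size application and database VMs separately.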

When evaluating high availability and disaster recovery requirements, consider the implications of choosing between two-tier and three-tier architectures. In a two-tier configuration, the database and NetWeaver components are installed on the same Azure VM, which avoids network contention between them. In a three-tier configuration, the database and application components are installed on separate Azure virtual machines. This choice also affects sizing, because the SAPS ratings for a given VM differ between two-tier and three-tier configurations. High availability is not mandatory for the SAP application servers.

You can achieve high availability through redundancy, for example by installing individual application servers on separate Azure VMs. For ASCS and SCS servers running on Windows, you can achieve high availability using Windows failover clustering with SIOS DataKeeper. On Linux, high availability can be achieved with Linux clustering using Azure NetApp Files. For DBMS servers, use database replication technology with redundant nodes. Azure also provides high availability through the redundancy of its infrastructure and through capabilities such as Azure VM restarts, which play an essential role in single-VM deployments. In addition, Azure offers different SLAs depending on your configuration. SAP landscapes typically organize SAP servers into tiers, with three landscapes: development, quality assurance, and production.

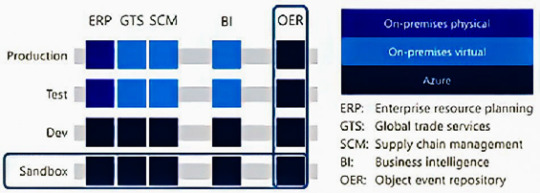

Migration Strategies: SAP Landscapes to Azure

Enterprises run SAP systems for business functions such as enterprise resource planning (ERP), global trade, business intelligence (BI), and others. Within those systems, there are different environments, such as sandbox, development, test, and production. Viewed as a grid, each horizontal row is an environment, and each vertical column is the SAP system for a business function. The layers at the bottom are lower-risk, less critical environments; those toward the top are higher-risk and more critical. As you move up the stack, the migration carries more risk, and production is the most critical environment. Using test environments for business continuity is also a concern. The systems at the bottom are smaller, with fewer computing resources, lower availability and sizing requirements, and less throughput, although they may have the same amount of storage as the production database. Alongside a horizontal migration strategy, you can run a low-risk vertical approach in parallel to gain experience with production systems on Azure.

Horizontal Migration Strategy

To limit risk, start with low-impact sandbox or training systems; if something goes wrong, there is little danger to users or mission-critical business functions. After gaining experience in hosting, running, and administering SAP systems in Azure, apply that experience to the next layer of systems up the stack. Then estimate costs, expenditure limits, performance, and optimization potential for each layer, and adjust if needed.

Vertical Migration Strategy

Keep costs and legal requirements in view. Move systems from sandbox to production one system at a time, starting with the lowest-risk systems. First, move systems such as governance, risk, and compliance (GRC) and the Object Event Repository to production; then move higher-risk elements such as BI and ERP. When you have a new system, it's better to start it in Azure by default rather than putting it on-premises and moving it later. The last system you move is the highest-risk, mission-critical system, usually the ERP production system, which needs the most powerful virtual machines, SQL database, and extensive storage. Consider migrating standalone systems first. If you have multiple SAP systems, always look for upstream and downstream dependencies between them.
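One way to respect those dependencies when ordering the moves is a topological sort. This sketch uses Python's standard `graphlib` module (Python 3.9+) to group systems into waves, where a system is scheduled only after every system listed for it has moved. The landscape and the `move_after` relationships are hypothetical, chosen to mirror the order described above (GRC and the Object Event Repository first, then BI, then ERP last). In practice, tightly coupled systems may need to move together in the same wave instead.

```python
from graphlib import TopologicalSorter


def migration_waves(move_after: dict[str, set[str]]) -> list[list[str]]:
    """Group systems into waves; each system follows every system it is mapped to."""
    sorter = TopologicalSorter(move_after)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies are circular
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # systems whose prerequisites have all moved
        waves.append(ready)
        sorter.done(*ready)
    return waves


# Hypothetical landscape: system -> systems that must already be in Azure before it moves.
move_after = {
    "ERP": {"BI", "GRC"},
    "BI": {"OER"},
    "GRC": set(),
    "OER": set(),
}

print(migration_waves(move_after))  # [['GRC', 'OER'], ['BI'], ['ERP']]
```

A circular dependency makes `prepare()` fail, which is a useful signal that those systems probably belong in the same wave.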

Journey to SAP on Azure

Two main factors drive the migration of SAP HANA to the cloud. The first is the end of life of first-generation HANA appliances, which causes customers to reevaluate their platform. The second is the desire to take advantage of the early value proposition of SAP Business Warehouse (BW) on HANA in a flexible DDA model over traditional databases, and later BW/4HANA. As a result, many initial migrations of SAP HANA to Microsoft Azure have focused on SAP BW, to take advantage of SAP HANA's in-memory capability for BW workloads. In addition, using the SAP Database Migration Option (DMO) with the System Migration option of the Software Update Manager (SUM) allows a single-step migration from the source system on-premises to the target system in Azure, which minimizes overall downtime. In general, when starting a project to deploy SAP workloads to Azure, divide it into the following phases: project preparation and planning, pilot, non-production, production preparation, go-live, and post-production.

Use Cases for SAP Implementation in Microsoft Azure

| Use case | How does Microsoft Azure help? | How do organizations benefit? |
|---|---|---|
| Deliver automated disaster recovery with low RPO and RTO | Azure recovery services replicate on-premises virtual machines to Azure and orchestrate failover and failback | RPO and RTO are reduced, and the cost of owning disaster recovery (DR) infrastructure falls; while the DR systems replicate, the only cost incurred is storage |
| Let development teams make timely changes to SAP workloads | Infrastructure provisioning and rollout 200-300 times faster than on-premises, enabling more rapid changes by SAP application teams | Increased agility and the ability to provision instances within 20 minutes |
| Fund intermittently used development and test infrastructure for SAP workloads | Development and test systems can be stopped at the end of the business day | Savings of as much as 40-75 percent in hosting costs by controlling instances when they are not in use |
| Increase data center capacity to serve updated SAP project requests | Frees on-premises data center capacity by moving SAP development and test workloads to Microsoft Azure without upfront investment | Flexibility to shift from capital to operational expenditures |
| Provide consistent training environments based on templates | Ability to store and use predefined images of the training environment for updated virtual machines | Cost savings by provisioning only the instances needed for training and deleting them when the event is complete |
| Archive historical systems for auditing and governance | Supports migrating physical machines to virtual machines that are activated when needed | Savings of as much as 60 percent due to cheaper storage and the ability to quickly spin up systems as needed |
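The 40-75 percent range for stopping development and test systems depends mainly on how many hours the systems actually run. As a rough, compute-only illustration (the VM disks are still billed while the VMs are stopped and deallocated), the share of weekly VM hours avoided by a schedule is one minus the running hours divided by the 168 hours in a week. The schedule below is hypothetical.

```python
HOURS_PER_WEEK = 7 * 24  # 168


def compute_hours_saved(hours_per_day: float, days_per_week: int) -> float:
    """Fraction of weekly VM compute hours avoided by running only on a fixed schedule."""
    running_hours = hours_per_day * days_per_week
    return 1 - running_hours / HOURS_PER_WEEK


# Hypothetical schedule: dev/test VMs run 12 hours a day, Monday to Friday.
print(f"{compute_hours_saved(12, 5):.0%}")  # 64%
```

A 60-hour week avoids about 64 percent of compute hours, which sits inside the quoted range; actual savings also depend on VM pricing and storage costs.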



Django Best Practices for Building Secure and Scalable Web Applications

Django is a popular high-level Python web framework that makes it easy to build secure and scalable web applications. With its clean and simple syntax, Django allows developers to quickly and easily build complex web applications without having to worry about the underlying infrastructure. In this article, we will outline the best practices for building secure and scalable Django web applications.

1. Keep Your Django Version Up to Date

One of the most important things you can do to ensure the security of your Django web application is to keep your Django version up to date. This is because new releases of Django often include security updates that fix known vulnerabilities. Additionally, new releases of Django often include new features and performance improvements that can help you build better and faster web applications.

2. Use Secure Passwords

It is important to use strong passwords when building Django web applications. This includes not only the passwords used by users, but also the passwords used by administrators and other system accounts. Strong passwords should be at least 12 characters long and include a mix of upper and lowercase letters, numbers, and special characters.
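Django can enforce part of this policy itself through the `AUTH_PASSWORD_VALIDATORS` setting, which Django's password forms and `validate_password()` apply. Below is a sketch of a `settings.py` fragment that raises the built-in minimum length from Django's default of 8 to 12. None of the built-in validators require a mix of character types, so that part of the policy would need a custom validator class.

```python
# settings.py (fragment)
AUTH_PASSWORD_VALIDATORS = [
    # Rejects passwords too similar to the username, email, etc.
    {"NAME": "django.contrib.auth.password_validation.UserAttributeSimilarityValidator"},
    {
        "NAME": "django.contrib.auth.password_validation.MinimumLengthValidator",
        "OPTIONS": {"min_length": 12},  # Django's default minimum is 8
    },
    # Rejects passwords found in a list of common passwords.
    {"NAME": "django.contrib.auth.password_validation.CommonPasswordValidator"},
    # Rejects entirely numeric passwords.
    {"NAME": "django.contrib.auth.password_validation.NumericPasswordValidator"},
]
```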

3. Validate User Input

One of the most common ways that attackers can compromise a Django web application is by exploiting vulnerabilities in user input. This is why it is important to validate all user input before accepting it. This includes validating the format, length, and type of input, as well as checking for malicious content.
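In a Django project this validation usually lives in a form (`django.forms`) or in model field validators, which also handle type conversion. To show the underlying idea without Django, here is a framework-free sketch of allowlist validation: accept only input that matches an expected pattern, range, and type. The field names and limits are hypothetical. Validation complements, rather than replaces, Django's template auto-escaping and the ORM's parameterized queries.

```python
import re

# Hypothetical policy: usernames of 3-30 letters, digits, or underscores.
USERNAME_RE = re.compile(r"[A-Za-z0-9_]{3,30}")


def validate_signup(data: dict) -> list[str]:
    """Allowlist validation: return a list of errors (empty means the input is acceptable)."""
    errors = []
    username = data.get("username")
    if not isinstance(username, str) or not USERNAME_RE.fullmatch(username):
        errors.append("username must be 3-30 letters, digits, or underscores")
    age = data.get("age")
    # bool is a subclass of int in Python, so reject it explicitly
    if not isinstance(age, int) or isinstance(age, bool) or not 13 <= age <= 120:
        errors.append("age must be an integer between 13 and 120")
    return errors


print(validate_signup({"username": "alice_01", "age": 30}))   # []
print(validate_signup({"username": "<script>", "age": "30"}))  # two errors
```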

4. Use SSL/TLS Encryption

Another important aspect of building a secure Django web application is using SSL/TLS encryption to protect sensitive information in transit, such as passwords, credit card numbers, and other sensitive data. SSL/TLS encrypts the information transmitted between the user and the server, so an attacker who intercepts it cannot read or modify it.
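The certificate itself is usually installed on the web server or load balancer, but Django has settings that make the application insist on HTTPS. Below is a sketch of a production `settings.py` fragment using Django's security settings. The one-year HSTS value is a common choice rather than a requirement, and `SECURE_PROXY_SSL_HEADER` should only be set when a trusted proxy sets that header. Running `python manage.py check --deploy` reports which of these settings are missing.

```python
# settings.py (production fragment)
SECURE_SSL_REDIRECT = True        # redirect every HTTP request to HTTPS
SESSION_COOKIE_SECURE = True      # send the session cookie only over HTTPS
CSRF_COOKIE_SECURE = True         # send the CSRF cookie only over HTTPS
SECURE_HSTS_SECONDS = 31536000    # ask browsers to use only HTTPS for one year
SECURE_HSTS_INCLUDE_SUBDOMAINS = True

# Only if a trusted reverse proxy or load balancer terminates TLS and sets this header:
SECURE_PROXY_SSL_HEADER = ("HTTP_X_FORWARDED_PROTO", "https")
```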

5. Implement Access Control

Access control is an important aspect of building a secure web application. In Django, access control can be implemented using a combination of authentication and authorization. Authentication is the process of verifying that a user is who they claim to be, while authorization is the process of determining what actions a user is allowed to perform.
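Django provides this through `request.user`, `user.has_perm()`, and decorators such as `login_required` and `permission_required` in `django.contrib.auth.decorators`. To make the two steps explicit, here is a framework-free sketch. The `User` class, the exceptions, and the `require_permission` decorator are hypothetical stand-ins, not Django's API.

```python
from dataclasses import dataclass, field
from functools import wraps


class NotAuthenticated(Exception):
    """Raised when the caller has not proven who they are."""


class Forbidden(Exception):
    """Raised when an authenticated caller may not perform the action."""


@dataclass
class User:  # hypothetical stand-in for Django's request.user
    username: str
    is_authenticated: bool
    permissions: set[str] = field(default_factory=set)

    def has_perm(self, perm: str) -> bool:
        return perm in self.permissions


def require_permission(perm: str):
    """Check authentication first (who are you?), then authorization (what may you do?)."""
    def decorator(view):
        @wraps(view)
        def wrapper(user: User, *args, **kwargs):
            if not user.is_authenticated:
                raise NotAuthenticated("log in first")
            if not user.has_perm(perm):
                raise Forbidden(f"{user.username} lacks {perm}")
            return view(user, *args, **kwargs)
        return wrapper
    return decorator


@require_permission("billing.delete_invoice")
def delete_invoice(user: User, invoice_id: int) -> str:
    return f"invoice {invoice_id} deleted by {user.username}"


alice = User("alice", True, {"billing.delete_invoice"})
print(delete_invoice(alice, 7))  # invoice 7 deleted by alice
```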

6. Use the Django Debug Toolbar

The Django Debug Toolbar is a powerful development tool that can help you identify and fix performance issues in your Django web application. It provides a wealth of information about your application, including timing data, the SQL queries executed for each request, and other details that help you optimize your application and spot problems early. Because it exposes internal details of your application, it should only be enabled during development, never in production.

7. Monitor Your Logs

Monitoring your logs is an important part of building a secure and scalable Django web application. Logs can provide valuable information about the performance and security of your application, including error messages, access logs, and performance metrics. Regularly reviewing your logs can help you identify and fix issues with your application before they become major problems.
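Django configures logging from the `LOGGING` setting, a dictionary in the standard library's `logging.config.dictConfig` format. Below is a minimal sketch that sends the `django.request` logger (4xx responses at WARNING, 5xx at ERROR) and the `django.security` logger (suspicious operations such as a disallowed Host header) to the console, where a container platform or log shipper can collect them. The console handler is an assumption; many deployments write to files or a central logging service instead.

```python
import logging
import logging.config

LOGGING = {
    "version": 1,
    "disable_existing_loggers": False,
    "formatters": {
        "verbose": {"format": "{asctime} {levelname} {name} {message}", "style": "{"},
    },
    "handlers": {
        "console": {"class": "logging.StreamHandler", "formatter": "verbose"},
    },
    "loggers": {
        # request errors: 5xx logged at ERROR, 4xx at WARNING
        "django.request": {"handlers": ["console"], "level": "WARNING", "propagate": False},
        # security events; child loggers such as django.security.DisallowedHost propagate here
        "django.security": {"handlers": ["console"], "level": "WARNING", "propagate": False},
    },
}

if __name__ == "__main__":
    # Django applies LOGGING itself at startup; calling dictConfig here only
    # demonstrates that the dictionary is a valid logging configuration.
    logging.config.dictConfig(LOGGING)
    logging.getLogger("django.security.DisallowedHost").warning("demo: invalid HTTP_HOST header")
```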

8. Regularly Back Up Your Data

Regularly backing up your data is an important part of building a secure and scalable Django web application. Backups can protect your data in the event of a hardware failure, a security breach, or other unexpected event. It is important to store backups in a secure location, such as an off-site server or a cloud-based storage service.
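For a project on Django's default SQLite database, a consistent copy can be taken with the online backup API in Python's standard `sqlite3` module, even while the application is running. Here is a minimal sketch; the paths are hypothetical, and production databases such as PostgreSQL or MySQL would use their own tools (for example `pg_dump` or `mysqldump`). The resulting file still needs to be copied to a secure off-site location.

```python
import sqlite3
from datetime import datetime, timezone
from pathlib import Path


def backup_sqlite(db_path: str, backup_dir: str) -> Path:
    """Copy a SQLite database to a timestamped file using SQLite's online backup API."""
    Path(backup_dir).mkdir(parents=True, exist_ok=True)
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    target = Path(backup_dir) / f"{Path(db_path).stem}-{stamp}.sqlite3"
    src = sqlite3.connect(db_path)
    dst = sqlite3.connect(target)
    try:
        with dst:
            src.backup(dst)  # produces a consistent copy even if the database is in use
    finally:
        src.close()
        dst.close()
    return target


# Hypothetical usage for a Django project:
# backup_sqlite("db.sqlite3", "/var/backups/myproject")
```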

9. Use a Content Delivery Network (CDN)

Using a content delivery network (CDN) can help you improve the performance and scalability of your Django web application. A CDN caches your content on servers around the world, so that it is closer to users, reducing the time it takes for content to be delivered. This can help reduce server load and improve the overall performance of your application, making it more scalable.
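With Django, one common pattern is to serve static files through the CDN by pointing `STATIC_URL` at the CDN hostname and using hashed filenames, so cached copies can be kept for a long time and are replaced automatically when a file changes. Below is a sketch of the relevant `settings.py` fragment, assuming Django 4.2 or later (which introduced the `STORAGES` setting). `cdn.example.com` and the `STATIC_ROOT` path are placeholders, and the CDN must be configured separately to pull from wherever the `collectstatic` output is served.

```python
# settings.py (fragment). cdn.example.com is a placeholder for your CDN hostname.
STATIC_URL = "https://cdn.example.com/static/"
STATIC_ROOT = "/var/www/myproject/static"  # hypothetical path; collectstatic writes here

STORAGES = {
    "default": {"BACKEND": "django.core.files.storage.FileSystemStorage"},
    # Adds a content hash to each collected filename, so long CDN cache lifetimes are safe.
    "staticfiles": {"BACKEND": "django.contrib.staticfiles.storage.ManifestStaticFilesStorage"},
}
```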

10. Test Your Web Application Regularly

Regularly testing your web application is an important part of building a secure and scalable Django web application. This includes both functional testing, which verifies that the application is working as expected, and security testing, which identifies potential vulnerabilities in the application. By testing your web application regularly, you can identify and fix issues before they become major problems.

Conclusion

Building a secure and scalable Django web application requires a combination of good coding practices, regular maintenance, and attention to detail. By following the best practices outlined in this article, you can help ensure that your Django web application is robust, reliable, and secure. With a focus on security, performance, and scalability, you can build a high-quality Django web application that will meet the needs of your users for years to come.

At Capital Numbers, we offer comprehensive Django development services to help businesses build secure and scalable web applications. Our team of experienced Django developers leverages the latest technologies and best practices to deliver customized solutions that meet your unique business needs. From concept to deployment, we work closely with our clients to understand their requirements and provide tailored solutions that meet their goals. With a focus on quality, efficiency, and innovation, we help businesses build robust web applications that drive growth and improve user engagement. Whether you need a simple website or a complex web application, our team of experts is ready to help you achieve your goals with Django. Contact us and hire Django developers today.

#django#django development agencies#hire django developer#django development#django developers for hire#python django development


With SQL Server, Oracle, MySQL, MongoDB, PostgreSQL, and more, we are your dedicated partner in managing, optimizing, securing, and supporting your data infrastructure.

For more, visit: https://briskwinit.com/database-services/
