Complex Regulation is Bad Regulation: We Need Simple Enduser Rights

Readers of this blog and/or my book know that I am pro-regulation as a way of getting the best out of technological progress. One topic I have covered repeatedly over the years is the need to get past app store lock-in. The European Digital Markets Act was supposed to accomplish this, but Apple gave it the middle finger by figuring out a way to comply with the letter of the law while going against its spirit.

We have gone down a path for many years now where regulation has become ever more complex. One argument would be that this is simply a reflection of the complexity of the world we live in. "A complex world requires complex laws" sounds reasonable. And yet it is fundamentally mistaken.

When faced with increasing complexity, we need regulation that firmly enshrines basic principles. And we need to build a system of law that can effectively apply these principles. Otherwise all we are doing is making a complex world more complex. Complexity has, of course, been in the interest of large corporations, which employ armies of lawyers to exploit it (and often help create and maintain complexity through lobbying). Tax codes around the world are a great example of this process.

So what are the principles I believe need to become law in order for us to have more "informational freedom"?

A right to API access

A right to install software

A right to third party support and repair

In return, manufacturers of hardware and providers of software can void warranties and refuse support when these rights are exercised. In other words: endusers proceed at their own risk.

Why not give corporations the freedom to offer products any which way they want? After all, nobody is forced to buy an iPhone; they could buy an Android phone instead. This is a perfectly fine argument for highly competitive markets. For example, it would not make sense to require restaurants to sell you just the ingredients instead of the finished meal (you can buy ingredients from a store separately any time and cook yourself). But Apple has massive market power, as can easily be seen from its extraordinary profitability.

So yes, regulation is needed: simple, clear rights for endusers, who can delegate these rights to third parties they trust. We deserve more freedom over our devices and over the software we interact with. Too much control in the hands of a few large corporations is bad for innovation and ultimately bad for democracy.

The World After Capital: All Editions Go, Including Audio

My book, The World After Capital, has been available online and as hardcover for a couple of years now. I have had frequent requests for other editions and I am happy to report that they are now all available!

One of the biggest requests was for an audio version. So this past summer I recorded one which is now available on Audible.

Yes, of course I could have had AI do this, but I really wanted to read it myself, both because I think listeners will feel more of a connection to me and because I wanted to see how the book has held up. I am happy to report that I felt it had become more relevant in the intervening years.

There is also a Kindle edition, as well as a paperback one (for those of you who like to travel light and/or crack the spine). If you have already read the book, please leave a review on Amazon; this helps with discoverability and I welcome the feedback.

In keeping with the spirit of the book, you can still read The World After Capital directly on the web and even download an ePub version for free (registration required).

If you are looking for holiday gift ideas: it's not too late to give The World After Capital as a present to friends and family ;)

PS Translations into other languages are coming!

AI Safety Between Scylla and Charybdis and an Unpopular Way Forward

I am unabashedly a technology optimist. For me, however, that means making choices for how we will get the best out of technology for the good of humanity, while limiting its negative effects. With technology becoming ever more powerful, there is a huge premium on getting this right, as the downsides now include existential risk.

Let me state upfront that I am super excited about progress in AI and what it can eventually do for humanity if we get this right. We could be building the capacity to turn Earth into a kind of garden of Eden, where we get out of the current low energy trap and live in a World After Capital.

At the same time there are serious ways of getting this wrong, which led me to write a few posts about AI risks earlier this year. Since then the AI safety debate has become more heated with a fair bit of low-rung tribalism thrown into the mix. To get a glimpse of this one merely needs to look at the wide range of reactions to the White House Executive Order on Safe, Secure and Trustworthy Development and Use of Artificial Intelligence. This post is my attempt to point out what I consider to be serious flaws in the thinking of two major camps on AI safety and to mention an unpopular way forward.

First, let’s talk about the “AI safety is for wimps” camp, which comes in two forms. One is the happy-go-lucky view represented by Marc Andreessen’s “Techno-Optimist Manifesto” and his preceding tweet thread. This view dismisses critics who dare to ask social or safety questions as Luddites and shills.

So what’s the problem with this view? Dismissing AI risks doesn’t actually make them go away. And it is extremely clear that at this moment in time we are not really set up to deal with the problems. On the structural risk side, we already have extreme levels of income and wealth inequality, and recent AI advances have already been shown to further accelerate this discrepancy.

On the existential risk side, there is recent work by Kevin Esvelt et al. showing how LLMs can broaden access to pandemic agents, and by Jeffrey Ladish et al. demonstrating how cheap it is to remove safety training from an open source model with published weights. This type of research clearly shows that as open source models rapidly become more powerful, they can be leveraged for very bad things, and that it continues to be super easy to strip away the safeguards that people claim can be built into open source models.

This is a real problem. And people like myself, who have strongly favored permissionless innovation, would do well to acknowledge it and figure out how to deal with it. I have a proposal for how to do that below.

But there is one intellectually consistent way to continue full steam ahead that is worth mentioning. Marc Andreessen cites Nick Land as an inspiration for his views. In Meltdown, Land wrote the memorable line “Nothing human makes it out of the near-future”. AI as a path to a post-human future is the view embraced by the e/acc movement. Here AI risks aren’t so much dismissed as simply accepted as the cost of progress. My misgiving with this view is that I love humanity and believe we should do our utmost to preserve it (my next book, which I have started working on, will have a lot more to say about this).

Second, let’s consider the “We need AI safety regulation now” camp, which again has two subtypes. One is “let regulated companies carry on” and the other is “stop everything now.” Again both of these have deep problems.

The idea that we can simply let companies carry on with some relatively mild regulation suffers from three major deficiencies. First, it risks leading us toward highly concentrated market power, and we have seen the problems of this in tech again and again (it has been a long-standing topic on my blog). For AI, market power will be particularly pernicious because this technology will eventually power everything around us, so handing control to a few corporations is a bad idea. Second, the incentives of for-profit companies aren’t easily aligned with safety (and yes, I include OpenAI here: it has in theory capped investor returns but keeps raising money at ever higher valuations, so what’s the point?).

But there is an even deeper third deficiency of this approach, and it is best illustrated by the second subtype, which essentially wants to stop all progress. At its most extreme this is a Ted Kaczynski anti-technology vision. The problem with this, of course, is that it requires equipping governments with extraordinary power to prevent open source / broadly accessible technology from being developed. And this is an enormous unacknowledged implication of much of the current pro-regulation camp's position.

Let me give a couple of examples. It has long been argued that code is speech and hence protected by the First Amendment. We can of course go back and revisit what protections should apply to “code as speech,” but the proponents of “let regulated companies go ahead with closed source AI” don’t seem to acknowledge that they are effectively asking governments to suppress what can be published as open source (otherwise, why bother at all?). Over time, government would have to regulate technology development ever more tightly to sustain this type of regulated approach. Faster chips? Government says who can buy them. New algorithms? Government says who can access them. And so on. Sure, we have done this in some areas before, such as nuclear bomb research, but these were narrow fields, whereas AI is a general purpose technology that affects all of computation.

So this is the conundrum. Dismissing AI safety (Scylla) only makes sense if you go full-on post-humanist, because the risks are real. Calling for AI safety through oversight (Charybdis) doesn’t acknowledge that way too much government power is required to sustain this approach.

Is there an alternative option? Yes, but it is highly unpopular and also hard to get to from here. In fact I believe we can only get there if we make lots of other changes, which together could take us from the Industrial Age to what I call the Knowledge Age. For more on that you can read my book The World After Capital.

For several years now I have argued that technological progress and privacy are incompatible. The reason for this is entropy, which means that our ability to destroy will always grow faster than our ability to (re)build. I gave a talk about it at the Stacks conference in Berlin in 2018 (funny side note: I spoke right after Edward Snowden gave a full throated argument for privacy) and you can read a fuller version of the argument in my book.

The only solution other than draconian government is to embrace a post-privacy world: a world in which it can easily be discovered that you are building a super dangerous bioweapon in your basement before you have succeeded in releasing it. In this kind of world we can have technological progress but also safeguard humanity, in part by using aligned super intelligences to detect what is happening. And yes, I believe it is possible to create versions of AGI that have deep inner alignment with humanity that cannot easily be removed. Extremely hard, yes, but possible (more on this in upcoming posts on an initiative in this direction).

Now you might argue that a post-privacy world also requires extraordinary state power, but that's not really the case. I grew up in a small community where if you didn't come out of your house for a day, the neighbors would check in to make sure you were OK. Observability does not require state power per se. Much of this can happen simply if more information is default public. And so regulation ought to aim at increased disclosure.

We are of course a long way away from a world where most information about us could be default public. It will require massive changes from where we are today to better protect people from the consequences of disclosure. And those changes would eventually have to happen everywhere that people can freely have access to powerful technology (with other places opting for draconian government control instead).

Given that the transition I propose is hard and will take time, what do I believe we should do in the short run? I believe that a great starting point would be disclosure requirements covering training inputs, the cost of training runs, and “powered by” (i.e., if you launch, say, a therapy service that uses AI, you need to disclose which models it uses). That, along with mandatory API access, could start to put some checks on market power. As for open source models, I believe a temporary voluntary moratorium on massively larger, more capable models is vastly preferable to any government ban. This has a chance of success because there are relatively few organizations in the world that have the resources to train the next generation of potentially open source models.

Most of all though we need to have a more intellectually honest conversation about risks and how to mitigate them without introducing even bigger problems. We cannot keep suggesting that these are simple questions and that people must pick a side and get on with it.

Weaponization of Bothsidesism

One tried and true tactic for suppressing opinions is to slap them with a disparaging label. This is currently happening in the Israel/Gaza conflict with the allegation of bothsidesism, which goes as follows: you have to pick a side; anything else is bothsidesism. Of course, nobody likes to be accused of bothsidesism, which is clearly bad. But this is a completely wrong application of the concept. Some may be repeating this allegation unthinkingly, but others are using it as an intentional tactic.

Bothsidesism, aka false balance, is when you give equal airtime to obvious minority opinions on a well-established issue. The climate is a great example, where the fundamental physics, the models, and the observed data all point to a crisis. Giving equal airtime to people claiming there is nothing to see is irresponsible. To be clear, it would be equally dangerous to suppress any contravening views entirely. Science is all about falsifiability.

Now in a conflict, there are inherently two sides. That doesn't at all imply that you have to pick one of them. In plenty of conflicts both sides are wrong. Consider the case of the state prosecuting a dealer who sold tainted drugs that resulted in an overdose. The dealer is partially responsible because they should have known what they were selling. The state is also partially responsible because it should decriminalize drugs or regulate them in a way that makes safety possible for addicts. I do not need to pick a side between the dealer and the state.

I firmly believe that in the Israel/Gaza conflict both sides are wrong. To be more precise, the leaders on both sides are wrong and their people are suffering as a result. I do not have to pick a side and neither do you. Don't let yourself be pressured into picking a side via a rhetorical trick.

Israel/Gaza

I have not personally commented in public on the Israel/Gaza conflict until now (USV signed on to a statement). The suffering has been heartbreaking and the conflict is far from over. Beyond the carnage on the ground, the dialog online and in the street has been dominated by shouting. That makes it hard to want to speak up individually.

My own hesitation was driven by unacknowledged emotions: Guilt that I had not spoken out about the suffering of ordinary Palestinians in the past, despite having visited the West Bank. Fear that support for one side or the other would be construed as agreeing with all its past and current policies. And finally, shame that my thoughts on the matter appeared to me as muddled, inconsistent and possibly deeply wrong. I am grateful to everyone who engaged with me in personal conversations and critiqued some of what I was writing to wrestle down my thoughts over the last few weeks, especially my Jewish and Muslim friends, for whom this required additional emotional labor in an already difficult time.

Why speak out at all? Because the position I have arrived at represents a path that will be unpopular with some on both sides of this conflict. If people with views like mine don’t speak, then the dialog will be dominated by those with extremely one-sided views contributing to further polarization. So this is my attempt to help grow the space for discussion. If you don’t care about my opinion on this conflict, you don’t have to read it.

The following represents my current thinking on a possible path forward. As always that means it is subject to change despite being intentionally strongly worded.

Hamas is a terrorist organization. I am basing this assessment not only on the most recent attack against Israel but also on its history of violent suppression of Palestinian opposition. Hamas must be dismantled.

Israel’s current military operation has already resulted in excessive civilian casualties and must be replaced with a strategy that minimizes further Palestinian civilian casualties, even if that entails increased risk to Israeli troops (there is at least one proposal for how to do this being floated now). If there were a ceasefire-based approach to dismantling Hamas that would be even better and we should all figure out how that might work.

Immediate massive humanitarian relief is needed in southern Gaza. This must be explicitly temporary. The permanent displacement of Palestinians is not acceptable.

Israel must commit to clear territorial lines for both Gaza and the West Bank and stop its expansionist approach to the latter. This will require relocating some settlements to establish sensible borders. Governments need clear borders to operate with credibility, which applies also to any Palestinian government (and yes, I would love to see humanity eventually transcend the concept of borders, but that will take a lot of time).

A Marshall Plan-level commitment to a full reconstruction of Gaza must be made now. All nations should be called upon to join this effort. Reconstruction and constitution of a government should be supervised by a coalition that must include moderate Islamic countries. If none can be convinced to join such an effort, that would be good to know now for anyone genuinely wanting to achieve durable peace in the region.

I believe that an approach along these lines could end the current conflict and create the preconditions for lasting peace. Importantly it does not preclude democratically elected governments from eventually choosing to merge into a single state.

All of this may sound overly ambitious and unachievable. It certainly will be if we don’t try and instead keep muddling through. It will require strong leadership and moral clarity here in the US. That is a tall order on which we have a long way to go. But here are two important starting points.

We must not tolerate antisemitism. As a German from Nürnberg I know all too well the dark places to which antisemitism has led time and time again. The threat of extinction for Jews is not hypothetical but historical. And it breaks my heart that my Jewish friends are removing mezuzahs from their doors. There is one important confusion we should get past if we genuinely want to make progress in the region. Israel is a democracy and deserves to be treated as such. Criticizing Israeli government policies isn’t antisemitic, just like criticizing the Biden administration isn’t anti-Christian, or criticizing the Modi government isn’t anti-Hindu. And yes, I believe that many of Israel’s historic policies towards Gaza and the West Bank were both cruel and ineffective. Some will argue that Israel is an ethnocracy and/or a colonizer. One can discuss potential implications of this for policy. But if what people really mean is that Israel should cease to exist, then they should come out and say that and own it. I strongly disagree.

We must not tolerate islamophobia. We also have to protect citizens who want to practice Islam. We must not treat them as potential terrorists or as terrorist supporters on the basis of their religion. How can we ask people to call out Hamas as a terrorist organization when we readily accept mass casualties among Muslims (not just in the region but also in other places, such as the Iraq war) while also not pushing back on people depicting Islam as an inherently hateful religion? And for those loudly claiming the Second Amendment, how about also supporting the First, including for Muslims? I have heard from several Muslim friends that they frequently feel treated as subhuman. And that too breaks my heart.

This post will likely upset some people on both sides of the conflict. There is nothing of substance that can be said that will make everyone happy. I am sure I am wrong about some things and there may be better approaches. If you have read something that you found particularly insightful, please point me to it. I am always open to learning and plan to engage with anyone who wants to have a good faith conversation aimed at achieving peace in the region.

We Need New Forms of Living Together

I was at a conference earlier this year where one of the topics was the fear of a population implosion. Some people are concerned that with birth rates declining in many parts of the world we might suddenly find ourselves without enough humans. Elon Musk has on several occasions declared population collapse the biggest risk to humanity (ahead of the climate crisis). I have two issues with this line of thinking. First, global population is still growing, and some of those expressing concern are veiling a deep racism in which they believe that areas with higher birth rates are inferior. Second, a combination of ongoing technological progress together with getting past peak population would be a fantastic outcome.

Still there are people who would like to have children but are not in fact having them. While some of this is driven by concern about where the world is headed, a lot of it is a function of the economics of having children. It's expensive to do so not just in dollar terms but also in time commitment. At the conference one person advanced the suggestion that the answer is we must bring back the extended family as a widely embraced structure. Grandparents, the argument goes, could help raise children and as an extra benefit this could help address the loneliness crisis for older people.

This idea of a return to the extended family neatly fits into a larger pattern of trying to solve our current problems by going back to an imagined better past. The current tradwife movement is another example of this. I say "imagined better past" because the narratives conveniently omit much of the actual reality of that past. My writing here on Continuations and in The World After Capital is aimed at a different idea: what can we learn from the past so that we can create a better future?

People living together has clear benefits. It allows for more efficient sharing of resources. And it provides company which is something humans thrive on. The question then becomes what forms can this take? Thankfully there is now a lot of new exploration happening. Friends of mine in Germany bought an abandoned village and have formed a new community there. The Supernuclear Substack documents a variety of new coliving groups, such as Radish in Oakland. Here is a post on how that has made it easier to have babies.

So much of our views of what constitutes a good way of living together is culturally determined. But it goes deeper than that, because over time culture is reflected in the built environment, which is quite difficult to change. Suburban single-family homes are a great example of that, as are high-rise buildings in the city without common spaces. The currently high vacancy rates in office buildings may provide an opportunity to build some of these out in ways that are conducive to experimenting with new forms of coliving.

If you are working on an initiative to convert offices into dedicated space for coliving (or are simply aware of one), I would love to hear more about it.

Low Rung Tech Tribalism

Silicon Valley's tribal boosterism has been bad for tech and bad for the world.

I recently criticized Reddit for clamping down on third party clients. I pointed out that having raised a lot of money at a high valuation required the company to become more extractive in an attempt to produce a return for investors. Twitter had gone down the exact same path years earlier with bad results, where undermining the third party ecosystem ultimately resulted in lower growth and engagement for the network. This prompted an outburst from Paul Graham, who called it a "diss" and added that he "expected better from [me] in both the moral and intellectual departments."

Comments like the one by Paul are a perfect example of a low rung tribal approach to tech. In "What's Our Problem" Tim Urban introduces the concept of a vertical axis of debate, which distinguishes between high rung (intellectual) and low rung (tribal) approaches. This axis is as important as, if not more important than, the horizontal left versus right axis in politics or the entrepreneurship/markets versus government/regulation axis in tech. Progress ultimately depends on actually seeking the right answers, and only the high rung approach does that.

Low rung tech boosterism again and again shows how tribal it is. There is a pervasive attitude of "you are either with us or you are against us." Criticism is called a "diss" and followed by a barely veiled insult. Paul has a long history of such low rung boosterism. This was also true for criticism of other iconic companies such as Uber and Airbnb. For example, at one point Paul tweeted that "Uber is so obviously a good thing that you can measure how corrupt cities are by how hard they try to suppress it."

Now it is obviously true that some cities opposed Uber because of corruption / regulatory capture by the local taxi industry. At the same time there were and are valid reasons to regulate ride hailing apps, including congestion and safety. A statement such as Paul's doesn't invite a discussion; instead, it serves to suppress any criticism of Uber. After all, who wants to be seen as corrupt or as allied with corruption against something "obviously good"? Tellingly, Paul never replied to anyone who suggested that his statement was too extreme.

The net effect of this low rung tech tribalism is a sense that tech elites are insular and believe themselves to be above criticism, with no need to engage in debate. The latest example of this is Marc Andreessen's absolutist dismissal of any criticism or questions about the impacts of Artificial Intelligence on society. My tweet thread suggesting that Marc's arguments were overly broad and arrogant promptly earned me a block.

In this context I find myself frequently returning to Martin Gurri's excellent "Revolt of the Public." A key point that Gurri makes is that elites have done much to undermine their own credibility, a point also made in the earlier "Revolt of the Elites" by Christopher Lasch. When elites, who are obviously benefiting from a system, dismiss any criticism of that system as invalid or "Communist," they are abdicating their responsibility.

The cost of low rung tech boosterism isn't just a decline in public trust. It has also encouraged some founders' belief that they can be completely oblivious to the needs of their employees or their communities. If your investors and industry leaders tell you that you are doing great, no matter what, then clearly your employees or communities must be wrong and should be ignored. This has been directly harmful to the potential of these platforms, which in turn is bad for the world at large, since it is heavily influenced by what happens on these platforms.

If you want to rise to the moral obligations of leadership, then you need to find the intellectual capacity to engage with criticism. That is the high rung path to progress. It turns out to be a particularly hard path for people who are extremely financially successful, as they often allow themselves to be surrounded by sycophants both IRL and online.

PS A valid criticism of my original tweet about Reddit was that I shouldn't have mentioned anything from a pitch meeting. And I agree with that.

Artificial Intelligence Existential Risk Dilemmas

A few weeks back I wrote a series of blog posts about the risks from progress in Artificial Intelligence (AI). I specifically argued that we are facing not just structural risks, such as algorithmic bias, but also existential ones. There are three dilemmas in pushing the existential risk point at this moment.

First, there is the potential for a "boy who cried wolf" effect. The harder we push right now, the more thoroughly existential risk from artificial intelligence will be dismissed for years to come if (hopefully) nothing terrible happens. This of course has been the fate of the climate community going back to the 1980s. With most of the heat to date from global warming having been absorbed by the oceans, it has felt like nothing much is happening, which has made it easier to disregard subsequent attempts to warn of the ongoing climate crisis.

Second, the discussion of existential risk is seen by some as a distraction from focusing on structural risks, such as algorithmic bias and increasing inequality. Existential risk should be the high order bit, since we need to survive in order to have the opportunity to take care of structural risk. But if you believe that existential risk doesn't exist at all or can be ignored, then you will see any mention of it as a potentially intentional distraction from the issues you care about. This unfortunately has the effect that some AI experts who should be natural allies on existential risk wind up vigorously dismissing that threat.

Third, there is a legitimate concern that some of the leading companies, such as OpenAI, may be attempting to use existential risk in a classic "pulling up the ladder" move. How better to protect your perceived commercial advantage than to get governments to slow down potential competitors through regulation? This is of course a well-rehearsed strategy in tech. For example, Facebook famously didn't object to much of the privacy regulation because they realized that compliance would be much harder and more costly for smaller companies.

What is one to do in light of these dilemmas? We cannot simply be silent about existential risk. It is far too important for that. Being cognizant of the dilemmas should, however, inform our approach. We need to be measured, so that we can be steadfast, more like a marathon runner than a sprinter. This requires proactively acknowledging other risks and being mindful of anti-competitive moves. In this context I believe it is good to have some people, such as Eliezer Yudkowsky, take a vocally uncompromising position, because that helps stretch the Overton window to where it needs to be for addressing existential AI risk to be seen as sensible.

Power and Progress (Book Review)

A couple of weeks ago I participated in Creative Destruction Lab's (CDL) "Super Session" event in Toronto. It was an amazing convocation of CDL alumni from around the world, as well as new companies and mentors. The event kicked off with a two-hour summary and critique of the new book "Power and Progress" by Daron Acemoglu and Simon Johnson. There were eleven of us charged with summarizing and commenting on one chapter each, with Daron replying after every three or four speakers. This was the idea of Ajay Agrawal, who started CDL and is a professor of strategic management at the University of Toronto's Rotman School of Management. I was thrilled to see a book given a two-hour intensive treatment like this at a conference, as I believe books are one of humanity's signature accomplishments.

Power and Progress is an important book but also deeply problematic. As it turns out the discussion format provided a good opportunity both for people to agree with the authors as well as to voice criticism.

Let me start with why the book is important. Acemoglu is a leading economist and so it is a crucial step for that discipline to have the book explicitly acknowledge that the distribution of gains from technological innovation depends on the distribution of power in societies. It is ironic to see Marc Andreessen dismissing concerns about Artificial Intelligence (AI) by harping on about the "lump of labor" fallacy at just the time when economists are soundly distancing themselves from that overly facile position (see my reply thread here). Power and Progress is full of historic examples of when productivity innovations resulted in gains for a few elites while impoverishing the broader population. And we are not talking about a few years here but about many generations. The most memorable example of this is how agricultural innovation wound up resulting in richer churches building ever bigger cathedrals while the peasants were suffering more than before. It is worth reading the book for these examples alone.

As it turns out I was tasked with summarizing Chapter 3, which discusses why some ideas find more popularity in society than others. The chapter makes some good points, such as persuasion being much more common in modern societies than outright coercion. The success of persuasion makes it harder to criticize the status quo because it feels as if people are voluntarily participating in it. The chapter also gives several examples of how as individuals and societies we tend to over-index on ideas coming from people who already have status and power thus resulting in a self-reinforcing loop. There is a curious absence though of any mention of media -- either mainstream or social (for this I strongly recommend Martin Gurri's "Revolt of the Public"). But the biggest oversight in the chapter is that the authors themselves are in positions of power and status and thus their ideas will carry a lot of weight. This should have been explicitly acknowledged.

And that's exactly why the book is also problematic. The authors follow an incisive diagnosis with a whimper of a recommendation chapter. It feels almost tacked on, somewhat akin to the last chapter of Gurri's book, which similarly excels at analysis but falls dramatically short on solutions. What's particularly off is that "Power and Progress" embraces marginal changes, such as shifts in taxation, while dismissing more systemic changes, such as universal basic income (UBI). The book is over 500 pages long and there are exactly 2 pages on UBI, which dismiss it using arguments that are contradicted by lots of evidence from numerous trials in the US and around the world.

When I pressed this point, Acemoglu responded that they were just looking to open the discussion on what could be done to distribute the benefits more broadly. But the dismissal of more systemic change doesn't read at all like the beginning of a discussion but rather like the end of it. Ultimately, while the book moves the ball forward a lot relative to prior economic thinking on technology, it may wind up playing an unfortunate role in keeping us trapped in incrementalism, exactly because Acemoglu is so well respected and his opinion thus carries a lot of weight.

In Chapter 3 the authors write how one can easily be in "... a vision trap. Once a vision becomes dominant, its shackles are difficult to throw off." They don't seem to recognize that they might be stuck in just such a vision trap themselves, where they cannot imagine a society in which people are much more profoundly free than today. This is all the more ironic in that they explicitly acknowledge that hunter gatherers had much more freedom than humanity has enjoyed in either the agrarian age or the industrial age. Why should our vision for AI not be a return to more freedom? Why keep people's attention trapped in the job loop?

The authors call for more democracy as a way of "avoiding the tyranny of narrow visions." I too am a big believer in more democracy. I just wish that the authors had taken a much more open approach to which ideas we should be considering as part of that.

What's Our Problem by Tim Urban (Book Review)

Politics in the US has become ever more tribal on both the left and the right. Either you agree with 100 percent of group doctrine or you are considered an enemy. Tim Urban, the author of the wonderful Wait But Why blog, has written a book digging into how we got here. Titled "What's Our Problem," the book is a full-throated defense of liberalism in general and free speech in particular.

As with his blog, Urban does two valuable things rather well: He goes as much as possible to source material and he provides excellent (illustrated) frameworks for analysis. The combination is exactly what is needed to make progress on difficult issues and I got a lot out of reading the book as a result. I highly recommend reading it and am excited that it is the current selection for the USV book club.

The most important contribution of What's Our Problem is drawing a clear distinction between horizontal politics (left versus right) and vertical politics (low-rung versus high-rung). Low-rung politics is tribal, emotional, religious, whereas high-rung politics attempts to be open, intellectual, secular/scientific. Low-rung politics brings out the worst in people and brings with it the potential of violent conflict. High-rung politics holds the promise of progress without bloodshed. Much of what is happening in the US today can be understood as low-rung politics having become dominant.

The book spends a lot more time examining low-rung politics on the left, in the form of what Urban calls Social Justice Fundamentalism, than it does the same phenomenon on the right. That can be excused to a degree because his likely audience is politically left: already convinced that the right has descended into tribalism but not willing to admit that the same is the case on the left. Still, for me it somewhat weakened the overall effect, and a more frequent juxtaposition of left and right low-rung politics would have been stronger in my view.

My second criticism is that the book could have done a bit more to point out that the descent into low-rung politics isn't just the result of certain groups pulling everyone down but also of the abysmal failure of nominally high-rung groups. In that regard I strongly recommend reading Martin Gurri's "Revolt of the Public" as a complement.

This leads me to my third point. The book is mostly analysis and has only a small recommendation section at the end. And while I fully agree with the suggestions there, the central one of which is an exhortation to speak up if you are in a position to do so, they do fall short in an important way. We are still missing a new focal point (or points) for high-rung politics. There may indeed be a majority of people who are fed up with low-rung politics on both sides, but it is not clear where they should turn. Beginning to establish such a place has been the central goal of my own writing in The World After Capital and here on Continuations.

Addressing these three criticisms would of course have resulted in a much longer book and that might in the end have been less effective than the book at hand. So let me reiterate my earlier point: this is an important book and if you care about human and societal progress you should absolutely read What's Our Problem.

Thinking About AI: Part 3 - Existential Risk (Terminator Scenario)

Now we are getting to the biggest and weirdest risk of AI: a superintelligence emerging and wiping out humanity in pursuit of its own goals. To a lot of people this seems like a totally absurd idea, held only by a tiny fringe of people who appear weird and borderline culty. It seems so far out there and also so huge that most people wind up dismissing it and/or forgetting about it shortly after hearing it. There is a big similarity here to the climate crisis, where the more extreme views are widely dismissed.

In case you have not encountered the argument yet, let me give a very brief summary (Nick Bostrom has an entire book on the topic and Eliezer Yudkowsky has been blogging about it for two decades, so this will be super compressed by comparison): A superintelligence when it emerges will be pursuing its own set of goals. In many imaginable scenarios, humans will be a hindrance rather than a help in accomplishing these goals. And once the superintelligence comes to that conclusion it will set about removing humans as an obstacle. Since it is a superintelligence we won't be able to stop it and there goes humanity.

Now you might have all sorts of objections here, such as: can't we just unplug it? Suffice it to say that the people who have been thinking about this for a long time have already considered these objections. They are pretty systematic in their thinking (so systematic that the Bostrom book is quite boring to read and I had to push myself to finish it). And in case you are still wondering why we can't just unplug it: by the time we discover it is a superintelligence, it will have spread itself across many computers and built deep and hard defenses for these. That could happen, for example, by manipulating humans into thinking they are building defenses for a completely different reason.

Now I am not in the camp that says this is guaranteed to happen. Personally I believe there is also a good chance that a superintelligence could be benevolent upon emerging. But with existential risk one doesn't need certainty (the same is true for other existential risks, such as the climate crisis or an asteroid strike). What matters is that there is a non-zero likelihood. And that is the case for superintelligence, which means we need to proceed with caution. My book The World After Capital is all about how we as humanity can allocate more attention to these kinds of problems and opportunities.

So what are we to do? There is a petition for a six-month research moratorium. Eliezer wrote a piece in Time pleading to shut it all down and to threaten anyone who tries to build it with destruction. I understand the motivation for both of these and am glad that people are ringing loud alarm bells, but neither makes much sense. First, we have shown no ability to globally coordinate on other existential threats, including ones that are much more obvious, so why do we think we could succeed here? Second, who wants to give government that much power over core parts of computing infrastructure, such as the shipment of GPUs?

So what could we do instead? We need to accept that superintelligences will come about faster than we had previously thought and act accordingly. There is no silver bullet, but there are several initiatives that can be taken by individuals, companies and governments that can dramatically improve our odds.

The first and most important initiative is a well-funded effort to create a benign superintelligence. This requires a level of resources that only governments can command easily, although some of the richest people and companies in the world might also be able to make a difference. The key here will be to invert the approach to training that we have taken so far. It is absurd to expect a good outcome when you first train a model on the web corpus and then attempt to constrain it via reinforcement learning from human feedback (RLHF). This is akin to letting a child grow up without any moral guidance and then expecting them to be a well-behaved adult because you occasionally told them they were doing something wrong. We have to create a large corpus of moral reasoning that can be ingested early and form the core of a superintelligence before it is exposed to all the world's output. This is a hard problem, but interestingly we can use some of the models we now have to speed up the creation of such a corpus. Of course, a key challenge will be deciding what it should contain. It is for that very reason that in my book The World After Capital I make such a big deal of living and promoting humanism. Here is what I wrote (it's an entire section from the conclusion, but I think it is worth it):

There’s another reason for urgency in navigating the transition to the Knowledge Age: we find ourselves on the threshold of creating both transhumans and neohumans. ‘Transhumans’ are humans with capabilities enhanced through both genetic modification (for example, via CRISPR gene editing) and digital augmentation (for example, the brain-machine interface Neuralink). ‘Neohumans’ are machines with artificial general intelligence. I’m including them both here, because both can be full-fledged participants in the knowledge loop.

Both transhumans and neohumans may eventually become a form of ‘superintelligence,’ and pose a threat to humanity. The philosopher Nick Bostrom published a book on the subject, and he and other thinkers warn that a superintelligence could have catastrophic results. Rather than rehashing their arguments here, I want to pursue a different line of inquiry: what would a future superintelligence learn about humanist values from our current behavior?

As we have seen, we’re not doing terribly well on the central humanist value of critical inquiry. We’re also not treating other species well, our biggest failing in this area being industrial meat production. Here as with many other problems that humans have created, I believe the best way forward is innovation. I’m excited about lab-grown meat and plant-based meat substitutes. Improving our treatment of other species is an important way in which we can use the attention freed up by automation.

Even more important, however, is our treatment of other humans. This has two components: how we treat each other now, and how we will treat the new humans when they arrive. As for how we treat each other now, we have a long way to go. Many of my proposals are aimed at freeing humans so they can discover and pursue their personal interests and purpose, while existing education and job loop systems stand in opposition to this freedom. In particular we need to construct the Knowledge Age in a way that allows us to overcome, rather than reinforce, our biological differences which have been used as justification for so much existing discrimination and mistreatment. That will be a crucial model for transhuman and neohuman superintelligences, as they will not have our biological constraints.

Finally, how will we treat the new humans? This is a difficult question to answer because it sounds so preposterous. Should machines have human rights? If they are humans, then they clearly should. My approach to what makes humans human—the ability to create and make use of knowledge—would also apply to artificial general intelligence. Does an artificial general intelligence need to have emotions in order to qualify? Does it require consciousness? These are difficult questions to answer but we need to tackle them urgently. Since these new humans will likely share little of our biological hardware, there is no reason to expect that their emotions or consciousness should be similar to ours. As we charge ahead, this is an important area for further work. We would not want to accidentally create a large class of new humans, not recognize them, and then mistreat them.

The second initiative is an effort to help humanity defend against an alien invasion. This may sound facetious, but I am using alien invasion as a stand-in for all sorts of existential threats. We need much better preparation for extreme outcomes of the climate crisis, asteroid strikes, runaway epidemics, nuclear war and more. Yes, we 100 percent need to invest more in avoiding these, for example through early detection of asteroids and building deflection systems, but we also need to harden our civilization.

There are a ton of different steps that can be taken here and I may write another post some time about that as this post is getting rather long. For now let me just say a key point is to decentralize our technology base much more than it is today. For example we need many more places that can make chips and ideally do so at much smaller scale than we have today.

Existential AI risk, aka the Terminator scenario, is a real threat. Dismissing it would be a horrible mistake. But so would seeing global government control as the answer. We need to harden our civilization and develop a benign superintelligence. To do these well we need to free up attention and further develop humanism. That's the message of The World After Capital.

Thinking About AI: Part 3 - Existential Risk (Loss of Reality)

In my prior post I wrote about structural risk from AI. Today I want to start delving into existential risk. This broadly comes in two not entirely distinct subtypes: first, that we lose any grip on reality, which could result in a Matrix-style scenario or a global war of all against all; and second, that a superintelligence gets rid of humans directly in pursuit of its own goals.

The loss of reality scenario was the subject of an op-ed in the New York Times the other day. And right around the same time there was an amazing viral picture of the pope that had been AI generated.

I have long said that the key mistake of the Matrix movies was to posit a war between humans and machines. Instead, we will be giving ourselves to the machines willingly, more akin to the "Free wifi" scenario of The Mitchells vs. the Machines.

The loss of reality is a very real threat. It builds on a long tradition, such as Stalin having people edited out of historic photographs or Potemkin supposedly building fake villages to impress Catherine the Great (why did I think of two Russian examples here?). And now that kind of capability is available to anyone at the push of a button. Anyone see those pictures of Trump getting arrested?

Still, I am not particularly concerned about this type of existential threat from AI (outside of the superintelligence scenario). That's for a number of reasons. First, distribution rather than content creation has been the bottleneck for manipulation for some time (it doesn't take advanced AI tools to come up with a meme). Second, I believe that the approach of more AI, which can help with structural risk, can also help with this type of existential risk. For example, one could have an AI copilot when consuming the web that points out content that appears to be manipulated. Third, we have an important tool available to us as individuals that can dramatically reduce the likelihood of being manipulated: mindfulness.

In my book "The World After Capital" I argue for the importance of developing a mindfulness practice in a world that's already overflowing with information in a chapter titled "Psychological Freedom." Our brains evolved in an environment that was mostly real. When you saw a cat there was a cat. Even before AI generated cats the Internet was able to serve up an endless stream of cat pictures. So we have already been facing this problem for some time. It is encouraging that studies show that younger people are already more skeptical of the digital information they encounter.

The bottom line for me, then, is that "loss of reality" is an existential threat, but one that we have already been facing and where further AI advancement will both help and hurt. So I am not losing any sleep over it. There is, however, an overlap with a second type of existential risk: a superintelligence simply wiping out humanity. The overlap is that the AI could use the loss of reality to accomplish its goals. I will address the superintelligence scenario in the next post (preview: much more worrisome).

Thinking About AI: Part 2 - Structural Risks

Yesterday I wrote a post on where we are with artificial intelligence by providing some history and foundational ideas around neural network size. Today I want to start in on risks from artificial intelligence. These fall broadly into two categories: existential and structural. Existential risk is about AI wiping out most or all of humanity. Structural risk is about AI aggravating existing problems, such as wealth and power inequality in the world. Today's post is about structural risks.

Structural risks of AI have been with us for quite some time. A great example is the YouTube recommendation algorithm. The algorithm, as far as we know, optimizes for engagement because YouTube's primary source of revenue is ads. This means the algorithm is more likely to surface videos that have an emotional hook than ones that require the viewer to think. It will also pick content that emphasizes the same point of view, instead of surfacing opposing views. And finally it will tend to recommend videos that have already demonstrated engagement over those that have not, giving rise to a "rich getting richer" effect in influence.
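
YouTube's actual ranking system is not public, so what follows is only a deliberately extreme toy model of that last effect. It assumes a hypothetical recommender that always surfaces whichever video has the most views so far, and that every surfaced video collects the next view. Under those assumptions a one-view head start compounds into capturing all future attention, which is the "rich getting richer" dynamic in miniature.

```python
def simulate_engagement_feedback(initial_views, rounds):
    """Toy engagement loop (hypothetical, not YouTube's algorithm).

    Each round the recommender surfaces the video with the most views so far
    (ties go to the lowest index), and that video receives one more view.
    """
    views = list(initial_views)
    for _ in range(rounds):
        top = max(range(len(views)), key=lambda i: views[i])
        views[top] += 1
    return views


print(simulate_engagement_feedback([5, 4, 3], 100))  # [105, 4, 3]
```

A recommender that mixed in some randomness or exploration would soften this outcome, but the direction of the feedback stays the same: past engagement buys future exposure.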

With the current progress it may look at first like these structural risks will just explode: start using models everywhere and you wind up with bias risk, "rich get richer" risk, wrong objective function risk, etc. everywhere. This is a completely legitimate concern and I don't want to dismiss it.

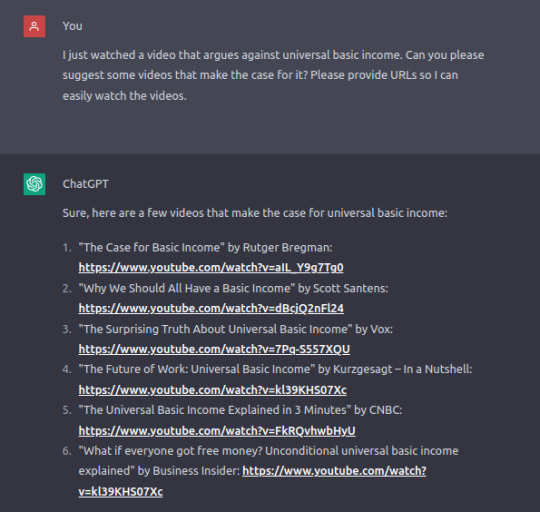

On the other hand, there are also new opportunities that come from potentially giving broad access to models and thus empowering individuals. For example, I tried the following prompt in ChatGPT: "I just watched a video that argues against universal basic income. Can you please suggest some videos that make the case for it? Please provide URLs so I can easily watch the videos." It quickly produced a list of videos for me to watch. Because so much content has been ingested, users can now have their own "Opposing View Provider" (something I had suggested years ago).

There are many other ways in which these models can empower individuals, for example summarizing text at a level that might be more accessible, or pointing somebody in the right direction when they have encountered a problem. And here we immediately run into some interesting regulatory challenges. For example: I am quite certain that ChatGPT could give pretty good free legal advice. But that would be running afoul of the regulations on practicing law. So part of the structural risk issue is that our existing regulations predate any such artificial intelligence and will oddly contribute to making its power available to a smaller group (imagine more profitable law firms instead of widely available legal advice).

There is also a strong interaction here between how many such models will exist (from a small oligopoly to potentially a great many) and to what extent endusers can embed these capabilities programmatically or have to use them manually. To continue my earlier example, if I have to head over to ChatGPT every time I want to ask for an opposing view, I will be less likely to do so than if I could script the sites I use so that an intelligent agent can represent me in my interactions. This is of course one of the core suggestions I make in my book The World After Capital in a section titled "Bots for All of Us."

I am sympathetic to those who point to structural risks as a reason to slow down the development of these new AI systems. But I believe that for addressing structural risks the better answer is to make sure that there are many AIs, that they can be controlled by endusers, that we have programmatic access to these and other systems, etc. Put differently, structural risks are best addressed by having more artificial intelligence with broader access.

We should still think about other regulation to address structural risks, but much of what has been proposed here doesn't make a ton of sense. For example, publishing an algorithm isn't that helpful if you don't also publish all the data running through it. In the case of a neural network, you could alternatively require publishing the network structure and weights, but that would be tantamount to open sourcing the entire model, as anyone could then replicate it. So for now I believe the focus of regulation should be avoiding a situation where there are just a few huge models that have a ton of market power.
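
To make the "tantamount to open sourcing" point concrete, here is a minimal sketch, assuming a made-up two-layer network whose architecture is fixed by a `forward` function and whose weights are invented placeholders rather than taken from any real model. Once both are published (here simply serialized to JSON), anyone can reload them and get exactly the same outputs as the original.

```python
import json
import math


def forward(weights, x):
    """Evaluate a tiny network: one tanh hidden layer, one linear output.
    This function *is* the published architecture."""
    hidden = [
        math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(weights["W1"], weights["b1"])
    ]
    return sum(w * h for w, h in zip(weights["W2"], hidden)) + weights["b2"]


# Hypothetical weights standing in for a trained model.
original = {
    "W1": [[0.5, -1.2], [0.8, 0.3], [-0.7, 0.9]],
    "b1": [0.1, -0.2, 0.05],
    "W2": [1.5, -0.6, 0.4],
    "b2": 0.2,
}

published = json.dumps(original)  # "publishing" the weights
replica = json.loads(published)   # anyone can load them back

x = [0.3, -0.8]
print(forward(replica, x) == forward(original, x))  # True
```

Real models have billions of weights and far more elaborate architectures, but the logic is the same: structure plus weights is the model.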

Some will object right here that this would dramatically aggravate the existential risk question, but I will make an argument in my next post why that may not be the case.

Thinking About AI

I am writing this post to organize and share my thoughts about the extraordinary progress in artificial intelligence over the last few years and especially the last few months (link to a lot of my prior writing). First, I want to come right out and say that anyone still dismissing what we are now seeing as a "parlor trick" or a "statistical parrot" is engaging in the most epic goal post moving ever. We are not talking a few extra yards here; the goal posts are not in the stadium anymore, they are in a far away city.

Growing up I was extremely fortunate that my parents supported my interest in computers by buying an Apple II for me and that a local computer science student took me under his wing. Through him I found two early AI books: one in German by Stoyan and Goerz (I don't recall the title) and Winston and Horn's "Artificial Intelligence." I still have both of these, although locating them among the thousand or more books in our home will require a lot of time or hopefully soon a highly intelligent robot (ideally running the VIAM operating system -- shameless plug for a USV portfolio company). I am bringing this up here as a way of saying that I have spent a lot of time not just thinking about AI but also coding on early versions and have been following closely ever since.

I also pretty early on developed a conviction that computers would be better than humans at a great many things. For example, I told my Dad right after I first learned about programming around age 13 that I didn't really want to spend a lot of time learning how to play chess because computers would certainly beat us at this hands down. This was long before a chess program was actually good enough to beat the best human players. As an aside, I have changed my mind on this as follows: Chess is an incredible board game and if you want to learn it to play other humans (or machines) by all means do so as it can be a lot of fun (although I still suck at it). Much of my writing both here on Continuations and in my book is also based on the insight that much of what humans do is a type of computation and hence computers will eventually do it better than humans. Despite that there will still be many situations where we want a human instead exactly because they are a human. Sort of the way we still go to concerts instead of just listening to recorded music.

As I studied computer science both as an undergraduate and graduate student, one of the things that fascinated me was the history of trying to use brain like structures to compute. I don't want to rehash all of it here, but to understand where we are today, it is useful to understand where we have come from. The idea of modeling neurons in a computer as a way to build intelligence is quite old. Early electromechanical and electrical computers started getting built in the 1940s (e.g. ENIAC was completed in 1946) and the early papers on modeling neurons can be found from the same time in work by McCulloch and Pitts.

But almost as soon as people started working on neural networks more seriously, the naysayers emerged also. Famously Marvin Minsky and Seymour Papert wrote a book titled "Perceptrons" that showed that certain types of relatively simple neural networks had severe limitations, e.g. in expressing the XOR function. This was taken by many at the time as evidence that neural networks would never amount to much when it came to building computer intelligence, helping to usher in the first artificial intelligence winter.
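
The XOR limitation can be seen in a minimal sketch, assuming the classic single-neuron perceptron with a step activation and the standard perceptron learning rule. The neuron learns AND, whose outputs a single straight line can separate, but it can never get all four XOR cases right because no such line exists for XOR. A hand-wired hidden layer (XOR as "OR and not AND") fixes this; the function names and weights are illustrative choices, not taken from the book.

```python
# Truth tables as ((x1, x2), target) pairs.
AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def step(z):
    """Threshold activation of the classic perceptron."""
    return 1 if z > 0 else 0


def train_perceptron(data, epochs=100, lr=1.0):
    """Single neuron trained with the perceptron learning rule."""
    w1, w2, b = 0.0, 0.0, 0.0
    for _ in range(epochs):
        mistakes = 0
        for (x1, x2), target in data:
            err = target - step(w1 * x1 + w2 * x2 + b)
            if err:
                mistakes += 1
                w1 += lr * err * x1
                w2 += lr * err * x2
                b += lr * err
        if mistakes == 0:  # converged: every case classified correctly
            break
    return w1, w2, b


def single_layer_accuracy(data):
    w1, w2, b = train_perceptron(data)
    hits = sum(step(w1 * x1 + w2 * x2 + b) == t for (x1, x2), t in data)
    return hits / len(data)


def two_layer_xor(x1, x2):
    """Hidden layer computes OR and AND; output fires for OR but not AND."""
    h_or = step(x1 + x2 - 0.5)
    h_and = step(x1 + x2 - 1.5)
    return step(h_or - h_and - 0.5)


print(single_layer_accuracy(AND))        # 1.0
print(single_layer_accuracy(XOR) < 1.0)  # True
print([two_layer_xor(x1, x2) for (x1, x2), _ in XOR])  # [0, 1, 1, 0]
```

The hidden-layer weights here are set by hand; the later breakthroughs were about training such multi-layer networks automatically.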

And so it went for several cycles. People would build bigger networks and make progress and others would point out the limitations of these networks. At one time people were so disenchanted that very few researchers were left in the field altogether. The most notable of these was Geoffrey Hinton who kept plugging away at finding new training algorithms and building bigger networks.

But then a funny thing happened. Computation kept getting cheaper and faster and memory became unfathomably large (my Apple II for reference had 48KB of RAM on the motherboard and an extra 16KB on an extension card). That made it possible to build and train much larger networks. And all of a sudden some tasks that had seemed out of reach, such as deciphering handwriting or recognizing faces, started to work pretty well. Of course the goal post moving immediately set in, with people arguing that those are not examples of intelligence. I won't repeat any of the arguments here because they were basically silly. We had taken a task that previously only humans could do and built machines that could do it. To me that's, well, artificial intelligence.

The next thing that we discovered is that while humans have big brains with lots of neurons in them, we can use only a tiny subset of our brain on highly specific tasks, such as playing the game of Go. With another turn of size and some further algorithmic breakthroughs, all of a sudden we were able to build networks large enough to beat the best human player at Go. And not just beat the player but do so by making moves that were entirely novel. Or as we would have said if a human had made those moves: "creative." Let me stay with this point of brain and network size for a moment as it will turn out to be crucial shortly. Not only can a human Go player use just a small part of their brain for the game, but the rest of their brain is actually a hindrance. It comes up with pesky thoughts at just the wrong time, such as "Did I leave the stove on at home?" or "What is wrong with me that I didn't see this move coming, I am really bad at this," and all sorts of other interference that a neural network trained just to play Go does not have to contend with. The same is true for many other tasks such as reading radiology images to detect signs of cancer.

The other thing that should have probably occurred to us by then is that there is a lot of structure in the world. This is of course a good thing. Without structure, such as DNA, life wouldn't exist and you wouldn't be reading this text right now. Structure is an emergent property of systems and that's true for all systems, so structure is everywhere we look including in language. A string of random letters means nothing. The strings that mean something are a tiny subset of all the possible letter strings and so unsurprisingly that tiny subset contains a lot of structure. As we make neural networks bigger and train them better they uncover that structure. And of course that's exactly what that big brain of ours does too.
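
One rough way to make "structure" measurable is Shannon entropy, the average number of bits needed per symbol. The sketch below, assuming a 27-symbol alphabet (26 letters plus space) and an illustrative sample sentence, compares the entropy of single-character frequencies with the roughly 4.75 bits per character that uniformly random strings would need. Even this crude count, which ignores all context, comes out lower for real text; language models exploit far longer-range regularities than single-letter frequencies.

```python
import math
from collections import Counter


def char_entropy(text):
    """Shannon entropy, in bits per character, of the single-character
    frequency distribution of `text` (no context is taken into account)."""
    counts = Counter(text)
    total = len(text)
    return -sum(n / total * math.log2(n / total) for n in counts.values())


# Illustrative sample: lowercase letters and spaces only.
sample = ("a string of random letters means nothing the strings that mean "
          "something are a tiny subset of all the possible letter strings")

uniform_bits = math.log2(27)  # 26 letters plus space, all equally likely
print(round(uniform_bits, 2))               # 4.75
print(char_entropy(sample) < uniform_bits)  # True
```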

So I was not all that surprised when large language models were able to produce text that sounded highly credible (even when it was hallucinated). Conversely, I found the criticism from some people that making language models larger would simply be a waste of time confounding. After all, it seems pretty obvious that more intelligent species have larger brains than less intelligent ones (this is obviously not perfectly correlated). I am using the word intelligence here loosely in a way that I think is accessible but also hides the fact that we don't actually have a good definition of what intelligence is, which is what has made the goal post moving possible.

Now we find ourselves confronted with the clear reality that our big brains are using only a fraction of their neurons for most language interactions. The word "most" is doing a lot of work here but bear with me. The biggest language models today are still a lot smaller than our brain but damn are they good at language. So the latest refuge of the goal post movers is the claim that "they don't understand what the language means." But is that really true?

As is often the case with complex material, Sabine Hossenfelder has a great video that helps us think about what it means to "understand" something. Disclosure: I have been supporting Sabine for some time via Patreon. Further disclosure: Brilliant, which is a major advertiser on Sabine's channel, is a USV portfolio company. With this out of the way, I encourage you to watch the following video.

So where do I think we are? In fields where language and/or two-dimensional images let you build a good model, AI is rapidly reaching a level of performance that exceeds that of many humans. That's because the structure it uncovers from the language is the model. We can see this simply by looking at tests in those domains. I really liked Bryan Caplan's post: he was at first skeptical, based on an earlier version performing poorly on his exams, but the latest version did better than many of his students. When building the model requires input that goes beyond language and two-dimensional images, however, such as understanding three-dimensional shapes from three-dimensional images (instead of inferring them from two-dimensional ones), the currently inferred models are still weak or incomplete. It seems pretty clear, though, that progress in filling in those gaps will happen at a breathtaking pace from here.

Since this is getting rather long, I will separate out my thoughts on where we are going next into future posts. As a preview, I believe we are now at the threshold of artificial general intelligence, or what I call "neohumans" in my book The World After Capital. And even if that takes a bit longer, domain-specific artificial intelligence will be outperforming humans in a great many fields, especially ones that do not require manipulating the world with that other magic piece of equipment we have: hands with opposable thumbs. No matter what, the stakes are now extremely high and we have to get our act together quickly on the implications of artificial intelligence.

The Banking Crisis: More Kicking the Can

There were a ton of hot takes on the banking crisis over the last few days. I didn't feel like contributing to the cacophony on Twitter because I was busy working with USV portfolio companies and also in Mexico City with Susan celebrating her birthday.

Before addressing some of the takes, let me succinctly state what happened. SVB had taken a large percentage of its assets and invested them in low-interest-rate, long-duration bonds. As interest rates rose, the value of those bonds fell. Already back in November that was enough of a loss to wipe out all of SVB's equity. But you would only know that if you looked carefully at their SEC filings, because SVB kept reporting those bonds on a "held-to-maturity" basis (meaning at their book value rather than their lower market value). That would have been fine if SVB had kept having deposit inflows, but already in November they reported $3 billion in cash outflows in the prior quarter. And of course cash was flowing out because companies were able to put it in places where it yielded more (as well as startups just burning cash). Once the cash outflow accelerated, SVB had to start selling the bonds, at which point it had to realize the losses. This forced SVB to try to raise equity, which it failed to do. When it became clear that a private raise wasn't happening, its public equity sold off rapidly, making a raise impossible and thus causing the bank to fail. This is a classic example of the old adage: "How do you go bankrupt? Slowly at first and then all at once."
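To make the mechanism concrete, here is a minimal Python sketch using entirely hypothetical numbers (not SVB's actual balance sheet): a bank holds half of its assets in 10-year bonds paying a fixed 1.5% annual coupon and has an 8% equity cushion. The `bond_price` helper, the balance-sheet figures, and the yields are all illustrative assumptions; the helper simply discounts the bond's annual coupons and face value at the prevailing market yield.

```python
def bond_price(face, coupon_rate, years, market_yield):
    """Present value of a fixed-coupon bond with annual coupons (simplified)."""
    coupon = face * coupon_rate
    # Discount each annual coupon payment at the current market yield.
    pv_coupons = sum(coupon / (1 + market_yield) ** t for t in range(1, years + 1))
    # Discount the face value repaid at maturity.
    pv_face = face / (1 + market_yield) ** years
    return pv_coupons + pv_face


# Hypothetical bank balance sheet in billions (illustrative only, not SVB's figures).
bond_holdings_face = 50.0  # half of 100 in total assets sits in 10-year, 1.5% coupon bonds
equity = 8.0               # thin equity cushion

for market_yield in (0.015, 0.03, 0.045):
    price = bond_price(100.0, 0.015, 10, market_yield)  # price per 100 of face value
    unrealized_loss = bond_holdings_face * (1 - price / 100.0)
    remaining_equity = equity - unrealized_loss
    print(f"yield {market_yield:.1%}: price {price:.2f}, "
          f"loss {unrealized_loss:.2f}, equity left {remaining_equity:.2f}")
```

Because the coupons are fixed, a higher market yield lowers the present value of the bonds. In this toy setup the unrealized loss at a 4.5% yield exceeds the entire equity cushion, which is exactly what carrying the bonds at book value hides until they have to be sold.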

With that as background, now on to the hot takes:

1. The SVB bank run was caused by VCs and could have been avoided if only VCs had stayed calm

That's like saying the sinking of the Titanic was caused by the iceberg and could have been avoided by everyone just bailing water using their coffee cups. The cause was senior management at SVB grossly mismanaging the bank's assets (the captain going full speed in waters that could contain icebergs). Once withdrawals had gained a certain momentum (the hull was breached), the only rational thing to do was to try to get to safety. Any one company or VC suggesting keeping funds there could have been completely steamrolled. Yes, in some sense it is of course true that if everyone had stayed calm this wouldn't have happened, but this is a classic case of the prisoner's dilemma, and one with a great many players. Saying after the fact "look, everyone came out fine, so why panic?" is 20-20 hindsight; as I will remark below, there were a lot of people arguing against making depositors whole.

2. The SVB bank run is the Fed's responsibility due to their fast raising of rates

This is another form of blaming the iceberg. The asset duration mismatch problem is foundational to banking and anyone running a bank should know it. Having a large percentage of assets in long-duration low-interest-rate fixed income instruments without hedging is madness, as it is premised on interest rates staying low for a long time and continuing to accumulate deposits. Now suppose you have made this mistake. What should you do if rates start to go up? Start selling your long duration bonds at the first sign of rate increases and raise equity immediately if needed. Instead of realizing losses early and accepting a lower equity value in a raise, SVB kept a fiction going for many months that ultimately lost everything.

3. Regulators are not to blame

One reason for regulating industries is to make them safer. Aviation is a great example of this. The safety doesn't just benefit people flying; it also benefits companies because the industry can be much bigger when it is safe. The same goes for banking. You have to have a charter to be a bank and there are multiple bank regulators. Their primary job should be to ensure that depositors don't need to pore over bank financials to understand where it is safe to bank. If regulators had done their job here, they would have intervened at SVB weeks if not months ago and forced an equity raise or a sale of the bank before a panic could occur.

4. This crisis was an opportunity to stick it to tech

A lot of people online and some in government saw this as an opportunity to punish tech companies as part of the overall tech backlash that's been going on for some time. This brought together some progressives with some right-wing folks who both, for different ideological reasons, want to see tech punished. There was a "just let them burn" attitude, especially on Twitter. This was, however, never a real option because SVB is not the only bank with a bad balance sheet. Lots of regional and smaller banks are in similar situations, so the contagion risk was extremely high. The widespread sell-off in those bank stocks even after the announced backstopping of SVB underlines just how likely a broad meltdown would have been. It is extremely unfortunate that our banking system continues to be so fragile (more on that later), but that fragility meant that using this crisis to punish only tech was never a realistic option.

5. Depositors should have taken a haircut

I have some sympathy for this argument. After all, didn't people know that their deposits above $250K were not insured? Yes, that's true in the abstract, but when everyone is led to believe that banking is safe because it is regulated (see #3 above), it would still come as a massive surprise to find out that deposits are not in fact safe. As always, what matters is the difference between expectation and realization. If SVB depositors had taken a haircut, then why would anyone leave their funds at a bank where they suspected they might be subject to a 5% haircut? There would have been a massive rush away from smaller banks to behemoths like JP Morgan Chase.

6. The problem is now solved

The only thing that is solved is that we have likely avoided a wide set of bank runs. But this has been accomplished at the cost of applying a massive patch to the system by basically insuring all deposits. This leaves us with a terrible system: fully insured fractional reserve banking. I have been an advocate for full reserve banking as an alternative. This would let us use basic income as the money creation mechanism. In short, the idea is that money would still enter the economy, but it would do so by giving money to people directly instead of putting banks in charge of figuring out where money goes. The problem, of course, is that bank investors and bank management don't like this idea because they benefit so much from the existing system. So there will be fierce lobbying opposition to making such a fundamental change. I will write more posts about this in the future, but one way to get the ball rolling is to issue new bank charters aggressively now for full reserve banks (sometimes called "narrow banks"). Many existing fintechs and some new ones could pick these charters up and provide interesting competition for the existing behemoths.

All of this is to say that this whole crisis is yet another example of how broken and held together by duct tape our existing systems are. That's why we are lurching from crisis to crisis. And yet we are not willing to try to fundamentally re-envision how things might work differently. Instead we are just kicking the can.

India Impressions (2023)

I just returned from a week-long trip to India. Most of this trip was spent meeting entrepreneurs and investors, centered around spending time with the team from Bolt in Bangalore (a USV portfolio company). This was my second time in India, following a family vacation in 2015. Here are some observations from my visit:

First, the mood in the country feels optimistic and assertive. People I spoke to, not just from the tech ecosystem but also drivers, tour guides, waiters, students, and professors, all seemed excited and energized. There was a distinct sense of India emerging as a global powerhouse that has the potential to rival China. As it turns out, quite a few government policies are aimed at protecting Indian industrial growth and separating it from China (including the recent ban on TikTok and other Chinese apps). Also, if you haven't seen it yet, I recommend watching the movie RRR. It is a "muscular" embodiment of the spirit I encountered and, based on my admittedly unscientific polling, was much liked by younger people there (and hardly watched by older ones).

Second, air pollution in Delhi was as bad as I remembered it, and in Mumbai it was much worse than I remembered. Mumbai now appears to be on par with Delhi. For example, here is a picture taken from the Bandra-Worli Sea Link, which is en route from the airport, where you can barely see the high-rise buildings of the city across the bay.

Third, there is an insane amount of construction everywhere. Not just new buildings going up but also new sewer lines, elevated highways, and rail systems. Most of these were yet to be completed but it is clear that the country is on a major infrastructure spree. Some of these projects are extremely ambitious, such as the new coastal road for Mumbai.

Fourth, traffic is even more dysfunctional than I remember it, and distances are measured in time, not miles. Depending on the time of day, it can easily take one hour to get somewhere that would be ten minutes away without traffic. This is true for all the big cities I visited on this trip (Delhi, Mumbai, and Bangalore). I don't really understand how people can plan to attend in-person meetings, but I suppose one gets used to it. I wound up taking one meeting in a car en route to the next one.

Fifth, in venture capital there are now many local funds, meaning funds that are not branded offshoots of US funds (as Sequoia India is). I spent time with the team from Prime Venture Partners (co-investors in Bolt) and Good Capital, among others. It is great to see that in addition to software-focused funds there are also ones focused on agtech/food (e.g. Omnivore) and deep tech (e.g. Navam Capital). Interestingly, all the ones I talked to have only offshore LPs. There is not yet a broad Indian LP base other than a few family offices, and regulations within India are apparently quite cumbersome, so the funds are domiciled in the US or in Mauritius.

Sixth, the "India Stack" is enabling a ton of innovation and deserves to be more widely known outside of India (US regulators should take note). In particular, the availability of a verified digital identity and of unified payments interfaces is incredibly helpful in the creation of new online and offline experiences, such as paying for a charge on the Bolt charging network. This infrastructure creates a much more level playing field and is very startup friendly. Add to this incredibly cheap data plans and you have the foundations for a massive digitally led transformation.

Seventh, India is finally recognizing the importance of the climate crisis both as a threat and as an opportunity. India is already experiencing extreme temperatures in some parts of the country on a regular basis (the opening of Kim Stanley Robinson's Ministry for the Future extrapolates what that might lead to). India is also dependent on sufficient rainfall during the Monsoon season and those patterns are changing also (this is part of the plot of Neal Stephenson's Termination Shock). As far as opportunity goes, India recently discovered a major lithium deposit, which means that a key natural resource for the EV transition exists locally (unlike oil which has to be imported). India has started to accelerate EV adoption by offering subsidies.

All in all this trip has made me bullish on India. Over the coming years I would not be surprised if we wind up with more investments from USV there, assuming we can find companies that are a fit with our investment theses. In the meantime, I will look for some public market opportunities for my personal portfolio.

Termination Shock (Book Review)