#which was defined in the recursion short as a solution that's interesting and easy to visualize

Text

day 10 // 100doc && day 25 // 100dop

wiped down study space 🤧

watched the recursion & merge sort shorts (today i learned that recursion is actually very very dumb. recursive functions don't even know what they're doing, they just know to keep doing it. they're "dumber" than the iterative versions of the function. they're so efficient because they're so dumb. they only know what they need to know...and they don't care to know more. 🤯)

worked on 1/4 practice problems (my initial solution is wrong. debugger told me exactly what's wrong with my logic but i'm tired so i'll fix it tomorrow. at this point, the debugger does 50% of the work for me XD lately, every time smth goes wrong with anything, i just set a breakpoint XD)

read

piano (sometimes i get stuck in a zombie loop of "i must do this", this being coding rn, and then i disregard everything else and then i wonder why am i suddenly so unproductive? OH YEAH! it's cuz my brain needs a variety of stimuli. and i've been feeling sad that i don't know how to play the music playing in my head...)

tried and failed to find the theme used in cs50's vscode on the cloud... i like how it colors the variable name and the variable type differently for my bad eyes... anyone know what it is? XD

#thanks unsplash#codeblr#progblr#100 days of code#comp sci#cs50#stemblr#studyblr#stem student#dark academia aesthetic#dark academia#desk aesthetic#study aesthetic#studyspo#study space#astudentslifebuoy#recursion is such a dumb form of ''elegance''#which was defined in the recursion short as a solution that's interesting and easy to visualize#yeah its dumbness is definitely interesting bc it seems so smart...but it only seems that way bc it's really so dumb XD#god this is hilarious#100dop#100 days of productivity

12 notes

·

View notes

Text

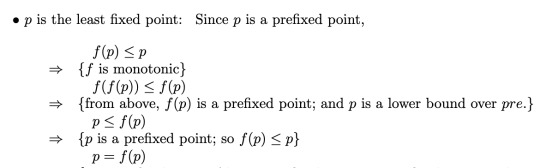

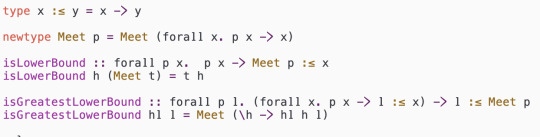

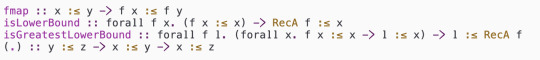

Generalized Church is the Curry-Howard of Knaster-Tarski

The proofs-as-programs principle (also known as the Curry-Howard isomorphism) sets up a correspondence between logical proof systems and type systems for programming languages. So occasionally a result is independently developed twice, once as a theorem and once as a program. Here’s a short and cute example of this phenomenon. (I read about it in this textbook, but I think it deserves to be better known).

The Program

The program we will consider is the roll and unroll functions. At this point you might like to try yourself to implement them (my own solution is further down this post). It’s an interesting exercise, because the function definitions look completely unintuitive, but they are actually easy to write if you let the types guide you. Using Haskell’s “typed holes” feature, you can start with just an underscore _, the typechecker will tell you “this underscore should be replaced by a term of type …”, and you can gradually refine it by putting in the only thing that has the right type at that point.

This coding of recursive types has been rediscovered many times, but I think it was made widely known by this note by Philip Wadler from 1990. I don’t know if there is a standard name for it, but I’ll call it the generalized Church encoding. (If you instantiate f with option you recover a slight variation of the usual Church encoding of natural numbers.) It’s imporant because what it tells us about the expressiveness of System F—it shows that just System F without any additional features can already encode (positive) recursive datatypes. But it’s not used much in practice because it has the usual drawbacks of Church encodings:

It’s inefficient at runtime, e.g. calculating the predecessor of a natural number takes O(n) time instead of O(1).

You often need impredicative polymorphism to define functions operating on them, which may offend your constructivist sensibilities or confound your type checker.

In standard Type Theory it’s not possible to prove the induction principle, so you can’t prove any interesting theorems about them. (However, as Wadler’s note shows, if you can prove or assume parametricity, that gives you some additional power.)

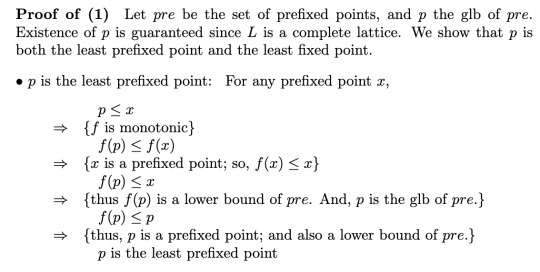

The Proof

Meanwhile, (part of) the Knaster-Tarski fixpoint theorem states that if f : X → X is a monotone function on a complete lattice, then ⋀{x | f x ≤ x} is the least fixpoint of f.

This theorem is well known to programming language researchers, because it can be used to as a unifying form for different kinds of induction principles. For example, Benjamin Pierce’s textbook Types and Programming Languages appeals to it to explain how induction and coinduction over typing relations work, by taking the lattice X to be a powerset of relation tuples, ordered by subset inclusion. (Note that this is not obviously connected to the problem of encoding recursive types. In Types and Programming Languages, the recursive terms are assumed as already given, and the theorem is used to define sets of those terms.)

The proof is only a few lines, but it’s still kindof tricky, each time I try to remember it I fail and have to look it up again. Here’s how it looks in a random pdf that google turned up:

It’s kindof neat, isn’t it? I particularly like the twist where it goes “in order to prove f(p) ≤ p, we first prove p ≤ f(p)”.

The Correspondence

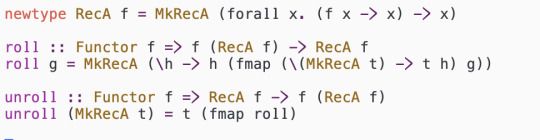

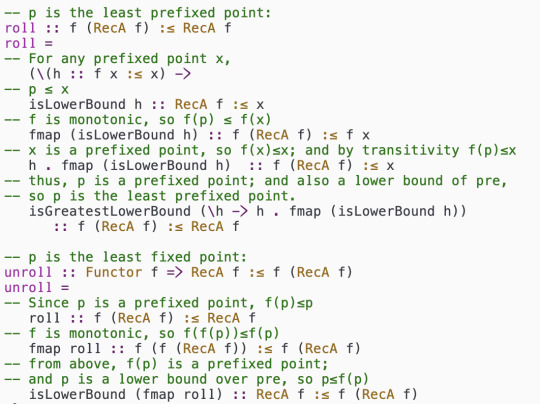

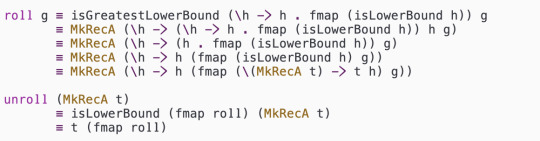

Here’s the definitions of roll and unroll that I came up with. I particularly like the twist where it goes “in order to define unroll, we first define roll”.

Now, it’s not obvious from just looking at it, but I claim that this is basically a line-by-line translation of the proof I quoted above.

In order help to make them match up, we will allow ourselves to write x≤y as alternative syntax for the function type x→y. So the lattice we will consider is the set of types ordered by function mappings, or (on the other side of proofs-as-programs) logical propositions ordered by implication. Let’s investigate each part of the proof.

Let pre be the set of prefixed points, and p the glb of pre. Existence of p is guaranteed since L is a complete lattice.

What the pdf calls p we will call (RecA f). This part of the proof is the only bit that requires some creative thought to set up a correspondence, because we have to invent the definition of RecA. It turns out that the lattice of types forms a complete lattice, where for any set of types {x | P x}, the greatest lower bound ⋀{x | P x} can be represented as the type ∀x.(P(x) → x). In order see that this really is the greatest lower bound, we prove two theorems, saying that it is a lower bound of {x | P x}, and that it’s greater than any other lower bound.

Then the type (RecA f) is just the type Meet, specialized to take P(x) := (f x ≤ x).

From now on we can forget the definition of ≤, and in the rest of the proof every occurrence of → refers to logical implication. All the rest of the proof is just a line-by-line translation, under the usual proof-as-programs correspondence:

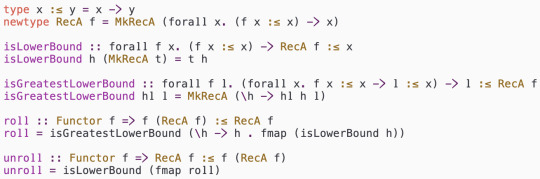

Here’s the program we just constructed:

We can expand out the definitions of isLowerBound, isGreatestLowerBound, and (.) to confirm that this really is exactly the same as the original Haskell program above:

But it’s also worth noting that it will still typecheck for any definition of the type constructor ≤, as long as we provide terms of the right type for the lattice lemmas. So this really is a translation of the proof in its full generality.

I’m not sure exactly what moral to draw from this exercise, except perhaps this: If you feel confused trying to prove the Knaster-Tarski theorem, you shouldn’t! If you pretend it’s a Haskell program, it’s easy to construct the proof by just following the types.

23 notes

·

View notes

Text

A little something about morality. Enough of my life has been spent working with mathematical structures and other theoretical constructs that the patterns of thought trained for those modes have bled through to other parts of my life. Morality is a core facet of life and its been shaped by the math just as much as the rest.

Generating complete mathematical proofs taught me more about skepticism than any other experience in my life. So often on the road to proving a theorem you will encounter the thought "it would be really convenient for this logical structure if fact X held". You can see how all the pieces fall in to place given the solidity of X and X probably has a certain internal plausibility and if X just held in truth then you could move on from this proof to something more relaxing (you may or may not have an unhealthy amount of animosity toward the proof built up at this point). It's tempting to write your first line "it's trivial to show X holds", sketch out the rest, and hope you've kicked up enough dust to pass - I've encountered my fair share of "proofs" using that structure. The sticking point is, it is mathematical maturity not to take the easy route of hope and instead to demonstrate the rigour required to generate a complete sound answer. And there's a reason for this. Your intuition in this area is untrained and often you discover (forging ahead) that you cannot prove X. After a lot of sweat and a pinch of luck you discover the counterexample or contradiction inherent in X that renders it false - the true proof proceeds along other lines.

This general lesson is useful. Convenience or wishing will not make a thing true and intuition should remain tempered with humility. This was actually one of the nails in the coffin for my religious inclinations. So many doubts and questions are answered if religion X is correct in its foundational claims but that has no bearing on whether X is true (only that its comforting). If you're honest with yourself then you cannot use that reasoning from most convenient world.

The next lesson comes from complexity theory. Sure complexity theory is an offshoot of the study of algorithms and so carries the faint stigma of applied mathematician to the theoretician but, stigma aside, it is a deep field full of interesting structure. Far more than I can render here so let's focus on one part. Complexity theory separates problems into an infinite leveled hierarchy depending on how hard the problem is to solve. In general, a problem is on level n+1 of the hierarchy if you can solve it when given access to a magic box that instantly solves any problem on level n. This simple picture is somewhat complicated by the fact that we don't know yet whether there are truly infinite levels or whether the structure collapses to some finite number of sets. This uncertainty is a source of some unease within the community.

Personally, I hold that the hierarchy is infinite because I believe that any universe where this is not true is simply not perverse enough to resemble our own. If my faction is correct then it is a point of mathematical correctness that there problems where its strictly easier to recognize a solution than it is to generate one (that one might also generalize, good writing and good editing are parallel skills but the first seems strictly harder than the second). By way of canonical example, its easier to verify given variable assignments to any random boolean equation than it is to generate those boolean assignments from scratch (verily, humans must earn their truth assignments by the sweat of their brow). This is the lesson I want you to learn from this section: when you see someone present an argument or a position and you compare it to your world view in a away that makes the argument seem both completely revolutionary and completely obvious, you have to step back and give them credit for locating that idea in the first place. This is a non-trivial amount of work. Conversely, when you recognize a particular solution to a general problem, give yourself a pat on the back because you've done something real.

An aside here, I realize that the spirit of the second part clashes somewhat with the lesson of the first part. All I'll say is that it all rounds down to humility and a conviction that issues we don't already recognize as easy almost certainly reduce to something intractable.

Let's talk about solvable. Wait, first a second aside - this is the true power of quantum algorithms. There are methods to use the uncertain nature of quantum bits to to quickly search the entire space of possible solutions and with high probability locate the exact solution to a given problem. It feels like magic and will revolutionize our world when engineering catches up to theory.

Let's talk about solvable. In particular, what do you do when the problem you wish to solve lies in one of the higher levels of the hierarchy, the levels we can't reach? Unless you're prepared to spend ungodly amounts of time on it or you get insanely lucky then you don't get to know an exact answer to the particulars of the problem. You *must* reframe it in a way that allows for an approximate answer, in effect transforming the question into a cousin living lower on the hierarchy. You're being forced to compromise, the universe is built to make you use heuristics. This is important - it's not about heuristics being quicker, it's about exact methods not being able to give you answers at all. Integer programming looks a lot like linear programming but it is in fact significantly more difficult in the general case. The structure of the universe means you must think in probabilities and in error bars and ponder trade-offs, in short the judgment no one asks of an oracle.

An event occurs in front of two of us. I know what I witnessed. And I'm almost certain that you didn't blink. And it's highly probable that you know I witnessed it. And its pretty likely that you think I think you witnessed it. And so on, with a little bit more uncertainty creeping in at each moment of recursion. This sad state of affairs is fine for the most part because usually the stakes are so low. But ask yourself how much more you'd care about common knowledge if your life depending on my having witnessed precisely the same event as you. We hear about that failure mode in the context of criminal trials all the time. Building shared knowledge requires enough work that it's used in the bureaucratic filter for asylum seekers and green card applicants. When you see one person able to get a group to agree on one particular interpretation of facts, remember that you just witnessed something hard. True common knowledge is infinite levels of recursion in the stack and it is impossible. For every situation, there's a point where the probability on the next level is too low for confidence, it's just a question of whether you calculate that far. And the probabilities deteriorate faster the more people you add.

We started with some fairly basic math truisms and moved through some hand-wavy logic constructions, let's end with something obscure. Communication is not free. If I want to learn about something that's happening far away or coordinate some communal action, I have to rely on messages that take multiple hops to reach their destination. We still have not mastered error correcting codes for common speech, much less methods for achieving better clarity in communication (case in point, how nonsensical most of this post reads), so your messages are going to get altered en-route. Beyond that, you're still the victim of the network. The effort/uncertainty of message sending defines a finite limit in the number of hops that we can use and still feel confident in the response. There are certain pathological graphs in which the vast majority of nodes believe thing one but when polling only their k-distant neighbors will come to believe that thing two is actually the majority belief. This is beyond government censorship or Overton windows, this is about never being able to know whether your mental image of society is accurate or if you've just been deceived by the structure of your communication.

It's the end and you're feeling cheated because I didn't say much about morals despite promises in my very first sentence. Morality as we understand it today is a bunch of ad hoc, best effort responses to a set of questions that were poorly defined in their original contexts and which haven't altered to match the multiple ways our social world has changed. If you've read the above then you realize solutions are hard and solutions to problems with many parts are harder. Social solutions addressing problems involving many people are worse and pro-social solutions are monumental. Answers are always going to rely on guesswork, methods need to be able to be executed under uncertainty, and if you think a general code of behavior is easy then you're wrong. You must cultivate judgment because the universe is not built to let you offload your thinking to some perfect algorithm.

7 notes

·

View notes