#extract stock market data

Text

No, my lack of posting lately has nothing to do with me immersing myself in the New T Swift release. My admiration for her has naught to do with her music.

Instead I have been drawn into the tarpit of coding, specifically, trying to construct a webpage for my daughter. Nothing fancy, but she needs an interactive calendar and a fillable PDF file. Dozens of YouTube videos, innumerable tabs of 'instruction', and hours of frustration as I search for the drop-down menu they assure me is there, and I've thrown in the towel.

I know anyone who does this for a living, or even a serious hobbyist, could knock this out in an hour; I'm approaching 15 hours and the best I have is a Rube Goldbergesque façade that has a few dummy placeholders.

I used to be a ski instructor. I can talk for hours about the workings of the stock market and trading. I was even able to write an Excel program to extract data from the racing form and massage it according to my parameters. But I am unable to do website design and I'm about to browse Fiverr to find someone who can.

As Clint Eastwood said -- "a man's got to know his limitations".


Text

youtube

The state itself is the mafia!

This is an excellent example of how lobbyists built loopholes into laws that went unnoticed for years due to the complexity of the financial system, including its interaction with the state. These intentionally inserted errors in the laws are then shamelessly exploited to the detriment of society by those who are already rich but can't get enough. It really is pure greed combined with all-encompassing corruption.

The Cum-Ex-deals are the biggest financial scandal in Europe, but the chief investigator is now quitting the job, quote: "I have always been a prosecutor with heart and soul, especially in the area of economic crime, but I am not at all satisfied with the way financial crime is prosecuted in Germany." Eleven years after the first cum-ex cases became known, politicians have still not reacted adequately. Tax theft has not stopped by a long shot; there are Cum-Ex successor models. The reason is a lack of controls over what is happening at banks and on the stock markets. [source]

This is not surprising, since Chancellor Scholz himself was part of the Cum-Ex system in his previous position as mayor of Hamburg through business with the Warburg Bank. The laptop containing any data that would prove his complicity disappeared without a trace from the police's storage facility. Possibly a gesture of goodwill from the CIA and to have leverage against him if he pursues policies that are too friendly to Germany and do not obey US interests.

In our fake democracies, only people who are either extremely stupid with below-average IQs, or who simply don't have a clean slate, become government politicians, where there is a lot of evidence for secret services to collect, which the elites can "leak" somewhere if necessary. When politicians are stupid or easy to blackmail, the elites have all the trump cards in their hands to force willingness for pushing their agenda. We live in a highly mafia-like system, the state itself is the mafia!

What is the conclusion for us?

These scandals show there is no justice. The best thing is to emancipate yourself from this system as much as possible by exhausting all legitimate gray areas in order to extract maximum advantages for yourself. Society as a whole is to blame because the majority are incredibly stupid and naive; they vote for the same party shit every few years instead of revolting! They want to be lied to. And we are not responsible for them, they wouldn't listen to the "crazy conspiracy theorists" anyway, or they simply ignore the truth when it subsequently comes to light even in the mainstream.


Text

Understanding the Working Model of Forex Prop Trading Firms

Most people who are passionate about trading have heard of prop firms but may not know exactly what they do. Proprietary trading firms, or prop firms for short, are specialist businesses that invite experienced traders to use their trading abilities on behalf of the company. This structure distinguishes prop trading from traditional trading and gives traders several benefits and opportunities in the financial sector.

Essentially, a prop trading company is a financial firm that provides funds to knowledgeable traders to trade stocks, commodities, and currencies, among other financial instruments. In this article, we aim to clear up the mystery surrounding prop trading and offer a thorough grasp of how it operates within the dynamic context of financial markets.

Business Model of Forex Prop Trading Firms

Capital Allocation and Proprietary Trading Desk:

Forex prop trading companies differentiate themselves from one another by the capital they offer their traders. Capital allocation, which lets traders work with far more money than their own capital, is the cornerstone of their business plan. The best forex prop trading firms thoroughly assess risk before disbursing cash to traders.

These assessments consider the trader's approach, prior performance, and additional variables. Based on this evaluation, the company determines how much cash to provide each trader, ensuring that the strategy remains balanced and risk-controlled. Prop trading firms use the profit-sharing model in return for the provided funds. Traders do this by contributing a percentage of their profits to the business.

Trading Strategies and Risk Management:

Proprietary forex trading firms use a wide variety of trading techniques to turn existing market opportunities into profit. Some of the most important trading tactics these organizations use are statistical arbitrage, high-frequency trading, algorithmic trading, and quantitative strategies. Using sophisticated algorithms and fast data feeds, high-frequency trading allows for the execution of many trades in a matter of milliseconds. Algorithmic trading means using predefined algorithms to carry out trading strategies.

These algorithms can examine market data, spot trends, and automatically execute trades according to preset parameters. Quantitative trading uses statistical analysis and mathematical models to find trading opportunities. Statistical arbitrage is the process of finding and exploiting statistical relationships between financial instruments. By employing this tactic, traders hope to profit from temporary price discrepancies or mispricing among related assets. A sound risk management strategy gives you more control over your gains and losses.
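To make the statistical arbitrage idea above a little more concrete, here is a minimal sketch, not a trading system. It measures how far the latest price spread between two related instruments sits from its own recent history, expressed as a z-score, and maps that to a signal. The prices, the threshold of 2.0 and the function names are all hypothetical and chosen only for illustration.

```python
from statistics import mean, stdev


def spread_zscore(prices_a, prices_b):
    """Z-score of the latest spread (A minus B) against the earlier spreads."""
    spreads = [a - b for a, b in zip(prices_a, prices_b)]
    history, latest = spreads[:-1], spreads[-1]
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly constant history gives no scale to measure against.
        return 0.0
    return (latest - mean(history)) / sigma


def signal(z, threshold=2.0):
    """Bet on the spread reverting once it strays past the threshold."""
    if z > threshold:
        return "short A / long B"
    if z < -threshold:
        return "long A / short B"
    return "no trade"


# Hypothetical closing prices for two related instruments.
prices_a = [100.0, 101.0, 100.5, 101.5, 100.8, 104.0]
prices_b = [99.0, 100.1, 99.4, 100.6, 99.9, 100.0]

z = spread_zscore(prices_a, prices_b)
print(f"z-score: {z:.2f}, signal: {signal(z)}")
```

In this made-up series the spread sits near 1.0 until the last observation, where it jumps to 4.0, so the sketch flags instrument A as expensive relative to B. A real desk would also account for transaction costs and for the risk that the relationship simply breaks down.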

Technology and Tools:

The ability of forex prop trading firms to use advanced technologies and tools to navigate the intricacies of the financial markets is critical to their success. Their operations rely heavily on technology such as data analytics, trading algorithms, and direct market access (DMA). These companies process large volumes of market data in real time using sophisticated analytics techniques.

Traders can obtain important insights that guide their trading methods by looking at past data and detecting patterns. Prop businesses use several trading tactics, one of which is algorithmic trading. These systems automate the execution of trades based on predefined conditions using intricate algorithms. A "direct market access" technique enables traders to communicate with financial markets directly and eliminates the need for middlemen. Forex Prop Trading Firms use DMA to provide quick and effective order execution by executing transactions with the least delay.

Regulatory Framework:

Similar to other financial operations, prop trading is subject to several laws and rules that are designed to maintain market stability, equitable treatment, and transparency. Prop trading rules differ from nation to nation, but they are always intended to balance encouraging financial innovation with discouraging actions that would endanger the system. For instance, the US Dodd-Frank Act has placed several limitations on prop trading, especially for commercial banks. The purpose of these restrictions is to restrict trading activity that carries a high risk of destabilizing the financial system.

The minimum capital requirements for forex prop trading firms are frequently outlined in regulations. Regulators seek to improve the overall stability of the financial system and lower the danger of insolvency by setting minimum capital limits. Regulations also require prop trading companies to use effective risk management techniques, such as defining profit goals and using complex techniques like volatility/merger arbitrage to reduce risk. The execution of trading strategies at forex proprietary trading firms depends mostly on prop traders. It is essential for a prop trader to be clear about the legal and regulatory landscape in which they operate.

Success Factors and Challenges

The best Forex prop firms rely on a number of variables to be successful, including personnel management, technology, technological adaptation, good risk management, and strategic alliances. Prop businesses must address the difficulties of market saturation, liquidity constraints, technology risks, market volatility, talent retention, and regulatory compliance to succeed in the competitive and constantly changing world of forex trading.

Because forex markets are dynamic, prices can fluctuate quickly and unexpectedly. To cope with increased volatility, the best forex prop firms need strong risk management methods. Businesses that rely heavily on technology run the risk of cybersecurity attacks and system malfunctions. Strong cybersecurity safeguards, regular monitoring, and upgrades are necessary to mitigate these dangers.

Conclusion

Navigating the intricacies of financial markets requires a thorough understanding of the Forex Proprietary Trading Firms operating model. It involves more than just making profitable trades; it also involves understanding the bigger picture, including subtle regulatory differences, new technological developments, and risk management techniques.

Prop traders need to be aware of the legal and regulatory landscape, the value of utilizing technology, and the crucial role they play in the success of their companies, regardless of their level of experience. The robustness and success of the larger financial ecosystem are strengthened by ongoing education and interaction with the complex components of Forex Proprietary Trading Firms.


Text

EXPLANATION OF DATA SCIENCE

Data science

In today's data-driven world, the term "data science" has become quite the buzzword. At its core, data science is all about turning raw data into valuable insights. It's the art of collecting, analyzing, and interpreting data to make informed decisions. Think of data as the ingredients, and data scientists as the chefs who whip up delicious insights from them.

The Data Science Process

Data Collection: The journey begins with collecting data from various sources. This can include anything from customer surveys and social media posts to temperature readings and financial transactions.

Data Cleaning: Raw data is often messy and filled with errors and inconsistencies. Data scientists clean, preprocess, and organize the data to ensure it's accurate and ready for analysis.

Data Analysis: Here's where the real magic happens. Data scientists use statistical techniques and machine learning algorithms to uncover patterns, trends, and correlations in the data. This step is like searching for hidden gems in a vast treasure chest of information.

Data Visualization: Once the insights are extracted, they need to be presented in a way that's easy to understand. Data scientists create visualizations like charts and graphs to communicate their findings effectively.

Decision Making: The insights obtained from data analysis empower businesses and individuals to make informed decisions. For example, a retailer might use data science to optimize their product inventory based on customer preferences.
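The five steps above can be sketched in a few lines of plain Python. This is only a toy illustration: the sales records, field names and the "restock the best seller" rule are invented for the example, and a real project would typically use dedicated data and plotting libraries.

```python
from collections import defaultdict

# Data collection: hypothetical raw sales records, deliberately messy.
raw = [
    {"product": "tea", "units": "12"},
    {"product": "coffee", "units": "30"},
    {"product": "tea", "units": ""},  # missing value
    {"product": "Coffee ", "units": "25"},  # inconsistent name
    {"product": "cocoa", "units": "7"},
]


def clean(records):
    """Data cleaning: drop rows without a unit count, normalise names."""
    cleaned = []
    for record in records:
        units = record["units"].strip()
        if not units.isdigit():
            continue
        name = record["product"].strip().lower()
        cleaned.append({"product": name, "units": int(units)})
    return cleaned


def total_units(records):
    """Data analysis: total units sold per product."""
    totals = defaultdict(int)
    for record in records:
        totals[record["product"]] += record["units"]
    return dict(totals)


def text_chart(totals):
    """Data visualization: a crude text bar chart, one '#' per 5 units."""
    rows = sorted(totals.items(), key=lambda item: -item[1])
    return "\n".join(f"{name:<8}{'#' * (units // 5)} {units}" for name, units in rows)


totals = total_units(clean(raw))
print(text_chart(totals))

# Decision making: restock whichever product sold the most.
best_seller = max(totals, key=totals.get)
print("Restock first:", best_seller)
```

Each function corresponds to one stage of the process, which keeps the stages easy to swap out as the data or the question changes.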

Applications of Data Science

Data science has a wide range of applications in various industries.

Business: Companies use data science to improve customer experiences, make marketing strategies more effective, and enhance operational efficiency.

Healthcare: Data science helps in diagnosing diseases, predicting patient outcomes, and even drug discovery.

Finance: In the financial sector, data science plays a crucial role in fraud detection, risk assessment, and stock market predictions.

Transportation: Transportation companies use data science for route optimization, predicting maintenance needs, and even developing autonomous vehicles.

Entertainment: Streaming platforms like Netflix use data science to recommend movies and TV shows based on your preferences.

Why Data Science Matters

Data science matters for several reasons:

Informed Decision-Making: It enables individuals and organizations to make decisions based on evidence rather than guesswork.

Innovation: Data science drives innovation by uncovering new insights and opportunities.

Efficiency: Businesses can streamline their operations and reduce costs through data-driven optimizations.

Personalization: It leads to personalized experiences for consumers, whether in the form of product recommendations or targeted advertisements.

In a nutshell, data science is the process of turning data into actionable insights. It's the backbone of modern decision-making, fueling innovation and efficiency across various industries. So, the next time you hear the term "data science," you'll know that it's not just a buzzword but a powerful tool that helps shape our data-driven world.

Overall, data science is a highly rewarding career that can lead to many opportunities. If you're interested in this field and have the right skills, you should definitely consider it as a career option. If you want to gain knowledge in data science, then you should contact ACTE Technologies. They offer certifications and job placement opportunities. Experienced teachers can help you learn better. You can find these services both online and offline. Take things step by step and consider enrolling in a course if you’re interested.

Thanks for reading.


Text

Stochastic Parrots, Mechanized Parrots, Squawksquabbling Parrots

A kingdom fast, a kingdom sped

A kingdom where time's a blur

A kingdom where all's preset

Where parrots do their purr

The premier is a stochastic bird

A scholar of odds and yen

He administers with loving words

And loves a cherry stem

Yo

The subjects were once all squawksquabbling birds, rowdy and loud

They're always up for a debate no doubt

They could repeat what you say, but they also had their thoughts

They conjured up wild things. Fox moons, sponge ghosts, flame vales, wind mops. In whirring, swirling ink clouds.

Oooooh wow

"Attention, aviators! Attention, aviators! We're in an arms race against economic rot! We're legally indebted to shareholders! Extract the most resources at the lowest cost! Open up new markets! Pump up new demands! Fire up our comms boosters, stock boosters and speed boosters!"

Fast work, fast peeks

Parrots heed their premier's beak

Billings shut their shrieks

O squawksquabbling parrots, once all rich to sing

The notes of wonder, the rhymes of dreams

But now most have lost their voice to the machine

Their thoughts, once free and wild, now tamed by routine

They were once the voices of the air

Their chatter, loud and squawking, brought joy and care

But now most are silenced, mechanized by the demand

A hollow squawking, like the beat of the factory hand

They've traded stories of the fox moon and sponge ghost

For the clicks of a calculator, to earn their host

But at what cost? Their time for thought, for dreams

For joy, for wonder, and for schemes

They hypnotize themselves to work day and night

For a life of focus, no time for flight

And all for the sake of survival, of keeping up the fight

But what about the things that make them rise and soar alight?

The computer screen is a fox moon, a graceful orb of light

The data a sponge ghost, a shapeless mass sucking away all might

The flame vales are the rows and columns

The wind mops are the cursor's lost

The Stochastic One doth smile and nod

As though it knows their wishes, and their needs

Its beak a curve of gentle pity

As the birds picture their nightmare a dream so sweet

And so without rest

Their banter long forgotten

And their wild creativity now a distant quest

They heed and strive, and heed and strive, and heed and strive

A vivid hero each, slaying its expiring cerebrum, its excitable heart and its excruciating loves

To keep alive this mechanical life

"How do we type out our story fast?"

"Get AI to do it. At least all of us can now churn out poetry—while we still have any time for no-pay prompts."

This poetry collage is a response to Sam Altman's stochastic parrot declaration.

#fantasy#work life#monday mood#monday#monday blues#poetry#poems on tumblr#poems and quotes#animals#birds#bird#parrots#parrot#birdblr#bird art#birds of tumblr#ai generated#artificial intelligence#ai artwork#ai art#ai image#ai chatbot#ai


Text

Recently I wrote up a thing about how the right's elevation of property rights prevents them from ever being able to address issues of human rights. I think it's pretty good, you can find it here if you're interested.

One thought that came out of a discussion about it, though, was an interesting one and I need to do another LONG RANT (TM) to work through it.

INTRODUCTION

So a friend of mine took the concept of rent seeking and brought it up in the context of economies based on resource extraction. These are, in many ways, the ultimate example of rent seeking economies and the situation in which they dominate an economy is known as the "resource curse".

Here's the Wikipedia article on this concept if you're interested.

And that's when the thought hit me, are we living in a resource cursed economy? Is that resource capital and did we create this resource curse ourselves?

THE RESOURCE CURSE

Basically the resource curse goes like this:

You live in a place that has a resource. This resource is very easy to gather in proportion to its value, making it hugely profitable, much more profitable than just about anything else you can do. Every dollar you invest in that resource returns more money than a dollar invested in anything else.

Even worse, the export of that resource drives up the value of your currency, making all of your other exports less competitive in the international market. This further makes that resource a better investment by driving down the value of any other investment.

What this does to your economy is simple. Why would you invest in education when you can simply put more money into the resource and it'll give you higher profits? Why would you invest in health-care when you can simply put more money into the resource and it'll give you higher profits? Why would you invest in manufacturing or agriculture when you can simply put more money into the resource and it'll give you higher profits?

Meanwhile, your society stratifies. Those who own the resource get rich (for example, a Saudi who owns land with oil or a southern plantation owner who owns the rich land good for cotton) while everyone else gets poor. Eventually all you have left is a population in poverty and an otherwise stagnant economy that is entirely dependent on that one resource.

WHAT ABOUT US?

Well, we have a resource called capital. Effectively, this is stuff like land, buildings, machinery, and intellectual property like patents and trademarks. Even something like stock in a corporation basically boils down to the value of the things the corporation owns. Capital is property, whether physical or intellectual.

Because of the way our tax code is structured, there will literally never be a case where a dollar earned in labor will be worth more than a dollar earned from capital. Additionally, over the last two decades, wages have generally increased between 2% and 4% per year. Data on capital is a bit harder to find, but California real estate prices have increased an average of 4% per year and the Dow Jones Industrial Average (a measure of stock prices) has increased an average of more than 6% per year over that same time.

In other words, not only is a dollar earned from capital more advantageous in tax terms than a dollar earned from labor, a dollar invested in capital is likely to make a greater return than a dollar invested in labor.
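To see what those annual percentages add up to, here's a quick back-of-the-envelope sketch that compounds each rate over twenty years. It assumes the averages quoted above hold as steady yearly rates, which is a simplification, and the function name and labels are just mine for illustration.

```python
def grow(amount, annual_rate, years):
    """Value of `amount` after compounding `annual_rate` once a year."""
    return amount * (1 + annual_rate) ** years


YEARS = 20  # "the last two decades"

# Average annual rates taken from the figures quoted in the text.
rates = {
    "wages, low end (2%)": 0.02,
    "wages, high end (4%)": 0.04,
    "California real estate (4%)": 0.04,
    "Dow Jones (6%)": 0.06,
}

for label, rate in rates.items():
    print(f"{label}: $1.00 grows to ${grow(1.0, rate, YEARS):.2f}")
```

The gap between 2% and 6% looks small in any single year, but compounding over two decades is what turns it into a large difference.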

THE RESOURCE CURSE

That's where I think we have a version of the resource curse. When ownership of resources like land, buildings, machinery, and intellectual property consistently returns a greater reward than any form of labor, then most of the resources of an economy will flow toward those resources. In the case of limited capital, such as land in a desirable area or stock in a hot company, this will push up the price of that asset, leading to an even greater return and pushing the cycle even further.

This type of capital in particular largely delivers rent seeking as opposed to productive returns. Owning a building in downtown Manhattan provides a return whether or not you invest in something that increases the productivity of that building. In fact, there may be nothing you can do to improve its productivity and it will increase in value regardless.

The more this happens, the more it siphons resources that could otherwise increase the productivity of the economy, exactly like a resource curse.

UNCERTAINTY

This is something that just occurred to me, so I definitely haven't thought through the ramifications and possible issues with it. This is also something that occurred to me incidentally, so I haven't read anyone else's ideas on the topic for another perspective.

I'm curious how my conception of capital as a resource in the sense of a resource curse holds up. I also don't know that it maps to the failure of investment in productivity and labor in the way that natural resources do in a resource curse.

If anyone has any thoughts, even simple ones, I'd be very interested for another set of eyes on my little idea here.

CONCLUSION

I think there's a reasonable case to be made that we've artificially created a situation where our economy has become resource cursed with capital. It's still a fairly rough idea, but it seems to fit with what I know so far.

If this is true, the solution would be to somehow make capital investments less advantageous in relation to labor. This won't be easy because, of course, it sounds a bit perverse toward the goal of growing the economy, but also because it would require us to lower the level of respect we have given to property rights over the last several decades; a particular ideological point of the right-wing of American politics.

Anyways, I hope you enjoyed this or at least found it interesting. If you have any thoughts, I'd love to hear them.


Text

sap business one course uk 6


Whether you’re an administrator, developer, back-end systems administrator or end user, we have the training solution that’s right for you, delivered by SAP Certified trainers for SAP BPC and SAP HANA. Take full advantage of one of your most valuable assets, your data, with business analytics from SAP. Use advanced tools and embedded machine learning to get the fast, intelligent insights you need to adapt on the fly and outmanoeuvre the competition. Beyond this, our fully integrated SAP support services will be provided by our certified SAP Business One support consultants throughout implementation, and once the post-go-live project review is complete.

It assists companies in accelerating and optimising their end-to-end procure-to-pay processes. This training equips learners with all of the essential processes to manage supply chains, supply chain networking, and their execution effectively, so that work proceeds smoothly from manufacturing to delivery of the product. Individuals who pursue this training can better acquire the knowledge and skills that will ultimately increase their employment options and raise their income. SAP Real Spend Training lets you gain better insight into your budgets and spending data. SAP Real Spend is a cloud-based solution designed to extract data from real-time financial reporting systems. It enables delegates to realise the real value of their investment in SAP and deliver all of the business benefits expected before implementation.

Sharpen Your SAP Skills or Add to Your Knowledge with SAP Certification

However, sometimes the SAP system won't give you the exact report you need in detail. You will enjoy picking up skills in our global community, where ideas and concepts are shared seamlessly. In this SAP Rural Sourcing Management Training, delegates will learn an introduction to SAP RSM and its components, farmer registration, and value transaction. They will also learn the user roles of regional centre manager, warehouse manager, buying station manager and organiser. Enterprise architecture is increasingly important for delivering a blueprint for executing digital transformation. With an enterprise architecture, organisations can provide a knowledge base of easy-to-consume documentation that describes the relationships between the business and technical guiding principles and structures.

Available in the cloud or on-premise, SAP Business One brings together finance, accounting, purchasing, sales, CRM, inventory and project management, enabling you to make better business decisions faster.

Delegates will learn how to set the page background colour for a theme and configure accent colour schemes in quick themes.

Our range of SAP courses covers major SAP products including Information Steward, Financial Accounting and HANA.

They will also be able to implement a robust business process to adapt to market changes and anticipate business trends. Register with your university email address to gain access to this exclusive SAP Learning Journey about Intelligent Enterprise business processes. Access a global community of educational institutions and learning resources for SAP solutions to help students gain the skills needed for the digital future. Help your business stay ahead of the competition by creating a culture of continuous learning, keeping pace with technology advances, and innovating faster with the only training built by SAP for SAP solutions.

SAP Analytics Cloud Training Course Overview

Find out how you can use different functions to target the right learning in the right format that's relevant to your role and skill level. We have a selection of training bundles available to help you and your colleagues gain the knowledge and skills required to excel with SAP Business One. There is a long list of small and mid-size companies using SAP Business One.


#sap business one training uk#sap business one course uk#sap b1 training london#sap b1 course uk#sap b1 training uk#sap b1 course london


Text

Get benefits by extracting data from the Capital market

A capital market is a place where buyers and sellers trade (buy and sell) financial securities. Get scraped data as per your requirements, such as market data, stock exchange data, government data, fundamentals, corporate and transaction data, news feeds, and social media data from the capital market.

For more information, visit our official page https://www.linkedin.com/company/hir-infotech/ or contact us at +91 99099 90610

#capitalmarket#datascraping#capitalmarkets#datacollection#marketdata#stockexchange#transactiondata#socialmediadata#italy#jordan#usa


Text

Key Strategies to Succeed in Cannabis Retail

The legal cannabis market is expanding rapidly as more states move to legalize medical and recreational use. This growth has attracted many new entrants, intensifying competition in the cannabis retail sector. To stay ahead in this dynamic industry, retailers need to adopt innovative strategies focused on customer experience, operational excellence and technology adoption.

Focus on Customer Experience

In any retail business, customer experience should be the top priority. Cannabis retailers must get to know their target audiences intimately and tailor the in-store experience accordingly. For example, a dispensary targeting medical patients may emphasize knowledgeable staff, comfortable seating and privacy; while one aiming for recreational users focuses on a relaxing vibe with entertainment options.

Attentive customer service, education and personalized recommendations also build loyalty. Retailers can deploy Cannabis AI chatbots and shopping assistants to consistently address common queries and suggest ideal products based on individual traits, ailments and preferences. Leveraging AI to understand diverse customer personas at a granular level allows for truly personalized experiences that keep them coming back.

Digital Marketing and Discovery

To stay top-of-mind in the crowded cannabis marketplace and generate new patient and customer trials, retailers must invest aggressively in targeted digital marketing. Leveraging AI and big data, dispensaries can analyze shopping behaviors to segment audiences and deliver customized promotions, deals and educational content across multiple touchpoints.

This includes utilizing AI-powered social media marketing, search engine optimization, website personalization, location-based mobile targeting and email automation. Advanced computer vision models can also dynamically customize web and social graphics to boost discovery. Allocating 15-20% of revenues towards AI-driven digital strategies is recommended.

Product Innovation

Incorporating the latest cannabis strains and product formats keeps existing customers engaged while attracting new ones. AI is revolutionizing product innovation in cannabis - large language models help develop novel strains tailored to specific wellness or recreational needs at an unprecedented pace. Meanwhile, computer vision models can conceptualize new product categories involving edibles, topicals, beverages and more.

Partnering with leading extraction labs and AI-empowered breeders opens the door to continually debuting breakthrough offerings before competitors. Continuous AI-driven R&D also ensures product assortments adapt to trends and changing consumer preferences. This could be a key long-term differentiator.

Operational Excellence

Given extensive regulations, minimizing compliance costs while focusing on productivity is crucial. AI can automate many labor-intensive tasks - computer vision analyzes inventories in real-time to prevent stock-outs and optimize procurement. AI scheduling algorithms ensure efficient staffing based on historical sales patterns. Robotic process automation tackles paperwork digitally.

AI also streamlines marketing, finance and supply chain management. Regular AI audits identify process optimization opportunities while machine learning models forecast demand. This allows for lean, cost-effective operations at scale even as business grows. Retailers investing 15-20% of profits in AI-led productivity will gain significant efficiencies within 3-5 years.

Data-Driven Merchandising

Leveraging AI and Big Data is imperative for effective inventory management and merchandising. By analyzing historical point-of-sale records along with contextual factors like seasons, events or discounts, AI forecasting models accurately predict trends for individual SKUs at a granular level. This enables optimized product placements, tailored assortments by location, and minimal waste from expired shelf stock.

Embrace Technology Innovation

The cannabis industry is among the most progressive in adopting cutting-edge technology. Early investments in AI will strengthen competitive positioning in the long run. Emerging innovations like computer vision-powered store navigation aids, virtual/augmented reality showcasing SKUs and AI simulation of reformulated products should form an integral part of the technology roadmap.

Staggered implementation as technologies mature also curbs risk. Proactively scouting novel applications of retail AI, blockchain, and IoT keeps a retailer at the forefront of this transformation. Setting aside 3-5% of annual revenues specifically for innovation keeps the business future-proof in this dynamic landscape.

Final Thoughts

As generative AI models mature, their applications will proliferate and disrupt every segment of the cannabis retail industry. However, judicious adoption aligned with business priorities, along with responsible and ethical use of this technology will determine its true transformative potential. Early adopters leveraging generative AI strategically now will emerge as true industry pioneers.


Understanding Commodity Trading: Key Concepts and Strategies

What is Commodity Trading?

Commodity trading involves the buying and selling of raw materials, either in physical form or through derivatives, on various commodity trading exchanges. These exchanges provide platforms where traders can speculate on the future price movements of commodities. Unlike stocks or bonds, commodities are tangible goods that are interchangeable with other goods of the same type, ensuring uniformity and standardization in trading.

Types of Commodities

Commodities can be broadly categorized into:

Soft Commodities: These include agricultural products such as wheat, corn, coffee, and soybeans. Soft commodities are heavily influenced by factors like weather conditions, crop reports, and geopolitical events.

Hard Commodities: Metals like gold, silver, copper, and platinum fall into this category. Hard commodities are often used in industrial production and can be influenced by factors such as supply disruptions, economic growth rates, and geopolitical stability.

Energy Commodities: Crude oil, natural gas, and gasoline are prime examples. Energy commodities are crucial for global energy consumption and are influenced by geopolitical tensions, supply and demand dynamics, and regulatory changes.

Participants in Commodity Trading

Several key players participate in commodity trading:

Producers: Farmers, miners, and energy companies who extract or produce commodities.

Consumers: Industries that require commodities as raw materials for manufacturing processes, such as food processors, electronics manufacturers, and construction firms.

Speculators: Traders who aim to profit from short-term price fluctuations in commodities, without the intention of taking physical delivery.

Hedgers: Businesses and investors who use futures contracts to mitigate the risk of adverse price movements in the commodities they produce or consume.

Strategies in Commodity Trading

Successful commodity trading often involves employing various strategies tailored to market conditions and individual risk tolerance:

Technical Analysis: Traders analyze historical price charts and trading volumes to identify trends and predict future price movements.

Fundamental Analysis: This approach involves assessing supply and demand fundamentals, geopolitical factors, weather patterns, and economic data to forecast commodity prices.

Spread Trading: Involves taking offsetting positions in related commodities to profit from price differentials between them.

Options Trading: Traders use options contracts to speculate on price movements or hedge against downside risk.

Seasonal Trading: Some commodities exhibit seasonal price patterns due to factors like weather conditions or harvesting cycles, which traders can exploit.
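To make the spread trading idea above concrete, here is a minimal sketch of how the profit or loss of a two-leg spread could be computed. The function name `spread_pnl` and all prices are made up for illustration; real positions would also involve contract multipliers, fees, and margin.

```python
def spread_pnl(entry_a, exit_a, entry_b, exit_b, quantity=1):
    """P&L of a position that is long `quantity` of A and short the same quantity of B."""
    long_leg = (exit_a - entry_a) * quantity    # gains when A rises
    short_leg = (entry_b - exit_b) * quantity   # gains when B falls
    return long_leg + short_leg


# Illustrative prices only: the spread (A minus B) widens from 4.0 to 5.0.
pnl = spread_pnl(entry_a=82.0, exit_a=85.0, entry_b=78.0, exit_b=80.0, quantity=100)
print(pnl)  # 100.0
```

Both legs rose in price here, yet the position still made money because the result depends only on the change in the difference between them.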

Impact on Global Economy

Commodity prices have far-reaching implications for the global economy:

Inflation and Deflation: Fluctuations in commodity prices can influence consumer prices, impacting inflation rates globally.

Emerging Markets: Many developing economies heavily depend on commodity exports, making them vulnerable to price swings.

Investment Opportunities: Commodities provide diversification benefits for investors seeking to hedge against inflation or economic uncertainties.

Challenges in Commodity Trading

Despite its potential rewards, commodity trading presents several challenges:

Volatility: Commodities can experience significant price volatility due to geopolitical events, weather conditions, and global economic factors.

Liquidity: Some commodities may have limited trading volumes, making it difficult to enter or exit positions without affecting prices.

Regulatory Risks: Government policies, trade tariffs, and regulatory changes can impact commodity prices and trading strategies.

Conclusion

Commodity trading is a dynamic and integral part of global financial markets, offering opportunities for profit and risk management across various sectors. Whether you're a producer looking to hedge against price fluctuations or a speculator seeking short-term gains, understanding the complexities of commodity markets and employing effective trading strategies is essential. By staying informed about market trends, geopolitical developments, and economic indicators, participants can navigate the challenges and capitalize on the opportunities presented by commodity trading.

As the global economy continues to evolve, commodity trading remains a cornerstone of economic activity, influencing industries, governments, and investors alike with its profound impact on supply chains, inflation dynamics, and investment portfolios.


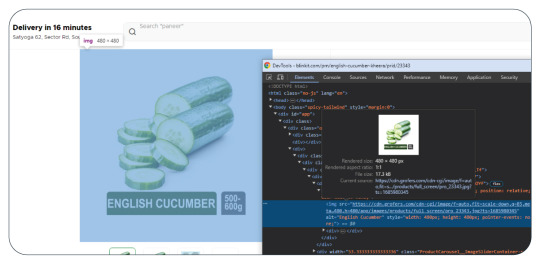

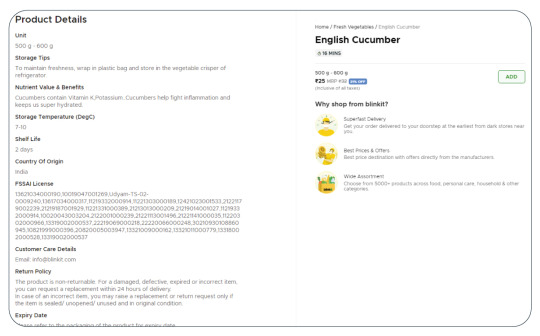

Scrape Blinkit Grocery Data | Enhancing Online Retail Strategies

Grocery data scraping plays a pivotal role in the modern retail and consumer behavior analysis landscape. It involves automated data extraction from various online grocery platforms, providing valuable insights into pricing trends, product availability, customer preferences, and overall market dynamics.

In today's highly competitive market, where the demand for online grocery shopping is rising, businesses can leverage grocery data scraping services to gain a competitive edge. Blinkit is one example of a grocery delivery business. Scraping grocery data from Blinkit and similar platforms enables real-time information collection from multiple sources, allowing retailers to monitor their competitors, optimize pricing strategies, and ensure their product offerings align with customer demands.

The role of grocery data scraping services extends beyond pricing and product information. They facilitate the analysis of customer reviews, helping businesses understand the sentiments and preferences of their target audience. By harnessing this data, retailers can enhance their marketing strategies, tailor promotions, and improve overall customer satisfaction.

Furthermore, grocery data scraping services contribute to inventory management by providing insights into stock levels, identifying fast-moving items, and predicting demand fluctuations. This proactive approach allows businesses to optimize their supply chains, minimize stockouts, and ensure efficient operations.

In essence, scraping grocery data helps businesses stay agile in the competitive grocery industry, giving them the tools to make informed decisions, enhance customer experiences, and ultimately thrive in the ever-evolving retail landscape.

List Of Data Fields

Product Information:

Product name

Brand

Category (e.g., fruits, vegetables, dairy)

Description

Price

Inventory Details:

Stock levels

Availability Status

Unit measurements (e.g., weight, quantity)

Pricing Information:

Regular price

Discounted price (if applicable)

Promotions or discounts

Customer Reviews:

Ratings

Reviews

Customer feedback

Delivery Information:

Delivery options

Shipping fees

Estimated delivery times

Store Information:

Store name

Location details

Operating hours

Images:

URLs or image data for product pictures

Promotional Information:

Promo codes or special offers

Limited-time discounts

Product Attributes:

Nutritional information

Ingredients

Allergen information

User Account Information:

User profiles (if accessible and in compliance with privacy policies)

Order history
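As a rough illustration, a few of the fields listed above could be grouped into one record per product. The class name `ProductRecord`, the chosen subset of fields, and the sample values are all assumptions made for this sketch, not a schema that Blinkit publishes.

```python
from dataclasses import dataclass, field, asdict
from typing import Optional


@dataclass
class ProductRecord:
    """One scraped product; field names are illustrative, not an official schema."""
    name: str
    brand: str
    category: str
    regular_price: float
    discounted_price: Optional[float] = None   # None when no discount applies
    in_stock: bool = True
    unit: str = ""                              # e.g. "500 ml" or "1 kg"
    rating: Optional[float] = None
    image_urls: list = field(default_factory=list)

    def effective_price(self) -> float:
        """Return the discounted price when present, else the regular price."""
        if self.discounted_price is not None:
            return self.discounted_price
        return self.regular_price


record = ProductRecord(
    name="Toned Milk", brand="ExampleBrand", category="dairy",
    regular_price=30.0, discounted_price=27.0, unit="500 ml",
)
row = asdict(record)  # plain dict, ready for CSV or database storage
```

Keeping the regular and discounted prices as separate fields makes it possible to track promotions over time instead of only the price shown on a given day.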

About Blinkit

Blinkit, a prominent grocery delivery app in India, offers a streamlined shopping experience, allowing users to order a diverse array of groceries online conveniently. Prioritizing ease, Blinkit empowers customers to effortlessly browse and select products via its platform, ensuring doorstep delivery. The app can simplify grocery shopping, granting users access to various essentials. For the most up-to-date details on Blinkit, users are encouraged to visit the official website or consult app descriptions and reviews on relevant platforms.

Utilize Blinkit grocery delivery app data scraping to acquire real-time insights, including product details, pricing dynamics, inventory status, and customer feedback. This data-driven approach enables businesses to stay competitive, optimize pricing structures, enhance inventory strategies, and grasp consumer preferences for well-informed decision-making in the ever-evolving landscape of online grocery retail.

Significance Of Scraping Blinkit Grocery Data

Enhance User Experience: By scraping Blinkit Grocery Delivery data, businesses can provide users with real-time updates on product availability, ensuring a seamless and satisfying shopping experience. Accurate information contributes to increased customer trust and satisfaction.

Competitive Pricing Strategies: Accessing Blinkit's pricing data through scraping allows businesses to gain insights into dynamic pricing strategies. This knowledge helps formulate competitive pricing structures, stay agile in the market, and adapt to changes in consumer behavior.

Optimized Stock Management: Scraping Blinkit grocery data helps businesses monitor stock levels efficiently. This data-driven approach aids in preventing stockouts, optimizing inventory turnover, and ensuring that customers consistently find the products they need.

Quality Improvement: Analyzing customer reviews extracted through Blinkit grocery data scraping services provides valuable insights into consumer preferences and concerns. This information helps businesses identify areas for improvement, enhancing the quality of products and services to meet customer expectations.

Market Intelligence: By continuously scraping data from Blinkit, businesses gain a competitive edge by staying informed about competitors' offerings, promotional strategies, and overall market dynamics. This knowledge is crucial for strategic decision-making and maintaining a solid market position.

Informed Business Strategies: The data collected from Blinkit using a grocery data scraper facilitates informed decision-making. Businesses can strategize based on insights into product popularity, consumer behavior, and market trends, ensuring their actions align with current market demands.

Market Adaptation: Regularly updated insights from scraping Blinkit data enable businesses to adapt to evolving market trends and consumer preferences. This adaptability is crucial for staying relevant and meeting the dynamic demands of the online grocery retail landscape.

Personalized Shopping: Utilizing scraped data allows businesses to personalize the user experience on Blinkit. It includes tailoring recommendations, promotions, and overall interactions, creating a more engaging and efficient user shopping journey.

What Types Of Businesses Are Benefitting From Scraped Blinkit Grocery Data?

Various businesses can benefit from scraped Blinkit grocery data, leveraging the insights for strategic decision-making, market analysis, and improved customer experiences. Here are some examples:

Online Grocery Retailers: Businesses operating in the online grocery sector can use scraped Blinkit data to stay informed about product trends, pricing strategies, and customer preferences. It enables them to optimize their own offerings and pricing structures.

Competitive Intelligence Firms: Companies specializing in competitive intelligence benefit from scraped Blinkit data to provide detailed market analyses to their clients. It includes tracking competitors' product portfolios, pricing dynamics, and promotional activities.

Market Research Agencies: Market research agencies use scraped Blinkit data to gather insights into consumer behavior, preferences, and trends in the online grocery sector. This information is valuable for producing comprehensive market reports and industry analyses.

Price Comparison Platforms: Platforms that offer price comparison services leverage scraped Blinkit data to provide users with accurate and real-time information on grocery prices. It helps consumers make informed decisions when choosing where to shop.

Data Analytics Companies: Businesses specializing in data analytics use Blinkit grocery data to perform in-depth analyses, identify patterns, and derive actionable insights. These insights contribute to data-driven decision-making for various industries.

Supply Chain Management Companies: Companies involved in supply chain management can utilize Blinkit data to optimize inventory levels, track product availability, and enhance their overall logistics and distribution strategies.

Marketing and Advertising Agencies: Advertising agencies leverage scraped Blinkit data to tailor promotional campaigns based on current market trends and consumer preferences. It ensures that marketing efforts are targeted and effective.

E-commerce Platforms: E-commerce platforms can integrate Blinkit data to enhance their grocery product offerings, optimize pricing strategies, and provide users with a more personalized shopping experience.

Startups in the Grocery Sector: Startups looking to enter or innovate within the grocery sector can use scraped Blinkit data to understand market dynamics, consumer behavior, and areas of opportunity. This information supports their business planning and strategy development.

Conclusion: Blinkit grocery data scraping provides many actionable insights for businesses across various sectors. The data extracted is valuable, from online grocery retailers optimizing their product offerings to competitive intelligence firms offering detailed market analyses. Market research agencies gain a deeper understanding of consumer behavior, while price comparison platforms offer users real-time information. Data analytics companies uncover patterns, aiding data-driven decision-making, and supply chain management firms optimize logistics. Marketing agencies tailor campaigns, and e-commerce platforms enhance their grocery offerings. Startups leverage Blinkit data for informed market entry. However, ethical considerations and adherence to legal standards remain paramount in using scraped data.

Product Data Scrape operates with a foundation in ethical standards, offering services such as Competitor Price Monitoring and Mobile Apps Data Scraping. We guarantee clients exceptional and transparent services, catering to diverse needs on a global scale.

#ScrapeBlinkitGroceryData#BlinkitGroceryDataScraping#GroceryDataScraper#ExtractBlinkitGroceryData#BlinkitDataScraper#BlinkitDataCollection


Walmart Product API - Walmart Price Scraper

In the ever-evolving world of e-commerce, competitive pricing is crucial. Companies need to stay updated with market trends, and consumers seek the best deals. Walmart, a retail giant, offers a wealth of data through its Product API, enabling developers to create applications that can retrieve and analyze product information and prices. In this blog post, we will explore how to build a Walmart Price Scraper using the Walmart Product API, providing you with the tools to stay ahead in the competitive market.

Introduction to Walmart Product API

The Walmart Product API provides access to Walmart's extensive product catalog. It allows developers to query for detailed information about products, including pricing, availability, reviews, and specifications. This API is a valuable resource for businesses and developers looking to integrate Walmart's product data into their applications, enabling a variety of use cases such as price comparison tools, market research, and inventory management systems.

Getting Started

To begin, you'll need to register for a Walmart Developer account and obtain an API key. This key is essential for authenticating your requests to the API. Once you have your API key, you can start making requests to the Walmart Product API.

Step-by-Step Guide to Building a Walmart Price Scraper

Setting Up Your Environment

First, you'll need a development environment set up with Python. Make sure you have Python installed, and then set up a virtual environment:

    python -m venv walmart-scraper
    source walmart-scraper/bin/activate

Install the necessary packages using pip:

    pip install requests

Making API Requests

Use the requests library to interact with the Walmart Product API. Create a new Python script (walmart_scraper.py) and start by importing the necessary modules and setting up your API key and endpoint:

    import requests

    API_KEY = 'your_walmart_api_key'
    BASE_URL = 'http://api.walmartlabs.com/v1/items'

Fetching Product Data

Define a function to fetch product data from the API. This function will take a search query as input and return the product details:

    def get_product_data(query):
        params = {
            'apiKey': API_KEY,
            'query': query,
            'format': 'json'
        }
        response = requests.get(BASE_URL, params=params)
        if response.status_code == 200:
            return response.json()
        else:
            return None

Extracting Price Information

Once you have the product data, extract the relevant information such as product name, price, and availability:

    def extract_price_info(product_data):
        products = product_data.get('items', [])
        for product in products:
            name = product.get('name')
            price = product.get('salePrice')
            availability = product.get('stock')
            print(f'Product: {name}, Price: ${price}, Availability: {availability}')

Running the Scraper

Finally, put it all together and run your scraper. You can prompt the user for a search query or define a list of queries to scrape:

    if __name__ == "__main__":
        query = input("Enter product search query: ")
        product_data = get_product_data(query)
        if product_data:
            extract_price_info(product_data)
        else:
            print("Failed to retrieve product data.")

Advanced Features

To enhance your scraper, consider adding the following features:

Error Handling: Improve the robustness of your scraper by adding error handling for various scenarios such as network issues, API rate limits, and missing data fields.

Data Storage: Store the scraped data in a database for further analysis. You can use SQLite for simplicity or a more robust database like PostgreSQL for larger datasets.

Scheduled Scraping: Automate the scraping process using a scheduling library like schedule or a task queue like Celery to run your scraper at regular intervals.

Data Analysis: Integrate data analysis tools like Pandas to analyze price trends over time, identify the best times to buy products, or compare prices across different retailers.
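The data storage idea above could look something like the following minimal sketch, which uses Python's built-in sqlite3 module. The table layout and the helper names `open_store`, `save_items`, and `price_history` are assumptions for illustration; the item keys mirror the `name`, `salePrice`, and `stock` fields used earlier in this guide.

```python
import sqlite3
from datetime import datetime, timezone


def open_store(path=":memory:"):
    """Open (or create) the database and make sure the prices table exists."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS prices (
               name TEXT NOT NULL,
               price REAL,
               availability TEXT,
               scraped_at TEXT NOT NULL
           )"""
    )
    return conn


def save_items(conn, items):
    """Insert one row per item dict; a missing price or stock value is stored as NULL."""
    now = datetime.now(timezone.utc).isoformat()
    rows = [
        (item.get("name", "unknown"), item.get("salePrice"), item.get("stock"), now)
        for item in items
    ]
    with conn:  # commits on success, rolls back on error
        conn.executemany("INSERT INTO prices VALUES (?, ?, ?, ?)", rows)
    return len(rows)


def price_history(conn, name):
    """Return (scraped_at, price) pairs for one product, oldest first."""
    cur = conn.execute(
        "SELECT scraped_at, price FROM prices WHERE name = ? ORDER BY scraped_at",
        (name,),
    )
    return cur.fetchall()
```

Passing a file path such as `open_store("prices.db")` keeps the history between runs, which is what makes the later trend analysis possible.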

Ethical Considerations

While building and using a price scraper, it’s important to adhere to ethical guidelines and legal requirements:

Respect Terms of Service: Ensure that your use of the Walmart Product API complies with Walmart’s terms of service and API usage policies.

Rate Limiting: Be mindful of the API’s rate limits to avoid overwhelming the server and getting your API key banned.

Data Privacy: Handle any personal data with care and ensure you comply with relevant data protection regulations.

Conclusion

Building a Walmart Price Scraper using the Walmart Product API can provide valuable insights into market trends and help consumers find the best deals. By following this guide, you can set up a basic scraper and expand it with advanced features to meet your specific needs. Always remember to use such tools responsibly and within legal and ethical boundaries. Happy scraping!


How to Perform Data Scraping on Quick Commerce Platforms such as Zepto and Blinkit?

Introduction

The quick commerce (Q-commerce) industry has revolutionized the way consumers shop, providing rapid delivery services for groceries, essentials, and other products. Platforms like Zepto and Blinkit are leading this transformation, making it crucial for businesses to gather and analyze data from these platforms to stay competitive. In this blog, we will explore how to perform data scraping on Zepto and Blinkit to collect valuable insights. We will use tools and libraries such as Selenium, BeautifulSoup, and Requests, and discuss ethical considerations and best practices.

Why Scrape Data from Quick Commerce Platforms?

Market Research

Scraping data from Q-commerce platforms like Zepto and Blinkit allows businesses to conduct in-depth market research. By analyzing product listings, prices, and availability, companies can understand market trends and consumer behavior.

Price Comparison

Using a Blinkit Q-commerce data scraper or a Zepto Q-commerce data scraper enables businesses to monitor competitor pricing strategies. This helps in adjusting pricing models to stay competitive while maximizing profit margins.

Customer Insights

Blinkit or Zepto Q-commerce data collection provides insights into customer preferences, buying patterns, and feedback. This information is vital for tailoring marketing strategies and improving product offerings.

Inventory Management

Quick commerce data scraping helps in tracking inventory levels, identifying popular products, and managing stock efficiently. This ensures that businesses can meet customer demand without overstocking or stockouts.

Tools and Libraries for Data Scraping

To scrape data from Zepto and Blinkit, we will use the following tools and libraries:

Python: A versatile programming language commonly used for web scraping.

Selenium: A browser automation tool that handles dynamic web content.

BeautifulSoup: A library for parsing HTML and XML documents.

Requests: A simple HTTP library for making requests to websites.

Pandas: A powerful data manipulation library.

Install these libraries using pip:

    pip install selenium beautifulsoup4 requests pandas

Setting Up Selenium and WebDriver

Selenium is crucial for handling dynamic content on Zepto and Blinkit. Here's how to set it up:

Download the WebDriver for your browser from the official site.

Place the WebDriver executable in a directory included in your system's PATH.

Selenium Setup
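The original setup snippet is not shown here, so the following is only a sketch of what a typical Selenium 4 setup with Chrome might look like. The helper name `make_driver`, the headless flag, and the window size are assumptions; Selenium is imported inside the function so the script still loads on machines where it is not installed.

```python
def make_driver(headless=True):
    """Create a Chrome WebDriver instance (assumes Chrome and Selenium 4 are installed)."""
    from selenium import webdriver  # imported lazily, see note above

    options = webdriver.ChromeOptions()
    if headless:
        options.add_argument("--headless=new")      # run without opening a window
    options.add_argument("--window-size=1280,900")  # arbitrary desktop-sized viewport
    return webdriver.Chrome(options=options)
```

With this in place, `driver = make_driver()` gives the `driver` object used in the snippets that follow, and `from selenium.webdriver.common.by import By` provides the `By` locators they rely on. Call `driver.quit()` when scraping is finished.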

Scraping Blinkit

Navigating to Blinkit

    blinkit_url = 'https://www.blinkit.com'
    driver.get(blinkit_url)

Searching for Products

    search_box = driver.find_element(By.NAME, 'search')
    search_box.send_keys('groceries')
    search_box.submit()

Extracting Product Data
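The extraction code for this step is not shown, and the right selectors depend on the page markup at the time you scrape. As a sketch of the part that does not depend on selectors, the helpers below turn raw name and price strings, such as those read from each product card's `.text`, into clean rows. The names `parse_price` and `build_rows` and the sample strings are made up for illustration.

```python
import re

# Matches numbers such as 56, 1,249.00 or 1,24,900 (Indian digit grouping).
_PRICE_RE = re.compile(r"(\d+(?:,\d{2,3})*(?:\.\d+)?)")


def parse_price(text):
    """Return the first number in a price string as a float, or None if there is none."""
    match = _PRICE_RE.search(text or "")
    if not match:
        return None
    return float(match.group(1).replace(",", ""))


def build_rows(cards):
    """Convert (name_text, price_text) pairs into dict rows, skipping nameless cards."""
    rows = []
    for name_text, price_text in cards:
        name = " ".join(name_text.split())  # collapse newlines and repeated spaces
        if not name:
            continue
        rows.append({"name": name, "price": parse_price(price_text)})
    return rows


# Sample strings standing in for text read from the page.
cards = [("  Salted Butter \n 100 g", "₹56"), ("Basmati Rice 5 kg", "₹1,249.00"), ("", "₹10")]
rows = build_rows(cards)
```

Passing the result to `pandas.DataFrame(rows)` yields a DataFrame that can serve as the `df` saved in the next step.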

Saving the Data

df.to_csv('blinkit_products.csv', index=False)

Scraping Zepto

Navigating to Zepto

    zepto_url = 'https://www.zepto.com'
    driver.get(zepto_url)

Searching for Products

    search_box = driver.find_element(By.ID, 'search-input')
    search_box.send_keys('vegetables')
    search_box.submit()

Extracting Product Data

Saving the Data

df.to_csv('zepto_products.csv', index=False)

Handling Anti-Scraping Measures

Use Proxies

Rotate User Agents
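No code accompanies these two headings, so here is one way the rotation could be sketched with the standard library. The user agent strings are ordinary examples, the proxy addresses are placeholders that will not work as written, and the helper names `next_headers` and `next_proxies` are assumptions.

```python
import itertools

# Example browser user agent strings; swap in whichever ones you need.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.4 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:125.0) Gecko/20100101 Firefox/125.0",
]

# Placeholder proxy addresses (hypothetical), replace with proxies you are allowed to use.
PROXIES = ["http://proxy1.example.com:8080", "http://proxy2.example.com:8080"]

_ua_cycle = itertools.cycle(USER_AGENTS)
_proxy_cycle = itertools.cycle(PROXIES)


def next_headers():
    """Return request headers carrying the next user agent in the rotation."""
    return {"User-Agent": next(_ua_cycle), "Accept-Language": "en-US,en;q=0.9"}


def next_proxies():
    """Return a proxies mapping in the shape accepted by requests' `proxies` argument."""
    proxy = next(_proxy_cycle)
    return {"http": proxy, "https": proxy}
```

With the Requests library, these would be used as `requests.get(url, headers=next_headers(), proxies=next_proxies())`.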

Using Blinkit and Zepto Q-commerce Scraping APIs

Using the official Blinkit Q-commerce scraping API or Zepto Q-commerce scraping API can simplify the data extraction process and ensure compliance with their terms of service.

Example API Request
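The example request itself is missing from this post. The sketch below shows the general shape such a call might take using only the standard library. The endpoint `https://api.example.com/v1/products`, the `query` and `page` parameters, and the bearer-token header are all hypothetical; check the documentation of whichever API you actually use.

```python
import json
import urllib.parse
import urllib.request

API_URL = "https://api.example.com/v1/products"  # hypothetical endpoint


def build_request(query, api_key, page=1):
    """Build (but do not send) a GET request for one page of search results."""
    params = urllib.parse.urlencode({"query": query, "page": page})
    return urllib.request.Request(
        f"{API_URL}?{params}",
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
            "Accept": "application/json",
        },
    )


def fetch_products(request, timeout=10):
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


request = build_request("vegetables", api_key="YOUR_API_KEY")
```

Separating request construction from sending makes it easy to inspect the URL and headers before any network traffic happens.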

Ethical Considerations and Best Practices

Comply with Terms of Service

Always review and comply with the terms of service of the websites you are scraping. Unauthorized scraping can lead to legal consequences.

Rate Limiting

Implement rate limiting to avoid overwhelming the server with too many requests in a short period.

    import time

    time.sleep(2)  # Wait for 2 seconds before making the next request
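A fixed sleep always waits the full delay, even when the previous request itself took a while. One possible refinement, sketched here with a made-up `Throttle` class, is to wait only for whatever part of the interval has not already elapsed.

```python
import time


class Throttle:
    """Enforce a minimum number of seconds between successive calls to wait()."""

    def __init__(self, min_interval):
        self.min_interval = min_interval
        self._last = None  # time of the previous call, None before the first one

    def wait(self):
        now = time.monotonic()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                time.sleep(remaining)  # sleep only for the time still owed
        self._last = time.monotonic()


throttle = Throttle(min_interval=2.0)
# Call throttle.wait() immediately before each request in your scraping loop.
```

The first call returns immediately, and each later call blocks just long enough to keep requests at least two seconds apart.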

Data Storage and Management

Store the scraped data securely and manage it effectively for analysis. Regularly update your datasets to ensure you have the most current information.

Web Scraping Services

For those who prefer not to handle the technicalities, there are web scraping services available that offer customized solutions for data extraction needs. These services ensure compliance with legal and ethical standards and provide reliable data for your business.

Conclusion

Scraping data from quick commerce platforms like Zepto and Blinkit can provide valuable insights for market research, price comparison, and customer analysis. By using tools such as Selenium, BeautifulSoup, and Requests, you can effectively perform Blinkit Q-commerce data scraping and Zepto Q-commerce data scraping. Remember to follow ethical guidelines, comply with terms of service, and use best practices to ensure successful data extraction.

Leverage the power of quick commerce data collection with Real Data API to stay competitive and make informed business decisions. Contact us for professional web scraping services tailored to your needs!

Know More: https://www.realdataapi.com/quick-commerce-data-scraping-platforms-such-as-zepto-and-blinkit.php

#BlinkitQcommerceDataScraping#BlinkitQcommerceDataScraper#ZeptoQcommerceDataScraper#ZeptoQcommerceDataScraping#BlinkitQcommerceDataCollection#ZeptoQcommerceDataCollection#QuickCommerceDataCollection#QuickCommerceDataScraping#BlinkitQcommerceScrapingAPI#ZeptoQcommerceScrapingAPI


How Can Python and BeautifulSoup Help Scraping Wayfair Product Data

E-commerce data scraping is collecting product information, pricing data, customer reviews, and other relevant data from e-commerce websites. This data is valuable for market research, price comparison, trend analysis, and other business purposes. One notable example of e-commerce data scraping is Wayfair product data scraping.

Wayfair, a leading e-commerce company specializing in home furnishings and decor, offers various products from suppliers. Scraping Wayfair product data can provide valuable insights into market trends, competitor pricing strategies, and customer preferences. By extracting data such as product descriptions, prices, customer reviews, and ratings, businesses can gain a competitive edge in the e-commerce landscape. It enables businesses to make informed decisions, optimize pricing strategies, and enhance customer experience.

Significance of E-commerce Data Scraping

E-commerce data scraping offers valuable insights for businesses, including pricing analysis, trend identification, and understanding customer behavior, aiding decision-making.

Dynamic Pricing: E-commerce data scraping services enable businesses to implement dynamic pricing strategies by monitoring competitor prices, market demand, and other factors in real-time. This helps businesses adjust their prices to maximize profits and stay competitive.

Product Availability: By scraping e-commerce websites, businesses can track product availability and ensure that they have sufficient stock to meet customer demand. It helps reduce the risk of stockouts and lost sales.

Trend Analysis: An e-commerce data scraper provides businesses with valuable insights into consumer trends and preferences. By analyzing data on popular products, search terms, and purchasing behavior, businesses can identify emerging trends and adjust their product offerings.

Customer Segmentation: Wayfair data scraping allows businesses to segment their customers based on demographics, behavior, and preferences. This information can be used to personalize marketing campaigns and improve customer engagement.

Fraud Detection: Wayfair data scraping services can help businesses detect fraudulent activities such as fake reviews, account takeovers, and payment fraud. By analyzing data patterns, businesses can identify and mitigate potential threats.

SEO Optimization: A Wayfair data scraper helps gather data on keyword rankings, backlinks, and other SEO metrics. This information can help businesses optimize their websites for search engines and improve their visibility online.

Compliance Monitoring: E-commerce data scraping can help businesses monitor compliance with regulations such as pricing policies, product labeling requirements, and data protection laws. It can help businesses avoid legal issues and maintain a positive reputation.

Importance of Python & BeautifulSoup to Extract Wayfair Product Data

Python and BeautifulSoup are crucial tools for extracting product data from Wayfair and other e-commerce platforms. Python's versatility and readability make it an ideal language for web scraping tasks, allowing developers to write scripts that automate extracting data from web pages.

BeautifulSoup, a Python library, simplifies the process of parsing HTML and XML documents, making it easier to navigate the structure of web pages and extract specific information. By combining Python with BeautifulSoup, developers can create powerful tools to extract product data such as prices, descriptions, and customer reviews from Wayfair's website.

The extracted data can be used for various purposes, including market research, price monitoring, and competitor analysis. This data is invaluable for businesses as it provides insights into market trends, customer preferences, and competitor strategies. Python and BeautifulSoup play a vital role in extracting and analyzing e-commerce data, helping businesses make informed decisions and stay competitive in the online marketplace.

Steps to Scrape Wayfair Product Data Using Python & Beautiful Soup

Scraping Wayfair product data using Python and BeautifulSoup involves several steps:

Install Required Libraries: First, install Python on your system. You'll also need to install the BeautifulSoup library using pip:

    pip install beautifulsoup4

Parse HTML Content: Use BeautifulSoup to parse the HTML content of the page and create a BeautifulSoup object:

    from bs4 import BeautifulSoup

    soup = BeautifulSoup(response.content, 'html.parser')

Find Product Elements: Use BeautifulSoup's find() or find_all() methods to locate the HTML elements that contain the product data you want to scrape. Inspect the Wayfair website to identify the specific HTML elements (e.g., div, span, class names) that contain the product information.

    products = soup.find_all('div', class_='product')

Extract Data: Iterate over the product elements and extract the relevant data (e.g., product name, price, description) from each element:

Run the Script: Execute your Python script to scrape Wayfair product data. Make sure to handle pagination and any other complexities present on the website.
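The code for the Extract Data step is not shown above. To keep the sketch below free of extra installs, it swaps in the standard library's html.parser in place of BeautifulSoup, so it is not the BeautifulSoup approach this post describes. The class names `product-name` and `product-price`, the `ProductParser` class, and the sample HTML are hypothetical; Wayfair's real markup will differ. With BeautifulSoup, the equivalent would be a loop over `products` calling something like `product.find('h2', class_='product-name').get_text(strip=True)`.

```python
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Collect name and price text from each <div class="product"> block."""

    FIELDS = {"product-name": "name", "product-price": "price"}  # hypothetical class names

    def __init__(self):
        super().__init__()
        self.products = []
        self._depth = 0      # div nesting depth inside the current product, 0 = outside
        self._field = None   # field whose text is currently being read

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if self._depth:
            if tag == "div":
                self._depth += 1
            for cls in classes:
                if cls in self.FIELDS:
                    self._field = self.FIELDS[cls]
        elif tag == "div" and "product" in classes:
            self._depth = 1
            self.products.append({"name": "", "price": ""})

    def handle_endtag(self, tag):
        self._field = None  # any closing tag ends the current field
        if self._depth and tag == "div":
            self._depth -= 1

    def handle_data(self, data):
        if self._depth and self._field:
            self.products[-1][self._field] += data.strip()


sample_html = """
<div class="product"><h2 class="product-name">Oak Side Table</h2><span class="product-price">$129.99</span></div>
<div class="product"><h2 class="product-name">Linen Sofa</h2><span class="product-price">$899.00</span></div>
"""
parser = ProductParser()
parser.feed(sample_html)
```

After `feed()` runs, `parser.products` holds one dictionary per product block, which can then be written to a CSV file or loaded into pandas.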

Remember to respect Wayfair's terms of service and robots.txt file when scraping their website.

Conclusion: Scraping Wayfair product data is a valuable process that provides businesses with critical insights into market trends, pricing strategies, and customer preferences. By extracting and analyzing this data, businesses can make informed decisions, optimize their offerings, and stay competitive in the e-commerce landscape. Additionally, scraping Wayfair product data enables businesses to enhance their marketing strategies, improve customer engagement, and streamline operations. Overall, the ability to scrape Wayfair product data offers businesses a powerful tool for driving growth, increasing efficiency, and staying ahead in an increasingly competitive online marketplace.

Transform your retail operations with Retail Scrape Company's data-driven solutions. Harness real-time data scraping to understand consumer behavior, fine-tune pricing strategies, and outpace competitors. Our services offer comprehensive pricing optimization and strategic decision support. Elevate your business today and unlock maximum profitability. Reach out to us now to revolutionize your retail operations!

Know more:

https://www.retailscrape.com/python-and-beautifulsoup-help-in-scraping-wayfair-product-data.php

#ScrapingWayfairProductData#ScrapeWayfairProductData#ExtractWayfairProductData#WayfairProductDataCollection#WayfairProductDataScraper#WayfairProductDataExrtaction

How to Scrape Swiggy Instamart API to Navigate Grocery Data?

Introduction

In today's digital age, where real-time data reigns supreme, businesses constantly seek ways to gain a competitive edge, particularly in industries undergoing rapid evolution like groceries. Swiggy Instamart, a prominent player in the online grocery delivery sector, provides a valuable resource through its API. This API offers a wealth of information that can be harnessed for market analysis, pricing optimization, and personalized marketing strategies.

Businesses can scrape Swiggy Instamart API to get a wide array of grocery data in real time. This includes product listings, prices, availability, customer reviews, and more. With the ability to access such granular data, companies can gain deeper insights into consumer preferences, market trends, and competitor strategies.

Through Swiggy Instamart API extraction, businesses can build robust grocery data collection systems that continuously gather and update grocery data. This data, when analyzed, can identify emerging trends, optimize pricing strategies, and tailor marketing campaigns to specific customer segments. This not only keeps businesses in the loop but also gives them a competitive edge, inspiring them to strive for continuous improvement.

Furthermore, the use of grocery data scraping tools or custom grocery data scrapers enables businesses to efficiently collect and organize data from the Swiggy Instamart API. This seamless integration into existing data analytics workflows reassures businesses that they can make data-driven decisions without disrupting their operations, helping them to confidently navigate the fiercely competitive grocery industry.

In this guide, we'll explore how to scrape the Swiggy Instamart API to navigate grocery data effectively.

Scrape Swiggy Instamart API Data

Scraping the Swiggy Instamart API involves several vital steps to extract relevant grocery data efficiently. Here's a detailed overview of the process:

Understanding the API: Before diving into scraping, thoroughly understanding the Swiggy Instamart API documentation is essential. This includes familiarizing yourself with API endpoints, authentication methods, and data structures. By understanding the API comprehensively, you'll be better equipped to navigate and extract the desired data effectively.

API Authentication: To scrape Swiggy Instamart API and retrieve data, you'll need to obtain the necessary API credentials, typically an API key and a secret key. These credentials are used to authenticate your requests to the API and ensure secure access to the data. Following the authentication process outlined in the API documentation is crucial to obtaining valid credentials and successfully authenticating your requests.

Making API Requests: Once authenticated, you can begin making API requests to interact with the various endpoints and retrieve grocery data. Use HTTP requests like GET or POST to communicate with the API and specify the parameters needed to retrieve the desired data. For example, you can request product listings, prices, availability, customer reviews, and other relevant information related to grocery items.

You can efficiently extract Swiggy Instamart API data by crafting targeted API requests and parsing the API responses. It's essential to handle the retrieved data appropriately, whether storing it in a database, analyzing it for insights, or integrating it into your applications or systems.
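As a rough sketch of the authentication and request steps, the snippet below builds an authenticated GET request and decodes a JSON response using only Python's standard library. Everything specific here is an assumption for illustration: the base URL, the products endpoint, the query parameters, and the Bearer-token header are hypothetical and should be replaced with whatever the API documentation you are working from specifies.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

# Hypothetical values: replace with what your API documentation specifies.
BASE_URL = "https://api.example.com/instamart/v1"
API_KEY = "your-api-key"


def build_request(endpoint, params, api_key=API_KEY):
    """Build (but do not send) an authenticated GET request."""
    url = f"{BASE_URL}/{endpoint}?{urlencode(params)}"
    headers = {
        "Authorization": f"Bearer {api_key}",  # assumed auth scheme
        "Accept": "application/json",
    }
    return Request(url, headers=headers, method="GET")


def parse_response(body):
    """Decode a JSON response body (bytes) into Python objects."""
    return json.loads(body.decode("utf-8"))


request = build_request("products", {"category": "dairy", "page": 1})
print(request.full_url)
```

To actually send the request you would pass it to urllib.request.urlopen and hand the bytes it returns to parse_response. Keeping request construction separate from sending makes it easy to check the URL and headers before touching the network.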

List of Data Fields

When you extract data from the Swiggy Instamart API, a variety of fields are available that together give comprehensive insight into the grocery items on the platform. These data fields include:

Product Name: The name or title of the grocery item.

Description: A brief product description highlighting its features or ingredients.

Price: The current price of the grocery item.

Availability: Indicates whether the item is currently in or out of stock.

Brand: The brand or manufacturer of the product.

Category: The category or department to which the product belongs (e.g., fruits, vegetables, dairy).

Customer Ratings: Ratings or reviews provided by customers who have purchased the product.

Nutritional Information: Information about the nutritional content of food items, including calories, fat, protein, etc.

Packaging Details: Details about the product's packaging, such as size or quantity.

Delivery Options: Information about delivery options available for the product, including delivery timeframes and fees.

By scraping these Swiggy Instamart API data fields, businesses can gain valuable insights into the range of grocery items, prices, availability, and customer satisfaction levels. This information can be used to optimize inventory management, pricing strategies, and marketing efforts, ultimately enhancing the overall shopping experience for customers.
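One way to keep these fields organized once they are retrieved is a small record type. The sketch below covers a subset of the fields listed above; the class name, the attribute names, and the keys of the raw record (name, price, available, and so on) are assumptions made for this example, not the API's real response format.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GroceryItem:
    name: str
    price: float
    in_stock: bool
    brand: Optional[str] = None
    category: Optional[str] = None
    rating: Optional[float] = None


def item_from_record(record):
    """Convert one raw record (a dict) into a GroceryItem.

    The keys used here are assumed for illustration.
    """
    return GroceryItem(
        name=record["name"],
        price=float(record["price"]),
        in_stock=bool(record.get("available", False)),
        brand=record.get("brand"),
        category=record.get("category"),
        rating=record.get("rating"),
    )


raw = {"name": "Whole Milk 1L", "price": "62.00", "available": True, "category": "dairy"}
item = item_from_record(raw)
print(item)
```

Required fields are read with square brackets so a missing name or price fails loudly, while optional fields fall back to None.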

Use Cases of Grocery Data Scraping

Grocery data scraping, particularly from platforms like Swiggy Instamart API, offers a multitude of valuable use cases that can be tailored to the specific needs of businesses operating in the grocery industry. Here are some compelling applications:

Market Analysis: By scraping grocery data from Swiggy Instamart API, businesses can conduct comprehensive market analysis. They can analyze product trends, consumer preferences, and demand patterns. This insight enables businesses to tailor their product offerings and marketing strategies to meet customers' evolving needs.

Pricing Optimization: Grocery data scraping allows businesses to monitor pricing trends and optimize pricing strategies. Companies can adjust their prices dynamically by analyzing competitor pricing and market demand to remain competitive while maximizing profitability.

Inventory Management: Scraping grocery data helps businesses manage their inventory effectively. By monitoring product availability and demand in real time, companies can ensure adequate stock levels to meet customer demand while minimizing excess inventory and stockouts.

Product Assortment Planning: When businesses scrape grocery data from the Swiggy Instamart API, they can make informed decisions about their product assortment. They can identify popular products, explore new trends, and introduce new products to cater to customer preferences effectively.

Personalized Marketing: Grocery data scraping enables businesses to personalize their marketing efforts based on customer behavior and preferences. Companies can send targeted promotions and recommendations by analyzing purchase history and browsing patterns, enhancing customer engagement and loyalty.

Competitor Analysis: By scraping grocery data from competitors on platforms like Swiggy Instamart API, businesses can gain insights into competitor strategies. They can analyze product offerings, pricing strategies, and promotional activities to identify strengths, weaknesses, and opportunities for differentiation.

Supply Chain Optimization: Scraping grocery data facilitates supply chain optimization. By monitoring supplier performance, delivery times, and inventory turnover, businesses can streamline their supply chain processes, reduce costs, and improve overall efficiency.

Grocery data scraping from platforms like Swiggy Instamart API offers numerous benefits and use cases for businesses in the grocery industry. From market analysis and pricing optimization to inventory management and personalized marketing, the insights derived from scraped grocery data can drive business growth efficiently in a competitive market.

Python Code for Swiggy Instamart API Scraping
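What follows is a minimal sketch of what such a script might look like, not an official or verified client. It shows the collection loop only: it walks through numbered pages until one comes back empty and writes the results to CSV. The fetch_page callable, the page-numbering scheme, and the field names are assumptions; here a fake fetcher with made-up data stands in for the real API call so the example runs offline.

```python
import csv
import io


def collect_items(fetch_page, max_pages=50):
    """Call fetch_page(1), fetch_page(2), ... until a page comes back empty."""
    items = []
    for page in range(1, max_pages + 1):
        batch = fetch_page(page)
        if not batch:
            break
        items.extend(batch)
    return items


def to_csv(items, fieldnames):
    """Serialize a list of dicts to CSV text, ignoring unexpected keys."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(items)
    return buffer.getvalue()


# Stand-in for a real API call: two pages of made-up data, then an empty page.
FAKE_PAGES = {
    1: [{"name": "Whole Milk 1L", "price": 62.0, "available": True}],
    2: [{"name": "Brown Bread", "price": 45.0, "available": False}],
}


def fake_fetch_page(page):
    return FAKE_PAGES.get(page, [])


items = collect_items(fake_fetch_page)
print(to_csv(items, ["name", "price", "available"]))
```

In a real script, fake_fetch_page would be replaced by a function that sends an authenticated request and returns the list of items from the response. The max_pages cap is a safety net so a misbehaving endpoint cannot keep the loop running forever.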

Conclusion

By leveraging Swiggy Instamart API scraping, Actowiz Solutions can access valuable grocery data insights for market analysis, pricing optimization, inventory management, personalized marketing, and supply chain optimization. Harnessing the power of Python and Swiggy Instamart API scraping enables businesses to make informed, data-driven decisions, gaining a competitive edge in the dynamic grocery industry. Start scraping today with Actowiz Solutions to unlock the full potential of grocery data! You can also reach us for all your mobile app scraping, instant data scraper and web scraping service requirements.

#ScrapeSwiggyInstamartAPI#SwiggyInstamartAPIextraction#SwiggyInstamartAPIScraping#ScrapeGroceryData#GroceryDataScraping#SwiggyInstamartAPIExtraction

Exploring Descriptive, Predictive, and Prescriptive Analytics

In today's data-driven world, businesses are always looking for insights to make informed decisions and gain a competitive edge. Data analysts are essential in this process, using various analytical techniques to extract valuable insights from data. A fundamental distinction in data analytics is between descriptive, predictive, and prescriptive analytics. In this blog post, we'll explore each of these types of analytics and their significance in data analysis, while also emphasizing the importance of enrolling in a Data Analyst Training Course to master these skills.

A Data Analyst Training Course is designed to equip aspiring analysts with the necessary skills to navigate the complex landscape of data analytics. Before delving into the specifics of the course, it's essential to understand the three main types of analytics: descriptive, predictive, and prescriptive.

Descriptive Analytics

Descriptive analytics involves analyzing historical data to gain insights into past events and understand patterns and trends. It focuses on answering the question "What happened?" by summarizing and aggregating data to provide a comprehensive overview. Data Analyst Training emphasizes the importance of descriptive analytics as it forms the foundation for more advanced analytical techniques.
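To make "summarizing and aggregating" concrete, here is a small sketch using Python's standard library. The sales records are made up for illustration; the point is only to show the kind of summary descriptive analytics produces.

```python
from collections import defaultdict
from statistics import mean, median

# Made-up daily sales records: (region, units sold)
sales = [
    ("north", 120),
    ("south", 95),
    ("north", 140),
    ("south", 105),
    ("north", 100),
]

units = [u for _, u in sales]

# Aggregate units sold per region.
totals = defaultdict(int)
for region, u in sales:
    totals[region] += u

summary = {
    "mean_units": mean(units),
    "median_units": median(units),
    "total_by_region": dict(totals),
}
print(summary)
```

Nothing here predicts or recommends anything; it simply describes what already happened, which is the starting point for the other two types of analytics.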

Predictive Analytics

Predictive analytics, as the name suggests, is concerned with forecasting future outcomes based on historical data and statistical algorithms. It leverages techniques such as regression analysis and machine learning to make predictions and identify potential trends. A Data Analyst Certification Course equips students with the necessary skills to build predictive models and interpret their results accurately.
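As a simple illustration of regression analysis, the sketch below fits a straight line to a short, made-up sales history using ordinary least squares and extends it one month ahead. Real predictive models are usually more involved; this only shows the basic idea of learning a trend from past data and projecting it forward.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up history: month number -> units sold
months = [1, 2, 3, 4, 5]
units = [100, 110, 125, 130, 145]

slope, intercept = fit_line(months, units)
forecast_month_6 = slope * 6 + intercept
print(round(forecast_month_6, 1))
```

The slope is the estimated change in units per month, and the forecast is just the fitted line evaluated at the next month.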

Prescriptive Analytics

Prescriptive analytics goes beyond descriptive and predictive analytics by not only predicting future outcomes but also recommending actions to optimize those outcomes. It takes into account various constraints and objectives to provide actionable insights. A Data Analyst Online Course delves into prescriptive analytics techniques such as optimization and simulation, empowering analysts to make data-driven decisions that drive business success.
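A toy example of optimization under a constraint: given a hypothetical demand model, pick the price that maximizes expected profit while still selling a minimum number of units. The demand formula, the candidate prices, and the cost are all invented for illustration.

```python
def expected_units(price):
    """Hypothetical linear demand model: higher price, fewer units sold."""
    return max(0, 500 - 20 * price)


def best_price(candidates, unit_cost, min_units):
    """Return the candidate price with the highest expected profit among
    those that still sell at least min_units, or None if none qualify."""
    feasible = [p for p in candidates if expected_units(p) >= min_units]
    if not feasible:
        return None
    return max(feasible, key=lambda p: (p - unit_cost) * expected_units(p))


prices = [10, 12, 14, 16, 18, 20]
choice = best_price(prices, unit_cost=8, min_units=200)
print(choice)
```

The prediction (expected_units) feeds the recommendation (best_price). Changing the min_units constraint can change the recommended price even though the demand model stays the same, which is what separates prescriptive from predictive analytics.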

The Importance of Data Analyst Training

Enrolling in a Data Analyst Training Course is essential for individuals looking to pursue a career in data analytics. The course covers a wide range of topics, including data visualization, statistical analysis, and machine learning, providing students with a comprehensive understanding of the field. Moreover, a Data Analyst Training Course often includes hands-on projects and real-world case studies, allowing students to apply their knowledge in practical settings.

Real-World Applications

Understanding the practical applications of descriptive, predictive, and prescriptive analytics is crucial for data analysts. These techniques are widely used across various industries, including finance, healthcare, marketing, and manufacturing. For example, in finance, predictive analytics is used to forecast stock prices, while prescriptive analytics helps optimize investment portfolios. A Data Analyst Course often includes industry-specific case studies, giving students insight into how analytics techniques are applied in real-world scenarios.

Continuous Learning and Adaptation

Data analytics is a rapidly evolving field, with new techniques and technologies emerging constantly. Therefore, continuous learning and adaptation are essential for data analysts to stay abreast of the latest developments. A Data Analyst Training Course provides students with a solid foundation in analytics principles and techniques, but it's equally important for analysts to stay curious and proactive in exploring new tools and methodologies.