
Fandom Userscript Cookbook: Five Projects to Get Your Feet Wet

Target audience: This post is dedicated, with love, to all novice, aspiring, occasional, or thwarted coders in fandom. If you did a code bootcamp once and don’t know where to start applying your new skillz, this is for you. If you're pretty good with HTML and CSS but the W3Schools Javascript tutorials have you feeling out of your depth, this is for you. If you can do neat things in Python but don’t know a good entry point for web programming, this is for you. Seasoned programmers looking for small, fun, low-investment hobby projects with useful end results are also welcome to raid this post for ideas.

You will need:

The Tampermonkey browser extension to run and edit userscripts

A handful of example userscripts from greasyfork.org. Just pick a few that look nifty and install them. AO3 Savior is a solid starting point for fandom tinkering.

Your browser dev tools. Hit F12 or right click > Inspect Element to find the stuff on the page you want to tweak and experiment with it. Move over to the Console tab once you’ve got code to test out and debug.

Javascript references and tutorials. W3Schools has loads of both. Mozilla’s JS documentation is top-notch, and I often just keep their reference lists of built-in String and Array functions open in tabs as I code. StackOverflow is useful for questions, but don’t assume the code snippets you find there are always reliable or copypastable.

That’s it. No development environment. No installing node.js or Ruby or Java or two different versions of Python. No build tools, no dependency management, no fucking Docker containers. No command line, even. Just a browser extension, the browser’s built-in dev tools, and reference material. Let’s go.

You might also want:

jQuery and its documentation. If you’re wrestling with a mess of generic spans and divs and sparse, unhelpful use of classes, jQuery selectors are your best bet for finding the element you want before you snap and go on a murderous rampage. jQuery also happens to be the most ubiquitous JS library out there, the essential Swiss army knife for working with Javascript’s... quirks, so experience with it is useful. It gets a bad rap because trying to build a whole house with a Swiss army knife is a fool’s errand, but it’s excellent for the stuff we're about to do.

Git or other source control, if you’ve already got it set up. By all means share your work on Github. Greasy Fork can publish a userscript from a Github repo. It can also publish a userscript from an uploaded text file or some code you pasted into the upload form, so don’t stress about it if you’re using a more informal process.

A text editor. Yes, seriously, this is optional. It’s a question of whether you’d rather code everything right there in Tampermonkey’s live editor, or keep a separate copy to paste into Tampermonkey’s live editor for testing. Are you feeling lucky, punk?

Project #1: Hack on an existing userscript

Install some nifty-looking scripts for websites you visit regularly. Use them. Ponder small additions that would make them even niftier. Take a look at their code in the Tampermonkey editor. (Dashboard > click on the script name.) Try to figure out what each bit is doing.

Then change something, hit save, and refresh the page.

Break it. Make it select the wrong element on the page to modify. Make it blow up with a huge pile of console errors. Add a console.log("I’m a teapot"); in the middle of a loop so it prints fifty times. Savor your power to make the background wizardry of the internet do incredibly dumb shit.

Then try a small improvement. It will probably break again. That's why you've got the live editor and the console, baby--poke it, prod it, and make it log everything it's doing until you've made it work.

Suggested bells and whistles to make the already-excellent AO3 Savior script even fancier:

Enable wildcards on a field that currently requires an exact match. Surely there’s at least one song lyric or Richard Siken quote you never want to see in any part of a fic title ever again, right?

Add some text to the placeholder message. Give it a pretty background color. Change the amount of space it takes up on the page.

Blacklist any work with more than 10 fandoms listed. Then add a line to the AO3 Savior Config script to make the number customizable.

Add a global blacklist of terms that will get a work hidden no matter what field they're in.

Add a list of blacklisted tag combinations. Like "I'm okay with some coffee shop AUs, but the ones that are also tagged as fluff don't interest me, please hide them." Or "Character A/Character B is cute but I don't want to read PWP about them."

Anything else you think of!
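To make a couple of those ideas concrete, here's a rough sketch of wildcard matching and tag-combination blacklisting as plain functions. None of these names come from AO3 Savior itself; `wildcardToRegExp`, `matchesAny`, `hasBlacklistedCombo`, and the sample tags are all made up for illustration, and you'd still have to wire them into wherever the real script reads a work's title and tags.

```javascript
// Hypothetical helpers, not part of AO3 Savior.

// Turn a pattern like "*coffee shop*" into a case-insensitive RegExp
// where "*" matches any run of characters and everything else is literal.
function wildcardToRegExp(pattern) {
  const escaped = pattern
    .split('*')
    .map((part) => part.replace(/[.*+?^${}()|[\]\\]/g, '\\$&'))
    .join('.*');
  return new RegExp('^' + escaped + '$', 'i');
}

// True if the text matches at least one wildcard pattern.
function matchesAny(text, patterns) {
  return patterns.some((pattern) => wildcardToRegExp(pattern).test(text));
}

// True if, for at least one combination, every pattern in that
// combination is matched by some tag on the work.
function hasBlacklistedCombo(workTags, combos) {
  return combos.some((combo) =>
    combo.every((pattern) =>
      workTags.some((tag) => wildcardToRegExp(pattern).test(tag))
    )
  );
}

// Sample data (invented): hide coffee shop AUs only when they're also fluff.
const combos = [
  ['*coffee shop*', 'fluff'],
  ['Character A/Character B', 'PWP'],
];
const sampleTags = ['Alternate Universe - Coffee Shops & Cafés', 'Fluff'];
console.log('Hide this work?', hasBlacklistedCombo(sampleTags, combos));
```

Patterns without a `*` still require an exact (case-insensitive) match, so existing exact-match lists would keep behaving the same way.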

Project #2: Good Artists Borrow, Great Artists Fork (DIY blacklisting)

Looking at existing scripts as a model for the boilerplate you'll need, create a script that runs on a site you use regularly that doesn't already have a blacklisting/filtering feature. If you can't think of one, Dreamwidth comments make a good guinea pig. (There's a blacklist script for them out there, but reinventing wheels for fun is how you learn, right? ...right?) Create a simple blacklisting script of your own for that site.

Start small for the site-specific HTML wrangling. Take an array of blacklisted keywords and log any chunk of post/comment text that contains one of them.

Then try to make the post/comment it belongs to disappear.

Then add a placeholder.

Then get fancy with whitelists and matching metadata like usernames/titles/tags as well.
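The first three steps above could look something like this. It's a minimal sketch, not a finished script: the `.comment` selector is a guess (use Inspect Element to find what the site really uses), the keywords are placeholders, and `findBlacklisted` and `hideWithPlaceholder` are names invented here.

```javascript
// ==UserScript==
// @name         Tiny comment blacklist (hypothetical example)
// @namespace    https://example.com/userscripts
// @version      0.1
// @description  Hides comments that contain blacklisted keywords
// @match        https://*.dreamwidth.org/*
// @grant        none
// ==/UserScript==

const BLACKLIST = ['spoilers', 'pineapple pizza']; // placeholder keywords

// Return the first blacklisted keyword found in the text, or null.
function findBlacklisted(text, keywords) {
  const haystack = text.toLowerCase();
  const hit = keywords.find((keyword) => haystack.includes(keyword.toLowerCase()));
  return hit === undefined ? null : hit;
}

// Hide an element and put a click-to-show placeholder in front of it.
function hideWithPlaceholder(element, keyword) {
  const placeholder = document.createElement('div');
  placeholder.textContent = 'Hidden: matched "' + keyword + '". Click to show.';
  placeholder.style.cursor = 'pointer';
  placeholder.addEventListener('click', () => {
    element.style.display = '';
    placeholder.remove();
  });
  element.style.display = 'none';
  element.parentNode.insertBefore(placeholder, element);
}

// Only touch the page when there is one (i.e. when running in a browser).
if (typeof document !== 'undefined') {
  // ASSUMPTION: '.comment' is a guessed selector, not checked against the site.
  document.querySelectorAll('.comment').forEach((comment) => {
    const keyword = findBlacklisted(comment.textContent, BLACKLIST);
    if (keyword !== null) {
      console.log('Blacklisted:', keyword, comment.textContent.slice(0, 80));
      hideWithPlaceholder(comment, keyword);
    }
  });
}
```

Keeping the matching in its own function means you can poke at it from the console without reloading the page.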

Crib from existing blacklist scripts like AO3 Savior as shamelessly as you feel the need to. If you publish the resulting userscript for others to install (which you should, if it fills an unmet need!), please comment up any substantial chunks of copypasted or closely-reproduced code with credit/a link to the original. If your script basically is the original with some key changes, like our extra-fancy AO3 Savior above, see if there’s a public Git repo you can fork.

Project #3: Make the dread Tumblr beast do a thing

Create a small script that runs on the Tumblr dashboard. Make it find all the posts on the page and log their IDs. Then log whether they're originals or reblogs. Then add a fancy border to the originals. Then add a different fancy border to your own posts. All of this data should be right there in the post HTML, so no need to derive it by looking for "x reblogged y" or source links or whatever--just make liberal use of Inspect Element and the post's data- attributes.
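Here's one way the sorting logic might be sketched. Big caveat: the attribute names (`data-post-id`, `data-blog-name`, `data-is-reblog`) are placeholders made up for this example, not Tumblr's real markup, so swap in whatever Inspect Element actually shows you. `classifyPost`, `BORDERS`, and `'my-blog-name'` are likewise hypothetical.

```javascript
// Border styles for the kinds of post we want to decorate.
const BORDERS = {
  original: '3px solid gold',
  mine: '3px dashed teal',
};

// Decide what kind of post this is from its data- attributes.
// `data` is shaped like element.dataset: every value is a string.
// ASSUMPTION: blogName and isReblog are invented attribute names.
function classifyPost(data, myBlogName) {
  if (data.blogName === myBlogName) return 'mine';
  return data.isReblog === 'true' ? 'reblog' : 'original';
}

// Only touch the page when running in a browser.
if (typeof document !== 'undefined') {
  // ASSUMPTION: posts can be found by a data-post-id attribute.
  document.querySelectorAll('[data-post-id]').forEach((post) => {
    const kind = classifyPost(post.dataset, 'my-blog-name');
    console.log(post.dataset.postId, kind);
    if (BORDERS[kind]) {
      post.style.border = BORDERS[kind];
    }
  });
}
```

Note that `element.dataset` turns `data-is-reblog` into `isReblog`, and everything in it is a string, which is why the check is against `'true'` rather than `true`.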

Extra credit: Explore the wildly variable messes that Tumblr's API spews out, and try to recreate XKit's timestamps feature with jQuery AJAX calls. (Post timestamps are one of the few reliable API data points.) Get a zillion bright ideas about what else you could do with the API data. Go through more actual post data to catalogue all the inconsistencies you’d have to catch. Cry as Tumblr kills the dream you dreamed.

Project #4: Make the dread Tumblr beast FIX a thing

Create a script that runs on individual Tumblr blogs (subdomains of tumblr.com). Browse some blogs with various themes until you've found a post with the upside-down reblog-chain bug and a post with reblogs displaying normally. Note the HTML differences between them. Make the script detect and highlight upside-down stacks of blockquotes. Then see if you can make it extract the blockquotes and reassemble them in the correct order. At this point you may be mobbed by friends and acquaintances who want a fix for this fucking bug, which you can take as an opportunity to bury any lingering doubts about the usefulness of your scripting adventures.

(Note: Upside-down reblogs are the bug du jour as of September 2019. If you stumble upon this post later, please substitute whatever the latest Tumblr fuckery is that you'd like to fix.)

Project #5: Regular expressions are a hard limit

I mentioned up above that Dreamwidth comments are good guinea pigs for user scripting? You know what that means. Kinkmemes. Anon memes too, but kinkmemes (appropriately enough) offer so many opportunities for coding masochism. So here's a little exercise in sadism on my part, for anyone who wants to have fun (or "fun") with regular expressions:

Write a userscript that highlights all the prompts on any given page of a kinkmeme that have been filled.

Specifically, scan all the comment subject lines on the page for anything that looks like the title of a kinkmeme fill, and if you find one, highlight the prompt at the top of its thread. The nice ones will start with "FILL:" or end with "part 1/?" or "3/3 COMPLETE." The less nice ones will be more like "(former) minifill [37a / 50(?)] still haven't thought of a name for this thing" or "title that's just the subject line of the original prompt, Chapter 3." Your job is to catch as many of the weird ones as you can using regular expressions, while keeping false positives to a minimum.

Test it out on a real live kinkmeme, especially one without strict subject-line-formatting policies. I guarantee you, you will be delighted at some of the arcane shit your script manages to catch. And probably astonished at some of the arcane shit you never thought to look for because who the hell would even format a kinkmeme fill like that? Truly, freeform user input is a wonderful and terrible thing.
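As a starting point, here's a small sketch of the subject-line matching only. The patterns are guesses based on the examples above, not a tested ruleset, and `FILL_PATTERNS` and `looksLikeFill` are invented names. Finding the prompt at the top of the thread depends on the page's HTML and is left out.

```javascript
// Each pattern catches one family of fill-looking subject lines.
const FILL_PATTERNS = [
  /\b(?:mini[-\s]?)?fill(?:ed)?\b/i, // "FILL:", "minifill", "Filled"
  /\b\d+[a-z]?\s*\/\s*(?:\d+|\?)/i,  // "1/?", "3/3", "37a / 50"
  /\b(?:part|chapter)\s*\d+/i,       // "part 1", "Chapter 3"
];

// True if a comment subject line looks like the title of a fill.
function looksLikeFill(subject) {
  return FILL_PATTERNS.some((pattern) => pattern.test(subject));
}

// Try it on the examples from this post plus an ordinary subject line.
const subjects = [
  'FILL: Untitled',
  'something something part 1/?',
  'The End 3/3 COMPLETE.',
  "(former) minifill [37a / 50(?)] still haven't thought of a name for this thing",
  "title that's just the subject line of the original prompt, Chapter 3",
  'Re: anyone have a rec?',
];
subjects.forEach((subject) => console.log(looksLikeFill(subject), subject));
```

The numbers pattern will also happily match dates like "10/31", which is exactly the kind of false positive you'll be trading off against the weird formats you want to catch.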

If that's not enough masochism for you, you could always try to make the script work on LiveJournal kinkmemes too!


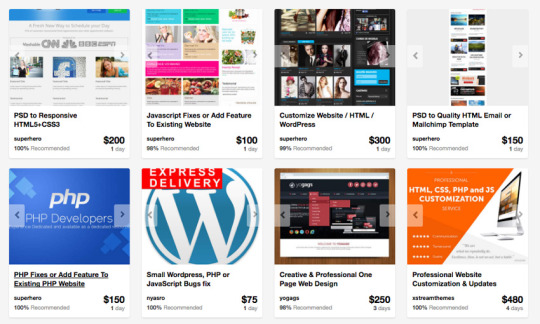

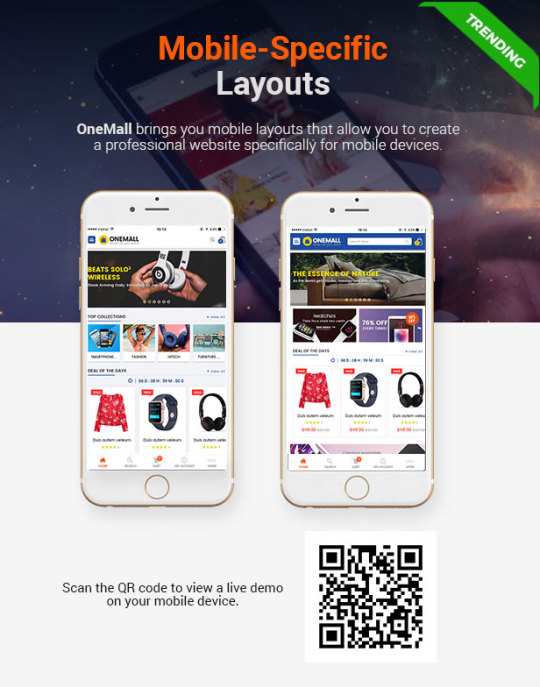

Destino - Advanced WooCommerce WordPress Theme with Mobile-Specific Layouts

New Post has been published on https://click.atak.co/destino-advanced-woocommerce-wordpress-theme-with-mobile-specific-layouts/

DESTINO – HIGHLY CUSTOMIZABLE WOOCOMMERCE WORDPRESS THEME

Latest version 1.4.3: Released on June-09-2018 – See changelogs

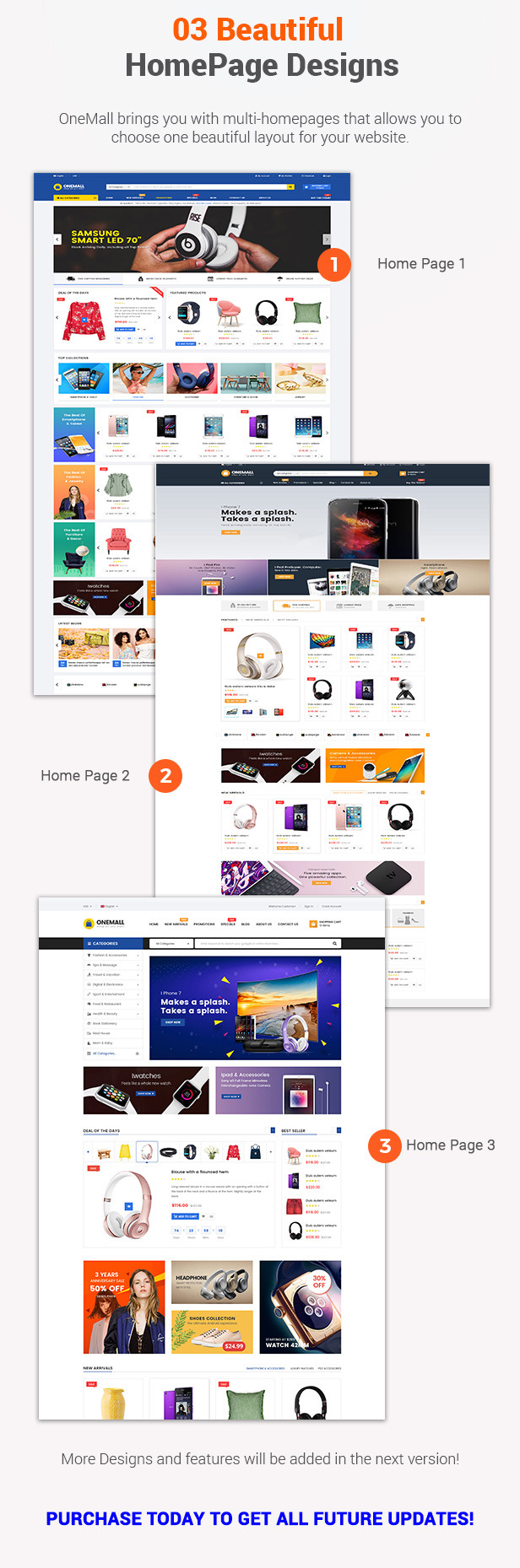

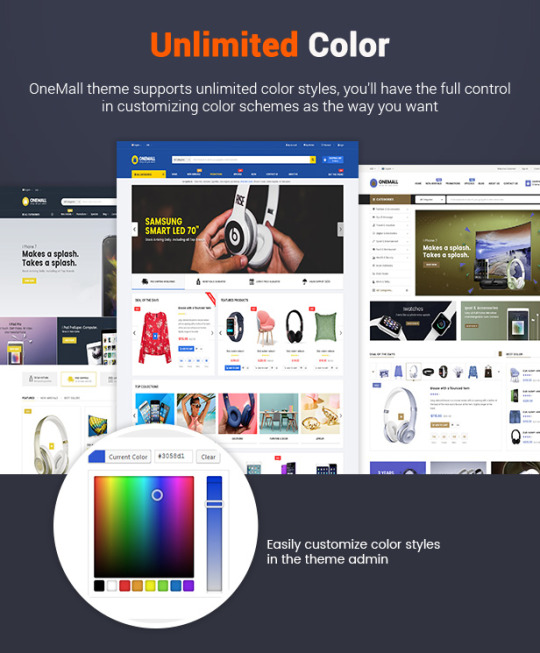

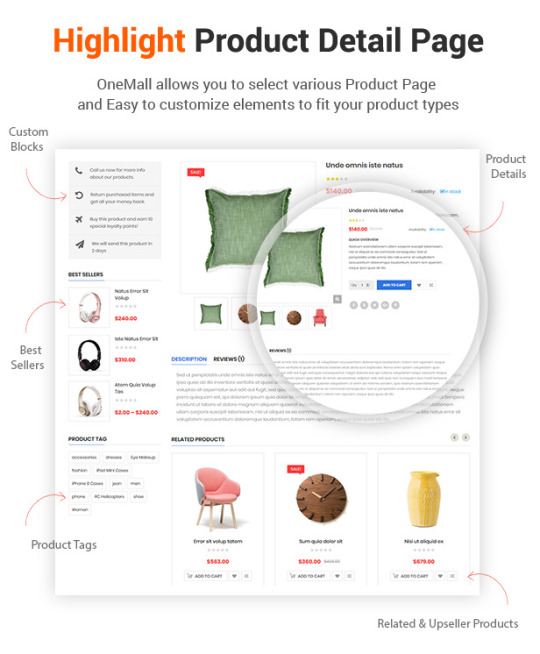

Destino is a well-designed eCommerce WordPress theme that you can use for building efficient online stores. The theme is extremely customizable, with multiple homepage designs, tons of theme options, and lots of eCommerce features that make website development an easy task.

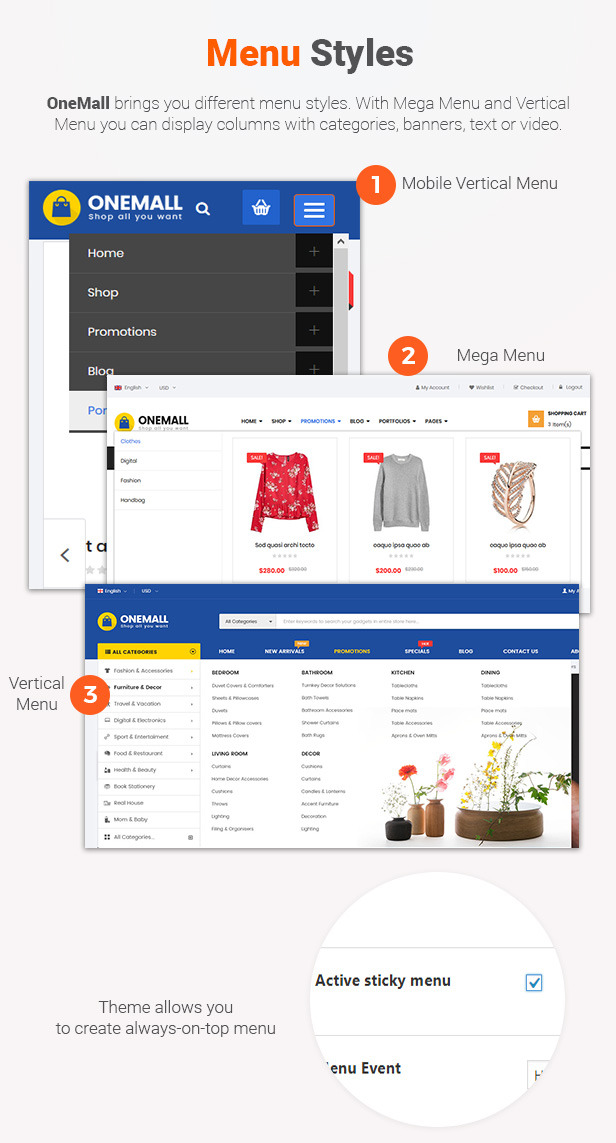

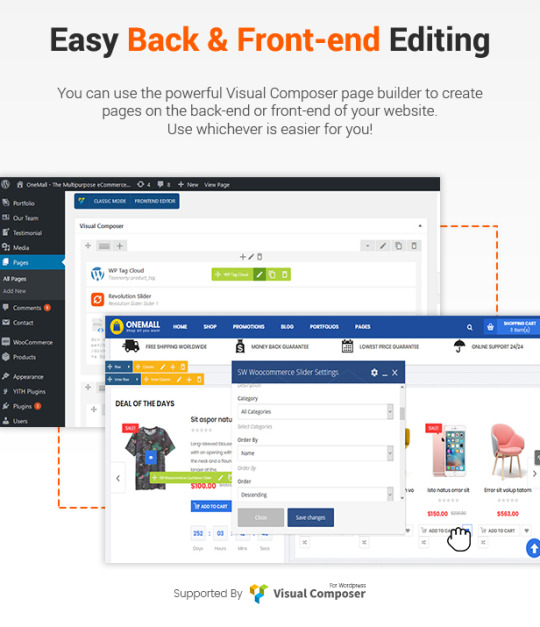

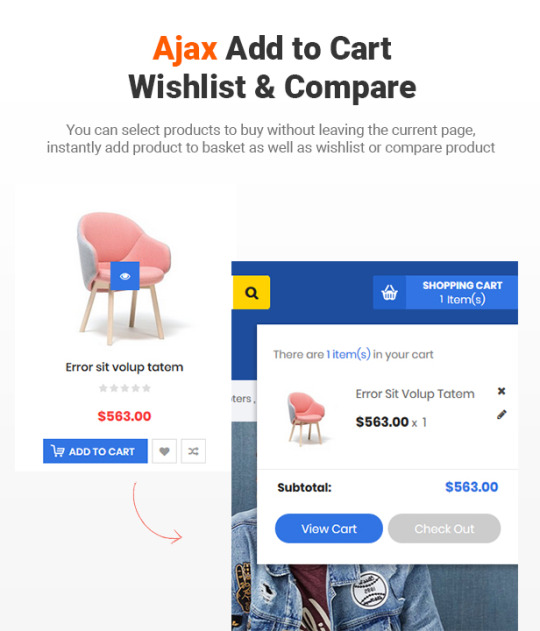

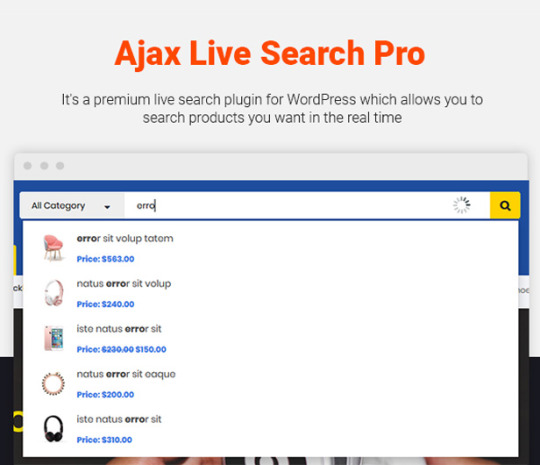

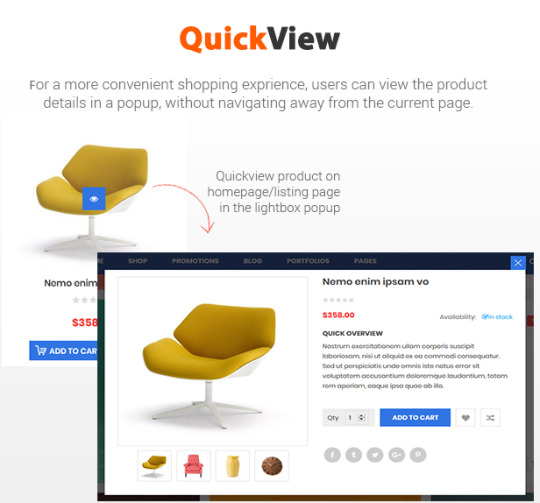

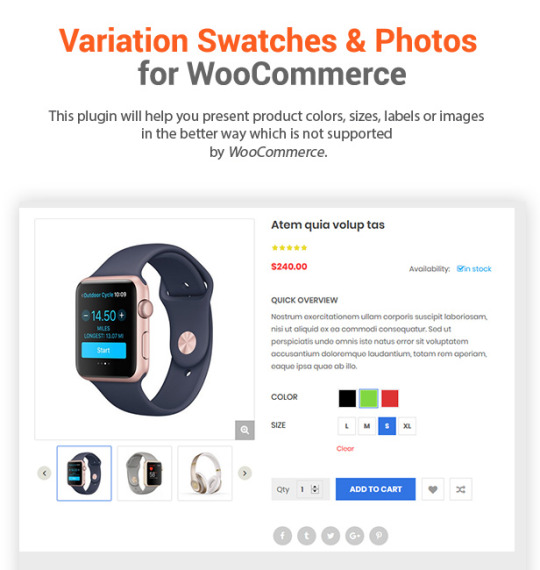

In addition, Destino allows you to build unique and advanced layouts for your posts & pages using the drag-and-drop back-end and front-end editors of Visual Composer. It also brings you a lot of features like RTL Layout Support, Stunning Home slideshow by Revolution Slider, Powerful Mega Menu, Color Swatches, Product Quickview, Featuring Product Page, Ajax Cart, Variation Swatches & Photos, Pro Search Box & others.

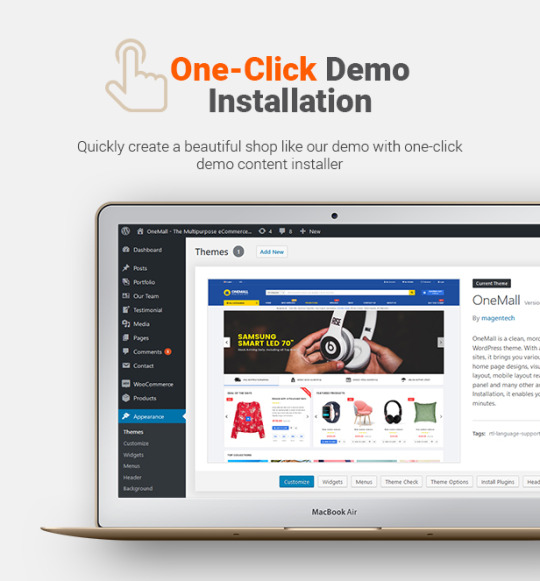

The One-click Demo Installation can import posts, pages, sliders, widgets & data, so you get a complete eCommerce website that looks like the demo in a matter of moments.

It is easy to build any website that works for you. Choose a layout and color scheme, and customize the header with different images or sliders.

Integrated into the theme are powerful premium plugins/addons, including Revolution Slider to create beautiful and smooth sliders, Visual Composer, which allows users to drag and drop elements to create pages/posts with ease, and other amazing addons.

With Mega Menu, you are free to create amazing things with your website. Moreover, you can set your menu to stay always-on-top when scrolling down.

DESTINO BRINGS YOU PREMIUM FEATURES..

Full Feature List

Multi Homepage Layouts

Compatible with WordPress 4.9.x

WooCommerce 3.4.x Ready!

Revolution Slider 5.4.x (advanced page builder)

Visual Composer 5.4.x Ready!

MailChimp For WordPress Version

One Click Import Demo Site – Easiest and fastest way to build your website

Child Theme Included

Compare & Wishlist support

Different Header Styles

Various Shortcodes – Get creative with unlimited combinations of easy-to-use shortcodes and quickly create any type of page you like.

Responsive WordPress Theme

2 Preset Color Styles

Google Fonts Included

Fully integrated with Font Awesome Icon

Built in with HTML5, CSS3 & LESS

Contact Form 7 ready

Easy Customization

Typography – Highly customizable typography settings

Support menu with Mega and dropdown style

Support primary menu location, widget sidebars

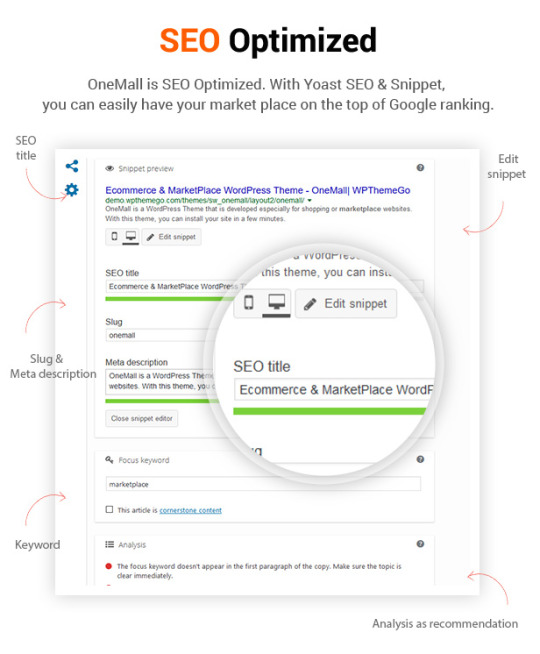

SEO Optimized

Blog Layout Options:

Left Sidebar with Grid view (2-4 columns)

Right Sidebar with Grid view (2-4 columns)

Left Sidebar with List view

Right Sidebar with List view

Post Format Types:

Post Format Image

Post Format Audio

Post Format Gallery

Post Format Video

Multiple portfolio pages: Portfolio Masonry, Portfolio 2-4 columns

Translation – WPML compatible and every line of your content can be translated

RTL Support

Support to add custom CSS/JS

Cross browser compatible – It works fine under modern, major browsers (Firefox, Safari, Chrome, IE10+)

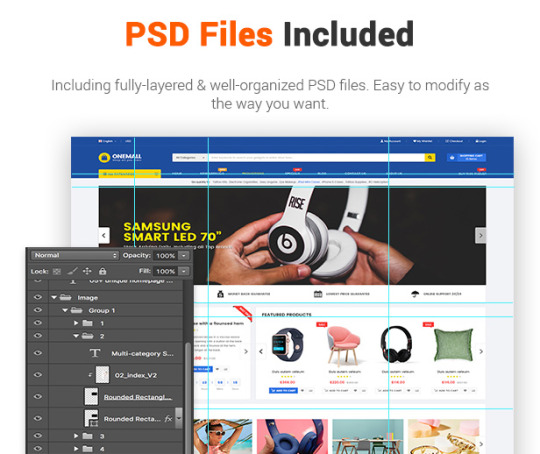

Download PSD files worth $12

Free Lifetime Updates

WHAT IS INCLUDED?

Destino Theme Package

Use this package to install to your current site

PSD Sources

All PSD design themes

Detailed Documentation

Provide all detailed steps to configure theme

Check Out Popular WordPress Themes:

Change Log

------------ VERSION - 1.4.3: Released on Jun-09-2018 ------------ [+] Update WordPress 4.9.6 [+] Update WooCommerce 3.4.2 [+] Update Revolution Slider 5.4.7.4

------------ VERSION - 1.4.2: Released on April-5-2018 ------------ [+] Update WooCommerce 3.3.4 [+] Update Revolution Slider 5.4.7.2 [+] Update Visual Composer 5.4.7

------------ VERSION - 1.4.1: Released on Mar-05-2018 ------------ [+] Update WooCommerce 3.3.3

------------ VERSION - 1.4.0: Released on Feb-13-2018 ------------ [+] Update WooCommerce 3.3.1

------------ VERSION - 1.3.1: Released on October-30-2017 ------------ [+] Update WordPress 4.8.2 [+] Update Visual Composer 5.4.2 [+] Update WooCommerce 3.2.1 [+] Update Revolution Slider 5.4.6.1

------------ VERSION - 1.3.0: Released on August-07-2017 ------------ [+] Update WordPress 4.8.1 [+] Update Visual Composer 5.2.1 [+] New Feature: Variation swatches and images for WooCommerce.

------------ VERSION - 1.2.1: Released on July-14-2017 ------------ [+] Updated WordPress 4.8 [+] Updated WooCommerce 3.1.1 [+] Updated Visual Composer 5.2 [+] Updated Revolution Slider 5.4.5.1 [+] Updated showing SKU, Category and Tag in product detail page

------------ VERSION - 1.2.0: Released on June-02-2017 ------------ [+] Added Mobile layout Style #2

------------ VERSION - 1.1.0: Released on May-24-2017 ------------ [+] Updated Mobile layout Style #1 [+] Updated Featured Video for Product [+] Updated Search Result Layout [+] Updated WordPress 4.7.5 [+] Updated WooCommerce 3.0.7 [+] Updated Revolution 5.4.3.1

------------ VERSION - 1.0.4: Released on May-16-2017 ------------ [+] Added the new homepage #7 (Watch Store) [#] Fixed error : images in the gallery didn't change to match the variation

------------ VERSION - 1.0.3: Released on May-10-2017 ------------ [+] Added the new homepage #6 [+] Updated WooCommerce 3.0.6 [+] Updated revolution slider 5.4.3.1 [+] Updated style for search cate

------------ VERSION - 1.0.2: Released on April-25-2017 ------------ [+] Updated WordPress 4.7.4 [+] Updated WooCommerce 3.0.4 [+] Updated Visual Composer 5.4.3

------------ VERSION - 1.0.1: Released on April-21-2017 ------------ [+] Updated WooCommerce 3.0.4 [+] Updated WordPress 4.7.4

------------ VERSION - 1.0.0: Released on April-17-2017 ------------ [+] Initial Release

Other Versions

BUY From ENVATO Marketplace


An introduction, plus 0-9 review

Part 1: background

The idea behind this blog is to listen to all of the long live emo google drive, a drive containing “emocore” music from the “90’s” (more on this later), and comment on the process. I found this archive while browsing some semi-obscure facebook group dedicated to emo music and decided to listen to all of it. I did this in part because I wanted to learn more about music, in part because it seems interesting to not have as much of a filter when listening to music, as I’d only ever hear the most popular 90’s emocore otherwise, but mostly because why not??

The E-word

(Feel free to skip this section if you already have a solid idea of what the hell “emocore” means)

(The rest of the section is basically just emo 101)

I like trying to categorize things, even though I’m aware that every categorization falls apart when you start really pushing its boundaries. I like strange cultural artifacts. Because of this, I am fascinated by emo. Emo is older than most people expect, with its roots in the 80’s, and it has meant several very different things both to different people and at different points in time. The part of emo that most people are familiar with is the one that was commercially successful maybe 10-15 years ago, and that is not even considered to be “real emo” by most people immersed in the subculture.

Emo started as an outgrowth of hardcore music in the 80’s and was pioneered by bands such as Rites of Spring, Moss Icon and Embrace. At the time it was referred to as “emotional hardcore”, which was later shortened to “emocore” and finally just “emo”. During the 90’s a different style of emo was developed, known as Midwest Emo, which was much closer to alternative rock or indie rock while only maintaining some of its hardcore roots, with bands such as Sunny Day Real Estate, Mineral, The Promise Ring and American Football. During the 00’s there was more of an effort by record companies to make money off emo music, which led to more listenable and widely marketed music being created, often closer to pop-punk and sometimes post-hardcore. Notable examples are The Red Jumpsuit Apparatus, My Chemical Romance, Fall Out Boy, Taking Back Sunday, Brand New and Jimmy Eat World. For a lot of fans of more underground emo, most or all of these bands are considered fake emo. I’m gonna call it scene emo instead because that is a less loaded term. During the late 00’s, the so-called “emo revival” movement began to make more underground music with a more 90’s midwest sound. Some important revival bands are Snowing, The World Is a Beautiful Place and I Am No Longer Afraid to Die, Empire! Empire! (I Was a Lonely Estate) and The Hotelier.

Basically, for some reason people consider Orchid, American Football and Panic! At the Disco to be part of the same genre. To clear up any confusion, I’m using the word “emocore” to indicate that I’m talking about the more traditional “emotional hardcore” definition of emo. Note that the most common definition that you see in emo communities on the internet, or among more recent bands that call themselves emo, is one that includes emocore (although this aspect is somewhat downplayed), 90’s midwest emo, a select few scene emo bands (specifically Brand New, Taking Back Sunday and Jimmy Eat World) as well as emo revival. This is also the kind of emo I am personally most familiar with.

There is however a contingent of people who instead use the emocore definition, which you might recognize if you’ve ever seen the infamous real emo copypasta. The author of the long live emo archive, Лобынцев Артем, also seems to use this definition, although they probably actually know what they’re talking about.

“90’s” “emocore”

So some of the music on this drive isn’t actually from the 90’s; there’s also music from the 80’s and the early 2000’s. I’m calling it 90’s emocore because it paints a better picture than just emocore, even if it technically isn’t true. On a similar note, I’m calling it emocore even though there may very well be music that some genre nerd might argue isn’t actually emocore. As previously discussed, emo is a hopelessly broad term, so I’m using emocore to give people a better idea of what to expect, not because it’s necessarily 100% accurate.

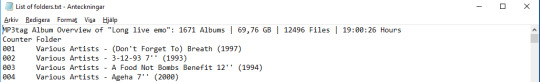

Part 2: The archive

The entire archive is 83.8 gigabytes, which is more than 10% of my hard drive. I’ve spent several hours just downloading files, and I’ve only gotten up to the letter D. In the root of the folder there is a file called “List of folders.txt”. According to it, there are 1671 albums, 69.76 GB (this is presumably just the music without the image files also included? Maybe that’s what I should have called the blog), 12496 songs, and a total running time of 19:00:26. That’s in the format DD:HH:MM by the way.

This is an absurd amount of music. My plan is to try and finish it within one year, or before 2019/7/20. To do that, I would have to listen to an average of 4.6 albums, 34.24 songs or 1.25 hours a day. We’ll see how that goes.

Part 3: The review: 0-9 (finally!)

So this is the format: The drive is divided into folders based on the first character of the band name. Every time I finish one such folder I will post a review like part 3 here (don’t worry, I won’t spend hundreds of words talking about emo every post). I will talk about my favorite artist under that letter, my favorite band name, my favorite image (as I said, there are images included under some of the bands, mostly of their album covers and such) as well as anything else I find interesting. I might shake up the formula a bit as I go, who knows. Before writing this post I’ve listened to the first folder, titled “0-9”. This is one of the smallest folders in the compilation, despite technically containing ten different characters, with only 160 songs. For comparison, the letter A has 831 songs. A careful start in other words.

Here are all the songs, albums and bands I've been listening to: https://pastebin.com/Xm5b4ZN1

My favorite band

125, Rue Montmartre

So after all this talk about emocore I ended up picking maybe the least hardcore band, figures. Commenter Kyle Cornwell on Sophie’s Floorboard says that they’re emocore at least, so we’re still good.

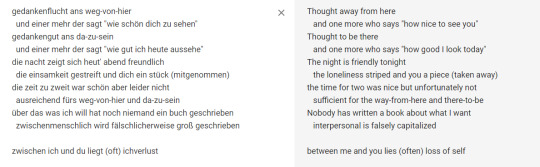

125, Rue Montmartre is a German band named after a street address in Paris. They were active between 1998 and 2000, and they released 1 EP, took part in 1 split with Maggat and appeared on 2 compilations. They have a female singer who not only sings but also speaks and whispers. The guitars mostly play short, catchy riffs or arpeggios with some occasional chords during more explosive parts. The bass is comparatively loud and often contributes to the melody of a given song more than the guitars. Their style is clearly closer to the Midwest style of emo with its arpeggiated guitars, more indie sound and more melancholy mood. Here are the lyrics to their song Disco Hijack along with its English translation, which are very emo:

My favorite band name

30 second motion picture

I didn’t really find any name that was all that interesting under the number category (although I expect to find a lot of good ones down the line), but this is the one that spoke to me the most for whatever reason.

My favorite image

This is from 309 chorus’s 1994 demo.

There’s just something really endearing about this grainy picture of this nerdy-looking dude with arm tattoos playing in front of a sign that says “haymarket collective” and has a fist and a circle E. Makes me happy inside.

Expect a new post in maybe two weeks? I’m not sure exactly how long everything takes yet.


WE ARE THE BEST WEBSITE CONTENT DEVELOPMENT COMPANY IN USA AND NODE JS DEVELOPMENT COMPANY IN USA

As more people use the web regularly, the number of people using it to research products and services continues to rise. This means that a company's website is usually the first thing that a target audience interacts with. Along with other critical factors (such as design), quality content development determines whether prospective clients form a strong first impression of a Website Content Development Company in USA. First impressions are extremely important in this situation.

Understanding the Importance of Content Development:

If users don't like what they see right away on your website, there's a fair chance they'll move on to the next platform that offers similar services and products. Furthermore, it is your role as a supportive, authoritative source in your industry that builds trust with those targeted users, and it is the quality content on your website that attracts them there in the first place. When used correctly, content development is an effective method that can help businesses excel in the vast world of online marketing.

Content Development:

Begin growing your business by implementing the most effective content marketing strategies. At Arkss Tech, we build content that helps you grow your Node js Development Company in USA and brand in the most effective way.

Increase traffic to your website with this effective marketing tool. Additionally, it boosts the conversion rate, which results in an increase in revenue for your business. You can bring your business up to new heights with Arkss Tech's Content development services.

What Is the Significance of Quality Content Development?

Quality content is professional, well thought out, correctly spelled and formatted, and flows smoothly, from the most essential information on your "contact us" page to informative, educational content in your blog posts.

Punctuation and grammar errors are indicators of careless writers. When users come across sloppy content, the business as a whole loses its reputation.

The appearance of written content on any given webpage is also a significant factor in content development. Website visitors want to know who you are and what you have to say, stated concisely. They do not want an in-depth study of your business operations.

Additionally, you should avoid providing inadequate information, as this may confuse visitors and leave them in the dark about what your business is all about. If you are confident about your profession, developing an adequate amount of valuable content for your website pages will be simple and easy.

Why Should You Use Arkss Tech Content Development Services?

Arkss Tech content is designed to be scalable. You will note a steady rise in organic traffic to the website due to our content marketing service. The content development process can be customized to your marketing goals. We create content that will help you improve your marketing efforts.

We have a highly qualified team and innovative content developers who can convert complicated concepts into clear and concise language. Our content is smooth and transparent, and it is naturally usable. Our content is more than just a set of words. They are compelling and powerful.

Planning Website Content:

Content planning and development can be a challenging process. If your webpage has a few or one hundred pages, the content you choose to provide is critical to its overall success. The content includes written text and also images, diagrams, and downloadable files.

Assembling the appropriate content for your audiences and connecting with other associates of your company can be challenging. A little strategy and preparation would make this much simpler.

1. Examine Your Current Document

When designing a new website, the first step is to review the existing copy. If this is your first website, review existing materials such as brochures to determine what is already available.

Consider the following three points.

Is the content reliable or up to date?

Is there something that is missing?

Is this information helpful to my readers?

Ascertaining that all is right will ensure that you do not have any outdated pricing or information.

Your company has probably changed since you first built your site, and you may need to list additional services. By defining a clear and concise objective for each piece of content, you can verify that you provide valuable content for readers.

2. Determine the Audience Objective

Before deciding on the type of content to provide on your site, determine who your website's target audience is. Understanding or identifying your audience will help you to organize the remainder of your content with better clarity.

This also enables you to determine whether or not the information is meaningful or even appropriate. It can be helpful to create primary and even secondary audiences to ensure that all of your visitors and their unique needs are taken into consideration.

3. Make Use of Sitemaps as Blueprints for Copywriting

When comparing the process of designing a website to that of building a house, the sitemap serves as the architect's blueprint. You could design a house with insufficient bathrooms or closets without it.

There are multiple techniques and software suites available for organizing data. Succinct, concise page titles perform better in navigational systems than lengthy sentences. You can rearrange and prioritize your content before you start writing if you take this step first.

4. Work in Collaboration with Others

Even if you are the sole proprietor of a Website Content Development Company in the USA, you can include others in the review and editing process to ensure that the copy is correct and accessible to others.

If you work in a company that relies on support from other stakeholders, several approaches can make collaboration less painful. Avoid storing all of your content in a single file, as this prevents simple collaboration. Google Docs makes it convenient to share a document for joint editing.

5. Use Storytelling Rather Than 'Storyselling.'

You may assume that your website is an opportunity for you to "share your story." Instead, it should highlight the success stories of those who have benefited from your products or services.

Avoid overstating how effective the company is. Instead, provide evidence or information. Use vocabulary that the target audience is familiar with rather than industry-specific terminology.

Appropriately represent their views by describing your services or products as a solution. Provide concise advantages in the form of bullet points rather than lengthy paragraphs. Making the content more user-centric satisfies users' desires and tells their storyline rather than yours.

6. Create Content for Both Humans and Search Engines

Even if you know the value of writing your copy for SEO, do not concentrate so much on injecting your key search words that the text becomes unreadable. By working your keywords into the website naturally, you make sure that you do not sound like a robot when someone visits.

Additionally, using "semantic terminology," you can create several synonyms for your primary keywords. This will help you diversify your content while keeping terms that help in your search efforts.

7. Make the Copy Action-Oriented

Encourage readers to take action after they've finished your copy. If you want them to contact you to learn more or to purchase a product online, tell them their next move at the end of the copy. By including an email address or a link to your home page, you give them a simple way to contact you while your company remains top of mind.

8. Add Visual Appeal to the Copy

Supporting images, diagrams, and charts can be used to break up your text. Most people don't read your entire copy but scan it, so set key points apart with testimonials and bulleted lists. Additionally, keep your paragraphs brief and divide sections with sub-headings.

Choosing the right typeface plays a major role in the readability of your copy. Most web designers suggest using a sans-serif typeface for body copy, whereas headlines can use a slightly larger serif. These strategies will help ensure that your copy is as attractive as it is functional.

9. Create Deadlines

While editing your website copy using a content management system like WordPress is simpler than ever, that ease can be the enemy of finishing and publishing your new website. You'll need to establish specific deadlines to keep your project on track. By breaking content development activities into manageable chunks, you will ensure that tasks are completed on time.

Starting with the main section will help you determine which main differentiators to concentrate on and will set the tone for the rest of your copy. Set the team's deadlines for the first draft, schedule feedback from all stakeholders, and decide when all of the copy will be added to the website.

Final Thought:

Blogs are becoming increasingly important for businesses looking to distinguish themselves from their competitors. A blog needs to be composed of well-written, edited content to achieve the goals for which it is created. Blogs should also be periodically updated to ensure that information is shared continuously from your platform to existing and potential customers. This task may sometimes become too difficult for a business owner or employee to perform. Arkss Tech, a Website Content Development Company in the USA, exists to assist clients who wish to hire a dedicated writer team to generate high-quality content on their behalf.

Content planning does not have to be as time-consuming as it might seem. The process can be made simpler by spending time on pre-planning and strategy.


Text

How do you handle HTTP requests and responses?

Every website on the internet is based on two-way communication between clients and servers. By clients, we mean browsers like Chrome or Safari, or any other program or device that makes requests, whereas servers are often computers in the cloud.

This communication between the client and the server happens through HTTP requests and responses. First, a client, often a web browser, sends an HTTP request to the web server. After the web server receives the request, it runs an application to process it and then returns an HTTP response, which the browser uses to display the output. A request can ask for many kinds of resources, for example an HTML page, a style sheet, Javascript code or just simple text. The response matches the request, for example an HTML file, a CSS file, a JPG file, a JS file or data in XML or JSON format. This is popularly known as the HTTP request-response cycle.

In this blog we are going to look at how HTTP requests and responses are handled. We will cover HTTP requests first and then move on to HTTP responses. Let’s begin!
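To make the cycle concrete, here is a minimal Python sketch that runs both sides on one machine using only the standard library. The handler name CourseHandler, the function run_cycle and the path /courses/web-development are made up for illustration; a real website would of course run the server elsewhere.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class CourseHandler(BaseHTTPRequestHandler):
    """Hypothetical server side: answers every GET with a small JSON body."""

    def do_GET(self):
        body = json.dumps({"requested": self.path}).encode("utf-8")
        self.send_response(200)  # status code for the status line
        self.send_header("Content-Type", "application/json; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)  # response body

    def log_message(self, format, *args):
        pass  # keep the example quiet


def run_cycle(path="/courses/web-development"):
    """Start a throwaway local server, send one request, return the response parts."""
    server = HTTPServer(("127.0.0.1", 0), CourseHandler)  # port 0: pick any free port
    port = server.server_address[1]
    threading.Thread(target=server.serve_forever, daemon=True).start()
    # An empty ProxyHandler keeps the request on this machine even if a proxy is configured.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(f"http://127.0.0.1:{port}{path}", timeout=5) as response:
            status = response.status
            content_type = response.headers["Content-Type"]
            payload = json.loads(response.read().decode("utf-8"))
    finally:
        server.shutdown()
        server.server_close()
    return status, content_type, payload


if __name__ == "__main__":
    print(run_cycle())
```

The client half (opener.open) plays the role of the browser, and do_GET plays the role of the application the web server runs to process the request.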

What are HTTP Requests?

As mentioned above, if you want to access any resource from the vast array of them present on the internet and hosted on different servers, then your browser needs to send a request to the servers. If this request gets accepted, the browser will display the requested resources on your screen. The underlying protocol used for communication between a client and a server is HTTP, which stands for Hypertext Transfer Protocol. In this cycle of communication, the message that a client sends is known as an HTTP request. These requests are sent with the help of various methods known as HTTP request methods. These methods indicate the specific action that has to be performed on a given resource. There are some standard features shared by the various HTTP request methods. Let’s discuss the concept of HTTP Request Methods.

An HTTP request is an action performed on a resource identified by a URL. There are several request methods, each with a specific purpose. Method names are case-sensitive and should always be written in upper case.

The working of HTTP Requests is simple. As mentioned above, an HTTP request is sent by the client and submitted to the server. The server processes the request and then sends a response which contains the status information of the request. In other words, HTTP requests are how a client or an application talks to a server.

It is now time we discuss the various types of HTTP Request Methods. The first and probably the most popular request method is the GET method. It requests and retrieves data from a particular resource on a server. In simple terms, this popular HTTP request method is used to retrieve the information that is identified by the URL.

Another HTTP request method is HEAD. This method requests a response that is the same as that of a GET request. The only difference is that the response to a HEAD request doesn’t have a message body. This method is useful for retrieving the meta-data without transferring the entire content. The HEAD method is commonly used for testing hypertext links for accessibility, validity or recent modification.

POST is another popular HTTP request method. It is used to send data to a server in order to create or update a resource. The information submitted to the server is carried in the request body. One common use of the HTTP POST method is to send user-generated data to a server, for example, a user uploading a profile photo.

One method similar to POST method is PUT. It is also used for sending data to the server to create or update a resource; however, the difference is that PUT requests are idempotent. Hence, if the same PUT requests are called multiple times then the results will always be the same.
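The idempotency difference is easier to see with a toy example. The sketch below is not a real server; ProfileStore and its post and put methods are invented names that only mimic how a server might treat the two methods, assuming POST lets the server pick a new id and PUT writes to the id the client names.

```python
import itertools


class ProfileStore:
    """Toy in-memory stand-in for a server-side collection of resources."""

    def __init__(self):
        self.items = {}
        self._next_id = itertools.count(1)

    def post(self, data):
        # POST: the server chooses a new id, so every call creates another resource.
        new_id = next(self._next_id)
        self.items[new_id] = dict(data)
        return new_id

    def put(self, item_id, data):
        # PUT: the client names the resource, so repeating the call changes nothing.
        self.items[item_id] = dict(data)
        return item_id


store = ProfileStore()
store.post({"name": "Ada"})
store.post({"name": "Ada"})       # same request again: a second resource appears
store.put(100, {"name": "Grace"})
store.put(100, {"name": "Grace"})  # same request again: still one resource at id 100

print(len(store.items))  # two POSTed resources plus one PUT resource
```

Sending the same POST twice leaves two copies behind, while sending the same PUT twice leaves the store exactly as it was after the first call.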

We have covered the basic HTTP request methods generally used in server communication. There are a few more methods, which we will discuss next. One such request method is DELETE which, as the name suggests, is used to delete the resource indicated by the URL. Basically, a DELETE request removes the targeted resource.

A method similar to POST and PUT is the PATCH method. It is used for applying partial changes to a resource. Unlike PUT, PATCH is not guaranteed to be idempotent. Its advantage is that the client only has to send what changed, for example the updated username, and not the complete user entity.

An HTTP request method used to invoke an application loop-back test along the path to the target resource is the TRACE method. It allows clients to view the message as it was received at the other end of the request chain. This information is used for testing and diagnostics.

One last method that we are going to discuss is the CONNECT method, which the client uses to create a network connection to a server. It basically creates a tunnel to the identified server. One example of the CONNECT request method is SSL tunnelling.

We have discussed the various HTTP request methods. In the segment below, we discuss some similarities and distinctions between them.

HTTP PUT and POST Request Methods discussed above are both used to facilitate data transmission between a client and a server. They have similar roles in the request-response cycle but there are some differences between them also. For example, the PUT request method is idempotent whereas the POST request method is not. Another difference is that a PUT request targets one specific resource at the URL the client names, whereas a POST request is sent to a more general URL and the server decides what to create or update.

GET and POST methods can also be compared in order to understand them better. In the GET method, the parameters are part of the URL, so they are saved in the browser’s history, whereas in the POST method the parameters travel in the request body and aren’t stored there. For the same reason, a GET request can be bookmarked but the same cannot be said for a POST request.
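As a rough illustration of partial versus full updates, the sketch below applies both to a plain dictionary. The function names apply_put and apply_patch and the user fields are hypothetical; a real API may define its own patch format.

```python
def apply_put(current, replacement):
    """PUT semantics: the stored resource becomes exactly what the client sent."""
    return dict(replacement)


def apply_patch(current, changes):
    """PATCH semantics in this sketch: only the fields that were sent are changed."""
    updated = dict(current)
    updated.update(changes)
    return updated


user = {"id": 7, "username": "old_name", "email": "user@example.com"}

patched = apply_patch(user, {"username": "new_name"})
replaced = apply_put(user, {"username": "new_name"})

print(patched)   # keeps id and email, changes username
print(replaced)  # only contains what the client sent
```

With PATCH the client sends one field and the rest of the user survives; with PUT the client would have to send the complete user entity to keep the other fields.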
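The reason GET parameters end up in history and bookmarks is simply where they live. Here is a small sketch using Python's urllib that builds both kinds of request without sending them; the example.com URL and the parameter names are placeholders.

```python
from urllib.parse import urlencode
from urllib.request import Request

params = {"q": "web development", "page": 2}
encoded = urlencode(params)

# GET: the parameters are appended to the URL itself.
get_request = Request("https://example.com/search?" + encoded)

# POST: the same parameters travel in the request body instead.
post_request = Request("https://example.com/search", data=encoded.encode("ascii"))

print(get_request.get_method(), get_request.full_url)
print(post_request.get_method(), post_request.full_url, post_request.data)
```

Anything that stores the URL, such as a bookmark or the history list, captures the GET parameters but never sees the POST body.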

With this, we come to an end of our discussion on the concept of HTTP Request and the various methods associated with it. We also took a brief look over the comparison between some similar methods. Now, we will move on to our next section, where we will talk in detail about HTTP response and the concepts that are useful in the response cycle. Let’s begin!

What is HTTP Response?

We learnt in detail about HTTP requests; the next step after a request is made is the HTTP Response. It is nothing but a packet of information sent by the server to the client in response to the request made by the client. The HTTP Response basically has the information requested by the client. In this segment, we are going to discuss the structure of an HTTP Response.

An HTTP Response consists of a status line, zero or more headers, and an optional response body.

A response status line consists of HTTP protocol version, status code and a reason phrase.

Let’s understand the structure of HTTP response with the help of an example.

We will take an example response and look at each section. The first line is called the Status Line. It is written in the following manner: HTTP/1.1 200 OK

The status line contains the HTTP protocol version (HTTP/1.1), followed by the status code, here 200, and then the status message, which in this case is OK.
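Splitting a status line into its three parts takes only a few lines of Python. This is a sketch for illustration; StatusLine and parse_status_line are invented names, and real HTTP libraries do this parsing for you.

```python
from http import HTTPStatus
from typing import NamedTuple


class StatusLine(NamedTuple):
    version: str
    code: int
    reason: str


def parse_status_line(line):
    """Split 'HTTP/1.1 200 OK' into protocol version, status code and reason phrase."""
    parts = line.strip().split(" ", 2)
    version, code = parts[0], int(parts[1])
    reason = parts[2] if len(parts) > 2 else ""  # the reason phrase may be missing
    return StatusLine(version, code, reason)


parsed = parse_status_line("HTTP/1.1 200 OK")
print(parsed)
# The standard library knows the usual phrase for each code.
print(HTTPStatus(parsed.code).phrase)
```

The split is limited to two spaces because a reason phrase such as Not Found contains a space of its own.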

The next section after the status line is the response header section. It has zero or more header lines, although in practice there is almost always at least one. Response headers are placed after the Status Line and before the Response Body. These headers pass some additional information to the client.

For example, the Content-Type header may have the value application/json with charset=utf-8. This signifies that the body of the response contains JSON formatted data encoded as UTF-8. This information is given to the client by the server, and the client should interpret the response body as JSON.

Next is the response body, which has the resource data requested by the client. For example, if the fees data for a web development course was requested, then the response body will contain the fees information for the web development course. The response body is variable and can also have other information like the syllabus, testimonials, duration and a few more properties of the particular course. The format of the text contained in the HTTP Response Body is defined by the response headers. In the explanation above, when we said resource, we referred to the information requested by a client. In this example, the details corresponding to a course are the resource.

With this, we come to an end of our discussion on the concept of HTTP Response and its structure, as well as HTTP requests and their methods.
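A client can use that header to decide how to read the body. The sketch below does this with Python's standard library; decode_body is a made-up helper name, and falling back to UTF-8 when no charset is given is an assumption of this example.

```python
import json
from email.message import Message


def decode_body(content_type, body):
    """Interpret raw response bytes according to a Content-Type header value."""
    header = Message()
    header["Content-Type"] = content_type
    media_type = header.get_content_type()             # e.g. "application/json"
    charset = header.get_content_charset() or "utf-8"  # assumed default for this sketch
    text = body.decode(charset)
    if media_type == "application/json":
        return json.loads(text)
    return text


course = decode_body("application/json; charset=utf-8", b'{"course": "web development", "fees": 100}')
print(course["fees"])
```

If the server had sent text/plain instead, the same bytes would come back as an ordinary string rather than a parsed object.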

If you wish to start your journey in web development, we have something very interesting for you. There is a professional web development course that will address all your concerns regarding web development and help you ace your skills. Make sure you check out the course that can be a stepping stone to your dream of becoming a proficient web developer.

One such course that we would like to recommend is Konfinity’s Web Development Course. This course is a well-researched training course developed by experts from IIT DELHI in collaboration with tech companies like Google, Amazon and Microsoft. It is trusted by students and graduates from IIT, DTU, NIT, Amity, DU and more.

We encourage technocrats like you to join the course to master the art of creating web applications by learning the latest technologies, right from basic HTML to advanced and dynamic websites, in just a span of a few months.

Konfinity is a great platform for launching a lucrative tech career. We will get you started by helping you get placed in a high paying job. One amazing thing about our course is that no prior coding experience is required to take up our courses. Start your free trial here .


Text

#WDILTW – Creating examples can be hard

This week I was evaluating AWS QLDB, specifically the verifiable history of changes, to determine how to simplify present processes that perform auditing via CDC. This is not the first time I have looked at QLDB, so there was nothing that new to learn. What I found was that creating a workable solution with an existing application is hard. Even harder is creating an example to publish in this blog (and the purpose of this post).

First some background. Using MySQL as the source of information, how can you leverage QLDB? It’s easy to stream data from MySQL Aurora, and it’s easy to stream data from QLDB, but it is not that easy to place real-time data into QLDB. AWS DMS is a good way to move data from a source to a target; previously my work has included MySQL to MySQL, MySQL to Redshift, and MySQL to Kinesis. However, there is no QLDB target. Turning the problem upside down, using QLDB as the source of information and streaming to MySQL for compatibility seemed a way forward.

After setting up the QLDB Ledger and an example table, it was time to populate with existing data. The documented reference example looked very JSON compatible. Side bar: it is actually Amazon Ion, a superset of JSON.

    INSERT INTO Person

Now, MySQL offers the X Protocol. This is something that lefred has evangelized for many years and that I have seen presented many times, but finally I had a chance to use it. The MySQL Shell JSON output looked ideal.

    { "ID": 1523, "Name": "Wien", "CountryCode": "AUT", "District": "Wien", "Info": { "Population": 1608144 } }
    { "ID": 1524, "Name": "Graz", "CountryCode": "AUT", "District": "Steiermark", "Info": { "Population": 240967 } }

And now, onto some of the things I learned this week. Using AWS RDS Aurora MySQL is the first stumbling block: X Protocol is not supported. As this was an example, simple: mysqldump some reference data, load it into a MySQL 8 instance, and extract it into JSON, so as to potentially emulate a pipeline.
Here are my experiences of trying to refactor this into a demo to write up. Launch a MySQL Docker container as per my standard notes. Harmless, right?

    MYSQL_ROOT_PASSWORD="$(date | md5sum | cut -c1-20)#"
    echo $MYSQL_ROOT_PASSWORD
    docker run --name=qldb-mysql -p3306:3306 -v mysql-volume:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=$MYSQL_ROOT_PASSWORD -d mysql/mysql-server:latest
    docker logs qldb-mysql
    docker exec -it qldb-mysql /bin/bash

As it's a quick demo, I shortcut credentials to make using the mysql client easier. NOTE: as I always generate a new password for each container, it's included here.

    # echo "[mysql]
    user=root
    password='ab6ea7b0436cbc0c0d49#'" > .my.cnf
    # mysql
    ERROR 1045 (28000): Access denied for user 'root'@'localhost' (using password: NO)

What the? Did I make a mistake? I test manually and check.

    # mysql -u root -p
    # cat .my.cnf

Nothing wrong there. Next check.

    # pwd
    /
    bash-4.2# grep root /etc/passwd
    root:x:0:0:root:/root:/bin/bash
    operator:x:11:0:operator:/root:/sbin/nologin

And there is the first Dockerism. I don't live in Docker, so these 101 learnings would be known to those who do. First, I really think using "root" by default is a horrible idea. And when you shell in, you are not dropped into the home directory? Solved, we move on.

    # mv /.my.cnf /root/.my.cnf

Mock an example as quickly as I can think.
    # mysql
    mysql> create schema if not exists demo;
    Query OK, 1 row affected (0.00 sec)
    mysql> use demo;
    Database changed
    mysql> create table sample(id int unsigned not null auto_increment, name varchar(30) not null, location varchar(30) not null, domain varchar(50) null, primary key(id));
    Query OK, 0 rows affected (0.03 sec)
    mysql> show create table sample;
    mysql> insert into sample values (null,'Demo Row','USA',null), (null,'Row 2','AUS','news.com.au'), (null,'Kiwi','NZ', null);
    Query OK, 3 rows affected (0.00 sec)
    Records: 3 Duplicates: 0 Warnings: 0
    mysql> select * from sample;
    +----+----------+----------+-------------+
    | id | name     | location | domain      |
    +----+----------+----------+-------------+
    |  1 | Demo Row | USA      | NULL        |
    |  2 | Row 2    | AUS      | news.com.au |
    |  3 | Kiwi     | NZ       | NULL        |
    +----+----------+----------+-------------+
    3 rows in set (0.00 sec)

Cool, now to look at it in Javascript using MySQL Shell. Hurdle 2.

    # mysqlsh
    MySQL Shell 8.0.22
    Copyright (c) 2016, 2020, Oracle and/or its affiliates.
    Oracle is a registered trademark of Oracle Corporation and/or its affiliates.
    Other names may be trademarks of their respective owners.
    MySQL JS > var session=mysqlx.getSession('root:ab6ea7b0436cbc0c0d49#@localhost')
    mysqlx.getSession: Argument #1: Invalid URI: Illegal character [#] found at position 25 (ArgumentError)

What the, it doesn't like the password format. I'm not a Javascript person, and well, this is an example for blogging, which is not what was actually set up, so do it the right way: create a user.
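An aside on hurdle 2: the # is a reserved character in URIs, which is why it is rejected in the middle of the connection string. A common workaround is to percent-encode the password before building the string. The Python sketch below shows the encoding only; build_uri is a hypothetical helper, and whether MySQL Shell accepts the encoded form was not tested in this post, so treat that as an assumption to check against the MySQL Shell documentation.

```python
from urllib.parse import quote


def build_uri(user, password, host):
    """Build a user:password@host string with reserved characters percent-encoded."""
    # safe="" makes quote() encode every reserved character, including '#', '@' and ':'.
    return "{}:{}@{}".format(quote(user, safe=""), quote(password, safe=""), host)


uri = build_uri("root", "ab6ea7b0436cbc0c0d49#", "localhost")
print(uri)
```

The trailing # becomes %23, so the string no longer contains a character that a URI parser would treat as the start of a fragment.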
    # mysql
    mysql> create user demo@localhost identified by 'qldb';
    Query OK, 0 rows affected (0.01 sec)
    mysql> grant ALL ON sample.* to demo@localhost;
    Query OK, 0 rows affected, 1 warning (0.01 sec)
    mysql> SHOW GRANTS FOR demo@localhost;
    +----------------------------------------------------------+
    | Grants for demo@localhost                                |
    +----------------------------------------------------------+
    | GRANT USAGE ON *.* TO `demo`@`localhost`                 |
    | GRANT ALL PRIVILEGES ON `sample`.* TO `demo`@`localhost` |
    +----------------------------------------------------------+
    2 rows in set (0.00 sec)

Back into the MySQL Shell, and hurdle 3.

    MySQL JS > var session=mysqlx.getSession('demo:qldb@localhost')
    mysqlx.getSession: Access denied for user 'demo'@'127.0.0.1' (using password: YES) (MySQL Error 1045)

Did I create the creds wrong? Verify. No, my password is correct.

    # mysql -udemo -pqldb -e "SELECT NOW()"
    mysql: [Warning] Using a password on the command line interface can be insecure.
    +---------------------+
    | NOW()               |
    +---------------------+
    | 2021-03-06 23:15:26 |
    +---------------------+

I don't have time to debug this. User take 2.
mysql> drop user demo@localhost; Query OK, 0 rows affected (0.00 sec) mysql> create user demo@'%' identified by 'qldb'; Query OK, 0 rows affected (0.01 sec) mysql> grant all on demo.* to demo@'%' -> ; Query OK, 0 rows affected (0.00 sec) mysql> show grants; +-- | Grants for root@localhost | +--- | GRANT SELECT, INSERT, UPDATE, DELETE, CREATE, DROP, RELOAD, SHUTDOWN, PROCESS, FILE, REFERENCES, INDEX, ALTER, SHOW DATABASES, SUPER, CREATE TEMPORARY TABLES, LOCK TABLES, EXECUTE, REPLICATION SLAVE, REPLICATION CLIENT, CREATE VIEW, SHOW VIEW, CREATE ROUTINE, ALTER ROUTINE, CREATE USER, EVENT, TRIGGER, CREATE TABLESPACE, CREATE ROLE, DROP ROLE ON *.* TO `root`@`localhost` WITH GRANT OPTION | | GRANT APPLICATION_PASSWORD_ADMIN,AUDIT_ADMIN,BACKUP_ADMIN,BINLOG_ADMIN,BINLOG_ENCRYPTION_ADMIN,CLONE_ADMIN,CONNECTION_ADMIN,ENCRYPTION_KEY_ADMIN,FLUSH_OPTIMIZER_COSTS,FLUSH_STATUS,FLUSH_TABLES,FLUSH_USER_RESOURCES,GROUP_REPLICATION_ADMIN,INNODB_REDO_LOG_ARCHIVE,INNODB_REDO_LOG_ENABLE,PERSIST_RO_VARIABLES_ADMIN,REPLICATION_APPLIER,REPLICATION_SLAVE_ADMIN,RESOURCE_GROUP_ADMIN,RESOURCE_GROUP_USER,ROLE_ADMIN,SERVICE_CONNECTION_ADMIN,SESSION_VARIABLES_ADMIN,SET_USER_ID,SHOW_ROUTINE,SYSTEM_USER,SYSTEM_VARIABLES_ADMIN,TABLE_ENCRYPTION_ADMIN,XA_RECOVER_ADMIN ON *.* TO `root`@`localhost` WITH GRANT OPTION | | GRANT PROXY ON ''@'' TO 'root'@'localhost' WITH GRANT OPTION | +--- 3 rows in set (0.00 sec) mysql> show grants for demo@'%'; +--------------------------------------------------+ | Grants for demo@% | +--------------------------------------------------+ | GRANT USAGE ON *.* TO `demo`@`%` | | GRANT ALL PRIVILEGES ON `demo`.* TO `demo`@`%` | +--------------------------------------------------+ 2 rows in set (0.00 sec) Right, initially I showed grants of not new user, but note to self, I should checkout the MySQL 8 Improved grants. I wonder how RDS MySQL 8 handles these, and how Aurora MySQL 8 will (when it ever drops, that's another story). 
Third try is a charm, and it is nice to also see queries with 0.0000 execution granularity.

    MySQL JS > var session=mysqlx.getSession('demo:qldb@localhost')
    MySQL JS > var sql='SELECT * FROM demo.sample'
    MySQL JS > session.sql(sql)
    +----+----------+----------+-------------+
    | id | name     | location | domain      |
    +----+----------+----------+-------------+
    |  1 | Demo Row | USA      | NULL        |
    |  2 | Row 2    | AUS      | news.com.au |
    |  3 | Kiwi     | NZ       | NULL        |
    +----+----------+----------+-------------+
    3 rows in set (0.0006 sec)

Get that now in JSON output. NOTE: There are 3 different JSON formats; this matched what I needed.

    bash-4.2# mysqlsh
    MySQL Shell 8.0.22
    Copyright (c) 2016, 2020, Oracle and/or its affiliates.
    Oracle is a registered trademark of Oracle Corporation and/or its affiliates.
    Other names may be trademarks of their respective owners.
    Type 'help' or '?' for help; 'quit' to exit.
    MySQL JS > var session=mysqlx.getSession('demo:qldb@localhost')
    MySQL JS > var sql='SELECT * FROM demo.sample'
    MySQL JS > shell.options.set('resultFormat','json/array')
    MySQL JS > session.sql(sql)
    [
    {"id":1,"name":"Demo Row","location":"USA","domain":null},
    {"id":2,"name":"Row 2","location":"AUS","domain":"news.com.au"},
    {"id":3,"name":"Kiwi","location":"NZ","domain":null}
    ]
    3 rows in set (0.0006 sec)

Ok, that works in the interactive interface; I need it scripted.

    # vi
    bash: vi: command not found
    # yum install vi
    Loaded plugins: ovl
    http://repo.mysql.com/yum/mysql-connectors-community/el/7/x86_64/repodata/repomd.xml: [Errno 14] HTTP Error 403 - Forbidden
    Trying other mirror.
    ...

And another downer of Docker containers: no other tools, or easy ways to install them. Again, I want to focus on the actual example, and not all this preamble, so

    # echo "var session=mysqlx.getSession('demo:qldb@localhost')
    var sql='SELECT * FROM demo.sample'
    shell.options.set('resultFormat','json/array')
    session.sql(sql)" > dump.js
    # mysqlsh

What the? Hurdle 4.
Did I typo this as well? I check the file, cut/paste it, and get what I expect.

    # cat dump.js
    var session=mysqlx.getSession('demo:qldb@localhost')
    var sql='SELECT * FROM demo.sample'
    shell.options.set('resultFormat','json/array')
    session.sql(sql)
    # mysqlsh
    MySQL Shell 8.0.22
    Copyright (c) 2016, 2020, Oracle and/or its affiliates.
    Oracle is a registered trademark of Oracle Corporation and/or its affiliates.
    Other names may be trademarks of their respective owners.
    Type 'help' or '?' for help; 'quit' to exit.
    MySQL JS > var session=mysqlx.getSession('demo:qldb@localhost')
    MySQL JS > var sql='SELECT * FROM demo.sample'
    MySQL JS > shell.options.set('resultFormat','json/array')
    MySQL JS > session.sql(sql)
    [
    {"id":1,"name":"Demo Row","location":"USA","domain":null},
    {"id":2,"name":"Row 2","location":"AUS","domain":"news.com.au"},
    {"id":3,"name":"Kiwi","location":"NZ","domain":null}
    ]
    3 rows in set (0.0022 sec)

This is getting crazy.

    # echo '[
    > {"id":1,"name":"Demo Row","location":"USA","domain":null},
    > {"id":2,"name":"Row 2","location":"AUS","domain":"news.com.au"},
    > {"id":3,"name":"Kiwi","location":"NZ","domain":null}
    > ]' > sample.json
    bash-4.2# jq . sample.json
    bash: jq: command not found

Oh the docker!!!! Switching back to my EC2 instance now.

    $ echo '[
    > {"id":1,"name":"Demo Row","location":"USA","domain":null},
    > {"id":2,"name":"Row 2","location":"AUS","domain":"news.com.au"},
    > {"id":3,"name":"Kiwi","location":"NZ","domain":null}
    > ]' > sample.json
    $ jq . sample.json
    [
      {
        "id": 1,
        "name": "Demo Row",
        "location": "USA",
        "domain": null
      },
      {
        "id": 2,
        "name": "Row 2",
        "location": "AUS",
        "domain": "news.com.au"
      },
      {
        "id": 3,
        "name": "Kiwi",
        "location": "NZ",
        "domain": null
      }
    ]

I am now way over the time I would like to spend on this weekly post, it's getting way too long, and I'm nowhere near showing what I actually want. Still we trek on. Boy, this stock EC2 image uses version 1 of the AWS CLI, I'm sure we need V2, and well, the command does not work!!!!
    $ aws qldb list-ledgers
    ERROR:
    $ aws --version
    $ curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"
    $ unzip awscliv2.zip
    $ sudo ./aws/install
    $ export PATH=/usr/local/bin:$PATH
    $ aws --version

Can I finally get a ledger now?

    $ aws qldb create-ledger --name demo --tags JIRA=DEMO-5826,Owner=RonaldBradford --permissions-mode ALLOW_ALL --no-deletion-protection
    {
      "Name": "demo",
      "Arn": "arn:aws:qldb:us-east-1:999:ledger/demo",
      "State": "CREATING",
      "CreationDateTime": "2021-03-06T22:46:41.760000+00:00",
      "DeletionProtection": false
    }
    $ aws qldb list-ledgers
    {
      "Ledgers": [
        { "Name": "xx", "State": "ACTIVE", "CreationDateTime": "2021-03-05T20:12:44.611000+00:00" },
        { "Name": "demo", "State": "ACTIVE", "CreationDateTime": "2021-03-06T22:46:41.760000+00:00" }
      ]
    }
    $ aws qldb describe-ledger --name demo
    {
      "Name": "demo",
      "Arn": "arn:aws:qldb:us-east-1:999:ledger/demo",
      "State": "ACTIVE",
      "CreationDateTime": "2021-03-06T22:46:41.760000+00:00",
      "DeletionProtection": false
    }

Oh the Python 2, and the lack of user packaging, more crud in the way of getting an example.

    $ pip install pyqldb==3.1.0
    ERROR
    $ echo "alias python=python3
    alias pip=pip3" >> ~/.bash_profile
    $ source ~/.bash_profile
    $ pip --version
    pip 9.0.3 from /usr/lib/python3.6/site-packages (python 3.6)
    $ python --version
    Python 3.6.8
    $ pip install pyqldb==3.1.0
    ERROR
    $ sudo pip install pyqldb==3.1.0

Yeah! After all that, my example code works and data is inserted.
    $ cat demo.py
    from pyqldb.config.retry_config import RetryConfig
    from pyqldb.driver.qldb_driver import QldbDriver

    # Configure retry limit to 3
    retry_config = RetryConfig(retry_limit=3)

    # Initialize the driver
    print("Initializing the driver")
    qldb_driver = QldbDriver("demo", retry_config=retry_config)

    def create_table(transaction_executor, table):
        print("Creating table {}".format(table))
        transaction_executor.execute_statement("Create TABLE {}".format(table))

    def create_index(transaction_executor, table, column):
        print("Creating index {}.{}".format(table, column))
        transaction_executor.execute_statement("CREATE INDEX ON {}({})".format(table,column))

    def insert_record(transaction_executor, table, values):
        print("Inserting into {}".format(table))
        transaction_executor.execute_statement("INSERT INTO {} ?".format(table), values)

    table="sample"
    column="id"
    qldb_driver.execute_lambda(lambda executor: create_table(executor, table))
    qldb_driver.execute_lambda(lambda executor: create_index(executor, table, column))

    record1 = { 'id': "1", 'name': "Demo Row", 'location': "USA", 'domain': "" }
    qldb_driver.execute_lambda(lambda x: insert_record(x, table, record1))

    $ python demo.py
    Initializing the driver
    Creating table sample
    Creating index sample.id
    Inserting into sample

One can verify this in the AWS Console, but you cannot show that in text in this blog, so I went to find a simple client, and there is qldbshell. What the? I installed it and it complains about pyqldb.driver.pooled_qldb_driver. I literally used that package in the last example.
$ pip3 install qldbshell Collecting qldbshell Downloading https://artifactory.lifion.oneadp.com/artifactory/api/pypi/pypi/packages/packages/0f/f7/fe984d797e0882c5e141a4888709ae958eb8c48007a23e94000507439f83/qldbshell-1.2.0.tar.gz (68kB) 100% |████████████████████████████████| 71kB 55.6MB/s Requirement already satisfied: boto3>=1.9.237 in /usr/local/lib/python3.6/site-packages (from qldbshell) Collecting amazon.ion=0.5.0 (from qldbshell) Downloading https://artifactory.lifion.oneadp.com/artifactory/api/pypi/pypi/packages/packages/4e/b7/21b7a7577cc6864d1c93fd710701e4764af6cf0f7be36fae4f9673ae11fc/amazon.ion-0.5.0.tar.gz (178kB) 100% |████████████████████████████████| 184kB 78.7MB/s Requirement already satisfied: prompt_toolkit=3.0.5 in /usr/local/lib/python3.6/site-packages (from qldbshell) Requirement already satisfied: ionhash~=1.1.0 in /usr/local/lib/python3.6/site-packages (from qldbshell) Requirement already satisfied: s3transfer=0.3.0 in /usr/local/lib/python3.6/site-packages (from boto3>=1.9.237->qldbshell) Requirement already satisfied: jmespath=0.7.1 in /usr/local/lib/python3.6/site-packages (from boto3>=1.9.237->qldbshell) Requirement already satisfied: botocore=1.20.21 in /usr/local/lib/python3.6/site-packages (from boto3>=1.9.237->qldbshell) Requirement already satisfied: six in /usr/local/lib/python3.6/site-packages (from amazon.ion=0.5.0->qldbshell) Requirement already satisfied: wcwidth in /usr/local/lib/python3.6/site-packages (from prompt_toolkit=3.0.5->qldbshell) Requirement already satisfied: python-dateutil=2.1 in /usr/local/lib/python3.6/site-packages (from botocore=1.20.21->boto3>=1.9.237->qldbshell) Requirement already satisfied: urllib3=1.25.4 in /usr/local/lib/python3.6/site-packages (from botocore=1.20.21->boto3>=1.9.237->qldbshell) Installing collected packages: amazon.ion, qldbshell Found existing installation: amazon.ion 0.7.0 Uninstalling amazon.ion-0.7.0: Exception: Traceback (most recent call last): File 
"/usr/lib64/python3.6/shutil.py", line 550, in move os.rename(src, real_dst) PermissionError: [Errno 13] Permission denied: '/usr/local/lib/python3.6/site-packages/amazon.ion-0.7.0-py3.6-nspkg.pth' -> '/tmp/pip-p8j4d45d-uninstall/usr/local/lib/python3.6/site-packages/amazon.ion-0.7.0-py3.6-nspkg.pth' During handling of the above exception, another exception occurred: Traceback (most recent call last): File "/usr/lib/python3.6/site-packages/pip/basecommand.py", line 215, in main status = self.run(options, args) File "/usr/lib/python3.6/site-packages/pip/commands/install.py", line 365, in run strip_file_prefix=options.strip_file_prefix, File "/usr/lib/python3.6/site-packages/pip/req/req_set.py", line 783, in install requirement.uninstall(auto_confirm=True) File "/usr/lib/python3.6/site-packages/pip/req/req_install.py", line 754, in uninstall paths_to_remove.remove(auto_confirm) File "/usr/lib/python3.6/site-packages/pip/req/req_uninstall.py", line 115, in remove renames(path, new_path) File "/usr/lib/python3.6/site-packages/pip/utils/__init__.py", line 267, in renames shutil.move(old, new) File "/usr/lib64/python3.6/shutil.py", line 565, in move os.unlink(src) PermissionError: [Errno 13] Permission denied: '/usr/local/lib/python3.6/site-packages/amazon.ion-0.7.0-py3.6-nspkg.pth' [centos@ip-10-204-101-224] ~ $ sudo pip3 install qldbshell WARNING: Running pip install with root privileges is generally not a good idea. Try `pip3 install --user` instead. 
Collecting qldbshell Downloading https://artifactory.lifion.oneadp.com/artifactory/api/pypi/pypi/packages/packages/0f/f7/fe984d797e0882c5e141a4888709ae958eb8c48007a23e94000507439f83/qldbshell-1.2.0.tar.gz (68kB) 100% |████████████████████████████████| 71kB 49.8MB/s Requirement already satisfied: boto3>=1.9.237 in /usr/local/lib/python3.6/site-packages (from qldbshell) Collecting amazon.ion=0.5.0 (from qldbshell) Downloading https://artifactory.lifion.oneadp.com/artifactory/api/pypi/pypi/packages/packages/4e/b7/21b7a7577cc6864d1c93fd710701e4764af6cf0f7be36fae4f9673ae11fc/amazon.ion-0.5.0.tar.gz (178kB) 100% |████████████████████████████████| 184kB 27.7MB/s Requirement already satisfied: prompt_toolkit=3.0.5 in /usr/local/lib/python3.6/site-packages (from qldbshell) Requirement already satisfied: ionhash~=1.1.0 in /usr/local/lib/python3.6/site-packages (from qldbshell) Requirement already satisfied: botocore=1.20.21 in /usr/local/lib/python3.6/site-packages (from boto3>=1.9.237->qldbshell) Requirement already satisfied: jmespath=0.7.1 in /usr/local/lib/python3.6/site-packages (from boto3>=1.9.237->qldbshell) Requirement already satisfied: s3transfer=0.3.0 in /usr/local/lib/python3.6/site-packages (from boto3>=1.9.237->qldbshell) Requirement already satisfied: six in /usr/local/lib/python3.6/site-packages (from amazon.ion=0.5.0->qldbshell) Requirement already satisfied: wcwidth in /usr/local/lib/python3.6/site-packages (from prompt_toolkit=3.0.5->qldbshell) Requirement already satisfied: python-dateutil=2.1 in /usr/local/lib/python3.6/site-packages (from botocore=1.20.21->boto3>=1.9.237->qldbshell) Requirement already satisfied: urllib3=1.25.4 in /usr/local/lib/python3.6/site-packages (from botocore=1.20.21->boto3>=1.9.237->qldbshell) Installing collected packages: amazon.ion, qldbshell Found existing installation: amazon.ion 0.7.0 Uninstalling amazon.ion-0.7.0: Successfully uninstalled amazon.ion-0.7.0 Running setup.py install for amazon.ion ... 
done Running setup.py install for qldbshell ... done Successfully installed amazon.ion-0.5.0 qldbshell-1.2.0 $ sudo pip3 install qldbshell $ qldbshell Traceback (most recent call last): File "/usr/local/bin/qldbshell", line 11, in load_entry_point('qldbshell==1.2.0', 'console_scripts', 'qldbshell')() File "/usr/lib/python3.6/site-packages/pkg_resources/__init__.py", line 476, in load_entry_point return get_distribution(dist).load_entry_point(group, name) File "/usr/lib/python3.6/site-packages/pkg_resources/__init__.py", line 2700, in load_entry_point return ep.load() File "/usr/lib/python3.6/site-packages/pkg_resources/__init__.py", line 2318, in load return self.resolve() File "/usr/lib/python3.6/site-packages/pkg_resources/__init__.py", line 2324, in resolve module = __import__(self.module_name, fromlist=['__name__'], level=0) File "/usr/local/lib/python3.6/site-packages/qldbshell/__main__.py", line 25, in from pyqldb.driver.pooled_qldb_driver import PooledQldbDriver ModuleNotFoundError: No module named 'pyqldb.driver.pooled_qldb_driver' $ pip list qldbshell DEPRECATION: The default format will switch to columns in the future. You can use --format=(legacy|columns) (or define a format=(legacy|columns) in your pip.conf under the [list] section) to disable this warning. amazon.ion (0.5.0) boto3 (1.17.21) botocore (1.20.21) ionhash (1.1.0) jmespath (0.10.0) pip (9.0.3) prompt-toolkit (3.0.16) pyqldb (3.1.0) python-dateutil (2.8.1) qldbshell (1.2.0) s3transfer (0.3.4) setuptools (39.2.0) six (1.15.0) urllib3 (1.26.3) So, uninstalled and re-installed and voila, my data. $ qldbshell usage: qldbshell [-h] [-v] [-s QLDB_SESSION_ENDPOINT] [-r REGION] [-p PROFILE] -l LEDGER qldbshell: error: the following arguments are required: -l/--ledger $ qldbshell -l demo Welcome to the Amazon QLDB Shell version 1.2.0 Use 'start' to initiate and interact with a transaction. 'commit' and 'abort' to commit or abort a transaction. 
Use 'start; statement 1; statement 2; commit; start; statement 3; commit' to create transactions non-interactively.
Use 'help' for the help section.
All other commands will be interpreted as PartiQL statements until the 'exit' or 'quit' command is issued.
qldbshell >
qldbshell > SELECT * FROM sample;
INFO: { id: "1", name: "Demo Row", location: "USA", domain: "" }
INFO: (0.1718s)
qldbshell > \q
WARNING: Error while executing query: An error occurred (BadRequestException) when calling the SendCommand operation: Lexer Error: at line 1, column 1: invalid character at, '\' [U+5c];
INFO: (0.1134s)
qldbshell > exit
Exiting QLDB Shell

Right, \q is a MySQL-ism of the client; I need to rewire myself.

Now, I have a ledger, I created an example table, mocked a row of data and verified it. Now I can just load the sample data in JSON I created earlier, right? Wrong!!!

$ cat load.py
import json
from pyqldb.config.retry_config import RetryConfig
from pyqldb.driver.qldb_driver import QldbDriver

# Configure retry limit to 3
retry_config = RetryConfig(retry_limit=3)

# Initialize the driver
print("Initializing the driver")
qldb_driver = QldbDriver("demo", retry_config=retry_config)

def insert_record(transaction_executor, table, values):
  print("Inserting into {}".format(table))
  transaction_executor.execute_statement("INSERT INTO {} ?".format(table), values)

table="sample"
with open('sample.json') as f:
  data=json.load(f)

qldb_driver.execute_lambda(lambda x: insert_record(x, table, data))

$ python load.py
Traceback (most recent call last):
  File "load.py", line 2, in <module>
    from pyqldb.config.retry_config import RetryConfig
ModuleNotFoundError: No module named 'pyqldb'
[centos@ip-10-204-101-224] ~

Oh sweet, I'd installed that, and used it, and re-installed it.

$ pip list | grep pyqldb
DEPRECATION: The default format will switch to columns in the future. You can use --format=(legacy|columns) (or define a format=(legacy|columns) in your pip.conf under the [list] section) to disable this warning.
[centos@ip-10-204-101-224] ~
$ sudo pip3 install pyqldb
WARNING: Running pip install with root privileges is generally not a good idea. Try `pip3 install --user` instead.
Collecting pyqldb
  Downloading https://artifactory.lifion.oneadp.com/artifactory/api/pypi/pypi/packages/packages/5c/b4/9790b1fad87d7df5b863cbf353689db145bd009d31d854d282b31e1c1781/pyqldb-3.1.0.tar.gz
Collecting amazon.ion=0.7.0 (from pyqldb)
  Downloading https://artifactory.lifion.oneadp.com/artifactory/api/pypi/pypi/packages/packages/7d/ac/fd1edee54cefa425c444b51ad00a20e5bc74263a3afbfd4c8743040f8f26/amazon.ion-0.7.0.tar.gz (211kB)
    100% |████████████████████████████████| 215kB 24.8MB/s
Requirement already satisfied: boto3=1.16.56 in /usr/local/lib/python3.6/site-packages (from pyqldb)
Requirement already satisfied: botocore=1.19.56 in /usr/local/lib/python3.6/site-packages (from pyqldb)
Requirement already satisfied: ionhash=1.1.0 in /usr/local/lib/python3.6/site-packages (from pyqldb)
Requirement already satisfied: six in /usr/local/lib/python3.6/site-packages (from amazon.ion=0.7.0->pyqldb)
Requirement already satisfied: s3transfer=0.3.0 in /usr/local/lib/python3.6/site-packages (from boto3=1.16.56->pyqldb)
Requirement already satisfied: jmespath=0.7.1 in /usr/local/lib/python3.6/site-packages (from boto3=1.16.56->pyqldb)
Requirement already satisfied: python-dateutil=2.1 in /usr/local/lib/python3.6/site-packages (from botocore=1.19.56->pyqldb)
Requirement already satisfied: urllib3=1.25.4 in /usr/local/lib/python3.6/site-packages (from botocore=1.19.56->pyqldb)
Installing collected packages: amazon.ion, pyqldb
  Found existing installation: amazon.ion 0.5.0
    Uninstalling amazon.ion-0.5.0:
      Successfully uninstalled amazon.ion-0.5.0
  Running setup.py install for amazon.ion ... done
  Running setup.py install for pyqldb ... done
Successfully installed amazon.ion-0.7.0 pyqldb-3.1.0

Load one more time.
$ cat load.py
import json
from pyqldb.config.retry_config import RetryConfig
from pyqldb.driver.qldb_driver import QldbDriver

# Configure retry limit to 3
retry_config = RetryConfig(retry_limit=3)

# Initialize the driver
print("Initializing the driver")
qldb_driver = QldbDriver("demo", retry_config=retry_config)

def insert_record(transaction_executor, table, values):
  print("Inserting into {}".format(table))
  transaction_executor.execute_statement("INSERT INTO {} ?".format(table), values)

table="sample"
with open('sample.json') as f:
  data=json.load(f)

qldb_driver.execute_lambda(lambda x: insert_record(x, table, data))

$ python load.py
Initializing the driver
Inserting into sample

And done, I've got my JSON-extracted MySQL 8 data in QLDB. I go to vet it in the client, and boy, I didn't expect yet another package screw-up. Clearly, these two AWS Python packages are incompatible. That calls for a venv, but I'm now at double my desired time to show this.

$ qldbshell -l demo
Traceback (most recent call last):
  File "/usr/local/bin/qldbshell", line 11, in <module>
    load_entry_point('qldbshell==1.2.0', 'console_scripts', 'qldbshell')()
  File "/usr/lib/python3.6/site-packages/pkg_resources/__init__.py", line 476, in load_entry_point
    return get_distribution(dist).load_entry_point(group, name)
  File "/usr/lib/python3.6/site-packages/pkg_resources/__init__.py", line 2700, in load_entry_point
    return ep.load()
  File "/usr/lib/python3.6/site-packages/pkg_resources/__init__.py", line 2318, in load
    return self.resolve()
  File "/usr/lib/python3.6/site-packages/pkg_resources/__init__.py", line 2324, in resolve
    module = __import__(self.module_name, fromlist=['__name__'], level=0)
  File "/usr/local/lib/python3.6/site-packages/qldbshell/__main__.py", line 25, in <module>
    from pyqldb.driver.pooled_qldb_driver import PooledQldbDriver
ModuleNotFoundError: No module named 'pyqldb.driver.pooled_qldb_driver'
[centos@ip-10-204-101-224] ~
$ pip list | grep qldbshell
DEPRECATION: The default format will switch to columns in the future. You can use --format=(legacy|columns) (or define a format=(legacy|columns) in your pip.conf under the [list] section) to disable this warning.
qldbshell (1.2.0)
$ sudo pip uninstall qldbshell pyqldb
$ sudo pip install qldbshell
WARNING: Running pip install with root privileges is generally not a good idea. Try `pip3 install --user` instead.
Collecting qldbshell
  Downloading https://artifactory.lifion.oneadp.com/artifactory/api/pypi/pypi/packages/packages/0f/f7/fe984d797e0882c5e141a4888709ae958eb8c48007a23e94000507439f83/qldbshell-1.2.0.tar.gz (68kB)
    100% |████████████████████████████████| 71kB 43.4MB/s
Requirement already satisfied: boto3>=1.9.237 in /usr/local/lib/python3.6/site-packages (from qldbshell)
Requirement already satisfied: amazon.ion=0.5.0 in /usr/local/lib/python3.6/site-packages (from qldbshell)
Requirement already satisfied: prompt_toolkit=3.0.5 in /usr/local/lib/python3.6/site-packages (from qldbshell)
Requirement already satisfied: ionhash~=1.1.0 in /usr/local/lib/python3.6/site-packages (from qldbshell)
Requirement already satisfied: s3transfer=0.3.0 in /usr/local/lib/python3.6/site-packages (from boto3>=1.9.237->qldbshell)
Requirement already satisfied: botocore=1.20.21 in /usr/local/lib/python3.6/site-packages (from boto3>=1.9.237->qldbshell)
Requirement already satisfied: jmespath=0.7.1 in /usr/local/lib/python3.6/site-packages (from boto3>=1.9.237->qldbshell)
Requirement already satisfied: six in /usr/local/lib/python3.6/site-packages (from amazon.ion=0.5.0->qldbshell)
Requirement already satisfied: wcwidth in /usr/local/lib/python3.6/site-packages (from prompt_toolkit=3.0.5->qldbshell)
Requirement already satisfied: python-dateutil=2.1 in /usr/local/lib/python3.6/site-packages (from botocore=1.20.21->boto3>=1.9.237->qldbshell)
Requirement already satisfied: urllib3=1.25.4 in /usr/local/lib/python3.6/site-packages (from botocore=1.20.21->boto3>=1.9.237->qldbshell)
Installing collected packages: qldbshell
  Running setup.py install for qldbshell ... done
Successfully installed qldbshell-1.2.0

Can I see my data now?

$ qldbshell -l demo
Welcome to the Amazon QLDB Shell version 1.2.0
Use 'start' to initiate and interact with a transaction. 'commit' and 'abort' to commit or abort a transaction.
Use 'start; statement 1; statement 2; commit; start; statement 3; commit' to create transactions non-interactively.
Use 'help' for the help section.
All other commands will be interpreted as PartiQL statements until the 'exit' or 'quit' command is issued.
qldbshell > select * from sample;
INFO:
{ id: 1, name: "Demo Row", location: "USA", domain: null },
{ id: 1, name: "Demo Row", location: "USA", domain: null },
{ id: "1", name: "Demo Row", location: "USA", domain: "" },
{ id: 3, name: "Kiwi", location: "NZ", domain: null },
{ id: 2, name: "Row 2", location: "AUS", domain: "news.com.au" },
{ id: 3, name: "Kiwi", location: "NZ", domain: null },
{ id: 2, name: "Row 2", location: "AUS", domain: "news.com.au" }
INFO: (0.0815s)

And yes, data. I see it's duplicated, so somewhere in those 10 steps I must have run the load twice. This does highlight a known limitation of QLDB: no unique constraints.

But wait, that data is not really correct; I don't want null. Going back to the JSON, I see that the MySQL shell export produced it.

$ jq . sample.json
[
  {
    "id": 1,
    "name": "Demo Row",
    "location": "USA",
    "domain": null
  },
...

At some point I also got this load error, but by now I've given up documenting how to do something in order to demonstrate something.

NameError: name 'null' is not defined

One has to wrap the only nullable column with IFNULL(subdomain,'') as subdomain and redo all those steps again. Wrapping every column of a wider table in IFNULL is not going to be practical.

However, having spent all this time on what was supposed to be a quiet few hours on the weekend, my post is way too long, and I've learned that "Creating examples can be hard".
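An alternative to wrapping columns in IFNULL on the MySQL side might be to normalize the nulls in Python after json.load and before the insert. This is only a minimal sketch: normalize_nulls is a hypothetical helper (not part of pyqldb), the empty-string default is an assumption about what the table should hold, and the sample rows are copied from the query output above.

```python
import json


def normalize_nulls(records, default=""):
    """Return copies of the records with every None value replaced by default."""
    return [
        {key: (default if value is None else value) for key, value in record.items()}
        for record in records
    ]


# Rows shaped like sample.json; JSON null becomes Python None after json.loads.
raw = """
[
  {"id": 1, "name": "Demo Row", "location": "USA", "domain": null},
  {"id": 2, "name": "Row 2", "location": "AUS", "domain": "news.com.au"},
  {"id": 3, "name": "Kiwi", "location": "NZ", "domain": null}
]
"""

rows = normalize_nulls(json.loads(raw))
for row in rows:
    print(row)
```

In load.py the cleaned list would be passed to insert_record in place of data. This does nothing about the duplicate rows, which came from running the load more than once.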

http://ronaldbradford.com/blog/wdiltw-creating-examples-can-be-hard-2021-03-06/



Underscore.js, React without virtual DOM, and why you should use Svelte

#504 — September 4, 2020


JavaScript Weekly

Underscore.js 1.11.0: The Long Standing Functional Helper Library Goes Modular — “Underscore!?” I hear some of our longer serving readers exclaiming. Yes, it’s still around, still under active development, and still a neat project at a mere 11 years old. As of v1.11.0 every function is now in a separate module which brings treeshaking opportunities to all, but there’s also a monolithic bundle in ES module format for those who prefer that. This article goes into a lot more depth about the new modular aspects.

Jeremy Ashkenas

Mastering the Hard Parts of JavaScript — A currently 17-part blog post series written by someone taking Frontend Masters’ JavaScript: The Hard Parts course and reflecting on the exercises that have helped them learn about callbacks, prototypes, closures, and more.

Ryan Ameri

FusionAuth Now Offers Breached Password Detection and LDAP — FusionAuth is a complete identity and access management tool that saves your team time and resources. Implement complex standards like OAuth, OpenID Connect, and SAML and build out additional login features to meet compliance requirements.

FusionAuth sponsor

How Browsers May Throttle requestAnimationFrame — requestAnimationFrame is a browser API that allows code execution to be triggered before the next available frame on the device display, but it’s not a guarantee and it can be throttled. This post looks at when and why.

Matt Perry

Brahmos: Think React, But Without the VDOM — An intriguing user interface library that supports the modern React API and native templates but with no VDOM.

Brahmos

NativeScript 7.0: Create Native iOS and Android Apps with JS — A significant step forward for the framework by aligning with modern JS standards and bringing broad consistency across the whole stack. Supports Angular, Vue, and you can even use TypeScript if you prefer.

NativeScript

⚡️ Quick bytes:

🎧 The Real Talk JavaScript podcast interviewed Rich Harris of the Svelte project – well worth a listen if you want to get up to speed with why you should be paying attention to Svelte.

ESLint now has a public roadmap of what they're working on next.

You've got nine more days to develop a game for the current JS13kGames competition, if you're up for it.

VueConfTO (VueConf Toronto) are running a free virtual Vue.js conference this November.

The latest on webpack 5's release plans. Expect a final release in October.

💻 Jobs

Senior JavaScript Developer (Warsaw, Relocation Package) — Open source rich text editor used by millions of users around the world. Strong focus on code quality. Join us.

CKEDITOR

JavaScript Developer at X-Team (Remote) — Join the most energizing community for developers and work on projects for Riot Games, FOX, Sony, Coinbase, and more.

X-Team

Find a Job Through Vettery — Create a profile on Vettery to connect with hiring managers at startups and Fortune 500 companies. It's free for job-seekers.

Vettery

📚 Tutorials, Opinions and Stories