#and there's no script extender for mac

Text

Something's Wrong with Sunny Day Jack - 2.0 Demo ( V.0.3 )

Another minor update, as we creep to 1/2 of the original script+!

Now with SEVERAL additional and new scenes!

The Patreon post with the download link is available for all paying Patreon members now! ❤️💙💛

We ask all fans to remember that this is a temporary, unoptimized build. It's being built at no additional cost to show off improved graphics/new story content-- but it is a modified build of the original demo.

This demo in no way reflects the capability/file size/etc. of our current private build, and should be considered more of a content sample!

KNOWN ISSUES:

CGs absent

Windows only - TEMPORARY

File size, unoptimized

Temporary Build, not in final engine

AfterLife mode non-functional

FUTURE GOALS:

MAC BUILD

Updated CGs ( The longest taking task-- )

EXIT MAIN MENU FUNCTIONS

Extended Nick Scene

Additional AfterLife Mode episode

#somethings wrong with sunny day jack#swwsdj#sunny day jack#sdj#snaccpop studios#snaccpop#game development#visual novel#vndev#minors dni

502 notes


Note

Do you know how to mod the game? I've followed like 3 different tutorials but I'm a little dumb and keep fucking it up at some stage in the process ToT

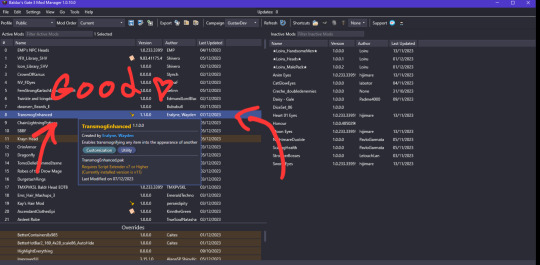

> install Baldur's Gate 3 mod manager from here

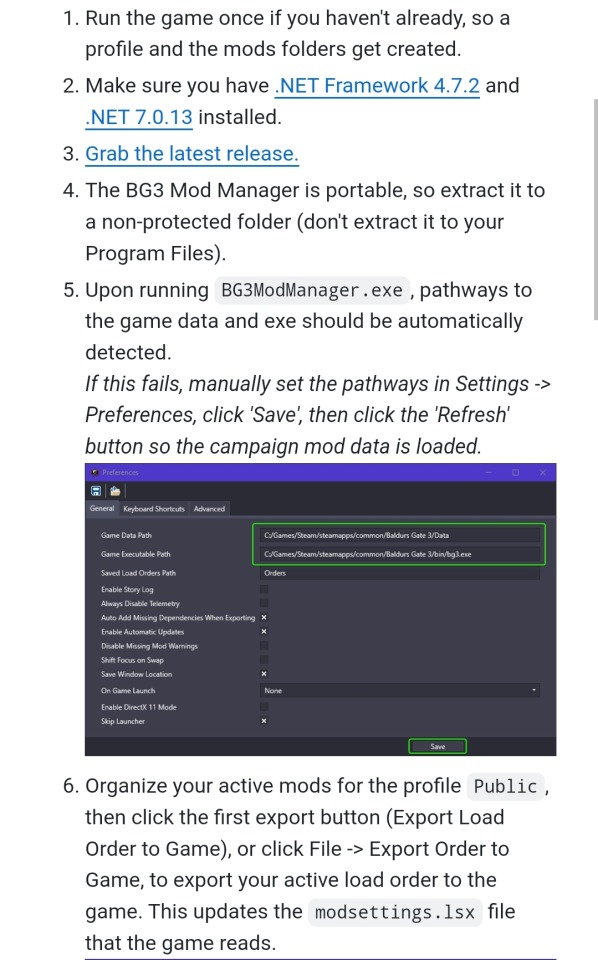

These are the important notes when installing it. The manager will detect the game path automatically

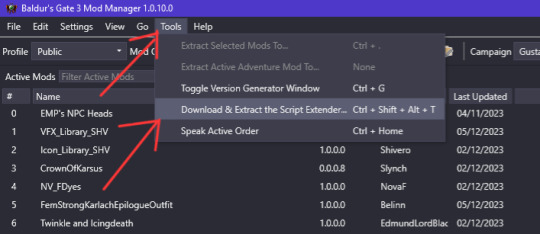

> in mod manager, go to tools in the top bar and install the script extender from there.

>find a mod you like, install the mod and the listed requirements to run the mod.

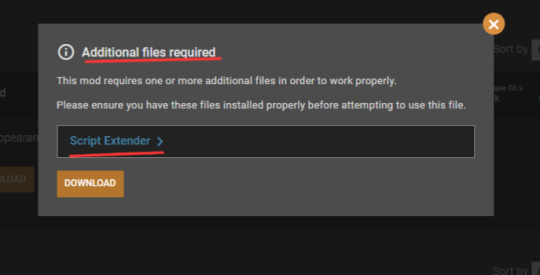

This mod only requires script extender, which we already installed from the mod manager.

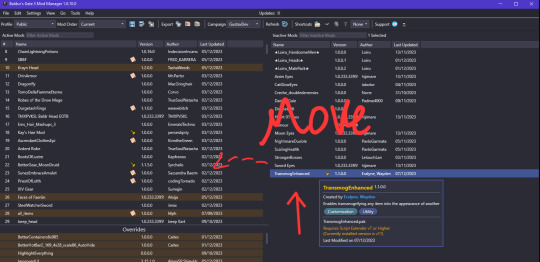

> in mod manager, go to "import" select the mods you downloaded. They will appear at the "inactive" side.

> drag them to the "active mods" side. If they don't appear at the inactive side, then the manager already added them at the bottom left.

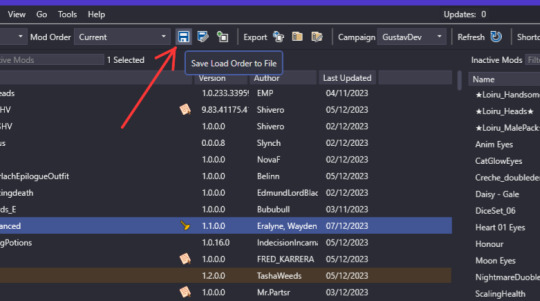

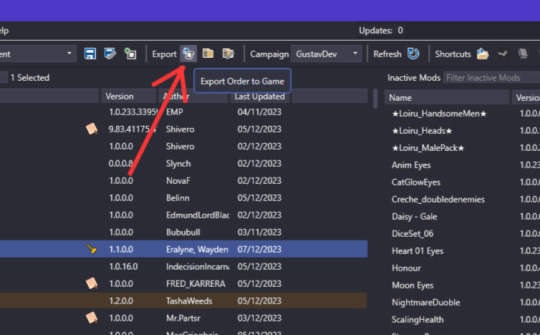

> click on the save icon, then on the export icon.

> open bg3

> the game should be modded now, if it isn't, try restarting your pc.

Is there any problem you're encountering? This modding method works for pc, I'm not sure if it works on mac.

7 notes


Text

TDSP WEEKEND UPDATE

Thank you all for your kind words on the demo! We took the feedback regarding the UI and fixed it. Again, there was no music because we are adding that last. For clarification, TDSP will only be available for PC, Mac, and Android. Why no iOS?

We can't put it on iOS because every app comes from the App Store, and if we put our sequel on there, it will get us into deep shit with copyright laws.

Bottom line: if we put it on the App Store, PB will sue our asses to the ground for copyright infringement.

The Captain had a huge meeting with the crew. We are in the home stretch, but still have a lot to do. The Captain and The First Mate agreed that if TDSP has to be extended to 2025, the project will be scrapped. Luckily, everyone is working on getting TDSP done by 2024. The Captain has come up with new jobs for her crew to do when it comes to formatting the script to make the programmers' job easier.

COLOR CODERS: Color codes the script.

EMOTION CHOOSER: Chooses what emotions the character uses.

BACKGROUND CHOOSER: Chooses what backgrounds to use

MUSIC CHOOSER: Chooses what music to use.

These are easy jobs that can be done in about an hour for each chapter, so they won't get too much in the way of everyone's daily lives.

9 notes


Note

hi im kinda new to gifing and i was wondering what you use to download/screen record episodes? ive just been screen recording with quicktime but i think its making my gifs way more pixelated especially darker scenes

hi!! so in my experience screen recording will almost always reduce the quality, so i screencap episodes instead!! basically instead of recording whatever scene(s) you want to gif, you’re screenshotting each frame. the main program i use is mplayer osx extended, and it’s available free to download online. this post explains how i do that, as well as the programs used and how to import the frames to photoshop, but ill include the relevant part here!! (ive changed how i do a lot of other things in that post but the process for this is still the same) if you already have (high quality) eps downloaded then this should help keep the quality, especially for dark gifs, but if you have more questions about where to download from message me off anon!! and if you have any other questions/if anything needs to be explained more let me know :)

basic tutorial:

i open the clip in mplayer to get the screencaps ill use to make the gif. i do this by pausing the video right before the part i want to gif, then pressing and holding command+shift+s (for mac) until ive got the full scene. if you want, you can create a folder on your desktop for screencaps, but I usually just leave them there and delete them once im done.

the next step is when i actually open photoshop. to start, go to file>scripts>load files into stack

this is where you're going to load in the screencaps you just took. go to browse, then select the first screencap and hold down shift until you select the last screencap, then hit ok

3 notes


Text

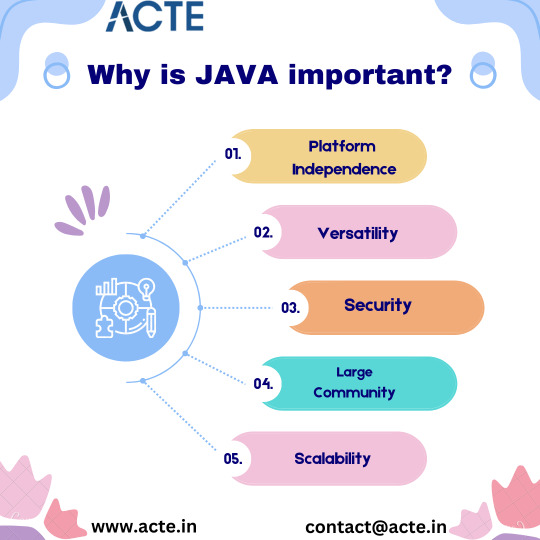

Unleashing the Power of Java: Your Guide to a Versatile Programming Language

Java: The Language of Digital Choreography

Programming languages are like the words we use to communicate with computers, and among these languages, Java stands out as a versatile and powerful tool. Imagine it as teaching your computer to dance to your tune, where your instructions are written in Java. But what makes Java so special, and why is it so widely embraced in the world of programming? Let's dive into the key reasons behind its importance.

1. Platform Independence: Java's Superpower

Java possesses a superpower—it can run on various computer systems without requiring modifications. How? Java programs are transformed into bytecode, a universal language understood by the Java Virtual Machine (JVM). This bytecode runs seamlessly on Windows, Mac, or Linux, making Java truly platform-independent.

2. Versatility: The Swiss Army Knife of Programming

Java is like a Swiss Army knife for programmers. It's not limited to one domain; you can use it to craft web applications, mobile apps, desktop software, and even embed it in small devices like smart thermostats. Its adaptability knows no bounds.

3. Security: A Robust Guardian

In an age where digital security is paramount, Java takes security seriously. It boasts built-in features that safeguard your computer and data from malicious software, providing peace of mind in our increasingly interconnected world.

4. Large Community: Your Support Network

The vast Java community is a valuable resource. With a multitude of Java developers out there, you can easily find help, access resources, and leverage libraries. If you encounter a coding challenge, chances are someone else has faced it and shared a solution.

5. Scalability: From Small to Massive

Java scales effortlessly. It's trusted by major corporations to power their colossal systems. Whether you're creating a small game or launching a massive e-commerce platform, Java can handle the challenge.

The Java Odyssey: Transforming Code into Digital Life

Understanding the inner workings of Java is paramount in the realm of programming. It unfolds as an intricate journey, a symphony of steps that bring your code to life, transforming instructions into digital ballet. Let’s delve deeper into this process:

1. Composition of Code: The Birth of Instructions

The journey commences with the composer, the programmer, crafting Java code that resonates with human understanding. It’s akin to writing the script for a grand performance, where every line of code becomes a note in the symphony of instructions.

2. Compilation: The Translator’s Artistry

Your code embarks on a transformative voyage known as compilation. This step resembles a skilled translator rendering a literary masterpiece into a universal language. The code metamorphoses into bytecode, a linguistic bridge that computers understand.

3. Bytecode: The Choreographer’s Notation

Bytecode emerges as the choreographer’s notation — a graceful set of instructions, akin to dance moves meticulously notated on a score. The Java Virtual Machine (JVM) is the dance floor, and bytecode is the choreographic masterpiece. It is platform-agnostic, a language that speaks fluently on any stage where a JVM is present.

4. Execution: The Grand Performance

The Java Virtual Machine takes center stage, assuming the role of the conductor and principal dancer. It orchestrates the grand performance by executing the bytecode on the target computer. This is where your program comes to life, faithfully translating your meticulously crafted instructions into tangible actions.

The Call to Adventure: Java Awaits

Now, are you ready to embark on the captivating adventure of Java? It beckons with the promise of exploring boundless possibilities and orchestrating digital feats. Java’s prowess extends across a myriad of applications, underpinned by its platform independence, fortified security, and the unwavering support of a vibrant community. If you harbor the eagerness to communicate with computers and guide them to perform incredible acts, Java stands as the perfect threshold.

Java is your gateway to a world where code transforms into enchanting performances, where the mundane becomes extraordinary. It is here that your journey unfolds, a journey of endless creativity and digital choreography that awaits your creative touch. Step onto the Java stage, and let your programming odyssey commence.

For those looking to deepen their understanding of Java, we strongly recommend exploring ACTE Technologies. Their qualified instructors can significantly enhance your learning journey, offering certificates and career placement opportunities. Whether you prefer online or offline access, ACTE Technologies provides a wealth of resources to help you master Java. Consider enrolling in one of their courses to embark on a step-by-step learning adventure.

#javacourse#javascript#javadevelopmentcompany#javaprogramming#javaprojects#best java developer jobs#best java training

4 notes


Text

How to Scrape Amazon Product Data using Python – A Comprehensive Guide.

Introduction

Web scraping, the process of extracting data from websites, offers businesses invaluable insights. Amazon web scraping, facilitated by tools like an Amazon data scraper or Amazon API dataset, provides access to crucial product information. This data is particularly beneficial for price comparison and market research, aiding businesses in making informed decisions.

With an Amazon ASINs data scraper, businesses can gather details on specific products, streamlining the process of obtaining accurate and up-to-date information. Amazon scraping services further enhance this capability, ensuring efficiency and accuracy. Whether for tracking prices, analyzing market trends, or optimizing strategies, the ability to scrape Amazon product data empowers businesses to stay competitive and agile in the dynamic e-commerce landscape. Harnessing this data-rich resource becomes essential for businesses seeking a comprehensive understanding of the market and seeking avenues for growth.

Understanding the Basics

Understanding the basics of scraping Amazon product data involves recognizing the importance of ethical practices. When engaging in Amazon web scraping, it is crucial to respect robots.txt directives and avoid overloading Amazon's servers. Ethical considerations extend to legal aspects, where scraping data from websites, including Amazon, must comply with terms of service and relevant laws.

Amazon data scraper tools, Amazon ASINs data scraper, and Amazon API datasets should be employed responsibly to gather information for purposes such as price comparison and market research. Adhering to ethical guidelines ensures fair usage and prevents potential legal consequences. Embracing responsible practices in Amazon scraping services not only safeguards against legal issues but also fosters a positive and sustainable approach to leveraging web data for business insights, promoting transparency and integrity in the data extraction process.

Setting Up the Environment

Setting up the environment for web scraping involves a few key steps. First, ensure Python is installed on your system. Creating a virtual environment (optional but recommended) helps manage dependencies. To do so, open a terminal and run:

python -m venv venv

Activate the virtual environment:

On Windows:

venv\Scripts\activate

On macOS/Linux:

source venv/bin/activate

Now, install the necessary libraries:

pip install requests beautifulsoup4

Here, 'requests' facilitates HTTP requests, while 'BeautifulSoup' aids in HTML parsing. With the environment set up, you're ready to commence web scraping using Python. Be mindful of ethical considerations, adhere to website terms of service, and respect any relevant guidelines, ensuring responsible and legal scraping practices.

Analyzing Amazon Product Pages

Identifying desired data points on an Amazon product page can be done effectively using browser developer tools, such as Chrome DevTools. Here's a step-by-step guide focusing on common elements:

Open Developer Tools:

Right-click on the element you want to inspect (e.g., product title) on the Amazon product page and select "Inspect" or press Ctrl+Shift+I (Windows/Linux) or Cmd+Opt+I (Mac) to open Chrome DevTools.

Locate HTML Elements:

Use the "Elements" tab to explore the HTML structure. For the product title, look for a relevant HTML tag and class, usually associated with the title. Repeat this process for other elements like price, description, image URL, rating, and number of reviews.

Copy Selectors or XPath:

Right-click on the HTML element in the "Elements" tab and select "Copy" > "Copy selector" or "Copy XPath." This copied information helps locate the element programmatically.

Utilize Developer Console:

Switch to the "Console" tab and test your selectors. For example:

document.querySelector('your-selector-for-title').textContent

This allows you to verify that the selected elements correspond to the desired data points.

Implement in Your Code:

Remember to check Amazon's terms of service, robots.txt, and legal considerations to ensure compliance while scraping data. Additionally, respect the website's policies to maintain ethical scraping practices.

Retrieving Page HTML:

To retrieve the HTML content of an Amazon product page using the requests library in Python, follow these steps:

Explanation:

Import requests:

Import the requests library to make HTTP requests.

Define Amazon Product URL:

Set the amazon_url variable to the desired Amazon product page URL.

Send GET Request:

Use requests.get(amazon_url) to send an HTTP GET request to the specified URL.

Check Status Code:

Verify that the request was successful by checking the status code (200 indicates success).

Store HTML Content:

If the request was successful, store the HTML content in the html_content variable using response.text.

Print Preview:

Optionally, print a preview of the HTML content (first 500 characters in this example) to verify successful retrieval.

Now, html_content holds the HTML of the Amazon product page, allowing you to proceed with parsing and extracting the desired data using tools like BeautifulSoup.
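The retrieval steps above can be sketched roughly as follows. This is a minimal illustration, not the original listing: the URL is a placeholder, the `fetch_html` name, the `get` parameter (which lets the function be exercised without a network call), and the 10-second timeout are all assumptions added here.

```python
import requests

# Placeholder URL (an assumption); substitute the product page you want.
amazon_url = "https://www.amazon.com/dp/EXAMPLE"


def fetch_html(url, get=requests.get, timeout=10):
    """Return the page HTML if the request succeeds (status 200), else None."""
    response = get(url, timeout=timeout)
    if response.status_code == 200:
        return response.text
    print(f"Request failed with status code {response.status_code}")
    return None


def main():
    html_content = fetch_html(amazon_url)
    if html_content is not None:
        # Preview the first 500 characters to confirm retrieval.
        print(html_content[:500])
```

Calling `main()` performs the real request. In practice a site may answer with a non-200 status or a CAPTCHA page, which is why the status check comes before the content is used.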

Scraping Product Details Using Python Libraries:

Scraping product details using Python involves parsing HTML, and the BeautifulSoup library is a powerful tool for this purpose. Follow these steps to extract desired data points:

Install BeautifulSoup:

If not installed, use the following command:

pip install beautifulsoup4

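Import Libraries:

import requests

from bs4 import BeautifulSoup

Retrieve HTML:

response = requests.get(amazon_url)

html_content = response.text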

Parse HTML with BeautifulSoup:

Create a BeautifulSoup object to parse the HTML:

soup = BeautifulSoup(html_content, 'html.parser')

Find and Extract Data Points:

Use BeautifulSoup methods like find() or select_one() to locate specific HTML elements. For example, to extract the product title:

title_element = soup.select_one('span#productTitle')

title = title_element.get_text(strip=True) if title_element else None

Repeat this process for other data points like price, description, image URL, rating, and number of reviews.

Print or Store Results:

Print or store the extracted data points:

print(f'Title: {title}')

Adapt the code for other data points by inspecting the HTML structure with browser developer tools and utilizing appropriate BeautifulSoup methods.

Product Title:

Explanation:

Send HTTP GET request and retrieve HTML: Use the requests library to fetch the HTML content of the Amazon product page.

Parse HTML with BeautifulSoup: Create a BeautifulSoup object to parse the HTML.

Find Product Title using id: Use the find method to locate the product title element with a specific id and extract its text content.

Find Product Title using CSS selector: Use the select_one method with a CSS selector to locate the product title element and extract its text content.

Print or store the extracted title: Display the product title or indicate if it was not found.

Inspect the Amazon product page's HTML structure with browser developer tools to identify the appropriate id or CSS selector for the product title. Adjust the code accordingly for other elements like price, description, etc.
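As a rough sketch of the two lookup styles described above, the snippet below finds the title once by id and once by CSS selector. It runs on a small inline HTML sample (an assumption made so the example works offline); the function names are illustrative, and `productTitle` is the sample id used in this guide, which may not match the live page.

```python
from bs4 import BeautifulSoup


def title_by_id(html_content):
    """Locate the title with find() and an id; return None if absent."""
    soup = BeautifulSoup(html_content, "html.parser")
    element = soup.find(id="productTitle")
    return element.get_text(strip=True) if element is not None else None


def title_by_selector(html_content):
    """Locate the title with select_one() and a CSS selector; return None if absent."""
    soup = BeautifulSoup(html_content, "html.parser")
    element = soup.select_one("span#productTitle")
    return element.get_text(strip=True) if element is not None else None


# Inline sample standing in for a downloaded product page.
sample_html = '<html><body><span id="productTitle">  Example Widget  </span></body></html>'

title = title_by_id(sample_html)
print(f"Title: {title}" if title else "Title not found")
```

Both functions return the same text for this sample. The `None` check matters because `find()` and `select_one()` return `None` when nothing matches.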

Product Image:

To extract the product image URL using Python and BeautifulSoup, follow these steps:

Identify Image Element:

Find Image URL with BeautifulSoup:

Here, 'img#landingImage' is a sample CSS selector, and you should adapt it based on the actual HTML structure of the image element on the Amazon product page.

Print or Store the Image URL:

Print or store the extracted image URL as needed:

Check that the image element exists (if image_element) before reading its attributes, because select_one returns None when nothing matches.

Adapt the code based on the specific HTML structure of the image element on the Amazon product page.
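One possible shape for the image step is sketched below. It assumes the image URL lives in the element's `src` attribute, and it uses the sample selector `img#landingImage` from the text; the function name and the inline HTML are illustrative only.

```python
from bs4 import BeautifulSoup


def extract_image_url(html_content, selector="img#landingImage"):
    """Return the src attribute of the first matching image, or None."""
    soup = BeautifulSoup(html_content, "html.parser")
    image_element = soup.select_one(selector)
    if image_element is None:
        return None
    # .get() returns None instead of raising if the attribute is missing.
    return image_element.get("src")


sample_html = '<div><img id="landingImage" src="https://example.com/widget.jpg"></div>'
image_url = extract_image_url(sample_html)
print(f"Image URL: {image_url}" if image_url else "Image not found")
```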

Product Description:

To extract the product description text using Python and BeautifulSoup, you can follow these steps:

Identify Description Element:

Inspect the HTML structure of the product page using browser developer tools. Locate the HTML element containing the product description, such as a <div> or a <p> tag.

Find Description Text with BeautifulSoup:

Here, 'div#productDescription' is a sample CSS selector, and you should adapt it based on the actual HTML structure of the description element on the Amazon product page.

Print or Store the Description Text:

Print or store the extracted description text as needed:

print(f'Product Description: {description_text}')

Check that the description element exists (if description_element) before reading its text, because select_one returns None when nothing matches.

Adapt the code based on the specific HTML structure of the description element on the Amazon product page.
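The description step might look something like the following sketch. It uses the sample selector `div#productDescription` from the text and joins nested text nodes with single spaces; the function name and the inline HTML are assumptions for illustration.

```python
from bs4 import BeautifulSoup


def extract_description(html_content, selector="div#productDescription"):
    """Return the description text with whitespace collapsed, or None."""
    soup = BeautifulSoup(html_content, "html.parser")
    description_element = soup.select_one(selector)
    if description_element is None:
        return None
    # Join the text of nested tags with single spaces and trim each piece.
    return description_element.get_text(separator=" ", strip=True)


sample_html = '<div id="productDescription"><p>Sturdy.</p><p>Lightweight.</p></div>'
description_text = extract_description(sample_html)
print(f"Product Description: {description_text}")
```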

Product Price:

To extract the product price using Python and BeautifulSoup, follow these steps:

Identify Price Element:

Inspect the HTML structure of the product page using browser developer tools. Locate the price element, usually represented by a <span> or <div> tag with a specific class or identifier.

Find Price with BeautifulSoup:

Here, 'span#priceblock_ourprice' is a sample CSS selector, and you should adapt it based on the actual HTML structure of the price element on the Amazon product page.

Clean and Convert Price:

Print or Store the Price:

Print or store the cleaned and converted price as needed:

Check that the price element exists (if price_element) before cleaning its text, because select_one returns None when nothing matches.

Adapt the code based on the specific HTML structure of the price element on the Amazon product page.
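For the "Clean and Convert Price" step, a minimal sketch could look like this. It assumes prices use "." as the decimal separator and "," as the thousands separator (other locales would need different handling), and `clean_price` is a hypothetical helper name.

```python
import re
from decimal import Decimal, InvalidOperation


def clean_price(price_text):
    """Convert a price string such as '$1,299.99' to a Decimal, or None."""
    if not price_text:
        return None
    # Keep only digits and the decimal point; this drops currency
    # symbols, thousands separators, and surrounding whitespace.
    digits = re.sub(r"[^\d.]", "", price_text)
    try:
        return Decimal(digits)
    except InvalidOperation:
        # Nothing numeric was left (for example "Currently unavailable").
        return None


print(clean_price("$1,299.99"))
```

`Decimal` is used rather than `float` so that prices keep their exact value when compared or summed.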

Reviews and Rating:

To extract the average rating and the number of reviews from an Amazon product page using Python and BeautifulSoup, follow these steps:

Identify Rating and Reviews Elements:

Inspect the HTML structure of the product page using browser developer tools. Locate the elements representing the average rating and the number of reviews. Typically, these may be found within <span> or <div> tags with specific classes or identifiers.

Find Rating and Reviews with BeautifulSoup:

Adapt the CSS selectors based on the actual HTML structure of the rating and reviews elements on the Amazon product page.

Clean and Convert Values:

Print or Store the Values:

Check that the rating and reviews elements exist (if rating_element and if reviews_element) before converting their text, because select_one returns None when nothing matches.

Adapt the code based on the specific HTML structure of the rating and reviews elements on the Amazon product page.
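The "Clean and Convert Values" step can be sketched with two small parsers. The input formats shown ("4.5 out of 5 stars", "1,234 ratings") are assumptions about how the extracted text might read, and both function names are hypothetical.

```python
import re


def parse_rating(rating_text):
    """Extract the leading number from text like '4.5 out of 5 stars'."""
    match = re.search(r"\d+(?:\.\d+)?", rating_text or "")
    return float(match.group()) if match else None


def parse_review_count(reviews_text):
    """Extract an integer from text like '1,234 ratings'."""
    match = re.search(r"\d[\d,]*", reviews_text or "")
    return int(match.group().replace(",", "")) if match else None


print(parse_rating("4.5 out of 5 stars"))
print(parse_review_count("1,234 ratings"))
```

Both functions return `None` for empty or missing text, so they can be called directly on the result of a guarded element lookup.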

Complete Code with Data Storage:

This code defines a function scrape_amazon_product(url) that performs all the scraping steps mentioned earlier. The extracted data is stored in a dictionary (product_data) and then written to a JSON file (amazon_product_data.json). Adjust the URL and data storage format as needed for your specific use case.
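The original listing is not reproduced here, so the following is only a sketch of what such a function might look like. The `parse_product` and `save_product` helpers are assumptions introduced to keep parsing and storage separate; the selectors are the sample ones from the earlier sections, and rating and review fields are left out because no selectors for them are given.

```python
import json

import requests
from bs4 import BeautifulSoup

# Sample selectors from the sections above; adapt them to the live page.
TEXT_SELECTORS = {
    "title": "span#productTitle",
    "price": "span#priceblock_ourprice",
    "description": "div#productDescription",
}


def parse_product(html_content):
    """Build a product_data dictionary from page HTML."""
    soup = BeautifulSoup(html_content, "html.parser")
    product_data = {}
    for field, selector in TEXT_SELECTORS.items():
        element = soup.select_one(selector)
        product_data[field] = (
            element.get_text(" ", strip=True) if element is not None else None
        )
    image_element = soup.select_one("img#landingImage")
    product_data["image_url"] = (
        image_element.get("src") if image_element is not None else None
    )
    return product_data


def save_product(product_data, path="amazon_product_data.json"):
    """Write the dictionary to a JSON file."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(product_data, f, indent=2, ensure_ascii=False)


def scrape_amazon_product(url, path="amazon_product_data.json"):
    """Fetch, parse, and store one product page; return None on failure."""
    response = requests.get(url, timeout=10)
    if response.status_code != 200:
        return None
    product_data = parse_product(response.text)
    save_product(product_data, path)
    return product_data
```

Fields whose elements are not found are stored as `null` in the JSON file rather than raising an error, which makes partial results easier to spot.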

Creating a Crawler for Product Links:

Web crawlers are automated scripts that navigate through websites, systematically fetching and parsing web pages to extract data. In the context of scraping data from multiple product pages, a web crawler can be designed to traverse a website, collecting links to individual product pages, and then extracting data from each of those pages.

To extract product links from a search results page, similar techniques as scraping individual product pages can be applied:

Identify Link Elements:

Inspect the HTML structure of the search results page using browser developer tools. Locate the HTML elements (e.g., <a> tags) that contain links to individual product pages.

Use BeautifulSoup for Parsing:

Adapt the find_all arguments ('a', {'class': 's-title-instructions-placeholder'}) based on the specific HTML structure of the link elements on the search results page.

Clean and Filter Links:

Clean and filter the extracted links to ensure only valid product page URLs are included. You may use functions to check and filter based on specific criteria.

Store or Use the Links:

Store the extracted product links in a list for further processing or use them directly in your web crawler. You can then iterate through these links to visit each product page and scrape data.

Remember to review the website's terms of service and policies to ensure compliance with ethical and legal considerations while using web crawlers.
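The "Clean and Filter Links" step could be sketched as below, operating on href strings already pulled from the link elements. It assumes product pages carry "/dp/" followed by a 10-character identifier in their path; that pattern, the base URL constant, and the function name are assumptions for illustration.

```python
import re
from urllib.parse import urljoin, urlparse

BASE_URL = "https://www.amazon.com"
# Assumption: product URLs contain "/dp/<10-character id>" in the path.
PRODUCT_PATTERN = re.compile(r"/dp/([A-Z0-9]{10})")


def clean_product_links(hrefs, base_url=BASE_URL):
    """Return de-duplicated, normalised product URLs in first-seen order."""
    seen = set()
    links = []
    for href in hrefs:
        # Resolve relative links against the site root.
        absolute = urljoin(base_url, href)
        match = PRODUCT_PATTERN.search(urlparse(absolute).path)
        if not match:
            continue  # not a product page
        product_id = match.group(1)
        if product_id in seen:
            continue  # same product linked more than once
        seen.add(product_id)
        links.append(f"{base_url}/dp/{product_id}")
    return links


sample_hrefs = [
    "/Example-Widget/dp/B000000001/ref=sr_1_1?keywords=x",
    "https://www.amazon.com/dp/B000000001",
    "/gp/help/customer",
    "/dp/B000000002",
]
print(clean_product_links(sample_hrefs))
```

Normalising every link to the same short form drops tracking parameters and makes it easy to avoid visiting the same product twice.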

Challenges of Amazon Scraping:

Scraping data from Amazon poses several challenges due to its dynamic structure and efforts to prevent automated data extraction. Some key challenges include:

Frequent Website Structure Changes:

Amazon regularly updates its website structure, making it challenging to maintain stable scraping scripts. Changes in HTML structure, class names, or element identifiers can break existing scrapers.

Solutions:

Regularly monitor and update scraping scripts to adapt to any structural changes.

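Anchor selectors on stable attributes such as element ids rather than long positional paths, whether you write them as CSS selectors or XPath, since attribute-based selectors tend to survive layout changes better.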

Consider utilizing the Amazon Product Advertising API, which provides a structured and stable interface for accessing product data.

Implementing Anti-scraping Measures:

Amazon employs anti-scraping measures to protect its data from automated extraction. These measures include CAPTCHAs, rate limiting, and IP blocking, making it challenging to scrape data efficiently.

Solutions:

Rotate user agents: Use a pool of user agents to mimic different browsers and devices.

Respect robots.txt: Adhere to the guidelines specified in Amazon's robots.txt file to avoid potential issues.

Implement delays: Introduce random delays between requests to avoid triggering rate limits. This helps in simulating human-like browsing behavior.

Use headless browsers: Mimic user interactions by employing headless browsers, which can handle JavaScript-rendered content and reduce the likelihood of encountering CAPTCHAs.
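Two of the points above, respecting robots.txt and adding random delays, can be sketched with the standard library alone. The robots.txt content, the user agent string, the delay bounds, and the `fetch` callable are all placeholders; in real use the rules would come from the site's actual robots.txt file.

```python
import random
import time
from urllib.robotparser import RobotFileParser


def build_robot_parser(robots_txt):
    """Create a parser from robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def polite_delay(min_seconds=2.0, max_seconds=5.0, sleep=time.sleep):
    """Pause for a random interval and return the number of seconds chosen."""
    delay = random.uniform(min_seconds, max_seconds)
    sleep(delay)
    return delay


def crawl(urls, parser, fetch, user_agent="example-bot", sleep=time.sleep):
    """Fetch only the URLs robots.txt allows, pausing after each request."""
    pages = {}
    for url in urls:
        if not parser.can_fetch(user_agent, url):
            continue  # disallowed by robots.txt, so skip it
        pages[url] = fetch(url)
        polite_delay(sleep=sleep)
    return pages


# Placeholder rules standing in for a real robots.txt file.
sample_robots = "User-agent: *\nDisallow: /private/\n"
robots = build_robot_parser(sample_robots)
print(robots.can_fetch("example-bot", "https://example.com/public/page"))
```

The `sleep` parameter exists only so the pause can be replaced during testing; by default the functions really wait between requests.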

Proxy and IP Blocking:

Amazon may block IP addresses that exhibit suspicious behavior or excessive requests, hindering the scraping process.

Solutions:

Use rotating proxies: Rotate through a pool of proxies to distribute requests and reduce the likelihood of IP blocking.

Monitor IP health: Regularly check the health of proxies to ensure they are not blacklisted.

Utilize residential IPs: Residential IPs are less likely to be detected as a part of a scraping operation.

Legal and Ethical Considerations:

Scraping Amazon data without permission can violate Amazon's terms of service and potentially lead to legal consequences.

Solutions:

Review and adhere to Amazon's terms of service and policies.

Consider using official APIs if available, as they provide a sanctioned means of accessing data.

Opt for responsible scraping practices, respecting the website's limitations and guidelines.

Handling CAPTCHAs:

CAPTCHAs are a common anti-scraping measure that can interrupt automated scraping processes and require human interaction.

Solutions:

Implement CAPTCHA-solving services: Use third-party services to solve CAPTCHAs, but ensure compliance with legal and ethical standards.

Use headless browsers: Because they render pages the way a regular browser does, headless browsers may trigger CAPTCHAs less often than bare HTTP requests, although they do not solve CAPTCHAs by themselves.

Navigating these challenges requires a combination of technical expertise, continuous monitoring, and adherence to ethical and legal standards. Employing best practices and staying informed about changes in the scraping landscape are essential for successful and sustainable Amazon scraping.

Amazon Data Collection Using Web Scraping API

Web scraping APIs serve as an alternative approach to manual scraping by providing a structured and programmatic way to access and extract data from websites. These APIs are designed to simplify the data extraction process, offering pre-defined endpoints and responses for retrieving specific information from a website.

Advantages of Using a Web Scraping API:

Structured Data Access:

APIs offer structured endpoints for accessing data, reducing the complexity of manual scraping code.

Data is provided in a consistent format, making it easier to integrate into applications and databases.

Reduced Maintenance:

API endpoints are more stable than HTML structures, reducing the need for frequent updates to scraping scripts due to website changes.

Faster Development:

APIs streamline the development process by providing a clear interface for data retrieval, saving time compared to manually parsing HTML.

Compliance with Website Policies:

Some websites encourage the use of their APIs for data access, ensuring compliance with terms of service and avoiding legal issues associated with unauthorized scraping.

Less Resource Intensive:

API requests are often less resource-intensive compared to loading entire web pages, resulting in faster and more efficient data extraction.

Disadvantages of Using a Web Scraping API:

Limited Data Access:

APIs may provide limited access to data compared to what is available through manual scraping, as they expose only specific endpoints defined by the website.

API Rate Limits:

Websites often impose rate limits on API requests to prevent abuse. This can slow down data extraction, especially for large-scale projects.

Authentication Requirements:

Some APIs require authentication, making it necessary to obtain and manage API keys, tokens, or credentials.

Costs:

While some APIs are free, others may have associated costs based on usage. High-volume data extraction could lead to increased expenses.

Dependence on Third-Party Providers:

Relying on third-party APIs introduces a dependency on the provider's reliability and availability. Changes or discontinuation of an API can impact data collection.

Conclusion

Web scraping serves as a robust method for extracting valuable data from Amazon product pages, empowering businesses with insights for activities such as price comparison and market research. However, the process comes with its challenges, including frequent website structural changes and anti-scraping measures.

Navigating these challenges requires adaptive strategies and adherence to ethical scraping practices, such as respecting website policies and implementing techniques like rotating user agents and introducing delays between requests.

For those seeking a structured and programmatic approach, web scraping APIs offer an alternative, providing advantages like structured data access and reduced maintenance. The Real Data API stands as a reliable solution for scraping Amazon product data, offering services for Amazon web scraping, ASINs data scraping, and access to Amazon API datasets, facilitating tasks like price comparison and market research.

As users engage in web scraping, it is crucial to uphold responsible practices, respecting legal considerations and contributing to the sustainability of the web data extraction ecosystem. Additional resources, tutorials, and guides can aid in further learning and exploration of web scraping and API integration. Explore the Real Data API for an efficient and ethical approach to Amazon data collection, ensuring reliable and compliant extraction for diverse needs.

Know More: https://www.realdataapi.com/scrape-amazon-product-data-using-python.php

#AmazonProductDataScraping#AmazonScrapingServices#AmazonASINDataScraper#AmazonDataScraper#AmazonAPIDataSet

0 notes

Link

#AHL#AmericanHockeyLeague#BridgeportIslanders#ECHL#HartfordWolfPack#HersheyBears#LehighValleyPhantoms#NationalHockeyLeague#NewYorkRangers#TotalMortgageArena#XLCenter

0 notes

Text

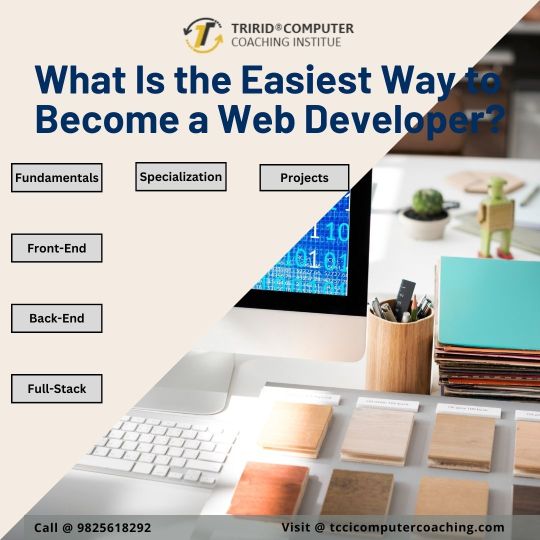

The easiest way to become a web developer is by learning HTML, CSS, JavaScript, Angular, Bootstrap, React, WordPress, ASP.Net, Java, PHP, Python etc.

HTML (Hypertext Markup Language) is the standard markup language for creating web pages and web applications. HTML describes the structure of web pages using markup, and HTML elements are the building blocks of HTML pages.

CSS is an important way to control how your Web pages look. It controls colors, typography, and the size and placement of elements and images. But it can be very difficult to learn CSS, and some people would rather not learn it.

JavaScript is a lightweight, cross-platform, object-based scripting language. It is useful for validating input in the web browser without a round trip to the server, and for manipulating the HTML DOM and CSS while a user is interacting with a web page.

Angular is a structural framework for dynamic web apps. It lets you use HTML as your template language and lets you extend HTML’s syntax to express your application’s components clearly and succinctly.

Bootstrap is a huge collection of reusable and very useful code snippets for developers. It is a front end development framework, written in HTML, CSS and JavaScript. Bootstrap allows designers and developers to quickly create fully responsive websites.

With React, you can develop your own reusable components, which you can think of as independent Lego blocks. Each component is an individual piece of the interface.

WordPress is a capable semantic publishing platform, and it comes with a rich set of features designed to make your experience as a publisher on the Internet as simple, pleasant, and appealing as possible.

ASP.NET is Microsoft's web framework built on .NET. The .NET Framework is a software component that runs on the Windows operating system, and programmers produce software by combining their source code with the .NET Framework and other libraries.

Java technology is used to develop applications for a wide range of environments, from consumer devices to heterogeneous enterprise systems. In this section, get a high-level view of the Java platform and its components.

Python is an interpreted programming language that supports multiple operating systems such as Windows, Linux, and Mac. Code developed in Python can run on any platform without recompilation.
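As a small illustration of that portability, here is a minimal sketch that uses only the standard library. The function name `describe_platform` and the `notes/todo.txt` path are made up for this example. The same file should run unchanged on Windows, Linux, and macOS, provided a Python interpreter is installed.

```python
import platform
from pathlib import Path


def describe_platform() -> str:
    """Return a short description of the OS this script is running on."""
    # platform.system() reports e.g. "Windows", "Linux", or "Darwin" (macOS).
    return f"Running on {platform.system()} with Python {platform.python_version()}"


# pathlib picks the right path separator for the current OS,
# so this line needs no edits when moving between systems.
notes_file = Path("notes") / "todo.txt"  # hypothetical path, for illustration only

print(describe_platform())
print(notes_file)
```

The printed path will use backslashes on Windows and forward slashes elsewhere, while the source code stays the same.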

TCCI provides the best training in web programming through different learning methods/media. It is located in Bopal, Ahmedabad, and on ISCON Ambli Road in Ahmedabad.

For More Information:

Call us @ +91 9825618292

Visit us @ http://tccicomputercoaching.com

#computer classes in bopal Ahmedabad#computer classes in ISKON Ambli Road Ahmedabad#web developer classes in bopal Ahmedabad#web developer classes in ISKON Ambli Road Ahmedabad#computer course in ISKON Ahmedabad

0 notes

Text

Best New Techno (P/D): October 2023 by Beatport

- Artists: Beatport

DATE CREATED: 2023-09-29

GENRES: Hard Techno, Techno (Peak Time / Driving)

Tracklist :

1. Marcel Fengler - Cypher(Original Mix)

2. Leopold Bär - Pretty Rise Device(Original Mix)

3. Inafekt - Rattle(Original Mix)

4. TiM TASTE - Control(Original Mix)

5. Matrefakt - Dimension(Original Mix)

6. Melvin Spix, Jeremy Wahab - Phantom(Original Mix)

7. Rabo, Traumata - Arohae(Original Mix)

8. Lazar (IT), Jurgen Degener - Arayashiki(Original Mix)

9. Black Dave - Soul Searcher(Original Mix)

10. Joyhauser - LIGHTBRINGERS(Original mix)

11. KILL SCRIPT - THE FUTURE(EXTENDED MIX)

12. DM-Theory, ZRAK - Wizzy Dizzy(Tecnica remix)

13. Saol Nua - Inn Deep(Original Mix)

14. RSRRCT - Touch the Stratosphere(Original Mix)

15. Sofian - Reminder(Original Mix)

16. Marbs - Yamaiay(Original Mix)

17. Kos:mo - Be Strong(Original Mix)

18. NANCY Live - Slant(Original Mix)

19. Heerhorst, Gizmo & Mac - Solar System(Heerhorst Mix)

20. Sandro Mure - Tribal War(Original

Read the full article

0 notes

Text

Python Course in Ghaziabad- Understanding Why Learning Python is a Smart Move

The need to learn Python is best illustrated by the unusual popularity of this language over the past few years. But what is it caused by? Python, the language taught in the Python Full Stack Course in Ghaziabad, is distinguished by its unique combination of simplicity and adaptability. Unlike many other programming languages, Python is ideal for beginners, even children. Furthermore, owing to a huge number of ready-made modules, it has tremendous problem-solving capabilities. Let's briefly list its advantages; without knowing them, it is impossible to understand what kind of language it is.

Why Learn Python

1. Universal: You can create anything on it. These are web development, application programs, games, plug-ins for various software, scripts for process automation, microcontroller programming, etc.

2. Advanced areas of programming related to artificial intelligence: big data analysis, machine learning, and neural networks.

Cars are a prime example. First, there was the automatic transmission, then cruise control, and now the development of unmanned vehicles is already in full swing. Today, few people are surprised by the capabilities of face recognition systems or by global robotization, and the areas of application of artificial intelligence can no longer be counted. It is clear that the development horizon of these areas stretches many decades ahead, and it is the Python language that dominates them.

3. Cross-platform: Python applications work seamlessly on Windows, Unix, and Mac OS.

4. A huge number of ready-made free modules, such as the Django framework.

5. Good performance: It is lower than C++ but higher than PHP. This allowed Python to take a leading position in web development in such a short time.

6. High speed of creating applications, unlike the same Java or C ++: Python was originally focused on the speed of writing code and its readability. That is why its syntax, in comparison with other languages, is more understandable to beginners.

7. Demand for Python developers: Statistics show a shortage of such specialists, including even juniors. Even Google and Yandex used Python when creating their search engines; the same applies to the largest social networks and other Internet giants.

8. Great as a first programming language: Even schoolchildren are now learning Python.

And these are just some of the advantages of this language. But even these are enough to make the need to learn Python obvious.

In Conclusion

Python's popularity as a programming language cannot be overstated in a world driven by technological progress. Python's broad success stems from its unmatched blend of simplicity and adaptability. Python's capabilities extend beyond web development to artificial intelligence, making it the go-to choice for ambitious programmers. As we go forward into a technologically driven future, the need to understand Python becomes ever more evident. Through a Python Full Stack Course in Ghaziabad, you may go on a journey of coding excellence to harness its boundless potential and impact the digital environment.

For details about the ethical hacking training institute in Ghaziabad, please visit our website: caddcentreghaziabad.com/

1 note

·

View note

Text

Best Node.js Open Source Projects in GitHub in 2023

If you’re curious about discovering the best open-source projects in the Node.js domain, this article walks you through the best Node.js open-source projects on GitHub in 2023.

A Brief History of Node.js

Let’s start with the inception of Node.js.

Ryan Dahl created Node.js in 2009 as a response to the limitations of existing servers at that time, which could not handle high-volume concurrent connections. Node.js enabled developers to use JavaScript for server-side scripting; as a result, developers could build web applications using a single language.

Initially, Node.js was exclusively available for Linux and Mac operating systems. However, in June 2011, Microsoft and Joyent collaborated to develop a native Windows version of Node.js, thereby extending its compatibility to Windows operating systems.

Node.js served as the foundation for the development of various frameworks and libraries, including Express, Socket.io, Hapi, Koa, and Ghost. In addition to libraries and frameworks, many tools were built upon Node.js to enhance its functionality and cater to different use cases.

Subsequently, Node.js expanded its capabilities in 2017 with the inclusion of HTTP/2 support. These advancements led to a surge in the number of projects developed around Node.js, with approximately 80,000 open-source projects currently on the internet.

What is Node.js?

Node.js is a server-side JavaScript runtime built on the Google Chrome V8 engine. It is an open-source, single-threaded, cross-platform environment that provides fast execution of JavaScript programs and allows JavaScript to run outside the browser. Because it uses an event-driven, non-blocking I/O architecture, it is widely popular among web app developers. On the whole, it has gained immense popularity, leading to the development of numerous open-source projects centered around it.

Use Cases of Node.js

Node.js is a versatile platform that can be utilized for creating various software solutions, including web applications, APIs, microservices, real-time chat applications like instant messaging apps, command line tools, streaming applications, IoT applications, and desktop applications compatible with multiple platforms.

Best Node.js GitHub Open Source Projects

In this article, we have included these metrics in figuring out the best projects.

Stars: The project has more than 10,000 stars on GitHub. The number of stars is often indicative of a framework’s popularity and the level of interest it receives from developers.

Active community: We have taken into account projects that have an active and engaged community. An active community indicates that the project is well-supported and has a vibrant community of developers who contribute to its growth and improvement.

Use cases: Also, we have considered the popularity and relevance of a project in various use cases. A widely used and well-suited project for multiple use cases indicates its versatility and potential to address different development needs.

Read more at https://www.brilworks.com/blog/best-node-js-open-source-projects-in-github/

0 notes

Text

Amazon.com: Portable Monitor 17.3 Inch 144HZ 2K Gaming Computer Monitor for Laptop with VESA 1MS AMD FreeSync HDR Frameless Ultra Slim Screen Extender Monitor Portable for Mac PS5 Xbox Switch Steam Deck : Electronics

https://www.amazon.com/dp/B07XDDY7TS/ref=sspa_dk_detail_4?pd_rd_i=B07XDDY7TS&pd_rd_w=s4MZs&content-id=amzn1.sym.0d1092dc-81bb-493f-8769-d5c802257e94&pf_rd_p=0d1092dc-81bb-493f-8769-d5c802257e94&pf_rd_r=ZTRDA1X6MATB9W9GT23D&pd_rd_wg=klGaI&pd_rd_r=c1324586-018d-4ae4-b81a-9daeb8b1f8ce&s=pc&sp_csd=d2lkZ2V0TmFtZT1zcF9kZXRhaWwy&th=1#:~:text=%3Ciframe%20sandbox%3D%22allow,link_opens_in_new_window%3Dtrue%22%3E%3C/iframe%3E

0 notes

Text

Saturday Morning Coffee

Good morning!

Welp, let’s see what kind of links I set aside this week. I feel like it’s pretty varied. Enjoy! ☕️

Vice

People in Utah who visit Pornhub will now be greeted by adult performer Cherie DeVille asking them to tell their representatives to change their age verification law.

I’ll bet VPN usage just went through the roof. Hopefully all VPN service providers offered a discount to sign up this week. If they didn’t use the discount code “Utah” they blew it. 😆

The Verge

By Monday, CNN anchor Jake Tapper would ask his guests to respond to a statement made by Sen. Brian Schatz, the first — but not only — US senator on Bluesky. “Senator Brian Schatz, just, uh, skeeted, on Bluesky,” said Tapper live on air before reading the skeet out loud.

So I mused yesterday on Mastodon that I thought the word skeet didn’t make sense but bravo to the person or persons who made it stick.

Well, someone clued me in on what skeet means. Darned kids and their made up words. 😳

Paul Lefebvre

I had recalled reading in the past that the Mac Pro CPU might also be upgradeable, but I had never looked into it before.

This is a fun read! There was a time when I’d repair and upgrade my own PCs and I really enjoyed it. Apple has never been great at doing this but at least created a few Macs that could be opened and repaired or extended with other hardware. Give Paul’s piece a read. It’s a nice little adventure and he’s improved an old Mac with a new CPU.

Like I said above, Apple is horrible about creating a nice computer you can open and repair; when they do, it’s rare. Then once they do manage to make one they don’t make a way for you to really upgrade it. It’s a real shame folks dropped large sums of money on the latest Mac Pro only to have M1 and M2 based machines outstrip it.

Also, can someone take a Trash Can Mac and fill it with M2 Mac Mini guts? It might pose some challenges but I’d love to see one. The Trash Can is a beautiful artifact at this point and it would be nice to see it revived.

The Hollywood Reporter

Top Hollywood writers took to social media to express defiant support for their first union walkout in 15 years and showed their feelings about studios being unwilling to meet their contract demands.

Enjoy your scripted shows while you can. I’ve heard some shows have already shut down production.

Good luck to the Writers Guild of America. I hope you’re able to negotiate favorable terms. 🤞🏼

Vice

Adobe is warning some owners of its Creative Cloud software applications that they’re no longer allowed to use older versions of the software.

When you purchase software you don’t really own it, you’ve purchased a license to use it. That license can be revoked at any time but I’ve never experienced it.

For someone like me a really old version of Photoshop — pre Creative Cloud — would probably work just fine in a VM but folks who run small shops and don’t want to upgrade for their own reasons shouldn’t be punished. Just my horrible two cents on the matter.

Semafor

Zephyr, the only trans member of the Montana legislature, was barred from the House floor and gallery last week after facing backlash from Republicans during the debate over a bill that prohibits gender-affirming medical care for transgender minors.

I really hope Zephyr is allowed back on the floor and is allowed to represent her constituents properly.

Good luck Zephyr! 🍀

Elizabeth Valentine Haste

Under normal circumstances, I would be forbidden from discussing this. In fact, I am bound by NDA not to disclose many of the specific details of my experience at Rune Labs, but thanks to Washington’s Silenced No More Act (RCW 49.44.211), my ability to speak about discrimination, harassment, or retaliation that I have witnessed in the workplace is legally protected.

I had the pleasure of working with Elizabeth — we called her Val — at WillowTree and she’s very good at her job and is a fine, fine, person. Hire her.

Val, if for some reason you stumble across this come back to WillowTree! 🙏🏼

Aarthi & Sriram’s Podcast

Anders Hejlsberg is special - he is someone we idolized since we were teenagers, heard whispers and stories about his prowess and feats in our formative years at Microsoft.

The man created four extremely popular programming languages; Turbo Pascal, Delphi, C#, and TypeScript. That’s a crazy wonderful resume!

To create a single language used by millions and millions of developers would be a once in a lifetime achievement, but four times? Simply incredible.

Hey, if you have to work in JavaScript (sorry!) at least Microsoft was able to tame some of the ugliness of JavaScript and make it more productive.

Electrify America CEO Rob Barrosa’s Coast-to-Coast Road Trip

The above link will take you to YouTube for the video. It’s not that long and is a real joy to watch, at least for me it was. I will point out WillowTree works with Electrify America but I’d still think this was cool even without that relationship. It’s nice to see more and more electric charging stations crop up across America.

The route he chose to take was an interesting one. It takes him through Chicago on a more northerly path. Interstate 40 is a pretty straight shot across the country that I’ve driven many times.

It makes me wonder if he took this route because I-40 doesn’t have enough Electrify America charging stations to get you across America?

One of these days we have to try the route he took. Perhaps spend some time in Chicago?

Amazon Prime Video Blog

The initial version of our service consisted of distributed components that were orchestrated by AWS Step Functions. The two most expensive operations in terms of cost were the orchestration workflow and when data passed between distributed components. To address this, we moved all components into a single process to keep the data transfer within the process memory, which also simplified the orchestration logic.

I love reading about teams improving performance of their software and this is a particularly interesting read because they also lower the cost of their service by 90%! That’s a crazy good improvement.

Now if they could stabilize the Amazon app on Roku that would be amazing. 😃

Jalopnik

The 2024 Trax is Chevrolet’s bid for the lower end of the new car market in the U.S. In fact, the Trax is now going to be the cheapest car Chevy sells in America, which brings the General hope that the new and improved version of its entry-level crossover can turn a first-time Chevy buyer into a lifelong customer.

Our daughter has a Trax and it’s a great little car I love to drive when I get the chance. It’s small and really zippy! It’s just fun to drive.

I hope this 3-cylinder model is a great success so Chevrolet keeps making it.

Hey, Chevy, while you’re at it how ‘bout you make the Trax into a World Rally Championship car? Seems like it would be a great fit!

Ars Technica

After using System76’s Pangolin as my primary work laptop for nearly six weeks, I can tell you this: If you need a 15-inch Linux-focused laptop, this is the one to get.

If I were to invest in a PC I believe I’d go for a System76 box. It seems they’d make for a great little programming box. Plenty of horsepower to handle C++ and C# dev tools and compiling.

Question: Why do all PC trackpads suck? I mean like really suck. Every one I’ve ever used has been a real pain. The Mac, no doubt, has the best trackpad in the business.

News Thump

After Florida governor Ron DeSantis signed into law a ban on abortion after 6 weeks, Taliban officials in Afghanistan have welcomed the move and praised the regressive thinking behind it.

I really hope this is true because we are seeing states in our Union beginning to turn themselves into women and LGBTQ+ hating theocracies.

Seems fitting the Taliban would praise them and DeSantis is a real piece of work worthy of export to Afghanistan. Maybe we can exchange him for some wonderful women looking to get a great education?

I hope you enjoy your weekend.

0 notes

Text

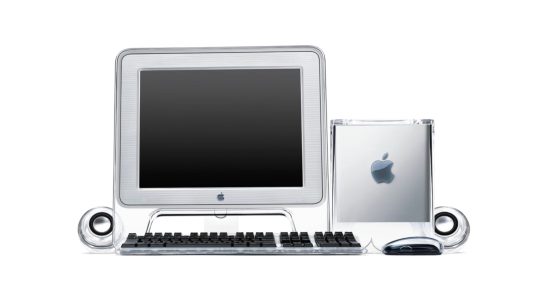

CEXUAL MISHAPS

I rarely buy new. One's junk is my treasure, or so I thought -- sometimes junk is junk -- and my latest adventure taught me many lessons in upCEXing. Let me share the wisdom I inadvertently acquired dealing with CEX and old technology for the modern times.

This is a guide for whoever is looking to buy a used Mac and get the best value for a limited budget. Having done a lot of research during this bumpy adventure, this “essay” will answer the following existential questions:

• Can a Mac Pro shaped like a trashcan do the job today?

• Why dust off an old FireWire iSight camera and how?

• Is a third party SSD blade worth the trouble?

• Which budget display comes close to Apple’s retina experience?

• Why choose an Intel Mac over a new M1 or M2 “affordable” and more powerful Mac Mini?

• Is CEX’s 24-month warranty risk-free?

My iMac 5K (late 2014) is dying. It's had three open heart surgeries (logic board replacements) within the first three years of its extended warranty. A few months after the warranty ended, I had to replace the power supply for a third party part which actually fixed several USB/Bluetooth/audio streaming dropout issues I had had from the start, issues that Apple’s geniuses attributed to software. A genius by script is a fool. Now, the iMac's wonderful screen's usable space is shrinking by the month, a beige fog thickening from the edges in, creating a widening trench where ghosts appear and fade away very, very slowly. The screen defects coupled with hardware errors that point to a bad core prompted me to look for a replacement machine. It had to be Intel. Apple's new M1 and M2 computers offer great power but no upgradeable route for the future, and many of my favourite software plugins are Intel only.

Initially I looked for a used Intel Mac Mini, which has just been discontinued by Apple with the introduction of their new M2 Mini. It had to be an i7. Sadly they go for a great lump of dough and they are highly desirable, therefore hard to find -- I love recycling, but hate the chase... During my search, I stumbled across the "trashcan" Mac Pro. To my surprise, people can’t seem to shift them on the second-hand market -- not desirable, therefore cheap and easy to find -- my kind of bargain.

I always wanted a "trashcan" Mac Pro. I once had the Power Mac G4 Cube, and find the late 2013 Pro (not so affectionately dubbed ‘trashcan’) a direct successor to my beloved Cube. It’s more than nostalgia, I assure you.

Power Mac G4 Cube 2000 - 2001

I chose CEX for their 24 month peace-of-mind warranty, and set the sat-nav for the closest Trashcan Pro available. The unit was pristine! I drove back home drooling, almost foaming at the mouth, I even resisted stopping for a toilet break -- I was the owner of the legendary "trashcan" Mac Pro! YES! The same one that went for £5K when new! I paid for it all in trade! BARGAIN!

Except it wasn't a bargain at all.

You saw that coming, of course; why would I write about happy times? The Trashcan Pro was trash. This would be the start of ongoing trials and tribulations which I hope to be done with by the time I finish writing this.

Mac Pro (Late 2013) 2013 - 2019

At first glance, the Mac Pro (late 2013) may appear like a step back from my iMac 5K (late 2014) but it actually supports a newer OS (Monterey vs the iMac's Big Sur). The Mac Pro is also still officially supported by Apple whilst the iMac became obsolete a few years back. Also, the Mac Pro's guts, including the CPU, can be upgraded up to a 12 core CPU, 128 GB RAM vs my iMac which is already maxed out with a consumer grade CPU (i7 4core), 32 GB RAM, 4 GB VRAM. It seemed like the right upgrade in theory, but I would not find out with a defective unit.

CEX has a 14-day return policy and a 24-month peace-of-mind warranty, YIPPEE!

I chose a different model located further away (London) and opted for delivery. Eventually, after an agonising week trying to rouse CEX out of their stupor (the item remained at 'stock-picking-in-progress' for five days), I received a unit that had surely gone through hell and back: scratches, discolouration, sticky spillage, dust, dust, dust, and I'm not talking fine dust; I pulled dust-bunnies from the grills the width of my pinky toe.

CEX has a 14-day return policy and a 24-month peace-of-mind warranty, so I gave it a chance.

Lesson starts here, my friends…

• SSD, or how to upgrade an old MAC’s storage.

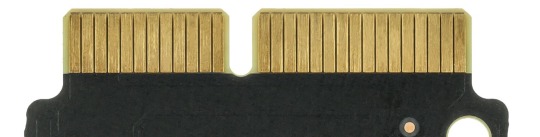

Sadly, the Mac Pro came with a third party 512 GB SSD fitted with an adapter.

Adapters - buy cheap buy thrice!

Between 2012 and 2019, Apple used two types of connector for their SSD blades, both proprietary. In order to use a third party blade, one would need an adapter. The part is widely available and cheap. Thankfully? No. Apple rarely does things willy nilly. In order to play nice with the Mac, the SSD itself needs to comply with the Mac's and its OS' requirements, ECC being the start of many geeky terms I won't bore you with. So you think you can use any SSD with the said adapter? No. No you cannot. It might work for a little while, but you'll soon encounter issues with basic functionality. You'll see the blank folder-of-doom with the question mark. You'll have to zap the PRAM in order to force it to detect the SSD, which can only be done with a wired keyboard, I should add (I love "vintage", but I'm not a fan of wires). Any Mac OS update that necessitates a restart will struggle to complete and leave you sitting at a blank screen hoping that another PRAM ZAP will do the trick. Beyond that, every time you turn the Mac off, the adapter will cause a kernel panic and have the Mac instantly reboot instead, punishing you for modding it with a cheap hack.

☒ 2012 - mid 2013 7-17 pin SSD APPLE connector

☑︎ Mid 2013 - 2019 12-16 pin SSD APPLE connector

Cometh OWC, the Mac friendly people who offer a solution at a premium price, of course. Their Aura Pro X2 blades offer speeds the Mac's old architecture can realise, but at 4x the price of SSD blades on the market, I decided to look secondhand. Remember CEX and their peace-of-mind warranty?

CEX happened to have a 1 TB OWC at a bargain price -- oh goody! Several days later, the order got cancelled. Apparently they couldn't dispatch the item, no further explanation given, and the item returned online for the taking. I tried again. I'm still waiting. I expect the same outcome, but I have decided to play along and see where that leads me.

In the meantime, I discovered that Apple used three manufacturers for their SSD blades: Samsung, Toshiba and Sandisk. They used two different connectors: 7-17 pin between 2012 and up to mid 2013, and 12-16 pin from mid 2013 up to 2019. Technically, it's possible to source an OEM Apple part! Good news! CEX, 14 day, 24 month. Can’t go wrong… Unless the item remains in stock picking limbo and ends in cancellation. The OEM Samsung did not return in-stock, however, and I’m still waiting on the OWC...

Cometh eBay. £45 shipping included, Apple OEM made by Samsung, 512 GB, apparently in good condition. I should get it in 48 hours. More on the SSD saga later.

• DISPLAY for MAC peeps.

Coming from a 5K retina display at 218 PPI which looks like ink on paper -- no apparent pixelation -- I could not go for the common sub 100 PPI display. High pixel density monitors are not easy to find, mainly because the PC market caters to gamers (fast refresh rate performance over quality). You'll find plenty of 4K screens at 120 Hz, but what about PPI?

Well...

Research led me to a 2016 LG 24inch 4K display which offers 185PPI and a DisplayPort. LG has since updated the model, rebranding it under their UltraFine line, which only adds a 1 PPI “advantage” over the old one (186PPI)... But with the newer model using USB-C connectivity, and being twice as expensive, the old model it is! CEX, 14-day, 24-month warranty… But there’s a snag… They don't ship displays. Collect only.
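For anyone who wants to check a secondhand display before driving seventy miles for it, PPI is simple arithmetic: the diagonal resolution in pixels divided by the diagonal size in inches. Here is a minimal sketch. The exact panel figures are my assumptions rather than something stated above: I'm taking the "24 inch" 4K panel as 3840 x 2160 on a 23.8 inch diagonal, and the iMac 5K as 5120 x 2880 on a 27 inch diagonal.

```python
import math


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density = diagonal resolution in pixels / diagonal size in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


# Assumed panel figures (see the note above): resolution and true diagonal size.
lg_4k = pixels_per_inch(3840, 2160, 23.8)
imac_5k = pixels_per_inch(5120, 2880, 27.0)

print(f"LG 4K: {lg_4k:.0f} PPI")
print(f"iMac 5K: {imac_5k:.0f} PPI")
```

With those assumed figures the results round to 185 and 218 PPI, in line with the numbers quoted here.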

The nearest one of the three available was in Bradford, seventy miles from my door. I love an adventure — DAY TRIP! Bradford is a massive crater illuminated to the brim with rows and rows of fairylights, like standing at the heart of a gigantic beehive breeding glowing larvae. I enjoyed the sight. The CEX employees were lovely. I discovered Dunkin' Donuts had infiltrated the UK too! Bonus! I got a £10 box of 6 (3 maple, 3 Boston creams) possibly for the first and last time, at least on this side of the pond. Dunkin’ Donuts isn’t worth nearly £2 a piece. But I felt the rush of the adventure, and the fruition of a successful bargain hunt raised my spirits.

CEX was too busy to let me test the display. 14 day. 24 month. Can return it to any store. High spirits. Sugar rush. I chose the countryside roads. Sundown. Narrow lanes. No street light. Peaks, twists, the thickest fog I have ever encountered. An omen.

Home, safe, the display turned out to be complete garbage: rows of dead pixels; wide long “burn marks” or "smears" with a bubble on the physical surface of the screen. I began to ask myself what a 14-day / 24-month warranty is worth if everything I buy from CEX is unusable?! I promptly returned the display at my local CEX. Despite it all, spellbound by their 14-day / 24-month warranty, I gave them another chance. What are the odds that a second display would turn out as bad as the first? Sheffield? Crystal Peaks? Haven’t been in a while, why not… I waited for the online confirmation the next day, but got a cancellation notice instead. Apparently, the display can’t be located.

(…)

Cometh eBay. Pristine condition. No warranty, but fuss-free. Lovely seller. Unit like new. Why was he selling such a wonderful display? Because he is using Windows, and Windows can’t scale a high pixel density display like the Mac does. My gain. Cheaper than CEX’s. Jackpot!

One last piece of the puzzle missing, though…

• SSD saga continued…

After a week, the 1 TB OWC gets cancelled at CEX on the day I received the 512 GB Apple OEM from eBay. I could finally test my new Trashcan Pro properly.

Let’s gather up the gear I’m playing with first.

My "new" kit

• Mac Pro (Late 2013) 3.5 GHz 6-Core Intel Xeon E5 with dual AMD FirePro D500 3 GB

£380 from CEX

I added 2x 16 GB 1866 MHz DDR3, leaving 2 empty slots for later IF the Mac Pro is a good egg. The good egg gets more ram. The bad egg goes back to CEX and I rethink the whole thing.

I switched the problematic third party SSD with an Apple OEM (Samsung) 512 GB SSD, bypassing the need for an unsupported adapter.

• LG 24inch 4K UHD IPS LED 24UD58-B DISPLAY

£130 on eBay

As close to Apple’s retina display as a budget monitor can get. I’m very pleased with this purchase. I work with it at a 3008 x 1692 scale. My eyes can’t focus at a close enough distance from the screen to notice any discernible pixelation. At 185 PPI versus the iMac’s 218 PPI, the window round edges aren’t as smooth, but by a narrow margin. You’d need to be as hopelessly finicky as me to notice.

• Accessories

Magic keyboard + trackpad (space grey), Apple HI-FI (more on that), ORICO SSD 10 Gbps enclosure + various USB3 external HDDs, Apple iSight camera (more on that).

First, I dressed the Apple OEM SSD with a layer of thermal tape then formatted it in online recovery mode (command + option + R on restart), and installed Monterey from scratch. Since, the Mac Pro has behaved like a Mac should.

LESSON #1: don’t use third party SSD with adapter.

I should add that I tested 3 different adapters -- all resulted in a less than desirable outcome. Unless you prevent your Mac from ever going to sleep and never, EVER restart it -- I can’t recommend a third party solution.

Orico 10 Gbps SSD enclosure

Since I had bought a WD 1 TB “green” SSD to test the setup with, I opted for an ORICO SSD enclosure for fast external storage, which brings us to the Mac Pro’s older connections problem.

However tempting it is to get a Thunderbolt 2 enclosure, HDD, RAID, etc… DON’T! I already had a G-TECH raid for my iMac 5K, and the external SSD enclosure proved faster despite being limited by the Mac Pro’s USB3 speed (5 Gbps versus Thunderbolt 2’s 20 Gbps) which comes down to the mechanical HDD’s limitation versus the SSD’s. And yes, the SSD on USB3 outperformed the 7200RPM RAID 0 speed on both small and large files using Blackmagic’s speed test software.
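To put rough numbers on why the slower port can still win, here is a back-of-the-envelope sketch. The link rates are the ones quoted above; the drive speeds are illustrative assumptions of mine (a typical SATA-class SSD and two striped 7200 RPM disks), not measurements from my own setup, and protocol overhead is ignored.

```python
def gbps_to_mb_per_s(gbps: float) -> float:
    """Convert a link rate in gigabits per second to megabytes per second."""
    return gbps * 1000 / 8


# Raw link ceilings for the two ports (overhead ignored).
usb3_ceiling = gbps_to_mb_per_s(5)   # USB 3 at 5 Gbps
tb2_ceiling = gbps_to_mb_per_s(20)   # Thunderbolt 2 at 20 Gbps

# Assumed sustained drive speeds in MB/s, for illustration only.
sata_ssd_speed = 500
hdd_raid0_speed = 2 * 180  # two 7200 RPM disks striped in RAID 0


def effective_speed(drive_mb_s: float, link_mb_s: float) -> float:
    """A transfer can go no faster than the slower of the drive and the link."""
    return min(drive_mb_s, link_mb_s)


print("SSD over USB 3:", effective_speed(sata_ssd_speed, usb3_ceiling), "MB/s")
print("HDD RAID 0 over Thunderbolt 2:", effective_speed(hdd_raid0_speed, tb2_ceiling), "MB/s")
```

Under these assumptions the spinning disks never get near the Thunderbolt 2 ceiling, so the bottleneck is the drive, not the port.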

LESSON #2: don’t waste your time and money searching for a Thunderbolt 2 solution.

Many RAID enclosures or external HDDs with Thunderbolt 2 are now too old to be trusted, and unless the unit is designed for the Mac, their software won’t be supported with the latest Mac OS. My G-Tech works with the Mac’s own disk utility tool, but others don’t and will be formatted for Windows.

Apple Hi-FI still rockin'

Speakers… I have 2 HomePod Minis that I wrestled with for a while. I used them -- or tried to use them -- as a stereo pair. Ideally I wanted to reproduce the look of the old Power Mac G4 Cube with its gorgeous spherical speakers (see G4 Cube picture at the very top). But the HomePod Minis never worked as expected with the iMac 5K nor with the Mac Pro. I suspect it comes down to the older bluetooth 4 as the HomePod Minis play well with my Macbook Air M1 (bluetooth 5). Never mind, my Apple HI-FI still works and the Mac Pro has an optical sound output. The HI-FI is a fabulous boombox. They are hard to find and fetch a fortune, but I’m lucky to have gotten one back then. Apple’s HI-FI truly sounds amazing.

LESSON #3: old gear is fun

Apple iSight Camera 2003 - 2006

Speaking of legacy products… I love Apple’s old iSight camera. With the iMac 5K sporting a superior, integrated cam, I benched the FireWire iSight camera and never thought I’d use it again… Except the Mac Pro doesn’t come with a webcam, and since I already have to stare at LG’s generic plastic design all day, I thought of resurrecting the old iSight for a bit of Apple chic.

But it has FireWire 400, I hear you say dismissively?

Thunderbolt to FireWire 800 + FW800 - FW400 adapters

I found Apple’s Thunderbolt to FireWire adapter on Amazon for a reasonable price, £2 more than the cheapest one on eBay or CEX. With a £3 FW800 to FW400 adapter, my iSight camera is standing proud above all this “new” kit and working fine. Nostalgia? No! Not “entirely”! I call it recycling. Good for the environment, and I look better in standard definition too!

• SO IS IT WORTH ALL THE FUSS?!!!

Or is it Mac-fanatic indulgence? The Mac Pro actually outperforms the iMac 5K on many things, and I got quite lucky. As it turns out, the first Mac Pro I returned was manufactured in February 2014, not long after the model was first introduced. The second one, which is running smoooooothly so far, was manufactured in October 2018, not long before the model was discontinued. How can you find out when your secondhand Mac was manufactured? Here’s the link for you.

PROS

• Runs Monterey like a top

• Faster with multi-thread computation (particularly with video encoding) than many newer Macs

• Standalone unit — if it goes bust, I still have a working screen — if the screen dies, I still have the Mac Pro

• Upgradable to impressive specs - CPU - RAM

• Can boot with Windows unlike the M1/M2 Macs

• Compatibility with existing software

• Still handles the latest pro apps without breaking a sweat

CONS

• Very slightly slower on everyday single-thread tasks such as surfing and mail (more cores = lower GHz)

• Output: Thunderbolt 2 (legacy Mini DisplayPort connection), USB 3 (5 Gbps), HDMI 1.4 (30Hz @ 4K), Bluetooth 4

• Expensive eGPU expansion only

Right, enough geeking about for a while. I do hope this has helped some of you.

Text

iso to dmg poweriso

While Macs don't come with a dedicated image editing application, you can use both iPhoto and Preview to do a couple of basic tasks. Preview is very useful for quickly cropping, resizing and compressing images. A typical job is to open an image, crop it to a particular area, resize it, and then save it as a compressed file suitable for email or Web display.

Images can affect reputation management. Unflattering images can truly hurt you. Optimizing your images can help push any images you would rather the public not see off the results pages.

We don't want to work on the original version of the photograph, so we are going to duplicate the layer by selecting LAYER from the menu bar, then DUPLICATE LAYER. You should see two thumbnails of your photograph on the right side of the screen in the floating panel.

If you were to look at two pictures taken of the same scene, one in JPEG and one in RAW (without any post-processing), you might think the JPEG image looks better, and you wouldn't be wrong. However, if you were to look at a RAW image with post-processing work done, you would think that it was the better image. Why?

If you use a tripod when taking an image, you should be able to push the print resolution below the recommended 300ppi, which will allow you to obtain a larger print.
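
As a rough illustration of why a lower ppi gives a larger print, the sketch below divides pixel dimensions by print resolution. The 6000 x 4000 pixel photo and the 200ppi figure are made-up example values, and the function name is hypothetical.

```python
def print_size_inches(width_px, height_px, ppi):
    """Return the (width, height) of a print in inches at a given resolution."""
    return (width_px / ppi, height_px / ppi)

# Hypothetical 6000 x 4000 pixel photo printed at two resolutions
for ppi in (300, 200):
    width_in, height_in = print_size_inches(6000, 4000, ppi)
    print(f"{ppi} ppi: {width_in:.1f} x {height_in:.1f} inches")
```

The same file covers more paper at the lower resolution, so the trade-off is print size against how closely the print can be viewed.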

Google can see the text in your Flash files. Google takes a snippet of it and can then index that file. Google can read sites scripted with AS1 and AS2.

Also known as "file extensions" because they live at the end of a filename, after the "dot." Kind of like the last quotation mark in that last sentence. In many cases you, as a user, never actually see the extension, but believe me, it's there. File extensions are the reason a Word file you name "bobs files version 3.23" is most likely not going to work. Here's a list of the more common ones, and how they are used in the pre-press process.
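
To see why that filename causes trouble, here is a small Python sketch using the standard library's `pathlib`. It shows how software that reads the text after the last dot would interpret the two names. The first filename is a made-up example.

```python
from pathlib import Path

# "brochure.docx" is a hypothetical well-formed name; the second is the one above.
for name in ["brochure.docx", "bobs files version 3.23"]:
    suffix = Path(name).suffix  # everything from the last dot onward
    print(f"{name!r} -> extension {suffix!r}")
```

For the second name, the part after the last dot is `.23`, which no application recognizes as a Word document.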

Adding an adjustment layer and dragging the Highlight slider to the start of the histogram will extend the dynamic range and make the highlights and midtones appear brighter. Your dramatic and colorful background is now complete.
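
As a rough idea of what moving the Highlight slider does to the numbers, the sketch below remaps brightness values so that a chosen white point becomes pure white. It is a simplified linear stretch under assumed 8-bit values, not how any particular editor implements its levels tool. The function name and pixel values are made up.

```python
def stretch_highlights(pixels, white_point):
    """Remap 8-bit brightness values so that `white_point` becomes 255."""
    return [min(255, round(p * 255 / white_point)) for p in pixels]

dull = [10, 60, 120, 180]  # made-up values; the brightest pixel is only 180
print(stretch_highlights(dull, white_point=180))  # [14, 85, 170, 255]
```

Every value moves up, with the midtones and highlights gaining the most, and the image now uses the full range up to 255.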
