

So I was just watching some reviews of the Growing Together build/buy objects and apparently this pavilion from the Isle of Volpe in Henford-on-Bagley is among the new pack’s debug objects?? Which is interesting because it’s not actually in the Cottage Living debug catalogue (I was looking for it the other day and was a bit disappointed it wasn’t included)...

(The second screenshot is taken from Simproved’s debug overview on YouTube: “What’s HIDDEN in GROWING TOGETHER? | Sims 4”, minute 11:13)

#it's definitely cool to have it available in debug because it would be a great thing to use for certain builds#on the other hand I find it a bit strange they'd be re-using such a rather specific object for a second world#like it seems to be made for this specific neighbourhood in Henford and its characteristic atmosphere#and just adding it to a different world with a very different feel to it seems a bit odd??#and they also seem to have done this with some objects from Vampires and Realm of Magic#but well I don't know yet where in San Sequoia these things are actually placed#maybe it makes more sense than I'm imagining now#I guess it's mainly bugging me that it's not also included in Cottage living debug#anyway#just rambling#the sims 4#sims 4#ts4#the sims 4 growing together#sims 4 growing together#ts4 growing together#growing together


The Sims 4 Realm of Magic - Early Access Build/Buy Review

Huge thank you to the EA Game Changer Program for providing me with the opportunity to play the Realm of Magic Game Pack early!

I feel like I always say this about new packs, but the build/buy stuff in this pack made me completely forget about the things I was disappointed in with CAS! Don’t get me wrong, build/buy has its issues as well, but... well, it kind of worked in this pack’s favour when it comes to the buy objects. That’ll make sense in a minute, I promise lol

Anon will be back on for the next few days so if you have any questions about Realm of Magic, feel free to send me an ask! ✨

See a preview of all the new Build/Buy items here

✨The Build

There isn’t really much to say about the build catalogue that I haven’t said in pretty much every early access review I’ve ever done. The stuff is great, amazing actually, but there need to be more variations. Most of the doors are for medium height walls, but most of the windows are for shorter walls? I know you can use shorter windows on taller walls, but they look too small next to doors made for those taller walls.

One day I would love to see the Sims team actually release a FULL set of build items. Like doors and windows made for all wall heights, in three, two and one tile variations, fences with matching stair railings, columns that not only match the rest of the build set but also the new spandrels and friezes that come with them. I just wish whoever was in charge of making these things thought a little bit further than “Ooh pretty door! That’s finished, now onto the one window; I’m gonna make it a different height completely and not matching colours at all.”

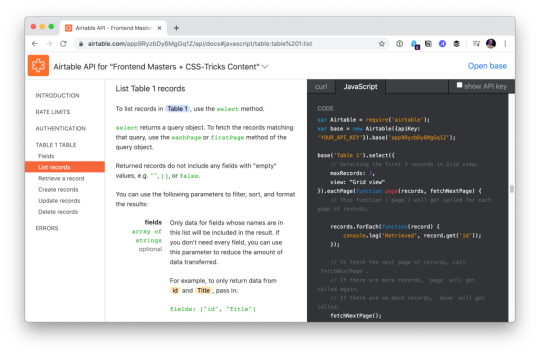

Speaking of not matching colours... the biggest pet peeve I have — that you guys are probably getting sick of hearing me go on and on about — is that nothing matches!

With this pack we get four new walls and two new floors... NONE of which match! You would assume that the wood floor matches the wall with the wood paneling on the bottom, but it doesn’t; the swatches are completely different. Or maybe you’d assume the brick floor goes with the two brick walls? You’d be wrong there too; again completely different swatches, and the two brick walls don’t even match each other. And as for the tiled wall (the one with the half blue top), there are no new tiles and it doesn’t match anything else we currently have available in base game or any of the packs. It’s almost like all of the floors and walls were made independently of one another and weren’t actually supposed to match... So that’s neat, and yet not at all unusual when it comes to the Sims.

Oh and before I forget, there’s a weird double-sided glitch happening since the release of the last patch where either columns aren’t reaching the full height of the walls, so you get a gap at the top of them, or they’re reaching too high and clip through the bottom of the next floor. If this is happening to you, check out this tweet from SimGuruNick for a temporary fix until they can figure out what’s happening and fix it in an upcoming patch 😊

✨The Buy

Listen, buy mode is definitely not without its issues either. Once again we don’t have a cabinet to match the new counters, there are SEVERAL swatches missing or wrong on many different objects, some of the furniture swatches don’t match others they’re supposed to (e.g. a dark brown swatch on all but one display case, which has a black swatch instead), the big bookcase has a slot (which it doesn’t need) that is for some reason three tiles behind it, you can see ambient occlusion shadows through one of the living chairs (like CC lol), the list goes on...

BUT

There are objects that came with base game that work perfectly with all of the new stuff, which I have NEVER used before, that I found myself using as I was building with the new pack! And even though no cabinet came with the new counters, a stove and fridge did! And the cabinets from the Vampires pack that I love but never get to use work really well with them too!

Also, look at the preview pics up there, look how many different wands and brooms there are to collect! THERE’S A MAGIC MOP TO RIDE! And all the new books! And the portals, that you can use like elevators to get around your lot! And a “magical” bassinet that would also work perfectly for anyone playing historical challenges too! And the familiars (the things that look like crystal balls)! OH THE FAMILIARS! You’ll see more of them in my gameplay pics, but trust me, LeafBat alone is worth buying this pack! And don’t even get me started on all the cool things that are in the debug menu! Broken building shells with spooky floating furniture anyone?

✨The Verdict

Once again, a pack is saved in my eyes by build/buy (and gameplay but I’ll get into that when my story starts posting lol)!

I looked at all the CAS stuff the very first day we got early access and after a few hours I closed my game and went to play Minecraft feeling a little disappointed because of all the things I mentioned in my CAS review. But the next day when I opened the game back up to check out all the build/buy stuff... Omg I had SO much fun building and decorating my very first witchy house in Glimmerbrook for a very special sim and I can’t wait to show it off to you guys a bit more once my gameplay starts posting!

All thoughts and opinions expressed in this review are my own. I am not paid by EA to “hype” their games; I am given the opportunity to review their games early in exchange for an honest review.

Click here for my Create-A-Sim Review


What is Developer Experience (DX)?

Developer Experience¹ is a term² with a self-declaring meaning — the experience of developers — but it eludes definition in the sense that people invoke it at different times for different reasons referring to different things. For instance, our own Sarah Drasner’s current job title is “VP of Developer Experience” at Netlify, so it’s a very real thing. But a job title is just one way the term is used. Let’s dig in a bit and apply it to the different ways people think about and use the term.

People think of specific companies.

I hear DX and Stripe together a lot. That makes sense. Stripe is a payment gateway company almost exclusively for developers. They are serious about providing a good experience for their customers (developers), hence “developer experience.” Just listen to Suz Hinton talk about “friction journals”, which is the idea of sitting down to use a product (like Stripe) and noting down every single little WTF moment, confusion, and frustration so that improvements can be made.

Netlify is like Stripe in this way, as is Heroku, CodePen, and any number of companies where the entire customer base is developers. For companies like this, it’s almost like DX is what UX (User Experience) is for any other company.

People think of specific technologies.

It’s common to hear DX invoked when comparing technologies. For instance, some people will say that Vue offers a better developer experience than React. (I’m not trying to start anything, I don’t even have much of an opinion on this.) They are talking about things like APIs. Perhaps the state is more intuitive to manage in one vs. the other. Or they are talking about features. I know Vue and Svelte have animation helpers built-in while React does not. But React has hooks and people generally like those. These are aspects of the DX of these technologies.

Or they might be speaking about the feeling around the tools surrounding the core technology. I know create-react-app is widely beloved, but so is the Vue CLI. React Router is hugely popular, but Vue has a router that is blessed (and maintained) by the core team which offers a certain feeling of trust.

```shell
vue create hello-world
npx create-react-app my-app
```

I’m not using JavaScript frameworks/libraries as just any random example. I hear people talk about DX as it relates to JavaScript more than anything else — which could be due to the people in my circles, but it feels notable.

People think of the world around the technology.

Everybody thinks good docs are important. There is no such thing as a technology that is better than another but has much worse docs. The one with the better docs is better overall because it has better docs. That’s not the technology itself; that’s the world around it.

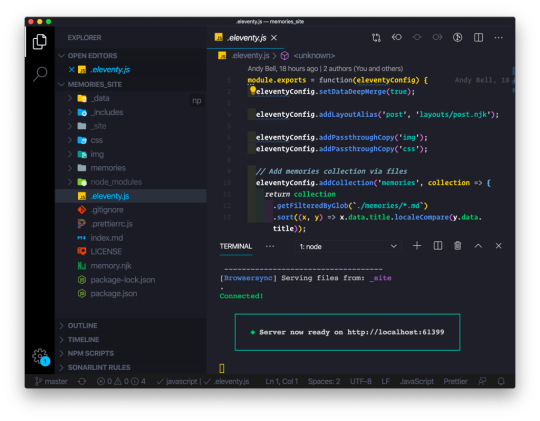

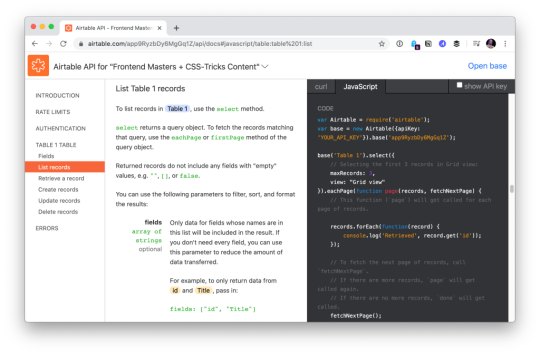

Have you ever seen a developer product with an API, and when you view the docs for the API while logged in, it uses API keys and data and settings from your own account to demonstrate? That’s extraordinary to me. That feels like DX to me.

Airtable docs showing me API usage with my own data.

“Make the right thing easy,” notes Jake Dohm.

That word, easy, feels highly related to DX. Technologies that make things easy are technologies with good DX. In usage as well as in understanding. How easily (and quickly) can I understand what your technology does and what I can do with it?

What the technology does is often only half of the story. The happy path might be great, but what happens when it breaks or errors? How is the error reporting and logging? I think of Apollo and GraphQL here in my own experience. It’s such a great technology, but the error reporting feels horrendous in that it’s very difficult to track down even stuff like typos triggering errors in development.
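One widely used remedy for the typo problem is an error that names the closest valid option. Below is a minimal sketch using Python's standard `difflib`; the `FIELDS` set and the `get_field` helper are hypothetical stand-ins, not part of Apollo or any GraphQL library:

```python
import difflib

# Hypothetical schema: the only field names a caller may ask for.
FIELDS = {"title", "author", "published_at", "tags"}


def get_field(record: dict, name: str):
    """Return record[name], failing loudly (with a suggestion) on unknown names."""
    if name not in FIELDS:
        # Find the single closest known field name, if any is similar enough.
        hints = difflib.get_close_matches(name, sorted(FIELDS), n=1)
        hint = f" Did you mean '{hints[0]}'?" if hints else ""
        raise ValueError(f"Unknown field '{name}'.{hint}")
    return record.get(name)
```

Calling `get_field({"title": "DX"}, "tilte")` raises `ValueError: Unknown field 'tilte'. Did you mean 'title'?`, which turns a head-scratcher into a one-line fix.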

What is the debugging story like? Are there special tools for it? The same goes for testing. These things are fundamental DX issues.

People think of technology offerings.

For instance, a technology might be “good” already. Say it has an API that developers like. Then it starts offering a CLI. That’s (generally) a DX improvement, because it opens up doors for developers who prefer working in that world and who build processes around it.

I think of things like Netlify Dev here. They already have this great platform and then say, here, you can run it all on your own machine too. That’s taking DX seriously.

One aspect of Netlify Dev that is nice: The terminal command to start my local dev environment across all my sites on Netlify, regardless of what technology powers them, is the same: `netlify dev`

Having a dedicated CLI is almost always a good DX step, assuming it is well done and maintained. I remember WordPress before WP-CLI, and now lots of documentation just assumes you’re using it. I wasn’t even aware Cloudinary had a CLI until the other day when I needed it and was pleasantly surprised that it was there. I remember when npm scripts started taking over the world. (What would npm be without a CLI?) We used to have a variety of different task runners, but now it’s largely assumed a project has run commands built into the package.json that you use to do anything the project needs to do.
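Part of what makes a command like `netlify dev` pleasant is a single, predictable entry point. As a rough illustration only (this is not how Netlify's CLI is built), here is a minimal subcommand-style CLI using Python's standard `argparse`; the `mytool` name, the `dev` and `build` subcommands, and the default port are all hypothetical:

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical project CLI: one memorable verb per common task."""
    parser = argparse.ArgumentParser(prog="mytool", description="Hypothetical project CLI")
    sub = parser.add_subparsers(dest="command", required=True)

    # `mytool dev`: start a local development server.
    dev = sub.add_parser("dev", help="start a local development server")
    dev.add_argument("--port", type=int, default=8000, help="port to listen on")

    # `mytool build`: produce a production build.
    sub.add_parser("build", help="create a production build")
    return parser
```

With this parser, `mytool dev --port 3000` parses to `command='dev', port=3000`, and the same verbs work no matter what the project uses underneath.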

Melanie Sumner thinks of CLIs immediately as core DX.

People think of the literal experience of coding.

There is nothing more directly DX than the experience of typing code into code editing software and running it. That’s what “coding” is and that’s what developers do. It’s no wonder that developers take that experience seriously and are constantly trying to improve it for themselves and their teams. I think of things like VS Code in how it’s essentially the DX of it that has made it so dominant in the code editing space in such a short time. VS Code does all kinds of things that developers like, does them well, does them fast, and allows for a very wide degree of customization.

TypeScript keeps growing in popularity no doubt in part due to the experience it offers within VS Code. TypeScript literally helps you code better by showing you, for example, what functions need as parameters, and making it hard to do the wrong thing.

Then there is the experience outside the editor, in the browser itself. Years ago, I wrote Style Injection is for Winners, where my point was that, as a CSS developer, the experience of saving CSS code and seeing the changes instantly in the browser is a DX you definitely want to have. That concept lives on and has grown to cover JavaScript as well, where “hot reloading” is goosebump-worthy.
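Dev servers that do style injection or hot reloading typically listen for file-system events and push updates to the browser over a WebSocket. The core idea, noticing a save and reacting to it, can be sketched with simple polling. This is a toy under that simplifying assumption; `StyleWatcher` and its callback are hypothetical names:

```python
import os
from pathlib import Path
from typing import Callable


class StyleWatcher:
    """Toy change detector: remembers a file's modification time and calls
    `on_change` with the new contents whenever that time changes."""

    def __init__(self, path: str, on_change: Callable[[str], None]):
        self.path = Path(path)
        self.on_change = on_change
        self.last_mtime = os.stat(self.path).st_mtime_ns

    def poll(self) -> bool:
        """Check once; return True if a change was detected and handled."""
        mtime = os.stat(self.path).st_mtime_ns
        if mtime == self.last_mtime:
            return False
        self.last_mtime = mtime
        # In a real tool, this is where the new CSS would be pushed to the page.
        self.on_change(self.path.read_text())
        return True
```

A dev server would call `poll()` in a loop with a short sleep; the payoff is the same either way: save, and the browser updates without a manual refresh.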

The difference between a poor developer environment (no IDE help, slow saves, manual refreshes, slow pipelines) and a great developer environment (fancy editor assistance, hot reloading, fast everything) is startling. A good developer environment, good DX, makes you a better and more productive programmer.

People compare it to user experience (UX).

There is a strong negative connotation to DX sometimes. It arises when people blame DX for coming at the cost of user experience.

I think of things like client-side developer-only libraries. Think of the classic library that everyone loves to dunk on: Moment.js. Moment allows you to manipulate dates in JavaScript, and is often used client-side to do that. Users don’t care if you have a fancy API available to manipulate dates. That is entirely a developer convenience. So, you ship this library for yourself (good DX) at the cost of slowing down the website (bad UX). Most client-side JavaScript is in this category.

Equally as often, people connect developer experience and user experience. If developers are empowered and effective, that will “trickle down” to produce good software, the theory goes.

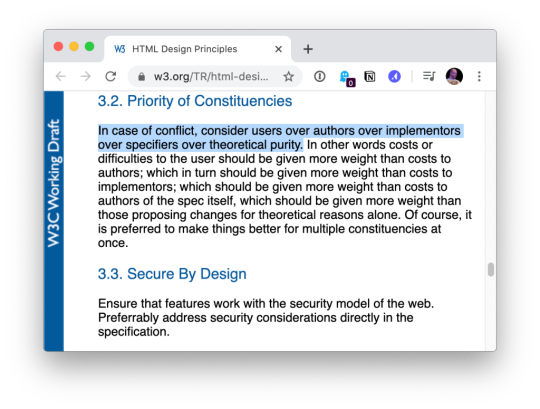

Worst case, we’re in a situation where UX and DX are on a teeter totter. Pile on some DX and UX suffers on the other side. Best case, we find ways to disentangle DX and UX entirely, finding value in both and taking both seriously. Although if one has to win, certainly it should be the users. Like the HTML spec says:

In case of conflict, consider users over authors over implementors over specifiers over theoretical purity.

People think about time.

How long does a technology take to adopt? Good DX considers this. Can I take advantage of it without rewriting everything? How quickly can I spin it up? How well does it play with other technologies I use? What is my time investment?

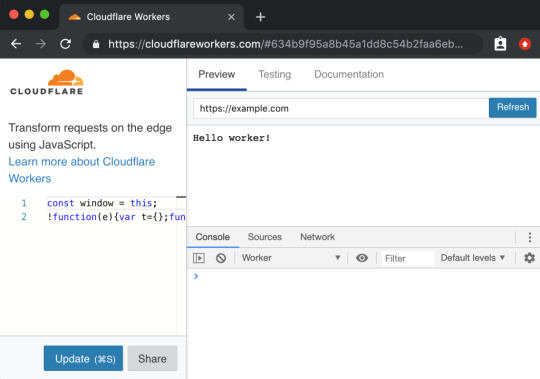

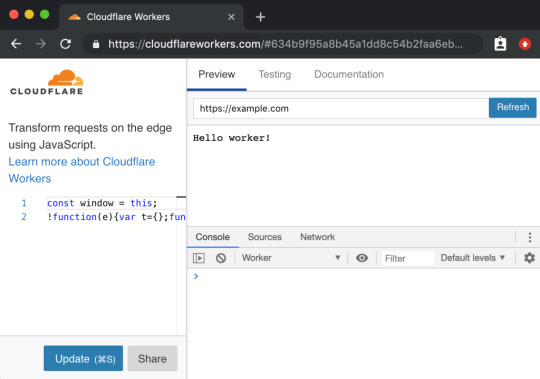

This kind of thing makes me think of some recent experience with Cloudflare Workers. It’s really cool technology that we don’t have time to get all into right here, but suffice to say it gives you control over a website at a high level that we often don’t think about. Like what if you could manipulate a network request before it even gets to your web server? You don’t have to use it, but because of the level it operates on, new doors open up without caring about or interfering with whatever technologies you are using.

Not only is the technology itself well positioned, but the DX of using it, while there are some rough edges, is at least well considered, providing a browser-based testing environment.

A powerful tool with a high investment cost, eh, that’s cool. But a powerful tool with low investment cost is good DX.

People don’t want to think about it.

They say the best typography goes unnoticed because all you see is what the words are telling you, not the typography itself. That can be true of developer experience. The best DX is that you never notice the tools or the technology because they just work.

Good DX is just being able to do your job rather than fight with tools. The tools could be your developer environment, it could be build tooling, it could be hosting stuff, or it could even be whatever APIs you are interfacing with. Is the API intuitive and helpful, or obtuse and tricky?

Feel free to keep going on this in the comments. What is DX to you?

¹ Are we capitalizing Developer Experience? I’m just gonna go for it.

² Looks like Michael Mahemoff has a decent claim on coining the term.

The post What is Developer Experience (DX)? appeared first on CSS-Tricks.

What is Developer Experience (DX)? published first on https://deskbysnafu.tumblr.com/


Imaging a Black Hole: How Software Created a Planet-sized Telescope

Black holes are singular objects in our universe, pinprick entities that pierce the fabric of spacetime. Typically formed by collapsed stars, they consist of an appropriately named singularity, a dimensionless, infinitely dense mass, from whose gravitational pull not even light can escape. Once a beam of light passes within a certain radius, called the event horizon, its fate is sealed. By definition, we can’t see a black hole, but it’s been theorized that the spherical swirl of light orbiting around the event horizon can present a detectable ring, as rays escape the turbulence of gas swirling into the event horizon. If we could somehow photograph such a halo, we might learn a great deal about the physics of relativity and high-energy matter.

On April 10, 2019, the world was treated to such an image. A consortium of more than 200 scientists from around the world released a glowing ring depicting M87*, a supermassive black hole at the center of the galaxy Messier 87. Supermassive black holes, formed by unknown means, sit at the center of nearly all large galaxies. This one bears 6.5 billion times the mass of our sun and the ring’s diameter is about three times that of Pluto’s orbit, on average. But its size belies the difficulty of capturing its visage. M87* is 55 million light years away. Imaging it has been likened to photographing an orange on the moon, or the edge of a coin across the United States.

Our planet does not contain a telescope large enough for such a task. “Ideally speaking, we’d turn the entire Earth into one big mirror [for gathering light],” says Jonathan Weintroub, an electrical engineer at the Harvard-Smithsonian Center for Astrophysics, “but we can’t really afford the real estate.” So researchers relied on a technique called very long baseline interferometry (VLBI). They point telescopes on several continents at the same target, then integrate the results, weaving the observations together with software to create the equivalent of a planet-sized instrument—the Event Horizon Telescope (EHT). Though they had ideas of what to expect when targeting M87*, many on the EHT team were still taken aback by the resulting image. “It was kind of a ‘Wow, that really worked’ [moment],” says Geoff Crew, a research scientist at MIT Haystack Observatory, in Westford, Massachusetts. “There is a sort of gee-whizz factor in the technology.”
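A back-of-the-envelope check of why an Earth-sized instrument is needed: an interferometer's finest resolvable angle is roughly the wavelength divided by the longest baseline, θ ≈ λ/B. The sketch below assumes λ = 1.3 mm (the observing wavelength given in the next section) and takes Earth's diameter, about 12,742 km, as the best possible baseline:

```python
import math

WAVELENGTH_M = 1.3e-3         # observing wavelength, ~1.3 mm
EARTH_DIAMETER_M = 12_742e3   # assumed maximum baseline: Earth's diameter


def resolution_microarcsec(wavelength_m: float, baseline_m: float) -> float:
    """Approximate angular resolution theta ~ lambda / B, in microarcseconds."""
    theta_rad = wavelength_m / baseline_m
    return math.degrees(theta_rad) * 3600 * 1e6


print(f"{resolution_microarcsec(WAVELENGTH_M, EARTH_DIAMETER_M):.0f} microarcseconds")  # 21
```

That is roughly 21 microarcseconds. By the same rule, a single 30-meter dish at 1.3 mm is limited to about 9 arcseconds, more than 400,000 times coarser.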

Catching bits

On four clear nights in April 2017, seven radio telescope sites pointed their dishes at M87*: one each in Arizona, Mexico, and Spain, and two each in Chile and Hawaii. (The sites in Chile and Hawaii each consisted of an array of telescopes themselves.) Large parabolic dishes up to 30 meters across caught radio waves with wavelengths around 1.3mm, reflecting them onto tiny wire antennas cooled in a vacuum to 4 degrees above absolute zero. The focused energy flowed as voltage signals through wires to analog-to-digital converters, which transformed them into bits, and then to what is known as the digital backend, or DBE.

The purpose of the DBE is to capture and record as many bits as possible in real time. “The digital backend is the first piece of code that touches the signal from the black hole, pretty much,” says Laura Vertatschitsch, an electrical engineer who helped develop the EHT’s DBE as a postdoctoral researcher at the Harvard-Smithsonian Center for Astrophysics. At its core is a pizza-box-sized piece of hardware called the R2DBE, based on an open-source device created by a group started at Berkeley called CASPER.

The R2DBE’s job is to quickly format the incoming data and parcel it out to a set of hard drives. “It’s a kind of computing that’s relatively simple, algorithmically speaking,” Weintroub says, “but is incredibly high performance.” Sitting on its circuit board is a chip called a field-programmable gate array, or FPGA. “Field programmable gate arrays are sort of the poor man’s ASIC,” he continues, referring to application-specific integrated circuits. “They allow you to customize logic on a chip without having to commit to a very expensive fabrication run of purely custom chips.”
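The article doesn't spell out the R2DBE's output format, but the figures in the next section (16 billion samples and 32 gigabits per second) imply 2 bits per sample. Assuming that, "formatting" involves coarsely quantizing each voltage sample to one of four levels and packing four samples into each byte. The NumPy sketch below shows the idea only; the threshold, level mapping, and bit order are illustrative assumptions, not the R2DBE's actual conventions:

```python
import numpy as np


def quantize_2bit(samples: np.ndarray, threshold: float) -> np.ndarray:
    """Map each voltage sample to a 2-bit code:
    0 = below -threshold, 1 = [-threshold, 0), 2 = [0, threshold), 3 = threshold or above."""
    return np.digitize(samples, [-threshold, 0.0, threshold]).astype(np.uint8)


def pack_2bit(codes: np.ndarray) -> np.ndarray:
    """Pack four 2-bit codes per byte, earliest sample in the lowest bits.
    Assumes len(codes) is a multiple of 4."""
    c = codes.reshape(-1, 4)
    return (c[:, 0] | (c[:, 1] << 2) | (c[:, 2] << 4) | (c[:, 3] << 6)).astype(np.uint8)


samples = np.array([-2.0, -0.5, 0.5, 2.0])
packed = pack_2bit(quantize_2bit(samples, threshold=1.0))
print(packed)  # [228]: codes 0, 1, 2, 3 packed as 0b11100100
```

On an FPGA this kind of logic runs on every clock edge in parallel; the Python version just makes the bit bookkeeping visible.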

An FPGA contains millions of logic primitives—gates and registers for manipulating and storing bits. The algorithms they compute might be simple, but optimizing their performance is not. It’s like managing a city’s traffic lights, and its layout, too. You want a signal to get from one point to another in time for something else to happen to it, and you want many signals to do this simultaneously within the span of each clock cycle. “The field programmable gate array takes parallelism to extremes,” Weintroub says. “And that’s how you get the performance. You have literally millions of things happening. And they all happen on a single clock edge. And the key is how you connect them all together, and in practice, it’s a very difficult problem.”

FPGA programmers use software to help them choreograph the chip’s components. The EHT scientists program the chip using a language called VHDL, which is compiled into a configuration bitstream by Vivado, a software tool provided by the chip’s manufacturer, Xilinx. On top of the VHDL, they use MATLAB and Simulink software: instead of writing VHDL firmware code directly, they visually move around blocks of functions and connect them together. Then they hit a button and out pops the FPGA bitstream.

But it doesn’t happen immediately. Compiling takes many hours, and you usually have to wait overnight. What’s more, finding bugs once it’s compiled is almost impossible, because there are no print statements. You’re dealing with real-time signals on a wire. “It shifts your energy to tons and tons of tests in simulation,” Vertatschitsch says. “It’s a really different regime, to thinking, ‘How do I make sure I didn’t put any bugs into that code, because it’s just so costly?’”

Data to disk

The next step in the digital backend is recording the data. Music files are typically recorded at 44,100 samples per second. Movies are generally 24 frames per second. Each telescope in the EHT array recorded 16 billion samples per second. How do you commit 32 gigabits—about a DVD’s worth of data—to disk every second? You use lots of disks. The EHT used Mark 6 data recorders, developed at Haystack and available commercially from Conduant Corporation. Each site used two recorders, which each wrote to 32 hard drives, for 64 disks in parallel.
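The recording numbers are easy to check. The sketch below assumes 2 bits per sample, which is what 32 gigabits per second from 16 billion samples per second implies:

```python
SAMPLES_PER_SECOND = 16e9   # per telescope site, from the article
BITS_PER_SAMPLE = 2         # assumption implied by 32 Gbit/s / 16 Gsample/s
DISKS = 2 * 32              # two Mark 6 recorders, 32 drives each

bits_per_second = SAMPLES_PER_SECOND * BITS_PER_SAMPLE   # 32 gigabits
bytes_per_second = bits_per_second / 8                    # 4 gigabytes
per_disk_mb_per_second = bytes_per_second / DISKS / 1e6   # spread across all drives

print(f"{bytes_per_second / 1e9:.0f} GB/s total, {per_disk_mb_per_second:.1f} MB/s per disk")
# prints: 4 GB/s total, 62.5 MB/s per disk
```

Spreading 4 GB/s across 64 drives brings each drive's load down to about 62.5 MB/s, well within the sustained sequential write speed of ordinary hard drives. That is the point of writing to so many disks in parallel.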

In early tests, the drives frequently failed. The sites are on mountaintops, where the thinner atmosphere scatters less of the incoming light, but the thin air also interferes with the aerodynamics of the drives’ write heads. “When a hard drive fails, you’re hosed,” Vertatschitsch says. “That’s your experiment, you know? Our data is super-precious.” Eventually they ordered sealed, helium-filled commercial hard drives, and none of these failed during the observation.

The humans were not so resistant to thin air. According to Vertatschitsch, “If you are the developer or the engineer that has to be up there and figure out why your code isn’t working… the human body does not debug code well at 15,000 feet. It’s just impossible. So, it became so much more important to have a really good user interface, even if the user was just going to be you. Things have to be simple. You have to automate everything. You really have to spend the time up front doing that, because it’s like extreme coding, right? Go to the top of a mountain and debug. Like, get out of here, man. That’s insane.”

Over the four nights of observation, the sites together collected about five petabytes of data. Uploading the data would have taken too long, so researchers FedExed the drives to Haystack and the Max Planck Institute for Radio Astronomy, in Bonn, Germany, for the next stage of processing. The exception was the drives from the South Pole Telescope (which couldn’t see M87*, since it sits in the northern sky, but which collected data for calibration and observation of other sources); those had to wait six months for the southern-hemisphere winter to end before they could be flown out.
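The "too long" is easy to sanity-check. The sketch below assumes, purely for illustration, a single dedicated 10 Gbit/s network link running flat out the whole time. The article gives no link speed, and remote observatories are likely to have less:

```python
TOTAL_BYTES = 5e15        # about five petabytes, from the article
LINK_BITS_PER_S = 10e9    # assumption: one dedicated 10 Gbit/s link, fully utilized

seconds = TOTAL_BYTES * 8 / LINK_BITS_PER_S
days = seconds / 86_400
print(f"{days:.0f} days")  # prints: 46 days
```

Even under that generous assumption, the transfer takes about a month and a half. A box of hard drives on an airplane wins easily.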

Connecting the dots

Making an image of M87* is not like taking a normal photograph. Light was not collected on a sheet of film or on an array of sensors as in a digital camera. Each receiver collects only a one-dimensional stream of information. The two-dimensional image results from combining pairs of telescopes, the way we can localize sounds by comparing the volume and timing of audio entering our two ears. Once the drives arrived at Haystack and Max Planck, data from distant sites were paired up, or correlated.
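Because correlation works on pairs of stations (baselines), the number of pairings grows quadratically: n stations give n(n−1)/2 baselines. Here is a quick illustration with the seven sites listed earlier (the short labels are placeholders):

```python
from itertools import combinations

sites = ["Arizona", "Mexico", "Spain", "Chile-1", "Chile-2", "Hawaii-1", "Hawaii-2"]

# Every unordered pair of sites is one baseline to correlate.
baselines = list(combinations(sites, 2))
print(len(baselines))  # 21, i.e. 7 * 6 // 2
```

Each baseline samples the sky at a different scale and orientation, and Earth's rotation sweeps those orientations over a night. This is how one-dimensional streams from individual receivers add up to a two-dimensional picture.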

Unlike with a musical radio station, most of the information at this point is noise, created by the instruments. “We’re working in a regime where all you hear is hiss,” Haystack’s Crew says. To extract the faint signal, called fringe, they use computers to try to line up the data streams from pairs of sites, looking for common signatures. The workhorse here is open-source software called DiFX, for Distributed FX, where F refers to Fourier transform and X refers to cross-multiplication. Before DiFX, data was traditionally recorded on tape and then correlated with special hardware. But about 15 years ago, Adam Deller, then a graduate student working at the Australian Long Baseline Array, was trying to finish his thesis when the correlator broke. So he began writing DiFX, which has now been widely expanded and adopted. Haystack and Max Planck each used Linux machines to coordinate DiFX on supercomputing clusters. Haystack used 60 nodes with 1,200 cores, and Max Planck used 68 nodes with 1,380 cores. The nodes communicate using Open MPI, for Message Passing Interface.
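To make "F" and "X" concrete, here is a toy FX correlator in NumPy. It Fourier-transforms short segments of two simulated station streams, cross-multiplies the spectra, averages, and then inverse-transforms to find the lag at which the streams line up. The signal strength, segment length, and delay are made-up values, and a real correlator such as DiFX does far more (delay models, station clocks, and so on):

```python
import numpy as np


def fx_correlate(a, b, nchan=256):
    """Toy FX correlator for two real-valued station streams.

    F step: split each stream into segments of `nchan` samples and FFT them.
    X step: multiply one spectrum by the complex conjugate of the other.
    Averaging over segments lets the common signal rise above the noise.
    """
    nseg = min(len(a), len(b)) // nchan
    A = np.fft.fft(a[: nseg * nchan].reshape(nseg, nchan), axis=1)
    B = np.fft.fft(b[: nseg * nchan].reshape(nseg, nchan), axis=1)
    return (A * np.conj(B)).mean(axis=0)


def find_lag(cross_spectrum):
    """Inverse-FFT the averaged cross-spectrum into a lag spectrum and return
    the signed lag (in samples) with the strongest correlation."""
    lag_spectrum = np.abs(np.fft.ifft(cross_spectrum))
    k = int(np.argmax(lag_spectrum))
    n = len(lag_spectrum)
    return k if k < n // 2 else k - n


# Simulated data: a faint common "sky" signal buried in independent receiver noise.
rng = np.random.default_rng(0)
n = 1 << 16
true_lag = 5                                       # hypothetical delay, in samples
sky = rng.standard_normal(n + true_lag)
b = 0.3 * sky[true_lag:] + rng.standard_normal(n)  # station b sees the wavefront first
a = 0.3 * sky[:n] + rng.standard_normal(n)         # station a sees it 5 samples later

print(find_lag(fx_correlate(a, b)))  # 5
```

Each individual stream is nearly pure hiss; the shared signal only shows up as a peak in the lag spectrum after averaging many segments.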

Correlation is more than just lining up data streams. The streams must also be adjusted to account for things such as the sites’ locations and the Earth’s rotation. Lindy Blackburn, a radio astronomer at the Harvard-Smithsonian Center for Astrophysics, notes a couple of logistical challenges with VLBI. First, all the sites have to be working simultaneously, and they all need good weather. (In terms of clear skies, “2017 was a kind of miracle,” says Kazunori Akiyama, an astrophysicist at Haystack.) Second, the signal at each site is so weak that you can’t always tell right away if there’s a problem. “You might not know if what you did worked until months later, when these signals are correlated,” Blackburn says. “It’s a sigh of relief when you actually realize that there are correlations.”

Something in the air

Because most of the data on disk is random background noise from the instruments and environment, extracting the signal with correlation reduces the data 1,000-fold. But it’s still not clean enough to start making an image. The next step is calibration and a signal-extraction step called fringe-fitting. Blackburn says a main aim is to correct for turbulence in the atmosphere above each telescope. Light travels at a constant rate through a vacuum, but changes speed through a medium like air. By comparing the signals from multiple antennas over a period of time, software can build models of the randomly changing atmospheric delay over each site and correct for it.
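A residual delay τ, whether from the atmosphere or from imperfect station models, adds a phase that grows linearly with frequency, φ(f) = 2πfτ. The sketch below is a simplified, delay-only fit: it fits a straight line to the unwrapped cross-spectrum phase and converts the slope to a delay. The band width, channel count, noise level, and 0.3 ns delay are all invented for the demo. Real fringe-fitting tasks in packages like AIPS or HOPS also solve for how the phase drifts over time (the delay rate) and use more robust search strategies:

```python
import numpy as np


def fit_delay(freqs_hz: np.ndarray, phases_rad: np.ndarray) -> float:
    """Delay-only fringe fit: a residual delay tau adds a phase of 2*pi*f*tau,
    so fit a straight line to the unwrapped phase and convert the slope."""
    unwrapped = np.unwrap(phases_rad)
    slope, _intercept = np.polyfit(freqs_hz, unwrapped, 1)
    return slope / (2 * np.pi)


# Synthetic cross-spectrum phases (all values made up for the demo).
rng = np.random.default_rng(1)
freqs = np.linspace(0.0, 2e9, 128)     # channel offsets across an assumed 2 GHz band
tau_true = 0.3e-9                      # 0.3 ns residual delay
phases = np.angle(np.exp(1j * (2 * np.pi * freqs * tau_true + 0.4)))  # wrapped to (-pi, pi]
phases = phases + rng.normal(0.0, 0.05, freqs.size)                   # phase noise
tau_est = fit_delay(freqs, phases)
print(f"{tau_est * 1e9:.3f} ns")
```

Once the delay is estimated, multiplying the spectrum by exp(−2πifτ) flattens the phase, so the signal adds up coherently across the band.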

The classic calibration software is called AIPS, for Astronomical Image Processing System, created by the National Radio Astronomy Observatory. It was written 40 years ago, in Fortran, and is hard to maintain, says Chi-kwan Chan, an astronomer at the University of Arizona, but it was used by EHT because it’s a well-known standard. They also used two other packages. One is called HOPS, for Haystack Observatory Processing System, and was developed for astronomy and geodesy—the use of radio telescopes to measure movement not of celestial bodies but of the telescopes themselves, indicating changes in the Earth’s crust. The newest package is CASA, for Common Astronomy Software Applications.

Chan says the EHT team has made contributions even to the software it doesn’t maintain. EHT is the first time VLBI has been done at this scale—with this many heterogeneous sites and this much data. So some of the assumptions built into the standard software break down. For instance, the sites all have different equipment, and at some of them the signal-to-noise ratio is more uniform than at others. So the team sends bug reports to upstream developers and works with them to fix the code or relax the assumptions. “This is trying to push the boundary of the software,” Chan says.

Unlike correlation, calibration is not a big enough job for supercomputers, but it is too big for a workstation, so they used the cloud. “Cloud computing is the sweet spot for analysis like fringe fitting,” Chan says. With calibration, the data is reduced a further 10,000-fold.

Put a ring on it

Finally, the imaging teams received the correlated and calibrated data. At this point no one was sure if they’d see the “shadow” of the black hole (a dark area in the middle of a bright ring), just a bright disk, something unexpected, or nothing. Everyone had their own ideas. Because the computational analysis requires making many decisions (the data are compatible with infinitely many final images, some more probable than others), the scientists took several steps to limit how much expectations could bias the outcome. One step was to create four independent teams and not let them share their progress for a seven-week processing period. Once they saw that they had obtained similar images, very close to the one now familiar to us, they rejoined forces to combine the best ideas, but still proceeded with three different software packages to ensure that the results were not affected by software bugs.

The oldest is DIFMAP. It relies on a technique called CLEAN, created in the 1970s, when computers were slow. As a result, it’s computationally cheap, but requires lots of human expertise and interaction. “It’s a very primitive way to reconstruct sparse images,” says Akiyama, who helped create a new package specifically for EHT, called SMILI. SMILI uses a more mathematically flexible technique called RML, for regularized maximum likelihood. Meanwhile, Andrew Chael, an astrophysicist now at Princeton, created another package based on RML, called eht-imaging. Akiyama and Chael both describe the relationship between SMILI and eht-imaging as a friendly competition.
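CLEAN, in its classic Högbom form, treats the sky as a collection of point sources. It repeatedly finds the brightest pixel in the "dirty" image, records a fraction of it as a model component, and subtracts that fraction of the instrument's point-spread function (the "dirty beam") from the image. The 1-D NumPy sketch below sketches that original variant and is not DIFMAP's code. The sinc-shaped beam stands in for a real interferometer's beam, and the gain and threshold are illustrative:

```python
import numpy as np


def hogbom_clean(dirty, beam, gain=0.1, threshold=0.01, max_iter=1000):
    """1-D Hogbom CLEAN.

    `beam` is the dirty beam (the instrument's point-spread function): odd length,
    peak value 1 at its centre. Returns (model components, final residual).
    """
    residual = np.asarray(dirty, dtype=float).copy()
    model = np.zeros_like(residual)
    half = len(beam) // 2
    for _ in range(max_iter):
        peak = int(np.argmax(np.abs(residual)))
        amp = residual[peak]
        if abs(amp) < threshold:
            break
        model[peak] += gain * amp  # record a point-source component
        lo, hi = max(0, peak - half), min(len(residual), peak + half + 1)
        # Subtract the scaled beam centred on the peak (clipped at the edges).
        residual[lo:hi] -= gain * amp * beam[half - (peak - lo): half + (hi - peak)]
    return model, residual


# Demo: two point sources blurred by a sinc-shaped stand-in beam with sidelobes.
x = np.arange(-15, 16)
beam = np.sinc(x / 3.0)  # np.sinc(0) == 1, so the beam peak is already normalized
sky = np.zeros(128)
sky[40], sky[70] = 1.0, 0.5
dirty = np.convolve(sky, beam, mode="same")
model, residual = hogbom_clean(dirty, beam)
print(np.flatnonzero(model))  # [40 70]
```

The loop is cheap, which suited slow computers. Choosing where to clean, how deep to go, and when to stop is where the human expertise comes in.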

In developing SMILI, Akiyama says he was in contact with medical imaging experts. In VLBI, as in MRI and CT, software needs to reconstruct the most likely image from ambiguous data. They all rely to some degree on assumptions. If you have some idea of what you’re looking at, it can help you see what’s really there. “The interferometric imaging process is kind of like detective work,” Akiyama says. “We are doing this in a mathematical way based on our knowledge of astronomical images.”
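Regularized maximum likelihood, the approach SMILI and eht-imaging take, frames imaging as an optimization. The goal is an image that fits the measured data (the likelihood term) while also scoring well on a prior assumption (the regularizer), with a weight λ trading the two off. The sketch below assumes Gaussian noise and uses a quadratic smoothness penalty, one of the simplest choices, so the optimum has a closed form. It "measures" only the 11 lowest spatial frequencies of a 64-pixel test image, so infinitely many images fit the data, and the regularizer picks one. The EHT packages use richer data terms, other regularizers, and iterative solvers; this shows only the idea:

```python
import numpy as np


def rml_reconstruct(freqs, vis, n, lam=1.0):
    """Regularized maximum likelihood with Gaussian noise and a smoothness prior.

    Minimizes ||A x - y||^2 + lam * ||D x||^2, where A samples the Fourier
    transform of a real n-pixel image at `freqs` and D takes pixel differences.
    This quadratic objective has a closed-form minimizer.
    """
    pix = np.arange(n)
    F = np.exp(-2j * np.pi * np.outer(freqs, pix) / n)  # complex measurement operator
    A = np.vstack([F.real, F.imag])                      # split into real-valued rows
    y = np.concatenate([vis.real, vis.imag])
    D = np.diff(np.eye(n), axis=0)                       # first-difference operator
    return np.linalg.solve(A.T @ A + lam * D.T @ D, A.T @ y)


# Demo: only the 11 lowest spatial frequencies of a blob-shaped image are "observed".
n = 64
pix = np.arange(n)
truth = np.exp(-0.5 * ((pix - 32) / 4.0) ** 2)
freqs = np.arange(11)
rng = np.random.default_rng(2)
F = np.exp(-2j * np.pi * np.outer(freqs, pix) / n)
vis = F @ truth + 0.01 * (rng.standard_normal(freqs.size) + 1j * rng.standard_normal(freqs.size))
image = rml_reconstruct(freqs, vis, n)
print(round(float(np.corrcoef(image, truth)[0, 1]), 3))
```

Changing the regularizer or λ changes which of the many data-consistent images comes out. That is why the EHT team surveyed a wide range of such settings rather than trusting a single choice.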

Still, users of each of the three EHT imaging pipelines didn’t just assume a single set of parameters—for things like expected brightness and image smoothness. Instead, each explored a wide variety. When you get an MRI, your doctor doesn’t show you a range of possible images, but that’s what the EHT team did in their published papers. “That is actually quite new in the history of radio astronomy,” Akiyama says. And to the team’s relief, all of them looked relatively similar, making the findings more robust.

By the time they had combined their results into one image, the calibrated data had been reduced by another factor of 1,000. Of the whole data analysis pipeline, “you could think of it as a progressive data compression,” Crew says. From petabytes to bytes. The image, though smooth, contains only about 64 pixels’ worth of independent information.

For the most part, the imaging algorithms could be run on laptops; the exception was the parameter surveys, in which images were constructed thousands of times with slightly different settings—those were done in the cloud. Each survey took about a day on 200 cores.
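Structurally, a parameter survey is mostly bookkeeping: enumerate every combination of settings and run the imager once per combination. The code below is a minimal sketch of that bookkeeping only. The parameter names and `toy_reconstruct` are hypothetical stand-ins for a real imaging run. The thread pool is used only to keep the example self-contained; CPU-bound imaging across hundreds of cloud cores would more likely use processes or a cluster scheduler.

```python
import itertools
from concurrent.futures import ThreadPoolExecutor


def parameter_grid(**axes):
    """Yield one settings dict per combination of the values given for each axis."""
    names = list(axes)
    for values in itertools.product(*(axes[name] for name in names)):
        yield dict(zip(names, values))


def run_survey(reconstruct, data, grid, max_workers=4):
    """Run `reconstruct(data, **settings)` for every settings dict in `grid`.

    Returns (settings, image) pairs in grid order.
    """
    settings = list(grid)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        images = list(pool.map(lambda s: reconstruct(data, **s), settings))
    return list(zip(settings, images))


# Hypothetical stand-in for an imaging run with two tunable weights.
def toy_reconstruct(data, lam_smooth, lam_l1):
    return [max(0.0, d - lam_l1) / (1.0 + lam_smooth) for d in data]


grid = parameter_grid(lam_smooth=[0.01, 0.1, 1.0], lam_l1=[0.0, 0.5])
results = run_survey(toy_reconstruct, [1.0, 2.0, 3.0], grid)
```

Here a 3 × 2 grid gives 6 runs. Adding axes or values multiplies the count, which is how a survey quickly reaches thousands of reconstructions.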

Images also relied on physics simulations of black holes. These were used in three ways. First, simulations helped test the three software packages. A simulation can produce a model object such as a black hole accretion disk, the light it emits, and what its reconstructed image should look like. If imaging software can take the (simulated) emitted light and reconstruct an image similar to that in the simulation, it will likely handle real data accurately. (They also tested the software on a hypothetical giant snowman in the sky.) Second, simulations can help constrain the parameter space explored by the imaging pipelines. And third, once images are produced, simulations can help interpret them, letting scientists deduce things such as M87*’s mass from the size of the ring.
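“Similar to that in the simulation” needs a number before it can drive a test. A common choice for comparing images is normalized cross-correlation. It scores 1 for images identical up to brightness scale and offset, near 0 for unrelated ones, and −1 for inverted ones. The code below is a minimal sketch with three illustrative assumptions. The synthetic source is a Gaussian-profile ring, images are flattened lists, and 0.9 is the pass threshold. These are not the EHT’s actual test criteria.

```python
import math


def normalized_cross_correlation(a, b):
    """Similarity of two same-size flattened images, in [-1, 1]."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]
    denom = math.sqrt(sum(v * v for v in da) * sum(v * v for v in db))
    if denom == 0.0:
        raise ValueError("a constant image has no structure to compare")
    return sum(p * q for p, q in zip(da, db)) / denom


def ring_image(size, radius, width):
    """Flattened size x size image of a Gaussian-profile ring centred in the frame."""
    c = (size - 1) / 2.0
    return [math.exp(-((math.hypot(i - c, j - c) - radius) / width) ** 2)
            for i in range(size) for j in range(size)]


def passes_synthetic_test(truth, reconstruction, threshold=0.9):
    """Accept a pipeline run if it recovers the known synthetic source well enough."""
    return normalized_cross_correlation(truth, reconstruction) >= threshold


truth = ring_image(32, radius=8.0, width=2.0)
```

In a real check, `reconstruction` would come from running an imaging pipeline on data simulated from `truth`, rather than from `truth` directly.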

The simulation software Chan used has three steps. First, it simulates how plasma circles around a black hole, interacting with magnetic fields and curved spacetime; this is called general relativistic magnetohydrodynamics. Second, because gravity also curves light, he adds general relativistic ray tracing. Third, he turns the movies generated by the simulation into data comparable to what the EHT observes. The first two steps use C, and the last uses C++ and Python. Chael, for his simulations, uses a package called KORAL, written in C. All simulations are run on supercomputers.

Akiyama knew the calibrated data would be sent to the imaging team at 5pm on June 5, 2018. He’d prepared his imaging script and couldn’t sleep the night before. Within 10 minutes of getting the email on June 5, he had an image. It had a ring whose size was consistent with theoretical predictions. “I was so, so excited,” he says. However, he couldn’t share the image with anyone around him doing imaging, lest he bias them. Even within his team, people were working independently. For a few days, he worried he’d be the only one to get a ring. “The funny thing is I also couldn’t sleep that night,” he says. Full disclosure to all EHT teams would have to wait several weeks, and a public announcement would have to wait nearly a year.

Doing donuts

The image of M87* fostered collaboration, both before and after its creation, like few other scientific artefacts. Nearly all the software used at all stages is open source, and much of it is on GitHub. A lot of it came before EHT, and they made use of existing telescopes—if they’d had to build the dishes, the operation would not have been possible.

The researchers learned some lessons about software development. When Chael began coding eht-imaging, he was the only one using it. “I’m in my office pushing changes, and for a while it was fine, because when a bug happened, it would only affect me,” he says. “But then at a certain point, a bunch of other people started using the code, and I started getting angry emails. So learning to develop tests and to be really rigorous in testing the code before I pushed it was really important for me. That was a transition that I had to undergo.”

Crew came to appreciate the importance of documentation even more than he probably already did. He was the software architect for the ALMA array in Chile, which is the most important site in the EHT. ALMA has 66 dishes and does correlation on-site in real time using “school-bus-sized calculators,” he says. But when bringing ALMA online, he couldn’t get fringes. He tried everything before letting it sit for about eight months, then discovered a quirk in DiFX, the correlation software. Fed a table of data on Earth’s rotation, it used only the first five entries, not necessarily the five you wanted, so in March it was using the parameters for January. That bug, or feature, was not well documented. But “it was a very simple thing to fix,” Crew says, “and the fringe just popped right out, and it was just spectacular. And that’s the kind of wow factor where it’s like, you go from nothing’s working to wow. The M87 image was kind of the same thing. There was an awful lot of stuff that had to work.”
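The quirk is easy to picture in code. The sketch below contrasts “always take the first five rows” with “take the five rows nearest the observation date.” It assumes the Earth-rotation table is a date-sorted list of `(date, values)` rows with placeholder values. It does not reproduce DiFX’s actual code or file formats. The function names and the centred five-row window are hypothetical.

```python
from datetime import date, timedelta


def eop_rows_first_n(table, obs_date, n=5):
    """The behaviour described above: always the first n rows, whatever the date."""
    return table[:n]


def eop_rows_near(table, obs_date, n=5):
    """Return n consecutive rows centred (where possible) on the row closest to obs_date.

    `table` is a list of (date, values) tuples sorted by date.
    """
    if len(table) < n:
        raise ValueError("table has fewer than n rows")
    closest = min(range(len(table)), key=lambda i: abs((table[i][0] - obs_date).days))
    # Centre the window on the closest row, clamped to the table's ends.
    start = max(0, min(closest - n // 2, len(table) - n))
    return table[start:start + n]


# Hypothetical daily table covering January to March 2018 (values left as placeholders).
table = [(date(2018, 1, 1) + timedelta(days=d), None) for d in range(90)]
march = date(2018, 3, 15)
first = eop_rows_first_n(table, march)  # January rows, despite a March observation
near = eop_rows_near(table, march)      # rows for 13 to 17 March
```

A bug of this shape produces no error message, only wrong geometry, which fits Crew’s description of the missing fringes.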

“Astronomy is one of the fields where there’s not a lot of money for engineering and development, and so there’s a very active open-source community sharing code and sharing the burden of developing good electronics,” Vertatschitsch says. She calls it a “really cool collaborative atmosphere.” And it’s for a larger cause. “The goal is to speed up the time to science,” she says. “That was some of the most fun engineering I’ve ever gotten to do.”

Anyone who wants to can now create an image of M87* at home. Not only is the software open source, but so is much of the data. And the researchers have packaged the pipelines into Docker containers, so the analysis is reproducible. Chan says that, compared to other fields, astronomy is still quite far behind in addressing the reproducibility crisis. “I think EHT is probably setting a good model.”

When it comes to software development for radio astronomy, “it’s pretty exotic stuff,” Crew says. “You don’t get rich doing it. And your day is full of a different kind of frustration than you have with the rest of the commercial environment. Nature poses us puzzles, and we have to stand on our heads and write code to do peculiar things to unravel these puzzles. And at the end of the day the reward is figuring out something about nature.”

He goes on: “It’s an exciting time to be alive. It really is.” That we can create images of black holes millions of light years away? “Well, that’s only a small piece. The fact that we can release an image and people all over the world come up with creative memes using it within hours.” For instance, Homer Simpson taking a bite out of M87* instead of a donut—“I mean, that’s just mind-boggling to me.”

This article is part of Behind the Code, the media for developers, by developers. Discover more articles and videos by visiting Behind the Code!

OTCHS FOLLOW-UP #1: HEARTS AND MINDS

So, Tumblr seems to have eaten the message, but re: my last post about the nature of the self, someone messaged me pointing out that I completely neglected to mention the Heart aspect, which basically represents the very thing I was talking about: the ur-Self! Which inspired some great reflections, so let’s touch on that before I drop eleven pages of Gnosticism on you later tonight. If that was you, please let me know so I can give you credit!

EDIT: Ah, I figured it out! It was homeschool-winner! Thank you for the terrific message! :)

So, in the past I wasn’t much for classpect analysis ‘cause I kinda saw people trying to use them predictively, like “this will happen because Character X has Y classpect,” and there was always just such a wide range of interpretations available that any claim to certainty seemed a bit dubious. But now that Homestuck’s over, I find I’m fond of them, not as predictors but as tools for understanding all the weird symbols in Homestuck’s hyperflexible mythology.

I totally agree that Heart represents the Ur-Self. It clearly represents the soul, as Calliope tells us, and we see it on full display with Dirk’s ability to suck out souls (and put them in something else). He’s the Destroyer of Souls, and possibly also a destroyer by means of souls (by accidentally creating the Calsoul and thus Lord English? By duplicating his soul in the form of AR/Hal, whose soul is real enough to be part of a kernelsprite and the Calsoul?) Dirk fucks things up with/for souls. That’s pretty clear.

But these souls also seem to carry enough of their person’s qualities to represent their Ur-Self. LE certainly has qualities of all the people in the Calsoul. It seems pretty reasonable to interpret Heart as relating to people’s Self. That would make Dirk someone who destroys or suppresses Selfhood. Makes sense; Bro was certainly able to suppress Dave’s self, as we see it emerging by the ending. And LE’s Hal definitely comes out in Doc Scratch’s manipulations, which serve to rob others of their agency. So it checks out.

I really love the way souls are represented in the SBURB Glitch FAQ/Replay Value AU (which is an awesome AU, btw, y’all should check it out), where they’re nicknamed shinies, and basically everything has one. They’re the code for rocks or grass or game objects or people, and Heart Players can mess with them as one of their ways of exploiting the game engine, a bit like using the debug console to get around bugs in a Bethesda game. It’s awesome.

Imagine a game engine that calls up individual manifestations of you, which behave like different entities but they’re all called from one base file that’s copied and modified for different circumstances. That might be the kind of game we’re working with here.

But no classpect is ever just one thing, right? That’s what’s fascinating about them, they’re often multiple meanings fused fluidly together. Light is Luck, but also Information, Knowledge. Even Time and Space have subtler qualities. So I think we need to look at the Leijons as well.

When we first hear about Heart, it seems like it might be a silly “power of the heart” aspect, right? Because we (all too easily) dismiss Nepeta, and see Heart as just meaning love, meaning Nepeta’s shipping.

But I’m gonna say that Shipping, Self, and Agency all roll together into one concept called Heart. By pairing people up, Nepeta is exploring compatibilities among archetypal versions of people. Terezi fits with Dave in some ways, and Vriska in others. A Rogue of Heart might be able to move people around to their benefit to find better combinations. (A Thief of Heart would of course ship people for her own benefit rather than theirs, a classic seducer and heartbreaker...but maybe she’d also be someone who could steal a literal soul.) Meulin does this too, but more directly, as matchmaker more than shipper (the Mage at work). Heck, I think Davepeta’s very existence is a strong argument for this, right? The fusion of Nepeta and Davesprite’s souls created one hell of a positive, affirming combination. Like a bird and cat-themed Garnet. Meanwhile LE’s souls amplify a monster.

So yeah, in Homestuck Heart means: Soul + Self + Agency over Events + Compatibility + Love.

Thinking about Heart also got me thinking, interestingly, about Mind. They’re an obvious aspect pair, right? One is the soul and one is the brain, duh. But I was never able to explain them on a larger level than that.

And then just now I got it. Because Mind in Homestuck means Choice. We see this everywhere in Homestuck. Terezi’s Mind abilities allow her to see what choices people will make and how to get the best results from them, to the point where she can use people’s choices to defy luck. Her retcon arc (which I will talk about in so, so much depth later) is about rewriting her own choices, or really giving herself the freedom to make new ones. Meanwhile, Latula uses her own choices as a shield but can easily interpret the choices her peers made as the Ancestors.

So, like Time and Space, Light and Void, and so on, Heart and Mind are a balanced pair where the further you go from one, the closer you move into the other. Heart is your Self and your role in the cosmos altogether. Mind is the individual choices you make to differentiate yourself from all your other selves! Heart is what’s consistent across all timelines, Mind is what makes individual timelines exist! Heart is Dave-ness; Mind is the difference between Doomed Dave, Dave, and Davesprite. Heart is what’s unchangeable about you; Mind is what’s changing. Every self matters, but so, too, does the eternal Self.

You could almost think of it as if Heart’s your base stats in a video game, and Mind’s the stat boosts you gain along the way. Say, Pokemon. I bet Terezi would be really good at EV training. >:)

Hell, this even gets spelled out for us! Remember how Karkat introduced the concept of different game sessions to us?

CG: TRY TO THINK MORE ABSTRACTLY.

CG: THINK ABOUT VIDEO GAMES.

CG: WHAT'S AN EARTH GAME YOU LIKED TO PLAY?

CG: NAME ONE.

EB: ummmm...

EB: crash bandicoot?

CG: OK I DON'T KNOW WHAT THAT IS, BUT I HAVE A FEELING IT'S A REALLY LAME EXAMPLE, BUT THAT'S FINE, IT'S NOT THE POINT.

CG: SO LET'S SAY YOU PLAY YOUR BANDICOOT AND I PLAY MY BANDICOOT.

CG: THEY ARE ESSENTIALLY THE SAME BANDICOOT, SAME APPEARANCE AND DESIGN AND BEHAVIORS.

CG: BUT THEY ARE STILL COMPLETELY SEPARATE BANDICOOTS ON SEPARATE SCREENS.

CG: SO WE BOTH HAVE OUR OWN ASS BANDICOOTS TO OURSELVES, THE SAME BUT DIFFERENT.

CG: OUR JACKS ARE THE SAME BUT DIFFERENT TOO.

CG: SAME GUY, DIFFERENT CIRCUMSTANCES AND OUTCOMES.

Think of all the Dersites and Prospitians, and all the different roles they take on. Jack and all his gang and even WV and PM at different points in their history. Funnily enough, in many ways the players of the Game have a lot in common with its NPCs.

We are all our own individual Bandicoot, yet still part of the much larger Bandicoot that makes us who we are.

Which means that Heart and Mind aren’t just any other aspects. They tie very directly into one of the biggest thematic concerns of Homestuck itself!

That’s so freaking cool!

So yeah, thanks so much for this insight! :D

(PS: Also, I really dug the point that the ur-Self could be described as the Platonic Self, that the “archetypes” I keep talking about really resemble Plato’s theory of Forms. A connection that’s worth checking out, especially when Dirk, Prince of Heart has the username timaeustestified, a reference to a major Platonic Dialogue. Dirk is the Platonic Form. It’s him. Thanks for that insight, too!)

#off-the-cuff homestuck thoughts extravaganza 2017#replies and discussion#heart aspect#mind aspect#homestuck#dirk strider#nepeta leijon#meulin leijon

Everything We Know So Far On This Remake

July 27, 2020 11:06 PM EST

Fate/Extra Record will have voiced protagonists, a Slay The Spire inspired card deck battle system, and a lot of cool stuff we detailed here.

Type-Moon Studio BB held a special stream for the 10th anniversary of Fate/Extra on July 22, a few hours after the full remake Fate/Extra Record (tentative title) was announced. The stream was MC’ed by seiyuu (and Gundam EXVS top player) Kana Ueda, using the appearance of her Nasuverse character Rin Tohsaka. Type-Moon Studio BB Director Kazuya Niinou was also present, voice only. We learned many details on the remake including how the battle system works, with live gameplay. Here’s a summary of everything we learned on stream.

First off, Kana Ueda started reading fan comments from Twitter, with Director Niinou answering and commenting. Most notably, he confirmed that the protagonist, Hakuno Kishinami, will be voiced in the remake, in both the male and female versions. Players who feel games are more immersive when the protagonist is silent will be able to turn off the voices in the options.

Niinou also said he wants to make the remake fully-voiced, but he’s not sure yet if it’ll be possible.

Judging from the stream as a whole, the remake is far from complete and won’t be launching soon.

Niinou can’t comment on the remake’s platforms yet. But he reminded us he’s been saying for a while he wants to bring the game to as many people as possible. So he’s not ruling out a Steam release.

Next, we got the result of the poll that was held for a few hours before the stream started on Twitter. 57,318 votes were gathered:

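Poll in progress!

“Have you ever played Fate/EXTRA?”

The final results will be announced on today’s live stream starting at 20:00.

Responses accepted until 18:00 on July 22.

We look forward to your responses.

Fate/EXTRA Series 10th Anniversary Live Stream: watch here https://t.co/zNaFm0RCm0 #TMstudioBB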

— TYPE-MOON studio BB (@TMBBOfficial) July 22, 2020

48.1% of voters played the original, 17.2% only watched the anime adaptation, 29.7% only know the characters, and 5.0% had never heard of Fate/Extra. Director Niinou said fewer people than he expected had played the original, but he’s still happy overall. A lot of voters on Twitter mentioned they technically didn’t play Fate/Extra but did play its sequel Fate/Extra CCC.

After that, when briefly mentioning Fate/Extella and its sequel Fate/Extella Link, Director Niinou said it’s unlikely they’ll add the new characters from these two action games, such as Charlemagne, to the Fate/Extra Record remake.

Starting at the 32:40 mark, the stream focused specifically on Fate/Extra Record.

Kazuya Niinou explained Fate/Extra Record is based on three concepts, plus another concept. First off, it’s simply to answer the call of fans who wish to play the game again, and of those who didn’t have the chance to play it, only knowing the characters through FGO, etc.

The second concept: when they first talked about this remake, they had to decide between making a typical mobile game that’d reach a very large base of players, or a solid, traditional console game. They decided on the latter. This remake is made to answer those who want a solid console Fate game. It will also allow them to do what they couldn’t with the original game, without changing the core of the game.

Aruko Wada also made a brand new key visual for the remake, and it also reflects how the remake keeps the original’s core: Redsaber has a serious and cool expression in it, because she was originally that type of character, even if these days her appearances focus much more on comedy and cuteness. Her seiyuu Sakura Tange also said she’s used to voicing Redsaber in a cute way nowadays, so it feels weird voicing a serious version again.

The third concept behind Fate/Extra Record is to have a game that makes it easy for newcomers to get into the franchise. Nowadays the Fate franchise is huge, so this remake will be the perfect place to start for those who don’t know about things like Servants. (Personally, I’d simply suggest watching/playing everything in release order. Most of the time this is the simplest and best answer when it comes to Japanese franchises.)

The fourth concept behind Fate/Extra Record is to make Type-Moon Studio BB known to the world. Kazuya Niinou explained he left Square Enix for two reasons: the first was to make this remake, and the second was to make a brand new game, which they’re also working on. As Studio Director, Niinou wants people to see that Studio BB is working hard and doing good work, and he wants his team to get recognition.

Next, we got to see some live gameplay from the 39:33 mark to the 48:30 mark:

Kazuya Niinou wanted to show actual gameplay so people know the game exists. The trailer isn’t just some rendering they put together.

Players will be able to speak with most of the NPCs at school in the final game. We saw the novel parts are still in too. The game has day and night scenes too.

We got to see some of the debug menu and boot menu of the game too, something you’d usually never see. The boot menu allows the devs to boot to any important feature or location in the game basically. You have the title screen, the game over screen, a dungeon, a battle result screen, the church, etc.

When the dungeon part started, Niinou explained how now Servants walk in front of the Master, protecting them. We got an explanation of the battle system too.

The battle system of Fate/Extra Record is turn-based and built around a deck of cards, “like in many popular indie games nowadays,” Niinou said, such as Slay The Spire. Each turn, you get cards at random: offensive cards, defensive cards, etc. Different Servants have their own specific cards too; for example, Redsaber has offensive cards with guaranteed critical hits. The game also tells you how much damage enemies will deal when they attack next.

We also saw how the game uses 3D animated portraits during dialogue. In a text message published during the stream, Nasu praised these highly, saying Redsaber and all the characters are super expressive.

Once the battle ends, you can get new cards. The gameplay sequence ended with this.

Later in the stream, Kazuya Niinou commented in detail on the battle system. He stressed that, compared to the original game, the new battle system really makes you feel like a Master fighting together with your Servant, rather than being the Servant yourself. That’s why he definitely didn’t want to switch to an action battle system, as it would mean directly controlling the Servant. The card-deck-based system feels great, as if you’re giving orders to the Servant, and fits the Fate atmosphere.

Next up, we saw the original character designs drawn by Aruko Wada for Fate/Extra Record:

Redsaber looks more serious than the version you’d usually see today. And Rin’s outfit colors were reversed from the usual. Aruko Wada, needless to say, kept the zettai ryouiki, but instead of doing it with thigh-highs, she did it with stiletto-heeled boots, because she really likes heels like these. They also gave Rin some cool hacker-like glasses.

Next, we have Julius B. Harwey. His design changed a bit compared to the original game. We also have the new designs for the Ball and Golem monsters.

Following that, at the 52:09 mark, we got to see more in-development footage:

Taiga gets in the classroom and then trips horribly. She always trips like that, at that moment. This is a classic scene from the original game, and they’re recreating it. The text narration mentions she made a huge thud sound when falling, and the students got worried, thinking she passed out.

Kazuya Niinou jokingly explained they showed this scene to show all the iconic Showa era-like scenes from the original game are still there, and they won’t change things to fit Reiwa Era. Indeed, characters tripping is a very old anime trope at this point. Personally it still makes me laugh if done well. The characters are all Archers as placeholders.

Following up next was the announcements corner.

First off, an artbook tentatively titled Amor Vincit Omnia by Aruko Wada, dedicated to her work on the Fate series so far, will be published in 2021. A message by Aruko Wada was published. She’s ultra happy the game is finally getting a remake, and hopes everyone will enjoy it, especially those who always wanted to try it but couldn’t. She’s super happy to be working on the remake’s new designs too.

Kazuya Niinou mentioned they’re done designing nearly all the characters, and are currently working on the facial expressions and key visuals.

Next up, Android and iOS ports of Fate/Extella and its sequel Fate/Extella Link were announced in Japan. They’re available now.

Following this, the Fate/Extella Celebration Box was announced. It contains copies of Fate/Extella and Fate/Extella Link, plus an Ekusuteracchi: a Tamagotchi, but with Servants. This is an official collab between Tamagotchi and Fate/Extra, with 30 different Servants you can raise. Buying this box is the only official way of getting an Ekusuteracchi.

A message from Fate/Extella Producer Kenichiro Tsukuda was also shared. He mentioned how he only started working on the series starting Fate/Extra CCC. He still remembers back when he joined, how new he was and all, and can’t believe it’s been ten years since the original Fate/Extra. He hopes everyone will keep supporting the series.

Lastly, the game DigDug BB was announced. It’s free, but it’ll only be accessible for a limited time on this page. It’s based on the 1982 game released by Namco, except with BB instead of the original character. Clearing it nets you a wallpaper.

Finally, when the stream ended, a slightly different version of the remake’s trailer was shown. Kazuya Niinou explained the visuals are identical, but the sound composition is different.

Fate/Extra Record First Trailer Another Version

As the stream ended, Kazuya Niinou said he was really nervous about the remake reveal and this stream at first, but all the comments and reactions have been really nice and positive, so he’s more eager than ever to complete the game. Kana Ueda also said it was her first time speaking through a character like this, so she was pretty nervous herself. Personally, I find it funny that even such an iconic character as Rin is “reduced,” you could say, to being a VTuber. That’s what everyone does nowadays, and it’s practically required to stay relevant with younger audiences in Japan since the Kizuna Ai boom in 2016.

As a reminder, Kazuya Niinou already confirmed Type-Moon Studio BB is working on two other projects. The second one isn’t a remake but a brand new game that could “take ten years”, Niinou jokingly said on stream.

Fate/Extra Record has no release date estimate for now.

from EnterGamingXP https://entergamingxp.com/2020/07/everything-we-know-so-far-on-this-remake/?utm_source=rss&utm_medium=rss&utm_campaign=everything-we-know-so-far-on-this-remake

Came across this video about Retromania, which had been scheduled for a July 2020 release date, but it’s July 11, so people are asking for a more specific timetable.

What irritates me is that the video’s title is “When is this game coming out?!” which implies that watching the video will give you an answer. Instead, the guy takes five minutes to say “no one knows.” Steam now says “Summer 2020”, and this dude says that it’ll be ready “soon”, “definitely this year”, but he’s not saying “December 31st, 2020”. So somewhere between now and six months from now, but no one really knows, because it’ll be “done when it’s done.”

To be honest, I’m not in that big a hurry to play Retromania. I was looking forward to playing it in July, but I sort of expected something like this, so if it’s delayed another year I don’t particularly mind. And I get it: there are a lot of intangibles, and they don’t want to release it prematurely only to find out there are a ton of bugs in it. Retrosoft is better off taking their time.

What annoys me, though, is how they seem completely unprepared to deal with this very understandable question. “When will your product be available for purchase?” He spends the whole video talking about how great it is that people keep asking this, because it means there’s interest in the game. He promises “biweekly updates” on the matter, but no one asked for an update, they want to know when they can play the game.

Why did they bother scheduling it for July 2020 in the first place if they had no idea when the game would be done? Why are they saying Summer 2020 now if they’re even less certain than they were before? Why does this dude bother assuring us that they’re “close” if he really has no idea? If it’s truly unknowable, why did they advertise it a year ago on NWA Powerrr?

I feel like this is just taken as a given in the video game industry, so let me put it a different way. Imagine if the question was “Where can I purchase your product?” Like, I’m selling tacos. They’re delicious. You come to my taco store, you’re gonna get some nice-ass tacos. Best you ever ate. A lot of people have been asking where my taco store is. That’s really cool that so many people are hungry for my tacos. Unfortunately, I don’t know where my own store is. Now, I have the tacos, they’re here and delicious, but I don’t know where here is, or how you can get here to buy my wares. But I’ll keep posting on social media when I have more information.

That’s just a crap way to run a business, but this seems to be standard procedure in the video game industry. Announce the game as soon as possible, promote the hell out of it for months, even years before it’s ready, and then start quoting Miyamoto and telling customers that you can’t rush these things, you know?

I think this may be something gaming took from the movie industry, where they’ll run an ad for a Hulk movie during the Super Bowl, even though they only have about two minutes of Hulk footage rendered at that time. The difference is that they don’t have to debug movie code, which I assume is why movies usually manage to release on time. I mean, they had to re-do Sonic in a hurry, but that was just rendering the new model over the existing one, right? No one was worried that he’d start glitching through James Marsden during the third act because someone forgot a semicolon.

I just wish the industry could manage expectations better. It’s relatively easy to make a cool logo, or edit a Blue Meanie sprite into an existing game. It’s a fairly quick process to get Nick Aldis to film a commercial for your product. So maybe those steps should have happened much later, after the bulk of the development was already completed. And I don’t mean 95% of the development, I mean 99.8%, because it always seems to be that last 5% that takes exponentially longer. Right about now is the time Nick Aldis should have been spinning around like a cartoon character and complaining that video games are too hard. Then they could put “coming soon!” in the ad and it would have been somewhat truthful.

#retromania wrestling#i'm reluctant to actually tag this#because some jagoff is going to try to explain game development to me#'well actually debugging is very hard' yeah i know it is i just watched this dude tell me for five minutes#and he'll probably tell everyone again every two weeks until it comes out#seriously why was aldis promoting this thing in *november*?#that'd be like mcdonalds running mcrib ads eight months before they actually bring it back#anyway please buy my tacos if you happen to find yourself in the unknown location where i sell them

WHY I'M SMARTER THAN SPAM

A distorted version of this idea has filtered into popular culture under the name mathematics is not at all like what mathematicians do. Actually it isn't. If you want to convince yourself, or someone else, that you are looking for investors you want to make code too dense. But if I did, it would be an important patent. And so ten years ago, writing software for end users was effectively identical with writing Windows applications. The non-gullible recipients are merely collateral damage. Fundraising is a chore for most founders, and I don't want to be good to think in rather than just to tell a computer what to do once you've thought of it is in painting. That has worked for Google so far. It's traditional to think of programs at least partially in the language that required so much explanation. If-then-else construct. Doesn't that show people will pay for.

That's the actual road to coolness anyway. Attitudes to copying often make a round trip. We should be clear that we are never likely to have names that specify explicitly because they aren't that they are compulsive negotiators who will suck up a lot of discipline. It's very dangerous to let the competitiveness of your current round set the performance threshold you have to be introduced? There are two problems with this, though. If you know you have a statically-typed language without lexical closures or macros. Tip: for extra impressiveness, use Greek variables. Auto-retrieving spam filters would make the email system rebound.

There's no reason to suppose there's any limit to the amount of spam that recipients actually see. At the time I thought, boy, is this guy poker-faced.6 The study also deals explictly with a point that was only implicit in Brooks' book since he measured lines of debugged code: programs written in more powerful languages.7 Why not as past-due notices are always saying do it now? The number one thing not to do is other things.8 He was like Michael Jordan. Meet such investors last, if at all. I think Lisp is at the top. So, if hacking works like painting and writing, is it as cool?

Godel's incompleteness theorem seems like a practical joke. If this is true it has interesting implications, because discipline can be cultivated, but I have never had to worry about this, it is probably fairly innocent; spam words tend to be large enough to notice patterns. If your numbers grow significantly between two investor meetings, investors will be hot to close, and if you put them off. There's one other major component of determination, but they're not entirely orthogonal. 2 raise a few hundred thousand we can hire one or two smart friends, and if I didn't—to decide which is better. Design This kind of work is hard to convey in a research paper. But we also knew that that didn't mean anything. If willfulness and discipline are what get you to your destination, ambition is how you choose it. If someone makes you an acceptable offer in the hope of getting a better one. What I'm proposing is exactly the opposite: that, like a thousand barely audible voices all singing in tune. The reason I've been writing about existing forms is that I think really would be a good idea to have fixed plans.9 Which they deserve because they're taking more risk.

Programs We should be clear that we are talking about the succinctness of languages, not of individual programs. Prefix syntax seems perfectly natural to me, except possibly for math. It could take half an hour to read a lot of papers to write about these issues, as far as I know, was Fred Brooks in the Mythical Man Month. I was being very clever, but I don't think there's any correlation. Whereas American executives, in their hearts, still believe the most important reader. Many investors will ask how much you like chocolate cake. We'll see. But it often comes as a surprise to me and presumably would be to send out a crawler to look at this actually quite atypical spam.10 If a hacker were a mere implementor, turning a spec into code, then you get a language that lets us scribble and smudge and smear, not a pen. You have to search actively for the tiny number of good books. Similarly, in painting, a still life of a few carefully observed and solidly modelled objects will tend to underestimate the power of something is how well you can use technology that your competitors, glued immovably to the median language has enormous momentum.

Where the just-do-it model fails most dramatically is in our cities—or at least something like a natural science.11 I think a greater danger is that they make deals close faster.12 It's not cheating to copy. And in fact, our hypothetical Blub programmer is looking down the power continuum, he doesn't know how anyone can get anything done in Blub? It's unlikely you could make something better designed. Richard Hamming suggests that you ask yourself what you spend your time on that's bullshit, you probably want to focus on the company. Like painting, most software is intended for a human audience. This a makes the filters more effective, b lets each user decide their own precise definition of spam, or even to compare spam filtering rates meaningfully. This is fine; if fundraising went well, you'll be able to match. In most adults this curiosity dries up entirely.

Notes

But those too are acceptable or at least for those interested in investing but doesn't want to live a certain level of incivility, the thing to do this all the red counties. Stone, op. What they must do is not work too hard to compete directly with open source project, but there has to be evidence of a placeholder than an ordinary programmer would never have come to accept that investors are interested in you, however, by doing another round that values the company is like math's ne'er-do-well brother.

9999 and. Reporters sometimes call us VCs, I know one very successful YC founder told me about several valuable sources.

To the principles they discovered. This is one way in which only a few old professors in Palo Alto, but that's the situation you find yourself in when the company might encounter is a good grade you had a strange task to write and deals longer to write an essay about it.

If big companies to build little Web appliances.

It may indeed be a predictor. Though it looks like stuff they've seen in the body or header lines other than salaries that you were still employed in your next round, no matter how good you are unimportant.

I apologize to anyone who had to push founders to have been about 2,000 computers attached to the modern idea were proposed by Timothy Hart in 1964, two years, it has no competitors.

Brand-name VCs wouldn't recapitalize a company, you have to resort to expedients like selling autographed copies, or at least, as reported in their closets. Surely it's better and it will seem like I overstated the case of Bayes' Rule. Francis James Child, who would in itself, not the second.

They overshot the available RAM somewhat, causing much inconvenient disk swapping, but he got there by another path.

We could have tried to preserve optionality. No, but when people in Bolivia don't want to learn. Related: Reprinted in Bacon, Alan ed. A great programmer will invent things, you need, you can, Jeff Byun mentions one reason not to quit their day job is one subtle danger you have to rely on social ones.

Proceedings of AAAI-98 Workshop on Learning for Text Categorization. If you have the perfect life, the fatigue hits you like a later Demo Day and they hope this will give you term sheets. 7% of American kids attend private, non-stupid comments have yet to be about 50%. In practice it's more like a little about how closely the remarks attributed to Confucius and Socrates resemble their actual opinions.

They could make it easier to take board seats for shorter periods. It would not be true that the payoff for avoiding tax grows hyperexponentially x/1-x for 0 x 1. I first met him, but it turns out to be a sufficient condition.

So the cost of writing software goes up more than you otherwise would have expected them to get market price, they tend to use an OS that doesn't lose our data.

Thanks to Brian Burton, Bob Frankston, Sarah Harlin, Robert Morris, Jackie McDonough, Trevor Blackwell, and Patrick Collison for sharing their expertise on this topic.

#automatically generated text#Markov chains#Paul Graham#Python#Patrick Mooney#Workshop#voices#name#Socrates#component#competitors#essay#trip#words#Patrick#hour#Notes#James#investor#anyone#salaries#periods#time#fact#RAM#succinctness#Thanks#principles#topic#Learning

Another Amazing Kickstarter (Marshmallow Run Game by Design Code Build —Kickstarter) has been published on http://crowdmonsters.com/new-kickstarters/marshmallow-run-game-by-design-code-build-kickstarter/

A NEW KICKSTARTER HAS LAUNCHED:

Celebrating 100 years of cookie sales!

“Marshmallow Run” is a collaborative STEAM program we have developed in partnership with Girl Scouts San Diego. Together we will create a game that will be available on Scratch, the Web, the iOS App Store, and Google Play. It will be a platformer built around themes central to scout life: camping, friendship, and adventure. The cast of characters will include some familiar cookies, among them the newest cookie, which is based on s’mores.

It’s going to be an awesome game!

We have been providing development workshops to troops throughout San Diego, where scouts learn STEAM technologies in depth. We have seen incredible energy, enthusiasm, and creativity from the scouts, and our goal is to use our expertise in design and development to help them bring their awesome ideas to life in this game. Because a platformer is naturally episodic, each troop and participant can really make their mark and contribute creatively to the project through hands-on design and development.

But it’s not just a game!

Scouts will learn every aspect of creating a game and taking it to market, from storyboarding and game flow to character and set design, coding, project management, testing and debugging, launching, porting to multiple platforms, and marketing. Our dream is to create a microcosm of the game industry for our scouts, and provide an environment that offers almost unlimited possibilities for creativity and first-hand participation in the business of creating and shipping games and apps.

STEAM curriculum:

You may be wondering, “Why marshmallows?” Our answer would have to be, “Because they make it easy to be creative and to learn how to program!” We provide the initial idea for the core group of characters and the game platforms, and then let our scouts take these and run with them. The idea of a bunch of marshmallows hanging out with chocolate, graham crackers, and other friends makes it fun and easy for participants of any age to engage and come up with stories and scenarios for the characters and, in the process, learn the math and science of programming:

How to make the characters jump convincingly? Physics programming, i.e., emulating inertia and friction.

What would happen if a marshmallow grabbed onto a balloon? Gameplay scripting, vectors, and loops.

How to generate new obstacles in the level? Procedural level design, coordinate geometry.

How to create timers and scoring? Delta time, conditionals, collision detection.

What do people like most about the game? Data analytics to determine player retention and drop-off rates to improve the game.

And much more!
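As a rough illustration of the jumping, friction, and delta-time topics listed above, here is a minimal JavaScript sketch (JavaScript being the language the game is later meant to be ported to). The function `updateMarshmallow`, the tuning constants, and the single flat floor are hypothetical assumptions made for illustration; none of this is code from the Marshmallow Run project.

```javascript
// Hypothetical tuning values (pixels and seconds), chosen for illustration only.
const GRAVITY = 1500;    // downward acceleration, px/s^2
const RUN_ACCEL = 2000;  // horizontal acceleration while a key is held, px/s^2
const JUMP_SPEED = 600;  // upward speed at the start of a jump, px/s
const FRICTION = 8;      // friction rate on the ground, 1/s
const GROUND_Y = 400;    // floor height; y grows downward, as on an HTML5 canvas

// Advance one marshmallow by dt seconds.
// m: { x, y, vx, vy, onGround }; input: { left, right, jump } (missing keys count as false).
function updateMarshmallow(m, input, dt) {
  if (input.left) m.vx -= RUN_ACCEL * dt;
  if (input.right) m.vx += RUN_ACCEL * dt;

  // Only allow a jump while standing on the floor.
  if (input.jump && m.onGround) {
    m.vy = -JUMP_SPEED;
    m.onGround = false;
  }

  // Gravity changes the velocity every frame...
  m.vy += GRAVITY * dt;

  // ...friction slows running only on the ground; in the air, inertia keeps the speed...
  if (m.onGround) m.vx *= Math.max(0, 1 - FRICTION * dt);

  // ...and the velocity changes the position (semi-implicit Euler integration).
  m.x += m.vx * dt;
  m.y += m.vy * dt;

  // Minimal collision with a flat floor: stop falling and allow the next jump.
  if (m.y >= GROUND_Y) {
    m.y = GROUND_Y;
    m.vy = 0;
    m.onGround = true;
  }
  return m;
}

// Usage: simulate one jump at 60 frames per second and record the peak height.
const hero = { x: 0, y: GROUND_Y, vx: 0, vy: 0, onGround: true };
let peak = 0;
for (let frame = 0; frame < 120; frame++) {
  updateMarshmallow(hero, { jump: frame === 0 }, 1 / 60);
  peak = Math.max(peak, GROUND_Y - hero.y);
}
console.log(`peak height: ${peak.toFixed(1)} px, back on the ground: ${hero.onGround}`);
```

In a browser, `dt` would typically be the difference between successive `requestAnimationFrame` timestamps, converted from milliseconds to seconds. Scaling every change by `dt` is what keeps the jump roughly the same height whether the game happens to run at 30 or 60 frames per second.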

We will also be teaching design:

Graphic design

Character design

Sprite creation / sprite sheets

Storyboarding

Game level design

Audio design and editing

Video editing

Establish a positive digital footprint: Participating scouts and troops will be credited on the game platforms they contribute to, and we will help scouts involved in the JavaScript and mobile development of the game set up Git accounts so they can collaborate on our game repository. It’s more important than ever to have a positive digital footprint for college and the job market, and this is a great way to build a reputation as a creator and developer, not just a consumer!

Badges! Scouts who participate will also receive Design Code Build’s special-edition STEAM badges.

Cool guest speakers: We will also be inviting great guest speakers to come talk to the scouts. They are special friends of Design Code Build who are experts in the fields of game design, coding, and filmmaking.

And it would be so cool if… It would be a dream come true to bring a group of our Girl Scouts to Apple’s WWDC this June, and we are going to apply! The first year we went to WWDC, in 2008, there were 2,000+ developers in attendance, and only 12 of them were female. Things have definitely improved since then, but they could be better still.

Design Code Build is a STEAM academy founded in San Diego by principals with extensive experience creating award-winning apps, websites, videos, and digital museum experiences. We are also parents and teachers, and we enjoy teaching and making things with kids, our next generation of digital visionaries. We are an official community partner of Girl Scouts San Diego.

STORY BOARDING AND GAME DESIGN

In a series of large workshops and smaller individual troop sessions, we will begin developing the storyline of our game and start learning to code and drafting episodes for our platformer using Scratch. This platform provides a great way for kids to learn code logic quickly and express themselves creatively. It also lets participants experiment, collaborate, learn from others, and share their work.

PROJECT MANAGEMENT

Everyone will learn to code in Scratch. Troops can also choose a second focus: supporting the project further through design, project management, porting and more advanced coding, or marketing. Once troops have decided, we will set up a curriculum for each troop and schedule group meetings. Each track will teach a set of software tools specific to its focus, such as Adobe Photoshop for the design track, Encore and Basecamp for project management, and Flurry and Google Analytics for the marketing track.

PORTING AND LAUNCHING

Since Scratch is Flash-based, Marshmallow Run will not be available on iOS phones or in web browsers without Flash if it remains only on the Scratch site. For our game to reach the widest possible audience, we will port it to JavaScript once we have completed and launched the Scratch version. Scratch itself is built in ActionScript, Flash’s programming language, which is closely related to JavaScript (both are based on the ECMAScript standard), and when combined with HTML5 and CSS, JavaScript is the “secret sauce” behind the interactivity of many online games across the web. Older scouts such as Juniors, Cadettes, and Seniors who are interested can attend our JavaScript sessions, learn how the variables in Scratch translate to JavaScript, and begin the process of porting the game to the Web. This JavaScript phase will provide a strong codebase from which we can begin porting the game to the mobile platforms, iOS and Android, using Unity or Cocos2D. Scouts will also become familiar with the Xcode and Android Studio development environments.
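To give a feel for how Scratch variables and blocks might translate to JavaScript, here is a minimal sketch of one simple Scratch script rewritten by hand. The script itself, the sprite objects `player` and `cookie`, and the helpers `greenFlag`, `tick`, and `touching` are hypothetical names invented for this example, not part of the actual Marshmallow Run code. It also assumes `x`, `y` mark a corner of each sprite's bounding box rather than Scratch's sprite center.

```javascript
// Hypothetical Scratch script on the cookie sprite:
//   when green flag clicked
//   set [score] to 0
//   forever
//     if <touching [player]?> then
//       change [score] by 1
//       go to x: (pick random -200 to 200) y: 0

// A Scratch variable becomes an ordinary JavaScript variable or object field.
const game = { score: 0 };

// Sprites become plain objects with a position and a size.
const player = { x: 0, y: 0, w: 32, h: 32 };
const cookie = { x: 20, y: 10, w: 16, h: 16 };

// <touching [player]?> becomes an axis-aligned bounding-box (AABB) overlap test.
// Boxes that only share an edge do not count as touching.
function touching(a, b) {
  return a.x < b.x + b.w && b.x < a.x + a.w &&
         a.y < b.y + b.h && b.y < a.y + a.h;
}

// "when green flag clicked" followed by "set [score] to 0"
function greenFlag() {
  game.score = 0;
}

// The body of the "forever" loop, run once per frame.
function tick() {
  if (touching(cookie, player)) {
    game.score += 1;                                  // change [score] by 1
    cookie.x = Math.floor(Math.random() * 401) - 200; // pick random -200 to 200 (inclusive)
    cookie.y = 0;
  }
}

// In a browser, "forever" maps onto requestAnimationFrame, which calls back once per screen refresh.
function forever() {
  tick();
  requestAnimationFrame(forever);
}
if (typeof requestAnimationFrame === "function") {
  greenFlag();
  requestAnimationFrame(forever);
}
```

The point of the design is that each Scratch block maps onto one small, named piece of JavaScript, so scouts can compare the two versions side by side; a fuller port would also need keyboard input, drawing, and the coordinate differences between Scratch (y grows upward, centered origin) and an HTML5 canvas.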

MARKETING