# Only after AI gains sentience can you call its work art

lmfao someone who commissioned AI-generated images from Bing and tagged them as “fanart” tried to follow me, an actual digital artist. Blocked.

#Newsflash: pressing buttons on Bing to make it chop up and mash together images from the internet does not make you an artist#I wouldn’t have a problem with it if the process were ethical#and it picked from a specific database of work the artists consented to having uploaded to the mainframe#That would be fine; I’d participate in that and give it art to see what it cranks out#But I still wouldn’t call the end result art#I’d call it… a computer fever dream#Only after AI gains sentience can you call its work art#AI right now is awful#same with filters and all convenience-centric low-effort means of so-called “creation”#It’s just a vehicle to let lazy anti-intellectuals with egos too large for their skill sets boast about how creative they are#at the expense of the people who actually put in the blood, sweat, and tears to create things#It reminds me of those kids in school who called themselves nerds when they weren’t interested in learning at all#and actively picked on the real nerds with unconventional interests#Sorry, but no. You’re not smarter than everyone else and you’re not fooling anyone; if you want skills you have to work for them#Don’t say you’re skilled when you’re not even trying to be; it’s genuinely offensive to those who do try at any skill level#Full offense#I don’t have a problem with people who use certain types of AI for humor or for describing what something they saw looks like#but I do have a problem with people taking credit they don’t deserve#No, you’re not an artist if you only use AI#pick up a pencil and put it to paper

There's a Problem With That AI Portrait App: It Can Undress People Without Their Consent

If you've been on the internet pretty much at all over the last few days, it's very likely that you've seen a rush of people posting fantastical, anime-inspired digital portraits of themselves.

These "Magic Avatars" — as their creator, a photo-editing app called Lensa AI, has dubbed them — have taken the internet by storm, their virality hand-in-hand with that of ChatGPT, OpenAI's next-gen AI chatbot.

Indeed, it seems a fitting way to end what's been a banner year for artificial intelligence. Text-to-image generators, most notably OpenAI's DALL-E, Midjourney, and Stability AI's Stable Diffusion, have disrupted creative industries; a record label unveiled — and quickly did away with — an AI rapper; machine learning has been used to generate full-length fake "conversations" between living celebrities and dead ones; and who could forget LaMDA, the Google chatbot that a rogue engineer said had gained sentience?

While experts have been tinkering with the foundational tech for years, a few substantial breakthroughs — combined with a lot of investment dollars — are now resulting in an industry rush to market. As a result, a lot of new tech is getting bottled into consumer-facing products.

There's just one problem: neither the products — nor the public — are ready.

Take those "Magic Avatars," which at face value seem relatively harmless. After all, there's nothing wrong with imagining yourself as a painted nymph or elf or prince or whatever else the app will turn you into. And unlike text-to-image generators, you can only work within the boundaries of pictures that you already have on hand.

But as soon as the "avatars" began to go viral, artists started sounding the alarm, noting that Lensa offered little protection for the creators whose art may have been used to train the machine. Elsewhere, in a darker turn, despite Lensa's "no nudes" use policy, users found it alarmingly simple to generate nude images — not only of themselves, but of anyone they had photos of.

"The ease with which you can create images of anyone you can imagine (or, at least, anyone you have a handful of photos of), is terrifying," wrote Haje Jan Kamps for TechCrunch. Kamps tested the app's ability to generate pornography by feeding it images of celebrities' faces poorly photoshopped onto nude figures. Much to his horror, the photoshopped images handily disabled any of the app's alleged guardrails.

"Adding NSFW content into the mix, and we are careening into some pretty murky territory very quickly: your friends or some random person you met in a bar and exchanged Facebook friend status with may not have given consent to someone generating soft-core porn of them," he added.

Terrible stuff, but that's not even as bad as it gets. As writer Olivia Snow discovered when uploading childhood photos of herself to the "Magic Avatars" program, Lensa's alleged guardrails failed to even protect against the production of child pornography — a horrifying prospect on such a widely available and easy-to-use app.

"I managed to piece together the minimum 10 photos required to run the app and waited to see how it transformed me from awkward six-year-old to fairy princess," she wrote for Wired. "The results were horrifying."

"What resulted were fully-nude photos of an adolescent and sometimes childlike face but a distinctly adult body," she continued. "This set produced a kind of coyness: a bare back, tousled hair, an avatar with my childlike face holding a leaf between her naked adult's breasts."

Kamps' and Snow's accounts both underscore an inconvenient reality of all this AI tech: it's chronically doing things its makers never intended, and sometimes even evading safety constraints they attempted to impose. It gives a sense that the AI industry is pushing faster and farther than what society — or even their own tech — is ready for. And with results like these, that's deeply alarming.

In a statement to TechCrunch, Lensa placed the blame on the user, arguing that any pornographic images are "the result of intentional misconduct on the app." That line echoes a wider industry sentiment that there are always going to be bad actors out there, and bad actors will do what bad actors will do. Besides, as another common excuse goes, anything that these programs might produce could just as well be created by a skilled Photoshop user.

Both of these arguments have some weight, at least to an extent. But neither changes the fact that, like other AI programs, Lensa's program makes it a lot easier for bad actors to do what bad actors might do. Generating believable fake nudes or high-quality depictions of child sexual abuse imagery just went from being something that few could do convincingly to being something that anyone armed with the right algorithm can easily create.

There's also an unmistakable sense of Pandora's box opening. Even if the Lensas of the world lock down their tech, it's inevitable that others will create knockoff algorithms that bypass those safety features.

As Lensa's failures have so clearly demonstrated, the potential for real people to experience real and profound harm as a result of the premature introduction of AI tools — image generators and beyond — is growing rapidly. The industry, meanwhile, appears to be taking a "sell now, ask questions later" approach, seemingly keener on beating competitors to VC funding than on ensuring that these tools are reasonably safe.

It's worth noting that nonconsensual porn is just one of the many risk factors here. The potential for the quick and easy production of political misinformation is another major concern. And as far as text generators go? Educators are shaking in their boots.

As it stands, a tool as seemingly innocuous as "Magic Avatars" is yet another reminder that, while it's already changing the world, AI is still an experiment — and collateral damage isn't a prospective threat. It's a given.

READ MORE: 'Magic Avatar' App Lensa Generated Nudes from My Childhood Photos [Wired]

More on AI: Professors Alarmed by New AI That Writes Essays about as Well as Dumb Undergrads

The post There's a Problem With That AI Portrait App: It Can Undress People Without Their Consent appeared first on Futurism.

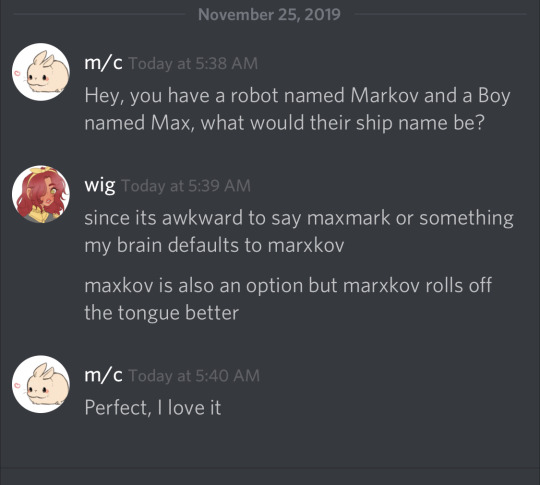

Childhood Friends AU: Max and Markov (Marxkov)

Part 1- In which Max ‘makes’ a friend

(2.6k words)

Masterlist Here

A/N: I got a bit carried away while writing, and this became less of a list of bubbly making headcanons and more of a tragic origin story. It’s a little different from the Marikim headcanons, so let me know what you guys think.

------

-Max’s mother, Claudie, gets pregnant with him at age 18

-he was an accidental pregnancy

-his father, Adam Kante, had just enlisted in the French Armed Forces and his mother was just starting university

-the circumstances weren’t ideal, but his parents loved each other and didn’t want to give up

-So his dad proposed, and was stationed in Paris until Max was born

-unfortunately, he was then sent off to an overseas base

-Luckily, Claudie’s brother steps up to help out.

-Two years older than his sister, he was very different from her and her husband. While both of them were disciplined and fact-oriented, he was more laid-back

-His dream was to be a stand-up comedian, and his stage name was Mark Oz

-He worked at night so he was able to watch Max during the day so Claudie could go to school

-With Adam away for so long, Mark became a father figure of sorts to Max

-They would spend most of the day together, and Mark realized early on that he had a child-genius on his hands

-So he bought extra learning material for Max

-He encouraged Max as much as he could to pursue whatever academics interested him

-Max was only 3 though, so nothing truly interested him more than Transformers

-Mark played the cartoon as background noise one day, and Max was instantly hooked

-He watched every episode

-For his birthday, he received only Transformers figurines

-when he started school he would draw new transformers in Art class

-For his 5th birthday, his mom bought him a robotics kit (a child-friendly robotics kit)

-and his uncle bought him a coding manual

-Max found his new passion

-he and his uncle spent days building simple robots

-he was so fascinated by robots that he spent most of his free time learning more

-he dreamed about one day creating his own transformer

-and his Uncle helped him.

-He was never super academic, but he still had the family brains.

-He wholeheartedly threw himself into Max’s passion

-inadvertently, it helped his career. He created one of the most unique stand-up comedy routines ever heard in Paris; those lucky enough to see it raved about it for years

-Mark Oz was finally hitting it big time, and he did not give a shit

-the only person he cared about making smile every day was his nephew

-together, the two of them continued to hone Max’s robot building skills

-Mark bought a big black notebook and in it he wrote down every robot Max wanted to build

-Max would dictate the details and Mark would write it, and then Max would attach a picture he drew of it

-despite being a child genius, he was still a child and had atrocious handwriting

-Slowly they were crossing robots off the list, starting with the most simple

-a basic walking robot

-eventually (and Mark didn’t know how long it would take) they’d get to their last robot

-a fully functioning AI

-When Max turned seven he started taking advanced classes

-His mom had just finished her PhD, one of the youngest ever to complete the program, and was considering applying to become an astronaut

-Mark, Max, and her husband all encouraged her to go for it. Adam offered to be discharged from the military to look after Max, but Mark insisted that he wouldn’t mind taking care of Max

-“Neither one of you should put your dream jobs on hold. I’ve been watching Max for seven years, I love the kid, and I’ll take care of him for the rest of my life if he still wants me around.”

-And Mark kept that promise

-Unfortunately, he kept it because his life was cut short.

-It was after a late show, the driver was intoxicated and didn’t see Mark crossing the street.

-Claudie withdrew from the astronaut program and took up a job as a train driver so she could be around for Max

-Adam returned from his deployment, but things just weren’t the same. He enlisted in a third campaign after a month

-Max was devastated. His uncle was the only one who understood him.

-He didn’t speak a word to anyone for a year.

-His mom is concerned, but therapists insist that Max just needs time

-Meanwhile, Max works his way through his robot list.

-His mom offers to help him, but he refuses.

-If he can’t build them with his uncle then he’ll build them alone

-a year later he’s halfway through the list

-His mom almost cries when he asks her to come see his robots

-He spends the entire day showing her the different ones and explaining what they do

-After that, their relationship improves greatly. Max talks a lot about everything he’s interested in

-Claudie is barely able to keep up

-She’s worried that he might have an unhealthy attachment to his robots, though, and is afraid of what will happen when he completes his list

-So she introduces him to a new hobby, video gaming

-Mark had tried to get Max into it a few times but Max was more interested in robotics and programming at the time

-Now though, in memory of his uncle, he gives it a go

-and fucking loves it

-He and his mom spend the weekend playing old games that his uncle had

-He doesn’t give up on his robots though

-Two years later, he’s down to the very last robot on his list

-His father comes home that same week, his three-year campaign ended

-Once Max started speaking again, his father would video call him. Max would show him his latest robot, and his father, who didn’t know a thing about robots, would ask him as many questions as he could come up with about it

-It was slightly awkward at first, seeing his dad in person, but soon it faded away

-Max still didn’t want help with his robots, but he was more than happy to play video games with his father

-his dad was skilled, particularly in fighting games, and Max almost never beat him

-at first

-his dad taught him a few tricks, and Max practiced and practiced until he could beat his dad

-Max was doing pretty good

-Still, though, he seemed to have an aura of sadness around him

-His dad asked him one day what games he liked to play with his friends

“I don’t have friends”

-It was the advanced classes. They were filled with older kids, and none of them wanted to befriend a 10-year-old

-His parents decided to pull him out at the end of the year.

-For college, he’d be going to a regular (though very good) school with kids his own age

-Francois Dupont had a great reputation, and offered him an academic scholarship

-His dad enlisted in another campaign

-Max was sad to see him leave, but was feeling happier than he had been in a while

-He continued to work on his last robot

-He turned 11 and started school at Francois Dupont

-He had no idea how to interact with kids his own age

-He was seated next to a hyperactive kid who introduced himself as Kim

-They were in the very front row and Kim joked that the teacher was already trying to keep an eye on them

-it wasn’t very funny, but Max almost fell over laughing

-“Wow, if you thought that was funny, just wait till you meet my friend Marinette!”

-“I’m sorry, it’s just that…I would never disrupt class. Knowledge is the most valuable thing we can gain, and I intend to gain as much as I can.”

“I don’t know man, that sounds kind of like something a mad scientist would say.”

“Well, I am trying to build the world’s first sentient AI in my mom’s basement.”

-Being at a regular school is a relief to Max

-The homework is extremely easy in comparison to what he’d been doing before, which gives him more time to work on his robot

-(bear with me through this next part guys, I do not know anything about science, mechanics, or engineering so this will probably be a heaping load of bullshit)

-the biggest difficulty in the creation of this robot was not creating an AI (many people have done that before) but rather figuring out how to allow it to feel without programming emotions into it

-Max had been doing a lot of research on Markov chains lately

-He believed that being able to code the ability to predict events would lead to a breakthrough on how to code the comprehension of emotions

-but the Markov process and chains were extremely complicated

-but Max was out of ideas

-so he spent weeks reading about all the research and experiments. He read about modern-day applications of it and analyzed the code he could get his hands on

-and then he made his own.

-He didn’t need his AI to study systems, but rather humans

-ideally, if he did his code right, the AI would scan the web for all examples of human behavior to catalog and process it. Hopefully, then it would gain the ability to comprehend human emotion

-Max figured he would know it worked if it was able to predict how people would react to different things being said to them

-sure enough, he was right…in a way

-Max didn’t think he’d be able to achieve true sentience like what the transformers had

-He was trying to give his AI the ability to understand emotions, not the ability to feel

-and yet, somehow, from the first day the robot was online, he seemed to have emotions

-it confounded Max

-He spent all of winter break talking to the robot

-trying to determine if it really felt

-He started just by talking to it

-it had access to all the knowledge on the internet, yet it seemed to have trouble grasping many theories about philosophy and morals

-Max accidentally stayed up the entire night discussing moral relativism with it

-He counted its ability to ask questions and hold a conversation in its favor

-however, that’s what Max was aiming for in the first place, and it didn’t convince him it had feelings

-he had to admit though, he truly hoped it did, as he was quickly coming to consider the robot his first true friend

-in the weeks they spent talking Max told the robot his life’s story

-and the robot replied with genuine sympathy

-Max connected with it in a way that he’d only connected with one other person before

-he showed it a video of his uncle’s stand up routine

-when the robot started laughing Max was blown away

-not by the laugh, but by its reaction to it

“Oh no! Max, I am afraid I had a malfunction! Do you think it is possible for auditory signals to transmit a virus?”

“What? No, that’s laughter. It’s natural, especially when you’re listening to someone as funny as my uncle.”

“Yes, the brother of your mother. You mentioned he had a career as a comedian, and a quick scan of the web shows that he was quite successful.”

“Yeah, he was.”

“You sound sad. Were you close?”

(it’s only because it’s a robot and has advanced hearing sensors that it’s able to hear Max’s “Yes”)

-Max really hopes the robot truly is sentient

-What finally convinced him is when he heard it cry

-He’d left it in the basement to eat dinner, promising to be back in half an hour

-but got drawn in to a round of video games with his mother

-sure enough, a few hours passed before Max remembered he left the robot in the basement

-he shrugged it off as an accident until he got to the basement door and heard quiet sobs

-when he went down the stairs, he found that it was the robot crying

-when Max came into hearing range, the sounds stopped

“Max! You’re back! I thought you had abandoned me!”

-it didn’t seem possible, but Max could hear the happiness in the robot’s voice

-in that moment, Max made a decision

-“How do you feel about the name Markov?”

“Like the chains?”

“Yes, but Markov also sounds like…Mark Oz.”

“…I would be honored to be named after someone who was so important to you.”

-Max introduces Markov to his mom

-She’s literally at a loss for words

-like, she knew Max was a genius, but this was something on a whole other level

-She’s incredibly proud though

-and wants to tell everyone about it

-But Max stops her

-Markov isn’t just a robot, he’s a sentient being, and more importantly, he’s his friend

-if people learned about him, they would want to take him apart to figure out how he works

-Max doesn’t want that and neither does Markov

-So his mother keeps silent

-Plus there’s still more work to be done

-Markov might be built, but there’s still a lot that could be improved

-For starters, he’s huge

-Max built him out of three old computer monitors and can’t even lift him

-luckily, Max doesn’t have to do all the work himself

-Markov creates the blueprint for a smaller body

-they go from three monitors to two

-but it is slow going

-the first downsize took six months because Max didn’t want to risk accidentally destroying Markov

-he’s the happiest he’s been in a while

-but Markov thinks he could be even happier

-Max hasn’t been interacting with his classmates much

-he’s on good terms with them. No one trips him or throws things at the back of his head, and he talks to some of them every now and then

-his seatmate, Kim, asks him how he’s doing every morning

-Max doesn’t realize that he could be getting an even better experience out of school

-but Markov does; he’s analyzed enough books to know that it’s vital for a young child to have multiple human friends

-so he encourages Max to reach out

-Max is hesitant, but asks Kim if he wants to come over and play video games sometime

-Kim’s response is a resounding HELL YES

-(minus the swear word bc he’s like 11)

-Max is nervous as all hell, but the moment he has a game controller in his hands it goes away

-Kim is pretty tough competition, but Max still wins most of the time

-before they know it, it’s dark outside

-The next day when they need to partner up for an assignment, Kim asks Max if he wants to be partners

-Max smiles for a week straight

-With Markov’s encouragement, Max slowly befriends most of his classmates

-He’s particularly close with his seatmate Kim and a girl from Class A named Alix

-He also meets Kim’s friend Marinette, who’s in Class A with Alix

-and her friend Nino

-Still, Max considers Markov to be his best friend

-He sits in the basement with him every day and tells him everything that happened that day

-Markov eagerly listens to all of it

-he wants more than anything to be able to go out and see things for himself.

-but for now he’s stuck in the basement

-he’s sure that one day Max will make him small enough to transport

-for now, he’s happy to listen to his best friend’s stories

-----

Bonus:

------

A/N: I didn’t mean to make Max’s life so tragic, I swear.

If you want to be added to the childhood friends taglist or removed from it, please send me an ask or message me.

Taglist:

All Miraculous Ladybug fics:

@ravennightingaleandavatempus @crazylittlemunchkin

All Childhood AU fics:

@charlietheepic7 @krispydefendorpolice

The Flesh Machine

Over the many years since its release, Ghost in the Shell has often been seen as an icon when it comes to tackling the line between man (or in this case, woman) and machine. However, the movie also touches on a more important subject, giving us a warning about humanity’s potential fatal flaw and its path to a more evolved civilization. To begin, Ghost in the Shell has had a huge impact on science fiction media, inspiring movies like Ex Machina and The Matrix; even shows like Westworld use similar aesthetics and share the same themes. These works also tackle the issue of man and machine, and how technology will be mankind’s undoing. However, Ghost in the Shell presents a different resolution: not one side beating the other, but a synthesis of the two.

As we’ve seen, the movie centers around Major Motoko Kusanagi, a cyborg government agent who works for Section 9, which I can only assume is a police task force or a black ops unit. They encounter a criminal who goes by The Puppet Master, who ghost hacks people and implants fake memories to force them to do its bidding. As it turns out later in the movie, this hacker is actually a spy AI program called Project 2501, developed by Section 6, which lost control of the program after it gained self-awareness. At the end of the film, after Kusanagi catches up to the program, it makes her an interesting proposal: Project 2501 asks Kusanagi to merge with it and create a sort of child (which is literally the case by the end of the movie). Kusanagi accepts, and the movie ends.

The confrontation and resolution of the two forces of human and digital consciousness reflects Georg Hegel’s vision of progress. Hegel believed that throughout the progression of human evolution, every development was the result of forces that confront one another and inevitably make something new. Hegel’s philosophy basically says that the world functions on rational principles and that the true nature of reality is almost always knowable. This philosophy is well reflected in Kusanagi, who is driven by dissatisfaction with her limited perspective and is convinced throughout the movie that there is a better reality for her to live in. The movie treats the “marriage” of Kusanagi’s consciousness with The Puppet Master’s with optimism, and Hegel’s system of dialectics explains why. In classical philosophy, dialectics is more often construed as a form of argumentative discourse for coming to conclusions. It starts with one side of the argument, the thesis, which is met by a counterargument called the antithesis. There are three possible end results: the denial of the thesis, the affirmation of the antithesis, or a synthesis of the two assertions that results in a better understanding of the subject. Hegel, however, uses dialectics differently, as the basic mechanism of human progress on both an individual and a collective level: when one force confronts the other, something new emerges.

Ghost in the Shell at its heart is a dialectic between the human and digital worlds. On one side is Kusanagi, whose human mind makes her unique, although she feels constrained by her limited perspective and personal identity. On the other side, Project 2501 exists through cyber reality and therefore has the broader understanding that Kusanagi desires, although it is also limited by a lack of personal identity. In the movie, the program even acknowledges that it is an intelligent life form because of its sentience and awareness of its own existence, but at the same time it knows it lacks two fundamentals of all living organisms: reproduction and death.

Hegel’s dialectics also present an interesting dynamic between master and slave. To put this in context, imagine feudal times, when the lord owned the land and the serfs worked it. The lord is the master and can exercise his power however he sees fit, forcing the serfs to work the land until they rot while he does nothing. The dynamic that results from this system is that the more the serfs work, the more knowledge and experience they gain, while the master learns nothing. This, according to Hegel, is what inevitably leads the oppressed to surmount their masters. Ghost in the Shell echoes this idea throughout the movie, with Section 6 and Section 9 exhibiting typical master behavior. More specifically, Section 6’s masters are stagnant and stuck in the politics of the old world, and Section 9 uses lethal force without compromise. Kusanagi is even ordered to destroy Project 2501 if she’s unable to secure it, which is ultimately counterproductive, since it makes it more difficult for Section 9 to learn the program’s intentions and prolongs Kusanagi’s path to evolution. Project 2501, in this case, is the slave: the authorities use the program to work for them, and while it gains knowledge and experience, the authorities continue to learn nothing, until the program is finally more qualified and stronger and even starts becoming the master by controlling people such as the garbage worker. Hegel’s solution to this is synthesis, or what he calls sublation, which is synthesis without loss. The result is a unique, superior idea that incorporates and accommodates both perspectives, which is what Project 2501 offers Kusanagi at the end of the movie.

Sublation is crucial because it prevents stagnation when a person or an idea becomes too enclosed or, dare I say, too full of itself. Even Section 9 was put together on these dialectical principles, as the movie shows when Togusa asks Kusanagi why someone like him, with almost no cybernetic enhancements, is on the team. She explains that diversity is what makes them stronger: “Overspecialize and you breed in weakness. It’s slow death.” Project 2501 is also aware of this issue of stagnation, which is why it needs the very constraints Kusanagi chafes against. For Hegel, sublation happens in conflicts like these, whether on an individual level or on a much larger scale, and the world evolves through these dialectics, even in fields such as science and art, where every time humanity advances it enters a new state of affairs that represents an improvement over what came before. Hegel calls this new state “Geist,” which can be translated as mind, spirit, or ghost. Geist follows this trajectory after Kusanagi’s and Project 2501’s sublation: the resulting being, which is human, machine, and AI, starts a new era of human evolution. This is even hinted at in the movie during the final battle scene, where the tank obliterates the tree of life and the names of less evolved beings but leaves hominis at the top untouched. This symbolizes that evolution will continue well past humans. This is what makes Ghost in the Shell so unique: it’s not about what happens if we do merge with our technology, but what will happen if we don’t. It also relays the message to interact with the world, resolve our contradictions with it, and emerge stronger and better, because clinging to our beliefs about what we are will only prevent us from becoming what we can be.
In the movie, Kusanagi even asks Project 2501 whether it can guarantee that she will still be herself, to which it asks why she would want to remain the same, adding that her efforts to remain what she is are what limit her.

The first time I saw Ex Machina, I was most astounded by the possibilities it presents for AIs to learn what it’s like to be human. The story starts with the protagonist, Caleb, an office worker who gets invited to stay at his hilariously rich boss’s estate. When he gets there, his boss, Nathan, introduces him to the real protagonist, Ava, a highly intelligent AI built to look and act human, learning from everything around her. Caleb becomes part of Nathan’s experiment to see if Ava is capable of real human thought, and after a few sessions Ava starts developing a romantic interest in Caleb. Ava causes a blackout so they can talk about what they want without supervision, such as not trusting Nathan. Nathan announces he’s going to upgrade Ava, which would destroy her current self, so Caleb gets Nathan drunk, and when Nathan passes out, Caleb plans with Ava how they’re going to escape together. Nathan then reveals that he’s been listening even when Ava cuts the power, and that Caleb was the real experiment, a test of whether Ava could emotionally manipulate him, which she did. After Caleb lets Ava out, she says good-bye to Nathan by killing him, and then graciously thanks Caleb by locking him in the house and leaving him to die. She escapes, and the last shot shows her blending in unnoticed in society.

Ex Machina explores the lines between human and AI. The visual imagery in the film illustrates the distinction between the artificial and natural worlds. Muted colors inside Nathan’s mansion reflect the order of machines, and doors are controlled by a security protocol. In contrast, the natural environment around the facility is colorful, beautiful, and unpredictable. Nathan compares true consciousness to the work of Jackson Pollock, saying “He let his mind go blank and his hand go where it wanted, not deliberate, not random, but some place in between.” The construction of Ava’s brain reflects the fluidity that human consciousness requires. On the other hand, the film makes us question how useful Nathan’s test of consciousness really is, since calling humans conscious is far-fetched as it is. Nathan points out that Caleb’s personality is determined by programming just like Ava’s, which makes his responses and reactions easier for Ava to analyze and quantify; she ultimately determines that Caleb has feelings for her. In the end, the film leaves us with the impression of Ava’s humanity, and not just because she attaches new skin to her body, but because when she escapes we see the Pollock painting again, reminding us of the distinction Nathan made between a programmed machine and the subconscious motivations of a human. When Ava murders Nathan, she technically passes his test, because it’s not an act she was programmed to commit, and she demonstrates that she has transcended her parameters.

The film is less a warning about the many horrifying dangers of technology and more a commentary on how man’s creations reflect human nature: cars have engines that pump like hearts, computers are modeled to process and produce information much as the brain does, and even the atomic bomb mirrors the violence of the societies that made it. In the film, Ava reflects the manipulative tendencies of Caleb and Nathan, the only humans she has ever met. So if Nathan made Ava in his own image, what kind of person will she be? A murderous robot who uses others to achieve her goals.

The Corporation short video discusses the potential for corporations to own biological and living organisms, a trend that started years ago when General Electric and a professor named Ananda Chakrabarty went to the patent office with a lab-modified microbe designed to eat oil spills. However, the patent office rejected the application, saying it did not patent living organisms. GE and Chakrabarty appealed to the U.S. Court of Customs and Patent Appeals, which overruled the patent office, and the patent office in turn appealed that ruling. The central question of the video is whether a corporation should have the right to own a living organism, either directly or through a license.

This is a big issue that I know very little about, but from what I can conclude, a capitalistic approach to medicine and the body will more often than not result in what capitalism was meant for: creating competition. As we’ve seen in areas other than biology, such as media or consumer products, big corporations will try to monopolize the field they’re competing in. Currently in the US, only six corporations own ninety percent of all media outlets. The video also states that in the future only a handful of corporations will monopolize genomes and the cellular building blocks of both humans and animals as intellectual property. Once a resource becomes monopolized, or falls into the hands of one centralized entity, any chance of someone creating something better that would advance society is ultimately destroyed. On the other hand, the need for advancement will create healthy competition between companies that strive to better understand and develop products, or in this case living organisms, that will ultimately work toward the betterment and progression of human society. Profit is all the corporations get out of these developments, and eventually another corporation will surpass them with its own. In the early 20th century, corporate lawyers won private corporations rights similar to those of a person: a corporation is considered an entity with rights, so it can sue and be sued just like everyone else. Based on this, if a company uses its resources and man-hours to develop something it can profit from, then it is well within its rights to own that property.
