#I hate talking to people about ai art. it is never productive somehow

Text

I am going to go insane :)

#achievement unlocked!#marks on hands from digging into them with my nails while anxious#I hate talking to people about ai art. it is never productive somehow

194 notes

Text

Scott Pilgrim Takes Off inhabits the same artistic space as The Matrix 4, or even the Final Fantasy 7 remake. I mean this as a good thing. It has the distinct touch of an artist who made something that defined a generation revisiting the art that outgrew them a thousandfold, with more maturity and different interests.

These interests usually skew meta, they're about what drives someone to revisit something made by a past version of oneself, about the experience of suddenly gaining more influence than anyone could reconcile, where criticisms of your work (which you also, no doubt, have many) become synonymous with criticisms of your culture. If you've been here a while, you probably know (and are tired of) what I'm talking about, manic pixie dream girls and aloof average male protagonists, toxic nostalgia, pick your theme and it's a video essay title.

Imagine having every read of your 2004 funny video game-coded coming of age comic reverberate infinitely toward every direction, people saying your main character taught a whole generation of men to be self-absorbed while the exact opposite type of people rant about how your secondary lead "ruined a whole generation of women" because of hair-dye or whatever. Imagine Edgar Wright makes a movie adaptation of your cute little comic that somehow launches the careers of half of the current celebrity pantheon simultaneously. How would that change you?

Well, for one, it makes you less relatable. The truth of an aloof nerdy guy dating in his early 20s is a lot more universal than the truth of an artist in his 40s forever defined by the event horizon of a thing he wrote half his life ago. The Matrix 4 couldn't stop talking about how it feels to have created The Matrix. The Final Fantasy 7 remake can't help but constantly examine what it means to remake Final Fantasy 7. It's easy to see why someone would hate that indulgent meta trend: I'll probably never write a generation-defining story, so why would I care about the first world problems of someone who did? It can feel distant, and at its worst it can feel insulting. Like it's pointing the finger at the fans, whispering 'you did this to me'. I get that.

I get that, but I love it.

It's the fundamental difference between wanting something that is like something you liked, and wanting something that is from the same creator of something you liked. The difference between feeding the Mona Lisa into an AI and finding a new authentic da Vinci. You can't make something entirely new if you religiously stick to using the parts of something that's already there. The human behind the work will always have influences you didn't realize, thought patterns and aesthetic preferences that weren't entirely clear in their previous work, no matter how much you deconstruct it. More importantly, the human will also change, and this organic self-continuity will reflect on the art. I don't want the creator of something to hold their own creation with the same zeal as its fans, because someone who did that simply wouldn't have been capable of creating the original piece in the first place.

I don't want a product, I want art.

Scott Pilgrim, the original, indulges the most earnest impulse we have-- that of self-mythologizing, of creating a narrative off of our own lives. To depict the mundane as fantastic, interpersonal relationships as adventures. It resonated with so many people because it was earnest, and it was also picked apart to hell and back because it was earnest. Its flaws were on display, and not just the ones it intended to show. But in my opinion, the opposite impulse, that of washing off everything that could be criticized and presenting the cleanest possible image of yourself through your art, is just... bad. It makes for bad art, or it just freezes you. The very first hurdle of creating anything is getting over that, then maybe the spotlight will fall on you. If it does, you'll get everything you ever wanted, but everyone gets to see through you.

So, how do you revisit something like that? You have two options. Either you take all the pieces and try to reassemble them exactly how everyone remembers it, signing your name as a formality, looking at a mirror in which you no longer see yourself, or you talk to it. You dialogue with your own work, with who you used to be. You travel in time and talk to yourself. You question them, acknowledge them but also teach them a thing or two. You don't respect the product, you respect the feeling. You find the same earnestness that made you put pen to paper for the first time, and you point it towards your new loves and fears. Maybe you make it less about the main guy, take the chance to develop your secondary characters, maybe you give the girl more agency. Maybe you summon the future and refuse its answers. Maybe you fight yourself.

That's the harder choice. It submits your new self to the scrutinizing eyes of a whole new generation, it risks alienating the people who identified with your previous piece. It's riskier, probably less profitable, and by any pragmatic lens probably a bad idea. But it's the only way you can make art. It's truth, the truth that got you there in the first place.

It's how you get it together.

141 notes

Text

my unhinged rant about the whumptober discourse, below the readmore for the benefit of ppl who dont wanna see that crap. im just gonna go insane if i don't say this somewhere bc i feel like i'm losing my mind

this drama is genuinely so mind-blowingly stupid it's unreal, and it's been bothering me so much that i just HAVE to talk about it or i'm gonna go insane, if for no other reason than to get it out of my system. i honestly never expected the whump community to go on the kind of bad-faith tirade that's taking place.

disclaimer right here that i do not support AI scraping creative works without permission (like chatgpt and a whole host of AI art programs do) or these AI-generated works being passed off as legitimate creative works. obviously that stuff is bad, and literally everyone on all sides of this agrees it's bad. i used chatgpt exactly once one week after it came out, before i knew how shit it was, and haven't touched AI stuff since. because it steals from creators and it sucks.

now:

saying "whumptober supports/allows AI" when their official policy says plain as day:

"we are not changing our stance from last year’s decision"

"we will not amplify or include AI works in our reblogs of the event."

"we discourage the use of AI within Whumptober, it feels like cheating, and we feel like it isn’t in the spirit of the event."

is bonkers! whumptober is a prompt list, there is nothing TO the event other than being included in the reblogs. they literally cannot stop people from doing whatever they want with the prompts.

someone could go out and enact every single prompt in real life on a creativity-fueled serial killing spree and the whumptober mods couldn't do shit about it. it's not like it's a contest you submit to. it's a prompt list! someone could take every single prompt from the AI-less whumptober prompt list, feed it into chatgpt right now, and post them as entries. and the mods of THAT wouldn't be able to stop them either. because it's a prompt list.

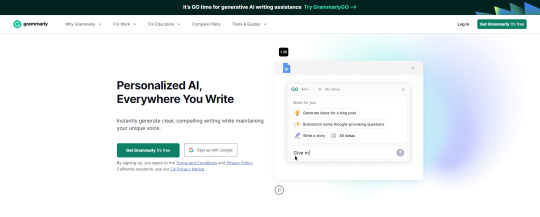

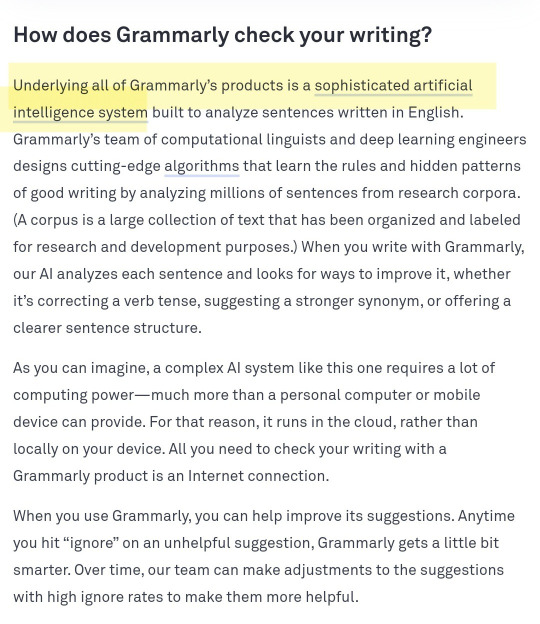

the AI-less event have also made just... blatantly false claims, like that grammarly isn't AI. grammarly IS AI and they openly advertise this. hell, this is grammarly's front page right now:

and this is a statement from grammarly about how its products work:

its spellchecker / grammarchecker is AI-based! claiming it's not AI is just... lying. saying "this is an AI-less event" and then just saying any AI that you want to include doesn't count as AI is ludicrous.

and you know what? whumptober actually pointed this out. they said they don't want to ban AI-based assistive tools (like grammarly) for accessibility reasons. this post has several great points:

"AI is used for the predictive text and spellchecker that's running while I type this reply."

"Accessibility tools rely on AI." this is true and here's an article about it, though the article is a little too pro-AI in general for my tastes, there's nuances to this stuff. it's used for captioning, translation, image identification, and more. not usually the same kind of AI that's used for stuff like chatgpt. THERE ARE DIFFERENT KINDS!

"But we can't stop that, nor can we undo damage already done, and banning AI use (especially since we can't enforce it) is an empty stand on a hill that's already burning, at least in our view of things."

and people were UP IN ARMS over this post! their notes were full of hate, even though it's all true! just straight lying and saying that predictive text isn't AI (it is), that AI isn't used for accessibility tools (it is), that whumptober can somehow enforce an anti-AI policy (they can't because it's a prompt list).

in effect, both whumptobers have the EXACT SAME AI POLICY. neither allows AI-generated works, but both allow AI-based assistive tools like grammarly. everyone involved here is ON THE SAME SIDE, they all have the exact same opinion on how AI should be applied to events like this, and somehow they're arguing???

not to mention that no other whump event has ever had an AI policy. febuwhump, WIJ, bad things happen bingo, hell even nanowrimo doesn't have one.

and you wanna know the most ridiculous part of this entire thing? which is also the reason why none of the above events have an AI policy.

no one is doing this. no one is out there feeding whumptober prompts to chatgpt and posting them as fills for whumptober cred. it's literally a hypothetical, made-up issue. all of this infighting over a problem that DOESN'T EXIST.

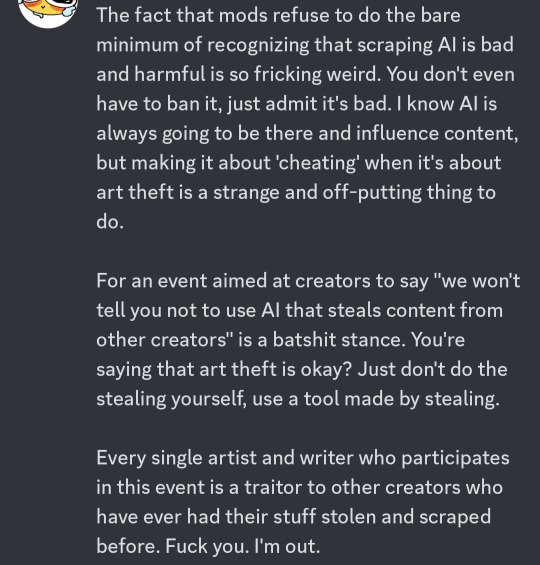

to the point that people are brigading the whumptober server with shit like this:

saying "everyone who participates in whumptober is a traitor, you should go participate in this other event with the exact same AI policy but more moral grandstanding about it" is silly. every single bit of this drama is silly.

in the end, please just be nice to people. we're ALL against the kind of AI that steals from creators. the whumptober mods are against AI, the AILWT mods are against AI, whumptober participants are against AI, AILWT participants are against AI. there is no mythical person out here trying to pass chatgpt work off as whumpfic. let's all just be civil with each other over this, yeah?

87 notes

Text

Welp... (#FireDavidZaslav)

I never thought of making this, but a few hours ago, Coyote Vs Acme, the most highly anticipated movie to come out of Looney Tunes, was shelved and will not be released at all.

When I heard this I was confused and disappointed: "Why would they cancel this movie?" I thought to myself. "Oh right, TAXES and MONEY!" I was already aware of Zaslav's mistreatment of animation but this made me sad in particular considering that the movie was completed by the time it was cancelled.

All of those animators that have put their sweat and guts into this film for over two years. Gone. By contrast, the universally-reviled Velma got the green light for a second season, while 2023's The Flash not only somehow survived cancellation, but also kept Ezra Miller - who had a lot of criminal activity throughout 2022 - as the titular character in spite of the potential legal trouble they'd get for having a convicted criminal in a role in a mainstream film, let alone letting them continue acting.

As I said in a comment on a YT video, any hope I had with WB and especially Zaslav is pretty much gone. Hell, I would argue that any hope I had with this year is gone. Between the abysmal beginning (the aforementioned Velma, the career-destroying scandals of Justin Roiland and Elliot Gindi, with the latter having only started voice acting five months before the allegations came out, and the widespread, messy but otherwise pointless Hogwarts Legacy drama), the doubling down of Hollywood and especially WB's maltreatment of animation, several formerly revered people getting exposed left and right, and tech giants making inane decisions, most notably Elon's takeover of Twitter and Reddit's API changes, it's honestly not hyperbole or an exaggeration to say I despise this year. Not to the extent of 2020, but still pretty bad, and 2023 sucking was something I'd been genuinely dreading when December of last year came.

And speaking of last year, this day marks the unfortunate first anniversary of the controversy DeviantArt got into when they decided to implement AI into their platform known for art made by actual people, NOT machines. Is every Nov. 11 going forward gonna have a company screwing over their audience and employees?

If there's one silver lining to this devastating situation, it's that the producers of said film will be able to watch it at private screenings next week. That, and the potential content leaks of said film.

At this rate, WB is dangerously close to becoming the ActiBliz of animation - a company full of greedy scumbags who take delight in screwing over their audience and their people.

To close this post off, I will no longer be supporting WB and its related content from now on (at least legally). I'll be removing my profile off of HBO Max (or rather Max) and deleting the service on my TV. I'll also remove every video from the Cartoon Network YouTube channel off my watch history even though they had nothing to do with this situation, I just don't like associating myself with a scummy company regardless of how I feel about the products themselves. That obviously doesn't mean I automatically hate anything by WB nor do I want to remove them from my history, I'm just saying that I don't want to support anything by WB if that means the company profits from me. I'll also delete/private my fan art commemorating the company's centennial, because as I said before, I'm not respecting a company that treats its own works like disposable tools while giving other works a slap on the wrist in spite of their abysmal quality. Talk about double standards...

TL;DR: WB cancels a film that has already been completed and everyone is resorting to pirating their content, including me.

EDIT: Okay, changed my mind. I'm ONLY going to delete everything from JUST Warner Bros., the company, not the products they own. That doesn't mean I WON'T be deleting anything that celebrates the company though.

EDIT 2: Even though I'm not supporting WB anymore, I'm keeping my 100th anniversary post (at least on this site) for "archival/historical" purposes.

#fire david zaslav#firedavidzaslav#warner bros#looney tunes#coyote vs acme#fuck this year#vent post#txt post

4 notes

Text

Should We Care Which Side of History We're On?

BBC’s Carnage is narrated from the year 2067, when all of Britain is vegan and glancing backward with shock, confusion and shame at the UK’s animal-eating history. Released in 2017, the film dramatized a common trope in ethical debates over meat eating. Those sympathetic to the abolition of animal farming often predict that future generations will be far more enlightened than we are, and will look back in horror at how we treated animals. Consider how we think about chattel slavery in America, and those who were complicit in it. It’s certainly plausible that future generations will be appalled at some of the things we did as well. Breeding sentient creatures into existence, breaking up their families, taking their milk, killing them, devouring their bodies and using their skins as luxury covers seems like a decent candidate for future dismay.

This threat of future people being alarmed at our behavior is sometimes just a rhetorical tactic. The basic idea here is to win debates by imagining support from our much wiser and kinder descendants. How could they be wrong? There may also be some wishful thinking involved. When you believe brilliant future people will agree with you and be appalled by your opponents, this can work as a mildly satisfying secular alternative to imagining one’s enemies in hell. People who do things that are socially acceptable in the present but which are nevertheless wrong may not suffer any consequences for it in this life, but the harsh gaze of future enlightenment will beat down on their memories. Justice will be done.

These morally loaded predictions about what future people will believe tend to be projections of the prognosticator’s own views. People who contemplate what enlightened future generations will lament about us often identify with these imagined judges, or at the very least, they typically admire the values they expect to be embraced by the future, even if they don’t live up to these values themselves. But speculating about future values is not always about converting others to your cause. There may be a legitimate concern about how later generations will see us. Should we be worried?

The most obvious reason to say no is that future thoughts of judgment can't get at us. Future ire at the past is harmlessly aimed at dead images, words in history books and faded memories. Even judgey thoughts from the present are arguably nothing to mind when they don't affect our lives in some way. To the extent that future thoughts impact us at all, it’s because we somehow manage to accurately simulate the thoughts in our own minds. Future people judging us is, if anything, us judging ourselves and others around us.

However, even if future hate can’t hurt us, it can affect how much influence we have after we die, assuming we expect to have any noticeable influence at all. The founding fathers presumably felt meaning by pursuing their various projects, like creating a new government in the colonized Americas, and would have wanted that government to last for as long as it stayed true to their ideals. Our judgment of the founding fathers would have mattered to them because their legacies are in our hands. If the United States eventually crumbles and its history of slavery and racism is an important factor in that, the founders' failure to oppose slavery in any substantial way will have ultimately undermined much of what they set out to accomplish. Even if they'd thought slavery were completely benign, the total undermining of their legacies might have given them pause if they could have predicted future Americans wouldn’t agree.

Artists, philosophers, politicians, theorists and anyone who creates something potentially lasting that can somehow be linked to them often have an interest in future people not despising them for their views or actions. Just as many of us avoid the art of people who have recently been accused of sexual harassment or worse, future enlightened people might shun works that were created by meat eaters. And you don’t even need to be a prominent figure to worry about this. Those who have children and expect to be known to their descendants in the far future would probably rather be an inspiration to their great-great-great grandchildren than an unfortunate blotch in their family history.

However, if you don’t care about doing anything that can be identified with you later—if you expect to be just one more blurred out face in an anonymous mass of past immoral people—you may not need to worry about future judgment. You can't seriously fret about your statue being ripped down by idealistic future teens if you never expect to be enshrined in the first place.

Then again, this is assuming that past and future generations will only be colliding in each other’s imaginations. The possibility of leaping the border between past and future raises ominous possibilities. We should not want to be on the wrong side of history if we actually find ourselves in the hands of our future judges. That could happen if cryonics becomes more popular, we all sign up to have our bodies frozen, and future people develop the skills and motivation to revive us. (This is assuming humans develop the technology to do this before artificial general intelligence is developed, which the people I’ve talked to lately think is extremely unlikely!)

Depending on how strongly future enlightened people feel about our misdeeds, one of their motives might be to make us suffer for the things we did that future people think are wrong, but which seemed fine or at least excusable to many of us here. If we assume cryonics without AGI is possible, and that future people could have a retributionist streak, then waking up in the distant future could leave us subject to more than disparaging thoughts. Pretend Benjamin Franklin had invented cryogenic preservation around 1781 or so, and imagine the almost absurd naiveté of a brutal slave owner climbing into one of these rudimentary cryogenic tanks around 1861, eager to be revived and welcomed by the future Confederate States of America. We could similarly imagine a Nazi official freezing himself at the peak of Nazi Germany’s power. What would become of such people if the technology existed to revive them now? Would either of them be excused for being products of their time who were not morally responsible for their actions, and if so, should we expect future people to have the same accepting attitude toward us?

A counterargument to this worry is that we can safely assume future people who could revive cryogenically frozen people will be enlightened enough to not believe in free will, or to at least not believe in retributive justice, and thus would see no point in punishing us, and would certainly see no point in thawing people from the unethical past in order to punish them. However, if we expect future people to hold such beliefs, then we should also not expect them to judge us at all. If we think future people will judge us, we can’t really assume future enlightenment takes the form of friendly understanding and education. Perhaps those who get frozen should not be too surprised to wake up in a tiny cage resembling the miserable conditions of the animals they ate, or even in a simulation in which they are a factory farmed animal and are tortured and slaughtered again and again until they’ve suffered as much as every single animal they ever ate.

But this is just a concern for those who intend to freeze their bodies at death, and even then, only if there’s any reason to believe cryonics works and that we might expect humans rather than benevolent AI to revive the unethical frozen. There are far more people who don’t want to wake up in the future, and simply hope their reputations transcend their deaths. How much should they care about future thought pieces on their moral fortitude?

The answer, I think, partially depends on the amount of overlap we expect between future values and our own.

The dominant narrative about enlightened future people implies they are much more altruistic, cooperative and empathetic than we are, they are pretty much unified in their progressive views, and these views are static across all future generations after a certain point. I've never heard anyone say, "Future generations will look back with horror at those of us who were against mass incarceration," or "Some people in the future will think we were awful for eating meat, some of them will not care either way, and others will like that we ate meat—and the percentages of people with these contrasting opinions will vary between the generations." It’s also usually implied that future people will share our basic values. We don't fall short by having the wrong values, but by not being consistent and principled about our values. What distinguishes future enlightened people is that they are more principled and consistent about certain already-existing values than we are.

If we accept all this, we should generally look backward with smug self-satisfaction and disgust before looking toward the future with embarrassment and self-reproach—and anyone determined to avoid future scorn should amp up the selflessness.

Yet aligning our actions with a hyper-progressive future may be trickier than it sounds. Those who warn about a widespread misalignment between present and future ethics generally have specific policy suggestions in mind, like going vegan. But again, these generally reflect the views of the people making these prescriptions, and don't necessarily rely on good evidence about what future people will do. Future people might think we should have been more altruistic, but what sort of altruists will they prefer? Utilitarian-style altruists who mathematically increase the good in the world while trying to ignore nudges from empathic emotions, or more passion-driven altruists who care for those around them? (“The first one!” Maybe!)

We should also consider that future values might not be unified, or might look wildly different from our own. After all, Confederate leaders and soldiers should be a definitive case of wrong-side-of-history infamy, yet many people today fight to keep their statues up, even in the wake of white nationalist violence. Plenty of current people do not think less of the founding fathers for owning slaves, and some people respect the Confederate soldiers because they rebelled against the anti-slavery Union. Whether we turn out to be on the wrong side of history could depend on which future people you ask.

Those who try to anticipate the values of the future don't give later generations much credit for originality, saddling the hypothetical future sages with ethical views already floating around today. But it's possible that future values will be totally at odds with our own. Perhaps future people will be upset for fairly obvious reasons, like that we sent way too much carbon and methane into the atmosphere. But maybe they'll be more upset that we binge-watched TV and threw parties and took some vacations instead of devoting our every waking hour to work. They might be outraged that parents generally took care of their own children instead of raising them in communities where biological parentage was irrelevant. They might think we were disgusting for having sex, or infantile for having an incest taboo, or misguided for thinking that life in prison is more humane than the death penalty.

The arc of the moral universe is long, and that may be all we can say about it. Concerns about enlightened future people assume later generations will look back on us with horror while we in contrast imagine our descendants with admiration or guilt. But if our values end up being drastically different from those of distant generations, shouldn't the cross-generational shuddering be mutual? Being on the right side of history just means fitting in with the majority of future people, morally, and we might want nothing to do with those creeps.

There could nevertheless be reasons to believe that some version of the hyper-progressive future is most likely. If so, this supports the normal discourse around future judgment in one sense and undermines it in another. What gets undermined if we can point to trends that make us confident in the near-inevitability of a vegan future, for instance, is a straightforward justification for future people to shake their heads in disappointment at those of us who eat meat now. If we can reliably predict that people in the future will be more ethical, we presumably expect it to be easier to be ethical—or more difficult to be unethical—down the road. If our descendants are more ethical because some combination of greater access to information, improvements in technology, genetic engineering, neurological interventions and cultural shifts help make their particular degree of selflessness possible, how much moral superiority credit should they really get?

In Carnage, technology plays a major role in creating an ethical divide between the enlightened vegan future and barbaric meat-eating past. Activism, a vegan celebrity chef and ecological disasters convince a lot of British people to give up meat in the BBC’s speculative history, but it’s only when a scientist invents a device that translates animal thoughts into English, and animals use it to complain about being exploited, that farming animals is outlawed.

Humans may never invent a contraption that translates the neural flitterings of farm animals into English sentences like, “I’m not a cheese factory. I am a goat.” (The BBC imagined that given the chance to talk to us, animals would speak vegan meme-ese.) But there is one technology on the horizon that could make a significant dent in animal farming, and might even destroy it completely. This is the replicating of non-sentient animal cells into edible flesh, which makes it possible to create meat without creating nervous systems that will suffer and be destroyed. If producing meat this way eventually becomes sophisticated enough to parallel the tastes of the meats we’re used to, at around the same price or cheaper, it isn't absurd to imagine that pain-free meat could one day fully replace the meat we get from sentient animals, spelling the end for factory farming. This could end up being an anti-climactic, uninspiring end to factory farming that makes the future's claim to advanced rectitude more questionable, as philosopher Ben Bramble has worried. If later generations transition to near-universal veganism thanks to the help of a technological fix rather than through widespread sacrifice and a struggle for virtue, they could enjoy all the culinary pleasure we do without being complicit in animal suffering and death. But they would look ridiculous ranting about how despicable we were for killing animals for food as they chomped down on replicated animal flesh that is molecularly identical to the meat we can only get from animals because we exist in a less technologically adept time. They might believe they would never have eaten meat from tortured animals even if they had lived in earlier, less morally upright ages. But assuming they’ll have been raised in a culture with no tolerance for eating animals, and which had sacrifice-free alternatives, it's hard to see a solid basis for believing this. 

Future people can look back and see who amongst us fails their standards, but they can't know who amongst them would have met these standards if they had existed in another time. They might have done the same in our place, just as we might have done the same in theirs. As far as anyone knows, they’re better than us purely from luck of birth.

So... should we care which side of history we’re on?

At this point, I’ll put my cards on the table and admit that I don’t believe in posthumous harm. As long as your life and projects feel meaningful now, and you enjoy incorrectly imagining that you’ll be celebrated in the future, it doesn’t matter what people later think of you. Future people could hate the trappings of the someone you once were, ignoring the accomplishments of that former person for political or moral reasons, but once you die, what they are shunning is not you. It’s no one. At death, we each become yet another has-been—one of the trillions of non-existents who once had a short flash of consciousness and some degree of causal effect on others before being reduced to a story for the new crop of existents to use or discard however they like.

But even if this is right, cross-generational moral criticism may nevertheless be worthwhile. We don't change the past by condemning it, but judging ancestors can be a way of affirming our values that break with the past, re-asserting our commitment to new ideals and helping push those commitments into the future. And while we can't really know what people in the future will think about us, anticipating later views could inspire us to realize values we already aspire to. As we develop ourselves to get closer to future ideals, we potentially change what those future ideals become. If we’re determined to predict the future, we should predict the future we hope to see.

0 notes