Text

Word Labels

Working at NoRedInk, I have the opportunity to work on such a variety of challenges and puzzles! It's a pleasure to figure out how to build ambitious and highly custom components and applications.

Recently, I built a component that will primarily be used for labeling sentences with parts of speech.

This component was supposed to show "labels" over words while guaranteeing that the labels wouldn't cover meaningful content (including other labels). This required labels to be programmatically and dynamically repositioned to keep content readable:

It takes some CSS and some measuring of rendered content to avoid overlaps:

All meaningful content needs to be accessible to users, so it's vital that content not be obscured.

In this post, I'm going to go through a simplified version of the Elm, CSS, and HTML I used to accomplish this goal. I'm going to focus primarily on the positioning styles, since they're particularly tricky!

Balloon

The first piece we need is a way to render the label in a little box with an arrow. To avoid confusion over HTML labels, we'll call this little component "Balloon."

balloon : String -> Html msg balloon label = span [ css [ Css.display Css.inlineFlex , Css.flexDirection Css.column , Css.alignItems Css.center ] ] [ balloonLabel label , balloonArrow initialArrowSize ] balloonLabel : String -> Html msg balloonLabel label = span [ css [ Css.backgroundColor black , Css.color white , Css.border3 (Css.px 1) Css.solid black , Css.margin Css.zero , Css.padding (Css.px 4) , Css.maxWidth (Css.px 175) , Css.property "width" "max-content" ] ] [ text label ] initialArrowSize : Float initialArrowSize = 10 balloonArrow : Float -> Html msg balloonArrow arrowHeight = span [ attribute "data-description" "balloon-arrow" , css [ Css.borderStyle Css.solid -- Make a triangle , Css.borderTopWidth (Css.px arrowHeight) , Css.borderRightWidth (Css.px initialArrowSize) , Css.borderBottomWidth Css.zero , Css.borderLeftWidth (Css.px initialArrowSize) -- Colors: , Css.borderTopColor black , Css.borderRightColor Css.transparent , Css.borderBottomColor Css.transparent , Css.borderLeftColor Css.transparent ] ] []

Ellie balloon example

Positioning a Balloon over a Word

Next, we want to be able to center a balloon over a particular word, so that it appears that the balloon is labelling the word.

This is where an extremely useful CSS trick can come into play: position styles don't have the same frame of reference as transform styles.

position styles apply with respect to the relative parent container

transform translations apply with respect to the element itself

This means that we can combine position styles and transform translations in order to center an arbitrary-width balloon over an arbitary-width word.

Adding the following styles to the balloon container:

, Css.position Css.absolute , Css.left (Css.pct 50) , Css.transforms [ Css.translateX (Css.pct -50), Css.translateY (Css.pct -100) ]

and rendering the balloon in the same position-relative container as the word itself:

word : String -> Maybe String -> Html msg word word_ maybeLabel = span [ css [ Css.position Css.relative , Css.whiteSpace Css.preWrap ] ] (case maybeLabel of Just label -> [ balloon label, text word_ ] Nothing -> [ text word_ ] )

handles our centering!

Ellie centering-a-balloon example

Conveying the balloon meaning without styles

It's important to note that while our styles do a solid job of associating the balloon with the word, not all users of our site will see our styles. We need to make sure we're writing semantic HTML that will be understandable by all users, including users who aren't experiencing our CSS.

For the purposes of the NoRedInk project that the component that I'm describing here will be used for, we decided to use a mark element with ::before and ::after pseudo-elements to semantically communicate the meaning of the balloon to assistive technology users. Then we marked the balloon itself as hidden, so that the user wouldn't experience annoying redundant information.

Since this post is primarily focused on CSS, I'm not going to expand on this more. Please read "Tweaking Text Level Styles" by Adrian Roselli to better understand the technique we're using.

Ellie improving the balloon-word relationship

Fixing horizontal Balloon overlaps

Balloons on the same line can potentially overlap each other on their left and right edges. Since we want users to be able to adjust font size preferences and to use magnification as much as they want, we can't guarantee anything about the size of the labels or where line breaks occur in the text.

This means we need to measure the DOM and reposition labels dynamically. For development purposes, it's convenient to add a button to measure and reposition the labels on demand. For production uses, labels should be measured and repositioned on page load, when the window changes sizes, or when anything else might happen to change the reflow.

To measure the element, we can use Browser.Dom.getElement, which takes an html id and runs a task to measure the element on the page.

type alias Model = Dict.Dict String Dom.Element update : Msg -> Model -> ( Model, Cmd Msg ) update msg model = case msg of GetMeasurements -> ( model, Cmd.batch (List.map measure allIds) ) GotMeasurements balloonId (Ok measurements) -> ( Dict.insert balloonId measurements model , Cmd.none ) GotMeasurements balloonId (Err measurements) -> -- in a real application, handle errors gracefully with reporting ( model, Cmd.none ) measure : String -> Cmd Msg measure balloonId = Task.attempt (GotMeasurements balloonId) (Dom.getElement balloonId)

Then we can do some logic (optimized for clarity rather than performance, since we're not expecting many balloons at once) to figure out how far the balloons need to be offset based on these measurements:

arrowHeights : Dict.Dict String Dom.Element -> Dict.Dict String Float arrowHeights model = let bottomY { element } = element.y + element.height in model |> Dict.toList -- -- first, we sort & group by line, so that we're only looking for horizontal overlaps between -- balloons on the same line of text |> List.sortBy (Tuple.second >> bottomY) |> List.Extra.groupWhile (\( _, a ) ( _, b ) -> bottomY a == bottomY b) |> List.map (\( first, rem ) -> first :: rem) -- -- for each line,we find horizontal overlaps |> List.concatMap (\line -> line |> List.sortBy (Tuple.second >> .element >> .x) |> List.Extra.groupWhile (\( _, a ) ( _, b ) -> (a.element.x + a.element.width) >= b.element.x) |> List.map (\( first, rem ) -> first :: rem) ) -- -- now we have our overlaps and our singletons! |> List.concatMap (\overlappingBalloons -> overlappingBalloons -- -- we sort each overlapping group by width: we want the widest balloon on top -- (why? the wide balloons might overlap multiple other balloons. Putting the widest balloon on top is a step towards a maximally-compact solution.) |> List.sortBy (Tuple.second >> .element >> .width) -- then we iterate over the overlaps and account for the previous balloon's height |> List.foldl (\( idString, e ) ( index, height, acc ) -> ( index + 1 , height + e.element.height , ( idString, height ) :: acc ) ) ( 0, initialArrowSize, [] ) |> (\( _, _, v ) -> v) ) |> Dict.fromList

Then we thread the offset we get all the way through to the balloon's arrow so that it can expand in height appropriately.

This works!

We can reposition from:

to:

Ellie initial repositioning example

Fixing Balloons overlapping content above them

Our balloons are no longer overlapping each other, but they still might overlap content above them. They haven't been overlapping content above them in the examples so far because I sneakily added a lot of margin on top of their containing paragraph tag. If we remove this margin:

This seems like a challenging problem: how can we make an absolutely-positioned item take up space in normal inline flow? We can't! But what we can do is make our normal inline words take up more space to account for the absolutely positioned balloon.

When we have a label, we are now going to wrap the word in a span with display: inline-block and with some top padding. This will guarantee that's there's always sufficient space for the balloon after we finish measuring.

I've added a border around this span to make it more clear what's happening in the screenshots:

This approach also works when the content flows on to multiple lines:

{-| The height of the arrow and the total height are different, so now we need to calculate 2 different values based on our measurements. -} type alias Position = { arrowHeight : Float , totalHeight : Float } word : String -> Maybe { label : String, id : String, position : Maybe Position } -> Html msg word word_ maybeLabel = let styles = [ Css.position Css.relative , Css.whiteSpace Css.preWrap ] in case maybeLabel of Just ({ label, position } as balloonDetails) -> mark [ css (styles ++ [ Css.before [ Css.property "content" ("\" start " ++ label ++ " highlight \"") , invisibleStyle ] , Css.after [ Css.property "content" ("\" end " ++ label ++ " \"") , invisibleStyle ] ] ) ] [ span [ css [ Css.display Css.inlineBlock , Css.border3 (Css.px 2) Css.solid (Css.rgb 0 0 255) , Maybe.map (Css.paddingTop << Css.px << .totalHeight) position |> Maybe.withDefault (Css.batch []) ] ] [ balloon balloonDetails , text word_ ] ] Nothing -> span [ css styles ] [ text word_ ]

Ellie avoiding top overlaps

Fixing multiple repositions logic

Alright! So we've prevented top overlaps and we've prevented balloons from overlapping each other on the sides.

There is still a repositioning problem though! We need to reposition the labels again based on window events like resizing. Right now, we're measuring the height of the entire balloon including the arrow, and then using that height to calculate how tall a neighboring balloon's arrow needs to be. This means that subsequent remeasures can make the arrows far taller than they need to be!

We're measuring the entire rendered balloon when we check for overlaps and figure out our repositioning, but we should really only be taking into consideration whether balloons overlap when in their starting positions.

Essentially, we need to disregard the measured height of the arrow entirely when calculating new arrow heights. A straightforward way to do this is to measure the content within the balloon separately from the overall balloon measurement.

We add a new id to the balloon content:

balloonContentId : String -> String balloonContentId baseId = baseId ++ "-content" balloonLabel : { config | label : String, id : String } -> Html msg balloonLabel config = p [ css [ Css.backgroundColor black , Css.color white , Css.border3 (Css.px 1) Css.solid black , Css.margin Css.zero , Css.padding (Css.px 4) , Css.maxWidth (Css.px 175) , Css.property "width" "max-content" ] , id (balloonContentId config.id) ] [ text config.label ]

and measure the balloon content when we measure the total balloon:

measure : String -> Cmd Msg measure balloonId = Task.map2 (\balloon_ balloonContent -> { balloon = balloon_, balloonContent = balloonContent }) (Dom.getElement balloonId) (Dom.getElement (balloonContentId balloonId)) |> Task.attempt (GotMeasurements balloonId)

We also change our position calculation helper from:

positions : Dict.Dict String Dom.Element -> Dict.Dict String Position positions model = ... -- then we iterate over the overlaps and account for the previous balloon's height |> List.foldl (\( idString, e ) ( index, height, acc ) -> ( index + 1 , height + e.element.height , ( idString , { totalHeight = height + e.element.height - initialArrowSize , arrowHeight = height } ) :: acc ) ) ( 0, initialArrowSize, [] ) ...

to

positions : Dict.Dict String { balloon : Dom.Element, balloonContent : Dom.Element } -> Dict.Dict String Position positions model = ... -- then we iterate over the overlaps and account for the previous balloon's height |> List.foldl (\( idString, e ) ( index, height, acc ) -> ( index + 1 , height + e.balloonContent.element.height , ( idString , { totalHeight = height + e.balloonContent.element.height , arrowHeight = height } ) :: acc ) ) ( 0, initialArrowSize, [] ) ...

Ellie with fixed multiple repositioning logic

Fixing overlaps with the arrow

I claimed previously that we fixed overlaps between the balloons. This is true-ish: we actually only fixed overlaps between pieces of balloon content. A meaningful piece of balloon content can actually still overlap another balloon's arrow! And, since the content stacks from left to right, there's a chance that meangingful content might be obscured by an arrow:

Ellie showing the balloon and arrow overlap problem

There are two problems here:

The balloons need a clearer indication of their edges when they're close together.

The left-to-right stacking context won't work. We need to put the bottom balloon on top of the stacking context so that balloon content is never obscured.

The first problem is improved by adding white borders to the balloon content and shifting the balloon arrow up a corresponding amount.

Ellie where each balloon has a white border around its content

The second part of the problem can be fixed by adding a zIndex to the balloon based on position in the overlapping rows, so that arrows never cover label content:

positions : Dict.Dict String { balloon : Dom.Element, balloonContent : Dom.Element } -> Dict.Dict String Position positions model = ... -- now we have our overlaps and our singletons! |> List.concatMap (\overlappingBalloons -> let maxOverlappingBalloonsIndex = List.length overlappingBalloons - 1 in overlappingBalloons -- -- we sort each overlapping group by width: we want the widest balloon on top |> List.sortBy (Tuple.second >> .balloon >> .element >> .width) -- then we iterate over the overlaps and account for the previous balloon's height |> List.foldl (\( idString, e ) ( index, height, acc ) -> ( index + 1 , height + e.balloonContent.element.height , ( idString , { totalHeight = height + e.balloonContent.element.height , arrowHeight = height , zIndex = maxOverlappingBalloonsIndex - index } ) :: acc ) ) ( 0, initialArrowSize, [] ) ...

Ellie with the zIndex logic applied

Fixing content extending beyond the viewport

We're almost done with ways that the balloons can overlap content!

For our purposes, we expect words marked with a balloon to be the only meaningful content showing in a line. That is, we're not worried about the balloon overlapping something meaningful to its right or left because we know how the component will be used. This is great, since it really simplifies the overlap problem for us: it means we only need to worry about the viewport edges cutting off balloons.

Browser.Dom.getElement also returns information about the viewport, which we can use to adjust our position logic to get the amount that a given balloon is "cut off" on the horizontal edges. Once we have this xOffset, using CSS to scootch a balloon over is nice and straightforward:

balloonLabel : { label : String, id : String, position : Maybe Position } -> Html msg balloonLabel config = p [ css [ Css.backgroundColor black , Css.color white , Css.border3 (Css.px 2) Css.solid white , Css.margin Css.zero , Css.padding (Css.px 4) , Css.maxWidth (Css.px 175) , Css.property "width" "max-content" , Css.transform (Css.translateX (Css.px (Maybe.map .xOffset config.position |> Maybe.withDefault 0))) ] , id (balloonContentId config.id) ] [ text config.label ]

Ellie with x-offset logic

Please note that when elements are pushed to the right of the viewport edge, by default, the browser will give you a scrollbar to get over to see the content.

Ellie demoing right-scroll when an element is translated off the right edge of the viewport.

You may want to hide overflow in the x direction to prevent the scrollbar from appearing/your mobile styles from getting messed up.

That's (mostly) it!

You might notice that the solution isn't maximally compact:

This is where our MVP line was. If it turns out that our content ends up taking up too much vertical space, and we want to go for the maximally compact version, we'll revisit the implementation. Since the logic is all in one easily-testable Elm function, improving the algorithm should be pretty straightfoward.

Additionally, this post didn't get into high-contrast mode styles. The way that the balloon is styled currently is utterly inaccessible in high contrast mode! Anytime you're changing background color and font color, it's important to check in high-contrast mode to see how the styles are being coerced. I've written a couple of previous posts talking about color considerations (Custom Focus Rings, Presenting Styleguide Colors) so I don't want to dig into how to fix this problem in this post. Please be aware that while the positioning styles in this post are fairly solid, the rest of the styles are not production ready.

Thanks for reading along! I had a great time working on this project and I'm quite pleased with how it came out. I'm looking forward to sharing the next interesting project I work on with you!

Tessa Kelly @t_kelly9

2 notes

·

View notes

Text

Custom Focus Rings

Many people who operate their devices with a keyboard need a visual indicator of keyboard focus. Without a visual indicator of which element has focus, it's hard to know what, say, hitting enter might do. Anything might happen!

It's reasonable to think that either the browser or the operating system should be in charge of making sure keyboard focus is perceivable to keyboard users. You might think that it's best for devs not to overwrite focus styles at all. However, if your website is customizing the look of inputs, there's a good chance that the default focus ring won't actually be visible to your users.

This is the situation we found ourselves in at NoRedInk, and what follows is what we did to improve things.

The Problem

Borders

At NoRedInk, both our primary and secondary buttons feature blue (specifically, #0A64FF) borders. On a Mac using Chrome, this particular blue color on the edge of the button essentially makes the default focus rings invisible.

Can you tell the difference between these two pictures? The second one has a focus ring, but if the pictures weren't side by side, there's no way you would know.

Okay, you might be thinking, don't have blue buttons. However, changing the button color, even if it were feasible from a branding standpoint, doesn't necessarily solve the problem. The outline color that's used for the default focus ring depends on the browser stylesheet, the operating system, and user settings.

Personally, I have my macOS accent color set to pink, which results in a focus ring that disappears against NoRedInk's danger button style.

Backgrounds

Even if we could customize all of our buttons, inputs, and components so that the border and the focus ring outline always showed cleanly against each other, we still wouldn't have solved the entire problem.

This is because there's not only the question of the focus indicator showing up against an input to consider: we also need to consider the contrast of the focus indicator against the background color of an input's container.

The importance of taking the background color into account became more apparent to us at NoRedInk when we started working on a redesign of NoRedInk's logged out home page that made heavy use of blue and navy backgrounds.

Some browsers have implemented two-toned focus rings that will show up clearly on different backgrounds, but there are major browsers that haven't.

Here are two screenshots of the same link being actively focused. The first screenshot, taken in Chrome, shows a focus indicator. The second screenshot, taken in Safari, shows only how a focus indicator can become truly and totally indistinguisable from a background.

Problem summary

If we are customizing input styles, we probably also need to make sure that our focus ring (1) has enough contrast with the edge color of the input and (2) has enough contrast with the input's container's background color.

Approach

While we could have customized the focus ring color for every input and background color individually, we worried that having the focus indicator appear differently in different contexts would make it harder for folks to understand the meaning of the indicator.

Consistency in UX is really important for usability in general. We didn't want a user to ever have to hunt for their focus. Keeping the focus indicator colors consistent and vivid helped us achieve this goal, and using a two-tone indicator allowed us to have a familiar look & feel everywhere.

Reducing cognitive load is also important for usability: folks who don't use the keyboard for most of their interactions shouldn't be distracted by a weird, bright ring that follows them around as they interact.

The Accessibility Team's designer, Ben Dansby, crafted a high-contrast two-toned focus ring that would appear only for users whose last interaction with the application indicated that they were keyboard users.

Ben used red (#e70d4f) and white (#ffffff) for the two tones. These colors don't strictly guarantee sufficient contrast for all possible inputs, but it's straightfoward to check that our specific color palette will work well with these specific focus ring colors.

Ellie with elm-charts code that produced the diagram

Learn more about contrast requirements in the WebAIM article "Contrast and Color Accessibility".

Implementation

The two-toned focus ring Ben made used box-shadow to create a red and white outline with gently curved corners:

[ Css.borderRadius (Css.px 4) , Css.property "box-shadow" <| "0 0 0px 3px " ++ toCssString Colors.white ++ ", 0 0 0 6px " ++ toCssString Colors.red ]

We want the focus ring to only show for keyboard users, so we use the :focus-visible pseudoselector when attaching these styles.

However, :focus-visible will result in the focus ring showing for text areas and text inputs regardless of whether the user last used a key for navigation or the mouse for navigation.

We wanted keyboard users' text input focus clearly indicated with the new candy-cane bright indicator alongside our usual subtle blue focus effect.

Blurred text input:

Text input focused by a click:

Text input focused by a key event:

This required a more involved approach, beyond just using :focus-visible and changing the box-shadow.

We needed to keep track of the last event type manually

We needed to not overwrite the box-shadow for text input when showing the focus ring

To accomplish the first of these goals, we stored a custom type type InputMethod = Keyboard | Mouse on the model and used Browser.Events.onKeyDown and Browser.Events.onMouseDown to set the InputMethod. We used different styles based on the InputMethod. Since we didn't want, say, arrow events within a textarea to change the user's InputMethod, we also added some light logic based on the tagName of the event target.

For the second of these goals, we needed to be able to customize focus ring styles for inputs that already had box-shadow styles. This work needed to happen one component at a time.

For example, styles for the text input might be applied as follows:

forKeyboardUsers : List Css.Global.Snippet forKeyboardUsers = [ Css.Global.class "nri-input:focus-visible" [ [ "0 0 0 3px " ++ toCssString Colors.white , "0 0 0 6px " ++ toCssString Colors.red , "inset 0 3px 0 0 " ++ toCssString Colors.glacier ] |> String.join "," |> Css.property "box-shadow" , ... ] , ... ] forMouseUsers : List Css.Global.Snippet forMouseUsers = [ Css.Global.everything [ Css.outline Css.none ] , Css.Global.class "nri-input:focus-visible" [ Css.property "box-shadow" ("inset 0 3px 0 0 " ++ toCssString Colors.glacier) , ... ] , ... ]

Now we have nice focus styles for keyboard users, nice focus styles for mouse users, as well as nice blurred styles! Of course, these are just the styles for our text input. There are lots more components to consider!

This is the kind of change where having a library of example uses of every shared component becomes super useful. Having one view to go to to check all the focus rings makes it straightforward -- although tedious -- to make sure that the focus ring will look great everywhere.

For us, we discovered that we needed a "tight" focus ring as well, where the box shadow is more inset, for cases where the ring would otherwise overlap other important content.

[ Css.borderRadius (Css.px 4) , Css.property "box-shadow" <| "inset 0 0 0 2px " ++ toCssString Colors.white ++ ", 0 0 0 2px " ++ toCssString Colors.red ]

We found also that some of our components already had border radiuses, and changing the border radious to 4px so that the focus ring would be nicely rounded was worse than keeping the initial border radius. This meant more per-component customization!

Removing the outline

You may have noticed that so far, none of the code samples for keyboard styles have actually hidden the default browser outline focus indicator. This is an area where we initially made an error: we naively added outline: none, thinking that our fancy new two-toned box-shadow-based focus ring would suffice.

We were wrong!

We forgot to consider and failed to test cases where users are in OS-based high contrast modes. High contrast modes essentially limit the colors that users are shown -- the mode is not inherently high contrast, since the user can customize the palette that is used -- by removing extraneous styling and forcing styles to match the given palette.

Guess what counts as extraneous? The box-shadow comprising our two-toned focus ring!

And if you set outline: none, the outline will not show in high contrast mode either.

Instead of setting outline: none, we need to change the outline to be transparent for keyboard users: Css.outline3 (Css.px 2) Css.solid Css.transparent. The transparent color will (perhaps surprisingly) be coerced to a real color in high contrast mode.

Summing it up

Customizing the look of a focus indicator can make it more useful for users, but it can take a surprising amount of work to get it just right. This work will be easier if you have a centralized place to see every common focusable element from your application at once. The two-toned focus ring in particular is great if your application has content over many different colored backgrounds, but it will be harder to implement if you commonly use box-shadows to accentuate inputs. Don't forget to consider and test high-contrast mode!

Relevant resources

WCAG 2.1 Understanding Success Criterion 2.4.7: Focus Visible

WCAG 2.1 Technique C4: Creating a two-color focus indicator to ensure sufficient contrast with all components

Tessa Kelly

@t_kelly9

0 notes

Text

Presenting Styleguide Colors

The Web Content Accessibility Guidelines (WCAG) include guidelines around how to use colors that contrast against each other so that more people can distinguish them. Color is a fuzzy topic (brains, eyes, displays, and light conditions are all complicating factors!) so it's a good idea to rely on industry-wide standards, like WCAG. The current version of the WCAG standards define algorithms for calculating luminance & contrast and set target minimum contrasts depending on the context in which the colors are used.

These algorithms should be used for their primary purpose -- ensuring that content is accessible and conforms to WCAG -- but they can also be used for other purposes, like making colors in a styleguide presentable.

Colors & Branding

NoRedInk is an education technology site, and the vast majority of our users are students. We use a lot of colors!

To keep track of our colors, we have one file that defines all of our color values with names. We use the Elm programming language on the frontend with the Elm package rtfeldman/elm-css, so our color file looks something like this:

module Nri.Colors exposing (..) import Css exposing (Color) redLight : Color redLight = Css.hex "#f98e8e" redMedium : Color redMedium = Css.hex "#ee2828" ...

(But we have around 70 named colors instead of two.)

We also have a styleguide view that shows each color in an appropriately-colored tile along with the color's name, hex value, and rgb value. These tiles help facilitate communication between Engineering and Design.

But how to present information on the color tiles? How can we make sure that the name and metadata about the color are readable on top of an arbitrarily-colored tile?

We can apply some of the color math that WCAG uses when considering contrast!

Luminance & Contrast

The calculation for "relative luminance" takes a color defined in terms of sRGB and figures out a rough approximation of perceptual brightness. "Relative luminance" is inherently conceptually squishy, but it is a reasonable representation of how prominent a given color is to the human eye.

The relative luminance of pure black is 0 and the relative luminance of pure white is 1.

Once you have the relative luminance of two colors, you're ready to figure out the contrast ratio between them.

(L1 + 0.05) / (L2 + 0.05)

L1 is the relative luminance of the lighter of the colors, and

L2 is the relative luminance of the darker of the colors.

For black and white:

(whiteLuminance + 0.05) / (blackLuminance + 0.05) (1 + 0.05) / (0 + 0.05) 21 Css.Color -> Html msg viewColor name color = dl [ css [ -- Dimensions Css.flexBasis (Css.px 200) , Css.margin Css.zero , Css.padding (Css.px 20) , Css.borderRadius (Css.px 20) -- Internals , Css.displayFlex , Css.justifyContent Css.center -- Colors , Css.backgroundColor color , Css.color (Css.hex "#00") ] ] [ div [] [ dt [] [ text "Name" ] , dd [] [ text name ] , dt [] [ text "RGB" ] , dd [] [ text color.value ] ] ]

Ellie example 1

The content that is supposed to be showing on each tile is often totally illegible!

We know, though, which colors have the minimum and maximum luminance (black and white respectively), which means we know how to figure out whether to use black or white text on any arbitrary color tile.

At NoRedInk, we use the highContrast function from the color library tesk9/palette. tesk9/palette and rtfeldman/elm-css model colors differently, so we do need to do conversions back and forth, but the advantage is that we get nice-looking, readable color tiles without resorting to box-shadow effects or background color tricks. Depending on what rendering libraries you're using, you may or may not need to do conversions.

viewColor : String -> Css.Color -> Html msg viewColor name color = let highContrastColor = toCssColor (SolidColor.highContrast (fromCssColor color)) in dl [ css [ -- Dimensions Css.flexBasis (Css.px 200) , Css.margin Css.zero , Css.padding (Css.px 20) , Css.borderRadius (Css.px 20) -- Internals , Css.displayFlex , Css.justifyContent Css.center -- Colors , Css.backgroundColor color , Css.color highContrastColor ] ] [ div [] [ dt [] [ text "Name" ] , dd [] [ text name ] , dt [] [ text "RGB" ] , dd [] [ text color.value ] ] ]

Ellie example 2

Now the content is legible on each tile!

Legible according to which WCAG level?

I mentioned previously that context (including the target WCAG conformance level) influences the minimum level of contrast required. The highest WCAG level, AAA, requires 7:1 contrast for normal text sizes, which means if our high-contrast color generation always picks a color with at least a contrast of 7, we won't need to worry about the contextual details.

However, for colors with a luminance between 0.1 and 0.3, neither black nor white will be high-enough contrast for WCAG AAA. Either (not both!) black or white will be sufficient for WCAG AA, as the contrast is higher than 4.5.

Ellie with elm-charts code that produced the diagram

What sorts of colors might have a luminance between 0.1 and 0.3?

Relative luminance is defined as:

L = 0.2126 * R + 0.7152 * G + 0.0722 * B where R, G and B are defined as:

if RsRGB - if GsRGB - if BsRGB and RsRGB, GsRGB, and BsRGB are defined as:

RsRGB = R8bit/255

GsRGB = G8bit/255

BsRGB = B8bit/255

Relative luminance definition

I don't want to dig into the details of relative luminance too much, but it's worth paying attention to the different weights for red, green, and blue in the equation. Since the weight for red is 0.2126, pure red falls right in the zone where it cannot be used for WCAG AAA-conformant normal text.

Name RGB color Luminance White contrast Black contrast Red (255, 0, 0) 0.2126 3.99 5.25 Green (0, 255, 0) 0.7152 1.37 15.3 Blue (0, 0, 255) 0.0722 8.59 2.44

Going Further

There is lots more we could do with these color tiles. We could print the color value in other color spaces (if using tesk9/palette, this is easy to do with SolidColor.toHex and SolidColor.toHSLString). We could add a grayscale toggle (see SolidColor.grayscale) to help folks consider if they're using only color to indicate meaning (see "Understanding Success Criterion 1.4.1: Use of Color"). We could organize colors by purpose, by hue, by luminance, or by some combination thereof. We could add a chart showing all our colors & their contrast with all of our other colors, so it's easy for designers to check which colors they can use in combination with other colors. We could also consider the user experience we want when users have adjusted operating system-level color settings, like the popular Windows high contrast mode (learn more about Windows high contrast mode specifically in "The Guide To Windows High Contrast Mode" by Cristian Díaz in Smashing Magazine).

Contrast & luminance values are key building blocks in presenting colors in a styleguide, and, luckily, they are fun values to explore as well.

Future WCAG versions

Now that I've got you excited about WCAG 2.1's contrast calculation, I should also warn you that the 3.0 version of the color guidelines will almost certainly change dramatically. Until WCAG 3.0 is much further along, you likely do not need to understand the changes. The new version promises to be interesting!

If you'd like to play with the WCAG 3.0 Advanced Perception of Color Algorithm (APCA), the website Contrast tools is a nice place to start.

Future CSS properties

Someday, it will be possible to select high-enough contrast colors for a given context directly in CSS with color-contrast(). Watch this snippet from Adam Argyle's 2022 State of CSS presentation to learn more. Be sure to check support for color-contrast before using it anywhere user-facing, though.

Tessa Kelly @t_kelly9

0 notes

Text

SVGs as Elm Code

Moving SVGs out of the file system and into regular Elm code can make icons easier to manage, especially if you find you need to make accessibility improvements.

Imagine we have an arbitrary SVG file straight from our Design team’s tools:

<?xml version="1.0" encoding="utf-8"?><svg version="1.1" id="Layer_1" xmlns="http://www.w3.org/2000/svg" xmlns:xlink="http://www.w3.org/1999/xlink" x="0px" y="0px" viewbox="0 0 21 21" style="enable-background:new 0 0 21 21;" xml:space="preserve"><style type="text/css"> .st0{fill:#FFFFFF;stroke:#146AFF;stroke-width:2;} </style><title>star-outline</title><desc>Created with Something Proprietary 123.</desc><g id="Page-1"><g id="star-outline"><path id="path-1_1_" class="st0" d="M11.1,1.4l2.4,4.8c0.1,0.2,0.3,0.4,0.6,0.4l5.2,0.8c0.4,0.1,0.7,0.4,0.6,0.8 c0,0.2-0.1,0.3-0.2,0.4l-3.8,3.8c-0.2,0.2-0.2,0.4-0.2,0.6l0.9,5.3c0.1,0.4-0.2,0.8-0.6,0.8c-0.2,0-0.3,0-0.5-0.1l-4.7-2.5 c-0.2-0.1-0.5-0.1-0.7,0l-4.7,2.5c-0.4,0.2-0.8,0.1-1-0.3c-0.1-0.1-0.1-0.3-0.1-0.5l0.9-5.3c0-0.2,0-0.5-0.2-0.6L1.2,8.7 c-0.3-0.3-0.3-0.8,0-1c0.1-0.1,0.3-0.2,0.4-0.2l5.2-0.8c0.2,0,0.4-0.2,0.6-0.4l2.4-4.8c0.2-0.4,0.6-0.5,1-0.3 C10.9,1.1,11,1.3,11.1,1.4z"></path></g></g></svg>

Notice that there’s lots of extraneous information in the SVG — including some information that’s distinctly unhelpful! The title of the SVG ends up being used as the accessible name of the SVG — it’s more or less equivalent to an img tag’s alt. A title of “star-outline” will not help our users to understand what this icon is supposed to represent.

Compare the raw SVG value to what it might look like if rewritten as Elm code and tidied-up by a human developer:

import Svg exposing (..) import Svg.Attributes exposing (..) starOutline : Svg msg starOutline = svg [ x "0px" , y "0px" , viewBox "0 0 21 21" ] [ Svg.path [ fill "#FFF" , stroke "#146AFF" , strokeWidth "2" , d "M11.1,1.4l2.4,4.8c0.1,0.2,0.3,0.4,0.6,0.4l5.2,0.8c0.4,0.1,0.7,0.4,0.6,0.8 c0,0.2-0.1,0.3-0.2,0.4l-3.8,3.8c-0.2,0.2-0.2,0.4-0.2,0.6l0.9,5.3c0.1,0.4-0.2,0.8-0.6,0.8c-0.2,0-0.3,0-0.5-0.1l-4.7-2.5 c-0.2-0.1-0.5-0.1-0.7,0l-4.7,2.5c-0.4,0.2-0.8,0.1-1-0.3c-0.1-0.1-0.1-0.3-0.1-0.5l0.9-5.3c0-0.2,0-0.5-0.2-0.6L1.2,8.7 c-0.3-0.3-0.3-0.8,0-1c0.1-0.1,0.3-0.2,0.4-0.2l5.2-0.8c0.2,0,0.4-0.2,0.6-0.4l2.4-4.8c0.2-0.4,0.6-0.5,1-0.3 C10.9,1.1,11,1.3,11.1,1.4z" ] [] ]

Example 1 Ellie link

Once the SVG is rewritten in Elm, we can leverage the Elm type system to guarantee that icons in our application are always rendered in a consistent way. By exposing the Icon type but not exposing the Icon constructor, we can ensure that there's only one way to produce HTML from an Icon. This strategy is the opaque type pattern, which you can learn more about in former NoRedInk engineer Charlie Koster's blog post series on advanced types in Elm and in the Elm Radio podcast's Intro to Opaque Types episode.

module Icons exposing (Icon, toHtml, starOutline) type Icon = -- `Never` is used here so that our Icon type doesn't need a type hole. Essentially, the `Never` is saying "this kind of Svg cannot produce messages ever" Icon (Svg Never) toHtml : Icon -> Html msg toHtml (Icon icon) = -- "Html.map never" transforms `Svg msg` into `Svg Never` Html.map never icon starOutline : Icon -- notice the type changed starOutline = svg ... |> Icon

Now that we've got consistently-rendered icons, we can start thinking about what an accessible way to render the SVGs might be. Carie Fisher’s article Accessible SVGs - Perfect Patterns For Screen Reader Users is the resource to use when considering how to render SVGs in an accessible way. We will be using Pattern 5, <svg> + role="img" + <title>, to ensure that our meaningful icons have appropriate text alternatives. (You might also want to read through the WAI’s Images Tutorial if you haven't already — it might surprise you!)

We need to conditionally insert a title element into the list of SVG children — which means we need to change how we’re modeling Icon. We can easily & safely do this, though, because we’ve used an opaque type to minimize API disturbances.

type Icon = Icon { attributes : List (Attribute Never) , label : Maybe String , children : List (Svg Never) } toHtml : Icon -> Html msg toHtml (Icon icon) = case icon.label of Just label -> svg icon.attributes (Svg.title [] [ text label ] :: icon.children) |> Html.map never Nothing -> svg icon.attributes icon.children |> Html.map never emptyStar : Icon emptyStar = Icon { attributes = [ x "0px" , y "0px" , viewBox "0 0 21 21" ] , label = Nothing , children = [ Svg.path [ fill "#FFF" , stroke "#146AFF" , strokeWidth "2" , d "M11.1,1.4l2.4,4.8c0.1,0.2,0.3,0.4,0.6,0.4l5.2,0.8c0.4,0.1,0.7,0.4,0.6,0.8 c0,0.2-0.1,0.3-0.2,0.4l-3.8,3.8c-0.2,0.2-0.2,0.4-0.2,0.6l0.9,5.3c0.1,0.4-0.2,0.8-0.6,0.8c-0.2,0-0.3,0-0.5-0.1l-4.7-2.5 c-0.2-0.1-0.5-0.1-0.7,0l-4.7,2.5c-0.4,0.2-0.8,0.1-1-0.3c-0.1-0.1-0.1-0.3-0.1-0.5l0.9-5.3c0-0.2,0-0.5-0.2-0.6L1.2,8.7 c-0.3-0.3-0.3-0.8,0-1c0.1-0.1,0.3-0.2,0.4-0.2l5.2-0.8c0.2,0,0.4-0.2,0.6-0.4l2.4-4.8c0.2-0.4,0.6-0.5,1-0.3 C10.9,1.1,11,1.3,11.1,1.4z" ] [] ] }

Example 2 Ellie Link

At this point, the internals of Icon can handle a text alternative being present, but there’s no way from the API to actually set the text alternative . We need to expose a way to set the value of the label of emptyStar and any other icons we later create.

We could change emptyStar to take a Maybe String, or we could add a withLabel : String → Icon → Icon helper, or we could move the label value off of the Icon type and thread the value through toHtml.

At NoRedInk, we use the withLabel : String → Icon → Icon pattern, but any of these patterns could work.

withLabel : String -> Icon -> Icon withLabel label (Icon icon) = Icon { icon | label = Just label }

Once we have a way to add the text alternative to the Icon, we’re ready for the “role” portion of the <svg> + role="img" + <title> pattern.

import Accessibility.Role as Role -- this is from tesk9/accessible-html toHtml : Icon -> Html msg toHtml (Icon icon) = case icon.label of Just label -> svg (Role.img :: icon.attributes) (Svg.title [] [ text label ] :: icon.children) |> Html.map never Nothing -> svg icon.attributes icon.children |> Html.map never

Example 3 Ellie Link

Now, our functional icons should render nicely with a title and with the appropriate role! (Although if we were supporting Internet Explorer, we would also want to add focusable=false)

We still have the decorative icons to consider, though. We can mark these decorative icons as hidden in the accessibility tree so that assistive technologies know to ignore it with aria-hidden=true:

toHtml : Icon -> Html msg toHtml (Icon icon) = case icon.label of Just label -> svg (Role.img :: icon.attributes) (Svg.title [] [ text label ] :: icon.children) |> Html.map never Nothing -> svg (Aria.hidden True :: icon.attributes) icon.children |> Html.map never

Example 4 Ellie Link

And that’s it!

Over time, it’s likely that an Elm-based SVG icon library will need to support more and more customization: colors, styles, attributes, animations, hover effects. All of these and more can be added to the Icon opaque type, without breaking current icons.

There we have it: tidy icon modeling leading to tidy icons.

Tessa Kelly

@t_kelly9

0 notes

Text

Funding the Roc Programming Language

At NoRedInk, we're no strangers to cutting-edge technology or to funding open-source software. When React was released in the summer of 2013, we were early adopters. Shortly after that, we got into Elm—really into it—and we began not only sponsoring Elm conferences, but also funding Elm's development for several years by directly paying its creator, Evan Czaplicki, to develop the language full-time.

I'm beyond thrilled to announce that NoRedInk is now making a similar investment in the Roc programming language. Beginning in April, my job at NoRedInk will become developing Roc full-time!

I created Roc in 2018 because I wanted an Elm-like language for use cases that are out of scope for Elm. Roc compiles to machine code instead of to JavaScript, and at NoRedInk we're interested in using it on the server to go with our Elm frontend—as well as for some command-line tooling. Although Roc isn't ready for production use yet, funding its development like this will certainly get it to that point sooner.

It's impossible to overstate how excited I am about this opportunity. When I laid out a vision for Roc in a 2020 online meetup, I assumed I'd be developing it outside of work with a couple of other volunteers for the foreseeable future. I was stunned by the reaction to the video; many people started getting involved in developing the compiler—most of whom had never worked on a compiler before!—and one brave soul even did Advent of Code in Roc that year.

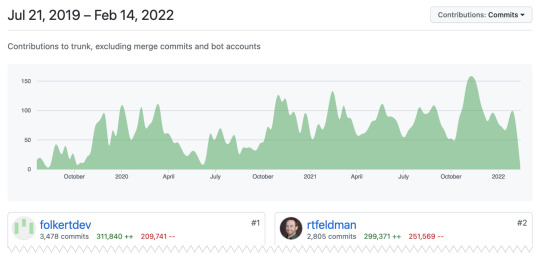

Today Roc has 12,660 commits. The top 5 committers all have either hundreds or thousands of commits, and even though I had a considerable head start, I no longer have the most commits in the repo - the excellent Folkert de Vries does. I'm massively grateful to every contributor for making this project exist, and although commits are easy to count, Roc's design and community would not be what they are without so many wonderful contributions outside the code base—in video chats, on GitHub issues, and of course on Roc chat. Thank you, all of you, for making Roc the language it is today.

The language still has a ways to go before it's ready for production use, but this investment from NoRedInk is both a game-changer for the project's development as well as a strong vote of confidence in Roc's future. Most companies benefit from the open-source ecosystem and give little to nothing back; I feel great about working for a company that builds a product that helps English teachers while making serious investments in open-source software.

By the way, we're hiring!

If you're interested in learning more about Roc, trying it out, or getting involved in its development, roc-lang.org has all the details. I'm so excited for the future, and can't wait for this language to reach its full potential!

Richard Feldman

@rtfeldman

0 notes

Text

Tuning Haskell RTS for Kubernetes, Part 2

We kept on tweaking our Haskell RTS after we reached "stable enough" in part 1 of this series, trying to address two main things:

Handle bursts of high concurrency more efficiently

Avoid throttling due to CFS quotas in Kubernetes

If you're unfamiliar with it, here's a comprehensive article from Omio

We also learned some interesting properties of the parallel garbage collector in the process.

TL;DR

Profile your app in production

Threadscope is your friend

Disabling the parallel garbage collector is probably a good idea

Increasing -A is probably a good idea

-N⟨x⟩ == available_cores is bad

We ran into this problem in the previous post of our series, where we tried to set:

-N3

--cpu-manager-policy=static

requests.cpu: 3

requests.limits: 3

We tried this configuration because we were hoping to disable Kubernetes CFS quotas and only rely on CPU affinity to prevent noisy neighbours and worker nodes overload.

Trying this out I saw p99 response times rise from 16ms to 29ms, enough to affect stability of our upstream services.

Confused, I reached out for help on the Haskell Discourse.

Threadscope

Folks on Discourse were quick to help me drill down into GC as a possible cause for slowness, but I had no idea how to go about tuning it, if not at random.

The first helpful advice I got was to use Threadscope, a graphical viewer for thread profile information generated by GHC.

Capturing event logs for ThreadScope

The first thing I had to do to be able to use ThreadScope was to build a version of our app with the -eventlog flag in our package.yaml:

executables: quiz-engine-http: dependencies: ... ghc-options: - -threaded + - -eventlog main: Main.hs ...

This makes it so our app ships with the necessary instrumentation, which we can turn on and off at launch.

Then I had to enable event logging by launching our app with the -l RTS flag, like so:

$ quiz-engine-http +RTS -N3 -M5.8g -l -RTS

This makes it so Haskell logs events to a file while it's running. I decided to make a single Pod use these settings alongside the rest of our fleet, taking production traffic.

Last, I had to grab the event log, which gets dumped to a file like your-executable-name.eventlog. That could be done with kubectl cp.

The log grew around 1.2MB/s, and Threadscope takes a while to load large event logs, so I went for short recording sessions of around 3min.

Launching ThreadScope

With the event log in hand, I could finally launch ThreadScope:

$ threadscope quiz-engine-http.eventlog

ThreadScope showed me a chart of app execution vs GC execution and a bunch of metrics.

Interesting metrics

Productivity was the first interesting metric I saw. It tells you what % of time your actual application code is running, the remainder of which is taken over by GC.

In our case, we had 88.2% productivity, so 11.8% of the time our app was doing nothing, waiting for the garbage collector to run.

Our average GC pause was 20μs long, or 0.0002s. Really fast.

GHC made 103,060 Gen 0 collections in the 210s period, which is a bit ridiculous. This means we did 490 pauses per second, or one pause every 2ms. Our app's average response time is 1.8ms, so with 3 capabilities, we were running GC on average once every 6 requests.

In comparison, we made 243 Gen 1 collections, so a little over 1s. Gen 1 was OK.

Is the parallel GC helping me?

Another quick suggestion I got on Discourse was disabling the parallel garbage collector, so I went on to test that, with Threadscope by my side.

I used the -qg RTS flag to disable parallel GC, and -qn⟨x⟩ for keeping it enabled, but only using ⟨x⟩ threads.

This is how ThreadScope metrics and our 99th percentile response times were affected by the different settings:

RTS setting p99 productivity g0 pauses/s avg g0 pause -N3 -qn3 29ms 88.2% 488 0.2ms -N3 -qn2 21ms 89.8% 558 0.1ms -N3 -qg 17ms 88.9% 593 0.1ms

Pauses times seemed to improve, but we don't have enough resolution in Threadscope to see whether it was a 0.01ms improvement, or a full 0.1ms improvement.

Collections got more frequent, for reasons unknown

Productivity lowered when we went down from 2 threads to 1 thread

p99 response time was the best when the parallel GC was disabled

Conclusion: the parallel GC wasn't helping us at all

Is our allocation area the right size?

The last of the helpful suggestions we got on Discourse was tweaking -A, which controls the size of Gen 0.

The docs warn:

Increasing the allocation area size may or may not give better performance (a bigger allocation area means worse cache behaviour but fewer garbage collections and less promotion).

What does cache behavior mean here? Googling led me to this StackOverflow answer by Simon Marlow explaining that using -A higher than the CPU's L2 cache size means we lower the L2 hit rate.

Our AWS instances are running Intel Xeon Platinum 8124M, which has 1MB of L2 cache per core, and the default -A is 1MB, so any increase would already spell a reduced L2 hit rate for us.

We compared 3 different scenarios:

RTS setting p99 productivity g0 pauses/s avg g0 pause -N3 -qn3 -A1m 29ms 88.2% 488 0.2ms -N3 -qn3 -A3m 18ms 95.6% 144 0.2ms -N3 -qn3 -A128m 16ms 99.6% 1.2 2ms

The L2 cache hit rate penalty didn't seem to affect the sorts of computations we are running, as -A128m still has the fastest p99 response time.

-A128m seemed a bit ridiculous, but we had memory to spare, so we went with it. The 2ms average pause was close to our p75 response time, so it seemed fine to stop the world once per second for the time of 1 request slow'ish request to take out the trash.

Unlocking higher values for -N

Our app had been having hiccups in production. For a second a database would get slow and would cause our Haskell processes, which usually handle around 2-4 in-flight requests at a time, to flood with 20-40 of them.

Eating through this pile of requests would often take less than a minute, but would then cascade upstream into request queueing and some high-latency alerts, informing us that a high percentage of our users were having a frustrating experience with our website.

Whenever this happened, we did not see CPU saturation. CPU usage remained around 65-70%. It made me think our Haskell threads were not being effective, and higher parallelism could help us leverage our cores better, even at the cost of some context switching.

I was eager to try a higher -N than the taskset core count I gave to our processes, but was unable to until now, because setting -N higher than the core count would bring the productivity metric down quickly, and would increase p99 response times.

With our new findings, and a close eye on nonvoluntary_ctxt_switches in /proc/[pid]/status, I managed to get to us to -N6, which seemed enough to reduce the frequency of our hiccups to a few times a month, versus daily.

These were our final RTS settings, with -N6, compared to what we started:

RTS setting p99 productivity g0 pauses/s avg g0 pause -N3 -qn3 -A1m 29ms 88.2% 488 0.2ms -N6 -qg -A128m 13ms 99.5% 0.8 4.2ms

These numbers were captured on GHC 8.8.4. We did upgrade to GHC 8.10.6 to try the new non-moving garbage collector, but saw no improvement.

Conclusion

Haskell has pretty good instrumentation to help you tune garbage collection. I was intimidated by the prospect of trying to tune it without building a mental model of all the settings available first, but profiling our workload in production proved easy to set up and quick to iterate on.

Juliano Solanho @julianobs Engineer at NoRedInk

Thank you Brian Hicks and Ju Liu for draft reviews and feedback! 💜

0 notes

Text

Tuning Haskell RTS for Kubernetes, Part 1

We're running Haskell in production. We've told that story before.

We are also running Haskell in production on Kubernetes, but we never talked about that. It was a long journey and it wasn't all roses, so we're going to share what we went through.

TL;DR

Configure Haskell RTS settings:

Match -N⟨x⟩ to your limits.cpu

Match -M⟨size⟩ to your limits.memory

You can set them between +RTS ... -RTS arguments to your program, like +RTS -M5.7g -N3 -RTS.

Our scenario

We had been running Haskell in production before Kubernetes. Each application was the single inhabitant of its own EC2 instance. Things were smooth. We launched the executable, provisioned what looked like fast enough instances, and things just worked.

We could have kept our conditions pretty much the same when moving to Kubernetes by giving our Haskell Pods as much requests.memory and requests.cpu as our worker nodes, so each machine runs a single Pod.

We had two main incentives to run small Pods, all packed together into beefier worker nodes:

Our traffic is very seasonal, and even within a single day we go from 1000 requests per minute at night to close to 500,000 requests per minute when both east- and west-coast are at school. If we can scale down to the smallest footprint at idle, we save money.

We use Datadog for infrastructure monitoring, and Datadog charges customers on a per-host basis. If we used small worker nodes, at peak traffic we'd be needing so many of them that our Datadog bill would become prohibitive.

We wanted effective resource utilization at idle and at peak while keeping costs under control.

We googled for tips, war stories or even fanfiction on Haskell in Kubernetes, and the two ⁽¹⁾⁽²⁾ results we found were pretty old, and didn't get into any specifics on how Haskell itself behaves in a containerized environment, so it seemed like there'd be no dragons here.

With this in mind we launched our highest traffic Haskell service in prod with:

2 cores

200MB memory

70% target CPU usage on our Horizontal Pod Autoscaler

And called it a day.

Fires

After we went live we saw:

😨 Terrible performance: everything was slow

😳 Frequent container restarts: it looked like the GC wasn't working at all and the processes were getting OOMKilled frequently

🤕 Horrendous performance at scale-up: When we got bursts of traffic, response times would shoot up and cause request queueing in our upstream service

This last one was kind of obvious. At 70% target CPU usage, even if our app was able to saturate the machine's CPU to 99.99% without slowing down, and even if it had linear rate for requests to CPU usage, we'd only have room for 30% growth in traffic while waiting for a scale-up. This was not enough slack, due to two main factors:

AWS EKS takes close to 3 minutes to scale up Pods when worker node scaling is also necessary. 3 minutes is a lot time when we're ramping up 500x in a few hours. At peak season, we more than double our traffic every 3 minutes during ramp-up when the East Coast is starting school.

It's partly fixable by using the cluster-overprovisioner pattern, which we do, but outside of 1-node scale-ups, the option seems to be tweaking AWS VPC CNI's startup, which we haven't looked into yet.

Kubernetes has no concept of create-before-destroy. Shifting Pods around for Cluster Autoscaler's bin-packing operation and for kubectl drain works by first terminating one or more Pods, then letting the scheduler recreate them on another Node. Say we have 3 Pods alive, between terminating one Pod and going Ready with its substitute, our compute capacity is reduced by 33%.

It might be fixable by writing our own create-before-destroy operation, forking cluster-autoscaler to use that instead, and also using it to write our own drain script. Things like Pod eviction due to taints will be out of reach, but that might be acceptable. Regardless, we chose not to go down that path.

So we lowered our target CPU usage to 50%, and scale-ups were safe.

While fighting frequent container restarts, we kept being less conservative about memory, going all the way up to 2GB per core. Our app consistently used ~100MB of RAM before moving to Kubernetes, so we were surprised. It might be we introduced space/memory leaks at the same time as we moved to Kubernetes, but also, the Haskell garbage collector didn't seem to be aware it was reaching a memory limit. So we started looking at Haskell RTS GC settings.

While diagnosing terrible performance, we noticed Haskell was spinning up dozens of threads for each tiny Pod, and we knew from working with the Elm compiler (also written in Haskell), that Haskell doesn't care about virtualized environments when figuring out the capabilities of the machine it's running on. We figured something similar was at play and we might have to tune the RTS.

Tuning the RTS

The two settings that helped us get over terrible performance and frequent container restarts were:

-M⟨size⟩

This setting tells the Haskell RTS what should be the maximum Heap size, which also informs other garbage collector parameters.

So we set the maximum heap size a bit below our Pods' limits.memory, and the GC started acting more aggressively to prevent us from going over limits.memory. We managed to stop getting OOMKilled.

Eventually, as sneakily as they appeared, our space or memory leaks went away, and we went down to a stable 200MB of memory usage per process.

-N⟨x⟩

The docs are a bit misleading here:

Use ⟨x⟩ simultaneous threads when running the program

Without reading further, we thought setting -N2 would get us 2 threads for our 2-core Pods, but we were still seeing more than 10 threads per process.

⟨x⟩ here is what the RTS calls capabilities, which the docs clarify further on:

A capability is animated by one or more OS threads; the runtime manages a pool of OS threads for each capability, so that if a Haskell thread makes a foreign call (see Multi-threading and the FFI) another OS thread can take over that capability.

Normally ⟨x⟩ should be chosen to match the number of CPU cores on the machine

Ok, that's expected then, albeit a bit weird that it's such a big pool for only two capabilities.

Regardless, performance was actually good again with -N matching our CPU count.

In the end, we landed on 3 cores per Pod and -N3: Kubernetes reserves a few hundred millicores of each worker node for its manager process (the kubelet) and this meant we'd only be able to use 14 cores on a 16 cores node. 2 cores would go to waste, unless we had enough pebbles in our cluster, which we didn't.

Obligatory detour through CFS Throttling

At the same time we also learned about CFS throttling, and learned to keep an eye on how much we were getting throttled. For -N2 and 2 cores, it was infrequent.

In the hopes of disabling CFS completely, like Zalando did, we did trial running our Nodes with --cpu-manager-policy=static. This uses taskset to give Pods exclusive access to certain cores.

Our idea was to constrain high throughput Pods to their own cores, in order to spare processes from noisy neighbours and prevent worker nodes from overloading.

We saw a steep drop in performance, so we backed away. We ended up figuring out why, but that's the subject of another blog post. (hint: it's the parallel GC)

Production-ready enough

Performance was good

Containers weren't restarting anymore

We were churning out close to 500,000 requests per minute on 7 Pods, each with 3 capabilities and eating less than 200MB of RAM

Autoscaling was smooth

It wasn't the end of our ramblings on the Haskell RTS options page, we still had daily incidents where Haskell would slow down for a few seconds, cause upstream request queueing and trigger our fire alerts, but that's a story for another day.

Juliano Solanho @julianobs Engineer at NoRedInk

Thank you, Brian Hicks, Ju Liu and Richard Feldman for draft reviews and feedback! ❤️

1 note

·

View note

Text

Haskell for the Elm Enthusiast

Many years ago NRI adopted Elm as a frontend language. We started small with a disposable proof of concept, and as the engineering team increasingly was bought into Elm being a much better developer experience than JavaScript more and more of our frontend development happened in Elm. Today almost all of our frontend is written in Elm.

Meanwhile, on the backend, we use Ruby on Rails. Rails has served us well and has supported amazing growth of our website, both in terms of the features it supports, and the number of students and teachers who use it. But we’ve come to miss some of the tools that make us so productive in Elm: Tools like custom types for modeling data, or the type checker and its helpful error messages, or the ease of writing (fast) tests.

A couple of years ago we started looking into Haskell as an alternative backend language that could bring to our backend some of the benefits we experience writing Elm in the frontend. Today some key parts of our backend code are written in Haskell. Over the years we’ve developed our style of writing Haskell, which can be described as very Elm-like (it’s also still changing!).

🌳 Why be Like Elm?

Elm is a small language with great error messages, great documentation, and a great community. Together these make Elm one of the nicest programming languages to learn. Participants in an ElmBridge event will go from knowing nothing of the language to writing a real application using Elm in 5 hours.

We have a huge amount of Elm code at NoRedInk, and it supports some pretty tricky UI work. Elm scales well to a growing and increasingly complicated codebase. The compiler stays fast and we don’t lose confidence in our ability to make changes to our code. You can learn more about our Elm story here.

📦 Unboxing Haskell

Haskell shares a lot of the language features we like in Elm: Custom types to help us model our data. Pure functions and explicit side effects. Writing code without runtime exceptions (mostly).

When it comes to ease of learning, Haskell makes different trade-offs than Elm. The language is much bigger, especially when including the many optional language features that can be enabled. It’s entirely up to you whether you want to use these features in your code, but you’ll need to know about many of them if you want to make use of Haskell’s packages, documentation, and how-tos. Haskell’s compiler errors typically aren’t as helpful as Elm’s are. Finally, we’ve read many Haskell books and blog posts, but haven’t found anything getting us from knowing no Haskell to writing a real application in it that’s anywhere near as small and effective as the Elm Guide.

🏟️ When in Rome, Act Like a Babylonian

Many of the niceties we’re used to in Elm we get in Haskell too. But Haskell has many additional features, and each one we use adds to the list of things that an Elm programmer will need to learn. So instead we took a path that many in the Haskell community took before us: limit ourselves to a subset of the language.

There are many styles of writing Haskell, each with its own trade-offs. Examples include Protolude, RIO, the lens ecosystem, and many more. Our approach differs in being strongly inspired by Elm. So what does our Elm-inspired style of writing Haskell look like?

🍇 Low hanging fruit: the Elm standard library

Our earliest effort in making our Haskell code more Elm-like was porting the Elm standard library to Haskell. We’ve open-sourced this port as a library named nri-prelude. It contains Haskell counterparts of the Elm modules for working with Strings, Lists, Dicts, and more.

nri-prelude also includes a port of elm-test. It provides everything you need for writing unit tests and basic property tests.

Finally, it includes a GHC plugin that makes it so Haskell’s default Prelude (basically its standard library) behaves like Elm’s defaults. For example, it adds implicit qualified imports of some modules like List, similar to what Elm does.

🎚️ Effects and the Absence of The Elm Architecture

Elm is opinionated in supporting a single architecture for frontend applications, fittingly called The Elm Architecture. One of its nice qualities is that it forces a separation of application logic (all those conditionals and loops) and effects (things like talking to a database or getting the current time). We love using The Elm Architecture writing frontend applications, but don’t see a way to apply it 1:1 to backend development. In the F# community, they use the Elm Architecture for some backend features (see: When to use Elmish Bridge), but it’s not generally applicable. We’d still like to encourage that separation between application logic and effects though, having seen some of the effects of losing that distinction in our backend code. Read our other post Pufferfish, please scale the site! if you want to read more about this.

Out of many options we’re currently using the handle pattern for managing effects. For each type of effect, we create a Handler type (we added the extra r in a typo way back and it has stuck around. Sorry). We use this pattern across our libraries for talking to outside systems: nri-postgresql, nri-http, nri-redis, and nri-kafka.

Without The Elm Architecture, we depend heavily on chaining permutations through a stateful Task type. This feels similar to imperative coding: First, do A, then B, then C. Hopefully, when we’re later on in our Haskell journey, we’ll discover a nice architecture to simplify our backend code.

🚚 Bringing Elm Values to Haskell

One way in which Haskell is different from both Elm and Rails is that it is not particularly opinionated. Often the Haskell ecosystem offers multiple different ways to do one particular thing. So whether it’s writing an http server, logging, or talking with a database, the first time we do any of these things we’ll need to decide how.

When adopting a Haskell feature or library, we care about

smallness, e.g. introduce new concepts only when necessary

how “magical” is it? E.g. How surprising is it?

How easy is it to learn?

how easy is it to use?

how comprehensible is the documentation?

explicitness over terseness (but terseness isn’t implicitly bad).

consistency & predictability

“safety” (no runtime exceptions).

Sometimes the Haskell ecosystem provides an option that fits our Elm values, like with the handle pattern, and so we go with it. Other times a library has different values, and then the choice not to use it is easy as well. An example of this is lens/prism ecosystem, which allows one to write super succinct code, but is almost a language onto itself that one has to learn first.

The hardest decisions are the ones where an approach protects us against making mistakes in some way (which we like) but requires familiarity with more language features to use (which we prefer to avoid).

To help us make better decisions, we often try it both ways. That is, we’re willing to build a piece of software with & without a complex language feature to ensure the cost of the complexity is worth the benefit that the feature brings us.

Another approach we take is making decisions locally. A single team might evaluate a new feature, and then demo it and share it with other teams after they have a good sense the feature is worth it. Remember: a super-power of Haskell is easy refactorability. Unlike our ruby code, going through and doing major re-writes in our Haskell codebase is often an hours-or-days-long (rather than weeks-or-months-long) endeavor. Adopting two different patterns simultaneously has a relatively small cost!

Case studies in feature adoption:

🐘 Type-Check All Elephants

One example where our approach is Elm-like in some ways but not in others is how we talk to the database. We’re using a GHC feature called quasiquoting for this, which allow us to embed SQL query strings directly into our Haskell code, like this:

{-# LANGUAGE QuasiQuotes #-} module Animals (listAll) where import Postgres (query, sql) listAll :: Postgres.Handler -> Task Text (List (Text, Text)) listAll postgres = query postgres [sql|SELECT species, genus FROM animals|]

A library called postgresql-typed can test these queries against a real Postgres database and show us an error at compile time if the query doesn’t fit the data. Such a compile-time error might happen if a table or column we reference in a query doesn’t exist in the database. This way we use static checks to eliminate a whole class of potential app/database compatibility problems!

The downside is that writing code like this requires everyone working with it to learn a bit about quasi quotes, and what return type to expect for different kinds of queries. That said, using some kind of querying library instead has a learning curve too, and query libraries tend to be pretty big to support all the different kinds of queries that can be made.

🔣 So Many Webserver Options

Another example where we traded additional safety against language complexity is in our choice of webserver library. We went with servant here, a library that lets you express REST APIs using types, like this:

import Servant data Routes route = Routes { listTodos :: route :- "todos" :> Get '\[JSON\] [Todo], updateTodo :: route :- "todos" :> Capture "id" Int :> ReqBody '[JSON] Todo :> Put '[JSON] NoContent, deleteTodo :: route :- "todos" :> Capture "id" Int :> Delete '[JSON] NoContent } deriving (Generic)

Servant is a big library that makes use of a lot of type-level programming techniques, which are pretty uncommon in Elm, so there’s a steep learning cost associated with understanding how the type magic works. Using it without a deep understanding is reasonably straightforward.

The benefits gained from using Servant outweigh the cost of expanded complexity. Based on a type like the one in the example above, the servant ecosystem can generate functions in other languages like Elm or Ruby. Using these functions means we can save time with backend-to-frontend or service-to-service communication. If some Haskell type changes in a backward-incompatible fashion we will generate new Elm code, and this might introduce a compiler error on the Elm side.

So for now we’re using servant! It’s important to note that what we want is compile-time server/client compatibility checking, and that’s why we swallow Servant’s complexity. If we could get the same benefit without the type-level programming demonstrated above, we would prefer that. Hopefully, in the future, another library will offer the same benefits from a more Elm-like API.

😻 Like what you see?

We're running the libraries discussed above in production. Our most-used Haskell application receives hundreds of thousands of requests per minute without issue and produces hardly any errors.

Code can be found at NoRedInk/haskell-libraries. Libraries have been published to hackage and stackage. We'd love to know what you think!

2 notes

·

View notes

Text

🌉 Bridging a typed and an untyped world

Even if you work in the orderly, bug-free, spic-and-span, statically-typed worlds of Elm and Haskell (like we do at NoRedInk, did you know we’re hiring?), you still have to talk to the wild free-wheeling dynamically-typed world sometimes. Most recently: we were trying to bridge the gap between Haskell (🧐) and Redis(🤪). Here we’ll discuss two iterations of our Redis library for Haskell, nri-redis.

All examples in this code are in Haskell and use a few functions from NoRedInk’s prelude nri-prelude. Specifically, we will use |> instead of &, Debug.toString instead of show and a few functions from Expect. Most of the example code could be real tests.

💬 Redis in Haskell

Let’s begin with a look at an earlier iteration of nri-redis (a wrapper around hedis). We are going to work with two functions get and set which have the following type signatures:

set :: Data.Aeson.ToJSON a => Text -> a -> Query () get :: Data.Aeson.ToJSON a => Text -> Query (Maybe a)

Let’s use this API for a blogging application that stored blog posts and users in Redis.

data User = User { name :: Text -- maybe more fields later } deriving (Generic, Show, Eq) data Post = Post { title :: Text -- ... } deriving (Generic, Show)

Maybe you noticed that we derive Generic for both types. We will store users and posts as JSON in Redis. Storing data as JSON in Redis is simple, and we only need an additional instance for decoding and encoding to JSON.

instance Data.Aeson.ToJSON User instance Data.Aeson.FromJSON User instance Data.Aeson.ToJSON Post instance Data.Aeson.FromJSON Post

Now how do we write something to Redis?

Redis.set "user-1" User { name = "Luke" } -- create a query |> Redis.query handler -- run the query |> Expect.succeeds -- fail the test if the query fails

We use Redis.set, which corresponds to set. We can then execute the query using Redis.query. We can read the data back using a get.

maybeUser <- Redis.get "user-1" |> Redis.query handler |> Expect.succeeds Expect.equal (Just User {name = "Luke" }) maybeUser

🐛 What can go wrong?

Now that we know how to read and write to Redis let’s look at this example. Can you spot the error?

let key1 = "user-1" let key2 = "post-1" Redis.set key1 User { name = "Obi-wan Kenobi" } |> Redis.query handler |> Expect.succeeds Redis.set key1 Post { title = "Using the force to wash your dishes" } |> Redis.query handler |> Expect.succeeds maybeUser <- Redis.get key1 |> Redis.query handler |> Expect.succeeds Expect.equal (Just User {name = "Obi-wan Kenobi"}) maybeUser -- !!! "Could not decode value in key: Error in $: parsing User(User) failed, key 'name' not found"

A runtime error?! in Haskell?! Say it ain’t so.

Maybe you spotted the bug: We are using key1 to set the post instead of key2.

First, we set the data in key1 to be a User

We then replaced it with a Post.

We fetch the data from key1 (a Post) into maybeUser.

The compiler thinks maybeUser is of type Maybe User, because we compare it with Maybe User in Expect.Equal.

At runtime, the generated code from the FromJSON instance will then fail to decode the Post’s json-serialization into User.

which will cause our program to crash.

This is not the only thing that can go wrong! Let’s consider the next example:

let users = [ ("user-1", User { name = "Obi"}) , ("user-2", User { name = "Yoda"}) ] Redis.set "user-1" users |> Redis.query handler |> Expect.succeeds maybeUser <- Redis.get "user-1" |> Redis.query handler |> Expect.succeeds Expect.equal (Just User { name = "Obi"}) maybeUser -- !!! "Could not decode value in key: Error in $: parsing User(User) failed, expected Object, but encountered Array"

We called set with users instead of a User (or called Redis’s mset on the list users). Again, this compiles but fails at runtime when we assume that we receive one User when we call get for this key.

🛡️ Can we make the bug impossible?

The previous examples showed how easy it was to write bugs with such a generic API—the program compiled in both cases but failed at runtime. The compiler couldn't save us because set and get both accept any Text as a key and only constrain the value to have an instance of To/FromJSON.

-- reminder: the API that allowed all kinds of havoc set :: Data.Aeson.ToJSON a => Text -> a -> Query () get :: Data.Aeson.ToJSON a => Text -> Query (Maybe a)

Want to set a User and fetch a list of Posts from the same key? This API will let you (and then fail loudly in production).

Ideally, we would get a compiler error that prevents mixing up keys or passing the wrong value when writing to Redis. We decided to use an Elm-ish approach, avoiding making the API too magic.

Instead of using commands directly, we introduced a new type called Redis.Api key value. Its purpose is to bind a concrete type for key and value to commands.

Let’s try to make the same mistake we made earlier, using the wrong type, with our new Redis.Api. Let’s first create such an Api.

newtype UserId = UserId Int userApi :: Redis.Api UserId User userApi = Redis.jsonApi (\userId -> "user-" ++ Debug.toString userId)

We bound UserId to User by adding a top-level definition and giving it a type signature. Additionally, we created a newtype instead of relying on Text as the key. This will guarantee that we don’t call userApi with a post key.

Now to use this, we call functions by “passing” them the new userApi. Side note: Redis.Api is a record, and we get the commands from it.

let users = [(UserId 1, User { name = "Obi"}), (UserId 2, User { name = "Yoda"})] Redis.set userApi (UserId 1) users |> Redis.query handler |> Expect.succeeds

We catch the bug at compile time without writing a test (and well before causing a fire in production).

• Couldn't match expected type ‘User’ with actual type ‘[(UserId, User)]’ | 325 | Redis.set userApi key1 users | ^^^^^

🤗 Making Tools Developer-Friendly

Our initial Redis API used a very generic type for keys and values (only constraint to implement To/FromJSON). This optimized the library for versatility but made the resulting application code less maintainable and error-prone.