
Resolution vs Sharpness: Slide 61

Slide 61 sits somewhere in the middle of a 98-slide Keynote deck presented by Panavision and Light Iron at the 2017 Camerimage Film Festival in Bydgoszcz, Poland. I’ve spent time at numerous festivals including Cannes, Sundance, and Tribeca, but comparatively speaking, the Camerimage Film Festival is without question my favorite. Among many cultural elements, what sets this festival apart from others is the quality of lectures and the atmosphere for high-end learning. Unlike spontaneous panels or even Q&A’s at other festivals, Camerimage has top-quality presentations from both the creative and technical perspectives. Since my personal mantra is always to combine these worlds together with what I call a technative approach, Camerimage is an ideal place to hear new ideas, discuss new ideas, and present new concepts.

So last November, my colleagues Dan Sasaki and Ian Vertovec and I presented a resolution analysis in a session at the MCK Theater, a lecture hall a short walk away from the Opera Nova where most of the films at the festival are screened. Our session was titled “The Beauty of 8K Large Format,” and the 260-seat room quickly filled to capacity while the MCK lobby played host to another hundred or so who viewed the lecture on monitors that lined the lobby walls.

Throughout our presentation were excerpts from an interview with cinematographer Peter Deming, ASC that I conducted a few days before the event. Between Peter’s powerful testimonial and our research, I felt the audience was pretty engaged by the time we got to Slide 61. In fact, Slide 61 is pretty simple and straightforward. You don’t have to fully understand how CMOS image capture works in order to make sense of what Slide 61 demonstrates. But what I found most interesting is what Slide 61 means to different people. To some, it represents justification for a decade of digital cinema passion with resolution being a familiar anchor point. For others, Slide 61 explains a lot about the tenuous relationship between the different dogmas of the RED and Arri camps. And to some, Slide 61 represents something creatives haven’t considered before, perhaps an opportunity to take a second look at how resolution has not only been misunderstood, but misrepresented.

In all, Slide 61 sparked an impressive number of conversations in a dozen different tongues. Over the course of the next few days, I found myself in countless discussions with people from all over the world about our conclusions and was told by a few, “This was one of the best presentations I’ve ever seen!” (#humbled). Others said, “That slide (61) could change a lot about digital cinema theory going forward.” A few even said, “This is the slide heard around the world.”

Before we get to Slide 61, I wanted to briefly share a more personal note. This event embodies why I do what I do. Over the years I have had the honor of leading teams of talent who are willing to challenge the status quo and I always gravitate towards people who are comfortable with being uncomfortable. While all are welcome to disagree with my conclusions, I find few are willing to criticize my passion. My primary goal is to identify the boundaries of where technology and creativity intersect and learn how to leverage that area for improved creative control. While doing that, I aim to keep an open mind as to what living in this intersection teaches me over time. This technological and creative place is often a state of mind; a place where I believe the best ingredients reside for unbridled innovation. I call working in this place being technative.

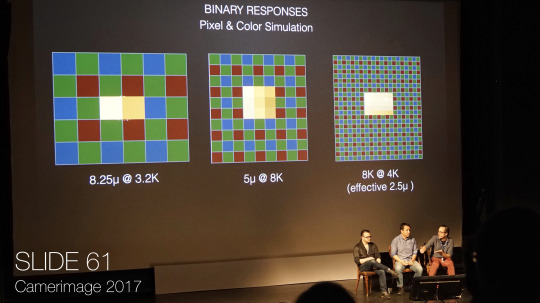

So here is Slide 61. Just a few colored boxes perched above a few basic numbers. This photo of myself, Dan, and Ian not only represents a crossroads in opinion, it’s also one of the most rewarding moments of my career. To get the full impact of this slide, you can watch the presentation here.

We all understand that some things in art are binary; that is to say they are absolute (aspect ratios, framerates, focal lengths, incident light readings, etc.). But we also respect many things in art are open to interpretation. This is why conversations are so critical and why an open mind is possibly the most important tool for artists, especially in technologically-driven industries like ours. In our Camerimage presentation, we argued that resolution is not only important, it’s the core ingredient to the 3 most important mechanical components in creating images from a camera:

1. Acquisition & Exhibition (separating these concepts from one another)

2. Resolution & Sharpness (separating these concepts from one another)

3. Magnification & Perspective (understanding their relationship with 1 & 2)

Every time Dan, Ian, and I made a point, we used a chart (binary) to back it up, followed by an image (subjective) to allow for personal interpretation. Our strategy in delivering this message was to use the combination of technology and creativity together in order to allow each audience member to follow along and draw their own conclusions. So why is it that Slide 61 resonated with so many people?

The actual story of Slide 61 began a few years back when I borrowed the first prototype RED 8K VV sensor from RED President, Jarred Land. At the time, many people on our team (not to mention the cinematography community) were concerned about what a 35 megapixel CMOS sensor would do to a face. Specifically, there was concern about the balance between sharpness, contrast, and optics based on a massive jump in motion picture pixel count and pixel density. At the time we were doing initial tests, the climate for large format photography was beginning an upward trend (you could argue, again). Arri had recently released the Alexa 65, a 20 megapixel, 54mm large format sensor. Tarantino had recently directed The Hateful Eight, shot and released in Panavision Ultra 70mm. My father and I had attended a special screening of Kubrick’s 2001: A Space Odyssey remastered in 4K and released on a brand new 65mm print at Arclight Cinemas. So after testing a prototype 8K Weapon, I decided to publish one of our tests and see what the reaction was. AC Phil Newman, Keenan Mock, and AP Megan Swanson helped me shoot a short portrait of my friend Erin Gales, a health coach and professional body builder. On the surface, this portrait was a safe way to gather intel about what people understood or didn’t understand about large format and high resolution. The comments on the Vimeo link still demonstrate how new the concept of large format is. It’s also pretty clear proof as to how expectation and bias significantly impact opinion.

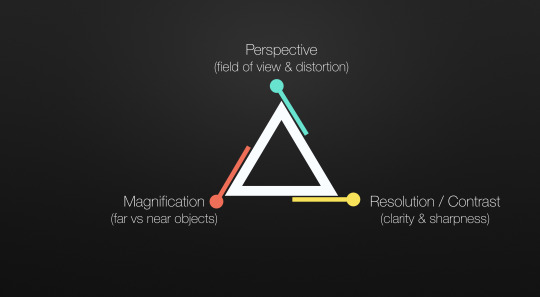

But underneath, this test was a way to further evaluate the Primo 70 lenses using the RED’s 16bit, 35 megapixel, 46mm large format sensor and how it would (eventually) behave in the DXL camera. I didn’t realize it at the time, but the results of this test ended up being the first step in understanding the elements that would eventually make up Slide 61: perspective, magnification, resolution and contrast. These are the core elements that make a large format image trigger a different response from that of typical 35mm images (it’s actually also one of the main reasons other than aspect ratio that anamorphic images trigger such a unique response). The below equilateral triangle was designed by Dan Sasaki to measure the balance between these three tenets and how they rely on each other to improve an image.

It was images from the Erin Gales test (and subsequent tests like it) that helped pave the way for Dan’s triangle. In the below frame grab you can see the physical results of a shallower depth of field and increased z-depth based on the focal length (magnification), less distortion or trapezoidal geometry (perspective), and more subtle transitions in dynamic range from shadow to highlight (resolution contrast). These exact characteristics in this exact situation are only possible through the combination of high resolution and a large format sensor.

Now, it is possible for these tenets to be independent from one another. For example, you can have high resolution (8K) on a smaller sensor (such as a Helium) which results in less magnification but more transitional dynamic range (due to a 3.6µ pixel pitch). Or you can have less resolution (6K) on a larger sensor (59mm) which results in more magnification but with less transitional dynamic range (due to an 8.25µ pixel pitch).
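For readers who want to see where pixel pitch figures like these come from, here is a minimal sketch of the arithmetic: pitch is roughly the active sensor width divided by the number of photosites across it. The widths and photosite counts below are my own assumptions for illustration (they are not stated in this post), and the function name is hypothetical.

```python
def pixel_pitch_um(sensor_width_mm: float, horizontal_photosites: int) -> float:
    """Approximate pixel pitch in microns: active width / photosites across."""
    return sensor_width_mm / horizontal_photosites * 1000.0


# Assumed, illustrative figures (not taken from the post):
# a Super 35-sized 8K sensor vs. a 65mm-format 6K-class sensor.
small_8k = pixel_pitch_um(sensor_width_mm=29.90, horizontal_photosites=8192)
large_6k = pixel_pitch_um(sensor_width_mm=54.12, horizontal_photosites=6560)

print(f"8K on the smaller sensor: {small_8k:.2f} microns")
print(f"6K on the larger sensor:  {large_6k:.2f} microns")
```

Under those assumptions the two results land close to the pitches quoted above, which is the point: resolution and sensor size are separate dials, and pixel pitch is what falls out when you set both.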

This is probably as good a place as any to bring up a comment you may have heard before...maybe you’re even thinking of it now. In fact, if you ever want to see Ian and me get visibly frustrated, just walk up to either of us and say, “We don’t want more pixels we want better pixels!” Not only does this statement make no sense, we believe it is merely a product designed to inaccurately satisfy a significant confirmation bias. Here is how I break this phrase down: in order to understand the claim, “We don’t want more pixels we want better pixels,” we need to be able to measure it. Assuming the (barely) quantifiable term in this statement is the word “better,” we need to define what actually makes a pixel better. At Panavision and Light Iron, we believe a better pixel is one that offers a better-quality or more malleable output. We argue a better pixel is one that has more dynamic range, more flexibility, and more range of manipulation. Do you agree? A better pixel is one that is generated with a higher signal-to-noise ratio and has a greater bit depth. A better pixel produces an image that is smooth and can accurately replicate what the lens maker designed. In other words, a better pixel (or arguably the best pixel) is the one that creates a final image that can be changed any way you want with little to no compromise. Another way of understanding pixels is that a better input pixel will always produce a better output pixel. Pixels on a sensor behave like a light meter and when you run the camera, pixels are given starting input values based on the light and colors they are fed. And because pixels work in concert to generate an image as a whole, the increase in overall pixel count is the source of where manipulation, optical representation, signal to noise, bit depth, clarity, transition, contrast, and ultimately creative control begin.
In other words, pixels initially work independently to register a high quality input and then work together to create an image as a whole. Therefore, a higher quality input yields a higher quality output. So if a “better” pixel is measured by the collective properties of output, its “better” qualifier is enhanced when more pixels work together at the source. Therefore, I believe the statement is made more accurate by saying, “We want more pixels to create better pixels.”

We discussed this concept among others when Dan Sasaki, Ian Vertovec and I presented 90 minutes of analysis on the importance and beneficial effects of creative control granted through high resolution. It’s important to note that our end presentation was not our objective; in other words, we were not set on proving resolution is power through our conclusions, rather our independent research concluded there is power in resolution. However, I’ve done well over a hundred presentations in my life and there was something odd about this presentation which is actually what prompted me to document this entire story:

When it was over, there were no questions.

In a standing-room-only hall with overflowing hallways full of attentive artists, technologists, filmmakers, students, and even competitors, no one raised their hand. To buy some time and in an attempt to cajole even a delayed reaction, I pretended I couldn’t see the audience (even though I could with the houselights slightly up). I lifted my hand to my brow, squinted my eyes and lied like a pro saying, “I can’t really see you with your hands up, so you’ll have to just speak up.”

In the awkward silence, my first reaction on stage was fear. Who wouldn’t be scared of presenting a mountain of work to what apparently was a cemetery? There we were in a gigantic room under the banner of Panavision at one of the most prestigious festivals presenting years of theory to the best in the world and virtually no one had anything to say.

No critiques?

No comments?

No connections?

In this giant room below the shadow of our presentation Keynote slides, my fear turned to self-doubt. But through an extended moment of patience (which admittedly felt like minutes), eventually @RedSharknews made the comment, “Why has no one assembled these concepts together before? This is profound! Bravo!”

The silence, however, continued. My instinct was to rationalize a motionless room and my mind quickly filled with all the mistakes, the fumbles, the imperfect slides, and misspellings that possibly contributed to accidentally murdering our audience. I then attempted to make a joke to lighten the mood, “Sooo, was it that easy??? You all just get it???”

The room chuckled.

Then, somewhat thankfully, one person (a competitor I have tremendous personal respect for) made a comment. That was it. We responded with a respectful disagreement and the presentation ended. At first I thought we failed to defend our conclusions. I thought my slides were too few. Then perhaps too many. We had not previously practiced the deck, so maybe the audience felt we were unprepared. But as it turns out, none of this was the case. And that’s where this experience will never leave me. Over the next few hours, days, weeks, and now even months, more and more people have reached out to say this information was so profound, even revolutionary, that people simply needed time to react to it. One person explained to me the following day, “This information is so profound it cuts to the core of some of our beliefs. You challenged my beliefs so well that I am beginning to doubt what I thought was true. When I doubt myself instead of defend myself, my reaction is to remain silent. In that moment, I think the whole room was going through the same process.”

That made me feel a lot better and I hope at least a few of these concepts resonate with you, too.

I don’t yet know if this event was a momentary thing, lightning in a bottle, or perhaps the beginning of a new awakening, but what I do know is the images we are capturing in 8K are different in ways we could have never predicted when we started this journey. Leon Silverman told me once, “In the complex world of art and technology, the teacher is only one semester ahead of the student.” That’s exactly what encapsulates moments like this. And that’s exactly what personally drives me to keep exploring what’s around the next corner.


Nuclear Fusion: RED Hydrogen

My father once told me, “Sometimes those who lead get so far ahead, followers mistake them for the enemy.”

Entrepreneurs are used to facing ridicule and doubt. We have what’s referred to as “positivity bias” which allows us to focus on the success of incremental problem solving instead of being demoralized by predictable points of failure. I tell my colleagues when we brainstorm, “Skeptics are always welcome.” I find that skepticism from educated and rational people can be a useful tool in triangulating the trajectory of a future that is still on the fringes. You just have to filter out the logic from the luddite. And while most people tend to stay in the safety zone where things are predictable, eventually fringe ideas mature, stabilize, and the biggest resistors can slowly evolve into paying customers.

RED knows this process better than most, only this time they have a lot more experience with the challenges of a blue ocean product like Hydrogen. Even though there are numerous people boldly sharing negative reactions to today’s product announcement, the truth is without companies like RED and visionaries like Jim Jannard, the world’s technological trajectory becomes a default future.

The skeptics might not realize it, but their negative comments actually have two positive effects on entrepreneurs. First, skepticism is like jet fuel in a turbine engine. The more fuel you compress, the more powerful the thrust. Skeptics that make a lot of noise create echoes through their connection networks which inadvertently validate the mission of the inventor. Oftentimes the greater the resistance to an idea, the greater potential impact the idea actually has. Entrepreneurs know this.

The second thing skepticism does is work as a product roadmap. Many skeptics make good points through logical criticisms and when a good company is listening, those criticisms can lead to course corrections. This is precisely why Hydrogen was announced before its release. In the case of the Panavision DXL camera, as product manager I insisted on showing a concept camera to the market 7 months before delivering it. This allowed us to capture valuable input and course-correct the product in the months leading up to anyone actually shooting with it. During this time, we were paying close attention to the critics and now I travel the world on tour with DXL hearing in city after city, “You guys thought of everything!” We actually didn’t think of everything, we just listened more to the skeptics than to our fans.

But fans are also part of the equation and necessary as early adopters to help evolve the product, build infrastructure, and eventually bring the skeptics over. On the surface, RED’s announcement of the Hydrogen is not much more than an overpriced, underwhelming Solidworks picture of a phone. As far as we’ve been told, that’s what the product is (or at least appears to be). Because there are still many unanswered questions, what I encourage people to do is think out of the box and try to understand what the product means. From the perspective of meaning, new ideas begin to emerge as to what is possible, or even probable, and how meaning can change the market. Here are some concepts that I see when I examine the product meaning, its makers, and its potential.

• Apple, LG, Samsung, and Sony are focused on the average consumer. Their products and features are based on pricing tolerances which often means the products they release have known compromises in order to achieve a target price point. Because RED’s core business is in the professional and prosumer markets, their phone can pick up where the incumbents are financially forced to leave off.

• RED is diversifying. There are a finite number of people that RED can consistently sell cameras to. It’s conceivable that the RED professional market is beginning to flatline, which is likely the motivation for Raven to be introduced at a specific price point and add new customers to RED’s portfolio. In most cases, it is healthy for a company to diversify, especially when it desires to maintain relevance in the existing market (as Jim Jannard and Jarred Land stated). This is a sign of health, not of instability.

• Professionals are special. Apple has been under tremendous pressure from professionals (including myself) to expand its product roadmap to include the often unique needs that the small market of professionals requires to do our jobs. When it comes to the phone market, I have the same phone my mother has, which is to say one is no more “pro” than the other. While I may use additional tools, accessories, or apps in order to increase my phone’s professional appeal, the products, at their core, are identical. I see Hydrogen as an attempt at identifying a market that has been overlooked: a specialized phone for professionals who work in multi-media.

• Users make the best inventors. When Reed Hastings was penalized for returning Apollo 13 late, Blockbuster had no idea they were the core inspiration behind Netflix, which eventually put them out of business. I have spent a fair amount of time with Jim and Jarred and looking back, this Hydrogen idea has been with them for quite a long time. They are always comparing and complaining about smartphones. In the middle of a routine conversation, Jarred will get a text and complain about the phone, its inability to sync, its service provider, the quality of the battery, the OS, or how poorly it fits in his gigantic hands. If you’ve been keeping track of how smartphones let you down for 10 years, you'd probably be an excellent candidate for inventing a better one.

• Focus on the knowns, not the unknowns. With over 6 months before expected delivery dates, information is, at this moment, scarce. But from the people we know and the market we can observe, there are some really excellent ingredients that are likely to be incorporated into this new product in numerous ways:

• RED is a modular company and modularity means more choices

• RED’s core business is advanced compression and will incorporate that into the product

• RED has virtually unlimited possibilities with large sensors and this will be one of the crown jewels of Hydrogen

• RED’s claims about the holographic screen are not any more outlandish than a 4K camera that shoots to CF cards for $17,500

• Helium is made out of Hydrogen and that metaphor means integration with cameras is guaranteed

• There are numerous crossover points between RED cameras and Hydrogen which means R&D is in a powerful feedback loop which could accelerate developments of both product lines

In the end, few of us (especially creatives) want a default future. The path to reformation requires trailblazing which means there will be blood. Unfortunately, as evidenced with many of today’s top critics, psychological changes are probably more difficult to navigate than the technological ones. But thanks to the ingenuity and vision of RED, I believe when you examine the claims, the market, and their history, they possess what it will take to pull it off.

But it doesn’t end there: we still play an important role in this process as well. RED can’t get this right without our input, and that’s why the entrepreneur in me believes that our role is necessary to making this product even better:

• Some of us are the skeptics: share your criticisms in logical and constructive ways

• Some of us are haters: unnecessary malice can often mask your valid input

• Some of us are fans: don’t let your love for RED prevent you from having a wide perspective

If there is a healthy balance between all these things, I’m fairly confident that we’re all going to experience a very positive chain reaction when RED Hydrogen is released.

Michael

Twitter: @michaelcioni

Instagram: michaelcioni


Arming Yourself with 8K Weapons

Back in June of 2015 I was able to do some preliminary tests with the first 8K Weapon camera. Some of you may have seen the "8K Aerials" Light Iron photographed and presented at CineGear Expo on a 98" BOE 8K television. For people in attendance, CineGear probably marked the first time they saw an 8K image on an 8K display and I believe it was our first peek into what 8K means for the future...

Since then, RED has been refining Weapon 8K and after a new camera test I just completed, I am certain Weapon 8K is absolutely RED's best work to date. If you are a RED enthusiast, you are going to love what this camera can do for you. But moreover, if you are RED-cautious, you're going to love what this camera can do for you.

There are already a number of people chatting about how unnecessary 8K is and that the "race for resolution" is a pointless contest. Let me explain why this way of thinking is flawed:

1. COMPONENTS of UHD ADOPTION

I believe UHD (4K) will be the mezzanine standard for home entertainment displays until around 2025. By this, it's important for people to understand that we are entering a decade (minimum) of consumer UHD adoption, which means you can get comfortable with investing in it today. By 2018, it will be impossible to purchase an HDTV and by 2020, broadcast technology will deliver content to less than 50% of consumers with OTT groups becoming the leading majority of content distribution (and for some of them, content creation). Once OTT serves more than 50% of the population, they will make all the rules, and they're already telling us what those rules are going to look like.

2. BROADCAST VS BROADBAND

I have had to get comfortable with the notion that the internet plays the most important role in the road to 4K exhibition, which is to say that the world of motion picture cinema is unfortunately not going to rise to the occasion like I had hoped. Already guided by brave leaders like Netflix and Amazon, 4K capture is the minimum standard in acquisition for their original content. Unlike broadcasters (many of which are stuck with 720p 59.94i broadcast infrastructures), OTT groups have a major technological advantage by instantly upgrading their exhibition systems with relatively inexpensive and simple software upgrades. They know that in just a few short years, every home entertainment system will not only be SMART but also bi-directional, and will enable UHD resolution, high dynamic range pictures (HDR), and a wider color gamut (WCG) to users as adoption of these technologies widens. I call UHD, HDR, and WCG the "Tripod of Fidelity." When I was at NAB 2015 I asked a leading major network engineer what it would take to implement this tripod of fidelity at his network like the OTT companies. His reply was, "At this point, it would be impossible."

3. AVOIDING THE UPSCALE

If you've ever run the video tap of a modern digital cinema camera to a SONY OLED monitor on set, you can look at that picture and say with confidence, "Wow! HD looks really good!" The fact is, HD does look good when super-sampled from a higher quality source. But after those same images are conformed, colored, compressed, and consumed, they are a long way from where they started. By 2020, every image not mastered and delivered in UHD, HDR, WCG, will become mangled in a calamity of unpredictable scale, contrast, and color conversions. All of us have experienced the tragedy of SD>HD broadcast upconversions. To master in HD or 2K today is to relinquish your image to any number of factors you cannot control in the near future. UHD/HDR/WCG mastering is the only way to ensure present-proofing.

4. EMBRACING THE OVERSAMPLE

Avoiding the upscale is a major part of the equation, but the next critical step in superior images is ensuring we have more pixels than we actually need. When the RED ONE hit the market in 2007, the images were so fantastic because even at Bayer-pattern 4K, they were super-samples of 2K and performed wonderfully in HD. Now that OTT UHD is becoming the new normal, we need to apply that same logic to today's content which is where the introduction of Weapon 8K becomes a powerful tool. Don't just use resolution as a pixel meter for bragging rights, rather use it as a Swiss Army knife multi-tool that can be leveraged in a number of creatively constructive ways.
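To put a rough number on "more pixels than we actually need," here is a small sketch of the oversampling arithmetic. The frame dimensions are assumptions on my part for illustration (an 8192 x 4320 capture frame and a 3840 x 2160 UHD deliverable), and the helper names are hypothetical.

```python
def megapixels(width: int, height: int) -> float:
    """Total photosites in a frame, in millions."""
    return width * height / 1_000_000


def oversample_factor(src: tuple[int, int], dst: tuple[int, int]) -> float:
    """How many captured pixels contribute, on average, to each delivered pixel."""
    return (src[0] * src[1]) / (dst[0] * dst[1])


# Assumed frame sizes, for illustration only.
CAPTURE_8K = (8192, 4320)
DELIVER_UHD = (3840, 2160)

print(f"8K capture:  {megapixels(*CAPTURE_8K):.1f} MP")
print(f"UHD deliver: {megapixels(*DELIVER_UHD):.1f} MP")
print(f"Captured pixels per delivered pixel: "
      f"{oversample_factor(CAPTURE_8K, DELIVER_UHD):.2f}")
```

With those assumed dimensions, every UHD pixel is built from a little over four captured pixels, which is the same kind of headroom a 4K capture had over a 2K finish.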

5. RESOLUTION IS NOT SHARPNESS

When you look at your Facebook wall you instantly know the difference between an iPhone photo and a DSLR photo. Yet on Facebook, if the compression and size of the photos are identical, how can you tell? Certainly the iPhone is not blurry. In fact, I find iPhone photos to be remarkably sharp and with surprisingly excellent DR and color. So what is the intangible difference between a lowres iPhone photo and lowres DSLR photo?

The digitally-educated understand that you cannot make a perfect circle using pixels because they are merely a series of polygons. Film, ironically, can actually photograph a perfect circle because it's not limited to polygon arrangements. By this, the more pixels you can apply towards a perfect circle, the more perfect the circle appears to be. And there is the #1 reason for lovers and haters alike to test Weapon 8K:

8K is not about sharpness, it's about smoothness.

When the stills world migrated to digital cameras, one thing they weren't necessarily looking for was film-like resolution. What they were actually looking for was a more film-like smoothness. And the smoothness they were after became better and better as sensor resolutions increased. And that is what 8K is all about. The same way a 32 megapixel DSLR camera can look good after being rendered down to Facebook, so will Weapon 8K when rendered down to UHD.
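The smoothness idea can be sketched with a toy experiment. This is my own simplified illustration, not how any camera or debayer pipeline actually works: a hard-edged disc is sampled directly on a small grid, and then sampled on a grid four times larger in each direction and averaged down to the same small size. All function names are hypothetical.

```python
def render_disc(size: int, radius_frac: float = 0.4) -> list[list[float]]:
    """Point-sample a centered disc on a size x size grid (1.0 inside, 0.0 outside)."""
    center = size / 2.0
    radius = radius_frac * size
    return [
        [
            1.0 if (x + 0.5 - center) ** 2 + (y + 0.5 - center) ** 2 <= radius ** 2 else 0.0
            for x in range(size)
        ]
        for y in range(size)
    ]


def box_downsample(img: list[list[float]], factor: int) -> list[list[float]]:
    """Average each factor x factor block of input pixels into one output pixel."""
    out_size = len(img) // factor
    return [
        [
            sum(
                img[oy * factor + dy][ox * factor + dx]
                for dy in range(factor)
                for dx in range(factor)
            ) / (factor * factor)
            for ox in range(out_size)
        ]
        for oy in range(out_size)
    ]


def distinct_levels(img: list[list[float]]) -> list[float]:
    """Sorted list of the unique pixel values present in the image."""
    return sorted({value for row in img for value in row})


native = render_disc(16)                            # sampled at delivery size
oversampled = box_downsample(render_disc(64), 4)    # sampled 4x larger, rendered down

print("native levels:     ", distinct_levels(native))
print("oversampled levels:", distinct_levels(oversampled))
```

The directly sampled disc can only say "in" or "out" at each pixel, so its edge is a staircase. The oversampled-then-reduced disc carries in-between values along the edge, so the same 16 x 16 grid describes a rounder circle. Nothing got sharper; the transition got smoother.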

6. WEAPON IS NOT EPIC

I first noticed "The Smoothing Effect" when the 6K Dragon came out in the Epic. I remember seeing the first set of dailies come in from Don Burgess, ASC on the 2017 release "Monster Trucks" and saying to my team, "This doesn't look sharper...but it does look smoother." Over time it was clear that the super-sample of Dragon 6K was producing a smoother transition of round objects which made them appear more realistic (the opposite of hidden/blurred aliasing or approximating that lower resolution BP cameras must do to hide imperfect edges). Now with 32 megapixels in a Weapon 8K, people will get the best representation of what resolution feels like without sharpness.

7. THE SUPER SENSOR

So as I said at the beginning:

"There are already a number of people chatting about how unnecessary 8K is and that the 'race for resolution' is a pointless contest."

What I encourage everyone to consider is that 8K is not the new 4K. Instead, 8K is about to open up an entirely new era of cameras which I now call "The Super Sensors." Super Sensors are camera systems like Alexa 65 or Weapon 8K that are capturing with so much resolution that (like a DSLR) they are able to create a new level of smoothness that makes things look more like a photograph and less like a digital representation of film. Ansel Adams shot large format and no one has ever said, "His images look too sharp!" On the contrary, Adams' images look smoother, cleaner, and multi-dimensional because they were super samples. These are the creative words I think people will begin to use when describing what they see while shooting Weapon 8K.

But creativity aside, there are also technical elements that I used in my test that I was excited to evaluate. I used the new Panavision Primo 70mm lenses which cover the 8K sensor beautifully up through my widest lens, which was a 24mm. They are a perfect blend between clarity, size, weight, and texture. If ultra-high quality glass is important to you, you need to get to the nearest Panavision and do some tests with the Primo 70s. You will fall in love with how perfectly they harmonize with a super sensor.

I also tested our first Weapon Module called the Panavision Hot Swap Module.

This module seamlessly ties into the Weapon body and provides:

• D-tap on top powered from either input

• audio in & out

• 5v USB

• 00 Lemo Control connector

• 5 pin Timecode in with Genlock

• 3 - HDSDI outputs

• 3 - 12v outputs

• 2 - 24v outputs

• hot swap between studio power and Anton Bauer gold mount

• built and 3D printed out of carbon polymer

• total weight of 8K Weapon camera & module is 5.4lbs

This is the camera exercise I did with the help of my friend, Erin Gales. Everything was shot Weapon 8K at 1280ISO except for a 2nd camera angle during interviews, which was a Dragon 6K. Special thanks to Phil Newman, Megan Swanson, and Keenan Mock for their help on set.


ENDER'S GAME

For most of my life my mind has wrestled with the ongoing question: what to do when brawn seems to overpower brain? I have always been small in stature, always the shortest in my class and, therefore, always somewhat limited in what I could physically accomplish. But don't we all have mechanical limitations to our abilities? Don't we all share some sort of inability based on what makes us who we are?

I think so.

For me, limitations that manifested themselves of a physical nature simply revealed to me "there must be another way." Whether it's something high up on a shelf or something too heavy to lift, it was the act of creative problem solving that became both the source of fuel and satisfaction of success. Little did I know that this type of arrangement and experience as both a child and an adult was absolutely the best preparation for taking on the life of an entrepreneur in an industry on the brink of change.

Author Malcolm Gladwell's latest book, "David and Goliath," is the story of the complex relationship between strength and weakness. Drawing on this old Bible story, Gladwell is quick to point out that giants are not always as strong or powerful as they may seem. In fact, David wasn't the lowly sacrificial lamb the Philistines thought was about to commit suicide. Rather, David knew he was going to win before anything ever happened. So how could a shepherd boy armed with five stones and a sling walk up to an eight-foot warrior and know, without a doubt, that he was going to win? The answer is simple: David was not going to fight on Goliath's terms.

All too often, we find ourselves losing battles because we are fighting them on the terms of our adversaries. The story of David and Goliath is not one of luck, but one of control. David knew that the source of Goliath's greatest strength was simultaneously the source of his biggest weakness: Size. And that's exactly what the information age is proving over and over and over again: The size, age, and design of giants can be overtaken by newer, smaller, and innovative ideas that are not playing by the same rules.

Recently, the Light Iron team had the pleasure of collaborating with one of the best cinematographers of all time, Don McAlpine, on the film, Ender's Game. Like us, Don recognizes Goliath when he sees him and has no intention of falling into a trap.

In Don's words, "I chose RED because the Hollywood establishment had produced so much anti-propaganda that I knew it must be equal or superior to the more established equipment. While I will leave the future equipment requirements to younger and more agile minds than mine, I will, however, be very pleased to consider any device that can expand our vision." Fittingly, Ender's Game is a film about strength and weakness and an unlikely character who succeeds by holding strong to a vision that others lack.

To my friends and colleagues: Whenever I am engaged in situations that I do not control I get confused, I become fearful, and I am prone to make mistakes in a battle I most likely cannot win. It's overwhelming. I know many of you know what that feels like. But when I take control, make predictions, and adequately prepare based on known weaknesses, I can face the biggest giants with the certainty I will succeed. In the business of digital cinema, when you arm yourself with workflow and innovation, you move the odds in your favor. This is the story of how just a few self-driven people armed with workflow and innovation were able to manage one of the biggest films of the year. And it's a story that, if you "get it," demonstrates how you can accomplish exactly the same thing.

https://vimeo.com/78581143

Text

"Advancing the Mind at the Same Speed as the Processor"

Think about it: Processor upgrading compared to mental upgrading.

While I’m not attempting to make an argument for the theory of technological singularity (not yet, at least), I would like to point out that many technological developments for cinema are ahead of the average user. Looking back, the transformation of the motion picture industry from a fully linear/analog group to a fully file/digital group is now almost two-thirds complete. What started en masse in 1999 I estimate should be complete by 2020 and we’re now past the halfway point. But as the d-cinema finish line approaches over the next 5 years, the widening gap between the processor and the mind is not just easier to see, it’s becoming more unguarded and in some cases, worrisome.

My observation comes from the discrepancy between the spoken desire for progressive techniques and the ultimate choice to simply maintain the status quo. I know I’m not the only one who notices this…

While some of us are motivated to examine and explore the pros and cons of emerging technologies through real experience, some of us draw conclusions indirectly based on the opinions of others. I believe that an evolving education may be the most valuable tool we have in the d-cinema transformation, and that those who elect to lead for the right reasons will have a memorable place in history while the Luddites will be forgotten.

To prove my point, I’ve tried something a little different: an innovative tools case study.

In my spare time, I compose and record music on about 6 different instruments I play on a regular basis. Sometimes I get to collaborate with other musicians and earlier this year I was introduced to the up-and-coming artist Kayte Grace (www.kaytegracemusic.com). After collaborating with Kayte on her latest EP, we began talks about a music video, which I was interested in using as a vehicle for a case study about some innovative tools that most people haven’t had direct exposure to. So the following case study was designed and documented in an effort to demonstrate the validity of the tools I chose to experiment with in this project. In addition, I wanted to demonstrate that taking calculated risks is a trait which all of us (myself included) need to have the courage to consistently possess.

*NOTE*

I have been consistently asked to consult for companies over the years and enjoy evaluating tools and their impact on the creative process as part of my role as a filmmaker and facility owner. I can see my fingerprints and those of my partners in many of the tools professionals use. But when companies want endorsements, I never really know how to do that well. This case study is my attempt at un-marketed information. These are simply examples of technology I particularly enjoy working with. But this video was done without the consent or request of any of the associated companies mentioned.

• No company saw an edit before I uploaded it

• No company contracted me to do this in any way

• I was not paid for any of this information

| m |

https://vimeo.com/73797466

Text

The DIT Dilemma

This entry was based on a reduser.net thread about building DIT carts. The thread asked a lot of challenging questions about the process, the tools, the players, the evolution and the future. For reference, the original thread can be viewed here:

http://www.reduser.net/forum/showthread.php?88935-Building-a-DIT-cart

The official role of a DIT is one that continues to require discussion. Defining the DIT is hard for anyone to "officially" quantify, though there are rules and regulations that suggest a textbook definition. But the more you talk to DITs, the more you find they are not "consistent" in their feelings on the job. This is not normal. If you ask pilots what they do, they tend to agree on their role. Same with directors or even script supervisors and DPs. This suggests that the future is not yet written for DITs, which means they have the unique opportunity to either shape the industry going forward in their favor, or let another entity define what their role is and even make the DIT an unnecessary expense. Going into 2013, there is significant evidence for both arguments.

[Response to reduser.net thread]

I've enjoyed reading this thread since it started, and other threads like it. There are some good points made by all contributors and I take the time to read each one and "feel out" where the writer might be coming from. As they say, try to imagine yourself in another man's shoes before you jump to conclusions.

That said, I want to make perfectly clear that what I'm about to write is based not only on extensive on-set experience, but on the relationships with production supervisors, unit production managers, producers, production companies and studios who run digitally captured features and television shows. Some DITs recognize that the "Light Iron way" of managing the DIT and data management roles differs from other entities. Some prefer our arrangement, others do not. But the reason Light Iron manages the DIT process the way we do is because we largely designed our system based on the feedback we receive from the people who do the hiring and firing. So take a read of my comments if you like and understand that I oversee the on-set dailies process of close to 100 projects per year - which accounts for more than 1,000 shoot days annually. I don't have every answer, but I've come across a lot in a short time and deal with DITs and the people who pay them.

Consider this: If you are interested in knowing what a DIT does, ask a DIT. They have plenty of ways to answer that question. But I am more interested in knowing what a paying production thinks a DIT should do: so I asked the people who hire them.

From there, it should come as no surprise that the role of the DIT is one that carries along with it some controversy. Some readers may feel the word controversy is a bit extreme, but I can easily say that the role of the DIT is one that is consistently debated (partially proven by this post). But the debate of the DIT doesn't stem from the DITs themselves - why would a DIT ever want to have to defend their own relevance? No creature on earth wants to have to defend its very right to exist, yet DITs constantly fall into this trap. No, the debate of the DIT comes from the top down and the growing number of productions that choose *not* to have a DIT as part of their arrangement. While I often feel the anti-DIT position is definitely short-sighted and (in many cases) flat-out wrong, productions that regularly operate without DITs do not seem to demonstrate an inability to make their shoot days or create high quality content. So how is that possible?

Well, in my experience, the DIT Dilemma is a lot more serious than some realize. There is no other production role on digital productions that is equally "in question" other than the DIT. No one is questioning the relevance of DPs, script supervisors, actors or camera operators. But whether or not a DIT is needed is a common discussion in the production office and above-the-line meetings. I have personally been in dozens of production meetings on large and small productions in which *not* having a DIT has been fervently discussed. Since I am ultimately in favor of the DIT, my vote counts in that direction, but there are plenty of cases in which DITs are not hired on a show or are demoted to another ancillary position. If anything, that should be the initial reason DITs should be thinking about this issue: they have been named. And once you are "named" and your relevance comes into question, it becomes more and more difficult to maintain favor as time goes on and trends inevitably shift.

Ironically, it was during this observation that my partners and I continued to examine the role of the post house as well. While DITs have been named, they're not the only ones: post houses are also being challenged. And then we thought: these two related areas represent endangered species in our changing media ecosystem. Consider this: if a measurable portion of the industry feels like they no longer want to rely on post houses, and another measurable portion of the industry feels like they no longer want to rely on DITs, then the beginnings of a predictable outcome start to be revealed. Clearly these two issues are related in some way! We can even assume that there is some portion of these two camps that believe the same thing, overlapping their no-post/no-DIT notions together! Those became people worth targeting and asking "WHY?" The results are typically plain and simple: economics. Everyone wants more for less. I don't care if it's a producer on a film or your mother at the grocery store. There is a perceived notion in the eyes of many producers that the DIT and the Post House are more expensive than the return service they provide. And for many, that's not a new perspective, but until now, an alternative simply didn't exist.

The data that I've collected and successfully applied into my business suggests that the post house is less desirable than ever before and in terms of dailies provisions, will not exist by 2017. Mark my words. Likewise, the sophistication of cameras continues to increase and for the DITs who have been in the game for 10 years, you know that many of the tools you used to require to "normalize" images on your cart have been absorbed into the camera itself. In fact, I predict that by 2021, all the capture, transcodes (there won't be transcodes, but the equivalent of the transcode), sync, color, windowburn, watermarking, versioning, color space conversions and even lined-script notes based on totalcode-timecode during capture will *ALL* be recorded and managed by the camera, saved to an online cloud server and instantly distributed worldwide. In other words, a significant portion of what Light Iron does today to make its money will not exist in 10 years (which is the same for thousands of people around the globe). Again, mark my words.

These predictions are based on following the data that has been compiling for 10 years, analyzing Moore's Law, talking with targets of manufacturing, evaluating the market evolution and making a few educated guesses. The result: in 2021, we will not have DITs or dailies post houses. Sure, I'm scared, too, but I know enough of my own abilities to predict the market that I intend to evolve along with it - as opposed to devolve in spite of it (as some foolishly attempt to do). If you are a DIT today, I can assure you that you won't be a DIT in 2021. Maybe that's a relief :-) But it means that one needs to find ways to A) build a career that leads to professional satisfaction in the future and B) find ways to extend your relevance today as far in the future as appropriate.

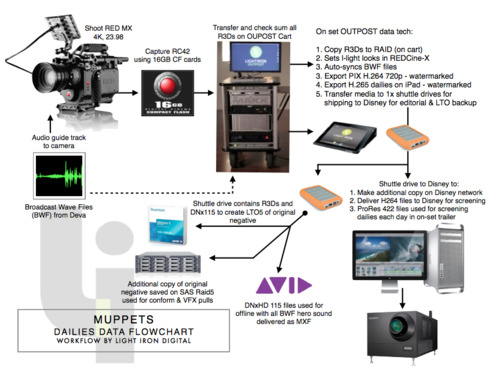

For those that are interested in what that exactly looks like, I can tell you what Light Iron does. Again, while some people disagree with the Li model, I can tell you that it has changed the lives of the producers who take advantage of the system and the people who are our regular operators. We call it "OUTPOST." OUTPOST is not the only system out there, but it's the largest fleet of mobile systems and has cumulatively done more shoot days than just about anyone else. To illustrate the significance of that point: Light Iron is a company of fewer than 30 people at the time this was written. Currently, there are 16 carts out in the field servicing shows internationally. Concurrently, we're in the middle of 8 DIs. That's a combined active slate of 24 projects. No post company has ever had a business model that can service nearly a 1:1 ratio of feature film projects to employees. That's never been possible before and that is why my findings should be taken very seriously. When the implications of a statement that significant are fully comprehended, the future and the past will have finally and fully divorced.

On Light Iron systems, we have been tailoring our tools so they can provide these popular processes on a set:

• Checksum

• Triplication

• Visual QC

• Automated Scene and Take naming

• CDL color

• Advanced Live Grading (based on a new system we are announcing publicly in 2013)

• Sound Syncing with 1/4 frame accuracy

• Transcoding at over 90 frames per second

• Parallel rendering

• Advanced reporting (PDF, CSV, XML and TD, ALE)

• Advanced iPad integration

• LTO [automated robot support]

• (Virtually) unlimited camera support

• ACES or 709 color space management

• Transcoding to MXF & Quicktime with custom naming/automated Scene and Take metadata

• CDL/ALE/ArriXML/XML/FLEx/EMD/RMD sidecars
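As a rough illustration of the first two items on that list (checksum and triplication), here is a minimal Python sketch. It is not Light Iron's actual tooling: the function names, the SHA-256 choice, and the three destination folders are all assumptions made for the example. It copies one camera file to three destinations and refuses to continue if any copy's checksum differs from the original.

```python
import hashlib
import shutil
from pathlib import Path


def file_checksum(path: Path, algorithm: str = "sha256", chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large camera files never sit fully in memory."""
    digest = hashlib.new(algorithm)
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def triplicate(source: Path, destinations: list[Path]) -> dict[str, str]:
    """Copy `source` into each destination folder and verify every copy.

    Hypothetical helper: returns {copy path: checksum} and raises if a copy
    does not match the checksum of the original.
    """
    expected = file_checksum(source)
    verified = {}
    for dest_dir in destinations:
        dest_dir.mkdir(parents=True, exist_ok=True)
        copy_path = dest_dir / source.name
        shutil.copy2(source, copy_path)  # copy2 also preserves timestamps
        actual = file_checksum(copy_path)
        if actual != expected:
            raise IOError(f"Checksum mismatch for {copy_path}")
        verified[str(copy_path)] = actual
    return verified
```

In practice the three destinations would be separate physical drives (for example a shuttle, a production backup and an archive), so that one failed disk cannot take the only verified copy with it.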

Admittedly, there are a lot of very smart people who can build systems that are capable of all of the above. But just as there are differences between a Dodge Neon, Dodge Ram and Dodge Viper, so there are differences between tools that claim to provide the same thing. On Li OUTPOST carts, we have operators that handle up to 4 hours of ArriRAW and provide everything listed above on the set. Even on our smallest mobile system, Lily Pad Case, we had an operator do an average of 6 hours of dailies in Africa, which included backup, color, syncing, rendering to AVID MXF and H.264, and uploading to the web for dailies viewing. But on the average show that shoots 2.5-3.5 hours of footage per day, the operators are having fun doing all this so quickly and efficiently. Creative liberation is what speed delivers, and the creatives you serve sense that right after the first mag.

Sound people play along when we manage expectations correctly. Sound will be perfectly synced - in fact, I teach a syncing training course that gets people to learn how to sync takes in under 8 seconds per take (which means a 20-take magazine can be synced in about 2 minutes and 30 seconds - even with TC drifts). We build custom drives that eliminate slow downloads and transfers - as our systems take that into account with 500MB/s read/write for shuttles (i.e., once off the mag, we move 1 hour of Epic 5:1 in 7.5 minutes to the Li shuttle drive). Smart people make it clear that doing this correctly is expensive - which is the truth. Be prepared to invest if you want to do this without compromises. But when Light Iron is able to improve the horsepower with more significant investments, realize that is an answer to the call of the production, not a threat against weaker, less expensive owner-operator tools. I don't set the pricing, I simply install what is required to solve the above list. That requires a good DIT to have access to the latest and greatest and even develop improvements, software and hardware.
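The figures in that paragraph are easy to sanity-check. The short Python sketch below does the arithmetic; the helper names are made up for the illustration, and it assumes decimal units (1 GB = 1,000 MB) and a sustained 500MB/s for the whole transfer, which real drives may not hold.

```python
def sync_time_seconds(takes: int, seconds_per_take: float) -> float:
    """Total time to sync a magazine at a fixed per-take pace."""
    return takes * seconds_per_take


def format_minutes(seconds: float) -> str:
    """Render a duration in seconds as 'M min SS s'."""
    minutes, remainder = divmod(round(seconds), 60)
    return f"{minutes} min {remainder:02d} s"


def data_moved_gb(rate_mb_per_s: float, minutes: float) -> float:
    """Data moved at a sustained rate, in decimal gigabytes (assumed unit)."""
    return rate_mb_per_s * minutes * 60 / 1000


# 20 takes at the 8-second ceiling, and at a 7.5-second pace
print(format_minutes(sync_time_seconds(20, 8)))    # 2 min 40 s
print(format_minutes(sync_time_seconds(20, 7.5)))  # 2 min 30 s

# 7.5 minutes at 500 MB/s
print(data_moved_gb(500, 7.5))                     # 225.0
```

So "about 2 minutes and 30 seconds" corresponds to a pace of roughly 7.5 seconds per take, and moving an hour of footage in 7.5 minutes at 500MB/s implies that hour weighs in at roughly 225 GB.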

The post house is at the end of its life, and unless DITs realize this is their last chance, DITs will share in the slowly fading demise of post houses. The DITs that quickly point out they don't have time to do certain tasks, or that it's not their job, are the prey of the post house; big post houses absolutely love the DITs who don't deliver things complete, correct or compliant because it enables them to charge production for the same services all over again. Often at a premium! But the enabler DITs - the ones that have the desire and the skill set to provide the services that post does - are the threat to the post house and the white knight to the producer. Remember: based on the changing media ecosystem, most post houses use similar equipment to what DITs use. Only they are willing and able to finish the job. If you share similar tools, then it becomes more of a mental game of completing the task, not a physical one.

CONSIDER THIS:

In a matter of survival: focus on what you can bring to the production, not what you can't.

Focus on how you can protect the filmmaker, not protect yourself.

Focus on what technologies exist that elevate potential and possibility, not just copying what everyone else uses (there are lots of bad DIT habits that are spread across the world).

Focus on practicing and improving on the areas in which you struggle, not ignoring them or passing them off to another person.

I have personally operated numerous jobs in order to learn the way and the truth and from that experience, I've applied it into some of the best DIT carts in the world. I've also done some of the hardest jobs in the world and probably do the largest jobs with the smallest footprint. And our systems are getting better with 2 new massive upgrades in 2013. I love working with DITs and helping them make more money and provide more services and satisfying the creatives they serve in a completely new way.

If you are providing all of the above, then you know what I'm talking about. Your job is a lot more protected when you can demonstrate you are more relevant than a dailies post house.

If you are doubting all of the above, then I wish you the best. It truly is a dilemma and we can all agree it's a complex issue. But know that the post house is dying and that it's hoping that DITs make mistakes and refuse to provide services. The less DITs do, the more air in their lungs. And while that may seem good for both the DIT and post house, it's bad for the creative. The truth is getting out and there are creatives who are experiencing life without a post house thanks to the talent of good DITs. By this, a new standard is being born and I have watched it explode over the past 3 years - which is why this is all worth debating. But one thing is for sure: 3 years ago on-set dailies was but a fraction of a fraction of services on set. Today, it's 100% of my dailies business and growing with companies all over the world. If you think the DIT as a post provider is a phase that will phase itself out, then you are already less valuable than your employers think...but they'll figure it out soon. They always do.

| m |

Text

The New Breed of Communicators

Think back to a significant or monumental moment in your life that you experienced with many others around you. Try to find a moment you can easily recall, perhaps talk about often, and remember where you were when you heard about it.

Are you thinking of a moment of monumental progress - like the Apollo 11 landing? Or are you thinking of something more destructive - like the September 11th attacks in New York and Washington D.C.? As it turns out, when asked this question, statistics show most people tend to recall more traumatic events as opposed to more encouraging ones. In other words, when asked about profound moments in life they will never forget, people tend to recall disruptive events such as the death of a rockstar over something encouraging like their wedding day.

But the question I’m proposing is not about which end of the spectrum you choose to recall significant events from; rather, the question is whether or not you even realize the event is significant when it’s happening.

When the event was happening, did you have the inclination to stop and document it while you were experiencing it? Did you get out a camera and take pictures of the event or the TV screen? Did you record the reactions of others in the area or in your home? Did you write in a journal what happened that day and who was there? In other words, when a significant event is happening, are we typically able to realize that it is, in fact, profound at all?

For most people, the significance or impact of an event only tends to become clearer as the passage of time distances you from the initial affair. Psychologists sometimes qualify a version of this as traumatic disassociation. For most people, the greater the impact on your life, the greater the traumatic disassociation effect. Admittedly, contrasting circumstances produce varying effects on individuals, but one thing is fairly consistent: the average person is not necessarily “aware” of a moment of significance until well after the moment has taken place.

It’s easier to quantify moments of massive trauma when they affect a nation or a generation - especially when heavily covered by the media. But what about circumstances of dramatic change that are not covered by the media? What about episodes that large groups experience over a long time period? What about instances that are happening “under the surface” of mainstream culture? I believe there is a connection between what is happening in digital cinema and the notion that most people are unaware of its pending effects. Without the clear realization that something significant is occurring, people, on average, are unable to prepare or even react to a moment of significance...until either a lot of time passes or a larger group of people collectively agrees on its significance.

Imagine being in a large room with a lightbulb that was dimmed 1% every 60 seconds. It might be hours before you notice that the room was slowly getting darker. A few people might notice when the ambient light level is at 50%. And for others, they might not notice until the light was nearly gone. But no one would be able to determine the change after only a few minutes. I believe the most dangerous element of our profoundly changing media ecosystem is that it is so subtle, so effortless and so orthodox that for most professionals, its effects are virtually undetectable. I don’t feel the word dangerous is an exaggeration in the least. Motion picture communicators are in the beginning of an era of change that will never be undone, and will never be outdone. To the most experienced professionals, the digital cinema transformation is, in fact, so traumatic an event that most people have yet to comprehend its significance. There is a divide in our professional culture, spanning every international territory, in which only a few are aware that the lights are dimming. This divide is creating more mechanical controversy over the creation of motion pictures than we will likely ever see. But for those of us that are aware that the lights are dimming, the conviction to technological resistance is starting to become increasingly frustrating. But I want to clarify that this is not necessarily a generational thing. My research shows that while technologically solvent people tend to be younger, it is not always the case. But while there are many masters of the craft who are acutely aware and in support of this transformation, there are actually very few industry newcomers who make arguments against the practices and protocols of progressive technical development. In other words, being older doesn’t mean you lack the ability to grasp imminent change, but that younger counterparts will adapt with less resistance, if any at all.

Man’s ability to create technology is one of the most fundamental components of consistent progression. Without routine technology development, talents, tools and techniques quickly become stale and obsolete. While that sounds fairly obvious, the last ten years working in the motion picture business have shown me that rapid advancements in technology are not necessarily welcomed by all professionals. For many, the technological side of creativity is liberating. For others, it can represent a serious threat. Because of this, I’ve tried to make education a normal part of my life. In a given year, I likely give in the neighborhood of 150 lectures focused on creative technological progression to all sorts of demographics. I also have done everything I can to be transparent in what I believe, why I believe it and how I do it. So transparent that in some cases, I’ve been criticized for “sharing too much with competitors.” But I believe that the risk of sharing trade secrets is worth the gain in global education. That being said, in some of these discussions I have unfortunately encountered people who make strong arguments against many aspects of technological development - particularly information technology (IT) components as they pertain to both production and post production.

But fear and resistance to technological change in creative outlets is not new. It’s not rare and it’s not unique to the motion picture business by any means. It just seems new to generations of artists who are frustrated with (what many claim is) an unpredictable, unstable, unstandardized, undeveloped, unproven and un-led movement.

In the early 19th Century, a mechanical version of the loom was invented and brought to England where it quickly became an asset to the growing textile industry. For centuries up until that time, skilled artists would hand-weave threads together to make anything from clothing to blankets and scarfs. But faced with the notion that a seemingly less-skilled worker was now adding value to manufacturing through the use of modern technology, creative artists felt they were being cheated out of their jobs and began to revolt. Known today as “Luddites,” this group of artists began breaking into manufacturing plants and destroying looms, burning materials and stealing from their employers in protest of producing textiles with machines instead of artists.

The Luddites were among the first groups of people in recorded history that were victims of what is now known as “Skill-Biased Technological Change” (SBTC). Personally, I have witnessed a measurable increase in Luddite mentality over the past 5 years as well as an increase in SBTC conditions along with it. While at first conditions seemed to manifest themselves in what I would consider appropriate amounts, today I believe it is reaching a level in which (for some people) political agendas and (more significantly) personal legacies are at stake. This means opposers of new technologies are becoming less comfortable because some of the introductory technology is beginning to become accepted faster, thus the balance of power is starting to list.

Assembling all this together, I believe this boils down to two key ingredients which are somewhat linked together:

Traumatic disassociation affects the people in today’s professional industry who fail to recognize the significance of the changing media ecosystem

Skill-Biased Technological Change represents the people who resist and attack the change they are being forced to recognize

Referencing the past as an aid to predict the future, I submit people fall into one of three categories of professionals:

Those of us who believe that democratization and technological evolution for creatives are part of designing the best future possible = (I’ll get to this title later)

Those of us who fail to recognize the benefits will still reap all the benefits once the progressive movement has completely overcome the traditional = followers

Those of us who choose to resist the benefits of change delay the potential of expedited progress and create a controversial environment for all parties = Luddites

It is because of these groups that I feel today is perhaps the best time to be a working professional in the motion picture industry since its inception. Teaching, learning, challenging, preparing and evolving is paramount to what we must do to succeed in this, and yes, to especially help those who are impervious to it.

One of the most common arguments I run across is the criticism that digital cinema and the subsequent file-based transformation is without rules, without standards and without best practices. It lacks defined leadership, which forces professionals to make misinformed choices in directions that I believe will have detrimental effects in the future. It’s even been likened to the extreme anecdote of 19th century lawlessness in the form of “the digital wild west.” Actually, I feel this is a fair criticism in many ways. On the whole, the changing media ecosystem does lack the rigidity of more traditional approaches and many people can cite bona fide examples in which progressive techniques fail more often than they succeed. But for those of us who are a part of refining our craft, we are left with two choices: We can either yell louder than those who we feel are in error, or address the criticisms head-on in order to remedy them. It’s a classic case of aimlessly criticizing the powers that be, or doing something positive to change their minds.

By far the worst thing we can do for the cause is to argue amongst ourselves. Fruitless arguments for the technical betterment of creatives have been camouflaging the real issues for years, holding both parties back from necessary development. Below are some examples of rampant arguments in the market that are not only counterproductive, but also act as a distraction from the real issues at hand:

AVID vs. Final Cut

The actual issue here is not which software is better for editors, rather that editors use nonlinear editing technology over linear tape or film cutting systems.

RED vs. Alexa

The actual issue here is not which camera is better for cinematographers, rather that images are being captured at high resolution and high fidelity instead of on film or tape.

Tape Masters vs. Disk Masters

The actual issue here is not which format is better for archiving, rather that we are fixed on a digital direction instead of an analog one.

Compression vs. Non-compression

The actual issue here is not to suggest that non-compression is the best way for professionals to work, rather that advanced compression technology has yielded ongoing developments in lossless compression.

Resolve vs. Pablo

The actual issue here isn’t that expensive or cheap solutions essentially do the same thing, rather that color correction is all being done through computer-based tools instead of film negative cutting.

3K vs. 4K vs. 8K

The issue here isn’t that Arri or RED or Canon or SONY have different positions on sensor size, rather that all camera manufacturers are building single-sensor solutions that produce higher fidelity images than film.

Satellites vs. Terrestrial Distribution

The issue here isn’t which technology is better and for whom, rather that we are getting away from film prints and tape cassettes for exhibiting movies and television programs.

RAW vs. RGB

The issue here isn’t which one offers more color, contrast or control, rather that we are all in agreement to move away from tape and film for image acquisition and rely exclusively on file-based capture.

On-set vs. The Lab

The issue here isn’t to figure out which one is better for you, rather to show that some legacy infrastructure can be replaced by progressive tools, reducing reliance on large, expensive corporations for support.

Indy vs. Studio

The issue here isn’t that good movies are high quality and bad movies are low quality, rather that democratization is enabling people with less money to partake in shared technologies that generate images which compete with bigger budgets.

These are just a few examples of what people designate as battlegrounds worth fighting for, when in reality, I think there is a much bigger picture worth fighting for. If we could harness all these discussions and more and focus the energy on the bigger picture, I know we could build a new breed of communicators that could change the way the entire world tells stories.

Generals and politicians alike know that you need a plan before you engage in a conflict. Perhaps the lack of planning has been the problem all along. Perhaps it stems from the inability of many to recognize that this change is happening. Or perhaps it’s because much of the opposition holds high office and people tend to “look up” for direction. Whatever the case may be, I propose the beginning of a solution that starts with the most basic element.

-An element that has been missing from the beginning.

-An element that can be used to define a movement.

-An element that people of all ages can relate to.

-An element that people want to get behind.

-An element that represents those of us who know the lights are dimming.

I propose we start by giving a NAME to who we are:

We are Technatives.

We are technological creatives. Equally able to service both initiatives. We know we’re different from our mentors, we just didn’t know why. We are fueled by advancing technology and creatively applying it not just to our work, but our entire lives. We embrace change, often design it ourselves, and weave it into the creative outlets that are ultimately used to tell our stories. We reject the limits of being labeled either left-brained or right-brained. We are able to shoot, edit, configure, troubleshoot, write, direct and develop. We teach our children and our parents how technology can be used to improve the quality of life. We track changes in the market and are eager to set new trends. We seek out leaders in the industry that embrace technological creativity. We celebrate updates, upgrades and new releases instead of delaying them. We are comfortable with being uncomfortable. We are early adopters. We take risks. We’re not afraid to be attacked. We’re not afraid to be labeled. When we discover something, we make our ideas a matter of public record. We are inventing new business models. We are most happy when we’re the first to do something.

So welcome, technatives. The artists of tomorrow need us today to be the architects of the future. The lights are dimming, but our acute senses are already crafting ways in which we can capitalize on change for the betterment of the art. Steve Jobs taught us that. Probably because he was the very first technative. Steve made nerds cool and made artists nerds, all at the same time. That’s where I want to be. That’s where I feel normal.

| m |

iPad going invisible

On March 14th, Apple made good on a number of rumors with the release of its 3rd installment of iPad. While they don't officially call it the iPad 3, people in my circle are quickly defining it as such so as to distinguish it from previous iterations.

About 2 weeks ago I had the pleasure of hosting a really cool group of people that belong to the Independent Filmmakers of the Inland Empire (IFIE) led by Eric Harnden. This was just before the latest iPad came out. It's not uncommon for my company to host different groups and give tours of the facility. I always think that sharing knowledge with others trumps keeping it to yourself. That is why we pride ourselves on being educators and do our best to maintain a transparent identity with the community. After the tours, a few of my partners and I sat down to talk about tools, technology and techniques with the group. One of the members of IFIE asked a great question: "What is it that bothers you most about the current state of tools and technology?" I think a few of us gave answers because it's always a rare opportunity to answer such a bold question. I think my colleagues and close friends commonly agree that what bothers us the most is the way in which technology can be an obstruction. It's becoming clearer to us that there are people in different parts of the industry that are using technology in ways to influence or even validate their position. In some cases I feel there are types of people that are opportunistic, others are just part of a transition. But the equation remains:

As time goes on > Moore's Law applies > new technology develops > people's expectations go up > masters of the technology emerge > technology becomes innate > all people master it > need for masters goes away > and the cycle repeats

In other words, we are a society with the immense capability to acclimate. I think every sociologist would agree with that. And in that acclimation there are times where we need to be led, and then times where we take the lead. And in that transition to becoming a user/master/leader of a technique or technology the need for help dissipates. That is where some people use technology to create an overt level of complexity (in some cases unnecessarily), where the technology is literally designed to be a wall between the filmmaker and the end goal in order to justify existence. Almost no one does this maliciously, but this is a growing problem that few are able to detect until well after a technology has matured. After technological maturity and adoption passes two-thirds, the need for help becomes harder to justify. But it's not all the fault of the masters. In fact, some people ask me routinely, "Where do you think my job went?" I tell them the same metaphor, "Some hosts eventually accept the transplant." Once a transplant is accepted, the host no longer needs a routine doctor or even medicine. But this isn't all bad. I actually think for many it's a sign of massive opportunity because as the cycle repeats, the same early masters of the technology can re-emerge and become new masters and lead people through the next wave! The mistake is when you ride the wave too long and wonder how you got so far out to sea.

And so comes quite possibly the world's best example of this: the modern Apple company. This is a group of people who have found ways to repeat the cycle of architecting a new technology, then relying on masters to adopt it, then enabling everyone to adopt it, then making it so "normal" that the next iteration is accepted with less hesitation than the preceding cycle. They build and build upon this until they are not just leading the market, they (in many cases) control the market. An example of this is with Meg Whitman, CEO of HP, who recently spoke about a new tablet device HP is designing. She said it was going to be a great addition to, and have a great impact on, the tablet market. She was then corrected by someone who suggested, "It's not the tablet market, it's the iPad market." Apple's iPad has greater than 90% of the tablet world...so if you want to get into the world of tablets, there is only one object to design against. And Apple is not selling people short. They start out their latest iPad introductory video with a statement that I identify with, I believe in, I focus on, and I want to live by example:

"We believe that technology is at its very best when it's invisible."

Man, I love that. I love realizing that the most complex item I own is the easiest to use. Could there be a better resolution to the evolution of the computer, which we initially measured by size in square feet? Occam's Razor suggests that the simplest answer to a given problem is likely the best solution. When technology becomes invisible, then everyone can leverage its benefits. For some, that's an invasion of space. For others, it's purely liberating. And when I opened and turned on my new iPad, the cycle reset, fresh ideas poured in, and the technology continued to disappear.

3 years ago when the first iPad was announced I was in a meeting with a post production supervisor for a movie we were about to start shooting. I told her that 2 weeks after production started, the iPad 1 would come out and we would send a few to production for dailies viewing. She looked at me and said, "Why would anyone ever want to use an iPad for viewing dailies?" 3 years later, iPad dailies review has become the preferred method of image delivery (at least amongst my circle). The physically-limited endangered species known as "DVD" has been falling further and further behind the technological developments of file-based tools such as iPads. And when rumors surfaced that a Retina-display iPad was in the works, I knew that was the technological breakthrough that we needed in order to retire DVDs and tapes forever.

The A5X chip is enabling a huge number of pixels to be populated. With a screen of 2048x1536, the iPad is able to display nearly the exact same resolution as a 2K film scan. With 1 million pixels more than an HDTV, the new iPad is putting HDTV on notice. Consider this:

In 2002 Apple effectively told the music industry, "This is how you're going to operate and why."

-No one believed them.

In 2007 Apple effectively told the telecommunications industry, "This is how you're going to operate and why."

-Few believed them.

I predict in 2013 Apple will effectively tell the broadcast industry, "This is how you're going to operate and why."

-Who will believe them?

If I were laying the groundwork for taking on broadcasting and migrating it to broadbanding, I would need three major things:

1. I would start with releasing tools that enhance the viewing experience, not inhibit it (Retina). Every person that I show the new iPad to is literally floored by the pictures. And I realized this is the first step in getting consumers acclimated to images that make high definition seem mediocre.

2. I would need to find a way to integrate that tool into the existing monitoring system (televisions). Last year, Apple made a big push for AirPlay which allows you to easily and wirelessly push content from your computer or iPad to your television.

3. I would need a single place in which to control and distribute all content without the limitations of terrestrial broadcast, satellite or cable (Apple Television). While this hasn't even been announced yet, I predict that Apple is building a device that brings the power of interconnected device control (iPhone, iPad, MacBook, etc.) to a hybrid of a television and a computer. This cloud-centric device means you will control it with your phone (goodbye TV remote) and view what you want, when you want through an Apple Television application similar to iTunes (maybe iViews).

Clearly Apple has a plan and I think we're experiencing some major foundational components that are required for the release. But that doesn't mean we can't enjoy the isolated power of this latest chip set on the new iPad.

For me and my team, we took a moment to do some experiments with the display, the resolution and some high fidelity files that we had. First, we took some resolution charts and loaded them on the iPad to see how well the display handled them. This picture is not the file itself, rather a 600dpi, 6K (6299x4725) resolution file loaded onto the iPad, then screen captured on the iPad and off-loaded. The result is amazing. I can see nearly twice as deep into the zone as my 15" MacBook Pro.
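For anyone who wants to check the pixel math behind these comparisons, here is a minimal sketch in Python. The iPad and 6K chart dimensions come from this post; the HDTV frame (1920x1080) and the 2K scan frame (2048x1556, a full-aperture scan) are my assumptions about what those terms mean here, and the helper names are purely illustrative.

```python
# Resolutions as (width, height) in pixels.
IPAD_RETINA = (2048, 1536)   # quoted in the post
CHART_6K = (6299, 4725)      # quoted in the post
HDTV_1080 = (1920, 1080)     # assumption: "HDTV" means 1080-line HD
FILM_SCAN_2K = (2048, 1556)  # assumption: full-aperture 2K film scan


def pixel_count(size):
    """Total number of pixels in a (width, height) frame."""
    width, height = size
    return width * height


def fit_scale(source, target):
    """Uniform scale factor that fits source entirely inside target."""
    return min(target[0] / source[0], target[1] / source[1])


ipad_pixels = pixel_count(IPAD_RETINA)
extra_over_hdtv = ipad_pixels - pixel_count(HDTV_1080)
share_of_2k_scan = ipad_pixels / pixel_count(FILM_SCAN_2K)
chart_scale = fit_scale(CHART_6K, IPAD_RETINA)

print(f"iPad pixels:            {ipad_pixels:,}")
print(f"Extra pixels over HDTV: {extra_over_hdtv:,}")
print(f"Share of a 2K scan:     {share_of_2k_scan:.1%}")
print(f"6K chart shown at:      {chart_scale:.1%} of its linear size")
```

Under those assumptions the iPad comes out roughly one million pixels ahead of 1080-line HD and within about 1.3% of the 2K scan's pixel count, while the 6K chart is displayed at roughly a third of its linear size.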

***Note: the images on the Tumblr blog are 1/4 the resolution of the source. These frame grabs unfortunately do not exhibit the actual resolution of the iPad. For the exact files themselves, I encourage you to download and inspect them using my FTP site. You can find the images at:

webftp.lightiron.com

user: ipad

pass: resolution

This file does the same, only it shows 2K all the way to the edge without any banding or aliasing.