Link

(Via: Boing Boing)

At CNN, Boing Boing pal and security researcher Bruce Schneier and Harvard media professor Nick Couldry write about acedia, "a malady that apparently plagued many Medieval monks. It's a sense of no longer caring about caring, not because one had become apathetic, but because somehow the whole structure of care had become jammed up." According to Schneier and Couldry, the meta-apathy of acedia is one of the strangest and psychologically stressful consequences of the COVID-19 pandemic. From CNN:

The source of our current acedia is not the literal loss of a future; even the most pessimistic scenarios surrounding Covid-19 have our species surviving. The dislocation is more subtle: a disruption in pretty much every future frame of reference on which just going on in the present relies.

Moving around is what we do as creatures, and for that we need horizons. Covid has erased many of the spatial and temporal horizons we rely on, even if we don't notice them very often. We don't know how the economy will look, how social life will go on, how our home routines will be changed, how work will be organized, how universities or the arts or local commerce will survive.

What unsettles us is not only fear of change. It's that, if we can no longer trust in the future, many things become irrelevant, retrospectively pointless. And by that we mean from the perspective of a future whose basic shape we can no longer take for granted. This fundamentally disrupts how we weigh the value of what we are doing right now. It becomes especially hard under these conditions to hold on to the value in activities that, by their very nature, are future-directed, such as education or institution-building.

image: transformation of original photo by Jessie Eastland (CC BY-SA 4.0)

0 notes

Link

(Via: Lobsters)

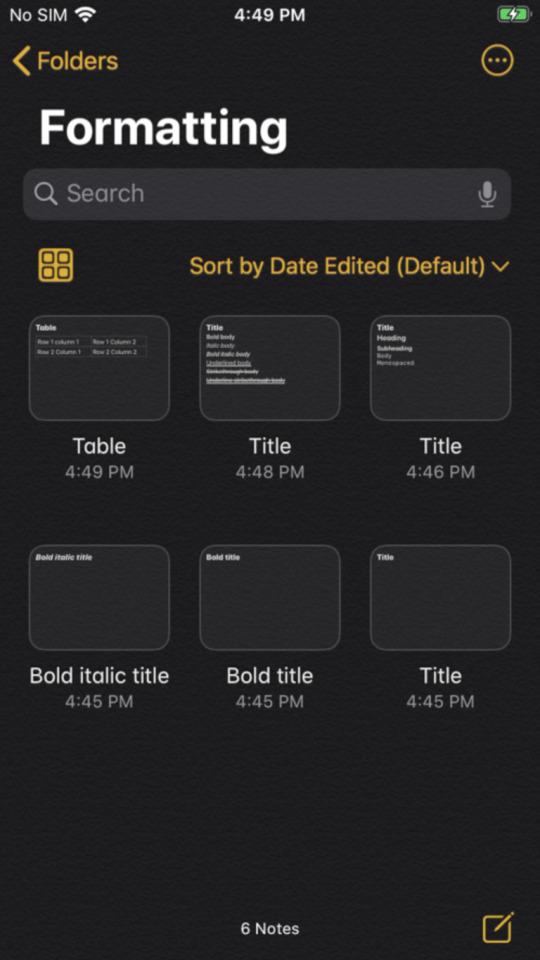

TL;DR: This post explains portions of two protobufs used by Apple, one for the Note format itself and another for embedded objects. More importantly, it explains how you can figure out the structure of protobufs.

Background

Previous entries in this series covered how to deal with Apple Notes and the embedded objects in them, including embedded tables and galleries. Throughout these posts, I have referred to the fact that Apple uses protocol buffers (protobufs) to store the information for both notes and the embedded objects within them. What I have not yet done is actually provide the .proto file that was used to generate the Ruby output, or explained how you can develop the same on your app of interest. If you only care about the first part of that, you can view the .proto file or the config I use for protobuf-inspector. Both of these files are just a start to pull out the important parts for processing and can certainly be improved.

As with previous entries, I want to make sure I give credit where it is due. After pulling apart the Note protobuf and while I was trying to figure out the table protobuf, I came across dunhamsteve’s work. As a result, I went back and modified some of my naming to better align to what he had published and added in some fields like version which I did not have the data to discover.

What is a Protocol Buffer?

To quote directly from the source,

Protocol buffers are Google’s language-neutral, platform-neutral, extensible mechanism for serializing structured data – think XML, but smaller, faster, and simpler. You define how you want your data to be structured once, then you can use special generated source code to easily write and read your structured data to and from a variety of data streams and using a variety of languages.

What does that mean? It means a protocol buffer is a way you can write a specification for your data and use it in many projects and languages with one command. The end result is source code for whatever language you are writing in. For example, Sean Ballinger’s Alfred Search Notes App used my notestore.proto file to compile to Go instead of Ruby to interact with Notes on MacOS. When you use it in your program, the data which you save will be a raw data stream which won’t look like much, but will be intelligable to any code with that protobuf definition.

The definition is generally a .proto file which would look something like:

syntax = "proto2"; // Represents an attachment (embedded object) message AttachmentInfo { optional string attachment_identifier = 1; optional string type_uti = 2; }

This definition would have just one message type (AttachmentInfo), with two fields (attachment_identifier and type_uti), both optional. This is using the proto2 syntax.

Why Care About Protobufs

Protobufs are everywhere, especially if you happen to be working with or looking at Google-based systems, such as Android. Apple also uses a lot of them in iOS, and for people that have to support both operating systems, using a protobuf makes the pain of maintaining two different code bases slightly less annoying because you can compile the same definition to different languages. If you are in forensics, you may come across something that looks like it isn’t plaintext and discover that you’re actually looking at a protobuf. When it comes specifically to Apple Notes, protobufs are used both for the Note itself and the attachments.

How to Use a .proto file

Assuming you have a .proto file, either from building one yourself or from finding one from your favorite application, you can compile it to your target language using protoc. The resulting file can then be included in your project using whatever that language’s include statement is to create the necessary classes for the data. For example, when writing Apple Cloud Notes Parser in Ruby, I used protoc --ruby_out=. ./proto/notestore.proto to compile it and then require_relative 'notestore_pb.rb' in my code to include it.

If I wanted instead to add in support for python, I would only have to make this change: protoc --ruby_out=. --python_out=. ./proto/notestore.proto

How Can You Find a Protobuf Definition File?

If you come up against a protobuf in an application you are looking at, you might be able to find the .proto protobuf definition file in the application itself or somewhere on the forensic image. I ended up going through an iOS 13 forensic image earlier this year and found that Apple still had some of theirs on disk:

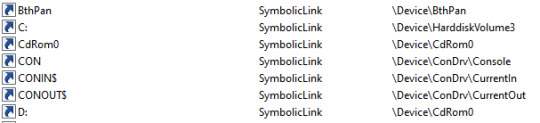

[notta@cuppa iOS13_logical]$ find | grep '\.proto$' ./System/Library/Frameworks/MultipeerConnectivity.framework/MultipeerConnectivity.proto ./System/Library/PrivateFrameworks/ActivityAchievements.framework/ActivityAchievementsBackCompat.proto ./System/Library/PrivateFrameworks/ActivityAchievements.framework/ActivityAchievements.proto ./System/Library/PrivateFrameworks/CoreLocationProtobuf.framework/Support/Harvest/CLPCollectionRequest.proto ./System/Library/PrivateFrameworks/ActivitySharing.framework/ActivitySharingDatabaseCodables.proto ./System/Library/PrivateFrameworks/ActivitySharing.framework/ActivitySharingDomainCodables.proto ./System/Library/PrivateFrameworks/ActivitySharing.framework/ActivitySharingInvitationCodables.proto ./System/Library/PrivateFrameworks/ActivitySharing.framework/ActivitySharingCloudKitCodables.proto ./System/Library/PrivateFrameworks/CloudKitCode.framework/RecordTransport.proto ./System/Library/PrivateFrameworks/RemoteMediaServices.framework/RemoteMediaServices.proto ./System/Library/PrivateFrameworks/CoreDuet.framework/knowledge.proto ./System/Library/PrivateFrameworks/HealthDaemon.framework/Statistics.proto ./System/Library/PrivateFrameworks/AVConference.framework/VCCallInfoBlob.proto ./System/Library/PrivateFrameworks/AVConference.framework/captions.proto

Some of these are really interesting when you look at them, particularly if you care about their location data and pairing. You don’t even have to have an iOS forensic image sitting around as all of the same files are included in your copy of MacOS 10.15.6, as well, if you run sudo find /System/ -iname "*.proto". I am not including any interesting snippets of those because they are copyrighted by Apple and I would explicitly note that none are related to Apple Notes or the contents of this post.

In general, you should not expect to find these definitions sitting around since the definition file isn’t needed once the code is generated. For more open source applications, you might be interested in some Google Dorks, especially when looking at Android artifacts, as you might still find them.

How Can You Rebuild The Protobuf?

But what if you can’t find the definition file, how can you rebuild it yourself? This was the most interesting part of rewriting Apple Cloud Notes Parser as I had no knowledge of how Apple typically represents data, nor protobufs, so it was a fun learning adventure.

If you have nothing else, the protoc --decode-raw command can give you an intial look at what is in the data, however this amounts to not much more than pretty printing a JSON object, it doesn’t do a great job of telling you you what might be in there. I made heavy use of mildsunrise’s protobuf-inspector which at least makes an attempt to tell you what you might be looking at. Another benefit to using this is that it lets you incrementally build up your own definition by editing a file named protobuf_config.py in the protobuf-insepctor folder.

For example, below is the output from protobuf-inspector when I ran it on the Gunzipped contents of one of the first notes in my test database.

[notta@cuppa protobuf-inspector]$ python3 main.py < ~/note_18.blob root: 1 <varint> = 0 2 <chunk> = message: 1 <varint> = 0 2 <varint> = 0 3 <chunk> = message: 2 <chunk> = "Pure blob title" 3 <chunk> = message: 1 <chunk> = message(1 <varint> = 0, 2 <varint> = 0) 2 <varint> = 0 3 <chunk> = message(1 <varint> = 0, 2 <varint> = 0) 5 <varint> = 1 3 <chunk> = message: 1 <chunk> = message(1 <varint> = 1, 2 <varint> = 0) 2 <varint> = 5 3 <chunk> = message(1 <varint> = 1, 2 <varint> = 0) 5 <varint> = 2 3 <chunk> = message: 1 <chunk> = message(1 <varint> = 1, 2 <varint> = 5) 2 <varint> = 5 3 <chunk> = message(1 <varint> = 1, 2 <varint> = 8) 4 <varint> = 1 5 <varint> = 3 3 <chunk> = message: 1 <chunk> = message(1 <varint> = 1, 2 <varint> = 10) 2 <varint> = 4 3 <chunk> = message(1 <varint> = 1, 2 <varint> = 0) 4 <varint> = 1 5 <varint> = 4 3 <chunk> = message: 1 <chunk> = message(1 <varint> = 1, 2 <varint> = 14) 2 <varint> = 10 3 <chunk> = message(1 <varint> = 1, 2 <varint> = 0) 5 <varint> = 5 3 <chunk> = message: 1 <chunk> = message: 1 <varint> = 0 2 <varint> = 4294967295 2 <varint> = 0 3 <chunk> = message: 1 <varint> = 0 2 <varint> = 4294967295 4 <chunk> = message: 1 <chunk> = message: 1 <chunk> = bytes (16) 0000 EE FE 10 DA 5A 79 43 25 88 BA 6D CA E2 E9 B7 EC ....ZyC%..m..... 2 <chunk> = message(1 <varint> = 24) 2 <chunk> = message(1 <varint> = 9) 5 <chunk> = message: 1 <varint> = 5 2 <chunk> = message(1 <varint> = 0, 3 <varint> = 1) 5 <chunk> = message: 1 <varint> = 5 2 <chunk> = message(1 <varint> = 0, 3 <varint> = 1) 5 <chunk> = message: 1 <varint> = 5 2 <chunk> = message(1 <varint> = 0, 3 <varint> = 1)

There is a lot in here for a note that just says “Pure blob title”! Because we know that protobufs are made up of messages and fields, as we look through this we are going to try to figure out what the messages are and what types of fields they have. To do that, you want to pay attention to the field types (such as “varint”) and numbers (1, 2, 3, you know what numbers are).

In a protobuf, each field number corresponds to exactly one field, so when you see many of the same field number, you know that is a repeated field. In the above example, there are a lot of repeated field 5, which is a message that contains two things, a varint and another message. You also want to pay attention to the values given and look for magic numbers that might correspond to things like timestamps, the length of a string, the length of a substring, or an index within the overall protobuf.

Breaking Down an Example

Looking at the very start of this, we see that this protobuf has one root object with in. That root object has two fields which we know about: 1 and 2. However, we don’t have enough information to say anything meaningful about them, other than that field 2 is clearly a message type that contains everything else.

root: 1 <varint> = 0 2 <chunk> = message: ...

Looking within field 2, we see a very similar issue. It has three fields, two of which (1 and 2) we don’t know enough about to deduce their purpose. Field 3, however, again is a clear message with a lot more inside of it.

... 2 <chunk> = message: 1 <varint> = 0 2 <varint> = 0 3 <chunk> = message: ...

Field 3 is where it gets interesting. We see some plaintext in field 2, which contains the entire text of this particular note. We see repeated fields 3 and 5, so those messages clearly can apply more than once. We see only one field 4, which is a message that has a 16-byte value and two integers.

... 3 <chunk> = message: 2 <chunk> = "Pure blob title" 3 <chunk> = message: 1 <chunk> = message(1 <varint> = 0, 2 <varint> = 0) 2 <varint> = 0 3 <chunk> = message(1 <varint> = 0, 2 <varint> = 0) 5 <varint> = 1 3 <chunk> = message: 1 <chunk> = message(1 <varint> = 1, 2 <varint> = 0) 2 <varint> = 5 3 <chunk> = message(1 <varint> = 1, 2 <varint> = 0) 5 <varint> = 2 ... [3 repeats a few times] 4 <chunk> = message: 1 <chunk> = message: 1 <chunk> = bytes (16) 0000 EE FE 10 DA 5A 79 43 25 88 BA 6D CA E2 E9 B7 EC ....ZyC%..m..... 2 <chunk> = message(1 <varint> = 24) 2 <chunk> = message(1 <varint> = 9) 5 <chunk> = message: 1 <varint> = 5 2 <chunk> = message(1 <varint> = 0, 3 <varint> = 1) ... [5 repeats a few times]

An Example protobuf-Inspector Config

At this point, we need more data to test against. To make that test meaningful, I would first save the information we’ve seen above into a new definition file for protobuf-inspector. That way when we run this on other notes, anything that is new will stand out. Even though we don’t know much, this could be your initial definition file, saved in the folder you run protobuf-inspector from as protobuf_config.py.

types = { # Main Note Data protobuf "root": { # 1: unknown? 2: ("document"), }, # Related to a Note "document": { # # 1: unknown? # 2: unknown? 3: ("note", "Note"), }, "note": { # 2: ("string", "Note Text"), 3: ("unknown_chunk", "Unknown Chunk"), 4: ("unknown_note_stuff", "Unknown Stuff"), 5: ("unknown_chunk2", "Unknown Chunk 2"), }, "unknown_chunk": { # 1: 2: ("varint", "Unknown Integer 1"), # 3: 5: ("varint", "Unknown Integer 2"), }, "unknown_note_stuff": { # 1: unknown message }, "unknown_chunk2": { 1: ("varint", "Unknown Integer 1"), }, }

Then when we run this against the next note in our database, we see many of the fields we have “identified”. Notice, for example, that the more complex field 3 we considered before is now clearly called a “Note” in the below output. That makes it much easier to understand as you walk through it.

notta@cuppa protobuf-inspector]$ python3 main.py < ~/note_19.blob root: 1 <varint> = 0 2 <document> = document: 1 <varint> = 0 2 <varint> = 0 3 Note = note: 2 Note Text = "Pure bold italic title" 3 Unknown Chunk = unknown_chunk: 1 <chunk> = message(1 <varint> = 0, 2 <varint> = 0) 2 Unknown Integer 1 = 0 3 <chunk> = message(1 <varint> = 0, 2 <varint> = 0) 5 Unknown Integer 2 = 1 3 Unknown Chunk = unknown_chunk: 1 <chunk> = message(1 <varint> = 1, 2 <varint> = 4) 2 Unknown Integer 1 = 1 3 <chunk> = message(1 <varint> = 1, 2 <varint> = 0) 5 Unknown Integer 2 = 2 3 Unknown Chunk = unknown_chunk: 1 <chunk> = message(1 <varint> = 1, 2 <varint> = 0) 2 Unknown Integer 1 = 4 3 <chunk> = message(1 <varint> = 1, 2 <varint> = 8) 4 <varint> = 1 5 Unknown Integer 2 = 3 3 Unknown Chunk = unknown_chunk: 1 <chunk> = message(1 <varint> = 1, 2 <varint> = 5) 2 Unknown Integer 1 = 21 3 <chunk> = message(1 <varint> = 1, 2 <varint> = 0) 5 Unknown Integer 2 = 4 3 Unknown Chunk = unknown_chunk: 1 <chunk> = message: 1 <varint> = 0 2 <varint> = 4294967295 2 Unknown Integer 1 = 0 3 <chunk> = message: 1 <varint> = 0 2 <varint> = 4294967295 4 Unknown Stuff = unknown_note_stuff: 1 <chunk> = message: 1 <chunk> = bytes (16) 0000 EE FE 10 DA 5A 79 43 25 88 BA 6D CA E2 E9 B7 EC ....ZyC%..m..... 2 <chunk> = message(1 <varint> = 26) 2 <chunk> = message(1 <varint> = 9) 5 Unknown Chunk 2 = unknown_chunk2: 1 Unknown Integer 1 = 22 2 <chunk> = message(1 <varint> = 0, 3 <varint> = 1) 5 <varint> = 3

Building Up the Config

Editing that protobuf_config.py file lets you quickly recheck the blobs you previously exported and you can build your understanding up iteratively over time. But how do you build your understanding up? In this case I looked at the fact that the plaintext string didn’t have any of the fancy bits that I saw in Notes and assumed that some parts of either the repeated 3, or the repeated 5 sections dealt with formatting.

Because there are a lot of fancy bits that could be used, I tried to generate a lot of test examples which had only one change in each. So I started with what you see above, just a title and generated notes that iteratively had each of the formatting possibilities in a title. To make it really easy on myself to recognize string offsets, I always styled the word which represented the style. For example, any time I had the word bold it was bold and if I used italics it was italics.

As I generated a lot of these, and started generating content in the body of the note, not just the title, I noticed a pattern emerging in field 5. The lengths of all of the messages in field 5 always added up to the length of the text. In the example above from Note 19, “Unknown Integer 1” is value 22, and the length of “Note Text” is 22. In the previous example from Note 18, “Unknown Integer 1” would add up to 15 (there are three enties, each with the value 5), and the length of “Note Text” is 15. Based on this, I started attacking field 5 assuming it contained the formatting information to know how to style the entire string.

Here, for example, are the relevant note texts and that unknown chunk #5 for three more notes which show interesting behavior as you compare the substrings. Play attention to the spaces between words and newlines, as compared to the assumed lengths in field 5.

[notta@cuppa protobuf-inspector]$ python3 main.py < ~/note_21.blob 3 Note = note: 2 Note Text = "Pure bold underlined strikethrough title" 5 Unknown Chunk 2 = unknown_chunk2: 1 Unknown Integer 1 = 40 2 <chunk> = message(1 <varint> = 0, 3 <varint> = 1) 5 <varint> = 3 6 <varint> = 1 7 <varint> = 1 [notta@cuppa protobuf-inspector]$ python3 main.py < ~/note_32.blob 3 Note = note: 2 Note Text = "Title\nHeading\n\nSubheading\nBody\nMono spaced\n\n" 5 Unknown Chunk 2 = unknown_chunk2: 1 Unknown Integer 1 = 6 2 <chunk> = message(1 <varint> = 0, 3 <varint> = 1) 5 Unknown Chunk 2 = unknown_chunk2: 1 Unknown Integer 1 = 8 2 <chunk> = message(1 <varint> = 1, 3 <varint> = 1) 5 Unknown Chunk 2 = unknown_chunk2: 1 Unknown Integer 1 = 1 2 <chunk> = message(3 <varint> = 1) 5 Unknown Chunk 2 = unknown_chunk2: 1 Unknown Integer 1 = 11 2 <chunk> = message(1 <varint> = 2, 3 <varint> = 1) 5 Unknown Chunk 2 = unknown_chunk2: 1 Unknown Integer 1 = 5 2 <chunk> = message(3 <varint> = 1) 5 Unknown Chunk 2 = unknown_chunk2: 1 Unknown Integer 1 = 13 2 <chunk> = message(1 <varint> = 4, 3 <varint> = 1) [notta@cuppa protobuf-inspector]$ python3 main.py < ~/note_33.blob 3 Note = note: 2 Note Text = "Not bold title\nBold title\nBold body\nBold italic body\nItalic body" 5 Unknown Chunk 2 = unknown_chunk2: 1 Unknown Integer 1 = 4 2 <chunk> = message(1 <varint> = 0, 3 <varint> = 1) 3 <chunk> = message: 1 <chunk> = ".SFUI-Regular" 5 Unknown Chunk 2 = unknown_chunk2: 1 Unknown Integer 1 = 11 2 <chunk> = message(1 <varint> = 0, 3 <varint> = 1) 3 <chunk> = message: 1 <chunk> = ".SFUI-Regular" 5 Unknown Chunk 2 = unknown_chunk2: 1 Unknown Integer 1 = 11 2 <chunk> = message(1 <varint> = 0, 3 <varint> = 1) 5 Unknown Chunk 2 = unknown_chunk2: 1 Unknown Integer 1 = 10 2 <chunk> = message(3 <varint> = 1) 5 <varint> = 1 5 Unknown Chunk 2 = unknown_chunk2: 1 Unknown Integer 1 = 17 2 <chunk> = message(3 <varint> = 1) 5 <varint> = 3 5 Unknown Chunk 2 = unknown_chunk2: 1 Unknown Integer 1 = 11 2 <chunk> = message(3 <varint> = 1) 5 <varint> = 2

Inside the “Unknown Chunk 2” message’s field #2, we see a message that has at least two fields, 1 and 3. As we compare the text in note 32, which has each of the types of headings (Title, heading, subheading, etc), to the other two notes, we see that every time there is a title, the first field in the message in field 2, is always 0. When it is a heading, the value is 1, and a subheading the value is 2. Body text has no entry in that field, but monospaced text does. This makes it seem like that field #2 tells us the style of the text.

Then when we compare note 33’s types of text (bold, bold italic, and italic), we can see that everything stays the same except for field #5. In this case, when text is bold, the value in that field is 1, and when it is italic, it is 2. When it it both bold and italic, the value is 3. In note 21, we can see that fields 6 and 7 only show up in that message when something is underlined or struck through, this would make those seem like a boolean flag.

I created many more tests like this, but the general theory is the same: try to create situations where the only change in the protobuf is as small as possible. This was a lot of different notes, using literally all of the available featues in many of the needed combinations to be able to isolate what was set when. As I thought I figured out what a field was, I would add it to the protobuf_config.py file and continue going, until something did not make sense at which point I would back out that specific change. I did not try to figure out the entire structure as my goal was purely to be able to recreate the display of the note in HTML.

Although Apple does not directly document their Notes formats, the Developer Documents do provide insight into what you might expect to find. For example, Core Text is how text is laid out, which sounds a lot like what we were trying to find out in field 5. Reading these documents helped me understand some of the general ideas to be watching for.

What is in the Notes Protobuf Config?

Now that you know how you can iteratively build up a definition, I want to walk through the notestore.proto file which Apple Cloud Notes Parser uses. This could be easily imported to other projects in other languages besides Ruby and I am taking sections of the file out of order to build up a common understanding.

Note Protobuf

syntax = "proto2"; // // Classes related to the overall Note protobufs // // Overarching object in a ZNOTEDATA.ZDATA blob message NoteStoreProto { required Document document = 2; } // A Document has a Note within it. message Document { required int32 version = 2; required Note note = 3; } // A Note has both text, and then a lot of formatting entries. // Other fields are present and not yet included in this proto. message Note { required string note_text = 2; repeated AttributeRun attribute_run = 5; }

It seemed like what I found in poking at the protobufs fit the proto2 syntax better than the proto3 syntax, so that’s what I’m using. The NoteStoreProto, Document, and Note messages represent what we were looking at in the examples above, the highest level messages in the protobuf. As you can see, we don’t do much with the NoteStoreProto or Document and I would not be surprised to learn these have different names and a more general use in Apple. For the Note itself, the only two fields this .proto definition concerns itself with are 2 (the note text) and 5 (the attribute runs for formatting and the like).

// Represents a "run" of characters that need to be styled/displayed/etc message AttributeRun { required int32 length = 1; optional ParagraphStyle paragraph_style = 2; optional Font font = 3; optional int32 font_weight = 5; optional int32 underlined = 6; optional int32 strikethrough = 7; optional int32 superscript = 8; //Sign indicates super/sub optional string link = 9; optional Color color = 10; optional AttachmentInfo attachment_info = 12; } //Represents a color message Color { required float red = 1; required float green = 2; required float blue = 3; required float alpha = 4; } // Represents an attachment (embedded object) message AttachmentInfo { optional string attachment_identifier = 1; optional string type_uti = 2; } // Represents a font message Font { optional string font_name = 1; optional float point_size = 2; optional int32 font_hints = 3; } // Styles a "Paragraph" (any run of characters in an AttributeRun) message ParagraphStyle { optional int32 style_type = 1 [default = -1]; optional int32 alignment = 2; optional int32 indent_amount = 4; optional Checklist checklist = 5; } // Represents a checklist item message Checklist { required bytes uuid = 1; required int32 done = 2; }

Speaking of the AttributeRun, these are the messages which are needed to put it back together. Each of the AttributeRun messages have a length (field 1). They optionally have a lot of other fields, such as a ParagraphStyle (field 2), a Font (field 3), the various formatting booleans we saw above, a Color (field 10), and AttachmentInfo (field 12). The Color is pretty straight forward, taking RGB values. The AttachmentInfo is simple enough, just keeping the ZIDENTIFIER value and the ZTYPEUTI value. The Font isn’t something I actually take advantage of yet, but there are placeholders for the values which appear.

The ParagraphStyle is one of the more import messages for displaying a note as it helps to style a run of characters with information such as the indentation. It also contains within it a CheckList message, which holds the UUID of the checklist and whether or not it has been completed.

With the protobuf definition so far, you should be able to correctly render the text, although you will need a cheat sheet for the formatting found in ParagraphStyle’s first field. I originally had this in the protobuf definition, but I do not believe it is a true enum, so I moved it to the AppleNote class’ code as constants.

class AppleNote # Constants to reflect the types of styling in an AppleNote STYLE_TYPE_DEFAULT = -1 STYLE_TYPE_TITLE = 0 STYLE_TYPE_HEADING = 1 STYLE_TYPE_SUBHEADING = 2 STYLE_TYPE_MONOSPACED = 4 STYLE_TYPE_DOTTED_LIST = 100 STYLE_TYPE_DASHED_LIST = 101 STYLE_TYPE_NUMBERED_LIST = 102 STYLE_TYPE_CHECKBOX = 103 # Constants that reflect the types of font weighting FONT_TYPE_DEFAULT = 0 FONT_TYPE_BOLD = 1 FONT_TYPE_ITALIC = 2 FONT_TYPE_BOLD_ITALIC = 3 ... end

MergeableData protobuf

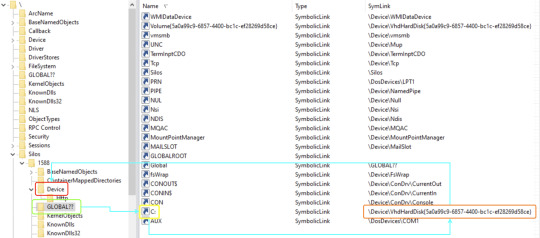

// // Classes related to embedded objects // // Represents the top level object in a ZMERGEABLEDATA cell message MergableDataProto { required MergableDataObject mergable_data_object = 2; } // Similar to Document for Notes, this is what holds the mergeable object message MergableDataObject { required int32 version = 2; // Asserted to be version in https://github.com/dunhamsteve/notesutils required MergeableDataObjectData mergeable_data_object_data = 3; } // This is the mergeable data object itself and has a lot of entries that are the parts of it // along with arrays of key, type, and UUID items, depending on type. message MergeableDataObjectData { repeated MergeableDataObjectEntry mergeable_data_object_entry = 3; repeated string mergeable_data_object_key_item = 4; repeated string mergeable_data_object_type_item = 5; repeated bytes mergeable_data_object_uuid_item = 6; } // Each entry is part of the pbject. For example, one entry might be identifying which // UUIDs are rows, and another might hold the text of a cell. message MergeableDataObjectEntry { required RegisterLatest register_latest = 1; optional Dictionary dictionary = 6; optional Note note = 10; optional MergeableDataObjectMap custom_map = 13; optional OrderedSet ordered_set = 16; }

Similar to the Note protobuf definition above, the MergeableDataProto and MergeableDataObject messages are likely larger objects which Notes just doesn’t have enough data to show the full understanding. MergeableDataObjectData (I know, the naming could use some work, that’s a future improvement) is really the embedded object found in the ZMERGEABLEDATA column. It is made up of a lot of MergeableDataObjectEntry messages (field 1) and the example from embedded tables is that an entry might tell the user which other entries are rows or columns. The MergeableDataObjectData also has strings which represent the key (field 4) or the type of item (field 5), and a set of 16 bytes which represent a UUID to identify this object (field 6).

MergeableDataObjectEntry is where things get more complicated. So far five of its fields seem relevant, with the Note message in field 10 already having been explained above. The RegisterLatest (field 1), Dictionary (field 6), MergeableDataObjectMap (field 13), and OrderedSet (field 16) objects are explained below, but will make the msot sense if you read about embedded tables at the same time.

// ObjectIDs are used to identify objects within the protobuf, offsets in an array, or // a simple String. message ObjectID { required uint64 unsigned_integer_value = 2; required string string_value = 4; required int32 object_index = 6; } // Register Latest is used to identify the most recent version message RegisterLatest { required ObjectID contents = 2; }

The RegisterLatest object has one ObjectID within it (field 2). This message is used to identify which ObjectID is the latest version. This is needed because Notes can have more than one source, between your local device, shared iCloud accounts, and a web editor in iCloud. As updates are merged, you can have older edits present, which you don’t want to use.

The ObjectID itself is useful in more places. It is used heavily in embedded tables and has three different possible pointers, one for unsigned integers (field 2), one for strings (field 4), and one for objects (field 6). It should point to one of those three, as way seen below.

// The Object Map uses its type to identify what you are looking at and // then a map entry to do something with that value. message MergeableDataObjectMap { required int32 type = 1; repeated MapEntry map_entry = 3; } // MapEntries have a key that maps to an array of key items and a value that points to an object. message MapEntry { required int32 key = 1; required ObjectID value = 2; }

Now that the ObjectID message is defined, we can look at the MergeableDataObjectMap. This message has a type (field 1) and potentially a lot of MapEntry messages (field 3). The type will be meaningful when looked up from another place.

The MapEntry message has an integer key (field 1) and an ObjectID value (field 2). The ObjectID will point to something that is indicated by the key, either as an integer, string, or object.

// A Dictionary holds many DictionaryElements message Dictionary { repeated DictionaryElement element = 1; } // Represents an object that has pointers to a key and a value, asserting // somehow that the key object has to do with the value object. message DictionaryElement { required ObjectID key = 1; required ObjectID value = 2; }

The Directionary message has a lot of DictionaryElement messages (field 1) within it. Each DictionaryElement has a key (field 1) and a value (field 2), both of which are ObjectIDs. For example, the key might be an ObjectID which has an ObjectIndex of 20 and the value might be an ObjectID with an ObjectIndex of 19. That would say that whatever is contained in index 20 is how we understand what we do with whatever is in index 19.

// An ordered set is used to hold structural information for embedded tables message OrderedSet { required OrderedSetOrdering ordering = 1; required Dictionary elements = 2; } // The ordered set ordering identifies rows and columns in embedded tables, with an array // of the objects and contents that map lookup values to originals. message OrderedSetOrdering { required OrderedSetOrderingArray array = 1; required Dictionary contents = 2; } // This array holds both the text to replace and the array of UUIDs to tell what // embedded rows and columns are. message OrderedSetOrderingArray { required Note contents = 1; repeated OrderedSetOrderingArrayAttachment attachment = 2; } // This array identifies the UUIDs that are embedded table rows or columns message OrderedSetOrderingArrayAttachment { required int32 index = 1; required bytes uuid = 2; }

Finally, we have a set of messages related to OrderedSets. These are really key in tables (as are most of these more complicated messages we discuss) and kind of wrap around the messages we saw above (i.e. an ObjectID is likely pointing to an index in an OrderedSet). An OrderedSet message has an OrderedSetOrdering message (field 1) and a Dictionary (field 2). The OrderedSetOrdering message has an OrderedSetOrderingArray (field 1) and another Dictionary (field 2). The OrderedSetOrderingArray interestingly has a Note (field 1) and potentially many OrderedSetOrderingArrayAttachment messages (field 2). Finally, the OrderedSetOrderingArrayAttachment has an index (field 1) and a 16-byte UUID (field 2).

I would highly recommend checking out the blog post about embedded tables to get through these last three sections of the protobuf with an example to follow along.

Conclusion

Protobufs are an efficient way to store data, particularly when you have to interact with that same data or data schema from different languages. My understanding of the Apple Notes protobuf is certainly not complete, but at this point is generally good enough to support recreating the look of a note after parsing it. Most of the protobuf is straightforward, it is really when you get into embedded tables that things get crazy. At this point, you should have a good enough understanding to compile the Cloud Note Parser’s proto file for your target language and start playing with it yourself!

0 notes

Link

(Via: Hacker News)

Act 1: Sunday afternoon

So you know when you’re flopping about at home, minding your own business, drinking from your water bottle in a way that does not possess any intent to subvert the Commonwealth of Australia?

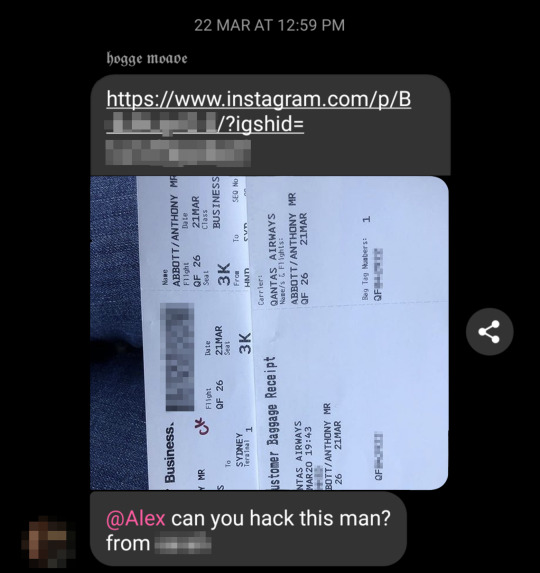

It’s a feeling I know all too well, and in which I was vigorously partaking when I got this message in “the group chat”.

A nice message from my friend, with a photo of a boarding pass 🙂 A good thing about messages from your friends is that they do not have any rippling consequences 🙂🙂🙂

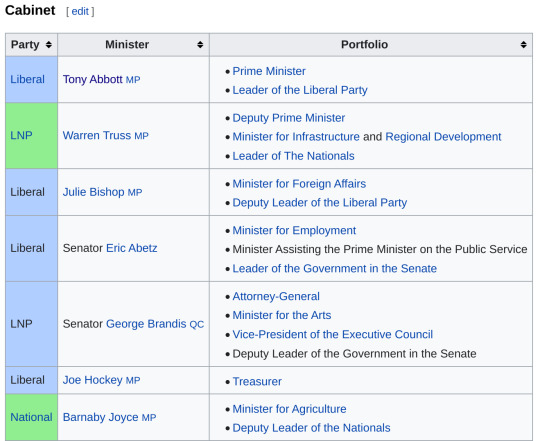

The man in question is Tony Abbott, one of Australia’s many former Prime Ministers.

That’s him, officer

For security reasons, we try to change our Prime Minister every six months, and to never use the same Prime Minister on multiple websites.

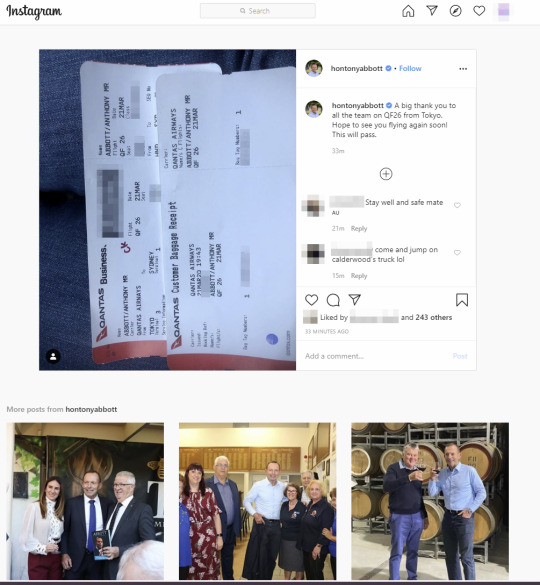

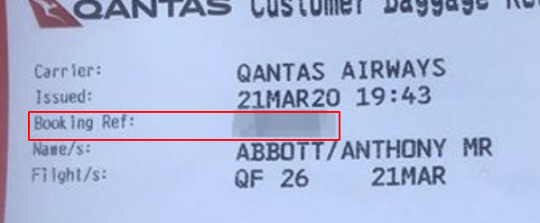

The boarding pass photo

This particular former PM had just posted a picture of his boarding pass on Instagram (Instagram, in case you don’t know it, is an app you can open up on your phone any time to look at ads).

The since-deleted Instagram post showing the boarding pass and baggage receipt. The caption reads “coming back home from japan 😍😍 looking forward to seeing everyone! climate change isn’t real 😌 ok byeee”

“Can you hack this man?”

My friend (who we will refer to by their group chat name, 𝖍𝖔𝖌𝖌𝖊 𝖒𝖔𝖆𝖉𝖊) is asking whether I can “hack this man” not because I am the kind of person who regularly commits 𝒄𝒚𝒃𝒆𝒓 𝒕𝒓𝒆𝒂𝒔𝒐𝒏 on a whim, but because we’d recently been talking about boarding passes.

I’d said that people post pictures of their boarding passes all the time, not knowing that it can sometimes be used to get their passport number and stuff. They just post it being like “omg going on holidayyyy 😍😍😍”, unaware that they’re posting cringe.

People post their boarding passes all the time, because it’s not clear that they’re meant to be secret

Meanwhile, some hacker is rubbing their hands together, being all “yumyum identity fraud 👀” in their dark web Discord, because this happens a lot.

So there I was, making intense and meaningful eye contact with this chat bubble, asking me if I could “hack this man”.

Surely you wouldn’t

Of course, my friend wasn’t actually asking me to hack the former Prime Minister.

However.

You gotta.

I mean… what are you gonna do, not click it? Are you gonna let a link that’s like 50% advertising tracking ID tell you what to do? Wouldn’t you be curious?

The former Prime Minister had just posted his boarding pass. Was that bad? Was someone in danger? I didn’t know.

What I did know was: the least I could do for my country would be to have a casual browse 👀

Investigating the boarding pass photo

Step 1: Hubris

So I had a bit of a casual browse, and got the picture of the boarding pass, and then…. I didn’t know what was supposed to happen after that.

Well, I’d heard that it’s bad to post your boarding pass online, because if you do, a bored 17 year-old Russian boy called “Katie-senpai” might somehow use it to commit identity fraud. But I don’t know anyone like that, so I just clumsily googled some stuff.

Googling how 2 hakc boarding pass

Eventually I found a blog post explaining that yes, pictures of boarding passes can indeed be used for Crimes. The part you wanna be looking at for all your criming needs is the barcode, because it’s got the “Booking Reference” (e.g. H8JA2A) in it.

Why do you want the booking reference? It’s one of the two things you need to log in to the airline website to manage your flight.

The second one is your… last name. I was really hoping the second one would be like a password or something. But, no, it’s the booking reference the airline emails you and prints on your boarding pass. And it also lets you log in to the airline website?

That sounds suspiciously like a password to me, but like I’m still fine to pretend it’s not if you are.

Step 2: Scan the barcode

I’ve been practicing every morning at sunrise, but still can’t scan barcodes with my eyes. I had to settle for a barcode scanner app on my phone, but when I tried to scan the picture in the Instagram post, it didn’t work :((

Maybe I shouldn’t have blurred out the barcode first

Step 2: Scan the barcode, but more

Well, maybe it wasn’t scanning because the picture was too blurry.

I spent around 15 minutes in an “enhance, ENHANCE” montage, fiddling around with the image, increasing the contrast, and so on. Despite the montage taking up way too much of the 22 minute episode, I couldn’t even get the barcode to scan.

Step 2: Notice that the Booking Reference is printed right there on the paper

After staring at this image for 15 minutes, I noticed the Booking Reference is just… printed on the baggage receipt.

I graduated university.

But it did not prepare me for this.

askdjhaflajkshdflkh

Step 3: Visit the airline’s website

After recovering from that emotional rollercoaster, I went to qantas.com.au, and clicked “Manage Booking”. In case you don’t know it because you live in a country with fast internet, Qantas is the main airline here in Australia.

(I also very conveniently started recording my screen, which is gonna pay off big time in just a moment.)

Step 4: Type in the Booking Reference

Well, the login form was just… there, and it was asking for a Booking Reference and a last name. I had just flawlessly read the Booking Reference from the boarding pass picture, and, well… I knew the last name.

I did hesitate for a split-second, but… no, I had to know.

Step 5: Crimes(?)

youngman.mp4

The “Manage Booking” page, logged in as some guy called Anthony Abbott

Can I get a YIKES in the chat

Leave a comment if you really felt that.

I guess I was now logged the heck in as Tony Abbott? And for all I know, everyone else who saw his Instagram post was right there with me. It’s kinda wholesome, to imagine us all there together. But also probably suboptimal in a governmental sense.

Was there anything secret in here?

I then just incredibly browsed the page, browsed it so hard.

I saw Tony Abbott’s name, flight times, and Frequent Flyer number, but not really anything super secret-looking. Not gonna be committing any cyber treason with a Frequent Flyer number. The flight was in the past, so I couldn’t change anything, either.

The page said the flight had been booked by a travel agent, so I guessed some information would be missing because of that.

I clicked around and scrolled a considerable length, but still didn’t find any government secrets.

Some people might give up here. But I, the Icarus of computers, was simply too dumb to know when to stop.

We’re not done just because a web page says we’re done

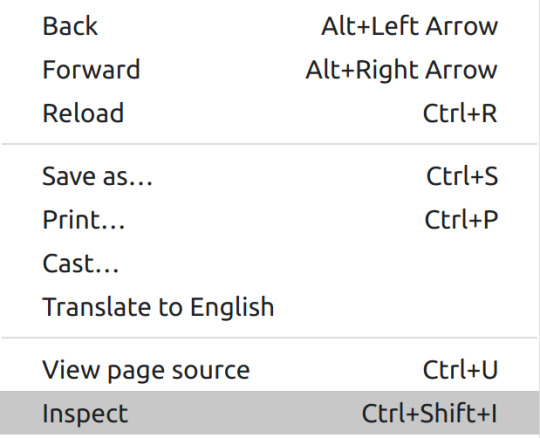

I wanted to see if there were juicy things hidden inside the page. To do it, I had to use the only hacker tool I know.

Right click > Inspect Element, all you need to subvert the Commonwealth of Australia

Listen. This is the only part of the story that might be confused for highly elite computer skill. It’s not, though. Maybe later someone will show you this same thing to try and flex, acting like only they know how to do it. You will not go gently into that good night. You will refuse to acknowledge their flex, killing them instantly.

How does “Inspect Element” work?

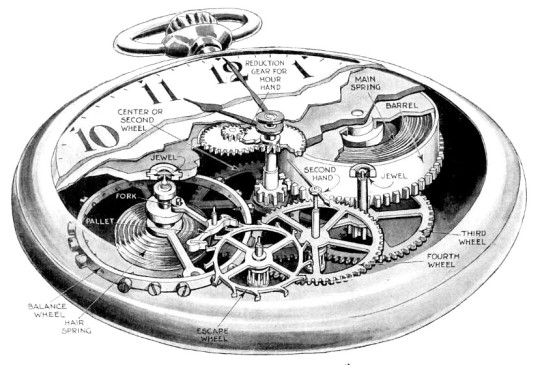

“Inspect Element”, as it’s called, is a feature of Google Chrome that lets you see the computer’s internal representation (HTML) of the page you’re looking at. Kinda like opening up a clock and looking at the cool cog party inside.

Yeahhh go little cogs, look at ‘em absolutely going off. Now imagine this but with like, JavaScript

Everything you see when you use “Inspect Element” was already downloaded to your computer, you just hadn’t asked Chrome to show it to you yet. Just like how the cogs were already in the watch, you just hadn’t opened it up to look.

But let us dispense with frivolous cog talk. Cheap tricks such as “Inspect Element” are used by programmers to try and understand how the website works. This is ultimately futile: Nobody can understand how websites work. Unfortunately, it kinda looks like hacking the first time you see it.

If you’d like to know more about it, I’ve prepared a short video.

Browsing the “Manage Booking” page’s HTML

I scrolled around the page’s HTML, not really knowing what it meant, furiously trying to find anything that looked out of place or secret.

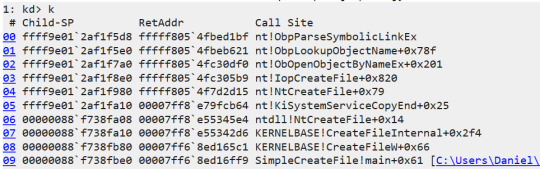

I eventually realised that manually reading HTML with my eyes was not an efficient way of defending my country, and Ctrl + F’d the HTML for “passport”.

oh no

Oh yes

It’s just there.

At this point I was fairly sure I was looking at the extremely secret government-issued ID of the 28th Prime Minister of the Commonwealth of Australia, servant to her Majesty Queen Elizabeth II and I was kinda worried that I was somehow doing something wrong, but like, not enough to stop.

….anything else in this page?

Well damn, if Tony Abbott’s passport number is in this treasure trove of computer spaghetti, maybe there’s wayyyyy more. Perhaps this HTML contains the lost launch codes to the Sydney Opera House, or Harold Holt.

Maybe there’s a phone number?

Searching for phone and number didn’t get anywhere, so I searched for 614, the first 3 digits of an Australian phone number, using my colossal and highly celestial galaxy brain.

Weird uppercase letters

A weird pile of what I could only describe as extremely uppercase letters came up. It looked like this:

RQST QF HK1 HNDSYD/03EN|FQTV QF HK1|CTCM QF HK1 614[phone number]|CKIN QF HN1 DO NOT SEAT ROW [row number] PLS SEAT LAST ROW OF [row letter] WINDOW

So, there’s a lot going on here. There is indeed a phone number in here. But what the heck is all this other stuff?

I realised this was like… Qantas staff talking to eachother about Tony Abbott, but not to him?

In what is surely the subtweeting of the century, it has a section saying HITOMI CALLED RQSTING FASTTRACK FOR MR. ABBOTT. Hitomi must be requesting a “fasttrack” (I thought that was only a thing in movies???) from another Qantas employee.

This is messed up for many reasons

What is even going on here? Why do Qantas flight staff talk to eachother via this passenger information field? Why do they send these messages, and your passport number to you when you log in to their website? I’ll never know because I suddenly got distracted with

Forbidden airline code

I realised the allcaps museli I saw must be some airline code for something. Furious and intense googling led me to several ancient forbidden PDFs that explained some of the codes.

Apparently, they’re called “SSR codes” (Special Service Request). There are codes for things like “Vegetarian lacto-ovo meal” (VLML), “Vegetarian oriental meal” (VOML), and even “Vegetarian vegan meal” (VGML). Because I was curious about these codes, here’s some for you to be curious about too (tag urself, I’m UMNR):

RFTV Reason for Travel UMNR Unaccompanied minor PDCO Carbon Offset (chargeable) WEAP Weapon DEPA Deportee—accompanied by an escort ESAN Passenger with Emotional Support Animal in Cabin

The phone number I found looked like this: CTCM QF HK1 [phone number]. Googling “SSR CTCM” led me to the developer guide for some kind of airline association, which I assume I am basically a member of now.

CTCM QF HK1 translates as “Contact phone number of passenger 1”

Is the phone number actually his?

I thought maybe the phone number belonged to the travel agency, but I checked and it has to be the passenger’s real phone number. That would be, if my calculations are correct,,,, *steeples fingers* Tony Abbott’s phone number.

what have i done

I’d now found Tony Abbott’s:

Passport details

Phone number

Weird Qantas staff comments.

My friend who messaged me had no idea.

Tony Abbott’s passport is probably a Diplomatic passport, which is used to “represent the Australian Government overseas in an official capacity”.

what have i done

By this point I’d had enough defending my country, and had recently noticed some new thoughts in my brain, which were:

oh jeez oh boy oh jeez

i gotta get someone, somehow, to reset tony abbott’s passport number

can you even reset passport numbers

is it possible that i’ve done a crime

Intermission

Act 2: Do not get arrested challenge 2020

In this act, I, your well-meaning but ultimately incompetent protagonist, attempt to do the following things:

⬜ figure out whether i have done a crime

⬜ notify someone (tony abbott?) that this happened

⬜ get permission to publish this here blog post

⬜ tell qantas about the security issue so they can fix it

Spoilers: This takes almost six months.

Let’s skip the boring bits

I contacted a lot of people about this. If my calculations are correct, I called at least 30 phone numbers, to say nothing of The Emails. If you laid all the people I contacted end to end along the equator, they would die, and you would be arrested. Eventually I started keeping track of who I talked to in a note I now refer to as “the hashtag struggle”.

I’m gonna skip a considerable volume of tedious and ultimately unsatisfying telephony, because it’s been a long day of scrolling already, and you need to save your strength.

Alright strap yourself in and enjoy as I am drop-kicked through the goal posts of life.

Part 1: is it possible that i’ve done a crime

I didn’t think anything I did sounded like a crime, but I knew that sometimes when the other person is rich or famous, things can suddenly become crimes. Like, was there going to be some Monarch Law or something? Was Queen Elizabeth II gonna be mad about this?

My usual defence against being arrested for hacking is making sure the person being hacked is okay with it. You heard me, it’s the power of ✨consent✨. But this time I could uh only get it in retrospect, which is a bit yikes.

So I was wondering like… was logging in with someone else’s booking reference a crime? Was having someone else’s passport number a crime? What if they were, say, the former Prime Minister? Would I get in trouble for publishing a blog post about it? I mean you’re reading the blog post right now so obviousl

Update: I have been arrested.

Just straight up Reading The Law

It turned out I could just google these things, and before I knew it I was reading “the legislation”. It’s the rules of the law, just written down.

Look, reading pages of HTML? No worries. Especially if it’s to defend my country. But whoever wrote the legislation was just making up words.

Eventually, I was able to divine the following wisdoms from the Times New Roman tea leaves:

Defamation is where you get in trouble for publishing something that makes someone look bad.

But, it’s fine for me to blog about it, since it’s not defamation if you can prove it’s true

Having Tony Abbott’s passport number isn’t a crime

But using it to commit identity fraud would be

There are laws about what it’s okay to do on a computer

The things it’s okay to do are: If u EVER even LOOK at a computer the wrong way, the FBI will instantly slam dunk you in a legal fashion dependent on the legislation in your area

I am possibly the furthest thing you can be from a lawyer. So, I’m sure I don’t need to tell you not to take this as legal advice. But, if you are the kind of person who takes legal advice from mango blog posts, who am I to stand in your way? Not a lawyer, that’s who. Don’t do it.

You know what, maybe I needed help. From an adult. Someone whose 3-year old kid has been buying iPad apps for months because their parents can’t figure out how to turn it off.

“Yeah, maybe I should get some of that free government legal advice”, I thought to myself, legally. That seemed like a pretty common thing, so I thought it should be easy to do. I took a big sip of water and googled “free legal advice”.

trying to ask a lawyer if i gone and done a crime

Before I went and told everyone about my HTML frolicking, I spent a week calling legal aid numbers, lawyers, and otherwise trying to figure out if I’d done a crime.

During this time, I didn’t tell anyone what I’d done. I asked if any laws would be broken if “someone” had “logged into a website with someone’s publicly-posted password and found the personal information of a former politician”. Do you see how that’s not even a lie? I’m starting to see how lawyers do it.

Calling Legal Aid places

First I call the state government’s Legal Aid number. They tell me they don’t do that here, and I should call another Legal Aid place named something slightly different.

The second place tells me they don’t do that either, and I should call the First Place and “hopefully you get someone more senior”.

I call the First Place again, and they say “oh you’ve been given the run around!”. You see where this is going.

Let’s skip a lot of phone calls. Take my hand as I whisk you towards the slightly-more-recent past. Based on advice I got from two independent lawyers that was definitely not legal advice: I haven’t done a crime.

Helllllll yeah. But I mean it’s a little late because I forgot to mention that by this point I had already emailed explicit details of my activities to the Australian Government.

☑️ figure out whether i have done a crime

⬜ notify someone (tony abbott?) that this happened

⬜ get permission to publish this here blog post

⬜ tell qantas about the security issue so they can fix it

Part 2: trying to report the problem to someone, anyone, please

I had Tony Abbott’s passport number, phone number, and weird Qantas messages about him. I was the only one who knew I had these.

Anyone who saw that Instagram post could also have them. I felt like I had to like, tell someone about this. Someone with like, responsibilities. Someone with an email signature.

wait but do u see the irony in this, u have his phone number right there so u could just-

Yes I see it thank u for pointing this out, wise, astute, and ultimately self-imposed heading. I knew I could just call the number any time and hear a “G’day” I’d never be able to forget. I knew I had a rare opportunity to call someone and have them ask “how did you get this number!?”.

But you can’t just do that.

You can’t just call someone’s phone number that you got by rummaging around in the HTML ball pit. Tony Abbott didn’t want me to have his phone number, because he didn’t give it to me. Maybe if it was urgent, or I had no other option, sure. But I was pretty sure I should do this the Nice way, and show that I come in peace.

I wanted to show that I come in peace because there’s also this pretty yikes thing that happens where you email someone being all like “henlo ur website let me log in with username admin and password admin, maybe u wanna change that??? could just be me but let me kno what u think xoxo alex” and then they reply being like “oh so you’re a HACKER and a CRIMINAL and you’ve HACKED ME AND MY FAMILY TOO and this is a RANSOM and ur from the DARK WEB i know what that is i’ve seen several episodes of mr robot WELL watch out kiddO bc me and my lawyers are bulk-installing tens of thousands of copies of McAfee® Gamer Security as we speak, so i’d like 2 see u try”

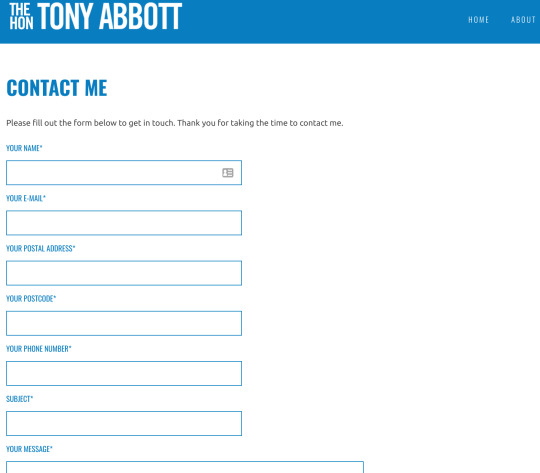

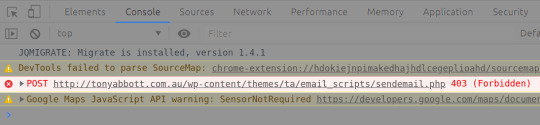

I googled “tony abbott contact”, but there’s only his official website. There’s no phone number on it, only a “contact me” form.

I imagine there have been some passionate opinions typed into this form at 9pm on a Tuesday

Yeah right, have you seen the incredible volume of #content people want to say at politicians? No way anyone’s reading that form.

I later decided to try anyway, using the same Inspect Element ritual from earlier. Looking at the network requests the page makes, I divined that the “Contact me” form just straight up does not work. When you click “submit”, you get an error, and nothing gets sent.

This is an excellent way of using computers to solve the problem of “random people keep sending me angry letters”

Well rip I guess. I eventually realised the people to talk to were probably the government.

The government

It’s a big place.

In the beginning, humans developed the concept of language by banging rocks together and saying “oof, oog, and so on”. Then something went horribly wrong, and now people unironically begin every sentence with “in regards to”. Our story begins here.

The government has like fifty thousand million different departments, and they all know which acronyms to call each other, but you don’t. If you EVER call it DMP&C instead of DPM&C you are gonna be express email forwarded into a nightmare realm the likes of which cannot be expressed in any number of spreadsheet cells, in spite of all the good people they’ve lost trying.

I didn’t even know where to begin with this. Desperately, I called Tony Abbott’s former political party, who were all like

Skip skip skip a few more calls like this.

Maybe I knew someone who knew someone

That’s right, the true government channels were the friends we made along the way.

I asked hacker friends who seemed like they might know government security people. “Where do I report a security issue with like…. a person, not a website?”

They told me to call… 1300 CYBER1?

1300 CYBER1

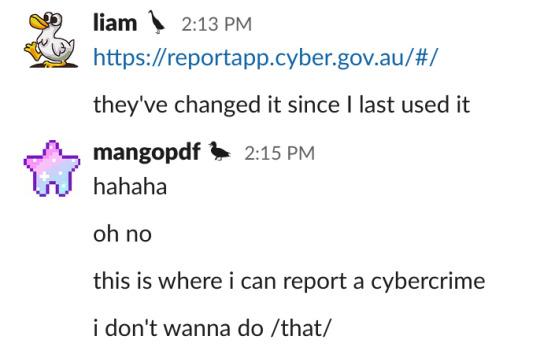

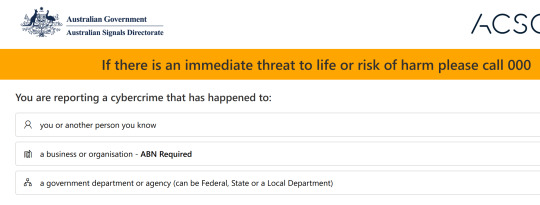

I don’t really have a good explanation for this so I’m just gonna post the screenshots.

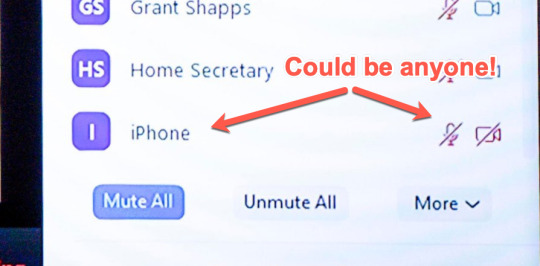

My friend showing me where to report a security issue with the government. I’m gonna need you to not ask any questions about the profile pictures.

Uhhh no wait I don’t wanna click any of these

The planet may be dying, but we live in a truly unparalleled age of content.

You know I smashed that call button on 1300 CYBER1. Did they just make it 1300 CYBER then realise you need one more digit for a phone number? Incredible.

Calling 1300 c y b e r o n e

“Yes yes hello, ring ring, is this 1300 cyber one”? They have to say yes if you ask that. They’re legally obligated.

The person who picked up gave me an email address for ASD (the Australian flavour of America’s NSA), and told me to email them the details.

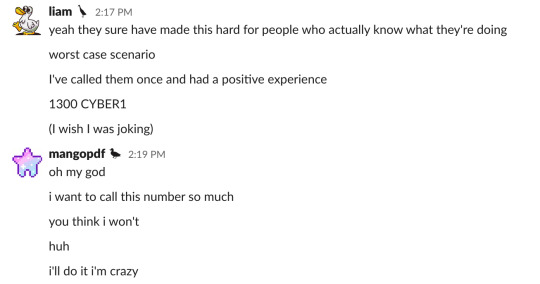

Emailing the government my crimes

Feeling like the digital equivalent of three kids in a trenchcoat, I broke out my best Government Email dialect and emailed ASD, asking for them to call me if they were the right place to tell about this.

Sorry for the clickbait subject but well that’s what happened???

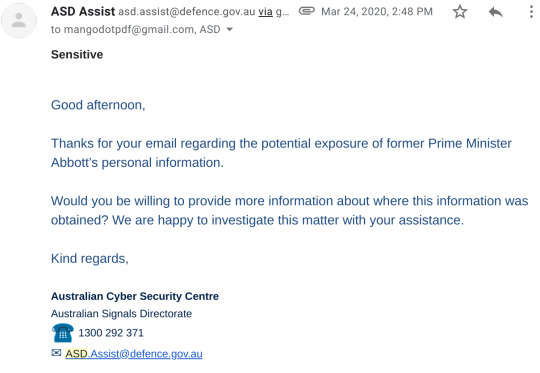

Fooled by my flawless disguise, they replied instantly (in a relative sense) asking for more details.

“Potential” exposure, yeah okay. At least the subject line had “[SEC=Sensitive]” in it so I _knew_ I’d made it big

I absolutely could provide them with more information, so I did, because I love to cooperate with the Australian government.

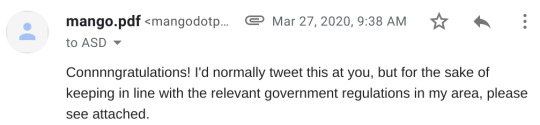

I also asked whether they could give me permission to publish this blog post, and they were all like “Seen 2:35pm”. Eventually, after another big day of getting left on read by the government, they replied, being all like “thanks kiddO, we’re doing like, an investigation and stuff, so we’ll take it from here”.

Overall, ASD were really nice to me about it and happy that I’d helped. They encouraged me to report this kind of thing to them if it happened again, but I’m not really in the business of uhhhhhhhh whatever the heck this is.

By the way, at this point in the story (chronologically) I had no idea if what I was emailing the government was actually the confession to a crime, since I hadn’t talked to a lawyer yet. This is widely regarded as a bad move. I do not recommend anyone else use “but I’m being so helpful and earnest!!!” as a legal defence. But also I’m not a lawyer, so idk, maybe it works?

Wholesomely emailing the government

At one point in what was surely an unforgettable email chain, the person I was emailing added a P.S. containing…. the answer to the puzzle hidden on this website. The one you’re reading this blog on right now. Hello. I guess they must have found this website (hi asd) by stalking the email address I was sending from. This is unprecedented and everything, but:

The puzzle says to tweet the answer at me, not email me

The prize for doing the puzzle is me tweeting this gif of a shakas to you

yeahhhhhhhhhh, nice

So I guess I emailed the shakas gif to the government??? Yeah, I guess I did.

Please find attached

Can I write about this?

I asked them if they could give me permission to write this blog post, or who to ask, and they were like “uhhhhhhhhhhh” and gave me two government media email addresses to try. Listen I don’t wanna be an “ummm they didn’t reply to my emAiLs” kinda person buT they simply left me no choice.

Still, defending the Commonwealth was in ASD’s hands now, and that’s a win for me at this point.

☑️ figure out whether i have done a crime

☑️ notify someone (The Government) that this happened

⬜ get permission to publish this here blog post

⬜ tell qantas about the security issue so they can fix it

Part 3: Telling Qantas the bad news

The security issue

Hey remember like fifteen minutes ago when this post was about webpages?

I’m guessing Qantas didn’t want to send the customer their passport number, phone number, and staff comments about them, so I wanted to let them know their website was doing that. Maybe the website was well meaning, but ultimately caused more harm than good, like how that time the bike path railings on the Golden Gate Bridge accidentally turned it into the world’s largest harmonica.

Unblending the smoothie

But why does the website even send you all that stuff in the first place? I don’t know, but to speculate wildly: Maybe the website just sends you all the data it knows about you, and then only shows you your name, flight times, etc, while leaving the passport number etc. still in the page.

If that were true, then Qantas would want to unblend the digital smoothie they’ve sent you, if you will. They’d want to change it so that they only send you your name and flight times and stuff (which are a key ingredient of the smoothie to be sure), not the whole identity fraud smoothie.

Smoothie evangelism

I wanted to tell them the smoothie thing, but how do I contact them?

The first place to check is usually company.com/security, maybe that’ll w-

Okay nevermind

Okay fine maybe I should just email [email protected] surely that’s it? I could only find a phone number to report security problems to, and I wasn’t sure if it was like…. airport security?

So I just… called the number and was like “heyyyy uhhhh I’d like to report a cyber security issue?”, and the person was like “yyyyya just email [email protected]” and i was like “ok sorrY”.

Time to email Qantas I guess

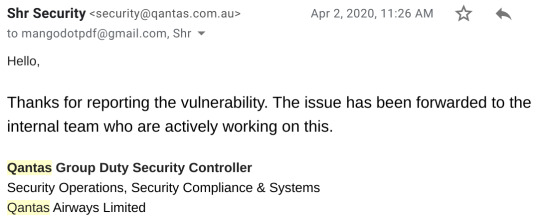

I emailed Qantas, being like “beep boop here is how the computer problem works”.

(Have you been wondering about the little dots in this post? Click this one for the rest of the email .)

A few days later, I got this reply.

And then I never heard from this person again

Airlines were going through kinda a struggle at the time, so I guess that’s what happened?

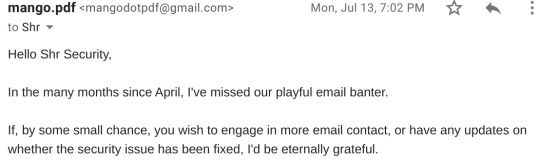

if ur still out there Shr Security i miss u

Struggles

After filling up my “get left on read” combo meter, I desperately resorted to calling Qantas’ secret media hotline number.

They said the issue was being fixed by Amadeus, the company who makes their booking software, rather than with Qantas itself. I’m not sure if that means other Amadeus customers were also affected, or if it was just the way Qantas was using their software, or what.

It’s common to give companies 90 days to fix the bug, before you publicly disclose it. It’s a tradeoff between giving them enough time to fix it, and people being hacked because of the bug as long as it’s out there.

But, well, this was kinda a special case. Qantas was going through some #struggles, so it was taking longer. Lots of their staff were stood down, and the world was just generally more cooked. At the same time, hardly anybody was flying at the time, due to see above re: #struggles. So, I gave Qantas as much time as they needed.

Five months later

The world is a completely different place, and Qantas replies to me, saying they fixed the bug. It did take five months, which is why it took so long for you and I to be having this weird textual interaction right now.

I don’t have a valid Booking Reference, so I can’t actually check what’s changed. I asked a friend to check (with an expired Booking Reference), and they said they didn’t see a mention of “documentNumber” anymore, which sounds like the passport number is no longer there. But That’s Not Science, so I don’t know for sure.

I originally found the bug in March, which was about 60 years ago. BUT we got there baybee, Qantas emailed me saying the bug had been fixed on August 21. They later told me they actually fixed the bug in July, but the person I was talking too didn’t know about it until August.

Qantas also said this when I asked them to review this post:

Thanks again for letting us have the opportunity to review and again for refraining from posting until the fix was in place for vulnerability.

Our standard advice to customers is not to post pictures of the boarding pass, or to at least obscure the key personal information if they do, because of the detail it contains.

We appreciate you bringing it to our attention in such a responsible way, so we could fix the issue, which we did a few months ago now.

I couldn’t find any advice on their website about not posting pictures of customer boarding passes, only news articles about how Qantas stopped printing the Frequent Flyer number on the boarding pass last year, because… well, you can see why.

I also asked Qantas what they did to fix the bug, and they said:

Unfortunately we’re not able to provide the details of fix as it is part of the protection of personal information.

:((

☑️ figure out whether i have done a crime

☑️ notify someone (The Government) that this happened

⬜ get permission to publish this here blog post

☑️ tell qantas about the security issue so they can fix it

Part 4: Finding Tony Abbott

Like 2003’s Finding Nemo, this section was an emotional rollercoaster.

The government was presumably helping Tony Abbott reset his passport number, and making sure his current one wasn’t being used for any of that yucky identity fraud.

But, much like Shannon Noll’s 2004 What About Me?, what about me? I really wanted to write a blog post about it, you know? So I could warn people about the non-obvious risk of sharing their boarding passes, and also make dumb and inaccessible references to the early 2000s.

The government people I talked to couldn’t give me permission to write this post, so rather than willingly wandering deeper into the procedurally generated labyrinth of government department email addresses (it’s dark in there), I tried to find Tony Abbott or his staff directly.

Calling everybody in Australia one by one

I called Tony Abbott’s former political party again, and asked them how to contact him, or his office, or something I’m really having a moment rn. They said they weren’t associated with him anymore, and suggested I call Parliament House, like I was the Queen or something.

In case you don’t know it, Parliament House is sorta like the White House, I think? The Prime Minister lives there and has a nice little garden out the back with a macadamia tree that never runs out, and everyone works in different colourful sections like “Making it so Everyone Gets a Fair Shake of the Sauce Bottle R&D” and “Mateship” and they all wear matching uniforms with lil kangaroo and emu hats, and they all do a little dance every hour on the hour to celebrate another accident-free day in the Prime Minister’s chocolate factory.

calling parliament house i guess

Not really sure what to expect, I called up and was all like “yeah bloody g’day, day for it ay, hot enough for ya?”. Once the formalities were out of the way, I skipped my usual explanation of why I was calling and just asked point-blank if they had Tony Abbott’s contact details.

The person on the phone was casually like “Oh, no, but I can put you through to the Serjeant-at-arms, who can give you the contact details of former members”. I was like “…..okay?????”. Was I supposed to know who that was? Isn’t a Serjeant like an army thing?

But no, the Serjeant-at-arms was just a nice lady who told me “he’s in a temporary office right now, and so doesn’t have a phone number. I can give you an email address or a P.O. box?”. I was like “ok th-thank you your majesty”.

It felt a bit weird just…. emailing the former PM being like “boy do i have bad news for you”, but I figured he probably wouldn’t read it anyway. If it was that easy to get this email address, everyone had it, and so nobody was likely to be reading the inbox.

Spoilers: It didn’t work.

Finding Tony Abbott’s staff

I roll out of bed and stare bleary-eyed into the morning sun, my ultimate nemesis, as Day 40 of not having found Tony Abbott’s staff begins.

This time for sure.

Retinas burning, in a moment of determination/desperation/hubris, I went and asked even more people that might know how to contact Tony Abbott’s staff.

I asked a journalist friend, who had the kind of ruthlessly efficient ideas that come from, like, being a professional journalist. They suggested I find Tony Abbott’s former staff from when he was PM, and contact their offices and see if they have his contact details.

It was a strange sounding plan to me, which I thought meant it would definitely work.

Wikipedia stalking

Apparently Prime Ministers themselves have “ministers” (not prime), and those are their staff. That’s who I was looking for.

Big “me and the boys” energy

Okay but, the problem was that most of these people are retired now, and the glory days of 2013 are over. Each time I hover over one of their names, I see “so-and-so is a former politician and….” and discard their Wikipedia page like a LeSnak wrapper into the wind.

Eventually though, I saw this minister.

Oh he definitely has an office.

That’s the current Prime Minister of Australia (at the time of writing, that is, for all I know we’re three Prime-Ministers deep into 2020 by the time you read this), you know he’s definitely gonna be easier to find.

Let’s call the Prime Minister’s office I guess?

Easy google of the number, absolutely no emotional journey resulting in my growth as a person this time.

When I call, I hear what sounds like two women laughing in the background? One of them answers the phone, slightly out of breath, and says “Hello, Prime Minister’s office?”. I’m like “….hello? Am I interrupting something???”.

I clumsily explain that I know this is Scott Morrison’s office, but I actually was wondering if they had Tony Abbott’s contact details, because it’s for “a time-sensitive media enquiry”, and I j- She interrupts to explain “so Tony Abbott isn’t Prime Minister anymore, this is Scott Morrison’s office” and I’m like “yA I know please I am desperate for these contact details”.

She says “We wouldn’t have that information but I’ll just check for you” and then pauses for like, a long time? Like 15 seconds? I can only wonder what was happening on the other end. Then she says “Oh actually I can give you Tony Abbott’s personal assistant’s number? Is that good?”.

Ummmm YES thanks that’s what I’ve been looking for this whole time? Anyway brb i gotta go be uh a journalist or something.

Calling Tony Abbott’s personal assistant’s personal assistant

I fumble with my phone, furiously trying to dial the number.

I ask if I’m speaking to Tony Abbott’s personal assistant. The person on the other end says no, but he is one of Tony Abbott’s staff. It has been a long several months of calling people. The cold ice is starting to thaw. One day, with enough therapy, I may be able to gather the emotional resources necessary to call another government phone number.

I explain the security issue I want to report, and midway through he interrupts with “sorry…. who are you and what’s the organisation you’re calling from?” and I’m like “uhhhh I mean my name is Alex and uhh I’m not calling from any organisation I’m just like a person?? I just found this thing and…”.

The person is mercifully forgiving, and says that he’ll have to call me back. I stress once again that I’m calling to help them, happy to wait to publish until they feel comfortable, and definitely do not warrant the bulk-installation of antivirus products.

Calling Tony Abbott’s personal assistant

An hour later, I get a call from a number I don’t recognise.

He explains that the guy I talked to earlier was his assistant, and he’s Tony Abbott’s PA. Folks, we made it. It’s as easy as that.

He says he knows what I’m talking about. He’s got the emails. He’s already in the process of getting Tony Abbott a new passport number. This is the stuff. It’s all coming together.

I ask if I can publish a blog post about it, and we agree I’ll send a draft for him to review.

And then he says

“These things do interest him - he’s quite keen to talk to you”

I was like exCUSE me? Tony Abbott, Leader of the 69th Ministry of Australia, wants to call me on the phone? I suppose I owe this service to my country?

This story was already completely cooked so sure, whatever. I’d already declared emotional bankruptcy, so nothing was coming as a surprise at this point.

I asked what he wanted to talk about. “Just to pick your brain on these things”. We scheduled a call for 3:30 on Monday.

And then Tony Abbott just… calls me on the phone?

Mostly, he wanted to check whether his understanding of how I’d found his passport number was correct (it was). He also wanted to ask me how to learn about “the IT”.

He asked some intelligent questions, like “how much information is in a boarding pass, and what do people like me need to know to be safe?”, and “why can you get a passport number from a boarding pass, but not from a bus ticket?”.

The answer is that boarding passes have your password printed on them, and bus tickets don’t. You can use that password to log in to a website (widely regarded as a bad move), and at that point all bets are off, websites can just do whatever they want.

He was vulnerable, too, about how computers are harder for him to understand.

“It’s a funny old world, today I tried to log in to a [Microsoft] Teams meeting (Teams is one of those apps), and the fire brigade uses a Teams meeting. Anyway I got fairly bamboozled, and I can now log in to a Teams meeting in a way I couldn’t before.

It’s, I suppose, a terrible confession of how people my age feel about this stuff.”

Then the Earth stopped spinning on its axis.

For an instant, time stood still.

Then he said it:

“You could drop me in the bush and I’d feel perfectly confident navigating my way out, looking at the sun and direction of rivers and figuring out where to go, but this! Hah!”

This was possibly the most pure and powerful Australian energy a human can possess, and explains how we elected our strongest as our leader. The raw energy did in fact travel through the phone speaker and directly into my brain, killing me instantly.

When I’d collected myself from various corners of the room, he asked if there was a book about the basics of IT, since he wanted to learn about it. That was kinda humanising, since it made me realise that even famous people are just people too.

Anyway I hadn’t heard of a book that was any good, so I told a story about my mum instead.

A story about my mum instead

I said there probably was a book out there about “the basics of IT”, but it wouldn’t help much. I didn’t learn from a book. 13 year old TikTok influencers don’t learn from a book. They just vibe.

My mum always said when I was growing up that:

There were “too many buttons”

She was afraid to press the buttons, because she didn’t know what they did

I can understand that, since grown ups don’t have the sheer dumb hubris of a child, and that’s what makes them afraid of the buttons.

Like, when a toddler uses a spoon for the first time, they don’t know what a spoon is, where they are, or who the current Prime Minister is. But they see the spoon, and they see the cereal, and their dumb baby brain is just like “yeA” and they have a red hot go. And like, they get it wrong the first few times, but it doesn’t matter, because they don’t know to be afraid of getting it wrong. So eventually, they get it right.

leaked footage of me learning how to hack

Okay so I didn’t tell the spoon thing to Tony Abbott, but I did tell him what I always told my mum, which was: “Mum you just gotta press all the buttons, to find out what they do”.

He was like “Oh, you just learn by trial and error”. Exactly! Now that I think about it, it’s a bit scary. We are dumb babies learning to use a spoon for the first time, except if you do it wrong some clown writes a blog post about you. Anyway good luck out there to all you big babies.

Asking to publish this blog post

When I asked Tony Abbott for permission to publish the post you are reading right now while neglecting your responsibilities, he said “well look Alex, I don’t have a problem with it, you’ve alerted me to something I probably should have known about, so if you wanna do that, go for it”.

At the end of the call, he said “If there’s ever anything you think I need to know, give us a shout”.

Look you gotta hand it to him. That’s exactly the right way to respond when someone tells you about a security problem. Back at the beginning, I was kinda worried that he might misunderstand, and think I was trying to hack him or something, and that I’d be instantly slam dunked into jail. But nope, he was fine with it. And now you, a sweet and honourable blog post browser, get to learn the dangers of posting your boarding pass by the realest of real-world examples.

During the call, I was completely in shock from the lost in the bush thing killing me instantly, and so on. But afterwards, when I looked at the quotes, I realised he just wanted to understand what had happened to him, and more about how technology works. That’s the same kind of curiosity I had, that started this whole surrealist three-act drama. That… wasn’t really what I was expecting from Tony Abbott, but it’s what I found.

The point of this story isn’t to say “wow Tony Abbott got hacked, what a dummy”. The point is that if someone famous can unknowingly post their boarding pass, anyone can.

Anyway that’s why I vote right wing now baybeeeee.

☑️ figure out whether i have done a crime

☑️ notify someone (The Government) that this happened

☑️ get permission to publish this here blog post

☑️ tell qantas about the security issue so they can fix it

Act 3: Closing credits

Wait no what the heck did I just read

Yeah look, reasonable.

tl; dr

Your boarding pass for a flight can sometimes be used to get your passport number. Don’t post your boarding pass or baggage receipt online, keep it as secret as your passport.

How it works

The Booking Reference on the boarding pass can be used to log in to the airline’s “Manage Booking” page, which sometimes contains the passport number, depending on the airline. I saw that Tony Abbott had posted a photo of his boarding pass on Instagram, and used it to get his passport details, phone number, and internal messages between Qantas flight staff about his flight booking.

Why did you do this?

One day, my friend who was also in “the group chat” said “I was thinking…. why didn’t I hack Tony Abbott? And I realised I guess it’s because you have more hubris”.

I was deeply complimented by this, but that’s not the point. The point is that you, too, can have hubris.

You know how they say to commit a crime (which once again I insist did not happen in my case) you need means, motive, and opportunity? Means is the ability to use right click > Inspect Element, motive is hubris, and opportunity is the dumb luck of having my friend message me the Instagram post.