
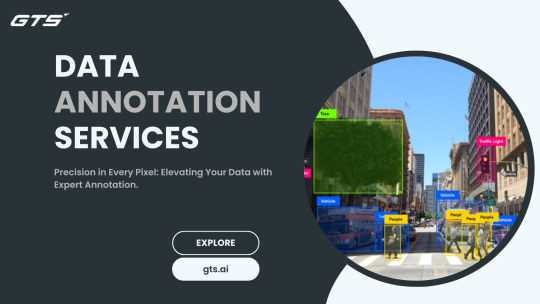

The Invisible Heroes of AI: Understanding the Significance of Data Annotation

The Foundation of Machine Learning:

At the heart of machine learning (ML) and AI lies the fundamental concept of learning from data. Whether it's recognizing objects in images, understanding speech, or making predictions, supervised AI algorithms typically require large amounts of labelled data to learn patterns that generalise and to make accurate decisions. This is where data annotation becomes indispensable. Human annotators assign labels, tags, or attributes to each data point, providing the context machines need to learn from and make sense of the information.

Methodologies of Data Annotation:

Data annotation encompasses various methodologies tailored to different types of data and AI tasks:

Image Annotation: In computer vision tasks, such as object detection and image segmentation, annotators outline objects, draw bounding boxes, or create pixel-level masks to highlight relevant features within images.

Text Annotation: Natural language processing (NLP) tasks rely on text annotation, where annotators label entities, sentiment, or parts of speech to train models for tasks like named entity recognition, sentiment analysis, and machine translation.

Audio Annotation: For speech recognition and audio processing, annotators transcribe spoken words, label audio segments, or identify key sounds within recordings.

Video Annotation: Video annotation involves annotating objects or actions within video frames, enabling AI systems to understand temporal relationships and dynamic scenes.
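To make the image-annotation case concrete, here is a minimal Python sketch of how a bounding-box label might be stored and how two annotators' boxes for the same object can be compared using intersection over union (IoU). The `BoundingBox` class, its field names, and the sample coordinates are illustrative assumptions, not a format prescribed by any particular annotation tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    """Hypothetical bounding-box label: a class name plus pixel corners."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


def iou(a: BoundingBox, b: BoundingBox) -> float:
    """Intersection over union of two boxes (0.0 = disjoint, 1.0 = identical)."""
    inter_w = min(a.x_max, b.x_max) - max(a.x_min, b.x_min)
    inter_h = min(a.y_max, b.y_max) - max(a.y_min, b.y_min)
    if inter_w <= 0 or inter_h <= 0:
        return 0.0
    intersection = inter_w * inter_h
    union = a.area() + b.area() - intersection
    return intersection / union


# Two annotators outline the same (made-up) car in one image.
box_a = BoundingBox("car", 10, 10, 110, 60)
box_b = BoundingBox("car", 20, 10, 120, 60)
overlap = iou(box_a, box_b)
```

A low IoU between annotators on the same object is one possible signal that the labelling guidelines need tightening; what counts as "low" depends on the project.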

The Human Element:

Despite advances in automation, data annotation remains a predominantly human-driven task. Annotators bring contextual understanding, domain expertise, and nuanced judgement that automated systems often lack. Their ability to interpret ambiguous data, adapt to diverse contexts, and refine annotations based on feedback is invaluable in ensuring the accuracy and relevance of labelled datasets.

Challenges in Data Annotation:

While data annotation is crucial, it's not without its challenges:

Scalability: As AI applications demand increasingly large and diverse datasets, scaling data annotation processes while maintaining quality and consistency becomes a significant challenge.

Subjectivity: Annotation tasks can be subjective, leading to inconsistencies among annotators. Establishing clear guidelines, providing adequate training, and implementing quality control measures are essential to mitigate this issue.

Cost and Time: Data annotation can be resource-intensive in terms of both time and cost, especially for specialised domains requiring domain expertise or intricate labelling.

Data Bias: Annotator biases can inadvertently introduce biases into labelled datasets, leading to skewed model outputs and ethical concerns. Addressing bias requires careful consideration of dataset composition, diversity, and fairness.
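The subjectivity challenge above can be quantified. One common measure of inter-annotator agreement is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sketch below is a plain-Python illustration for two annotators; the label lists are made-up sample data.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labelled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set of items")
    n = len(labels_a)
    # Fraction of items on which the two annotators agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each annotator's label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Made-up sentiment labels from two annotators on the same eight texts.
annotator_1 = ["pos", "pos", "neg", "neg", "pos", "neg", "pos", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg", "pos", "neg", "pos", "pos"]
kappa = cohens_kappa(annotator_1, annotator_2)
```

Here the annotators agree on six of eight items (75%), but because half of that agreement would be expected by chance, kappa comes out at 0.5.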

The Impact on AI Advancements:

Despite these challenges, data annotation remains a cornerstone of AI advancements. High-quality annotated datasets serve as the fuel that powers AI innovation, enabling the development of robust models with real-world applicability. From autonomous vehicles to personalised healthcare, the quality and diversity of annotated data directly influence the performance and reliability of AI systems, shaping their ability to address complex challenges and deliver meaningful solutions.

Conclusion:

In the ever-evolving landscape of AI, data annotation stands as a silent yet pivotal force driving progress and innovation. Behind every AI breakthrough lies a meticulously annotated dataset, crafted by human annotators who play a critical role in shaping the future of AI. As we continue to push the boundaries of what AI can achieve, let us not forget to acknowledge the unsung heroes – the data annotators – whose dedication and expertise fuel the journey towards AI excellence.

How Can GTS.AI Help You?

At Globose Technology Solutions Pvt Ltd (GTS), data collection is not just a service; it is our passion and our commitment to fueling the progress of AI and ML technologies. GTS.AI can leverage natural language processing capabilities to understand and interpret human language, which can be valuable in tasks such as text analysis, sentiment analysis, and language translation. GTS.AI can also be adapted to meet specific business needs: whether it's creating a unique user interface, developing a specialised chatbot, or addressing industry-specific challenges, the customization options are diverse.


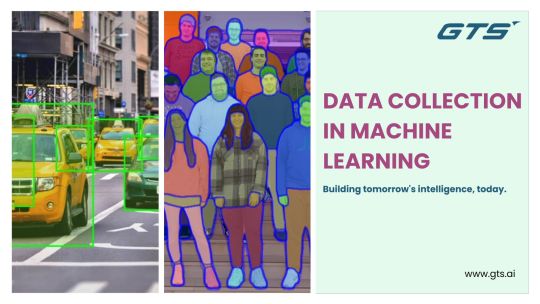

Unveiling the Power of Data Collection in Machine Learning: A Comprehensive Guide

Introduction:

Machine Learning (ML) has rapidly evolved, becoming a driving force behind numerous technological advancements. At the heart of this evolution lies the critical role of data collection. In the realm of ML, the saying "garbage in, garbage out" underscores the importance of high-quality, relevant data for building robust and effective models. This blog explores the intricacies of data collection in machine learning, shedding light on its significance, challenges, and best practices.

The Significance of Data in Machine Learning:

Foundation of Machine Learning Models:

Data is the bedrock upon which machine learning models are built. Whether it's for supervised learning, unsupervised learning, or reinforcement learning, having diverse, representative, and clean data is essential. The model learns patterns, correlations, and features from the data it is trained on.

Training and Testing:

Machine learning models require at least two sets of data: one for training and one for testing. The training data teaches the model to recognize patterns and make predictions, while the testing data, which must be kept separate from the training data, evaluates its performance on new, unseen examples. In practice, a third validation set is often held out for tuning. The quality of these datasets directly influences the model's accuracy and generalisation.
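As a minimal sketch of the split described above, the following plain-Python function shuffles a dataset and holds out a fraction for testing. The function name, the 80/20 ratio, and the toy examples are assumptions chosen for illustration; libraries such as scikit-learn offer more complete utilities.

```python
import random


def train_test_split(examples, test_fraction=0.2, seed=0):
    """Shuffle a copy of the data and hold out a fraction for testing."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(examples)
    # A fixed seed makes the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


# Toy dataset of (feature, label) pairs.
examples = [(i, i % 2) for i in range(10)]
train_set, test_set = train_test_split(examples, test_fraction=0.2, seed=42)
```

Keeping the two sets disjoint is the point of the exercise: any example that appears in both would make the test score look better than the model's real performance on unseen data.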

Bias and Fairness:

The data used to train models can inadvertently introduce biases. If the training data is skewed or not representative of real-world scenarios, the model may exhibit biased behaviour. Ensuring fairness and mitigating bias are critical aspects of responsible data collection.

Challenges in Data Collection for Machine Learning:

Quality vs. Quantity:

Striking the right balance between the volume of data and its quality is challenging. While more data can enhance model performance, it must be relevant, accurate, and diverse. Collecting and curating large datasets without compromising quality is an ongoing challenge.

Labelling and Annotation:

Supervised learning relies on labelled data, which often requires human annotation. This process can be time-consuming, expensive, and subjective. Ensuring consistency and accuracy in labelling is crucial for the model's success.

Privacy Concerns:

As data collection becomes more pervasive, privacy concerns come to the forefront. Balancing the need for data with ethical considerations and privacy regulations is an ongoing challenge in the ML community.

Best Practices for Data Collection in Machine Learning:

Clearly Defined Objectives:

Clearly define the objectives of your machine learning project before embarking on data collection. Understanding what you want the model to achieve helps in collecting relevant data.

Diverse and Representative Samples:

Ensure that your dataset is diverse and representative of the population or scenarios you intend to apply the model to. This helps in building a more robust and generalizable model.
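One simple way to check representativeness is to compare each group's share in the dataset with its share in a reference population. The sketch below assumes you already have a group attribute per example and trusted reference shares; the group names and numbers are invented for illustration.

```python
from collections import Counter


def representation_gaps(sample_groups, reference_shares):
    """Return (share in sample - share in reference) for each reference group."""
    if not sample_groups:
        raise ValueError("sample_groups must not be empty")
    counts = Counter(sample_groups)
    total = len(sample_groups)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


# Invented example: the sample over-represents urban users.
sample = ["urban"] * 80 + ["rural"] * 20
reference = {"urban": 0.55, "rural": 0.45}
gaps = representation_gaps(sample, reference)
```

A positive gap means the group is over-represented in the sample and a negative gap means it is under-represented. How large a gap is acceptable is a judgement call for each project.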

Data Cleaning and Preprocessing:

Invest time in cleaning and preprocessing the data. Address missing values, outliers, and inconsistencies to improve the overall quality of the dataset.
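The cleaning steps mentioned above can be sketched for a single numeric column. This example fills missing values with the median and clips outliers to the conventional 1.5 x IQR fences. Both choices are assumptions made for illustration; dropping rows, or using domain-specific limits, may suit a given dataset better.

```python
import statistics


def clean_column(values, iqr_factor=1.5):
    """Impute missing entries with the median, then clip values to the IQR fences."""
    present = [v for v in values if v is not None]
    if len(present) < 2:
        raise ValueError("need at least two observed values")
    median = statistics.median(present)
    # quantiles(n=4) returns the three quartile cut points (Python 3.8+).
    q1, _, q3 = statistics.quantiles(present, n=4)
    low = q1 - iqr_factor * (q3 - q1)
    high = q3 + iqr_factor * (q3 - q1)
    filled = [median if v is None else v for v in values]
    return [min(max(v, low), high) for v in filled]


# Made-up ages with one missing entry and one implausible value.
ages = [34, 29, None, 41, 38, 250, 31]
cleaned = clean_column(ages)
```

Note that in a sample this small the outlier itself inflates the upper quartile, so the clipped value is still large; with more data the fences become more meaningful.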

Continuous Monitoring and Updating:

Data is dynamic, and its characteristics may change over time. Continuously monitor and update your dataset to ensure that the model remains relevant and accurate as new patterns emerge.
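Monitoring for change can start with a simple distribution comparison. The sketch below computes the Population Stability Index (PSI), one commonly used drift measure, between a baseline sample and a newer sample of the same feature. The bin edges and sample values are assumptions for illustration, and the threshold at which drift should trigger a dataset refresh is left to the project.

```python
import math


def population_stability_index(baseline, current, edges):
    """PSI between two samples using shared bin edges (higher means more drift)."""
    def shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            # Bin index = number of edges the value has reached or passed.
            counts[sum(v >= e for e in edges)] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    base, cur = shares(baseline), shares(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


# Made-up feature values at collection time and some months later.
baseline = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
current = [6, 7, 8, 9, 10, 11, 12, 13, 14, 15]
psi_same = population_stability_index(baseline, baseline, edges=[4, 8])
psi_shifted = population_stability_index(baseline, current, edges=[4, 8])
```

Identical distributions give a PSI of zero, while the shifted sample gives a clearly positive value.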

Conclusion:

In the ever-expanding landscape of machine learning, the role of data collection cannot be overstated. The success of ML models hinges on the quality, relevance, and diversity of the data they are trained on. By understanding the significance of data, acknowledging the challenges, and adhering to best practices, the machine learning community can harness the true potential of this transformative technology responsibly and ethically.



Unlocking Insights: Exploring the World of E-commerce Product Datasets

Introduction:

In the vast landscape of e-commerce, data plays a pivotal role in shaping business strategies, enhancing customer experiences, and driving innovation. E-commerce product datasets serve as treasure troves of information, offering a wealth of insights that businesses can leverage to gain a competitive edge. In this blog, we'll delve into the significance of e-commerce product datasets, exploring their applications, challenges, and the potential they hold for businesses.

The Power of E-commerce Product Datasets:

Understanding Customer Behaviour:

E-commerce product datasets provide a window into customer behaviour. By analysing purchase histories, browsing patterns, and product interactions, businesses can gain valuable insights into what customers want, how they shop, and what influences their purchasing decisions.

Personalization and Recommendations:

Personalization is at the heart of successful e-commerce platforms. With robust datasets, businesses can create personalised experiences for users, offering tailored product recommendations based on historical data, preferences, and demographics. This not only enhances customer satisfaction but also boosts conversion rates.
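As a rough illustration of recommendations driven by historical data, the sketch below counts which products are bought together and suggests the most frequent companions. It is a deliberately simple co-purchase heuristic rather than a description of how any particular platform works, and the order data is invented.

```python
from collections import Counter, defaultdict
from itertools import permutations


def build_co_purchase_counts(orders):
    """Count how often each pair of products appears in the same order."""
    co_counts = defaultdict(Counter)
    for order in orders:
        # set() ensures a product repeated within one order is counted once.
        for item, other in permutations(set(order), 2):
            co_counts[item][other] += 1
    return co_counts


def recommend(co_counts, item, k=2):
    """Return up to k products most often bought together with `item`."""
    return [other for other, _ in co_counts[item].most_common(k)]


# Invented order history.
orders = [
    ["tent", "sleeping bag", "stove"],
    ["tent", "sleeping bag"],
    ["tent", "lantern"],
    ["stove", "fuel"],
]
co_counts = build_co_purchase_counts(orders)
suggestions = recommend(co_counts, "tent", k=1)
```

Production recommenders usually combine many more signals, such as browsing behaviour and preferences, but the co-occurrence idea is a useful baseline.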

Inventory Management:

Efficient inventory management is crucial for e-commerce success. By analysing product datasets, businesses can optimise stock levels, predict demand patterns, and minimise overstock or stockouts. This, in turn, helps in reducing costs and improving overall operational efficiency.
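To show how sales history can feed stock decisions, here is a minimal sketch that forecasts daily demand with a moving average and derives a reorder point. The window size, lead time, safety stock, and sales figures are all assumed values; real demand forecasting typically also accounts for seasonality and promotions.

```python
def moving_average_forecast(daily_sales, window=7):
    """Forecast next-day demand as the mean of the last `window` days."""
    if window <= 0 or len(daily_sales) < window:
        raise ValueError("need at least `window` days of sales history")
    return sum(daily_sales[-window:]) / window


def reorder_point(daily_demand, lead_time_days, safety_stock):
    """Stock level at which a new order should be placed."""
    return daily_demand * lead_time_days + safety_stock


# Invented unit sales for one product over ten days.
sales = [12, 15, 11, 14, 13, 16, 17, 15, 18, 14]
forecast = moving_average_forecast(sales, window=5)
threshold = reorder_point(forecast, lead_time_days=3, safety_stock=10)
```

With these numbers the forecast is 16 units per day, so stock should be reordered once it falls to 58 units (three days of expected demand plus the safety stock).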

Competitor Analysis:

E-commerce datasets enable businesses to keep a close eye on the competition. Analysing competitor pricing, product assortments, and customer reviews provides valuable insights that can inform pricing strategies, product positioning, and marketing campaigns.

Fraud Detection and Security:

Security is a top concern in e-commerce. Transaction and account-activity records linked to product datasets can be used to detect fraudulent activity, such as unauthorised transactions or account takeovers. Machine learning algorithms can analyse these patterns and flag suspicious behaviour, helping businesses safeguard their platforms and protect their customers.
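Flagging suspicious behaviour often begins with simple statistical checks before any machine learning model is involved. The sketch below marks order amounts whose modified z-score (based on the median and the median absolute deviation) exceeds a threshold. The 3.5 cut-off is a commonly cited default and the amounts are invented; a real fraud system would draw on many more signals than order value.

```python
import statistics


def flag_unusual_amounts(amounts, threshold=3.5):
    """Return indices whose modified z-score exceeds the threshold."""
    median = statistics.median(amounts)
    # Median absolute deviation: a spread measure that outliers barely affect.
    mad = statistics.median(abs(a - median) for a in amounts)
    if mad == 0:
        return []  # no spread to measure against
    return [
        i for i, a in enumerate(amounts)
        if 0.6745 * abs(a - median) / mad > threshold
    ]


# Invented order values for one account; one is far larger than the rest.
amounts = [25.0, 30.5, 27.0, 22.0, 31.0, 980.0, 26.5, 29.0]
flagged = flag_unusual_amounts(amounts)
```

Flagged orders would normally go to a review step rather than being blocked outright, since unusual does not necessarily mean fraudulent.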

Challenges in Handling E-commerce Product Datasets:

Data Quality and Cleaning:

E-commerce datasets can be vast and diverse, often requiring thorough cleaning and preprocessing. Inaccuracies in product information, missing values, or inconsistencies can impact the reliability of analyses and predictions.

Privacy Concerns:

E-commerce datasets often contain sensitive customer information. Striking the right balance between utilising data for business insights and respecting customer privacy is a challenge that businesses must navigate carefully.

Data Integration:

E-commerce platforms generate data from various sources—sales, customer interactions, marketing campaigns, etc. Integrating these diverse datasets into a cohesive and meaningful analysis can be complex but is essential for obtaining a holistic view.

Scalability:

As businesses grow, so does the volume of data generated. Scalability becomes a challenge, and infrastructure must be robust enough to handle increasing data loads without compromising on performance.

Conclusion:

In the dynamic world of e-commerce, harnessing the potential of product datasets is essential for staying competitive and meeting customer expectations. By understanding customer behaviour, optimising operations, and embracing data-driven decision-making, businesses can unlock new opportunities and pave the way for sustained growth. However, it's crucial to address the challenges associated with data quality, privacy, integration, and scalability to fully leverage the power of e-commerce product datasets. As technology continues to advance, businesses that master the art of data utilisation will be better positioned to thrive in the ever-evolving e-commerce landscape.


Navigating the Landscape of Medical Data Collection: Balancing Innovation and Privacy

Introduction:

In the era of advanced technology and data-driven healthcare, the collection and utilisation of medical data have become integral components in shaping the future of medicine. From electronic health records (EHRs) to wearable devices, medical data collection plays a pivotal role in enhancing patient care, advancing medical research, and improving overall health outcomes. However, as we embrace these innovations, it is crucial to strike a delicate balance between harnessing the potential of medical data and safeguarding patient privacy.

The Evolution of Medical Data Collection:

Medical data collection has undergone a profound transformation over the years. Traditional paper-based records have given way to electronic systems, enabling healthcare providers to store and access patient information efficiently. Furthermore, the advent of wearable devices, remote monitoring tools, and mobile health apps has ushered in an era of real-time, continuous data collection.

Key Benefits of Medical Data Collection:

Improved Patient Care:

EHRs streamline communication among healthcare providers, ensuring that relevant medical information is readily available.

Remote monitoring devices empower patients to actively participate in their care, enabling healthcare professionals to intervene promptly when necessary.

Advancements in Medical Research:

Large-scale data sets facilitate research initiatives, helping scientists identify patterns, correlations, and potential breakthroughs.

Data-driven research accelerates the development of personalised medicine, tailoring treatments to individual patients based on their unique characteristics.

Enhanced Public Health:

Surveillance systems utilising medical data assist in tracking and managing the spread of infectious diseases.

Population-level data analysis contributes to the development of targeted public health interventions.

Challenges and Ethical Considerations:

Privacy Concerns:

The collection of sensitive health information raises concerns about unauthorized access and potential breaches.

Striking a balance between data access for healthcare professionals and protecting patient privacy remains a significant challenge.

Informed Consent:

Ensuring that patients fully understand and consent to the use of their data is crucial. This becomes more complex with the increasing volume and variety of data sources.

Data Security:

The digital nature of medical data makes it susceptible to cyber threats. Robust security measures are imperative to protect against unauthorized access and data breaches.
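One small building block among such security measures is pseudonymization: replacing direct identifiers with tokens before data is shared for analysis. The sketch below uses a keyed hash (HMAC-SHA256) from Python's standard library. The record fields and the key are hypothetical, and pseudonymization on its own does not make data anonymous or guarantee regulatory compliance.

```python
import hashlib
import hmac


def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (same input, same token)."""
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical key for illustration only; a real key belongs in a secrets manager.
DEMO_KEY = b"replace-with-a-randomly-generated-key"

# Hypothetical record with a made-up identifier.
record = {"patient_id": "MRN-001234", "heart_rate": 72}
safe_record = {**record, "patient_id": pseudonymize(record["patient_id"], DEMO_KEY)}
```

Because the same identifier always maps to the same token under a given key, records for one patient can still be linked for research, while anyone without the key cannot recompute the mapping from an identifier.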

Data Ownership and Sharing:

Determining who owns the medical data and how it can be responsibly shared among different stakeholders, including researchers, healthcare providers, and technology companies, requires careful consideration.

Regulatory Frameworks and Best Practices:

HIPAA Compliance:

The Health Insurance Portability and Accountability Act (HIPAA) in the United States sets standards for the secure handling of protected health information (PHI).

GDPR and Global Standards:

The General Data Protection Regulation (GDPR) in the European Union establishes comprehensive data protection rules, influencing global standards for data privacy.

Transparency and Accountability:

Institutions collecting medical data should adopt transparent practices and be accountable for the ethical use of the information.

Conclusion:

Medical data collection is a powerful tool that has the potential to revolutionize healthcare, driving advancements in patient care, research, and public health. However, it is essential to approach this transformation with a commitment to ethical practices, privacy protection, and regulatory compliance. By navigating these challenges responsibly, we can harness the benefits of medical data collection while respecting the rights and privacy of individuals, ultimately shaping a healthier and more connected future.



Unveiling the Feast: A Comprehensive Guide to Food Segmentation Datasets

Introduction:

In the ever-evolving landscape of computer vision and artificial intelligence, one of the most captivating applications is food segmentation. As the demand for smart solutions in the food industry continues to rise, the need for robust and diverse food segmentation datasets becomes increasingly essential. This blog explores the significance of food segmentation datasets and introduces some of the noteworthy datasets that are fueling advancements in this exciting field.

Why Food Segmentation Datasets Matter:

Food segmentation involves the partitioning of images or videos into distinct regions, with each region corresponding to a specific food item. This process plays a pivotal role in various applications, such as automated food recognition, dietary analysis, and restaurant menu digitization. To train accurate and reliable models for these applications, a high-quality and diverse food segmentation dataset is indispensable.

Key Characteristics of an Ideal Food Segmentation Dataset:

Diversity of Food Types:

A comprehensive dataset should cover a wide range of cuisines, dishes, and food types to ensure that the model is versatile and capable of recognizing various foods.

Varied Backgrounds and Settings:

Food is often photographed in different environments, from restaurants to home kitchens. A dataset should include images with diverse backgrounds, lighting conditions, and perspectives to enhance the model's adaptability.

Annotated Ground Truth:

Accurate annotation is crucial for training machine learning models. A reliable food segmentation dataset should provide pixel-level annotations, indicating the boundaries of individual food items in the images.
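Pixel-level annotations are usually stored as a mask in which every pixel holds a class ID. The sketch below compares a predicted mask against the ground truth for one class using intersection over union (IoU). The tiny 4x4 masks and the class IDs (0 = background, 1 = rice, 2 = curry) are invented for illustration.

```python
def mask_iou(mask_a, mask_b, class_id):
    """IoU for one class between two same-sized label masks (lists of rows)."""
    intersection = union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            in_a, in_b = a == class_id, b == class_id
            intersection += in_a and in_b
            union += in_a or in_b
    # Class absent from both masks: treated as perfect agreement (a convention chosen here).
    return intersection / union if union else 1.0


ground_truth = [
    [0, 1, 1, 0],
    [0, 1, 1, 2],
    [0, 0, 2, 2],
    [0, 0, 2, 2],
]
prediction = [
    [0, 1, 1, 0],
    [0, 1, 2, 2],
    [0, 0, 2, 2],
    [0, 0, 0, 2],
]
rice_iou = mask_iou(ground_truth, prediction, class_id=1)
curry_iou = mask_iou(ground_truth, prediction, class_id=2)
```

Averaging the per-class scores gives the mean IoU figure often reported for segmentation models.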

Large Scale:

To train deep learning models effectively, a dataset should be large enough to capture the complexity and diversity of real-world scenarios. This enables the model to generalize well to new, unseen data.

Open Access:

Accessibility is vital for fostering research and innovation. Openly sharing food segmentation datasets encourages collaboration and accelerates progress in the field.

Noteworthy Food Segmentation Datasets:

Food-101:

Overview: Food-101 is a widely used dataset that consists of 101,000 images across 101 food categories.

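Features: It covers a diverse range of dishes, but its labels are image-level categories rather than pixel-level masks. This makes it suited to food recognition (classification) rather than to training segmentation models directly.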

Foodseg:

Overview: Foodseg is a dataset specifically curated for food segmentation. It includes high-resolution images with pixel-level annotations for various foods.

Features: With a focus on segmentation, Foodseg is valuable for training models dedicated to precisely delineating food boundaries.

UNICT-FD889:

Overview: UNICT-FD889 is a dataset of 889 distinct dishes captured in roughly 3,600 images.

Features: Each dish was photographed several times under varying conditions, which makes the dataset a useful resource for food recognition and retrieval research. It does not provide pixel-level segmentation masks.

Conclusion:

As the field of food segmentation continues to flourish, the role of high-quality datasets cannot be overstated. These datasets not only fuel research and development but also pave the way for innovative solutions in the realms of food recognition, dietary analysis, and beyond. By staying abreast of the latest datasets and contributing to their growth, researchers and developers can collectively enhance the capabilities of food segmentation models, bringing us closer to a future where technology seamlessly interacts with the culinary world.


Text



Navigating the World of Wake Words and Voice Commands in US English

Introduction:

In the ever-evolving landscape of technology, voice-activated devices have become an integral part of our daily lives. From virtual assistants to smart home devices, the convenience of interacting with technology through voice commands has reshaped the way we live. At the heart of this seamless interaction lies the concept of "wake words." In this blog post, we'll delve into the fascinating world of wake words and voice commands, with a specific focus on US English.

Understanding Wake Words:

Wake words, also known as trigger words or hot words, are specific phrases or keywords that initiate the activation of voice-activated systems. These words serve as a cue for devices to start listening to and processing voice input. In the realm of US English, some commonly used wake words include "Hey Siri," "Okay Google," and "Alexa."

The Role of Wake Words in Voice Recognition:

Voice recognition technology relies heavily on wake words to distinguish between regular conversation and commands directed at the device. The utilisation of wake words ensures that the device doesn't respond to every random conversation but remains attentive and ready to act when the designated trigger word is detected.
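The gating idea can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: it works on already-transcribed text, whereas real devices detect the wake word in the audio signal itself, and the function name `extract_command` is hypothetical.

```python
# Wake words taken from the examples in this post. Matching on text is a
# simplification: real devices detect wake words in the audio itself.
WAKE_WORDS = ("alexa", "hey siri", "okay google")


def extract_command(utterance, wake_words=WAKE_WORDS):
    """Return the command that follows a leading wake word, or None."""
    text = utterance.strip().lower()
    for wake in wake_words:
        if text == wake:
            return ""  # wake word alone: the device listens, no command yet
        # Require a non-alphanumeric character after the wake word so that
        # "alexander" is not mistaken for "alexa".
        if text.startswith(wake) and not text[len(wake)].isalnum():
            return text[len(wake):].strip(" ,.!?")
    return None  # ordinary conversation: ignore it


print(extract_command("Alexa, set a timer for 10 minutes"))
print(extract_command("We talked about Alexa at dinner"))
```

The first call yields a command to act on, while the second returns `None` because the wake word does not open the utterance.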

Popular Voice Command Platforms:

Amazon Alexa:

"Alexa" serves as the wake word for Amazon's voice-activated assistant.

Users can issue commands like "Alexa, set a timer for 10 minutes" or ask questions such as "Alexa, what's the weather like today?"

Apple Siri:

Apple users are familiar with the wake word "Hey Siri," activating the voice assistant on iPhones, iPads, and other Apple devices.

Siri assists with tasks like sending messages, making calls, and providing information.

Google Assistant:

"Hey Google" and "OK Google" are the wake phrases for Google Assistant, which is accessible on various devices, including smartphones and smart speakers.

Users can ask Google Assistant for directions, run search queries, and control smart home devices.

Enhancements in Wake Word Technology:

As technology advances, researchers and developers continually work to improve wake word detection and accuracy. Machine learning algorithms play a pivotal role in enhancing the performance of wake word systems, enabling them to adapt to diverse accents, variations in pronunciation, and ambient noise levels.
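One common way to make detection more robust to noise is to smooth a model's per-frame confidence scores before comparing them with a threshold. The sketch below assumes some model has already produced a score between 0 and 1 for each audio frame; the window size, the threshold, and the function name `detect_wake_word` are illustrative choices, not values taken from any particular product.

```python
from collections import deque


def detect_wake_word(frame_scores, window=5, threshold=0.8):
    """Return the index of the first frame at which the moving average of
    the last `window` scores reaches `threshold`, or None if it never does."""
    recent = deque(maxlen=window)
    for index, score in enumerate(frame_scores):
        recent.append(score)
        # Only decide once a full window of frames has been observed.
        if len(recent) == window and sum(recent) / window >= threshold:
            return index
    return None


# A single noisy spike is ignored; a sustained run of high scores triggers.
spike = [0.1, 0.99, 0.1, 0.1, 0.1, 0.1]
sustained = [0.1, 0.95, 0.1, 0.1, 0.1, 0.9, 0.9, 0.9, 0.9, 0.9]
print(detect_wake_word(spike))
print(detect_wake_word(sustained))
```

Averaging over several frames trades a little latency for fewer accidental activations, which also bears on the privacy concerns discussed next.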

Privacy Concerns and Security Measures:

While voice-activated systems offer unparalleled convenience, they also raise concerns about privacy and security. As these devices constantly listen for wake words, there is a potential risk of unintentional activation and the recording of private conversations. To address these concerns, manufacturers implement measures such as local processing of wake words and the option for users to review and delete voice recordings.

Conclusion:

Wake words and voice commands have revolutionised the way we interact with technology, providing a hands-free and seamless experience. As these technologies continue to evolve, it's crucial to strike a balance between convenience and privacy. Users can anticipate further advancements in wake word technology, ensuring even more accurate and responsive interactions with voice-activated devices in the future. In the realm of US English, the journey of wake words and voice commands is far from over, promising exciting developments on the horizon.


Creating a Comprehensive Menu Dataset: A Guide

In today's digital age, data plays a crucial role in various industries, and the food and hospitality sector is no exception. Restaurants, cafes, and food delivery services leverage datasets to enhance customer experiences, streamline operations, and make informed business decisions. One fundamental aspect of this data is the menu dataset, which contains information about the items offered by an establishment. In this guide, we'll explore how to create a comprehensive menu dataset for your business.

Define Dataset Structure

a. Item Information

Item ID: A unique identifier for each menu item.

Name: The name of the menu item.

Description: A brief description of the item.

Category: The category to which the item belongs (e.g., appetizer, main course, dessert).

Price: The cost of the item.

b. Dietary Information

Ingredients: A list of ingredients used in the preparation.

Allergens: Any allergens present in the dish.

Calories: The estimated calorie count.

c. Availability

Availability Status: Indicates if the item is currently available or not.

Available Time: The hours during which the item is offered, if it is available only at specific times.
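The fields listed above could be captured in a single record type. The following is a minimal sketch, not a prescribed schema: the field names, the choice to store the price in cents, and the sample item are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class MenuItem:
    # a. Item information
    item_id: str
    name: str
    description: str
    category: str            # e.g. "appetizer", "main course", "dessert"
    price_cents: int         # stored in cents to avoid floating-point rounding
    # b. Dietary information
    ingredients: List[str] = field(default_factory=list)
    allergens: List[str] = field(default_factory=list)
    calories: Optional[int] = None          # estimated; None if unknown
    # c. Availability
    is_available: bool = True
    available_hours: Optional[str] = None   # e.g. "11:00-15:00"; None = all day

    def __post_init__(self):
        if self.price_cents < 0:
            raise ValueError("price_cents must not be negative")


# Illustrative item, not real menu data.
example = MenuItem(
    item_id="M001",
    name="Margherita Pizza",
    description="Tomato, mozzarella, and basil on a thin crust.",
    category="main course",
    price_cents=1200,
    ingredients=["flour", "tomato", "mozzarella", "basil"],
    allergens=["gluten", "milk"],
    calories=850,
)
print(example.name, example.price_cents / 100)
```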

Data Collection

a. Menu Item Details

Gather information about each menu item, ensuring accuracy and completeness.

Collaborate with chefs and kitchen staff to obtain details about ingredients and preparation methods.

b. Pricing Strategy

Consider factors like ingredient costs, market trends, and competitor pricing.

Decide on a pricing strategy that aligns with your business goals.

c. Allergen and Nutritional Information

Work with nutritionists to gather accurate data on allergens and nutritional content.

Clearly communicate this information to customers for transparency.

Data Management

a. Database Setup

Choose a suitable database system (e.g., a relational SQL database or a NoSQL store) to store your menu dataset.

Index the fields you query most often, such as item ID and category, for quick retrieval.
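As one possible starting point, the sketch below uses SQLite through Python's built-in `sqlite3` module, with an index on the category column. The table name, column names, and sample rows are assumptions for illustration, and a production system may well call for a different database.

```python
import sqlite3


def create_menu_db():
    """Create an in-memory menu database with an index on category."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """
        CREATE TABLE menu_items (
            item_id      TEXT PRIMARY KEY,
            name         TEXT NOT NULL,
            category     TEXT NOT NULL,
            price_cents  INTEGER NOT NULL CHECK (price_cents >= 0),
            is_available INTEGER NOT NULL DEFAULT 1
        )
        """
    )
    # The primary key is indexed automatically; category needs its own index.
    conn.execute(
        "CREATE INDEX idx_menu_items_category ON menu_items (category)"
    )
    return conn


conn = create_menu_db()
conn.executemany(
    "INSERT INTO menu_items (item_id, name, category, price_cents) "
    "VALUES (?, ?, ?, ?)",
    [
        ("M001", "Margherita Pizza", "main course", 1200),
        ("D001", "Tiramisu", "dessert", 650),
        ("D002", "Lemon Sorbet", "dessert", 450),
    ],
)
desserts = conn.execute(
    "SELECT name FROM menu_items WHERE category = ? ORDER BY name",
    ("dessert",),
).fetchall()
print(desserts)
```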

b. Regular Updates

Keep the dataset up-to-date with seasonal changes, new additions, and removals.

Implement a system to track menu updates and modifications.

Data Integration

a. POS Systems

Integrate the menu dataset with Point of Sale (POS) systems for seamless order processing.

Ensure real-time synchronisation between the menu dataset and the POS system.

b. Online Platforms

If applicable, sync the dataset with online platforms to display accurate information on websites and food delivery apps.

Provide APIs for third-party platforms to access your menu data.
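A menu API ultimately has to return the dataset in a format that other systems can parse, and JSON is a common choice. The sketch below shows only the serialisation step, under the assumption that items are held as dictionaries with the hypothetical keys shown; the web framework, authentication, and versioning are left out.

```python
import json


def menu_to_json(items):
    """Serialise the currently available items for a third-party platform."""
    payload = {
        "items": [
            {
                "item_id": item["item_id"],
                "name": item["name"],
                "category": item["category"],
                "price_cents": item["price_cents"],
                "allergens": item.get("allergens", []),
            }
            for item in items
            # Unavailable items are hidden from external platforms.
            if item.get("is_available", True)
        ]
    }
    return json.dumps(payload, indent=2)


# Illustrative data, not a real menu.
sample_items = [
    {"item_id": "M001", "name": "Margherita Pizza", "category": "main course",
     "price_cents": 1200, "allergens": ["gluten", "milk"]},
    {"item_id": "D001", "name": "Tiramisu", "category": "dessert",
     "price_cents": 650, "is_available": False},
]
print(menu_to_json(sample_items))
```

Filtering on availability at this step keeps delivery apps from listing items the kitchen cannot currently serve.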

Data Security and Compliance

a. Customer Privacy

Implement measures to protect customer data, especially if you collect and store order history.

Comply with the data protection regulations that apply in your region, such as GDPR or CCPA.

b. Regular Audits

Conduct regular audits to identify and address potential security vulnerabilities.

Keep abreast of changes in data protection laws and update your practices accordingly.

Data Analytics

a. Customer Preferences

Analyse customer order history to identify popular items and trends.

Use this information to optimise menu offerings.

b. Sales Performance

Track sales performance of each menu item to understand profitability.

Adjust the menu based on sales data to maximise revenue.
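Both analyses can start from a simple pass over the order history. The sketch below assumes each order line is a tuple of item ID, quantity, and unit price in cents, which is a hypothetical format. Note that it reports revenue rather than profit; judging profitability would also require cost data for each item.

```python
from collections import Counter, defaultdict


def summarise_orders(order_lines):
    """Return (units sold per item, revenue in cents per item)."""
    units = Counter()
    revenue = defaultdict(int)
    for item_id, quantity, unit_price_cents in order_lines:
        units[item_id] += quantity
        revenue[item_id] += quantity * unit_price_cents
    return units, dict(revenue)


# Illustrative order history, not real sales data.
order_lines = [
    ("M001", 2, 1200),
    ("D001", 1, 650),
    ("M001", 1, 1200),
]
units, revenue = summarise_orders(order_lines)
print(units.most_common(1))  # most popular item by units sold
print(revenue)
```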

Conclusion

A well-structured and managed menu dataset is a valuable asset for any food establishment. It not only facilitates smooth operations but also enables data-driven decision-making. By following the steps outlined in this guide, you can create a comprehensive menu dataset that serves as a foundation for success in the competitive food and hospitality industry. Keep adapting and refining your dataset to meet the evolving needs of your business and customers.


Exploring the Depths of Medical Imaging: A Comprehensive Guide to CT Scan Image Datasets

Introduction:

In the ever-evolving landscape of healthcare and technology, medical imaging plays a pivotal role in diagnosis, treatment planning, and monitoring patients. Computed Tomography (CT) scans, in particular, have become indispensable tools for healthcare professionals, providing detailed cross-sectional images of the human body. Behind the scenes, the power of CT scans is amplified by the availability of vast datasets, which serve as the backbone for developing and training cutting-edge algorithms in the field of medical imaging.

Understanding CT Scan Image Datasets:

A CT scan image dataset is a curated collection of anonymized images acquired through computed tomography. These datasets serve as invaluable resources for researchers, data scientists, and developers working on enhancing diagnostic accuracy, automating image analysis, and advancing medical research. The datasets typically include images of various anatomical structures and conditions, offering a diverse range of cases to study and analyse.

Key Components of CT Scan Image Datasets:

Anatomical Coverage:

Datasets often cover a wide range of anatomical regions, including the head, chest, abdomen, and pelvis. This diversity allows researchers to focus on specific areas of interest or develop algorithms that can analyse multiple anatomical regions simultaneously.

Pathological Conditions:

CT scan datasets encompass a variety of medical conditions such as tumours, fractures, infections, and vascular abnormalities. These pathological cases contribute to the development of algorithms capable of identifying and categorising abnormalities within the human body.

Patient Diversity:

To ensure the robustness of algorithms, datasets aim to include images from a diverse patient population, considering factors such as age, gender, and ethnicity. This inclusivity enhances the generalizability of the models across different patient demographics.

Image Modalities:

Some datasets may include multi-modal images, combining CT scans with other imaging modalities like positron emission tomography (PET) or magnetic resonance imaging (MRI). This integration provides a more comprehensive view of the patient's condition.

Applications of CT Scan Image Datasets:

Algorithm Development:

Researchers leverage CT scan datasets to train and validate algorithms for image segmentation, object detection, and disease classification. These algorithms contribute to automating the analysis of CT scans, reducing the workload on healthcare professionals and improving diagnostic accuracy.
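When validating a segmentation algorithm, a predicted mask is typically compared with an expert-annotated reference mask. The text does not name a metric; the Dice coefficient is one widely used option, sketched here for binary masks flattened into lists of 0s and 1s. The function name and the convention of returning 1.0 for two empty masks are choices made for this illustration.

```python
def dice_coefficient(predicted, reference):
    """Dice = 2 * |A and B| / (|A| + |B|) for two binary masks of equal size."""
    if len(predicted) != len(reference):
        raise ValueError("masks must have the same number of pixels")
    overlap = sum(1 for p, r in zip(predicted, reference) if p and r)
    total = sum(1 for p in predicted if p) + sum(1 for r in reference if r)
    if total == 0:
        return 1.0  # both masks empty: treated here as perfect agreement
    return 2 * overlap / total


# Tiny illustrative masks (a 2x2 image flattened row by row).
predicted_mask = [1, 1, 0, 0]
reference_mask = [1, 0, 0, 0]
print(dice_coefficient(predicted_mask, reference_mask))
```

A value of 1.0 means the masks coincide exactly, and 0.0 means they do not overlap at all.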

Clinical Decision Support:

CT scan image datasets are instrumental in the development of clinical decision support systems. These systems assist radiologists in making more informed decisions by providing additional information and highlighting potential abnormalities in the images.

Medical Research:

The wealth of data in CT scan image datasets facilitates medical research, allowing scientists to explore patterns, trends, and correlations within large patient cohorts. This research may lead to new insights into disease progression, treatment responses, and patient outcomes.

Challenges and Considerations:

Data Privacy and Security:

Handling patient data requires strict adherence to privacy regulations. Dataset curators and users must ensure that sensitive information is adequately protected and that images and their metadata are anonymized before they are shared, in line with both legal requirements and ethical standards.
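As a rough illustration of one step in that process, the sketch below removes directly identifying fields from a scan's metadata and replaces the patient ID with a salted hash. The field names are hypothetical, and this is pseudonymisation of metadata only: real de-identification must follow the applicable regulations and also deal with identifiers embedded in the image data itself.

```python
import hashlib

# Hypothetical metadata keys treated as directly identifying.
IDENTIFYING_FIELDS = {"patient_name", "birth_date", "address", "referring_physician"}


def pseudonymise(record, salt):
    """Drop identifying fields and replace patient_id with a salted hash."""
    cleaned = {k: v for k, v in record.items() if k not in IDENTIFYING_FIELDS}
    digest = hashlib.sha256(
        (salt + str(record["patient_id"])).encode("utf-8")
    ).hexdigest()
    # The same patient always maps to the same pseudonym, so scans from one
    # patient stay linked without exposing the original ID.
    cleaned["patient_id"] = digest[:16]
    return cleaned


# Illustrative record, not real patient data.
record = {
    "patient_id": "12345",
    "patient_name": "Jane Doe",
    "birth_date": "1970-01-01",
    "modality": "CT",
    "body_region": "chest",
}
print(pseudonymise(record, salt="keep-this-secret"))
```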

Data Quality and Annotation:

The accuracy of algorithms heavily depends on the quality of the dataset. Proper annotation and validation of images are crucial to training robust models capable of generalising to real-world scenarios.

Conclusion:

CT scan image datasets are indispensable resources that drive innovation in medical imaging and healthcare. As technology continues to advance, these datasets will play a pivotal role in the development of sophisticated algorithms and tools that enhance diagnostic capabilities, improve patient outcomes, and contribute to the overall advancement of medical science. As we move forward, collaboration between healthcare professionals, researchers, and data scientists will be key to unlocking the full potential of CT scan image datasets for the betterment of patient care.


The Evolution of Speech Recognition: Empowering Voice Assistants in Our Daily Lives

Introduction:

In the era of rapid technological advancements, voice assistants have emerged as indispensable companions, transforming the way we interact with our devices. At the heart of this revolution lies the sophisticated technology of speech recognition, a field that has witnessed remarkable progress in recent years. In this blog, we delve into the fascinating world of speech recognition, exploring its evolution, applications, challenges, and the pivotal role it plays in powering our favourite voice assistants.

Evolution of Speech Recognition:

Speech recognition technology has come a long way since its inception. Early attempts were characterised by limited vocabulary and accuracy, but with advancements in machine learning and artificial intelligence, significant breakthroughs have been achieved. The transition from rule-based systems to statistical models and, more recently, to deep learning techniques has propelled speech recognition into new realms of accuracy and versatility.

Applications of Speech Recognition:

Voice Assistants: Perhaps the most prominent application of speech recognition is in the development of voice assistants like Siri, Google Assistant, and Amazon Alexa. These virtual companions leverage advanced speech recognition algorithms to understand and respond to user commands, making tasks like setting reminders, sending messages, or playing music a seamless experience.

Accessibility Features: Speech recognition has been a game-changer for individuals with disabilities. Voice-controlled interfaces enable people with mobility impairments to interact with devices using spoken commands, providing a level of independence that was previously challenging to achieve.

Transcription Services: In professional and personal settings, speech recognition is used for transcription services. Meetings, interviews, and dictations can be automatically transcribed, saving time and enhancing productivity.

Navigation Systems: Speech recognition is integrated into in-car navigation systems, allowing drivers to request directions, operate in-vehicle functions, and make calls hands-free, which helps them keep their attention on the road.

Challenges in Speech Recognition:

While speech recognition has made significant strides, several challenges persist:

Accents and Dialects: Variations in accents and dialects pose challenges for speech recognition systems, as they may struggle to accurately interpret diverse linguistic patterns.

Background Noise: Ambient noise can interfere with the accuracy of speech recognition, making it challenging for voice assistants to operate effectively in noisy environments.

Contextual Understanding: Achieving a deep understanding of context in natural language remains a complex task. Speech recognition systems often face difficulties in accurately interpreting the nuances of human conversation.
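
To make the background-noise challenge above concrete, here is a minimal Python sketch of how a clean recording can be mixed with noise at a chosen signal-to-noise ratio (SNR), a common way to test how a recogniser degrades in noisy conditions. The function names and the synthetic tone and noise are illustrative assumptions, not part of any particular speech toolkit.

```python
import math
import random


def rms(samples):
    """Root-mean-square amplitude of a list of float samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def mix_at_snr(speech, noise, snr_db):
    """Scale `noise` so the speech-to-noise ratio equals `snr_db`, then add it."""
    # SNR(dB) = 20 * log10(rms_speech / rms_noise), solved here for the noise gain.
    gain = rms(speech) / (rms(noise) * 10 ** (snr_db / 20))
    return [s + gain * n for s, n in zip(speech, noise)]


def measured_snr_db(speech, mixed):
    """Recover the SNR of a mixture when the clean speech is known."""
    residual = [m - s for m, s in zip(mixed, speech)]
    return 20 * math.log10(rms(speech) / rms(residual))


# Synthetic stand-ins (assumptions): a 440 Hz tone as "speech", Gaussian noise.
rng = random.Random(0)
sample_rate = 16_000
speech = [math.sin(2 * math.pi * 440 * t / sample_rate) for t in range(sample_rate)]
noise = [rng.gauss(0.0, 1.0) for _ in range(sample_rate)]

noisy = mix_at_snr(speech, noise, snr_db=10)
print(round(measured_snr_db(speech, noisy), 2))
```

Lower SNR values mean louder noise relative to the speech; feeding the same utterance to a recogniser at several SNR levels shows how quickly its accuracy falls off.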

Future Trends and Innovations:

The future of speech recognition holds exciting possibilities:

Multimodal Interaction: Combining speech recognition with other modalities, such as gesture and facial-expression recognition, will enable more natural and intuitive human-computer interaction.

Improved Context Awareness: Advances in natural language processing and context-aware computing will enhance the ability of voice assistants to understand and respond to complex user queries.

Edge Computing: Moving computation closer to the source of data (edge computing) can reduce latency and enhance the real-time performance of speech recognition systems, making them more responsive.

Conclusion:

Speech recognition has evolved into a cornerstone technology, enabling the proliferation of voice assistants and revolutionising the way we interact with technology. As researchers continue to push the boundaries of what is possible, the future holds promise for even more sophisticated and contextually aware speech recognition systems, further enriching our digital experiences. The journey from early experiments to today's intelligent voice assistants is a testament to the relentless pursuit of innovation in the field of speech recognition, opening doors to a world where our voices truly shape the future of technology.

How Can GTS.AI Help You?

At Globose Technology Solutions Pvt Ltd (GTS), data collection is not just a service; it is our passion and our commitment to fueling the progress of AI and ML technologies. GTS.AI can leverage natural language processing capabilities to understand and interpret human language, which can be valuable in tasks such as text analysis, sentiment analysis, and language translation. GTS.AI can also be adapted to meet specific business needs: whether that means creating a unique user interface, developing a specialised chatbot, or addressing industry-specific challenges, the customization options are diverse.



Unveiling the Power of Video Transcription Services: Transforming Content Accessibility and Searchability

Introduction:

In the rapidly evolving landscape of digital content, videos have become a ubiquitous and powerful medium for communication, education, and entertainment. However, the inherent challenge with video content lies in its limited accessibility and searchability. This is where video transcription services play a crucial role, offering a transformative solution to make videos more inclusive, searchable, and user-friendly.

Understanding Video Transcription:

Video transcription involves converting spoken words in a video into written text. This textual representation of audio content serves various purposes, from making content accessible to a wider audience to enhancing search engine optimization (SEO) for video-based platforms.
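
As a rough illustration of what the output of transcription can look like, the Python sketch below turns timed transcript segments into SubRip (SRT) captions, a widely used plain-text caption format. The segment data and the helper names `srt_timestamp` and `to_srt` are hypothetical; a real service would supply the timings from its own speech recognition or human transcribers.

```python
def srt_timestamp(seconds):
    """Format seconds as the HH:MM:SS,mmm timestamp used in SRT files."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments):
    """Render (start_seconds, end_seconds, text) segments as SRT captions."""
    blocks = []
    for index, (start, end, text) in enumerate(segments, start=1):
        timing = f"{srt_timestamp(start)} --> {srt_timestamp(end)}"
        blocks.append(f"{index}\n{timing}\n{text}\n")
    # A blank line separates consecutive caption blocks.
    return "\n".join(blocks)


# Hypothetical transcript segments for a short video.
segments = [
    (0.0, 2.5, "Welcome to the course."),
    (2.5, 5.0, "Today we cover transcription."),
]
print(to_srt(segments))
```

The same segment list can feed both a caption file and a plain-text transcript, which is why timed segments are a convenient intermediate form.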

Key Benefits of Video Transcription Services:

Accessibility for All:

Inclusive Content: Video transcriptions make content accessible to people who are deaf or hard of hearing. Providing them is a legal requirement in many jurisdictions, and it also signals a commitment to reaching every member of the audience.

Multilingual Accessibility: Transcribing videos facilitates translation into multiple languages, broadening the reach of content globally.

Enhanced SEO:

Improved Search Engine Rankings: Search engines index text far more readily than audio or video. Transcribing video content gives them text to index, which can improve the chances of ranking higher in search results.

Keyword Optimization: Video transcriptions enable content creators to identify and incorporate relevant keywords, boosting the discoverability of their videos.

User Engagement and Retention:

Content Consumption: Some users prefer to read rather than watch, for example when they cannot play audio. Providing a transcript alongside a video improves the user experience and encourages engagement.

Skim-Friendly Format: Transcripts allow users to quickly scan and find specific information within a video, increasing user satisfaction and retention.

Efficient Editing and Repurposing:

Content Editing: Video transcriptions serve as a valuable resource for content creators, allowing them to easily review and edit the text. This is particularly useful for creating accurate captions or correcting errors.

Content Repurposing: Transcribed content can be repurposed into blog posts, articles, or social media posts, maximising the reach and impact of the original video.

Legal and Compliance Requirements:

Documentation and Verification: Certain industries, such as legal, medical, and educational, require accurate documentation of video content for legal and compliance purposes. Transcriptions serve as a verifiable record of the spoken content.
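
The searchability and skim-friendly benefits above can be sketched in a few lines of Python: once a video has a timed transcript, finding where a topic is mentioned becomes a simple text search. The segment data and the `find_mentions` helper are illustrative assumptions rather than any specific product's API.

```python
def find_mentions(segments, keyword):
    """Return (start_seconds, text) for each segment that contains `keyword`."""
    needle = keyword.casefold()  # case-insensitive match
    return [(start, text) for start, text in segments if needle in text.casefold()]


# Hypothetical timed transcript: (start time in seconds, spoken text).
segments = [
    (0.0, "Welcome to this tutorial on data annotation."),
    (12.4, "Annotation quality depends on clear guidelines."),
    (31.0, "Next, we look at speech recognition."),
]

for start, text in find_mentions(segments, "annotation"):
    print(f"{start:>6.1f}s  {text}")
```

A viewer, or a search index, can use the returned start times to jump straight to the relevant part of the video instead of scrubbing through it.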

Choosing the Right Video Transcription Service:

When selecting a video transcription service, consider the following factors:

Accuracy: Look for services that offer high accuracy through advanced speech recognition technologies and human review processes.

Turnaround Time: Evaluate the service's ability to provide timely transcriptions to meet your content delivery deadlines.

Security and Confidentiality: Ensure the service follows stringent security measures to protect sensitive information contained in video content.

Customization Options: Some services allow customization of transcriptions to match specific formatting or style preferences.

Cost-Effectiveness: Compare pricing models to find a service that aligns with your budget while maintaining quality.
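
When comparing services on accuracy, a common yardstick is the word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the service's transcript into a trusted reference, divided by the number of words in the reference. The Python sketch below is one minimal way to compute it, assuming simple lowercase, whitespace-based tokenisation; the sample sentences are made up for illustration.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance between two transcripts, divided by reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # previous[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1      # reference word missing from hypothesis
            insertion = current[j - 1] + 1  # extra word in hypothesis
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


# Made-up example: two of the five reference words are misrecognised.
reference = "turn on the kitchen lights"
hypothesis = "turn on the chicken light"
print(word_error_rate(reference, hypothesis))
```

Running a short sample of your own content through each candidate service and scoring it against a hand-checked transcript gives a like-for-like accuracy comparison. Note that WER can exceed 1.0 when the hypothesis contains many extra words.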

Conclusion:

Video transcription services play a pivotal role in revolutionising the way we consume, create, and share video content. By making videos more accessible, searchable, and versatile, these services contribute to a more inclusive digital landscape and open up new possibilities for content creators, businesses, and individuals alike. As technology continues to advance, the role of video transcription services will likely become even more significant in shaping the future of online communication.

How Can GTS.AI Help You?

At Globose Technology Solutions Pvt Ltd (GTS), data collection is not just a service; it is our passion and our commitment to fueling the progress of AI and ML technologies. GTS.AI can leverage natural language processing capabilities to understand and interpret human language, which can be valuable in tasks such as text analysis, sentiment analysis, and language translation. GTS.AI can also be adapted to meet specific business needs: whether that means creating a unique user interface, developing a specialised chatbot, or addressing industry-specific challenges, the customization options are diverse.
