
The tools, techniques and conclusions of diskmag archeology

Not exactly a text post, but here's a video recording from my talk from Evoke about how we turned age-old diskmags into something web-readable. Best enjoyed if you're into byte-level hackery, reverse-engineering, oldskool DOS culture, and self-indulgent projects no-one understands.

youtube

Sorry about the visual, I'm aware I look like someone left a kiwi in a bucket of drain cleaner for a week.

I also hope to get a post about my DOS demo up at some point, because it was a project I had a ton of fun with, and it was super-cathartic to work on.


ÜD20: 30 seconds and 64 kilobytes

There's something to be said about unwanted situations that jostle bits of your creative thinking that you wouldn't otherwise really care to use: this was pretty much the case a few weeks ago, when Slyspy/UF decided to announce a 30 second demo compo, and our little contribution caused me to try out a few things that I've faintly considered to be a good idea, but never really had the situation to try out.

youtube

Hit and run.

Below are a few bits and pieces of the process that contributed to the creation of the intro.

Mischievous intent

To be perfectly honest, the idea of making something that only lasts 30 seconds didn't sound too enticing: I always felt that demos were too short (except for bad ones which were usually too long), and that making something that's 30 seconds was sentencing the prod to be ephemeral by design.

The final motivational push was a combination of things: First, I realized that the 20th anniversary of Ümlaüt Design was the same week as the competition, and I would've felt very bad if we didn't make something to celebrate, and second, I figured it could be a good idea to do a second pass on the 64k toolchain that I built during the end of last year and made "in::flux" with - after all, 30 seconds sounds like it'd fit in 64k, right?

As it was a celebratory thing, I decided to talk a bit to Procyon/ÜD about it - he's been out of the scene for a while and was looking for a way to get back in the groove again, and we threw a few ideas around for something that subverts the expectations within the scope of 30 seconds: If we pretended that the first bit of the intro is something retro and pixely, that'd give him the chance to work on some software rendering code (something which was more in his wheelhouse) and I'd get to do something more highend for the latter half of the intro, and we'd be able to work relatively independently since all he'd be doing is drawing into a pixel buffer that I'd later put into a texture and render out onto a quad.

Now we had a plan.

First things first: Infrastructural changes

Before we started working on anything, I was adamant to do three things:

First off, I wanted to move the whole project to Github for easy access; we've had a Bitbucket repo for a while, and there's of course stuff like Gitlab, but let's face it: the reason everyone uses Github is because their features and interface are simply better, and now that they offer private repos for free, there's no reason not to use that as a main collaborative platform.

Secondly, I wanted to finally be able to compile 64k "properly" again, without VS runtime requirements; I of course used to be able to do this ages ago, but there was a lot of code rot happening and I never really bothered to clean it all up since I was mostly making 64ks for non-64k compos anyway. The Handmade Network has a great article on how to approach this to "trick" the compiler/linker; I also decided to leverage VS2019's ability to run multiple compiler environments, and included the "final" intro build in the same solution, simply using VS2010.

One fun little aspect that I ended up spending way too much time on is creating Github Workflows for continuous integration: initially I just made a quick one that performs a compile test on each build, but for giggles I drove that far enough that it eventually not only installs VS2010 (using the Chocolatey package), but also downloads the latest DirectX SDK to grab the D3DX libraries from, compiles and compresses the intro and zips the output up as an artifact. This was working wonderfully until I realized that the VS2010 install was taking ~8-10 minutes for each commit, and we ran out of minutes on the free account in a weekend. (Oops.)

Another thing that I spent a day / night on - only to ultimately have to revert all of it - was my hope to get custom build tools integrated into the engine - this would mean any random raw data file included into the project would get converted into an object file that can be referenced as a symbol in the source code. I spent an inordinate amount of time trying to get this to work, and I got to a point where I was actually getting results, except VS wouldn't pick up changes in a file. Out of desperation I decided to check some other projects implementing the same thing, and when they seemed to reproduce the same exact problem, I asked the creators, and their answer was essentially "Yeah, we know, it's broken ¯\_(ツ)_/¯", so I decided to just shelve it and focus on making an actual intro.

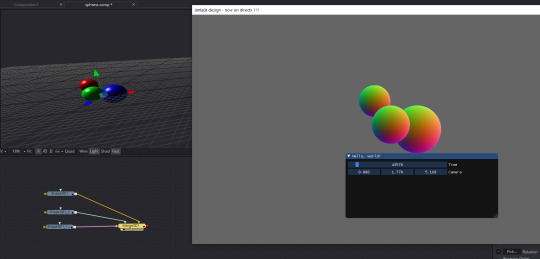

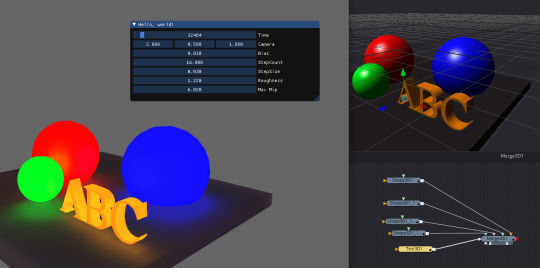

The final thing I really wanted to lean into is to finally integrate ImGui: For those who don't know (and really, who doesn't?), ImGui is a wonderful little GUI library that allows the quick-tweaking of values with very very simple single-line pieces of code that create complex convenient ad-hoc user interfaces - it is almost universally used in gamedev, and it has a ubiquitous presence in the scene among those who don't want to write a demotool.

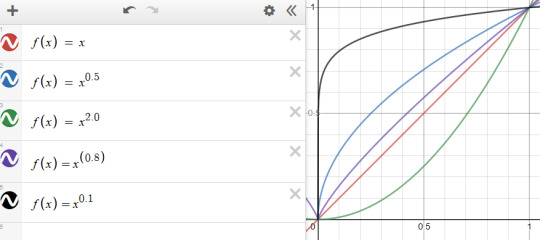

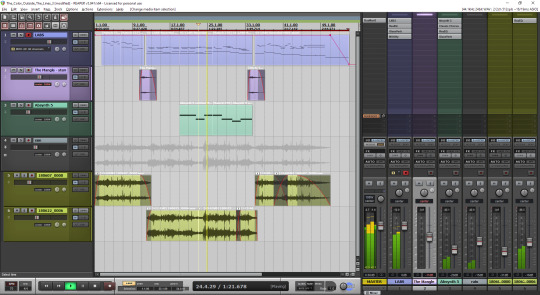

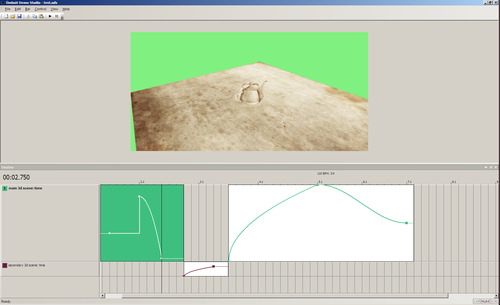

The joy of ImGui: each of these controls (including the tabstrip!) is a single line of code

Adding ImGui is really a work of 5 minutes, and from then on, each camera or color tweak is as easy as pie - the only downside, of course, is that there's no "obvious" mechanism for saving values, and while I briefly considered writing a quine-like wrapper around ImGui that overwrites its own source file that contains the variables, I ended up just manually copying the values back to the source, because sometimes the fastest method is the best.

The move to DirectX 11

So this is the "duh" part of the post where everyone smugly welcomes me to the 21st century, but as I explained earlier, my reasoning for sticking with DirectX 9.0c so long was based on the idea that me as a hobbyist-yet-perfectionist, who ostensibly does this not only for "fun" but also to learn things, should first wring the absolute maximum out of an environment before moving on to something more expansive - eating the vegetables before the dessert, if you will.

This proved to be a good idea on many fronts: While my initial forays into 11 were often confusing (the mode selection is considerably fiddlier than in 9.0), there was a distinct moment where I understood that if I approach everything from the perspective of what I always wished 9.0 would have, a lot of those wishes are the exact changes 11 has.

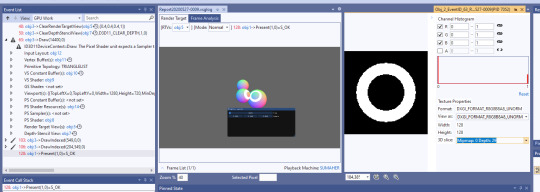

Graphics debugging integrated into Visual Studio? Sign me up! (It worked for about 2 days until I started using UAVs and then it just crashed. Oh well - back to PIX...)

One of the things I needed to get used to but makes sense in hindsight is "views": rather than the OpenGL/DX9 way of creating a texture and sticking that into the shader, DX11 expects you to create "views" on top of a texture (which it essentially treats as a generic buffer), and instead provide those views to the shader. Initially this step felt gratuitous, but I eventually realized that the same step (creating a view) allows you to turn the same texture into a rendertarget, or a compute-target, or even a depth buffer - and underneath all that, it's still just a buffer with numbers in it. The flexibility that I always wanted was suddenly there.

Once I got reasonably comfortable with the renderer, it was time to get some content in.

Exporting from Fusion

The first hurdle that set my mind off thinking was the question of how I'm gonna get content into the intro without writing any sort of tooling - there simply just wasn't enough time, and by my estimation it probably would've been single use tooling anyway, so I needed an adhoc solution.

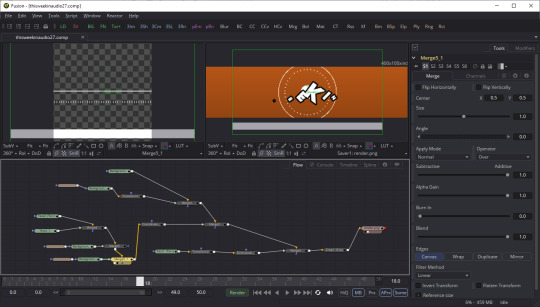

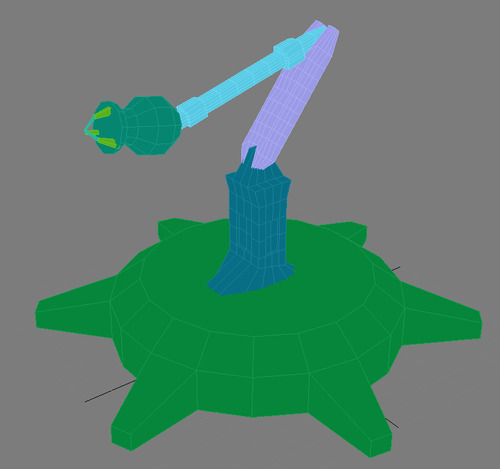

Luckily, I had something in mind: a few years ago I started digging into Blackmagic Fusion when I noticed that the 9.0 version was available for free (and it no longer is, for whatever reason), and have gotten quite proficient at it at this point; Fusion itself is effectively a node-based video-compositing tool, but at some point they added basic 3D scene composing to it, and as it had a few useful procedural nodes, it felt perfect for the kind of "blocky" sci-fi geometry that I was hoping to achieve: as long as I was able to 1) reimplement basic versions of the 3D nodes it had and 2) somehow get the data out of it, I essentially had my quick-iteration 64k tool.

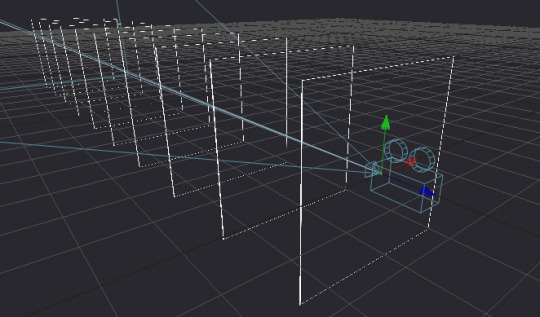

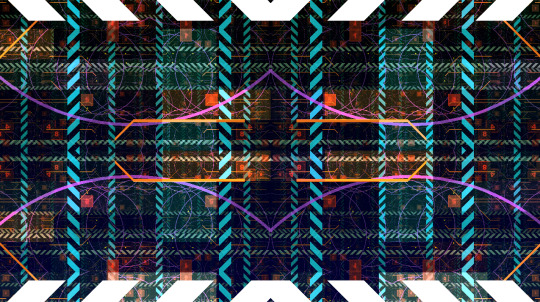

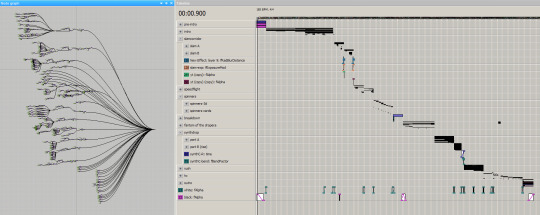

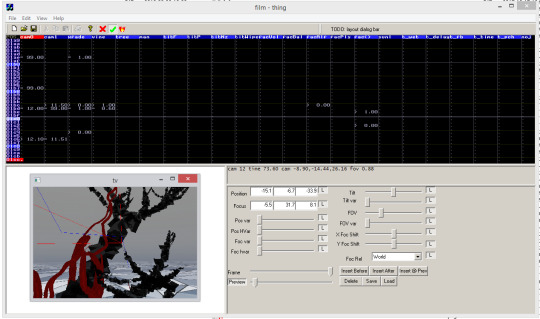

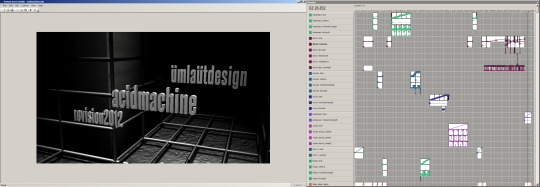

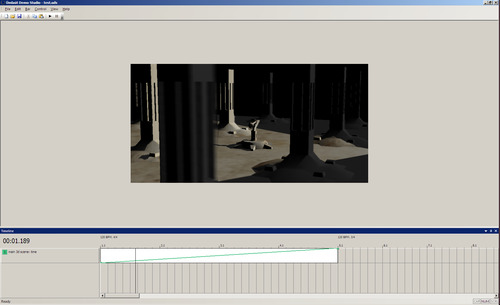

Fusion in action - I shall endeavour to make sure that my next demotool looks like this.

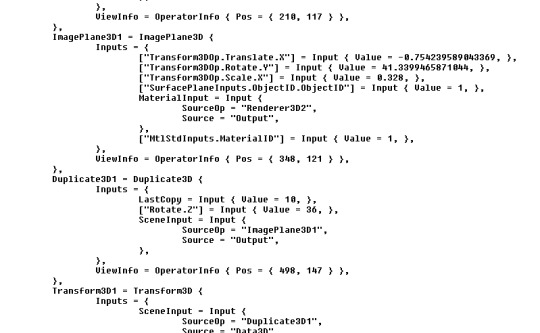

Getting the data out seemed more like a chore than a challenge; while the Fusion format (.comp) was text-based, it used an odd JSON-esque format that would've needed a parser - normally for something like this I'd just use some sort of arcane regexp or PHP script, but this time because of the graph-based nature of the data, I decided I was better off doing it "semi-properly", and looked around on the market for programmable AST-parsers, preferably in C#. I eventually found Superpower, which seemed to be just the ticket, and I spent a few days deconstructing their example JSON parser, and modding it to be able to load Fusion Comp files.

This is like JSON after a drinking binge.

It was far from being an obvious process, as one look at some of the stranger aspects of Fusion Comps will tell you: their format seems to be a mix between flat arrays, objects, objects with attributes, and key-value pairs. Ultimately, I decided that as long as the object data is relatively correctly represented, I'm okay with having to write some C# code around it for easy access, so that's what I did. One curious little challenge was that tokenizers tend to sneer a bit at non-strict syntax use, for example JSON's array syntax ([1,2,3]) which is strict, whereas in JavaScript, you're allowed to have a trailing comma ([1,2,3,]) and the "empty" element wouldn't be counted. Fusion's Comp format was also not strict in this sense, which was a bit of a headache to handle in the parser, but ultimately I decided to just bamboozle the parser that ,} and } were both valid terminators to an array, and it fell for it. (The code for the parser is available here.)
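To make the trailing-comma trick concrete, here is a minimal sketch of the idea in Python rather than the C#/Superpower code the actual parser uses. It only handles a made-up toy grammar (numbers and nested brace-delimited lists), and the names `tokenize`, `parse_list` and `parse` are purely illustrative. The point is the one branch that lets a comma be followed by either another item or the closing brace.

```python
import re

# Numbers plus the three punctuation marks this toy grammar knows about.
TOKEN = re.compile(r"\s*(?:(?P<num>-?\d+(?:\.\d+)?)|(?P<punct>[{},]))")

def tokenize(text):
    """Split the input into number and punctuation tokens."""
    text = text.rstrip()
    tokens, pos = [], 0
    while pos < len(text):
        match = TOKEN.match(text, pos)
        if match is None:
            raise ValueError(f"unexpected character at offset {pos}")
        tokens.append(match.group("num") or match.group("punct"))
        pos = match.end()
    tokens.append("<eof>")  # sentinel so truncated input fails cleanly
    return tokens

def parse_list(tokens, i=0):
    """Parse '{' item (',' item)* [','] '}' and return (values, next index)."""
    if tokens[i] != "{":
        raise ValueError("expected '{'")
    i += 1
    values = []
    while tokens[i] != "}":
        if tokens[i] == "{":
            item, i = parse_list(tokens, i)
        else:
            item, i = float(tokens[i]), i + 1  # raises ValueError on junk
        values.append(item)
        if tokens[i] == ",":
            i += 1  # a comma may be followed by another item OR by '}'
        elif tokens[i] != "}":
            raise ValueError("expected ',' or '}'")
    return values, i + 1

def parse(text):
    """Parse a whole string and make sure nothing is left over."""
    tokens = tokenize(text)
    values, end = parse_list(tokens)
    if tokens[end] != "<eof>":
        raise ValueError("unexpected data after closing brace")
    return values
```

Both `parse("{1, 2, 3}")` and `parse("{1, 2, 3,}")` give the same list, which is all the "bamboozling" amounts to.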

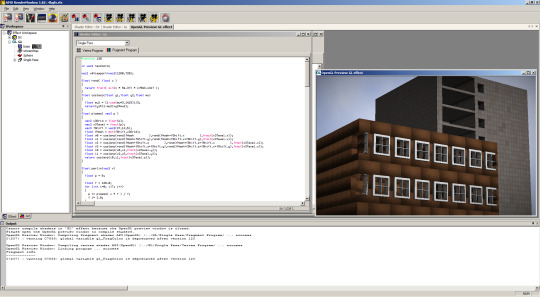

Once the data was in C#, I decided to just simply make it export C++ code that created my scene - now, fair warning, this is actually terrible practice: what you should be doing for optimal size is putting all your data in one simple binary blob, have a basic parser that loads in data and performs the actual scene generation, thus making sure that stuff like object allocations are only represented in code once, and that data compression doesn't mix with code compression. The simple reason I didn't do it this way was just laziness and convenience: I wasn't worried about space (we were going to be well within 64k), and I didn't feel like fiddling with yet another data format. Instead, I just exported straight C++ code. (Inque tools used to do this, by the way, so it's not an unworkable method, but still.)
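As a rough illustration of the "export straight C++ code" approach, here is a small Python sketch (the real exporter was written in C#). Everything about the generated code is an assumption made up for the example: the `BuildScene` function, the `Scene` type and its `AddBox` method are hypothetical, as is the node layout with `position` and `size` fields.

```python
def export_scene(nodes):
    """Turn a list of node dicts into the text of a C++ function.

    Each node is assumed to look like
    {"position": (x, y, z), "size": (sx, sy, sz)}.
    """
    lines = ["void BuildScene(Scene& scene)", "{"]
    for node in nodes:
        x, y, z = node["position"]
        sx, sy, sz = node["size"]
        # One generated statement per object: simple, but it means the
        # call is repeated in code once for every object in the scene.
        lines.append(
            f"    scene.AddBox({x:.3f}f, {y:.3f}f, {z:.3f}f, "
            f"{sx:.3f}f, {sy:.3f}f, {sz:.3f}f);"
        )
    lines.append("}")
    return "\n".join(lines)

if __name__ == "__main__":
    print(export_scene([{"position": (1, 2, 3), "size": (0.5, 0.5, 4)}]))
```

This also shows why the approach is wasteful for size: the data ends up interleaved with call instructions instead of sitting in one compressible blob.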

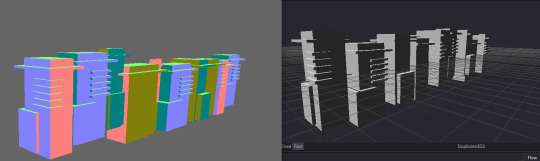

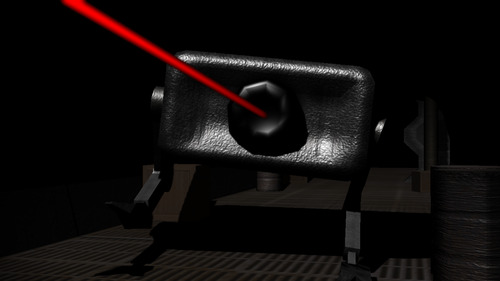

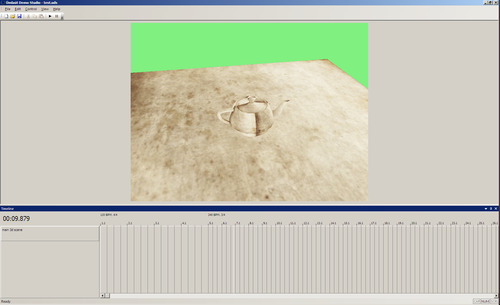

My first exporting test, just as a proof of concept; one thing I had to realize early on is that Fusion is RHS, not LHS.

Reimplementing the Fusion nodes wasn't particularly hard, and halfway into it I had an epiphany: since I was mostly using Fusion's Shape3D and Text3D nodes, why don't I just use the D3DXCreate* functions for everything? Then I'd have basic shapes (boxes, toruses, cylinders, texts) for free - the only somewhat hilarious downside was that I had to sneakily create a DX9 device to make it work (and avoid DX11 throwing a hissy about it when going fullscreen), but once that was done, snatching out the vertex data was trivial.

There were a few problems with my approach: First off, the UV mapping function in Fusion was a bit crap (understandably), but I just used my own and decided to live with having different looking results. Secondly, the material system in Fusion was completely different; I eventually just relied on the basic material colour, and used the comment field on each node to indicate whether that particular object was emissive or diffuse. (Huge hack, but who cares? Again, adhoc solutions work fine.) A minor annoyance was that certain things in Fusion just didn't look the same after exporting: text was always slightly offset, and of course randomized geometry always looked different - I decided to just compensate for the former and ignore the latter, although I eventually found out that the source code for most of the 3D nodes is available in the plugin SDK, but by that time I had comfortably settled into the routine of making stuff, and didn't want to bother, because the scene was shaping up nicely.

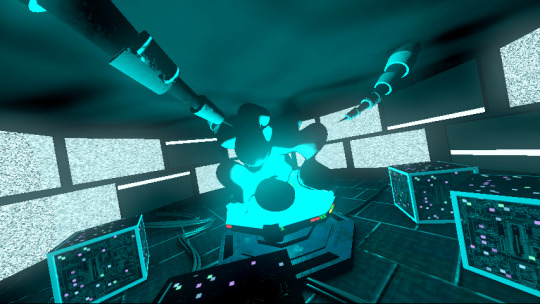

An early test of just some boxes exported from Fusion; the Offscreen Colonies influence is obvious.

Adding shinies

When thinking about where to get some visual inspiration, I happened to remember "Shapeshift" by Cocoon, and in particular one of the "opening" scenes, which takes place in a very similar setting that I was hoping to create:

"Shapeshift" by Cocoon

Upon closer analysis, however, something kept boggling my mind. How do these reflections work?

Note the two blobs in the middle; this screenshot was taken from the corner edge of the image, so there's nothing to the left of it.

They cannot be simple planar, since the surfaces are angled, but they can't be screen-space because they reflect things that are off-screen.

Luckily, I realized Guille was on Discord and quickly asked what the technique was, and he was glad to explain: voxel cone tracing.

The idea behind voxel cone tracing is relatively simple in theory: for "correct" reflections, you'd need to calculate the reflection of the entire scene into every pixel, which isn't viable: you'd either need to render the whole scene again from the perspective of each pixel, or do some sort of raytracing - of a polygon scene, no less. But what if we also had the polygon scene available as a voxel grid? That way, we could do some rough sampling into the voxel grid for each pixel, and have a reasonably good idea of what reflects - sure, it would lose a considerable amount of resolution (as our voxel grid is of course memory-limited), but for some rough moody reflection (and some added fake-global-illumination as a side effect), it'll do just fine.
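For a feel of what "rough sampling into the voxel grid" can look like, here is a minimal Python sketch of the textbook form of cone tracing, not necessarily what Guille or this intro does. It assumes a caller-supplied `sample(position, level)` function that returns a `(color, alpha)` pair from a prefiltered (mip-mapped) voxel grid; that function, the single-channel colour and all the parameter defaults are made up for illustration.

```python
import math

def cone_trace(origin, direction, sample,
               half_angle=0.2, voxel_size=1.0, max_distance=32.0):
    """March one cone through a voxel grid, front to back.

    The cone gets wider with distance, so samples further away are
    taken from coarser (blurrier) levels of the voxel grid.
    """
    color, alpha = 0.0, 0.0
    t = voxel_size  # start one voxel out to avoid hitting our own surface
    while t < max_distance and alpha < 0.99:
        diameter = max(voxel_size, 2.0 * t * math.tan(half_angle))
        level = math.log2(diameter / voxel_size)  # 0 = finest level
        position = tuple(o + d * t for o, d in zip(origin, direction))
        sample_color, sample_alpha = sample(position, level)
        # Front-to-back blending: whatever we already hit occludes the rest.
        color += (1.0 - alpha) * sample_alpha * sample_color
        alpha += (1.0 - alpha) * sample_alpha
        t += diameter * 0.5  # step size grows with the cone
    return color, alpha
```

In a shader the `sample` call would be a trilinear 3D texture fetch with an explicit mip level, and the cone direction for reflections would be the view vector reflected around the surface normal.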

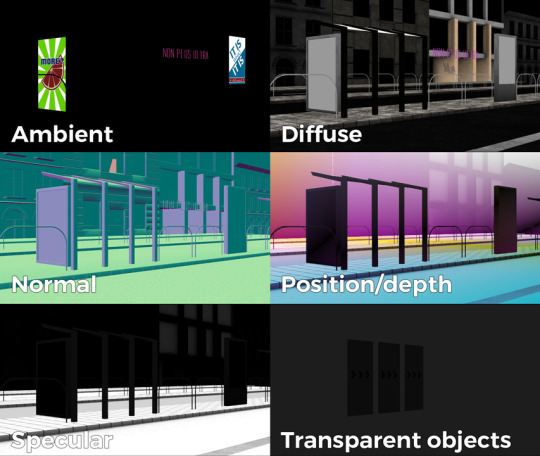

Ostensibly, there are two steps to the process: first, we voxelize the scene, and second, we render the scene and calculate the reflections. Both of these steps are finicky in their own ways: the former needs our scene to be rendered into a 3D texture, the latter is more straightforward, but requires a lot of parameter-fiddling.

So how to voxelize a scene? Well, first off, we need a way to be able to render into a 3D texture; normally you'd do this in slices, but that is very slow (as you have to render the scene once for each slice). Luckily, DX11 introduced something called Unordered Access Views (or UAVs for short): as their name says, if you have a UAV associated to your render target, the rendering can be "unordered", i.e. you get to pick WHERE you write into the texture. What this allows us is to render the scene "as-is" (i.e. with a relatively simple vertex/pixel shader), but at the end of the pixel shader, instead of returning a color, we write directly into the 3D texture, based on the world-space position of that point.
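The "write based on the world-space position" part boils down to a small piece of arithmetic, sketched here in Python instead of HLSL. The function name, the axis-aligned grid bounds and the resolution are assumptions for the example; in the shader the resulting index would be the address used for the UAV write.

```python
def world_to_voxel(position, grid_min, grid_max, resolution):
    """Map a world-space point to integer voxel coordinates.

    Returns None when the point lies outside the voxel cube, in which
    case the shader would simply skip the write.
    """
    index = []
    for value, low, high in zip(position, grid_min, grid_max):
        t = (value - low) / (high - low)  # 0..1 across the cube
        if not 0.0 <= t < 1.0:
            return None
        index.append(int(t * resolution))
    return tuple(index)
```

For example, with a cube spanning -1..1 on every axis and a resolution of 64, the origin lands in voxel `(32, 32, 32)`.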

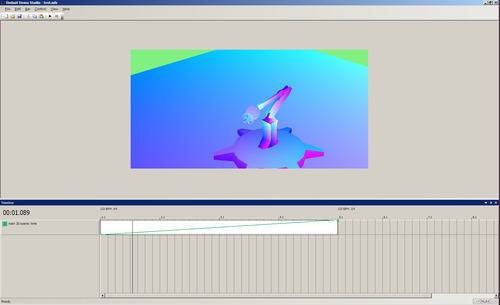

My first test of voxelizing a scene - I didn't want to bother with writing a voxel viewer, so I just used PIX.

The obvious solution for the vertex shader is to orthographically project the scene and designate the corners and clip planes of the ortho matrix to be corners of our voxel cube; this gets us there halfway. However, there's still an interesting problem: even though we're writing into a 3D texture, the pixels written are only ever the ones that provide coverage in that given viewport - meaning that if we're looking straight down the Z axis, the top and sides of a cube would only produce a few pixels (if any) as they are parallel to our view. This usually results in gaps or missing surfaces in our voxels.

The fun little solution for this is to simply "fake" the triangle facing forward for the pixel shader - after all, even if we completely distort the triangle, we can still pass the actual, accurate world space position of each written pixel, so whatever we pass as POSITION to the pixel shader isn't really relevant. So that's what we can do: We simply check the "dominant" axis of the triangle (i.e. where it's facing), and if it's not facing towards us (i.e. the Z-axis), we trick the pixel shader to think that it does by pretending that the view has been rotated. That way we get the perfect amount of pixels. Normally, this method sometimes results in a few gaps between triangle edges; this can be fixed with what people call "conservative rasterization", i.e. making sure that voxels don't get missed by being low-resolution, but I didn't bother with this.
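The dominant-axis trick can be sketched on the CPU like this, in Python for readability (in the intro this logic would live in shader code). The helper names are made up; the idea is just to take the face normal, find its largest component, and swap that axis with Z before rasterizing, while the untouched world-space position is still what decides which voxel gets written.

```python
def cross(a, b):
    """Cross product of two 3D vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dominant_axis(v0, v1, v2):
    """Index (0=X, 1=Y, 2=Z) of the axis the triangle faces the most."""
    edge1 = tuple(b - a for a, b in zip(v0, v1))
    edge2 = tuple(b - a for a, b in zip(v0, v2))
    normal = cross(edge1, edge2)
    magnitudes = [abs(c) for c in normal]
    return magnitudes.index(max(magnitudes))

def swizzle(vertex, axis):
    """Pretend the view was rotated so the dominant axis points down Z."""
    x, y, z = vertex
    if axis == 0:
        return (z, y, x)
    if axis == 1:
        return (x, z, y)
    return (x, y, z)

def projected_area(triangle):
    """Area the triangle covers when viewed straight down the Z axis."""
    (x0, y0, _), (x1, y1, _), (x2, y2, _) = triangle
    return 0.5 * abs((x1 - x0) * (y2 - y0) - (x2 - x0) * (y1 - y0))
```

A floor triangle such as `[(0, 0, 0), (1, 0, 0), (0, 0, 1)]` covers zero area when viewed down Z, but after swizzling by its dominant axis (Y) it covers its full area of 0.5, which is exactly the "perfect amount of pixels" described above.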

My initial reflection tests

There was an issue, however, that I didn't have the time or energy to fix: There's considerable flickering at places. Guille noted that he actually works with two voxel cubes: one is rendered once, during loading, with all the static geometry, and one is rendered each frame with the animated geometry, and the two are then combined, for which I used a compute shader. The problem was, the dynamic voxel seemed to flicker even when the frame data wasn't changing; I eventually concluded this was due to what I can best describe as a GPU race condition: GPUs render massively parallel with units called "wavefronts" (AMD) or "warps" (NVIDIA), that schedule the actual rendering threads calculating the pixels. What happens in our case is since the resolution we're rendering the "fake" polygon in is larger than the actual voxel, some threads end up with the same world-space coordinate, and as such, voxels get written into multiple times by multiple threads, and the color really depends on whichever thread got there last. There's a solution to this in the form of atomic shader writes (the Interlocked* HLSL operations), but they only work on integer texture formats, and I wasn't feeling like rewriting the whole rendering path one day before delivery just to fix something that's only really prominently visible when you freezeframe - that said of course, it's nice to get such experience in relatively "harmless" projects.
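To illustrate why the integer requirement matters, here is a small Python simulation of the difference between "last write wins" and an atomic max on a packed integer colour. This is not a fix that made it into the intro, just a sketch of one common way the Interlocked* route is used; the packing layout and function names are assumptions.

```python
def pack_rgba8(r, g, b, a):
    """Pack four 0..1 floats into one 32-bit integer, alpha in the top byte."""
    def to_byte(value):
        return max(0, min(255, int(round(value * 255))))
    return (to_byte(a) << 24) | (to_byte(r) << 16) | (to_byte(g) << 8) | to_byte(b)

def last_write_wins(writes):
    """What a plain UAV store does: the result depends on thread order."""
    voxel = 0
    for value in writes:
        voxel = value
    return voxel

def atomic_max(writes):
    """What an InterlockedMax-style write does: order no longer matters."""
    voxel = 0
    for value in writes:
        voxel = max(voxel, value)
    return voxel
```

Feeding two competing writes in both orders shows the flicker in miniature: `last_write_wins` returns a different colour depending on the order, while `atomic_max` returns the same value every time. The cost is that the voxel texture has to be an integer format, and that "largest packed value" is an arbitrary (if stable) way to pick a winner.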

Texture generation

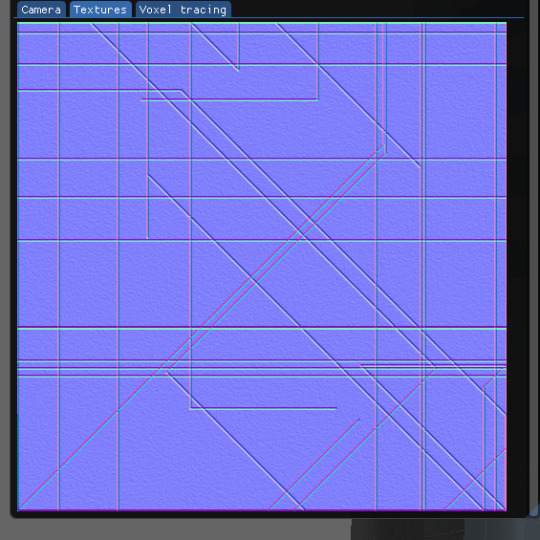

Another thing I observed with Shapeshift is the use of normalmaps - they're a great way to break up geometry and add detail to flat surfaces, but at the same time I also didn't want to overdo it to the point where the "buildings" would look like they were in serious need of sandblasting.

After doing some research on similar "sci-fi" normal maps, my impression was that they kinda looked like Voronoi diagrams with Manhattan-distance calculation, which was a valid option to do in 64k, but I felt it would've been overkill for the situation, so I decided to break it down more primitively:

The most noticeable motif was the set of horizontal and vertical lines - I could easily generate those of course.

There were also lines consisting of either horizontal, vertical, or diagonal bits. I could essentially just do this by picking a random point, and just drawing a line strip with random directions and lengths.

I also decided to add a very very tiny noise layer, just so that the reflections become a bit rougher and more uneven, to hide the inaccuracies.
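The three ingredients above can be sketched as a tiny heightmap generator plus a height-to-normal conversion. This is a Python approximation written for this post, not the intro's texture generator: the function names, the parameter defaults and the choice to wrap around the edges (so the texture tiles) are all assumptions.

```python
import random

# Horizontal, vertical and diagonal unit steps.
DIRECTIONS = [(1, 0), (-1, 0), (0, 1), (0, -1),
              (1, 1), (1, -1), (-1, 1), (-1, -1)]

def make_heightmap(size=64, lines=4, strips=8, segments=6, noise=0.01, seed=1):
    """Build a size x size heightmap (size >= 16) with carved grooves."""
    rng = random.Random(seed)
    height = [[1.0] * size for _ in range(size)]
    # 1) Full-width horizontal and vertical grooves.
    for _ in range(lines):
        horizontal = rng.random() < 0.5
        k = rng.randrange(size)
        for i in range(size):
            if horizontal:
                height[k][i] = 0.0
            else:
                height[i][k] = 0.0
    # 2) Line strips: random start, then segments of random direction/length.
    for _ in range(strips):
        x, y = rng.randrange(size), rng.randrange(size)
        for _ in range(segments):
            dx, dy = rng.choice(DIRECTIONS)
            for _ in range(rng.randint(4, size // 4)):
                height[y % size][x % size] = 0.0
                x, y = x + dx, y + dy
    # 3) A very small noise layer to roughen the reflections.
    for row in height:
        for x in range(size):
            row[x] += rng.uniform(-noise, noise)
    return height

def heightmap_to_normals(height, strength=2.0):
    """Convert heights to unit normals using wrapped central differences."""
    size = len(height)
    normals = []
    for y in range(size):
        row = []
        for x in range(size):
            dx = (height[y][(x + 1) % size] - height[y][(x - 1) % size]) * strength
            dy = (height[(y + 1) % size][x] - height[(y - 1) % size][x]) * strength
            length = (dx * dx + dy * dy + 1.0) ** 0.5
            row.append((-dx / length, -dy / length, 1.0 / length))
        normals.append(row)
    return normals
```

Tweaking `strength` and the groove counts is where the "sandblasting" threshold mentioned earlier gets decided.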

Et voilà, the result:

Brutalist normalmaps are my jam.

Another thing I noticed in Shapeshift is the use of the grungy / scratchy specular maps to occasionally break up the reflective surfaces; I gave this a shot with standard perlin, but I could never get the result I wanted, so I eventually just abandoned it.

Cloudy bits

One thing that I felt would work when it comes to adding atmosphere is volumetric clouds; first off, it'd add a nice parallax depth and a bit of definition to the image, but most importantly, I already had the effect lying around for a rather peculiar reason: A few months ago, my good friend and art-nanny Mulm was sad to tell me that one of her Internet friends passed away, and that she was hoping to do a VR world as a tribute, preferably with large explorable fractals; I was on board to help out and fiddled around a bit in Unity to wrap one up. Eventually the conversation turned to clouds and I suggested I could probably make that too, and got to work, only to realize the moment I was finished and sending the shader that it was one minute past midnight and the day turned into her birthday, and that I inadvertently gave a shader to someone as a birthday gift... (It's a thing that admittedly wasn't on my bucket list, but it was one of those innocently positive little moments that still makes me smile.)

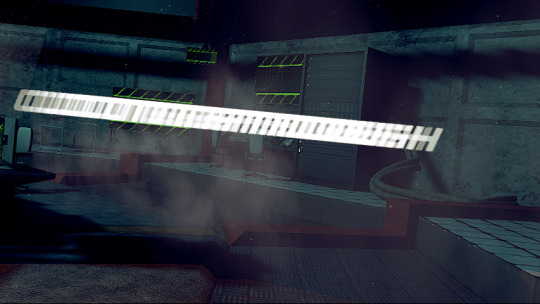

The effect itself is pretty straightforward and is mostly just a riff on the raymarching-into-a-polygon-scene technique seen in "PILEDRIVER", with one important distinction in the rendering path: Because of the aforementioned view-system, DirectX 11 allows you to treat the depth-stencil target as a texture from the get-go! No FOURCC hax, no separate depth pass, just create a shader view on it and you're done: perfectly valid depth values coming out from the sampler.

The actual raymarching algorithm for the clouds is very simple: just fixed step raymarching and sampling 3D Perlin noise. Where it became tricky is forming actual clouds out of it, and tweaking parameters: The technique I ended up using is simply ignoring samples under a certain threshold value, and then cranking up the intensity on the samples that were above it - this made the clouds more "chunky" and feel more like plumes of smoke rather than a continuous fog, which fit better with the visuals.
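Here is a minimal sketch of that loop for a single ray, in Python rather than HLSL. The `density` function is a cheap stand-in for 3D Perlin noise (a product of sines, chosen only because it is short), and `scene_depth` plays the role of the value read back from the depth buffer; all names and default values are assumptions.

```python
import math

def density(point):
    """Stand-in for 3D Perlin noise: a smooth field in the 0..1 range."""
    x, y, z = point
    return 0.5 + 0.5 * math.sin(x * 1.7) * math.sin(y * 2.3 + 1.0) * math.sin(z * 1.3 + 2.0)

def march_clouds(origin, direction, scene_depth,
                 step=0.25, threshold=0.6, intensity=4.0, sample=density):
    """Fixed-step raymarch that accumulates cloud opacity along one ray.

    Marching stops at scene_depth, so clouds never draw over geometry
    that is in front of them.
    """
    opacity = 0.0
    t = 0.0
    while t < scene_depth and opacity < 1.0:
        point = tuple(o + d * t for o, d in zip(origin, direction))
        value = sample(point)
        if value > threshold:
            # Ignore thin samples, crank up the dense ones: chunkier clouds.
            opacity += (value - threshold) * intensity * step
        t += step
    return min(opacity, 1.0)
```

Raising `threshold` makes the plumes sparser, while `intensity` controls how quickly the remaining samples go opaque; those two are the kind of parameters that end up on ImGui sliders.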

The nice thing about clouds done this way versus billboard particles is that 1. they interact more naturally with geometry (no clipping) and 2. they don't "rotate" with the viewpoint.

Once that effect was in place, it felt like everything fell into place, and all it needed was some heavy duty ImGui-poking to turn a relatively decent looking scene into a well-directed decent looking scene.

A job well done

Making the intro was far from a smooth process - and I'll let Procyon explain those details in his own time - but I'm glad I was finally getting to stretch a bit into something more "modern-adjacent", and more importantly, this little excursion gave me a better understanding of how I should be writing my next "major" engine... Which I'll... start... any day now... eventually...


Getting stuff done - practical solutions for pulling off large projects

So this might be an unusual post for a site that I predominantly intended to be a collection of tech-articles, but over the recent years I've both been observing and noticing in conversations that while there's plenty of technical material online about how to learn to program, do graphics, engineer music, and so on, much of the writing about how to get projects done seems to veer over into either "life hacks" about how you should do stretches and open your window for fresh air, or escalate into the territory of motivational speeches and emotional, heart-to-heart peptalks; now, while I understand and appreciate their usefulness in certain scenarios, they seem to cultivate a misunderstanding that all you need to get a project done is a positive attitude and ab-crunches.

After having been in an industry for 10 years that has a relatively high throughput, and having had many relatively recent conversations with people who were in awe or disbelief about my ability to handle large projects, I was reinforced in my belief that most people are unaware how much of getting a large amount of work done is down to cold science, scheduling, professional planning, and the ability to retain control of a project even when unexpected events happen.

With that in mind I'd like to present a few random bits and bobs about what I learned about project management - not necessarily only in programming, but in any sort of creative activity - while hoping to keep the popular psychology element in them to the minimum.

A quick note before we dive right in: I'll be talking entirely about sparetime activity that you want to invest time in. While it can be useful to apply professional techniques to your sparetime activity, you should definitely not treat the two as if they're one and the same. That's a good way to hate what you used to like. (Trust me.)

Who's producin'?

Before we start talking about actual work, it is important to introduce ourselves to an important term, namely production. Most people think "production" is the act of making something, and the "producer" is someone who makes things, while I would wager a large portion of the populace can't tell you what a "film producer" does aside from "making films"; on a day-to-day basis, that role seems to be an opaque cloud to most people, even if it's one of the most important.

The short version is that the producer's job is to make sure that the project is finished on time, and on budget. The slightly longer version is that the producer takes the amount of time available, the amount of resources available (budget, people, inventory, etc.), consults the respective heads of departments actually doing the work, and then comes up with the plan and schedule as to what happens when, when something finishes, who works on what, and what resources will it take; once production starts, they transition their role into making sure everything goes to plan and if something doesn't, what are the options to salvage things.

There is a reason why in most professional environments, there are entire departments for this: it is a tough job, often frustrating, sometimes disheartening, but at the same time a producer is always aware of the state of the project and can have a good idea of progress being done, and whether the whole thing will come together in the end. This will become extremely important later.

Weeks of coding can save you hours of thinking

Projects are usually broken up into three important segments: Pre-production, production, and post-production. These tend to vary from industry to industry as to what they mean, but pre-production generally consists of building a production plan out of the resources and time available. Pre-production is one of the most important parts of a project, because it will define how the rest of the project will be carried out, and thus it's important that we first delve into the ins-and-outs of planning.

First things first, decide if you have a deadline for your project. Deadlines are cruel, they're unforgiving, but that's why they work miracles on productivity; projects without deadlines don't put pressure on the authors and eventually they just get dropped down the chute of "maybe later". Pick a hard deadline, or pick a reason to have a hard deadline. (Sidenote: demoparties work wonders as deadlines.)

Secondly, once you picked a deadline, assess your project's needs: Assuming your project is in some way technically complex, what are the things that you don't have and need to create or acquire for this? If you're doing music, are there plugins or samples you need, or instruments to acquire, or musicians to talk to? If you're painting, do you have the paints and brushes, or is your tablet broken and in need of fixing? If you're making a demo, do you have an engine, can it do what's needed? Think about every single thing you need.

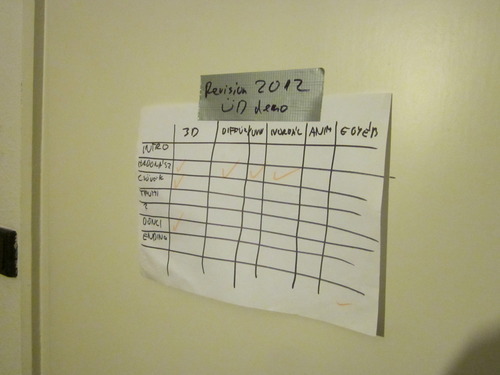

Once that list is done, break down your project into elements, in detail - this is the most crucial part of production. If it's a song, roughly jot out the parts on paper and maybe the list of instruments you want in there. If it's a large 3D scene, write down the elements you want in it in detail. If it's a novel, jot out the chapter titles. If it's a demo, chart out the parts with rough ideas on how long each is and what's in it. If it's a game, map out all the mechanics, characters, levels, cutscenes, and so on. Break everything down to parts, and do it visibly so that if something is missing, you can spot it. Once that's done, take the parts and break them down further: What is needed for each part? Roughly how long is the duration of each part? What are the technical necessities of each part? What are the assets needed for each part? What is the process for creating each part?

There are many reasons why all of this is crucial. Firstly, this will serve as your gameplan for the rest of the production process. Secondly, and more importantly, the more you break a large project down to small parts, the less daunting it will seem: if you embark on a project that can take months to complete, you have to take it on one day at a time, and each day will need to end with some sort of progress. If you have a roadmap that tells you each day what that day needs to get ticked off at the end of it, you will no longer be fighting an endless battle; instead you'll focus on the task at hand, with a bigger roadmap to keep you in check.

This leads us into another important consideration, and one of the more murky ones: time estimates - for each of the broken down tasks, give a good guess as to how long it will take. Then multiply it by at least two, and that'll give you a good idea of the time you will need. See, the first thing you should learn about when producing is contingency: contingency is a multiplier for each task's estimate that adds extra headroom that accounts for anything the estimate doesn't account for. There are many things that can make a task take longer, and not all of them are relevant to the task: you might run into edge-cases that add extra complexity, but on a much more basic human level, you never know when you're going to break a leg, or your cat will get sick, or your apartment gets flooded - anything can happen, and it probably will, so make sure you account for that, and leave yourself plenty of headroom as contingency, and best case scenario you'll just have a more relaxed process.
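As a tiny worked example of the arithmetic, here is the contingency rule in a few lines of Python. The task names and hour figures are invented for illustration, and the 2.0 multiplier is simply the "at least two" suggested above.

```python
def padded_schedule(estimates, contingency=2.0):
    """Multiply every raw estimate (in hours) by the contingency factor."""
    return {task: hours * contingency for task, hours in estimates.items()}

# Hypothetical raw guesses for a small demo project, in hours.
estimates = {"engine cleanup": 6, "scene modelling": 10, "music sync": 4}

padded = padded_schedule(estimates)
total = sum(padded.values())
```

Twenty hours of raw guesses become forty hours of schedule; if the deadline doesn't leave room for forty, it's better to find that out now than in the last week.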

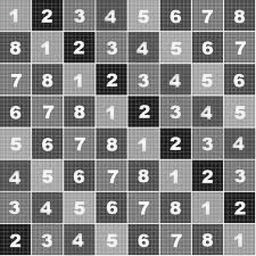

Sometimes, however, even contingency isn't enough, and you'll find yourself running behind schedule. This is why this next step is important: Nail down your scope, and stick to it. There are many things you have listed in your above breakdown, but they all vary in importance, so make sure that next to the time needed, you also note down their importance. As the late great Randy Pausch said in his Time Management talk, it's important you distinguish between things that are important, and things that are urgent: there might be things that are urgent for a project, but ultimately not important, and it is up to your discretion to rank them upfront. [Update: I've recently found out that this is called the "Eisenhower Decision Matrix".] As for ranking, this is up to you; you can do it on a scale of 1 to 10, but I personally prefer what they call the MoSCoW method, where "MoSCoW" stands for "Must", "Should", "Could" and "Would"/"Won't", and they signify how important that specific thing is to the project: "Must"-s are the things that are the bare minimum to get a project off the ground, "Should"-s are important, but not necessary, "Could" are polish, and "Would" are just whatever there's time left for if you're ahead of schedule. Ranking by necessity is very important, because once you start running out of scheduled time, the only option you will have aside from having a project fail is to cut stuff. Ranking by importance also allows you to schedule accordingly, making sure that unimportant polish tasks are moved towards the end of the process instead of wasting time with them at the start.
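The cutting process can be written down almost mechanically. The sketch below, in Python, sorts tasks by their MoSCoW rank and keeps them until the available hours run out; the task list and the greedy "keep whatever still fits" rule are assumptions made for the example, not a prescribed method.

```python
PRIORITY = {"must": 0, "should": 1, "could": 2, "would": 3}

def fit_to_schedule(tasks, hours_available):
    """Keep tasks in priority order while they fit; cut everything else.

    tasks is a list of (name, rank, hours) tuples, with hours already
    including contingency.
    """
    kept, cut, used = [], [], 0.0
    for name, rank, hours in sorted(tasks, key=lambda task: PRIORITY[task[1]]):
        if used + hours <= hours_available:
            kept.append(name)
            used += hours
        else:
            cut.append(name)
    return kept, cut

# Hypothetical task list for a small intro.
tasks = [
    ("polish pass", "could", 10),
    ("engine", "must", 20),
    ("music", "must", 15),
    ("greetings", "should", 5),
    ("easter egg", "would", 8),
]
kept, cut = fit_to_schedule(tasks, hours_available=45)
```

With 45 hours available, the two "must" items and the "should" item survive, and the polish pass and the easter egg get cut. If a "must" item ever lands in the cut list, the problem isn't the ranking, it's the scope or the deadline.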

One thing to note here is that it takes decades of experience to get all of this right, so much like your actual skills of making things, your production skills will get better with time - a good rule of thumb here is to just keep your eye on the ball: decide whether an item on the list fundamentally contributes to the production, or whether it's just something that you think would be cool to do, or something you wanted to do anyway. It's tempting to get sidetracked by excessive detail like splurging on instrument recordings or adding engine features, but focus on the goal: If it doesn't contribute to your end goal, cut it.

I also want to remark that I actually did the same for this article: earlier I wrote down bullet points about what I want to mention and started arranging them into a flow.

Doin' the work

Once you've planned your stuff out, then it's just a matter of discipline of taking off the next item on your list, and working on it until it's done. This is of course both easier and harder than it sounds, but remember: you made this list with full knowledge and intention to follow through on it, so at this point this is your best bet to get to your goal. I usually reinforce my adherence to the plan by putting the physical (printed) schedule in a visible place in front of me somewhere in the room, to keep me focused on the goal; I also mark off items that I'm finished with, because I personally find the physical act of ticking off or crossing out an item very satisfying - it's the little things, really.

The production document for PILEDRIVER

There are a few things that can help with getting into a good workflow: One thing a lot of people underestimate is placeholders - when your final art isn't done, just stick something in there that only vaguely resembles what you want in there, and every day you work on it, not only will it remind you that it needs to be replaced, but on occasion it will reveal problems in the grand picture.

To bring a practical example, both with "Signal Lost" and especially with "PILEDRIVER" I went absolutely crazy with placeholders, essentially building both of those demos twice as what in the 3D industry they'd call "animatics": just rough boxy versions of the scenes with approximate camera/lighting, just to be able to get a feel for the pacing / length of the scenes, the camera speed, and so on. Making an animatic version of the scene and getting it in the engine usually took less than 15 minutes, but with the music done, I was able to immediately get a feel for whether I needed to adjust my initial idea slightly, or perhaps find another camera angle, or break a section up into more parts.

youtube

The PILEDRIVER animatic

I actually went one step further earlier in the process: I knew I wanted the rap part in the middle, but the lyrics I wrote were fairly complex, and I knew Fantom would struggle with some of it (especially in pronunciation), so I decided to take the lyrics and put them through my favourite speech synth, Daniel, and then chop it up so that it resembles the flow I thought sounded best for that particular part. This not only helped with the recording, but also reassured me that the idea would work.

youtube

Against all odds, I managed to coax Daniel out of retirement.

As you can see, my work methodology was often designed around one thing: quick feedback and iteration. I find myself having very clouded visions about art-related things most of the time, so for me it is crucial to be able to try stuff as quickly as I can and fail early, so especially with music, I do what the late Linds Redding coined as The Overnight Test: If something I make late at night doesn't sound great the next morning, I scrap it and start something new, maybe cannibalizing the good bits from the previous iteration. When on a timer, sometimes iteration means not getting stuck on something that would never work.

Note: I'm aware that Mr. Redding used his term in a more somber context later in his life, but I'm merely sticking to its original meaning here.

And speaking of overnight, let me stress one thing: always do nightly snapshots of what you're doing. If you're making a demo, build the whole demo every night and watch it, maybe run it on your work computer the next day during lunch. If you're making a song, render it out and put it on your portable player and listen to it on the bus the next day. Not only will you internalize the work and make a list of issues to fix over the course of the day, but it assures the integrity of the technical side of the project - I've watched way too many demogroups, as recently as last month, make an awesome prod that just doesn't compile or crashes before the deadline because they were too busy working on content and never tested the actual executable. If you do this every night, or maybe even every few nights (but always at least once a week), you're more likely to realize that your compile config hasn't been updated for a while and you really should do that before you're 5 hours before the deadline and you're drunk.

Sidenote: I'm aware that this advice is perhaps too specific to programmers, but it's probably worth mentioning, and I can imagine variations for other artforms as well, like importing/exporting problems with 3D.

Pushing on

So this is the bit in the text where we might be veering close to a pretend version of popular psychology and talking about subjective things like motivation and dedication, so I'd like to make a disclaimer that I've never formally studied these things, and you should not take any of this as gospel or as scientific on any level; these are just things I noticed about myself, and I tried some simple methods to counter them, and they (mostly) worked fine.

One of the big things I've mentioned earlier is the daunting vision of the road ahead; with large projects, it's incredibly demoralizing to just sit at the start and be crushed by the amount of work that needs to be done, especially if you're doing something where it takes a large amount of work just to be able to have something basic; with music it perhaps only takes a ~10 second loop to feel like you're on the right track, with a full demo, the aforementioned placeholders do a great job, but with e.g. writing a larger engine, it can take weeks before you get to the point where you have anything on the screen simply because of all the modules you need to work on first. My mitigation for this was what I call the skeleton method: What's the least amount of work I need to do to get anything on screen? For that, what's the least amount of work I need to do to get something to just run? All of this ends up in the pre-production document as well, so when I start working, I can relatively quickly get to a stage where it's just a blank window, but it's built on an engine skeleton where replacing things is straightforward once I reach that point in the process. I myself noticed that my work morale improves once I'm able to send screenshots or chunks of the thing I'm working on to people, so I try to get there as fast as I can.

Another thing I noticed about my working style is that even with manageable work chunks I have problems getting started simply because it takes a while to get into "the groove", so a little technique I've been using is that when I'm about to wrap up for the day, I make sure there's a glaring problem with what I just did, like an obvious bug or mix error that I could fix in a few minutes - and then I leave it like that until the next day. That way, the next day I'm able to jump in and start with something easy.

The final thing - which I kinda hesitate to mention because we're already way too deep into motivational speech territory for my taste - that I found extremely useful is regimen: making sure that there's a period of time either daily or weekly that I get to work on the project. Perhaps the ultimate guru of regimen is the great Beeple, who has been releasing a piece of art every day for more than 10 years now, and has given expletive-laden talks on the subject of why regimen is important. Now, I think we can safely consider him an edge-case with 10 years of everydays, but at the same time, there's value in doing something in a regular manner, and there's also a consideration of the amount of work you need to do versus the amount of time you have.

One important aspect of all of this is that while guilt can be a good force of motivation, you shouldn't beat yourself up if something doesn't work out. Failures are a part of life, and they're an even larger part of any artistic or technical process, and you should accept that sometimes best laid plans can go absolutely up in flames because of a variety of reasons - like, say, because there's a global pandemic going on, and that the existential pressure of it takes away your motivation to do things. All of that is okay. As Eisenhower once (supposedly) said, "Plans are worthless, but planning is invaluable."

In summary

I'm mentioning that failing is always part of the process, because I've been listing a lot of rules and constraints and limitations on what you should and shouldn't do, and I must again emphasize that all of this is meant as advice and observations with regards to spare-time activity, and that how much of this you use will depend on how badly you want one thing over the other: Managing spare time can require a delicate balance especially if you have a healthy social life, and if you do, you have to decide how much to work on retaining that versus how much you work on your passion project, and in my eyes this ultimately all leads back to pre-production: if you know you can't spend time on something every day and the deadline is right around the corner, rein in your scope early so that you can deliver rather than giving up halfway, because no unfinished project is as impressive as a finished one.


A breakdown of the Revision 2020 Threeway Battle shader

Those of you who have been following this year's edition of Revision probably remember the unexpected twist in Sunday's timeline, where I was pitted in a coding "battle" against two of the best shader-coders in the world to fend for myself. Admittedly the buzz it caused caught me by surprise, but not as much as the feedback on the final shader I produced, so I hope to shed some light on how the shader works, in a way that's hopefully understandable to beginners and at least entertaining to experts, as well as providing some glimpses into my thought process along the way.

youtube

Recorded video of the event

But before we dive into the math and code, I think it's important to get some context by recounting the story of how we got here.

A brief history of demoscene live-coding

Visual coding was massively opened up when graphics APIs began to introduce programmable fragment rendering, perhaps best known to most people as "pixel shaders"; this allowed programmers to run entire programmable functions on each pixel of a triangle, and none was more adamant to do that than a fellow named Iñigo Quilez (IQ), an understated genius who early on recognized the opportunity in covering the entire screen with a single polygon, and just doing the heavy lifting of creating geometry in the shader itself. His vision eventually spiraled into not only the modern 4k scene, but also the website ShaderToy, which almost every graphics programmer uses to test prototypes or just play around with algorithms. IQ, an old friend of mine since the mid-00s, eventually moved to the US, worked at Pixar and Oculus, and became something of a world-revered guru of computer graphics, but that (and life) has unfortunately caused him to shift away from the scene.

His vision of single-shader-single-quad-single-pass shader coding, in the meantime, created a very spectacular kind of live coding competition in the scene where two coders get only 25 minutes and the attention of an entire party hall, and they have to improvise their way out of the duel - this has been wildly successful at parties for the sheer showmanship and spectacle akin to rap battles, and none emerged from this little sport more remarkably than Flopine, a bubbly French girl who routinely shuffled up on stage wearing round spectacles and cat ears (actually they might be pony ears on second thought), and mopped the floor with the competition. She and a handful of other live-coders regularly stream on Twitch as practice, and have honed their live-coding craft for a few years at this point, garnering a considerable following.

youtube

Just a sample of insanity these people can do.

My contribution to this little sub-scene was coming up with a fancy name for it ("Shader Showdown"), as well as providing a little tool I called Bonzomatic (named after Bonzaj / Plastic, a mutual friend of IQ and myself, and the first person to create a live coding environment for demoparties) that I still maintain, but even though I feel a degree of involvement through the architectural side, I myself haven't been interested in participating: I know I can do okay under time pressure, but I don't really enjoy it, and while there's a certain overlap in what they do and what I do, I was always more interested in things like visual detail and representative geometry aided by editing and direction rather than looping abstract, fractal-like things. It just wasn't my thing.

Mistakes were made

But if I'm not attracted to this type of competition, how did I end up in the crossfire anyway? What I can't say is that it wasn't, to a considerable degree, my fault: as Revision 2020 was entirely online, most of the scene took it upon themselves to sit in the demoscene Discord to get an experience closest to on-site socializing, given the somber circumstances of physical distancing. This also allowed a number of people who hadn't been around for a while to pop in to chat - like IQ, who, given his past, was mostly interested in the showdowns (during which Flopine crushed the competition) and the 4k compo.

As I hadn't seen him around for a while, and as my mind is always looking for an angle, I somehow put two and two together, and asked him if he would consider taking part in a showdown at some point; he replied that he was up for it - this was around Saturday 10PM. I quickly pinged the rest of the showdown participants and organizers, as I spotted that Bullet was doing a DJ set the next day (which would've been in a relatively convenient timezone for IQ in California as well), and assumed that he didn't really have visuals for it - as there was already a "coding jam" over Ronny's set the day before, I figured there was a chance of squeezing in an "extra round" of coding. Flopine was, of course, beyond excited by just the prospect of going against IQ, and by midnight we essentially got everything planned out (Bullet's consent notwithstanding, as he was completely out of the loop on this), and I was excited to watch...

...that is, until Havoc, the head honcho for the showdowns, off-handedly asked me about an at that point entirely hypothetical scenario: what would happen if IQ would, for some reason, challenge me instead of Flopine? Now, as said, I wasn't really into this, but being one to not let a good plan go to waste (especially if it was mine), I told Havoc I'd take one for the team and do it, although it probably wouldn't be very fun to watch. I then proceeded to quickly brief IQ in private and run him through the technicalities of the setup, the tool, the traditions and so on, and all is swell...

...that is, until IQ (this is at around 2AM) offhandedly mentions that "Havoc suggested we do a three-way with me, Flopine... and you." I quickly try to backpedal, but IQ seems to be into the idea, and worst of all, I've already essentially agreed to it, and to me, the only thing worse than being whipped in front of a few thousand people would be going back on your word. The only way out was through.

Weeks of coding can spare you hours of thinking

So now that I've got myself into this jar of pickles, I needed some ideas, and quick. (I didn't sleep much that night.) First off, I didn't want to do anything obviously 3D - both IQ and Flopine are masters of this, and I find it exhausting and frustrating, and it would've failed on every level possible. Fractals I'm awful at and while they do provide a decent amount of visual detail, they need a lot of practice and routine to get right. I also didn't want something very basic 2D, like a byte-beat, because those have a very limited degree of variation available, and the end result always looks a bit crude.

Luckily, an article I saw doing the rounds a few months ago was a write-up by Sasha Martinsen on how to do "FUI"-s, or Fictional User Interfaces; overly complicated and abstract user interfaces that are prominent in sci-fi, with Gmunk being the Michael Jordan of the genre.

Image courtesy of Sasha Martinsen.

Sasha's idea is simple: make a few basic decent looking elements, and then just pile them on top of each other until it looks nice, maybe choose some careful colors, move them around a bit, place them around tastefully in 3D, et voilà, you're hacking the Gibson. It's something I attempted before, if somewhat unsuccessfully, in "Reboot", but I came back to it a few more times in my little private motion graphics experiments with much better results, and my prediction was that it would be doable in the given timeframe - or at least I hoped that my hazy 3AM brain was on the right track.

A bit of math

How to make this whole thing work? First, let's think about our rendering: We have a single rectangle and a single-pass shader that runs on it: this means no meshes, no geometry, no custom textures, no postprocessing, no particle systems and no fonts, which isn't a good place to start from. However, looking at some of Sasha's 3D GIFs, some of them look like they're variations of the same render put on planes one after the other - and as long as we can do one, we can do multiple of that.

Rough sketch of what we want to do; the planes would obviously be infinite in size but this representation is good enough for now.

Can we render multiple planes via a single shader? Sure, but we want them to look nice, and that requires a bit of thinking: The most common technique to render a "2D" shader and get a "3D" look is raymarching, specifically with signed distance fields - starting on a ray, and continually testing distances until a hit is found. This is a good method for "solid-ish" looking objects and scenes, but the idea for us is to have many infinite planes that also have some sort of alpha channel, so we'd have a big problem with 1) inaccuracy, as we'd never find a hit, just something "reasonably close", and even that would take us a few dozen steps, which is costly even for a single plane and 2) the handling of an alpha map can be really annoying, since we'd only find out our alpha value after our initial march, after which if our alpha is transparent we'd need to march again.

But wait - it's just infinite planes and a ray, right? So why don't we just assume that our ray is always hitting the plane (which it is, since we're looking at it), and just calculate an intersection the analytical way?

Note: I would normally refer to this method as "raytracing", but after some consultation with people smarter than I am, we concluded that the terms are used somewhat ambiguously, so let's just stick to "analytical ray solving" or something equally pedantic.

We know the mathematical equation for a ray is position = origin + direction * t (where t is a scalar that represents the distance/progress from the ray origin), and we know that the formula for a plane is A * x + B * y + C * z = D, where (A, B, C) is the normal vector of the plane, and D is the distance from the origin. First, since the intersection will be the point in space that satisfies both equations, we substitute the ray (the above o + d * t for each axis) into the plane:

A * (ox + dx * t) + B * (oy + dy * t) + C * (oz + dz * t) = D

To find out where this point is in space, we need to solve this for t, but it's currently mighty complicated. Luckily, since we assume that our planes are parallel to the X-Y plane, we know our (A, B, C) normal is (0, 0, 1), so we can simplify it down to:

oz + dz * t = D

Which we can easily solve for t:

t = (D - oz) / dz

That's right: analytically finding a ray hit of a plane is literally a single subtraction and a division! Our frame rate (on this part) should be safe, and we're always guaranteed a hit as long as we're not looking completely parallel to the planes (i.e. as long as dz isn't zero); we should have everything to start setting up our code.
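A quick way to sanity-check the result is to evaluate it on the CPU. The sketch below is Python rather than shader code, and the function name `intersect_z_plane` is mine; it assumes a plane parallel to X-Y sitting at z = D, which is how the shader code uses the formula.

```python
def intersect_z_plane(origin, direction, plane_z):
    """Return t where origin + direction * t hits the plane z = plane_z.

    Returns None when the ray runs parallel to the plane (direction z is 0).
    """
    ox, oy, oz = origin
    dx, dy, dz = direction
    if dz == 0.0:
        return None
    return (plane_z - oz) / dz


origin = (0.0, 0.0, 0.0)
direction = (0.5, 0.25, -1.0)  # looking down the negative Z axis
t = intersect_z_plane(origin, direction, plane_z=-5.0)
hit = tuple(o + d * t for o, d in zip(origin, direction))
print(t, hit)
```

The Z component of the hit lands exactly on the plane, and a negative t would mean the plane is behind the ray origin.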

Full disclosure: Given my (and in a way IQ's) lack of "live coding" experience, we agreed that there would be no voting for the round, and it'd be for glory only, but also that I'd be allowed to use a small cheat sheet of math like the equations for 2D rotation or e.g. the above final equation since I don't do this often enough to remember these things by heart, and I only had a few hours notice before the whole thing.

Setting up the rendering

Time to start coding then. First, let's calculate our texture coordinates in the 0..1 domain using the screen coordinates and the known backbuffer resolution (which is provided to us in Bonzomatic):

vec2 uv = vec2(gl_FragCoord.x / v2Resolution.x, gl_FragCoord.y / v2Resolution.y);

Then, let's create a ray from that:

vec3 rayDir = vec3( uv * 2 - 1, -1.0 );
rayDir.x *= v2Resolution.x / v2Resolution.y; // adjust for aspect ratio
vec3 rayOrigin = vec3( 0, 0, 0 );

This creates a 3D vector for our direction that is -1,-1,-1 in the bottom left corner and 1,1,-1 in the top right (gl_FragCoord starts in the bottom left; we're looking so that Z is decreasing into the screen), then we adjust the X coordinate since our screen isn't square, but our coordinates currently are - no need to even bother with normalizing, it'll be fine. Our origin is currently just sitting in the center.
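For anyone who wants to poke at the numbers, here is the same mapping as a CPU-side Python sketch. The function name `ray_direction` and the 1280x720 resolution are assumptions for the example, not something Bonzomatic provides.

```python
def ray_direction(frag_x, frag_y, width, height):
    """Map a pixel coordinate to an unnormalized view ray looking down -Z."""
    u = frag_x / width    # 0..1 across the screen
    v = frag_y / height   # 0..1 along the other axis
    x = (u * 2.0 - 1.0) * (width / height)  # stretched by the aspect ratio
    y = v * 2.0 - 1.0                        # -1..1
    return (x, y, -1.0)


center = ray_direction(640, 360, 1280, 720)
corner = ray_direction(0, 0, 1280, 720)
print(center)
print(corner)
```

The center pixel looks straight down the Z axis, and the X range is wider than the Y range by exactly the aspect ratio, which is what keeps circles round.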

Then, let's define (loosely) our plane, which is parallel to the XY plane:

float planeDist = 1.0f; // distance between each plane
float planeZ = -5.0f; // Z position of the first plane

And solve our equation to t, as math'd out above:

float t = (planeZ - rayOrigin.z) / rayDir.z;

Then, calculate WHERE the hit is by inserting that t back into the original ray equation using our current direction and origin:

vec3 hitPos = rayOrigin + t * rayDir;

And now we have our intersection; since we already know the Z value, we can texture our plane by using the X and Y components to get a color value:

vec4 color = fui( hitPos.xy ); // XY plane
out_color = color;

Of course we're gonna need the actual FUI function, which will be our procedural animated FUI texture, but let's just put something dummy there now, like a simple circle:

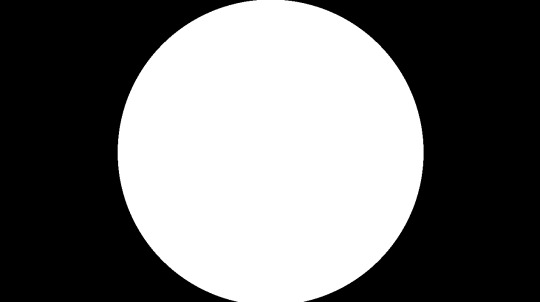

vec4 fui ( vec2 uv ) { return length(uv - 0.5) < 0.5 ? vec4(1) : vec4(0); }

And here we go:

Very good, we have a single circle and if we animate the camera we can indeed tell that it is on a plane.

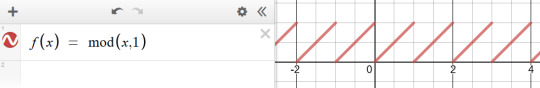

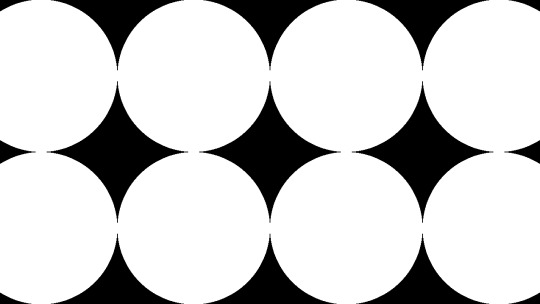

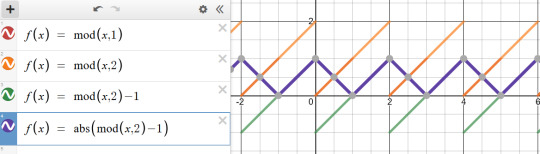

So first, let's tile it by using a modulo function; the modulo (or modulus) function simply wraps a number around another number (kinda like the remainder after a division, but for floating point numbers) and thus becomes extremely useful for tiling or repeating things:

We'll be using the modulo function rather extensively in this little exercise, so strap in. (Illustration via the Desmos calculator.)

vec4 layer = fui( mod( hitPos.xy, 1.0 ) );

This will wrap the texture coordinates of -inf..inf between 0..1:
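The wrapping is easy to check with a few sample values. This is a Python sketch of the GLSL definition, `x - y * floor(x / y)`; the helper name `glsl_mod` is mine. Note that this is not the same as C's `fmod`, which keeps the sign of the left operand for negative inputs, so negative coordinates are the interesting case.

```python
import math


def glsl_mod(x, y):
    """GLSL-style mod: x - y * floor(x / y), wraps into 0..y for y > 0."""
    return x - y * math.floor(x / y)


for x in (-1.25, -0.25, 0.25, 1.25, 2.75):
    print(x, "->", glsl_mod(x, 1.0))
```

Every input ends up between 0 and 1, including the negative ones, so the texture repeats seamlessly on both sides of the origin.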

We also need multiple planes, but how do we combine them? We could just blend them additively, but with the amount of content we have, we'd just burn them in to white and it'd look like a mess (and not the good kind of mess). We could instead just use normal "crossfade" / "lerp" blending based on the alpha value; the only trick here is to make sure we're rendering them from back to front since the front renders will blend over the back renders:

int steps = 10;
float planeDist = 1.0f;
vec4 colour = vec4(0); // accumulated result
for (int i=steps; i>=0; i--)
{
  float planeZ = -1.0f * i * planeDist;
  float t = (planeZ - rayOrigin.z) / rayDir.z;
  if (t > 0.0f) // check if "t" is in front of us
  {
    vec3 hitPos = rayOrigin + t * rayDir;
    vec4 layer = fui( mod( hitPos.xy, 2.0 ) );
    // blend layers based on alpha output
    colour = mix( colour, layer, layer.a );
  }
}

And here we go:

We decreased the circles a bit in size to see the effect more.
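The back-to-front blend is worth seeing with actual numbers. Below is a small Python sketch with three made-up RGBA layers; `mix` is written to mirror the GLSL built-in of the same name, everything else is hypothetical.

```python
def mix(a, b, t):
    """Component-wise linear blend, like GLSL mix(): a * (1 - t) + b * t."""
    return tuple(x * (1.0 - t) + y * t for x, y in zip(a, b))


# Hypothetical RGBA layers, listed from the farthest plane to the nearest.
layers = [
    (1.0, 0.0, 0.0, 1.0),  # far: opaque red
    (0.0, 0.0, 0.0, 0.0),  # middle: fully transparent at this pixel
    (0.0, 0.0, 1.0, 0.5),  # near: half-transparent blue
]

colour = (0.0, 0.0, 0.0, 0.0)
for layer in layers:  # back to front
    colour = mix(colour, layer, layer[3])

print(colour)
```

The transparent middle layer leaves the red untouched, and the half-transparent blue in front only covers it halfway; reversing the order would let the opaque red wipe out everything else.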

Not bad! First thing we can do is just fade off the back layers, as if they were in a fog:

layer *= (steps - i) / float(steps);

We have a problem though: we should probably increase the sci-fi effect by moving the camera continually forward, but since our planeZ is currently fixed to the 0.0 origin, the planes won't move with the camera. We could just add our camera Z to them, but then they would be fixed to the camera and wouldn't appear to be moving. What we instead want is to just render them AS IF they were the closest 10 planes in front of the camera; the way we could do that is that if e.g. our planes' distance from each other is 5, then we round the camera Z down to the nearest multiple of 5 (e.g. if the Z is at 13, we round down to 10), and start drawing from there; rounding up would be more accurate, but rounding down is easier, since we can just subtract the division remainder from Z like so:

float planeZ = (rayOrigin.z - mod(rayOrigin.z, planeDist)) - i * planeDist;

And now we have movement! Our basic rendering path is done.
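The snapping can be verified with the numbers from the example (spacing 5, camera at 13). This is a Python sketch; `plane_z` and `glsl_mod` are names I made up for it, with `glsl_mod` following the GLSL definition of `mod`.

```python
import math


def glsl_mod(x, y):
    """GLSL-style mod: x - y * floor(x / y)."""
    return x - y * math.floor(x / y)


def plane_z(camera_z, plane_dist, i):
    """Z of the i-th plane, counted from the grid line the camera snapped to."""
    snapped = camera_z - glsl_mod(camera_z, plane_dist)
    return snapped - i * plane_dist


# Spacing 5, camera at 13: the first plane snaps down to 10.
print([plane_z(13.0, 5.0, i) for i in range(3)])
# Moving the camera within the same cell leaves the planes where they are.
print([plane_z(11.0, 5.0, i) for i in range(3)])
```

Because the planes only jump when the camera crosses a grid line, and they jump by exactly one spacing, the set of visible planes looks continuous while the camera glides past them.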

Our little fictional UI

So now that we have the basic pipeline in place, let's see which elements we can adapt from Sasha's design pieces.

The first one I decided to go with wasn't strictly speaking in the set, but it was something that I saw used as design elements over the last two decades, and that's a thick hatch pattern element; I think it's often used because it has a nice industrial feel with it. Doing it in 2D is easy: We just add X and Y together, which will result in a diagonal gradient, and then we just turn that into an alternating pattern using, again, the modulo. All we need to do is limit it between two strips, and we have a perfectly functional "Police Line Do Not Cross" simulation.

return mod( uv.x + uv.y, 1 ) < 0.5 ? vec4(1) : vec4(0);

So let's stop here for a few moments; this isn't bad, but we're gonna need a few things. First, the repetition doesn't give us the nice symmetric look that Sasha recommends, and secondly, we want the elements to look alive, to animate a bit.

Solving symmetry can be done just by modifying our repetition code a bit: instead of a straight up modulo with 1.0 that gives us a 0..1 range, let's use 2.0 to get a 0..2 range, then subtract 1.0 to get a -1..1 range, and then take the absolute value.

vec4 layer = fui( abs( mod( hitPos.xy, 2.0 ) - 1 ) );

This will give us a triangle-wave-like function, that goes from 1 to 0, then back to 1, then back to 0; in terms of texture coordinates, it will go back and forth between mirroring the texture in both directions, which, let's face it, looks Totally Sweet.
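Here is the mirrored repeat evaluated at a few points, as a Python sketch of the same `abs(mod(x, 2) - 1)` expression; the function name `mirror_tile` is mine.

```python
import math


def mirror_tile(x):
    """abs(mod(x, 2) - 1): a triangle wave that bounces between 0 and 1."""
    wrapped = x - 2.0 * math.floor(x / 2.0)  # GLSL-style mod(x, 2.0)
    return abs(wrapped - 1.0)


for x in (0.0, 0.5, 1.0, 1.5, 2.0, 2.5):
    print(x, "->", mirror_tile(x))
```

The value at 0.5 and at 1.5 is the same, which is the mirroring: the texture is read forwards in one tile and backwards in the next.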

For animation, first I needed some sort of random value, but one that stayed deterministic based on a seed - in other words, I needed a function that took in a value, and returned a mangled version of it, but in a way that if I sent that value in twice, it would return the same mangled value twice. The most common way of doing it is taking the incoming "seed" value, and then driving it into some sort of function with a very large value that causes the function to alias, and then just returning the fraction portion of the number:

float rand(float x) { return fract(sin(x) * 430147.8193); }

Does it make any sense? No. Is it secure? No. Will it serve our purpose perfectly? Oh yes.
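The "same seed in, same mangled value out" property is the only thing we rely on, and it can be checked on the CPU. This is a Python sketch using the constant from the shader; since Python computes in double precision, the actual values will not match what a GPU produces with 32-bit floats.

```python
import math


def fract(x):
    """Fractional part, like GLSL fract(): x - floor(x)."""
    return x - math.floor(x)


def rand(x):
    """Hash-style pseudo-random value in the 0..1 range derived from a seed."""
    return fract(math.sin(x) * 430147.8193)


print(rand(1.0), rand(1.0))  # same seed, same value
print(rand(2.0))             # different seed, unrelated-looking value
```

There is no state anywhere, which is exactly what a shader needs: every pixel can ask for the value of seed 7 independently and they will all agree.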

So how do we animate our layers? The obvious choice is animating both the hatch "gradient" value to make it crawl, and the start and end of our hatch pattern which causes the hatched strip to move up and down: simply take a random - seeded by our time value - of somewhere sensible (like between 0.2 and 0.8 so that it doesn't touch the edges) and add another random to it, seasoned to taste - we can even take a binary random to pick between horizontal and vertical strips:

The problems here are, of course, that currently they're moving 1) way too fast and 2) in unison. The fast motion obviously happens because the time value changes every frame, so it seeds our random differently every frame - this is easy to solve by just rounding our time value down to the nearest integer: this will result in some lovely jittery "digital" motion. The unison is also easy to solve: simply take the number of the layer, and add it to our time, thus shifting the time value for each layer; I also chose to multiply the layer ID with a random-ish number so that the layers actually animate independently, and the stutter doesn't happen in unison either:

vec4 fui( vec2 uv, float t )
{
  t = int(t);
  float start = rand(t) * 0.8 + 0.1;
  float end = start + 0.1;
  [...]
}

vec4 layer = fui( abs(mod(hitPos.xy, 2.0)-1), fGlobalTime + i * 4.7 );

Lovely!

Note: In hindsight using the Z coordinate of the plane would've given a more consistent result, but the way it animates, it doesn't really matter.
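The time-stepping trick can be tried outside the shader too. In this Python sketch, `layer_seed` is a made-up helper; the 4.7 offset is the one from the shader call, and `math.floor` stands in for the `int()` truncation (the two agree for positive time values).

```python
import math


def layer_seed(global_time, layer_index, offset=4.7):
    """Whole-number seed that only changes when the layer's shifted clock ticks."""
    return math.floor(global_time + layer_index * offset)


# Within one second a layer keeps its seed, so its animation holds still...
print(layer_seed(10.2, 0), layer_seed(10.9, 0))
# ...and different layers get different seeds at the same moment.
print([layer_seed(10.2, i) for i in range(4)])
```

Because 4.7 is not a whole number, each layer's clock ticks over at a different moment within the second, which is what breaks up the unison stutter.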

So let's think of more elements: the best looking one that seems to get the best mileage out in Sasha's blog is what I can best describe as the "slant" or "hockey stick" - a simple line, with a 45-degree turn in it. What I love about it is that the symmetry allows it to create little tunnels, gates, corridors, which will work great for our motion.

Creating it is easy: We just take a thin horizontal rectangle, and attach another rectangle to the end, but shift the coordinate of the second rectangle vertically, so that it gives us the 45-degree angle:

float p1 = 0.2;
float p2 = 0.5;
float p3 = 0.7;
float y = 0.5;
float thicc = 0.0025;
if (p1 < uv.x && uv.x < p2 && y - thicc < uv.y && uv.y < y + thicc )
{
  return vec4(1);
}
if (p2 < uv.x && uv.x < p3 && y - thicc < uv.y - (uv.x - p2) && uv.y - (uv.x - p2) < y + thicc )
{
  return vec4(1);
}

Note: In the final code, I had a rect() call which I originally intended to use as baking glow around my rectangle using a little routine I prototyped out earlier that morning, but I was ultimately too stressed to properly pull that off. Also, it's amazing how juvenile your variable names turn when people are watching.
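To see why shifting the Y coordinate produces the 45-degree bend, here is the same test as a Python sketch that can be probed point by point. The function name `slant` is mine and `thickness` is the grown-up name for the shader's variable; the default values are the ones from the shader code.

```python
def slant(u, v, p1=0.2, p2=0.5, p3=0.7, y=0.5, thickness=0.0025):
    """Return True if (u, v) lies on the flat segment or on the 45-degree one."""
    # Flat part: a thin horizontal rectangle between p1 and p2.
    if p1 < u < p2 and y - thickness < v < y + thickness:
        return True
    # Slanted part: same rectangle test, but v is shifted by the distance past p2.
    sheared_v = v - (u - p2)
    if p2 < u < p3 and y - thickness < sheared_v < y + thickness:
        return True
    return False


print(slant(0.3, 0.5))  # on the flat part
print(slant(0.6, 0.6))  # on the slanted part: 0.1 past p2, 0.1 higher
print(slant(0.6, 0.5))  # underneath the slanted part
```

Subtracting `u - p2` from `v` means that for every unit travelled past the bend, the line has to sit one unit higher to pass the same rectangle test, which is a slope of exactly 1.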

Looks nice, but since this is such a thin sparse element, let's just... add more of it!

So what more can we add? Well, no sci-fi FUI is complete without random text and numbers, but we don't really have a font at hand. Or do we? For years, Bonzomatic has been "shipping" with this really gross checkerboard texture ostensibly for UV map testing:

What if we just desaturate and invert it?

We can then "slice" it up and render little sprites all over our texture: we already know how to draw a rectangle, so all we need is just 1) calculate which sprite we want to show 2) calculate the texture coordinate WITHIN that sprite and 3) sample the texture:

float sx = 0.3;
float sy = 0.3;
float size = 0.1;
if (sx < uv.x && uv.x < sx + size && sy < uv.y && uv.y < sy + size)
{
  float spx = 2.0 / 8.0; // we have 8 tiles in the texture
  float spy = 3.0 / 8.0;
  vec2 spriteUV = (uv - vec2(sx,sy)) / size;
  vec4 sam = texture( texChecker, vec2(spx,spy) + spriteUV / 8.0 );
  return vec4( dot( sam.rgb, vec3(0.33) ) ); // wrap the grey value, since fui() returns a vec4
}

Note: In the final code, I was only using the red component instead of desaturation because I forgot the texture doesn't always have red content - I stared at it for waaaay too long during the round trying to figure out why some sprites weren't working.
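The coordinate juggling for the sprites is the fiddly part, so here it is in isolation as a Python sketch. The function name `sprite_uv` and its parameter names are mine; the default numbers are the ones from the shader code, and the texture lookup itself is left out.

```python
def sprite_uv(u, v, sx=0.3, sy=0.3, size=0.1, tile_x=2, tile_y=3, tiles=8):
    """Return atlas coordinates for (u, v), or None outside the sprite rectangle."""
    if not (sx < u < sx + size and sy < v < sy + size):
        return None
    # Position inside the sprite, 0..1 on both axes.
    local_u = (u - sx) / size
    local_v = (v - sy) / size
    # Offset to the chosen tile, plus the local position scaled down to one tile.
    return (tile_x / tiles + local_u / tiles,
            tile_y / tiles + local_v / tiles)


print(sprite_uv(0.35, 0.35))  # middle of the sprite
print(sprite_uv(0.9, 0.9))    # outside the rectangle
```

The middle of the on-screen rectangle maps to the middle of tile (2, 3) in the 8x8 atlas; picking a different sprite is only a matter of changing the two tile indices.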

And again, let's just have more of it:

Getting there!

At this point the last thing I added was just circles and dots, because I was running out of ideas; but I also felt the amount of visual content was getting to where I wanted it to be; it was also time to make it look a bit prettier.

Post-production / compositing

So we have our layers, they move, they might even have colors, but I'm still not happy with the visual result, since they are too single-colored, there's not enough tone in the picture.

The first thing I try nowadays when I'm on a black background is to just add either a single color, or a gradient:

    vec4 colour = renderPlanes(uv);
    vec4 gradient = mix( vec4(0,0,0.2,1), vec4(0,0,0,1), uv.y);
    vec4 finalRender = mix( gradient, vec4(colour.xyz,1), colour.a);
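To make the two mix() calls concrete, here is a small Python sketch of the same compositing arithmetic, assuming mix(a, b, t) behaves as the usual linear interpolation a*(1-t) + b*t. The helper names are illustrative only.

```python
def mix(a, b, t):
    """Linear interpolation per component, like GLSL mix()."""
    return tuple(x * (1.0 - t) + y * t for x, y in zip(a, b))


def composite(colour, uv_y):
    """Lay an RGBA layer over a dark blue to black vertical gradient."""
    gradient = mix((0.0, 0.0, 0.2, 1.0), (0.0, 0.0, 0.0, 1.0), uv_y)
    r, g, b, a = colour
    # alpha decides how much of the layer covers the gradient
    return mix(gradient, (r, g, b, 1.0), a)
```

A fully transparent pixel comes out as pure gradient, and a fully opaque one comes out as the layer colour, so the gradient only fills in where the planes left the background empty.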

This added a good chunk of depth to the image, but I was still not happy with how much separation there was between the colors.

A very common method used in compositing in digital graphics is to just add bloom / glow; when used right, this helps us add more luminance content to areas that would otherwise be solid color, and it helps the colors to blend a bit by providing some middle ground; unfortunately if we only have a single pass, the only way to get blur (and by extension, bloom) is repeatedly rendering the picture, and that'd tank our frame rate quickly.

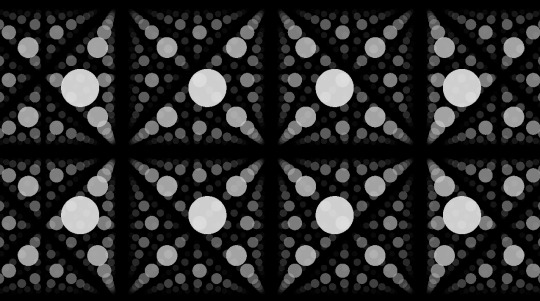

Instead, I went back to one of the classics: the Variform "pixelize" overlay:

This is almost the same as a bloom effect, except instead of blurring the image, all you do is turn it into a lower resolution nearest point sampled version of itself, and blend that over the original image - since this doesn't need more than one sample per pixel (as we can reproduce pixelation by just messing with the texture coordinates), we can get away with rendering the scene only twice:

    vec4 colour = renderPlanes(uv);
    colour += renderPlanes(uv - mod( uv, 0.1 ) ) * 0.4;
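The trick in that second line is that subtracting mod(uv, 0.1) snaps every coordinate to the corner of its 0.1-sized cell, so all pixels in a cell sample the same point. A rough Python sketch of the idea, with `render` standing in for renderPlanes() and all names being placeholders:

```python
def snap(uv, cell=0.1):
    """Snap a coordinate to the lower corner of its cell, like uv - mod(uv, cell)."""
    return tuple(c - (c % cell) for c in uv)


def pixelize_overlay(render, uv, cell=0.1, strength=0.4):
    """Add a blocky, low-resolution copy of the scene on top of the sharp one."""
    sharp = render(uv)
    blocky = render(snap(uv, cell))
    return tuple(s + b * strength for s, b in zip(sharp, blocky))
```

Two coordinates such as 0.31 and 0.37 both snap to roughly 0.3, which is what produces the large flat blocks; the cost is exactly one extra call to the scene function per pixel.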

Much better tonal content!

So what else can we do? Well, most of the colors I chose are in the blue/orange/red range, and we don't get a lot of the green content; one of the things I learned is that it can look quite pretty if one takes a two-tone picture, and uses color-grading to push the midrange of a third tone - that way, the dominant colors will stay in the highlights, and the third tone will cover the mid-tones. (Naturally you have to be careful with this.)

"Boosting" a color in the mids is easy: lucky for us, if we consider the 0..1 range, power functions (a value raised to a constant exponent) suit our purpose perfectly, because they start at 0, end at 1, but we can change how they get there:

So let's just push the green channel a tiny bit:

finalRender.g = pow(finalRender.g, 0.7);
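A quick way to convince yourself of what this does is to evaluate the curve at a few points. The Python sketch below mirrors the pow() call; `boost_mids` is just an illustrative name.

```python
def boost_mids(value, exponent=0.7):
    """Raise a 0..1 value to a power; exponents below 1 lift the midtones."""
    return value ** exponent


# the endpoints stay where they are, only the middle of the range moves up
samples = [boost_mids(v) for v in (0.0, 0.25, 0.5, 0.75, 1.0)]
```

Black stays black and full green stays full green, while a mid value of 0.5 ends up at roughly 0.62, which is why the green shows up in the mid-tones without washing out the highlights.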

Now all we need is to roll our camera for maximum cyberspace effect and we're done!

Best laid plans of OBS

As you can see from the code I posted above, I wrote the final shader in GLSL; those who know me know that I'm a lot more comfortable with DirectX / HLSL, and may wonder why I switched, but of course there's another story here:

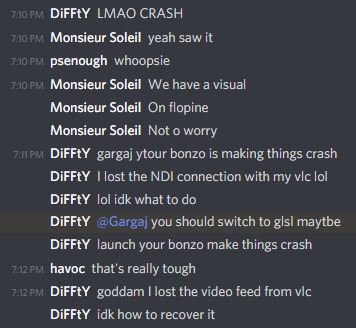

Given the remote nature of the event, all of the shader coding competition was performed online as well: since transmitting video from the coder's computer to a mixer, and then to another mixer, and then to a streaming provider, and then to the end user would've probably turned the image to mush, Alkama and Nusan came up with the idea of skipping a step and rigging up a version of Bonzo that ran on the coder's computer, but instead of streaming video, it sent the shader down to another instance of Bonzo, running on Diffty's computer, who then captured that instance and streamed it to the main Revision streaming hub. This, of course, meant that in a three-way, Diffty had to run three separate instances of Bonzo - but it worked fine with GLSL earlier, so why worry?

What we didn't necessarily realize at the time is that the DirectX 11 shader compiler takes no hostages, and as soon as the shader reached an un-unrollable level of complexity, it thoroughly locked down Diffty's machine, to the point that even the video of the DJ set he was playing started to drop out. I, on the other hand, didn't notice any of this, since my single local instance was doing fine, so I spent the first 15 minutes casually nuking Diffty's PC to shreds remotely, until I noticed Diffty and Havoc pleading on Discord to switch to GLSL because I was unknowingly setting things on fire.

This is fine.

I was reluctant to do so, simply because of the muscle memory, but I was also aware that I should keep the show going if I can because if I bow out without a result, that would be a colossal embarrassment to everyone involved, and I can only take one of those once every week, and I was already above my quota - so, I quickly closed the DX11 version of Bonzo, loaded the shader up in a text editor, replaced "floatX" with "vecX" (fun drinking game: take a shot every time I messed it up during the live event), commented the whole thing out, loaded it into a GLSL Bonzo, and quickly fixed all the other syntax differences (of which there were luckily not many, stuff like "mix" instead of "lerp", constructors, etc.), and within a few minutes I was back up and running.

This, weirdly, helped my morale a bit, because it was the kind of clutch move that for some reason appealed to me, and made me quite happy - although at that point I locked in so bad that not only did I pay absolutely no attention to the stream to see what the other two were doing, but the drinks and snacks I prepared for the hour of battling went completely untouched.
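For the curious, the mechanical part of that conversion can be sketched as a pair of regular expressions. This is a hypothetical Python helper covering only the two renames mentioned above, not what was actually used during the event (that was a plain text editor and some panic), and real shaders need more manual fixing than this.

```python
import re


def hlsl_to_glsl(source):
    """Apply the two simplest HLSL -> GLSL renames to a shader string."""
    # float2/float3/float4 -> vec2/vec3/vec4; the word boundaries leave plain
    # "float" and matrix types such as float4x4 untouched
    source = re.sub(r"\bfloat([234])\b", r"vec\1", source)
    # lerp(a, b, t) and mix(a, b, t) take the same arguments in the same order
    source = re.sub(r"\blerp\b", "mix", source)
    return source
```

Anything beyond these renames (entry points, texture sampling, matrix types) still has to be fixed by hand.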

In the end, when the hour clocked off, the shader itself turned out more or less how I wanted it, it worked really well with Bullet's techno-/psy-/hardtrance mix (not necessarily my jam, as everyone knows I'm more a broken beat guy, but pounding monotony can go well with coding focus), and I came away satisfied, although perhaps the saddest point of the adventure was yet to come: the lack of a cathartic real-life ending that was taken from us due to the physical distance, when after all the excitement, all the cheers and hugs were merely lines of text on a screen - but you gotta deal with what you gotta deal with.

A small sampling of the Twitch reaction.

Conclusion

In the end, what was my takeaway from the experience?

First off, scoping is everything: Always aim to get an idea where you can maximize the outcome of the time invested with the highest amount of confidence of pulling it off. In this case, even though I was on short notice and in an environment I was unfamiliar with, I relied on something I knew, something I've done before, but no one else really has.

Secondly, broaden your influence: You never know when you can take something that seems initially unrelated, and bend it into something that you're doing with good results.

Thirdly, and perhaps most importantly, step out of your comfort zone every so often; you'll never know what you'll find.

(And don't agree to everything willy-nilly, you absolute moron.)


“PILEDRIVER” - Driving an aging demo engine to its limits

The first memory I can recall of working on what became ÜDS3 was sitting on the floor of San Francisco International Airport in 2008, right after NVSCENE, next to a power socket and trying to spend the remaining time until boarding with some coding, in hopes that one day it would be an engine.

My hope was lofty: an engine that would last for a long enough time in terms of feature-set and convenience that I would only need to do perhaps incremental upgrades, and an ability to do project-specific code, but keep most of the core system intact so that it can be moved from project to project without having to reinvent the wheel.

It is now almost a decade later. After having built more than a dozen demos on the same engine that I started sitting on the airport carpet, I have recently released "PILEDRIVER", a gigantic several month effort with easily the largest amount of tech and content in a demo I've ever done. With this finished, I have now made the decision to cease using this engine, as I feel it has served above and beyond its purpose.

youtube

The final demo.

I've been meaning to sunset ÜDS3 for a long time now, and while making the demo it became apparent to me that this would be the last demo I'd make with this engine - for reasons that I'll detail later in this post. That being said, I'm also now confident that the fundamental principles of the engine hold up really well, and looking back to both the several year development time of the tool/engine, and the 3-4 month development time of the demo itself, I thought it'd be fun to take stock of a few things: What updates I had to do for this particular demo, and how certain aspects of the engine held up or didn't hold up over time.

(Note: This particular post will be less about PILEDRIVER itself; I do plan on writing some more about how to pull off such a large project, from a scheduling / management / production perspective, but that'll be a whole different post.)

Deferred shadows

This was less of a new feature and more a refinement of an old one; I already had decent support for variance shadowmapping in previous demos, but the big problem with them was that I could never really properly integrate them into the node-based concept: With a forward rendering engine, the idea of having an input scene and an output texture was relatively straightforward; with a deferred engine, the G-buffer object was used several times even after the final colour-buffer was rendered, but the G-buffer itself didn't contain any shadow-map information, so the "wins" of having any amount of lightsources ended up being negated by having the shadows render for every lightsource.

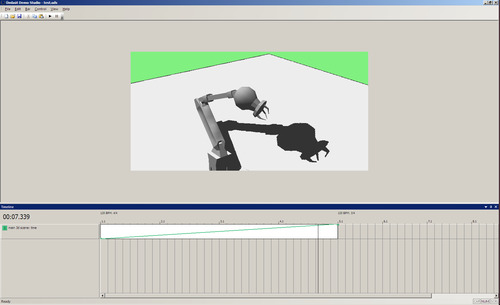

This particular scene has a light source attached to every spike of the thing (10 in total) that don't cast shadows, but also 2 lights around the scene that do.

The update for PILEDRIVER was relatively simple in this sense: with some careful index-management internally, I was able to selectively allow only specific lightsources to cast shadows. This allowed me to have a large amount of light sources again, but only waste time on rendering shadow maps for the ones I needed shadows for - sounds like an obvious thing, but with a node-based system some of these things were counter-intuitive, and I had to throw some caution to the wind to get things working.

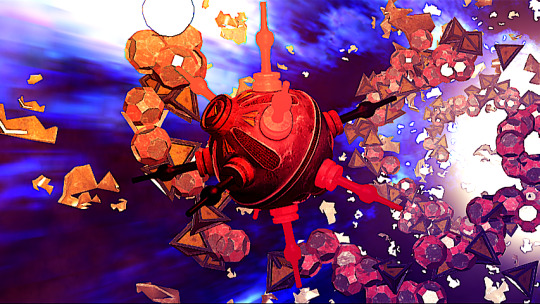

Particles

One of the few things that I felt in hindsight "Along For The Ride" could've used more of to add some depth is particles. Particles have many advantages: large particles can be used to create things like dust clouds and fog, to add a bit of complexity and movement to an otherwise static scene, while small particles can add tiny details like welding sparks, or more fine-grained dust floating in the air, a commonly used technique to add visual depth cues to a camera movement.

Another nice thing about particles is that while the common way of animating them is some sort of physics calculation, you don't really need that: most of the time it's perfectly acceptable for them to just move in a certain direction within a given volume, which means the entire deterministic movement can be done within the vertex shader: a fast, hot-pluggable scripting system allowing for rapid iteration. The only place I wanted something that resembled physics was a few bits that resembled welding sparks, but a projectile arc and some bouncing was always easy to fake, especially once I added support for billboard stretching based on the particle "velocity" (in reality a fake sampling of the previous position based on time) to achieve something that resembles a particle trail.
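As an illustration of the idea (and not the actual shader code), here is a Python sketch of a stateless particle: its position is a pure function of its index and the current time, and the "velocity" is simply the difference to the position a moment earlier. The function names, the default direction and speed, and the use of `random.Random` as a stand-in for a per-vertex hash are all assumptions made for the example.

```python
import random


def particle_position(index, t, direction=(0.0, 1.0, 0.0), speed=0.25, volume=1.0):
    """Position of particle `index` at time `t`, with no stored state."""
    rng = random.Random(index)  # same index gives the same start every frame
    start = [rng.random() * volume for _ in range(3)]
    # drift along the direction and wrap around inside the volume
    return tuple((s + d * speed * t) % volume for s, d in zip(start, direction))


def particle_velocity(index, t, dt=1.0 / 60.0, **kwargs):
    """Fake velocity: sample where the particle was `dt` seconds earlier."""
    now = particle_position(index, t, **kwargs)
    before = particle_position(index, t - dt, **kwargs)
    return tuple(a - b for a, b in zip(now, before))
```

The velocity vector is what a billboard could be stretched along to get a trail. One caveat of this naive version is that the difference jumps on the frame where a particle wraps around the volume, so that case needs special handling.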

I've had particle support in the engine since Sosincs Vége, but it never felt like I've utilized it properly; all the particle effects I've done were very representative (explosions, etc.), rather than image detail. This time, naturally inspired by Zoom's use of particles in "When Silence Dims The Stars Above", I went for the more artistic use, adding large fluffy particles to provide depth and motion to a scene, and to aid the eventual colour-grading process by providing colour data where otherwise there wouldn't be any.

Particles allow you to add a good amount of definition to your visuals if used well.

Ultimately, I ended up with about a dozen shaders for particles, each with various movement types or blending types; I probably could've written a more general solution to encompass most of these, but in the end I think I was happy with how fast I was able to integrate chunks of particles into my scenes.

Raymarching

So I'm aware that there's this large movement of people using raymarching / sphere tracing / distance fields for both 4k intros, and live coding (and I'm aware that at least part of it is my fault), but I never really dug the process: I always felt I was more comfortable with using actual vertices and polygons and textures - even if it was a bit more tedious at the start, it felt like when it came to detail and craftsmanship, it paid off in the end. Where such techniques have the upper hand is with mathematically defined, "abstract" objects such as blobs or fractals; something that, to me, often had an air of running out of ideas - which meant they were perfect for imbuing some more irony in the demo.

The rendering technique itself is relatively simple and there are a ton of tutorials, so I'll be brief: Instead of working with vertices and projection, you treat the screen as a 2D surface, and the camera as a point looking at that 2D surface. You then shoot (cast) rays out of the camera through each point of the screen, and see what that ray hits. What the ray hit can be determined in a number of ways, and I won't go in-depth with it, but in this particular case my surfaces all worked with distance values, so eventually I would retain a colour and a depth value; all I needed is to be able to, theoretically, stitch raymarched geometry into a non-raymarched scene.
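For readers who haven't seen it, the core loop of sphere tracing fits in a few lines. The following is a generic textbook-style Python sketch against a single sphere, not the demo's shader; the scene, the step limits, and the names are all made up for the example.

```python
import math


def sphere_sdf(p, centre=(0.0, 0.0, 5.0), radius=1.0):
    """Signed distance from point p to the surface of a sphere."""
    return math.dist(p, centre) - radius


def sphere_trace(origin, direction, sdf, max_steps=64, max_dist=100.0, eps=1e-4):
    """March along a normalised ray; return the hit distance, or None on a miss."""
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + d * t for o, d in zip(origin, direction))
        dist = sdf(p)
        if dist < eps:
            return t   # close enough to the surface: call it a hit
        t += dist      # the distance field guarantees this step is safe
        if t > max_dist:
            break
    return None
```

A ray fired straight at the sphere from the origin reports a hit at a distance of about 4 (the sphere's centre is 5 units away and its radius is 1), while a ray pointing sideways runs past `max_dist` and returns `None`. That hit distance is the value that later has to be turned into a depth.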

How to do this was relatively simple: paint a fullscreen quad over the scene, and have its pixel shader output a depth value in the same terms as the depth values that were already in my depth buffer, rendered by the polygon geometry. A quick explanation of this (for OpenGL) can be found in cpdt's breakdown, but the general idea is that you usually work with a [0..1] range for depths, and as long as your "2D" shader outputs the same range, you should be able to have the raymarched object clip correctly with your polygons.
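To give an idea of the conversion involved, here is a Python sketch of turning a ray hit distance into a depth-buffer value. It assumes a standard OpenGL-style perspective projection with the default [0..1] depth range; other APIs and reversed or non-standard projections need a different formula, and the function name and parameters are illustrative only.

```python
def raymarch_depth(t, ray_dir, forward, near, far):
    """Depth-buffer value for a hit at distance t along a normalised ray."""
    # the depth buffer measures distance along the camera's forward axis,
    # not along the ray, so project the hit onto that axis first
    view_z = t * sum(r * f for r, f in zip(ray_dir, forward))
    # standard perspective mapping to normalised device coordinates (-1..1)
    ndc_z = (far + near) / (far - near) - (2.0 * far * near) / ((far - near) * view_z)
    # remap to the 0..1 range stored in the depth buffer
    return 0.5 * ndc_z + 0.5
```

A hit on the near plane maps to 0, a hit on the far plane maps to 1, and closer hits always produce smaller values, so the regular depth test can then decide per pixel whether the raymarched surface or the polygon is in front.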

The horns from the ceiling correctly occlude the raymarched blobs.