#you have to establish that falls in the range of 'better but not qualitatively different'

Text

"just because what china is doing with BRI is better than colonialism doesn't mean it's not a form of colonialism"

"just because free ice cream and puppy dogs dancing through meadows is better than burning in fire for 513 years doesn't mean free ice cream and puppy dogs dancing through meadows aren't a form of burning in fire for 513 years"

#if you make a claim without bounding the range of the clauses then youre not rly saying much#i see this sorta logic all the time#like. either your metric of 'better' is unbounded & so broad so as to be analytically useless#or theres a qualitative gap youre not accounting for where you do have a bound & something can be of a different 'kind'#but then you have to establish that the object is of that kind in the first place which just gets you back to square 1!#you have to establish that falls in the range of 'better but not qualitatively different'#which assumedly is what the argument is over in the first place right#this is what happens when you extinguish popular analysis of colonialism of any economic analytic & just turn it to 'bad people'#plus what happens when you dont have a concept of superprofits & how to maintain them vs how to maintain profits#or how quantity becomes quality (at critical points of intensity)#but if you just want a thought terminating cliche you can just say#'at a certain point yes it does lol'

135 notes

Text

Digital marketing in 2020: you need to know these trends

Eng News 24h

Digital marketing occupies a large place in daily business operations. It is an indispensable part of any business plan, yet the speed at which digital develops is hard to keep up with. But a true marketer warms to a challenge, so immerse yourself in the digital marketing trends of 2020 and seize these new developments with both hands to make your company future-proof.

One year you can collect unlimited data for marketing purposes; the next, marketers are bound by legal restrictions. Constant technological developments and increasingly complex IT are also quite challenging.

1. Personalization & user experience

Personalization has long outgrown the label of buzzword. This is not hype; it is here to stay. After all, customers demand it from companies. Whether it is the huge range of online retailers or taking out insurance yourself: the focus is on self-service and the ultimate customer experience. Companies no longer think from their own needs, but from the needs of the customer. That means increasingly offering a friendly user experience, more flexibility and modular models: putting together a product or service completely adapted to your personal wishes.

The ultimate customer experience

To make the ultimate customer experience possible, marketers rely heavily on data. Bringing data sources together makes it possible to understand and predict behavior. Take the Zeeland family business Omoda. The retailer grew at lightning speed into a major player in the Dutch and Flemish markets with the help of smart digital marketing. Collecting data from online interaction and purchasing behavior provided insights that made segmentation possible. In this way Omoda was able to predict purchasing behavior and divide customers into types with different needs. These are valuable insights that marketers can convert into actions that keep a loyal fan happy and steer a wardrober (someone who buys a lot but also returns a lot) towards better-quality purchases.
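To make the segmentation idea concrete, here is a minimal Python sketch. It is not Omoda's method: the `Customer` fields, the segment labels other than "wardrober" and "loyal fan", and both thresholds are assumptions chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Customer:
    customer_id: str
    orders: int   # number of items ordered (hypothetical field)
    returns: int  # number of items returned (hypothetical field)


def segment(customer, min_orders=5, return_threshold=0.5):
    """Assign a coarse segment from order and return counts.

    Both thresholds are illustrative assumptions, not values from the article.
    """
    if customer.orders == 0:
        return "prospect"
    return_rate = customer.returns / customer.orders
    if customer.orders >= min_orders and return_rate >= return_threshold:
        return "wardrober"  # buys a lot, but also returns a lot
    if customer.orders >= min_orders:
        return "loyal fan"
    return "occasional buyer"


customers = [
    Customer("a", orders=12, returns=1),
    Customer("b", orders=10, returns=7),
    Customer("c", orders=2, returns=0),
]
segments = {c.customer_id: segment(c) for c in customers}
```

In practice the thresholds would come from your own purchase data rather than being fixed by hand.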

Better use of information

However, we can use the wealth of information that data gives us even better.

How do you use the data about a hesitant customer who fills a shopping basket but never checks out? You can try to win that customer over with some retargeting towards a blog about the latest trends, but that is not the information the person needs at that moment. A blog then has little effect.

It is better to exclude a customer who returns a lot from your marketing campaigns. You feed the negative revenue from those returns into your conversion measurement at Google and Facebook to improve their algorithms. You then offer service-oriented content, such as a size chart, on your site.
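A rough Python sketch of this return-aware measurement follows. The field names `value` and `returned` and the 60 percent exclusion limit are assumptions for illustration. Nothing here calls a real Google or Facebook API; it only computes the figures you might report.

```python
def net_conversion_value(order):
    """Order value minus the value of returned items (the 'negative revenue')."""
    return round(order["value"] - order["returned"], 2)


def should_exclude(orders, return_rate_limit=0.6):
    """Flag a customer whose returned value exceeds an assumed share of spend."""
    total = sum(o["value"] for o in orders)
    returned = sum(o["returned"] for o in orders)
    return total > 0 and returned / total > return_rate_limit


# Hypothetical order history for one customer.
orders = [
    {"value": 100.0, "returned": 80.0},
    {"value": 50.0, "returned": 50.0},
]
net_values = [net_conversion_value(o) for o in orders]
exclude = should_exclude(orders)
```

Reporting the net value instead of the gross order value is what lets a bidding algorithm learn that this customer is worth less than the basket total suggests.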

Another example is a customer who you know will only make a purchase in x weeks. You pause marketing efforts for this customer. By the time the purchase approaches, you reactivate them, first with branding and then with product information.

When you know someone is at a certain point in the customer journey, you provide content suggestions about the next step rather than about the final conversion, both on your site and in your marketing.

In this way you effectively translate the collected data about your customers into content concepts across different platforms, and you reach your customer in an integrated manner within a customer journey whose steps connect logically.

2. The death of cookies

There is no such thing as privacy on the internet: we know everything. Or do we? As much as marketers want to collect data for personalization, it is not made easy for them. We need cookies to collect data, but to be honest, they are dead. The combination of adblockers, the GDPR (AVG in Dutch) and Intelligent Tracking Prevention (ITP) is expected to affect twenty to forty percent of cookies, an impact that makes collecting data considerably more difficult.

Less and less data to track

Picture the group of internet users in front of you. Part of it has already installed an adblocker, so data tracking is no longer possible there; this part drops off. Then there are the increasingly strict GDPR regulations, which make it difficult to collect data from the remaining part. The group of internet users for whom you can still collect data has already shrunk drastically. To top it all off, ITP removes third-party data immediately or after 24 hours. This again eats up a large part of your group of users.

In other words: you need to collect enough data to personalize from a smaller volume. Therefore, make optimum use of the group that makes itself known and get a higher return from it. How? First-party data. Provide a prominent cookie bar, so that website visitors can identify themselves on the site. This can also be done by having someone log in. Connect this collected first-party data (for example from analytics, CRM and loyalty programs) across your different systems, so that you can offer powerful personalization with the help of, for example, segmentation. This in turn tempts users to share their data, because it gives them a better experience. The group that you can track is getting smaller and smaller, so it is up to the digital marketer to deliver quality with less.
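Connecting first-party data across systems can be as simple as merging records on a shared customer ID. The Python sketch below assumes each system can export a mapping from customer ID to fields. The source names, IDs and fields are all hypothetical.

```python
def merge_profiles(*sources):
    """Combine per-system records into one profile per customer ID.

    Later sources overwrite earlier ones when the same field appears twice.
    """
    profiles = {}
    for source in sources:
        for customer_id, fields in source.items():
            profiles.setdefault(customer_id, {}).update(fields)
    return profiles


# Hypothetical exports from three systems, keyed by a shared customer ID.
analytics = {"c1": {"last_visit": "2020-01-10"}, "c2": {"last_visit": "2020-01-12"}}
crm = {"c1": {"email_opt_in": True}}
loyalty = {"c1": {"tier": "gold"}, "c3": {"tier": "silver"}}

profiles = merge_profiles(analytics, crm, loyalty)
```

The merged profile is what a segmentation step would then work from. Getting every system to agree on one customer ID, for instance through a login, is usually the hard part.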

3. The role of the marketer is changing

Robots taking over the world, self-driving cars, Stephen Hawking predicting that technology means the end of humanity: it is the specter of ultimate automation. At the same time there is still someone behind the wheel, people are still at the controls of the robots and people are feeding the algorithms. It is true that automation, largely developed by marketers themselves, is rapidly taking over the work of marketers. But that does not mean that people become superfluous. The role of the marketer is changing.

Control variables

With every technological revolution that humanity experiences, many professions have to reinvent themselves. This also applies to digital marketers. Work that they are used to doing is no longer tenable. Ever smarter algorithmic models take over those tasks and can perform them without human assistance. So instead of manually optimizing campaigns, it is important to take matters into your own hands and take charge of building and controlling the machines that run the models. As a marketer, ask yourself: how can I control those variables?

Making & controlling machines

Take bidding on keywords in campaigns. For a long time it was human work. Now it has been taken over by Google's algorithms (smart bidding). But they do not run entirely on their own, because the marketer is still at the controls, influencing the algorithms with additional information. Consider return information, data from end customers, et cetera. In this way, marketers now create and control algorithms that do the work that was previously done manually. Marketers make and control the machine.

Digital natives

Companies must therefore make smart use of the knowledge and skills of marketers if they want to remain or become front-runners. That also means that talented staff who can keep up with these digital developments are in high demand. Digital maturity is increasing, but the roles are difficult to fill. More and more companies are expanding their marketing department and arranging everything in-house, while others make smart use of (temporary) digital teams full of digital natives who give the company a digital injection that secures its position as a leader, now and in the future.

The bottom line is: don't waste valuable time on something that machines can already do, but use the capacities of smart people and insightful data to serve your customers to the maximum. Do not be the person who realizes just too late that what you are doing is superfluous. We need to disrupt ourselves, otherwise others will do it for us.

The future starts today

Broadly speaking, we see cross-sectoral trends whose development has already begun, but whose complexity and speed will increase in 2020. The role of the marketer continues to evolve as algorithms become increasingly smarter. At the same time, the same marketer must also deal with the pressing issue of privacy and trust. But let's not see this as a big thundercloud above our work. These challenges bring new opportunities, force innovation and, in particular, drive for more relevance. And the latter is precisely the purpose of technological and legal developments. Ultimately, it's all about better service. It makes our work more fun and is better for our customers. Win win! It will be a beautiful year.

Source: frankwatching.com

from WordPress https://ift.tt/309HpWX

via IFTTT

0 notes

Text

Top 6 Ways to Jump into a Human Resources Career

Everything you need to know about advancing your career in HR. Let’s look at how to become a top HR manager.

For those considering a career in human resources, job prospects have never been better. After high-profile HR nightmares at companies like Uber and Thinx, the field’s importance is as apparent as ever.

Want to capitalize on the trend? As your day-to-day responsibilities pile on, it may seem hard to step back and find the time to focus on your career. Between navigating an ever-changing compliance landscape and staying current with industry developments, investing in your own career—not just the careers of your employees—seems like a stretch. It doesn’t have to be.

There are easy, low-cost ways to broaden your skills and strengthen your credentials. Whether you’re just getting started or looking to make your next move up the career ladder, we’ve put together everything you need to know to take the next step.

However, as companies grow, so too do opportunities for HR professionals to take on new responsibilities and contribute in meaningful ways. Specialized HR careers ensure that every stage of the employee lifecycle has a clear owner and a process—so nothing gets left in a blind spot.

Whatever HR job title you land on, you have a wide range of career paths to choose from. Where do you start? We’ve outlined five tracks to help you find your ideal future in HR.

1. The HR Generalist

For the jack-of-all-trades and multi-tasker extraordinaire, HR Generalist roles may be the fit for you. The HR Generalist has a hand in all pieces of the HR machine, including benefits, onboarding, performance management, talent acquisition, and compliance. The HR Generalist plays a vital role in ensuring that everything runs without a hitch. While this can be a lot for one person, it gives you a 360-degree view of the company’s ecosystem (and can help you identify where else you might want to specialize down the line).

Qualifications

Minimum 3 years of related HR experience

Bachelor’s degree or higher

Exceptional organization and communication skills

Responsibilities

Own execution of compensation including payroll and benefits

Manage the hiring process from recruiting to onboarding

Attend to employee relations, concerns, and culture

Administer performance reporting

Create high level goals and processes

Ensure company compliance across the board

National Average Salary*

$55,880

2. The Talent Acquisition Specialist

As companies grow, workforce planning becomes more important than ever. The Talent Acquisition Specialist is responsible for building and shaping an organization's structure. In this role, you understand the importance of hiring candidates that are both qualified and a culture add. This role manages all efforts to recruit, interview, and onboard top talent. Over time, talent acquisition can be broken into even more specific roles, such as department-specific recruiters or onboarding specialists.

Qualifications

2 years of relevant experience

Bachelor’s degree

Excellent communication and critical evaluation skills

Responsibilities

Work closely with hiring manager to define the ideal candidate

Promote job openings and scout external talent

Screen and interview candidates

Drive the hiring process, including verifications and offers

Provide orientation and onboarding training to new employees

National Average Salary

$50,800

3. The HR Data Analyst

Data is becoming increasingly important to every department within an organization, and HR is no exception. The HR Data Analyst tracks metrics throughout the talent lifecycle to inform better processes. This role is still relatively new in the field, so it requires a lot of creativity and strategic data-driven thinking in order to develop useful and actionable metrics.

Qualifications

5 years relevant experience in data analysis or statistics

Bachelor’s degree or higher

A comprehensive understanding of the HR space

Responsibilities

Establish core metrics to measure success of talent lifecycle

Track metrics and identify fluctuations over time

Create surveys to obtain qualitative insights

Work with the rest of the team to implement metric-driven changes

National Average Salary

$80,000

4. The Payroll and Benefits Administrator

Employees’ primary communication with their HR team typically centers around compensation and benefits. Whether it’s a missed paycheck, open enrollment, or tax season, employees rely on the Payroll and Benefits Administrator to answer their questions and make sure everything is in order. The Payroll and Benefits Administrator maintains processes to ensure that employees are properly compensated.

Qualifications

3-5 years of relevant experience in benefits and payroll

Bachelor’s degree or higher

Able to communicate confusing concepts to employees

Responsibilities

Establish processes for distribution and management of payroll and benefits

Run payroll for employees

Research and implement benefits plans

Communicate insurance plans to employees

Address any employee payroll concerns

National Average Salary

$42,908

5. The Employee Relations Manager

Employees need a central contact for their disputes, personal concerns, and questions. Within the HR department, the Employee Relations Manager handles all things people. This means the Employee Relations Manager works collaboratively across the HR team, relying on peers for expert guidance when different employee situations arise—but the role’s primary focus is to advise and consult employees on any concerns.

Qualifications

3-5 years relevant HR experience

Bachelor’s degree or higher

Strong communication and relationship management skills

Responsibilities

Handle employee personal concerns that affect their work life

Facilitate a safe and comfortable workspace

Advise management on practices and policies

Administer colleague relations and grievance procedures

Ensure compliance with employment regulations

National Average Salary

$76,988

HR touches every part of the employee experience and as the field grows, there are more and more HR career paths to choose from. The best way to find your niche is to dive right in, get your hands dirty, and see what tasks align most with your goals and interests. Here’s to finding the right stops along your path to Chief People Officer, Chief Happiness Officer, or even Chief Listening Officer!

*All national salary averages come from Glassdoor.

Expert Tips for Better HR Career

1. Be Proactive

A proactive attitude can help in any career, but it’s especially important in HR. Why? You’ll face a variety of situations that require creative solutions. The more innovation and enthusiasm you bring to your role, the easier it is for leadership to trust you and know that you’re doing a quality job.

At growing companies, small HR teams often have to start from scratch to create and implement processes that will scale effectively. In most cases, it falls on HR teams to take the lead on initiatives that build a set of cultural values designed to support the company long-term. Here’s a tip: don't wait for someone to assign these responsibilities to you—recognize what your company needs and act on it.

Work beyond your job scope to identify systems and processes that need attention and lend your expertise. Don't sit back and watch your company grow or wait for your role to unfold; look for opportunities and act. Everyone loves a positive go-getter who has the company’s best interest in mind.

Follow these three steps to be proactive once you identify challenges or gaps within your organization:

Get Leadership Support

As you approach uncharted territory, make sure you work closely with your leadership team. Want to introduce a new performance cycle? Thinking of incentivizing employees to go paperless? Pitch ideas to your C-level executives and keep them in the loop as you implement. There’s always room for innovation, but it’s important to have leadership buy-in as you try new things and scale your efforts.

Ask for Feedback

Never be afraid to experiment, but always be sure to measure your effectiveness. If your ultimate goal is to increase employee engagement and retention, it’s critical to gather direct feedback on your new initiatives. At smaller companies, have as many in-person conversations as possible, and as you scale, check in with employees through pulse surveys.

Learn from Mistakes

When building out HR processes, expect a lot of trial and error. If you introduce Summer Fridays and notice a big decrease in productivity, you’ll need to backtrack and rethink the perk. If your goal was to reward and incentivize employees, there may be a better way to do so that doesn’t negatively impact the business. Perhaps a summer company outing or monthly team happy hours would be a better alternative, for example.

2. Learn the Full Scope of HR

There are a variety of career paths that HR professionals might follow as they advance their careers. From talent acquisition associate to payroll guru, it’s helpful to understand each aspect of HR before settling on a specialization. HR generalist roles are a great way to dip your toes into a variety of functions and gain insights into HR’s most common challenges.

If you’re on a small (or one-person) HR team, you’ll most likely be asked to perform an array of tasks and be challenged to execute across disciplines. You’ll likely have a hand in culture, benefits, payroll, and hiring—but as your team grows, you’ll have more time to focus on a specific function that appeals to you. Whichever function you zero in on, having generalist experience can make you well-positioned to take on a managerial role down the road.

Explore all areas of HR before deciding on one specialization—there are a lot of different areas of HR (benefits, payroll, employee development, etc.). It's nice to see all aspects before deciding to specialize in a particular area—or you may even decide to be an HR generalist that handles a variety of duties in the long run.

There are three ways to gain exposure to the full spectrum of HR duties. We’ve summed them up below:

Shadow Your Peers

Consider shadowing members of your own, or even external, teams to gain exposure to different roles. Spend time with the HR Director to see if you like managing a team; learn how to process payroll from the finance team; or even talk through current benefits plans with your broker. Your colleagues won’t fault you for deepening your understanding of the business, and you can learn a lot from simply watching.

Experiment with New Initiatives

If your role doesn’t touch on an aspect of HR that interests you, work on initiatives that give you exposure to those areas. For example, if your main focus is recruiting, but you’re interested in employee engagement, dedicate some time to a cultural initiative. Suggest a wellness, charity, or social activity and help bring it to life. If it’s successful, you may get to spend more time on similar projects in the future.

Understand Long-term Opportunities

Is your ultimate goal to manage others? Work with the CEO to fill leadership roles? Or be the go-to resource for people analytics? Each role has distinct responsibilities that require a diverse skillset. As you explore different opportunities at the ground level, be sure to keep your long-term path in mind and consider what experiences will be most valuable to you down the road.

3. Consider Certification

While many HR practitioners at small and mid-sized companies “fell” into the profession, it’s important that your growing HR experience is reflected on your resume in order to advance your career. If you started as office admin for a five-person company and developed into an all-encompassing recruiting, payroll, and benefits role, you want your credentials to reflect your on-the-job expertise.

This might mean going back to school for an MBA in an HR-related field—or it might mean getting an industry certification, like a Professional in Human Resources (PHR) or Society for Human Resource Management (SHRM) certification. With a certification in your back pocket, it can be much easier to show your qualifications and advance your career.

Take advantage of every opportunity to advance your skills, including certification. If your superiors know that you are serious about building skills and see that you are engaged, you will get more opportunities to advance your career.

Consider these three approaches as you take steps toward HR certification:

Consult Your Mentors

Whether your ultimate goal is to be a director or a specialist, someone has done it before, so learn which certifications helped them the most in their journey. Some professionals may have found that higher education was crucial, whereas others might think industry certifications were just as useful for their career advancement.

Do Your Research

What’s the difference between PHR, SPHR, SHRM-CP, or an MBA? What can each do for you? Which will provide the best ongoing resources and opportunities? Each has its advantages and there’s no wrong decision, but make sure to identify the certification that best aligns with your experience and career goals by putting in the time to research.

Get Involved in HR Communities

Whatever route you choose, you will be among like-minded peers who can help you further develop your skillset. If you pursue a Master’s, make real connections with your classmates. If you go for your SHRM certification, utilize the organization’s online resources and attend conferences to get involved with their network.

4. Spend Time Outside the HR Bubble

It can be easy to get caught up in the HR bubble and fall into the trap of HR tunnel vision. Whether you tend to only converse with HR peers or just get into a heads-down routine, it’s important to come up for air once in a while. It may seem counterintuitive to recommend that you not focus solely on HR. But you can actually make a greater HR impact if you have a well-rounded understanding of your company’s business and its people.

Spend time walking around the office, getting to know employees, or pursuing mentorships with leaders outside of the HR space to help broaden your perspective—all of which you can apply back to your role.

There are countless resources outside of the HR bubble. Here are a few ways to start taking advantage of them:

Take a Walk

Set aside time daily or weekly to step outside of your department and walk around your office. Chat with employees in common spaces, invite them to grab a coffee, learn what they did over the weekend, find out what projects they’re working on right now. This puts the human in human resources and not only gives you a better understanding of your employees, but gives them a better understanding of you (and gives a face to the HR department!).

Cultivate a Range of Mentors

HR mentors are great in terms of best practices, troubleshooting, and career advancement, but it’s also important to have mentors outside of the HR space who can broaden your perspective and expand your toolset. Leaders in other departments can help you better understand business practices and inspire creative solutions.

Gain New Skills

HR is a perfect landscape for creativity. Skills gained outside of the direct HR function can be applied to many of your own initiatives. Tune into a webinar on marketing and apply what you learn to talent acquisition. Take a Photoshop workshop to help you better communicate internal events or explain complex benefits topics in a visually appealing way. Whatever your outside interests, there is likely a way to translate them into HR.

5. Utilize Free Resources

Thanks to the internet, you now have a world of free resources right at your fingertips. These resources can help you with everything from answering basic how-to questions to finding a network of HR professionals. There will always be paid resources, events, and certifications that you can try, but don’t overlook the opportunities that are publicly available at no charge.

Continually challenge yourself and put yourself in the way of learning. Ask for projects from your managers, attend conferences, get an HR certification, ask deep questions, and connect with local HR peers to swap stories and get support. The best things in life are free—even in HR. There are a wealth of free HR resources available to you if you know where to look. Here’s how to get started:

Network

Making connections is free and the best way to do this is to start reaching out. Read a blog post that inspires you? Email the author. Interested in learning how other small HR teams are building company culture? Reach out on LinkedIn. Join online networks, attend local meetups, and before you know it, you’ll have a whole network at hand whenever a question arises.

Learn

There are countless free ways to grow your industry knowledge. Webinars, meetups, and local roundtables are just a few ways to enhance your HR knowledge. Subscribe to informational newsletters and participate in community networks such as LinkedIn or Slack groups to stay in tune with thought leadership in the HR space. Engage with open communities, like HR Open Source, to share ideas among a network of HR practitioners with a range of experience.

Give Back

Whether you have six months of experience or six years, you most likely have unique insights to offer. Wrestled with a payroll issue, culture initiative, or benefits communication? Odds are someone else is up against a similar hurdle and would love to hear how you overcame the challenge. Don’t discount your own experience or thought leadership.

6. Highlight Relevant Skills

HR experience doesn’t only come from HR roles. No matter what previous titles you’ve held, you may have amassed numerous skills that are applicable to a wide range of HR positions. Don’t underestimate just how well your other experiences—be it in design, sales, or customer support, for example—might translate into HR qualifications.

When you’re looking to make the jump to your next role, having on-the-ground experience, even with an administrative title, can make you just as qualified as someone with the “right” HR title. Work with your peers and mentors to make sure that your resume clearly reflects your HR abilities. Highlight any people management, coaching, mentoring or other HR-related duties that you have had in previous roles.

Consider these tips to help you capture the full range of your qualifications as you prepare to make a career move:

Audit Your Job Duties

When you’re ready to make the next step in your career, write down all of your job duties so that you can translate them to the requirements of the future role. For example, for someone looking to move from office admin to HR Generalist, it will be crucial to show that your office duties prepared you with experience in onboarding, payroll administration, or employee event organization, to name a few.

Ask for Help

It’s almost guaranteed that someone before you has forged a path, so reach out to others who have had a similar career progression. Get a sense of what skills were useful to them when moving into a new role and what was challenging in their transitions so that you’re prepared.

Aim High, but Don't be Afraid to Start Low

Aim high as you build your career in HR, but don’t be afraid to accept a title that is seemingly below your experience level either. Titles in the HR landscape can represent vastly different responsibilities depending on company size and structure. You could be an intern on a one-person HR team, doing everything from recruiting to payroll, which might give you more experience than you would get as an HR Associate on a five-person team.

Conclusion

Every HR person follows a unique path, and while it can sometimes feel like a lonely road, there are a wealth of networks and resources available at every stage of the journey. Whether it be blogs, conferences, social networks, or working with a mentor, the best way to advance your career is to get involved in the HR community. Cultivating that support network will help you gain confidence, drive innovation at your company, and move your career forward.

0 notes

Text

30+ Secret Tips For Building High Quality Backlinks

Before I delve deeper and look for ways to build high-quality backlinks, it is essential to know what backlinks are and why your website needs them. In this article, I will discuss backlinks in detail, along with the best practices for building them.

Let’s get started. Note, I am sticking to white hat link building practices.

What Are Backlinks?

Backlinks are incoming links to a website. They help the search engines analyze essential metrics like popularity, trust flow, and authority related to your site. Quality backlinks from high authority sites help you in achieving higher rankings in search engine result pages, also called SERPs.

Whether your website is designed to promote your business or service, or you sell products online, ranking is critical.

Let’s understand backlinks with the help of an analogy. Imagine you are on a posh street with a range of branded shops and showrooms. These are the high authority sites on the internet. Now, suppose that you have recently opened a new store nearby. Compare this new store with your newly launched website with little to no visitors.

You want to attract more and more customers to your newly opened shop and are looking for publicity. So, one day, you put up a billboard near a branded shop, XYZ. As a result, whenever customers visit shop XYZ, they see the sign and think of stopping by your shop.

Slowly and gradually, more and more customers start coming to your shop.

A billboard is similar to backlinks on high-authority websites.

High-authority websites are already well-established and receive a significant amount of organic traffic. Whenever people land on these websites, the chances are high that they may click on the backlink that leads to your site. Google’s algorithm views these links as signals of trust and authority. Any expert will tell you how important these factors are for ranking organically.

This pretty much explains why backlinks are beneficial.

Now, let’s see how backlinks work.

How do backlinks work?

Backlinks work in two ways.

First, they boost your traffic. Second, they let the search engines know about your website and increase your online reputation.

But how do they do that?

It has been well researched that legitimate sites tend to link to other authentic sites, while spam sites rarely receive quality links from reliable sources. Link data can never be entirely accurate, but it allows one to adopt a more scientific approach to SEO strategy.

I recommend focusing on quality backlinks and anchor text variation for better search results.

Backlink building is an important strategy and needs carefully devised planning for successful execution.

If you plan to start a link building campaign from scratch, but are not sure from where to begin, here are a few link building guides which would help you out.

Social Networks for Reputable Backlinks

Social sharing is a great tactic to build natural backlinks and increase your social signals.

This section focuses on social networks and places where you can build an online presence and post your content in a way that others can share and link for gaining additional value.

1. LinkedIn

LinkedIn offers an array of options where you can link your content for value. In addition to linking your content to your profile, you can also link regular short blog posts to longer and more detailed posts on your main website.

2. Pinterest

Pinterest is useful for linking content that deals with specific genres such as DIY. It can also be used for infographics and other visual content. This site can be used in conjunction with different link building techniques down the line.

3. Reddit

Reddit is undoubtedly very picky regarding the type of content that it hosts. That said, there are various threads where you can find something related to your niche. You can put your link as a "read more" while replying to someone else’s comment, and it is more likely to be seen as adding value rather than spamming and advertising.

4. Google+

Despite the Panda update, Google+ can still be used to drop some relevant links. It can best be used for getting a direct link for better and faster indexing.

5. Instagram

This site is an excellent medium for dissemination of all sorts of visual content including images, quotes, videos and pictorial messages for the people at large.

6. Imgur

Imgur is a useful website if you want to convey a meaningful message accompanied with an image and compelling infographics.

7. HubPages

This is a cross between a social network and an article directory. It allows users to create hubs centered on their chosen field of expertise.

8. BizSugar

This site can be efficiently used to share links and swap partnerships with other related bloggers. It is a vital social network for links with specified roles within a community.

9. Tumblr

This site is quite popular among younger folks. Links are difficult to come by, and the interface is a bit tricky to use.

10. Triberr

This network is a guarded community for influencers and an excellent medium to link with other influencers.

Apart from the social networks, article directories can also help your link acquisition strategy. Let’s have a look.

Top Article Directories For Building Free Links

Directory submission can be risky, especially when you choose to create links from low-quality article directories. This is why we have curated a list of high-quality article directories you should stick to if you want to generate high-quality backlinks.

1. GrowthHackers

This site is primarily curated by bloggers who are looking for quick quality information with minimum effort. It is a favorite hangout for digital marketing professionals.

2. Slashdot

Slashdot is a go-to directory for geeks and computer engineers. This article directory provides valuable yet crisp information related to technology and the impact of technology on politics, primarily focused on news and technology. If your niche fits well with the niche of this directory, your articles can surely perform well.

3. Alltop

This directory can be considered as a jack of all trades as it deals with information and content of every genre. It is one of the most valuable general directories that we have with us today. All you need to do is create your account and submit the articles.

4. BlogEngage

This is another general-purpose directory you can submit your articles to. Since it deals with a range of genres, chances are high that you will find your niche here. It is still one of the least spammy directories you can stick to.

5. Synacor

This directory explores the niches related to technology and advertisements. Keep producing high-quality content, and you should be good to go.

6. SelfGrowth

SelfGrowth is an all-pervasive directory, curated carefully to cater to one’s plethora of needs ranging from success in business to personal matters related to health, lifestyle, relationships, and financial issues.

8. Triond

This one again is a cross between a social networking site and a directory for articles and blogs. You can sign in with your Facebook profile and then proceed to submit your content.

9. EzineArticles

It is one of the largest and oldest databases available on the internet for submitting all sorts of content regardless of its niche.

10. ArticlesBase

It hosts general-interest articles, with seasoned, quality content backed by knowledge, credibility and well-established credentials.

Other Techniques To Build High-Quality Backlinks

The techniques mentioned below do not fall under a particular category but instead are a combination of methods.

1. Moz’s Guide To Broken Link Building

This is Moz’s guide to broken link building. It helps you in identifying the broken links and resurrecting the pages to preserve the link juice.

2. Converting Blog Posts To Podcasts

It is used to convert your content into an audio format for gaining a more significant foothold and earning links from podcast storefronts and reviewers. This further helps your followers to go through your content quickly while they are traveling.

3. Help A Reporter Out

It acts as a link between a news reporter and someone from the industry wanting to share opinions and incidents inside the industry. HARO can be an excellent way to establish yourself as an authority.

HARO is one of my favorite PR tools although it takes hard work and persistence.

4. Converting Blog Posts to Videos

It is used primarily to turn your blogs into videos for advertisement through social networking sites including YouTube and Facebook.

5. Answering Questions on Quora

Similar to Yahoo Answers, Quora is a platform where people get precise and well-informed answers to their questions from a set of expert contributors. All you need to do is answer the questions related to your niche and add your blog’s link.

6. Converting Blog Posts to Slides

Convert content into presentable PowerPoint slides. You can showcase the PowerPoint slides on Slideshare and at the same time invite other bloggers to use your presentation.

7. Creating Roundup Posts

Find other bloggers who are interested in sharing information and expertise related to your industry.

8. Writing Testimonials

This is another method through which you can generate backlinks. All you need to do is write testimonials for the products and services that you used, and ask the business to link the testimonial back to your blog or website.

9. Converting Implied Links into Real Links

There are a lot of instances where you are mentioned in a post but not linked. You can use this to your advantage by asking them to turn that mention into a link.

10. Guest Posting

Guest posting is still alive and is an excellent way to earn some quality backlinks. All you need to do is find well-reputed blogs and websites you can guest post on. Start by contacting the blog’s owner.

I’m sure you are ready to kick-start your backlinking strategy by now. However, there are a few things you need to take care of. We will discuss them in detail in the forthcoming sections of this article.

11. Press Releases

Press releases are often underestimated when it comes to a link building strategy. However, used correctly they can be beneficial. Are you going to rank your site off press release backlinks only? No. Press releases can create quick and easy publicity. Links from a credible press release service can also be used to optimize your anchor text ratio.

For Press Releases I suggest:

Additional Link Building Resources


1. Backlinko’s Definitive Guide to Link Building

This is a genuinely enlightening guide to everything about backlinks, all-inclusive. The website, its author and the information and guidelines provided are authentic and something that you can bank upon to build high-quality links.

It is the best guide available.

2. Moz’s Guide to Growing Popularity & Links

This forms an integral part of Moz’s beginner guide to SEO. This chapter encapsulates all the things related to backlinks, and it also gives you a vital insight into how Google views backlinks.

3. Kissmetrics’ Natural Link Building 101

An excellent guidebook if you want to boost your online presence and link your website with high-authority resources. This guide deals with natural rather than deliberate link building, but it provides a complementary set of instructions to follow.

4. Quick Sprout’s Advanced Guide to Link Building

This guide goes one step further by covering all the related information in addition to advanced strategies. It is co-written by Neil Patel and Brian Dean, the same authors behind the backlinks guide above.

5. Moz’s Guide to Competitive Backlink Analysis

This is an exhaustive guide to analyzing the links that are moving around in your niche. It also helps you approach high-authority websites and analyze how your competitors are gaining backlinks.

6. Point Blank SEO’s Link Building Database

This guide presents a huge list of link building techniques arranged into a filterable database that is easy to use. The methods can also be sorted by the estimated time they take to execute. If you are already familiar with the conventional methods and trends, or have particular requirements, this is an excellent guide for finding techniques that fit the bill.

7. WordStream’s Guide to Free Link Building

Link building methods always involve investment, be it money, your time, or both. This might be in the form of buying the links directly, buying software, building satellite sites and using paid tools, among others. This guide focuses on methods through which you can create links for free.

8. Matthew Woodward’s Guide to Tiered Link Building

Tiered link building is a gray hat technique and ranks lower on the scale. However, this guide has some vital information that one should know. It is also a good illustration of how a technique can be sold as valuable and useful despite being irrelevant and faulty.

9. Search Engine Journal’s Guide to Qualifying Backlink Sources

This is an exclusively designed guide to help one examine the sources of incoming links on the site.

10. Monitor Backlinks’ Tips for Link Building

This guide is for anyone who needs a quick refresher or a short version of some of the guides listed above. It works perfectly well despite the fact that it’s not as complete.

The guides above should help you get started with the process of link building.

Care should be taken that links are fetched only from highly reputed sites. Social networks enjoy high credibility and are loved by search engines. Thus, they are an ideal choice for building backlinks. If you want to learn more about SEO tools, check out this massive list I have been working on.

Things To Keep In Mind While Building Backlinks

How to backlink your website

In addition to making use of the resources mentioned above for creating links, it is essential that you stay the course and avoid shortcuts.

Here are a few things that you should keep in mind while acquiring backlinks:

1. Do not forget social media

As we have already discussed above, social networking sites can also help you in earning backlinks and boosting your overall ranking. Pinterest, for example, is a DA 90+ website and allows you to add one link to your profile.

Similarly, you can make use of Google+ and LinkedIn by creating company profiles and adding a link.

2. Generate quality backlinks by featuring others

Featuring other bloggers on your blog is a proven strategy to build backlinks to your website. This will not only boost your relationship with other bloggers but also help you increase your domain authority. The chances are high that when you feature other bloggers, they will link back to your posts, thus providing you with free exposure.

3. Invest time in creating infographics

Catchy and detailed infographics can also help you attract attention. Create visually appealing infographics with the aid of websites like Piktochart and then share them on social networking websites. You can also encourage other bloggers to use your infographics as they are, or you can submit your infographics to infographic submission sites for more visibility.

4. All the inbound links in the world will not rank your site if you lack quality content.

Techniques To Avoid

Create backlinks manually to avoid a penalty like Google Penguin

· Comment Spam

Avoid leaving low value and inferior quality comments along with the links on the blogs related to your niche. This technique might earn you some links but won’t add any value. Stick to high-quality blog comments on blogs associated with your niche.

· Purchasing Cheap Links

Avoid buying low-quality links. Period.

· Churn and Burn expired domains

This method makes use of recently expired sites. It is a type of link setup that you build for yourself with minimum effort. Avoid it.

· Article Spinning

Article spinning is a process of creating a quick piece of content by changing words here and there. It sure can produce articles quickly, but lacks quality, and should be avoided.

Stay away from these low-quality techniques.

Do not make these Link Building Mistakes Either:

1. Avoid linking to sites with poor backlinks profiles

Stay away from sites with a negative reputation or backlink profile.

These include the likes of illegal sites, gambling sites, porn sites, and others. Links to sites with a bad reputation are not appreciated by search engines. You risk harming your search engine ranking instead of boosting it.

Bonus tip: Study your competitor’s backlinks and target those first.

2. Don’t get involved in buying and selling of links

Avoid buying links from other websites just to get backlinks. This is considered spam. You might even end up in need of my Google penalty recovery services. Focus on organic methods to build natural links.

3. Don’t try to get links from websites that are not niche relevant

It is highly recommended to get backlinks only from high authority websites which belong to the same niche as yours.

4. Do not use spammy anchor text

Anchor texts play an important role. Refer to this chart I made.

5. Don’t build tons of backlinks in a short time span

Take it slow and steady. Avoid generating a lot of backlinks within a short span as this might impact your reputation adversely in the eyes of Google. Link velocity is a small ranking factor.
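The anchor text and link velocity points above can be checked against your own data. The following is a minimal Python sketch, assuming you have exported your backlinks as pairs of anchor text and first-seen date. The `backlinks` records and both function names are hypothetical, made up for illustration, and no particular SEO tool's export format is implied.

```python
from collections import Counter
from datetime import date

# Hypothetical backlink records: (anchor text, date the link was first seen).
backlinks = [
    ("Acme Tools", date(2020, 1, 5)),
    ("acme tools", date(2020, 1, 20)),
    ("https://example.com", date(2020, 2, 3)),
    ("best cheap widgets", date(2020, 2, 10)),
    ("click here", date(2020, 2, 15)),
    ("best cheap widgets", date(2020, 2, 28)),
]


def anchor_text_shares(links):
    """Return each anchor text's share of all backlinks, ignoring case."""
    counts = Counter(anchor.strip().lower() for anchor, _ in links)
    total = sum(counts.values())
    return {anchor: n / total for anchor, n in counts.items()}


def new_links_per_month(links):
    """Count newly seen backlinks per (year, month) as a rough link velocity."""
    return Counter((seen.year, seen.month) for _, seen in links)


shares = anchor_text_shares(backlinks)
velocity = new_links_per_month(backlinks)

for anchor, share in sorted(shares.items(), key=lambda kv: -kv[1]):
    print(f"{anchor}: {share:.0%}")
for month, count in sorted(velocity.items()):
    print(month, count)
```

A single keyword-rich anchor taking a large share, or a sudden jump in monthly counts, is the kind of pattern worth a closer look. What counts as "too much" is a judgement call and is not something this sketch decides.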

Should I just hire a high-quality backlink service?

The bad news is that 98% of the link building services online will do more harm than good. There are no shortcuts; avoid grey hat. If it sounds too good to be true, it is. Period. The other 2% are going to be expensive. But if you have a budget, contact me and I can help or point you in the right direction.

Over To You

I hope that the backlink resources mentioned above will help you in creating high-quality backlinks. With a little bit of hard work and effort, you can quickly boost your blog or website’s authority significantly.

I cannot stress this enough: you should avoid practicing unethical marketing tactics to increase your reputation. You will end up doing more harm than good in the long run.

Be alert, and avoid falling for quick fix techniques to build backlinks.

Always remember that building quality backlinks and boosting the domain authority of your website is a slow and steady process, and takes time.

What are your views on this?

Which best practices do you employ to build links to your website?

What are you going to do first?

Do let us know in the comments.


The Ideal Assessment Tracking Regime?

In various blog posts and Twitter exchanges I have critiqued several widely used approaches to assessment tracking and reporting. Reasons for my critique include the following:

Forcing teachers across very different disciplines to morph their organic, authentic subject specific assessments – including wide-ranging quality and difficulty models – into a common grading system at an excessive number of times determined by the needs of the system, not the teachers.

Using measures and scales that give a false impression of being reliable, valid, secure, absolute measures of standards or progress but are actually subjective, arbitrary or even totally spurious.

Basing ideas of attainment and progress on an imagined ladder of absolute standards when, usually, all that’s being measured are bell-curve positions within a cohort. This includes the widely inappropriate use of GCSE grades as milestone markers in a ladder 5 to 6 to 7 etc.

Projecting from small-scale teacher devised assessments to comparisons with national cohort distributions and national examination grades without the appropriate level of caution and acknowledgement of the margin of error.

A general failure to accept margins of error within assessment including the totally spurious use of decimalised sub-levelling for GCSE grades – bad at any point but especially bad a long way out from completing the course.

The false but widespread assumption that teachers are not only able to be consistent teacher to teacher within a subject team but also between subjects, even though no moderation processes exist to align Art, Maths, History and PE and there is no real sense in which learning in these domains can be compared.

The overly optimistic assumption that teachers can meaningfully evaluate student attitudes consistently across subjects so that their ‘attitude to learning grades’ say more about variation in student attitudes than teacher perceptions.

The attempt to track progression through a massive curriculum by checklisting against massively long statement banks using dubious mastery level descriptors, can-do statements, RAG coding and so on as if it is meaningful to state “I can explain how… I know that…” without reference to the depth of answers and the knowledge content included. Turning statement tracking into sub-levelled GCSE grade flightpaths is possibly the worst data-crime there is: spuriousness upon spuriousness. I’ve been hectored by email and online by sellers of these systems for ‘dissing’ their products but I’m sorry – they’re Emperor’s New Clothes.

Excessive workload-to-impact. Assessment should help teachers, not burden them. Far too much data that is collected does not support any form of response – its collection serves no purpose beyond creating false patterns and trajectories at a granularity that isn’t related to the reality of what students know and can do and the actions teachers take to deepen learning.

The idea that targets are inherently a good thing that provide a lever to secure better outcomes. This links to the confusion of terms for reported grades: Predicted, forecast, realistic, estimated, aspirational, working at, most likely to achieve, minimum target, FFT5, FFT20, current… laced with all the horrible psychology of teachers second guessing how they will be judged according to how accurate or optimistic they are.

Let’s face it – tracking systems and parents’ reports are riddled with spurious data noise. My son’s teachers have always been wonderful wonderful people; he’s been in capable hands. But some of his reports have made little sense: too much about the system, not enough about the child. Bad reports are unactionable. They fail the simplest of tests. Is my child doing well or not? Is s/he working hard enough or not? Is s/he on track to achieve the grades they should – or not? It’s possible to receive a report which leaves you none the wiser.

So… what should be done!?

I really do think there are multiple ways really sensible assessment and reporting can be done provided some key principles are adhered to:

Maximum value is given to authentic formative assessment expressed in the form most useful to teachers – not anyone else.

We report attainment based on what we actually know for sure – things like test scores – or we are absolutely explicit that we’re making a judgement with a margin of error.

We answer parents’ questions honestly and directly whilst acknowledging that the answers are judgements, not absolutes: How is my child doing? What else can they do to improve still further? Are they on track for future success given their starting point? We are absolutely explicit that teacher judgement about attitudes, progress and sometimes even attainment, is subjective and will vary teacher to teacher.

We do not collect more data than teachers can usefully use to lever improvement: ie the frequency and nature of assessment data is driven by the needs of teacher-student teaching-learning feedback and improvement interactions, not the macro accountability systems. Leaders go to where the small-scale data is; we don’t distort data into common formats as far as is possible.

Where we can, we reference discussions about attainment and progress to detailed information about what students need to know in order to achieve success away from the semantics of nebulous qualifying descriptors.

Processes are in place to benchmark internal data against national cohort data or, failing that, local reference data, in every subject for every programme of study.

We devise systems that support leaders, pastoral teams and parents to glean actionable information from the assessment data.

What can this look like in practice? There are multiple permutations, but here is a suggestion:

Baseline tests using widely used national systems: eg CATs, MidYIS.

FFT data is used for internal use only: using FFT5 to challenge any low expectations: stare at it: embrace the possibility! FFT20 might be more reasonable in lower prior attaining schools.

To track cohorts, if the curriculum matches, national standardised tests like GL Assessments can also be useful. However, every department needs an external reference mechanism to gauge standards: establish it and use it to evaluate standards at KS3.

Set up internal assessment regimes with multiple use of low stakes tests/assessments that track understanding of the curriculum and incremental improvement in performance. Teachers form a rounded view of student attainment in a format that suits them in a timeframe that is meaningful. Senior leaders develop an understanding of internal departmental data. Test scores, essay marks, portfolio assessments.

Ensure that a rigorous system of ‘gap closing’ happens whereby students use formative assessment feedback to improve their work and deepen their understanding. This is part of a feedback policy that is supported by excellent information about standards and knowledge-requirements so students, teachers and parents can engage in conversations about the actual learning content, not the numbers.

Ensure attitude grades are handled with caution and are supported by more detailed statements from a statement bank. Statement banks have been available to schools for 25 years and are underused. The emphasis is on describing the tangible actions students should take – not on trying to describe subjective features of their character.

At one mid-year point and one end-of-year point, gather information in a centralised data-drop to form a picture of where students are. In examination courses, there is value in linking this to terminal grades but not using ‘current grade’ which is an invalid notion. Instead, teacher judgement is used to forecast a likely terminal grade or grade range based on what is known to date, including FFT projections. However, nothing more granular than whole grades should be used; anything finer provides illusory precision. No school or MAT really needs more centralised data than this, especially if they are keeping close to the real formative information.

At KS3, you do not need exam-linked grades; this is false and premature. We can either report raw scores in some more major assessments with some reference point alongside – eg class average – or we convert raw scores into scaled, standardised scores for the year group. Or we simply use a simple descriptor system: Excellent, Very Good, Average etc or other defined scale that simply reports the teachers’ view of a student’s attainment compared to the cohort. It’s honest in its qualitative nature. There are no target grades; we refer parents to the formative feedback given to students about tangible improvement steps.
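To make the KS3 option of converting raw scores into standardised scores for the year group concrete, here is a minimal Python sketch. The scale (centre 100, spread 15), the function name and the sample scores are assumptions chosen for illustration; the source does not specify any particular scale. Results are rounded to whole numbers so the output does not suggest more precision than a single test supports.

```python
from statistics import mean, pstdev


def standardise(raw_scores, centre=100, spread=15):
    """Convert raw scores to standardised scores within one cohort.

    raw_scores maps a student label to a raw test score. The result says
    where each student sits relative to this cohort only; it is not an
    absolute standard or an exam grade.
    """
    mu = mean(raw_scores.values())
    sigma = pstdev(raw_scores.values())
    if sigma == 0:
        # Everyone scored the same, so everyone sits at the centre.
        return {name: centre for name in raw_scores}
    return {
        name: round(centre + spread * (score - mu) / sigma)
        for name, score in raw_scores.items()
    }


# Hypothetical year-group scores on one assessment.
year_group = {"Student A": 40, "Student B": 50, "Student C": 60}
scaled = standardise(year_group)
print(scaled)
```

Because the scores are relative to the cohort, they describe bell-curve position rather than progress up a ladder of standards, which matches the caution raised earlier about what such measures can and cannot show.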

Is there an example?

Well, yes there is. I found most of what I’ve described here in action at Saffron Walden County High School in Essex. They have a very sensible set of systems.

Strong use of formative assessment: lots of feedback and ‘close the gap’ responses; students focus on continual improvement. It’s strongly evident in books and lessons.

Use of a SWCHS grade that typically falls between FFT5 and FFT20, informed by teacher judgements. Progress reports use simple descriptors at KS3 – not false GCSE grade projections. At KS4, they use the very sensible terminology of ‘forecast’. Forecasting implies margin of error, balance of judgement with evidence, not being set in stone.

Progress checks are run and reported to parents twice yearly for KS3 and three times at KS4. Attitude grades are used accompanied by a comprehensive set of statements in a statement bank, all run through configuring the Go4Schools system. Teachers have a wide range of options to describe where students need to focus in order to improve, all through a set of codes.

Rather brilliantly, their data manager has devised an Excel-based system for exporting codes for the statements into a condensed format that pastoral staff can access through their VLE. It looks like this:

At a glance, a year leader can see the issues via the coding and, where needed, can quickly refer to the origins by subject. This is rich info from a data drop: it goes beyond crude attainment averages or subjective comments about ‘working harder’. It goes beyond making major inferences from the attainment grade data – which is impossible without knowing what the formative data and curriculum mapping is. It indicates homework, work rate, attendance, in-class contributions – whatever the specifics are, neatly condensed to inform conversations.
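The school's actual tool is Excel-based and its layout is not reproduced here. As a rough illustration of the same idea, the Python sketch below condenses per-subject statement codes into one line per student while keeping the subject each code came from. The codes (`HW2`, `AT1`, `WR3`), the row layout and the function names are all hypothetical.

```python
from collections import defaultdict

# Hypothetical export rows: (student, subject, statement code).
rows = [
    ("Student A", "Maths", "HW2"),
    ("Student A", "History", "HW2"),
    ("Student A", "Art", "AT1"),
    ("Student B", "Maths", "WR3"),
]


def condense(rows):
    """Group statement codes per student, keeping the subjects behind each code."""
    summary = defaultdict(lambda: defaultdict(list))
    for student, subject, code in rows:
        summary[student][code].append(subject)
    return {student: dict(codes) for student, codes in summary.items()}


def one_line(codes):
    """Render one student's codes compactly, most frequently used code first."""
    ordered = sorted(codes.items(), key=lambda kv: (-len(kv[1]), kv[0]))
    return "; ".join(
        f"{code} x{len(subjects)} ({', '.join(subjects)})"
        for code, subjects in ordered
    )


condensed = condense(rows)
for student, codes in condensed.items():
    print(student, "|", one_line(codes))
```

Sorting by how many subjects raised a code puts recurring issues first, which is what lets a year leader spot a pattern at a glance and then trace it back to individual subjects.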

So – there you are. No ludicrously big knowledge statement trackers, no spurious can-do statements, no spurious flight paths, no false-granularity GCSE grades. Just sensible organic authentic assessment packaged up to be useful, informative and, where appropriate, linked to exam outcomes, with high expectations built-in. It’s not rocket science. But it comes from a place of intelligent understanding of curriculum and assessment and a spirit of giving value to professional expertise.

The Ideal Assessment Tracking Regime? published first on https://medium.com/@KDUUniversityCollege


The following blog post, unless otherwise noted, was written by a member of Gamasutra’s community.

The thoughts and opinions expressed are those of the writer and not Gamasutra or its parent company.

Co-authored with Andrea Abney

Andrea is an accomplished User Researcher. She has led UX Research teams at Disney Interactive in the past and currently spearheads User Research at mobile gaming juggernaut Scopely.

If usability testing lies at the heart of UX validation then building a sound prototype is the key to unlock its full potential. - Om

We all know that UX design relies heavily on UX research and usability testing for generating valuable insights which shape the future of products and businesses.

Image above shows various touch points for prototype testing in pre-prod cycle.

Not only will applying UX methodology to generate user behaviour insights before building a feature save multiple iterative efforts post launch but also millions of dollars worth of dev. time. In the previous walkthrough series we covered how usability testing should be set up and conducted in sanitised conditions with a moderator, what aspects to avoid and best practices.

As promised, we will walk you through a hypothetical feature and apply all the UX steps we would in a real world environment.

A typical agile flow process for a feature in pre-production generally looks like this:

High Level Brief

Product managers across organizations create a high level brief of a feature regarding the app or game they are working on. This brief may be targeted at boosting KPIs like retention, monetisation, engagement or a new USP not seen in competitor products.

Example #1 demonstrates what a one-page high level brief may look like for a game's "Inventory" feature:

High level concepts, as above, will translate into more granular specs, fleshing out details and forming an early base for tech dependencies like front end, platform or backend. It could also bring in Game designers/Business Analysts, who work closely with other leads to generate a concrete spec.

NOTE: At this stage there is input from UX from a research perspective. Included will be any pre-built target personas, demographic studies or competitor benchmarks to integrate best practices for this specific feature.

Sketches & Low Fidelity Wireframes

Once the brief has been iterated upon by stakeholders, it is handed over to the UX designers to build flows to better understand how players will journey through different scenarios, screens and required actions.

After blocking out the flow, sketch sessions are held to brainstorm different permutations and combinations of content placement and action hierarchy. The wireframe creation stage can involve exploration starting from white-boarding, which is then further refined to create digital counterparts.

It’s a good idea to start with some early pencil sketches or white-boarding to create a wide range of options. Sketching gives iteration speed and removes tunnel vision which is often seen while designing a software/game.

Some best practices and pitfalls to avoid when developing wireframes:

Explore a Wide Spectrum: It is good to come up with at least 2-3 variations of a feature, not only in terms of the layout but also functionality and aspirational design. Here is why:

Most features start out as MVPs. What makes it into the game depends on costing in terms of dev. effort, projected timeline and release schedule.

MVP versions may be sufficient for launch, but it is good to have at least one aspirational version designed as well. For example: use of micro animations, 3D elements for more immersion vs 2D elements, parallax mapping etc. Even if the need of the hour is to launch with a low-tech (low dev cost) feature, it can gradually be updated to the aspirational one over various updates.

It often happens that road maps shuffle as we move along and some features are dropped. That could be the breathing space to make a push for your aspirational version, if you have it ready.

By future proofing your aspirational design along with the MVP version, you avoid context switching as both versions can be designed at one go, rather than designers having to shuffle back and forth to solve the same problem over and over again.

Resist Temptation to Use UI: UX designers should avoid the lure of designing their initial flows using hacked-up UI or UI widgets from the existing product library just to make flows look prettier or more aspirational.

I have often seen UX designers succumb to the pressure of using UI screens.

Why? There are several reasons for it:

1) Product groups or key stakeholders may not be used to looking at wireframe flows alone and making decisions regarding the feature. They may not understand why everything is in B/W or grey scale while the product looks and feels different. This could also be because of their past conditioning.

2) Sometimes brief/feature owners may directly approach the UI designers to build the flow using UI screens as:

They may not think UX plays a role or is important enough in the feature

They may be pressed for time to get the spec. approved or may not feel confident about people evaluating solely on basis of wireframes

It is merely a matter of educating the product group and establishing a culture of UX. When a flow or design is in the approval stage, it needs to be evaluated based on low-fidelity wireframes. Only once the flows and the spec have been hardened should it move on to UI and be presented for final approval.

Question: How do you ask your top leadership to adapt to this change? Present the arguments below:

Tell them how insisting on UI flows at the UX design stage causes the following problems:

Hampers iteration speed: Imagine you are dealing with a flow that has multiple screens and is still in flux, receiving comments from various stakeholders, leads and even engineering, where a small change in text could have gigantic ramifications.

UI designers then have to make the change on multiple screens (every screen in the flow), which wastes valuable production time. If there are 10X changes, which do you think will be easier to quickly iterate on - wireframes or UI visuals?

Higher % margin for error: In any production environment, time is a luxury. When UI is brought into the flow stage, most of the effort and time goes into putting the flow together and making it pixel perfect, rather than into thinking about edge cases and use cases. While UX generally accounts for 95% of all use cases, I have seen the margin for error go up when UX designers indulge in high-fidelity designs early in the UX phase.

Example above shows the amount of time a UX designer spends on various processes.

UX & UI stepping on each other’s toes: In many cases, UX designers may not be well versed in visual design, so when a UX designer is forced to build UI flows, two departments end up stepping on each other’s toes. With UI designers solving UX issues, the potential for friction heightens. Not to mention the inefficient utilization of each resource’s strengths.

Assembling a Prototype

Once a flow has been iterated upon and signed off, it is a good idea to build a prototype for the first round of usability testing. This also helps the higher-ups and dev. teams understand how a feature looks on the device/screen.

Remember: Until this point we have been toying with assumptions & ideas of what users need & how they would like us to design them. In order to validate those assumptions, it is important to test them out!

Tools for Prototyping

Prototypes can vary from simple printouts of your wireframes to PowerPoint click-throughs to on-device prototypes built with one of the designer-friendly tools available in the market, like InVision.

Be it quick printouts, PowerPoint click-throughs or device-ready prototypes, ensure you test your designs. This is the only window early in the design phase to get actual user feedback!

Designer-friendly tools like the above allow you to bring your wireframes to life.

Below is an example of a prototype we have assembled in a designer-friendly software based on brief, spec and wireframes shown above, which allows us to test a device ready version with our TA group to generate qualitative data.

[embedded content]

Conducting a Test

For conducting a test it is extremely important to liaise with a user researcher who has expertise in conducting these kinds of test sessions, like Andrea here, who is not as invested in the project as the product/development team and can run the sessions in a remote lab. This helps mitigate biases, so that the analysis is based on feedback rather than personal opinions (you can read more about biases to avoid here).

Preparation for conducting a test

Recruiting: It's crucial you recruit the right test subjects to get the most valuable feedback.

Facebook, Google analytics and existing online communities are a goldmine for recruiting test subjects!

Know who you are designing for and then recruit similar people.

Recruit the target demo for your product. This means recruiting people who use similar products. For example players who play mid-core games of competitors.

Talk to them on the phone before they come in. Have a conversation about their behaviours and products they use, make sure they are really in your target demo.

It's good to have a mix of user/player profiles like beginners, average-level users and power users. Based on their experience, their expectations of the product will change. A power user may not need much on-boarding or instructions but a beginner might. A mix prevents skewing the experience design towards any particular archetype.

In Part-2 of this series, we will look at results of prototype testing in real time with real players & the process of gathering & sorting inferences for folding feedback into the design. Stay tuned!

If you liked this post, you can check out my other Game UX Deconstructs. Feel free to connect with me for my future articles.


Build Better Strategies! Part 2: Model-Based Systems

Trading systems come in two flavors: model-based and data-mining. This article deals with model-based strategies. The algorithms are often astoundingly simple, but properly developing them has its difficulties and pitfalls (otherwise anyone would be doing it). Even a significant market inefficiency gives a system only a relatively small edge. A little mistake can turn a winning strategy into a losing one. And you will not necessarily see this in the backtest.

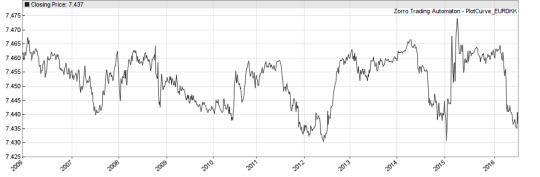

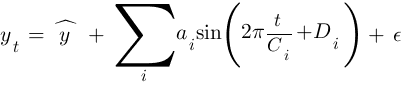

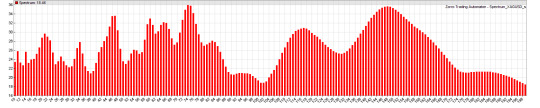

Developing a model-based strategy begins with the market inefficiency that you want to exploit. The inefficiency produces a price anomaly or price pattern that you can describe with a qualitative or quantitative model. Such a model predicts the current price $y_t$ from the previous price $y_{t-1}$ plus some function $f$ of a limited number of previous prices plus some noise term $\epsilon$:

$$y_t = y_{t-1} + f(y_{t-1}, \dots, y_{t-n}) + \epsilon$$

The time distance between the prices $y_t$ is the time frame of the model; the number $n$ of prices used in the function $f$ is the lookback period of the model. The higher the predictive $f$ term in relation to the nonpredictive $\epsilon$ term, the better the strategy. Some traders claim that their favorite method does not predict, but ‘reacts on the market’ or achieves a positive return by some other means. On a certain trader forum you can even encounter a math professor who re-invented the grid trading system, and praised it as non-predictive and even able to trade a random walk curve. But systems that do not predict $y_t$ in some way must rely on luck; they can only redistribute risk, for instance exchange a high risk of a small loss for a low risk of a high loss. The profit expectancy stays negative. As far as I know, the professor is still trying to sell his grid trader, still advertising it as non-predictive, and still regularly blowing his demo account with it.

Trading by throwing a coin loses the transaction costs. But trading by applying the wrong model – for instance, trend following to a mean reverting price series – can cause much higher losses. The average trader indeed loses more than by random trading (about 13 pips per trade according to FXCM statistics). So it’s not sufficient to have a model; you must also prove that it is valid for the market you trade, at the time you trade, and with the used time frame and lookback period.
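The coin-toss claim can be checked with a small simulation. The following Python sketch is an illustration only: the function name, the Gaussian price moves, and the cost of 0.5 pips per trade are all assumptions chosen for the demo, not figures from this article. It enters long or short at random on a driftless price and averages the result per trade.

```python
import random

def coin_flip_trading(n_trades=100_000, cost=0.5, seed=1):
    """Average P&L per trade for random long/short entries on a driftless price.

    Each trade is held for one step. The price change is a symmetric random
    draw, so the expected gross profit is zero and only the cost remains.
    All parameters are illustrative assumptions.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_trades):
        direction = rng.choice((1, -1))   # coin flip: long or short
        move = rng.gauss(0.0, 10.0)       # price change in pips, no drift
        total += direction * move - cost  # gross result minus transaction cost
    return total / n_trades

avg = coin_flip_trading()
print(f"average P&L per trade: {avg:.2f} pips")
```

With enough trades the average settles close to the negative of the assumed cost, which is the sense in which random trading "loses the transaction costs".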

Not all price anomalies can be exploited. Limiting stock prices to 1/16 fractions of a dollar is clearly an inefficiency, but it’s probably difficult to use it for prediction or make money from it. The working model-based strategies that I know, either from theory or because we’ve been contracted to code some of them, can be classified in several categories. The most frequent are:

1. Trend

Momentum in the price curve is probably the most significant and most exploited anomaly. No need to elaborate here, as trend following was the topic of a whole article series on this blog. There are many methods of trend following, the classic being a moving average crossover. This ‘hello world’ of strategies (here the scripts in R and in C) routinely fails, as it does not distinguish between real momentum and random peaks or valleys in the price curve.
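For readers who have not seen it, a moving average crossover can be sketched in a few lines of Python. This is a generic illustration, not the R or C script referred to above; the function names, the very short periods, and the toy price list are assumptions made for the example.

```python
def sma(prices, period):
    """Simple moving average; None until enough prices are available."""
    out = []
    for i in range(len(prices)):
        if i + 1 < period:
            out.append(None)
        else:
            window = prices[i + 1 - period:i + 1]
            out.append(sum(window) / period)
    return out

def crossover_signals(prices, fast=2, slow=4):
    """Return (index, 'long'/'short') whenever the fast SMA crosses the slow one."""
    f, s = sma(prices, fast), sma(prices, slow)
    signals = []
    for i in range(1, len(prices)):
        if None in (f[i - 1], s[i - 1], f[i], s[i]):
            continue  # not enough history for both averages yet
        if f[i - 1] <= s[i - 1] and f[i] > s[i]:
            signals.append((i, "long"))    # fast average crosses above slow
        elif f[i - 1] >= s[i - 1] and f[i] < s[i]:
            signals.append((i, "short"))   # fast average crosses below slow
    return signals

# Toy price series (hypothetical): a dip, a rise, then another decline
prices = [10, 9, 8, 7, 8, 9, 10, 11, 10, 9, 8, 7]
print(crossover_signals(prices))
```

The rule fires on every crossing, whether it comes from real momentum or from a random peak or valley, which is why it needs a regime filter in practice.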

The problem: momentum does not exist in all markets all the time. Any asset can have long non-trending periods. And contrary to popular belief this is not necessarily a ‘sidewards market’. A random walk curve can go up and down and still have zero momentum. Therefore, some good filter that detects the real market regime is essential for trend following systems. Here’s a minimal Zorro strategy that uses a lowpass filter for detecting trend reversal, and the MMI indicator for determining when we’re entering a trend regime:

    function run()
    {
      vars Price = series(price());
      vars Trend = series(LowPass(Price,500));
      vars MMI_Raw = series(MMI(Price,300));
      vars MMI_Smooth = series(LowPass(MMI_Raw,500));

      if(falling(MMI_Smooth)) {
        if(valley(Trend))
          reverseLong(1);
        else if(peak(Trend))
          reverseShort(1);
      }
    }

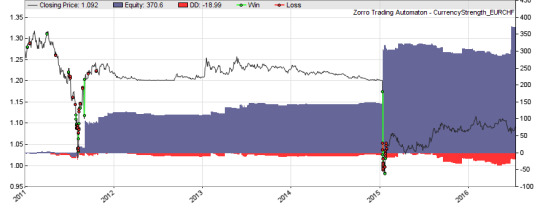

The profit curve of this strategy:

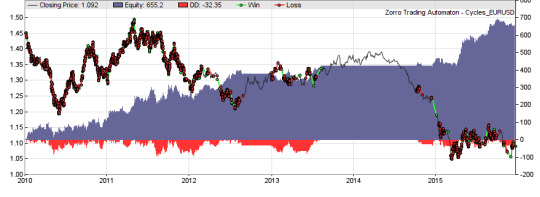

(For the sake of simplicity all strategy snippets on this page are barebone systems with no exit mechanism other than reversal, and no stops, trailing, parameter training, money management, or other gimmicks. Of course the backtests mean in no way that those are profitable systems. The P&L curves are all from EUR/USD, an asset good for demonstrations since it seems to contain a little bit of every possible inefficiency).

2. Mean reversion

A mean reverting market believes in a ‘real value’ or ‘fair price’ of an asset. Traders buy when the actual price is cheaper than it ought to be in their opinion, and sell when it is more expensive. This causes the price curve to revert back to the mean more often than in a random walk. Random data are mean reverting 75% of the time (proof here), so anything above 75% is caused by a market inefficiency. A model:

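$$y_t = y_{t-1} - \frac{1}{1+\lambda}\,(y_{t-1} - \hat{y}) + \epsilon$$

$y_t$ = price at bar t

$\hat{y}$ = fair price

$\lambda$ = half-life factor

$\epsilon$ = some random noise term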

The higher the half-life factor, the weaker the mean reversion. The half-life of mean reversion in price series is normally in the range of 50-200 bars. You can calculate $\lambda$ by linear regression between $y_{t-1}$ and $(y_{t-1} - y_t)$. The price series need not be stationary to experience mean reversion, since the fair price is allowed to drift. It just must drift less than in a random walk. Mean reversion is usually exploited by removing the trend from the price curve and normalizing the result. This produces an oscillating signal that can trigger trades when it approaches a top or bottom. Here’s the script of a simple mean reversion system:

    function run()
    {
      vars Price = series(price());
      vars Filtered = series(HighPass(Price,30));
      vars Signal = series(FisherN(Filtered,500));
      var Threshold = 1.0;

      if(Hurst(Price,500) < 0.5) { // do we have mean reversion?
        if(crossUnder(Signal,-Threshold))
          reverseLong(1);
        else if(crossOver(Signal,Threshold))
          reverseShort(1);
      }
    }
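The regression mentioned above for estimating the half-life factor can be sketched in plain Python. This is a hedged illustration, not part of the Zorro script: it assumes the mean reversion model has the form y_t = y_{t-1} - (y_{t-1} - fair)/(1 + λ) + ε with a constant fair price, and the function names, the simulated data, and all parameter values are made up for the demo.

```python
import random

def simulate(lam, fair=100.0, n=50_000, noise=1.0, seed=7):
    """Simulate y_t = y_{t-1} - (y_{t-1} - fair)/(1 + lam) + eps (assumed model)."""
    rng = random.Random(seed)
    k = 1.0 / (1.0 + lam)  # strength of the pull toward the fair price
    y = [fair]
    for _ in range(n - 1):
        prev = y[-1]
        y.append(prev - k * (prev - fair) + rng.gauss(0.0, noise))
    return y

def estimate_lambda(y):
    """OLS slope of (y_{t-1} - y_t) on y_{t-1}; under the assumed model
    the slope approximates 1/(1 + lam)."""
    x = y[:-1]
    d = [a - b for a, b in zip(y[:-1], y[1:])]
    mx = sum(x) / len(x)
    md = sum(d) / len(d)
    cov = sum((a - mx) * (b - md) for a, b in zip(x, d))
    var = sum((a - mx) ** 2 for a in x)
    slope = cov / var
    return 1.0 / slope - 1.0

lam_hat = estimate_lambda(simulate(lam=9.0))
print(f"estimated lambda: {lam_hat:.2f}")
```

On simulated data the estimate should land near the value used to generate the series. On real prices the fair price drifts and the noise is not Gaussian, so the result is only a rough guide.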