

Annotations for the latest Week in Eth News

I tweeted this week:

Feels like an accurate reflection of the broader week in the Ethereum ecosystem. Just take a look at the most clicked:

“Yield farming” is the idea of figuring out how to leverage up to get the most yield, where part of the yield is usually a native token for the platform/protocol. (Please do so very cautiously...if you get leveraged up, you’re juicing returns but taking large risk of losses).

With Compound, this meant various “trades,” which changed through the week. First people were lending (and resupplying) Tether, because it had the highest rates. Then the trade switched to BAT, because some whale figured out (the advantages of scale!) that it wouldn’t be hard to drive BAT rates even higher than Tether (USDT), and all of a sudden an insane amount of BAT moved to Compound. I kid you not: at the moment there is about $250m worth of BAT in Compound, though that’s only 6% of BAT’s supply, since the same tokens get circulated through a few times.

Leveraged up? Be careful! If BAT price doubles, how many people would get liquidated?

Hint: it’s those of you who are over 50% on the borrow limit shown on your account page in the Compound UI. Looking at exchanges, the order books are rather thin: how much would it cost a liquidator to drive the BAT price up 2x, compared to how much it could make liquidating? Or what if Brave announces a big partnership? Crypto is an adversarial environment (ahem, look at all those YouTubers with huge followings trying to sell you on the latest pump of some worthless token).

These order books are thinner than normal because…so much BAT got sucked out of the exchanges’ order books and into Compound. So the price is now easier to push higher.
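To make the 50% hint concrete, here is a rough sketch of the borrow-limit arithmetic. It assumes you supplied collateral whose price stays flat and borrowed BAT against it. The 75% collateral factor, the dollar amounts, and the function names are hypothetical. Compound's real accounting (interest accrual, multiple assets, the exact liquidation check) is more involved than this:

```javascript
// Rough sketch of borrow-limit usage, NOT Compound's actual contract logic.
// Assumes: the collateral's price stays flat and only the borrowed asset's price moves.
function borrowLimitUsage({ collateralUsd, collateralFactor, borrowedUnits, borrowPriceUsd }) {
  const borrowLimitUsd = collateralUsd * collateralFactor; // max you may borrow
  const borrowedUsd = borrowedUnits * borrowPriceUsd;      // current debt value
  return borrowedUsd / borrowLimitUsd;                     // 1.0 means 100% of the limit
}

// Usage after the borrowed asset's price is multiplied by `priceMultiplier`.
function usageAfterPriceMove(position, priceMultiplier) {
  return borrowLimitUsage({
    ...position,
    borrowPriceUsd: position.borrowPriceUsd * priceMultiplier,
  });
}

// Hypothetical position: $1,000 of collateral, 75% factor, 2,000 BAT borrowed at $0.25.
const position = { collateralUsd: 1000, collateralFactor: 0.75, borrowedUnits: 2000, borrowPriceUsd: 0.25 };

const before = borrowLimitUsage(position);      // ~0.67, about 67% of the limit
const after = usageAfterPriceMove(position, 2); // ~1.33, over 100%
console.log(`before: ${(before * 100).toFixed(0)}%, after 2x: ${(after * 100).toFixed(0)}%, liquidatable: ${after > 1}`);
```

In this simplified model, usage scales linearly with the borrowed asset's price. So anyone above 50% of their limit ends up above 100% after a 2x move.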

Meanwhile, Balancer started its “liquidity mining” (same thing as yield farming) before Compound, but just released its token today. Last I looked it was trading at $15, for a fully diluted market cap of 1.5 billion USD.

Signs of a bull market? Feels like it to me.

These aren’t the only liquidity mining opportunities - and you’ll see a bunch more people do it now that this is what is bringing in users.

Back to Compound: COMP got listed on Coinbase Pro today and the price actually fell, as all the people who had “farmed” it got liquid, plus presumably some others as well. However, it eventually held (at time of writing) at about $280 (a 2.8b USD fully diluted valuation). It had been at $380, and looking at the order book when it opened, it appeared that the first trade (for a tiny amount) happened at $440. We’ll see what happens when Coinbase opens it to retail.
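The fully diluted figures quoted here are just price times maximum token supply. This quick sketch backs the implied supply out of the numbers above and assumes no outside supply data:

```javascript
// Fully diluted valuation (FDV) = price per token * maximum token supply.
const fullyDilutedValuation = (priceUsd, maxSupply) => priceUsd * maxSupply;

// Working backwards: the supply implied by a quoted price and FDV.
const impliedMaxSupply = (priceUsd, fdvUsd) => fdvUsd / priceUsd;

const compSupply = impliedMaxSupply(280, 2.8e9); // COMP at ~$280, ~2.8b FDV
const balSupply = impliedMaxSupply(15, 1.5e9);   // BAL at ~$15, ~1.5b FDV

// FDV implied by the $440 first trade on Coinbase Pro, using the implied COMP supply.
const compFdvAtOpen = fullyDilutedValuation(440, compSupply);
console.log(compSupply, balSupply, compFdvAtOpen);
```

Those work out to 10 million COMP and 100 million BAL. The $440 first trade corresponds to a 4.4b USD fully diluted valuation.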

The DeFi narrative is strong. Seems clear that there is some demand for folks wanting to own a bit of what might be the next big financial platforms.

-------

The final thing in my intro is always the list of high-level items I suggest Eth holders read:

Matter Labs’ ZK Sync rollup is live – tiny transaction fees, withdrawals to Eth mainnet in 15 mins, 300 transactions per second (with 2000 tps coming)

Reddit announces scaling competition to move Reddit’s community points to mainnet

It seems the mysterious and massive transaction fees were from a hacked Korean ponzi called GoodCycle. Various miners have handled it differently: Ethermine (already paid out), Sparkpool (said it would pay out, but then the victim was identified; unclear to me if it’s resolved yet), f2pool (said they’d return it to a new address).

ETH disrupting SWIFT: why fintech VCs are missing DeFi

As always, reverse order:

Looking at ETH as a disruptor of SWIFT is a pretty interesting lens. I’ve always rolled my eyes a little at “fintech” because it often seems like playing fast and loose with regulations, then, once you reach a certain scale, hiring lawyers and lobbyists to hopefully make your issues go away. This article argues that the real innovation is further down the financial “stack”: Ethereum taking the place of antiquated SWIFT.

Personally I don’t think the massive mistake/hack transaction fees are a big deal, but it seems to be something that the crypto clickbait jumps on. It’s not a danger to any normal user. Just check the transaction fee before sending.
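Checking the fee is simple arithmetic. Under the current (pre-EIP-1559) fee model, a transaction's maximum fee is its gas limit times its gas price. Below is a minimal sketch that uses BigInt to avoid floating-point rounding in wei. The 1 ETH threshold mirrors Geth's new default mentioned in the Ecosystem section. The function names are hypothetical:

```javascript
const WEI_PER_GWEI = 10n ** 9n;
const WEI_PER_ETH = 10n ** 18n;

// Maximum fee in wei = gas limit * gas price (gas price given in whole gwei).
function maxFeeWei(gasLimit, gasPriceGwei) {
  return BigInt(gasLimit) * BigInt(gasPriceGwei) * WEI_PER_GWEI;
}

// Hypothetical sanity check: flag anything above a fee cap (default 1 ETH).
function exceedsFeeCap(feeWei, capWei = WEI_PER_ETH) {
  return feeWei > capWei;
}

// A simple ETH transfer (21,000 gas) at 30 gwei costs 0.00063 ETH.
const normalFee = maxFeeWei(21000, 30);
// A fat-fingered gas price of 10,000,000 gwei on the same transfer costs 210 ETH.
const fatFingerFee = maxFeeWei(21000, 10000000);

console.log(exceedsFeeCap(normalFee), exceedsFeeCap(fatFingerFee)); // false true
```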

Reddit wants to put its Community Points on Eth mainnet, likely through a rollup or sidechain. Very neat - it does feel like their deadline is just a little ambitious for rollups which might make them use a sidechain, which would be a bit of a shame if they can get better trust assumptions from a rollup by waiting an extra month or two.

And speaking of rollups, ZkSync is live. Fast, cheap transfers with the data onchain and the execution offchain. Woot!

Eth1

Trinity v0.1.0-alpha.36 (Python client) – BeamSync improvements, metrics tracking (influxDB/Grafana), partial eth/65 support

Updated Eth on ARM images. Geth fast syncs a full node in 40 hours on 8GB Raspberry Pi4

Miners began bumping up the gas limit (12m now), which sparked some polemics about the tradeoff between state growth versus user fees. Higher gas limit resulted in safelow gas fees in the teens for the first time in weeks.

Speaking of yield farming ruling the week, gas prices are back to 30 gwei despite throughput going up 20%. My strong suspicion is that this has a lot to do with yield farming.

For the record, Ethereum’s maximum throughput right now is about 44 transactions per second. It’s an easy calculation: 12m gas per block, divided by a 13.1-second block time, divided by 21,000 gas per simple ETH transfer.
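Here is the same back-of-the-envelope calculation as code, in case you want to plug in other gas limits or block times. The inputs are the figures quoted above, and 21,000 gas is the cost of a plain ETH transfer:

```javascript
// Upper bound on simple ETH transfers per second, ignoring everything else
// that competes for block space (token transfers, DeFi calls, etc.).
function maxTransfersPerSecond(gasLimitPerBlock, blockTimeSeconds, gasPerTransfer = 21000) {
  const gasPerSecond = gasLimitPerBlock / blockTimeSeconds;
  return gasPerSecond / gasPerTransfer;
}

const tps = maxTransfersPerSecond(12e6, 13.1);
console.log(tps.toFixed(1)); // 43.6
```

The result is linear in the gas limit, so raising the gas limit by 20% raises this ceiling by 20% as well.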

Of course, that doesn’t include rollups, which put their data onchain to the point where they are arguably layer 1.5.

Personally I think we should make this gas limit increase “temporary” when gas prices go back down.

Eth2

Prysmatic (Go) client update – stable Onyx testnet, 80% validators community run, RAM usage optimizations

Nimbus (Nim) client update – up to spec, 10-50x processing speedup, splitting node and validator clients

SigmaPrime’s update on their Eth2 fuzzer – found some Prysmatic bugs, fuzzing Lodestar (Javascript client), Lighthouse ENR crate bug, dockerizing the fuzzer so the community can run it

Jonny Rhea’s Packetology posts (one and two) on identifying validators

Attack nets – a testnet specifically for attacks

When Sigma Prime’s fuzzer is dockerized, does “are you fuzzing any eth2 clients” become the cool new question that Eth folks ask each other, instead of “are you running any testnets?”

There’s not much more to say otherwise. This is the final slog to getting the eth2 chain launched. The final tinkering, the testnets, thinking about validator privacy and cost of attack, an attack net for white hats.

Layer2

Matter Labs’ ZK Sync rollup is live – tiny transaction fees, withdrawals to Eth mainnet in 15 mins, 300 transactions per second (with 2000 tps coming)

Minimally viable rollback in Validium/Volition

The flipside of high gas prices is layer2. It’s hard to get people very excited about layer2 when you can get onchain transactions done in a couple of minutes at 1 gwei. At 30 gwei, people get more excited about layer2, and stuff is working.

Network effects are real: layer2 also becomes much better the more people use it. So there is a silver lining to higher gas prices: they provide the incentive to push people to superior alternatives. Obviously a really fast and cheap ETH/token transfer rollup becomes more valuable the more people use it.

Crypto

GKR inside a SNARK to speed up SNARK proving 200x

Attacking the Diogenes setup ceremony for Eth2’s VDF

Isogenies VDFs: delay encryption

Kate polynomial commitments explainer from Dankrad Feist

Reputable List Curation from Decentralized Voting: Crites, Maller, Meiklejohn and Mercer paper on constructing private TCR voting

Debut of the “crypto” section. It seemed like it was getting lost in the general.

Placement (compared to other sections) was rather random. Categorization can be somewhat arbitrary; it’s something I hope the newsletter will keep evolving.

This newsletter is made possible thanks to Matcha by 0x!

0x is excited to sponsor Week in Ethereum News and invite readers to try out our new DEX!

Sign up here to get early access to Matcha, your new home for fast, secure token trading.

Stuff for developers

Waffle v3 with ethers v5 support

WalletConnect v1 release, now with mobile linking

ethers-rs, a port of ethers to Rust

Solidity v0.6.10: error codes, and a bugfix for externally calling a function that returns variables with calldata location

Inheritance in Solidity v0.6

Sorting without comparison in Solidity

Create dynamic NFTs using oracles

Deploying with libraries on Remix IDE

Wyre’s WalletPasses allow push notifications for dapps

Bunch of neat stuff in here. I’ve said it before, ethers is increasingly the thing that people use, even while most of the eth tutorials are still using web3js.

Code security

OpenZeppelin found a bug that affected 61 Argent wallets

Bancor bug: public method allowed anyone to drain user balances. Amusingly, the white hat draining got frontrun

DeFiSaver exchange vulnerability. They white hat drained it and also got frontrun.

Database of audit reports

Check out this newsletter’s weekly job listings below the general section

A special security section to break up the “stuff for devs” since it was a little big.

The whole “white hat drainers get frontrun” theme was...well, I used the word amusing in the newsletter, but I don’t think that’s quite the right word.

Ecosystem

Reddit announces scaling competition to move Reddit’s community points to mainnet

It seems the mysterious and massive transaction fees were from a hacked Korean ponzi called GoodCycle. Various miners have handled it differently: Ethermine (already paid out), Sparkpool (said it would pay out, but then the victim was identified; unclear to me if it’s resolved yet), f2pool (said they’d return it to a new address).

By default, Geth will no longer accept transaction fees over 1 eth

3box on demystifying the many facets of digital identity

The death (and web3 rebirth?) of privacy

Ethereum Foundation invests in Unicef’s CryptoFund startups

Unicef’s press release didn’t mention the Ethereum Foundation and barely mentioned Ethereum, even though EF did in fact provide the capital. Very strange.

And yes, I still love a good privacy essay. I’m not a privacy nut, but I do think we should at least have the right to know when our every online action is being surveilled.

Enterprise

WEF, IADB and Colombian government project to reduce corruption in procurement

EY launches crypto tax reporting app

EY continues to push things for enterprise, and dealing with taxes is presumably just one more hurdle that they’re knocking down. Of course, many enterprises also still refuse to own crypto (even on a centralized exchange), so I remain curious as to whether the anti-corruption procurement project in Colombia suffers a similar problem: the Colombian government requires secret bids, so for the project to actually be used, they either have to change the law to try it, or integrate...something like EY’s Nightfall.

DAOs and Standards

EIP2733: Transaction package

Anonymous voting using MACI and BrightID

Arguably the anonymous voting using MACI could’ve been in the crypto section, but it felt slightly more applicable here.

Application layer

$COMP was distributed and liquidity mining (“yield farming”) blew up. Compound passed Maker for #1 on DeFiPulse, and $COMP has had a fully diluted market cap over $3.5 billion

Uniswap v2 passes v1 in liquidity

Streamr’s data unions framework is live for anyone to create their own

5m KNC burned milestone

Yield farming on steroids from Synthetix, Ren, and Curve

Yield farming for normies (and the risks!): tweetstorms from Tony Sheng

This artwork is always on sale: v2, with a 100% per year tax instead of 5%

My weekly tally of what fraction of the app layer section is DeFi: 5/7.

I was somewhat surprised Uniswap v2 took over so quickly. I suppose that’s a data point for “the power of frontends.”

Tokens/Business/Regulation

ETH disrupting SWIFT: why fintech VCs are missing DeFi

Nick Tomaino on the economics of Eth2

Personal token vote on Alex Masmej’s life decisions

Liechtenstein company tokenizes 1.1m USD collectible Ferrari

Opyn: hedging with calls

It does seem like the economics of Eth2 are still vastly underrated by “crypto” at large. In my view that largely reflects skepticism that Eth2 will ever launch, as Silicon Valley turned very skeptical on ETH 2 years ago when Ethereum pivoted away from FFG.

New tokens from protocols valued in the billions and tokenized Ferraris. It’s starting to feel like the true beginnings of a bull market.

No general section this week; I was as surprised as you, but lately the general section had been dominated by cryptography, which now has its own section.

That’s it for the annotations!

Please RT this on Twitter if you enjoyed it:

https://twitter.com/evan_van_ness/status/1275551414350237702

Job Listings

Synthetix: Deep Solidity engineer, 2+ years exp & US/EU friendly timezone

Chainlink: Product Manager for Blockchain Integrations and Lead Test Engineer

0x is hiring full-stack, back-end, front-end engineers + 1 data scientist

Celer Network: Android developer

Trail of Bits is looking for masters of low-level security. Apply here.

Want your job listing here? $250 per line (~75 character limit including spaces), payable in ETH/DAI/USDC to evan.ethereum.eth. Questions? thecryptonewspodcast -at-gmail

Housekeeping

Follow me on Twitter @evan_van_ness to get the annotated edition of this newsletter on Monday or Tuesday. Plus I tweet most of what makes it into the newsletter.

Did you get forwarded this newsletter? Sign up to receive it weekly

Permalink: https://weekinethereumnews.com/week-in-ethereum-news-june-21-2020/

Dates of Note

Upcoming dates of note (new/changes in bold):

June 24 – EIP1559 call

June 25 – Eth2 call

June 26 – Core devs call

June 29 – Swarm first public event

July 3 – Gitcoin matching grants ends (here’s my grant)

July 6-Aug 6 – HackFS Filecoin/IPFS and Ethereum hackathon

July 20 – Fork the World MetaCartel hackathon

Aug 2 – ENS grace period begins to end

Oct 2-Oct 30 – EthOnline hackathon


Building Your First Serverless Service With AWS Lambda Functions

Many developers are at least marginally familiar with AWS Lambda functions. They’re reasonably straightforward to set up, but the vast AWS landscape can make it hard to see the big picture. With so many different pieces it can be daunting, and frustratingly hard to see how they fit seamlessly into a normal web application.

The Serverless framework is a huge help here. It streamlines the creation, deployment, and most significantly, the integration of Lambda functions into a web app. To be clear, it does much, much more than that, but these are the pieces I’ll be focusing on. Hopefully, this post piques your interest and encourages you to check out the many other things Serverless supports. If you’re completely new to Lambda you might first want to check out this AWS intro.

There’s no way I can cover the initial installation and setup better than the quick start guide, so start there to get up and running. Assuming you already have an AWS account, you might be up and running in 5–10 minutes; and if you don’t, the guide covers that as well.

Your first Serverless service

Before we get to cool things like file uploads and S3 buckets, let’s create a basic Lambda function, connect it to an HTTP endpoint, and call it from an existing web app. The Lambda won’t do anything useful or interesting, but this will give us a nice opportunity to see how pleasant it is to work with Serverless.

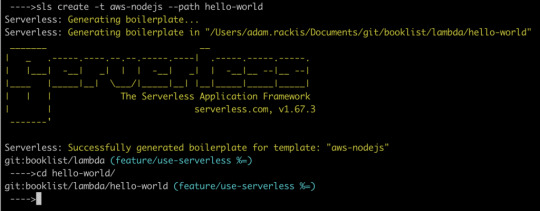

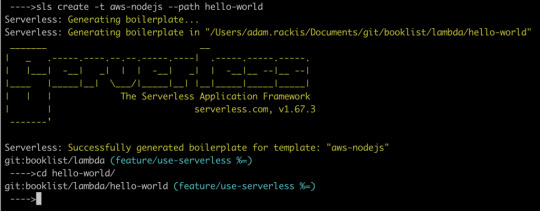

First, let’s create our service. Open any new or existing web app you might have (create-react-app is a great way to quickly spin up a new one) and find a place to create our services. For me, it’s my lambda folder. Whatever directory you choose, cd into it from the terminal and run the following command:

```shell
sls create -t aws-nodejs --path hello-world
```

That creates a new directory called hello-world. Let’s crack it open and see what’s in there.

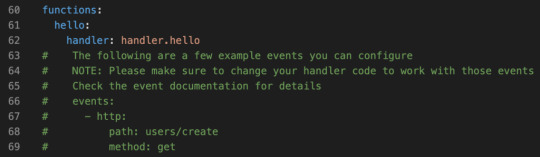

If you look in handler.js, you should see an async function that returns a message. We could hit sls deploy in our terminal right now, and deploy that Lambda function, which could then be invoked. But before we do that, let’s make it callable over the web.

Working with AWS manually, we’d normally need to go into API Gateway, create an endpoint, then create a stage, and tell it to proxy to our Lambda. With Serverless, all we need is a little bit of config.

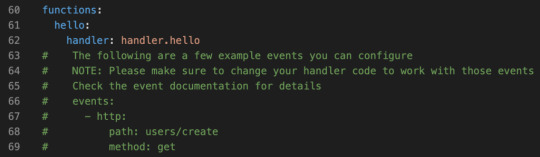

Still in the hello-world directory? Open the serverless.yml file that was created in there.

The config file actually comes with boilerplate for the most common setups. Let’s uncomment the http entries, and add a more sensible path. Something like this:

```yaml
functions:
  hello:
    handler: handler.hello
    # The following are a few example events you can configure
    # NOTE: Please make sure to change your handler code to work with those events
    # Check the event documentation for details
    events:
      - http:
          path: msg
          method: get
```

That’s it. Serverless does all the grunt work described above.

CORS configuration

Ideally, we want to call this from front-end JavaScript code with the Fetch API, but that unfortunately means we need CORS to be configured. This section will walk you through that.

Below method: get, add cors: true, like this:

```yaml
functions:
  hello:
    handler: handler.hello
    events:
      - http:
          path: msg
          method: get
          cors: true
```

That’s it! CORS is now configured on our API endpoint, allowing cross-origin communication.

CORS Lambda tweak

While our HTTP endpoint is configured for CORS, it’s up to our Lambda to return the right headers. That’s just how CORS works. Let’s automate that by heading back into handler.js, and adding this function:

```javascript
const CorsResponse = obj => ({
  statusCode: 200,
  headers: {
    "Access-Control-Allow-Origin": "*",
    "Access-Control-Allow-Headers": "*",
    "Access-Control-Allow-Methods": "*"
  },
  body: JSON.stringify(obj)
});
```

Before returning from the Lambda, we’ll send the return value through that function. Here’s the entirety of handler.js with everything we’ve done up to this point:

```javascript
'use strict';

const CorsResponse = obj => ({
  statusCode: 200,
  headers: {
    "Access-Control-Allow-Origin": "*",
    "Access-Control-Allow-Headers": "*",
    "Access-Control-Allow-Methods": "*"
  },
  body: JSON.stringify(obj)
});

module.exports.hello = async event => {
  return CorsResponse("HELLO, WORLD!");
};
```
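Before deploying, it can be reassuring to call the handler directly in Node and inspect what API Gateway will receive. This sketch repeats the handler inline so it runs standalone. In your project you would `require("./handler")` instead. The Serverless CLI also offers `sls invoke local -f hello` for the same purpose.

```javascript
"use strict";

// Same helper and handler as handler.js, inlined so this file runs on its own.
const CorsResponse = obj => ({
  statusCode: 200,
  headers: {
    "Access-Control-Allow-Origin": "*",
    "Access-Control-Allow-Headers": "*",
    "Access-Control-Allow-Methods": "*"
  },
  body: JSON.stringify(obj)
});

const hello = async _event => CorsResponse("HELLO, WORLD!");

// Invoke with an empty event as a quick local smoke test.
hello({}).then(response => {
  console.log(response.statusCode);       // 200
  console.log(JSON.parse(response.body)); // HELLO, WORLD!
});
```

Note that the body is a JSON-encoded string, which is why the client can call `resp.json()` on it and get back the plain message.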

Let’s run it. Type sls deploy into your terminal from the hello-world folder.

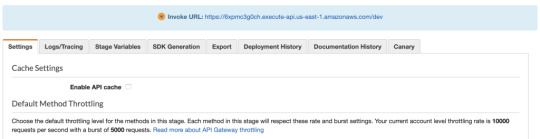

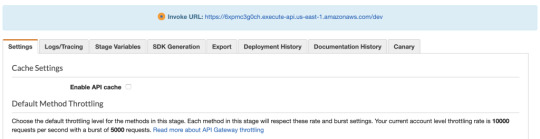

When that runs, we’ll have deployed our Lambda function to an HTTP endpoint that we can call via Fetch. But… where is it? We could crack open our AWS console, find the API Gateway API that Serverless created for us, then find the Invoke URL. It would look something like this.

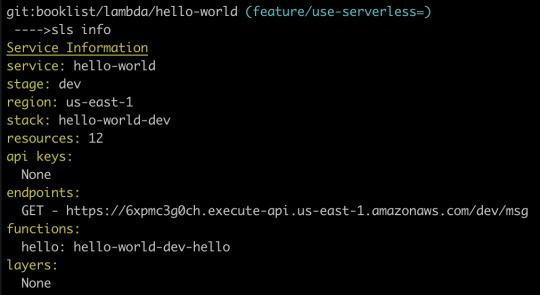

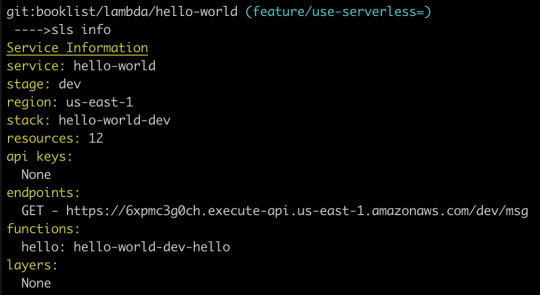

Fortunately, there is an easier way, which is to type sls info into our terminal:

Just like that, we can see that our Lambda function is available at the following path:

https://6xpmc3g0ch.execute-api.us-east-1.amazonaws.com/dev/msg

Woot! Now let’s call it!

Now let’s open up a web app and try fetching it. Here’s what our Fetch will look like:

```javascript
fetch("https://6xpmc3g0ch.execute-api.us-east-1.amazonaws.com/dev/msg")
  .then(resp => resp.json())
  .then(resp => {
    console.log(resp);
  });
```

We should see our message in the dev console.
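The Fetch call above doesn't check for HTTP errors, so a failed request would surface as a confusing JSON parse error. Here is a slightly more defensive async/await sketch. The `callLambda` name and its `fetchImpl` parameter are hypothetical additions, so the function can be exercised without a network:

```javascript
// Call the endpoint and return its parsed JSON body, failing loudly on non-2xx.
async function callLambda(url, fetchImpl = globalThis.fetch) {
  const resp = await fetchImpl(url);
  if (!resp.ok) {
    throw new Error(`Request to ${url} failed with HTTP ${resp.status}`);
  }
  return resp.json();
}

// Usage in the browser:
// callLambda("https://6xpmc3g0ch.execute-api.us-east-1.amazonaws.com/dev/msg")
//   .then(message => console.log(message))
//   .catch(err => console.error(err));
```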

Now that we’ve gotten our feet wet, let’s repeat this process. This time, though, let’s make a more interesting, useful service. Specifically, let’s make the canonical “resize an image” Lambda, but instead of being triggered by a new S3 bucket upload, let’s let the user upload an image directly to our Lambda. That’ll remove the need to bundle any kind of aws-sdk resources in our client-side bundle.

Building a useful Lambda

OK, from the start! This particular Lambda will take an image, resize it, then upload it to an S3 bucket. First, let’s create a new service. I’m calling it cover-art but it could certainly be anything else.

```shell
sls create -t aws-nodejs --path cover-art
```

As before, we’ll add a path to our HTTP endpoint (which in this case will be a POST, instead of GET, since we’re sending the file instead of receiving it) and enable CORS:

```yaml
# Same as before
events:
  - http:
      path: upload
      method: post
      cors: true
```

Next, let’s grant our Lambda access to whatever S3 buckets we’re going to use for the upload. Look in your YAML file: there should be an iamRoleStatements section containing boilerplate code that’s been commented out. We can leverage some of that by uncommenting it. Here’s the config we’ll use to enable the S3 buckets we want:

```yaml
iamRoleStatements:
  - Effect: "Allow"
    Action:
      - "s3:*"
    Resource: ["arn:aws:s3:::your-bucket-name/*"]
```

Note the /* on the end. We don’t list specific bucket names in isolation, but rather paths to resources; in this case, that’s any resources that happen to exist inside your-bucket-name.

Since we want to upload files directly to our Lambda, we need to make one more tweak. Specifically, we need to configure the API endpoint to accept multipart/form-data as a binary media type. Locate the provider section in the YAML file:

```yaml
provider:
  name: aws
  runtime: nodejs12.x
```

…and modify it to:

```yaml
provider:
  name: aws
  runtime: nodejs12.x
  apiGateway:
    binaryMediaTypes:
      - 'multipart/form-data'
```
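Why does this matter? With multipart/form-data registered as a binary media type, API Gateway's Lambda proxy integration hands the request body to the function as a base64 string and sets `event.isBase64Encoded` to true. As I understand it, lambda-multipart-parser handles that for us. As a rough sketch, the decoding step looks like this (the `getRawBody` name is hypothetical):

```javascript
// Turn an API Gateway proxy event body back into raw bytes.
function getRawBody(event) {
  if (event.body == null) return Buffer.alloc(0);
  return Buffer.from(event.body, event.isBase64Encoded ? "base64" : "utf8");
}

// Example: a body that arrived base64-encoded.
const event = { body: Buffer.from("hello multipart").toString("base64"), isBase64Encoded: true };
console.log(getRawBody(event).toString("utf8")); // hello multipart
```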

For good measure, let’s give our function an intelligent name. Replace handler: handler.hello with handler: handler.upload, then change module.exports.hello to module.exports.upload in handler.js.

Now we get to write some code

First, let’s grab some helpers.

```shell
npm i jimp uuid lambda-multipart-parser
```

Wait, what’s Jimp? It’s the library I’m using to resize uploaded images. uuid will be for creating new, unique file names of the sized resources, before uploading to S3. Oh, and lambda-multipart-parser? That’s for parsing the file info inside our Lambda.
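The new file name is just a random UUID plus the original extension. On Node 14.17 or later, Node's built-in `crypto.randomUUID()` could stand in for the uuid package. That excludes the nodejs12.x runtime configured above. Here is a sketch with a hypothetical `newFileName` helper:

```javascript
const crypto = require("crypto");
const path = require("path");

// Random, collision-resistant name that keeps the uploaded file's extension.
function newFileName(originalName) {
  return `${crypto.randomUUID()}${path.extname(originalName)}`;
}

console.log(newFileName("my-cover.png")); // prints a random UUID followed by .png
```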

Next, let’s make a convenience helper for S3 uploading:

```javascript
const uploadToS3 = (fileName, body) => {
  const s3 = new S3({});
  const params = { Bucket: "your-bucket-name", Key: `/${fileName}`, Body: body };

  return new Promise(res => {
    s3.upload(params, function(err, data) {
      if (err) {
        return res(CorsResponse({ error: true, message: err }));
      }
      res(CorsResponse({ success: true, url: `https://${params.Bucket}.s3.amazonaws.com/${params.Key}` }));
    });
  });
};
```
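If you prefer async/await, aws-sdk v2's `s3.upload()` also returns a managed upload object with a `.promise()` method. Below is a sketch of that variant. Passing the S3 client and bucket name in as arguments is a hypothetical change made for testability; in the Lambda you would pass `new S3({})`. Two other deliberate differences: the key drops the leading slash, so the returned URL has no doubled slash, and the error branch sends `err.message`, because `JSON.stringify` on a native Error object drops its message.

```javascript
"use strict";

// Same CORS wrapper as in handler.js, repeated so this sketch runs standalone.
const CorsResponse = obj => ({
  statusCode: 200,
  headers: {
    "Access-Control-Allow-Origin": "*",
    "Access-Control-Allow-Headers": "*",
    "Access-Control-Allow-Methods": "*"
  },
  body: JSON.stringify(obj)
});

// Hypothetical promise-based variant of uploadToS3 (client and bucket injected).
const uploadToS3 = async (s3, bucket, fileName, body) => {
  const params = { Bucket: bucket, Key: fileName, Body: body };
  try {
    // aws-sdk v2's managed upload exposes .promise() instead of a callback
    await s3.upload(params).promise();
    return CorsResponse({ success: true, url: `https://${bucket}.s3.amazonaws.com/${fileName}` });
  } catch (err) {
    return CorsResponse({ error: true, message: err.message });
  }
};
```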

Lastly, we’ll plug in some code that reads the uploaded file, resizes it with Jimp (if needed), and uploads the result to S3. The final result is below.

```javascript
'use strict';
const AWS = require("aws-sdk");
const { S3 } = AWS;
const path = require("path");
const Jimp = require("jimp"); // this code uses Jimp's 0.x callback API
// The "uuid/v4" deep import was removed in uuid v8; the named export also works on older versions
const { v4: uuid } = require("uuid");
const awsMultiPartParser = require("lambda-multipart-parser");

const CorsResponse = obj => ({
  statusCode: 200,
  headers: {
    "Access-Control-Allow-Origin": "*",
    "Access-Control-Allow-Headers": "*",
    "Access-Control-Allow-Methods": "*"
  },
  body: JSON.stringify(obj)
});

const uploadToS3 = (fileName, body) => {
  const s3 = new S3({});
  const params = { Bucket: "your-bucket-name", Key: `/${fileName}`, Body: body };
  return new Promise(res => {
    s3.upload(params, function(err, data) {
      if (err) {
        return res(CorsResponse({ error: true, message: err }));
      }
      res(CorsResponse({ success: true, url: `https://${params.Bucket}.s3.amazonaws.com/${params.Key}` }));
    });
  });
};

module.exports.upload = async event => {
  const formPayload = await awsMultiPartParser.parse(event);
  const MAX_WIDTH = 50;

  return new Promise(res => {
    Jimp.read(formPayload.files[0].content, function(err, image) {
      if (err || !image) {
        return res(CorsResponse({ error: true, message: err }));
      }
      const newName = `${uuid()}${path.extname(formPayload.files[0].filename)}`;

      // Only shrink images wider than MAX_WIDTH; Jimp.AUTO keeps the aspect ratio
      if (image.bitmap.width > MAX_WIDTH) {
        image.resize(MAX_WIDTH, Jimp.AUTO);
      }

      // Re-encode in the original format and upload the result
      image.getBuffer(image.getMIME(), (err, body) => {
        if (err) {
          return res(CorsResponse({ error: true, message: err }));
        }
        return res(uploadToS3(newName, body));
      });
    });
  });
};
```

Sorry to dump so much code on you, but since this is a post about AWS Lambda and Serverless, I’d rather not belabor the grunt work within the serverless function. Of course, yours might look completely different if you’re using an image library other than Jimp.

Let’s run it by uploading a file from our client. I’m using the react-dropzone library, so my JSX looks like this:

```jsx
<Dropzone onDrop={files => onDrop(files)} multiple={false}>
  <div>Click or drag to upload a new cover</div>
</Dropzone>
```

The onDrop function looks like this:

```javascript
const onDrop = files => {
  const request = new FormData();
  request.append("fileUploaded", files[0]);

  fetch("https://yb1ihnzpy8.execute-api.us-east-1.amazonaws.com/dev/upload", {
    method: "POST",
    mode: "cors",
    body: request
  })
    .then(resp => resp.json())
    .then(res => {
      if (res.error) {
        // handle errors
      } else {
        // success - woo hoo - update state as needed
      }
    });
};
```

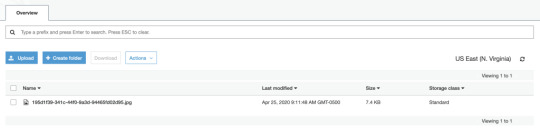

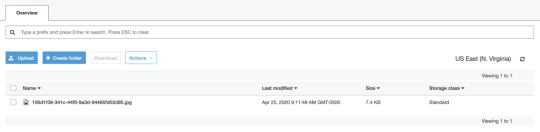

And just like that, we can upload a file and see it appear in our S3 bucket!

An optional detour: bundling

There’s one optional enhancement we could make to our setup. Right now, when we deploy our service, Serverless zips up the entire service folder and sends all of it to our Lambda. The content currently weighs in at 10MB, since all of our node_modules are getting dragged along for the ride. We can use a bundler to drastically reduce that size. Not only that, but a bundler will cut deploy time and data usage, and can improve cold start performance. In other words, it’s a nice thing to have.

Fortunately for us, there’s a plugin that easily integrates webpack into the serverless build process. Let’s install it with:

```shell
npm i serverless-webpack --save-dev
```

…and add it via our YAML config file. We can drop this in at the very end:

```yaml
# Same as before
plugins:
  - serverless-webpack
```

Naturally, we need a webpack.config.js file, so let’s add that to the mix:

```javascript
const path = require("path");

module.exports = {
  entry: "./handler.js",
  output: {
    libraryTarget: "commonjs2",
    path: path.join(__dirname, ".webpack"),
    filename: "handler.js"
  },
  target: "node",
  mode: "production",
  externals: ["aws-sdk"],
  resolve: {
    mainFields: ["main"]
  }
};
```

Notice that we’re setting target: node so Node-specific assets are treated properly. Also note that you may need to set the output filename to handler.js. I’m also adding aws-sdk to the externals array so webpack doesn’t bundle it at all; instead, it’ll leave the call to const AWS = require("aws-sdk"); alone, allowing it to be handled by our Lambda at runtime. This is OK since Lambdas already have the aws-sdk available implicitly, meaning there’s no need for us to send it over the wire. Finally, mainFields: ["main"] tells webpack to ignore ESM entry points such as the module field. This is necessary to fix some issues with the Jimp library.

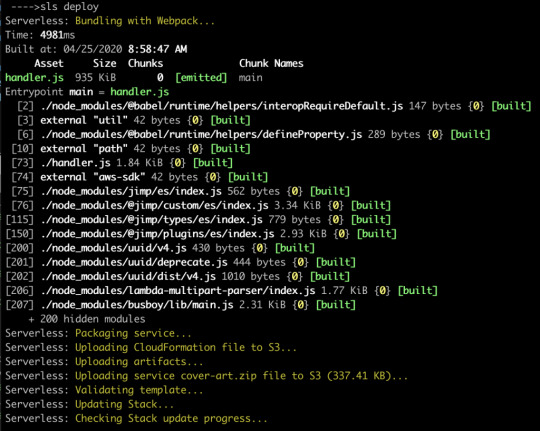

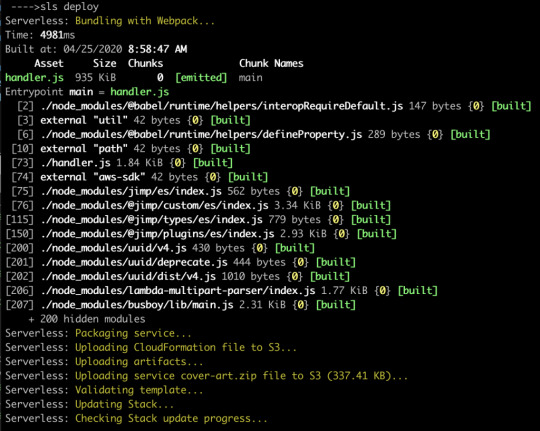

Now let’s re-deploy, and hopefully we’ll see webpack running.

Now our code is bundled nicely into a single file that’s 935K, which zips down further to a mere 337K. That’s a lot of savings!

Odds and ends

If you’re wondering how you’d send other data to the Lambda, you’d add what you want to the request object, of type FormData, from before. For example:

```javascript
request.append("xyz", "Hi there");
```

…and then read formPayload.xyz in the Lambda. This can be useful if you need to send a security token, or other file info.
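Put together, the client side might look like this sketch. `buildUploadRequest` is a hypothetical helper. FormData is a browser global, and also a global in Node 18+, which is what lets the snippet run outside the browser:

```javascript
// Build the multipart body: the file under "fileUploaded", plus any extra
// string fields (e.g. a token) that the Lambda can read as formPayload.<name>.
function buildUploadRequest(file, extraFields = {}) {
  const request = new FormData();
  request.append("fileUploaded", file);
  for (const [name, value] of Object.entries(extraFields)) {
    request.append(name, value);
  }
  return request;
}

// In the browser, `file` would come from the dropzone's files[0].
const request = buildUploadRequest(new Blob(["fake image bytes"]), { xyz: "Hi there" });
console.log(request.get("xyz")); // Hi there
```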

If you’re wondering how you might configure env variables for your Lambda, you might have guessed by now that it’s as simple as adding some fields to your serverless.yml file. It even supports reading the values from an external file (presumably not committed to git). This blog post by Philipp Müns covers it well.
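Whatever you set under `environment` in serverless.yml arrives in the Lambda as ordinary variables on `process.env`. Here is a small sketch of reading one defensively. `BUCKET_NAME` is a hypothetical variable you might use in place of the hard-coded your-bucket-name. The `env` parameter exists only so the function can be tested:

```javascript
// Read a required environment variable, failing fast with a clear message.
function requireEnv(name, env = process.env) {
  const value = env[name];
  if (value === undefined || value === "") {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value;
}

// e.g. in handler.js: const Bucket = requireEnv("BUCKET_NAME");
```

Failing at startup with a named variable is usually easier to debug than an S3 error about an undefined bucket.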

Wrapping up

Serverless is an incredible framework. I promise, we’ve barely scratched the surface. Hopefully this post has shown you its potential, and motivated you to check it out even further.

If you’re interested in learning more, I’d recommend the learning materials from David Wells, an engineer at Netlify and former member of the Serverless team, as well as the Serverless Handbook by Swizec Teller:

Serverless Workshop: A repo to learn the core concepts of serverless

Serverless Auth Strategies: A repo that walks through different strategies for authorizing access to functions.

Netlify Functions Workshop: Netlify lessons on the core concepts of using serverless functions

Serverless Handbook: Getting started with serverless technologies

The post Building Your First Serverless Service With AWS Lambda Functions appeared first on CSS-Tricks.

Building Your First Serverless Service With AWS Lambda Functions published first on https://deskbysnafu.tumblr.com/

0 notes

Text

Building Your First Serverless Service With AWS Lambda Functions

Many developers are at least marginally familiar with AWS Lambda functions. They’re reasonably straightforward to set up, but the vast AWS landscape can make it hard to see the big picture. With so many different pieces it can be daunting, and frustratingly hard to see how they fit seamlessly into a normal web application.

The Serverless framework is a huge help here. It streamlines the creation, deployment, and most significantly, the integration of Lambda functions into a web app. To be clear, it does much, much more than that, but these are the pieces I’ll be focusing on. Hopefully, this post strikes your interest and encourages you to check out the many other things Serverless supports. If you’re completely new to Lambda you might first want to check out this AWS intro.

There’s no way I can cover the initial installation and setup better than the quick start guide, so start there to get up and running. Assuming you already have an AWS account, you might be up and running in 5–10 minutes; and if you don’t, the guide covers that as well.

Your first Serverless service

Before we get to cool things like file uploads and S3 buckets, let’s create a basic Lambda function, connect it to an HTTP endpoint, and call it from an existing web app. The Lambda won’t do anything useful or interesting, but this will give us a nice opportunity to see how pleasant it is to work with Serverless.

First, let’s create our service. Open any new, or existing web app you might have (create-react-app is a great way to quickly spin up a new one) and find a place to create our services. For me, it’s my lambda folder. Whatever directory you choose, cd into it from terminal and run the following command:

sls create -t aws-nodejs --path hello-world

That creates a new directory called hello-world. Let’s crack it open and see what’s in there.

If you look in handler.js, you should see an async function that returns a message. We could hit sls deploy in our terminal right now, and deploy that Lambda function, which could then be invoked. But before we do that, let’s make it callable over the web.

Working with AWS manually, we’d normally need to go into the AWS API Gateway, create an endpoint, then create a stage, and tell it to proxy to our Lambda. With serverless, all we need is a little bit of config.

Still in the hello-world directory? Open the serverless.yaml file that was created in there.

The config file actually comes with boilerplate for the most common setups. Let’s uncomment the http entries, and add a more sensible path. Something like this:

functions: hello: handler: handler.hello # The following are a few example events you can configure # NOTE: Please make sure to change your handler code to work with those events # Check the event documentation for details events: - http: path: msg method: get

That’s it. Serverless does all the grunt work described above.
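With an http event, API Gateway hands the function a proxy event describing the request: httpMethod, path, headers, queryStringParameters, body and so on. A minimal sketch of reading an optional query parameter; the `greet` handler and the `name` parameter are hypothetical, not part of the generated template.

```javascript
'use strict';

// Hypothetical handler for GET /msg?name=...
// In a REST API proxy event, queryStringParameters is null when the
// request has no query string, so guard before reading from it.
const greet = async event => {
  const params = event.queryStringParameters || {};
  const name = params.name || "world";
  return {
    statusCode: 200,
    body: JSON.stringify({ message: `Hello, ${name}!` })
  };
};

module.exports = { greet };
```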

CORS configuration

Ideally, we want to call this from front-end JavaScript code with the Fetch API, but that unfortunately means we need CORS to be configured. This section will walk you through that.

Below the configuration above, add cors: true, like this:

functions:
  hello:
    handler: handler.hello
    events:
      - http:
          path: msg
          method: get
          cors: true

That’s the section! CORS is now configured on our API endpoint, allowing cross-origin communication.

CORS Lambda tweak

While our HTTP endpoint is configured for CORS, it’s up to our Lambda to return the right headers. That’s just how CORS works. Let’s automate that by heading back into handler.js, and adding this function:

const CorsResponse = obj => ({
  statusCode: 200,
  headers: {
    "Access-Control-Allow-Origin": "*",
    "Access-Control-Allow-Headers": "*",
    "Access-Control-Allow-Methods": "*"
  },
  body: JSON.stringify(obj)
});

Before returning from the Lambda, we’ll send the return value through that function. Here’s the entirety of handler.js with everything we’ve done up to this point:

'use strict';

const CorsResponse = obj => ({
  statusCode: 200,
  headers: {
    "Access-Control-Allow-Origin": "*",
    "Access-Control-Allow-Headers": "*",
    "Access-Control-Allow-Methods": "*"
  },
  body: JSON.stringify(obj)
});

module.exports.hello = async event => {
  return CorsResponse("HELLO, WORLD!");
};
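Because the helper runs the payload through JSON.stringify, even a plain string like "HELLO, WORLD!" is sent as a JSON string literal, which is why resp.json() works on the client. If you’d rather not allow every origin, a variant could take the allowed origin as a parameter. A sketch; `makeCorsResponse` and the example origin are hypothetical.

```javascript
'use strict';

// Hypothetical variant of CorsResponse that returns a single allowed
// origin instead of "*". Everything else mirrors the helper above.
const makeCorsResponse = allowedOrigin => (obj, statusCode = 200) => ({
  statusCode,
  headers: {
    "Access-Control-Allow-Origin": allowedOrigin,
    "Access-Control-Allow-Headers": "*",
    "Access-Control-Allow-Methods": "*"
  },
  // API Gateway expects a string body, so serialize the payload
  body: JSON.stringify(obj)
});

// Placeholder origin: replace with wherever your web app is served from
const CorsResponse = makeCorsResponse("https://example.com");

module.exports = { makeCorsResponse, CorsResponse };
```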

Let’s run it. Type sls deploy into your terminal from the hello-world folder.

When that runs, we’ll have deployed our Lambda function to an HTTP endpoint that we can call via Fetch. But… where is it? We could crack open our AWS console, find the API Gateway API that Serverless created for us, then find its Invoke URL. It would look something like this.

Fortunately, there is an easier way, which is to type sls info into our terminal:

Just like that, we can see that our Lambda function is available at the following path:

https://6xpmc3g0ch.execute-api.us-east-1.amazonaws.com/dev/msg

Woot, now let’s call it!

Now let’s open up a web app and try fetching it. Here’s what our Fetch will look like:

fetch("https://6xpmc3g0ch.execute-api.us-east-1.amazonaws.com/dev/msg")
  .then(resp => resp.json())
  .then(resp => {
    console.log(resp);
  });

We should see our message in the dev console.
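fetch only rejects on network failures, so a wrong URL still "succeeds" and then fails at resp.json(). A slightly more defensive sketch checks the status first; `getMessage` is hypothetical, and the fetch implementation is a parameter so it can be stubbed outside the browser.

```javascript
// Hypothetical helper: same request as above, but it checks the HTTP
// status before parsing. fetchImpl defaults to the global fetch.
async function getMessage(url, fetchImpl = fetch) {
  const resp = await fetchImpl(url);
  if (!resp.ok) {
    // A mistyped path typically comes back from API Gateway as an error
    // status (often 403) rather than our JSON payload
    throw new Error(`Request failed with status ${resp.status}`);
  }
  return resp.json();
}
```

In the browser: `getMessage("https://6xpmc3g0ch.execute-api.us-east-1.amazonaws.com/dev/msg").then(console.log).catch(console.error);`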

Now that we’ve gotten our feet wet, let’s repeat this process. This time, though, let’s make a more interesting, useful service. Specifically, let’s make the canonical “resize an image” Lambda, but instead of being triggered by a new S3 bucket upload, let’s let the user upload an image directly to our Lambda. That’ll remove the need to bundle any kind of aws-sdk resources in our client-side bundle.

Building a useful Lambda

OK, from the start! This particular Lambda will take an image, resize it, then upload it to an S3 bucket. First, let’s create a new service. I’m calling it cover-art but it could certainly be anything else.

sls create -t aws-nodejs --path cover-art

As before, we’ll add a path to our HTTP endpoint (which in this case will be a POST, instead of GET, since we’re sending the file instead of receiving it) and enable CORS:

# Same as before
events:
  - http:
      path: upload
      method: post
      cors: true

Next, let’s grant our Lambda access to whatever S3 buckets we’re going to use for the upload. Look in your YAML file: there should be an iamRoleStatements section containing boilerplate code that’s been commented out. We can leverage some of that by uncommenting it. Here’s the config we’ll use to enable the S3 buckets we want:

iamRoleStatements:
  - Effect: "Allow"
    Action:
      - "s3:*"
    Resource: ["arn:aws:s3:::your-bucket-name/*"]

Note the /* on the end. We don’t list specific bucket names in isolation, but rather paths to resources; in this case, that’s any resources that happen to exist inside your-bucket-name.
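Object ARNs have the form arn:aws:s3:::bucket/key, which is why the trailing /* matches every object in the bucket (bucket-level actions such as listing would need the bare bucket ARN instead). If you ever build this config in JavaScript (Serverless also accepts a serverless.js file), a helper could produce the same statement. This sketch also narrows "s3:*" to "s3:PutObject", assuming the function only writes small, single-part uploads; `s3WriteStatement` is hypothetical.

```javascript
'use strict';

// Hypothetical helper that builds an IAM statement like the YAML above.
// "/*" after the bucket name covers every object key in that bucket.
// Action is narrowed to s3:PutObject on the assumption that the Lambda
// only uploads small files in a single request.
function s3WriteStatement(bucketName) {
  return {
    Effect: "Allow",
    Action: ["s3:PutObject"],
    Resource: [`arn:aws:s3:::${bucketName}/*`]
  };
}

module.exports = { s3WriteStatement };
```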

Since we want to upload files directly to our Lambda, we need to make one more tweak. Specifically, we need to configure the API endpoint to accept multipart/form-data as a binary media type. Locate the provider section in the YAML file:

provider:
  name: aws
  runtime: nodejs12.x

…and modify it to:

provider:
  name: aws
  runtime: nodejs12.x
  apiGateway:
    binaryMediaTypes:
      - 'multipart/form-data'
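Registering multipart/form-data as a binary media type means API Gateway passes matching request bodies to the Lambda base64-encoded, with event.isBase64Encoded set to true. lambda-multipart-parser takes care of this for us; the sketch below only illustrates the idea, and `rawBody` is a hypothetical helper.

```javascript
'use strict';

// Hypothetical helper: recover the raw request bytes from an API Gateway
// proxy event. Bodies matching a binary media type arrive base64-encoded
// with isBase64Encoded set to true; others arrive as plain strings.
function rawBody(event) {
  if (!event.body) {
    return Buffer.alloc(0);
  }
  return event.isBase64Encoded
    ? Buffer.from(event.body, "base64")
    : Buffer.from(event.body, "utf8");
}

module.exports = { rawBody };
```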

For good measure, let’s give our function an intelligent name. Replace handler: handler.hello with handler: handler.upload, then change module.exports.hello to module.exports.upload in handler.js.

Now we get to write some code

First, let’s grab some helpers.

npm i jimp uuid lambda-multipart-parser

Wait, what’s Jimp? It’s the library I’m using to resize uploaded images. uuid will be for creating new, unique file names of the sized resources, before uploading to S3. Oh, and lambda-multipart-parser? That’s for parsing the file info inside our Lambda.
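The naming scheme used below is a fresh unique id plus the original file’s extension. A sketch of just that step; `makeFileName` is hypothetical, and the id generator is passed in so the output is predictable (in the Lambda it would be uuid’s v4 function).

```javascript
'use strict';
const path = require("path");

// Hypothetical helper: unique id + original extension.
// path.extname returns "" when there is no extension, and only the
// last extension for names like "archive.tar.gz".
function makeFileName(originalName, makeId) {
  return `${makeId()}${path.extname(originalName)}`;
}

module.exports = { makeFileName };
```

In the Lambda: `const { v4: uuidv4 } = require("uuid"); makeFileName(file.filename, uuidv4);`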

Next, let’s make a convenience helper for S3 uploading:

const uploadToS3 = (fileName, body) => {
  const s3 = new S3({});
  // No leading slash on the key, so the URL below has a single "/"
  const params = { Bucket: "your-bucket-name", Key: fileName, Body: body };

  return new Promise(res => {
    s3.upload(params, function(err, data) {
      if (err) {
        return res(CorsResponse({ error: true, message: err }));
      }
      res(CorsResponse({ success: true, url: `https://${params.Bucket}.s3.amazonaws.com/${params.Key}` }));
    });
  });
};
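In aws-sdk v2 (the version bundled with the nodejs12.x runtime), upload also returns an object with a .promise() method, so the callback wrapper could be written with async/await instead. A sketch under that assumption; `makeUploader` is hypothetical, the S3 client is passed in so it can be stubbed, and it returns plain objects that you would still wrap in CorsResponse.

```javascript
'use strict';

// Hypothetical promise-based version of uploadToS3. `s3` is an aws-sdk v2
// S3 client, or any object with the same upload(params).promise() shape.
const makeUploader = (s3, bucket) => async (fileName, body) => {
  const params = { Bucket: bucket, Key: fileName, Body: body };
  try {
    // data.Location is the URL of the uploaded object
    const data = await s3.upload(params).promise();
    return { success: true, url: data.Location };
  } catch (err) {
    return { error: true, message: err.message };
  }
};

module.exports = { makeUploader };
```

Usage inside the handler might look like `const upload = makeUploader(new S3(), "your-bucket-name"); return CorsResponse(await upload(newName, body));`.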

Lastly, we’ll plug in some code that reads the uploaded file, resizes it with Jimp (if needed) and uploads the result to S3. The final result is below.

'use strict';
const AWS = require("aws-sdk");
const { S3 } = AWS;
const path = require("path");
const Jimp = require("jimp");
// Newer uuid releases no longer support require("uuid/v4"); the named v4 export works in old and new versions
const { v4: uuid } = require("uuid");
const awsMultiPartParser = require("lambda-multipart-parser");

const CorsResponse = obj => ({
  statusCode: 200,
  headers: {
    "Access-Control-Allow-Origin": "*",
    "Access-Control-Allow-Headers": "*",
    "Access-Control-Allow-Methods": "*"
  },
  body: JSON.stringify(obj)
});

const uploadToS3 = (fileName, body) => {
  const s3 = new S3({});
  const params = { Bucket: "your-bucket-name", Key: fileName, Body: body };

  return new Promise(res => {
    s3.upload(params, function(err, data) {
      if (err) {
        return res(CorsResponse({ error: true, message: err }));
      }
      res(CorsResponse({ success: true, url: `https://${params.Bucket}.s3.amazonaws.com/${params.Key}` }));
    });
  });
};

module.exports.upload = async event => {
  const formPayload = await awsMultiPartParser.parse(event);
  const MAX_WIDTH = 50;

  return new Promise(res => {
    Jimp.read(formPayload.files[0].content, function(err, image) {
      if (err || !image) {
        return res(CorsResponse({ error: true, message: err }));
      }
      const newName = `${uuid()}${path.extname(formPayload.files[0].filename)}`;

      // Only shrink images wider than MAX_WIDTH; Jimp.AUTO keeps the aspect ratio
      if (image.bitmap.width > MAX_WIDTH) {
        image.resize(MAX_WIDTH, Jimp.AUTO);
      }

      // Encode in the original format and hand the buffer to S3
      image.getBuffer(image.getMIME(), (err, body) => {
        if (err) {
          return res(CorsResponse({ error: true, message: err }));
        }
        return res(uploadToS3(newName, body));
      });
    });
  });
};

I’m sorry to dump so much code on you, but since this is a post about AWS Lambda and Serverless, I’d rather not belabor the grunt work within the serverless function. Of course, yours might look completely different if you’re using an image library other than Jimp.
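The one decision in the handler worth spelling out is the resize: images no wider than MAX_WIDTH are left alone, and wider ones are scaled to MAX_WIDTH with the height scaled by the same factor, which is what passing Jimp.AUTO for the height is for. A sketch of that arithmetic; `planResize` is hypothetical, and its rounding is our own choice that may not match Jimp’s internals exactly.

```javascript
'use strict';

// Hypothetical helper: compute the output size for a width-capped resize
// that preserves aspect ratio. Height is rounded and kept at least 1px.
function planResize(width, height, maxWidth) {
  if (width <= maxWidth) {
    return { resize: false, width, height };
  }
  const scale = maxWidth / width;
  return {
    resize: true,
    width: maxWidth,
    height: Math.max(1, Math.round(height * scale))
  };
}

module.exports = { planResize };
```

For example, a 1200×800 upload with MAX_WIDTH = 50 comes out at 50×33 under this rounding (800 × 50 / 1200 ≈ 33.3).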

Let’s run it by uploading a file from our client. I’m using the react-dropzone library, so my JSX looks like this:

<Dropzone onDrop={files => onDrop(files)} multiple={false}>
  <div>Click or drag to upload a new cover</div>
</Dropzone>

The onDrop function looks like this:

const onDrop = files => {
  let request = new FormData();
  request.append("fileUploaded", files[0]);

  fetch("https://yb1ihnzpy8.execute-api.us-east-1.amazonaws.com/dev/upload", {
    method: "POST",
    mode: "cors",
    body: request
  })
    .then(resp => resp.json())
    .then(res => {
      if (res.error) {
        // handle errors
      } else {
        // success - woo hoo - update state as needed
      }
    });
};

And just like that, we can upload a file and see it appear in our S3 bucket!
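Since the Lambda always answers with status 200 and reports failures inside the JSON body, the client has to look at the parsed result to know whether the upload worked. An async/await sketch of the same upload; `uploadCover` is hypothetical, and fetch and FormData are parameters (defaulting to the browser globals) so they can be stubbed.

```javascript
// Hypothetical async version of the upload in onDrop.
async function uploadCover(file, url, { fetchImpl = fetch, FormDataImpl = FormData } = {}) {
  if (!file) {
    throw new Error("No file selected");
  }
  const request = new FormDataImpl();
  // Same field name as the onDrop example
  request.append("fileUploaded", file);

  const resp = await fetchImpl(url, { method: "POST", mode: "cors", body: request });
  const result = await resp.json();
  if (result.error) {
    // The Lambda signals failure in the body, not the status code
    throw new Error("Upload failed");
  }
  return result.url;
}
```

In the Dropzone handler this becomes `uploadCover(files[0], "https://yb1ihnzpy8.execute-api.us-east-1.amazonaws.com/dev/upload").then(url => { /* update state */ }).catch(err => { /* handle errors */ });`.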

An optional detour: bundling

There’s one optional enhancement we could make to our setup. Right now, when we deploy our service, Serverless zips up the entire service folder and sends all of it to our Lambda. The content currently weighs in at 10MB, since all of our node_modules are getting dragged along for the ride. We can use a bundler to drastically reduce that size. Not only that, but a bundler will cut deploy time and data usage, and improve cold start performance. In other words, it’s a nice thing to have.

Fortunately for us, there’s a plugin that easily integrates webpack into the serverless build process. Let’s install it with:

npm i serverless-webpack --save-dev

…and add it via our YAML config file. We can drop this in at the very end:

# Same as before
plugins:
  - serverless-webpack

Naturally, we need a webpack.config.js file, so let’s add that to the mix:

const path = require("path");

module.exports = {
  entry: "./handler.js",
  output: {
    libraryTarget: "commonjs2",
    path: path.join(__dirname, ".webpack"),
    filename: "handler.js"
  },
  target: "node",
  mode: "production",
  externals: ["aws-sdk"],
  resolve: {
    mainFields: ["main"]
  }
};

Notice that we’re setting target: "node" so Node-specific assets are treated properly. Also note that you may need to set the output filename to handler.js. I’m also adding aws-sdk to the externals array so webpack doesn’t bundle it at all; instead, it’ll leave the call to const AWS = require("aws-sdk"); alone, allowing it to be handled by our Lambda at runtime. This is OK since Lambdas already have the aws-sdk available implicitly, meaning there’s no need for us to send it over the wire. Finally, mainFields: ["main"] tells webpack to ignore any ESM module fields. This is necessary to fix some issues with the Jimp library.

Now let’s re-deploy, and hopefully we’ll see webpack running.

Now our code is bundled nicely into a single file that’s 935K, which zips down further to a mere 337K. That’s a lot of savings!

Odds and ends

If you’re wondering how you’d send other data to the Lambda, you’d add what you want to the request object, of type FormData, from before. For example:

request.append("xyz", "Hi there");

…and then read formPayload.xyz in the Lambda. This can be useful if you need to send a security token, or other file info.
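lambda-multipart-parser returns the files in a files array and, as described above, the other form fields as properties on the same object. A sketch of pulling out a required text field before doing any image work; `requireField` is hypothetical, and the test payload is hand-made to mimic that shape.

```javascript
'use strict';

// Hypothetical guard: read a text field from the parsed form payload,
// failing loudly if the client didn't send it.
function requireField(formPayload, name) {
  const value = formPayload[name];
  if (typeof value !== "string" || value.length === 0) {
    throw new Error(`Missing form field: ${name}`);
  }
  return value;
}

module.exports = { requireField };
```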

If you’re wondering how you might configure environment variables for your Lambda, you might have guessed by now that it’s as simple as adding some fields to your serverless.yml file. It even supports reading the values from an external file (presumably not committed to git). This blog post by Philipp Müns covers it well.
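One natural use is the bucket name, which is hard-coded as "your-bucket-name" above. A sketch, assuming a variable declared under provider.environment in serverless.yml; the COVER_BUCKET name and `getBucketName` helper are hypothetical.

```javascript
'use strict';

// Hypothetical: read the bucket name from an environment variable instead
// of hard-coding it. In serverless.yml it might be declared as:
//   provider:
//     environment:
//       COVER_BUCKET: your-bucket-name
// COVER_BUCKET is a made-up name; use whatever you configure.
function getBucketName(env = process.env) {
  const bucket = env.COVER_BUCKET;
  if (!bucket) {
    throw new Error("COVER_BUCKET is not set");
  }
  return bucket;
}

module.exports = { getBucketName };
```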

Wrapping up

Serverless is an incredible framework. I promise, we’ve barely scratched the surface. Hopefully this post has shown you its potential, and motivated you to check it out even further.

If you’re interested in learning more, I’d recommend the learning materials from David Wells, an engineer at Netlify and former member of the Serverless team, as well as the Serverless Handbook by Swizec Teller:

Serverless Workshop: A repo to learn the core concepts of serverless

Serverless Auth Strategies: A repo that walks through different strategies for authorizing access to functions.

Netlify Functions Workshop: Netlify lessons on the core concepts of using serverless functions

Serverless Handbook: Getting started with serverless technologies

The post Building Your First Serverless Service With AWS Lambda Functions appeared first on CSS-Tricks.

source https://css-tricks.com/building-your-first-serverless-service-with-aws-lambda-functions/


Game Dev Update | 3.8.17

There are seven-- SEVEN-- delivery ends for this battleaxe. Sadly, there aren’t seven different ways for this axe to connect with your opponent in Exiles, but that biggest big blade there will connect for sure. It was designed to destroy the FACE of your enemy when they dare to oppose you. This axe has been a regular entrant to many previous screenshots which heavily featured the Warrior Class.

So it’s fitting to use a Warrior weapon to say goodbye to the Warrior for a little bit-- this Dev Update will be so much moar about MAGES. Last time, we dug into the visual telegraph system for gear, the state of PVE, our upcoming presence at GDC and a couple Ability Deep Dives.

This update, we are focused on running. Cuz now we run. The game version that we brought to GDC was the first time Exiles was running entirely from the backend-- with PVP battles, PVE Quests, Choose-Your-Own-Adventure choices and even hidden treasure pushed to your device from a magickal computer in the sky.

Why care?

Cuz now we run-- all of the static screenshots and concept art and whatnot we’ve shown throughout development will now make their way INTO the game, and it will happen like Chain Lightning.

EXILES GOT TO GDC!

The GDC booth for Epic’s Unreal Engine was gigantic, and Exiles was honored to play a role within it as a showcase mobile title. All week last week, the Gunslinger team demoed to showgoers, players and press. We got a ton of great feedback and some new ideas from the show, and swung by the Touch Arcade GDC homebase to do a video demo you can see here:


If you want to see what TA had to say, the full article is here. As always when I demo, I hid some stuff-- I hid a series of numbers in this discussion-- they’re throughout the video. 2...15...20...5...10...13. If you can tell me where they “show up” in the demo, PM TheWizard in the forums with the answer, and you’ll receive a free Chest of loot in the game when it launches.

SO. MANY. MAGES.

At long last, wizarding is at hand. There are always a few times during the development of a game when you go “yes finally! WOOT” and this is one of them. The three starting Classes in Exiles are Warrior, Mage and Rogue (each with three specialties) along with three others designed, but we’ve been working through development with the Warrior as the template. Now that the game is getting ready for testing, Exiles systems support the input of any number of Classes (<-- foreshadowing), and the remaining few are going in.

We have a huge number of Rogue fans in the community, so a word of caution: don’t expect the Rogue in next week-- we need to fill out the Mage’s gear. And fill we will!

Robes, boots, shoulders, staves, books (yes, books), orbs, gloves etc are all rumbling through the art pipeline. A Legendary or two will make an appearance and then we’ll get Mr/Ms Thief McPocketses into the game.

Mage Talent Trees are up as well! Here’s a look at the Elementalist Tree, one of the three specialties within the Mage Class (the other two being Blood Mage and Warcaster). Characters can pick and choose from each tree as they like.

THE NEW WILDEWOODS

The Zones (regions) of Embermark have been evolving since day one and the starting Zone, Wildewoods, has been a particular favorite for the team. We’ve been working on Lore behind every town, landmark, ruin and port in Embermark and there will be plenty to find (and fight) once the game is live (remember, the world map in Exiles functions like a Google Map, with various activities, Quests and events appearing in different places and at different levels of zoom).

To see how the map art is evolving, take a look at what Wildewoods used to look like and where it is now:

The new icons there in the screen represent where a Choose-Your-Own-Adventure type choice would be made (affecting your Motivations and Standings) and the other one is... TRAYSURE. These can be visible or hidden depending upon your character’s development, the current Season’s plot and what events are live.

(And if you want to know where that old Bog Hag lives and what she’s up to, look no further. Is she evil or good or purely pragmatic? You decide...)

PVE IS LEGIT

Huzzah! I’ve said it often, but one of the ambitions of Exiles is to have a living, breathing world, with ebbs and flows of faction, politics and motivations (even morality, if Lord British can dig it), so having our PvE system online is a big milestone for the team.

Questing, events, making choices and finding caches of valuables are all driven by your actions (and achievements) in the game and that system saw its debut in this last build. The screenshot below is a big deal for us, not because the Ogre is trying to squash a bug, but because his actions are driven by the backend of this game and that means we can push any NPC at any time with any abilities and any loot into the game in real-time. With no update. You’ll just get the goodness in your game.

STAVES

What’s an RPG without them? And this one is looking at you...

A CLOSER LOOK AT THE MAGE IN ACTION

Here’s a bunch of GIFs that illustrate how the Mage is coming along. Ol’ “MadeOfMagicks” here starts off with a classic-- the Fireball, which does decent damage (affected by stats and gear of course) along with a chance to Burn the enemy. (he rocks a Crit too, so Addrighar is in trouble)

Blizzard doesn’t do a ton of damage, but its Chill effect slows down the other player for a few rounds, offering some first-shot chances and decreasing the enemy’s defense.

Poor Addrighar. Poor, poor Addrighar...

Huzzah!

(please note that these GIFs made the Warrior look like a knucklehead. He is not a knucklehead and will soon have his revenge)

TRAYSURE

Last update, we showed what you might find in the placed (and random) treasure caches found around Embermark. Oftentimes, you’ll get a drop (random-ish), but sometimes you’ll get something specific. Something that matters to someone that might not be you...

ABILITY & TALENT BREAKDOWNS

Each Update, we’re featuring a couple of Abilities (active) and/or Talents (passive) to preview what kinds of effects the choices you make in developing your character have. This time, we’ve got one of each, both from the Warrior Class:

Devastating Swing

So the “devastating” part of this swing isn’t when it connects. It’s what comes after. This Warrior Ability does a decent amount of damage (affected by stats and what weapon you wield, of course), and then leads to an effect-over-time. It increases Crushing damage for 2 rounds after the move is played. So if you follow with Bash, Shoulder Rush or the armor-increased Skull Crush, your enemy is in for it.

Blood Madness

“No, my friend. You’re crazy.” This one isn’t an active Ability. This one’s passive, despite its name and disconcerting icon. Bleeding (an effect that can sit on either combatant) causes the Warrior to flip his/her lid, increasing the weapon damage they can do, and it’s increased if you spend more Talent Points on the Talent (max of 5). The interesting part is this-- either combatant bleeding triggers this effect, and both means PUNISH.

THE 3D LOOT INSPECTOR

Want to check out that new piece of loot that just dropped on your character without having to equip it (or upgrade it... or salvage it)? Booyah:

See that triple-dot on the bottom-right part of the armor’s description box? Click that to get all the details you could ever want about a Pounding Mage Chest.

REMEMBER

We’ll keep sharing details as we head into testing (remember to PM TheWizard on the Exiles forums if you want in on closed testing & beta later), and you can count on early impressions from the testers throughout our various channels.

If you haven’t already, follow along with the Exiles development on Twitter, Instagram and Facebook. And if you haven’t, I’ll find you. And SMITE you.

DISCORD, IF YOU WANT TO KNOW...

If you want to hear about the game, ask questions or connect with others who are helping the development team think about features, design and narrative, hop into the Discord Channel for live chat and say hi-- it’s a friendly lot and there are daily shenanigans (there’s even a Shenaniganizer).

BONUS: A VERY BAD GUY

Very. ‘Cross the Breaches came many a monster. Not all of them were rampaging. Some were measured, thoughtful, insightful...

(back-only is shown to you on purpose for dramatic effect)

BONUS BONUS: SCREENSHOT

Oh boy-- Armadillo is coming in with Destruction’s Wake, and Nezuja’s not super-happy about it, given his 74 remaining HP...

Ha! You thought after the Bonus Bonus, we were done!

WRONG.

Remember how I’ve been showing the evolution of Embermark’s awful, awful wolves from concept to in-the-game? Well, here’s the next step-- what kind of RPG wolf would she be without a blood-curdling howl?

Now we’re done (till next month)!
