#im smarter than Elon musk

Text

my brother being pathetic again haha we love to see it. i feel like we had one week where the threat of our parents wasnt right behind us and we talked normally but now theyre back and hes like 'and ummm... how much money did you make over the summer 😂😂'

#'im always grinding for real ☝️🤓' then why have i worked 38 hours a week for 3 weeks#'oh well work smarter not harder then ☝️🤓' you just play the sport you already play that our dad fully supports dumb bitch#my dad used to be a lifeguard but never heard of inservice training and never worked over 5 hours a shift. do you think he fw me#brother was like 'you probably have less money than me now 😁😁'#'yes i spend it' AND THIS MF LOOKED AT ME ALL FAKE SHOCKED#SORRY MOMMY AND DADDY DONT GIVE ME MY FUCKIN MONEY#this just means he doesnt get rides home for a week unless he pays for gas. alright elon musk lets see it yk

1 note

Text

Against AGI

I don't like the term "AGI" (Short for "Artificial General Intelligence").

Essentially, I think it functions to obscure the meaning of "intelligence", and that arguments about AGIs, alignment, and AI risk involve using several subtly different definitions of the term "intelligence" depending on which part of the argument we're talking about.

I'm going to use this explanation by @fipindustries as my example, and I am going to argue with it vigorously, because I think it is an extremely typical example of the way AI risk is discussed:

In that essay (originally a script for a YouTube Video) @fipindustries (Who in turn was quoting a discord(?) user named Julia) defines intelligence as "The ability to take directed actions in response to stimulus, or to solve problems, in pursuit of an end goal"

Now, already that is two definitions. The ability to solve problems in pursuit of an end goal almost certainly requires the ability to take directed actions in response to stimulus, but something can also take directed actions in response to stimulus without an end goal and without solving problems.

So, let's take that quote to be saying that intelligence can be defined as "The ability to solve problems in pursuit of an end goal"

Later, @fipindustries says, "The way Im going to be using intelligence in this video is basically 'how capable you are to do many different things successfully'"

In other words, as I understand it, the more separate domains in which you are capable of solving problems successfully in pursuit of an end goal, the more intelligent you are.

Therefore Donald Trump and Elon Musk are two of the most intelligent entities currently known to exist. After all, throwing money and subordinates at a problem allows you to solve almost any problem; therefore, in the current context the richer you are the more intelligent you are, because intelligence is simply a measure of your ability to successfully pursue goals in numerous domains.

This should have a radical impact on our pedagogical techniques.

This is already where the slipperiness starts to slide in. @fipindustries also often talks as though intelligence has some *other* meaning:

"we have established how having more intelligence increases your agency."

Let us substitute the definition of "intelligence" given above:

"we have established how the ability to solve problems in pursuit of an end goal increases your agency"

Or perhaps,

"We have established how being capable of doing many different things successfully increases your agency"

Does that need to be established? It seems like "Doing things successfully" might literally be the definition of "agency", and if it isn't, it doesn't seem like many people would say, "agency has nothing to do with successfully solving problems, that's ridiculous!"

Much later:

"And you may say well now, intelligence is fine and all but there are limits to what you can accomplish with raw intelligence, even if you are supposedly smarter than a human surely you wouldn’t be capable of just taking over the world unimpeded, intelligence is not this end all be all superpower."

Again, let us substitute the given definition of intelligence:

"And you may say well now, being capable of doing many things successfully is fine and all but there are limits to what you can accomplish with the ability to do things successfully, even if you are supposedly much more capable of doing things successfully than a human surely you wouldn’t be capable of just taking over the world unimpeded, the ability to do many things successfully is not this end all be all superpower."

This is... a very strange argument, presented as though it were an obvious objection. If we use the explicitly given definition of intelligence the whole paragraph boils down to,

"Come on, you need more than just the ability to succeed at tasks if you want to succeed at tasks!"

Yet @fipindustries takes it as not just a serious argument, but an obvious one that sensible people would tend to gravitate towards.

What this reveals, I think, is that "intelligence" here has an *implicit* definition which is not given directly anywhere in that post, but a number of the arguments in that post rely on said implicit definition.

Here's an analogy: it's as though I said that "having strong muscles" is "the ability to lift heavy weights off the ground"; this would mean that, say, a 98lb weakling operating a crane has, by definition, stronger muscles than any weightlifter.

Strong muscles are not *defined* as the ability to lift heavy objects off the ground; they are a quality which allows you to be more efficient at lifting heavy objects off the ground with your body.

Intelligence is used the same way at several points in that talk; it is discussed not as "the ability to successfully solve tasks" but as a quality which increases your ability to solve tasks.

This, I think, is the only way to make sense of the paragraph: that intelligence is one of many qualities, all of which can be used to accomplish tasks.

Speaking colloquially, you know what I mean if I say, "Having more money doesn't make you more intelligent," but this is a contradiction in terms if we define intelligence as the ability to successfully accomplish tasks.

Rather, colloquially speaking we understand "intelligence" as a specific *quality* which can increase your ability to accomplish tasks, one of *many* such qualities.

Say we want to solve a math problem; we could reason about it ourselves, or pay a better mathematician to solve it, or perhaps we are very charismatic and we convince a mathematician to solve it.

If intelligence is defined as the ability to successfully solve the problem, then all of those strategies are examples of intelligence, but colloquially, we would really only refer to the first as demonstrating "intelligence".

So what is this mysterious quality that we call "intelligence"?

Well...

This is my thesis: I don't think people who talk about AI risk really define it rigorously at all.

For one thing, to go way back to the title of this monograph, I am not totally convinced that a "General Intelligence" exists at all in the known world.

Look at, say, Michael Jordan. Everybody agrees that he is an unmatched basketball player. His ability to successfully solve the problems of basketball, even in the face of extreme resistance from other intelligent beings, is very well known.

Could he apply that exact same genius to, say, advancing set theory?

I would argue that the answer is no, because he couldn't even transfer that genius to baseball, which seems on the surface like a very closely related field!

It's not at all clear to me that living beings have some generalized capacity to solve tasks; instead, they seem to excel at some and struggle heavily with others.

What conclusions am I drawing?

Don't get me wrong, this is *not* an argument that AI risk cannot exist, or an argument that nobody should think about it.

If anything, it's a plea to start thinking more carefully about this stuff precisely because it is important.

So, my first conclusion is that, lacking a model for a "General Intelligence", any theorizing about an "Artificial General Intelligence" is necessarily incredibly speculative.

Second, the current state of pop theory on AI risks is essentially tautology. A dangerous AGI is defined as, essentially, "An AI which is capable of doing harmful things regardless of human interference." And the AI safety rhetoric is "In order to be safe, we should avoid giving a computer too much of whatever quality would render it unsafe."

This is essentially useless, the equivalent of saying, "We need to be careful not to build something that would create a black hole and crush all matter on Earth into a microscopic point."

I certainly agree with the sentiment! But in order for that to be useful you would have to have some idea of what kind of thing might create a black hole.

This is how I feel about AI risk. In order to talk about what it might take to have a safe AI, we need a far more concrete definition than "Some sort of machine with whatever quality renders a machine uncontrollable".

26 notes

Text

bac for a minute only to bitch about this guy in my class. hes a goddamn grade A asshole. quotes andrew potate chips all the time, says there are some positive aspects to slavery and how he wishes black people were still slaves in america, calls dark skinned guys nigger and whatnot and tells them to go back and work in cotton fields, is genereally a pervert lmao like today he took my friends brand new eraser and played with it by pulling it out of the apsara cover and putting in back in and saying really weird sexual stuff like mmm yeah nice and slow damn thats a tight one no worries i can get it in what a long eraser. today in english class the guys were being rowdy and shit and my teacher is like the girls of this class are so much smarter and more empathetic than the guys and he shouts "if girls are so smart then why is there no female elon musk or female mukesh ambani or female messi or female da vincis. females cant do anything lol" i swear im going to fucking lose it if i be in his prsence for more than a month i will actually kill him i told him to go kill himself once but hes under the notion that i like him or somethign anyway i hope i can obliterate people because he deserves to have his balls ripped off and eyeballs made into soup. one note = one cast

5 notes

Text

jesus christ okay visiting relatives yesterday was alright but like jesus christ my aunts husband tried to debate me on why he thinks elon musk is a genius and is changing the world it was so fkn miserable like this guy was just convinced that elon is the most green guy ever and that he also invented solar panels solar energy electric cars etc. it was so embarrassing cause i could almost physically feel this guy switch into debate mode the moment he realized i wasnt big on elon and like he kept trying to catch me in contradictions by bringing up other stuff like volkswagen but since im sorta a bit smarter than i give myself credit for he didnt and it was just SUCH a awfully boring conversation. he also later insisited that elon invented paypal and bet 20$ on it but i didnt wanna humor him but then immediately after two of my family members googled it and got like the actual list of like 8 ppl or so who actually invented it so that was kinda amusing o ya also when i casually mentioned like preferring toyota (honestly just to mention any other brand) he said i need to support american cars and started saying i love “j*p” cars and used that word like 100 time OH YEA also anytime i mentioned something unarguably awful or stupid elon has done he would go “yea well he’s on the spectrum” and he legit did this like 5 times like that exact phrase jesus... it was soooo embarrassing not the worst tho cause i was a bit surprised how like normal i was acting abt ‘debating’ such an insufferable person UGH like this guy was straight up convinced elon is like the modern day jesus or something lol and would also keep going “well we’ll see how it plays out as things go along” when i mentioned my lack of interest in his ‘inventions’ due to them existing solely as luxury items and not actual things that changed the whole industry like smthn like the iphone. 
also luckily since the guy was like heavy on games it wasnt the worst day since i could easily just talk abt that w/ him whenever conversation arose. but still JESUS

#aside from taht yesterday was p good well like bearable#and even w/ that i didnt give him the satisfaction of anything so it was like not the worst thing#but rly annoying nonethelss

2 notes

Text

im so fuckin sick of people talking about billionaires like they have any value that i actually real life pissed off my coworker by continuously cutting her off while she tried to tell us how elon musk is like, surprisingly smart! He’s not. hes not hes not. he had capital and other people do shit for him. he doesnt buy the land he doesnt design the cars he doesnt do whatever he claims to while on some stupid podcast shilling himself. and it was really rude of me to cut her off like that and i get it. shut up anyway. smarter people than you and i have extensively debunked every one of his stupid claims ideas and thoughts.

1 note

Photo

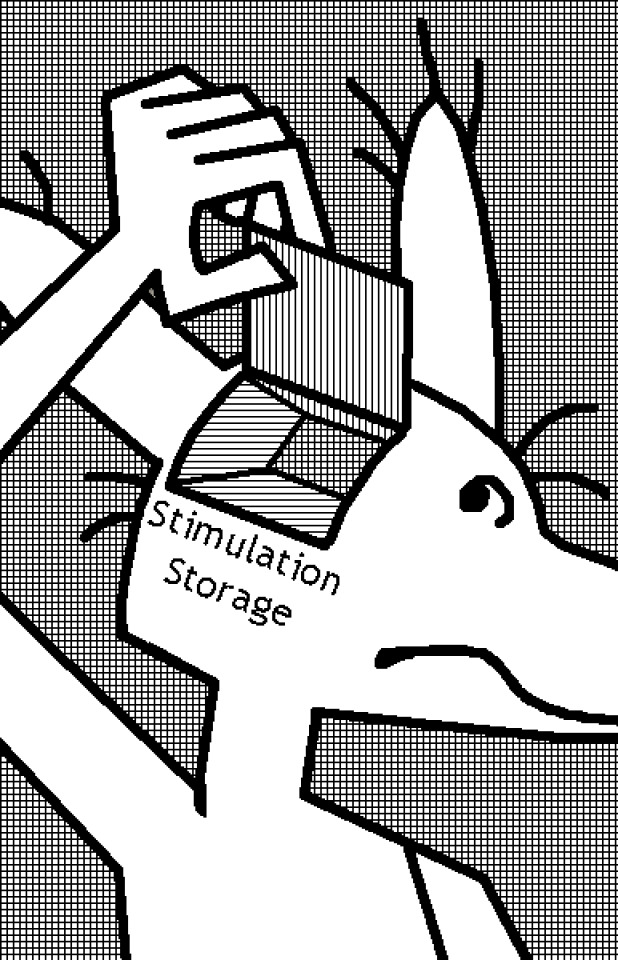

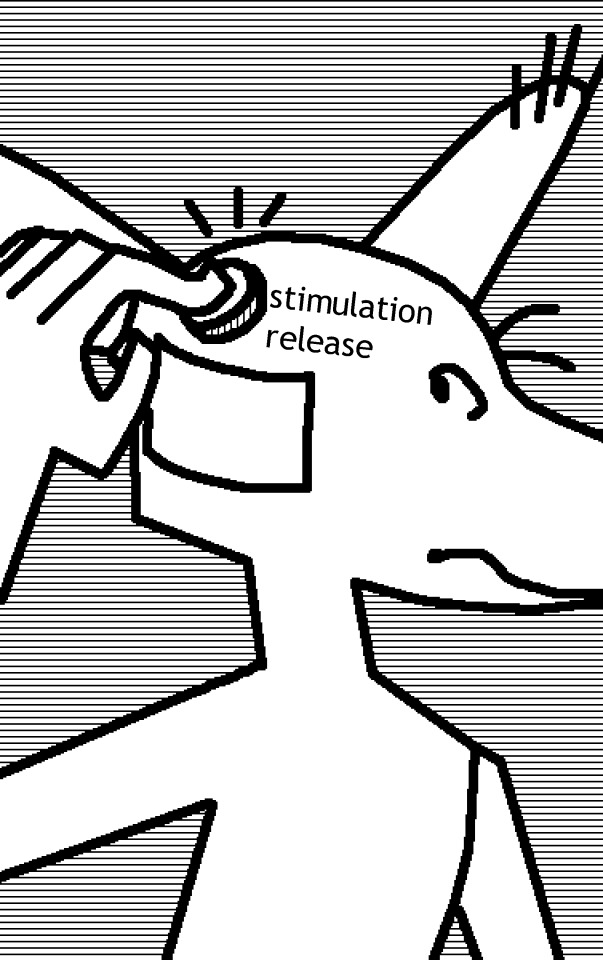

My Epic New Invention For ADHD That Will Put Big Adderall Out Of Business

#this idea came to me from being constantly at home for all these months for quarentine#thinking about how nice it wouldve been if I could have somehow saved up all that stimulation from all 4 awful years of highschool#where i was constantly overstimulated every day#its the perfect solution. u absorb unwanted stimulation when overstimulated to store for later#and release it when you're understimulated#elon musk is working on that brain chip when he should be working on THIS#fucking idiot im smarter than him#art#comic#digital art#gif#adhd#pixel art#everyones a dog its just like A Goofy Movie

12K notes

Note

alex do u know what veni vidi vici means also look -> 🚀 rocket ship did you know elon musk went on a rocket ship apparently he the worlds richest person and made fun of jeff bezo for being number two also his wife divorced him i think that’s very funny if i had a wife we would have a divorce because it’s be funny if i had a wife his name would be pepsi after pepsi cola did you know pepsi very carefully planned their logo with things such as gravitational pull that’s kinda weirdchamp yknow people always call people like issac newton really smart but like was he all he did was discover gravity and gravity’s a pretty obvious thing like if i was around then i could’ve discovered gravity im like ninety nine percent sure i’m smarter than issac newton i could obliterate him alex do you know what veni vidi veci means it sounds like latin and you know latin ily 🚀

veni vidi vici is usually translated as "i came, i saw, i conquered" its a very famous quote by caesar

and yes its latin i love you too

7 notes

Text

Google’s AI ambitions show promise- ‘if it doesn’t kill us’

Google’s path to developing machine-learning tools illustrates the stark challenge that tech companies face in trying to make machines act like humans

Machines may yet take over the world, but first they must learn to recognize your dog.

To hear Google executives tell it at their annual developer conference this week, the technology industry is on the cusp of an artificial intelligence, or AI, revolution. Computers, without guidance, will be able to spot disease, engage humans in conversation and creatively outsmart world champions in competition. Such breakthroughs in machine learning have been the stuff of science fiction since Stanley Kubrick’s 1968 film 2001: A Space Odyssey.

“I’m incredibly excited about the progress we’re making,” CEO Sundar Pichai told a crowd of 7,000 developers at Google I/O from an outdoor concert stage. “Humans can achieve a lot more with the support of AI assisting them.”

For better and worse, the company’s near-term plans for the technology are more Office Space than Terminator. Think smartphones that can recognize pets in photos, appropriately respond to text messages, and find a window in your schedule where you should probably go to the gym. Googlers repeatedly boasted about how its computers could now automatically tag all of someone’s pictures with a pet.

Mario Klingemann, a self-described code artist, said he is using Google’s machine-learning tools to have his computer make art for him by sorting through pictures on his computer and combining them to form new images.

“All I have to do is sit back and let whatever it has created pass by and decide if I like it or not,” Klingemann told the audience on Thursday night. In one of his pieces, called “Run, Hipster. Run”, Google’s software had attached some fashionable leather boots to a hip bone.

It may seem like the latest example where Silicon Valley talks about changing society yet gives the world productivity apps. But it also illustrates the stark challenge that technology companies face in trying to make machines act like humans.

“It’ll be really, really small things that are just a bit more intuitive,” said Patrick Fuentes, 34, a mobile developer for Nerdery in Minneapolis. He considered autocorrect on touchscreen keyboards a modern victory for machine learning. Referring to Skynet, the malicious computer network that turns against the human race in Terminator, Fuentes said: “We’re not there yet.”

Mario Queiroz introduces Google Home during the Google I/O 2016 developers conference. Photograph: Stephen Lam/Reuters

Google is considered the sector’s leader in artificial intelligence after it began pouring resources into the area about four years ago. During a three-day conference that took on the vibe of a music festival with outdoor merchandise and beer vendors, Pichai made clear he sees machine learning as his company’s future.

He unveiled the new Google Assistant, a disembodied voice that will help users decide what movie to see, keep up with email, and control lights and music at home. After showing how Google’s machines can now recognize many dogs, he explained how he wants to use the same image recognition technology to spot damage to the eyes caused by diabetes. He boasted that Google’s AI software, AlphaGo, showed creativity when it beat a world champion at Go, the ancient Chinese board game considered more difficult than chess.

This might seem like an odd push for a firm that makes its money from cataloging the web and showing people ads. But the focus is part of a broader transition in the technology sector from helping consumers explore unlimited options online to telling them the best choice.

For instance, several developers gave the example of smarter ways to predict what people are looking for online given their past interests.

“If this guy likes sports and, I don’t know, drinks, you should give him these suggestions,” said Mikhail Ivashchenko, the chief technology officer of BeSmart in Kyrgyzstan. “It will know exactly what you’re looking for.”

Unprompted, Ivashchenko said, “It’s not quite Skynet.” His nearby friend, David Renton, a recent computer science graduate from Galway, Ireland, then mused how it would be awesome if Google could eventually develop a Skynet equivalent. “Think of the applications if it doesn’t kill us,” Renton said.

John Giannandrea, a Google vice-president of engineering who focuses on machine intelligence, said he won’t declare victory until Google’s software can read a text and naturally paraphrase it. Another challenge is that even the smartest machines these days have trouble transferring their knowledge from one activity to another.

For instance, AlphaGo, Google’s software from the Go competition, wouldn’t be able to apply its accumulated skills to chess or tic-tac-toe.

Still, Giannandrea said it’s hard not to get excited by recent gains in teaching computers how to recognize patterns in images.

“The field is getting a little bit overhyped because of the progress we’re seeing,” he said. “Things that are hard for people to do we can teach computers to do. Things that are easy for people are hard for computers.”

Of course, delegating even small decisions to machines has caused a flurry of discussions about the ethics of artificial intelligence. Several technology leaders, including Stephen Hawking and Elon Musk, have called for more research on the social impact of artificial intelligence.

For instance, Klingemann, the code artist, said he is already contemplating whether he needs to change his title.

“I have become more of a curator than a creator,” he said.

Read more: www.theguardian.com

The post Google’s AI ambitions show promise- ‘if it doesn’t kill us’ appeared first on caredogstips.com.

0 notes