#Hadoop Ecosystem


Java's Lasting Impact: A Deep Dive into Its Wide Range of Applications

Java programming stands as a towering pillar in the world of software development, known for its versatility, robustness, and extensive range of applications. Since its inception, Java has played a pivotal role in shaping the technology landscape. In this comprehensive guide, we will delve into the multifaceted world of Java programming, examining its wide-ranging applications, discussing its significance, and highlighting how ACTE Technologies can be your guiding light in mastering this dynamic language.

The Versatility of Java Programming:

Java programming is synonymous with adaptability. It's a language that transcends boundaries and finds applications across diverse domains. Here are some of the key areas where Java's versatility shines:

1. Web Development: Java has long been a favorite choice for web developers. Robust and scalable, it powers dynamic web applications, allowing developers to create interactive and feature-rich websites. Java-based web frameworks like Spring and JavaServer Faces (JSF) simplify the development of complex web applications.

2. Mobile App Development: Android, the most widely used mobile operating system in the world, has long supported Java for app development, alongside Kotlin, which Google now recommends for new apps. Java's mature libraries and large developer base keep it a practical choice for Android applications that run on a wide range of devices.

3. Desktop Applications: Java's Swing and JavaFX libraries enable developers to craft cross-platform desktop applications with sophisticated graphical user interfaces (GUIs). This cross-platform compatibility ensures that your applications work on Windows, macOS, and Linux.

4. Enterprise Software: Java's strengths in scalability, security, and performance make it a preferred choice for developing enterprise-level applications. Customer Relationship Management (CRM) systems, Enterprise Resource Planning (ERP) software, and supply chain management solutions often rely on Java to deliver reliability and efficiency.

5. Game Development: Java isn't limited to business applications; it's also a contender in the world of gaming. Game developers use Java, along with libraries like LibGDX, to create both 2D and 3D games. The language's versatility allows game developers to target various platforms.

6. Big Data and Analytics: Java plays a significant role in the big data ecosystem. Apache Hadoop is written in Java, and Apache Spark, written mainly in Scala, runs on the Java Virtual Machine and offers a Java API. This makes Java a natural fit for processing and analyzing massive datasets.

7. Internet of Things (IoT): Java's ability to run on embedded devices positions it well for IoT development. It is used to build applications for smart homes, wearable devices, and industrial automation systems, connecting the physical world to the digital realm.

8. Scientific and Research Applications: In scientific computing and research projects, Java's performance and libraries for data analysis make it a valuable tool. Researchers leverage Java to process and analyze data, simulate complex systems, and conduct experiments.

9. Cloud Computing: Java is a popular choice for building cloud-native applications and microservices. It is compatible with cloud platforms such as AWS, Azure, and Google Cloud, making it integral to cloud computing's growth.

Why Java Programming Matters:

Java programming's enduring significance in the tech industry can be attributed to several compelling reasons:

Platform Independence: Java's "write once, run anywhere" philosophy allows code to be executed on different platforms without modification. This portability enhances its versatility and cost-effectiveness.

Strong Ecosystem: Java boasts a rich ecosystem of libraries, frameworks, and tools that expedite development and provide solutions to a wide range of challenges. Developers can leverage these resources to streamline their projects.

Security: Java places a strong emphasis on security. Features like sandboxing and automatic memory management enhance the language's security profile, making it a reliable choice for building secure applications.

Community Support: Java enjoys the support of a vibrant and dedicated community of developers. This community actively contributes to its growth, ensuring that Java remains relevant, up-to-date, and in line with industry trends.

Job Opportunities: Proficiency in Java programming opens doors to a myriad of job opportunities in software development. It's a skill that is in high demand, making it a valuable asset in the tech job market.

Java programming is a dynamic and versatile language that finds applications in web and mobile development, enterprise software, IoT, big data, cloud computing, and much more. Its enduring relevance and the multitude of opportunities it offers in the tech industry make it a valuable asset in a developer's toolkit.

As you embark on your journey to master Java programming, consider ACTE Technologies as your trusted partner. Their comprehensive training programs, expert guidance, and hands-on experiences will equip you with the skills and knowledge needed to excel in the world of Java development.

Unlock the full potential of Java programming and propel your career to new heights with ACTE Technologies. Whether you're a novice or an experienced developer, there's always more to discover in the world of Java. Start your training journey today and be at the forefront of innovation and technology with Java programming.


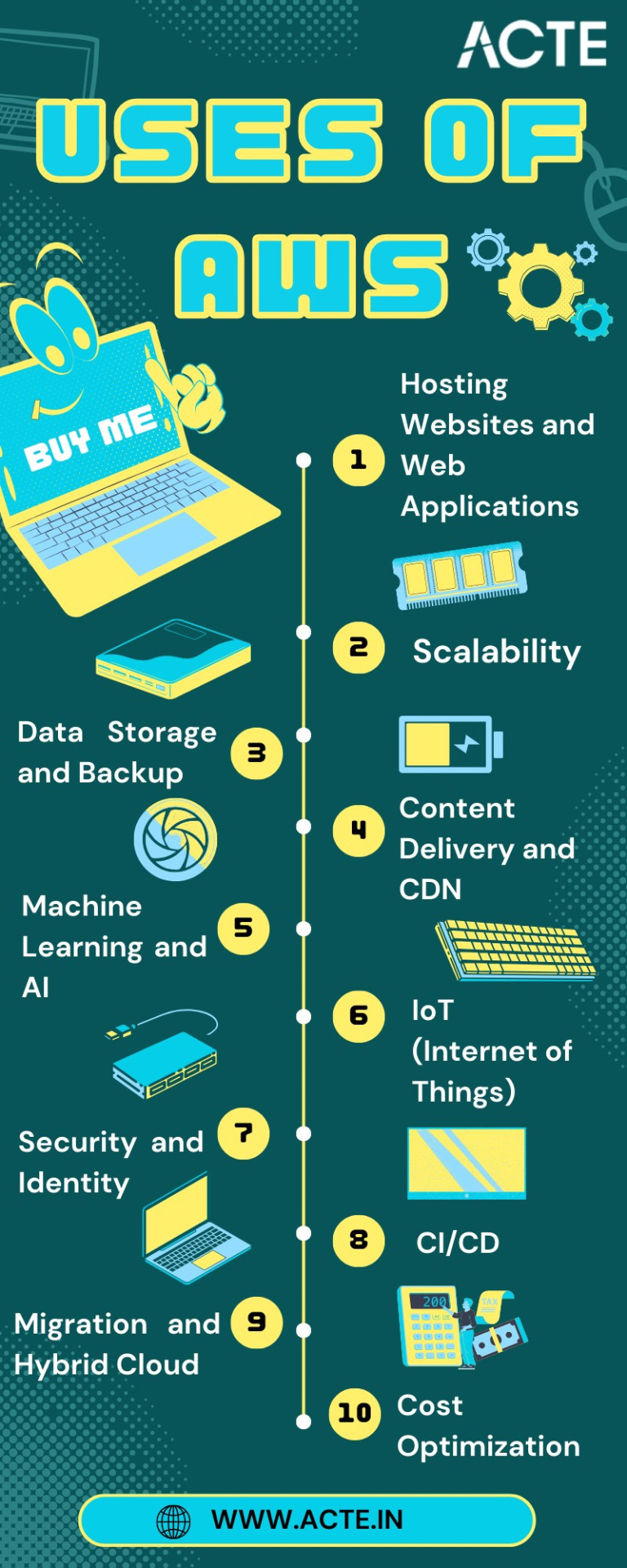

Your Journey Through the AWS Universe: From Amateur to Expert

In the ever-evolving digital landscape, cloud computing has emerged as a transformative force, reshaping the way businesses and individuals harness technology. At the forefront of this revolution stands Amazon Web Services (AWS), a comprehensive cloud platform offered by Amazon. AWS is a dynamic ecosystem that provides an extensive range of services, designed to meet the diverse needs of today's fast-paced world.

This guide is your key to unlocking the potential of AWS. We'll take a tour of the AWS universe, exploring its many applications and why it has become an indispensable tool for organizations worldwide. Whether you're a seasoned IT professional or a newcomer to cloud computing, this resource will illuminate the path to mastering AWS and leveraging its capabilities for innovation and growth. Join us as we demystify AWS and discover how it is reshaping the way we work, innovate, and succeed in the digital age.

Navigating the AWS Universe:

Hosting Websites and Web Applications: AWS provides a secure and scalable place for hosting websites and web applications. Services like Amazon EC2 and Amazon S3 empower businesses to deploy and manage their online presence with unwavering reliability and high performance.

Scalability: At the core of AWS lies its remarkable scalability. Organizations can seamlessly adjust their infrastructure according to the ebb and flow of workloads, ensuring optimal resource utilization in today's ever-changing business environment.

Data Storage and Backup: AWS offers a suite of robust data storage solutions, including Amazon S3 for object storage and Amazon EBS for block storage. These services cater to a wide range of data types and are designed for high durability and availability.

Databases: AWS presents a panoply of database services such as Amazon RDS, DynamoDB, and Redshift, each tailored to meet specific data management requirements. Whether it's a relational database, a NoSQL database, or data warehousing, AWS offers a solution.

Content Delivery and CDN: Amazon CloudFront, AWS's content delivery network (CDN) service, caches content at edge locations around the world and delivers it with low latency and high transfer speeds. This keeps the user experience consistent regardless of geographical location.

Machine Learning and AI: AWS boasts a rich repertoire of machine learning and AI services. Amazon SageMaker simplifies the development and deployment of machine learning models, while pre-built AI services cater to natural language processing, image analysis, and more.

Analytics: In the heart of AWS's offerings lies a robust analytics and business intelligence framework. Services like Amazon EMR enable the processing of vast datasets using popular frameworks like Hadoop and Spark, paving the way for data-driven decision-making.

IoT (Internet of Things): AWS IoT services provide the infrastructure for the seamless management and data processing of IoT devices, unlocking possibilities across industries.

Security and Identity: With an unwavering commitment to data security, AWS offers robust security features and identity management through AWS Identity and Access Management (IAM). Users wield precise control over access rights, ensuring data integrity.

DevOps and CI/CD: AWS simplifies DevOps practices with services like AWS CodePipeline and AWS CodeDeploy, automating software deployment pipelines and enhancing collaboration among development and operations teams.

Content Creation and Streaming: AWS Elemental Media Services facilitate the creation, packaging, and efficient global delivery of video content, empowering content creators to reach a global audience seamlessly.

Migration and Hybrid Cloud: For organizations seeking to migrate to the cloud or establish hybrid cloud environments, AWS provides a suite of tools and services to streamline the process, ensuring a smooth transition.

Cost Optimization: AWS's commitment to cost management and optimization is evident through tools like AWS Cost Explorer and AWS Trusted Advisor, which empower users to monitor and control their cloud spending effectively.

In this comprehensive journey through the expansive landscape of Amazon Web Services (AWS), we've embarked on a quest to unlock the power and potential of cloud computing. AWS, standing as a colossus in the realm of cloud platforms, has emerged as a transformative force that transcends traditional boundaries.

As we bring this odyssey to a close, one thing is abundantly clear: AWS is not merely a collection of services and technologies; it's a catalyst for innovation, a cornerstone of scalability, and a conduit for efficiency. It has revolutionized the way businesses operate, empowering them to scale dynamically, innovate relentlessly, and navigate the complexities of the digital era.

In a world where data reigns supreme and agility is a competitive advantage, AWS has become the bedrock upon which countless industries build their success stories. Its versatility, reliability, and ever-expanding suite of services continue to shape the future of technology and business.

Yet, AWS is not a solitary journey; it's a collaborative endeavor. Institutions like ACTE Technologies play an instrumental role in helping individuals master AWS. Through comprehensive training and education, learners are not merely equipped with knowledge; they become skilled professionals ready to navigate the AWS universe with confidence.

As we contemplate the future, one thing is certain: AWS is not just a destination; it's an ongoing journey. It's a journey toward greater innovation, deeper insights, and boundless possibilities. AWS has not only transformed the way we work; it's redefining the very essence of what's possible in the digital age. So, whether you're a seasoned cloud expert or a newcomer to the cloud, remember that AWS is not just a tool; it's a gateway to a future where technology knows no bounds, and success knows no limits.


Unlock the World of Data Analysis: Programming Languages for Success!

💡 When it comes to data analysis, choosing the right programming language can make all the difference. Here are some popular languages that empower professionals in this exciting field:

https://www.clinicalbiostats.com/

🐍 Python: Known for its versatility, Python offers a robust ecosystem of libraries like Pandas, NumPy, and Matplotlib. It's beginner-friendly and widely used for data manipulation, visualization, and machine learning.

📈 R: Built specifically for statistical analysis, R provides an extensive collection of packages like dplyr, ggplot2, and caret. It excels in data exploration, visualization, and advanced statistical modeling.

🔢 SQL: Structured Query Language (SQL) is essential for working with databases. It allows you to extract, manipulate, and analyze large datasets efficiently, making it a go-to language for data retrieval and management.

💻 Java: Widely used in enterprise-level applications, Java works with big data frameworks such as Apache Hadoop and Apache Spark. It provides scalability and performance for complex data analysis tasks.

📊 MATLAB: Renowned for its mathematical and numerical computing capabilities, MATLAB is favored in academic and research settings. It excels in data visualization, signal processing, and algorithm development.

🔬 Julia: Known for its speed and ease of use, Julia is gaining popularity in scientific computing and data analysis. Its syntax resembles mathematical notation, making it intuitive for scientists and statisticians.

🌐 Scala: Scala, with its seamless integration with Apache Spark, is a valuable language for distributed data processing and big data analytics. It combines object-oriented and functional programming paradigms.

💪 The key is to choose a language that aligns with your specific goals and preferences. Embrace the power of programming and unleash your potential in the dynamic field of data analysis! 💻📈

#DataAnalysis #ProgrammingLanguages #Python #RStats #SQL #Java #MATLAB #JuliaLang #Scala #DataScience #BigData #CareerOpportunities #biostatistics #onlinelearning #lifesciences #epidemiology #genetics #pythonprogramming #clinicalbiostatistics #datavisualization #clinicaltrials


Data Engineering Bootcamp Training – Featuring Everything You Need to Accelerate Growth

If you want your team to master data engineering skills, you should explore the potential of data engineering bootcamp training focusing on Python and PySpark. That will provide your team with extensive knowledge and practical experience in data engineering. Here is a closer look at the details of how data engineering bootcamps can help your team grow.

Big Data Concepts and Systems Overview for Data Engineers

This foundational data engineering bootcamp module offers a comprehensive understanding of big data concepts, systems, and architectures. The topics covered include distributed computing, Apache Spark, and components of the Hadoop ecosystem. Together they equip teams to manage complex data engineering challenges in real-world settings.
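To make the distributed computing idea more concrete, here is a minimal single-machine sketch of the map, shuffle, and reduce steps that Hadoop-style word counting follows. It uses plain Python rather than Hadoop or Spark, and the function names and sample lines are assumptions made purely for illustration.

```python
from collections import defaultdict


def map_phase(lines):
    """Emit a (word, 1) pair for every word, as a mapper would."""
    for line in lines:
        for word in line.lower().split():
            yield word, 1


def shuffle_phase(pairs):
    """Group values by key, mimicking the shuffle step between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Sum the values collected for each key, as a reducer would."""
    return {key: sum(values) for key, values in grouped.items()}


# Hypothetical input; on a cluster each line could live on a different node.
lines = ["big data needs big tools", "data tools"]
word_counts = reduce_phase(shuffle_phase(map_phase(lines)))
print(word_counts)  # {'big': 2, 'data': 2, 'needs': 1, 'tools': 2}
```

On a real cluster the map and reduce steps run in parallel across machines and the framework handles the shuffle; the logic per record stays this simple.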

Translating Data into Operational and Business Insights

Contrary to what many people assume, data engineering is much more than just processing data. It also involves extracting actionable insights to drive business decisions. Data engineering bootcamp courses emphasize translating raw data into operational and business insights. Learners are equipped with techniques to transform, aggregate, and analyze data so that they can deliver meaningful insights to stakeholders.

Data Processing Phases

Efficient data engineering requires a deep understanding of the data processing life cycle. Data engineering bootcamps introduce teams to the main phases of data processing: ingestion, storage, processing, and visualization. Employees also gain practical experience in designing and deploying data processing pipelines using Python and PySpark. This translates into more efficient and reliable data workflows.
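As a rough illustration of those phases, the sketch below chains ingestion, processing, storage, and visualization using only the Python standard library. The sample data, the function names, and the JSON string standing in for a data store are all assumptions made for this example; a real pipeline would typically use PySpark and an actual storage layer.

```python
import csv
import io
import json

# Hypothetical raw input; in practice this would come from files, APIs, or streams.
RAW_CSV = """region,amount
north,120
south,80
north,30
"""


def ingest(text):
    """Ingestion: parse raw CSV text into a list of dict records."""
    return list(csv.DictReader(io.StringIO(text)))


def process(records):
    """Processing: total the amount per region."""
    totals = {}
    for row in records:
        totals[row["region"]] = totals.get(row["region"], 0) + int(row["amount"])
    return totals


def store(totals):
    """Storage: serialize the result (a JSON string stands in for a data store)."""
    return json.dumps(totals, sort_keys=True)


def visualize(totals):
    """Visualization: render a text bar chart, one '#' per 10 units."""
    return "\n".join(
        f"{region:<6}{'#' * (amount // 10)}"
        for region, amount in sorted(totals.items())
    )


totals = process(ingest(RAW_CSV))
stored = store(totals)
print(stored)
print(visualize(totals))
```

Keeping each phase in its own function makes it easier to swap one stage, for example replacing the text chart with a plotting library, without touching the others.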

Running Python Programs, Control Statements, and Data Collections

Python is one of the most popular programming languages and is widely used for data engineering. For this reason, data engineering bootcamps offer an introduction to Python programming and cover basic concepts such as running Python programs, control statements, and common data collections. Additionally, teams learn how to write efficient and secure Python code to process and manipulate data.
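For readers new to these basics, here is a small sketch that combines the three common collections (list, set, dict) with `for`, `if`/`elif`/`else`, and `while` statements. The readings and thresholds are made-up values for illustration. Saved under a hypothetical name such as `readings.py`, it could be run with `python readings.py`.

```python
readings = [21.5, 19.0, 23.1, 19.0, 25.4]  # list: ordered, allows duplicates
unique_readings = set(readings)            # set: duplicates removed
labels = {}                                # dict: maps each reading to a label

# for + if/elif/else: classify every distinct reading.
for value in sorted(unique_readings):
    if value < 20:
        labels[value] = "low"
    elif value < 24:
        labels[value] = "normal"
    else:
        labels[value] = "high"

# while: walk the original list by index and count the high readings.
high_count = 0
index = 0
while index < len(readings):
    if labels[readings[index]] == "high":
        high_count += 1
    index += 1

print(labels)
print("high readings:", high_count)
```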

Functions and Modules

Effective data engineering workflows demand modular and reusable code, which makes this module essential for understanding data engineering work processes. It focuses on functions and modules in Python, enabling teams to turn logic into functions and package code as reusable modules. The course introduces participants to sound code organization, thereby improving productivity and maintainability in data engineering projects.
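A short sketch of what that can look like in practice: cleaning logic is split into small functions that could live together in one module. The module name `cleaning.py`, the function names, and the sample record are hypothetical and chosen only for this example.

```python
"""cleaning.py: a hypothetical module of reusable data-cleaning helpers."""


def normalize_name(raw):
    """Collapse extra whitespace and apply title case to a name field."""
    return " ".join(raw.split()).title()


def parse_amount(raw, default=0.0):
    """Convert text such as '1,250.50' to a float; return default on bad input."""
    try:
        return float(raw.replace(",", ""))
    except (ValueError, AttributeError):
        return default


def clean_record(record):
    """Build a cleaned copy of one record by composing the helpers above."""
    return {
        "name": normalize_name(record["name"]),
        "amount": parse_amount(record["amount"]),
    }


cleaned = clean_record({"name": "  ada   lovelace ", "amount": "1,250.50"})
print(cleaned)  # {'name': 'Ada Lovelace', 'amount': 1250.5}
```

Another script in the same project could then reuse the helpers with `from cleaning import clean_record`, which is the kind of reuse the module describes.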

Data Visualization in Python

Clarity in data visualization is vital to communicating key insights and findings to stakeholders. This data engineering bootcamp module on data visualization emphasizes techniques that use Python libraries such as Seaborn and Matplotlib. During the course, teams learn how to design informative and visually striking charts, plots, and dashboards to communicate complex data relationships effectively.

Final word

To sum up, data engineering bootcamp training using Python and PySpark provides a gateway for teams to venture into the rapidly growing realm of data engineering. The training endows them with a solid foundation in big data concepts, practical experience in Python, and hands-on skills in data processing and visualization. Ensure that you choose an established course provider to enjoy the maximum benefits of data engineering courses.

For more information visit: https://www.webagesolutions.com/courses/WA3020-data-engineering-bootcamp-training-using-python-and-pyspark


Java Programming in High Demand: Invest in Your Future with a Boston Java Course

The tech landscape is constantly evolving, and Java remains a dominant force. In Boston, a city brimming with innovation and a thriving tech scene, Java developers are in high demand. Whether you're a recent graduate or looking for a career change, a well-designed Java programming course in Boston can equip you with the skills and knowledge to thrive in this dynamic field.

This blog post delves into the reasons why Java programming remains a top choice for businesses and developers alike. We'll explore the lucrative career paths a Java programming course in Boston can unlock, the essential skills you'll acquire, and how to choose the right program to launch your Java development journey.

Why Java Programming? A Timeless Language for Modern Applications

Java's popularity stems from its numerous advantages that continue to make it a valuable asset for businesses:

Platform Independence: Java boasts the "Write Once, Run Anywhere" (WORA) principle. Code written in Java can run on any platform with a Java Virtual Machine (JVM), making it highly portable and adaptable across various operating systems.

Object-Oriented Design: Java's object-oriented paradigm promotes code reusability, maintainability, and modularity. This leads to cleaner, more scalable applications that are easier to maintain and update in the long run.

Vast Ecosystem of Libraries and Frameworks: The Java ecosystem offers an extensive collection of pre-written code for common functionalities. Popular libraries and frameworks like Spring, Hibernate, and JUnit streamline development, saving developers time and effort.

Large and Active Community: Java enjoys a vast and supportive developer community. This translates to readily available resources, tutorials, forums, and online communities to assist you throughout your learning journey.

Strong Demand and Lucrative Careers: Java developers remain highly sought-after professionals. A Java programming course in Boston can equip you with the skills to secure exciting and well-paying positions in the dynamic Boston tech scene.

These factors combined make Java a powerful and versatile language, ensuring its continued relevance in today's rapidly changing technological landscape.

Career Launchpad: High-Demand Roles for Java Developers in Boston

Boston's booming tech sector presents a wealth of opportunities for aspiring Java developers. Here are some of the promising career paths a Java programming course in Boston can empower you to pursue:

Java Software Developer: Design, develop, test, and maintain software applications using Java technologies. You'll work on various projects, from building core functionalities to integrating with existing systems.

Web Developer (Java): Build the backend components of web applications using Java frameworks like Spring Boot. This role involves creating APIs, handling data persistence, and ensuring the smooth flow of data within a web application.

Android Developer: Develop mobile applications for the Android platform, which supports Java alongside Kotlin. You'll design user interfaces, write application logic, and optimize performance for a mobile environment.

Enterprise Application Developer: Create and maintain large-scale business applications for organizations in various sectors, such as finance, healthcare, and retail. These applications are often mission-critical and require a strong understanding of Java and enterprise architecture principles.

Big Data Developer: Utilize Java to manage and analyze massive datasets using frameworks like Apache Hadoop and Spark. This field is rapidly growing, with businesses increasingly relying on data insights to make informed decisions.

These are just a few examples, and the possibilities are vast. A Java programming course in Boston can provide you with the foundational skills and knowledge to launch your career in various exciting and in-demand Java development roles.

Mastering the Essentials: Skills Gained Through a Java Programming Course in Boston

A well-designed Java programming course in Boston equips you with the critical skills and knowledge sought after by Boston's leading companies. Here are some key areas you'll delve into:

Java Fundamentals: Gain a solid understanding of Java programming basics, including syntax, data types, control flow statements, and object-oriented programming concepts like classes, inheritance, and polymorphism.

Core Java Libraries and APIs: Learn how to utilize essential Java libraries like java.util, java.io, and java.net to perform common tasks such as working with collections, handling input/output operations, and network communication.

Java Frameworks: Explore popular Java frameworks like Spring Boot, which simplifies building web applications by providing a pre-configured development environment with features like dependency injection and auto-configuration.

Database Connectivity: Learn how to connect Java applications to relational databases using technologies like JDBC and object-relational mapping (ORM) tools like Hibernate. This allows you to store and retrieve data efficiently for your applications.

Testing and Debugging: Develop robust applications by understanding testing methodologies and utilizing tools like JUnit to write unit tests and identify potential bugs in your code.

Version Control Systems (VCS): Master tools like Git for version control, allowing you to track code changes, collaborate effectively with other developers, and revert to previous versions if necessary.

Problem-Solving and Algorithmic Thinking: Refine your problem-solving skills and learn how to design efficient algorithms to solve complex programming challenges. This skill is crucial for writing clean, maintainable, and scalable Java code.

Beyond Technical Skills: A well-rounded Java programming course in Boston may also incorporate essential soft skills such as communication, teamwork, and critical thinking. These skills matter in any development environment, allowing you to collaborate effectively with team members, understand project requirements, and communicate technical concepts clearly.

Finding the Perfect Fit: Choosing the Right Java Programming Course in Boston

With a plethora of Java programming courses available in Boston, selecting the right program is crucial for a successful learning experience. Here are some key factors to consider:

Curriculum and Course Content: Look for a comprehensive curriculum that covers the essential Java fundamentals, object-oriented programming concepts, core Java libraries and frameworks like Spring, and practical exercises to solidify your understanding.

Industry-Experienced Instructors: Instructors with real-world Java development experience can provide valuable insights into industry best practices and current trends. The ability to learn from seasoned professionals can significantly enhance your learning journey.

Hands-on Learning and Project-Based Learning: Effective Java programming courses in Boston emphasize hands-on learning through labs, projects, and simulations. This practical approach allows you to apply your theoretical knowledge to real-world scenarios, preparing you for the demands of a developer role.

Career Support Services: Many programs offer career support services such as resume writing workshops, interview preparation techniques, and job placement assistance. These services can significantly enhance your job search prospects after completing your Java programming course in Boston.

Learning Format and Flexibility: Consider your learning style and schedule when choosing a course format. Many programs offer full-time, part-time, online, or blended learning options to cater to diverse needs.

By carefully considering these factors, you can choose a Java programming course in Boston that aligns with your learning goals and career aspirations.

Invest in Your Future: Embark on Your Java Development Journey

The world of technology is constantly evolving, and Java remains a cornerstone of software development. By equipping yourself with the essential skills through a well-designed Java programming course in Boston, you'll be well-positioned to secure a rewarding career in this dynamic field. Here are some additional tips to optimize your learning experience:

Set SMART Goals: Define Specific, Measurable, Achievable, Relevant, and Time-bound goals for your Java learning journey. Setting clear goals will help you stay focused and motivated throughout the course.

Practice Consistently: Regular coding practice is essential for mastering Java syntax, concepts, and problem-solving skills. Dedicate time each day to work on coding exercises, projects, or personal challenges.

Embrace the Community: Don't hesitate to connect with your instructors, classmates, or online Java communities. Ask questions, share your experiences, and learn from others. The Java developer community is generally open and supportive, and this network can be invaluable throughout your career.

Stay Updated: The tech landscape is ever-changing. Make a habit of staying updated on the latest Java trends, frameworks, and libraries by following industry blogs, attending meetups, and participating in online forums.

Join the Thriving Boston Tech Scene

Boston offers a vibrant tech ecosystem with established companies and innovative startups seeking skilled Java developers. A Java programming course in Boston can be your gateway to exciting career opportunities in this dynamic city.

Ready to launch your Java programming journey and become a sought-after developer in Boston? Don't wait any longer! Enroll in our comprehensive Java Programming Course Boston and unlock a world of possibilities in Boston's thriving tech scene.

Our program provides the in-depth curriculum, experienced instructors, and hands-on training you need to master the critical skills sought after by top companies in Boston. Here's what sets our Java Programming Course Boston apart:

Industry-aligned curriculum: Learn the latest Java technologies, object-oriented programming concepts, and frameworks that are in high demand.

Expert instructors: Gain knowledge from seasoned Java professionals with real-world experience who can guide you towards success.

Hands-on Labs and Projects: Apply your learning through practical exercises and projects, simulating real-world development scenarios.

Career Support Services: Get personalized guidance on resume writing, interview preparation, and job search strategies to land your dream Java developer role.

Flexible Learning Options: Choose from full-time, part-time, online, or blended learning formats to fit your schedule and learning style.

Enroll in our Java Programming Course Boston today and take the first step towards a rewarding career in Java development! Visit our website to learn more about our program details, curriculum, and upcoming course schedules.

Invest in your future – enroll now and become a sought-after Java developer in Boston!


Transforming Business Insights: Leading Data Analysis Companies in Bangalore

Introduction

Bangalore, often dubbed the Silicon Valley of India, stands as a dynamic hub for technology companies and startups. Amidst this thriving IT ecosystem, data analysis firms are pivotal in converting raw data into strategic business insights across diverse industries. In this article, we explore the forefront of Data Analysis Companies in Bangalore by showcasing leading companies that drive innovation and reshape business strategies through data-driven decision-making.

Importance of Data Analysis in Bangalore

Bangalore, recognized as a hub for data science and analytics, owes its prominence to a convergence of factors: a deep pool of skilled professionals, robust IT infrastructure, and a supportive startup ecosystem. Today, data analysis is indispensable for businesses seeking a competitive edge, operational efficiency, enhanced customer experience, and sustained growth. Consequently, Bangalore has seen the emergence of numerous data analysis companies offering specialized services spanning predictive analytics and business intelligence solutions.

Key Players in Bangalore's Data Analysis Industry

1. Pioneering Advanced Analytics

At the forefront of advanced analytics solutions in Bangalore, our company boasts a team of seasoned data scientists and domain experts specializing in predictive modeling, machine learning algorithms, and AI-driven analytics. We have successfully implemented data-driven strategies for leading enterprises in diverse sectors such as e-commerce, healthcare, finance, and manufacturing, enabling our clients to optimize processes and unlock new revenue streams.

2. Innovating with Big Data

In the bustling landscape of Bangalore's data analysis industry, one company stands out for its expertise in big data analytics. Specializing in technologies like Hadoop and Spark, the company has carved a niche for itself, empowering businesses to harness large datasets for strategic decision-making and better business outcomes across diverse industry verticals, including retail, telecommunications, and logistics.

3. Driving Business Intelligence Excellence

Amidst Bangalore's dynamic technology landscape, one company has earned a distinguished reputation for its exceptional expertise in business intelligence and data visualization services. By integrating disparate data sources and deploying intuitive dashboards, they empower clients with real-time analytics and interactive reporting tools. This capability enables stakeholders to make informed decisions swiftly, resulting in enhanced operational efficiency and improved performance across departments.

4. Specializing in Healthcare Analytics

Amidst Bangalore's dynamic technology ecosystem, one company has emerged as a trailblazer in healthcare analytics, specializing in tailored solutions for the medical sector. Its advanced analytics platforms draw on electronic health records (EHRs), medical imaging data, and patient demographics to help healthcare providers optimize treatments, reduce costs, and ultimately improve patient outcomes. The impact of its work extends far beyond Bangalore, with clients across India and around the world.

Industry Trends and Challenges

The data analysis industry in Bangalore is dynamic, characterized by continuous innovation and evolving technologies. Several trends are shaping its trajectory:

AI and Machine Learning: Artificial Intelligence (AI) and Machine Learning (ML) are transformative technologies that have revolutionized various industries, including data analysis, healthcare, finance, retail, and more.

IoT Integration: Internet of Things (IoT) integration is a critical aspect of modern technology ecosystems, especially in cities like Bangalore known for its innovative and tech-driven environment.

Data Privacy and Security: Data privacy and security are critical aspects of information management, especially in today's digital age where data breaches and privacy violations are increasingly prevalent.

Demand for Data Talent: The demand for data talent, including data scientists, data analysts, and data engineers, continues to grow rapidly in Bangalore and globally. This demand is driven by the increasing volume and complexity of data generated by organizations across various industries.

Conclusion

The landscape of Data Analysis Companies in Bangalore is a testament to the city's global standing as a technology hub. These companies play a crucial role in driving digital transformation and empowering businesses to harness data as a strategic asset. In Bangalore's dynamic environment of innovation and entrepreneurship, data analysis firms are poised to shape the future of the industry and contribute significantly to economic growth in the region. Key areas of expertise among these companies include advanced analytics, big data processing, business intelligence, and specialized domains such as healthcare analytics. Their capabilities exemplify the transformative impact of data-driven insights, enabling businesses to make informed decisions, optimize operations, and unlock new opportunities.


Data Engineering Course in Hyderabad | AWS Data Engineer Training

Overview of AWS Data Modeling

Data modeling in AWS involves designing the structure of your data to effectively store, manage, and analyse it within the Amazon Web Services (AWS) ecosystem. AWS provides various services and tools that can be used for data modeling, depending on your specific requirements and use cases. Here's an overview of key components and considerations in AWS data modeling:

Understanding Data Requirements: Begin by understanding your data requirements, including the types of data you need to store, the volume of data, the frequency of data updates, and the anticipated usage patterns.

Selecting the Right Data Storage Service: AWS offers a range of data storage services suitable for different data modeling needs, including:

Amazon S3 (Simple Storage Service): A scalable object storage service ideal for storing large volumes of unstructured data such as documents, images, and logs.

Amazon RDS (Relational Database Service): Managed relational databases supporting popular database engines like MySQL, PostgreSQL, Oracle, and SQL Server.

Amazon Redshift: A fully managed data warehousing service optimized for online analytical processing (OLAP) workloads.

Amazon DynamoDB: A fully managed NoSQL database service providing fast and predictable performance with seamless scalability.

Amazon Aurora: A high-performance relational database compatible with MySQL and PostgreSQL, offering features like high availability and automatic scaling.

Schema Design: Depending on the selected data storage service, design the schema to organize and represent your data efficiently. This involves defining tables, indexes, keys, and relationships for relational databases or determining the structure of documents for NoSQL databases.
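To make the relational side of schema design concrete, here is a minimal sketch using Python's built-in sqlite3 module as a local stand-in for a managed engine such as MySQL or PostgreSQL on Amazon RDS. The table names, columns, and sample rows are hypothetical and only illustrate tables, keys, an index, and a relationship.

```python
import sqlite3

# In-memory SQLite stands in for a managed relational engine (assumption for illustration).
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled

conn.executescript("""
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    email       TEXT NOT NULL UNIQUE
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
    order_date  TEXT NOT NULL,
    total_cents INTEGER NOT NULL CHECK (total_cents >= 0)
);
-- Index the foreign key so lookups of a customer's orders avoid a full scan.
CREATE INDEX idx_orders_customer ON orders(customer_id);
""")

# Hypothetical sample rows.
conn.execute("INSERT INTO customers VALUES (1, 'a@example.com')")
conn.execute("INSERT INTO orders VALUES (10, 1, '2024-01-15', 2599)")


def order_totals_by_customer(connection):
    """Join the two tables through the foreign key and sum order totals per customer."""
    return connection.execute(
        "SELECT c.email, SUM(o.total_cents) FROM customers c "
        "JOIN orders o ON o.customer_id = c.customer_id GROUP BY c.email"
    ).fetchall()
```

With foreign keys enabled, inserting an order for a customer that does not exist raises sqlite3.IntegrityError, which is the kind of guarantee a relational schema is meant to provide.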

Data Ingestion and ETL: Plan how data will be ingested into your AWS environment and perform any necessary Extract, Transform, Load (ETL) operations to prepare the data for analysis. AWS provides services like AWS Glue for ETL tasks and AWS Data Pipeline for orchestrating data workflows.
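The extract, transform, and load steps can be sketched locally before moving them to a managed service. The example below is an illustration only: it uses the Python standard library rather than AWS Glue, and the CSV content, column names, and cleaning rules are made up for the sketch.

```python
import csv
import io
import sqlite3

# Hypothetical raw input with stray whitespace, mixed case, and one bad amount.
RAW_CSV = """order_id,amount,country
1, 19.99 ,us
2,not-a-number,de
3,5.00,DE
"""


def extract(text):
    """Extract: parse raw CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: trim whitespace, upper-case country codes, drop rows with bad amounts."""
    cleaned = []
    for row in rows:
        try:
            amount = float(row["amount"].strip())
        except ValueError:
            continue  # skip rows whose amount cannot be parsed
        cleaned.append((int(row["order_id"]), amount, row["country"].strip().upper()))
    return cleaned


def load(rows, connection):
    """Load: write the cleaned rows into a target table."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount REAL, country TEXT)"
    )
    connection.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    connection.commit()


etl_conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), etl_conn)
```

Keeping the three stages as separate functions makes each one easy to test on its own, which carries over to larger pipelines.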

Data Access Control and Security: Implement appropriate access controls and security measures to protect your data. Utilize AWS Identity and Access Management (IAM) for fine-grained access control and encryption mechanisms provided by AWS Key Management Service (KMS) to secure sensitive data.
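As an illustration of fine-grained access control, the sketch below builds an IAM policy document that grants read-only access to a single S3 bucket. The bucket name is a placeholder and the helper function is hypothetical; only the policy structure (Version, Statement, Effect, Action, Resource) follows the IAM JSON policy format.

```python
import json


def read_only_bucket_policy(bucket_name):
    """Build an IAM policy document granting read-only access to one S3 bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Object-level read access applies to keys inside the bucket.
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            },
            {
                # Listing applies to the bucket itself, not to the objects.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket_name}",
            },
        ],
    }


# "example-analytics-bucket" is a placeholder name, not a real resource.
policy_json = json.dumps(read_only_bucket_policy("example-analytics-bucket"), indent=2)
```

Granting only the two actions that are needed, on only the one bucket, is the least-privilege idea in practice.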

Data Processing and Analysis: Leverage AWS services for data processing and analysis tasks, such as:

Amazon EMR (Elastic MapReduce): Managed Hadoop framework for processing large-scale data sets using distributed computing.

Amazon Athena: Serverless query service for analysing data stored in Amazon S3 using standard SQL.

Amazon Redshift Spectrum: Extend Amazon Redshift queries to analyse data stored in Amazon S3 data lakes without loading it into Redshift.

Monitoring and Optimization: Continuously monitor the performance of your data modeling infrastructure and optimize as needed. Utilize Amazon CloudWatch for monitoring and AWS Trusted Advisor for recommendations on cost optimization, performance, and security best practices.

Scalability and Flexibility: Design your data modeling architecture to be scalable and flexible to accommodate future growth and changing requirements. Utilize AWS services like Auto Scaling to automatically adjust resources based on demand.

Compliance and Governance: Ensure compliance with regulatory requirements and industry standards by implementing appropriate governance policies and using AWS services like AWS Config and AWS Organizations for policy enforcement and auditing.

By following these principles and leveraging AWS services effectively, you can create robust data models that enable efficient storage, processing, and analysis of your data in the cloud.

Visualpath is the Leading and Best Institute for AWS Data Engineering Online Training, in Hyderabad. We at AWS Data Engineering Training provide you with the best course at an affordable cost.

Attend Free Demo

Call on - +91-9989971070.

Visit: https://www.visualpath.in/aws-data-engineering-with-data-analytics-training.html


How can you achieve your dream job with AI and Data Science (AI&DS) engineering?

Dream Job with AI and Data Science

Technology has become an integral part of our lives. A quick glance at the technology landscape points us towards artificial intelligence (AI) and its power. From smart assistants like Siri or Alexa, customer care chatbots, robotic vacuum cleaners and high-tech home appliances to self-driving vehicles and facial recognition, AI-led technologies are rapidly becoming life’s mainstays. This, along with the rising demand for high-performance technologies in various industries, is a positive sign for job seekers of top engineering colleges in Jaipur looking to make a career in AI.

We are already seeing AI becoming an important aspect of automated, smart business ecosystems and enabling smart solutions. Innovations in AI are empowering industry verticals, such as healthcare, automobile, finance, manufacturing and retail. In healthcare, AI is being trained to mimic human cognition to undertake the critical tasks of diagnosis, assistance for surgeries and beyond. Similarly, the retail sector is deploying AI-led systems for enhancing customer journey with solutions like chatbots as well as to improve demand forecasting, pricing decisions and product placement. With such applications, AI is bringing technology to the core of all businesses. From life-saving equipment to self-driving cars, AI will soon be in almost all applications and devices.

Career Opportunities

Students of the best engineering colleges in Jaipur can explore several roles, as follows:

Computer vision - Computer vision can open doors to some of the most exciting opportunities for curious technology students and professionals. It is one of the many recent innovations driving AI and machine learning (ML) job trends. Computer vision helps tech teams, including those at private engineering colleges in Jaipur, gather insights from images, video and other visual formats. The data or patterns are then analysed to train AI-led systems or fed into systems that arrive at smart decisions. This is an emerging field for tech enthusiasts to explore. Freshers can also explore a career as a computer vision professional with the right training.

AI and ML developer/engineer - An AI and ML engineer/developer is responsible for performing statistical analysis, running tests and implementing AI designs. They also develop deep learning systems, manage ML programmes and implement ML algorithms, among other things. For a career as an AI and ML developer, an individual needs excellent programming skills in Python, Scala and Java, and experience with platforms and frameworks such as Azure ML Studio, Apache Hadoop, and Amazon ML.

AI analyst/specialist - The role of an AI analyst or specialist is similar to that of an AI engineer, and is open to graduates of the best BTech colleges in Jaipur. The key responsibility is to enhance the services delivered by an industry, using data analysis skills to study the trends and patterns of certain datasets. With increasing use of AI systems across sectors, AI analysts and specialists are becoming very important. They must have a good background in programming, system analysis and computational statistics. A bachelor’s or equivalent degree can help you land an entry-level position, but a master’s or equivalent is a must for core AI analyst positions.

Data scientist - The role involves identifying valuable data streams and sources, working along with data engineers of best engineering colleges in Jaipur for the automation of data collection processes, dealing with big data and analysing massive amounts of data to learn trends and patterns for developing predictive ML models. A data scientist is also responsible for coming up with solutions and strategies for decision-makers with the help of visualisation tools and techniques. SQL, Python, Scala, SAS, SSAS and R are the most useful tools for a data scientist. They are required to work on frameworks such as Amazon ML, Azure ML Studio, Spark MLlib and so on.

Key Skills

Now is the perfect time to explore or begin a career in AI. With the right learning and willingness to explore, you are sure to make a promising career out of it. However, to build a career in AI, you must acquire the relevant technical knowledge and skill sets. First, experience with calculus and linear algebra is crucial. Second, you should have some knowledge of and experience in at least one programming language such as Python, C/C++ or MATLAB. Beyond these, it is beneficial to have additional skills and knowledge, including industry knowledge and awareness, problem-solving skills, deep knowledge of or expertise in applied mathematics and algorithms, knowledge of AI and ML systems, and management and leadership skills.

First Steps

If you are not a technology professional or from the industry, the first step is to research and gather more knowledge. Students of engineering colleges in Jaipur can read the many articles available online, talk to AI professionals, research technology colleges and programmes, and work out what interests them.

Although an undergraduate degree in computer science is a good starting point, a master’s degree in AI can provide first-hand experience and knowledge that can help you secure a position and give you an advantage over other candidates.

There are several undergraduate and graduate courses specific to data analytics and AI that you can choose at technology institutes and colleges across the nation. These courses not only offer great insights into the industry and prepare you with the right skills, they also help you figure out your area of interest.

Career opportunities in AI and related sectors are very promising. It is also an interesting field to explore careers across industry sectors and seek global exposure. Industry predictions suggest that the industry will continue to grow explosively with rising demand for technology solutions in industries. All you need to do is find out what interests you and to keep learning.


Unveiling Java's Multifaceted Utility: A Deep Dive into Its Applications

In software development, Java stands out as a versatile and ubiquitous programming language with many applications across diverse industries. From empowering enterprise-grade solutions to driving innovation in mobile app development and big data analytics, Java's flexibility and robustness have solidified its status as a cornerstone of modern technology.

Let's embark on a journey to explore the multifaceted utility of Java and its impact across various domains.

Powering Enterprise Solutions

Java is the backbone for developing robust and scalable enterprise applications, facilitating critical operations such as CRM, ERP, and HRM systems. Its resilience and platform independence make it a preferred choice for organizations seeking to build mission-critical applications capable of seamlessly handling extensive data and transactions.

Shaping the Web Development Landscape

Java is pivotal in web development, enabling dynamic and interactive web applications. With frameworks like Spring and Hibernate, developers can streamline the development process and build feature-rich, scalable web solutions. Java's compatibility with diverse web servers and databases further enhances its appeal in web development.

Driving Innovation in Mobile App Development

As the foundation for Android app development, Java remains a dominant force in the mobile app ecosystem. Supported by Android Studio, developers leverage Java's capabilities to craft high-performance and user-friendly mobile applications for a global audience, contributing to the ever-evolving landscape of mobile technology.

Enabling Robust Desktop Solutions

Java's cross-platform compatibility and extensive library support make it an ideal choice for developing desktop applications. With frameworks like Java Swing and JavaFX, developers can create intuitive graphical user interfaces (GUIs) for desktop software, ranging from simple utilities to complex enterprise-grade solutions.

Revolutionizing Big Data Analytics

In big data analytics, Java is a cornerstone for various frameworks and tools to process and analyze massive datasets. Platforms like Apache Hadoop, Apache Spark, and Apache Flink leverage Java's capabilities to unlock valuable insights from vast amounts of data, empowering organizations to make data-driven decisions.

Fostering Innovation in Scientific Research

Java's versatility extends to scientific computing and research, where it is utilized to develop simulations, modeling tools, and data analysis software. Its performance and extensive library support make it an invaluable asset in bioinformatics, physics, and engineering, driving innovation and advancements in scientific research.

Empowering Embedded Systems

With lightweight runtime environments built on the Java Virtual Machine (JVM), Java finds applications in embedded systems development. From IoT devices to industrial automation systems, Java's flexibility and reliability make it a preferred choice for building embedded solutions that require seamless performance across diverse hardware platforms.

In summary, Java's multifaceted utility and robustness make it an indispensable tool in the arsenal of modern software developers. Whether powering enterprise solutions, driving innovation in mobile app development, or revolutionizing big data analytics, Java continues to shape the technological landscape and drive advancements across various industries. As a versatile and enduring programming language, Java remains at the forefront of innovation, paving the way for a future powered by cutting-edge software solutions.


"Apache Spark: The Leading Big Data Platform with Fast, Flexible, Developer-Friendly Features Used by Major Tech Giants and Government Agencies Worldwide."

What is Apache Spark? The Big Data Platform that Crushed Hadoop

Apache Spark is a powerful data processing framework designed for large-scale SQL, batch processing, stream processing, and machine learning tasks. With its fast, flexible, and developer-friendly nature, Spark has become the leading platform in the world of big data. In this article, we will explore the key features and real-world applications of Apache Spark, as well as its significance in the digital age.

Apache Spark defined

Apache Spark is a data processing framework that can quickly perform processing tasks on very large data sets. It can distribute data processing tasks across multiple computers, either on its own or in conjunction with other distributed computing tools. This capability is crucial in the realm of big data and machine learning, where massive computing power is necessary to analyze and process vast amounts of data. Spark eases the programming burden of these tasks by offering an easy-to-use API that abstracts away much of the complexities of distributed computing and big data processing.

What is Spark in big data

The term "big data" refers to the rapid growth of various types of data: structured data in database tables, unstructured data in business documents and emails, semi-structured data in system log files and web pages, and more. Unlike traditional analytics, which focused solely on structured data within data warehouses, modern analytics encompasses insights derived from diverse data sources and revolves around the concept of a data lake. Apache Spark was specifically designed to address the challenges posed by this new paradigm.

Originally developed at U.C. Berkeley in 2009, Apache Spark has become a prominent distributed processing framework for big data. Flexibility lies at the core of Spark's appeal, as it can be deployed in various ways and supports multiple programming languages such as Java, Scala, Python, and R. Furthermore, Spark provides extensive support for SQL, streaming data, machine learning, and graph processing. Its widespread adoption by major companies and organizations, including Apple, IBM, and Microsoft, highlights its significance in the big data landscape.

Spark RDD

The Resilient Distributed Dataset (RDD) forms the foundation of Apache Spark. An RDD is an immutable collection of objects that can be split across a computing cluster. Spark performs operations on RDDs in a parallel batch process, enabling fast and scalable parallel processing. The RDD concept allows Spark to transform a user's data processing commands into a Directed Acyclic Graph (DAG), which serves as the scheduling layer determining the tasks, nodes, and sequence of execution.

Apache Spark can create RDDs from various data sources, including text files, SQL databases, NoSQL stores like Cassandra and MongoDB, Amazon S3 buckets, and more. Moreover, Spark's core API provides built-in support for joining data sets, filtering, sampling, and aggregation, offering developers powerful data manipulation capabilities.
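The ideas behind RDDs (immutable partitions, transformations that are only recorded, and actions that trigger execution) can be sketched in plain Python. This is a toy model, not Spark's API: the ToyRDD class and its method names are hypothetical, and the recorded lineage here is a simple chain, the most basic form of the DAG Spark builds.

```python
import functools


class ToyRDD:
    """Toy stand-in for an RDD: immutable partitions plus a recorded chain of steps."""

    def __init__(self, partitions, lineage=()):
        self._partitions = tuple(tuple(p) for p in partitions)
        self._lineage = tuple(lineage)  # pending (kind, function) steps, not yet run

    def map(self, fn):
        # A transformation returns a new object; nothing is computed yet.
        return ToyRDD(self._partitions, self._lineage + (("map", fn),))

    def filter(self, fn):
        return ToyRDD(self._partitions, self._lineage + (("filter", fn),))

    def _compute_partition(self, partition):
        # Replay the recorded steps, in order, on a single partition.
        items = list(partition)
        for kind, fn in self._lineage:
            if kind == "map":
                items = [fn(x) for x in items]
            else:
                items = [x for x in items if fn(x)]
        return items

    def collect(self):
        # An action triggers execution on every partition and gathers the results.
        return [x for p in self._partitions for x in self._compute_partition(p)]

    def reduce(self, fn):
        # An action that folds all computed elements into one value.
        return functools.reduce(fn, self.collect())


numbers = ToyRDD([[1, 2, 3], [4, 5, 6]])  # two partitions
even_squares = numbers.map(lambda x: x * x).filter(lambda x: x % 2 == 0)
```

Calling even_squares.collect() is what finally runs the map and filter steps; the original numbers object is left unchanged, mirroring the immutability described above.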

Spark SQL

Spark SQL has emerged as a vital component of the Apache Spark project, providing a high-level API for processing structured data. Spark SQL adopts a dataframe approach inspired by R and Python's Pandas library, making it accessible to both developers and analysts. Alongside standard SQL support, Spark SQL offers a wide range of data access methods, including JSON, HDFS, Apache Hive, JDBC, Apache ORC, and Apache Parquet. Additional data stores, such as Apache Cassandra and MongoDB, can be integrated using separate connectors from the Spark Packages ecosystem.

Spark SQL utilizes Catalyst, Spark's query optimizer, to optimize data locality and computation. Since Spark 2.x, Spark SQL's dataframe and dataset interfaces have become the recommended approach for development, promoting a more efficient and type-safe method for data processing. While the RDD interface remains available, it is typically used when lower-level control or specialized performance optimizations are required.

Spark MLlib and MLflow

Apache Spark includes libraries for machine learning and graph analysis at scale. MLlib offers a framework for building machine learning pipelines, facilitating the implementation of feature extraction, selection, and transformations on structured datasets. The library also features distributed implementations of clustering and classification algorithms, such as k-means clustering and random forests.

MLflow, although not an official part of Apache Spark, is an open-source platform for managing the machine learning lifecycle. The integration of MLflow with Apache Spark enables features such as experiment tracking, model registries, packaging, and user-defined functions (UDFs) for easy inference at scale.

Structured Streaming

Structured Streaming provides a high-level API for creating infinite streaming dataframes and datasets within Apache Spark. It supersedes the legacy Spark Streaming component, addressing pain points encountered by developers in event-time aggregations and late message delivery. With Structured Streaming, all queries go through Spark's Catalyst query optimizer and can be run interactively, allowing users to perform SQL queries against live streaming data. The API also supports watermarking, windowing techniques, and the ability to treat streams as tables and vice versa.
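Watermarking and windowing are easier to picture with a small simulation. The following sketch is plain Python rather than the Structured Streaming API, and it simplifies the rules: events arrive one at a time, the watermark trails the largest event time seen so far by a fixed delay, and any event older than the watermark is dropped. The function name and sample events are hypothetical.

```python
from collections import defaultdict


def windowed_counts(events, window_seconds, watermark_delay):
    """Count events per tumbling event-time window, dropping events behind the watermark.

    events: iterable of (event_time_seconds, key) pairs in arrival order.
    Returns (counts keyed by (window_start, key), list of dropped late events).
    """
    counts = defaultdict(int)
    max_event_time = float("-inf")
    dropped = []
    for event_time, key in events:
        watermark = max_event_time - watermark_delay
        if event_time < watermark:
            dropped.append((event_time, key))  # too late: older than the watermark
            continue
        max_event_time = max(max_event_time, event_time)
        # Tumbling windows: each event belongs to exactly one fixed-size window.
        window_start = (event_time // window_seconds) * window_seconds
        counts[(window_start, key)] += 1
    return dict(counts), dropped


# Hypothetical arrivals: the events at times 3 and 4 arrive out of order.
arrivals = [(1, "a"), (12, "a"), (3, "a"), (25, "b"), (4, "a")]
counts, late = windowed_counts(arrivals, window_seconds=10, watermark_delay=10)
```

In this run the event at time 3 is late but still within the allowed delay, so it is counted in the first window, while the event at time 4 arrives after the watermark has moved to 15 and is discarded.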

Delta Lake

Delta Lake is a separate project from Apache Spark but has become essential in the Spark ecosystem. Delta Lake augments data lakes with features such as ACID transactions, unified querying semantics for batch and stream processing, schema enforcement, full data audit history, and scalability for exabytes of data. Its adoption has contributed to the rise of the Lakehouse Architecture, eliminating the need for a separate data warehouse for business intelligence purposes.

Pandas API on Spark

The Pandas library is widely used for data manipulation and analysis in Python. Apache Spark 3.2 introduced a new API that allows a significant portion of the Pandas API to be used transparently with Spark. This compatibility enables data scientists to leverage Spark's distributed execution capabilities while benefiting from the familiar Pandas interface. Approximately 80% of the Pandas API is currently covered, with ongoing efforts to increase coverage in future releases.

Running Apache Spark

An Apache Spark application consists of two main components: a driver and executors. The driver converts the user's code into tasks that can be distributed across worker nodes, while the executors run these tasks on the worker nodes. A cluster manager mediates communication between the driver and executors. Apache Spark can run in a stand-alone cluster mode, but is more commonly used with resource or cluster management systems such as Hadoop YARN or Kubernetes. Managed solutions for Apache Spark are also available on major cloud providers, including Amazon EMR, Azure HDInsight, and Google Cloud Dataproc.
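The driver and executor split can be mimicked on a single machine. In this sketch, which is an analogy rather than Spark code, a thread pool plays the role of the executors and the function names are hypothetical: the driver side splits the data into tasks, the workers compute partial results, and the driver combines them.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_tasks(data, num_partitions):
    """Driver side: split the input into roughly equal partitions, one task each."""
    if not data:
        return []
    size = -(-len(data) // num_partitions)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def run_task(partition):
    """Executor side: compute a partial result (sum of squares) for one partition."""
    return sum(x * x for x in partition)


def run_job(data, num_partitions=4, num_executors=2):
    """Driver side: schedule tasks on a pool of workers and combine partial results."""
    tasks = split_into_tasks(data, num_partitions)
    with ThreadPoolExecutor(max_workers=num_executors) as pool:
        partials = list(pool.map(run_task, tasks))
    return sum(partials)
```

For example, run_job(list(range(10))) splits the ten numbers into four tasks and adds up the partial sums of squares. A real cluster manager additionally decides which machine each task runs on, which this sketch leaves out.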

Databricks Lakehouse Platform

Databricks, the company founded by the original creators of Apache Spark, offers a managed cloud service that provides Apache Spark clusters, streaming support, integrated notebook development, and optimized I/O performance. The Databricks Lakehouse Platform, available on multiple cloud providers, has become the de facto way many users interact with Apache Spark.

Apache Spark Tutorials

If you're interested in learning Apache Spark, we recommend starting with the Databricks learning portal, which offers a comprehensive introduction to Apache Spark (with a slight bias towards the Databricks Platform). For a more in-depth exploration of Apache Spark's features, the Spark Workshop is a great resource. Additionally, books such as "Spark: The Definitive Guide" and "High-Performance Spark" provide detailed insights into Apache Spark's capabilities and best practices for data processing at scale.

Conclusion

Apache Spark has revolutionized the way large-scale data processing and analytics are performed. With its fast and developer-friendly nature, Spark has surpassed its predecessor, Hadoop, and become the leading big data platform. Its extensive features, including Spark SQL, MLlib, Structured Streaming, and Delta Lake, make it a powerful tool for processing complex data sets and building machine learning models. Whether deployed in a stand-alone cluster or as part of a managed cloud service like Databricks, Apache Spark offers unparalleled scalability and performance. As companies increasingly rely on big data for decision-making, mastering Apache Spark is essential for businesses seeking to leverage their data assets effectively.

Sponsored by RoamNook

This article was brought to you by RoamNook, an innovative technology company specializing in IT consultation, custom software development, and digital marketing. RoamNook's main goal is to fuel digital growth by providing cutting-edge solutions for businesses. Whether you need assistance with data processing, machine learning, or building scalable applications, RoamNook has the expertise to drive your digital transformation. Visit https://www.roamnook.com to learn more about how RoamNook can help your organization thrive in the digital age.


Unlocking the Power of Big Data: Industry Software Training on Spark, PySpark AWS, Spark Applications, Spark Ecosystem, Hadoop, and Mastering PySpark

In the era of big data, organizations are constantly seeking ways to extract valuable insights from vast amounts of information. This has led to the emergence of powerful tools and technologies that enable professionals to effectively process and analyze big data. In this blog post, we will explore the significance of industry software training on Spark, PySpark AWS, Spark applications, Spark Ecosystem, Hadoop, and mastering PySpark. These technologies have revolutionized the field of big data analytics and provide professionals with a competitive edge in the industry.

Spark is an open-source distributed computing system that allows for efficient and scalable data processing. By training on Spark, professionals gain the ability to handle complex analytics tasks with ease, thanks to its in-memory processing capabilities. PySpark, the Python API for Spark, offers a user-friendly interface for data manipulation, making it accessible to a wider range of professionals. Mastering Spark and PySpark provides professionals with the tools to tackle big data challenges and deliver valuable insights.

AWS (Amazon Web Services) is a leading cloud computing platform that seamlessly integrates with PySpark. By training on PySpark AWS, professionals can harness the benefits of cloud computing, such as scalability, flexibility, and cost-effectiveness. AWS offers a range of services that complement PySpark, including Amazon EMR (Elastic MapReduce), which simplifies the setup and management of Spark clusters. With AWS, professionals can leverage the elasticity of the cloud to scale resources based on workload demands, leading to optimized performance and reduced costs.

Spark provides a versatile platform for building a wide range of applications, including batch processing, interactive queries, streaming, and machine learning. By training on Spark applications, professionals can design and implement data processing pipelines to transform raw data into actionable insights. Additionally, Spark seamlessly integrates with other popular big data tools and frameworks, forming a robust ecosystem. This integration with tools like Hadoop, Hive, and Kafka allows professionals to leverage existing data infrastructure and maximize the capabilities of Spark.

PySpark simplifies data manipulation and analysis with its expressive Python interface. By mastering PySpark, professionals gain the ability to clean, transform, and analyze large datasets using Python's rich ecosystem of libraries. Furthermore, PySpark provides extensive support for machine learning, making it a powerful tool for building and deploying scalable models. By training on mastering PySpark, professionals can unlock the full potential of machine learning algorithms and apply them to real-world business problems.

Industry software training on Spark, PySpark AWS, Spark applications, Spark Ecosystem, Hadoop, and mastering PySpark offers professionals a unique opportunity to excel in the field of big data analytics. By acquiring these skills, individuals can effectively process and analyze massive datasets, derive valuable insights, and drive data-driven decision-making in their organizations. The demand for professionals with expertise in these technologies is continuously growing, and career opportunities in data engineering, data science, and big data analytics await those who invest in mastering these industry-leading tools. So, whether you are an aspiring data professional or looking to enhance your existing skill set, consider embarking on this IT training journey to unlock the full potential of big data analytics.


"Harnessing the Power of Data: Dive into TechMindz's Big Data Course"

In today's digital age, data is king. Organizations across industries are collecting vast amounts of data, but the real challenge lies in analyzing and deriving meaningful insights from this data. Big Data technologies have emerged as the solution to this challenge, enabling organizations to process, store, and analyze massive datasets quickly and efficiently. TechMindz's Big Data course is designed to help you master the tools and techniques needed to work with Big Data and unlock its full potential. Here's why you should consider enrolling in this transformative course:

Why Big Data?

Big Data technologies are revolutionizing the way organizations operate by enabling them to make data-driven decisions. By analyzing large datasets, organizations can gain valuable insights that can help them improve operations, enhance customer experiences, and drive innovation. With Big Data skills, you can become a valuable asset to any organization looking to leverage data for strategic advantage.

What You Will Learn

TechMindz's Big Data course covers a comprehensive range of topics to ensure you have a thorough understanding of Big Data technologies. Some of the key topics covered in the course include:

Introduction to Big Data and its importance

Understanding the Big Data ecosystem, including Hadoop, Spark, and Kafka

Hands-on experience with Big Data tools and technologies

Data processing and analysis using MapReduce and Spark

Data storage and management using HDFS and NoSQL databases

Real-world case studies and projects
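To give a flavour of the MapReduce topic listed above, here is a minimal word-count sketch in plain Python. It runs in a single process and is not Hadoop code; the function names are illustrative, and the three phases (map, shuffle, reduce) follow the general MapReduce pattern.

```python
from collections import defaultdict


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle_phase(pairs):
    """Shuffle: group all emitted values by key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Reduce: combine the values of each key into a single count."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(documents):
    """Run the three phases in sequence over a list of documents."""
    pairs = [pair for doc in documents for pair in map_phase(doc)]
    return reduce_phase(shuffle_phase(pairs))
```

On a real cluster the map and reduce phases run in parallel on many machines and the shuffle moves data between them; the logic of each phase stays the same.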

Course Format

The Big Data course at TechMindz is designed to be interactive and engaging. The course includes video lectures, hands-on exercises, and quizzes to test your knowledge. You'll also have access to expert instructors who can provide guidance and support throughout the course. Whether you're a beginner or an experienced professional, the course is suitable for all skill levels.

Career Opportunities

Big Data is one of the fastest-growing fields in technology, with high demand for skilled professionals. By completing TechMindz's Big Data course, you'll be well-equipped to pursue a career as a Big Data engineer, data analyst, or data scientist. The skills you'll gain in this course are highly sought after by organizations around the world, making it a valuable investment in your future.

Conclusion

If you're looking to take your career to the next level and become a data expert, TechMindz's Big Data course is the perfect choice for you. Enroll now and unlock the power of Big Data!

TechMindz, Infopark, Kochi, Kerala

https://www.techmindz.com/big-data-analytics-training/


The Role of Open Source Data Analytics Software in Empowering Organizations

In the data-driven landscape of the modern business world, the ability to analyze vast quantities of information has become indispensable. Data analytics software stands at the forefront of this revolution, offering tools that transform raw data into actionable insights. Among these, open source data analytics software has emerged as a powerful force, democratizing access to advanced analytics capabilities and empowering organizations of all sizes. The adoption of open source solutions such as R, Python, and Apache Hadoop is reshaping the way businesses approach data analysis, offering a blend of accessibility, flexibility, and community-supported innovation.

Democratizing Data Analytics

One of the most significant contributions of open source data analytics software is its role in democratizing data analytics. Traditional proprietary analytics tools often come with high licensing fees, putting them out of reach for small to medium-sized enterprises (SMEs) and startups. Open source software, on the other hand, is freely available, enabling organizations with limited budgets to leverage sophisticated analytics capabilities. This accessibility fosters a more level playing field where SMEs can compete with larger corporations, driving innovation and efficiency across industries.

Flexibility and Customization

Open source data analytics software offers unparalleled flexibility, allowing organizations to tailor tools to their specific needs. Unlike closed-source platforms, which may limit customization or require expensive add-ons for additional functionality, open source solutions enable users to modify and extend the software. This adaptability is crucial for businesses operating in niche markets or with unique data processing requirements. The ability to customize analytics tools not only enhances operational efficiency but also allows for the development of bespoke solutions that can provide a competitive edge.

Fostering Innovation through Community Collaboration

The open source model thrives on community collaboration, drawing on the collective expertise of developers, data scientists, and industry experts worldwide. This collaborative environment accelerates the pace of innovation, with continuous improvements, new features, and bug fixes being contributed regularly. Users benefit from a rapidly evolving ecosystem of tools that are often at the cutting edge of data analytics technology. Moreover, the open exchange of ideas and solutions within the community fosters learning and skill development, enabling users to stay abreast of the latest trends and techniques in data science.

Challenges and Considerations

Despite its many advantages, the adoption of open source data analytics software is not without challenges. Organizations may face issues related to software support, as community-based assistance varies in responsiveness and expertise. Ensuring data security and compliance with industry regulations when using open source tools can also be a concern, necessitating additional measures to protect sensitive information. Furthermore, successfully integrating open source analytics into existing IT infrastructures requires a certain level of technical proficiency, highlighting the importance of investing in training and development for staff.

The Future of Open Source in Data Analytics

Looking forward, the role of open source software in data analytics is poised to grow even more significant. As businesses increasingly recognize the value of data-driven decision-making, the demand for accessible, flexible analytics tools will continue to rise. The open source community's commitment to innovation and collaboration suggests that these platforms will remain at the forefront of technological advancements, offering powerful solutions for predictive analytics, machine learning, and beyond.

Moreover, the trend towards hybrid models that combine open source flexibility with the support and security features of proprietary software indicates a promising direction for the future of data analytics. Such models can provide the best of both worlds, enabling organizations to leverage the strengths of open source while mitigating its limitations.

Conclusion

Open source data analytics software has fundamentally transformed the landscape of business intelligence, offering tools that empower organizations to harness the power of data. By providing free access to sophisticated analytics capabilities, fostering a culture of collaboration and innovation, and offering unparalleled flexibility, open source solutions are enabling businesses to drive efficiency, innovation, and competitive advantage. As the data analytics field continues to evolve, open source software will undoubtedly play a crucial role in shaping its future, democratizing access to information and insights that fuel organizational success.


Benefits of Learning Java in 2024

In the fast-paced world of technology, Java continues to stand out as a prominent programming language with numerous benefits for both beginners and experienced developers. As we navigate through 2024, the importance of learning Java remains significant, offering a range of advantages that contribute to personal growth, career opportunities, and the development of innovative software solutions.

Versatility and Platform Independence:

Java's write-once-run-anywhere (WORA) principle allows developers to compile code once and run it on any platform that provides a Java Virtual Machine (JVM), making it highly versatile. This platform independence extends to various devices, including mobile phones, tablets, desktops, servers, and embedded systems, providing a broad scope for application development.

Robustness and Reliability:

Java's robust architecture and strong memory management make it a reliable choice for building complex and scalable applications. Its automatic garbage collection reclaims memory that is no longer referenced, reducing the risk of memory leaks and improving overall application stability.

Wide Range of Applications:

Java is used extensively in diverse domains such as web development, mobile app development (Android apps have traditionally been built in Java, and many still are, alongside Kotlin), enterprise solutions, scientific computing, big data technologies (Hadoop, Spark), Internet of Things (IoT), and more. Learning Java opens doors to a multitude of career paths and project opportunities across various industries.

Community Support and Resources:

Java benefits from a vast and active community of developers, educators, and enthusiasts. This community support translates into a wealth of resources, including documentation, tutorials, forums, libraries, and frameworks like Spring, Hibernate, and Apache Struts, which expedite development and enhance productivity.

High Demand and Job Opportunities:

Java consistently ranks among the top programming languages in demand by employers. Acquiring Java skills increases employability and opens doors to lucrative job opportunities in software development, application architecture, cloud computing, cybersecurity, data analysis, and more. Moreover, Java developers often enjoy competitive salaries and career growth prospects.

Scalability and Performance:

Java's scalability is evident in its ability to handle large-scale enterprise applications with ease. By leveraging features like multi-threading, concurrency utilities, and distributed computing frameworks, Java applications can achieve optimal performance and scalability, catering to growing user demands.

Compatibility with Modern Technologies:

Java remains relevant in the era of modern technologies such as microservices, containerization (Docker, Kubernetes), cloud computing (AWS, Azure, Google Cloud), and DevOps practices. Integration with these technologies is seamless, allowing Java developers to stay at the forefront of software development trends.

Security and Stability:

Java's emphasis on security features like sandboxing, bytecode verification, and encryption mechanisms makes it a preferred choice for developing secure applications. Its mature ecosystem and regular updates ensure stability and address vulnerabilities, enhancing overall software security.

In conclusion, learning Java in 2024 offers a plethora of benefits ranging from versatility and reliability to high demand in the job market and compatibility with modern technologies. Whether you're a novice programmer or an experienced developer, mastering Java opens doors to a world of opportunities, fosters continuous learning, and empowers you to create robust, scalable, and innovative software solutions across various domains.


Mastering Data Science: A Guide to Programming Languages

In the dynamic field of data science, mastering programming languages is essential for extracting insights from vast amounts of data. These languages serve as the foundation for data manipulation, analysis, and visualization, enabling data scientists to uncover valuable insights and make data-driven decisions. In this guide, we'll explore the key programming languages for data scientists, highlighting their features, use cases, and importance in mastering the art of data science.

Python:

Python stands out as one of the most popular programming languages for data scientists due to its simplicity, versatility, and robust ecosystem of libraries and frameworks. With libraries such as NumPy, Pandas, and Matplotlib, Python facilitates data manipulation, analysis, and visualization tasks with ease. Its intuitive syntax and readability make it accessible to beginners, while its libraries, many of which rely on optimized native code for heavy computation, keep it a favorite among seasoned professionals. Python's extensive support for machine learning and deep learning libraries such as scikit-learn and TensorFlow further solidifies its position as a go-to language for data scientists.
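As a small illustration of the kind of data manipulation described above, the sketch below groups records by a key and computes a per-group average. It deliberately uses only Python's standard library so that it runs without any installation. In practice, a Pandas group-by operation would usually express the same step more compactly. The records and field names ("region", "sales") are made up for the example.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical sales records; a real analysis would load these from a file or database.
records = [
    {"region": "north", "sales": 120.0},
    {"region": "south", "sales": 80.0},
    {"region": "north", "sales": 100.0},
    {"region": "south", "sales": 90.0},
]

# Group the sales figures by region.
sales_by_region = defaultdict(list)
for row in records:
    sales_by_region[row["region"]].append(row["sales"])

# Summarize each group with its mean.
average_sales = {region: mean(values) for region, values in sales_by_region.items()}
print(average_sales)
```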

R:

R is another widely used programming language in the field of data science, renowned for its powerful statistical analysis capabilities and extensive collection of packages. Developed specifically for statistical computing and graphics, R excels in data exploration, visualization, and modeling tasks. Its rich ecosystem of packages, including ggplot2, dplyr, and caret, provides data scientists with tools for data manipulation, visualization, and machine learning. R's interactive environment and built-in support for statistical analysis make it a preferred choice for researchers, statisticians, and analysts working with complex datasets.

SQL:

Structured Query Language (SQL) is indispensable for data scientists working with relational databases and large-scale datasets. SQL enables data scientists to query databases, manipulate data, and perform complex analyses with ease. Its declarative syntax and powerful querying capabilities allow for efficient data retrieval, aggregation, and transformation. Data scientists use SQL to extract insights from structured datasets, perform data cleaning and preprocessing tasks, and prepare the data behind reports and visualizations for stakeholders. While SQL is a query language rather than a general-purpose programming language, its importance in data science cannot be overstated, particularly in industries where relational databases are prevalent.
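The retrieval, aggregation, and filtering steps described above can be sketched with a single declarative query. The example below runs the query through Python's built-in sqlite3 module against an in-memory database. The table name (orders), its columns, and the sample rows are hypothetical and exist only to make the query runnable.

```python
import sqlite3

# In-memory database, so the example leaves nothing on disk.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders (customer, amount) VALUES (?, ?)",
    [("alice", 30.0), ("bob", 45.5), ("alice", 20.0), ("carol", 10.0)],
)

# Declarative query: say what result is wanted, not how to compute it.
query = """
    SELECT customer, COUNT(*) AS order_count, SUM(amount) AS total_spent
    FROM orders
    GROUP BY customer
    HAVING SUM(amount) > 15
    ORDER BY total_spent DESC
"""
rows = conn.execute(query).fetchall()
conn.close()

for customer, order_count, total_spent in rows:
    print(customer, order_count, total_spent)
```

GROUP BY performs the aggregation, HAVING filters on the aggregated value, and ORDER BY sorts the result. The database engine decides how to execute those steps.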

Java:

Java is a versatile programming language widely used in enterprise applications, including data-intensive systems and big data processing frameworks. While not as popular among data scientists as Python or R, Java's scalability, performance, and robustness make it well-suited for building and deploying data-intensive applications. Java is closely tied to big data technologies: Apache Hadoop is written largely in Java, and Apache Spark runs on the JVM and offers a Java API for distributed data processing and analysis. Data scientists proficient in Java can leverage its capabilities to build scalable data pipelines, implement distributed algorithms, and deploy machine learning models in production environments.

Julia:

Julia is a high-level programming language designed for numerical and scientific computing, with a focus on performance and ease of use. Julia combines the flexibility of dynamic languages like Python with the speed of statically compiled languages like C or Fortran, making it well-suited for computationally intensive tasks in data science. Julia's syntax is similar to MATLAB and Python, making it accessible to users familiar with these languages. Data scientists use Julia for tasks such as numerical simulations, optimization, and parallel computing, where performance and scalability are critical.

Best Practices for Mastering Programming Languages in Data Science:

Choose the right tool for the job: Understand the strengths and weaknesses of each programming language and select the most appropriate language for your specific data science tasks and requirements.