#AUDIO:

Link

Paul McGann takes on the Daleks and the Weeping Angels in two brand-new box sets of explosive full-cast audio drama, due for release in winter 2022 from Big Finish Productions.

A New Start for the Eighth Doctor

17 June 2022. Tags:

Doctor Who News

Doctor Who - The Eighth Doctor Adventures


The Eighth Doctor Adventures enters a new era of standalone stories, landing later this year. Following a decade of continuous adventures, the two new box sets, What Lies Inside? and Connections, will be released in November and December respectively.

Joining Paul McGann in the TARDIS is the brilliant Nicola Walker (The Split, Unforgotten) as Liv Chenka and Hattie Morahan (Enola Holmes, Beauty and the Beast) as Helen Sinclair.

Doctor Who – The Eighth Doctor Adventures: What Lies Inside? and Connections are each available to pre-order as collector’s edition CD box sets (+ download for just £19.99 per title) or a digital download only (for just £16.99 per title) exclusively here.

The two exciting stories in What Lies Inside? are:

Paradox of the Daleks by John Dorney

The Dalby Spook by Lauren Mooney & Stewart Pringle

The three action-packed adventures in Connections are:

Here Lies Drax by John Dorney

The Love Vampires by James Kettle

Albie's Angels by Roy Gill

Producer David Richardson said: “It’s a new start for the Eighth Doctor. After 10 years of long form interconnected storytelling, the range begins again with standalone stories. Each box set can be enjoyed in isolation.

“It begins some time after the ending of Stranded (and at the same time some time before it - if you’ve heard that final episode, you will understand) and there are battles to fight, people to save and enemies to defeat. Some will be familiar yet many will be new. There are so many stories yet to tell in the Eighth Doctor range.”

Oh thank the gays! They’re still gonna give us Liv + Helen + Eight!

#Liv my Love#Liv Chenka#Helen Sinclair#Eighth Doctor#Nicola Walker#Hattie Morahan#Paul McGann#Doctor Who#Big Finish#Audio Drama


Text

Mamba Explained

New Post has been published on https://thedigitalinsider.com/mamba-explained/


The State Space Model taking on Transformers

Right now, AI is eating the world.

And by AI, I mean Transformers. Practically all the big breakthroughs in AI over the last few years are due to Transformers.

Mamba, however, is one of an alternative class of models called State Space Models (SSMs). Importantly, for the first time, Mamba promises performance similar to the Transformer's (and, crucially, similar scaling laws) whilst being feasible at long sequence lengths (say 1 million tokens). To achieve this long context, the Mamba authors remove the “quadratic bottleneck” in the Attention Mechanism. Mamba also runs fast – like “up to 5x faster than Transformer fast”[1].

Mamba performs similarly to (or slightly better than) other Language Models on The Pile (source)

Gu and Dao, the Mamba authors, write:

Mamba enjoys fast inference and linear scaling in sequence length, and its performance improves on real data up to million-length sequences. As a general sequence model backbone, Mamba achieves state-of-the-art performance across several modalities such as language, audio, and genomics. On language modelling, our Mamba-3B model outperforms Transformers of the same size and matches Transformers twice its size, both in pretraining and downstream evaluation.

Here we’ll discuss:

The advantages (and disadvantages) of Mamba (🐍) vs Transformers (🤖),

Analogies and intuitions for thinking about Mamba, and

What Mamba means for Interpretability, AI Safety and Applications.

Problems with Transformers – Maybe Attention Isn’t All You Need

We’re very much in the Transformer-era of history. ML used to be about detecting cats and dogs. Now, with Transformers, we’re generating human-like poetry, coding better than the median competitive programmer, and solving the protein folding problem.

But Transformers have one core problem. In a transformer, every token can look back at every previous token when making predictions. For this lookback, we cache detailed information about each token in the so-called KV cache.

When using the Attention Mechanism, information from all previous tokens can be passed to the current token

This pairwise communication means a forward pass is O(n²) time complexity in training (the dreaded quadratic bottleneck), and each new token generated autoregressively takes O(n) time. In other words, as the context size increases, the model gets slower.

To add insult to injury, storing this key-value (KV) cache requires O(n) space. Consequently, the dreaded CUDA out-of-memory (OOM) error becomes a significant threat as the memory footprint expands. If space were the only concern, we might consider adding more GPUs; however, with latency increasing quadratically, simply adding more compute might not be a viable solution.
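A toy sketch can make these costs concrete. The Python below is illustrative only, not a real Transformer: random vectors stand in for the learned query, key and value projections, there is a single head, and the names `attend` and `decode` are hypothetical. Each generated token appends one key/value pair to the cache (O(n) memory) and attends over every cached pair (O(n) work for that step), so producing n tokens costs n(n+1)/2 dot products in total.

```python
import math
import random

def attend(query, keys, values):
    """Scaled dot-product attention of one query over every cached key/value.

    Returns the attended output and the number of query-key dot products used.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    m = max(scores)                                   # subtract the max for numerical stability
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    weights = [w / total for w in weights]            # softmax over all previous tokens
    output = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
    return output, len(keys)

def decode(num_steps, d=4, seed=0):
    """Toy autoregressive loop with a KV cache; returns cache sizes and per-step work."""
    rng = random.Random(seed)
    k_cache, v_cache = [], []
    cache_sizes, work = [], []
    for _ in range(num_steps):
        # Random vectors stand in for the current token's query/key/value projections.
        q = [rng.gauss(0, 1) for _ in range(d)]
        k_cache.append([rng.gauss(0, 1) for _ in range(d)])
        v_cache.append([rng.gauss(0, 1) for _ in range(d)])
        _, n_dots = attend(q, k_cache, v_cache)
        cache_sizes.append(len(k_cache))              # memory grows by one entry per token: O(n)
        work.append(n_dots)                           # this step touches every cached token: O(n)
    return cache_sizes, work

sizes, work = decode(8)
print(sizes)      # [1, 2, 3, 4, 5, 6, 7, 8]
print(sum(work))  # 36 == 8 * 9 // 2, i.e. O(n^2) in total
```

Doubling the number of generated tokens roughly quadruples the total work, which is the quadratic bottleneck in miniature.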

On the margin, we can mitigate the quadratic bottleneck with techniques like Sliding Window Attention or clever CUDA optimisations like FlashAttention. But ultimately, for super long context windows (like a chatbot which remembers every conversation you’ve shared), we need a different approach.

Foundation Model Backbones

Fundamentally, all good ML architecture backbones have components for two important operations:

Communication between tokens

Computation within a token

The Transformer Block

In transformers, this is Attention (communication) and MLPs (computation). We improve transformers by optimising these two operations[2].

We would like to substitute the Attention component[3] with an alternative mechanism for facilitating inter-token communication. Specifically, Mamba employs a Control Theory-inspired State Space Model, or SSM, for Communication purposes while retaining Multilayer Perceptron (MLP)-style projections for Computation.

The Mamba Block

Just as a Transformer is made up of stacked transformer blocks, Mamba is made up of stacked Mamba blocks.

We would like to understand and motivate the choice of the SSM for sequence transformations.

Motivating Mamba – A Throwback to Temple Run

Imagine we’re building a Temple Run agent[4]. It chooses whether the runner should move left or right at any given time.

To successfully pick the correct direction, we need information about our surroundings. Let’s call the collection of relevant information the state. Here the state likely includes your current position and velocity, the position of the nearest obstacle, weather conditions, etc.

Claim 1: if you know the current state of the world and how the world is evolving, then you can use this to determine the direction to move.

Note that you don’t need to look at the whole screen all the time. You can figure out what will happen to most of the screen by noting that as you run, the obstacles move down the screen. You only need to look at the top of the screen to understand the new information and then simulate the rest.

This lends itself to a natural formulation. Let h be the hidden state, relevant knowledge about the world. Also let x be the input, the observation that you get each time. h’ then represents the derivative of the hidden state, i.e. how the state is evolving. We’re trying to predict y, the optimal next move (right or left).

Now, Claim 1 states that from the hidden state h, h’, and the new observation x, you can figure out y.

More concretely, the evolution of the state h can be represented as a differential equation (Eq 1a):

$h'(t) = \mathbf{A}h(t) + \mathbf{B}x(t)$

Knowing h allows you to determine your next move y (Eq 1b):

$y(t) = \mathbf{C}h(t) + \mathbf{D}x(t)$

The system’s evolution is determined by its current state and newly acquired observations. A small new observation is enough, as the majority of the state can be inferred by applying known state dynamics to its previous state. That is, most of the screen isn’t new, it’s just a continuation of the previous state’s natural downward trajectory. A full understanding of the state would enable optimal selection of the subsequent action, denoted as y.

You can learn a lot about the system dynamics by observing the top of the screen. For instance, increased velocity of this upper section suggests an acceleration of the rest of the screen as well, so we can infer that the game is speeding up[5]. In this way, even if we start off knowing nothing about the game and only have limited observations, it becomes possible to gain a holistic understanding of the screen dynamics fairly rapidly.

What’s the State?

Here, state refers to the variables that, when combined with the input variables, fully determine the future system behaviour. In theory, once we have the state, there’s nothing else we need to know about the past to predict the future. With this choice of state, the system is converted to a Markov Decision Process. Ideally, the state is a fairly small amount of information which captures the essential properties of the system. That is, the state is a compression of the past[6].

Discretisation – How To Deal With Living in a Quantised World

Okay, great! So, given some state and input observation, we have an autoregressive-style system to determine the next action. Amazing!

In practice though, there’s a little snag here. We’re modelling time as continuous. But in real life, we get new inputs and take new actions at discrete time steps[7].

We would like to convert this continuous-time differential equation into a discrete-time difference equation. This conversion process is known as discretisation. Discretisation is a well-studied problem in the literature. Mamba uses the Zero-Order Hold (ZOH) discretisation[8]. To give an idea of what’s happening morally, consider a naive first-order approximation[9].

From Equation 1a, we have

$h'(t) = \mathbf{A}h(t) + \mathbf{B}x(t)$

And for small ∆,

$h'(t) \approx \frac{h(t+\Delta) - h(t)}{\Delta}$

by the definition of the derivative.

We let:

$h_t = h(t)$

and

$h_{t+1} = h(t + \Delta)$

and substitute into Equation 1a giving:

$h_{t+1} - h_t \approx \Delta (\mathbf{A}h_t + \mathbf{B}x_t)$

$\Rightarrow h_{t+1} \approx (I + \Delta \mathbf{A})h_t + (\Delta \mathbf{B})x_t$

Hence, after renaming the coefficients and relabelling indices, we have the discrete representations:

The Discretised Version of the SSM Equation

$h_t = \bar{\mathbf{A}}h_{t-1} + \bar{\mathbf{B}}x_t$

$y_t = \mathbf{C}h_t + \mathbf{D}x_t$

If you’ve ever looked at an RNN before[10] and this feels familiar – trust your instincts:

We have some input x, which is combined with the previous hidden state by some transform to give the new hidden state. Then we use the hidden state to calculate the output at each time step.
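Here is that recurrence written out as a minimal sketch, assuming a single scalar input channel and the diagonal A described in footnote 6, so that Ā, B̄ and C can be stored as plain lists with one entry per state dimension. The function name `ssm_scan` and the example values are illustrative, not taken from the Mamba code.

```python
def ssm_scan(x, a_bar, b_bar, c, d):
    """Run a discretised diagonal SSM over a scalar input sequence.

    a_bar, b_bar, c: lists of length N (one entry per state dimension).
    d: scalar skip weight. Returns the outputs and the final state.
    """
    n = len(a_bar)
    h = [0.0] * n                        # fixed-size state, independent of len(x)
    ys = []
    for x_t in x:
        # h_t = A_bar h_{t-1} + B_bar x_t (elementwise, because A is diagonal)
        h = [a_bar[i] * h[i] + b_bar[i] * x_t for i in range(n)]
        # y_t = C h_t + D x_t
        ys.append(sum(c[i] * h[i] for i in range(n)) + d * x_t)
    return ys, h

# An impulse decays by a factor of A_bar = 0.5 per step.
print(ssm_scan([1.0, 0.0, 0.0], a_bar=[0.5], b_bar=[1.0], c=[1.0], d=0.0)[0])  # [1.0, 0.5, 0.25]
```

However long the input, the loop only ever carries the N numbers in `h`, just like an RNN's hidden state.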

Understanding the SSM Matrices

Now, we can interpret the A, B, C, D matrices more intuitively:

A is the state transition matrix. It shows how you transition the current state into the next state. It asks “How should I forget the less relevant parts of the state over time?”

B maps the new input into the state, asking “What part of my new input should I remember?”[11]

C maps the state to the output of the SSM. It asks, “How can I use the state to make a good next prediction?”[12]

D is how the new input passes through to the output. It’s a kind of modified skip connection that asks “How can I use the new input in my prediction?”

Visual Representation of The SSM Equations

Additionally, ∆ has a nice interpretation – it’s the step size, or what we might call the linger time or the dwell time. For large ∆, you focus more on that token; for small ∆, you skip past the token immediately and don’t include it much in the next state.
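The effect of ∆ can be checked numerically for a single diagonal entry. This sketch assumes a scalar state with a < 0 and uses the standard ZOH formulas, Ā = exp(∆a) and B̄ = (exp(∆a) − 1)/a · b, next to the naive Euler step from the derivation (Ā = 1 + ∆a, B̄ = ∆b). The function names and parameter values are arbitrary choices for illustration.

```python
import math

def discretise_zoh(a, b, delta):
    """Zero-Order Hold for one diagonal entry a of A (a < 0, non-zero) and input weight b."""
    a_bar = math.exp(delta * a)
    b_bar = (a_bar - 1.0) / a * b        # scalar form of (delta*A)^-1 (exp(delta*A) - I) delta*B
    return a_bar, b_bar

def discretise_euler(a, b, delta):
    """Naive first-order (Euler) step: A_bar = 1 + delta*a, B_bar = delta*b."""
    return 1.0 + delta * a, delta * b

a, b = -1.0, 1.0                          # illustrative values, not trained parameters
for delta in (0.01, 0.1, 1.0, 10.0):
    za, zb = discretise_zoh(a, b, delta)
    ea, eb = discretise_euler(a, b, delta)
    print(f"delta={delta:>5}: ZOH A_bar={za:.4f} B_bar={zb:.4f} | "
          f"Euler A_bar={ea:.4f} B_bar={eb:.4f}")
```

For small ∆, Ā stays close to 1 and B̄ close to 0: the state is preserved and the token barely enters it. For large ∆, Ā approaches 0 and B̄ approaches 1: the old state is flushed and the token dominates. The two schemes agree for small ∆, but Euler gives Ā = −9 at ∆ = 10, an unstable step, which is one argument for ZOH.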


And that’s it! That’s the SSM, our ~drop-in replacement for Attention (Communication) in the Mamba block. The Computation in the Mamba architecture comes from regular linear projections, non-linearities, and local convolutions.
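To picture how Communication and Computation fit together, here is a heavily simplified sketch of the block layout as I understand it from the Mamba paper's architecture figure: the input is projected into two branches; one goes through a short causal convolution, a SiLU non-linearity and the SSM, while the other passes through SiLU and acts as a multiplicative gate; a final projection maps back. The assumptions are loud ones: a single channel instead of vectors, a non-selective SSM, no normalisation, residual connection or D skip term, and all parameter names and values are hypothetical.

```python
import math

def silu(v):
    """SiLU / Swish non-linearity: v * sigmoid(v)."""
    return v / (1.0 + math.exp(-v))

def causal_conv(x, kernel):
    """Short causal convolution: output t only sees x[t], x[t-1], ..., x[t-len(kernel)+1]."""
    return [sum(kernel[j] * x[t - j] for j in range(len(kernel)) if t - j >= 0)
            for t in range(len(x))]

def ssm(x, a_bar, b_bar, c):
    """Non-selective diagonal SSM scan: the Communication step."""
    h = [0.0] * len(a_bar)
    out = []
    for x_t in x:
        h = [ab * hi + bb * x_t for ab, hi, bb in zip(a_bar, h, b_bar)]
        out.append(sum(ci * hi for ci, hi in zip(c, h)))
    return out

def toy_mamba_block(u, p):
    """Single-channel sketch: projections, conv + SSM on one branch, SiLU gate on the other."""
    x = [p["w_x"] * u_t for u_t in u]                      # in-projection (SSM branch)
    z = [p["w_z"] * u_t for u_t in u]                      # in-projection (gate branch)
    x = [silu(v) for v in causal_conv(x, p["conv"])]       # local convolution + non-linearity
    y = ssm(x, p["a_bar"], p["b_bar"], p["c"])             # token mixing via the SSM state
    gated = [y_t * silu(z_t) for y_t, z_t in zip(y, z)]    # multiplicative gating
    return [p["w_out"] * g for g in gated]                 # out-projection

# Hypothetical parameters chosen by hand for illustration.
PARAMS = {"w_x": 1.0, "w_z": 0.5, "w_out": 2.0, "conv": [0.6, 0.3, 0.1],
          "a_bar": [0.9, 0.5], "b_bar": [0.1, 0.5], "c": [1.0, -1.0]}
print(toy_mamba_block([1.0, 2.0, 3.0, 4.0], PARAMS))
```

Every step here is causal, so changing a later input never changes an earlier output, which is what lets the block run autoregressively.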

Okay great, that’s the theory – but does this work? Well…

Effectiveness vs Efficiency: Attention is Focus, Selectivity is Prioritisation

At WWDC ’97, Steve Jobs famously noted that “focusing is about saying no”. Focus is ruthless prioritisation. It’s common to think about Attention positively as choosing what to notice. In the Steve Jobs sense, we might instead frame Attention negatively as choosing what to discard.

There’s a classic intuition pump in Machine Learning known as the Cocktail Party Problem[13]. Imagine a party with dozens of simultaneous loud conversations:

Question:

How do we recognise what one person is saying when others are talking at the same time?[14]

Answer:

The brain solves this problem by focusing your “attention” on a particular stimulus and hence drowning out all other sounds as much as possible.

Transformers use Dot-Product Attention to focus on the most relevant tokens. A big reason Attention is so great is that the model can look back at everything that ever happened in its context. This is like photographic memory when done right.[15]

Transformers (🤖) are extremely effective. But they aren’t very efficient. They store everything from the past so that they can look back at tokens with theoretically perfect recall.

Traditional RNNs (🔁) are the opposite – they forget a lot, only recalling a small amount in their hidden state and discarding the rest. They are very efficient – their state is small. Yet they are less effective as discarded information cannot be recovered.

We’d like something closer to the Pareto frontier of the effectiveness/efficiency tradeoff. Something that’s more effective than traditional RNNs and more efficient than transformers.

The Mamba Architecture seems to offer a solution which pushes out the Pareto frontier of effectiveness/efficiency.

SSMs are as efficient as RNNs, but we might wonder how effective they are. After all, it seems like they would have a hard time discarding only unnecessary information and keeping everything relevant. If each token is being processed the same way, applying the same A and B matrices as if in a factory assembly line for tokens, there is no context-dependence. We would like the forgetting and remembering matrices (A and B respectively) to vary and dynamically adapt to inputs.

The Selection Mechanism

Selectivity allows each token to be transformed into the state in a way that is unique to its own needs. Selectivity is what takes us from vanilla SSM models (applying the same A (forgetting) and B (remembering) matrices to every input) to Mamba, the Selective State Space Model.

In regular SSMs, A, B, C and D are learned matrices – that is

$\mathbf{A} = \mathbf{A}_\theta$ etc. (where θ represents the learned parameters)

With the Selection Mechanism in Mamba, A, B, C and D are also functions of x. That is $\mathbf{A} = \mathbf{A}_\theta(x)$ etc.; the matrices are context dependent rather than static.

Mamba (right) differs from traditional SSMs by allowing the A, B, C matrices to be selective, i.e. context-dependent (source)

Making A and B functions of x allows us to get the best of both worlds:

We’re selective about what we include in the state, which improves effectiveness vs traditional SSMs.

Yet, since the state size is bounded, we improve on efficiency relative to the Transformer. We have O(1) rather than O(n) space requirements, and O(n) rather than O(n²) time requirements.
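A minimal sketch of selectivity, under stated assumptions: one scalar input channel, a diagonal A, and a hypothetical parameterisation in which ∆, B and C are simple linear functions of x_t, with a softplus keeping ∆ positive. In the Mamba paper, as I read it, ∆, B and C are computed from the input by learned projections while A itself stays a learned parameter; A still becomes context-dependent after discretisation, because Ā = exp(∆A) and ∆ depends on x. Names and values here are illustrative.

```python
import math

def softplus(v):
    """Smooth positive map used for the step size; linear for large v to avoid overflow."""
    return v if v > 20.0 else math.log1p(math.exp(v))

def selective_scan(x, a, w_delta, b_delta, w_b, w_c):
    """Diagonal selective SSM over a scalar sequence; delta, B and C are computed from each x_t."""
    h = [0.0] * len(a)                                    # state size stays fixed: O(1) in length
    ys, deltas = [], []
    for x_t in x:
        delta = softplus(w_delta * x_t + b_delta)         # input-dependent step size (> 0)
        b_t = [wb * x_t for wb in w_b]                    # input-dependent B
        c_t = [wc * x_t for wc in w_c]                    # input-dependent C
        for i, a_i in enumerate(a):                       # each a_i < 0 is a fixed parameter
            a_bar = math.exp(delta * a_i)                 # ZOH, so A_bar varies with x via delta
            b_bar = (a_bar - 1.0) / a_i * b_t[i]
            h[i] = a_bar * h[i] + b_bar * x_t
        ys.append(sum(c_i * h_i for c_i, h_i in zip(c_t, h)))
        deltas.append(delta)
    return ys, deltas, h

# Hypothetical parameters: a large input gets a large delta (focus); small ones are mostly skipped.
ys, deltas, h = selective_scan([0.1, 0.1, 4.0, 0.1], a=[-1.0, -0.5],
                               w_delta=2.0, b_delta=-2.0, w_b=[1.0, 0.5], w_c=[1.0, 1.0])
print([round(d, 3) for d in deltas])
```

The loop does constant work per token and keeps only `h`, so time is O(n) and memory O(1) in sequence length, yet how much each token is remembered now depends on the token itself.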

The Mamba paper authors write:

The efficiency vs. effectiveness tradeoff of sequence models is characterized by how well they compress their state: efficient models must have a small state, while effective models must have a state that contains all necessary information from the context. In turn, we propose that a fundamental principle for building sequence models is selectivity: or the context-aware ability to focus on or filter out inputs into a sequential state. In particular, a selection mechanism controls how information propagates or interacts along the sequence dimension.

Humans (mostly) don’t have photographic memory for everything they experience within a lifetime – or even within a day! There’s just way too much information to retain it all. Subconsciously, we select what to remember by choosing to forget, throwing away most information as we encounter it. Transformers (🤖) decide what to focus on at recall time. Humans (🧑) also decide what to throw away at memory-making time. Humans filter out information early and often.

If we had infinite capacity for memorisation, it’s clear the transformer approach is better than the human approach – it truly is more effective. But it’s less efficient – transformers have to store so much information about the past that might not be relevant. Transformers (🤖) only decide what’s relevant at recall time. The innovation of Mamba (🐍) is allowing the model better ways of forgetting earlier – it’s focusing by choosing what to discard using Selectivity, throwing away less relevant information at memory-making time[16].

The Problems of Selectivity

Applying the Selection Mechanism does have its gotchas though. Non-selective SSMs (i.e. A, B not dependent on x) are fast to compute in training. This is because the component of $y_t$ which depends on $x_i$ can be expressed as a linear map, i.e. a single matrix that can be precomputed!

For example (ignoring the D component, the skip connection):

$$y_2 = \mathbf{C}\bar{\mathbf{B}}x_2 + \mathbf{C}\bar{\mathbf{A}}\bar{\mathbf{B}}x_1 + \mathbf{C}\bar{\mathbf{A}}\bar{\mathbf{A}}\bar{\mathbf{B}}x_0$$

If we’re paying attention, we might spot something even better here – this expression can be written as a convolution. Hence we can apply the Fast Fourier Transform and the Convolution Theorem to compute this very efficiently on hardware as in Equation 3 below.

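We can calculate Equation 2, the SSM equations, efficiently in the Convolutional Form, Equation 3.

$\bar{\mathbf{K}} = (\mathbf{C}\bar{\mathbf{B}}, \mathbf{C}\bar{\mathbf{A}}\bar{\mathbf{B}}, \dots, \mathbf{C}\bar{\mathbf{A}}^{k}\bar{\mathbf{B}}, \dots)$

$y = x * \bar{\mathbf{K}}$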
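For the non-selective case, the equivalence of the recurrent and convolutional forms can be checked directly. In this sketch (diagonal Ā, scalar input, D omitted as in the y_2 example; function names and values are illustrative), the kernel entries are K[k] = CĀᵏB̄ and the convolution is computed directly in O(L²). A real implementation would use the FFT, as the text notes, to bring this down to O(L log L).

```python
def ssm_kernel(a_bar, b_bar, c, length):
    """K[k] = C A_bar^k B_bar for a diagonal A_bar, k = 0 .. length-1."""
    return [sum(c_i * a_i ** k * b_i for a_i, b_i, c_i in zip(a_bar, b_bar, c))
            for k in range(length)]

def ssm_convolutional(x, a_bar, b_bar, c):
    """y_t = sum_k K[k] x[t-k]: a direct O(L^2) convolution (an FFT would give O(L log L))."""
    kernel = ssm_kernel(a_bar, b_bar, c, len(x))
    return [sum(kernel[k] * x[t - k] for k in range(t + 1)) for t in range(len(x))]

def ssm_recurrent(x, a_bar, b_bar, c):
    """The same SSM computed step by step, for comparison."""
    h = [0.0] * len(a_bar)
    ys = []
    for x_t in x:
        h = [ab * hi + bb * x_t for ab, hi, bb in zip(a_bar, h, b_bar)]
        ys.append(sum(ci * hi for ci, hi in zip(c, h)))
    return ys

A_BAR, B_BAR, C = [0.9, 0.5], [1.0, 0.3], [0.2, 1.5]   # hypothetical, input-independent
x = [1.0, -2.0, 0.5, 3.0]
print(ssm_convolutional(x, A_BAR, B_BAR, C))
print(ssm_recurrent(x, A_BAR, B_BAR, C))   # matches the line above up to rounding
```

The kernel can be precomputed only because Ā, B̄ and C are the same at every step; once they depend on x, there is no single K to precompute.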

Unfortunately, with the Selection Mechanism, we lose the convolutional form. Much attention is given to making Mamba efficient on modern GPU hardware using similar hardware optimisation tricks to Tri Dao’s FlashAttention[17]. With the hardware optimisations, Mamba is able to run faster than comparably sized Transformers.
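Losing the convolution does not force a purely sequential loop. The selective recurrence h_t = Ā_t h_{t-1} + B̄_t x_t is a chain of affine maps, and composing affine maps is associative, which is what makes a parallel scan possible. The Mamba kernel itself is hardware-aware CUDA; this pure-Python sketch only illustrates the associativity trick, using the simple Hillis-Steele scan for brevity (the paper refers to a work-efficient scan, so treat this choice as a simplification). Function names and values are illustrative.

```python
def combine(left, right):
    """Compose two affine steps h -> a*h + b, applying `left` first, then `right`."""
    a1, b1 = left
    a2, b2 = right
    return (a1 * a2, a2 * b1 + b2)

def sequential_scan(a, b):
    """Reference loop: h_t = a_t * h_{t-1} + b_t with h_{-1} = 0."""
    h, out = 0.0, []
    for a_t, b_t in zip(a, b):
        h = a_t * h + b_t
        out.append(h)
    return out

def parallel_scan(a, b):
    """Hillis-Steele inclusive scan over (a_t, b_t) pairs: ceil(log2(L)) rounds."""
    elems = list(zip(a, b))
    offset = 1
    while offset < len(elems):
        # Every position reads only the previous round's values, so on a GPU each
        # round could update all positions at once.
        elems = [combine(elems[i - offset], elems[i]) if i >= offset else elems[i]
                 for i in range(len(elems))]
        offset *= 2
    # Since h_{-1} = 0, the accumulated offset b of each prefix is the state h_t.
    return [b_acc for _, b_acc in elems]

# Hypothetical per-step values; in a selective SSM, a_t = A_bar_t and b_t = B_bar_t * x_t.
a = [0.9, 0.1, 0.5, 0.7, 0.3]
b = [1.0, 2.0, -1.0, 0.5, 4.0]
print(sequential_scan(a, b))
print(parallel_scan(a, b))   # same values, up to floating-point rounding
```

Note that `combine` is associative but not commutative, so the scan must keep the left-to-right order of the sequence.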

Machine Learning for Political Economists – How Large Should The State Be?

The Mamba authors write, “the efficiency vs. effectiveness tradeoff of sequence models is characterised by how well they compress their state”. In other words, like in political economy[18], the fundamental problem is how to manage the state.

🔁 Traditional RNNs are anarchic

They have a small, minimal state. The size of the state is bounded. The compression of state is poor.

🤖 Transformers are communist

They have a maximally large state. The “state” is just a cache of the entire history with no compression. Every context token is treated equally until recall time.

🐍 Mamba has a compressed state

…but it’s selective about what goes in. Mamba says we can get away with a small state if the state is well focused and effective[19].

Language Models and State Size

The upshot is that state representation is critical. A smaller state is more efficient; a larger state is more effective. The key is to selectively and dynamically compress data into the state. Mamba’s Selection Mechanism allows for context-dependent reasoning, focusing and ignoring. For both performance and interpretability, understanding the state seems to be very useful.

Information Flow in Transformer vs Mamba

How do Transformers know anything? At initialisation, a transformer isn’t very smart. It learns in two ways:

Training data (Pretraining, SFT, RLHF etc)

In-context data

Training Data

Models learn from their training data. This is a kind of lossy compression of input data into the weights. We can think of the effect of pretraining data on the transformer kinda like the effect of your ancestors’ experiences on your genetics – you can’t recall their experiences, you just have vague instincts about them[20].

In-Context Data

Transformers use their context as short-term memory, which they can recall with ~perfect fidelity. So we get In-Context Learning, e.g. using induction heads to solve the Indirect Object Identification task, or computing Linear Regression.

Retrieval

Note that Transformers don’t filter their context at all until recall time. So if we have a bunch of information we think might be useful to the Transformer, we filter it outside the Transformer (using Information Retrieval strategies) and then stuff the results into the prompt. This process is known as Retrieval Augmented Generation (RAG). RAG determines relevant information for the context window of a transformer. A human with the internet is kinda like a RAG system – you still have to know what to search but whatever you retrieve is as salient as short-term memory to you.

Information Flow for Mamba

Training Data acts similarly for Mamba. However, the lines are slightly blurred for in-context data and retrieval. In-context data for Mamba is compressed/filtered similar to retrieval data for transformers. This in-context data is also accessible for look-up like for transformers (although with somewhat lower fidelity).

Transformer context is to Mamba states what short-term is to long-term memory. Mamba doesn’t just have “RAM”, it has a hard drive[21][22].

Swapping States as a New Prompting Paradigm

Currently, we often use RAG to give a transformer contextual information.

With Mamba-like models, you could instead imagine having a library of states created by running the model over specialised data. States could be shared kinda like LoRAs for image models.

For example, I could do inference on 20 physics textbooks and, say, 100 physics questions and answers. Then I have a state which I can give to you. Now you don’t need to add any few-shot examples; you simply ask your question. The in-context learning is in the state.

In other words, you can drag and drop downloaded states into your model, like literal plug-in cartridges. And note that “training” a state doesn’t require any backprop. It’s more like a highly specialised one-pass fixed-size compression algorithm. This is unlimited in-context learning applied at inference time for zero compute or latency[23].
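A minimal sketch of the state-reuse idea, using a toy diagonal SSM with hypothetical parameters, scalar inputs, and a tiny made-up "context" standing in for the textbooks: running the context once and saving the final state gives the same outputs on a later question as re-running the whole context plus the question. A real multi-layer Mamba model would need to save every layer's state (and, I believe, the short convolution's buffer too); that bookkeeping is omitted here, and `run` is a hypothetical helper.

```python
def run(x, a_bar, b_bar, c, h0=None):
    """Toy diagonal SSM scan that can resume from a saved state h0 (zeros if None)."""
    h = list(h0) if h0 is not None else [0.0] * len(a_bar)
    ys = []
    for x_t in x:
        h = [ab * hi + bb * x_t for ab, hi, bb in zip(a_bar, h, b_bar)]
        ys.append(sum(ci * hi for ci, hi in zip(c, h)))
    return ys, h

# Hypothetical parameters and inputs, for illustration only.
A_BAR, B_BAR, C = [0.95, 0.6], [0.5, 1.0], [1.0, -0.5]
context = [0.3, -1.2, 2.0, 0.7, 0.1]   # stands in for the "textbooks"
question = [1.0, -0.4]

# One pass over the context yields a fixed-size state that can be stored and shared.
_, saved_state = run(context, A_BAR, B_BAR, C)

# Answering from the saved state skips re-reading the context entirely.
from_state, _ = run(question, A_BAR, B_BAR, C, h0=saved_state)
from_scratch, _ = run(context + question, A_BAR, B_BAR, C)
print(from_state)
print(from_scratch[len(context):])   # identical to the line above
```

The saved state has the same size whether the context was five tokens or five million, which is what makes it practical to store and share.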

The structure of an effective LLM call goes from…

System Prompt

Preamble

Few-shot examples

Question

…for Transformers, to simply…

Inputted state (with problem context, initial instructions, textbooks, and few-shot examples)

Short question

…for Mamba.

This is cheaper and faster than few-shot prompting (as the state is infinitely reusable without inference cost). It’s also MUCH cheaper than finetuning and doesn’t require any gradient updates. We could imagine retrieving states in addition to context.

Mamba & Mechanistic Interpretability

Transformer interpretability typically involves:

understanding token relationships via attention,

understanding circuits, and

using Dictionary Learning for unfolding MLPs.

Most of the ablations that we would like to do for Mamba are still valid, but understanding token communication (1) is now more nuanced. All information moves between tokens via hidden states, rather than via the Attention Mechanism, which can “teleport” information from one sequence position to another.

For understanding in-context learning (ICL) tasks with Mamba, we will look to intervene on the SSM state. A classic in-context learning task is Indirect Object Identification, in which a model has to finish a paragraph like:

Then, Shelby and Emma had a lot of fun at the school. [Shelby/Emma] gave an apple to [BLANK]

The model is expected to fill in the blank with the name that is not repeated in the paragraph. In the chart below we can see that information is passed from the [Shelby/Emma] position to the final position via the hidden state (see the two blue lines in the top chart).

Since it’s hypothesised that much of In-Context Learning in Transformers is downstream of more primitive sequence position operations (like Induction Heads), Mamba being able to complete this task suggests a more general In-Context Learning ability.

What’s Next for Mamba & SSMs?

Mamba-like models are likely to excel in scenarios requiring extremely long context and long-term memory. Examples include:

Processing DNA

Generating (or reasoning over) video

Writing novels

An illustrative example is agents with long-term goals.

Suppose you have an agent interacting with the world. Eventually, its experiences become too much for the context window of a transformer. The agent then has to compress or summarise its experiences into some more compact representation.

But how do you decide what information is the most useful as a summary? If the task is language, LLMs are actually fairly good at summaries – okay, yeah, you’ll lose some information, but the most important stuff can be retained.

However, for other disciplines, it might not be clear how to summarise. For example, what’s the best way to summarise a 2-hour movie?[24] Could the model itself learn to do this naturally, rather than relying on a hacky workaround like trying to describe the aesthetics of the movie in text?

This is what Mamba allows. Actual long-term memory. A real state where the model learns to keep what’s important. Prediction is compression – learning what’s useful to predict what’s coming next inevitably leads to building a useful compression of the previous tokens.

The implications for Assistants are clear:

Your chatbot co-evolves with you. It remembers.

The film Her is looking better and better as time goes on 😳

Agents & AI Safety

One reason for positive updates on existential risk from AGI is Language Models. Previously, Deep-RL agents trained via self-play looked set to be the first AGIs. Language models are inherently much safer since they aren’t trained with long-term goals[25].

The potential for long-term sequence reasoning here brings back the importance of agent-based AI safety. Few agent worries are relevant to Transformers with an 8k context window. Many are relevant to systems with impressive long-term memories and possible instrumental goals.

The Best Collab Since Taco Bell & KFC: 🤖 x 🐍

The Mamba authors show that there’s value in combining Mamba’s long context with the Transformer’s high fidelity over short sequences. For example, if you’re making long videos, you likely can’t fit a whole movie into a Transformer’s context for attention[26]. You could imagine having Attention look at the most recent frames for short-term fluidity and an SSM for long-term narrative consistency[27].

This isn’t the end for Transformers. Their high effectiveness is exactly what’s needed for many tasks. But now Transformers aren’t the only option. Other architectures are genuinely feasible.

So we’re not in the post-Transformer era. But for the first time, we’re living in the post-only-Transformers era[28]. And this blows the possibilities wide open for sequence modelling with extreme context lengths and native long-term memory.

Two ML researchers, Sasha Rush (HuggingFace, Annotated Transformer, Cornell Professor) and Jonathan Frankle (Lottery Ticket Hypothesis, MosaicML, Harvard Professor), currently have a bet on this question.

Currently Transformers are far and away in the lead. With 3 years left, there’s now a research direction with a fighting chance.

All that remains to ask is: Is Attention All We Need?

1. See Figure 8 in the Mamba paper.

2. And scaling up with massive compute.

3. More specifically, the scaled dot-product Attention popularised by Transformers.

4. For people who don’t see Temple Run as the cultural cornerstone it is 🤣, Temple Run was an iPhone game from 2011, similar to Subway Surfers.

5. Here we assume the environment is sufficiently smooth.

6. One pretty important constraint for this to be efficient is that we don’t allow the individual elements of the state vector to interact with each other directly. We’ll use a combination of the state dimensions to determine the output but we don’t e.g. allow the velocity of the runner and the direction of the closest obstacle (or whatever else was in our state) to directly interact. This helps with efficient computation and we achieve this practically by constraining A to be a diagonal matrix.

7. Concretely, consider the case of Language Models: each token is a discrete step.

8. ZOH also has nice properties for the initialisations – we want A_bar to be close to the identity so that the state can be mostly maintained from timestep to timestep if desired. ZOH gives A_bar as an exponential, so any diagonal element initialisations close to zero give values close to 1.

9. This is known as the Euler discretisation in the literature

10. It’s wild to note that some readers might not have; we’re so far into the age of Attention that RNNs have been forgotten!

11. B is like the Query (Q) matrix for Transformers.

12. C is like the Output (O) matrix for Transformers.

13. Non-alcoholic options also available!

14. Especially as all voices roughly occupy the same space on the audio frequency spectrum. Intuitively this seems really hard!

15. Note that photographic memory doesn’t necessarily imply perfect inferences from that memory!

16. To be clear, if you have a short sequence, then a transformer should theoretically be a better approach. If you can store the whole context, then why not!? If you have enough memory for a high-resolution image, why compress it into a JPEG? But Mamba-style architectures are likely to hugely outperform with long-range sequences.

17. More details are available for engineers interested in CUDA programming – Tri’s talk, Mamba paper section 3.3.2, and the official CUDA code are good resources for understanding the Hardware-Aware Scan

18. or in Object Oriented Programming

19. Implications to actual Political Economy are left to the reader but maybe Gu and Dao accidentally solved politics!?

20. This isn’t a perfect analogy as human evolution follows a genetic algorithm rather than SGD.

21. Albeit a pretty weird hard drive at that – it morphs over time rather than being a fixed representation.

22. As a backronym, I’ve started calling the hidden_state the state space dimension (or selective state dimension) which shortens to SSD, a nice reminder for what this object represents – the long-term memory of the system.

23. I’m thinking about this similarly to the relationship between harmlessness finetuning and activation steering. State swapping, like activation steering, is an inference time intervention giving comparable results to its train time analogue.

24. This is a very non-trivial problem! How do human brains represent a movie internally? It’s not a series of the most salient frames, nor is it a text summary of the colours, nor is it a purely vibes-based summary if you can memorise some lines of the film.

25. They’re also safer since they inherently understand (though don’t necessarily embody) human values. It’s not at all clear how to teach an RL agent human morality.

26. Note that typically an image (i.e. a single frame) counts as >196 tokens, and movies are typically 24 fps so you’ll fill a 32k context window in 7 seconds 🤯

27. Another possibility that I’m excited about is applying optimisation pressure to the state itself as well as the output to have models that respect particular use cases.

28. This is slightly hyperbolic; TS-Mixer for time series, Gradient Boosting Trees for tabular data and Graph Neural Networks for weather prediction exist and are currently used, but these aren’t at the core of AI.

Author Bio

Kola Ayonrinde is a Research Scientist and Machine Learning Engineer with a flair for writing. He integrates technology and creativity, focusing on applying machine learning in innovative ways and exploring the societal impacts of tech advancements.

Acknowledgements

This post was originally posted on Kola’s personal blog.

Thanks to Gonçalo for reading an early draft, Jaden for the nnsight library used for the Interpretability analysis and Tessa for Mamba patching visualisations. Also see: Mamba paper, Mamba Python code, Annotated S4, Nathan Labenz podcast

Citation

For attribution in academic contexts or books, please cite this work as

Kola Ayonrinde, "Mamba Explained," The Gradient, 2024

@article{Ayonrinde2024mamba, author = {Kola Ayonrinde}, title = {Mamba Explained}, journal = {The Gradient}, year = {2024}, howpublished = {\url{https://thegradient.pub/mamba-explained}}}

#2024#8K#AGI#ai#ai model#algorithm#amazing#amp#Analysis#apple#applications#approach#architecture#Art#Article#attention#attention mechanism#audio#Best Of#Blog#Blue#Books#Brain#brains#Building#cache#cats#chart#chatbot#code

0 notes

Audio

caught in the undertow, you’re all alone

1 note


Photo

🧀🥪🌶️🥭 The Ravening War portraits 🧀🥪🌶️🥭

patreon * twitch * shop

[ID: a series of digitally illustrated portraits showing - top left to bottom right - Bishop Raphaniel Charlock (an old radish man with a big red head and large white eyebrows & a scraggly beard. he wears green and gold robes with symbols of the bulb and he smirks at the viewer) Karna Solara (a skinny young chili pepper woman with wavy green hair, freckled light green skin with red blooms on her cheeks. she wears a chili pepper hood lined with small pepper seeds and stares cagily ahead) Thane Delissandro Katzon (a muscular young beef man with bright pinkish skin with small skin variations to resemble pastrami and dark burgundy hair. he wears a bread headdress with a swirl of rye covering his ears and he looks ahead, optimistic and determined) Queen Amangeaux Epicée du Peche (a bright mango woman with orange skin, big red hair adorned with a green laurel, and sparkling green/gold makeup. she wears large gold hoop earrings and a high leafy collar) and Colin Provolone (a scraggly cheese man with waxy yellow skin and dark slicked back hair and patchy dark facial hair. he wears a muted, ratty blue bandana around his neck and raises a scarred brow at the viewer with a smirk) End ID.)

#trw#the ravening war#dimension 20#acoc#trw fanart#ttrpg#dnd#bishop raphaniel charlock#karna solara#thane delissandro katzon#queen amangeaux epicee du peche#colin provolone

2K notes


Photo

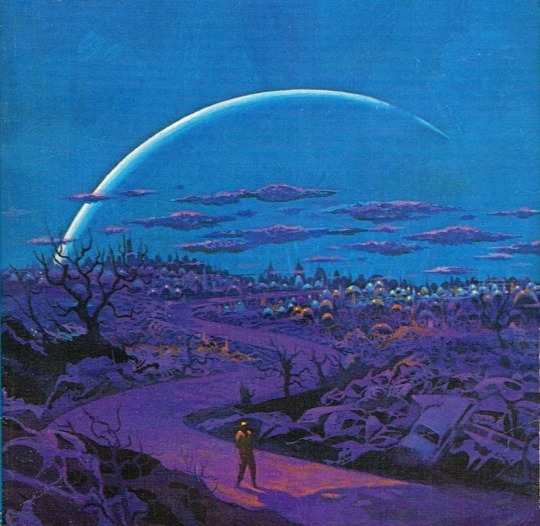

One of my favorites by Paul Lehr, used as a 1971 cover to "Earth Abides," by George R. Stewart. It's also in my upcoming art book!

1K notes


Photo

#thistension

XO, KITTY

— 1.09 “SNAFU”

#xokittyedit#tatbilbedit#kdramaedit#netflixedit#wlwedit#xokittydaily#asiancentral#cinemapix#cinematv#filmtvcentral#pocfiction#smallscreensource#teendramaedit#wlwgif#kitty song covey#yuri han#xo kitty#anna cathcart#gia kim#~#inspiration: romantic.#dynamic: ff.

1K notes


Photo

PORTO ROCHA

199 notes


Text

(The Sims 4) Estrela Hair

Hair

24 EA Colors

Hat Compatible

55403 polygons

Custom thumbnail

Hair Accessories Overlay

15 Color Variations

Custom thumbnail

Brow ring category

If you have any issues, let me know. Enjoy!

T.O.U.

Credits

Download (Patreon - Curseforge)

@maxismatchccworld @sssvitlanz @emilyccfinds

1K notes


Text

Smart glove teaches new physical skills - Technology Org

New Post has been published on https://thedigitalinsider.com/smart-glove-teaches-new-physical-skills-technology-org/


You’ve likely met someone who identifies as a visual or auditory learner, but others absorb knowledge through a different modality: touch. Understanding tactile interactions is especially important for tasks such as learning delicate surgeries and playing musical instruments, but unlike video and audio, touch is difficult to record and transfer.

To tap into this challenge, researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and elsewhere developed an embroidered smart glove that can capture, reproduce, and relay touch-based instructions. To complement the wearable device, the team also developed a simple machine-learning agent that adapts to how different users react to tactile feedback, optimizing their experience. The new system could potentially help teach people physical skills, improve responsive robot teleoperation, and assist with training in virtual reality.

An open-access paper describing the work was published in Nature Communications.

Will I be able to play the piano?

To create their smart glove, the researchers used a digital embroidery machine to seamlessly embed tactile sensors and haptic actuators (devices that provide touch-based feedback) into textiles. This technology is present in smartphones, where haptic responses are triggered by tapping on the touch screen. For example, if you press down on an iPhone app, you’ll feel a slight vibration coming from that specific part of your screen. In the same way, the new adaptive wearable sends feedback to different parts of your hand to indicate optimal motions to execute different skills.

The smart glove could teach users how to play the piano, for instance. In a demonstration, an expert was tasked with recording a simple tune over a section of keys, using the smart glove to capture the sequence by which they pressed their fingers to the keyboard. Then, a machine-learning agent converted that sequence into haptic feedback, which was then fed into the students’ gloves to follow as instructions. With their hands hovering over that same section, actuators vibrated on the fingers corresponding to the keys below. The pipeline optimizes these directions for each user, accounting for the subjective nature of touch interactions.

“Humans engage in a wide variety of tasks by constantly interacting with the world around them,” says Yiyue Luo MS ’20, lead author of the paper, PhD student in MIT’s Department of Electrical Engineering and Computer Science (EECS), and CSAIL affiliate. “We don’t usually share these physical interactions with others. Instead, we often learn by observing their movements, like with piano-playing and dance routines.”

“The main challenge in relaying tactile interactions is that everyone perceives haptic feedback differently,” adds Luo. “This roadblock inspired us to develop a machine-learning agent that learns to generate adaptive haptics for individuals’ gloves, introducing them to a more hands-on approach to learning optimal motion.”

The wearable system is customized to fit the specifications of a user’s hand via a digital fabrication method. A computer produces a cutout based on individuals’ hand measurements, then an embroidery machine stitches the sensors and haptics in. Within 10 minutes, the soft, fabric-based wearable is ready to wear. Initially trained on 12 users’ haptic responses, its adaptive machine-learning model only needs 15 seconds of new user data to personalize feedback.

In two other experiments, tactile directions with time-sensitive feedback were transferred to users sporting the gloves while playing laptop games. In a rhythm game, the players learned to follow a narrow, winding path to bump into a goal area, and in a racing game, drivers collected coins and maintained the balance of their vehicle on their way to the finish line. Luo’s team found that participants earned the highest game scores through optimized haptics, as opposed to without haptics and with unoptimized haptics.

“This work is the first step to building personalized AI agents that continuously capture data about the user and the environment,” says senior author Wojciech Matusik, MIT professor of electrical engineering and computer science and head of the Computational Design and Fabrication Group within CSAIL. “These agents then assist them in performing complex tasks, learning new skills, and promoting better behaviors.”

Bringing a lifelike experience to electronic settings

In robotic teleoperation, the researchers found that their gloves could transfer force sensations to robotic arms, helping them complete more delicate grasping tasks. “It’s kind of like trying to teach a robot to behave like a human,” says Luo. In one instance, the MIT team used human teleoperators to teach a robot how to secure different types of bread without deforming them. By teaching optimal grasping, humans could precisely control the robotic systems in environments like manufacturing, where these machines could collaborate more safely and effectively with their operators.

“The technology powering the embroidered smart glove is an important innovation for robots,” says Daniela Rus, the Andrew (1956) and Erna Viterbi Professor of Electrical Engineering and Computer Science at MIT, CSAIL director, and author on the paper. “With its ability to capture tactile interactions at high resolution, akin to human skin, this sensor enables robots to perceive the world through touch. The seamless integration of tactile sensors into textiles bridges the divide between physical actions and digital feedback, offering vast potential in responsive robot teleoperation and immersive virtual reality training.”

Likewise, the interface could create more immersive experiences in virtual reality. Wearing smart gloves would add tactile sensations to digital environments in video games, where gamers could feel around their surroundings to avoid obstacles. Additionally, the interface would provide a more personalized and touch-based experience in virtual training courses used by surgeons, firefighters, and pilots, where precision is paramount.

While these wearables could provide a more hands-on experience for users, Luo and her group believe they could extend their wearable technology beyond fingers. With stronger haptic feedback, the interfaces could guide feet, hips, and other body parts less sensitive than hands.

Luo also noted that with a more complex artificial intelligence agent, her team’s technology could assist with more involved tasks, like manipulating clay or driving an airplane. Currently, the interface can only assist with simple motions like pressing a key or gripping an object. In the future, the MIT system could incorporate more user data and fabricate more conformal and tight wearables to better account for how hand movements impact haptic perceptions.

Written by Alex Shipps

Source: Massachusetts Institute of Technology


#A.I. & Neural Networks news#accounting#ai#app#approach#artificial#Artificial Intelligence#audio#bread#Building#Capture#challenge#collaborate#communications#computer#Computer Science#courses#dance#data#Design#electronic#engineering#Environment#Fabrication#Firefighters#Future#game#games#gloves#hand

0 notes

Photo

AGUST D : DAECHWITA (大吹打) & HAEGEUM (解禁)

⤷ movie posters | ig ; twt (click for hi-res)

#i'm back and ready to create again :'))#bts#bangtan#yoongi#agust d#suga#userbangtan#usersky#bangtanarmynet#hyunglinenetwork#dailybts#*latest#*posters#*gfx#btsgfx#idk if i wanna do an amygdala one#that one seems too personal to edit for me#so these will do for now

693 notes


Text

The Way Of A Criminal: Chapter 4

Genre: Mafia!AU, Criminal!AU, Angst, Romance

Pairing: OT7 x Reader

Characters: Normal!Reader, Gangster!Namjoon, Gangster!Seokjin, Gangster!Yoongi, Gangster!Hoseok, Gangster!Jimin, Gangster!Taehyung, Gangster!Jungkook

Summary: Your father was a stranger, you never knew who he was and what he did. But one day, someone knocks on your door, informing you of his passing. Now, you learn more about him, his life and the legacy you are expected to continue with the help of his 7 executives.

Story warning(s): This story will contain depictions of violence, blood shed/gore, death, mentions of abuse, smoking, alcohol drinking and gambling. This story is fictional and has nothing to do with real life events or the actual members of BTS. Please read at your own discretion.

Instead of dealing with all this head on, you avoided it. You put everything aside and went on with life, spending all your time doing work, studying and doing your university projects.

Wonwoo noticed this and decided to invite you for a night out. Just a chill night with some drinks. Usually, you would decline, which is why Wonwoo had never invited you out. But you could use the distraction. Maybe being out of the house was good.

DING DONG

“Coming!” You ran down, fixing your earring. You didn’t really know how to dress for a night out like this so you picked a random dress. It was a dark blue, crushed velvet cocktail dress.

“Come on in.” You opened the door for him. Wonwoo smiled, bowing before removing his shoes to come in.

“This dress isn’t really motorcycle friendly, is it?” You let out an awkward laugh, going into the kitchen to get your phone that you left there to charge.

“I didn’t ride my bike since we might drink. I’ll call a cab.” Wonwoo said. He just stood in the doorway, unsure of what to do since it was the first time he was in your house.

“(y/n)?” Wonwoo suddenly called your name, stopping you in your tracks.

“You look great.” He smiled softly.

“Oh... Thank you.” You felt your cheeks heat up at his sudden compliment. He held out a hand to support you as you put on your shoes. After locking up the house, he escorted you to the cab that was waiting for you. And of course, Wonwoo opened the door for you to get in first, being the gentleman that he is. The car ride was silent.

“C-Can I ask you something?” Wonwoo asked, treading lightly. You hummed, nodding your head.

“I know you like your privacy and I shouldn’t pry but there were these rumours and pictures floating around about some expensive sports car and handsome guy picking you up. Is he one of them?” He asked nervously.

“If by ‘one of them’, you mean my father’s... employees. Yes, he is... or was. There are 7 of them.” You rubbed the back of your neck.

“Are they bothering you?”

“Can we not talk about this anymore? I just don’t want to have to think about it for a few minutes.” That came out a lot harsher and colder than you expected. But Wonwoo didn’t react.

“Sorry. I’m...” You sighed, not really knowing how to piece your words together anymore.

“I understand. Don’t worry about it. I didn’t mean to pry.” Wonwoo smiled comfortingly. Thankfully for you, the cab stopped outside the club. You looked at the long line outside that didn’t seem to be moving.

“Don’t look so worried. My friend put our name on the list so we can just walk in.” Wonwoo laughed. You both got out and he grasped your hand, giving your names to the bouncer. You were so stunned by his action that you just followed him without a word, letting him lead you.

“There’s a table there.” Wonwoo said in your ear over the loud music. You were still so shocked that he was holding your hand that you just followed him.

“Phew, it’s crowded.” You said, casually pulling your hand away to check if you dropped anything from your bag.

“Yeah, that’s what you get for being here on a Friday night.” He chuckled. You stood at the table while Wonwoo went to get drinks at the bar. The standing table was thankfully at the side of the bar, which meant fewer people.

“Relax, you’re here to have fun.” You told yourself.

“Here.” Wonwoo got himself a beer and got you a cocktail. It was a nice refreshing drink with flavoured soju as the alcohol.

“Let me know how much everything is tonight and we’ll split the cost.” You told him as you took a sip.

“It’s okay, (y/n). It’s my treat, just enjoy yourself.” Wonwoo smiled. He clinked his glass with yours. Although this wasn’t your exact idea of relaxing, you were glad that the noise and the crowd made the place so loud that you didn’t need to start sharing your feelings. Wonwoo was a good friend but you just weren’t used to sharing so much about yourself with others.

After some drinks, you excused yourself to go to the toilet. But of course, there was a line. About 15 minutes went by without the line moving. You were about to give up when someone grabbed your wrist.

“(y/n) sshi?” You looked at the familiar face.

“Oh... Uh...”

“Yoongi.” He said, letting you go. You nodded your head. Other people in the line now had their attention on you and Yoongi.

“Come.” He nodded for you to follow him. You didn’t know why you just went along, not even asking why he wanted you to go with him. You came to a staircase with two guards. But they stepped aside upon seeing Yoongi.

“Sir.” They bowed respectfully as Yoongi coolly walked up, hands tucked into his pockets. You quickly bowed your head back to the guards and caught up to Yoongi.

“You can use this bathroom instead. It’s cleaner and safer.” Yoongi stopped before a door.

“A-Are you sure?”

“If I wasn’t sure, I wouldn’t have gone down to fish you out of the crowd. Take all the time you need. This is our own private bathroom so no one else is allowed to use it.” He explained. You nodded, bowing gratefully to him before entering the restroom.

The bathroom was a lot more opulent and grand, with black granite and gold trims. Like Yoongi said, it was very clean, unlike a usual club bathroom. It was definitely a boys’ bathroom with urinals but you just used one of the stalls.

“Hyung, why can’t I use the bathroom? I really need to go!” You heard voices outside as you were washing your hands.

“Only the 7 of us use it anyway. Unless... Do you have a secret guest in there?!” The person talking to Yoongi gasped as if he just uncovered something scandalous.

“Watch your mouth.” You heard Yoongi threaten.

“Sorry, I’m done.” You opened the door. But you didn’t expect Yoongi to be standing so close that you ended up bumping into his back.

“Oh, no wonder hyung was guarding the bathroom.” The male said. He didn’t mind you and just brushed past to use the bathroom. Yoongi moved you away from the bathroom door, not wanting you to accidentally get hit.

“Thank you. Is it okay if I just stay here for a few more minutes? You can go back to what you were doing before.” You asked timidly. Yoongi took one look at you and nodded. You didn’t need to tell him anything for him to know what you were thinking. The club was getting overwhelming. Yoongi never really liked coming either, unless his brothers made him.

“Why did you come if you don’t like it?”

“A friend brought me here as a distraction. Plus, I’ve never really come to a club before.” You replied. Yoongi nodded his head.

“You don’t need to stay and accompany me. I’ll go back down in a bit.” You said.

“It’s okay.” He leaned against the opposite wall. You took your phone out to send Wonwoo a text, assuring him that you were safe and that he could go home first. But you didn’t tell him where you were and who you were with.

“Actually... I wanted to apologise. My brothers told me I shouldn’t have offered you a smoke the other time. I just thought it would help.” Yoongi looked at you.

“It’s fine. I honestly didn’t think too much about it. There were other things to think about.” You shook your head.

“Understandable. I’m sure you have your fair share of confusion and questions that come along with it. This is just the start.” Yoongi said. You didn’t let his straightforward tone faze you, nodding your head glumly.

“Well, I should go. Thanks for letting me hang here.” You straightened up.

“No worries. I’ll walk you down.” Yoongi followed suit. You walked behind him, not sure of the way to the exit. At your insistence, Wonwoo had gone home on his own. You did feel bad for abandoning him when he was the one that invited you out but you also didn’t want him to wait.

“Do you have a ride home?” Yoongi asked.

“I told my friend to go home first so I guess I can get a cab home.” You waved him off, getting your phone out. But Yoongi stopped you, his hand grasping your phone and covering the screen.

“It’s not safe or cheap to get a cab from here at this time. I drank a little so I shouldn’t drive. Hang on.” Yoongi waved over one of the bouncers.

“Use the company car and send her home then report back.” Yoongi ordered.

“Yoongi sshi, it’s really okay.”

“Get the address from her and make sure you see her entering the house before coming back.” Yoongi ignored you. The bouncer nodded and bowed, running off to get the car.

“(y/n) sshi, hyung.” You both turned to see Taehyung jogging over. He had a big, square-ish grin as he waved.

“Jimin said you were here and that Yoongi hyung was with you. Are you driving her home, hyung?” Taehyung tilted his head.

“I drank. So was gonna get one of the workers to drive her back.” Yoongi explained, standing back as he lit a cigarette to smoke. Taehyung nodded his head.

“I’ll drive you home. I didn’t drink since I am the designated driver tonight. Bring my car instead.” Taehyung called out. The worker bowed and ran back into the club. Yoongi decided to go in first after his cigarette was done, not liking being out in the cold. He shot you a nod while you bowed gratefully to him. While waiting, Taehyung put his jacket over your shoulders.

“Did you come alone?” Taehyung asked.

“No... I came with a friend but I abandoned him. Yoongi sshi was kind enough to let me hang out in the quiet area. So I told my friend to go home first.” You said. You didn’t know why you were explaining so much to him.

“This isn’t your scene, is it?” He teased. You pursed your lips and shook your head. The noise and the crowd just weren’t comfortable.

“Sir.” The car stopped right in front of you. The worker came out, bowing and passing Taehyung’s keys to him.

“Here you go. Watch your head.” Taehyung opened the car door for you to enter. You sat inside, keeping the sides of his jacket close to you so it wouldn’t get caught in the door.

Taehyung confirmed your address before starting to drive. One hand held his head, elbow resting on the door next to him while the other hand was on the steering wheel. During the quiet ride, you fiddled with the hem of the dress, pulling it down every now and then.

“Are you cold?” He asked.

“No, I’m good. Thank you.” You replied softly. With your head leaning against the headrest, you looked out the window. The only reason you would be out this late usually was because you were working.

“Thanks for dropping me off again.” You said as Taehyung pulled up outside of your house.

“Any time.” Taehyung smiled. You unbuckled your seatbelt and got out of the car. But before you could walk further, Taehyung rolled down the window.

“Goodnight, (y/n)!”

“G-Goodnight, Taehyung sshi.” A small smile formed on your lips as you bowed your head and entered the house. Like the other day, Taehyung only drove off after he was sure you had entered the house.

It was only after you had entered the house and heard Taehyung drive off that you realised you had forgotten to return his jacket. You removed it carefully, as if any movement would cause it to tear like tissue. You inspected it, trying to figure out if you should wash it on your own, and risk ruining the expensive material, or just send it for dry cleaning.

“Hi, Wonwoo. Did you make it home safe?”

“Oh, (y/n). Yes, I am home. I went home after you sent me that text. Are you alright? You scared me.”

“Yes, I am alright. I just found a quiet space to chill for a while so I didn’t want you to wait for me. I just got home. Sorry for ruining the night. I’ll make it up to you, I promise.” You said, putting your shoes away.

“Don’t say that, (y/n). I’m sorry you didn’t enjoy yourself.”

“I did enjoy myself, Wonwoo. Thank you for bringing me out to feel better.” You laughed. After wishing each other good night, you hung up.

You took a shower and, feeling peckish, made yourself some ramyeon. There was always ramyeon in the kitchen for when you or your mother needed a late night snack after working.

“I’ll have to drop the jacket off at the dry cleaners tomorrow.” You groaned tiredly, looking at the blazer that rested over the back of the chair.

-

Hoseok was one of the first ones to wake up. After a day of working and a night of drinking, the boys all usually slept in during the weekends. The moment his foot touched the bottom of the stairs, a maid ran over to him, bowing her head in fear.

“What is it?” He raised an eyebrow.

“Your workers are here, sir. They said that it is important for you to see them immediately.” She relayed timidly, afraid of making him angry.

“Send them in. And get my breakfast.” Hoseok shooed her away before shuffling to the dining room. Like any other normal person, he didn’t like having to work on the weekends.

“Good morning, Boss.” The 3 men put the crate that they were carrying down and bowed to Hoseok.

“This better be important for you to be here on a Saturday morning.” Hoseok said, not even looking up at them. He was more focused on the tray of food that the butler had placed down in front of him.

“The shipment is here early. We thought you would want to check it right away.” One of the men informed. Hoseok put his napkin on the table and stood up.

“Show me.” He commanded as he walked over. The men opened the box, revealing the contents inside.

“Very nice...” He picked up one of the items.

“Business on a Saturday morning, Hobah?” Yoongi came in. Hoseok’s workers bowed upon seeing the pale man enter the dining room. But of course, he didn’t even spare them a glance. He sat down in his allocated seat, waiting for the staff to serve him his breakfast. His breakfast was usually an iced coffee then his food 20 minutes later.

“Can’t help it, hyung. You want the best, you’ve got to work when others aren’t.” Hoseok laughed while Yoongi snorted at his comment. He placed the items back into the crate.

“Leave this here. I’ll show the others and see what they think. Good work. We’ll discuss the rest on Monday.” Hoseok said.

“Of course, boss. Have a nice weekend.” The 3 bowed, moving the crate to the side of the room before leaving the mansion. Hoseok took his seat across from Yoongi.

“So hyung, I heard you had a little moment with the girl.” Hoseok asked.

“What moment?” Yoongi asked back, no emotion on his face as he sipped the last bit of his coffee.

“Jimin said you loyally guarded the door for her as she used our toilet. Even stayed with her in the hallway after to comfort her.” He explained. Yoongi rolled his eyes, his brothers really needed better things to talk about.

“I saw her the moment she entered, I’m surprised you guys didn’t considering how high our booth is. She needed to use a toilet and the queue was insane so I offered her to use ours. And I wasn’t comforting her, she needed a quiet place and I stayed with her. That’s all.” Yoongi explained.

“Well, that’s a lot more interaction than what the rest have got. Looks like she’ll warm up to you first.” Hoseok said.

“No, she’ll warm up to Taehyung first. He was the one that sent her home.” Yoongi dug into his food on the tray that the butler brought him. Hoseok hummed but couldn’t help the giggle that escaped him.

“What’s Hobi laughing about?” Namjoon came in. He had been awake for a while, opting to sit in his room to read the newspaper rather than come downstairs right away.

“No idea.” Yoongi replied, putting a chopstick of rice into his mouth.

“Ah, seriously, Hobi. I thought we established that we are not going to bring work into the dining room?” Namjoon tsked at the crate in the room.

“It’s fine, Namjoon ah. I’ll move it after breakfast. The boys needed me to inspect the goods, that’s all. Even risked bothering me on a Saturday to do it.” Hoseok waved the leader off. Namjoon shook his head with a sigh.

“Bring my breakfast.” He ordered as he took his seat at the head of the table. The butler bowed and left.

“I’m done. Going back to sleep.” Yoongi stood up and shuffled out of the room. The weekend was for Yoongi to catch up on sleep, it was normal to not see him for the entirety of the two days because he was just sleeping or resting in bed. Jin and Taehyung were the last ones that had breakfast. Jungkook and Jimin would sleep in until dinner time.

“Here. Take what is yours and leave the rest. I told you I would get things done.” Jin dropped the stack of files on the table. Namjoon, Hoseok and Taehyung shrugged, going through the pile to retrieve their things.

“So, hyung, did you find out any more information about (y/n) when you did your stalking?” Taehyung asked.

“Yah, Taehyung! That was supposed to be confidential.” Jin hissed at the younger exposing him to the rest. However, the younger just shrugged.

“You’re running a check on her?” Namjoon raised an eyebrow.

“I’m not running a check on her... Well, at least not the background checks I usually do. I just wanted to find out about her to maybe try to connect with her in some way. She is a closed book.” Jin explained.

“So what if she is a closed book, I’m sure you can still find whatever you need on her.” Hoseok laughed.

“That’s the thing... She does not have much of a record except for her birth certificate and basic school details. Everything else either doesn’t exist or has been wiped.” Jin informed.

“Wait, you’re telling me there is actually information that you can’t find?”

“How do you know information has been wiped? She could have just not had that much of an eventful life so far. I doubt she can wipe her own records and she doesn’t seem to have a reason to do that.” Namjoon said. He didn’t know you but you didn’t seem like a skillful hacker that could wipe records.

“I know information has been wiped because I am the one who wiped them...” Jin admitted with his head slightly hung.

“What?”

“Boss wanted me to wipe them. He was paranoid, especially when it came to her. So my task was to regularly wipe her records that were ‘not relevant’. Hospital visits, stuff like that...” Jin gulped.

~~

Series Masterlist

Ko-Fi

Main masterlist

#kpop#kpop scenarios#kpop series#kpop imagines#bts#bts scenarios#bts series#bts imagines#bts x reader#bts x you#bts x y/n#poly!bts#poly bts#bts poly#poly!bts x reader#mafia#mafia!au#mafia au#mafia bts#bts mafia#mafia!bts#ot7#ot7 bts#bts ot7#ot7!bts#ot7 x reader

424 notes


Photo

You, who gave me their hand when I fell, now I'll hold it for you.

#jimin#park jimin#bangtan#bts#btsgif#btsedit#userbangtan#mine#mine:gifs#mine:pjm#i've been sick and this has been on repeat for comfort so i thought i'd do a little something#photoshop kept glitching so idk how i feel about it but i might as well post it#<3#tumblr isn't letting me link the trans so: https://www.learnkoreanwithsel.com/letter

587 notes


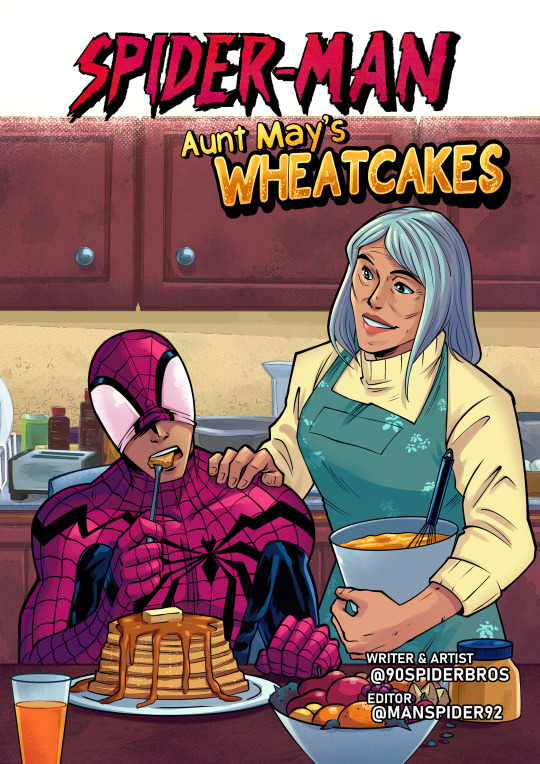

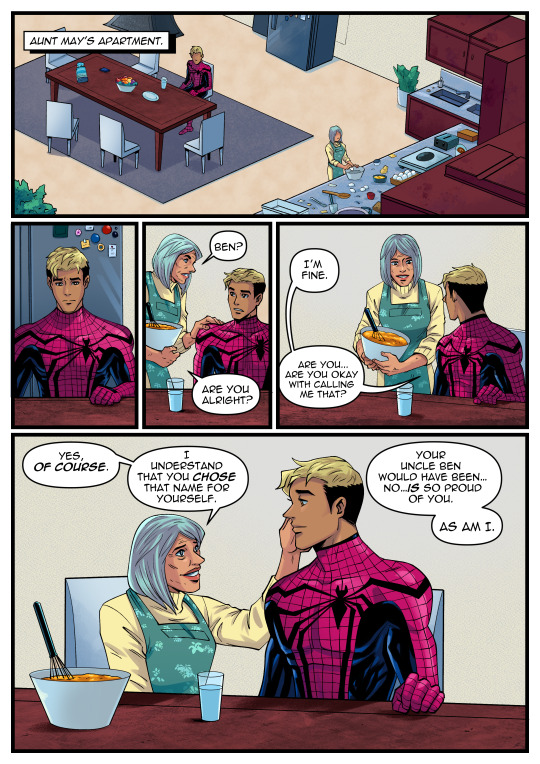

Photo

My newest #SpiderMan comic is out now, guys! Featuring Ben Reilly and Aunt May! Enjoy the family fluff and feels!

Read it for free here! 👉 patreon.com/90spiderbros

498 notes
