Prophets of Doom: I’m feeling lucky!

RUDIMENTARY creatures of blood and flesh. You touch my mind, fumbling in ignorance, incapable of understanding. […] Organic life is nothing but a genetic mutation, an accident. Your lives are measured in years and decades. You wither and die. We are eternal. The pinnacle of evolution and existence. Before us, you are nothing. Your extinction is inevitable. We are the end of everything. […] We impose order on the chaos of organic evolution. You exist because we allow it. And you will end because we demand it.

Sovereign, Mass Effect.

Let us all bow down before our new machine overlords. Even if they’re still a mere foetus, it might be good to begin practising our kowtowing early; to make a good impression, you know? After all, this is a matter of life and death on a planetary scale. We flick that switch, and it’s all over. We will be devoured by the flash of the Singularity; humanity’s bright flame suffocated by a much larger fire we set ourselves. The drama alone makes this narrative appealing; it has a poetic resonance harkening back generations: the creation undoing its creator. Despite this appealing dramaturgy, it is a narrative that has received its fair share of criticism and backlash. And I definitely have critiqued it, and will likely continue doing so. However, misunderstand me correctly – as we say in Swedish – the risks associated with AI development, as with any powerful technology, are worth taking seriously. One must simply make sure to worry about the right things. In other words, it’s not that these ‘doomers’ are concerned about emergent AI tech that rubs me the wrong way. It is what they are worried about; how they understand the potential risks.

I read a tweet by Yann LeCun – head of AI research at Meta – that, in many ways (and likely more ways than he intended), sums up the current climate in the ChatGPT debate:

AI doomism is quickly becoming indistinguishable from an apocalyptic religion. Complete with prophecies of imminent fire and brimstone caused by an omnipotent entity that doesn’t actually exist.

It might sound harsh – but it’s fair. I’ll allow it. As one of these galaxy-brained prophets of doom concluded, if push comes to shove, we must be ready to nuke anyone who continues with unsupervised AI development. This might sound like an extreme conclusion, but really, it is the best way forward: at least humanity, as a species, has a chance to survive a nuclear holocaust; we shan’t be so lucky when the Terminators come to… erhm, terminate us. This AI doomsday narrative has been around for a while. It is by no means anything new. This is evidenced by the voices who now speak up, in tandem, that this needs to be stopped. It’s not a conspiracy of any kind, but rather, it’s a narrative and discussion that has been going on for decades among tech communities; and frankly, it’s a conversation that had no need to leave those particular circles.

However, I don’t intend to let the other side of the aisle off so easily, either. In the tweet above, these ‘doomers’ are likened to an apocalyptic religion. Overlooking here the ‘soft’ optimists, i.e. those who simply think something like AI isn’t that big of an issue – this is such a supremely naïve position to take – I want to turn to the more active proponents of AI. These are the folks who not only think that the suggestion of a six-month moratorium is tantamount to an anathema, but who typically wish to accelerate the development. Why? Because it will lead us to the land of milk and honey, of course. AI will be a potent tool. Think of the problems it can solve! No more resource scarcity; no more disease; no more death; humanity can finally take its place in the cosmos.

There are of course people out there who espouse completely reasonable beliefs on artificial intelligence. Still, I think it is fair to say that these are not the folks who take up any space in T H E D I S C O U R S E. I do not here mean to implicate Professor LeCun as some techno-utopian – I am not familiar with him enough to say. Instead, I wanted to foreground his tweet because he’s 100% correct, and 50% wrong. The whole discourse surrounding artificial intelligence is steeped in this kind of ideological framing – it’s the tool to end all tools, or it’s the tool to end us tools. Many parallels have been drawn between the development of nuclear weapons and where we currently are with AI, typically something to the effect of ‘it’s like nuclear weapons on steroids’. However, few folks continue with this particular analogy.

So, let’s do that for a moment.

Nukes undoubtedly added a whole slew of risks for the planet as a whole, not just humanity. We’ve all heard the old cliché ‘humanity, for the first time, has the ability to destroy itself’, usually in conjunction with Oppenheimer quoting the Bhagavad Gita, “Now I am become death, the destroyer of worlds”. It’s a well-known narrative, often placed front and centre when telling ‘The Story of Humanity: 1945-1991’. And it tracks pretty well with the folks so profoundly concerned about AI’s ability to ctrl+z humanity as a whole. Cause for concern, no? What is often forgotten, however, is the buck-wild idealism and optimism that harnessing the atom generated. “Soon!” the quaint 1950s radio voice heralded, “You, too, will have an atomically powered home, and atomic appliances, and a car powered by your very own reactor!” Looking back at it, it sounds completely unhinged, but the era that was heralded by the advent of humanity seemingly mastering the atom (and ‘seemingly’ is doing a lot of work in that sentence) made anything seem possible! Science would lead us to a new world, and a more decent world, where folks had the chance to work; where youth had a future, and everybody owned a uranium-powered blender. In short: Atomic Utopia.

Only this was not to be, as all of us without a plutonium-powered shaver or hair-dryer know. The atomic optimism had far overshot its mark: mainly because we knew oh-so little about the atom, and far, far less than we realised, it turns out. If ‘AI is like nukes on steroids’, what does this say about the more optimistic, and even utopian, narratives? If atomic optimism overshot its mark, is AI optimism halfway to the moon by now? One would be tempted to conclude that this might be the case, on balance: AI won’t build us the lands of hopes and dreams, but it will nonetheless pose a significant threat. So the so-called ‘doomers’ are correct? Well, not really. The nuclear analogy isn’t as apt as it appears at first glance.

Firstly, harnessing an atom is harnessing a literal force of nature; building an AI is attempting to create a machine that thinks with us; for us; alongside us. In both these ways, AI and nukes are extremely different, and the analogy begins to break down on some fundamental level. E.g., and without sounding like I’m leaning into AI hype, artificial intelligence, should one truly be created, can do much more than splitting an atom ever could. Don’t get me wrong, the idea of near-endless energy is a tempting one, and would be a real game-changer, but even then the act of atom-splitting would be far less ubiquitous. Similarly, the processes are very different: triggering an atomic chain reaction versus making an algorithm self-aware (if that’s even what it takes? Or, indeed, if that is even possible?). The former had been theorised and calculated ahead of its practical implementation; the latter is still shrouded in diffuse philosophy of mind. They become absurd to compare. Secondly, I think it is safe to say that AI will be far more ubiquitous than nuclear reactors, or weapons, ever could be. It is a much more flexible tool, and its myriad potential areas of application change how we will relate to what it can and can’t do, or what it should or shouldn’t do. None of the above even touches on the context in which either of these technologies was created: who owns them, who controls them, and so on.

In other words, it is time to abandon clunky analogies that seem appropriate at first glance. It is also time to leave entrenched ideological positions where the only outcomes that seem to ‘matter’ are whether AI will turn out to be lawful good or chaotic evil. Viewing emergent technologies through such a binary lens completely absolves those responsible for whatever happens next of any responsibility, instead shifting it to the AI as the primary actor. This is, of course, not true. It recontextualises technological development as some uncontrollable force of nature; a physical force akin to gravity, electromagnetism, or the weak and strong nuclear forces. There is not much we can do from this perspective, beyond building enough shelters that we can live with these cosmic winds of ‘progress’; or, perhaps worse yet, usher in your new-fangled God no matter who is in the way. I have noted technological advancement as a natural or physical phenomenon repeatedly in my research, and it’s particularly present in this whole debate: AI’s existence is binary. Whatever happens after that is simply out of ‘our’ ‘collective’ hands.

Focusing on the worst-case/best-case outcomes is a clear giveaway, especially when the timelines appear to be some far-flung, often unspecified, future. It is worth noting that the narrative for such drastic solutions to curtail AI’s development mentioned above skips over all the time between the “now” and the “at some point in the future”. This gives the impression that we flip the switch and we’re instantly Thanos’d, creating both a sense of urgency reminiscent of a nuclear flash of light, whilst also wholly absolving anyone of responsibility for what took place between the initial switch and the proverbial flash of light. Not only does it absolve those who ought to face the music of responsibility, but it also robs anyone else of agency.

And, of course, if you’re on the most optimistic side of the spectrum, all of the above is a non-issue!

Understanding that technology is more often than not an inherently emergent phenomenon, that is to say, that it doesn’t exist as a stable category, but is reiterated and changed and developed, even as it exists, is critical in understanding how best to proceed. To illustrate with an example: humans have had hammers for a long time. However, a stone age hammer, a bronze age hammer, and a contemporary hammer all look very different. The materials change, the shape of the head changes, and so on. Even uses change and evolve: from hammers as a tool, to war hammers. Over time, ‘hammer’ is not a stable category. The same goes effectively for any technology. Thus, by solely looking at AI teleologically – from the perspective of its end – you miss the vast gap of everything that happens in between. Especially when the telos is at some far-flung point in the future.

And it is within this gap that the vulnerable fall.

Slavoj Žižek once said that,

‘If there is no God, then everything is permitted’. [… T]his statement is simply wrong. […] It is precisely if there is a God that everything is permitted to those […] who perceive themselves as instruments […] of the Divine Will. If you posit or perceive or legitimise yourself as a direct instrument of Divine Will, […] petty moral considerations disappear. How can you even think in such narrow terms when you are a direct instrument of God? [1]

This quote has really stood out to me throughout my research, mainly as I have observed that this sentiment is a well-established and oft-recurring phenomenon. The debate around AI: what it can and can’t do; what it might change; and perhaps most importantly, who it will affect and how, is too important a discussion to have it be dominated by the most extreme ideological positions – because that is what these are, whether you believe in the AI-salvation, or advocate for the use of WMDs. These positions have previously been called longtermist, due to their focus on the extreme long-term. This is a discussion I shan’t get into here, however.

Instead, I want to attempt to re-focus the conversation on the sort of questions that we must, truly, be asking ourselves when faced with powerful emergent technologies. As I’ve mentioned several times in this text, I support a moratorium on AI (sans nukes, however…). Still, such a moratorium is only effective if used appropriately. I have alluded, briefly, above to some critical questions, such as thinking about who this impacts – in practical terms – and what can be done to mitigate adverse impacts on such groups and communities, whilst also using the newfound potential of these technologies to bring about a more equitable and just society. These are essential things, truly, and their importance cannot be overstated (though, it is often dismissed; or worse: forgotten).

I think that what is missing most in any discussion surrounding emerging technologies, especially potentially powerful technologies like AI (whether strong or weak), is a discussion around the macro perspective: what is the actual value to society of creating these technologies? It appears to be taken for granted today that these innovations exist to disrupt markets; disrupt society; disrupt the status quo. Disruption is the name of the game, and has been for a long time – though the more on-the-nose language has been dialled back, especially by larger corporations as they face more public scrutiny. None of this means, however, that the underlying attitudes have changed. STS scholar Fred Turner, I think, summarises it well when he says,

I think if you imagine yourself as a disruptor, then you don’t have to imagine yourself as a responsible builder. You don’t have to imagine yourself as a member; as a citizen; as an equal. You’re the disruptor: your job is to disrupt and do whatever it takes to disrupt. Your job is not to help build the state; [to] help build the community; [to] build the infrastructure that made your life possible in the first place. […] I think it’s a matter of how you imagine yourself, and that this is where disruption as an ideology is such a problem. It makes it very difficult to build continuity and community, and […] any egalitarian kind of society. [2]

As we can see with the latest debate around ChatGPT and the subsequent AI-hypefest, governing bodies are playing catch-up. It’s technological whack-a-mole: innovators ‘disrupt’, and legislators reactively clean up any mess left behind.

Bringing the discussion of AI risk into focus – and by extension, highlighting the core societal questions that ought to be asked more often when it comes to technological development – is a good thing, overall, but like with much else pertaining to technological development, much potential is lost by the exclusionary nature of the debate itself: navel-gazing conversations about human extinction at some undisclosed point in the future at the hands of totally-not-Skynet, whilst people are suffering right now, and many more are at risk of suffering, should these technologies be rolled out without a care beyond disruption. It may sound hyperbolic, but this is not the first time we have seen catastrophic short-term effects from short-sighted tech innovation rolled out under the banner of disruption in the name of techno-optimism. Indeed, if the past decade has shown us anything, it’s that one does not have to wait for the far-flung future to risk the existence of a whole people: the Rohingya know this well enough already. A moratorium on AI development is probably a good idea. Still, it’s equally critical that the time isn’t spent discussing nonsensical thought experiments like Roko’s Basilisk or the ‘paperclip problem’. As it stands, the public debate is handled by prophets, priests and acolytes, who are most likely LARPing a schism over techno-orthodoxy; the underlying assumptions remain shared among them. Critically, these same folks view these developments in purely teleological terms. On our road to their vision of techno-enlightenment, some of us mere mortals will have to die, but this is a sacrifice they are willing to make. As long as our AI overlords don’t snuff us out when we finally get there. After all, we are but rudimentary creatures of blood and flesh.

[1] Slavoj Žižek, in “The Pervert’s Guide to Ideology”, dir. Sophie Fiennes.

[2] Fred Turner, in “Bay Area Disrupted” by Andreas Bick. See here: https://vimeo.com/110557774

Technological inevitability.

One of the great platitudes which are very popular today, when we are confronted with acts of violence [is …]: “If there is no God, then everything is permitted”. [… T]his statement is simply wrong.

Even a brief look at our predicament today clearly tells us this. It is precisely if there is a God that everything is permitted to those […] who perceive themselves as instruments […] of the Divine Will.

If you posit or perceive or legitimise yourself as a direct instrument of Divine Will, […] petty moral considerations disappear. How can you even think in such narrow terms when you are a direct instrument of God?

Slavoj Žižek

The Illuminati. Globe-trotting action. Robot arms! Honestly, Deus Ex: Human Revolution, the video game, has everything you’d want from a cyberpunk video game. From robust role-playing elements that really flesh out the world, to a compelling (if totally absurd) narrative. And did I mention the robot arms?? It’s no wonder that it’s considered a classic in the genre and certainly an excellent piece of cyberpunk media. It has been recommended by many of the folks I work with and research alongside here in Sweden as a solid sci-fi title — a game that strongly plays into the fantasy of advanced human augmentation technologies, and robot arms! — while also being cited as a source of inspiration. This, simultaneously, makes perfect sense, and makes no sense at all. Based on how it is discussed, it presents a positive view of the future: as something cool and remarkable, something worth striving for. Thematically, however, it turns out the game is far, far, far more critical than this. Corporate espionage and global conspiracies set into motion with the end goal of controlling the world population; the New World Order. Rising racism and social tensions between the augmented and the ‘natural’ humans. Lies within lies. It’s a cyberpunk game, after all. Cyberpunk! A genre that, by definition, requires everything to be awful. How can such criticisms, whether structural critiques, or critiques of tech-use, go over the head of so many people? Indeed, I recently had a friend say that as much as he loved Deus Ex, its main downside is that it is “kinda anti-transhumanist” in its messaging.

As trope-ridden as Deus Ex is — a near-requirement for cyberpunk works, it seems — it carefully navigates tough topics to truly highlight issues, dangers, problems, and pitfalls with the potential technologies of the future. And it is key to highlight here that the game, like much cyberpunk, isn’t so much against technologies or a more technological future, but rather attempts — to varying degrees of success — to offer a structural critique of technology and society. In this sense, it is not so much anti-transhumanist as it is anti-large-corporations-using-technology-as-a-means-of-consolidating-power-and-resources-for-their-own-gain-ism, though this, of course, is far less snappy. As is shown in the epithet “high tech, low life”, cyberpunk’s central thesis is that technology is an extension of society and present social systems, relations, and schema. If such systems remain unjust, riddled with inequities, and corrupt, no matter how fantastic(al) any technological developments may be, they will invariably end up making the world worse. The hammer that can help build a house can just as easily bash your head open. Portraying this tension is something good cyberpunk ought to do well — being the central thesis and all — and Deus Ex cannot be faulted on this front. Perhaps, if anything, at times it is a bit too heavy-handed in “““implying””” the aforementioned thematic elements. “Why did you save the innocent hostages, Jensen?? Saving my corporate secrets is far more important than their peon-lives!” your totally-not-corrupt-or-evil-CEO-boss booms across your cyber-cochlear implants. Subtle? As subtle as a boomer meme.

Even then, it’s not all too skewed either. The technologies are impressive, and important developments in many ways. They save lives; they change otherwise difficult lives. ROBOT ARMS! Granted, the negative aspects of these technologies, hands down (no pun intended), receive far more screen time, but even then, the evils that are shown are not shown because of the technologies themselves; rather, the emergence of such fantastical technologies has led to an increased articulation of the inequities and injustices already present in our neoliberal societies that we know and… erhm, ‘love’. The genre is often said to be one that criticises the present from the perspective of the future, and while it cannot be accused, by and large, of not criticising enough, perhaps its greatest weakness remains a general failure to present meaningful alternatives, let alone coherent ones.

Imperfect but clear, that’s how I would summarise my experience with Deus Ex: Human Revolution, and indeed much cyberpunk media. With this in mind, how could this meaningfully act as an inspiration among the typically entrepreneurial, oft-libertarian-leaning techno-enthusiasts I work with? Sure, sure, seeing past the technology as evils unleashed upon society is to be expected. After all, technology is a mere tool. And the tools in question heighten the problems already present. However, this fails to account for the fact that the majority of these individuals believe that enterprise and market economics are perhaps not the only way to usher in a technologically enlightened future, but the best way of doing so. If cyberpunk media, like Deus Ex, posits, albeit implicitly, that capitalism generally and neoliberalism more specifically are the root of the dystopian futures it presents, how can the same neoliberal logics be repurposed to result in the opposite effect?

Some of it can be put down to misreading the works in question, accidentally or wilfully; or even hubris, that human trait much sci-fi likes to invoke, “When I do things, it will be different. I will do it right!” one may declare; “it had to be me; someone else might have gotten it wrong”, to mix science fiction works. Even then, I suspect these answers are far too simplistic. In essence, the folks I work with aren’t so stupid, naïve, or filled with hubris, and it would be a disservice to assume such a surface level reading is, at the core of things, correct. Instead, I propose we shift the perspective on things. Consider this: what if the technological development in question isn’t the goal, what if it’s inevitable?

Among scholars working with temporality, the past, present, and future are often considered multiplicitous. That is to say: that it is not so much accurate to speak of the past, or the present, but rather of pasts, presents, and futures. Each present moment is experienced differently, from different vantage points, and with different perspectives and understandings of the past; the collected information from which is extrapolated into different futures. Equally, the future may hold some new information — a new historical discovery, an archaeological find, or philosophical insight — that sheds new light on the past, further shifting the understanding of the present and its extrapolation into the future. Even for each individual, the past-present-future dynamic is not set in stone. Both on a broad societal scale and on the individual level, temporality remains one of multiplicities.

What, then, happens when we throw in notions of the inevitable into this already fluid mix? It creates perceived anchor-points in history. In essence, progress, as it were, is understood more as a Civilisation V technology tree rather than the many potential paths to tread. All societies begin with the wheel, and then move on to animal husbandry, or bows, or sailing, and inevitably end with ICBMs, space colonies, and robot arms (!). This is not how things work in practice. Technology and innovation are far from a linear tree where progress is so nicely demarcated, and the direction runs merely from left (less complex, more ‘primitive’ technologies) to the right (highly complex, highly technical). More accurately, innovation, development, and progress are the results of environmental, geographical, social, cultural, and a whole slew of other factors: live in the mountains without any really accessible roads? Then the wheel will be far less useful to you. No animals around to husband? Guess you’re stuck tilling the fields yourself. On the other hand, an ideology of technological inevitability means that all roads lead to Rome; there are many paths to tread but them ROBOT ARMS(!) will be. What follows is an argumentum ad fati; an appeal to destiny; that all paths are laid out, and there is nothing anyone can do about anything — let’s just sit back and enjoy the ride.

This appeal to destiny invariably takes on a transcendental, even divine, character: of an ever-moving, entirely unstoppable, otherworldly force, pushing us forward towards progress, whatever that may be. Like Walter Benjamin’s gale-winds carrying the Angel of History towards the unseen future, the inevitability of technological progress won’t stop for anyone or anything, and it sure as hell won’t stop for any silly moral considerations. No matter the outcome in practice, these technologies will be, so there’s no point in worrying about it. This, like religious or spiritual prophecy, is an ideological stance, coated in terms of rationality: statistically speaking it is bound to happen; given enough time, anything and everything must happen. Who are you to question the Divine Plan?

This, here, betrays the first clearly visible ideological marker of any such technological prophet: timescale. Timescale is a funny thing, like that. It can be used to justify near everything and anything. It gets at the question of what responsibilities we who exist have towards those who do not yet. Depending on the timescale chosen, near anything can be justified: if we do not do an evil now, another even bigger evil will happen in the far future! This approach, longtermism, has been brilliantly deconstructed by Phil Torres so I won’t dwell too much on it. Taken together with the inevitability of progress, the evils in the present or near future are merely inevitable evils that we cannot do much about anyway, which, besides, will justify themselves in the future once destiny is fulfilled. To paraphrase the opening quote, if you view yourself as being caught up in an inevitable change, “petty” moral considerations do not only disappear, but they become veritably absurd to consider. What will happen is inevitable either way. What is there to do about it?

“Some of you will die, but that’s a sacrifice I’m willing to make” as the meme goes: sucks to be you if you end up on the receiving end of the future cyberpunk hellscape. You can rest easy in the knowledge that in the future future, people will have it grand, though. It’s a relaxing thought. Ironically, these proponents of such technologies today, those inspired by the warnings yet ignoring the genuine risks they present and ethical questions they raise, are also involved in the development of these technologies: a pretty solid way to achieve the status allowing you to reap the benefits of this future; to be soaring high above the clouds, rather than squatting in the dank neon-soaked streets many thousand floors below. The irony here, of course, is that this serves to reproduce the material conditions that lead to this aforementioned hellscape. Here, perhaps even more ironically, the major shortcoming with cyberpunk media rears its head once again: the critique is good and fair, but it never presented an alternative. The present state of things is what we get until we reach our inevitable transcendence, the much hoped for Singularity: and the faster we proverbially move towards it, the less time people will even have to suffer. Material improvements in the present are moot when future transcendence is inevitable. Just suck it up and trust the CEO when he says that it is better for the world if you save his company secrets at the cost of the hostages’ lives.

It’s not like he has anything to gain from the situation…

The Spirit of Gift-Giving.

“A fine Māori proverb runs:

‘Ko maru kai atu

Ko maru kai mai,

Ka ngohe ngohe.’

Give as much as you receive and all is for the best.”

-- Marcel Mauss, The Gift, 1966 [1925]

I have spent the last couple of days running around like a headless chicken attempting to find a present for my brother and the lady in his life. Now, it will be the first time I meet her, and I have not seen my brother in two years – and even in a life marred by pandemics and lockdowns, much can happen; much can and has changed, and that realisation did not bring me any closer to figuring out what it is I would like to give them. In a sense, this is unfortunate, as it risks reducing gift-giving and gift exchange to an exercise in frustration, expenditure, and ultimately consumer capitalism. It is a common trope that the gifting bonanza that Christmas is marketed as is, indeed, nothing but marketing. That it is an exercise in buying stuff we don’t need to satisfy corporations’ bottom line; an expectation to spend and buy that has been fluffed up to maximise others’ profits. Certainly, I believe there is a truth in this. Corporations and companies go ballistic with marketing and advertising this time of year. As it is so often referred to in the Anglophone world, the Holidays make up a critical sales period. However, like a good anthropologist, I must also remind myself that things are rarely, if ever, so simple. Gift-giving, and the ritualised nature often associated with it, predates consumer capitalism; predates this so-called ‘modern’ world; predates even Christmas as a concept. And perhaps more importantly, gift-giving fulfils an essential social function. In fact, I would even argue that this very loss of understanding and appreciation of its deeper socio-symbolic purpose is precisely what has allowed sales and marketing to go awry, as meaning is lost and is instead replaced by consumption.

Okay, what am I talking about? While spending the time poking around (or, perhaps more accurately, racking my brains) for gift ideas, my brain instead spits out unhelpful advice pertaining to one Marcel Mauss and his anthropological classic: The Gift. As any anthropologist would argue, the act of gift-giving is never as simple as merely giving a gift. It is always laden with symbolic and social meanings; overlapping and complexly layered, and deeply interwoven with other cultural and social practices and assumptions, and thus cannot easily be separated out. From how the packaging of a gift is more important than the item itself, to how gifts can be means of flexing power and status, a gift is never merely a gift. It is always more. What matters more here are the immaterial – perhaps even metaphysical – qualities that a gift harbours.

When a gift is given, or exchanged, a link is established between the giver and the receiver: the act itself is symbolic, emotionally laden, and even spiritual. It has ritual associated with it, whether one is expected to refuse it, or how one ought to act when receiving it, and so on. I much like the Māori concept of hau (pronounced [Hoh]) as a means of explaining this link. Mauss quotes a Māori man, at length, in his essay:

I shall tell you about hau. […] Suppose you have some particular object, taonga, and you give it to me; you give it to me without a price. We do not bargain over it. Now I give this thing to a third person who after a time decides to give me something in repayment for it, and he makes me a present of something [i.e. another taonga]. Now this taonga I received from him is the spirit – the hau – of the taonga I received from you and which I passed on to him. The taonga which I receive on account of the taonga that came from you, I must return to you. It would not be right on my part to keep these taonga whether they were desirable or not. I must give them to you since they are the hau of the taonga you gave to me.

What is hau? As Tamati Ranapiri, quoted by Mauss above, outlines, it is the spirit of the gift. It is the metaphysical something-more that comes with the act of gifting an object. The object is not merely the object: it is the object and this intangible quality. A ‘spirit’ that ties the giver and the receiver together in a loop of reciprocity. I give, you receive; you give, I receive, but the hau is never exactly the same. The books, as it were, are never fully balanced, so this cycle of reciprocity remains.

This cycle of reciprocity is also key to what differentiates gift-giving from mere bartering, or trade. Bartering or trade fulfils a predominantly economic purpose. In contrast, gift-giving is a social practice: to create this bond of reciprocity between people, groups, or communities. Gift giving, in this sense, is also not limited to particularly extravagant or even material goods: buying friends a pint with the understanding that ‘it will even out in time’ is a perfect example of a mundane form of gift exchange. Gift-giving binds people together.

It was once said that there is no such thing as society, but only individuals (and perhaps families). Never has an observation been so incorrect. A fallacious and potentially dangerous development seen across many societies is the supreme reign of the ‘individual’; the belief or perception of personhood as a bounded whole. ‘Society’, in this view, is not so much a collective as it is a collection. The issue lies with, as is so often the case, the base presumption. As much as legal and philosophical traditions construct us all as ‘individuals’, and thus we are inculcated into this line of thinking, this framing remains altogether too simplistic. Anthropologists often speak of cultures as individualistic or dividualistic – and though this implies a binary, it is more accurately a sliding scale. What an individual is, I suspect most readers will already know. A dividual, on the other hand? Perhaps this is more unclear.

In essence, a dividual is the opposite of an individual: a concept of personhood in which personhood is not defined as a bounded whole, but instead by one’s social connections: to be a sum total of all social relationships, kinship networks, clan and community belonging, and other such affiliations. It may sound radical, but it is by no means a merely theoretical position. However, as mentioned above, it is also not binary. All cultures exist on a sliding individual-dividual scale (among other scales…), and this naturally means that just as no culture is purely dividualistic, so no culture is purely individualistic – no matter what scholastic traditions might maintain. We are who we are, but we are also defined by the networks, connections, families, friends, religious affiliations (or lack thereof), political organising, neighbourhoods, classes, professions… to mention a few. We, as persons, are not mere individuals; we are also a nexus of other connections. We are the communities we are a part of, as much as we are ourselves; indeed, we are not a collection of individuals, we are a collective and individuals at the same time.

Gift-giving and reciprocity take on more profound meaning and deeper significance when considered through the lens of dividualism. The making and maintenance of these reciprocity loops that bind people together – family, friends, acquaintances, even strangers – into a broader community or society are produced by various types of gift-giving and exchange. The hau, as it were, can be boiled down and simplified into a broader idea of society, a community spirit, a sense of belonging or of home. In his original writing, Mauss pointed out that the importance of social programmes, on a national level, is not merely economic – to redistribute resources – but also to create a sense of reciprocity towards society at large; what Mauss called the moral imperative of gift-giving. This point has been picked up and expanded upon by others, such as the late David Graeber: that kindness and generosity work to create and maintain community and social cohesion.

This isn’t limited to the macro-est of scales, but is equally vital on the smallest of social scales: neighbourhoods, families, or friendships. The genuine importance of gift-giving during holidays like Christmas is that reciprocal links are maintained, even strengthened, and new ones have a chance of being made during this designated time of ritual gift exchange. It is not merely the material gifts being exchanged, but also that little extra, the hau as the Māori refer to it, that truly matters. This moral imperative of gift exchange, then, is what has been lost in a lot of ways, paving the way for much more cynical evaluations of Christmas and its gifts as mere consumption. It is an easy trap to fall into – even I become frustrated struggling to find a gift for someone – mainly because the whole moral aspect of giving has been, broadly, undermined; the view of society as something bigger than an individual that needs to be collectively maintained has been fading away. Just as it has been neglected that we are not merely individuals – that we are a mix of ourselves and connections external to us – so has the hau; the spirit of the gift. A step in the right direction here, of course, is to remind oneself of the humbling nature of dividualism: I am not merely myself, but also the society or community of which I am a part.

What I do, and have done, in life is not something that has been purely of my own doing – indeed, there’s a meme declaring that “I exist without my consent”. All this is a result of my parents, my sibling, neighbours, friends, school teachers, sports clubs, and communities that all, in their own way, raised me and shaped me. Even now, the research that I do and the PhD I am pursuing is not a dream I possibly could have pursued by myself. Without my family’s assistance and support; without friends to lean on, and people that care for me; without my supervisors; even without the people that I work with here in Sweden (whom we anthropologists call informants or interlocutors); and of course without my own will and drive to pursue this research to begin with, it could not succeed. It is indeed my work, insofar as I am producing it, but it is also a collective effort. In the opening chapter of Marcus Aurelius’ Meditations, he spends time and ink thanking the various blessings that he has received – to be born healthy, to have had loving parents, and caring friends, invested tutors and teachers, capable and virtuous mentors, and so forth – to recognise that the Roman Emperor himself ought to remain humble. Now, I am no Roman Emperor, but if humble pie is a suitable snack for him, it sure as hell is for me, too; an exercise of reminding oneself of those people and communities around you that make you – to all these people I say thank you.

And remind myself of hau, the spirit of the gift.

Merry Christmas.


Dreamers at fault.

'The basic lesson of psychoanalysis [ … is] that we are responsible for our dreams. Our dreams stage our desires, and our desires are not objective facts. We created them. We sustain them. We are responsible for them.'

Slavoj Žižek, A Pervert’s Guide to Ideology.

What does it mean to dream of a better world? We have all done it at some point. The drunken, likely lively, conversation with a friend; those animated discussions during which you solve all the world’s problems. Having imbibed a thing or two, you finally see things clearly: you see how they really are, and you see how they ought to be. You have envisioned Utopia – or your own personal Utopia, at least. Problem solved, right? Let’s pack it in, boys. We’re all done! I, for one, have found myself in these situations perhaps more times than I wish to admit (or perhaps I revel in the deeply studentikosa nature of it all). Admission or no, solving the problems with some napkin-maths rarely actually solves the problem. Indeed, practice cannot be left out of the picture, though it is undoubtedly what often ends up happening. To paraphrase my favourite raccoon-cum-philosopher, the truly interesting question is not the question that Hollywood and games and popular media show us – that is: the revolution itself – but instead what happens after[1]. Once the party is over, and the janitor rocks up on Monday to mop the confetti, pick up the empty bottles, and dispose of the blood, guts, and brains. What then? Rather than asking the question, as such, I’d rather like to explore one of the reasons why I suspect this question is explored far less often. Why does the first day of Utopia make us so uncomfortable? It’s almost as if we don’t want it at all.

I have recently been part of a reading and discussion group on Utopia: conceptions of Utopia, particularly considering Utopia through various lenses, such as ethics, economics, or culture. It is not a purely academic group, but rather aims to include clever folks from any number of backgrounds. Through the multiplicity of perspectives, it synthesises a fruitful and eye-opening discussion. And it has been! One of many takeaways I have noted thus far is the tendency to perceive Utopia as something abstracted: treat it as a blueprint, while at the same time denying its materiality. I mean to say here that Utopia is only imagined in complete terms: a snapshot of someone’s perfectly balanced society at some point out of time. In essence: denying Utopia its critical temporal angle.

Indeed, temporality is critical to Utopian thinking. It is common to conclude something along the lines that Utopia can never truly exist, that it is just a guiding light for how society might improve. However, I think there is a grave, if subtle, mistake in this perception. It is not that Utopia cannot truly exist, but rather that Utopia always exists at some point in the future. Utopia’s temporal angle signals the end of history (NOT YOU). What do I mean by this? In abstracted terms, Utopia signifies a society or social system in absolute harmony towards some end goal (pleasure, individual freedom, economic production, etc.) – the end-goal in this case isn’t all too relevant. Due to its perfect harmony, Utopia will not change: what has been reached is the state of the world, such as it is, forever. To briefly turn to Walter Benjamin, it is the point in the future where history is redeemed, thus ceasing to exist. Beyond Utopia, nothing further changes. History ends.

This, of course, means that Utopia, as a place or system or however you prefer to conceptualise it, necessarily exists at some point in the future, and necessarily always in the future, whether it is realised or not. If it is not realised, it is in the future as it has not been reached yet; once realised, the future – in historical terms – no longer needs to exist.

Imagining this moment is profoundly anxiety-inducing and is thus something most would wish not to think about. It is, indeed, why the after-the-revolution is rarely shown – especially true should such a revolution be successful! Why do I believe this is something people would rather not ponder? It is often said that of course there are no novels or stories set in Utopia because it would be challenging to make a story about it, as there cannot be stakes. This is a standpoint I, too, have stood by and uttered. But I wish here to make an amendment to my previous ignorance, and put forth an argument that such stories done well might be more akin to reading something like Lolita by Nabokov: deeply uncomfortable; uncanny; anxiety-inducing. Albeit for different reasons than Nabokov’s literary masterpiece!

To unpick this, I want to briefly turn to psychoanalyst Jacques Lacan. To paraphrase Lacan, anxiety arises when you know that something is expected of you, but you are unable to understand what that is. To link this back to Utopian imaginaries, we must look at desire. Critically, Lacan clarifies that desire is not merely desire, but more specifically, the desire to desire. In other words, we never only desire the object of our focus as such, but we also desire the sensation of desiring it. In a straightforward sense: the feeling of unhappiness when we get something we have coveted for a long time is a manifestation of the anxiety arising not from gaining our object of desire, but from losing the sense of desire itself. Typically, this leads us to want or desire something else instead. Therefore, a healthy sense of fulfilment is contingent on never truly being complete, never truly being whole; the contradiction is that if we have all we desire, we lose the critical core that our desires are.

A fulfilled Utopia produces the same contradiction on a broad social level. The very sense Utopia conceptually summons presupposes its very incompleteness. Utopia presupposes its own state of becoming; it presupposes a perfect balance maintained at its event horizon. The perfect harmony, in this sense, includes a continuation of history, not a need to transgress historicity, or to move beyond it ‘out of time,’ as it were. Anything more complete would induce a form of socio-cultural anxiety at the loss of desire at a collective, societal level.

Thinking beyond Utopia, so to speak, is therefore not only difficult, but is in itself undesirable. The looping back of history unto itself is a source of anxiety as societal desire must be annihilated by definition. This, I maintain, is the reason why thinking of Utopia in more concrete terms – as something to even be achievable – isn’t desirable at all. Instead, it must be maintained as a point in the future, looming on the horizon of possibilities. This implied unwillingness to actually reach Utopia is something I note in my own research and experience with broadly-speaking Utopian groups. For example, one of the larger groups I work with, transhumanists, speaks of the future in transformational terms. It is telling they do not refer to themselves as posthumanists[2]. Instead, the universe and mankind are spoken of in terms of becoming: transforming our humanity, towards the posthuman, but never really wishing to reach the posthuman state as such. In my particular research, the Utopian event horizon is the much-theorised technological Singularity. However, in practical terms, for each new technology, each new implant, and each new modification, there is always a next one ad infinitum. In fact, the enhancements and modifications, I suspect, will be incorporated into the perception of the “mere” human. The posthuman ideal will always remain on the horizon, forever pushed forward into the future, as humanity moves into the future.

We will never reach this posthuman ideal, because reaching it is not the point. The desire is for the future in itself, so the notion of a future must always be maintained.

[1] Netflix’s Snowpiercer takes a solid shot at this, though I am certain there are other examples. Nonetheless, it is fair to say that this is very much in the minority of popular media portrayals of revolution or otherwise dramatic/drastic societal change.

[2] Though, of course, posthumanism exists, it is not a techno-utopian movement or ideology in the same way that transhumanism is.


Together, in Cyberspace.

'Cyberspace. A consensual hallucination experienced daily by billions of legitimate operators, in every nation, by children being taught mathematical concepts... A graphic representation of data abstracted from the banks of every computer in the human system. Unthinkable complexity. Lines of light ranged in the nonspace of the mind, clusters and constellations of data. Like city lights, receding.'

William Gibson, Neuromancer, 1984.

‘IRL’ is such a weird phrase. ‘In real life,’ meant to demarcate an online interaction from an off-line one, to make real the DMZ between cyberspace and space. Baked into the very words is the implication that whatever might happen online is not real. The virtual, relegated to the immaterial, the abstract, the non-real. Subsumed as a separate category, somehow nestled under the real, yet not a part of it. ‘IRL’ denotes the apparent un-reality of online interaction. It’s an absurd phrase, indeed: a contradiction upheld by Luddite biases that digital space cannot possibly compete with the real. Yet in the past two years one would be hard-pressed to maintain this clear distinction between IRL and virtual space, or to insist with a straight face on the fundamental meaninglessness of virtual interaction, as is implied in the virtual’s exclusion from partaking in real life. Families have bid farewell to their elders via cameras and screens; friendships maintained through incessant typing and texting; lovers kept together by voice recordings and selfies; all of them in cyberspace. Screens, texts, cameras, and mere words are not the same as the last hug from a loved one, nor indeed the friendly pat on the back by a friend, or a lover’s gentle kiss – for how could it be so? Nonetheless, dismissing virtual spaces so outright is like rejecting the ‘realness’ of a soldier’s last letter home, or a grey inscription on a tombstone. So, are virtual spaces any less meaningful?

The distinction between ‘real’ and ‘virtual’ is already fallacious. Following Gilles Deleuze, ‘the real,’ that is to say, the realm in which human experience and meaning are negotiated, has two constituent parts: the Virtual and the Actual. The Actual is the physical spaces and places around us humans, such as they are: our streets, cities, homes, and shelters. In shorthand, the Actual is constituted by that which materially is. The Virtual is somewhat more difficult to grasp (pun very much intended). It encapsulates the immaterial realms in which humans create and recreate meaning. This is a label most commonly applied (predominantly by us anthropologists) to the spirit realms of various animist peoples, communities, and societies[1]. In a nutshell: these spirit realms are understood to exist, and have a direct impact on the Actual world. Nonetheless, such realms cannot be directly acted upon by humans. They can be entered through shamanistic rites and rituals, but only for the select few, yet we – or rather our spirits – forever roam these distant shores. Just as the Actual and the Virtual constitute the Real, so do these spirit realms constitute a part of the world, as it is understood by varying animist cultures.

Why might this matter? I am sure you have long figured out the parallel I am drawing. Still, for the record, here it is nonetheless: virtual spaces – cyberspace – are real spaces, and spaces in which humans create meaning. It is, in the words of Marc Augé, anthropological space. Anthropological spaces are the spaces in which humans make sense of the world, physically and metaphysically: negotiate their identities; make sense of their place in the world; establish and re-establish their kinship ties. Augé contrasts this to what he calls non-places, space designed not for humans to make sense of, or to linger in, but rather merely to move through. In a non-place, meaning is not made: it is the corridors of an airport terminal before you reach your gate and finally meet your loved ones; it is the passageways of a local mall, that you must move through to get to the store you seek; or indeed the long stretches of highway, roads that may as well be entirely virtual as they flit past us outside the car window. Cyberspace, it is safe to say, is not a non-place, though non-places undoubtedly do exist online. Looking at each blog like this, each community group on Facebook, the organically organised ‘Twitterspheres,’ or Subreddits dedicated to the most obscure hobbies, cyberspace is filled to the brim with anthropological spaces, where nerds share their love and build community and belonging around extremely niche board games, or where a movement of guerrilla gardeners organise and plan the next step of their green urban revolution… Or indeed, where we all, just some months past, were forced to celebrate birthdays, anniversaries, weddings, and births.

So again, why does this even matter? Subsuming virtual spaces under the rubric of ‘not in real life’ carries not only risks on broader societal levels, but also on micro-scales. We can already see the impact that memes and trolls have had on broad sociopolitical discourse, all too often dismissed as the actions of teenagers who are just taking the piss, rather than recognised as the social movements that they are. Populist politicians have gained support specifically in these sections because these virtual spaces are indeed anthropological spaces. Meaning is negotiated within them, and what happens there impacts the material world. We might not stand in these virtual fields, among these wireframe cities, but nonetheless we carry out actions there: each abstracted screen name and avatar is a person, doing a thing.

On a smaller, or more individualised, scale the implications are, not surprisingly, more personal, but they also run the risk of leading to ever more alienation – a matter all the more apparent as long as our political leaders retain this sense of social distance, and remain unwilling or incapable of taking these spaces seriously – that is to say, as spaces that are just as material as streets, town squares, or parliamentary halls. The broader issues here are immense, as a large portion of “us” (and I count myself among them) digital natives operate perhaps not only on a different level, but within a different dimension from the generations before us. I cannot count the times that socialising with friends in an online video game, or in the communities that sprang up around it, has been waved away as a waste of time, or something unreal, while I and many others made genuine friends, and maintained even more friendships, through this virtual dimension. How many of us do not know the pain of seeing an old video-game friend’s profile read 'last online: 1,127 days ago'? It matters to us precisely because it was real.

We may not be wearing VR goggles tethered to pastiche cassette-futuristic computer-tables to chat with our nans on Facebook, nor do we ‘plug in’ ourselves and cruise through wireframed cityscapes while video-calling friends, families, or lovers, stuck in their own lockdowns, or merely being located beyond our horizon. Though this development may yet come, sooner than we might have expected. However, presently we do not, as it were, interact with virtual spaces as we do with actual spaces: we do not see them, taste them, smell them, or touch them. But they are felt: emotionally and socially. Cyberspace, such as it is, remains elusive, but this doesn’t mean we do not operate within it, and in some parts of the world we are arguably inside of it, constantly. A permanent backdrop that we feel, to the life that we see. It is our Spirit Realm, the realm that exists and impacts our actual world, bleeding together; screens are our portals, and cyberspace makes itself known to us not necessarily by its material dimension (for this does exist: undersea cables, satellites, server farms) but by the social connection, togetherness, and meaning production that we carry with us, like we carry a smartphone.

There we are. Together, in cyberspace.

[1] Do note: this is an extreme condensation of a whole subfield of anthropology, and I summarise a field that has studied groups from Amazonia, to Greenland, to Papua New Guinea, to Siberia. In other words, it would be an understatement to call this an extremely small nutshell. Nonetheless, the broad principles stand.


A paragon of their kind.

“In rode the Lord of the Nazgûl. A great black shape against the fires beyond he loomed up, grown to a vast menace of despair. In rode the Lord of the Nazgûl, under the archway that no enemy ever yet had passed, and all fled before his face.

[…]

And in that very moment, away behind in some courtyard of the city, a cock crowed. Shrill and clear he crowed, recking nothing of war nor of wizardry, welcoming only the morning that in the sky far above the shadows of death was coming with the dawn. And as if in answer there came from far away another note. Horns, horns, horns, in dark Mindolluin’s sides they dimly echoed. Great horns of the north wildly blowing. Rohan had come at last.”

Lord of the Rings: The Return of the King. Chapter IV – The Siege of Gondor.

Heroes have always fascinated me, both who they are as people, and their actions; what makes one ascend to such a status? It is a topic I’ve been mulling over for quite a time, and not least has my interest in these stories been rekindled by all that has been going on for the past year or so: from doctors and healthcare workers being hailed as heroes, to the heroic acts of delivery drivers and other such, often invisible, ‘unqualified’ workers. What makes a hero is something rather difficult to put one’s finger on. It seems people instinctively know what a hero is, at least within their own social and cultural framing, but often struggle with really pinning down the criteria. We see a hero when we hear the stories of a hero, when the act is laid out in front of us, but it is far more challenging to know what a hero is, in the abstract. Indeed, what are the criteria, and what might such an abstracted checklist say about a culture? Or, perhaps more importantly, what might heroes say about how we remember?

I suspect much of my fascination with heroes stems from my deep-seated love for Tolkien’s legendarium and how his writing made heroes. There is much to say on the topic in general, far too much to discuss here (as is my usual cop-out), as his work features heroes a-plenty. They are an inalienable part of the works’ thematic richness: making flesh, so to speak, the core theme of good fighting evil. Evil has its villains, and within such a framing, the good need their heroes. One of my favourite heroic scenes in both the movies and novels is the arrival of the Rohirrim to lift the siege of Minas Tirith. It is a remarkable moment, both narratively and for its heroic aesthetics. It is truly a stand-out piece, and in Peter Jackson’s masterful movie adaptations, the whole segment has an otherworldly feel to it; the sense of a divine intervention [1] manifest through near-absurd levels of heroism, and gosh-darn it, if it doesn’t get me every time!

“Arise, arise!” Théoden, king of Rohan, begins his pre-battle speech; his men eventually chant “death!” in response. “Death” the host chants, and death they bring, as they merge into one entity, a singular collective of 10,000 riders: 60,000 legs barrelling down the gentle slopes of Pelennor Fields. A flash flood of death and destruction, momentum and cohesion maintained by rage and a burning hatred for their enemies and the darkness they bring. The whole scene is deliciously epic.

The text is littered with heroic tropes – the pre-battle speech (the logistics of which I still question), or as my father once noted: “this chanting for death just shows the power of mass psychology”. Buzzkill, certainly, but not wrong. Nonetheless, I think this misses the point. The scene is a narrative constructed and filled with culturally and socially coded ideals to signal the heroicness of the actions themselves. Hero stories are hero stories because they are treated as such. In a blunt sense, heroes are always constructed by narratives; arguably not meant to be accurate, but rather meant to show the transcendental nature of the action or actor(s). As a result, the actors ascend to impossible heights with expectations that cannot be realistically maintained in reality; or perhaps more accurately, that cannot be maintained by the merely human. Instead, their heroic transcendence is maintained by ideology, not by their material existence, and Tolkien, in his text, perhaps inadvertently, makes an important distinction: it is not Théoden-king that has arrived with 10,000 riders: rather Rohan had come at last.

What do I mean by this? A hero is transcendental by definition, a symbolic paragon of their kind; an individual or group of individuals who have done something that so perfectly aligns with overarching cultural values and morals that through these actions they transcend their mere humanity and, in effect, become part of the cultural Big Other. However, a human is still only human, and such transcendence into the overhanging moral structure is, practically speaking, impossible. It is always a product of narrative: it is always a story; an inspirational tale; a noble lie.

There are a surprising number of commonalities between the heroic figure and what’s been called The King’s Two Bodies. In the European monarchical tradition, there has at times existed a clear distinction between a monarch’s corporeal body – the blood-and-flesh, breathing, eating, shitting, imperfect human body – and his body politic: the transcendental body, the perfect body, the body imbued with divine might, the body chosen by God. The symbolic division between the individual and the power they hold is just as clear in the present with, for example, the different connotations between Boris Johnson-the-man and the Prime Minister of the United Kingdom, or indeed Joe Biden-the-man and the President of the United States of America. Such a leader-follower dynamic seeks to establish a sense of divine kinship, a sense of relatedness to the leader in question by invoking the Nation, a belief, or other ideological constructs. The leader-figure becomes, in effect, a material embodiment of the dominant cultural-ideological environment; a figure imbued with charismatic power: that is, following Max Weber, an inspirational individual that people want to follow without promise of reciprocity.

Nonetheless, the tension between the body that shits, and the body blessed by God cannot be ignored. A hero is not the same as a leader, of course. The hero is a paragon, the embodiment of the moral-cultural framework that defines them. However, both leader-figures and hero-figures are maintained by the existence of a second body. This creates a Two-Body Problem. Heroes invariably suffer from a tension that arises between their transcendental heroic self and their excremental human self, and this is a tension that cannot be reconciled effectively. The only course of action is to ignore the tension, as is often done: ignoring a historical individual and instead embracing the mythic figure. The individual subject must be destroyed and replaced by the mythic, the transcendental.

Winston Churchill wrote that “history will be kind to me, for I intend to write it,” a statement which, by and large, turned out to be true. The Churchillian figure is now a trope, and the heroic figure in modern British society par excellence. Nonetheless, even with Churchill’s considerable success, his human self has not been fully exorcised. Criticisms of his rampant racism, his colonial policies, and his (lack of) action during the Bengal Famine still exist in public discourse, and become points of contestation, as they painfully highlight the tension between the historical and the mythic figure. If the ol’ British Bulldog was such a remarkably heroic figure, a shining beacon, he cannot be shown to have filth on his underbelly. Even here the Two-Body Problem remains, and short of complete destruction of the historical record (challenging in its own right), it cannot be circumvented – and this is not to mention the implications for a heroic figure that is still alive (ritualistic hero-murder might be a somewhat charged suggestion…).

That being said, the destruction of the individual subject can also be read as more metaphorical than literal. The most obvious way of destroying the individual subject is by placing the subject within a collective: to celebrate heroic acts carried out by a group, over those specifically made by an individual. Folding the individual into a collective, which may include any number of otherwise questionable individuals, shifts the focus away from how these individuals may or may not have behaved in the rest of their lives, and instead emphasises the actions of the group, allowing the heroic actions to speak for themselves. The group, as a collective entity, also ceases to exist eventually, insofar as it cannot be maintained indefinitely, and thus circumvents the Two-Body Problem.

The Rohirrim’s chant before their charge at the gates of Minas Tirith was, in a way, correct. “Death,” they chanted, and they did bring death with them, literally and figuratively: that is to say, the destruction of the individual in favour of a collective cohesion that they created during their charge, just as much as the literal death of crushed Orc-skulls underhoof. Théoden may have been their king, but the charge was not carried out by him. The Charge of the Rohirrim, as a collective, brings to the fore the body politic of the people of Rohan: their courage, their sacrifice, their loyalty, their heroism. Much can be gleaned from this approach to heroism and commemoration – in short: deeds not men! – for without Rohan’s collective heroism, Minas Tirith would have surely fallen.

… As long as they don’t raise a goddamned statue of Théoden.

Selected Bibliography

Yurchak, Alexei. 2015. The Bodies of Lenin: The Hidden Science of Communist Sovereignty.

Michelutti, Lucia. 2013. “We are all Chávez:” Charisma as an embodied experience.

[1] Likely not by accident, and all the more fitting when the charge of the Rohirrim was allegedly inspired by the lifting of the siege of Vienna in 1683 by the Holy League.


The Future as Vapor.

‘The semiotic phantoms, bits of deep cultural imagery that have split off and taken on a life of their own.’

William Gibson, The Gernsback Continuum.

I’ve been thinking a lot about time lately. Not wholly sure as to why, perhaps it’s because we’ve just moved from one year to another, and taking stock is only natural; or perhaps because of the peculiar nature of the year that has just ended, with its pandemic, lockdowns, and the many challenges and tragedies borne out of it. Perhaps my research and its focus on time and temporality makes me particularly vulnerable to this sort of introspection; perhaps I am just predisposed to it? Likely, it is a mixture of all of these, but I already digress from the main point I was making, which is, quite simply: I have been thinking a lot about time lately. I’d wager the year that has just been, and which doesn’t feel as if it has fully ended quite yet, has a lot to do with it. My soundtrack for 2020, if there was such a thing, has undoubtedly been vaporwave, dyschronous ‘trapped-in-a-loop’ music for a year where everything stood still: a semi-ironic haunting from the past with empty, tinny beats and retro-synths, just mangled enough to sound new, but not too mangled so as to lose its retro-80s soundscape. It is, as absurd as it sounds, Muzak with teeth. The ironic resurrection of a dead aesthetic, brought back with a vengeance and with a purpose.

Vaporwave gets its name from ‘vaporware’, software that never was. Vaporware is software that has been announced, sometimes even showcased, but which then disappeared into some development maelstrom and seemingly vanished from view. It is neither cancelled nor proclaimed dead and left to rest in the pile of ‘what could have been’, but always kept alive – a zombified software – as a potential. Its nonexistence-with-a-side-of-potential is precisely what makes vaporware vaporware. What does vaporwave take from this? The music is a form of Muzak, seemingly generic elevator music perfect for blending into the background but never meant to be listened to. While this implies a vaporware existence (existence in nonexistence; or rather nonexistence in existence), vaporwave has more to it than that. It is precisely its purposefully meaningless soundscape that gives vaporwave its ability to critique. Often made up of repeating synth riffs, tinny beats, sometimes sounds or jingles reminiscent of 1980s and 1990s TV and radio commercials, it is not an accident that the genre has modelled itself on Muzak. It is an echo of a past that has long disappeared into memory, even into cultural memory; a haunting reminding its listeners of what was, through its twisted soundscape of an otherwise well-trodden cultural form. The genre is best described as music optimised for abandoned malls.

Vaporwave is the audial version of a ruin. Or rather, it is the erection of a folly among ruins, a means to highlight the absurdity of the action itself. Its soundscape exists as a reminder of a past that promised a future that has not appeared; its central thesis – if it were to have one – is that we live surrounded by the ruins of this future-that-never-was. Crucially, and this gets at the heart of the present predicament, we only live and operate among these cultural ruins strictly because we have been unable to reconfigure these cultural building blocks into something new. The ruined landscape of a future that never existed has only come to pass because it has not been replaced by the new. Instead, the orientation has shifted to focusing on the past in the present, not the future ahead of us. The emergence of vaporwave in the present is thus by no means a result of the pandemic, the lockdowns, and the perceived stalling of time as a result, but rather predates them. The pandemic has likely brought such feelings of standstill to the fore, but it by no means created them.

This essay was prompted by a post on Reddit. Paraphrasing, the poster said something to the effect of ‘I don’t want to play the video games from when I was a kid, I want to feel like I did when I played the video games from when I was a kid.’ This, again, gets at the heart of the predicament. That feeling many of us remember from the past is one we have not felt in a long time – myself included. Indeed, video games are a fantastic case study for this development. Using an example from my own experience: I remember when I first played World of Warcraft. I know, your mental image of me as the narrator just shifted substantially, but bear with me. The nature of a fluid massively multiplayer online roleplaying game (MMORPG) wasn’t new by the time WoW was released. Still, it had never been done quite so well: the graphics were fantastic (at the time…), the level of interaction, the fluidity and connectivity of the world, the social aspects and community building… the list goes on, but the software was an adventure, and I (and countless millions of others) couldn’t get enough of it. It was an unrivalled experience in many ways. Nothing like it had existed before. It was a completely new cultural artefact. It invoked a sense of future-shock.

WoW is, in addition, an interesting example as its original (well almost original) game was re-released in 2019 to thunderous applause, and a community bracing itself for another nerdgasm. The re-release was undoubtedly popular, it was undoubtedly fun, but it wasn’t the same. The feeling that it evoked in the past was no longer there. The future-shock with which it had once been densely packed had melted into air. This disconnect has even been picked up by parts of the community. A debate has raged between players who wish for no changes to be made to the original, for it to be released in its ‘pure’ state (as some changes had been made around specific mechanics, bugs that were never ironed out originally had been, and so forth), and players who call not for a recreation of the original game, but a recreation of the feeling of the original game.

But this is the issue with nostalgia. The original feeling of something unique, the future-shock as it were (or what German historian Reinhart Koselleck called the Überraschung; lit. surprise) cannot by definition be re-created; it must be created anew, with something new. The tragedy faced in the present, then, is that the dominant form among popular cultural media is that of nostalgia: a hankering for past experiences not for the experiences themselves but for that feeling of wonder that came with them: the surprise when playing your first 3D video game, or when first using a smartphone, or at the choice of music on an iPod (not to mention that the songs never skipped if you bumped it!). In many ways, this sense of surprise and wonder has been lost, even if innovation has sped up. Computing is faster than ever. Technology is near-ubiquitous in some parts of the world, yet nothing new seems to come from it. It is the same experiences, but faster, or in higher fidelity – occasionally this even folds back onto itself, vaporwave being a prime example: the mockery of a past cultural form that is only made possible with new technologies and innovations. In short, for all this new potential, nothing new is created.

Much has been written on what has caused this predicament, be it Mark Fisher’s argument that the foundations for innovative cultural forms have all been eroded with the rise of neoliberal capitalism, Franco ‘Bifo’ Berardi’s analysis that the future has disappeared because social imaginaries have been eroded with the rise of global techno-capitalism, or indeed Fredric Jameson’s take that capital is too effective at rehabilitating the radically new. To varying degrees, these thinkers (and others) speak to the problem of nostalgia, specifically how the marketing of nostalgia is but a logical conclusion. In the present neoliberal configuration, innovating is a risk, especially within the realm of culture and pop-culture. It is much safer, and more in line with the underpinning profit motive, to repackage and re-sell old cultural forms as nostalgia and pastiche: think of the Star Wars universe’s resurrection yet again, or indeed the example above with the re-release of WoW.

‘Fine’, you say, ‘you’re right’, you concede, ‘but what’s the problem?’ you finally ask. The issue with nostalgia becoming one of the main pop-cultural articulations is that it reorients the present away from the future and towards a past long gone. A lack of future orientation, in turn, takes out much of the hope surrounding societal and cultural development and innovation. To frame this less abstractly: it is hardly news that scientific research and literature, typically in the form of science fiction, exist in a feedback loop. They both take inspiration from one another. Scientific breakthroughs lead authors to push the boundaries of the imaginable, which in turn inspires scientists, engineers, and inventors to make science fiction science reality. In the words of William Gibson: ‘There are bits of the literal future right here, right now, if you know how to look for them. Although I can’t tell you how; it’s a non-rational process.’ Just think of how many present innovations and inventions we had already seen on shows like Star Trek. Lacking this future orientation, in short, invariably leads to a form of social and cultural stagnation. Let me be clear here: this is not a piece lamenting the ‘fall’ of some romanticised Western culture or some such nonsense. Instead, much of our present social, political and cultural order is underpinned by a futural orientation insofar as it is a belief in a future that drives engagement, innovation, and creativity; that creates future-shock. Why bother changing anything if ‘this is it’? It is precisely this process that ‘Bifo’ Berardi described as the slow cancellation of the future, and that the late Mark Fisher referred to when he asked, “Is there no alternative?”

When I say that nostalgia has become the dominant cultural form, this is what I mean. The conventional means of artistic production have been subsumed under an unmoving profit motive. As a result, real, shocking, surprising innovation cannot take place. But I wish not to end it with such a conclusion, as merely pointing at a problem isn’t necessarily helpful. Instead, new and radically different forms of production must be discovered. Fredric Jameson calls such an exercise cognitive mapping, the process of resituating oneself in the cultural landscape and thus gaining a new perspective. To continue a metaphor: to move out of the ruins and into new vistas to regroup, reshape, and ultimately rebuild. The first step is to realise the impasse faced, the second is to do something about it. This process can already be seen in some spaces, especially among grass-roots movements like the makers’ movement, citizen scientists, and other groups – be they tech-focused or artists’ collectives. What ought not be understated, on the other hand, is the importance of ensuring such a shift takes place, lest we end up reading our own collective epitaph:

‘[…]

And on the pedestal, these words appear:

My name is Ozymandias, King of Kings;

Look on my Works, ye Mighty, and despair!

Nothing beside remains. Round the decay

Of that colossal Wreck, boundless and bare

The lone and level sands stretch far away.’

Ozymandias by Percy Bysshe Shelley, 1818.


Blood for the Blood God.

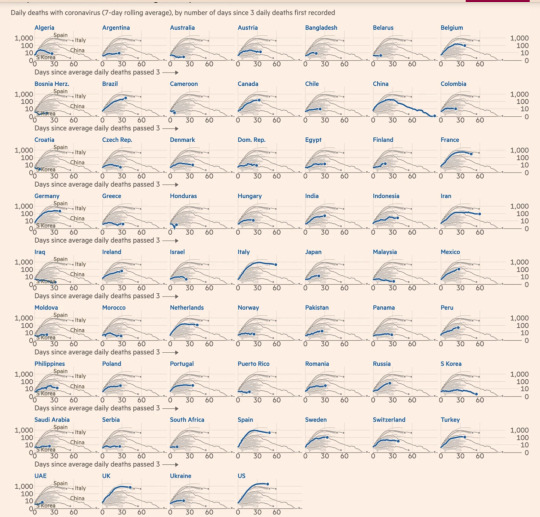

The year of our Lord two-thousand and twenty, or 20-20 in common vernacular, has been a wild ride. It’s been the kind of year when time compresses and six months simultaneously feel like six weeks and six years. The year started with an almost-war, a continent almost burning to the ground, then a pandemic, and now we’re almost back where we started: a(nother) continent is on fire, the pandemic is coming back for its own electric boogaloo, and perhaps this year will include a war after all. To misquote the LEGO Movie: everything is awful. What may be at the top of most of our shit-lists at the moment is the growth of COVID-19 infections, despite what has felt like a constant bombardment of information, PSAs, commentary, and debate surrounding this global pandemic.